PDE-based Dynamic Density Estimation for Large-scale Agent Systems

Abstract

Large-scale agent systems have foreseeable applications in the near future. Estimating their macroscopic density is critical for many density-based optimization and control tasks, such as sensor deployment and city traffic scheduling. In this paper, we study the problem of estimating their dynamically varying probability density, given the agents’ individual dynamics (which can be nonlinear and time-varying) and their states observed in real-time. The density evolution is shown to satisfy a linear partial differential equation uniquely determined by the agents’ dynamics. We present a density filter which takes advantage of the system dynamics to gradually improve its estimation and is scalable to the agents’ population. Specifically, we use kernel density estimators (KDE) to construct a noisy measurement and show that, when the agents’ population is large, the measurement noise is approximately “Gaussian”. With this important property, infinite-dimensional Kalman filters are used to design density filters. It turns out that the covariance of measurement noise depends on the true density. This state-dependence makes it necessary to approximate the covariance in the associated operator Riccati equation, rendering the density filter suboptimal. The notion of input-to-state stability is used to prove that the performance of the suboptimal density filter remains close to the optimal one. Simulation results suggest that the proposed density filter is able to quickly recognize the underlying modes of the unknown density and automatically ignore outliers, and is robust to different choices of kernel bandwidth of KDE.

Index Terms:

Large-scale systems, estimation, Kalman filtering, stochastic systemsI Introduction

Efficiently estimating the continuously varying probability density of the states of large-scale agent systems is an important step for many density-based optimization and control tasks, such as sensor deployment [1] and city traffic scheduling. In such scenarios, the agents (such as UAVs) are built by task designers and follow the specified control commands, which means their dynamics are known. While density estimation is a fundamental problem extensively studied in statistics, we are particularly interested in the case where the samples (i.e. the agent states) are governed by known dynamics (which can be nonlinear and time-varying). We study how to take advantage of their dynamics to obtain efficient and convergent density estimates in real time.

In general, density estimation algorithms are classified as parametric and non-parametric. Parametric algorithms assume that the samples are drawn from a known parametric family of distributions with a fixed set of parameters, such as the Gaussian mixture models [2]. Performance of such estimators rely on the validity of the assumed models, and therefore they are unsuitable for estimating an evolving density. In non-parametric approaches, the data are allowed to speak for themselves in determining the density estimate. As a representative, kernel density estimation (KDE) [2] has been widely used for estimating the global density in the study of swarm robotic systems [3, 4, 5]. It is known that the performance of KDE largely depends on a smoothing parameter called the bandwidth [2]. When the samples are stationary, many bandwidth selection techniques have been proposed, such as cross-validation [2], adaptive bandwidth [6] and the plug-in technique [7]. Such techniques are heuristic in general, since any predefined optimality of bandwidth selection requires certain information of the unknown density. They are also not suitable for dynamic estimation which requires the algorithm to be computationally efficient and adapt to the density evolution. For dynamic density estimation, many adaptations of KDE have been proposed in the domain of data stream mining [8]. In such problems, the dynamics of the density are usually unknown, and therefore little can be claimed about the convergence of the algorithm.

To estimate the global distribution of large-scale agents with known dynamics, an alternative way is to formulate it as a filtering problem, for which there exists a large body of literature, such as the celebrated Kalman filters and their variants [9, 10], the more general Bayesian filters and their Monte Carlo approach, also known as particle filters [11]. Unfortunately, all these methods are known to suffer from the curse of dimensionality, especially for large-scale nonlinear systems. A potential solution is to use the so-called consensus filters [12, 13, 14], which obtain local distribution estimates of the agent system using local Kalman or Bayesian filters, and then compute the global distribution by averaging local estimates through some consensus protocols. However, few conclusions are made concerning the relationship between the true distribution and the estimated global distribution. Moreover, stability analysis is difficult when the agents’ dynamics are nonlinear and time-varying.

In summary, considering efficiency and convergence requirements, existing methods are unsuitable for estimating the time-varying density of large-scale agent systems. This motivates us to propose a dynamic and scalable density estimation algorithm that can perform online and take advantage of the dynamics to guarantee its convergence. Specifically, we show that the agents’ density is governed by a linear partial differential equation (PDE), called the Fokker-Planck equation, which is uniquely determined by the agents’ dynamics. KDE is used to construct a noisy measurement of the density (the state of this PDE). The measurement noise is approximately “Gaussian” (more precisely, asymptotically Gaussian when the agents’ population tends to infinity), so that infinite-dimensional Kalman filters can be used to design density filters. It turns out that the covariance of measurement noise depends on the true density, for which approximating the covariance is required and the density filter becomes suboptimal. We then use the notion of input-to-state stability to prove the stability of the suboptimal density filter and the associated operator Riccati equation.

Our contributions contain the following aspects: (i) By using a density filter, we can (largely) circumvent the problem of bandwidth selection; (ii) The density filter takes advantage of the agents’ dynamics to improve its density estimation, in the sense of minimizing the covariance of estimation error; (iii) The density filter is proved to be convergent and is scalable to the population of agents; (iv) All the results hold even if the agents’ dynamics are nonlinear and time-varying.

The rest of the paper is organized as follows. Section II introduces some preliminaries. Problem formulation is given in Section III. Section IV is our main results, in which we present a density filter and then study its stability/optimality. Section V performs an agent-based simulation to verify the effectiveness of the density filter. Section VI summarizes the contribution and points out future research.

II Preliminaries

II-A Infinite-Dimensional Kalman Filters

The Kalman filter is an algorithm that uses the system’s model, known control inputs and sequential measurements to form a better state estimate [15]. It was later extended to infinite-dimensional systems, represented using linear operators [16, 17]. Suppose the signal and its measurement , both in a Hilbert space, are generated by the stochastic linear differential equations

where and are infinite-dimensional Wiener processes with incremental covariance operators and , respectively [18]. Assume and for all . Denote and . The Kalman filter is an algorithm that minimizes the covariance of the estimation error, given by [16]

where is the called the optimal Kalman gain, and is the solution of the Riccati equation

with .

II-B Input-to-state stability

Input-to-state stability (ISS) is a stability notion widely used to study stability of nonlinear control systems with external inputs [19]. Roughly speaking, a control system is ISS if it is asymptotically stable in the absence of external inputs and if its trajectories are bounded by a function of the magnitude of the input. To define the ISS concept for infinite-dimensional systems we need to introduce the following classes of comparison functions [19].

Definition 1

(ISS [20]). Consider a control system consisting of normed linear spaces and , called the state space and the input space, endowed with the norms and respectively, and a transition map . The system is said to be ISS if there exist and , such that

holds , and . It is called locally input-to-state stable (LISS), if there also exists constants such that the above inequality holds and .

III Problem formulation

This paper studies the problem of estimating the dynamically varying probability density of large-scale stochastic agents. The dynamics of the agents are assumed to be known and satisfy the following stochastic differential equations

| (1) |

where represents the state of the -th agent (e.g. positions), is the deterministic dynamics (e.g. velocity fields), is an -dimensional standard Wiener process, and represents the stochastic dynamics. We also assume the collection of states are observable.

The probability density of agent states is known to satisfy the Fokker-Planck equation [21]:

| (2) |

where is the initial density and

If the agent states are confined within a bounded domain , one can impose a reflecting boundary condition

| (3) |

where , is the boundary of and is the outward normal to .

Remark 1

Note that the dynamics of agents’ density (2) are uniquely determined by their individual dynamics (1) and are therefore known. This relationship holds even if (1) is nonlinear and time-varying. Therefore, the density filter to be presented applies to a very wide class of systems. It is worth pointing out that PDE (2) is always linear even if (1) is not. (It is time-varying if (1) is time-varying.)

Now, we can formally state the problem to be solved as follows:

Problem 1

Given the density dynamics (2) and agent states , we want to estimate their density .

IV Main Results

In this section, we design a density filter to estimate the state (i.e. the density) of (2) by combining KDE and infinite-dimensional Kalman filters. Specifically, we use KDE to construct a noisy measurement of the unknown density and show that the measurement noise is approximately “Gaussian”, which enables us to use infinite-dimensional Kalman filters to design a density filter. Stability analysis is also presented.

IV-A Density Filter Design

In this section, we use KDE to construct a measurement with Gaussian noise and design a density filter.

KDE is a non-parametric way to estimate an unknown probability density function (pdf) [2]. The agents’ states at time can be seen as a set of independent samples drawn from the pdf . Fix and denote . The density estimator is given by

| (4) |

where is a kernel function [2] and is the bandwidth, usually chosen as a function of such that and . The Gaussian kernel is frequently used due to its infinite order of smoothness, given by

It is known that the is asymptotically normal and that and are asymptotically uncorrelated for any . These two properties are summarized as follows.

Lemma 1

(Asymptotic normality [22]) Under conditions and , if the bandwidth tends to zero faster than the optimal rate, i.e.,

then as , we have

| (5) |

Lemma 2

(Asymptotic uncorrelatedness [22]) Let and be two distinct continuity points of . Then under , as , the asymptotic covariance of and satisfies

In practice, is finite. Hence, the kernel density estimator is biased (i.e. ). Also, and are correlated. However, one can always choose a smaller bandwidth to reduce the bias and the covariance [22, 2], which means that can be made approximately Gaussian with independent components when is large. It is known that the performance of KDE largely depends on its bandwidth [2]. Any predefined optimality of the bandwidth selection requires certain information of the unknown density . For example, the one that balances the estimation bias and variance depends on the second-order derivatives of . An excessively large (small) bandwidth may cause the problem of oversmoothing (undersmoothing). For the density filter to be presented, we tend to choose a smaller bandwidth. We recall that “filters” essentially combine past outputs to produce better estimates, which can ease the problem of undersmoothing. In this regard, by using a density filter, we can largely circumvent the problem of optimal bandwidth selection. Simulation studies will show that the performance of the proposed density filter is robust to different choices of bandwidth.

We are now ready to present a density filter using the infinite-dimensional Kalman filters. We rewrite the PDE of density evolution (2) in the form of an evolution equation and use KDE to construct a noisy measurement :

| (6) | ||||

where is a linear operator, represents a kernel density estimator using the states at time , and is the measurement noise which is approximately Gaussian with covariance operator where is a constant depending on and .

According to Section II-A, the optimal density filter can be designed as

| (7) |

where is the optimal Kalman gain and is a solution of the following operator Riccati equation

| (8) |

The Riccati equation (8) actually depends on the unknown state/density because , which means we have to approximate at the same time. Intuitively, would be a reasonable approximation. However, this would make (7) and (8) strongly coupled, for which it is very difficult to analyze the stability. Therefore, we use , where is computed as according to (5). In this way, we can treat the approximation error as an external disturbance and use the concept of ISS to study its stability. We note that (5) should be understood to be accurate in the limit (). When is finite, the estimate may be inaccurate. However, we shall point out that the estimation error of is also included as part of the approximation error of .

By approximating with , the “suboptimal” density filter is correspondingly given by

| (9) |

where is the suboptimal Kalman gain and is a solution of the approximated Riccati equation

| (10) |

The optimal density filter (7) essentially combines linearly all past density estimations and the most recent KDE measurement to produce a density estimate with minimum estimation error covariance. The approximated density filter (9) is called “suboptimal” in the sense that the suboptimal gain remains “close” to the optimal gain (which will be proved in Corollary 1).

IV-B Stability Analysis of the Suboptimal Density Filter

In this section, we study the stability of the suboptimal density filter (9) and the associated Riccati equation (10).

Let be the minimum (but unknown) covariance at . We denote by the solution of (8) with the initial condition , which represents the flow of minimum covariance and also generates the optimal gain. Let be a “guessed” initial condition. Denote by the solution of (10) with the initial condition . We will show that converges to and remains close to in the presence of approximation error on .

Our idea is to show that (11) is ISS with respect to the approximation error on (more precisely , which equals if and only if ). In this way, the suboptimal gain remains close to when there is any approximation error, and converges to when the approximation error vanishes. We also show that the suboptimal density filter (9) is stable even though we use an approximation for .

Remark 2

To avoid confusions, we point out that if we use (8) to compute the gain for (7), then the estimation error covariance along also satisfies (8), which is a property of Kalman filters [15]. However, if we use (10) to compute the gain for (9), then the covariance along , denoted by in this case, actually satisfies

| (13) |

It would be desirable to prove that the solutions of (8) and (13) remain close, which is much harder because it involves three Riccati equations and will be left as our future work.

The following assumption is required for proving stability.

Assumption 1

Assume that and are uniformly bounded, and that there exist positive constants and such that for all ,

| (14) |

Remark 3

The assumption for is an imposed requirement for considering , which roughly speaking, requires that has positive upper bounds and lower bounds. The assumption for can be easily satisfied because and we construct . For finite-dimensional systems, the assumptions for , , and follow the assumption for and . This is because when and are large, the solutions of (8) and (10) will be dominated by the negative second-order term and decay. Although intuitively correct, we find it much harder to prove for the infinite-dimensional case and thus leave it as our future work.

Theorem 1

Proof:

To prove the first statement, consider a Lyapunov functional . We have

In view of (14), we conclude that (15) is uniformly exponentially stable. Similarly, by considering a Lyapunov functional defined by , one can show that the following system along is also uniformly exponentially stable:

| (16) |

We also note that the inner products in and are equivalent because of the assumption (14). To prove the second statement, we rewrite (11) as

| (17) | ||||

We note that (17) is essentially a linear equation. Now fix with . Since (15) and (16) are uniformly exponentially stable, and and are assumed to be uniformly bounded, there exist constants such that

where and . Under the uniform boundedness assumption of and , we conclude that (11) is LISS. ∎

We can conclude from Theorem 1 that the suboptimal gain also remains close to the optimal gain , given as follows.

Corollary 1

Under Assumption 1, there exist functions and such that

V Simulation Studies

In this section, we study the performance of the proposed density filter. Consider a collection of agents given by

| (18) |

where the states are restricted within , and is a continuous pdf over to be specified. The initial conditions are drawn from the uniform distribution over . Therefore, the ground truth density of the agents satisfies

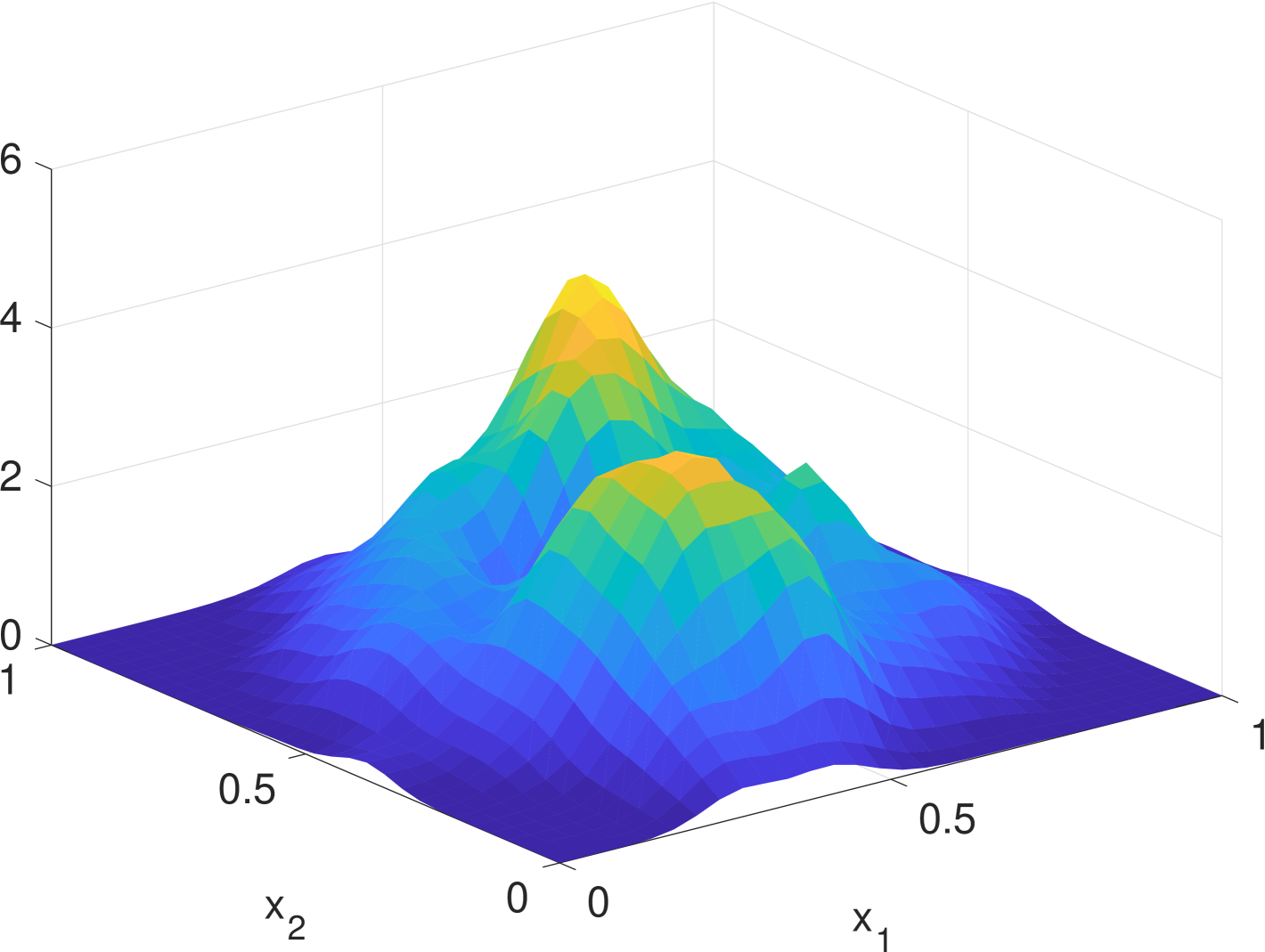

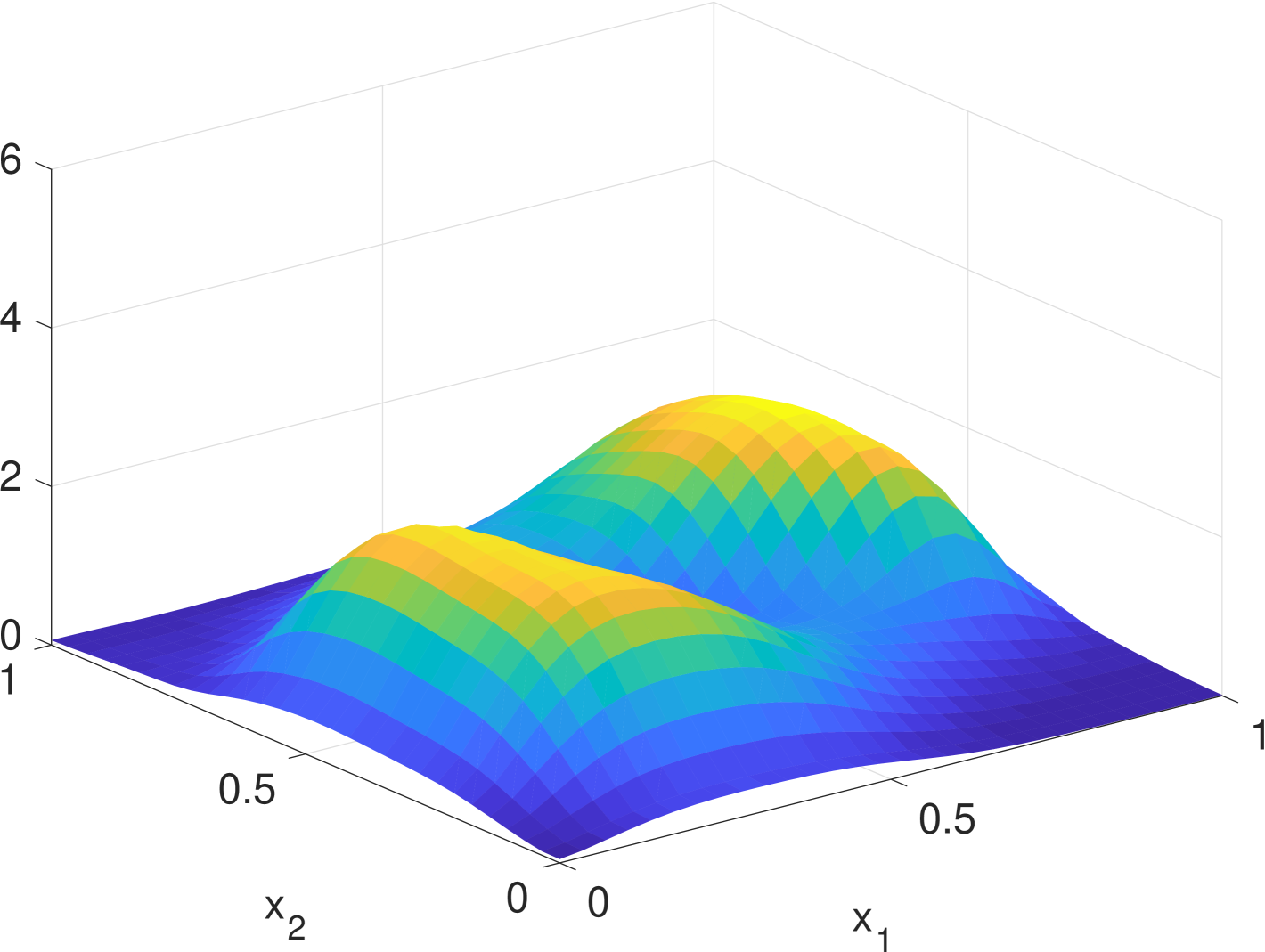

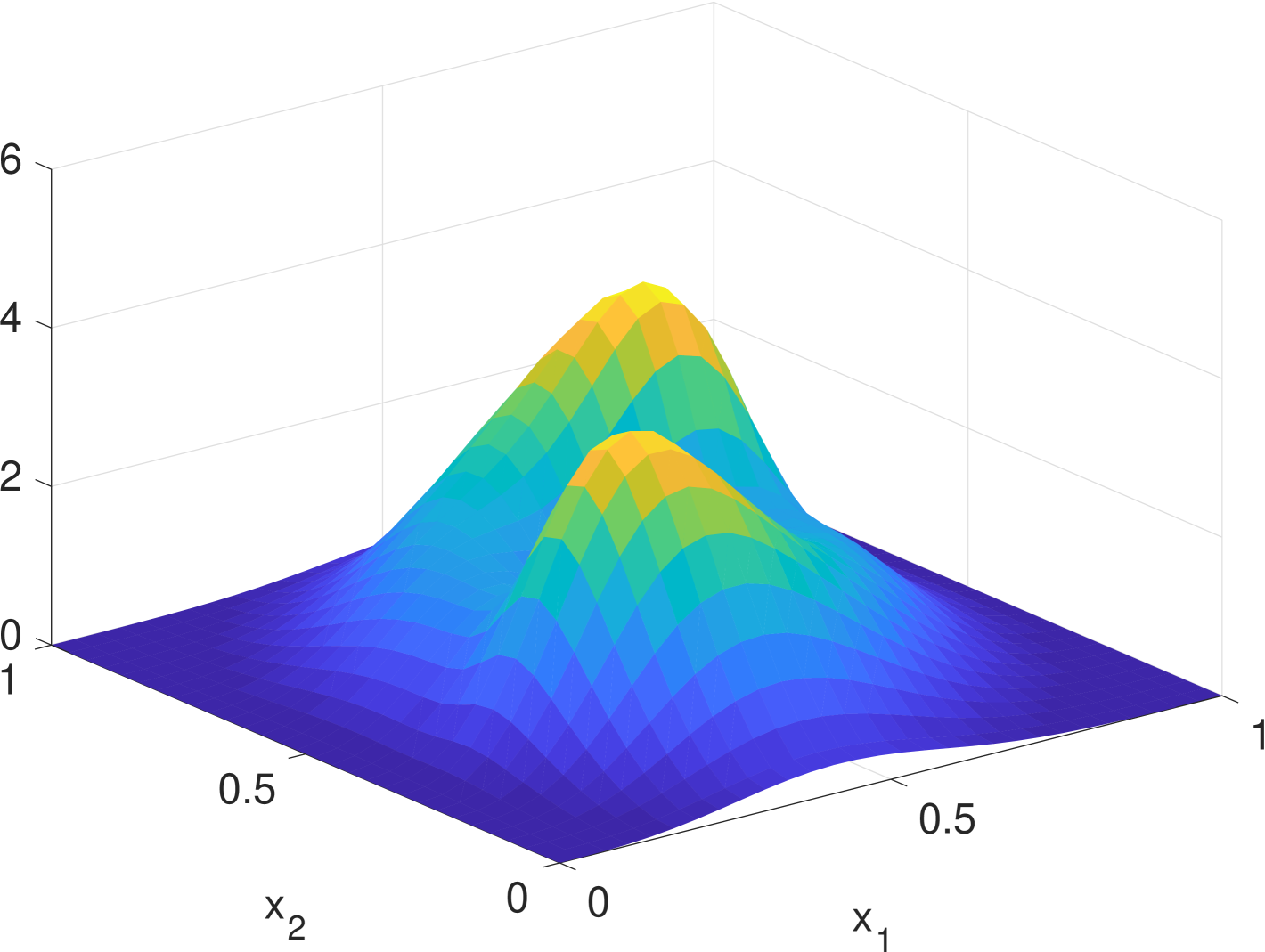

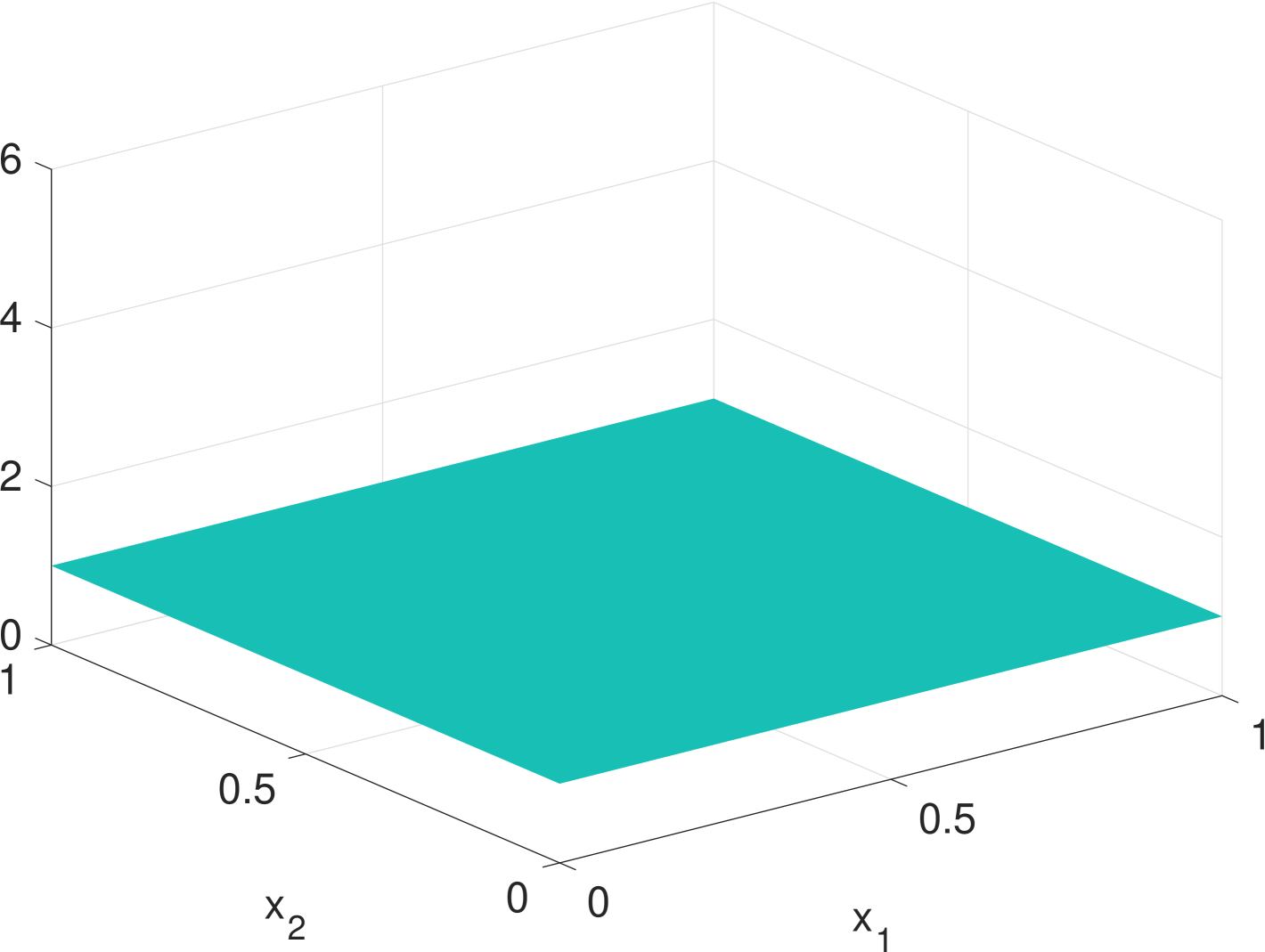

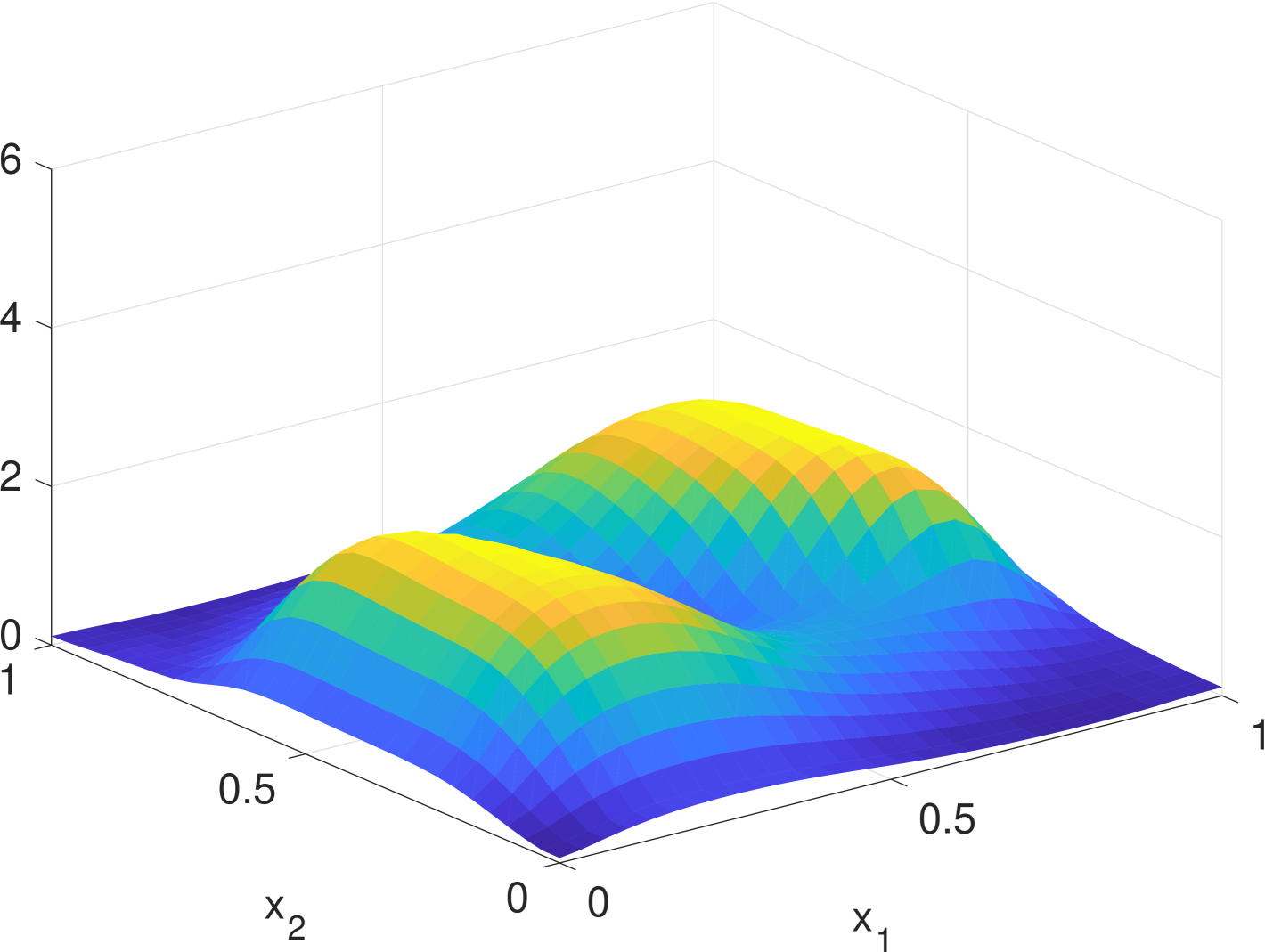

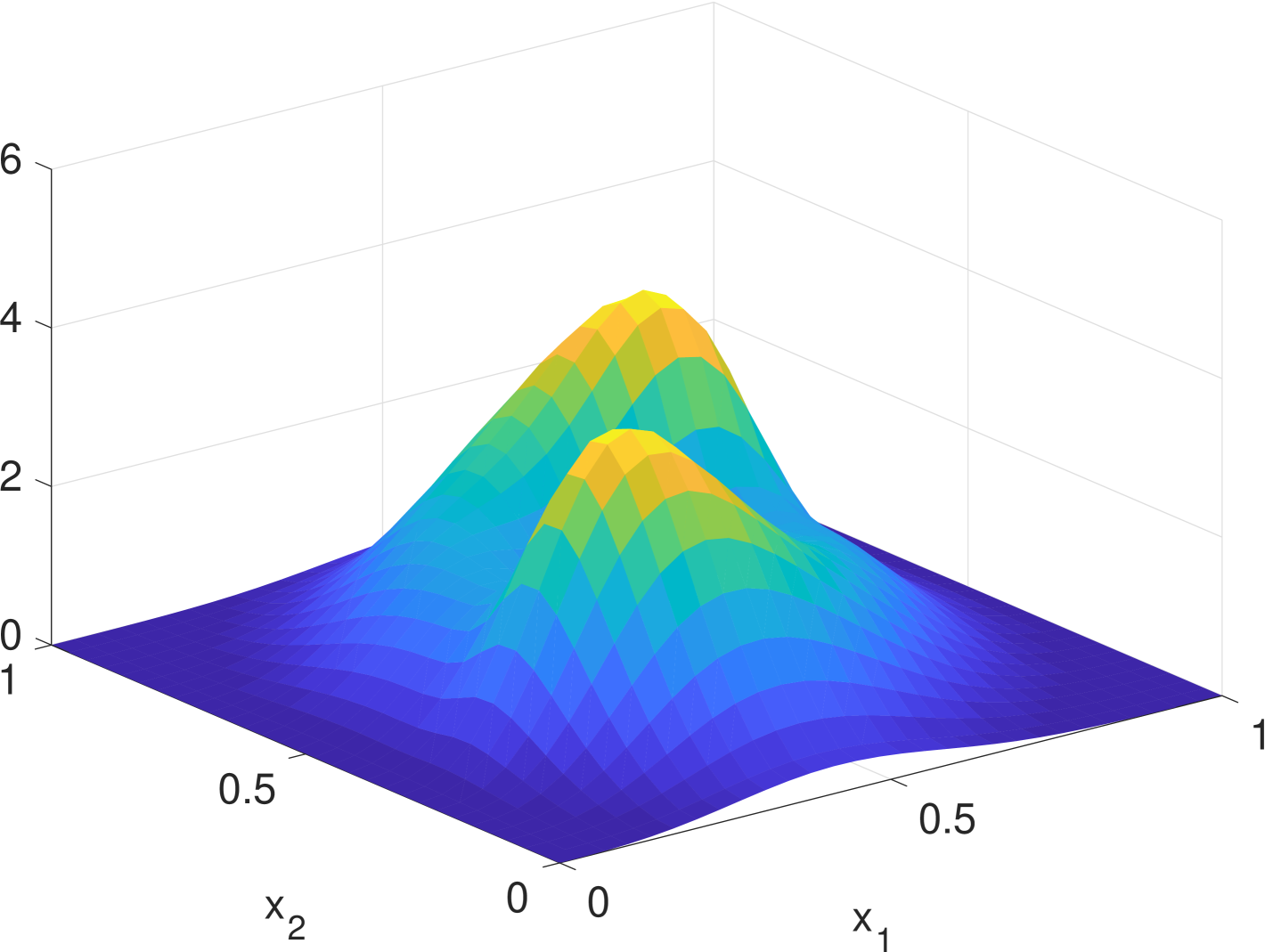

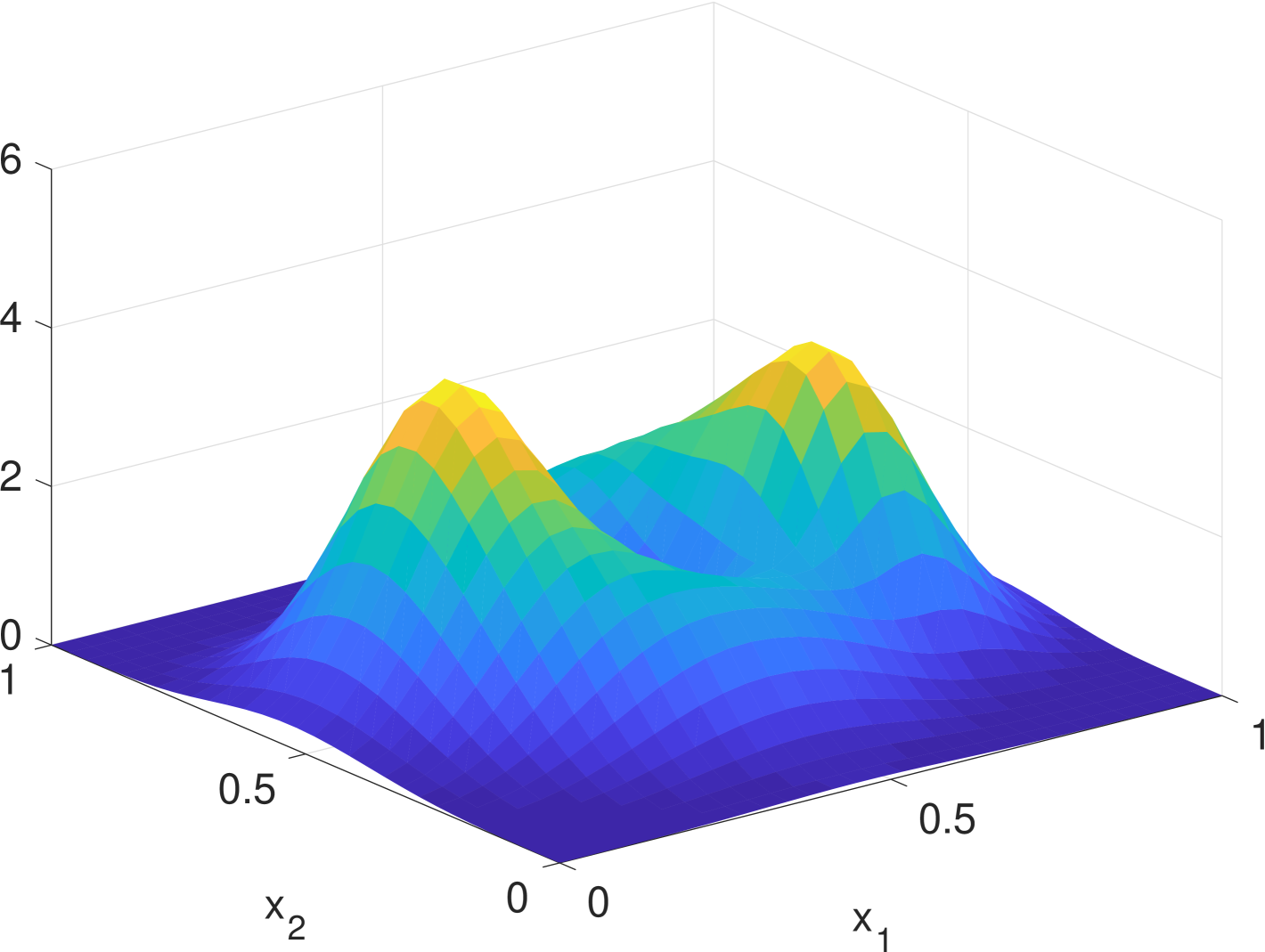

with a reflecting boundary condition (3). For illustration purpose, we let be a Gaussian mixture of two components with the common covariance matrix but different time-varying means and . Under this design, the agents are nonlinear and time-varying and their states will converge to the two “spinning” Gaussian components.

We use the finite difference method [23] to numerically solve the density filter (9) and the operator Riccati equation (10). Specifically, is equally divided into a grid. The pdfs and are represented as vectors. The operators , and are represented as matrices. We set the initial conditions to be and . The time difference is and the bandwidth is . Note that is highly sparse and is diagonal, so the computation is very fast in general.

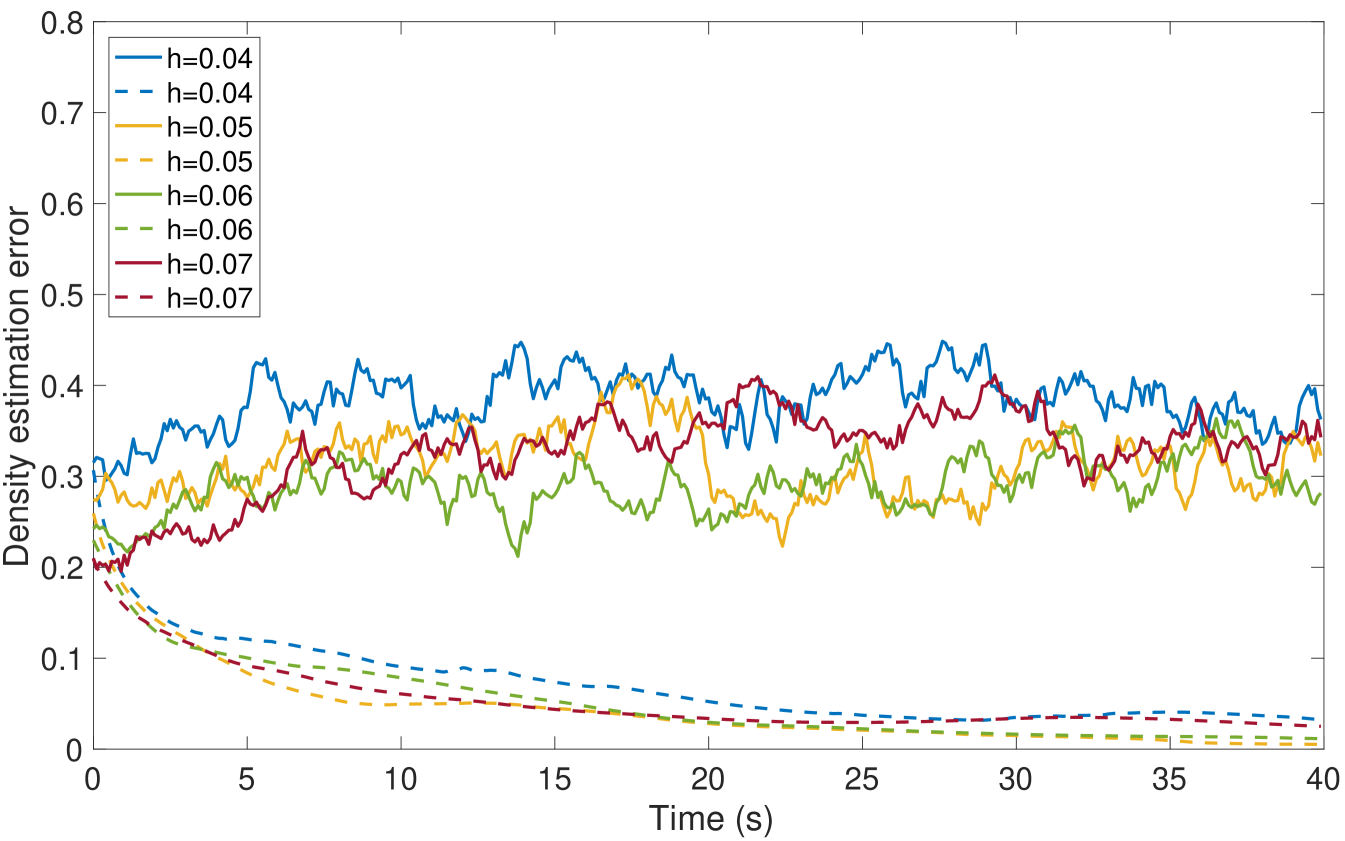

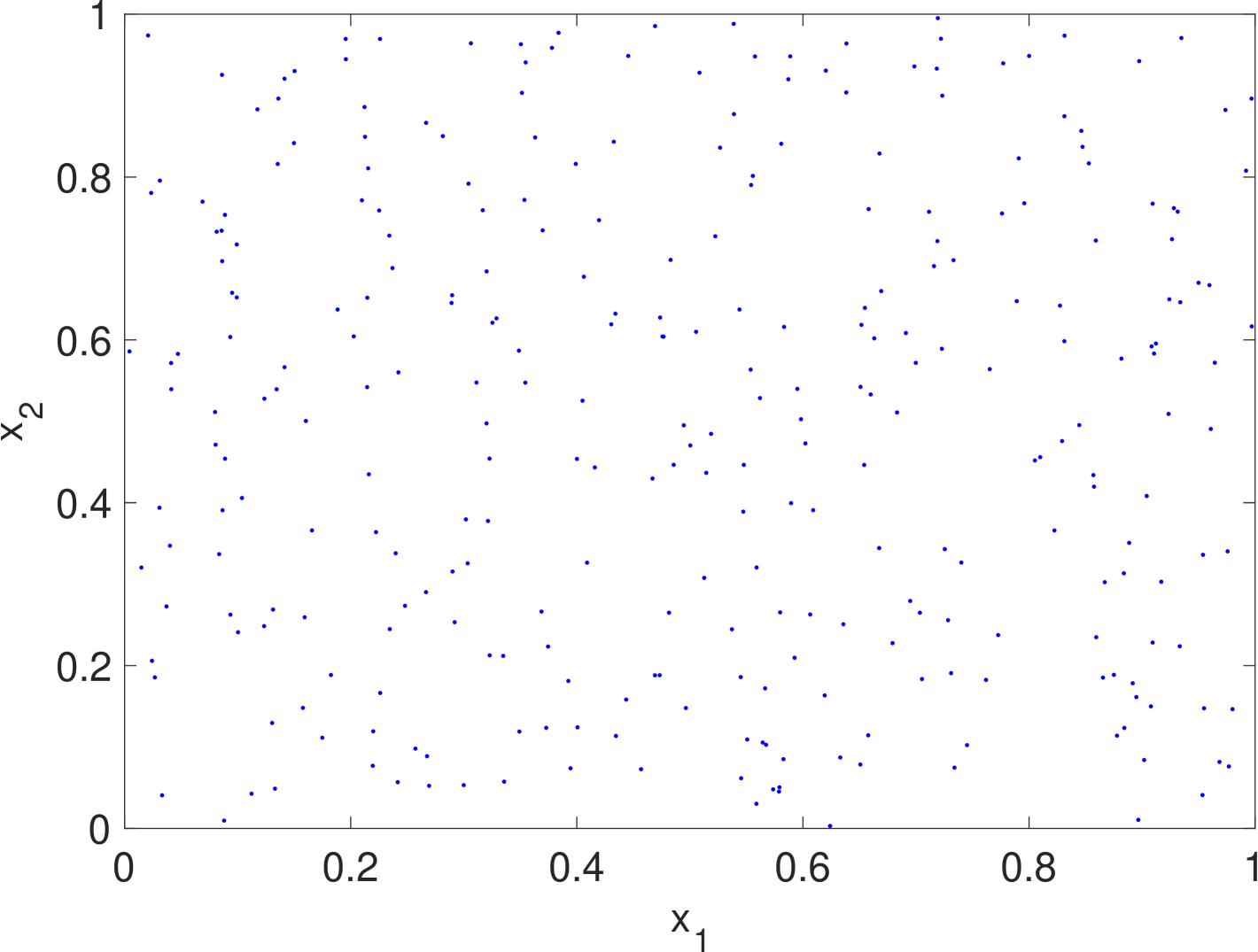

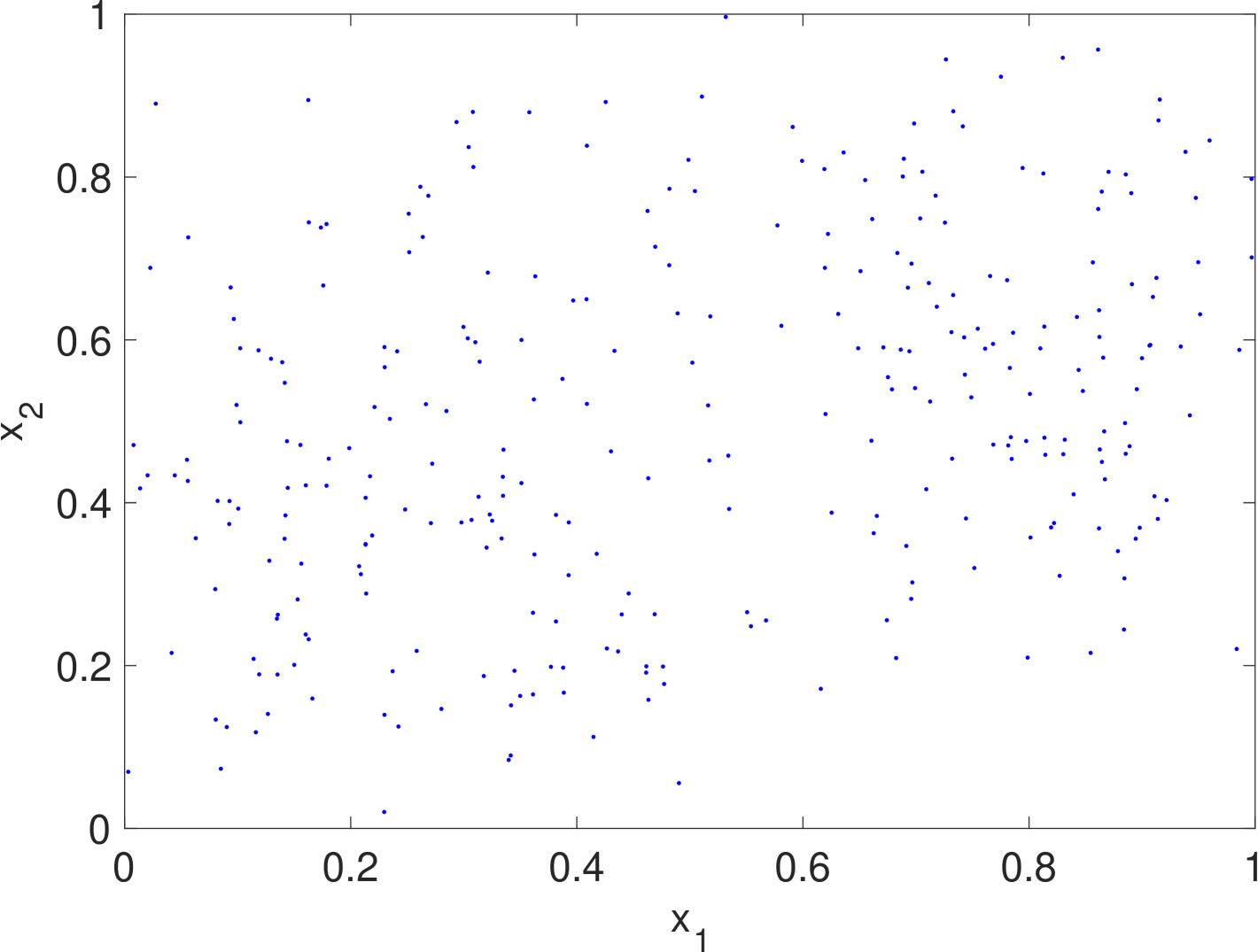

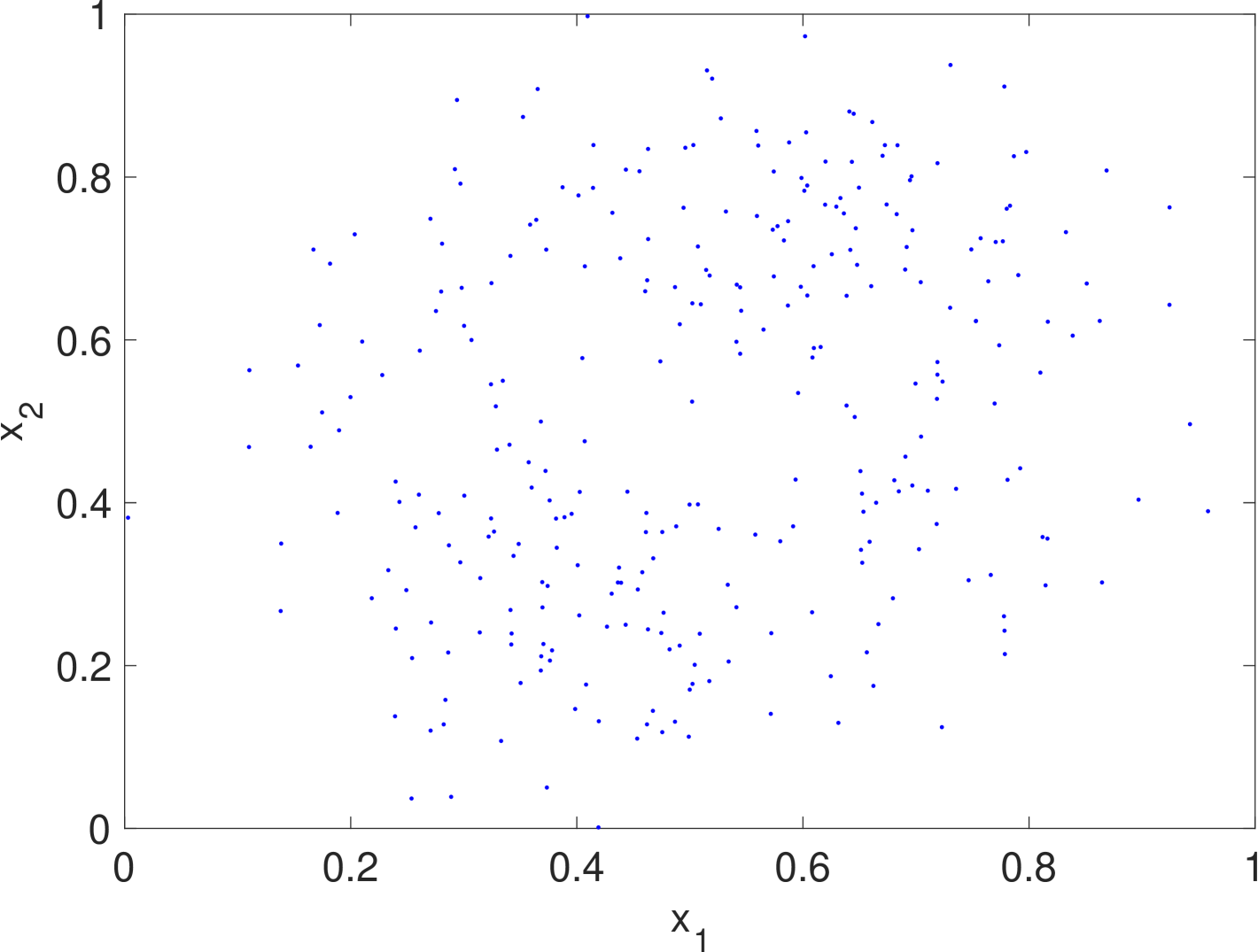

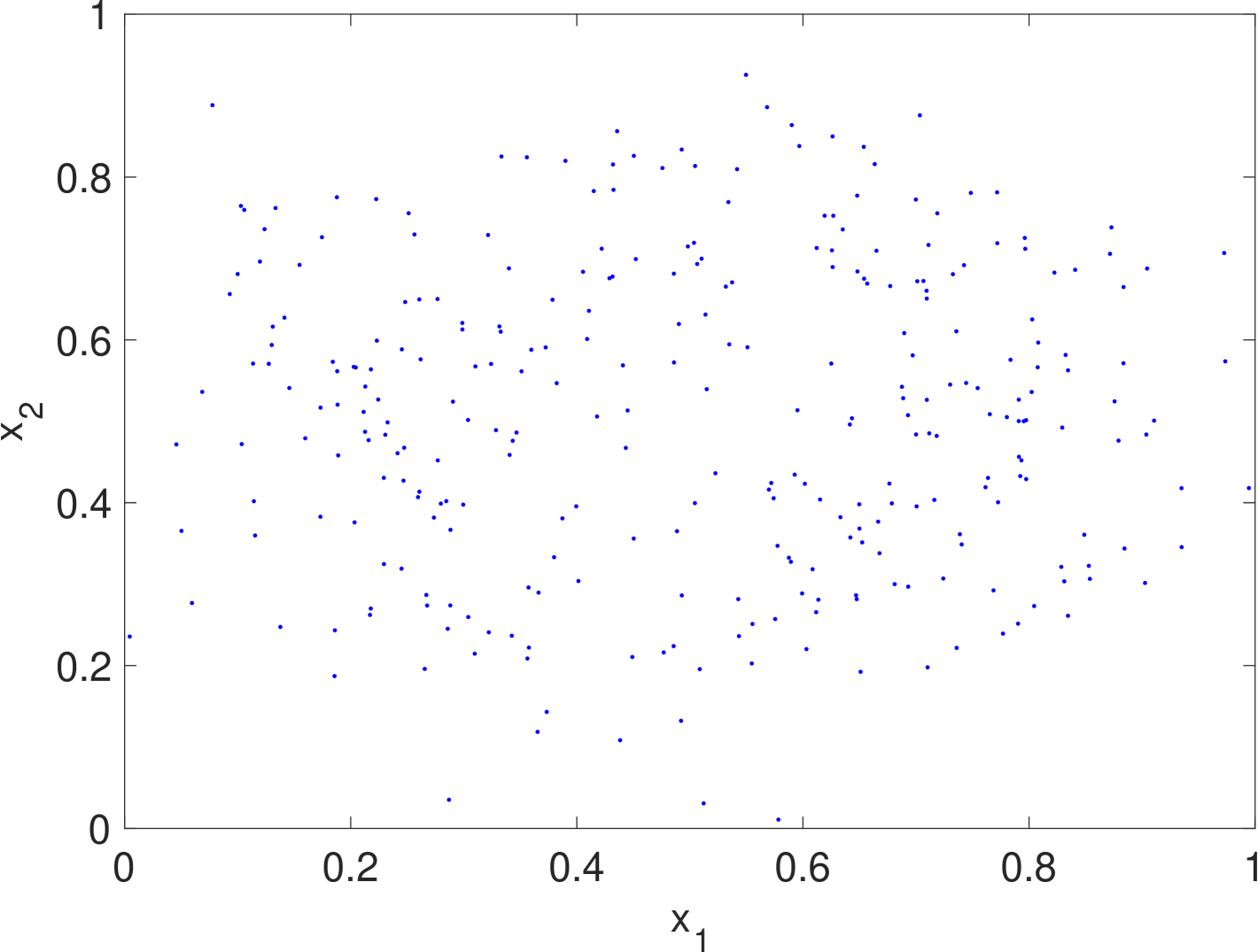

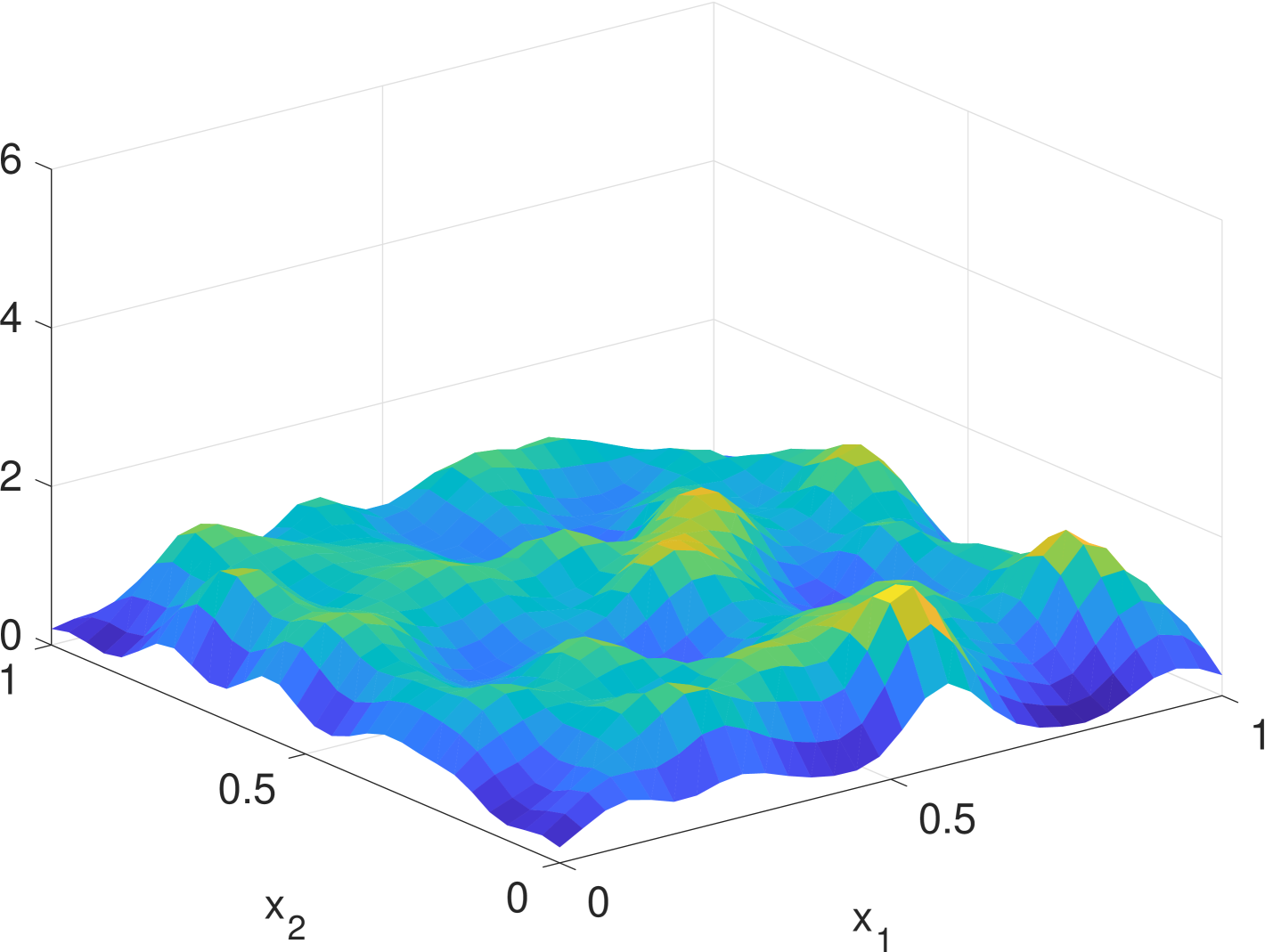

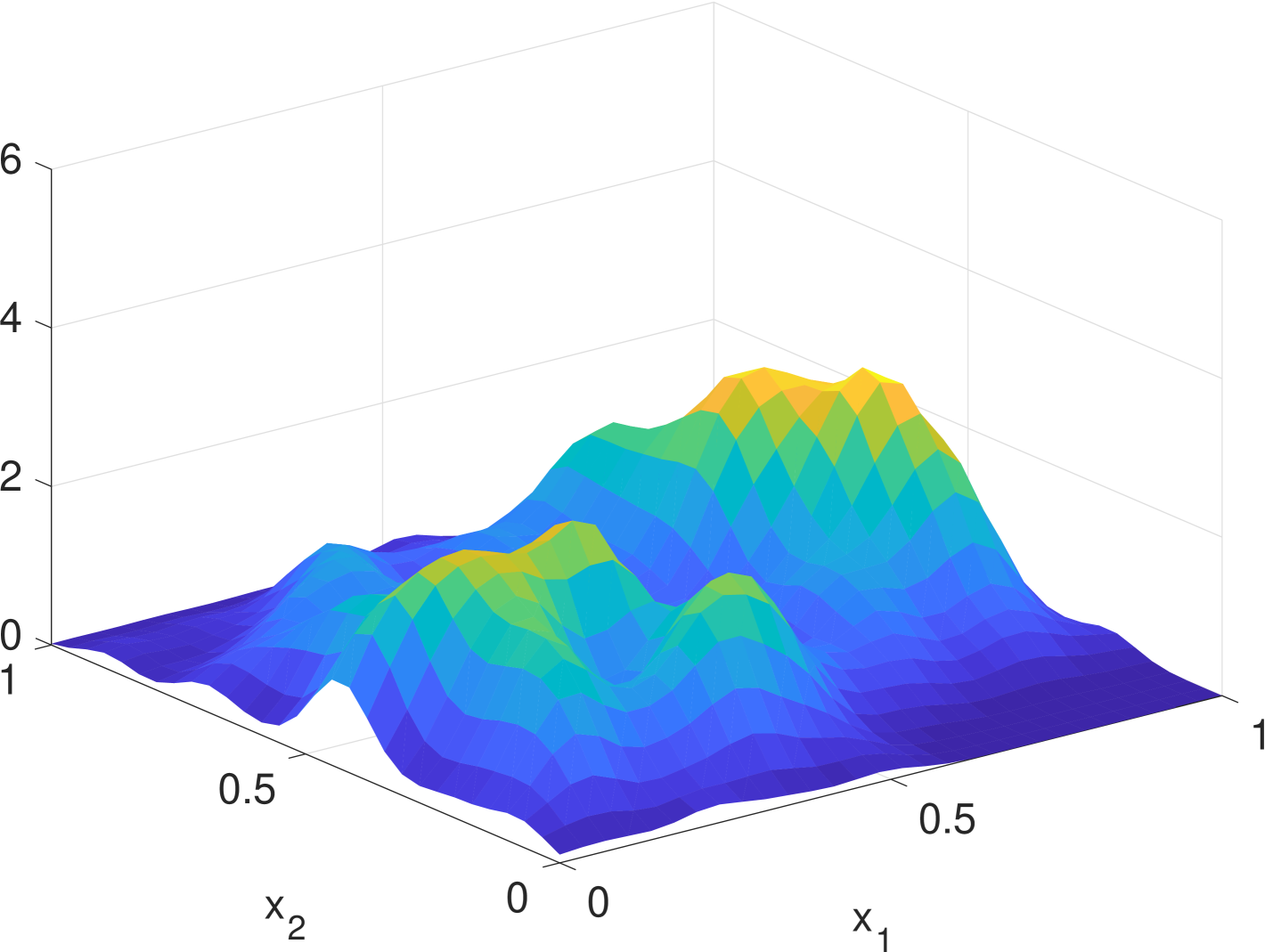

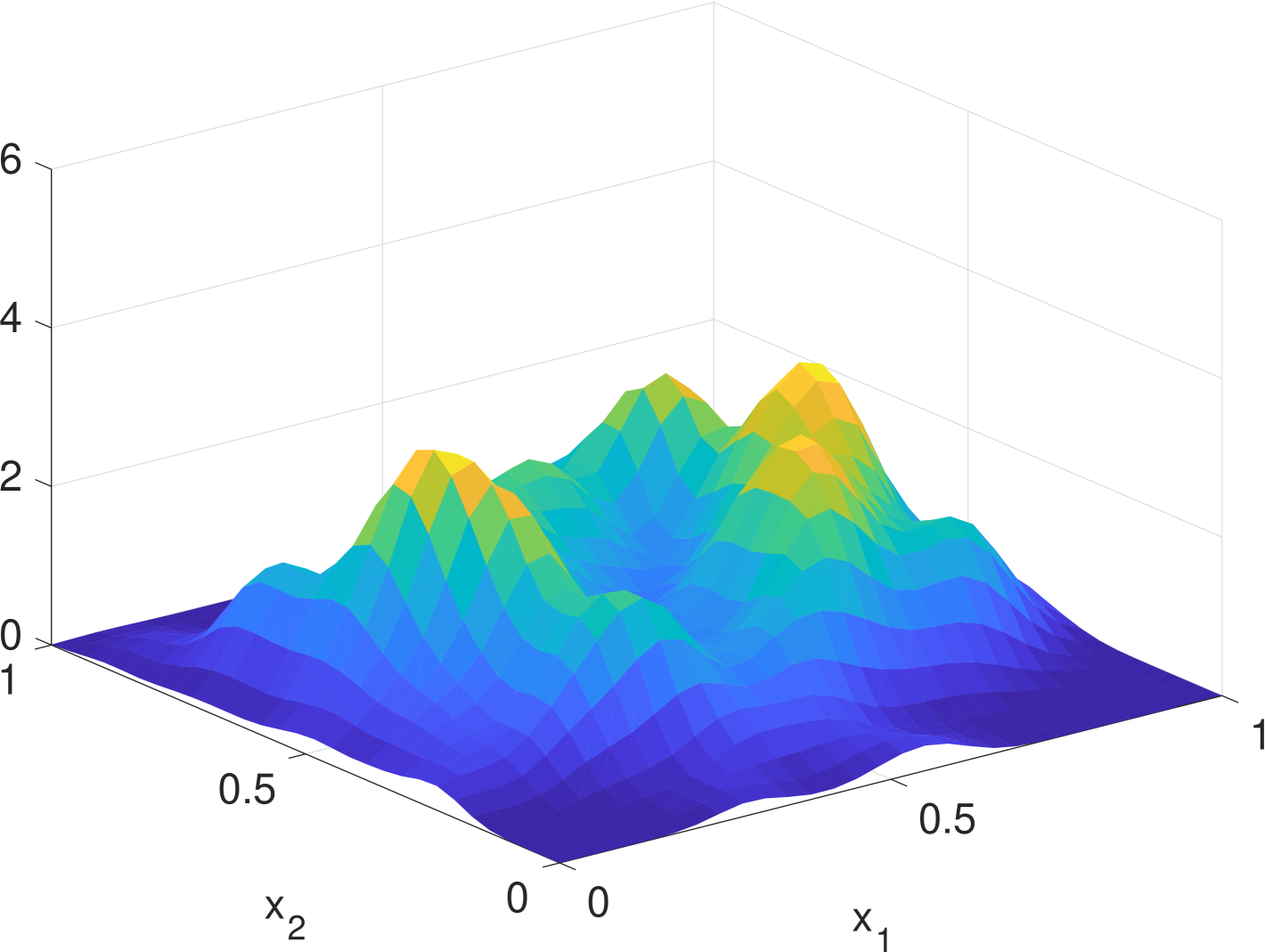

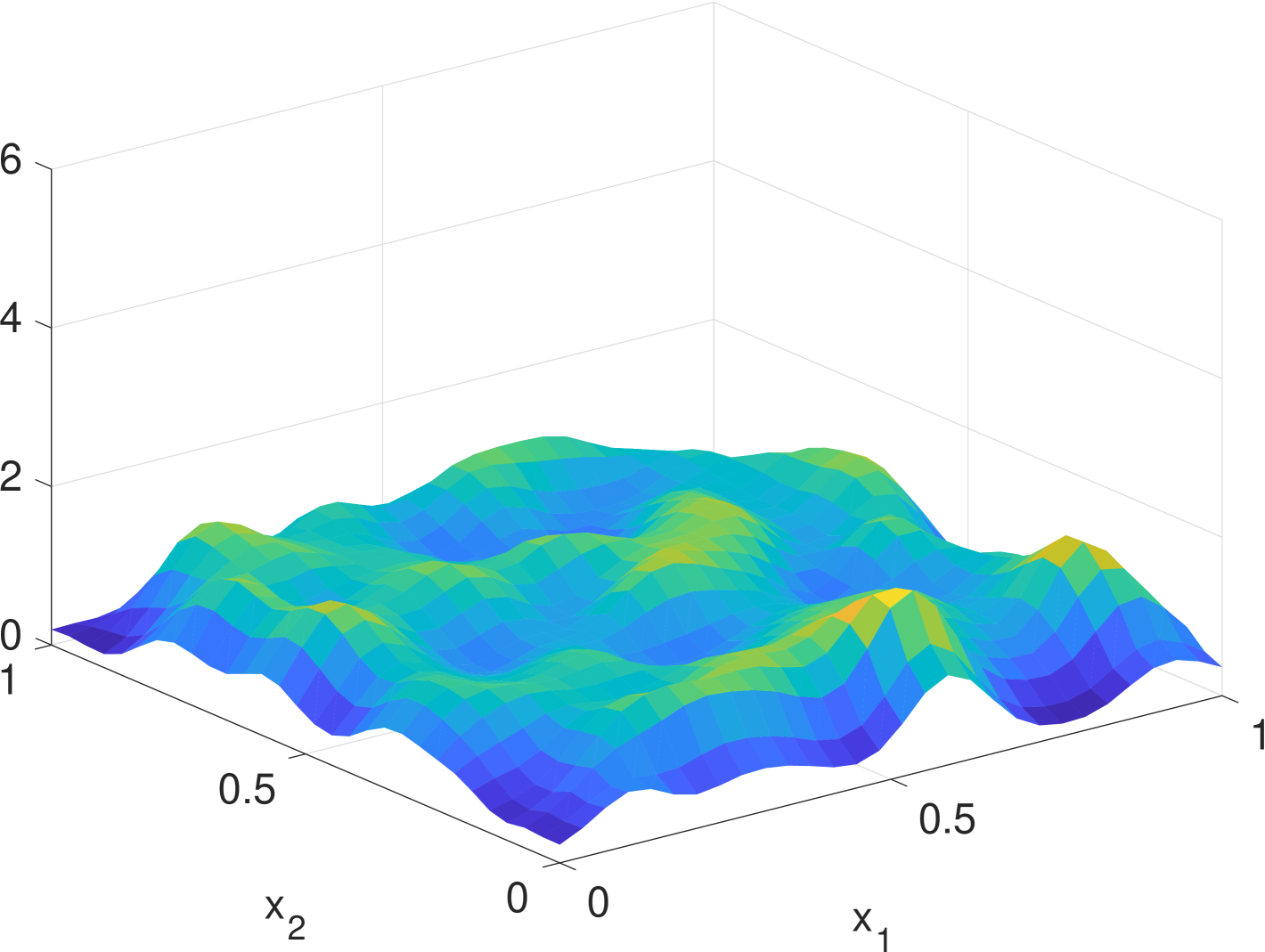

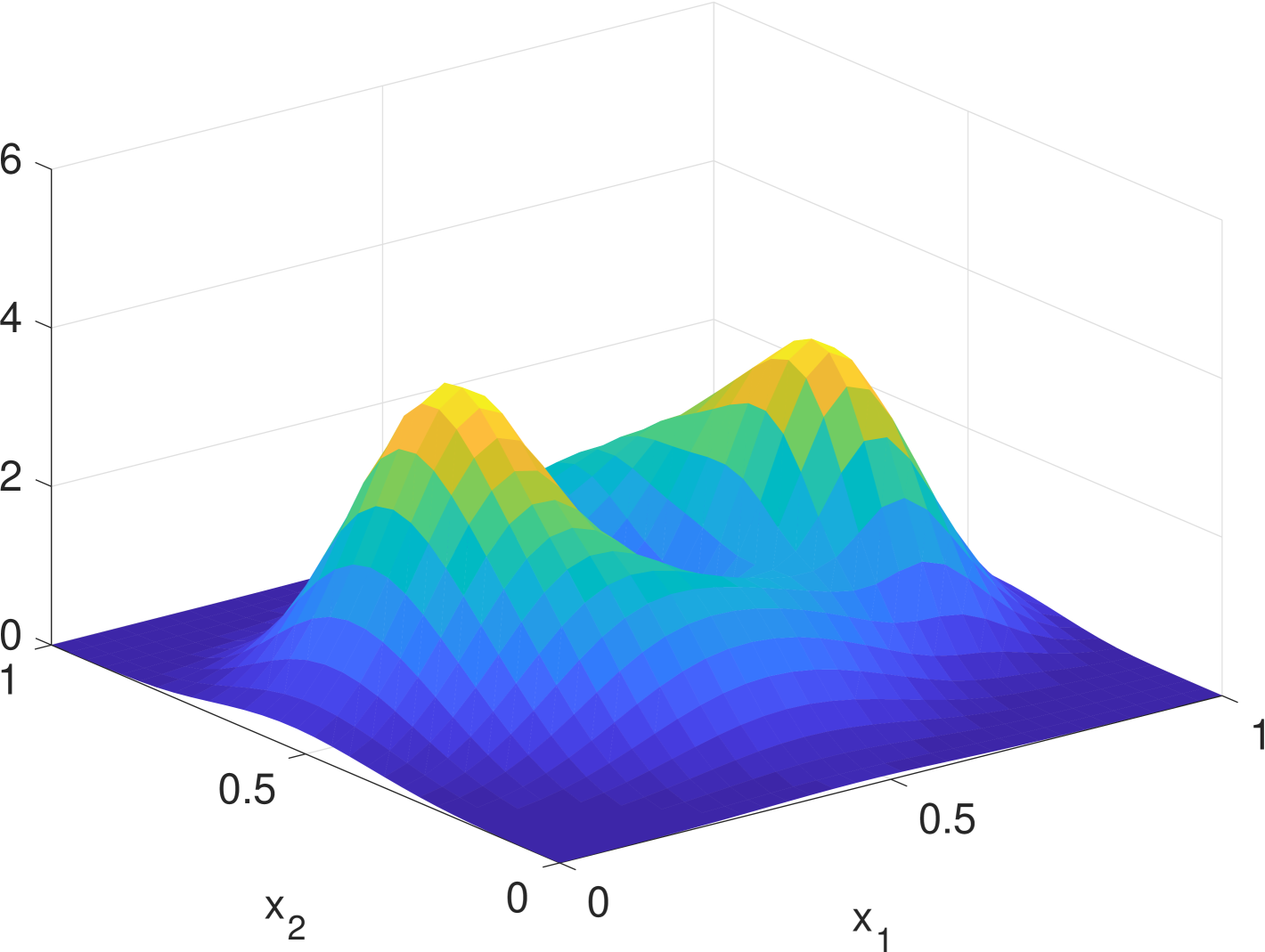

Simulation results are given in Fig. 2. We see that the estimate by KDE is usually rugged, especially in the early stage when the true density is nearly uniform. (If we had known that it is nearly uniform, we would have chosen a much larger bandwidth for better smoothing.) In the late stage, the estimate by KDE has two major components since the samples are more concentrated. But it still has undesired modes due to outliers. The density filter, by taking advantage of the dynamics, quickly ”recognizes” the two underlying Gaussian components and gradually catches up with the evolution of the ground truth density. In Fig. 1, we compare the norms of estimation errors of the KDE and the density filter under different choices of bandwidth. It shows that the estimation error of the density filter quickly converges and the performance is robust to different choices of bandwidth.

VI Conclusion

We have presented a density filter for estimating the dynamic density of large-scale agent systems with known dynamics by combining KDE with infinite-dimensional Kalman filters. The density filter improved its estimation using the dynamics and the real-time states of the agents. It was scalable to the population of agents and was proved to be convergent. All results held even if the agents’ dynamics are nonlinear and time-varying. This algorithm can be used for many density-based optimization and control tasks of large-scale agent systems when density feedback information is required, and can be potentially extended to other dynamic density estimation problems when certain prior knowledge of its spatiotemporal evolution is available, such as population migration. Our future work includes addressing the remaining problems noted in Remark 2 and 3, decentralizing the density filter and integrating it into density feedback control for large-scale agent systems.

References

- [1] G. Foderaro, P. Zhu, H. Wei, T. A. Wettergren, and S. Ferrari, “Distributed optimal control of sensor networks for dynamic target tracking,” IEEE Transactions on Control of Network Systems, vol. 5, no. 1, pp. 142–153, 2016.

- [2] B. W. Silverman, Density Estimation for Statistics and Data Analysis. CRC Press, 1986, vol. 26.

- [3] G. Foderaro, S. Ferrari, and M. Zavlanos, “A decentralized kernel density estimation approach to distributed robot path planning,” in Proceedings of the Neural Information Processing Systems Conference, Lake Tahoe, NV, 2012.

- [4] V. Krishnan and S. Martínez, “Distributed control for spatial self-organization of multi-agent swarms,” SIAM Journal on Control and Optimization, vol. 56, no. 5, pp. 3642–3667, 2018.

- [5] M. H. de Badyn, U. Eren, B. Açikmeşe, and M. Mesbahi, “Optimal mass transport and kernel density estimation for state-dependent networked dynamic systems,” in 2018 IEEE Conference on Decision and Control (CDC). IEEE, 2018, pp. 1225–1230.

- [6] S. R. Sain, “Multivariate locally adaptive density estimation,” Computational Statistics & Data Analysis, vol. 39, no. 2, pp. 165–186, 2002.

- [7] S. J. Sheather and M. C. Jones, “A reliable data-based bandwidth selection method for kernel density estimation,” Journal of the Royal Statistical Society: Series B (Methodological), vol. 53, no. 3, pp. 683–690, 1991.

- [8] A. Qahtan, S. Wang, and X. Zhang, “Kde-track: An efficient dynamic density estimator for data streams,” IEEE Transactions on Knowledge and Data Engineering, vol. 29, no. 3, pp. 642–655, 2016.

- [9] S. J. Julier and J. K. Uhlmann, “Unscented filtering and nonlinear estimation,” Proceedings of the IEEE, vol. 92, no. 3, pp. 401–422, 2004.

- [10] I. Arasaratnam and S. Haykin, “Cubature kalman filters,” IEEE Transactions on automatic control, vol. 54, no. 6, pp. 1254–1269, 2009.

- [11] Z. Chen et al., “Bayesian filtering: From kalman filters to particle filters, and beyond,” Statistics, vol. 182, no. 1, pp. 1–69, 2003.

- [12] R. Olfati-Saber, “Distributed kalman filtering for sensor networks,” in 2007 46th IEEE Conference on Decision and Control. IEEE, 2007, pp. 5492–5498.

- [13] S. Bandyopadhyay and S.-J. Chung, “Distributed estimation using bayesian consensus filtering,” in 2014 American control conference. IEEE, 2014, pp. 634–641.

- [14] G. Battistelli and L. Chisci, “Kullback–leibler average, consensus on probability densities, and distributed state estimation with guaranteed stability,” Automatica, vol. 50, no. 3, pp. 707–718, 2014.

- [15] R. E. Kalman and R. S. Bucy, “New results in linear filtering and prediction theory,” Journal of basic engineering, vol. 83, no. 1, pp. 95–108, 1961.

- [16] P. L. Falb, “Infinite-dimensional filtering: The kalman-bucy filter in hilbert space,” Information and Control, vol. 11, no. 1-2, pp. 102–137, 1967.

- [17] A. Bensoussan, Filtrage optimal des systèmes linéaires. Dunod, 1971.

- [18] G. Da Prato and J. Zabczyk, Stochastic equations in infinite dimensions. Cambridge university press, 2014.

- [19] E. D. Sontag and Y. Wang, “On characterizations of the input-to-state stability property,” Systems & Control Letters, vol. 24, no. 5, pp. 351–359, 1995.

- [20] S. Dashkovskiy and A. Mironchenko, “Input-to-state stability of infinite-dimensional control systems,” Mathematics of Control, Signals, and Systems, vol. 25, no. 1, pp. 1–35, 2013.

- [21] G. A. Pavliotis, Stochastic processes and applications: diffusion processes, the Fokker-Planck and Langevin equations. Springer, 2014, vol. 60.

- [22] T. Cacoullos, “Estimation of a multivariate density,” Annals of the Institute of Statistical Mathematics, vol. 18, no. 1, pp. 179–189, 1966.

- [23] J. Chang and G. Cooper, “A practical difference scheme for fokker-planck equations,” Journal of Computational Physics, vol. 6, no. 1, pp. 1–16, 1970.