Penalized Principal Component Analysis Using Smoothing

Corresponding author: rebeccahurwitz@hsph.harvard.edu)

Abstract

Principal components computed via PCA (principal component analysis) are traditionally used to reduce dimensionality in genomic data or to correct for population stratification. In this paper, we explore the penalized eigenvalue problem (PEP) which reformulates the computation of the first eigenvector as an optimization problem and adds an penalty constraint to enforce sparseness of the solution. The contribution of our article is threefold. First, we extend PEP by applying smoothing to the original LASSO-type penalty. This allows one to compute analytical gradients which enable faster and more efficient minimization of the objective function associated with the optimization problem. Second, we demonstrate how higher order eigenvectors can be calculated with PEP using established results from singular value decomposition (SVD). Third, we present four experimental studies to demonstrate the usefulness of the smoothed penalized eigenvectors. Using data from the 1000 Genomes Project dataset, we empirically demonstrate that our proposed smoothed PEP allows one to increase numerical stability and obtain meaningful eigenvectors. We also employ the penalized eigenvector approach in two additional real data applications (computation of a polygenic risk score and clustering), demonstrating that exchanging the penalized eigenvectors for their smoothed counterparts can increase prediction accuracy in polygenic risk scores and enhance discernibility of clusterings. Moreover, we compare our proposed smoothed PEP to seven state-of-the-art algorithms for sparse PCA and evaluate the accuracy of the obtained eigenvectors, their support recovery, and their runtime.

Keywords: 1000 Genomes Project; Covariance Matrix; Eigenvector; Genomic Relationship Matrix; Nesterov; Principal Component Analysis; Singular Value Decomposition; Smoothing.

1 Introduction

Statistical genomic analyses often utilize eigenvectors to adjust and correct for population stratification, or differences in frequencies of alleles between subgroups in a population. Eigenvectors are routinely used in principal component analysis (PCA) to transform large genomic datasets into reduced, lower-dimensional representations while aiming to preserve a maximal amount of information.

The classic eigenvector problem can easily be generalized to the so-called generalized eigenvector problem (GEP), which asks to find a pair satisfying such that for two given matrices and . As GEP generalizes the usual eigenvector concept, its applications likewise cover multivariate analysis, PCA, canonical correlation analysis (CCA), linear discriminant analysis (LDA), or invariant coordinate selection (Tyler et al.,, 2009; Li,, 2007). Moreover, GEP appears in nonlinear dimension reduction (Kokiopoulou et al.,, 2011) and in computer vision and image processing (Zhang et al.,, 2013). Naturally, several variants of GEP have been considered in the literature, for instance the -constrained GEP (Sriperumbudur et al.,, 2011), numerical approximations (Song et al.,, 2015), the computation of GEP via maximization of the Rayleigh coefficient (Tan et al.,, 2018), the computation of multiple eigen-pairs (Song et al.,, 2015), or GEP under the assumption of positive definiteness (Han and Clemmensen,, 2016).

As noted in Jung et al., (2019), sparsity-inducing methods for computing eigenvectors and factor loadings have the potential to improve estimation accuracy in settings of high dimensions and low sample size, thus providing better interpretable eigenvectors through variable selection. For this reason, in Witten and Tibshirani, (2011) the authors introduce an optimization problem called the penalized eigenvalue problem (PEP). In PEP, the computation of the first eigenvector is expressed as the optimization of a quadratic form, where the maximum value of the objective function is attained for the first eigenvector. Such a formulation is interesting as it allows one to add a LASSO-type penalty (Tibshirani,, 1996) with loss to the objective function, thereby modifying the problem (Gaynanova and Wang,, 2019; Guan,, 2022). The tradeoff incurred by controlling the sparseness at the expense of changing the problem is one of the topics of the present work.

Solving the optimization problem posed by PEP comes with numerical issues which are caused by the non-differentiability of the penalty. In this work, we are interested in understanding the behavior of PEP when substituting the penalty with a smooth surrogate. The smooth surrogate is derived with the help of smoothing (Chen and Mangasarian,, 1995; Nesterov,, 2005; Trendafilov and Gallo,, 2021), and it is guaranteed to be uniformly close to the original penalty while being differentiable everywhere. Moreover, we apply a computational trick involving an SVD decomposition in order to extract higher order eigenvectors.

We validate our approach in four experimental studies. First, we apply it to a dataset of the 1000 Genomes Project (The 1000 Genomes Project Consortium,, 2015, 2023), a catalogue of human genetic variation created with the goal of discerning common variants frequent in at least percent of the populations studied. To assess the quality of eigenvectors computed for cluster analysis, we compute within-cluster and between-cluster sums of squares as well as silhouette scores. Through those experiments, we demonstrate that our smoothing approach allows one to obtain numerically more stable eigenvectors. Second, we compare the penalized eigenvectors and the smoothed penalized eigenvectors in a polygenic risk score application which aims to predict SARS-CoV-2 mortality. We demonstrate that using the smoothed penalized eigenvectors yields a polygenic risk score with increased accuracy, measured using the area under the curve (AUC) metric, than using the unsmoothed counterparts. Third, we apply the unsmoothed and smoothed PEP to analyze clusters in the Iris benchmark dataset (UC Irvine Machine Learning Repository,, 1988), demonstrating that the principal components computed with smoothed PEP lead to an increased discernibility of the clustering. Fourth, we compare smoothed PEP with seven state-of-the-art algorithms for sparse PCA (Journée et al.,, 2008; d’Aspremont et al.,, 2007; Jung et al.,, 2019; Gaynanova et al.,, 2017; Song et al.,, 2015; Zhang et al.,, 2010; Shen and Huang,, 2008) on simulated data whereby we generate matrices with known planted eigenvectors and sparsity. We evaluate all approaches with respect to the accuracy of the obtained eigenvectors, their support recovery, and their runtime.

This article is structured as follows. Section 2 introduces both the penalized eigenvalue problem as well as smoothing methodology before presenting our proposed smoothed version of PEP. It also highlights our approach for computing higher order eigenvectors, discusses an iterative solving algorithm, and proposes a thresholding approach to enforce sparsity. All experiments are included in Section 3, where we showcase our experiments on the data of the 1000 Genomes Project, the polygenic risk score and clustering applications, as well as the simulation study with other state-of-the-art algorithms for sparse PCA. The article concludes with a discussion in Section 4. Two additional simulations can be found in Appendix A.

The proposed methodology has been implemented in the R-package SPEV, available on CRAN (Hurwitz and Hahn,, 2023). Throughout the article, the norm is denoted with , the Euclidean norm is denoted with , and denotes the indicator function.

2 Methods

This section starts with a review of the penalized eigenvalue problem (Section 2.1), introduced in Gaynanova et al., (2017). Section 2.2 reviews the smoothing of piecewise affine functions, which serves as the basis of the proposed smoothing approach for the penalized eigenvalue problem proposed in this publication. In Section 2.3, we identify the non-smooth component of the penalized eigenvalue problem and apply smoothing to it. We conclude with a series of remarks on the extraction of higher-order eigenvectors besides the first eigenvector (Section 2.4), some considerations on an iterative solving scheme (Section 2.5), and thresholding to enforce sparsity (Section 2.6).

2.1 The penalized eigenvalue problem

In order to allow for the addition of a penalty to the eigenvalue or eigenvector problem, we first formulate the computation of the leading eigenvector as an optimization problem (Golub and van Loan,, 1996). Precisely, letting be a symmetric and positive semidefinite matrix for a fixed dimension , and be a symmetric and strictly positive definite matrix, it can be shown that the solution of the optimization problem

| (1) |

is the leading eigenvector of the matrix . Following this observation, the penalized eigenvalue problem of Gaynanova et al., (2017) is defined by adding an penalty to the objective function being maximized in eq. (1), leading to the Lasso-type (Tibshirani,, 1996) objective function

| (2) |

where is assumed to be the identity matrix, and is a penalty parameter. Note that in eq. (2), the objective is maximized, thus the penalty (which is non-negative) is being subtracted.

2.2 A brief overview of the smoothing methodology

We employ the unified framework of Chen and Mangasarian, (1995); Nesterov, (2005); Trendafilov and Gallo, (2021) to smooth the objective function in eq. (2). We consider the smoothing of a piecewise affine and convex function , where . As noted in Chen and Mangasarian, (1995); Nesterov, (2005); Trendafilov and Gallo, (2021), piecewise affine functions can be written as

| (3) |

where and denotes the concatenation of and the scalar . The function in eq. (3) is called piecewise affine as it is composed of linear pieces, parameterized by the rows of . To be precise, each row in contains the coefficients of one of the linear pieces, with the constant coefficients being in column .

A smooth surrogate for the function of eq. (3) is given by

| (4) |

In eq. (4), the optimization is carried out over the unit simplex in dimensions, given by

The function which appears in eq. (4) is called the prox-function. The prox-function must be nonnegative, continuously differentiable, and strongly convex. A specific choice of the prox-function is given below. The parameter is called the smoothing parameter. The choice recovers .

The advantage of the smoothed surrogate of eq. (4) consists of the fact that it is both smooth for any choice and uniformly close to , that is the approximation error is uniformly bounded as

| (5) |

see (Nesterov,, 2005, Theorem 1). As can be seen from eq. (4) and eq. (5), larger values of result in a stronger smoothing effect and a higher approximation error, while smaller values of result in a weaker smoothing effect and a higher degree of similarity between and .

The choice of the prox-function is not unique, and in Chen and Mangasarian, (1995); Nesterov, (2005); Trendafilov and Gallo, (2021), several choices for the prox-function can be found. In the remainder of the article, we focus on one particular choice called the entropy prox-function as it allows for simple closed-form expressions of the smoothed objective for the penalized eigenvalue problem of eq. (2). The entropy prox-function is given by

| (6) |

and as shown in (Nesterov,, 2005, Section 4.1) and Hahn et al., 2021b ; Hahn et al., (2020), it satisfies the uniform bound

| (7) |

on the approximation error between and .

2.3 A smoothed version of the penalized eigenvalue problem

Equipped with the results from Section 2.2, we now state the proposed smoothed version of the penalized eigenvalue problem of eq. (2). To this end, it is important to note that in eq. (2), the quadratic form is differentiable, while the penalty is not. We thus apply the smoothing to the penalty of the objective function only. Moreover, since in eq. (2) for can be written as , it suffices to smooth the non-differentiable absolute value applied to each entry of the vector separately.

The absolute value can be expressed in the form of eq. (3) with pieces, that is the function with

where is a scalar. We aim to replace the absolute value by the smooth surrogate with the entropy prox-function of eq. (6). Simplifying eq. (6) for the specific choice of results in

| (8) |

which is the smooth surrogate for the absolute value we use. Leaving the quadratic form in eq. (2) unchanged, and substituting the penalty by its smoothed version , then results in the smoothed penalized eigenvalue problem

| (9) |

Due to its simple form, the first derivative of can be stated explicitly as

Therefore, denoting the objective function in eq. (9) as

its gradient is given by

which is useful for the maximization of eq. (9) via gradient descent/ ascent solvers.

2.4 Extraction of further eigenvectors via SVD

Solving the unsmoothed penalized eigenvalue problem of eq. (2), or the smoothed version of eq. (9), yields the leading eigenvector. Naturally, we are interested in further eigenvectors, in particular in Section 3 we will present population stratification plots of the first two leading eigenvectors, and compute a polygenic risk score using the first leading eigenvectors.

Deflation is a standard technique to extract higher order eigenvectors from a matrix , see Mackey, (2009); Trendafilov and Gallo, (2021). The idea is based on the Eckart-Young theorem (Eckart and Young,, 1936) for the SVD representation , where is an unitary matrix, is an matrix with off-diagonal elements (that is, for ) being zero, and is a unitary matrix. The representation can be rewritten as , where denotes the outer product between two vectors and is the th entry on the diagonal of . Using this representation allows one to subtract the leading eigenvector from once it is known.

We therefore first solve the unsmoothed penalized eigenvalue problem of eq. (2), or the smoothed version of eq. (9), and obtain the leading eigenvector of a matrix , denoted as . We compute the corresponding eigenvalue using the Rayleigh coefficient as . We then compute , the matrix with leading eigencomponent subtracted. As seen from the Eckart–Young theorem, the representation still holds true, now with the second eigencomponent being the leading one. Applying the (unsmoothed or smoothed) penalized eigenvalue problem to thus yields the second penalized eigenvector.

2.5 Iterative solving approach

Using the smoothed version of the penalized eigenvalue problem of eq. (9) and its explicit gradient given in Section 2.3, the minimization of eq. (9) is carried out using the quasi-Newton method BFGS (Broyden–Fletcher–Goldfarb–Shanno) which is implemented in the function optim in R (R Core Team,, 2014).

To improve the numerical accuracy of optimizing eq. (9), an iterative approach can be used. Given a target value of and an additional parameter (the number of steps), we start solving eq. (9) using a random initial starting value and the smoothing parameter . After the optimization is complete, we use the obtained maximizer as the initial value for a new run, this time with smoothing parameter . We continue seeding each new optimization with the solution of the previous run while lowering the smoothing parameter further until the target smoothing parameter is reached. The target value of should be chosen as the machine precision or the square root of the machine precision. This is to ensure that the obtained estimates with smoothed PEP of eq. (9) closely resemble the ones obtained if the original PEP of eq. (2) had been solved.

2.6 Thresholding to enforce sparsity

Solving the smoothed PEP objective of eq. (9) yields an eigenvector which is not guaranteed to be sparse. This is due to the lack of an penalty in the formulation of eq. (9). Nevertheless, we can enforce sparsity again via thresholding. To be precise, let be the vector obtained by maximizing eq. (9). Denoting the vector as , we first choose a desired sparsity level . We then compute the threshold as the empirical -quantile of the values , thus ensuring that a proportion of is smaller than in absolute value. Finally, we threshold all entries in against , meaning we compute a new thresholded vector with entries for all .

3 Experimental results

In this section, we present our numerical results, which are subdivided into several sections. Section 3.1 details the experimental setting and Section 3.2 introduces the three datasets we employ, precisely the one of the 1000 Genomes Project (The 1000 Genomes Project Consortium,, 2015, 2023), the GISAID dataset (Elbe and Buckland-Merrett,, 2017; Shu and McCauley,, 2017) of SARS-CoV-2 nucleotide sequences, and the Iris dataset of the UC Irvine Machine Learning Repository (UC Irvine Machine Learning Repository,, 1988). The results for the unsmoothed penalized eigenvalue problem of eq. (2) are presented in Section 3.3 for a variety of Lasso penalties. The results for smoothed PEP are shown in Section 3.4 using the same Lasso penalties. Moreover, we are interested in the quality of the clusters spanned by the eigenvectors in the PCA population stratification plots, and present cluster assessment metrics in Section 3.5. The choice of the smoothing parameter is investigated in Section 3.6. Section 3.7 presents the first real data application, precisely the computation of a polygenic risk score to predict SARS-CoV-2 mortality using both unsmoothed and smoothed PEP eigenvectors. Section 3.8 presents the second real data application in the context of clustering in machine learning. Section 3.9 presents the third simulation study in which we compare our smoothed PEP to seven other state-of-the-art algorithms.

3.1 Experimental setting

In all of our experiments, whenever we refer to the smoothed version of PEP, we use the iterative solving approach outlined in Section 2.5 to solve eq. (9) with a target smoothing parameter of . The smoothed version of PEP is used with steps, that is starting from . The choice of the parameter is investigated further in Section 3.6.

Moreover, in all of our experiments, sparsity is enforced via thresholding as described in Section 2.6. We use a target sparsity of (5% percent zeros).

All experiments are carried out using the statistics software (R Core Team,, 2014). The maximization of unsmoothed PEP in eq. (2) is conducted with the FISTA algorithm of Beck and Teboulle, (2009). The smoothed PEP of eq. (9) is carried out using the optim function in . The parameter of eq. (2) and eq. (9) is given individually in each simulation subsection.

3.2 Datasets

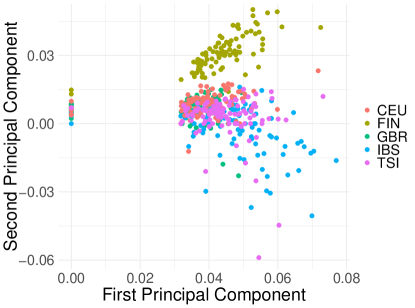

The first dataset under consideration is the 1000 Genomes Project (The 1000 Genomes Project Consortium,, 2015, 2023). The goal of the 1000 Genomes Project was to discover genetic variants with frequencies of at least percent in the populations studied. In order to prepare the raw 1000 Genomes Project data and select rare variants, we used PLINK2 (Purcell and Chang,, 2019) with the cutoff value set to 0.01 for the –max-maf option. We then used the LD pruning method with the parameteters –indep-pairwise set to . These results focus on the European super population (subpopulations: CEU, FIN, GBR, IBS, and TSI), with our dataset containing 503 subjects and 5 million rare variants in total.

Next, we compute a similarity measure on the genomes included in the dataset. We employ the genomic relationship matrix (GRM) of Yang et al., (2011). We then compute two sets of leading eigenvectors, precisely the first two eigenvectors of the unsmoothed (see eq. (2)) and the smoothed (see eq. (9)) penalized eigenvector problems, respectively.

The second dataset under consideration consists of 1000 nucleotide sequences of the SARS-CoV-2 virus, which were extracted from patients diagnosed with Covid-19. The sequences were downloaded from the GISAID database (Elbe and Buckland-Merrett,, 2017; Shu and McCauley,, 2017). The sequences have accession numbers in the range of EPI_ISL_404227 to EPI_ISL_766048. All nucleotide sequences were aligned with MAFFT (Katoh et al.,, 2002) to the official SARS-CoV-2 reference sequence (available on GISAID under the accession number EPI_ISL_402124) using the keeplength option, and with all other parameters set to their default values. This procedure yields aligned sequences of length base pairs.

After alignment, we compare each aligned nucleotide sequence against the reference sequence, resulting in a binary vector with indicating a mismatch to the reference genome, and otherwise. As before, we compute the genomic relationship matrix (GRM) of Yang et al., (2011) on the binary matrix obtained in this way (with each row corresponding to one sample), resulting in a similarity matrix for all subjects. The eigenvectors of the unsmoothed and smoothed PEP are computed on this similarity matrix (with for smoothed PEP). Additional to the SARS-CoV-2 nucleotide sequence for each patient, we also obtain patient status meta information from GISAID, consisting of age (numeric), sex (male, female), geographic region (WHO geographic region encoded as WPRO, EURO, AFRO, PAHO, EMRO, SEARO), and binary mortality (patient deceased which is encoded with 1, or alive which is encoded with 0).

The third dataset under consideration is the Iris dataset of UC Irvine Machine Learning Repository (UC Irvine Machine Learning Repository,, 1988), a simple but widely used benchmark for clustering. The dataset contains observations (rows), where each observation is a plant. The true labels is the plant species (setosa, versicolor, virginica). The covariates are the plant’s sepal length, sepal width, petal length, and petal width (all measured as floating point numbers). The task is to recover the species from the four covariates measured for each plant.

3.3 Results for the unsmoothed penalized eigenvectors

Figure 1 displays the results of unsmoothed PEP in eq. (2) using the first two eigenvectors, colored by subpopulation (CEU, FIN, GBR, IBS, and TSI) of the 1000 Genomes Project dataset. We evaluated unsmoothed PEP for equal to 0, 1, 10, and 100. We can see a good stratification for equal to 0, 1, and 10, indicated by the relative discernibility of colored population clusters (notably becoming less discernable as increases). We also observe that some entries of the principal component vectors are zero, leading to points which are located on the x-axis or y-axis. The amount of entries shrunk to zero seems to increase with , as expected. However, when increases to 100, the populations are no longer discernible.

In Appendix A we present two additional figures showing population stratification plots for the dataset of the 1000 Genomes Project. Those two additional figures are computed with (a) the classic eigenvectors, as well as (b) the first two principal components as in eq. (2), however with an norm instead of the norm.

3.4 Results for the smoothed penalized eigenvectors

Similarly to Figure 1, Figure 2 shows the results for the smoothed version of PEP of eq. (9) using the first two eigenvectors, colored by subpopulation (CEU, FIN, GBR, IBS, and TSI). As before, we evaluated smoothed PEP for equal to 0, 1, 10, and 100. However, in contrast to Figure 1, we see good stratification across all penalty values, again indicated by the relative discernibility of colored population clusters. While the clusters still become less discernable as increases, for we see a plot that is much more clearly clustered and conspicuous than the equivalent one in Figure 1. As before, the thresholding operation shrinks some of the entries in the first and second principal components to zero, leading to points which are located on the x-axis or y-axis. The amount of entries shrunk to zero seems to increase with , as expected.

3.5 Assessing clusters

The population stratification plots of Section 3.3 and Section 3.4 exhibit slightly different clusters by population. Naturally, our aim is to achieve a good separation between the clusters. In order to quantify the quality of the clustering achieved, we compute three metrics, the within- and between-cluster sums of squares as well as the silhouette score. As in Section 3.1, denotes the number of subjects, which is also the dimension of the matrix of Section 2. We denote the number of clusters with , and the set of points contained in the -th cluster with , where and . Moreover, we denote each point in the population stratification plots with and the cluster this point belongs to with , where . The three aforementioned metrics are defined as follows:

-

1.

The within-cluster sum of squares is defined as

(10) where denotes the mean of all the points in the -th cluster , where , and denotes the number of elements in the set .

-

2.

The between-cluster sum of squares is defined as

(11) where is the mean of all the points , where .

-

3.

The silhouette score for point is defined as

(12) where is the average distance of to all the other data points in the same cluster as , and the quantity is the minimum among all the mean distances of to the other clusters that does not belong to. We compute the silhouette score for each point with the help of the function silhouette of the R-package cluster (Maechler et al.,, 2022). We report the mean over all silhouette scores as a summary metric.

By evaluating , , and for the projected data points, we assess the effectiveness of the penalized eigenvalue problem solutions in separating clusters in meaningful ways. These metrics provide a robust way to compare the results obtained from the unsmoothed PEP of Section 3.3 and the smoothed PEP of Section 3.4.

Tables 1, 2, and 3 show the within-cluster sum of squares, between-cluster sum of squares, and silhouette scores for the clusters displayed in Figure 1 and Figure 2 (again for ). For the within-cluster sum of squares and the silhouette score (Tables (1) and (3)), a smaller value indicates better clustering, and for the between-cluster sum of squares metric (Table (2)), a larger value indicates a better clustering. Across all tables, for both the smoothed and unsmoothed PEP, we can see that as we increase the Lasso penalty , the quality of the clusters decreases across the board. We also note that as the values increase, the smoothed models achieve better clustering in comparison to the unsmoothed models across all three metrics. Therefore, we posit that this smoothing method enhances the performance of these models via more discernable clustering.

| Model | 0 | 1 | 10 | 100 |

|---|---|---|---|---|

| Unsmoothed PEP | 1.6654 | 1.6702 | 1.7244 | 1.8839 |

| Smoothed PEP | 1.6621 | 1.6626 | 1.6665 | 1.6910 |

| Model | 0 | 1 | 10 | 100 |

|---|---|---|---|---|

| Unsmoothed PEP | 0.1278 | 0.1231 | 0.0912 | 0.0770 |

| Smoothed PEP | 0.1334 | 0.1313 | 0.1149 | 0.0531 |

| Model | 0 | 1 | 10 | 100 |

|---|---|---|---|---|

| Unsmoothed PEP | -0.0459 | -0.0475 | -0.0610 | -0.2066 |

| Smoothed PEP | -0.0175 | -0.0183 | -0.0257 | -0.0828 |

3.6 Choice of the smoothing parameter

We investigate the effect of the smoothing parameter on the obtained clustering. For this, we compute the figures of Section 3.4 with different choices of . We note that if the Lasso penalty in eq. (2) is negligible, then so will be the norm penalty for the objective function in eq. (2). Therefore, exchanging the norm for the smoothed counterpart will likely have a minor effect only. Therefore, we choose a relatively large Lasso penalty of in order to see the influence of the penalty and, accordingly, of its smoothed approximation of Section 2.3.

Figure 3 shows results for the fixed choice and varying values of . In contrast to Figure 1 and Figure 2, the points in Figure 3 are not colored by population label in the 1000 Genomes Project data but unison by value of , precisely black for , blue for , green for , and red for . We observe that as increases, the plots become more and more compressed in one spot, whereas smaller values of nicely stratify the data. We explain this finding with the fact that for a fixed , larger values of will excessively smooth out the objective function, thus rendering the maximum delocalized. In contrast, smaller values of cause the smoothed objective of eq. (9) to be pointwise close to the unsmoothed one of eq. (2), thus recovering the original principal components.

3.7 Polygenic risk score

We aim to compare the impact of using either the unsmoothed or the smoothed PEP eigenvectors to compute a polygenic risk score to predict SARS-CoV-2 mortality. To be precise, using the dataset described in Section 3.1, we fit a linear regression model to the outcome (mortality as binary vector) as a function of age, sex, geographic region, and principal components computed on the GRM (genomic relationship matrix) similarity measure of the SARS-CoV-2 nucleotide sequences.

While keeping the regression model unchanged otherwise, we once use principal components computed with the unsmoothed PEP of eq. (2) and smoothed PEP of eq. (9). For smoothed PEP we employ . We train the model on a proportion of of the individuals, and evaluate the prediction against the true outcome on the withheld proportion of individuals. As the response is binary, we employ the AUC (area under the curve) metric to assess the accuracy of the prediction.

Figure 4 shows the results of this experiment as a function of the proportion used for training, both for the model using principal components from the unsmoothed (blue) and the smoothed (red) PEP. With an AUC above , we observe that indeed, the covariates age, sex, geographic region, and the principal components of the nucleotide data are good predictors of mortality. Moreover, we observe that the prediction accuracy increases with an increasing training proportion, which is to be expected. Importantly, using the principal components from the smoothed PEP seems to give a slight edge in prediction in comparison to using the unsmoothed PEP eigenvectors.

3.8 Clustering of the Iris machine learning benchmark

As a second real data example, we aim to visually assess the clustering obtained on the Iris benchmark dataset of the UC Irvine Machine Learning Repository (UC Irvine Machine Learning Repository,, 1988). This simple but widely used benchmark for clustering contains data on plants, each belonging to one of three species (setosa, versicolor, virginica). The covariates are the plant’s sepal length, sepal width, petal length, and petal width.

As described in Section 3.1, we first compute a similarity measure on the dataset to obtain a similarity matrix of dimensions , where each matrix entry is a measure of similarity between any pair of plants. As a similarity measure we use the Jaccard similarity matrix computed on (Jaccard,, 1901; Prokopenko et al.,, 2016; Hahn et al., 2021a, ), where is the Iris dataset (150 observations and 4 covariates). Afterwards, we compute two principal components with both unsmoothed and smoothed PEP (for ) and plot them. The results are shown in Figure 5, where each point is colored based on its true label. We observe that the principal components computed with smoothed PEP lead to visibly clearer clustering by species.

3.9 Comparison with state-of-the-art sparse PCA algorithms

We compare our smoothed PEP approach of eq. (9) with seven other state-of-the-art algorithms on simulated data. Those approaches are the power method for sparse principal component analysis of Journée et al., (2008), the semidefinite programming formulation of d’Aspremont et al., (2007), the penalized orthogonal iteration of Jung et al., (2019), the penalized formulation of Gaynanova et al., (2017), minorization-maximization algorithm for sparse PCA of Song et al., (2015), the first-order algorithm of Zhang et al., (2010), and the sPCA-rSVD algorithm Shen and Huang, (2008).

Specifically, we implement Algorithm 6 of Journée et al., (2008) (referred to as Journee6), Algorithm 2 of d’Aspremont et al., (2007) (referred to as Aspremont2), Algorithm 2 of Jung et al., (2019) (referred to as Jung2), Algorithm 1 of Gaynanova et al., (2017) (referred to as Gaynanova1), Algorithm 4 of Song et al., (2015) (referred to as Song4), Algorithm 1 of Zhang et al., (2010) (referred to as Zhang1), and Algorithm 1 of Shen and Huang, (2008) (referred to as Shen1). Our codes also use functionality of the R-packages RSpectra (Qiu et al.,, 2022), alabama (Varadhan,, 2023), irlba (Baglama et al.,, 2022), and epca (Chen,, 2023).

In order to be able to know the true sparse eigenvector, we employ the following simulation scenario. We generate square matrices of dimensions with a planted first eigenvector in its first column. The sparsity (proportion of zeros) of the first eigenvector is varied in . The nonzero entries of the first eigenvector are drawn independently from a uniform distribution in . The remaining columns are also randomly drawn from a uniform distribution in . We also generate a diagonal matrix of dimension with randomly drawn eigenvalues, sorted in decreasing order, on its diagonal. We use both and as the inputs of an eigendecomposition, and thus generate a test matrix as .

Using constructed in the aforementioned way, we apply our smoothed PEP algorithm of Section 2.5 (with ) and the aforementioned seven state-of-the-art algorithms to recover the first sparse eigenvector of . As the methods of Journée et al., (2008) and Zhang et al., (2010) return a full matrix, we only consider its first column. We assess all algorithms with respect to the accuracy of the obtained eigenvectors, their support recovery, and their runtime. This is done on the same data, that is we apply all algorithms and then return four metrics for each result. To be precise, we assess the accuracy of the returned sparse eigenvector by calculating the cosine similarity measure between the returned sparse eigenvector and the true (planted) eigenvector (ranging from to , where encodes maximual disagreement of the two vectors, and encodes maximal agreement). We also consider the support recovery, meaning the ability to recover the true nonzero entries. We measure this with the true positive rate, meaning the proportion of truly recovered nonzero entries (ranging from to , where is worst and is best). At the same time, we also report the false positive rate, meaning the proportion of falsely proclaimed nonzero entries (ranging from to , where is best and is worst). Finally, we report the runtime of all algorithms in seconds.

The accuracy results are given in Table 4. We observe that, as expected, the accuracy of the solution decreases with . Interestingly, we do not observe a strong dependence of the accuracy on the sparsity . All algorithms achieve a reasonable cosine similarity with the planted true eigenvector. However, the algorithms of Gaynanova et al., (2017), Shen and Huang, (2008), and our smoothed PEP perform best.

Next, we look at the support recovery, specifically the ability to recover the true nonzero entries measured with the True Positive Rate in Table 5. We observe that all methods, possibly apart from the one of Shen and Huang, (2008) as increases, accurately recover the support in this experiment. It is noteworthy that the algorithms of Jung et al., (2019), Gaynanova et al., (2017), Zhang et al., (2010), and our smoothed PEP perform particularly well in this task. At the same time, we also assess the False Positive Rate, meaning the proportion of falsely identified nonzero entries in Table 6. Table 6 again shows that all methods, possibly apart from the ones of Song et al., (2015) and Shen and Huang, (2008), achieve a low false positive rate.

Finally, we assess the runtime (in seconds) of all algorithms in Table 7. We observe most methods are able to compute the leading sparse eigenvector in a fraction of a second for all matrices under consideration. Notably, the algorithms of Journée et al., (2008) and d’Aspremont et al., (2007) are much slower than the others.

| Sparsity | Cosine Similarity | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Journee6 | Aspremont2 | Jung2 | Gaynanova1 | Song4 | Zhang1 | Shen1 | Smoothed PEP | ||

| 10 | 0.1 | 0.9037999 | 0.5275607 | 0.11229495 | 0.9914799 | 0.92853305 | 0.32581428 | 0.9759526 | 0.9914892 |

| 0.2 | 0.9317490 | 0.8869066 | 0.58735246 | 0.9965534 | 0.91725763 | 0.63849359 | 0.9970452 | 0.9964332 | |

| 0.3 | 0.9089373 | 0.3214823 | 0.19747244 | 0.9973516 | 0.90620679 | 0.13018827 | 0.9894365 | 0.9971395 | |

| 0.4 | 0.8738096 | 0.8606155 | 0.04051950 | 0.9952860 | 0.73089078 | 0.19253621 | 0.9916363 | 0.9949463 | |

| 0.5 | 0.8927113 | 0.9034062 | 0.08860906 | 0.9945179 | 0.91792699 | 0.27524794 | 0.9971003 | 0.9942426 | |

| 20 | 0.1 | 0.9039006 | 0.4619756 | 0.14390288 | 0.9965611 | 0.88988985 | 0.24930905 | 0.9758550 | 0.9962799 |

| 0.2 | 0.6297668 | 0.4087665 | 0.17633557 | 0.9965252 | 0.90907549 | 0.09943160 | 0.9712495 | 0.9963236 | |

| 0.3 | 0.7863718 | 0.6305140 | 0.00825861 | 0.9937772 | 0.76705991 | 0.06413241 | 0.9655523 | 0.9934047 | |

| 0.4 | 0.7391398 | 0.8164070 | 0.23050779 | 0.9954877 | 0.90933260 | 0.27164501 | 0.9815923 | 0.9952056 | |

| 0.5 | 0.9278953 | 0.6929346 | 0.15936494 | 0.9964539 | 0.52389778 | 0.13210300 | 0.9731384 | 0.9958513 | |

| 50 | 0.1 | 0.8838522 | 0.1805368 | 0.12775405 | 0.9921327 | 0.84785138 | 0.10742227 | 0.8802893 | 0.9920830 |

| 0.2 | 0.8770866 | 0.2299920 | 0.22687887 | 0.9935021 | 0.84988699 | 0.15669446 | 0.8508515 | 0.9932049 | |

| 0.3 | 0.8785249 | 0.1048715 | 0.14177232 | 0.9943568 | 0.85364046 | 0.03533143 | 0.9335731 | 0.9936751 | |

| 0.4 | 0.2689499 | 0.5967501 | 0.11209041 | 0.9927075 | 0.06249241 | 0.16759339 | 0.9361840 | 0.9920095 | |

| 0.5 | 0.5923197 | 0.6376264 | 0.19823132 | 0.9946354 | 0.69934528 | 0.15969902 | 0.9290508 | 0.9936740 | |

| 100 | 0.1 | 0.8904958 | 0.2116873 | 0.10061459 | 0.9933355 | 0.88229786 | 0.17661137 | 0.7553414 | 0.9934581 |

| 0.2 | 0.1882329 | 0.2977159 | 0.11584467 | 0.9927417 | 0.88426056 | 0.06979850 | 0.8078601 | 0.9924776 | |

| 0.3 | 0.3219067 | 0.1805002 | 0.06364077 | 0.9943353 | 0.86676384 | 0.05520514 | 0.8453197 | 0.9936151 | |

| 0.4 | 0.1661451 | 0.4221019 | 0.02058195 | 0.9941975 | 0.81987146 | 0.08392663 | 0.8391396 | 0.9928401 | |

| 0.5 | 0.8969307 | 0.2601693 | 0.00060988 | 0.9943816 | 0.90599720 | 0.24571967 | 0.8685434 | 0.9930091 | |

| Sparsity | Support Recovery (True Positive Rate) | ||||||||

| Journee6 | Aspremont2 | Jung2 | Gaynanova1 | Song4 | Zhang1 | Shen1 | Smoothed PEP | ||

| 10 | 0.1 | 0.889 | 1.000 | 1.000 | 1.000 | 0.889 | 1.000 | 0.778 | 1.000 |

| 0.2 | 0.875 | 1.000 | 1.000 | 1.000 | 0.875 | 1.000 | 1.000 | 1.000 | |

| 0.3 | 0.857 | 1.000 | 1.000 | 1.000 | 0.857 | 1.000 | 0.714 | 1.000 | |

| 0.4 | 1.000 | 0.667 | 1.000 | 1.000 | 0.667 | 1.000 | 0.500 | 1.000 | |

| 0.5 | 0.800 | 0.600 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | |

| 20 | 0.1 | 0.944 | 1.000 | 1.000 | 0.944 | 0.889 | 1.000 | 0.722 | 1.000 |

| 0.2 | 1.000 | 1.000 | 1.000 | 1.000 | 0.750 | 1.000 | 0.563 | 1.000 | |

| 0.3 | 1.000 | 0.857 | 1.000 | 1.000 | 0.786 | 1.000 | 0.500 | 1.000 | |

| 0.4 | 1.000 | 0.833 | 1.000 | 1.000 | 0.917 | 1.000 | 0.833 | 1.000 | |

| 0.5 | 0.900 | 0.800 | 1.000 | 1.000 | 0.900 | 1.000 | 0.500 | 1.000 | |

| 50 | 0.1 | 0.978 | 1.000 | 1.000 | 0.978 | 0.778 | 1.000 | 0.289 | 1.000 |

| 0.2 | 0.975 | 1.000 | 1.000 | 1.000 | 0.850 | 1.000 | 0.275 | 1.000 | |

| 0.3 | 0.971 | 1.000 | 1.000 | 0.971 | 0.800 | 1.000 | 0.400 | 1.000 | |

| 0.4 | 1.000 | 0.933 | 1.000 | 1.000 | 0.233 | 1.000 | 0.500 | 1.000 | |

| 0.5 | 1.000 | 0.960 | 1.000 | 1.000 | 0.720 | 1.000 | 0.280 | 1.000 | |

| 100 | 0.1 | 0.989 | 1.000 | 1.000 | 0.967 | 0.767 | 1.000 | 0.167 | 1.000 |

| 0.2 | 0.988 | 1.000 | 1.000 | 0.913 | 0.663 | 1.000 | 0.200 | 1.000 | |

| 0.3 | 1.000 | 1.000 | 1.000 | 1.000 | 0.700 | 1.000 | 0.229 | 1.000 | |

| 0.4 | 1.000 | 0.967 | 0.983 | 0.983 | 0.717 | 1.000 | 0.250 | 1.000 | |

| 0.5 | 0.980 | 0.960 | 1.000 | 1.000 | 0.760 | 1.000 | 0.340 | 1.000 | |

| Sparsity | Support Recovery (False Positive Rate) | ||||||||

| Journee6 | Aspremont2 | Jung2 | Gaynanova1 | Song4 | Zhang1 | Shen1 | Smoothed PEP | ||

| 10 | 0.1 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |

| 0.2 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | |

| 0.3 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | |

| 0.4 | 0.250 | 0.250 | 0.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | |

| 0.5 | 0.000 | 0.400 | 0.000 | 0.200 | 1.000 | 0.000 | 1.000 | 0.000 | |

| 20 | 0.1 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |

| 0.2 | 0.250 | 0.000 | 0.000 | 0.250 | 1.000 | 0.000 | 1.000 | 0.000 | |

| 0.3 | 0.167 | 0.000 | 0.000 | 0.000 | 0.833 | 0.000 | 1.000 | 0.000 | |

| 0.4 | 0.125 | 0.000 | 0.000 | 0.125 | 1.000 | 0.000 | 1.000 | 0.000 | |

| 0.5 | 0.000 | 0.000 | 0.000 | 0.200 | 0.200 | 0.000 | 1.000 | 0.000 | |

| 50 | 0.1 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |

| 0.2 | 0.000 | 0.000 | 0.000 | 0.400 | 0.900 | 0.000 | 1.000 | 0.000 | |

| 0.3 | 0.000 | 0.000 | 0.000 | 0.267 | 1.000 | 0.000 | 1.000 | 0.000 | |

| 0.4 | 0.050 | 0.000 | 0.000 | 0.300 | 0.650 | 0.000 | 1.000 | 0.000 | |

| 0.5 | 0.040 | 0.000 | 0.000 | 0.160 | 0.760 | 0.000 | 1.000 | 0.000 | |

| 100 | 0.1 | 0.000 | 0.000 | 0.000 | 0.500 | 1.000 | 0.000 | 1.000 | 0.000 |

| 0.2 | 0.000 | 0.000 | 0.000 | 0.250 | 0.950 | 0.000 | 1.000 | 0.000 | |

| 0.3 | 0.033 | 0.000 | 0.000 | 0.367 | 1.000 | 0.000 | 1.000 | 0.000 | |

| 0.4 | 0.025 | 0.000 | 0.000 | 0.200 | 0.850 | 0.000 | 1.000 | 0.000 | |

| 0.5 | 0.000 | 0.000 | 0.000 | 0.300 | 1.000 | 0.000 | 1.000 | 0.000 | |

| Sparsity | Elapsed Time (seconds) | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Journee6 | Aspremont2 | Jung2 | Gaynanova1 | Song4 | Zhang1 | Shen1 | Smoothed PEP | ||

| 10 | 0.1 | 0.028 | 0.015 | 0.003 | 0.000 | 0.002 | 0.016 | 0.000 | 0.001 |

| 0.2 | 0.028 | 0.047 | 0.003 | 0.000 | 0.002 | 0.015 | 0.000 | 0.001 | |

| 0.3 | 0.027 | 0.015 | 0.003 | 0.000 | 0.053 | 0.014 | 0.001 | 0.001 | |

| 0.4 | 0.027 | 0.233 | 0.003 | 0.001 | 0.003 | 0.015 | 0.000 | 0.002 | |

| 0.5 | 0.027 | 0.450 | 0.003 | 0.000 | 0.002 | 0.015 | 0.000 | 0.001 | |

| 20 | 0.1 | 0.106 | 0.052 | 0.005 | 0.000 | 0.003 | 0.027 | 0.000 | 0.003 |

| 0.2 | 0.108 | 0.051 | 0.005 | 0.000 | 0.003 | 0.023 | 0.000 | 0.005 | |

| 0.3 | 0.108 | 0.349 | 0.004 | 0.000 | 0.004 | 0.023 | 0.000 | 0.005 | |

| 0.4 | 0.109 | 0.841 | 0.004 | 0.000 | 0.004 | 0.022 | 0.000 | 0.003 | |

| 0.5 | 0.104 | 0.780 | 0.004 | 0.000 | 0.003 | 0.023 | 0.001 | 0.002 | |

| 50 | 0.1 | 0.740 | 0.810 | 0.018 | 0.000 | 0.007 | 0.084 | 0.001 | 0.018 |

| 0.2 | 0.753 | 0.720 | 0.017 | 0.000 | 0.007 | 0.093 | 0.001 | 0.015 | |

| 0.3 | 0.736 | 0.731 | 0.018 | 0.000 | 0.007 | 0.089 | 0.001 | 0.018 | |

| 0.4 | 0.754 | 1.901 | 0.018 | 0.001 | 0.008 | 0.096 | 0.001 | 0.016 | |

| 0.5 | 0.738 | 4.272 | 0.018 | 0.000 | 0.007 | 0.088 | 0.001 | 0.020 | |

| 100 | 0.1 | 3.585 | 14.089 | 0.102 | 0.001 | 0.017 | 0.353 | 0.005 | 0.106 |

| 0.2 | 3.837 | 13.657 | 0.102 | 0.000 | 0.016 | 0.263 | 0.005 | 0.105 | |

| 0.3 | 3.715 | 13.425 | 0.102 | 0.000 | 0.017 | 0.262 | 0.004 | 0.113 | |

| 0.4 | 3.705 | 23.994 | 0.102 | 0.000 | 0.016 | 0.264 | 0.005 | 0.124 | |

| 0.5 | 3.964 | 46.213 | 0.102 | 0.000 | 0.016 | 0.341 | 0.005 | 0.118 | |

4 Discussion

This paper investigates the suitability of the Penalized Eigenvalue Problem (PEP) for visualizing population stratification in genomic data, as opposed to using ordinary eigenvectors. Our main contribution is the application of smoothing to the penalty of PEP (Chen and Mangasarian,, 1995; Nesterov,, 2005; Trendafilov and Gallo,, 2021), thereby facilitating the efficient optimization of the PEP objective function and the computation of higher-order eigenvectors. An algorithm to iteratively apply smoothing to increase accuracy, and a thresholding approach to enforce sparsity are presented.

In an extensive experimental study, we showcase the numerical difficulties that can arise when optimizing the PEP objective function, rooted in the non-differentiability of its penalty. We showcase that our proposed smoothed version of PEP retains clear population stratification, and that its principal components can be utilized to correct a linear regression as demonstrated with an example in which we predicted mortality in Covid-19 patients using their age, sex, geographic region, and principal components.

Most importantly, our simulation study with seven other state-of-the-art algorithms demonstrates that for the scenarios considered in this simulation study, our smoothed PEP approach yields a high accuracy with respect to the true (planted) sparse eigenvector, a state-of-the-art support recovery (as demonstrated with a high True Positive Rate and a low False Positive Rate), and a fast runtime. It is comparable in performance to the algorithm of Gaynanova et al., (2017), which performs equally well in this simulation study.

This project leaves scope for further research. Most importantly, a rigorous theoretical proof demonstrating the increased numerical stability of PEP is desirable and would set the proposed methodology on a rigorous mathematical foundation. Moreover, further experiments on correcting linear regressions or association analyses on genomic data are warranted. It would be interesting in this context if the modified regression problem allowed one to detect associations not detectable with ordinary principal components, for instance through smaller p-values. Finally, the application of smoothed PEP in lieu of ordinary eigenvectors has broader applicability in fields like machine learning, quantitative finance, computational biology, and computer vision via the reduction of dimensionality and the improvement of computational efficiency.

Statements and Declarations

Competing Interests

The authors have no competing interests to declare that are relevant to the content of this article.

Funding

Funding for this research was provided through Cure Alzheimer’s Fund, the National Institutes of Health [1R01 AI 154470-01; 2U01 HG 008685; R21 HD 095228 008976; U01 HL 089856; U01 HL 089897; P01 HL 120839; P01 HL 132825; 2U01 HG 008685; R21 HD 095228, P01 HL132825], the National Science Foundation [NSF PHY 2033046; NSF GRFP 1745302], and a NIH Center grant [P30-ES002109].

Acknowledgements

The authors thankfully acknowledge support of an ERC training grant (T42 OH008416). The authors gratefully acknowledge the contributors of the 1000 Genomes Project (The 1000 Genomes Project Consortium,, 2023).

The authors gratefully acknowledge the contributors, originating and submitting laboratories of the sequences from GISAID’s EpiCoV™ Database (Elbe and Buckland-Merrett,, 2017; Shu and McCauley,, 2017) on which this research is based. The accession numbers of all nucleotide sequences used in this work are given in the supplementary material.

Data Availability Statement

The datasets generated and/or analysed during the current study are available in the International Genome Sample Resource repository at the address https://www.internationalgenome.org/.

The data that support the findings of this study are publicly available in the GISAID database (Elbe and Buckland-Merrett,, 2017; Shu and McCauley,, 2017), see https://gisaid.org/. The supplementary material of this manuscript contains a list of all EPI_ISL identifiers of the nucleotide sequences which were included in the experiments.

Appendix A Two additional simulation results

This section presents two additional simulation results. The first is a population stratification plot obtained by computing the GRM matrix on the 1000 Genomes Project dataset and by plotting its regular first two eigenvectors. The resulting plot is shown in Figure 6. We observe that the stratification is similar to the one obtained in Figure 1 and Figure 2, however no thresholding is applied in Figure 6.

The second figure obtained by computing the first two principal components as in eq. (2), however with an norm instead of the norm. The resulting plot for can be found in Figure 7. It is similar to the ones seen in Figure 1 and Figure 2.

References

- Baglama et al., (2022) Baglama, J., Reichel, L., and Lewis, B. (2022). irlba: Fast Truncated Singular Value Decomposition and Principal Components Analysis for Large Dense and Sparse Matrices. R-package version 2.3.5.1: https://cran.r-project.org/package=irlba.

- Beck and Teboulle, (2009) Beck, A. and Teboulle, M. (2009). A Fast Iterative Shrinkage-Thresholding Algorithm for Linear Inverse Problems. SIAM J Imaging Sciences, 2(1):183–202.

- Chen and Mangasarian, (1995) Chen, C. and Mangasarian, O. (1995). Smoothing methods for convex inequalities and linear complementarity problems. Mathematical Programming, 71:51–69.

- Chen, (2023) Chen, F. (2023). epca: Exploratory Principal Component Analysis. R-package version 1.1.0: https://cran.r-project.org/package=epca.

- d’Aspremont et al., (2007) d’Aspremont, A., El Ghaoui, L., Jordan, M., and Lanckriet, G. (2007). A direct formulation for sparse PCA using semidefinite programming. SIAM Review, 49(3):1–8.

- Eckart and Young, (1936) Eckart, C. and Young, G. (1936). The approximation of one matrix by another of lower rank. Psychometrika, 1:211–8.

- Elbe and Buckland-Merrett, (2017) Elbe, S. and Buckland-Merrett, G. (2017). Data, disease and diplomacy: GISAID’s innovative contribution to global health. Global Chall., 1(1):33–46.

- Gaynanova et al., (2017) Gaynanova, I., Booth, J., and Wells, M. (2017). Penalized Versus Constrained Generalized Eigenvalue Problems. Journal of Computational and Graphical Statistics, 26(2):379–387.

- Gaynanova and Wang, (2019) Gaynanova, I. and Wang, T. (2019). Sparse quadratic classification rules via linear dimension reduction. Journal of Multivariate Analysis, 169:278–299.

- Golub and van Loan, (1996) Golub, G. H. and van Loan, C. (1996). Matrix Computations. Johns Hopkins University Press; 3rd Edition.

- Guan, (2022) Guan, L. (2022). -norm constrained multi-block sparse canonical correlation analysis via proximal gradient descent. arXiv:2201.05289, pages 1–60.

- (12) Hahn, G., Lutz, S., Hecker, J., Prokopenko, D., Cho, M., Silverman, E., Weiss, S., Lange, C., and The NHLBI Trans-Omics for Precision Medicine (TOPMed) Consortium (2021a). locStra: Fast analysis of regional/global stratification in whole-genome sequencing studies. Genet Epidemiol, 45(1):82–98.

- (13) Hahn, G., Lutz, S., Laha, N., Cho, M., Silverman, E., and Lange, C. (2021b). A fast and efficient smoothing approach to LASSO regression and an application in statistical genetics: polygenic risk scores for Chronic obstructive pulmonary disease (COPD). Stat Comput, 31(353):1–11.

- Hahn et al., (2020) Hahn, G., Lutz, S., Laha, N., and Lange, C. (2020). A framework to efficiently smooth L1 penalties for linear regression. bioRxiv:2020.09.17.301788, pages 1–35.

- Han and Clemmensen, (2016) Han, X. and Clemmensen, L. (2016). Regularized Generalized Eigen-Decomposition With Applications to Sparse Supervised Feature Extraction and Sparse Discriminant Analysis. Pattern Recognition, 49:43–54.

- Hurwitz and Hahn, (2023) Hurwitz, R. and Hahn, G. (2023). SPEV: Unsmoothed and Smoothed Penalized PCA using Nesterov Smoothing. R-package version 1.0.0: https://CRAN.R-project.org/package=SPEV.

- Jaccard, (1901) Jaccard, P. (1901). Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bull Soc Vaud Des Sci Nat, 37:547–579.

- Journée et al., (2008) Journée, M., Nesterov, Y., Richtárik, P., and Sepulchre, R. (2008). Generalized power method for sparse principal component analysis. arXiv:0811.4724, pages 1–30.

- Jung et al., (2019) Jung, S., Ahn, J., and Jeon, Y. (2019). Penalized Orthogonal Iteration for Sparse Estimation of Generalized Eigenvalue Problem. Journal of Computational and Graphical Statistics, 28(3):710–721.

- Katoh et al., (2002) Katoh, K., Misawa, K., Kuma, K., and Miyata, T. (2002). MAFFT: a novel method for rapid multiple sequence alignment based on fast Fourier transform. Nucleic Acids Res, 30(14):3059–66.

- Kokiopoulou et al., (2011) Kokiopoulou, E., Chen, J., and Saad, Y. (2011). Trace Optimization and Eigenproblems in Dimension Reduction Methods. Numerical Linear Algebra With Applications, 18(3):565–602.

- Li, (2007) Li, L. (2007). Sparse Sufficient Dimension Reduction. Biometrika, 94(3):603–613.

- Mackey, (2009) Mackey, L. (2009). Deflation methods for sparse PCA. In NIPS’08: Proceedings of the 22nd International Conference on Neural Information Processing Systems, pages 1017–1024.

- Maechler et al., (2022) Maechler, M., Rousseeuw, P., Struyf, A., Hubert, M., Hornik, K., Studer, M., Roudier, P., Gonzalez, J., Kozlowski, K., Schubert, E., and Murphy, K. (2022). cluster: ”Finding Groups in Data”: Cluster Analysis Extended Rousseeuw et al. R-package version 2.1.4: https://cran.r-project.org/package=cluster.

- Nesterov, (2005) Nesterov, Y. (2005). Smooth minimization of non-smooth functions. Math. Program. Ser. A, 103:127–152.

- Prokopenko et al., (2016) Prokopenko, D., Hecker, J., Silverman, E., Pagano, M., Nöthen, M., Dina, C., Lange, C., and Fier, H. (2016). Utilizing the Jaccard index to reveal population stratification in sequencing data: a simulation study and an application to the 1000 Genomes Project. Bioinformatics, 32(9):1366–1372.

- Purcell and Chang, (2019) Purcell, S. and Chang, C. (2019). Plink2. www.cog-genomics.org/plink/2.0/. Version 2.0.

- Qiu et al., (2022) Qiu, Y., Mei, J., Guennebaud, G., and Niesen, J. (2022). RSpectra: Solvers for Large-Scale Eigenvalue and SVD Problems. R-package version 0.16-1: https://cran.r-project.org/package=RSpectra.

- R Core Team, (2014) R Core Team (2014). R: A Language and Environment for Statistical Computing. R Foundation for Stat Comp, Vienna, Austria.

- Shen and Huang, (2008) Shen, H. and Huang, J. (2008). Sparse principal component analysis via regularized low rank matrix approximation. Journal of Multivariate Analysis, 99(6):1015–1034.

- Shu and McCauley, (2017) Shu, Y. and McCauley, J. (2017). GISAID: global initiative on sharing all influenza data—from vision to reality. Euro Surveill., 22(13):30494.

- Song et al., (2015) Song, J., Babu, P., and Palomar, D. (2015). Sparse Generalized Eigenvalue Problem via Smooth Optimization. IEEE Transactions on Signal Processing, 63(7):1627–1642.

- Sriperumbudur et al., (2011) Sriperumbudur, B., Torres, D., and Lanckriet, G. (2011). A Majorization-Minimization Approach to the Sparse Generalized Eigenvalue Problem. Machine Learning, 85:3–39.

- Tan et al., (2018) Tan, K., Wang, Z., Liu, H., and Zhang, T. (2018). Sparse Generalized Eigenvalue Problem: Optimal Statistical Rates via Truncated Rayleigh Flow. arXiv:1604.08697, pages 1–35.

- The 1000 Genomes Project Consortium, (2015) The 1000 Genomes Project Consortium (2015). A global reference for human genetic variation. Nature, 526(68-74).

- The 1000 Genomes Project Consortium, (2023) The 1000 Genomes Project Consortium (2023). The International Genome Sample Resource. https://www.internationalgenome.org/.

- Tibshirani, (1996) Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. J. R. Statist. Soc. B, 58(1):267–288.

- Trendafilov and Gallo, (2021) Trendafilov, N. and Gallo, M. (2021). Multivariate Data Analysis on Matrix Manifolds. Springer (1st ed.).

- Tyler et al., (2009) Tyler, D., Critchley, F., Dümbgen, L., and Oja, H. (2009). Invariant Coordinate Selection. Journal of the Royal Statistical Society Series B, 71:549–592.

- UC Irvine Machine Learning Repository, (1988) UC Irvine Machine Learning Repository (1988). Iris. https://archive.ics.uci.edu/dataset/53/iris.

- Varadhan, (2023) Varadhan, R. (2023). alabama: Constrained Nonlinear Optimization. R-package version 2023.1.0: https://cran.r-project.org/package=alabama.

- Witten and Tibshirani, (2011) Witten, D. and Tibshirani, R. (2011). Penalized classification using Fisher’s linear discriminant. Journal of the Royal Statistical Society Series B, 73(5):753–772.

- Yang et al., (2011) Yang, J., Lee, S., Goddard, M., and Visscher, P. (2011). GCTA: a tool for genome-wide complex trait analysis. Am J Hum Genet, 88(1):76–82.

- Zhang et al., (2010) Zhang, Y., d’Aspremont, A., and El Ghaoui, L. (2010). Sparse PCA: Convex Relaxations, Algorithms and Applications. arXiv:1011.3781, pages 1–22.

- Zhang et al., (2013) Zhang, Z., Chow, T., and Zhao, M. (2013). Trace Ratio Optimization-Based Semi-supervised Nonlinear Dimensionality Reduction for Marginal Manifold Visualization. IEEE Transactions on Knowledge and Data Engineering, 25:1148–1161.