Shenzhen Key Laboratory of Robotics and Computer Vision https://github.com/GCChen97/ppa4p3d

Perspective Phase Angle Model for Polarimetric 3D Reconstruction

Abstract

Current polarimetric 3D reconstruction methods, including those in the well-established shape from polarization literature, are all developed under the orthographic projection assumption. In the case of a large field of view, however, this assumption does not hold and may result in significant reconstruction errors in methods that make this assumption. To address this problem, we present the perspective phase angle (PPA) model that is applicable to perspective cameras. Compared with the orthographic model, the proposed PPA model accurately describes the relationship between polarization phase angle and surface normal under perspective projection. In addition, the PPA model makes it possible to estimate surface normals from a single-view phase angle map and does not suffer from the so-called $\pi$-ambiguity problem. Experiments on real data show that the PPA model is more accurate for surface normal estimation with a perspective camera than the orthographic model.

Keywords:

Polarization Image, Phase Angle, Perspective Projection, 3D Reconstruction

1 Introduction

The property that the polarization state of light encodes geometric information of object surfaces has been researched in computer vision for decades. With the development of the division-of-focal-plane (DoFP) polarization image sensor [23] in recent years, there has been a resurgence of interest in 3D reconstruction with polarization information. In the last decade, it has been shown that polarization information can enhance the performance of traditional methods for reconstructing textureless and non-Lambertian surfaces [17, 2, 21, 26]. For accurate reconstruction of objects, shape from polarization and photo-polarimetric stereo can recover fine-grained surface details [26, 10]. For dense mapping in textureless or specular scenes, multi-view stereo can also be improved with polarimetric cues [7, 30, 5, 21].

In computer vision and robotics, the use of perspective cameras is common. However, to the best of our knowledge, all previous literature on polarimetric 3D reconstruction uses and derives polarization cues, without exception, under the orthographic projection assumption. Therefore, the dense maps or shapes generated by these methods from polarization images captured by perspective cameras will be flawed, because the perspective effect is not considered. In this paper, we present an accurate model of perspective cameras for polarimetric 3D reconstruction.

One of the key steps in polarimetric 3D reconstruction is the optimization of depth maps with a linear constraint on surface normals by the polarization phase angles. In this paper, we refer to this constraint as the phase angle constraint. This constraint was first proposed in [27] under the orthographic projection assumption and has become a standard way of utilizing the phase angles. In the literature, this constraint has been derived from a model, which we refer to as the orthographic phase angle (OPA) model in this paper, in which the azimuth of the normal is equivalent to the phase angle [20, 7]. If observed objects are in the middle of the field of view in a 3D reconstruction application, orthographic projection could be a reasonable assumption. However, for dense mapping and large objects, this assumption is easily violated.

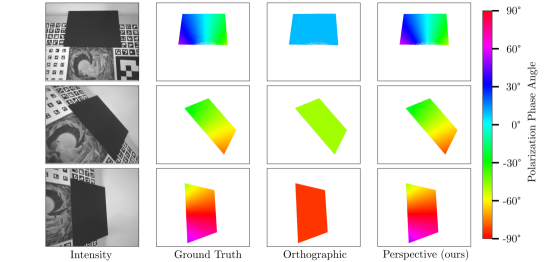

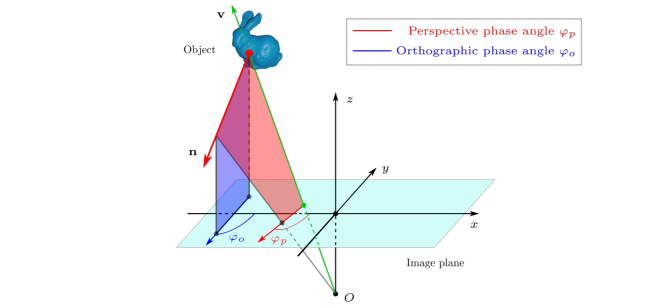

In this work, an alternative perspective phase angle (PPA) model is developed to solve this problem. The PPA model is inspired by the geometric properties of a polarizer described in [13]. Different from the OPA model, the proposed PPA model defines the phase angle as the direction of the line of intersection between the image plane and the plane of incidence (PoI) spanned by the light ray and the surface normal (see Fig. 2 for details). As shown in Fig. 1, given the ground-truth normal of the black board in the images, the phase angle maps calculated using the proposed PPA model are much more accurate than those calculated using the OPA model. Under perspective projection, we also derive a new linear constraint on the surface normal by the phase angle, which we refer to as the PPA constraint. As a useful by-product of our PPA model, the PPA constraint makes it possible to estimate surface normals using only one single-view phase angle map without suffering from the well-known $\pi$-ambiguity problem, which requires at least two views to resolve, as shown in previous works [27, 19]. In addition, the PPA constraint improves the accuracy of normal estimation from phase angle maps of multiple views [27, 15]. The main contributions of this paper are summarized as follows:

- A perspective phase angle model and a corresponding constraint on surface normals by polarization phase angles are proposed. The model and the constraint serve as the basis for accurately estimating surface normals from phase angles.
- A novel method is developed to estimate surface normals from a single-view phase angle map. Unlike a method using the orthographic phase angle model, it does not suffer from the $\pi$-ambiguity problem.
- We make use of the proposed model and the corresponding constraint to improve surface normal estimation from phase angle maps of multiple views.

The rest of this paper is organized as follows. Section 2 overviews related works. Section 3 reviews the utilization of polarization phase angles under orthographic projection. Section 4 describes our proposed PPA model, PPA constraint and normal estimation methods. Experimental evaluation is presented in Section 5. The conclusion of this paper and the discussions of our future work are presented in Section 6.

2 Related Works

The proposed PPA model is fundamental to polarimetric 3D reconstruction and thus it is related to the following three topics: 1) shape and depth estimation from single-view polarization images, 2) multi-view reconstruction with additional polarization information and 3) camera pose estimation with polarization information.

2.1 Polarimetric Single-view Shape and Depth Estimation

This topic is closely related to two lines of research: shape from polarization (SfP) and photo-polarimetric stereo. SfP first estimates surface normals that are parameterized in the OPA model by azimuth and zenith angles from polarization phase angles and degree of linear polarization and then obtains Cartesian height maps by integrating the normal maps [17, 2]. With additional spectral cues, refractive distortion [12] of SfP can be solved [11]. Alternatively, it has been shown that the surface normals, refractive indexes, and light directions can be estimated from photometric and polarimetric constraints [18]. The recent work of DeepSfP [4] is the first attempt to use a convolutional neural network (CNN) to estimate normal maps from polarization images. It is reasonable to expect more accurate surface normal estimation when these methods parameterize the surface normal with the help of the proposed PPA model in perspective cameras. In fact, a recent work shows that learning-based SfP can benefit from considering the perspective effect [14]. Regardless, as a two-stage method, SfP is sensitive to noise.

As a one-stage method, photo-polarimetric stereo directly estimates a height map from polarization images and is able to avoid cumulative errors and suppress noise. By constructing linear constraints on surface heights from illumination and polarization, height map estimation is solved through the optimization of a non-convex cost function [22]. Yu et al. [31] derives a fully differentiable method that is able to optimize a height map through non-linear least squares optimization. In [26], variations of the photo-polarimetric stereo method are unified as a framework incorporating different optional photometric and polarimetric constraints. In these works, the OPA constraint is a key to exploiting polarization during height map estimation. In perspective cameras, the proposed PPA constraint can provide a more accurate description than the OPA constraint.

2.2 Polarimetric Multi-view 3D Reconstruction

Surface reconstruction can be solved by optimizing a set of functionals given phase angle maps of three different views [20]. To address transparent objects, a two-view method [17] exploits phase angles and degree of linear polarization to solve correspondences and polarization ambiguity. Another two-view method [3] uses both polarimetric and photometric cues to estimate reflectance functions and reconstruct shapes of practical and complex objects. By combining space carving and normal estimation, [16] is able to solve polarization ambiguity problems and obtain more accurate reconstructions of black objects than pure space carving. Fukao et al. [10] models polarized reflection of mesoscopic surfaces and proposes polarimetric normal stereo for estimating normals of mesoscopic surfaces whose polarization depends on illumination (i.e., polarization by light [6]). These works are all based on the OPA model without considering the perspective effect.

Recently, traditional multi-view 3D reconstruction methods enhanced by polarization have been shown to densely reconstruct textureless and non-Lambertian surfaces under uncalibrated illumination [7, 30, 21]. Cui et al. [7] proposes polarimetric multi-view stereo to handle real-world objects with mixed polarization and solve polarization ambiguity problems. Yang et al. [30] proposes a polarimetric monocular dense SLAM system that propagates sparse depths in textureless scenes in parallel. Shakeri et al. [21] uses relative depth maps generated by a CNN to solve the $\pi$-ambiguity problem robustly and efficiently in polarimetric dense map reconstruction. In these works, iso-depth contour tracing [33] is a common step to propagate sparse depths on textureless or specular surfaces. It is based on the proposition that, with the OPA model, the direction perpendicular to the phase angle is the tangent direction of an iso-depth contour [7]. However, in a perspective camera, this proposition is only an approximation.

Besides, the OPA constraint can be integrated in stereo matching [5], depth optimization [30] and mesh refinement [32]. However, only the first two components of the surface normal are involved in the constraint, in addition to its inaccuracy in perspective cameras. With the proposed PPA model, all three components of a surface normal are constrained and the constraint is theoretically accurate for a perspective camera.

2.3 Polarimetric Camera Pose Estimation

The polarization phase angle of light emitted from a surface point depends on the camera pose. Therefore, it is intuitive to use this cue for camera pose estimation. Chen et al. [6] proposes polarimetric three-view geometry that connects the phase angle and three-view geometry and theoretically requires six triplets of corresponding 2D points to determine the three rotations between the views. Different from using only phase angles in [6], Cui et al. [8] exploits full polarization information including degree of linear polarization to estimate relative poses so that only two 2D-point correspondences are needed. The method achieves competitive accuracy with the traditional five 2D-point method. Since both works are developed without considering the perspective effect, the proposed PPA model can be used to generalize them to a perspective camera.

3 Preliminaries

In this section, we review methods for phase angle estimation from polarization images, as well as the OPA model and the corresponding OPA constraint that are commonly adopted in the existing literature.

3.1 Phase Angle Estimation

As a function of the orientation of the polarizer $\phi_{pol}$, the intensity of the light transmitted through the polarizer varies sinusoidally as $I(\phi_{pol}) = I_{un}\left(1 + \rho\cos(2\phi_{pol} - 2\phi)\right)$. The parameters of the polarization state are the average intensity $I_{un}$, the degree of linear polarization (DoLP) $\rho$ and the phase angle $\phi$. The phase angle is also called the angle of linear polarization (AoLP) in [32, 28, 24, 21]. In this paper, we uniformly use the term “phase angle” to refer to AoLP.

Given images captured through a polarizer at a minimum of three different orientations, the polarization state can be estimated by solving a linear system [30]. For a DoFP polarization camera that captures four images $I_0$, $I_{45}$, $I_{90}$ and $I_{135}$ in one shot, the polarization state can be extracted from the Stokes vector $\mathbf{s} = [s_0, s_1, s_2]^\top$ as follows:

$$I_{un} = \frac{s_0}{2}, \qquad \rho = \frac{\sqrt{s_1^2 + s_2^2}}{s_0}, \qquad \phi = \frac{1}{2}\operatorname{atan2}(s_2, s_1), \tag{1}$$

where $s_0 = (I_0 + I_{45} + I_{90} + I_{135})/2$, $s_1 = I_0 - I_{90}$ and $s_2 = I_{45} - I_{135}$. Although it has been shown that further optimization of the polarization state from multi-channel polarization images is possible [25], for this paper, Eq. (1) is sufficiently accurate for us to generate ground-truth phase angle maps from polarization images.
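As a minimal NumPy sketch of Eq. (1), assuming the four DoFP channels have already been separated into same-sized arrays, the snippet below recovers the average intensity, the DoLP and the phase angle, and checks the round trip against the sinusoidal intensity law. The function names `polarization_state` and `render` are illustrative, not taken from the authors' code.

```python
import numpy as np


def polarization_state(i0, i45, i90, i135):
    """Average intensity, DoLP and phase angle from four DoFP channels (Eq. (1))."""
    i0, i45, i90, i135 = (np.asarray(x, dtype=float) for x in (i0, i45, i90, i135))
    s0 = 0.5 * (i0 + i45 + i90 + i135)   # Stokes s0
    s1 = i0 - i90                         # Stokes s1
    s2 = i45 - i135                       # Stokes s2
    i_un = 0.5 * s0
    # Guard against division by zero in completely dark pixels.
    rho = np.sqrt(s1**2 + s2**2) / np.maximum(s0, 1e-12)
    phi = 0.5 * np.arctan2(s2, s1)        # phase angle in (-pi/2, pi/2]
    return i_un, rho, phi


def render(i_un, rho, phi, pol_angle):
    """Intensity behind a polarizer oriented at pol_angle (radians)."""
    return i_un * (1.0 + rho * np.cos(2.0 * pol_angle - 2.0 * phi))


# Round trip on synthetic values: render the four channels, then invert them.
i_un, rho, phi = 0.6, 0.3, np.deg2rad(25.0)
channels = [render(i_un, rho, phi, np.deg2rad(a)) for a in (0.0, 45.0, 90.0, 135.0)]
est = polarization_state(*channels)
```

The same function works unchanged on whole image arrays, since every operation is element-wise.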

3.2 The OPA Model

The phase angles estimated from polarization images through Eq. (1) are directly related to the surface normals off which light is reflected. It is this property that is exploited in polarimetric 3D reconstruction research to estimate surface normals from polarization images. The projection model, which defines how light enters the camera, is another critical consideration in establishing the relationship between the phase angle and the surface normal.

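The OPA model assumes that all light rays enter the camera in parallel, as shown by the blue plane in Fig. 2, so that the azimuth angle of the surface normal $\mathbf{n}$ is equivalent to the phase angle up to a $\pi$-ambiguity and a $\pi/2$-ambiguity [32]. Specifically, $\mathbf{n}$ can be parameterized by the phase angle $\phi$ and the zenith angle $\theta$ in the camera coordinate system:

$$\mathbf{n} = [n_x, n_y, n_z]^\top = [\sin\theta\cos\phi,\ \sin\theta\sin\phi,\ \cos\theta]^\top. \tag{2}$$

With this model, $\phi$ can be calculated from $\mathbf{n}$ as

$$\phi_o = \arctan\frac{n_y}{n_x}. \tag{3}$$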

We can use Eq. (3) to evaluate the accuracy of the OPA model: if the OPA model is accurate, then the phase angle $\phi_o$ calculated by Eq. (3) should agree with the phase angle estimated from the polarization images. Although this model is based on the orthographic projection assumption, it is widely adopted in polarimetric 3D reconstruction methods even when polarization images are captured by a camera with perspective projection. In addition, Eq. (2) defines a constraint on $\mathbf{n}$ by $\phi$, i.e., the OPA constraint:

$$[\sin\phi,\ -\cos\phi,\ 0]\,\mathbf{n} = 0. \tag{4}$$

This linear constraint is commonly integrated in depth map optimization [7, 30, 26]. It is also the basis of iso-depth contour tracing [1, 7] as it gives the tangent direction of an iso-depth contour. Obviously, this constraint is strictly valid only in the case of orthographic projection.
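The OPA relations can be checked numerically. The sketch below assumes the diffuse convention in which the azimuth of the normal equals the phase angle (the $\pi/2$-ambiguity of specular reflection is ignored) and wraps phase angles to $[-\pi/2, \pi/2)$ because they are only defined modulo $\pi$. The helper names are hypothetical.

```python
import numpy as np


def wrap_half_pi(phi):
    """Map an angle to [-pi/2, pi/2); phase angles are only defined modulo pi."""
    return (phi + np.pi / 2) % np.pi - np.pi / 2


def opa_normal(phi, theta):
    """Eq. (2): normal from the phase angle (azimuth) and the zenith angle."""
    return np.array([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)])


def opa_phase_angle(n):
    """Eq. (3), using arctan2 so that n_x = 0 does not divide by zero."""
    return wrap_half_pi(np.arctan2(n[1], n[0]))


def opa_coefficients(phi):
    """Eq. (4): coefficient vector c with c @ n = 0."""
    return np.array([np.sin(phi), -np.cos(phi), 0.0])


n = opa_normal(np.deg2rad(30.0), np.deg2rad(40.0))
phi_o = opa_phase_angle(n)
residual = opa_coefficients(phi_o) @ n
```

Note that the third coefficient is zero, so under this model the phase angle never says anything about $n_z$.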

4 Phase Angle Model under Perspective Projection

In the perspective camera model, light rays that enter the camera are subject to the perspective effect, as illustrated by the red plane in Fig. 2. As a result, the phase angle estimated by Eq. (1) depends not only on the direction of the surface normal but also on the direction in which the light ray enters the camera. In this section, the PPA model is developed to describe the relationship between the polarization phase angle and the surface normal. The PPA constraint, as well as two methods for estimating normals from phase angles, follows naturally from the PPA model.

4.1 The PPA Model

The geometric properties of a polarizer in [13] inspire us to model the phase angle as the direction of the intersecting line of the image plane and the PoI spanned by the light ray and the surface normal. As shown in Fig. 2, there is an obvious difference between the two definitions of the phase angle, depending upon the assumed type of projection.

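Specifically, let the optical axis be $\mathbf{z} = [0, 0, 1]^\top$ and the light ray be $\mathbf{r} = [r_x, r_y, r_z]^\top \propto \mathbf{K}^{-1}\mathbf{p}$, for an image point at the pixel coordinates $\mathbf{p} = [u, v, 1]^\top$ and a camera with intrinsic matrix $\mathbf{K}$. From Fig. 2, the PPA model can be formulated as follows:

$$\mathbf{a} = [\cos\phi_p,\ \sin\phi_p,\ 0]^\top = k\,\mathbf{z}\times(\mathbf{r}\times\mathbf{n}), \tag{5}$$

where $\mathbf{a}$, $\mathbf{r}$ and $\mathbf{n}$ are normalized to 1, $k$ is a constant and $k \neq 0$. Since $\mathbf{z}\times(\mathbf{r}\times\mathbf{n}) = n_z\mathbf{r} - r_z\mathbf{n}$, whose third component vanishes, the phase angle $\phi_p$ can be obtained from $\mathbf{r}$ and $\mathbf{n}$ as:

$$\phi_p = \arctan\frac{n_z r_y - r_z n_y}{n_z r_x - r_z n_x}. \tag{6}$$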

Similar to Eq. (3), Eq. (6) can also be used for evaluating the accuracy of the PPA model. Different from $\phi_o$, $\phi_p$ depends not only on the surface normal $\mathbf{n}$ but also on the light ray $\mathbf{r}$.
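To see how the PPA phase angle depends on the pixel, the sketch below evaluates the direction of $\mathbf{z}\times(\mathbf{r}\times\mathbf{n})$ for one normal at two pixels of a camera. At the principal point the ray coincides with the optical axis, so the PPA and OPA predictions agree; near the image corner they differ markedly. The intrinsics and the normal are made-up values for illustration only.

```python
import numpy as np


def wrap_half_pi(phi):
    return (phi + np.pi / 2) % np.pi - np.pi / 2


def pixel_ray(K, u, v):
    """Unit light ray through pixel (u, v): normalized K^{-1} [u, v, 1]^T."""
    r = np.linalg.solve(K, np.array([u, v, 1.0]))
    return r / np.linalg.norm(r)


def ppa_phase_angle(n, r):
    """Eq. (6): direction of z x (r x n) = n_z r - r_z n, which lies in the image plane."""
    a = n[2] * r - r[2] * n
    return wrap_half_pi(np.arctan2(a[1], a[0]))


def opa_phase_angle(n):
    """Eq. (3), for comparison."""
    return wrap_half_pi(np.arctan2(n[1], n[0]))


# Hypothetical intrinsics: focal length 1000 px, principal point (640, 512).
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 512.0],
              [0.0, 0.0, 1.0]])
n = np.array([0.3, -0.2, -1.0])
n /= np.linalg.norm(n)

center = ppa_phase_angle(n, pixel_ray(K, 640.0, 512.0))  # ray along the optical axis
corner = ppa_phase_angle(n, pixel_ray(K, 0.0, 0.0))      # strongly off-axis ray
print(np.degrees([opa_phase_angle(n), center, corner]))
```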

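In addition, $\mathbf{n}$ can be parameterized by $\phi_p$ and the viewing angle $\theta_v$ (the angle between $\mathbf{r}$ and $\mathbf{n}$) as $\mathbf{n} = \exp(\theta_v[\mathbf{m}]_\times)\,\mathbf{r}$, where $\mathbf{m} = \mathbf{r}\times\mathbf{a}/\|\mathbf{r}\times\mathbf{a}\|$ is the normal of the PoI and $[\mathbf{m}]_\times$ represents the skew-symmetric matrix of $\mathbf{m}$. Eq. (2) can also be reformulated into a similar form as $\mathbf{n} = \exp(\theta[\mathbf{m}_o]_\times)\,\mathbf{z}$, where $\mathbf{m}_o = \mathbf{z}\times\mathbf{a} = [-\sin\phi, \cos\phi, 0]^\top$. Thus, the two parameterizations of $\mathbf{n}$ differ in the axis about which the rotation is performed ($\mathbf{m}$ versus $\mathbf{m}_o$) and in the vector being rotated ($\mathbf{r}$ versus $\mathbf{z}$).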
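The rotation form of both parameterizations can be verified with Rodrigues' formula. This sketch assumes that rotating $\mathbf{z}$ about $\mathbf{z}\times\mathbf{a}$ by the zenith angle reproduces Eq. (2), and that rotating a unit ray $\mathbf{r}$ about the PoI normal $\mathbf{r}\times\mathbf{a}$ by the viewing angle yields a normal whose PPA phase-angle direction is again $\mathbf{a}$. All numeric values are arbitrary.

```python
import numpy as np


def skew(w):
    """Skew-symmetric matrix [w]_x such that skew(w) @ v == np.cross(w, v)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def rotate(axis, angle, v):
    """exp(angle * [axis]_x) @ v via Rodrigues' formula; axis must be a unit vector."""
    W = skew(axis)
    R = np.eye(3) + np.sin(angle) * W + (1.0 - np.cos(angle)) * (W @ W)
    return R @ v


phi, theta = np.deg2rad(30.0), np.deg2rad(40.0)
z = np.array([0.0, 0.0, 1.0])
a = np.array([np.cos(phi), np.sin(phi), 0.0])       # phase-angle direction

# OPA: rotate the optical axis about m_o = z x a by the zenith angle.
m_o = np.cross(z, a)
n_opa = rotate(m_o, theta, z)

# PPA: rotate the unit ray about the PoI normal m = (r x a) / |r x a| by the viewing angle.
r = np.array([-0.3, 0.2, 1.0])
r /= np.linalg.norm(r)
m = np.cross(r, a)
m /= np.linalg.norm(m)
theta_v = np.deg2rad(35.0)
n_ppa = rotate(m, theta_v, r)
```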

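With the PPA model, the PPA constraint can be derived from Eq. (5) by taking the dot product of both sides with $[\sin\phi, -\cos\phi, 0]^\top$, which is orthogonal to $\mathbf{a}$:

$$[\,r_z\sin\phi,\ -r_z\cos\phi,\ r_y\cos\phi - r_x\sin\phi\,]\,\mathbf{n} = 0. \tag{7}$$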

Note that Eq. (7) can be used just like the OPA constraint Eq. (4), without knowledge of the object geometry, since $\mathbf{r}$ is a scene-independent directional vector determined only by the pixel coordinates and $\mathbf{K}$. Compared with Eq. (4), Eq. (7) has an additional constraint on the third component of the normal. This additional constraint is critical in allowing us to estimate $\mathbf{n}$ of a planar surface from a single view, as we will show in the next section. Table 1 summarizes the definitions of the phase angle, the normal and the constraint of the OPA and PPA models.

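| | OPA model | PPA model |
|---|---|---|
| Phase angle | $\phi_o = \arctan(n_y / n_x)$ | $\phi_p = \arctan\frac{n_z r_y - r_z n_y}{n_z r_x - r_z n_x}$ |
| Normal | $\mathbf{n} = \exp(\theta[\mathbf{m}_o]_\times)\,\mathbf{z}$, $\mathbf{m}_o = \mathbf{z}\times\mathbf{a}$ | $\mathbf{n} = \exp(\theta_v[\mathbf{m}]_\times)\,\mathbf{r}$, $\mathbf{m} \propto \mathbf{r}\times\mathbf{a}$, $\theta_v$: viewing angle |
| Constraint | $[\sin\phi,\ -\cos\phi,\ 0]\,\mathbf{n} = 0$ | $[r_z\sin\phi,\ -r_z\cos\phi,\ r_y\cos\phi - r_x\sin\phi]\,\mathbf{n} = 0$ |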
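A minimal sketch of the two constraints as coefficient vectors, assuming unit vectors and the sign conventions of Eqs. (4) and (7): a phase angle generated by the PPA model from a hypothetical normal satisfies the PPA constraint to machine precision, but leaves a clear residual in the OPA constraint. Both coefficient vectors only change sign when $\phi$ changes by $\pi$, so the $\pi$-periodicity of measured phase angles does not affect the residual being zero.

```python
import numpy as np


def ppa_coefficients(phi, r):
    """Eq. (7): c with c @ n = 0 for a unit ray r = [r_x, r_y, r_z]."""
    return np.array([r[2] * np.sin(phi),
                     -r[2] * np.cos(phi),
                     r[1] * np.cos(phi) - r[0] * np.sin(phi)])


def opa_coefficients(phi):
    """Eq. (4)."""
    return np.array([np.sin(phi), -np.cos(phi), 0.0])


def ppa_phase_angle(n, r):
    """Eq. (6) without wrapping; the constraints above only flip sign under phi + pi."""
    a = n[2] * r - r[2] * n
    return np.arctan2(a[1], a[0])


n = np.array([0.3, -0.2, -1.0])
n /= np.linalg.norm(n)
r = np.array([-0.64, -0.512, 1.0])     # ray through a pixel far from the principal point
r /= np.linalg.norm(r)

phi = ppa_phase_angle(n, r)            # "measured" phase angle under the PPA model
ppa_residual = ppa_coefficients(phi, r) @ n
opa_residual = opa_coefficients(phi) @ n
```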

4.2 Relations with the OPA Model

Comparing the two constraints in Table 1, dividing the PPA constraint by $r_z$ shows that it differs from the OPA constraint only by the term $(r_y\cos\phi - r_x\sin\phi)\,n_z / r_z$. This term implies that there exist four cases in which the two models are equivalent:

1. $r_x = r_y = 0$, when the light ray is parallel to the optical axis, which corresponds to orthographic projection.
2. $r_y\cos\phi - r_x\sin\phi = 0$, when the PoI is perpendicular to the image plane.
3. $\theta \to 0$ (i.e., $n_x, n_y \to 0$), when the normal tends to be parallel to the optical axis. In this case, the PoI is also perpendicular to the image plane.
4. $n_z = 0$, when the normal is perpendicular to the optical axis.

Besides, according to Eq. (3) and Eq. (4), if $\theta = 0$ (i.e., $n_x = n_y = 0$), the OPA model and the OPA constraint become degenerate, whereas ours remain useful.
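The equivalence cases and the degenerate case can be illustrated numerically. In this sketch, with hypothetical vectors and phase angles wrapped modulo $\pi$, the OPA and PPA predictions coincide for a ray along the optical axis (case 1) and for a normal with $n_z = 0$ (case 4), but differ for an off-axis ray and a tilted normal. For a normal along the optical axis, the OPA phase angle carries no information, while the PPA one is still defined by the ray.

```python
import numpy as np


def wrap_half_pi(phi):
    return (phi + np.pi / 2) % np.pi - np.pi / 2


def unit(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def phi_opa(n):
    """Eq. (3)."""
    return wrap_half_pi(np.arctan2(n[1], n[0]))


def phi_ppa(n, r):
    """Eq. (6), via n_z r - r_z n = z x (r x n)."""
    a = n[2] * r - r[2] * n
    return wrap_half_pi(np.arctan2(a[1], a[0]))


r_axis = unit([0.0, 0.0, 1.0])        # case 1: ray along the optical axis
r_off = unit([-0.5, 0.3, 1.0])        # generic off-axis ray
n_tilted = unit([0.3, -0.2, -1.0])    # generic normal
n_side = unit([0.6, 0.8, 0.0])        # case 4: n_z = 0
n_front = unit([0.0, 0.0, 1.0])       # theta = 0: the OPA model degenerates

print(np.degrees([phi_opa(n_tilted), phi_ppa(n_tilted, r_axis)]))  # equal
print(np.degrees([phi_opa(n_side), phi_ppa(n_side, r_off)]))        # equal
print(np.degrees([phi_opa(n_tilted), phi_ppa(n_tilted, r_off)]))    # differ
# arctan2(0, 0) silently returns 0, so the OPA value below carries no information,
# while the PPA phase angle is still fixed by the ray direction.
print(np.degrees([phi_opa(n_front), phi_ppa(n_front, r_off)]))
```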

4.3 Normal Estimation

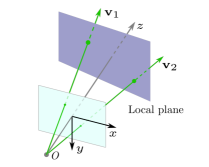

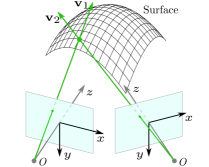

Theoretically, a surface normal can be solved from at least two of its observations with the PPA constraint. The observations can be either the phase angles from multiple pixels in the same phase angle map or those from phase angle maps of multiple views, as shown in Fig. 3. The two cases lead to the following two methods.

4.3.1 Single-view Normal Estimation.

In the same phase angle map, if a set of points share the same surface normal, e.g., points in a local plane or points in different parallel planes, their phase angles can be used for estimating the normal. Let us denote the coefficient vector in Eq. (7) as $\mathbf{c} = [r_z\sin\phi,\ -r_z\cos\phi,\ r_y\cos\phi - r_x\sin\phi]^\top$. Given $N$ points that have the same normal $\mathbf{n}$, a coefficient matrix $\mathbf{A}_s$ can be constructed from their coefficients:

$$\mathbf{A}_s\mathbf{n} = [\mathbf{c}_1, \mathbf{c}_2, \ldots, \mathbf{c}_N]^\top\mathbf{n} = \mathbf{0}, \tag{8}$$

then $\mathbf{n}$ can be obtained by the eigen decomposition of $\mathbf{A}_s^\top\mathbf{A}_s$, as the eigenvector associated with its smallest eigenvalue. This method directly solves $\mathbf{n}$ with only one single-view phase angle map and does not suffer from the $\pi$-ambiguity problem, while previous works based on the OPA model require at least two views [27, 19]. Ideally, the rank of $\mathbf{A}_s$ should be exactly two so that $\mathbf{n}$ can be solved. In practice, with image noise, we can construct a well-conditioned $\mathbf{A}_s$ with more than two points that are on the same plane for solving $\mathbf{n}$, as we will show in Section 5.3.
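A minimal NumPy sketch of the single-view estimator on synthetic data: rays are drawn from a hypothetical field of view, phase angles are predicted from one ground-truth normal with the PPA model and perturbed with an assumed 1° Gaussian noise. The null vector is taken from `numpy.linalg.eigh`, which returns eigenvalues in ascending order. The result is determined only up to sign; choosing the sign that faces the camera is an assumption of this sketch, not something stated above.

```python
import numpy as np


def ppa_coefficients(phi, rays):
    """Stack Eq. (7) coefficient vectors, one row per pixel (N x 3)."""
    rx, ry, rz = rays[:, 0], rays[:, 1], rays[:, 2]
    return np.stack([rz * np.sin(phi),
                     -rz * np.cos(phi),
                     ry * np.cos(phi) - rx * np.sin(phi)], axis=1)


def estimate_normal(A):
    """Unit vector minimizing |A n|: eigenvector of A^T A with the smallest eigenvalue."""
    _, eigvecs = np.linalg.eigh(A.T @ A)   # eigenvalues come back in ascending order
    return eigvecs[:, 0]


rng = np.random.default_rng(0)
n_true = np.array([0.2, -0.3, -1.0])
n_true /= np.linalg.norm(n_true)

# Hypothetical pixels on one plane, expressed as unit rays (normalized image coordinates).
xy = rng.uniform(-0.5, 0.5, size=(500, 2))
rays = np.column_stack([xy, np.ones(len(xy))])
rays /= np.linalg.norm(rays, axis=1, keepdims=True)

# Phase angles predicted by the PPA model (direction of n_z r - r_z n) plus 1 degree noise.
a = n_true[2] * rays - rays[:, 2:3] * n_true
phi = np.arctan2(a[:, 1], a[:, 0]) + np.deg2rad(1.0) * rng.standard_normal(len(rays))

A_s = ppa_coefficients(phi, rays)
n_est = estimate_normal(A_s)
# The null vector is only defined up to sign, so compare the absolute cosine.
err_deg = np.degrees(np.arccos(min(1.0, abs(n_est @ n_true))))
```

Using `eigh` on the 3 x 3 matrix $\mathbf{A}_s^\top\mathbf{A}_s$ keeps the cost independent of $N$ after one matrix product; an SVD of $\mathbf{A}_s$ itself would give the same vector with better numerical conditioning.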

4.3.2 Multi-view Normal Estimation.

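If a point is observed in $M$ views, another coefficient matrix $\mathbf{A}_m$ can be constructed as follows:

$$\mathbf{A}_m\mathbf{n} = [\mathbf{R}_1^\top\mathbf{c}_1, \mathbf{R}_2^\top\mathbf{c}_2, \ldots, \mathbf{R}_M^\top\mathbf{c}_M]^\top\mathbf{n} = \mathbf{0}, \tag{9}$$

where $\mathbf{R}_j$ ($j = 1, \ldots, M$) is the rotation matrix of the $j$-th camera, which maps the normal $\mathbf{n}$ from the world frame into the $j$-th camera frame, and $\mathbf{c}_j$ is the coefficient vector of Eq. (7) in the $j$-th view. The normal $\mathbf{n}$ can be solved as in the case of single-view normal estimation above. Similar to $\mathbf{A}_s$, $\mathbf{n}$ can be solved if $\mathbf{A}_m$ has rank two and is well conditioned. Therefore, observations made from sufficiently different camera poses are desirable. This method is the generalization of the method proposed in [27, 15] to the case of perspective projection. In Section 5.3, we will verify that a method using the PPA constraint is more accurate in a perspective camera than one using the OPA constraint.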
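The multi-view case differs only in how the rows are formed: each camera-frame coefficient vector is mapped to the world frame through that camera's rotation before stacking. This sketch assumes world-to-camera rotations $\mathbf{R}_j$, noise-free synthetic phase angles and three made-up camera poses looking down at a horizontal plane.

```python
import numpy as np


def rot_y(t):
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


FLIP = np.diag([1.0, -1.0, -1.0])   # camera z-axis pointing along the world -z axis


def ppa_row(phi, r):
    """Eq. (7) coefficients for one view, expressed in that camera's frame."""
    return np.array([r[2] * np.sin(phi), -r[2] * np.cos(phi),
                     r[1] * np.cos(phi) - r[0] * np.sin(phi)])


def ppa_phase_angle(n, r):
    a = n[2] * r - r[2] * n         # z x (r x n)
    return np.arctan2(a[1], a[0])


X = np.array([0.1, -0.2, 0.0])       # surface point in the world frame
n_world = np.array([0.0, 0.0, 1.0])  # its normal in the world frame (to be recovered)

# Hypothetical views: (tilt about the camera y-axis in radians, camera center).
views = [(-0.3, np.array([-1.0, 0.0, 2.0])),
         (0.0, np.array([0.0, 0.5, 2.0])),
         (0.4, np.array([1.0, -0.5, 2.0]))]

rows = []
for tilt, C in views:
    R = rot_y(tilt) @ FLIP            # world-to-camera rotation R_j
    X_c = R @ (X - C)                 # the point in camera coordinates (z > 0 here)
    r = X_c / np.linalg.norm(X_c)     # unit light ray
    phi = ppa_phase_angle(R @ n_world, r)   # noise-free synthetic measurement
    rows.append(ppa_row(phi, r) @ R)  # c_j^T R_j acts on the world-frame normal
A_m = np.array(rows)

eigvals, eigvecs = np.linalg.eigh(A_m.T @ A_m)
n_est = eigvecs[:, 0]
```

Each row is parallel to the PoI normal of its view, so the stacked system has rank two exactly when the views' planes of incidence differ, which is the geometric content of the "sufficiently different camera poses" requirement.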

5 Experimental Evaluation

In this section, we present the experimental results that verify the proposed PPA model and PPA constraint in a perspective camera. Details on the experimental dataset and experimental settings are provided in Section 5.1. In Section 5.2, we evaluate the accuracy of the PPA model and analyze its phase angle estimation error. To verify that the PPA model is beneficial to polarimetric 3D reconstruction, we conduct experiments on normal estimation in Section 5.3 and on contour tracing in Section 5.4.

5.1 Dataset and Experimental Settings

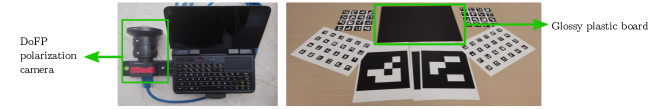

An image capture setup is shown in Fig. 4. Our camera has a lens with a focal length of 6 mm and a wide field of view, so perspective projection is appropriate to model its geometry. We first calibrate the camera to obtain its intrinsics and use the distortion coefficients to undistort the images before they are used in our experiments. The calibration matrix $\mathbf{K}$ is used to generate the light ray $\mathbf{r}$ from pixel coordinates in homogeneous form.

We capture a glossy and black plastic board with a size of 300 mm by 400 mm on a table by a handheld DoFP polarization camera [9]. The setting is such that: 1) the board is specular-reflection-dominant so that the ambiguity problem can be easily solved and 2) the phase angle maps of the board can be reasonably estimated. Although this setting could be perceived as being limited, it is carefully chosen to verify the proposed model accurately and conveniently.

We create a dataset that contains 282 groups of grayscale polarization images (four images per group) of the board captured from random viewpoints. The camera poses and the ground-truth normal of the board are estimated with the help of AR tags placed on the table. Ground-truth phase angle maps are calculated from polarization images by Eq. (1). To reduce the influence of noise, we only use the pixels in the region of the board whose DoLP is higher than a threshold, and we apply Gaussian blur to the images before calculating phase angle maps and DoLP maps. This dataset is used in all the following experiments.
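The preprocessing described above could look as follows. This is a sketch, not the authors' pipeline: the Gaussian standard deviation `sigma` and the DoLP threshold `dolp_min` are hypothetical placeholders because their actual values are not given here, and a small separable NumPy blur stands in for a library filter.

```python
import numpy as np


def gaussian_kernel(sigma):
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def blur(img, sigma):
    """Separable Gaussian blur with edge padding; output has the input's shape."""
    k = gaussian_kernel(sigma)
    pad = len(k) // 2
    out = np.pad(img, pad, mode="edge")
    out = np.apply_along_axis(lambda row: np.convolve(row, k, mode="valid"), 1, out)
    out = np.apply_along_axis(lambda col: np.convolve(col, k, mode="valid"), 0, out)
    return out


def phase_and_mask(channels, board_mask, sigma=1.0, dolp_min=0.1):
    """Blur (I0, I45, I90, I135), apply Eq. (1) and keep high-DoLP board pixels.

    sigma and dolp_min are hypothetical defaults, not the values used in the paper.
    """
    i0, i45, i90, i135 = (blur(np.asarray(c, dtype=float), sigma) for c in channels)
    s0 = 0.5 * (i0 + i45 + i90 + i135)
    s1, s2 = i0 - i90, i45 - i135
    rho = np.sqrt(s1**2 + s2**2) / np.maximum(s0, 1e-12)
    phi = 0.5 * np.arctan2(s2, s1)
    return phi, rho, board_mask & (rho > dolp_min)
```

Blurring the raw channels before applying Eq. (1), rather than blurring the phase angle map, avoids averaging across the $\pm\pi/2$ wrap-around of the phase angle.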

5.2 Accuracy of the PPA Model

To evaluate the accuracy of the OPA model and the PPA model, we calculate the phase angle of every pixel with Eq. (3) and Eq. (6), respectively, given the ground-truth normals, and compare the results with the phase angles estimated by Eq. (1). We compute the mean and the root mean square error (RMSE) of the phase angle errors of both models. Although the phase angle errors of both the OPA and the PPA models are unbiased, with means close to zero, the RMSE of the phase angle predicted by the PPA model is only a fraction of that of the OPA model in relative terms, and small in absolute terms from a practical point of view. As shown in Fig. 1, the PPA model accurately describes the spatial variation of the phase angle, while the phase angle maps of the OPA model are spatially uniform and inaccurate in comparison with the ground truth.
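Because phase angles are defined modulo $\pi$, the difference between a predicted and a measured phase angle should be wrapped before computing the mean and the RMSE; otherwise a 2° discrepancy across the $\pm 90°$ boundary would be counted as 178°. A minimal sketch of this bookkeeping, where the wrapping convention and helper names are our assumptions:

```python
import numpy as np


def phase_angle_error(phi_pred, phi_meas):
    """Signed difference of two phase angles, wrapped to [-pi/2, pi/2)."""
    d = np.asarray(phi_pred, dtype=float) - np.asarray(phi_meas, dtype=float)
    return (d + np.pi / 2) % np.pi - np.pi / 2


def mean_and_rmse(err):
    """Mean (bias) and root mean square of an array of errors."""
    err = np.asarray(err, dtype=float)
    return err.mean(), np.sqrt(np.mean(err ** 2))


# 89 deg and -89 deg describe almost the same line: the error is -2 deg, not 178 deg.
e = phase_angle_error(np.deg2rad(89.0), np.deg2rad(-89.0))
print(np.degrees(e))
```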

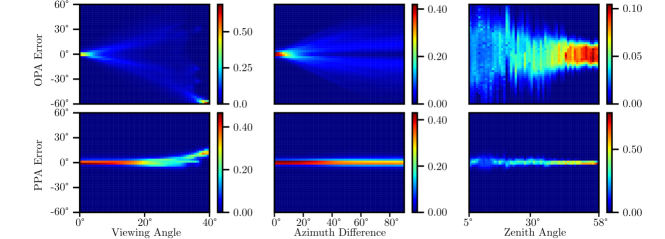

In addition, we plot the error distributions with respect to the viewing angle (the angle between the light ray and the normal), the azimuth difference (the angle between the azimuths of the light ray and the normal) and the zenith angle of the normal. As shown in Fig. 5, the deviations of the PPA error are much smaller than those of the OPA error, and pixels closer to the edges of the views have larger errors with the OPA model. Additionally, the errors are all close to zero in the first three equivalent cases stated in Section 4.2. The high density of samples in a narrow range of viewing angles in the first column of Fig. 5 is likely a result of the nonuniform distribution of the positions of the board in the images, since these samples correspond to the pixels at the edges of the views.
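The three quantities used for binning the errors can be computed per pixel as in the sketch below. The sign conventions are assumptions: the sign of the normal is ignored so that the viewing and zenith angles fall in $[0, \pi/2]$, and the azimuth difference is wrapped to $[0, \pi]$.

```python
import numpy as np


def viewing_angle(r, n):
    """Angle between the light ray and the normal, ignoring the sign of n."""
    c = abs(np.dot(r, n)) / (np.linalg.norm(r) * np.linalg.norm(n))
    return np.arccos(np.clip(c, 0.0, 1.0))


def azimuth(v):
    return np.arctan2(v[1], v[0])


def azimuth_difference(r, n):
    """Angle between the azimuths of r and n, wrapped to [0, pi]."""
    d = (azimuth(r) - azimuth(n)) % (2 * np.pi)
    return min(d, 2 * np.pi - d)


def zenith(n):
    """Angle between the normal and the optical axis, ignoring the sign of n."""
    return np.arccos(np.clip(abs(n[2]) / np.linalg.norm(n), 0.0, 1.0))
```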

5.3 Accuracy of Normal Estimation

5.3.1 Single-view Normal Estimation.

As has been mentioned, surface normals can be estimated from a single view of a polarization camera. Methods based on the OPA model, however, suffer from the $\pi$-ambiguity problem. In contrast, given a single-view phase angle map, surface normal estimation can be solved with the proposed PPA constraint without suffering from the $\pi$-ambiguity problem. Therefore, we only evaluate our method developed in Section 4.3.1, which uses the PPA constraint, for the normal of a planar surface.

In this experiment, for every image in the dataset introduced in Section 5.1, we use the phase angles of the pixels in the region of the black board in Fig. 4 to estimate its normal. The number of pixels contributing to the normal in one estimation varies from 100,000 to 300,000, depending on the area of the board in the image. We obtain 282 estimated normals from the 282 images (see the estimated normals in our supplementary video). Both the mean and the RMSE of the angular errors of these estimated normals are small. Such excellent performance is in part due to our highly accurate PPA model in describing the image formation process and in part due to the simplicity of the scene (a planar surface) and the large number of measurements available.

5.3.2 Multi-view Normal Estimation.

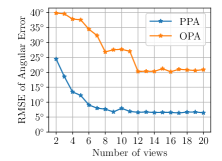

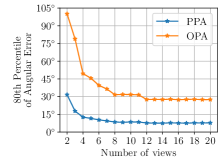

Surface normals can also be estimated from multiple views of a polarization camera. To establish the superiority of our proposed model, we compare the accuracy of multi-view normal estimation between the proposed method using the PPA constraint developed in Section 4.3.2 and the method using the OPA constraint proposed in [27, 19].

In this experiment, we randomly sample 3D points on the board among the 282 images and individually estimate the normal of each point from multiple views. For every point, the number of views used for its normal estimation varies from 2 to 20. The views are selected using the code of ACMM [29], and the ground-truth poses of the camera and the board are used to resolve the pixel correspondences among the multiple views.

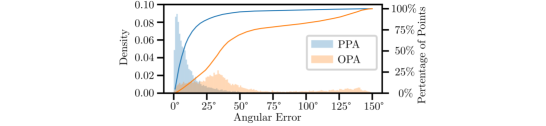

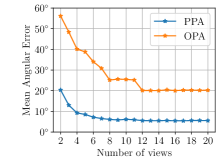

Fig. 6 shows the distribution of the angular errors in the three-view case: a considerably larger proportion of the normals estimated by our method have small errors than of those estimated using the OPA constraint. Fig. 7 shows the normal estimation errors with different numbers of views. Although the accuracy of both methods improves as the number of views increases, the errors of our method with three views are already smaller than those using the OPA model with more than ten views. The results of this experiment can be visualized in our supplementary video. Note that the error of the estimated normal in this case is significantly larger than that in the single-view case, mostly because of the small number of measurements (2 to 20) used in estimating each normal and the uncertainty in the relative camera poses used to solve data association. Nonetheless, the superiority of the PPA model over the OPA model is clearly established.

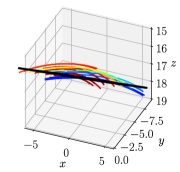

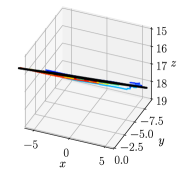

5.4 Comparison of Contour Tracing

This experiment is designed to illustrate the influence of the perspective effect on iso-depth surface contour tracing with the OPA model and to show the accuracy of the proposed PPA model. We sample 20 seed 3D points on the edge of the board and propagate their depths to generate contours of the board. With the OPA model, iso-depth contour tracing requires only the depths and a single-view phase angle map. However, according to the proposed PPA model, iso-depth contour tracing is infeasible in perspective cameras. Therefore, we estimate the normals of the points through the method developed in Section 4.3.2 with two views for generating surface contours. To clearly compare the quality of the contours, our method is set to generate 3D contours that have the same 2D projections on the image plane as those obtained by iso-depth contour tracing. The propagation step size is set to the same value as that in [7].

The iso-depth contours of the board are expected to be straight in perspective cameras. However, as shown in Fig. 8, the contours generated by iso-depth contour tracing using the OPA model are all curved and lie out of the ground-truth plane, while those generated with our PPA model are well aligned with the ground-truth plane. We also compute the RMSE of the distances between the points on the contours and the ground-truth plane; the RMSE of the contours using our PPA model is considerably smaller than that using the OPA model.
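The geometric reason for the curved contours can be reproduced with a toy example. In this sketch, which uses hypothetical intrinsics and a made-up tilted plane, a 2D path is traced perpendicular to the phase angle predicted by the PPA model. Lifting the path to 3D with a constant seed depth (the iso-depth assumption) drifts off the plane, whereas intersecting each pixel ray with the plane defined by the normal stays on it. For simplicity, the ground-truth normal is used instead of normals estimated from two views.

```python
import numpy as np

# Hypothetical intrinsics with square pixels.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 512.0],
              [0.0, 0.0, 1.0]])
K_inv = np.linalg.inv(K)

n = np.array([0.0, 0.5, -1.0])
n /= np.linalg.norm(n)                  # ground-truth plane normal (hypothetical)
X0 = np.array([0.0, 0.1, 1.5])          # seed point on the plane
d = n @ X0                              # plane: n . X = d


def measured_phase(p):
    """Phase angle at homogeneous pixel p as predicted by the PPA model (Eq. (6))."""
    r = K_inv @ p
    a = n[2] * r - r[2] * n
    return np.arctan2(a[1], a[0])


p = K @ (X0 / X0[2])                    # seed pixel in homogeneous coordinates
t_prev = np.array([1.0, 0.0])           # initial tracing direction (to the right)
opa_pts, ppa_pts = [], []
for _ in range(300):                     # 300 steps of one pixel each
    phi = measured_phase(p)
    t = np.array([-np.sin(phi), np.cos(phi)])   # perpendicular to the phase angle
    if t @ t_prev < 0:                   # keep a consistent walking direction
        t = -t
    p = p + np.array([t[0], t[1], 0.0])
    t_prev = t
    ray = K_inv @ p                      # ray with unit z-component
    opa_pts.append(X0[2] * ray)          # iso-depth: keep the seed depth
    ppa_pts.append((d / (n @ ray)) * ray)   # intersect the ray with the plane

opa_err = np.abs(np.array(opa_pts) @ n - d)   # distances to the plane
ppa_err = np.abs(np.array(ppa_pts) @ n - d)
```

The iso-depth points leave the plane because, under perspective projection, the direction perpendicular to the measured phase angle is not the tangent of the true iso-depth contour; only the 2D path is shared by both liftings.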

6 Conclusions and Future Work

In this paper, we present the perspective phase angle (PPA) model as a superior alternative to the orthographic phase angle (OPA) model for accurately utilizing polarization phase angles in 3D reconstruction with perspective polarization cameras. The PPA model defines the polarization phase angle as the direction of the line of intersection between the image plane and the plane of incidence, and hence allows the perspective effect to be considered in estimating surface normals from the phase angles and in defining the constraint on surface normal by the phase angle. In addition, a novel method for surface normal estimation from a single-view phase angle map naturally results from the PPA model; unlike methods based on the traditional orthographic model, it does not suffer from the well-known $\pi$-ambiguity problem. Experimental results on real data validate that our PPA model is more accurate than the commonly adopted OPA model in perspective cameras. Overall, we demonstrate the necessity of considering the perspective effect in polarimetric 3D reconstruction and propose the PPA model for realizing it.

As a limitation of our work, we have so far only conducted experiments on surface normal estimation and contour tracing. We have not used our model in solving other problems related to polarimetric 3D reconstruction.

Our immediate future plan includes improving polarimetric 3D reconstruction methods with the proposed PPA model.

We are also interested in synthesizing polarization images with open-source datasets and the PPA model for data-driven approaches.

We leave the above interesting problems as our future research.

Acknowledgments.

We thank the reviewers for their valuable feedback.

This work was done while Guangcheng Chen was a visiting student at Southern University of Science and Technology.

This work was supported in part by the National Natural Science Foundation of China under Grant No. 62173096,

in part by the Leading Talents Program of Guangdong Province under Grant No. 2016LJ06G498 and 2019QN01X761,

in part by Guangdong Province Special Fund for Modern Agricultural Industry Common Key Technology R&D Innovation Team under Grant No. 2019KJ129,

in part by Guangdong Yangfan Program for Innovative and Entrepreneurial Teams under Grant No. 2017YT05G026.

References

- [1] Alldrin, N.G., Kriegman, D.J.: Toward reconstructing surfaces with arbitrary isotropic reflectance: A stratified photometric stereo approach. In: 2007 IEEE 11th International Conference on Computer Vision. pp. 1–8. IEEE (2007)

- [2] Atkinson, G.A., Hancock, E.R.: Recovery of surface orientation from diffuse polarization. IEEE transactions on image processing 15(6), 1653–1664 (2006)

- [3] Atkinson, G.A., Hancock, E.R.: Shape estimation using polarization and shading from two views. IEEE transactions on pattern analysis and machine intelligence 29(11), 2001–2017 (2007)

- [4] Ba, Y., Gilbert, A., Wang, F., Yang, J., Chen, R., Wang, Y., Yan, L., Shi, B., Kadambi, A.: Deep shape from polarization. In: European Conference on Computer Vision. pp. 554–571. Springer (2020)

- [5] Berger, K., Voorhies, R., Matthies, L.H.: Depth from stereo polarization in specular scenes for urban robotics. In: 2017 IEEE international conference on robotics and automation (ICRA). pp. 1966–1973. IEEE (2017)

- [6] Chen, L., Zheng, Y., Subpa-Asa, A., Sato, I.: Polarimetric three-view geometry. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 20–36 (2018)

- [7] Cui, Z., Gu, J., Shi, B., Tan, P., Kautz, J.: Polarimetric multi-view stereo. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 1558–1567 (2017)

- [8] Cui, Z., Larsson, V., Pollefeys, M.: Polarimetric relative pose estimation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 2671–2680 (2019)

- [9] FLIR: Blackfly S USB3, https://www.flir.com.au/products/blackfly-s-usb3/?model=BFS-U3-51S5P-C, accessed 2022-02-14

- [10] Fukao, Y., Kawahara, R., Nobuhara, S., Nishino, K.: Polarimetric normal stereo. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 682–690 (2021)

- [11] Huynh, C.P., Robles-Kelly, A., Hancock, E.: Shape and refractive index recovery from single-view polarisation images. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. pp. 1229–1236. IEEE (2010)

- [12] Kadambi, A., Taamazyan, V., Shi, B., Raskar, R.: Polarized 3D: High-quality depth sensing with polarization cues. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 3370–3378 (2015)

- [13] Korger, J., Kolb, T., Banzer, P., Aiello, A., Wittmann, C., Marquardt, C., Leuchs, G.: The polarization properties of a tilted polarizer. Optics Express 21(22), 27032–27042 (2013)

- [14] Lei, C., Qi, C., Xie, J., Fan, N., Koltun, V., Chen, Q.: Shape from polarization for complex scenes in the wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 12632–12641 (June 2022)

- [15] Miyazaki, D., Shigetomi, T., Baba, M., Furukawa, R., Hiura, S., Asada, N.: Polarization-based surface normal estimation of black specular objects from multiple viewpoints. In: 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission. pp. 104–111. IEEE (2012)

- [16] Miyazaki, D., Shigetomi, T., Baba, M., Furukawa, R., Hiura, S., Asada, N.: Polarization-based surface normal estimation of black specular objects from multiple viewpoints. In: 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission. pp. 104–111. IEEE (2012)

- [17] Miyazaki, D., Tan, R.T., Hara, K., Ikeuchi, K.: Polarization-based inverse rendering from a single view. In: Computer Vision, IEEE International Conference on. vol. 3, pp. 982–982. IEEE Computer Society (2003)

- [18] Ngo Thanh, T., Nagahara, H., Taniguchi, R.i.: Shape and light directions from shading and polarization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 2310–2318 (2015)

- [19] Rahmann, S.: Polarization images: a geometric interpretation for shape analysis. In: Proceedings 15th International Conference on Pattern Recognition. ICPR-2000. vol. 3, pp. 538–542. IEEE (2000)

- [20] Rahmann, S., Canterakis, N.: Reconstruction of specular surfaces using polarization imaging. In: Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001. vol. 1, pp. I–I. IEEE (2001)

- [21] Shakeri, M., Loo, S.Y., Zhang, H., Hu, K.: Polarimetric monocular dense mapping using relative deep depth prior. IEEE Robotics and Automation Letters 6(3), 4512–4519 (2021)

- [22] Smith, W.A., Ramamoorthi, R., Tozza, S.: Linear depth estimation from an uncalibrated, monocular polarisation image. In: European Conference on Computer Vision. pp. 109–125. Springer (2016)

- [23] Sony: Polarization image sensor with four-directional on-chip polarizer and global shutter function, https://www.sony-semicon.co.jp/e/products/IS/industry/product/polarization.html, accessed 2022-02-14

- [24] Ting, J., Wu, X., Hu, K., Zhang, H.: Deep snapshot HDR reconstruction based on the polarization camera. In: 2021 IEEE International Conference on Image Processing (ICIP). pp. 1769–1773. IEEE (2021)

- [25] Tozza, S., Smith, W.A., Zhu, D., Ramamoorthi, R., Hancock, E.R.: Linear differential constraints for photo-polarimetric height estimation. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 2279–2287 (2017)

- [26] Tozza, S., Zhu, D., Smith, W., Ramamoorthi, R., Hancock, E.: Uncalibrated, two source photo-polarimetric stereo. IEEE Transactions on Pattern Analysis and Machine Intelligence (2021)

- [27] Wolff, L.B.: Surface orientation from two camera stereo with polarizers. In: Optics, Illumination, and Image Sensing for Machine Vision IV. vol. 1194, pp. 287–297. SPIE (1990)

- [28] Wu, X., Zhang, H., Hu, X., Shakeri, M., Fan, C., Ting, J.: HDR reconstruction based on the polarization camera. IEEE Robotics and Automation Letters 5(4), 5113–5119 (2020)

- [29] Xu, Q., Tao, W.: Multi-scale geometric consistency guided multi-view stereo. Computer Vision and Pattern Recognition (CVPR) (2019)

- [30] Yang, L., Tan, F., Li, A., Cui, Z., Furukawa, Y., Tan, P.: Polarimetric dense monocular SLAM. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 3857–3866 (2018)

- [31] Yu, Y., Zhu, D., Smith, W.A.: Shape-from-polarisation: a nonlinear least squares approach. In: Proceedings of the IEEE International Conference on Computer Vision Workshops. pp. 2969–2976 (2017)

- [32] Zhao, J., Monno, Y., Okutomi, M.: Polarimetric multi-view inverse rendering. In: European Conference on Computer Vision. pp. 85–102. Springer (2020)

- [33] Zhou, Z., Wu, Z., Tan, P.: Multi-view photometric stereo with spatially varying isotropic materials. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 1482–1489 (2013)