Phase-Only Beam Broadening of Contiguous Uniform Subarrayed Arrays Utilizing Three Metaheuristic Global Optimization Techniques

Abstract

Radar beam broadening provides continuous coverage of a wider angular extent. While many methods have been published that address beam broadening of traditional (non-subarrayed) arrays, there is a knowledge gap in the published literature with respect to efficient and effective beam broadening of contiguous uniform subarrayed arrays. This paper presents efficient and effective methods for beam broadening of contiguous uniform subarrayed arrays, in which elements of the array are grouped together to have the same element excitations. In particular, this paper focuses on phase-only optimization to preserve maximum power output. The high dimensionality of the solution space of possible phase settings makes exhaustive brute-force evaluation of the entire space infeasible. This paper presents three metaheuristic global optimization techniques that efficiently and effectively search this large solution space for phase values that satisfy the desired broadened pattern. The techniques presented in this paper are simulated annealing, a genetic algorithm with elitism, and particle swarm optimization. These techniques are evaluated on idealized 40x40 and 80x80 element rectangular arrays with 5x5 element subarrays. The results of this study show that, as configured in this paper, the simulated annealing and particle swarm optimization techniques outperform the genetic algorithm technique for 40x40 and 80x80 rectangular arrays grouped into contiguous uniform 5x5 element subarrays.

Index Terms:

Antenna pattern synthesis, planar arrays, subarrayed arrays, beam broadening, particle swarm optimization, genetic algorithm, simulated annealing.

I Introduction

Continuous coverage of wide areas is desirable in many applications, including radar and cellular communications. Radars are challenged with finding efficient means to scan wide angular extents to provide detection, tracking, characterization, and measurement of objects within their coverage area. Similarly, the cellular communication industry needs to efficiently provide voice and data communications over very broad coverage areas [10]. This coverage problem can be addressed by steering the main beam across the coverage area using mechanical or electronic means, but beam broadening has the advantage of covering a wider angular extent continuously rather than relying on the time multiplexing that beam steering requires.

In a phased array radar, broadening of the main beam can be accomplished by adjusting either the amplitude or the phase of the individual elements of the array, or by adjusting both. In today's phased arrays, which use solid-state transmit amplifiers that operate most efficiently in saturation, phase-only approaches to beam broadening, as presented in [10, 4, 3], are often preferred to optimize power efficiency. However, these published methods of beam broadening assume that the phase of each element in the array can be independently adjusted, which is not true of contiguous uniform subarrayed arrays.

In a contiguous uniform subarrayed array architecture, the elements are grouped together in a manner that only allows the amplitude and phase of each subarray, not each element, to be adjusted. Contiguous uniform subarrayed arrays are commonly used in large array designs to reduce cost by limiting the number of control elements. However, the subarrayed architecture also makes it more difficult to form a low-ripple broadened main beam and to reduce the sidelobes. There is currently a gap in the available literature regarding the efficiency of global optimization methods for synthesizing antenna patterns with a broadened main beam for subarrayed architectures when the synthesis is constrained to be phase-only, to use contiguous uniform subarrays, and to produce low sidelobes. References [5, 13, 12] address synthesis with subarrayed architectures, but they concentrate on optimization of combined sum and difference patterns, not on broadening the main beam. Reference [2] proposes a synthesis method for subarrayed arrays, but this method is amplitude-only, uses subarrays of unequal sizes, and does not address beam broadening. References [11, 6, 9, 7] come closest to our problem domain but use hybrid subarrays, in which the element amplitude is set by the subarray amplitude but the phase of each element in the subarray can still be adjusted independently. The closest published material that this author has found is [10], which presents a beam broadening approach for subarrayed architectures where the synthesis is constrained to be phase-only and to use contiguous uniform subarrays; however, this method constrains the widened full-array beamwidths to multiples of the subarray beamwidth. This method also produces unacceptable sidelobe levels.

Metaheuristic global optimization methods such as Simulated Annealing (SA) [18], Genetic Algorithms (GA) [19, 1], and Particle Swarm Optimization (PSO) [20, 17] have been used to find solutions for several radar array architectures using amplitude or phase adjustment. These optimization problems cannot be solved efficiently by brute force because of computational limits. These global optimization algorithms are designed to search complicated solution spaces with many local optima for the global optimum. Due to the dimensionality and computational difficulty of radar beam broadening problems, these techniques are well suited for synthesis of antenna patterns using various cost evaluation functions.

This paper compares three of the most popular global optimization algorithms and their ability to generate broadened main beam patterns in subarrayed radar architectures. It compares the efficiency (the rate at which a sufficient solution is found) and the effectiveness (the relative quality of the solutions) of these techniques, measured by the cost function evaluation of the pattern generated from a given phase setting, for 40x40 element arrays and 80x80 element arrays. Simulation results of pattern optimization for these subarrayed arrays are given to demonstrate the validity of these techniques and to compare them.

The mathematical models of the optimization algorithms used in this paper are presented in Section II, followed by the cost function in Section III. Section IV shows the simulated results of the various global optimization algorithms. Finally, Section V presents the conclusions drawn from the results.

II Mathematical Model - Global Optimization Algorithms

II-A Simulated Annealing

Simulated annealing (SA) is inspired by the annealing process in metallurgy, where material is heated and then slowly cooled to increase the size of its crystals and reduce their defects. This optimization technique can be used to find an approximation of the global minimum of functions with a large number of variables and a large solution space. The slow cooling in the simulated annealing algorithm is interpreted as a slow decrease in the probability of accepting worse solutions as the solution space is explored. Accepting worse solutions is a fundamental property of metaheuristics because it allows a more extensive search for the globally optimal solution. In general, simulated annealing algorithms work as follows. At each iteration, the algorithm randomly selects a solution close to the current one, measures its quality, and then decides whether to move to it or to stay with the current solution. A better solution is always accepted, while a worse solution is accepted only with a probability that depends on how much worse it is and on the current temperature. During the search, the temperature is progressively decreased from an initial positive value toward zero, so that the probability of moving to a worse solution is progressively reduced toward zero.

The SA process used in this paper is derived from the implementation described in [16, Chapter 7]. The variables utilized are defined as:

- The candidate solution of element excitations.
- The current annealing temperature, which starts at an initial temperature.
- The number of temperature changes desired.
- The exponential temperature decrease constant.
- A normalization constant that scales the cost so that high temperatures are more reactive than low temperatures.
- A cost function that assigns an evaluation score to a given candidate set of element excitations.
- The number of iterations of the algorithm between temperature changes.
- The number of variables (dimensions of the solution space).

The implemented SA process can be described as:

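1. Randomly generate a candidate solution of element excitations, one for each variable.
2. Synthesize the array pattern and evaluate the generated pattern against the desired pattern via the cost function.
3. For each of the desired temperature changes:
   - (a) For the specified number of iterations between temperature changes:
     - i. Generate a neighboring solution by randomly changing one value in the candidate solution.
     - ii. Synthesize the array pattern and calculate the cost.
     - iii. If the new solution's cost is less than the old solution's cost, replace the candidate with it. Otherwise, replace the candidate with the new solution with a probability that decreases as the cost increase grows and as the temperature falls.
   - (b) Decrease the temperature by multiplying it by the exponential temperature decrease constant.
4. Return the candidate solution.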
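The SA steps above can be sketched in Python as follows. This is a minimal sketch, not the authors' code: it searches over discrete 6-bit phase states (64 per variable), uses the common Metropolis acceptance test exp(-ΔC/(kT)) with an assumed initial temperature of 1 (the exact normalization in [16] may differ), and returns the final candidate as in step 4. The defaults `alpha=0.97` and `k=0.01` mirror Table I, and `n_temps=200` with `n_iters=100` is one reading of its remaining entries (200 × 100 = 20,000 evaluations either way). `toy_cost` and `TARGET` are hypothetical stand-ins for the array pattern cost.

```python
import math
import random


def simulated_annealing(cost, n_vars, n_states=64, t0=1.0, alpha=0.97,
                        k=0.01, n_temps=200, n_iters=100, seed=None):
    """Minimize `cost` over n_vars discrete phase states (64 states = 6-bit shifter)."""
    rng = random.Random(seed)
    # Steps 1-2: random initial candidate and its cost.
    s = [rng.randrange(n_states) for _ in range(n_vars)]
    c = cost(s)
    t = t0
    for _ in range(n_temps):                  # step 3: temperature changes
        for _ in range(n_iters):              # step 3(a): iterations per temperature
            # 3(a)i: neighbor that differs from s in one randomly chosen value.
            s_new = list(s)
            s_new[rng.randrange(n_vars)] = rng.randrange(n_states)
            c_new = cost(s_new)               # 3(a)ii
            # 3(a)iii: always keep improvements; keep a worse (or equal) candidate
            # with Metropolis probability exp(-(c_new - c) / (k * t)).
            if c_new < c or math.exp(-(c_new - c) / (k * t)) > rng.random():
                s, c = s_new, c_new
        t *= alpha                            # 3(b): exponential cooling
    return s, c                               # step 4


# Hypothetical stand-in for the pattern cost: circular distance between
# 6-bit phase states and a made-up target phase setting.
TARGET = [5, 60, 12, 33, 0, 47, 21, 9]


def toy_cost(s):
    return sum(min(abs(a - b), 64 - abs(a - b)) for a, b in zip(s, TARGET))


best, best_cost = simulated_annealing(toy_cost, n_vars=len(TARGET), seed=1)
print(best, best_cost)
```

With this toy cost the search recovers `TARGET` exactly; in the setting of this paper, `cost` would instead synthesize the subarray pattern and apply the flat-top cost function of Section III.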

II-B Genetic Algorithm

A genetic algorithm (GA) is inspired by the process of natural selection and relies on bio-inspired operators such as mutation, crossover and selection to evolve an initial population of candidate solutions toward better solutions. Each candidate solution has a set of properties (its chromosomes or genotype) which can be mutated and altered. The evolution usually starts from a population of randomly generated individuals, and is an iterative process, with the population in each iteration called a generation. In each generation, the fitness of every individual in the population is evaluated; the fitness is usually the value of the objective function in the optimization problem being solved. The more fit individuals are stochastically selected from the current population, and each individual’s genome is modified (recombined and possibly randomly mutated) to form a new generation. In addition, elite members of the population with the highest fitness are preserved. The new generation of candidate solutions is then used in the next iteration of the algorithm. The algorithm terminates when either a maximum number of generations has been produced, or a satisfactory fitness level has been reached for the population.

For our beam broadening array pattern optimization problem, the population consists of candidate sets of element excitations for the array. Therefore, in genetic algorithm terminology, a set of excitations for the elements of the array is the chromosome and each individual element excitation is a gene. The new population of sets of element excitations is generated by the process described below.

The GA process used in this paper is derived from the implementation described in [8] but minimizes a cost function instead of maximizing a fitness function. In addition, elite members of the population are retained, motivated by the analysis in [14]. The variables used in the description of the GA process are defined as:

- The population of candidate sets of element excitations.
- The new population of candidate sets of element excitations derived from the previous population.
- A cost function that assigns an evaluation score to a given candidate set of element excitations.
- The maximum number of generations.
- The number of candidate sets of element excitations to be included in the population.
- The fraction of the population to be replaced via the crossover technique at each step.
- The mutation rate.

The implemented GA process can be described as:

1. Randomly generate a population of candidate sets of subarray excitations.
2. For each candidate set of subarray excitations, synthesize the array pattern and evaluate the generated pattern against the desired pattern via the cost function.
3. For the maximum number of generations:
   - (a) Create a set of parents by probabilistically selecting members of the current population. The probability of selecting a candidate set from the population is given by (1); if all the cost values in the population are the same, each candidate set is selected with equal probability.
   - (b) For each pair of candidate sets among the parents, produce two offspring by applying the crossover operator. Add all offspring to the new population.
   - (c) For each member of the new population, perform the following mutation operator from [14]: for each gene, if a uniformly distributed random number is less than the mutation rate, mutate it.
   - (d) Add the members of the current population with the lowest cost into the new population as elites.
   - (e) Update the population by replacing the current population with the new population.
   - (f) For each candidate set of subarray excitations, synthesize the array pattern and evaluate the generated pattern against the desired pattern via the cost function.
4. Return the candidate set from the population that has the lowest cost function value.
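A minimal Python sketch of the GA steps above, under stated assumptions rather than the paper's exact operators: selection weights proportional to (worst cost - cost) serve as a cost-minimizing stand-in for (1), with uniform selection when all costs are equal; single-point crossover fills every non-elite slot; mutation resamples each gene with an illustrative probability `mut_rate`; and a quarter of the population is kept as elites, as described in Section IV. Elites are not re-evaluated here, so 800 generations of 25 use somewhat fewer than 20,000 cost evaluations. `toy_cost` and `TARGET` are hypothetical.

```python
import random


def genetic_algorithm(cost, n_vars, pop_size=25, n_gens=800, mut_rate=0.05,
                      elite_frac=0.25, n_states=64, seed=None):
    """Minimize `cost` with an elitist GA over discrete phase states (needs n_vars >= 2)."""
    rng = random.Random(seed)
    n_elite = max(1, int(elite_frac * pop_size))
    # Steps 1-2: random population and its costs.
    pop = [[rng.randrange(n_states) for _ in range(n_vars)] for _ in range(pop_size)]
    costs = [cost(h) for h in pop]
    for _ in range(n_gens):                                   # step 3
        # 3(a): lower cost -> higher selection weight (assumed transform);
        # fall back to uniform selection when every cost is equal.
        worst = max(costs)
        weights = [worst - c for c in costs]
        if sum(weights) == 0:
            weights = [1.0] * pop_size
        children = []
        while len(children) < pop_size - n_elite:
            a, b = rng.choices(pop, weights=weights, k=2)
            cut = rng.randrange(1, n_vars)                    # 3(b): single-point crossover
            children += [a[:cut] + b[cut:], b[:cut] + a[cut:]]
        children = children[:pop_size - n_elite]
        for child in children:                                # 3(c): per-gene mutation
            for g in range(n_vars):
                if rng.random() < mut_rate:
                    child[g] = rng.randrange(n_states)
        # 3(d): carry the lowest-cost members over unchanged as elites.
        order = sorted(range(pop_size), key=costs.__getitem__)[:n_elite]
        # 3(e)-(f): new population; only the children need evaluating.
        pop = [pop[i] for i in order] + children
        costs = [costs[i] for i in order] + [cost(h) for h in children]
    i_best = min(range(pop_size), key=costs.__getitem__)      # step 4
    return pop[i_best], costs[i_best]


TARGET = [5, 60, 12, 33, 0, 47, 21, 9]  # hypothetical target phase states


def toy_cost(s):
    return sum(min(abs(a - b), 64 - abs(a - b)) for a, b in zip(s, TARGET))


best, best_cost = genetic_algorithm(toy_cost, n_vars=len(TARGET), seed=0)
print(best, best_cost)
```

Because the elites are copied unchanged, the best cost in the population never increases from one generation to the next.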

II-C Particle Swarm Optimization

Particle swarm optimization (PSO) follows a physical analogy of particles swarming around a solution with a given velocity. Particle solutions are randomly created and move around the solution space with a velocity determined by their personal best solution, the global best solution, and inertia. This allows the particles to explore the solution space while still being influenced by the swarm intelligence. Because phase values wrap around, the solution space is cyclical, and a maximum velocity limit was not found to be necessary; such a limit would have been set to the dynamic range of each variable, which is 2π radians.

The PSO used in this paper is derived from the implementation described in [15]. With regard to the synthesis of array patterns, a particle is defined as a set of subarray excitations. The variables utilized in the description of the PSO are defined as:

- The particles (subarray excitations) in the population.
- The velocities of the particles in the population.
- The best solution found by each particle (its personal best).
- Two acceleration constants for the velocity update rule.
- The inertia of the particle, used to preserve some of the prior velocity.
- A cost function that assigns an evaluation score to each particle.
- The number of particles to be included in the population of particles.
- An index of which particle is being evaluated.
- The index of the global best particle.
- The number of variables (dimensions of the solution space), that is, the number of independent subarray excitations.

The implemented PSO process can be described as:

1. Randomly generate a population of particles, where each particle is defined by a position and a velocity. Generate the velocities for each particle in the population from phase values.
2. While the number of iterations is less than a given maximum, repeat the following steps.
   - (a) For each particle, synthesize the array pattern and evaluate the generated pattern against the desired pattern via the cost function.
   - (b) Check each particle for a new personal best and check for a new global best.
   - (c) Calculate the new velocity of each particle from its inertia-weighted previous velocity, a randomly weighted pull toward its personal best, and a randomly weighted pull toward the global best, as given by (2).
   - (d) Add the velocity to the existing position to move the particles to new locations in the solution space.
3. Return the global best candidate set, that is, the one with the lowest cost function value.

For our purposes, the velocity calculation in Step 2 was modified from the equation given in [15] because phase is a circular quantity that wraps around at 2π radians, or 360 degrees. For example, if the current particle is at 10 degrees and its personal best is at 290 degrees, the shortest distance between them is not 280 degrees but 80 degrees in the negative direction, through the wrap-around at 360 degrees. If the calculated personal-best or global-best distance was greater than 180 degrees, 360 degrees was subtracted from it. Similarly, if the calculated distance was less than -180 degrees, 360 degrees was added to it. This adjustment ensures that the velocity of the particle has the correct magnitude and direction, so that the swarm converges more quickly to a global solution.
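A minimal NumPy sketch of the PSO loop with the wrap-around adjustment just described, using the standard inertia-weight velocity update associated with [15] and the Table III constants as defaults. Assumptions not taken from the paper: phases are continuous radians kept in [0, 2π) by a modulo (6-bit quantization could be applied inside the cost), initial velocities are uniform in [-π, π), and there is no velocity clamp. The names `wrap_diff` and `pso` and the smooth toy cost are hypothetical.

```python
import numpy as np


def wrap_diff(target, current):
    """Shortest signed phase difference target - current, in [-pi, pi].

    Subtracts 2*pi from differences above pi and adds 2*pi to differences
    below -pi; inputs are assumed to lie in [0, 2*pi).
    """
    d = np.asarray(target) - np.asarray(current)
    d = np.where(d > np.pi, d - 2 * np.pi, d)
    return np.where(d < -np.pi, d + 2 * np.pi, d)


def pso(cost, n_vars, n_particles=25, n_iters=800, w=0.729,
        c1=1.49445, c2=1.49445, seed=None):
    """Minimize `cost` over n_vars phases with an inertia-weight PSO."""
    rng = np.random.default_rng(seed)
    two_pi = 2 * np.pi
    x = rng.uniform(0.0, two_pi, (n_particles, n_vars))      # positions (phases)
    v = rng.uniform(-np.pi, np.pi, (n_particles, n_vars))    # assumed initial velocities
    p_best = x.copy()
    p_cost = np.full(n_particles, np.inf)
    g = 0
    for _ in range(n_iters):
        costs = np.array([cost(xi) for xi in x])              # step 2(a)
        improved = costs < p_cost                              # step 2(b)
        p_best[improved] = x[improved]
        p_cost[improved] = costs[improved]
        g = int(np.argmin(p_cost))
        r1 = rng.random((n_particles, n_vars))
        r2 = rng.random((n_particles, n_vars))
        # Step 2(c): inertia + personal-best pull + global-best pull, with
        # distances measured the short way around the phase circle.
        v = (w * v + c1 * r1 * wrap_diff(p_best, x)
             + c2 * r2 * wrap_diff(p_best[g], x))
        x = np.mod(x + v, two_pi)                              # step 2(d), no clamp
    return p_best[g], float(p_cost[g])


# Hypothetical smooth stand-in for the pattern cost, minimized at TARGET.
TARGET = np.radians([30.0, 300.0, 120.0, 200.0, 10.0, 90.0, 250.0, 170.0])
best, best_cost = pso(lambda ph: float(np.sum(1 - np.cos(ph - TARGET))),
                      n_vars=len(TARGET), seed=0)
print(np.degrees(wrap_diff(np.radians(290.0), np.radians(10.0))))  # the 10/290 degree example
```

The last line reproduces the example in the text: the difference from 10 degrees to 290 degrees is taken as -80 degrees rather than +280 degrees.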

III Mathematical Model - Cost Function

In this paper, a cost function determines how close the generated array factor is to the desired array factor. The desired array factor is defined as a normalized flat-top function with a main beam and sidelobes. The main beam consists of a desired angular width with an amplitude of 0 dB. The sidelobes are the points outside this main beam, with a desired sidelobe level defined in negative dB. The generated array factor is computed by taking the IFFT of the element excitations and is normalized before it is compared with the desired array factor. The cost function is defined as:

| (3) |

where the main-beam error is calculated over the points that are in the main beam and below 0 dB, and is defined as:

| (4) |

and the sidelobe error is calculated over the points that are outside the main beam and above the desired sidelobe level, and is defined as:

| (5) |

.

The logarithm is used in (3) to reduce the values returned to a more condensed range so that the convergence rate of the cost function can be more easily visualized. For a more tangible performance measure, (3) can be converted to a percentage pattern effectiveness metric as

| (6) |

where the normalizing count is the number of angular points at which the generated array factor is compared with the desired array factor.
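A mask-based cost of this general form can be sketched as follows. The specifics are assumptions rather than the exact expressions in (3) to (5): errors are squared dB violations, the main beam is a circular region of half-width 6 degrees (for the 12-degree beam used in Section IV) around broadside in direction-cosine (u, v) space, only visible space (u² + v² ≤ 1) is scored, and the logarithm is a natural log of one plus the summed error. The name `flat_top_cost` is hypothetical.

```python
import numpy as np


def flat_top_cost(pattern_db, u, v, beam_width_deg=12.0, sll_db=-13.0):
    """Hypothetical flat-top mask cost: log of summed squared dB violations."""
    r = np.hypot(u, v)                        # distance from broadside in (u, v)
    in_beam = r <= np.sin(np.radians(beam_width_deg / 2))
    sidelobe = (r <= 1.0) & ~in_beam          # visible space outside the main beam
    # Main-beam error: only points that fall below the 0 dB flat top.
    e_mb = np.sum(np.minimum(pattern_db[in_beam], 0.0) ** 2)
    # Sidelobe error: only points that rise above the desired sidelobe level.
    e_sl = np.sum(np.maximum(pattern_db[sidelobe] - sll_db, 0.0) ** 2)
    return float(np.log1p(e_mb + e_sl))       # logarithm compresses the cost range


axis = np.linspace(-1.0, 1.0, 201)
U, V = np.meshgrid(axis, axis)
ideal = np.where(np.hypot(U, V) <= np.sin(np.radians(6.0)), 0.0, -20.0)
print(flat_top_cost(ideal, U, V))             # 0.0: the pattern satisfies the mask
print(flat_top_cost(np.zeros_like(U), U, V))  # positive: sidelobes at 0 dB are penalized
```

Only one-sided violations are penalized, so a main beam at 0 dB and sidelobes anywhere below the desired level cost nothing.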

IV Results

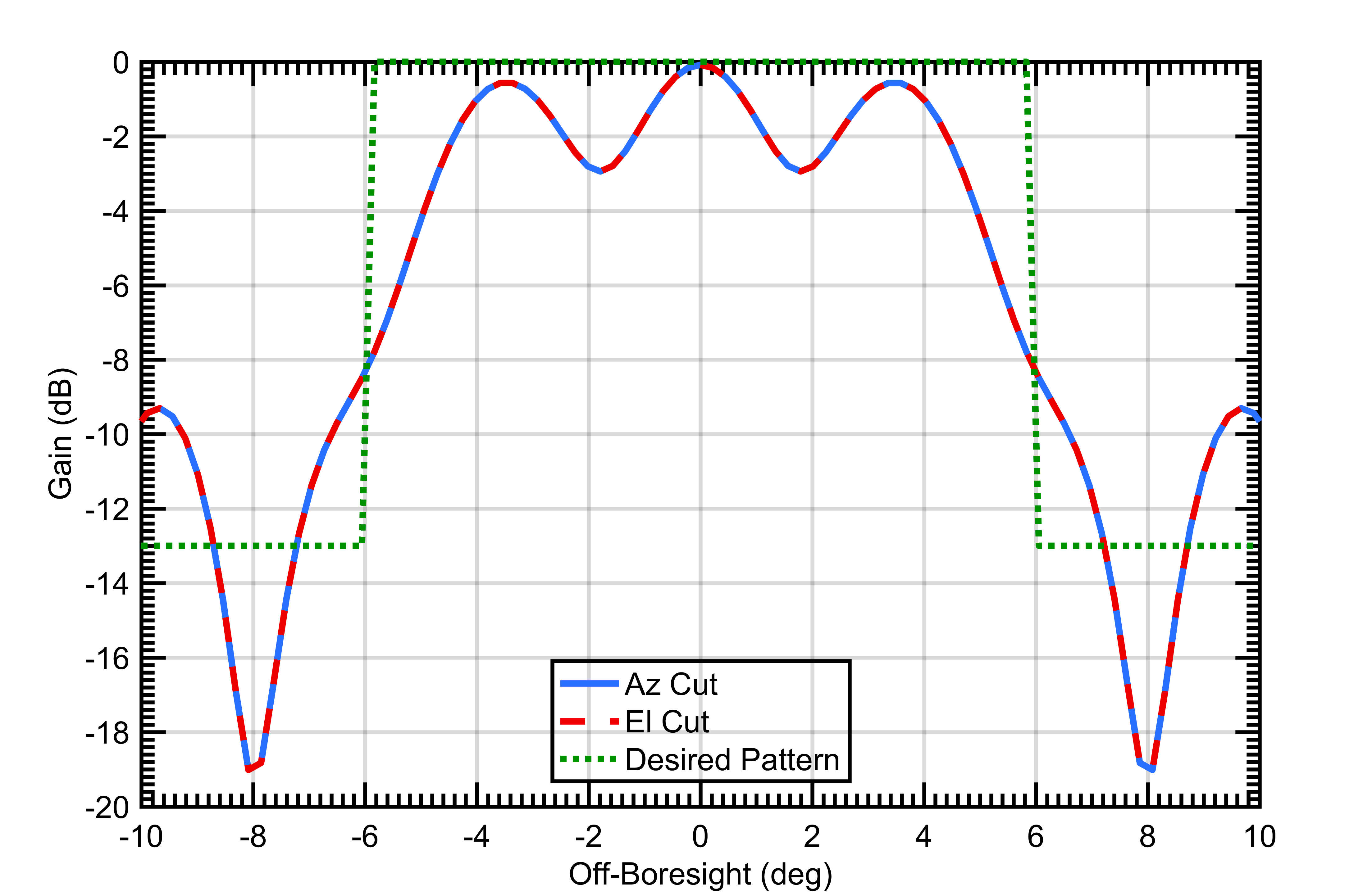

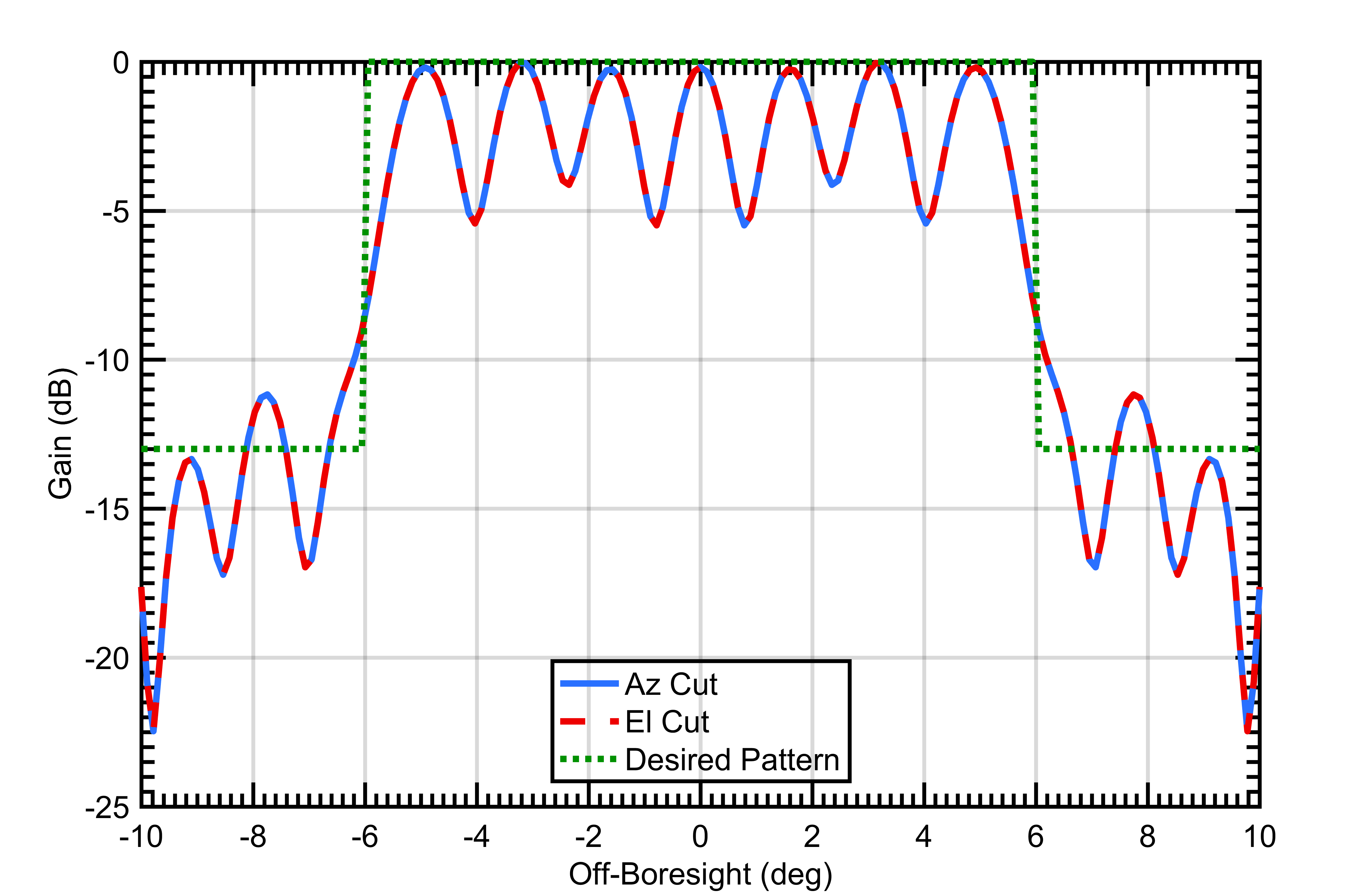

The results presented in this paper were generated using a computer simulation that synthesized an approximation of the array pattern where each element was assumed to be an isotropic radiator. The array pattern was generated with an FFT-based approach for computational efficiency. This simulation contained adjustable parameters that allowed arbitrary arrays to be modeled based on the number of elements in each subarray, the number of subarrays in the array, the spacing between elements, and the number of bits for each phase shifter. The desired antenna pattern was described using parameters for width (12 degrees) of the broadened main beam and maximum sidelobe level (-13 dB).
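A minimal sketch of such an FFT-based pattern synthesis, assuming isotropic elements on a half-wavelength square grid, unit amplitudes (phase-only), and rounding of each subarray phase to the nearest 6-bit phase state. Each subarray phase is copied to its 5x5 elements with `np.kron`, and the zero-padded 2D IFFT then samples the array factor over direction cosines u, v in [-1, 1). The function names and the 256-point FFT size are illustrative choices.

```python
import numpy as np


def subarray_pattern_db(sub_phase_rad, sub_size=5, n_fft=256, bits=6):
    """Normalized 2D pattern (dB) of a phase-only array of contiguous uniform subarrays.

    Assumes isotropic elements on a half-wavelength square grid, so the
    zero-padded, fftshifted IFFT samples direction cosines u, v in [-1, 1).
    """
    # Round each subarray phase to the nearest state of a `bits`-bit phase shifter.
    step = 2 * np.pi / 2**bits
    q_phase = np.round(np.asarray(sub_phase_rad, dtype=float) / step) * step
    # Contiguous uniform subarrays: every element inherits its subarray's phase.
    elem_phase = np.kron(q_phase, np.ones((sub_size, sub_size)))
    excitation = np.exp(1j * elem_phase)                 # unit amplitude (phase-only)
    af = np.fft.fftshift(np.fft.ifft2(excitation, s=(n_fft, n_fft)))
    power = np.abs(af) ** 2
    # Normalize to the peak and floor at -120 dB to avoid log(0).
    return 10 * np.log10(np.maximum(power / power.max(), 1e-12))


def uv_grid(n_fft=256):
    """Direction-cosine value of each fftshifted bin for half-wavelength spacing."""
    return (np.arange(n_fft) - n_fft // 2) * 2.0 / n_fft


rng = np.random.default_rng(0)
pattern = subarray_pattern_db(rng.uniform(0, 2 * np.pi, (8, 8)))  # 8x8 subarrays = 40x40 elements
u = uv_grid()
```

With half-wavelength spacing, FFT bin m corresponds to u = 2m/N, which is why `uv_grid` spans exactly [-1, 1).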

The simulation was configured to model rectangular arrays of 40x40 and 80x80 elements, with the elements uniformly spaced a half wavelength apart. The rectangular arrays were grouped into contiguous uniform subarrays of 5x5 elements; thus, the 40x40 array had 8x8 subarrays and the 80x80 array had 16x16 subarrays. Each array was also configured to use 6-bit phase shifters. These array configurations create solution spaces with 64^64 = 2^384 and 64^256 = 2^1536 possible phase combinations for the respective arrays.

A few techniques were used in the simulation to shrink these solution spaces. First, array symmetry is assumed in order to simplify the problem: subarrays equidistant from the array center are set to the same phase. Assuming symmetry reduces the 40x40 array to only 9 independent phase settings instead of 64, and the 80x80 array to 32 instead of 256. Also, since only the relative phase of the elements and subarrays matters, the innermost subarrays were fixed to zero phase, reducing the number of independent phase settings to 8 and 31 for the respective arrays. Therefore, assuming symmetry and fixing the innermost subarray phase to zero shrinks the solution spaces to 64^8 = 2^48 and 64^31 = 2^186 possible phase combinations for the respective arrays.
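The counts above can be checked with a short script, assuming "equidistant" means equal Euclidean distance between a subarray center and the array center. Counting distinct squared distances on the 8x8 and 16x16 subarray grids reproduces the 9 and 32 independent phases; fixing the innermost ring leaves 8 and 31. `independent_phases` is a hypothetical helper.

```python
from itertools import product


def independent_phases(n_sub):
    """Distinct subarray-to-center distances in an n_sub x n_sub subarray grid.

    Subarrays equidistant from the array center share one phase. Offsets are
    doubled (odd integers for even n_sub) so squared distances stay exact.
    """
    offsets = [2 * i - (n_sub - 1) for i in range(n_sub)]
    return len({x * x + y * y for x, y in product(offsets, repeat=2)})


BITS = 6  # 6-bit phase shifters -> 64 states per independent phase
for n_elem, sub in [(40, 5), (80, 5)]:
    n_sub = n_elem // sub
    sym = independent_phases(n_sub)
    free = sym - 1  # innermost ring fixed to zero phase
    print(f"{n_elem}x{n_elem}: {n_sub * n_sub} subarrays -> {sym} symmetric -> "
          f"{free} free; 2^{BITS * n_sub * n_sub} -> 2^{BITS * free} states")
```

It prints 64 subarrays reduced to 9 and then 8 free phases (2^384 down to 2^48) for the 40x40 array, and 256 reduced to 32 and then 31 (2^1536 down to 2^186) for the 80x80 array.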

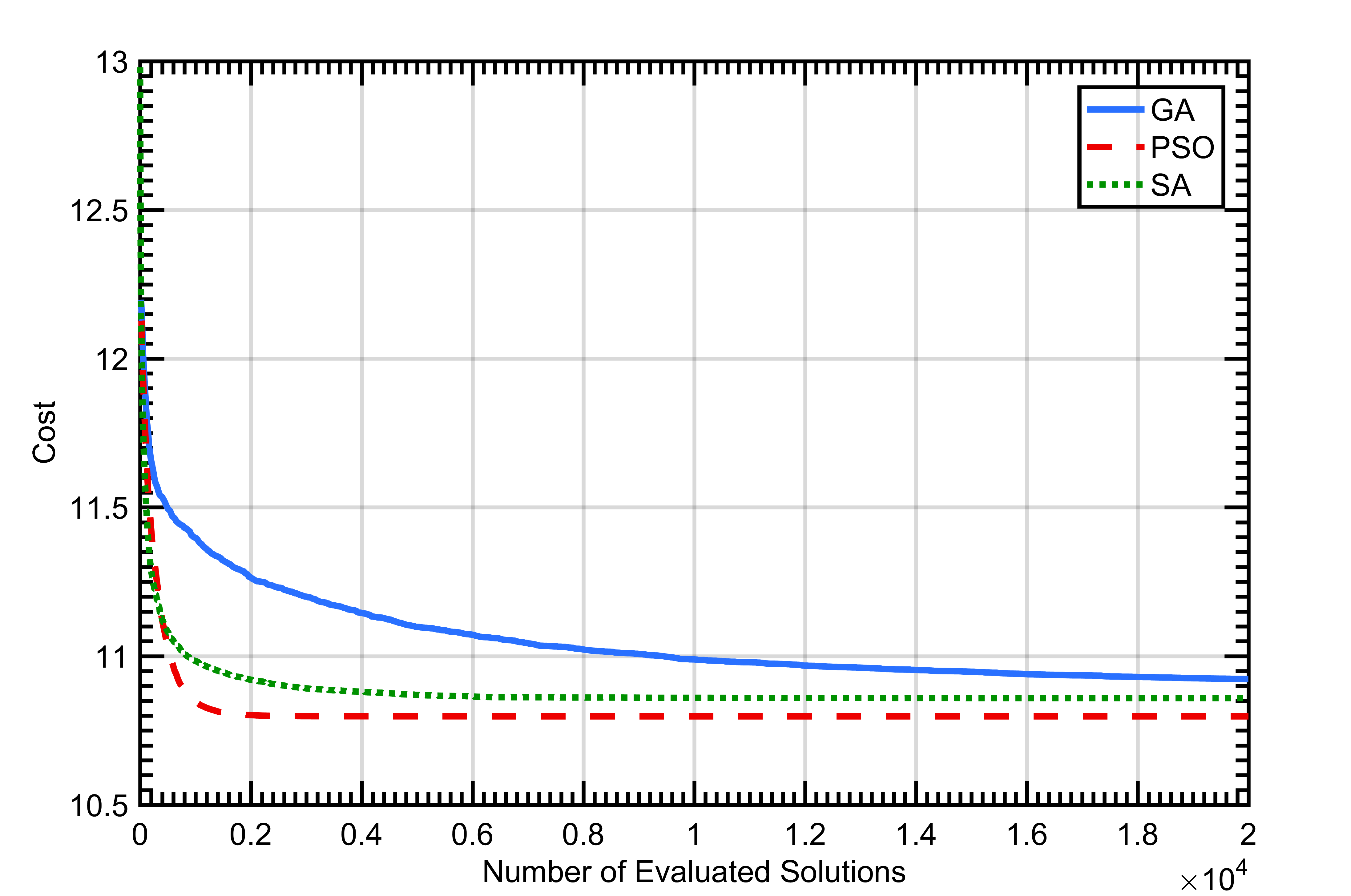

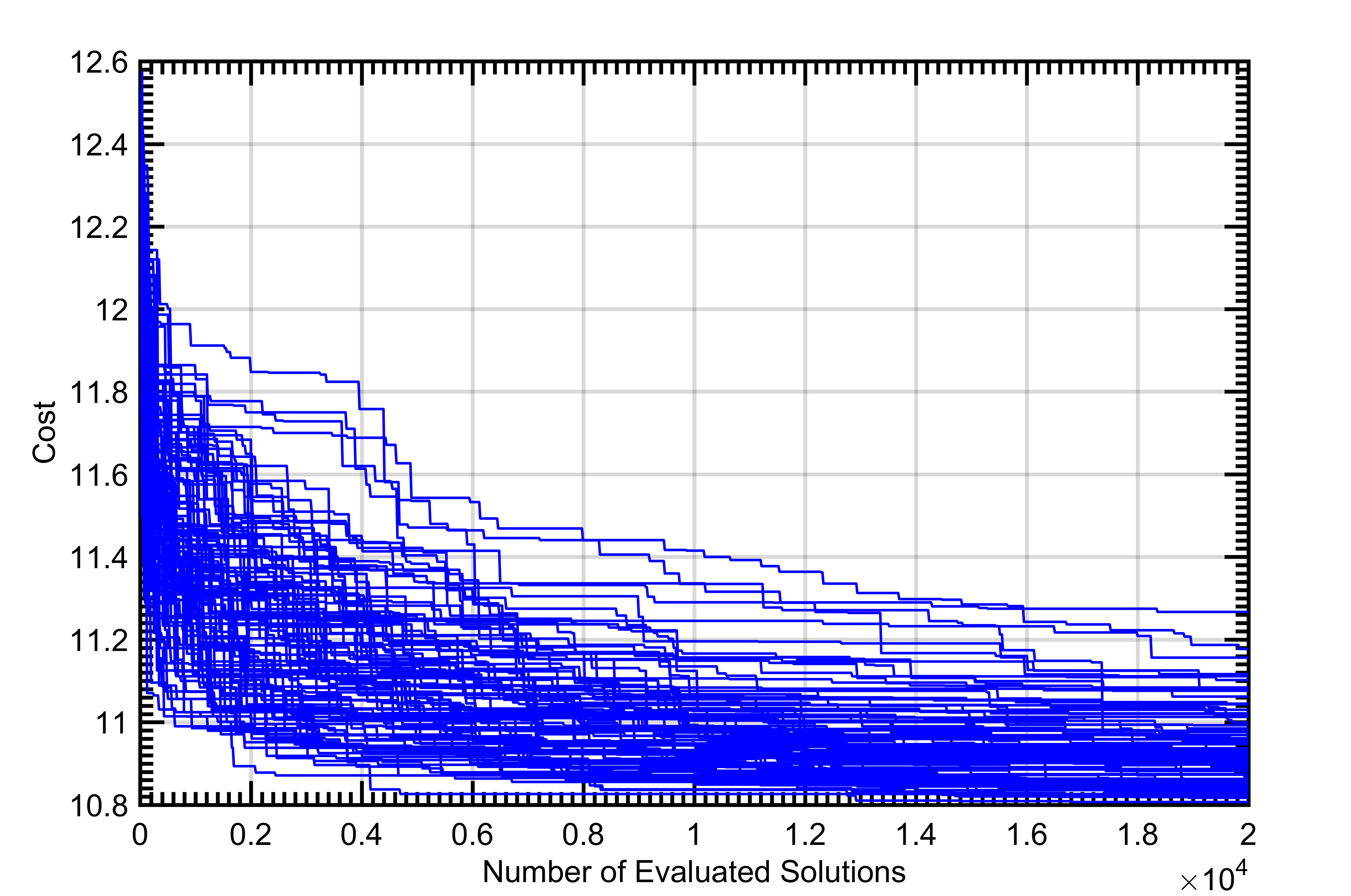

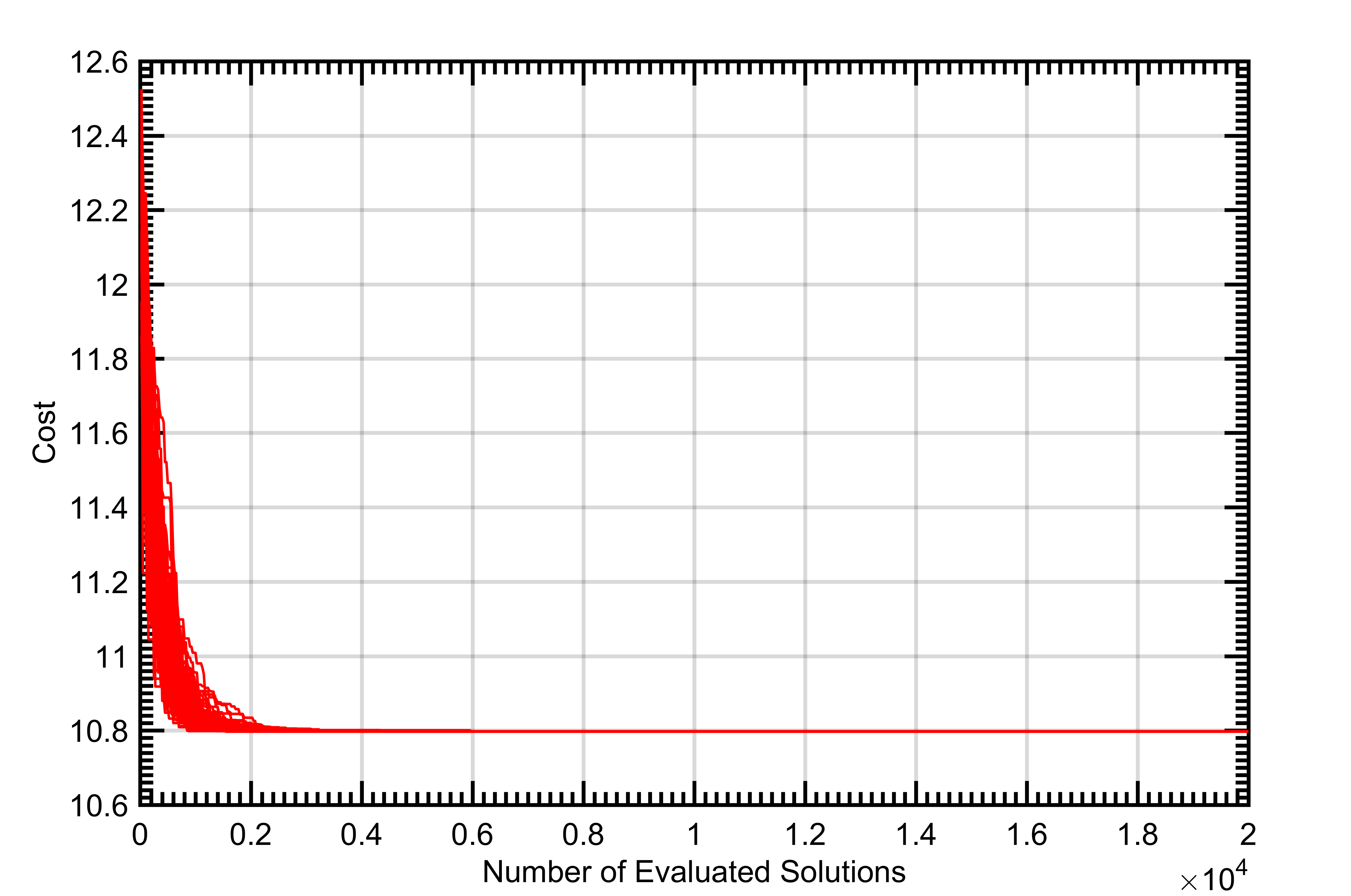

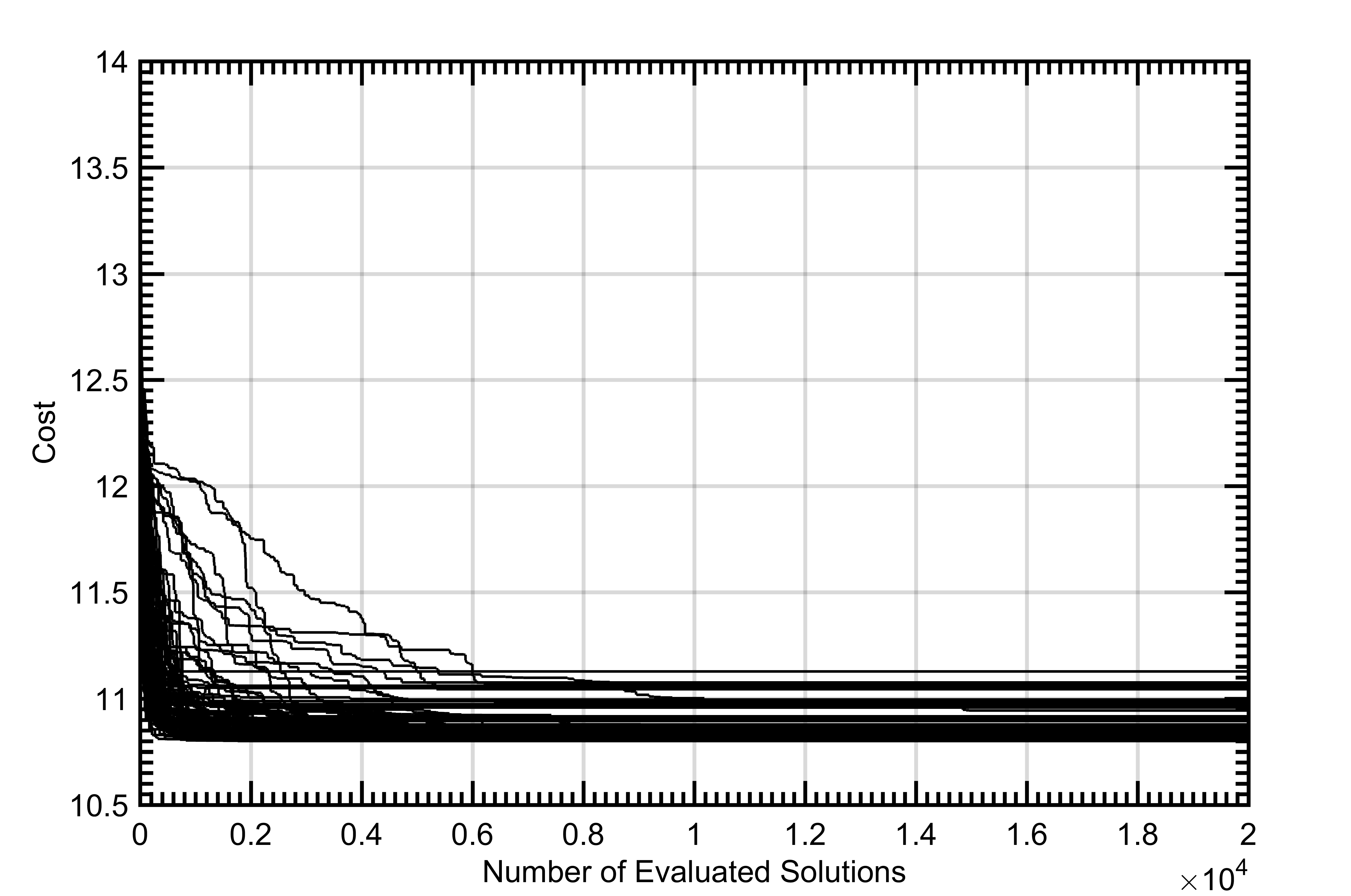

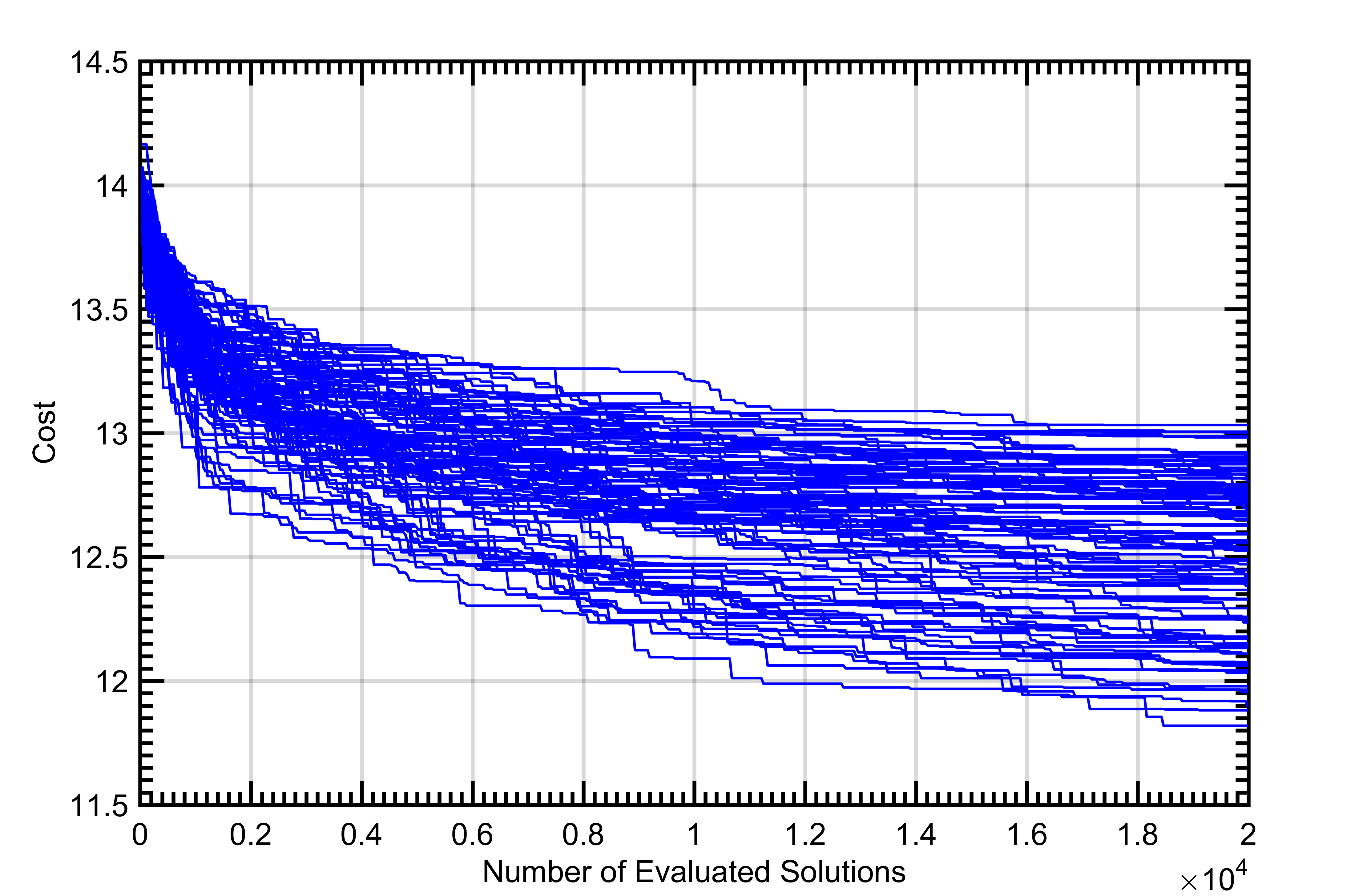

To evaluate the stochastic algorithms fairly, we allowed each algorithm to run until it had evaluated 20,000 solutions. At each iteration of an algorithm, we recorded the cost of the best solution found so far, forming a convergence curve. Since the SA, GA, and PSO algorithms are not deterministic, each was executed 100 times with the same settings to obtain meaningful statistics. The convergence curves from the 100 executions were averaged to form a mean convergence curve for each algorithm.
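A minimal NumPy sketch of how best-so-far curves can be averaged across repeated executions. It assumes each run's costs are logged per cost-function evaluation, so that SA (one evaluation per iteration) and GA or PSO (one population per iteration) share the same horizontal axis. `mean_convergence` is a hypothetical helper.

```python
import numpy as np


def mean_convergence(cost_traces):
    """Mean best-so-far convergence curve over repeated runs.

    cost_traces: array-like of shape (n_runs, n_evals) holding the cost of each
    evaluated candidate, in evaluation order, for each independent run.
    """
    traces = np.asarray(cost_traces, dtype=float)
    best_so_far = np.minimum.accumulate(traces, axis=1)  # running minimum per run
    return best_so_far.mean(axis=0)                      # average across runs


curve = mean_convergence([[3.0, 1.0, 2.0], [5.0, 4.0, 6.0]])
print(curve)
```

Here the per-run best-so-far curves are [3, 1, 1] and [5, 4, 4], so the mean curve is [4, 2.5, 2.5].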

For SA, constants in Table I were selected from ranges in [16]. The normalization constant was chosen so that many worse solutions are accepted at the beginning but fewer as the temperature nears 0, and it was based on the costs obtained from the cost function. The logarithm used in the cost function was important for ensuring that the normalization constant remained a satisfactory value for the duration of the algorithm as the cost decreased. Since the cost function involves a 2D summation of errors, differing patterns can differ in cost by orders of magnitude, and the logarithm allows the normalization constant to better cover the range of costs. The temperature decrease constant was selected to cover the range of temperatures over the course of the algorithm; its value is fairly standard. In addition, the number of iterations between temperature changes was selected to allow an appropriate number of temperature changes given the approximate number of cost function evaluations desired.

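| Temperature decrease constant | Normalization constant | Number of temperature changes | Iterations between temperature changes |
|---|---|---|---|
| 0.97 | 0.01 | 200 | 100 |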

Constants in Table II were empirically determined using a limited parameter search and could likely be improved with a more extensive search, which is left as an area for future study. Using a quarter of the population as elites in the parent selection was found to improve convergence. Because elitism preserves genes in the GA, the crossover fraction and the mutation rate were both set higher to increase the rate at which new solutions are tested. The population size was selected based on the rule of thumb that population sizes between 20 and 50 are usually sufficient. For the 40x40 array, with its smaller solution space, a population of size 25 was used. For the 80x80 array, due to its larger solution space, a population of size 50 was used.

| r | | | |
|---|---|---|---|
| 40x40 | 0.07 | 1 | 25 |
| 80x80 | 0.07 | 1 | 50 |

Constants in Table III utilized in the PSO algorithm were chosen based on [15]. To allow a fair comparison between the GA and PSO algorithms, the same population sizes were used for each algorithm.

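| Array | Inertia | Acceleration constant 1 | Acceleration constant 2 | Population size |
|---|---|---|---|---|
| 40x40 | 0.729 | 1.49445 | 1.49445 | 25 |
| 80x80 | 0.729 | 1.49445 | 1.49445 | 50 |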
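As a worked check on the PSO constants (an observation about the numbers, not a claim made in [15]): the inertia value 0.729 matches the Clerc-Kennedy constriction coefficient for φ = c1 + c2 = 4.1, and 1.49445 equals 0.729 × 2.05, the constriction coefficient times the unconstricted acceleration constant. The same arithmetic gives the number of PSO iterations (or GA generations, since each evaluates the whole population) that the 20,000-evaluation budget allows for each population size.

```latex
\begin{aligned}
\chi &= \frac{2}{\left|2 - \varphi - \sqrt{\varphi^{2} - 4\varphi}\right|}
      = \frac{2}{\left|2 - 4.1 - \sqrt{0.41}\right|} \approx 0.7298, \\
c_1 = c_2 &= 0.729 \times 2.05 = 1.49445, \\
N_{\text{iter}} &= 20\,000 / 25 = 800 \ (40\times40), \qquad 20\,000 / 50 = 400 \ (80\times80).
\end{aligned}
```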

IV-A 40x40 Results

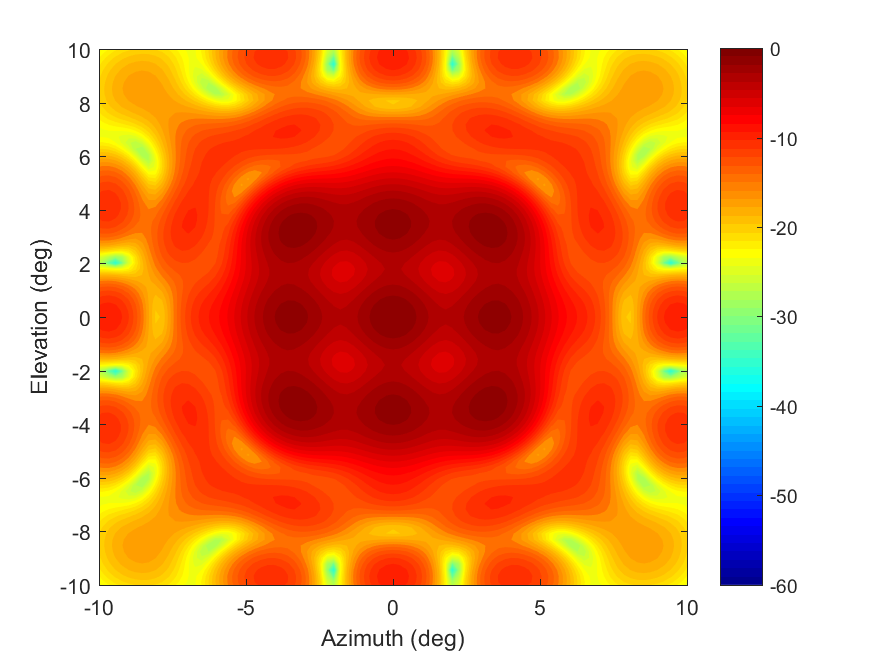

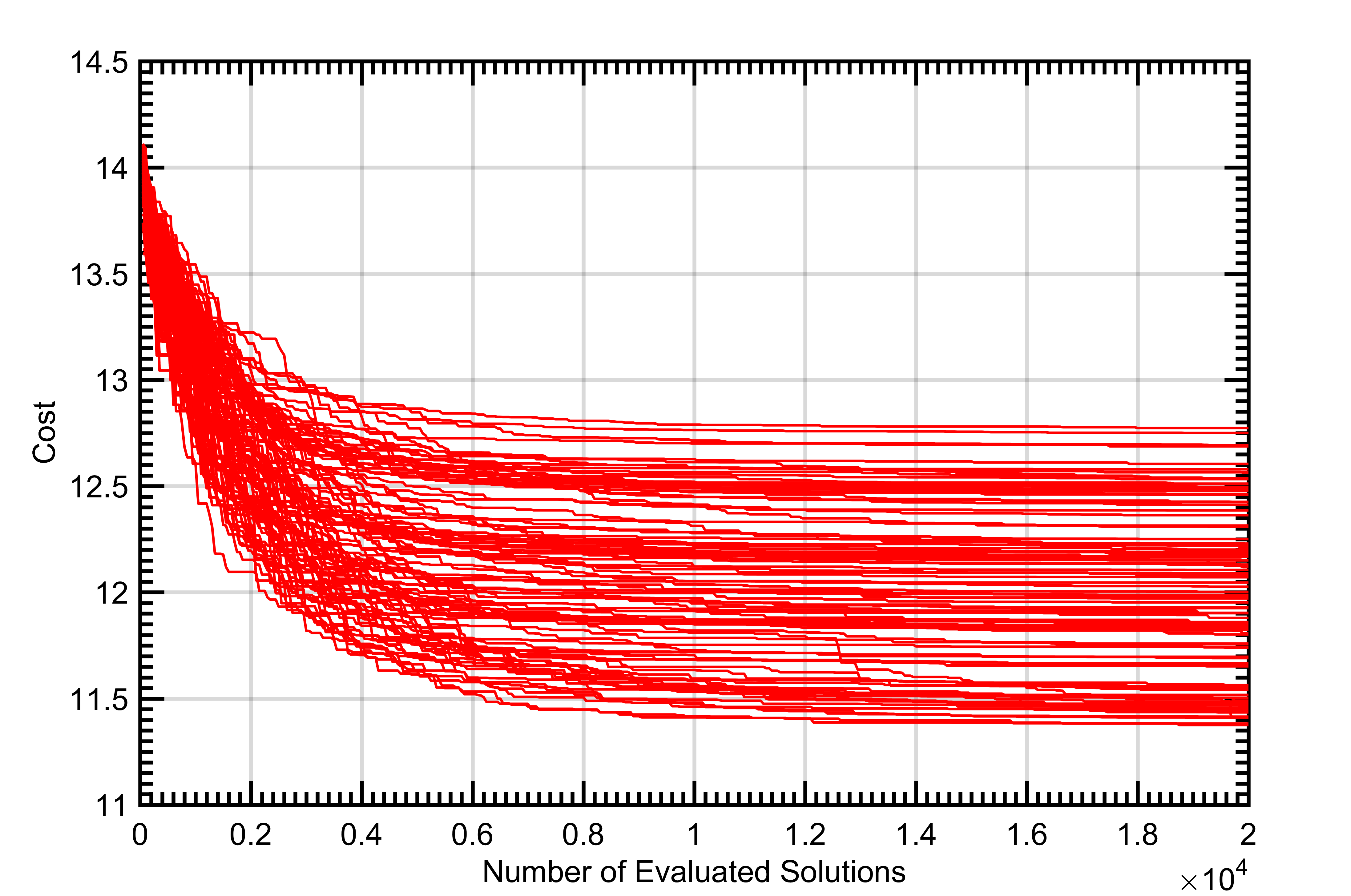

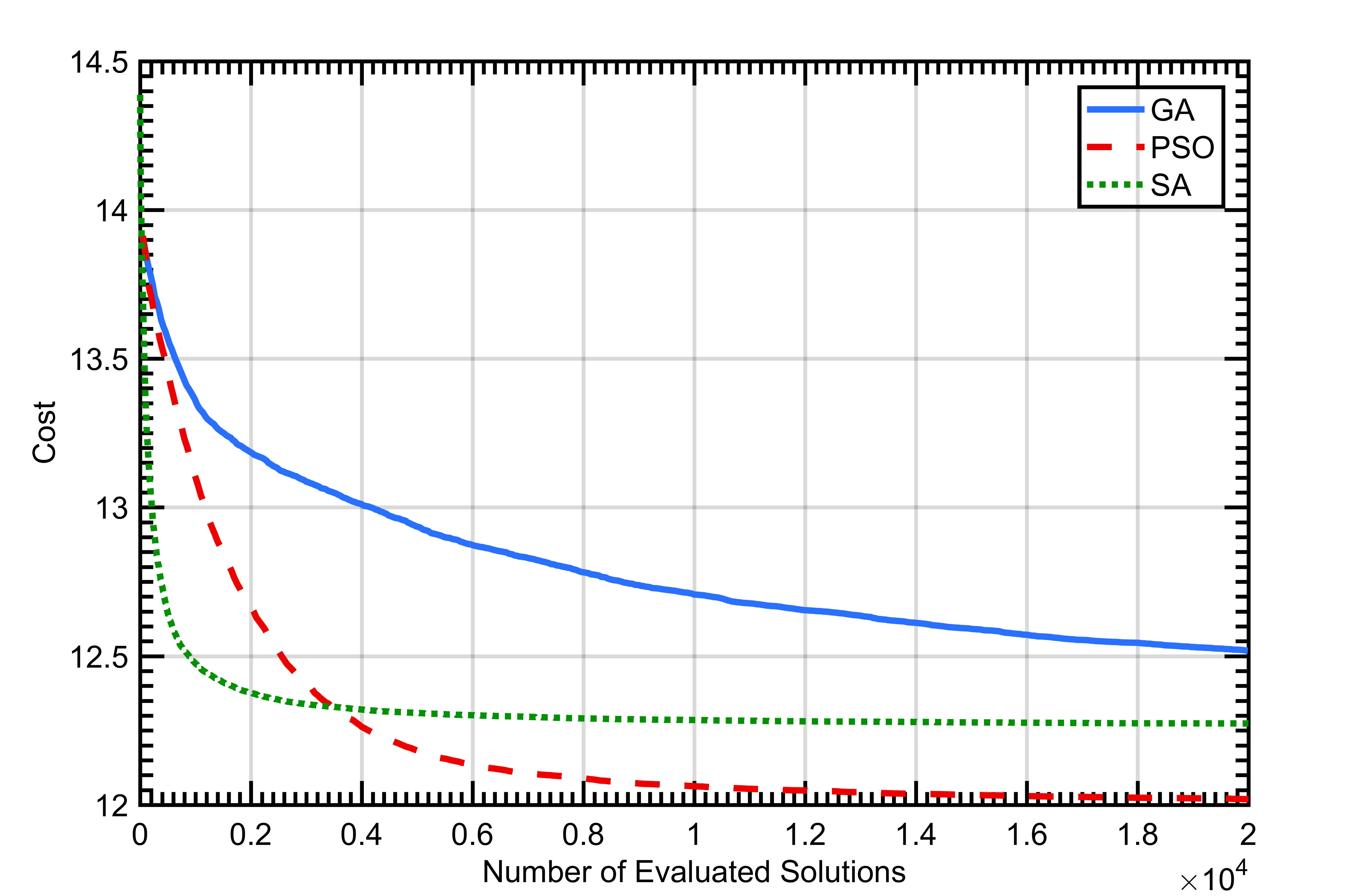

All the algorithms performed well on the 40x40 array, as can be seen in Figure 1.

On average, PSO and SA performed more efficiently than GA. However, the best solutions from the repeated executions of all the algorithms had the same cost and the same phase values. Therefore, all three algorithms were equally effective and converged to the same global optimum solution in at least some of the 100 executions performed for each algorithm. Figure 2 shows the convergence of each of the 100 executions of each algorithm for the 40x40 array.

The pattern of the best solution is seen in Figure 3. The cost of the solution was 10.8 after 20,000 cost function evaluations.

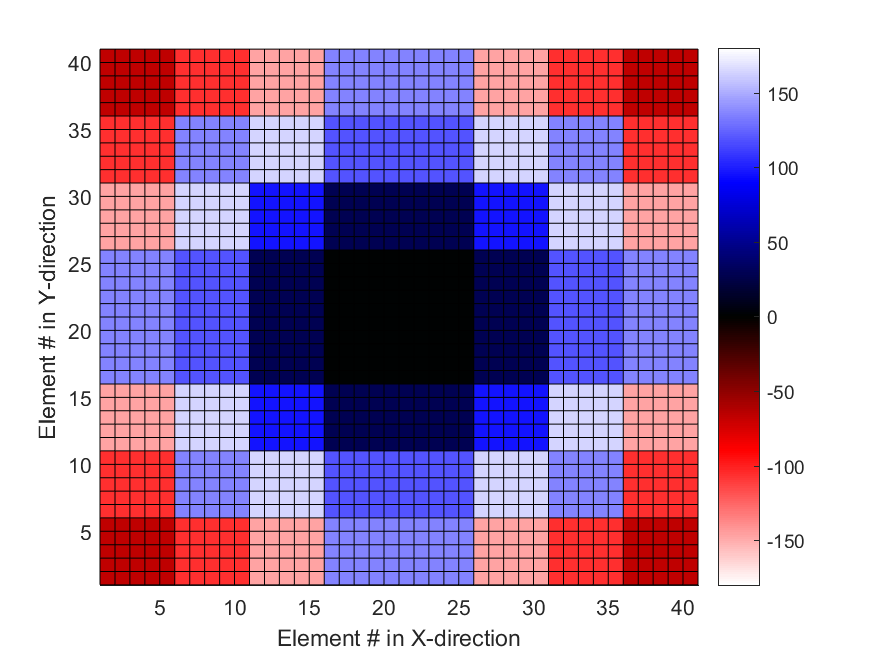

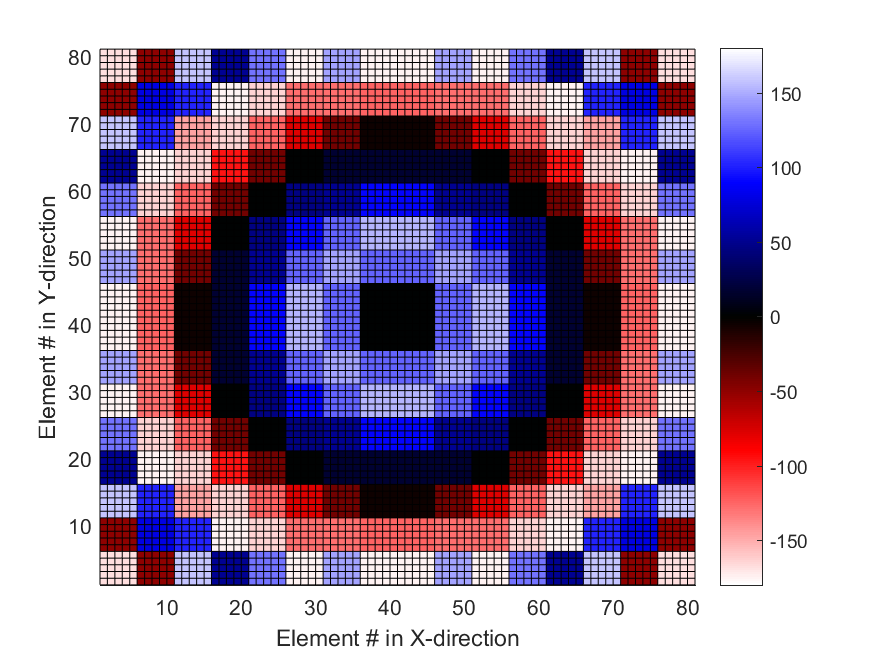

The phase values of the subarrays for this best solution are shown in Figure 4. The phase values form roughly concentric rings of similar phase. This is difficult to see in this smaller array and is more clearly observed in the larger 80x80 array.

IV-B 80x80 Results

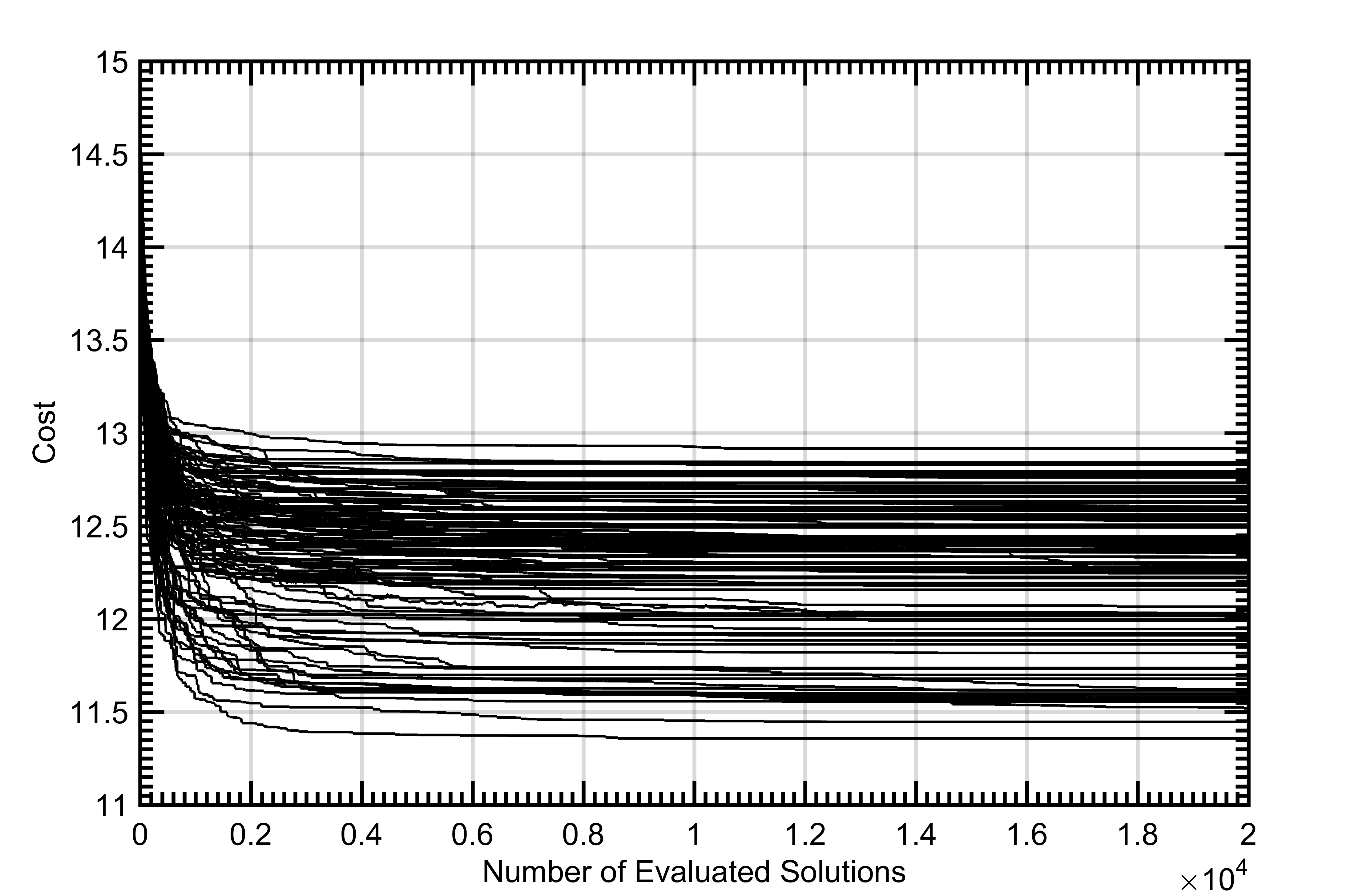

The performance of the algorithms on the 80x80 array, as seen in Figure 6, varies more significantly than on the 40x40 array. In general, all three algorithms had more difficulty finding the global optimum and were prone to getting stuck in local optima. This is shown in Figure 5, which shows the convergence of each of the 100 executions of each algorithm for the 80x80 array.

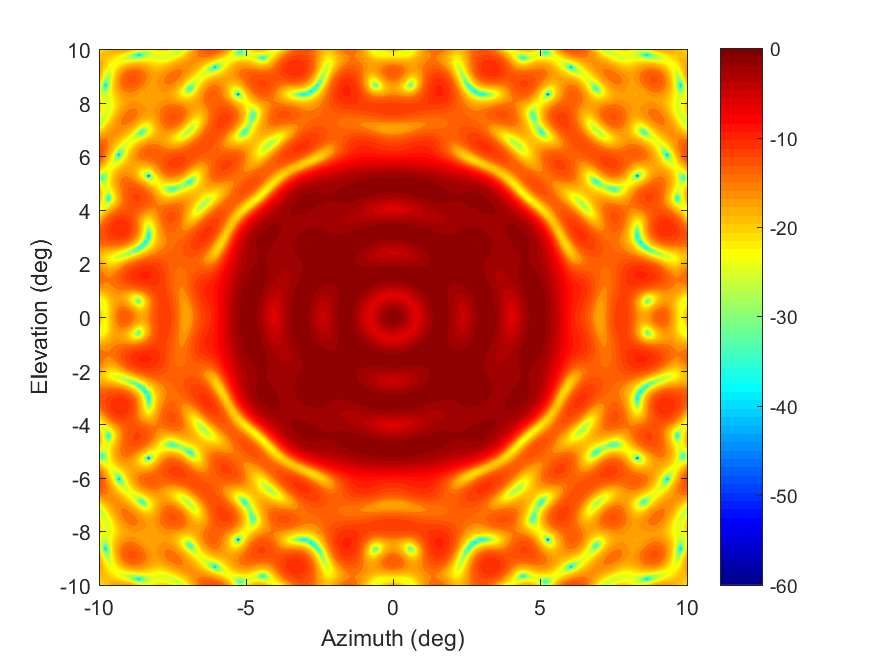

The pattern of the best solution for the 80x80 array is seen in Figure 7. The cost of the best solution was 11.36 after 20,000 cost function evaluations.

The phase values of the subarrays for this best solution are shown in Figure 8. Concentric rings of phase are clearly visible.

V Summary and Conclusions

In the 40x40 radar architecture, all three algorithms in this study found the global optimum in at least some of their 100 executions. On average, PSO was slightly more effective than SA. While SA started with the steepest convergence (Figure 1), it tended to get stuck in local optima more frequently than PSO (Figure 2). Both PSO and SA outperformed the genetic algorithm. However, each metaheuristic technique can be configured to manage the speed of convergence and the probability that the convergence stalls in a local optimum instead of the global optimum. The overall poorer performance of the GA compared with PSO and SA is likely influenced by the GA configuration, and the GA would likely show more comparable performance if more time were spent tuning its configuration. Such tuning is outside the scope of this study and is an area of future work.

In the 80x80 radar architecture, the algorithms showed larger differences in their efficiency and effectiveness. Simulated annealing again showed the steepest convergence descent (Figure 6) and found the best solution of any of the algorithms (a cost of 11.36), but it still suffered from stalling in local optima (Figure 5). This is reflected in PSO showing better average effectiveness than SA even though SA's best solution was slightly better than PSO's best solution. Again, GA performed worse than SA and PSO, but it had less difficulty with stalling in local optima. In Figure 7, note that the ripples in the array pattern are deeper on the azimuth and elevation axes than at other orientations. While the nulls in the main beam are deeper than desired, measurements of similar arrays typically smooth the nulls seen in simulated patterns, so in practice the nulls are not as deep as simulation can sometimes predict.

In this study, we explore the ability of SA, GA, and PSO to generate desired beam broadened array patterns in subarrayed arrays using phase-only modification of element excitations. We have adapted and configured each algorithm for use with the problem domain, especially the PSO algorithm where the velocity calculation needed to be modified because phase is a circular function. We find that SA and PSO are more effective and efficient than GA for the configurations shown in this study. SA is more efficient than PSO, but PSO is on average more effective than SA.

Future studies can examine these techniques for larger subarrayed arrays to better understand their effectiveness. Comparisons of these techniques for both subarrayed and non-subarrayed arrays, with and without symmetry, are another potential path for research. Also, using a supercomputing cluster could allow verification of the global optimum for these array configurations and better quantify the optimality of the solutions found by these techniques.

References

- [1] Appasani Bhargav and Nisha Gupta. Multiobjective genetic optimization of nonuniform linear array with low sidelobes and beamwidth. IEEE Antennas and Wireless Propagation Letters, 12:1547–1549, 2013.

- [2] R. L. Haupt. Optimized weighting of uniform subarrays of unequal sizes. IEEE Transactions on Antennas and Propagation, 55(4):1207–1210, 2007.

- [3] X. Huang, Y. H. Liu, P. F. You, J. Yang, and Q. H. Liu. Efficient phase-only linear array synthesis including mutual coupling and platform effect. In 2016 IEEE International Conference on Computational Electromagnetics (ICCEM), pages 328–329, 2016.

- [4] J. C. Kerce, G. C. Brown, and M. A. Mitchell. Phase-only transmit beam broadening for improved radar search performance. In 2007 IEEE Radar Conference, pages 451–456, 2007.

- [5] T-S Lee and T-K Tseng. Subarray-synthesized low-side-lobe sum and difference patterns with partial common weights. IEEE Transactions on Antennas and Propagation, 41(6):791–800, 1993.

- [6] Luca Manica, Paolo Rocca, and Andrea Massa. Design of subarrayed linear and planar array antennas with SLL control based on an excitation matching approach. IEEE Transactions on Antennas and Propagation, 57(6):1684–1691, 2009.

- [7] Luca Manica, Paolo Rocca, Giacomo Oliveri, and Andrea Massa. Synthesis of multi-beam sub-arrayed antennas through an excitation matching strategy. IEEE Transactions on Antennas and Propagation, 59(2):482–492, 2011.

- [8] Tom M. Mitchell. Machine Learning. McGraw-Hill, Boston, MA, 1997.

- [9] Giacomo Oliveri. Multibeam antenna arrays with common subarray layouts. IEEE Antennas and Wireless Propagation Letters, 9:1190–1193, 2010.

- [10] S. Rajagopal. Beam broadening for phased antenna arrays using multi-beam subarrays. In 2012 IEEE International Conference on Communications (ICC), pages 3637–3642, 2012.

- [11] Paolo Rocca, Randy L Haupt, and Andrea Massa. Sidelobe reduction through element phase control in uniform subarrayed array antennas. IEEE Antennas and Wireless Propagation Letters, 8:437–440, 2009.

- [12] Paolo Rocca, Luca Manica, Renzo Azaro, and Andrea Massa. A hybrid approach to the synthesis of subarrayed monopulse linear arrays. IEEE Transactions on Antennas and Propagation, 57(1):280–283, 2009.

- [13] Paolo Rocca, Luca Manica, and Andrea Massa. Hybrid approach for sub-arrayed monopulse antenna synthesis. Electronics Letters, 44(2):75–76, 2008.

- [14] Günter Rudolph. Convergence analysis of canonical genetic algorithms. IEEE Transactions on Neural Networks, 5(1):96–101, 1994.

- [15] Yuhui Shi et al. Particle swarm optimization: developments, applications and resources. In Proceedings of the 2001 Congress on Evolutionary Computation, volume 1, pages 81–86. IEEE, 2001.

- [16] Steven S. Skiena. The Algorithm Design Manual, volume 1. Springer Science & Business Media, 1998.

- [17] Jinghai Song, Huili Zheng, and Lin Zhang. Application of particle swarm optimization algorithm and genetic algorithms in beam broadening of phased array antenna. In 2010 International Symposium on Signals Systems and Electronics (ISSSE), volume 1, pages 1–4. IEEE, 2010.

- [18] A Trastoy and F Ares. Phase-only control of antenna sum patterns. Progress In Electromagnetics Research, 30:47–57, 2001.

- [19] Ya-Qing Wen, Bing-Zhong Wang, and Xiao Ding. A wide-angle scanning and low sidelobe level microstrip phased array based on genetic algorithm optimization. IEEE Transactions on Antennas and Propagation, 64(2):805–810, 2016.

- [20] Song-Han Yang and Jean-Fu Kiang. Adjustment of beamwidth and side-lobe level of large phased-arrays using particle swarm optimization technique. IEEE Transactions on Antennas and Propagation, 62(1):138–144, 2014.