∗ This is the authors’ version of a survey to appear in the proceedings of the workshop “AI+Sec: Artificial Intelligence and Security”, which was held 2–6 December, 2019, in Lorentz Center (for more details, see https://www.lorentzcenter.nl/aisec-artificial-intelligence-and-security.html [Accessed February 11, 2021]). This chapter also partially covers what has been presented in the tutorial “Security of PUFs: Lessons Learned after Two Decades of Research” given at CHES 2019.

Physically Unclonable Functions and AI

Abstract

The current chapter aims at establishing a relationship between artificial intelligence (AI) and hardware security. Such a connection between AI and software security has been confirmed and well-reviewed in the relevant literature. The main focus here is to explore the methods borrowed from AI to assess the security of a hardware primitive, namely physically unclonable functions (PUFs), which have found applications in cryptographic protocols, e.g., authentication and key generation. Metrics and procedures devised for this purpose are further discussed. Moreover, by reviewing PUFs designed by applying AI techniques, we give insight into future research directions in this area.

“The design of cryptographic systems must be based on firm foundations, whereas ad-hoc approaches and heuristics are a very dangerous way to go.”

1 Introduction

In order to realize a cryptographic protocol or primitive, the assumptions made during design must hold. These assumptions relate in particular to secure key storage and secure execution of the protocol/primitive, which have proven hard to attain in practice. The notion of root-of-trust has been introduced to deal with this by providing adequate reasoning in relation to physical security, i.e., the guarantee to resist certain physical attacks, cf. maes2013. In this regard, physically unclonable functions (PUFs) have been identified as a promising solution to secure key generation and storage issues gassend2002silicon ; gassend2004identification.

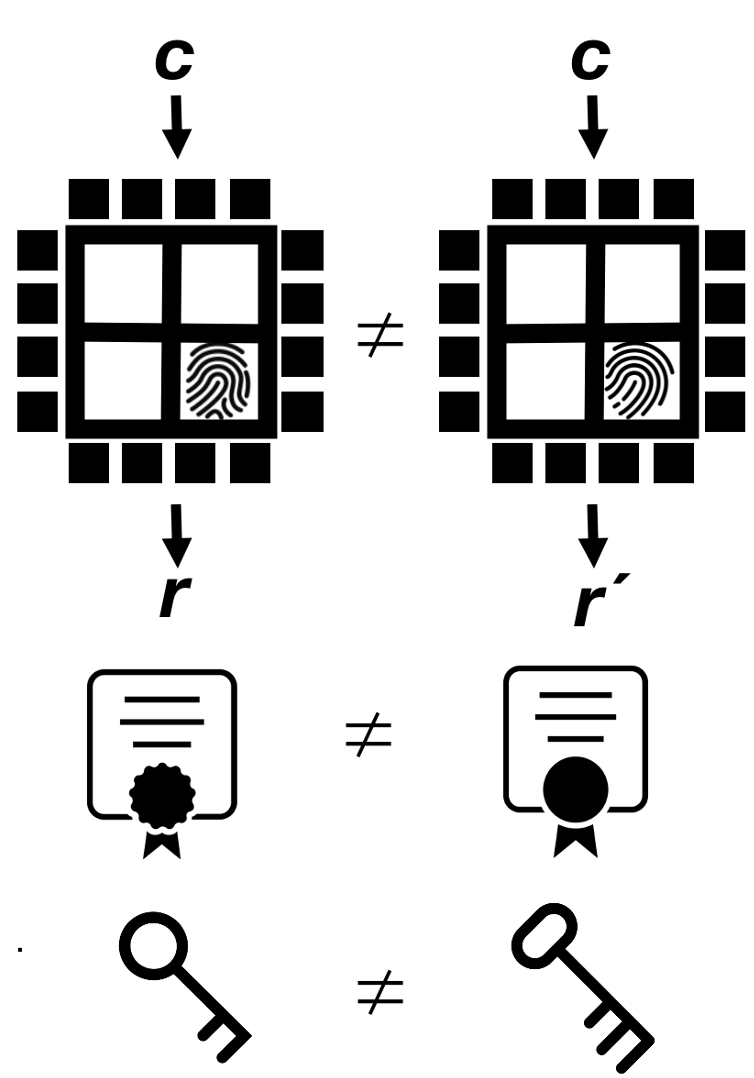

The key premise of PUFs is that physical characteristics of the system embodying the PUF can be used to derive an instance-specific feature of that system, which is inherent and unclonable maes2013 (see Figure 1). Among the variants of PUFs considered in the literature are the so-called “intrinsic” PUFs, where the above feature (1) is the result of the production process, and (2) can be evaluated/measured internally guajardo2007fpga. Prime examples of PUF families meeting these criteria are (some types of) silicon PUFs, whose instance-specific features stem from manufacturing process variations. One of the main advantages of these PUFs is that they are easy to integrate, enabling the direct application of the PUF in integrated circuits (ICs). This is of great importance for ICs used not only in everyday applications, but also in cryptosystems.

In the literature, a great number of research studies have been carried out to evaluate the properties of PUFs; at the heart of them is unclonability. Obviously, if this property of a PUF is not fulfilled, neither the PUF nor the system encompassing it is secure. This chapter is devoted to the relationship between PUFs and AI, where the latter is used in a natural way not only to assess the security of PUFs, but also to design PUFs. Among such approaches, if we focus on machine learning (ML)-enabled ones, the following interesting observation can be made.

For PUFs, as cryptographic primitives, one of the most effective techniques to assess their security lies at the intersection of cryptography and machine learning, namely provable ML algorithms. In addition to exploring the difference between such algorithms and ML algorithms commonly employed in various fields of study, this chapter describes why provable ML algorithms should be considered when evaluating the security of PUFs. For this purpose, pitfalls in methods adopted to demonstrate the robustness of a PUF against ML attacks are explained. In addition, the metrics that have been defined to assess this robustness are mentioned.

We put emphasis on the point that this chapter serves as neither an introduction to the concept of PUFs, nor their formalization and architectures. For these topics, we refer the reader to the seminal work and surveys published over the past decades, e.g., armknecht2011formalization ; MaVe10 ; gao2020physical ; maes2013 .

2 Background on PUFs

PUFs exploit the process variations and imperfections of metals and transistors in similar chips to provide a device-specific fingerprint. More formally, a PUF is a mathematical mapping generating virtually unique outputs (i.e., responses) to a given set of input bits (i.e., challenges). These responses can be used either to authenticate and identify a specific chip or as cryptographic keys for encryption/decryption. To use a PUF in an authentication scenario, the PUF has to go through the enrollment and verification phases. In the enrollment phase, the verifier creates a database of challenge-response-pairs (CRPs) for the PUF.

During the verification phase in the field, a set of challenges from the verifier is fed to the PUF, and the generated responses are compared to the stored responses in the database. In this phase, due to the naturally noisy characteristics of PUFs, the verifier must cope with noisy CRPs, i.e., for a given challenge, the response can differ when the measurement is repeated (the so-called reliability problem). Majority voting and fuzzy extractors are prominent examples of techniques applied for this purpose delvaux2016efficient. The latter are a subset of helper data algorithms, which perform post-processing to meet key generation requirements: reproducibility, high entropy, and control. To this end, such algorithms generate helper data that can be stored, for instance, in insecure (off-chip) non-volatile memory (NVM) or by another party delvaux2014helper. It is worth mentioning that the helper data is considered public. More importantly, this data inevitably leaks information about the PUF responses, leaving the door open for the cloning of PUFs.
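The enrollment and verification phases, together with majority voting as a simple remedy for noisy responses, can be sketched as follows. This is a minimal illustration under stated assumptions: `make_noisy_puf` is a hypothetical software stand-in for a PUF (a device-specific hash bit with random flips), and the flip probability, the number of repetitions, and the mismatch threshold are arbitrary choices rather than values taken from the literature.

```python
import hashlib
import random


def make_noisy_puf(device_seed, flip_prob=0.05):
    """Hypothetical toy PUF: a device-specific hash bit, flipped with probability flip_prob."""
    noise = random.Random(device_seed)  # seeded only to keep the sketch reproducible

    def evaluate(challenge):
        digest = hashlib.sha256(f"{device_seed}:{challenge}".encode()).digest()
        ideal_bit = digest[0] & 1
        return ideal_bit ^ (1 if noise.random() < flip_prob else 0)

    return evaluate


def majority_vote(puf, challenge, repeats=11):
    """Measure the same challenge several times and keep the most frequent response."""
    ones = sum(puf(challenge) for _ in range(repeats))
    return 1 if 2 * ones > repeats else 0


def enroll(puf, challenges):
    """Enrollment: the verifier stores a CRP database for the device."""
    return {c: majority_vote(puf, c) for c in challenges}


def verify(puf, database, max_mismatches=6):
    """Verification: replay the stored challenges and tolerate a few noisy responses."""
    mismatches = sum(majority_vote(puf, c) != r for c, r in database.items())
    return mismatches <= max_mismatches


challenges = random.Random(0).sample(range(2**16), 64)
genuine = make_noisy_puf(device_seed=1)
impostor = make_noisy_puf(device_seed=2)
database = enroll(genuine, challenges)
genuine_ok = verify(genuine, database)
impostor_ok = verify(impostor, database)
```

In this toy setting, the enrolled device is expected to pass verification, whereas a different device disagrees on roughly half of the challenges and is rejected. A fuzzy extractor would replace the plain majority vote when the responses are used for key generation.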

PUF Instantiations

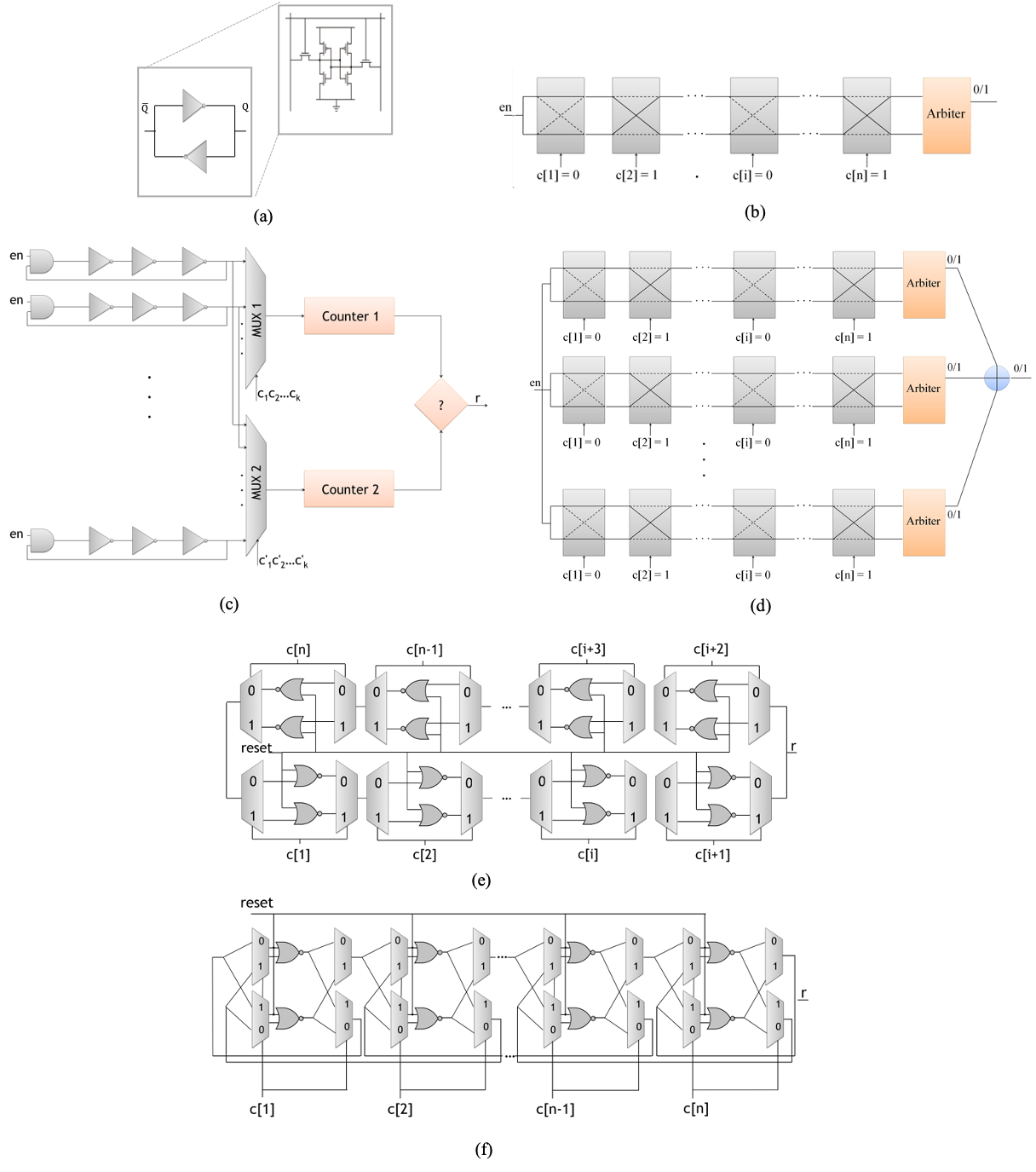

There are several ways to categorize PUFs. One of the most well-known ways to classify them is based on the number of CRPs that they can provide. PUFs with a small number of CRPs are considered weak PUFs, and PUFs with an exponential number of CRPs are considered strong PUFs. The most prominent examples of weak PUFs are the SRAM PUF, the Ring-Oscillator (RO) PUF, and the Butterfly PUF. SRAM PUFs holcomb2008power exploit the random power-up pattern inside an SRAM to generate a unique fingerprint. Since the inverters inside each SRAM cell (Fig. 2(a)) have mismatches due to process variations, each cell tends to settle at the same, cell-specific logical value after power-up. In this case, the challenge to the PUF can be the address of memory cells, and the response is the value stored in each cell. The RO PUF, on the other hand, utilizes the intrinsic differences between the frequencies of equal-length ROs to produce unique fingerprints. In the case of the RO PUF suh2007physical, a pair of ROs is selected by a given challenge, and the response is a binary value based on the comparison of the RO frequencies, see Fig. 2(c).
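The response generation of an RO PUF described above can be sketched in a few lines. The sketch below is a simulation under assumptions: the nominal frequency, the spread of the frequencies, and the names `make_ro_puf` and `respond` are hypothetical and chosen only for illustration.

```python
import random


def make_ro_puf(n_oscillators, rng):
    """Hypothetical RO PUF: nominally identical ROs whose frequencies differ slightly."""
    nominal_mhz = 200.0  # assumed nominal frequency, not taken from a real design
    freqs = [rng.gauss(nominal_mhz, 1.0) for _ in range(n_oscillators)]

    def respond(challenge):
        i, j = challenge  # the challenge selects a pair of ROs
        return 1 if freqs[i] > freqs[j] else 0

    return respond


rng = random.Random(42)
ro_puf = make_ro_puf(16, rng)
response = ro_puf((3, 7))
```

With n oscillators there are only n(n-1)/2 distinct pairs, which illustrates why such a construction offers a small CRP space and falls into the weak PUF category.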

The underlying principles of strong PUFs are very similar to those of weak PUFs, i.e., exploiting bistable and delay-based circuits. However, in contrast to weak PUFs, the components of strong PUFs are arranged in a way that results in an exponential number of CRPs. The most prominent strong PUF is the Arbiter PUF family lee2004technique, where the intrinsic timing differences of two symmetrically designed paths, chosen by a challenge, are exploited to generate a binary response at the output of the circuit, see Fig. 2(b). It became clear from the very beginning that the Arbiter PUF is vulnerable to machine learning attacks (see Section 4 for more details). Therefore, XOR Arbiter PUFs and other Arbiter-based PUFs have been proposed to mitigate the vulnerability of PUFs to machine learning attacks, see Fig. 2(d). Further research in the area of strong PUFs has led to other strong PUF constructions, which are inspired by bistable memory cells. The main instances of this class are bistable ring (BR) chen2011bistable and twisted bistable ring (TBR) PUFs schuster2014evaluation, see Fig. 2(e) and Fig. 2(f).
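To make the Arbiter and XOR Arbiter constructions more concrete, the following sketch simulates them with the additive linear delay model that is commonly used in the PUF literature: the response is the sign of a weighted sum of parity features of the challenge. The Gaussian delay weights, the function names, and the parameters (64 stages, 4 chains) are assumptions made for illustration and do not describe a specific chip.

```python
import random


def parity_features(challenge):
    """Map challenge bits c_1..c_n to features prod_{j>=i} (1 - 2*c_j), plus a bias term."""
    features, prod = [], 1
    for bit in reversed(challenge):
        prod *= 1 - 2 * bit
        features.append(prod)
    features.reverse()
    return features + [1]


def make_arbiter_puf(n_stages, rng):
    """Simulated Arbiter PUF: the sign of the accumulated delay difference is the response."""
    weights = [rng.gauss(0, 1) for _ in range(n_stages + 1)]  # assumed delay parameters

    def respond(challenge):
        delay = sum(w * f for w, f in zip(weights, parity_features(challenge)))
        return 1 if delay > 0 else 0

    return respond


def make_xor_arbiter_puf(n_stages, n_chains, rng):
    """Simulated XOR Arbiter PUF: XOR of the responses of independent Arbiter chains."""
    chains = [make_arbiter_puf(n_stages, rng) for _ in range(n_chains)]

    def respond(challenge):
        result = 0
        for chain in chains:
            result ^= chain(challenge)
        return result

    return respond


rng = random.Random(7)
apuf = make_arbiter_puf(64, rng)
xor_puf = make_xor_arbiter_puf(64, 4, rng)
challenge = [rng.randint(0, 1) for _ in range(64)]
apuf_response = apuf(challenge)
xor_response = xor_puf(challenge)
```

In the transformed feature space a single chain is a linear threshold function, which is what makes it amenable to learning. The XOR of several chains is no longer linear in these features.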

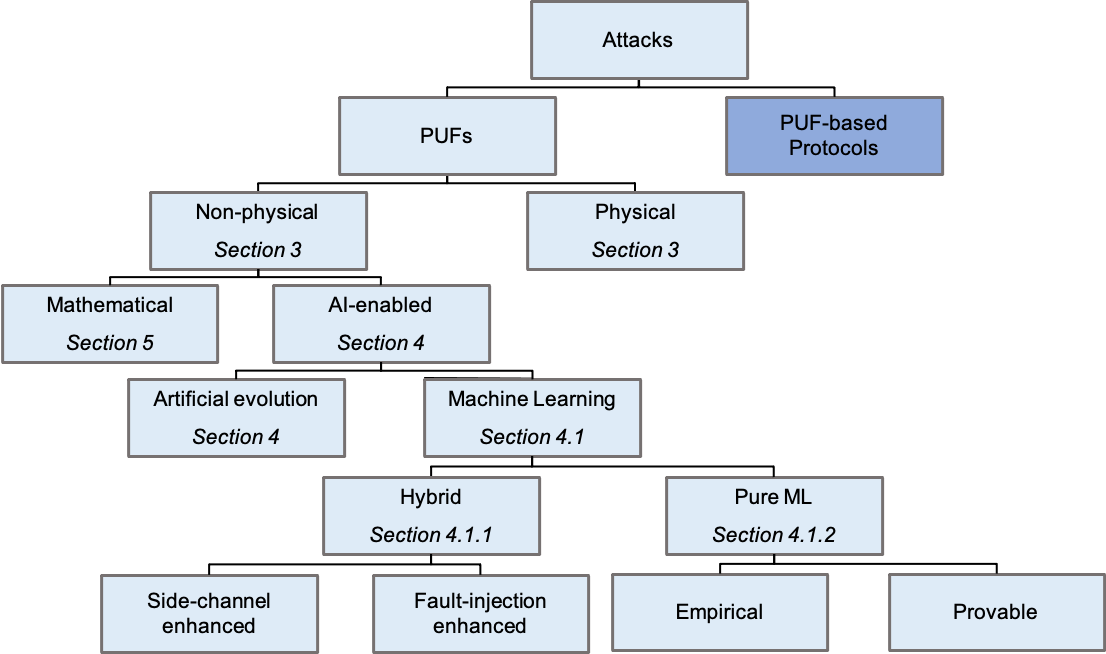

3 Attacks against PUFs: Physical vs. Non-physical

In the same vein as other security primitives, designs have been coming hand in hand with attacks. Irrespective of the methods and means through which an attack is carried out, the goal of the adversary is to determine how the inputs are mapped to the outputs of the PUF, thereby predicting the responses to unseen challenges. As broad as the range of PUF designs is, attacks against PUFs have covered the whole spectrum in terms of the level of destruction that they could cause. At one end of the spectrum, there are physical invasive attacks attempting to clone a PUF physically following a destructive procedure, e.g., through Focused Ion Beam (FIB) circuit edit helfmeier2013cloning (note that throughout this chapter, attacks are classified according to the step taken to clone a PUF). While fully-invasive attacks can arbitrarily alter the functionality of the circuit and disable any countermeasures, semi-invasive attacks with a lower level of destruction can be launched to extract the “secret” from the PUF, cf. nedospasov2013invasive. Here, secret extraction refers to the process of either reading out the PUF responses nedospasov2013invasive or measuring some parameters that can be further analyzed to characterize a PUF tajik2014physical ; tajik2017photonic. The latter is, of course, of greater interest to attackers as such attacks are not limited to memory-based PUFs. Interestingly, a group of semi-invasive attacks may not even damage the underlying PUF structure, see, e.g., merli2011semi. Due to their nature, we categorize the above attacks as “physical attacks,” see Figure 3.

At the other end of the spectrum are physical non-invasive attacks, where the adversary can observe/measure some parameters related to the challenge-response behavior. This case is similar to that of side-channel attacks, widely studied in cryptography and, in particular, hardware security. Not surprisingly, PUFs have also been susceptible to side-channel attacks, which are not limited to memory- or delay-based PUFs. The authors of oren2013effectiveness have presented an attack taking advantage of remanence decay side-channels in SRAM PUFs, which enables the attacker to recover the response of the PUF. Additionally, following the principle of side-channel analysis, an electromagnetic emission analysis against RO PUFs has been reported in merli2013localized.

As the leakage from the helper data (see Section 2) can be thought of as a side-channel revealing some information regarding the PUF, attacks depending on this vulnerability are other particularly interesting examples of side-channel analysis. This type of attack has been mounted against RO PUF constructions delvaux2014key. Similarly, the power leakage from a fuzzy extractor used to perform error correction has been exploited to recover PUF responses karakoyunlu2010differential. Other examples include attacks where the measurements are fed into machine learning (ML) algorithms to predict the response of the PUF to unknown challenges. Therefore, in our taxonomy, we classify these attacks into the “AI-enabled” category and, more concretely, into the “Machine Learning” one, see Figure 3 and Section 4.1.

The next section covers a special type of non-invasive attacks, where AI, in particular machine learning (ML) algorithms, enables the attacker to learn the challenge-response behavior of PUFs (see Figure 3). These attacks either rely on the availability of some physical measurements (see “Hybrid Attacks” in Section 4.1) or perform learning solely based on available CRPs (see “Pure ML Attacks” in Section 4.1).

4 AI-enabled attacks

This class is composed of attacks enhanced by incorporating techniques borrowed from the field of AI. In this regard, the application of ML algorithms has become a prominent subject for research due to their availability and ease of use. Nonetheless, other AI methods could be beneficial when it comes to the security assessment of PUFs. For PUFs, ruhrmair2010modeling ; saha2013model are two of the first studies focusing on this by employing evolution strategies and genetic programming against feed-forward Arbiter PUFs and RO PUFs, respectively. In this context, the security of recently emerging PUF architectures, e.g., non-linear current mirror PUFs kumar2014design, has been compromised through genetic algorithms guo2016efficient.

Further application of such algorithms has been observed in becker2015gap, where Becker et al. have applied an optimization technique relying on artificial evolution, in particular, the covariance matrix adaptation evolution strategy (CMA-ES). In doing so, it has been shown that the security of an XOR Arbiter PUF embedded in a real-world radio-frequency identification (RFID) tag can be compromised. Interestingly enough, to launch the attack, the reliability information collected from the PUF (i.e., the fact that repeating the measurement with the same challenge can result in different responses), which can be thought of as a “side-channel”, has been further exploited. A similar concept has been adopted against feed-forward Arbiter PUFs and leakage current-based PUFs. In these cases, however, fault injection has been performed to assist the evolutionary algorithm applied against these PUFs kumar2014hybrid ; kumar2015side. Regardless of the issues raised about the convergence of evolution strategies, see, e.g., nguyen2017mxpuf, their scalability, and their reliance on side-channels/injected faults, these attacks have attracted a great deal of attention in the hardware security community. For instance, in addition to the above-mentioned attacks against PUFs as hardware primitives, delvaux2019machine has demonstrated that recent Arbiter PUF-based protocols are susceptible to attacks employing the CMA-ES algorithm.

Next, we explain attacks that apply the concept of learning the challenge-response behavior of a PUF through ML algorithms (i.e., a subset of AI).

4.1 Machine Learning attacks

As explained before, the main goal of the attacker is to mimic the challenge-response behavior of a PUF, preferably at a minimal cost. For this purpose, given the CRPs exchanged between the verifier and the chip, it is natural to think of ML attacks attempting to learn the mechanism underlying the response generation. In the early stages of introducing PUFs it was assumed that such attacks could not be successful gassend2002silicon ; however, soon after conjecturing that PUFs are hard to learn, it has been experimentally verified that learning Arbiter PUFs is indeed possible gassend2004identification . This result initiated a line of research, being pursued for almost two decades now and resulted in studies covering various types of ML algorithms applied against a wide variety of PUFs. Among the ML algorithms discussed in the PUF-related literature are empirical and provable techniques, which we briefly review in this section. Before elaborating on pure ML attacks, which are not refined by means of side-channels, we focus on hybrid, physical-ML attacks.

Hybrid Attacks

The basic premise on which these attacks are based is the presence of physical, measurable quantities that either can enhance the success rate of an ML attack against a PUF or, from another perspective, need to be analyzed through ML. While the latter is being widely studied in other hardware-security domains, e.g., side-channel analysis against cryptosystems, the former has become more popular for PUFs. This has been partially motivated by the existence of PUFs that have been considered harder to learn. In this context, for XOR Arbiter PUFs, it has been proposed to incorporate timing and power information as side-channels in order to improve the learning process ruhrmair2014efficient. Another example of such attacks has been explained in becker2014active, where controlled PUFs composed of an Arbiter PUF and the lightweight block cipher PRESENT have come under power side-channel analysis. The practical feasibility of these attacks has been questioned as accurate timing and power measurements may not be available.

This problem can be overcome by combining ML and fault injection attacks, although at the cost of being (semi-)invasive in some cases. XOR Arbiter PUFs have been attacked following this observation, where the fault is injected in a semi-invasive manner tajik2015laser. Nevertheless, fault injection procedures used to facilitate the learning process can be non-invasive as well; however, the well-known examples of such attacks have not employed ML algorithms, but rather evolution strategies becker2014active and mathematical modeling delvaux2014fault (see Section 5).

Pure ML Attacks

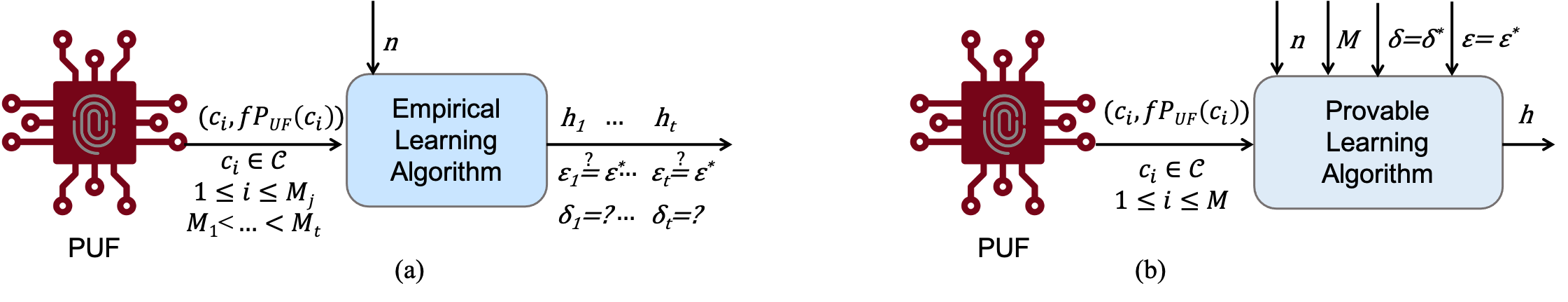

Following the way paved by gassend2004identification, which showed the vulnerability of Arbiter PUFs to the Perceptron algorithm, Lim has demonstrated that a support vector machine (SVM) algorithm can also be used to learn the challenge-response behavior of Arbiter PUFs lim2004extracting. This line of research has been pursued and has led to exploring the application of two groups of ML algorithms in the context of PUFs, namely empirical and provable ML approaches. The former is widely adopted in various domains, including hardware security, whereas the latter has been identified as a “sister field” of cryptography and linked to complexity theory rivest1991cryptography. A major success factor of the provable techniques, in particular, Valiant’s probably approximately correct (PAC) framework, is the link established between the probability of successful learning (the so-called confidence level), the number of training examples and their distribution, the complexity of the model assumed for the unknown function under test, and the accuracy of the learned model valiant1984theory. Such relationships cannot be established for empirical ML algorithms. More concretely, (1) the probability of successful learning is not known and cannot be defined prior to the experiments, and (2) the number of training examples cannot be determined beforehand. Moreover, empirical ML algorithms can suffer from a lack of generalizability and reproducibility. While generalization refers to the ability of the model to adapt properly to unseen data drawn from the same distribution as the data used to create the model, reproducibility means that an experiment can be repeated to reach the same conclusion.

Although the above challenges are not specific to the security assessment of PUFs, this assessment may become impossible due to the lack of generalizability and reproducibility. To investigate the vulnerability of a PUF with instance-specific features and sensitivity to environmental changes, it is crucial to come up with ML approaches, making standardization and comparison feasible. For these purposes, provable ML algorithms seem promising. Next, a brief overview of empirical and provable attacks against PUFs is provided.

| Ref. | PUF under attack | ML algorithm | Mathematical model |
|---|---|---|---|
| gassend2004identification | Arbiter PUFs | Perceptron | Linear combinations of delays |
| lim2004extracting | Arbiter PUFs | Support Vector Machines (SVMs) | Hyperplanes |
| RuSeSo10 | Arbiter PUFs, XOR Arbiter PUFs, Lightweight Secure PUFs | Logistic regression | Hyperplanes |
| RuSeSo10 | RO PUFs | Quick Sort | NA |
| hospodar2012machine | Arbiter and XOR Arbiter PUFs | Artificial Neural Networks and Support Vector Machines | NA |
| schuster2014evaluation | Bistable Ring (BR) and twisted BR PUFs | Artificial Neural Networks | NA |
| xu2015security | BR PUFs | SVM | LTFs |
| vijayakumar2016machine | Non-linear voltage transfer characteristics (VTC) | Tree classifiers and bagging and boosting techniques | NA |
| khalafalla2019pufs ; awano2019pufnet | Double Arbiter PUFs machida2014new | Deep learning | NA |
| santikellur2019deep | XOR APUF, Lightweight Secure PUF, Multiplexer PUF and its variants | Deep learning | NA |
| wisiol2020splitting | Interpose PUF nguyen2019interpose | Logistic regression | Hyperplanes |

Empirical ML Methods

As mentioned before, along with the development of PUFs have come ML attacks gassend2004identification . These attacks have become more critical as Arbiter PUFs, XOR Arbiter PUFs, Lightweight Secure PUFs majzoobi2008lightweight , and RO PUFs have been successfully targeted by applying logistic regression RuSeSo10 . This work has been followed by numerous studies that aim to assess the security of various PUF families against different empirical ML algorithms. Table 1 summarizes some of these studies by focusing on PUFs and the ML algorithms discussed in those studies.
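An empirical attack of this kind can be illustrated with the Perceptron algorithm applied to a simulated Arbiter PUF. The sketch below is not a reproduction of any of the cited experiments: the PUF is simulated with the additive linear delay model, and the number of stages, the number of CRPs, the number of epochs, and the random seed are arbitrary assumptions.

```python
import random


def parity_features(challenge):
    """Parity transform of the challenge under the additive delay model, plus a bias term."""
    features, prod = [], 1
    for bit in reversed(challenge):
        prod *= 1 - 2 * bit
        features.append(prod)
    features.reverse()
    return features + [1]


def simulate_crps(weights, n_stages, count, rng):
    """Generate CRPs from a simulated, noise-free Arbiter PUF with the given delay weights."""
    crps = []
    for _ in range(count):
        phi = parity_features([rng.randint(0, 1) for _ in range(n_stages)])
        delay = sum(w * f for w, f in zip(weights, phi))
        crps.append((phi, 1 if delay > 0 else -1))
    return crps


def train_perceptron(crps, epochs=30):
    """Classic Perceptron updates on misclassified examples."""
    model = [0.0] * len(crps[0][0])
    for _ in range(epochs):
        for phi, label in crps:
            score = sum(w * f for w, f in zip(model, phi))
            if label * score <= 0:  # misclassified: move the hyperplane towards the example
                model = [w + label * f for w, f in zip(model, phi)]
    return model


def accuracy(model, crps):
    """Fraction of CRPs whose response is predicted correctly."""
    correct = sum(
        (1 if sum(w * f for w, f in zip(model, phi)) > 0 else -1) == label
        for phi, label in crps
    )
    return correct / len(crps)


rng = random.Random(2021)
n_stages = 16
secret_weights = [rng.gauss(0, 1) for _ in range(n_stages + 1)]  # unknown to the attacker
train = simulate_crps(secret_weights, n_stages, 2000, rng)
test = simulate_crps(secret_weights, n_stages, 1000, rng)
model = train_perceptron(train)
test_accuracy = accuracy(model, test)
```

Note that the number of CRPs and epochs was picked by hand here, and the achieved accuracy is only known after the experiment has been run, which is characteristic of the empirical approach.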

Remarks: With regard to the attacks listed in Table 1, an interesting observation can be made: except for some of the studies, no model describing the functionality of the PUF has been taken into account (as discussed next, this information is not required for some provable techniques). Such models are viewed as “data transformation” in the empirical ML-related literature. In the absence of these transformations, it is not straightforward to justify why an algorithm should be chosen to perform the learning task.

Note that without such a justification, it is impossible to generalize the results obtained for a given PUF to other instances from that PUF family. This, of course, does not undervalue the importance of research on empirical ML algorithms in the context of PUFs; however, we emphasize that the results achieved through using such algorithms should be interpreted with caution.

Another remark is that some of the PUFs listed in Table 1 were proposed as a remedy for ML attacks, but have been attacked by other algorithms. This raises the question of whether the resistance to ML should be defined as resistance to “known ML attacks”, which is answered in Section 6. In line with this, another question is what to do to increase the security of PUFs targeted by ML attacks? An illustrative example is the XOR Arbiter PUFs, where adding non-linearity through using the XOR combination function is suggested. Nevertheless, to understand the effectiveness of such a countermeasure, it is not enough to rely on specific ML algorithms. Moreover, it is important to understand how and why ML algorithms could break the security of a PUF. Next, we discuss this in further detail.

Provable ML Methods

What can be understood from the above discussion is that attacks mounted by applying empirical ML algorithms depend largely on trial-and-error approaches. The importance of this problem becomes apparent as countermeasures designed to defeat them could also be rendered ineffective. Moreover, since security assessment with regard to empirical ML algorithms is instance-, parameter-, and algorithm-dependent with no convergence guarantees, standardization and comparison between PUFs may not be feasible. In response to this, a provable ML framework, namely probably approximately correct (PAC) learning, has found application in studies on PUFs. Figure 4 presents the differences between an empirical algorithm and a provable one. It is worth noting here that ML attacks reported in the literature usually achieve an acceptable level of accuracy (e.g., 95%) regardless of whether an empirical or a provable algorithm is employed. Hence, the main advantage of provable algorithms is not the accuracy of the model learned, but the possibility of defining the level of accuracy and confidence a priori.
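The possibility of fixing accuracy and confidence a priori can be illustrated with a textbook PAC bound. For a finite hypothesis class H and a learner that outputs a hypothesis consistent with the training examples (realizable case), m ≥ (1/ε)(ln|H| + ln(1/δ)) examples suffice to reach an error of at most ε with probability at least 1 − δ. The sketch below evaluates this generic bound; it is not one of the PUF-specific bounds derived in the works cited in this chapter, and the hypothesis class used in the example is an assumption chosen only for illustration.

```python
import math


def pac_sample_bound(ln_hypothesis_count, epsilon, delta):
    """Examples sufficient for a consistent learner over a finite class (realizable case)."""
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("epsilon and delta must lie in (0, 1)")
    return math.ceil((ln_hypothesis_count + math.log(1 / delta)) / epsilon)


# Illustrative class (an assumption, not a PUF model): conjunctions over n = 64
# Boolean variables, where each variable is positive, negated, or absent: |H| = 3**64.
ln_h = 64 * math.log(3)
m_loose = pac_sample_bound(ln_h, epsilon=0.05, delta=0.01)
m_tight = pac_sample_bound(ln_h, epsilon=0.01, delta=0.01)
```

The number of examples is thus determined before any experiment is run and grows only polynomially in 1/ε, ln(1/δ), and the logarithm of the size of the hypothesis class.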

The PAC learning framework has been employed to launch provable ML attacks against PUFs. Families of PUFs targeted by PAC learning attacks include Arbiter ganji2016pac, XOR Arbiter ganji2015attackers, RO ganji2015let (although weak PUFs are not interesting targets from the point of view of ML attacks, their characteristics can be analyzed by applying provable methods and the metrics defined based on them, cf. ganji2019pufmeter), and BR and twisted BR PUFs ganji2016strong ; ganji2017having. These results have been extended to the cases where only noisy CRPs are available to learn a PUF ganji2018fourier.

PAC learning of BR and twisted BR PUFs serves as a special example since no mathematical model describing their internal functionality has been known so far; hence, no data transformation, or so-called “representation” could be presented. Regardless of that, characteristics of these PUFs are formulated as Boolean functions, useful to launch the attack. In this case, it has been proven that, in general, the challenge bits have different amounts of influence on the response of a PUF to a given challenge.
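The notion of influence mentioned above has a simple operational meaning when a PUF is viewed as a Boolean function f: the influence of challenge bit i is the probability, over uniformly random challenges, that flipping bit i changes the response. The sketch below estimates it by sampling. The function `toy_puf` is a hypothetical response function chosen so that the expected influences are easy to see; it does not model a BR PUF, and the sample size is an arbitrary choice.

```python
import random


def estimate_influences(puf, n_bits, samples, rng):
    """Estimate Pr[f(c) != f(c with bit i flipped)] for uniformly random challenges c."""
    flips = [0] * n_bits
    for _ in range(samples):
        challenge = [rng.randint(0, 1) for _ in range(n_bits)]
        base = puf(challenge)
        for i in range(n_bits):
            challenge[i] ^= 1  # flip bit i
            flips[i] += base != puf(challenge)
            challenge[i] ^= 1  # restore bit i
    return [count / samples for count in flips]


def toy_puf(challenge):
    """Hypothetical response: bit 0 always matters, bits 1 and 2 sometimes, bit 3 never."""
    return challenge[0] ^ (challenge[1] & challenge[2])


influences = estimate_influences(toy_puf, n_bits=4, samples=2000, rng=random.Random(5))
```

For a real PUF, `puf` would be replaced by a routine that queries the device, in which case noisy responses would additionally have to be stabilized before the comparison.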

Moreover, ganji2017having has reintroduced property testing in the PUF-related literature. Property testing algorithms developed in ML theory examine whether properties of a specific class can be found in a given function, i.e., a PUF under test in our case. In doing so, if no representation is known for a PUF, it is at least possible to understand whether it can be represented by some functions. It could be a necessary step when looking for an appropriate, efficient ML algorithm to learn a PUF. Furthermore, if an incorrect representation is chosen without either knowing the internal functionality of a PUF or performing property tests, it is not possible to decide about the learnability of the PUF ganji2020pitfalls .

Remarks: We stress that when interpreting the above results, a great deal of attention should be paid to the setting of the PAC learning framework (access granted to the CRPs, distribution of the CRPs given to the machine, etc.) considered in a study. As explained in ganji2020pitfalls , this setting plays an important role when deciding if a PUF is provably (in)secure (see Section 6 for more details).

5 Mathematical Modeling

Mathematical modeling attacks do not fall within the category of “AI-enabled” attacks, which is the main focus of this chapter; however, valuable lessons can be learned from these attacks. In this respect, one of the key messages to convey is that not every attack needs to be conducted with a view to adopting AI. In other words, security assessment of PUFs relying on AI, albeit a useful and important objective, should not be overstated as, in some cases, other mathematical methods can give more insight into the security of PUFs. This has been observed in a recent work of Zeitouni et al. zeitouni2018s, demonstrating that standard interpolation algorithms can be employed to predict the responses of (Rowhammer) DRAM-PUFs schaller2017intrinsic. Another instance is the analysis performed in delvaux2013side, which has not only resulted in a high-accuracy model of the PUF under test, namely Arbiter PUFs, but also linked the reliability of a PUF to CMOS (and interconnect) noise sources and further to the PUF model. As shown for Arbiter, RO and RO sum PUFs, the effectiveness of this attack can be enhanced by injecting faults through changes in environmental conditions delvaux2014fault.

Another example of such attacks concerns the so-called “cryptanalysis” of PUFs, where the adversary mounts computational attacks to predict the response to an unseen challenge with a probability higher than that of a random guess sahoo2015case ; nguyen2015efficient. For this purpose, she can use the CRPs to partition the challenge-response space into subsets corresponding to the responses output by the PUF. To evaluate the effect of their attacks, the authors have targeted and successfully broken the security of RO PUFs with enhanced CRPs maiti2011robust, composite PUFs sahoo2014composite, and the lightweight secure PUF majzoobi2008lightweight with multi-bit responses.

Within this category, the analysis presented in yamamoto2014security plays a crucial role in understanding the mechanism of generating responses in BR PUFs. More specifically, by carrying out linear and differential cryptanalysis-inspired attacks, this study has shown that the response of a BR PUF can be determined by a small number of the challenge bits. This work motivates the study of provable techniques to quantify the influence of challenge bits on the responses of PUFs ganji2016strong ; ganji2019pufmeter.

Finally, the attack presented in ganji2015lattice can be considered, where a lattice basis-reduction method has been applied to XOR Arbiter PUFs with an arbitrarily large number of chains and controlled inputs and outputs, i.e., unknown challenges and responses from the adversary's perspective. Such methods were first applied to a number of problems in cryptography, e.g., the hidden subset sum problem (i.e., a variant of the subset sum problem with a hidden set of summands). The similarity between this problem and controlled XOR Arbiter PUFs becomes evident when side-channel analysis is conducted to measure the accumulated delays at the output of the last stage of the Arbiter PUFs in the XOR Arbiter PUF. It has been demonstrated that this information is sufficient not only to reveal the hidden challenges and responses, but also to model the PUF.

Remarks: Perhaps the most important message of this section is that the failure of AI-enabled attacks per se should not be considered a guarantee for the security of PUFs. As explained above, PUFs that are robust to some AI-enabled attacks, e.g., XOR Arbiter PUFs with a large number of chains, can be vulnerable to other families of attacks. These attacks can, of course, improve designers’ understanding of the mechanisms underlying the challenge-response behavior of PUFs and, consequently, result in the development of more attack-resilient PUFs.

6 Resiliency against ML attacks

The attacks discussed in the previous sections have posed serious challenges for PUF designers and manufacturers. To tackle this issue, various countermeasures have been introduced in the literature, including controlled PUFs majzoobi2009techniques ; yu2016lockdown, re-configurable PUFs majzoobi2009techniques, and PUFs with noise-induced CRPs yu2014noise ; yashiro2019deep ; wang2019adversarial, to name a few. “Controlled PUFs” is the umbrella term given to PUFs where the adversary has restricted access to the CRPs through either obfuscation of the challenges/responses gassend2002controlled ; gassend2008controlled or the mechanisms used to feed the challenges/collect the responses majzoobi2009techniques ; yu2016lockdown ; lao2011novel ; ye2016rpuf ; katzenbeisser2011recyclable ; dubrova2019crc. When it comes to re-configurability, PUFs with a mechanism to update their architecture have been devised kursawe2009reconfigurable ; gehrer2014reconfigurable ; gehrer2015using ; sahoo2017multiplexer.

When reviewing the papers mentioned above and in this section in general, it becomes evident that virtually all of the PUFs proposed in the literature have been designed having ML attacks in mind. This seems, however, paradoxical as attacks against PUFs, supposed to be ML attack-resilient, are being reported, see, e.g., delvaux2019machine ; wisiol2020splitting . The reason behind this can be the lack of (1) procedures to prove that the PUF exhibits this feature, and (2) metrics to assess whether a PUF is robust against ML attacks.

6.1 How to Prove the Security of a PUF against ML Attacks

Proving the security of cryptographic primitives has been practiced for decades. For that, the security of the system must be defined, which is typically carried out through the definition of an adversary model and a security game. The former determines the power of the adversary in terms of, e.g., being uniform/non-uniform, interactive/non-interactive, etc. Adversary models also describe how the attacker interacts with the security game. This game further gives insight into the power of the adversary over the cryptosystem, i.e., her access to the systems and the conditions for considering an attack successful.

Definitions of security from the perspectives of information theory (e.g., “perfect secrecy”) and computational complexity have been employed to argue about the security of cryptosystems. The latter is natural, as an adversary (a.k.a. “codebreaker”) has bounded computational resources. Consequently, designers attempt to make the codebreaking problem computationally difficult to ensure security. This definition of security in cryptography was linked to machine learning theory in the seminal work of Rivest in 1991 rivest1991cryptography . Rivest observed that both cryptography and machine learning rely on computational complexity, and further identified the similarities and differences between these two fields of study, including attack types and the queries required by an ML algorithm, as well as exact versus approximate inference of an unknown target.

To assess the security of a scheme against a learning algorithm, i.e., the adversary, the ML setting is specified in the PAC learning framework. In this regard, one can define a set of parameters including the number of examples given to the algorithm, the distribution from which they are drawn (if needed), a representative model of the target function (if any), and the accuracy of the approximation. Afterward, if some polynomial-time algorithm can learn the target function describing the cryptosystem, the security of the system is compromised. For PUFs, as cryptographic primitives, the same procedure can be followed; however, approaches proposed in the PUF-related literature have not pursued this.
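To make the role of these parameters concrete, the following minimal sketch evaluates a textbook sample-complexity bound for a finite hypothesis class in the realizable setting: a learner that outputs any hypothesis consistent with m ≥ (1/ε)(ln|H| + ln(1/δ)) examples is, with probability at least 1 − δ, ε-accurate. The function name `pac_sample_bound` and the numbers in the example are our illustrative assumptions; they are not taken from the works cited here and do not describe any particular PUF.

```python
import math


def pac_sample_bound(hypothesis_count: int, epsilon: float, delta: float) -> int:
    """Number of examples sufficient for a consistent learner over a finite class.

    Textbook bound (realizable case): m >= (ln|H| + ln(1/delta)) / epsilon.
    """
    if hypothesis_count < 1:
        raise ValueError("hypothesis_count must be at least 1")
    if not (0.0 < epsilon < 1.0 and 0.0 < delta < 1.0):
        raise ValueError("epsilon and delta must lie strictly between 0 and 1")
    return math.ceil((math.log(hypothesis_count) + math.log(1.0 / delta)) / epsilon)


# Hypothetical setting: 2**64 candidate models, 5% error, 1% failure probability.
m = pac_sample_bound(2**64, epsilon=0.05, delta=0.01)
print("examples sufficient under these assumptions:", m)
```

The bound grows only logarithmically in the size of the hypothesis class and in 1/δ, which is why a compact representation of the target function is so valuable to the adversary.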

In particular, it is common that the robustness of a newly designed PUF against ML attacks is evaluated by applying a couple of ML algorithms against the PUF. This is indeed not sufficient to claim that the PUF is robust against ML attacks in general. In the best case, if the experiment is repeated for numerous instances of a PUF to make the results statistically relevant, one can claim that the PUF is resilient to the specific ML algorithm applied in the experiment.

In contrast to such an ad-hoc process, the security of PUFs in the face of ML attacks has been analyzed rigorously in ganji2020rock ; hammouri2008tamper ; yu2016lockdown ; herder2016trapdoor . Specifically, ganji2020rock has dealt with this from the point of view of PAC learning and computational complexity. The security of a PUF proposed in yu2016lockdown has been based upon a result previously obtained with regard to the PAC learning framework ganji2015attackers . Herder et al. herder2016trapdoor have also designed their PUF on the basis of a reduction to a problem known to be hard to learn, namely learning parities with noise.

We sum up by quoting from Shannon’s work that has argued against imprecise proof of security.

“In designing a good cipher […] it is not enough merely to be sure none of the standard methods of cryptanalysis work– we must be sure that no method whatever will break the system easily. This, in fact, has been the weakness of many systems. […] The problem of good cipher design is essentially one of finding difficult problems, subject to certain other conditions” shannon1949communication (see also arora2009computational ).

6.2 Metrics for Evaluating the Security of a PUF against ML attacks

In order to verify the robustness of a PUF against ML attacks in practice, a set of metrics well suited to various ML attacks should be provided. A comparable task has already been accomplished for verifying that a PUF exhibits features relating to its quality: a comprehensive set of metrics has been developed, which comprises cost, reliability, and security kim2010statistics ; maiti2013systematic ; kang2012performance . Nevertheless, metrics associated with ML attacks have not been well studied in the literature. In the following paragraphs, some of the metrics proposed in the literature are discussed.

Entropy: One of the first attempts to define a metric indicating the ML attack-resiliency has been made in majzoobi2008testing ; majzoobi2009techniques . The authors have used entropy to quantify the unclonability, i.e., the resistance to reverse engineering and modeling (mainly non-physical attacks). Entropy of the PUF responses can be estimated by the uniqueness, or measured by inter-distance kim2010statistics . In more concrete terms, unclonability due to the ML attacks is translated to statistical prediction and measured by the Hamming distance between two challenges majzoobi2008testing .
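As an illustration of the inter-distance mentioned above, the sketch below computes the average pairwise fractional Hamming distance between the response strings of several PUF instances queried with the same challenges. The function names and the randomly generated "responses" are our assumptions for demonstration only; real measurements would replace the simulated data, and the exact normalization used in the cited works may differ.

```python
import random
from itertools import combinations


def hamming_distance(a, b):
    """Number of positions in which two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def uniqueness(responses):
    """Average pairwise fractional Hamming distance between instances."""
    n_bits = len(responses[0])
    pairs = list(combinations(responses, 2))
    total = sum(hamming_distance(a, b) for a, b in pairs)
    return total / (len(pairs) * n_bits)


# Hypothetical data: 8 PUF instances, each answering the same 256 challenges.
rng = random.Random(0)
responses = [[rng.randint(0, 1) for _ in range(256)] for _ in range(8)]
u = uniqueness(responses)
print(f"estimated uniqueness: {u:.3f} (ideal value is 0.5)")
```

A value close to one half indicates that instances are hard to tell apart from independent random sources; it says nothing, by itself, about how predictable a single instance is from its own CRPs.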

Strict avalanche criterion (SAC): It has been suggested that unpredictability can be achieved if the SAC property is satisfied: by flipping a bit in the challenge, each of the output bits flips with a probability of one half majzoobi2009techniques . This has been further improved in nguyen2016security , where the authors have shown that the distance between two challenges considered for computing the metric plays an important role.
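The SAC property can be checked empirically. The following sketch estimates, for each challenge bit, how often flipping that bit flips the response of a simulated Arbiter PUF. The simulation relies on the commonly used additive delay model with Gaussian weights, which is an assumption on our part; the helper names (`make_arbiter_puf`, `sac_profile`) and all parameters are hypothetical.

```python
import random


def make_arbiter_puf(n, rng):
    """Simulated n-stage Arbiter PUF under the additive delay model (assumption)."""
    weights = [rng.gauss(0.0, 1.0) for _ in range(n + 1)]

    def puf(challenge):
        total = weights[n]  # constant (bias) term
        prod = 1
        # Feature i is the product of (1 - 2*c_j) over all stages j >= i.
        for i in range(n - 1, -1, -1):
            prod *= 1 - 2 * challenge[i]
            total += weights[i] * prod
        return 1 if total > 0 else 0

    return puf


def sac_profile(puf, n, trials, rng):
    """Per-bit estimate of Pr[response flips | challenge bit i is flipped]."""
    flips = [0] * n
    for _ in range(trials):
        c = [rng.randint(0, 1) for _ in range(n)]
        r = puf(c)
        for i in range(n):
            c[i] ^= 1            # flip bit i
            if puf(c) != r:
                flips[i] += 1
            c[i] ^= 1            # restore bit i
    return [f / trials for f in flips]


rng = random.Random(1)
n = 32
profile = sac_profile(make_arbiter_puf(n, rng), n, 1000, rng)
print("flip probability per challenge bit:", [round(p, 2) for p in profile])
```

Under this model the flip probability depends strongly on the position of the flipped bit, so the profile deviates from the ideal value of one half for many positions.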

Bias: In addition to the above metrics, it is obvious that the bias in the PUF responses can be beneficial to launch mathematical, in particular, machine learning attacks.

Noise sensitivity: The study in nguyen2016security has presented a prime example illustrating the lack of firm foundations for evaluating the resiliency of PUFs to ML attacks. To address this issue, as provable ML methods applied against PUFs have become established, new metrics originating from Boolean and Fourier analyses have been introduced ganji2019pufmeter . The notion of noise sensitivity is closely related to the SAC property: it is the probability that the output bit flips when each bit of the challenge is flipped with a pre-defined probability. It has been proved that the smaller and bounded the noise sensitivity is, the higher the probability of learning the PUF would be. More importantly, the algorithms for testing PUFs in terms of this metric have been made available cf. ganji2019pufmeter .
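The definition of noise sensitivity translates directly into a Monte Carlo estimate. The sketch below is not the property-testing algorithm of the cited tool; it is a simple sampling procedure, assuming uniformly random challenges, applied to two toy Boolean functions (a dictator and a parity function) that stand in for a PUF. All names and parameters are illustrative assumptions.

```python
import random


def noise_sensitivity(f, n, eps, trials, rng):
    """Estimate Pr[f(x) != f(y)], where y flips each bit of x with probability eps."""
    disagreements = 0
    for _ in range(trials):
        x = [rng.randint(0, 1) for _ in range(n)]
        y = [bit ^ int(rng.random() < eps) for bit in x]
        if f(x) != f(y):
            disagreements += 1
    return disagreements / trials


def dictator(x):
    """Output depends on a single input bit (low noise sensitivity)."""
    return x[0]


def parity(x):
    """Output depends on all input bits (high noise sensitivity)."""
    return sum(x) % 2


rng = random.Random(3)
ns_dictator = noise_sensitivity(dictator, 16, 0.05, 20000, rng)
ns_parity = noise_sensitivity(parity, 16, 0.05, 20000, rng)
print(f"dictator: {ns_dictator:.3f}, parity: {ns_parity:.3f}")
```

For the dictator function the exact value equals the flip probability, whereas for parity on n bits it is (1 − (1 − 2ε)^n)/2; the gap between the two mirrors the gap between an easy and a hard learning target.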

Expected bias: Recent developments in the design and verification of PUFs with reliance on computational complexity have added a new metric to the list of formally defined metrics: the expected bias ganji2020rock . The notion of the expected bias encompasses the average bias of a PUF in the presence of the noise inherent to the design of the PUF due to, e.g., the effect of the routing and/or insufficient manufacturing process variations. These phenomena not only affect the responses of a PUF, but also induce correlation between two instances of a PUF implemented on a platform. Hence, the expected bias is a suitable measure to assess the security of PUFs, even ones composed of several PUF instances, e.g., XOR PUFs.

7 AI-enabled Design of PUFs

Up until this point, we have focused on the interplay between AI and PUFs from one point of view: applying ML to assess the security of PUFs. From another perspective, it is interesting to explore how AI can be employed to design PUFs. In the same way that ML-enabled attacks have been classified, this type of PUF design can be categorized into empirical and provable methods. In the first category, a PUF proposed in de2019design can be considered, where weightless neural networks (WNNs) have been adopted to transform the challenges in the sense of a controlled PUF. Another interesting example of such PUFs has been proposed in chowdhury2020weak , where a weak PUF relying on the concept of asynchronous reset (ARES) behavior is designed. For this, a genetic algorithm has been employed with a fitness function defined based on the physical parameters of the transistors involved in the PUF circuit. In their scenario, the genetic algorithm automatically outputs an optimal PUF design for a given load.

On the other hand, in the second category, methods have been established that rely on the impossibility of learning specific functions. These impossibility results have usually been formulated in the PAC learning framework. Note that the impossibility taken into account here is not due to the setting chosen for the problem, e.g., the representation; rather, the problem is inherently hard to learn. For instance, Herder et al. herder2016trapdoor have proposed a PUF relying on the hardness of the learning parity with noise (LPN) problem. This problem remains open in computational learning theory, although it seems that the LPN problem is not as hard as NP-hard problems kalai2008agnostic .
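For readers unfamiliar with LPN, the sketch below generates samples of the problem in its standard form: each sample consists of a uniformly random vector a and the label ⟨a, s⟩ ⊕ e, where s is a secret vector and e is a Bernoulli noise bit. This only illustrates the learning problem; it does not reproduce the construction of the cited PUF, and the dimension, noise rate, and function names are our illustrative assumptions.

```python
import random


def inner_product_mod2(a, s):
    """Inner product of two bit vectors over GF(2)."""
    return sum(ai & si for ai, si in zip(a, s)) % 2


def lpn_samples(secret, count, noise_rate, rng):
    """Generate LPN samples (a, <a, secret> XOR e) with Pr[e = 1] = noise_rate."""
    samples = []
    for _ in range(count):
        a = [rng.randint(0, 1) for _ in secret]
        noise = int(rng.random() < noise_rate)
        samples.append((a, inner_product_mod2(a, secret) ^ noise))
    return samples


# Hypothetical parameters: 32-bit secret, noise rate 1/8.
rng = random.Random(4)
secret = [rng.randint(0, 1) for _ in range(32)]
samples = lpn_samples(secret, 5000, 0.125, rng)

# Fraction of labels corrupted by noise (only computable when the secret is known).
noisy_fraction = sum(b != inner_product_mod2(a, secret) for a, b in samples) / len(samples)
print(f"fraction of noisy labels: {noisy_fraction:.3f}")
```

Without noise, the secret could be recovered from sufficiently many samples by Gaussian elimination; the noise bits are what make the learning problem believed to be hard.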

Hammouri et al. hammouri2008tamper have presented one of the first studies on the application of PAC learning in the design of PUFs. For this, through reductions to problems that are known to be hard to learn, a proof of security has been suggested. This work has been followed by yu2016lockdown , where the design of the PUF is based upon a hardness result proved for XOR Arbiter PUFs ganji2015attackers . The study presented in ganji2020rock has demonstrated that a family of functions known to be hard to predict (namely, Tribes functions) can be applied to amplify the hardness of somewhat hard PUFs. Their proof of security against ML attacks relies on the unpredictability of the proposed PUF, composed of PUFs with lower levels of unpredictability.
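For reference, a Tribes function is an OR of ANDs taken over disjoint blocks ("tribes") of the input bits. The minimal sketch below evaluates such a function on a list of bits, which one may think of as the responses of several component PUFs. How the cited work selects the block width and wires the component PUFs is not reproduced here; the function name and the example inputs are our assumptions.

```python
def tribes(bits, width):
    """OR over disjoint blocks of `width` bits of the AND within each block."""
    if width <= 0 or len(bits) % width != 0:
        raise ValueError("input length must be a positive multiple of width")
    blocks = (bits[i:i + width] for i in range(0, len(bits), width))
    return int(any(all(block) for block in blocks))


# Hypothetical response bits of 6 component PUFs, grouped into tribes of width 2.
component_responses = [1, 0, 1, 1, 0, 0]
combined = tribes(component_responses, width=2)
print("combined response:", combined)
```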

Interestingly, by adopting the concepts introduced in the context of PAC-learnability of PUFs, it has been shown that one could go one step further by devising an automated CAD framework to design a PUF that is provably ML attack-resilient chatterjee2020puf .

Future directions: Finally, we stress that, compared to techniques originating in ML and used to attack PUFs, not much effort has been invested in this topic. We expect that lessons learned from the AI-enabled attacks, and in particular ML-based ones, will become a tool for designing PUFs.

8 Conclusion

This chapter attempts to demonstrate how AI can offer the potential for verifying that security-related features of a PUF are met. In this regard, the application of AI is not limited to processing the data to, e.g., classify the seen input-output pairs of a PUF and predict the output associated with an unknown input (i.e., mounting an ML attack). On the contrary, when shifting our focus to a provable ML framework, it is possible to come up with security proofs in the sense of cryptography. This has been explained in detail in this chapter, along with countermeasures, developed to protect PUFs against ML attacks. Moreover, metrics and approaches to quantify the robustness of a PUF to such attacks have been described. Last but not least, a new line of research devoted to the design of PUFs through ML techniques is discussed.

Acknowledgements.

We are deeply grateful for the guidance and support of our Ph.D. advisor, Jean-Pierre Seifert. Throughout our Ph.D. studies at the Technical University of Berlin, and after that, his insightful comments and advice have helped us to broaden our knowledge and expand our horizons. We are also very thankful to Domenic Forte for his guidance, tremendous support during our stay at the University of Florida, and encouragement, in particular, to make the tools (PUFmeter) publicly available.

References

- (1) de Araújo, L.S., Patil, V.C., Prado, C.B., Alves, T.A., Marzulo, L.A., França, F.M., Kundu, S.: Design of robust, high-entropy strong PUFs via weightless neural network. Journal of Hardware and Systems Security 3(3), 235–249 (2019)

- (2) Armknecht, F., Maes, R., Sadeghi, A.R., Standaert, F.X., Wachsmann, C.: A formalization of the security features of physical functions. In: 2011 IEEE Symposium on Security and Privacy, pp. 397–412. IEEE (2011)

- (3) Arora, S., Barak, B.: Computational complexity: a modern approach. Cambridge University Press (2009)

- (4) Awano, H., Iizuka, T., Ikeda, M.: PUFNet: A deep neural network based modeling attack for physically unclonable function. In: 2019 IEEE International Symposium on Circuits and Systems (ISCAS), pp. 1–4. IEEE (2019)

- (5) Becker, G.T.: The gap between promise and reality: On the insecurity of XOR arbiter PUFs. In: International Workshop on Cryptographic Hardware and Embedded Systems, pp. 535–555. Springer (2015)

- (6) Becker, G.T., Kumar, R., et al.: Active and passive side-channel attacks on delay based PUF designs. IACR Cryptology ePrint Archive 2014, 287 (2014)

- (7) Chatterjee, D., Mukhopadhyay, D., Hazra, A.: PUF-G: a CAD framework for automated assessment of provable learnability from formal PUF representations. In: Proceedings of the 39th International Conference on Computer-Aided Design, pp. 1–9 (2020)

- (8) Chen, Q., Csaba, G., Lugli, P., Schlichtmann, U., Rührmair, U.: The bistable ring PUF: A new architecture for strong physical unclonable functions. In: 2011 IEEE International Symposium on Hardware-Oriented Security and Trust, pp. 134–141. IEEE (2011)

- (9) Chowdhury, S., Acharya, R.Y., Boullion, W., Felder, A., Howard, M., Di, J., Forte, D.: A weak asynchronous reset (ARES) PUF using start-up characteristics of null conventional logic gates. In: IEEE International Test Conference (ITC). IEEE (2020)

- (10) Delvaux, J.: Machine-learning attacks on PolyPUFs, OB-PUFs, RPUFs, LHS-PUFs, and PUF–FSMs. IEEE Transactions on Information Forensics and Security 14(8), 2043–2058 (2019)

- (11) Delvaux, J., Gu, D., Schellekens, D., Verbauwhede, I.: Helper data algorithms for PUF-based key generation: Overview and analysis. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 34(6), 889–902 (2014)

- (12) Delvaux, J., Gu, D., Verbauwhede, I., Hiller, M., Yu, M.D.M.: Efficient fuzzy extraction of PUF-induced secrets: Theory and applications. In: International Conference on Cryptographic Hardware and Embedded Systems, pp. 412–431. Springer (2016)

- (13) Delvaux, J., Verbauwhede, I.: Side channel modeling attacks on 65nm arbiter PUFs exploiting CMOS device noise. In: 2013 IEEE International Symposium on Hardware-Oriented Security and Trust (HOST), pp. 137–142. IEEE (2013)

- (14) Delvaux, J., Verbauwhede, I.: Fault injection modeling attacks on 65 nm arbiter and RO sum PUFs via environmental changes. IEEE Transactions on Circuits and Systems I: Regular Papers 61(6), 1701–1713 (2014)

- (15) Delvaux, J., Verbauwhede, I.: Key-recovery attacks on various RO PUF constructions via helper data manipulation. In: 2014 Design, Automation & Test in Europe Conference & Exhibition (DATE), pp. 1–6. IEEE (2014)

- (16) Dubrova, E., Näslund, O., Degen, B., Gawell, A., Yu, Y.: CRC-PUF: A machine learning attack resistant lightweight PUF construction. In: 2019 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), pp. 264–271. IEEE (2019)

- (17) Ganji, F., Amir, S., Tajik, S., Forte, D., Seifert, J.P.: Pitfalls in machine learning-based adversary modeling for hardware systems. In: 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), pp. 514–519. IEEE (2020)

- (18) Ganji, F., Forte, D., Seifert, J.P.: PUFmeter a property testing tool for assessing the robustness of physically unclonable functions to machine learning attacks. IEEE Access 7, 122,513–122,521 (2019)

- (19) Ganji, F., Krämer, J., Seifert, J.P., Tajik, S.: Lattice basis reduction attack against physically unclonable functions. In: Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, pp. 1070–1080 (2015)

- (20) Ganji, F., Tajik, S., Fäßler, F., Seifert, J.P.: Strong machine learning attack against PUFs with no mathematical model. In: International Conference on Cryptographic Hardware and Embedded Systems, pp. 391–411. Springer (2016)

- (21) Ganji, F., Tajik, S., Fäßler, F., Seifert, J.P.: Having no mathematical model may not secure PUFs. Journal of Cryptographic Engineering 7(2), 113–128 (2017)

- (22) Ganji, F., Tajik, S., Seifert, J.P.: Let me prove it to you: RO PUFs are provably learnable. In: ICISC 2015, pp. 345–358. Springer (2015)

- (23) Ganji, F., Tajik, S., Seifert, J.P.: Why attackers win: on the learnability of XOR arbiter PUFs. In: International Conference on Trust and Trustworthy Computing, pp. 22–39. Springer (2015)

- (24) Ganji, F., Tajik, S., Seifert, J.P.: PAC learning of arbiter PUFs. Journal of Cryptographic Engineering 6(3), 249–258 (2016)

- (25) Ganji, F., Tajik, S., Seifert, J.P.: A Fourier analysis based attack against physically unclonable functions. In: International Conference on Financial Cryptography and Data Security, pp. 310–328. Springer (2018)

- (26) Ganji, F., Tajik, S., Stauss, P., Seifert, J.P., Tehranipoor, M., Forte, D.: Rock’n’roll PUFs: Crafting provably secure PUFs from less secure ones (extended version). Journal of Cryptographic Engineering (2020). DOI 10.1007/s13389-020-00226-7

- (27) Gao, Y., Al-Sarawi, S.F., Abbott, D.: Physical unclonable functions. Nature Electronics 3(2), 81–91 (2020)

- (28) Gassend, B., Clarke, D., Van Dijk, M., Devadas, S.: Controlled physical random functions. In: Proceedings of 18th Annual Computer Security Applications Conference, pp. 149–160 (2002)

- (29) Gassend, B., Clarke, D., Van Dijk, M., Devadas, S.: Silicon physical random functions. In: Proceedings of the 9th ACM conference on Computer and communications security, pp. 148–160 (2002)

- (30) Gassend, B., Dijk, M.V., Clarke, D., Torlak, E., Devadas, S., Tuyls, P.: Controlled physical random functions and applications. ACM Transactions on Information and System Security (TISSEC) 10(4), 1–22 (2008)

- (31) Gassend, B., Lim, D., Clarke, D., Van Dijk, M., Devadas, S.: Identification and authentication of integrated circuits. Concurrency and Computation: Practice and Experience 16(11), 1077–1098 (2004)

- (32) Gehrer, S., Sigl, G.: Reconfigurable PUFs for FPGA-based SoCs. In: 2014 International Symposium on Integrated Circuits (ISIC), pp. 140–143. IEEE (2014)

- (33) Gehrer, S., Sigl, G.: Using the reconfigurability of modern FPGAs for highly efficient PUF-based key generation. In: 2015 10th International Symposium on Reconfigurable Communication-centric Systems-on-Chip (ReCoSoC), pp. 1–6. IEEE (2015)

- (34) Goldreich, O.: Foundations of cryptography: volume 1, basic tools. Cambridge university press (2007)

- (35) Guajardo, J., Kumar, S.S., Schrijen, G.J., Tuyls, P.: FPGA intrinsic PUFs and their use for IP protection. In: International Workshop on Cryptographic Hardware and Embedded Systems, pp. 63–80. Springer (2007)

- (36) Guo, Q., Ye, J., Gong, Y., Hu, Y., Li, X.: Efficient attack on non-linear current mirror PUF with genetic algorithm. In: 2016 IEEE 25th Asian Test Symposium (ATS), pp. 49–54. IEEE (2016)

- (37) Hammouri, G., Öztürk, E., Sunar, B.: A tamper-proof and lightweight authentication scheme. Pervasive and mobile computing 4(6), 807–818 (2008)

- (38) Helfmeier, C., Boit, C., Nedospasov, D., Seifert, J.P.: Cloning physically unclonable functions. In: 2013 IEEE International Symposium on Hardware-Oriented Security and Trust (HOST), pp. 1–6. IEEE (2013)

- (39) Herder, C., Ren, L., Van Dijk, M., Yu, M.D., Devadas, S.: Trapdoor computational fuzzy extractors and stateless cryptographically-secure physical unclonable functions. IEEE Transactions on Dependable and Secure Computing 14(1), 65–82 (2016)

- (40) Holcomb, D.E., Burleson, W.P., Fu, K.: Power-up SRAM state as an identifying fingerprint and source of true random numbers. IEEE Transactions on Computers 58(9), 1198–1210 (2008)

- (41) Hospodar, G., Maes, R., Verbauwhede, I.: Machine learning attacks on 65nm arbiter PUFs: Accurate modeling poses strict bounds on usability. In: 2012 IEEE international workshop on Information forensics and security (WIFS), pp. 37–42. IEEE (2012)

- (42) Kalai, A.T., Mansour, Y., Verbin, E.: On agnostic boosting and parity learning. In: Proceedings of the fortieth annual ACM symposium on Theory of computing, pp. 629–638 (2008)

- (43) Kang, H., Hori, Y., Satoh, A.: Performance evaluation of the first commercial PUF-embedded RFID. In: Global Conference on Consumer Electronics, pp. 5–8. IEEE (2012)

- (44) Karakoyunlu, D., Sunar, B.: Differential template attacks on PUF enabled cryptographic devices. In: 2010 IEEE International Workshop on Information Forensics and Security, pp. 1–6. IEEE (2010)

- (45) Katzenbeisser, S., Kocabaş, Ü., Van Der Leest, V., Sadeghi, A.R., Schrijen, G.J., Wachsmann, C.: Recyclable PUFs: Logically reconfigurable PUFs. Journal of Cryptographic Engineering 1(3), 177 (2011)

- (46) Khalafalla, M., Gebotys, C.: PUFs deep attacks: Enhanced modeling attacks using deep learning techniques to break the security of double arbiter PUFs. In: 2019 Design, Automation & Test in Europe Conference & Exhibition (DATE), pp. 204–209. IEEE (2019)

- (47) Kim, I., Maiti, A., Nazhandali, L., Schaumont, P., Vivekraja, V., Zhang, H.: From statistics to circuits: Foundations for future physical unclonable functions. In: Towards Hardware-Intrinsic Security, pp. 55–78. Springer (2010)

- (48) Kumar, R., Burleson, W.: Hybrid modeling attacks on current-based PUFs. In: 2014 IEEE 32nd International Conference on Computer Design (ICCD), pp. 493–496. IEEE (2014)

- (49) Kumar, R., Burleson, W.: On design of a highly secure PUF based on non-linear current mirrors. In: 2014 IEEE international symposium on hardware-oriented security and trust (HOST), pp. 38–43. IEEE (2014)

- (50) Kumar, R., Burleson, W.: Side-channel assisted modeling attacks on feed-forward arbiter PUFs using silicon data. In: International Workshop on Radio Frequency Identification: Security and Privacy Issues, pp. 53–67. Springer (2015)

- (51) Kursawe, K., Sadeghi, A.R., Schellekens, D., Skoric, B., Tuyls, P.: Reconfigurable physical unclonable functions-enabling technology for tamper-resistant storage. In: 2009 IEEE International Workshop on Hardware-Oriented Security and Trust, pp. 22–29. IEEE (2009)

- (52) Lao, Y., Parhi, K.K.: Novel reconfigurable silicon physical unclonable functions. In: Proceedings of Workshop on Foundations of Dependable and Secure Cyber-Physical Systems (FDSCPS), pp. 30–36 (2011)

- (53) Lee, J.W., Lim, D., Gassend, B., Suh, G.E., Van Dijk, M., Devadas, S.: A technique to build a secret key in integrated circuits for identification and authentication applications. In: 2004 Symposium on VLSI Circuits. Digest of Technical Papers (IEEE Cat. No. 04CH37525), pp. 176–179. IEEE (2004)

- (54) Lim, D.: Extracting secret keys from integrated circuits. Ph.D. thesis, Massachusetts Institute of Technology (2004)

- (55) Machida, T., Yamamoto, D., Iwamoto, M., Sakiyama, K.: A new mode of operation for arbiter PUF to improve uniqueness on FPGA. In: 2014 Federated Conference on Computer Science and Information Systems, pp. 871–878. IEEE (2014)

- (56) Maes, R.: Physically Unclonable Functions: Constructions, Properties and Applications. Springer Berlin Heidelberg (2013)

- (57) Maes, R., Verbauwhede, I.: Physically unclonable functions: A study on the state of the art and future research directions. In: Towards Hardware-Intrinsic Security, pp. 3–37. Springer (2010)

- (58) Maiti, A., Gunreddy, V., Schaumont, P.: A systematic method to evaluate and compare the performance of physical unclonable functions. In: Embedded systems design with FPGAs, pp. 245–267. Springer (2013)

- (59) Maiti, A., Kim, I., Schaumont, P.: A robust physical unclonable function with enhanced challenge-response set. IEEE Transactions on Information Forensics and Security 7(1), 333–345 (2011)

- (60) Majzoobi, M., Koushanfar, F., Potkonjak, M.: Lightweight secure PUFs. In: 2008 IEEE/ACM International Conference on Computer-Aided Design, pp. 670–673. IEEE (2008)

- (61) Majzoobi, M., Koushanfar, F., Potkonjak, M.: Testing techniques for hardware security. In: 2008 IEEE International Test Conference, pp. 1–10. IEEE (2008)

- (62) Majzoobi, M., Koushanfar, F., Potkonjak, M.: Techniques for design and implementation of secure reconfigurable PUFs. ACM Transaction on Reconfigurable Technology and Systems (TRETS) 2 (2009)

- (63) Merli, D., Heyszl, J., Heinz, B., Schuster, D., Stumpf, F., Sigl, G.: Localized electromagnetic analysis of RO PUFs. In: 2013 IEEE International Symposium on Hardware-Oriented Security and Trust (HOST), pp. 19–24. IEEE (2013)

- (64) Merli, D., Schuster, D., Stumpf, F., Sigl, G.: Semi-invasive EM attack on FPGA RO PUFs and countermeasures. In: Proceedings of the Workshop on Embedded Systems Security, pp. 1–9 (2011)

- (65) Nedospasov, D., Seifert, J.P., Helfmeier, C., Boit, C.: Invasive PUF analysis. In: 2013 Workshop on Fault Diagnosis and Tolerance in Cryptography, pp. 30–38. IEEE (2013)

- (66) Nguyen, P.H., Sahoo, D.P., Chakraborty, R.S., Mukhopadhyay, D.: Efficient attacks on robust ring oscillator PUF with enhanced challenge-response set. In: Proceedings of Design, Automation & Test in Europe Conference & Exhibition, pp. 641–646. EDA Consortium (2015)

- (67) Nguyen, P.H., Sahoo, D.P., Chakraborty, R.S., Mukhopadhyay, D.: Security analysis of arbiter PUF and its lightweight compositions under predictability test. ACM Transactions on Design Automation of Electronic Systems (TODAES) 22(2), 1–28 (2016)

- (68) Nguyen, P.H., Sahoo, D.P., Jin, C., Mahmood, K., van Dijk, M.: MXPUF: Secure PUF design against state-of-the-art modeling attacks. IACR Cryptology ePrint Archive 2017, 572 (2017)

- (69) Nguyen, P.H., Sahoo, D.P., Jin, C., Mahmood, K., Rührmair, U., van Dijk, M.: The interpose PUF: Secure PUF design against state-of-the-art machine learning attacks. IACR Transactions on Cryptographic Hardware and Embedded Systems pp. 243–290 (2019)

- (70) Oren, Y., Sadeghi, A.R., Wachsmann, C.: On the effectiveness of the remanence decay side-channel to clone memory-based PUFs. In: International Workshop on Cryptographic Hardware and Embedded Systems, pp. 107–125. Springer (2013)

- (71) Rivest, R.L.: Cryptography and machine learning. In: Intrl. Conference on the Theory and Application of Cryptology, pp. 427–439. Springer (1991)

- (72) Rührmair, U., Sehnke, F., Sölter, J., Dror, G., Devadas, S., Schmidhuber, J.: Modeling attacks on physical unclonable functions. In: Proceedings of the 17th ACM Conference on Computer and communications security, pp. 237–249. ACM (2010)

- (73) Rührmair, U., Sehnke, F., Sölter, J., Dror, G., Devadas, S., Schmidhuber, J.: Modeling attacks on physical unclonable functions. In: Proceedings of the 17th ACM Conference on Computer and Communications Security, pp. 237–249 (2010)

- (74) Rührmair, U., Xu, X., Sölter, J., Mahmoud, A., Majzoobi, M., Koushanfar, F., Burleson, W.: Efficient power and timing side channels for physical unclonable functions. In: Cryptographic Hardware and Embedded Systems–CHES 2014, pp. 476–492. Springer (2014)

- (75) Saha, I., Jeldi, R.R., Chakraborty, R.S.: Model building attacks on physically unclonable functions using genetic programming. In: 2013 IEEE International Symposium on Hardware-Oriented Security and Trust (HOST), pp. 41–44. IEEE (2013)

- (76) Sahoo, D.P., Mukhopadhyay, D., Chakraborty, R.S., Nguyen, P.H.: A multiplexer-based arbiter PUF composition with enhanced reliability and security. IEEE Transactions on Computers 67(3), 403–417 (2017)

- (77) Sahoo, D.P., Nguyen, P.H., Mukhopadhyay, D., Chakraborty, R.S.: A case of lightweight PUF constructions: Cryptanalysis and machine learning attacks. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 34(8), 1334–1343 (2015)

- (78) Sahoo, D.P., Saha, S., Mukhopadhyay, D., Chakraborty, R.S., Kapoor, H.: Composite PUF: A new design paradigm for physically unclonable functions on FPGA. In: 2014 IEEE International Symposium on Hardware-Oriented Security and Trust (HOST), pp. 50–55. IEEE (2014)

- (79) Santikellur, P., Bhattacharyay, A., Chakraborty, R.S.: Deep learning based model building attacks on arbiter PUF compositions. Cryptology ePrint Archive, Report 2019/566 (2019)

- (80) Schaller, A., Xiong, W., Anagnostopoulos, N.A., Saleem, M.U., Gabmeyer, S., Katzenbeisser, S., Szefer, J.: Intrinsic rowhammer PUFs: Leveraging the rowhammer effect for improved security. In: 2017 IEEE International Symposium on Hardware Oriented Security and Trust (HOST), pp. 1–7. IEEE (2017)

- (81) Schuster, D., Hesselbarth, R.: Evaluation of bistable ring PUFs using single layer neural networks. In: Trust and Trustworthy Computing, pp. 101–109. Springer (2014)

- (82) Shannon, C.E.: Communication theory of secrecy systems. The Bell system technical journal 28(4), 656–715 (1949)

- (83) Suh, G.E., Devadas, S.: Physical unclonable functions for device authentication and secret key generation. In: 2007 44th ACM/IEEE Design Automation Conference, pp. 9–14. IEEE (2007)

- (84) Tajik, S., Dietz, E., Frohmann, S., Dittrich, H., Nedospasov, D., Helfmeier, C., Seifert, J.P., Boit, C., Hübers, H.W.: Photonic side-channel analysis of arbiter PUFs. Journal of Cryptology 30(2), 550–571 (2017)

- (85) Tajik, S., Dietz, E., Frohmann, S., Seifert, J.P., Nedospasov, D., Helfmeier, C., Boit, C., Dittrich, H.: Physical characterization of arbiter PUFs. In: International Workshop on Cryptographic Hardware and Embedded Systems, pp. 493–509. Springer (2014)

- (86) Tajik, S., Lohrke, H., Ganji, F., Seifert, J.P., Boit, C.: Laser fault attack on physically unclonable functions. In: 2015 Workshop on Fault Diagnosis and Tolerance in Cryptography (FDTC), pp. 85–96 (2015)

- (87) Valiant, L.G.: A theory of the learnable. Communications of the ACM 27(11), 1134–1142 (1984)

- (88) Vijayakumar, A., Patil, V.C., Prado, C.B., Kundu, S.: Machine learning resistant strong PUF: Possible or a pipe dream? In: International Symposium on Hardware Oriented Security and Trust (HOST), pp. 19–24. IEEE (2016)

- (89) Wang, S.J., Chen, Y.S., Li, K.S.M.: Adversarial attack against modeling attack on PUFs. In: 2019 56th ACM/IEEE Design Automation Conference (DAC), pp. 1–6. IEEE (2019)

- (90) Wisiol, N., Mühl, C., Pirnay, N., Nguyen, P.H., Margraf, M., Seifert, J.P., van Dijk, M., Rührmair, U.: Splitting the interpose PUF: A novel modeling attack strategy. IACR Transactions on Cryptographic Hardware and Embedded Systems pp. 97–120 (2020)

- (91) Xu, X., Rührmair, U., Holcomb, D.E., Burleson, W.: Security evaluation and enhancement of bistable ring PUFs. In: International Workshop on Radio Frequency Identification: Security and Privacy Issues, pp. 3–16. Springer (2015)

- (92) Yamamoto, D., Takenaka, M., Sakiyama, K., Torii, N.: Security evaluation of bistable ring PUFs on FPGAs using differential and linear analysis. In: Computer Science and Information Systems (FedCSIS), 2014 Federated Conference on, pp. 911–918 (2014)

- (93) Yashiro, R., Hori, Y., Katashita, T., Sakiyama, K.: A deep learning attack countermeasure with intentional noise for a PUF-based authentication scheme. In: International Conference on Information Technology and Communications Security, pp. 78–94. Springer (2019)

- (94) Ye, J., Hu, Y., Li, X.: RPUF: Physical unclonable function with randomized challenge to resist modeling attack. In: 2016 IEEE Asian Hardware-Oriented Security and Trust (AsianHOST), pp. 1–6. IEEE (2016)

- (95) Yu, M.D.M., Hiller, M., Delvaux, J., Sowell, R., Devadas, S., Verbauwhede, I.: A lockdown technique to prevent machine learning on PUFs for lightweight authentication. IEEE Transactions on Multi-Scale Computing Systems 2(3), 146–159 (2016)

- (96) Yu, M.D.M., Verbauwhede, I., Devadas, S., M’Raihi, D.: A noise bifurcation architecture for linear additive physical functions. Hardware-Oriented Security and Trust (HOST), 2014 IEEE International Symposium on pp. 124–129 (2014)

- (97) Zeitouni, S., Gens, D., Sadeghi, A.R.: It’s hammer time: how to attack (rowhammer-based) DRAM-PUFs. In: 2018 55th ACM/ESDA/IEEE Design Automation Conference (DAC), pp. 1–6. IEEE (2018)