vskip=0pt

PIRLNav: Pretraining with Imitation and RL Finetuning for ObjectNav

Abstract

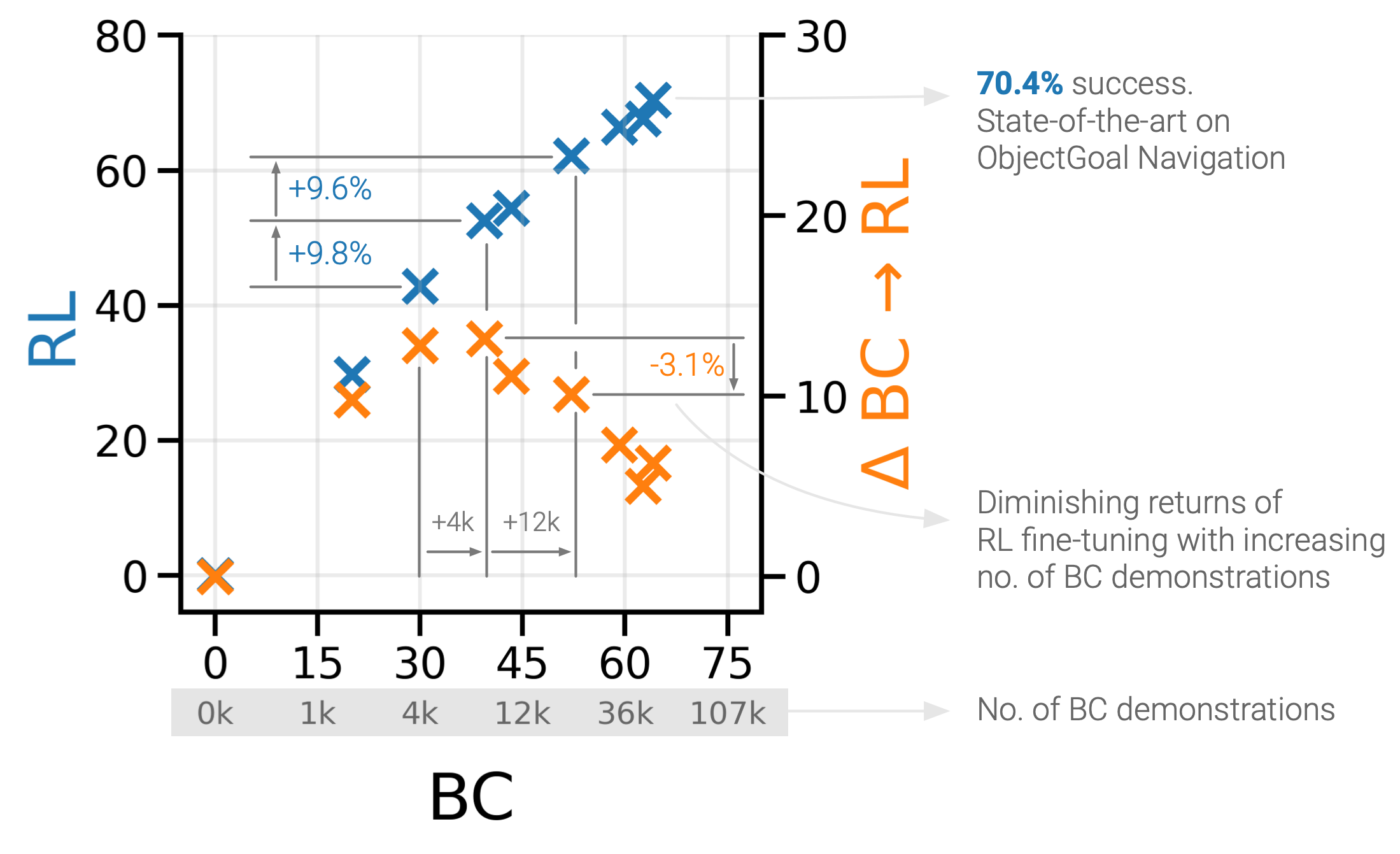

We study ObjectGoal Navigation – where a virtual robot situated in a new environment is asked to navigate to an object. Prior work [1] has shown that imitation learning (IL) using behavior cloning (BC) on a dataset of human demonstrations achieves promising results. However, this has limitations – 1) BC policies generalize poorly to new states, since the training mimics actions not their consequences, and 2) collecting demonstrations is expensive. On the other hand, reinforcement learning (RL) is trivially scalable, but requires careful reward engineering to achieve desirable behavior. We present PIRLNav, a two-stage learning scheme for BC pretraining on human demonstrations followed by RL-finetuning. This leads to a policy that achieves a success rate of on ObjectNav ( absolute over previous state-of-the-art).

Using this BCRL training recipe, we present a rigorous

empirical analysis of design choices. First, we investigate whether

human demonstrations can be replaced with ‘free’ (automatically generated) sources of demonstrations, e.g. shortest paths (SP) or task-agnostic frontier exploration (FE) trajectories.

We find that BCRL on human demonstrations outperforms

BCRL on SP and FE trajectories, even when

controlled for the same BC-pretraining success on train, and

even on a subset of val episodes where BC-pretraining success favors

the SP or FE policies.

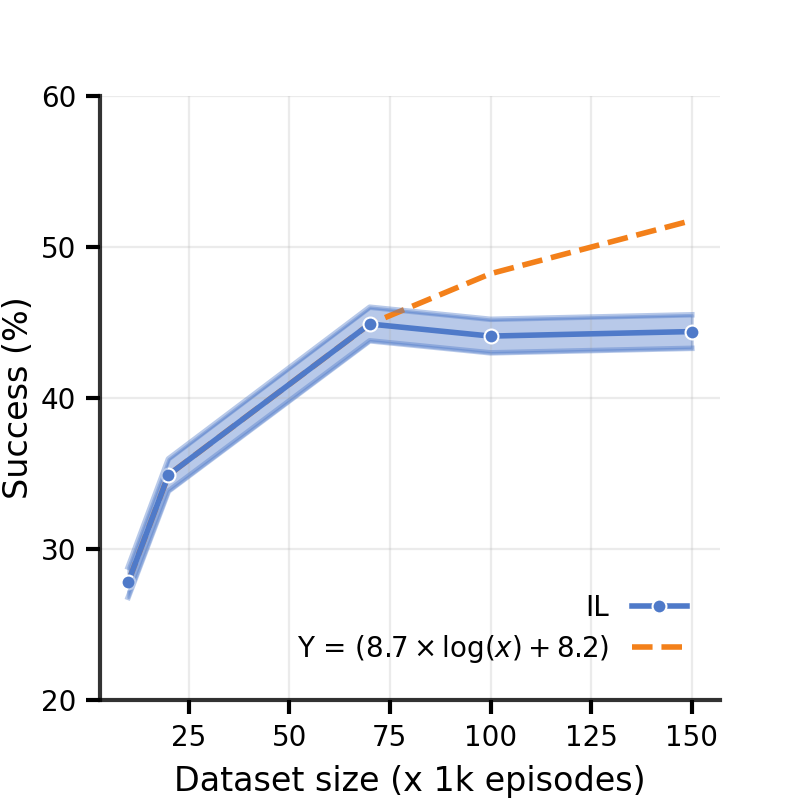

Next, we study how RL-finetuning performance scales with the size of the BC pretraining dataset.

We find that as we increase the size of the BC-pretraining dataset and get to

high BC accuracies, the improvements from RL-finetuning are smaller,

and that of the performance of our best BCRL policy can be

achieved with less than half the number of BC demonstrations.

Finally, we analyze failure modes of our ObjectNav policies, and present guidelines for further improving them.

Project page: ram81.github.io/projects/pirlnav.

1 Introduction

Since the seminal work of Winograd [2], designing embodied agents that have a rich understanding of the environment they are situated in, can interact with humans (and other agents) via language, and the environment via actions has been a long-term goal in AI [3, 4, 5, 6, 7, 8, 9, 10, 11, 12]. We focus on ObjectGoal Navigation [13, 14], wherein an agent situated in a new environment is asked to navigate to any instance of an object category (‘find a plant’, ‘find a bed’, etc.); see Fig. 2. ObjectNav is simple to explain but difficult for today’s techniques to accomplish. First, the agent needs to be able to ground the tokens in the language instruction to physical objects in the environment (e.g. what does a ‘plant’ look like?). Second, the agent needs to have rich semantic priors to guide its navigation to avoid wasteful exploration (e.g. the microwave is likely to be found in the kitchen, not the washroom). Finally, it has to keep track of where it has been in its internal memory to avoid redundant search.

Humans are adept at ObjectNav. Prior work [1] collected a large-scale dataset of human demonstrations for ObjectNav, where human subjects on Mechanical Turk teleoperated virtual robots and searched for objects in novel houses. This first provided a human baseline on ObjectNav of success rate on the Matterport3D (MP3D) dataset [15]111On val split, for 21 object categories, and a maximum of 500 steps. compared to success rate of the best performing method [1]. This dataset was then used to train agents via imitation learning (specifically, behavior cloning).

While this approach achieved state-of-art results ( success rate on MP3D val dataset), it has two clear limitations. First, behavior cloning (BC) is known to suffer from poor generalization to out-of-distribution states not seen during training, since the training emphasizes imitating actions not accomplishing their goals. Second and more importantly, it is expensive and thus not scalable. Specifically, Ramrakhya et al. [1] collected demonstrations on scenes in Matterport3D Dataset, which took hours of human teleoperation and $ dollars. A few months after [1] was released, a new higher-quality dataset called HM3D-Semantics v0.1 [16] became available with annotated 3D scenes, and a few months after that HM3D-Semantics v0.2 added additional scenes. Scaling Ramrakhya et al.’s approach to continuously incorporate new scenes involves replicating that entire effort again and again.

On the other hand, training with reinforcement learning (RL) is trivially scalable once annotated 3D scans are available. However, as demonstrated in Maksymets et al. [17], RL requires careful reward engineering, the reward function typically used for ObjectNav actually penalizes exploration (even though the task requires it), and the existing RL policies overfit to the small number of available environments.

Our primary technical contribution is PIRLNav, an approach for pretraining with BC and finetuning with RL for ObjectNav. BC pretrained policies provide a reasonable starting point for ‘bootstrapping’ RL and make the optimization easier than learning from scratch. In fact, we show that BC pretraining even unlocks RL with sparse rewards. Sparse rewards are simple (do not involve any reward engineering) and do not suffer from the unintended consequences described above. However, learning from scratch with sparse rewards is typically out of reach since most random action trajectories result in no positive rewards.

While combining IL and RL has been studied in prior work [18, 19, 20, 21, 22], the main technical challenge in the context of modern neural networks is that imitation pretraining results in weights for the policy (or actor), but not a value function (or critic). Thus, naively initializing a new RL policy with these BC-pretrained policy weights often leads to catastrophic failures due to destructive policy updates early on during RL training, especially for actor-critic RL methods [23]. To overcome this challenge, we present a two-stage learning scheme involving a critic-only learning phase first that gradually transitions over to training both the actor and critic. We also identify a set of practical recommendations for this recipe to be applied to ObjectNav. This leads to a PIRLNav policy that advances the state-the-art on ObjectNav from success rate (in [24]) to (, relative improvement).

Next, using this BCRL training recipe, we conduct an empirical analysis of design choices. Specifically, an ingredient we investigate is whether human demonstrations can be replaced with ‘free’ (automatically generated) sources of demonstrations for ObjectNav, e.g. (1) shortest paths (SP) between the agent’s start location and the closest object instance, or (2) task-agnostic frontier exploration [25] (FE) of the environment followed by shortest path to goal-object upon observing it. We ask and answer the following:

-

1.

‘Do human demonstrations capture any unique ObjectNav-specific behaviors that shortest paths and frontier exploration trajectories do not?’ Yes. We find that BC / BCRL on human demonstrations outperforms BC / BCRL on shortest paths and frontier exploration trajectories respectively. When we control the number of demonstrations from each source such that BC success on train is the same, RL-finetuning when initialized from BC on human demonstrations still outperforms the other two.

-

2.

‘How does performance after RL scale with BC dataset size?’ We observe diminishing returns from RL-finetuning as we scale BC dataset size. This suggests, by effectively leveraging the trade-off curve between size of pretraining dataset size vs. performance after RL-Finetuning, we can achieve closer to state-of-the-art results without investing into a large dataset of BC demonstrations.

-

3.

‘Does BC on frontier exploration demonstrations present similar scaling behavior as BC on human demonstrations?’ No. We find that as we scale frontier exploration demonstrations past trajectories, the performance plateaus.

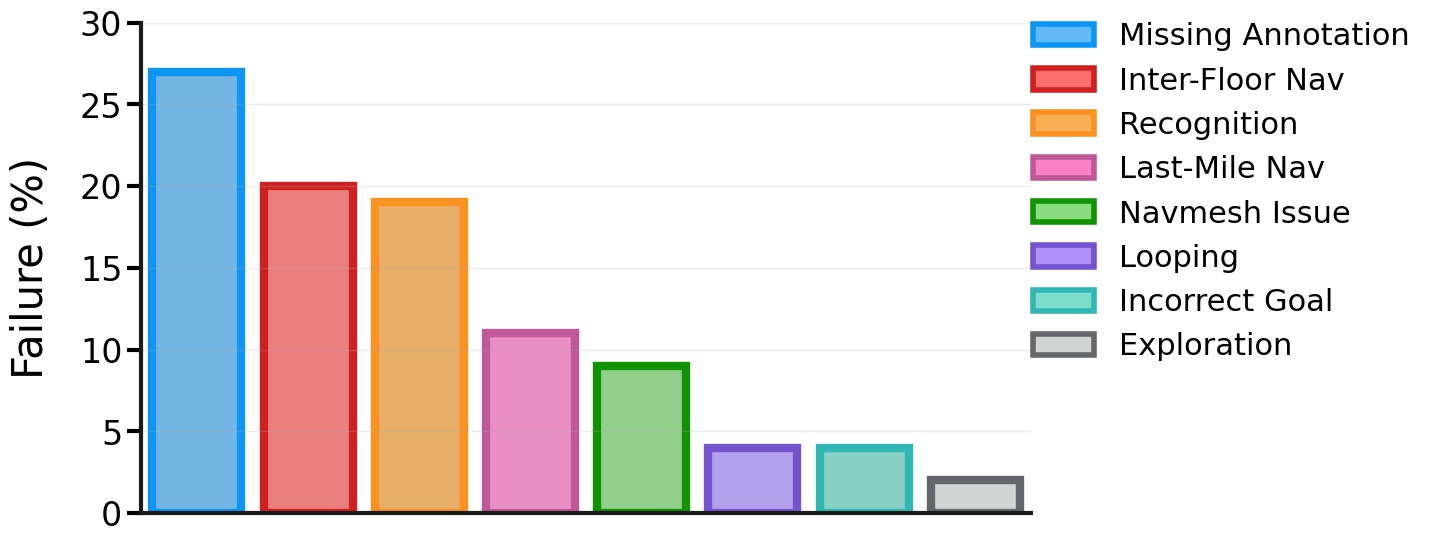

Finally, we present an analysis of the failure modes of our ObjectNav policies and present a set of guidelines for further improving them. Our policy’s primary failure modes are: a) Dataset issues: comprising of missing goal annotations, and navigation meshes blocking the path, b) Navigation errors: primarily failure to navigate between floors, c) Recognition failures: where the agent does not identify the goal object during an episode, or confuses the specified goal with a semantically-similar object.

2 Related Work

ObjectGoal Navigation. Prior works on ObjectNav have used end-to-end RL [26, 27, 17], modular learning [24, 28, 29], and imitation learning [1, 30]. Works that use end-to-end RL have proposed improved visual representations [26, 31], auxiliary tasks [27], and data augmentation techniques [17] to improve generalization to unseen environments. Improved visual representations include object relation graphs [31] and semantic segmentations [26]. Ye et al. [27] use auxiliary tasks like predicting environment dynamics, action distributions, and map coverage in addition to ObjectNav and achieve promising results. Maksymets et al. [17] improve generalization of RL agents by training with artificially inserted objects and proposing a reward to incentivize exploration.

Modular learning methods for ObjectNav have also emerged as a strong competitor [24, 28, 32]. These methods rely on separate modules for semantic mapping that build explicit structured map representations, a high-level semantic exploration module that is learned through RL to solve the ‘where to look?’ subproblem, and a low-level navigation policy that solves ‘how to navigate to ?’.

The current state-of-the-art methods on ObjectNav [1, 30] make use of BC on a large dataset of human demonstrations. with a simple CNN+RNN policy architecture. In this work, we improve on them by developing an effective approach to finetune these imitation-pretrained policies with RL.

Imitation Learning and RL Finetuning. Prior works have considered a special case of learning from demonstration data. These approaches initialize policies trained using behavior cloning, and then fine-tune using on-policy reinforcement learning [18, 20, 21, 22, 33, 34], On classical tasks like cart-pole swing-up [18], balance, hitting a baseball [33], and underactuated swing-up [34], demonstrations have been used to speed up learning by initializing policies pretrained on demonstrations for RL. Similar to these methods, we also use a on-policy RL algorithm for finetuning the policy trained with behavior cloning. Rajeswaran et al. [20] (DAPG) pretrain a policy using behavior cloning and use an augmented RL finetuning objective to stay close to the demonstrations which helps reduce sample complexity. Unfortunately DAPG is not feasible in our setting as it requires solving a systems research problem to efficiently incorporate replaying demonstrations and collecting experience online at our scale. [20] show results of the approach on a dexterous hand manipulation task with a small number of demonstrations that can be loaded in system memory and therefore did not need to solve this system challenge. This is not possible in our setting, just the 256256 RGB observations for the demos we collect would occupy over 2 TB memory, which is out of reach for all but the most exotic of today’s systems. There are many methods for incorporating demonstrations/imitation learning with off-policy RL [35, 36, 37, 38, 39]. Unfortunately these methods were not designed to work with recurrent policies and adapting off-policy methods to work with recurrent policies is challenging [40]. See the Appendix A for more details. The RL finetuning approach that demonstrates results with an actor-critic and high-dimensional visual observations, and is thus most closely related to our setup is proposed in VPT [21]. Their approach uses Phasic Policy Gradients (PPG) [41] with a KL-divergence loss between the current policy and the frozen pretrained policy, and decays the KL loss weight over time to enable exploration during RL finetuning. Our approach uses Proximal Policy Gradients (PPO) [42] instead of PPG, and therefore does not require a KL constraint, which is compute-expensive, and performs better on ObjectNav.

3 ObjectNav and Imitation Learning

3.1 ObjectNav

In ObjectNav an agent is tasked with searching for an instance of the specified object category (e.g., ‘bed’) in an unseen environment. The agent must perform this task using only egocentric perceptions. Specifically, a RGB camera, Depth sensor222We don’t use this sensor as we don’t find it helpful., and a GPS+Compass sensor that provides location and orientation relative to the start position of the episode. The action space is discrete and consists of move_forward (), turn_left (), turn_right (), look_up (), look_down (), and stop actions. An episode is considered successful if the agent stops within Euclidean distance of the goal object within steps and is able to view the object by taking turn actions [14].

We use scenes from the HM3D-Semantics v0.1 dataset [16]. The dataset consists of scenes and unique goal object categories. We evaluate our agent using the train/val/test splits from the 2022 Habitat Challenge333https://aihabitat.org/challenge/2022/.

3.2 ObjectNav Demonstrations

Ramrakhya et al. [1] collected ObjectNav demonstrations for the Matterport3D dataset [15]. We begin our study by replicating this effort and collect demonstrations for the HM3D-Semantics v0.1 dataset [16]. We use Ramrakhya et al.’s Habitat-WebGL infrastructure to collect demonstrations, amounting to human annotation hours.

3.3 Imitation Learning from Demonstrations

We use behavior cloning to pretrain our ObjectNav policy on the human demonstrations we collect. Let denote a policy parametrized by that maps observations to a distribution over actions . Let denote a trajectory consisting of state, observation, action tuples: and denote a dataset of human demonstrations. The optimal parameters are

| (1) |

We use inflection weighting [43] to adjust the loss function to upweight timesteps where actions change (i.e. ).

Our ObjectNav policy architecture is a simple CNN+RNN model from [30]. To encode RGB input CNN, we use a ResNet50 [44]. Following [30], the CNN is first pre-trained on the Omnidata starter dataset [45] using the self-supervised pretraining method DINO [46] and then finetuned during ObjectNav training. The GPS+Compass inputs, , and , are passed through fully-connected layers FC FC to embed them to 32-d vectors. Finally, we convert the object goal category to one-hot and pass it through a fully-connected layer FC, resulting in a 32-d vector. All of these input features are concatenated to form an observation embedding, and fed into a 2-layer, 2048-d GRU at every timestep to predict a distribution over actions - formally, given current observations , GRU. To reduce overfitting, we apply color-jitter and random shifts [47] to the RGB inputs.

4 RL Finetuning

Our motivation for RL-finetuning is two-fold. First, finetuning may allow for higher performance as behavior cloning is known to suffer from a train/test mismatch – when training, the policy sees the result of taking ground-truth actions, while at test-time, it must contend with the consequences of its own actions. Second, collecting more human demonstrations on new scenes or simply to improve performance is time-consuming and expensive. On the other hand, RL-finetuning is trivially scalable (once annotated 3D scans are available) and has the potential to reduce the amount of human demonstrations needed.

4.1 Setup

The RL objective is to find a policy that maximizes expected sum of discounted future rewards. Let be a sequence of object, action, reward tuples (, , ) where is the action sampled from the agent’s policy, and is the reward. For a discount factor , the optimal policy is

| (2) |

To solve this maximization problem, actor-critic RL methods learn a state-value function (also called a critic) in addition to the policy (also called an actor). The critic represents the expected value of returns when starting from state and acting under the policy , where returns are defined as . We use DD-PPO [48], a distributed implementation of PPO [42], an on-policy RL algorithm. Given a -parameterized policy and a set of rollouts, PPO updates the policy as follows. Let , be the advantage estimate and be the ratio of the probability of action under current policy and under the policy used to collect rollouts. The parameters are updated by maximizing:

| (3) |

We use a sparse success reward. Sparse success is simple (does not require hyperparameter optimization) and has fewer unintended consequences (e.g. Maksymets et al. [17] showed that typical dense rewards used in ObjectNav actually penalize exploration, even though exploration is necessary for ObjectNav in new environments). Sparse rewards are desirable but typically difficult to use with RL (when initializing training from scratch) because they result in nearly all trajectories achieving reward, making it difficult to learn. However, since we pretrain with BC, we do not observe any such pathologies.

4.2 Finetuning Methodology

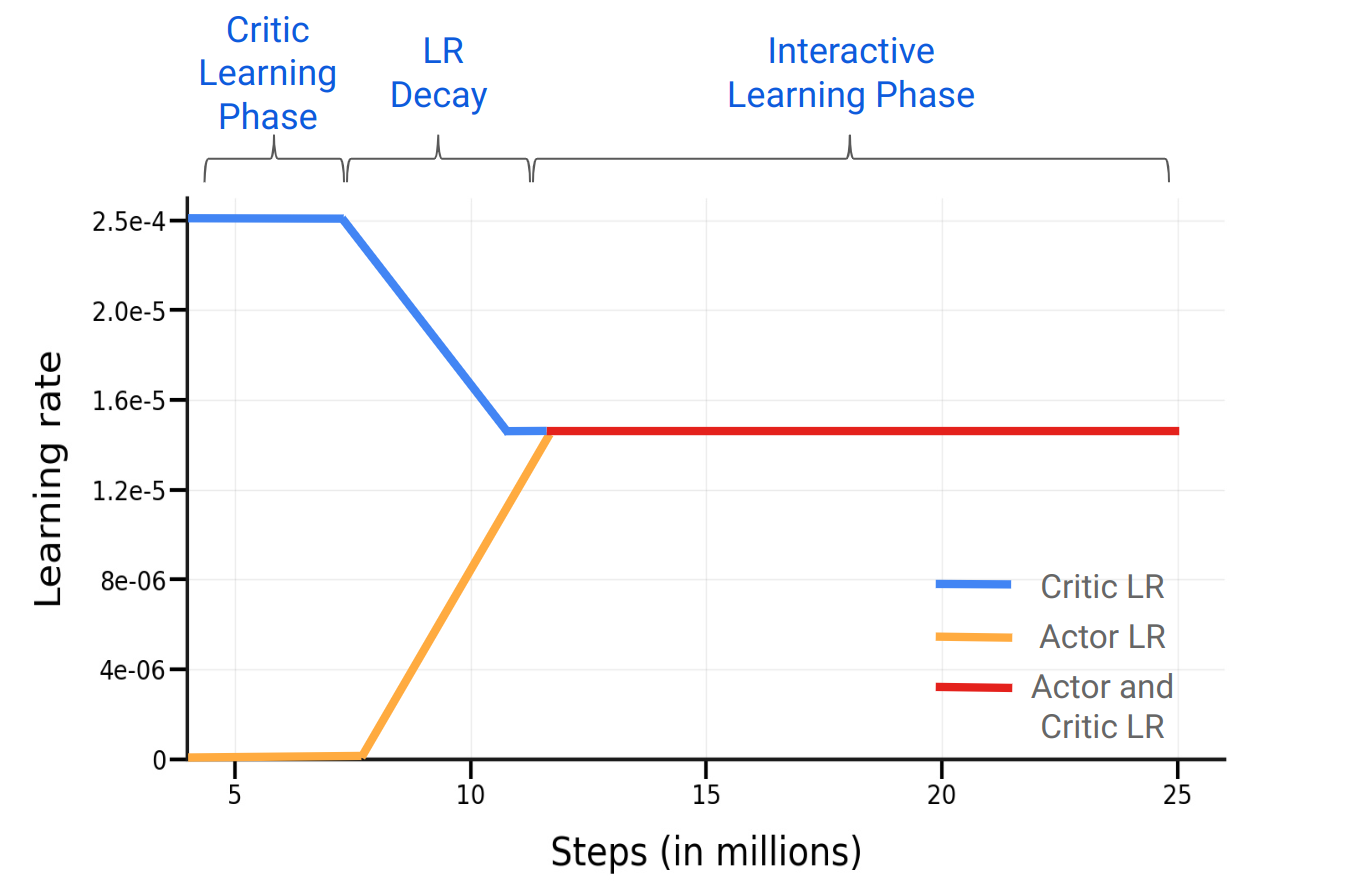

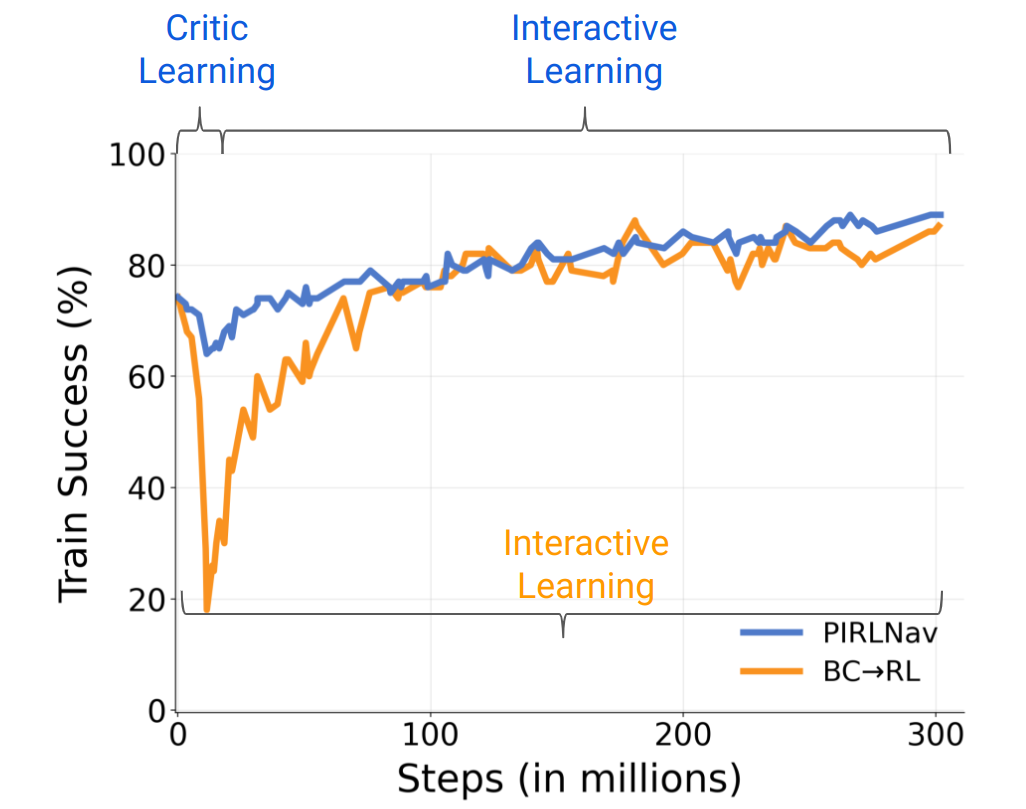

We use the behavior cloned policy weights to initialize the actor parameters. However, notice that during behavior cloning we do not learn a critic nor is it easy to do so – a critic learned on human demonstrations (during behavior cloning) would be overly optimistic since all it sees are successes. Thus, we must learn the critic from scratch during RL. Naively finetuning the actor with a randomly-initialized critic leads to a rapid drop in performance444After the initial drop, the performance increases but the improvements on success are small. (see Fig. 8) since the critic provides poor value estimates which influence the actor’s gradient updates (see Eq.(3)). We address this issue by using a two-phase training regime:

Phase 1: Critic Learning. In the first phase, we rollout trajectories using the frozen policy, pre-trained using BC, and use them to learn a critic. To ensure consistency of rollouts collected for critic learning with RL training, we sample actions (as opposed to using argmax actions) from the pre-trained BC policy: . We train the critic until its loss plateaus. In our experiments, we found steps to be sufficient. In addition, we also initialize the weights of the critic’s final linear layer close to zero to stabilize training.

Phase 2: Interactive Learning. In the second phase, we unfreeze the actor RNN555The CNN and non-visual observation embedding layers remain frozen. We find this to be more stable. and finetune both actor and critic weights. We find that naively switching from phase 1 to phase 2 leads to small improvements in policy performance at convergence. We gradually decay the critic learning rate from to while warming-up the policy learning rate from to between to steps, and then keeping both at through the course of training. See Fig. 3. We find that using this learning rate schedule helps improve policy performance. For parameters that are shared between the actor and critic (i.e. the RNN), we use the lower of the two learning rates (i.e. always the actor’s in our schedule). To summarize our finetuning methodology:

-

–

First, we initialize the weights of the policy network with the IL-pretrained policy and initialize critic weights close to zero. We freeze the actor and shared weights. The only learnable parameters are in the critic.

-

–

Next, we learn the critic weights on rollouts collected from the pretrained, frozen policy.

-

–

After training the critic, we warmup the policy learning rate and decay the critic learning rate.

-

–

Once both critic and policy learning rate reach a fixed learning rate, we train the policy to convergence.

4.3 Results

Comparing with the RL-finetuning approach in VPT [21]. We start by comparing our proposed RL-finetuning approach with the approach used in VPT [21]. Specifically, [21] proposed initializing the critic weights to zero, replacing entropy term with a KL-divergence loss between the frozen IL policy and the RL policy, and decay the KL divergence loss coefficient, , by a fixed factor after every iteration. Notice that this prevents the actor from drifting too far too quickly from the IL policy, but does not solve uninitialized critic problem. To ensure fair comparison, we implement this method within our DD-PPO framework to ensure that any performance difference is due to the fine-tuning algorithm and not tangential implementation differences. Complete training details are in the Sec. C.3. We keep hyperparameters constant for our approach for all experiments. Table 1 reports results on HM3D val for the two approaches using human demonstrations. We find that PIRLNav achieves Success compared to VPT and comparable SPL.

| Method | Success | SPL |

|---|---|---|

| 1) BC | ||

| 2) BCRL-FT w/ VPT | ||

| 3) PIRLNav (Ours) |

| Method | Success | SPL |

|---|---|---|

| 4) BC | ||

| 5) BCRL-FT | ||

| 6) BCRL-FT (+ Critic Learning) | ||

| 7) BCRL-FT (+ Critic Learning, Critic Decay) | ||

| 8) BCRL-FT (+ Critic Learning, Actor Warmup) | ||

| 9) PIRLNav |

Ablations. Next, we conduct ablation experiments to quantify the importance of each phase in our RL-finetuning approach. Table 2 reports results on the HM3D val split for a policy BC-pretrained on human demonstrations and RL-finetuned for steps, complete training details are in Sec. C.4. First, without a gradual learning transition (row ), i.e. without a critic learning and LR decay phase, the policy improves by on success and on SPL. Next, with only a critic learning phase (row ), the policy improves by on success and on SPL. Using an LR decay schedule only for the critic after the critic learning phase improves success by and SPL by , and using an LR warmup schedule for the actor (but no critic LR decay) after the critic learning phase improves success by and SPL by . Finally, combining everything (critic-only learning, critic LR decay, actor LR warmup), our policy improves by on success and on SPL.

| test-std | test-challenge | ||||

| Method | Success | SPL | Success | SPL | |

| 10) Stretch [24] | |||||

| 11) ProcTHOR-Large [49] | - | - | |||

| 12) Habitat-Web [1] | - | - | |||

| 13) DD-PPO [50] | - | - | |||

| 14) Populus A. | |||||

| 15) ByteBOT | 64.0% | 35.0% | |||

| 16) PIRLNav666The approach is called “BadSeed” on the HM3D leaderboard: eval.ai/web/challenges/challenge-page/1615/leaderboard/3899 | |||||

ObjectNav Challenge 2022 Results. Using our overall two-stage training approach of BC-pretraining followed by RL-finetuning, we achieve state-of-the-art results on ObjectNav– success and SPL on both the test-standard and test-challenge splits and success and SPL on val. Table 3 compares our results with the top-4 entries to the Habitat ObjectNav Challenge 2022 [50]. Our approach outperforms Stretch [24] on success rate on both test-standard and test-challenge and is comparable on SPL ( worse on test-standard, better on test-challenge). ProcTHOR [49], which uses procedurally-generated environments for training, achieves success and SPL on test-standard split, which is worse at success and worse at SPL than ours. For sake of completeness, we also report results of two unpublished entries uploaded to the leaderboard – Populus A. and ByteBOT. Unfortunately, there is no associated report yet with these entries, so we are unable to comment on the details of these approaches, or even whether the comparison is meaningful.

5 Role of demonstrations in BCRL transfer

Our decision to use human demonstrations for BC-pretraining before RL-finetuning was motivated by results in prior work [1]. Next, we examine if other cheaper sources of demonstrations lead to equally good BCRL generalization. Specifically, we consider sources of demonstrations:

Shortest paths (SP). These demonstrations are generated by greedily

sampling actions to fit the geodesic shortest path to the nearest navigable goal

object, computed using the ground-truth map of the environment.

These demonstrations do not capture any exploration, they only capture

success at the ObjectNav task via the most efficient path.

Task-Agnostic Frontier Exploration (FE) [24].

These are generated by using a 2-stage approach: 1) Exploration: where a

task-agnostic strategy is used to maximize exploration coverage and build a top-down

semantic map of the environment, and

2) Goal navigation: once the goal object is detected by the semantic predictor,

the developed map is used to reach

it by following the shortest path. These demonstrations capture ObjectNav-agnostic

exploration.

Human Demonstrations (HD) [1]. These are collected by asking humans on Mechanical Turk to control an agent and navigate to the goal object. Humans are provided access to the first-person RGB view of the agent and tasked to reach within m of the goal object category. These demonstrations capture human-like ObjectNav-specific exploration.

5.1 Results with Behavior Cloning

Using the BC setup described in Sec. 3.3, we train on SP, FE, and HD demonstrations. Since these demonstrations vary in trajectory length (e.g. SP are significantly shorter than FE), we collect steps of experience with each method. That amounts to SP, FE, and HD demonstrations respectively. As shown in Table 4, BC on SP demonstrations leads to success and SPL. We believe this poor performance is due to an imitation gap [51], i.e. the shortest path demonstrations are generated with access to privileged information (ground-truth map of the environment) which is not available to the policy during training. Without a map, following the shortest path in a new environment to find a goal object is not possible. BC on FE demonstrations achieves success and SPL, which is significantly better than BC on shortest paths ( success, SPL). Finally, BC on HD obtains the best results – success, SPL. These trends suggest that task-specific exploration (captured in human demonstrations) leads to much better generalization than task-agnostic exploration (FE) or shortest paths (SP).

| Training demonstrations | Success | SPL |

|---|---|---|

| Shortest paths () | ||

| Frontier exploration () | ||

| Human demonstrations () |

5.2 Results with RL Finetuning

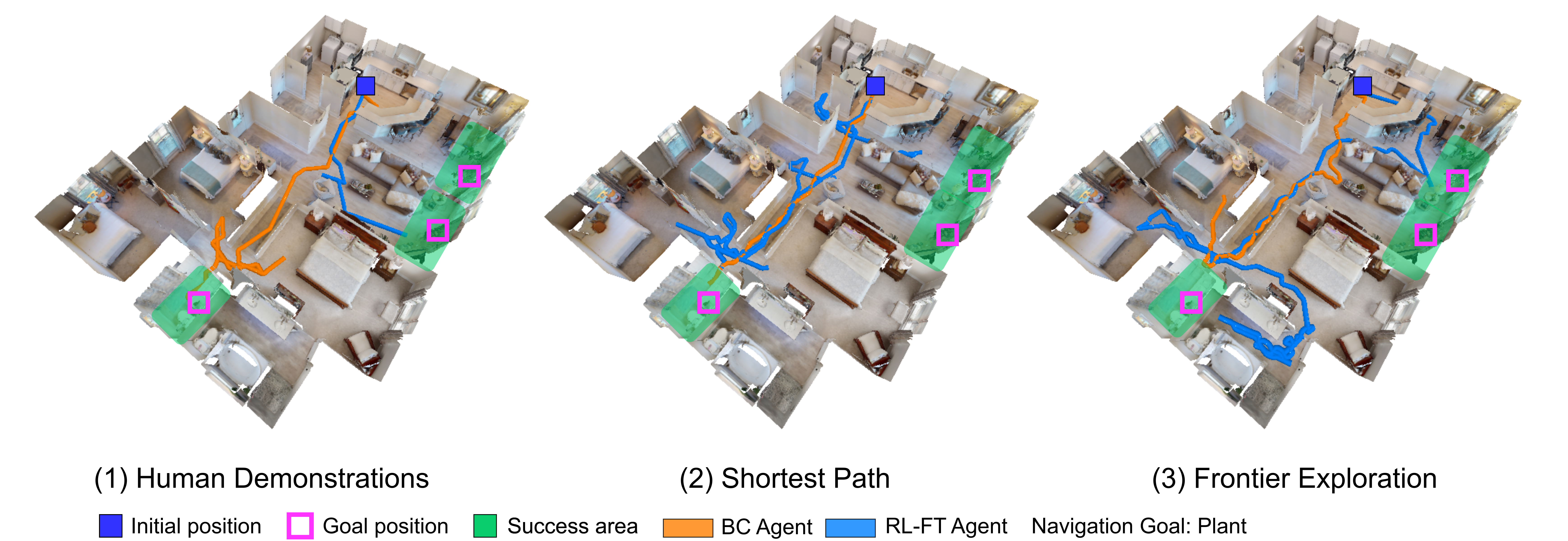

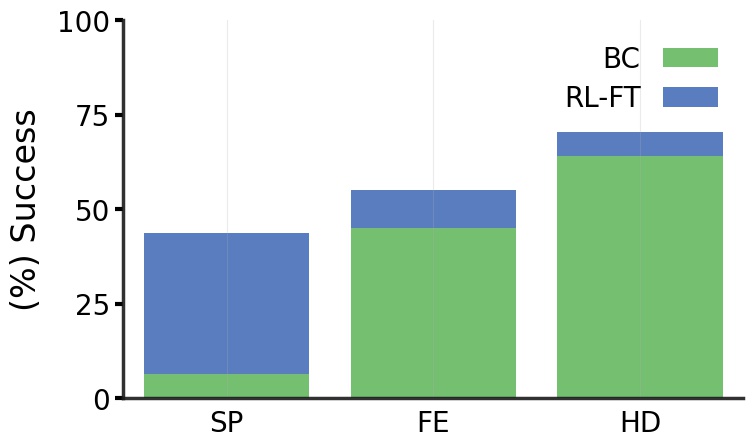

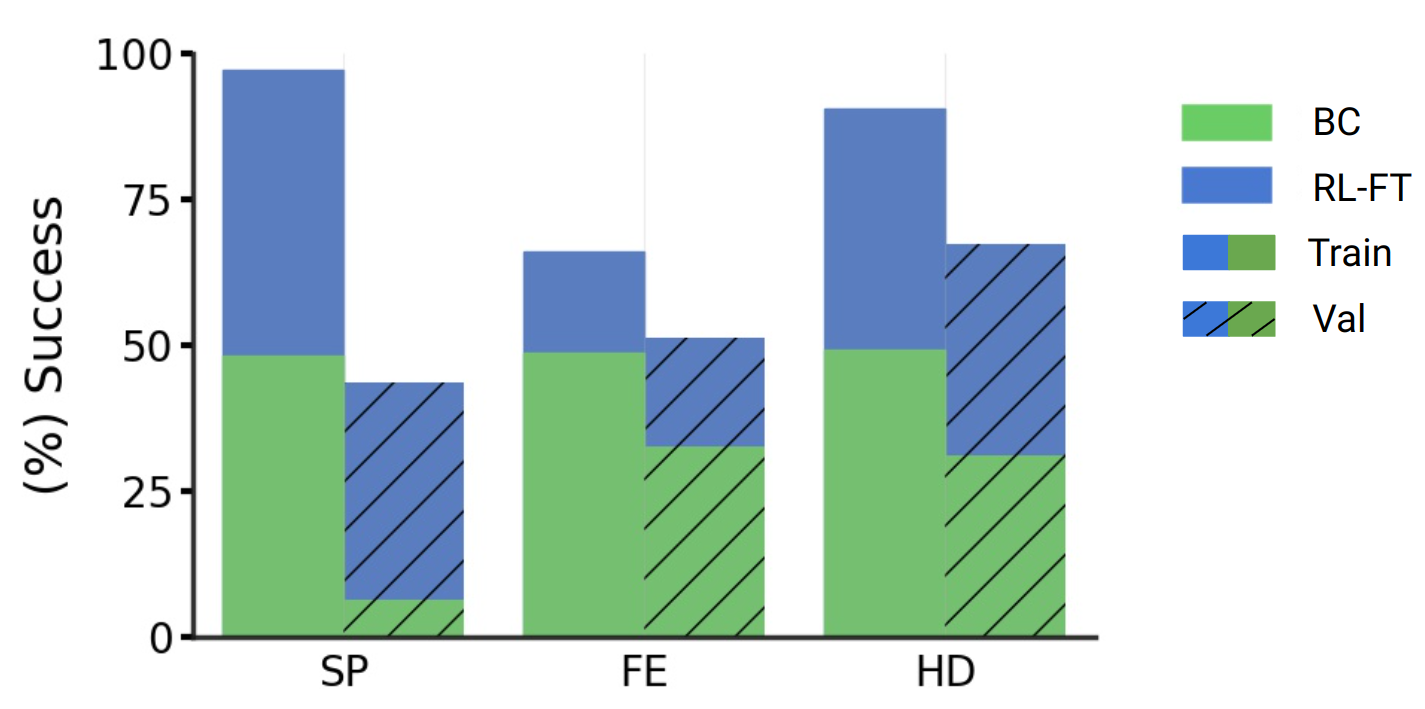

Using the BC-pretrained policies on SP, FE, and HD demonstrations as initialization, we RL-finetune each using our approach described in Sec. 4. These results are summarized in Fig. 4. Perhaps intuitively, the trends after RL-finetuning follow the same ordering as BC-pretraining, i.e. RL-finetuning from BC on HD FE SP. But there are two factors that could be leading to this ordering after RL-finetuning – 1) inconsistency in performance at initialization (i.e. BC on HD is already better than BC on FE), and 2) amenability of each of these initializations to RL-finetuning (i.e. is RL-finetuning from HD init better than FE init?).

We are interested in answering (2), and so we control for (1) by selecting BC-pretrained policy weights across SP, FE, and HD that have equal performance on a subset of train success. This essentially amounts to selecting BC-pretraining checkpoints for FE and HD from earlier in training as success is the maximum for SP.

Fig. 5 shows the results after BC and RL-finetuning on a subset of the HM3D train and on HM3D val. First, note that at BC-pretraining train success rates are equal (), while on val FE is slightly better than HD followed by SP. We find that after RL-finetuning, the policy trained on HD still leads to higher val success () compared to FE () and SP (). Notice that RL-finetuning from SP leads to high train success, but low val success, indicating significant overfitting. FE has smaller train-val gap after RL-finetuning but both are worse than HD, indicating underfitting. These results show that learning to imitate human demonstrations equips the agent with navigation strategies that enable better RL-finetuning generalization compared to imitating other kinds of demonstrations, even when controlled for the same BC-pretraining accuracy.

| Training demonstrations | BC Success | RL-FT Success |

|---|---|---|

| 17) SP | ||

| 18) HD | ||

| 19) FE | ||

| 20) HD |

Results on SP-favoring and FE-favoring episodes. To further emphasize that imitating human demonstrations is key to good generalization, we created two subsplits from the HM3D val split that are adversarial to HD performance – SP-favoring and FE-favoring. The SP-favoring val split consists of episodes where BC on SP achieved a higher performance compared to BC on HD, i.e. we select episodes where BC on SP succeeded but BC on HD did not or both BC on SP and BC on HD failed. Similarly, we also create an FE-favoring val split using the same sampling strategy biased towards BC on FE. Next, we report the performance of RL-finetuned from BC on SP, FE, and HD on these two evaluation splits in Table 5. On both SP-favoring and FE-favoring, BC on HD is at success (by design), but after RL-finetuning, is able to significantly outperform RL-finetuning from the respective BC on SP and FE policies.

5.3 Scaling laws of BC and RL

In this section, we investigate how BC-pretraining RL-finetuning success scales with no. of BC demonstrations.

Human demonstrations. We create HD subsplits ranging in size from to episodes, and BC-pretrain policies with the same set of hyperparameters on each split. Then, for each, we RL-finetune from the best-performing checkpoint. The resulting BC and RL success on HM3D val vs. no. of HD episodes is plotted in Fig. 1. Similar to [1], we see promising scaling behavior with more BC demonstrations.

Interestingly, as we increase the size of of the BC pretraining dataset and get to high BC accuracies, the improvements from RL-finetuning decrease. E.g. at BC demonstrations, the BCRL improvement is success, while at BC demonstrations, the improvement is . Furthermore, with BC-pretraining demonstrations, the RL-finetuned success is only worse than RL-finetuning from BC demonstrations ( vs. ). Both suggest that by effectively leveraging the trade-off between the size of the BC-pretraining dataset vs. performance gains after RL-finetuning, it may be possible to achieve close to state-of-the-art results without large investments in demonstrations.

How well does FE Scale? In Section 5.1, we showed that BC on human demonstrations outperforms BC on both shortest paths and frontier exploration demonstrations, when controlled for the same amount of training experience. In contrast to human demonstrations however, collecting shortest paths and frontier exploration demonstrations is cheaper, which makes scaling these demonstration datasets easier. Since BC performance on shortest paths is significantly worse even with x more demonstrations compared to FE and HD ( SP vs. FE and HD demos, Sec. 5.1), we focus on scaling FE demonstrations. Fig. 6 plots performance on HM3D val against FE dataset size and a curve fitted using demonstrations to predict performance on FE dataset-sizes . We created splits ranging in size from to . Increasing the dataset size doesn’t consistently improve performance and saturates after demonstrations, suggesting that generating more FE demonstrations is unlikely to help. We hypothesize that the saturation is because these demonstrations don’t capture task-specific exploration.

6 Failure Modes

To better understand the failure modes of our BCRL ObjectNav policies,

we manually annotate failed HM3D val episodes from our

best ObjectNav agent. See Fig. 7.

The most common failure modes are:

Missing Annotations (): Episodes where the agent navigates

to the correct goal object category but the episode is counted

as a failure due to missing annotations in the data.

Inter-Floor Navigation ():

The object is on a different floor and the

agent fails to climb up/down the stairs.

Recognition Failure (): The agent sees the object in

its field of view but fails to navigate to it.

Last Mile Navigation [52] ().

Repeated collisions against objects or mesh geometry close to the goal object preventing the agent

from reaching close to it.

Navmesh Failure ().

Hard-to-navigate meshes blocking the path of the agent.

E.g. in one instance, the agent fails to climb stairs because of a

narrow nav mesh on the stairs.

Looping ().

Repeatedly visiting the same location and not exploring the rest of the environment.

Semantic Confusion (). Confusing

the goal object with a semantically-similar object.

E.g. ‘armchair’ for ‘sofa’.

Exploration Failure (). Catch-all for failures in a

complex navigation environment, early termination, semantic failures

(e.g. looking for a chair in a bathroom), etc.

As can be seen in Fig. 7, most failures () are due to issues in the ObjectNav dataset – due to missing object annotations due to holes / issues in the navmesh. failures are due to the agent being unable to climb up/down stairs. We believe this happens because climbing up / down stairs to explore another floor is a difficult behavior to learn and there are few episodes that require this. Oversampling inter-floor navigation episodes during training can help with this. Another failure mode is failing to recognize the goal object – where the object is in the agent’s field of view but it does not navigate to it, and where the agent navigates to another semantically-similar object. Advances in the visual backbone and object recognition can help address these. Prior works [1, 24] have used explicit semantic segmentation modules to recognize objects at each step of navigation. Incorporating this within the BCRL training pipeline could help. failures are due to last mile navigation, suggesting that equipping the agent with better goal-distance estimators could help. Finally, only failures are due to looping and lack of exploration, which is promising!

7 Conclusion

To conclude, we propose PIRLNav, an approach to combine imitation using behavior cloning (BC) and reinforcement learning (RL) for ObjectNav, wherein we pretrain a policy with BC on human demonstrations and then finetune it with RL, leading to state-of-the-art results on ObjectNav ( success, improvement over previous best). Next, using this BCRL training recipe, we present a thorough empirical study of the impact of different demonstration datasets used for BC-pretraining on downstream RL-finetuning performance. We show that BC / BCRL on human demonstrations outperforms BC / BCRL on shortest paths and frontier exploration trajectories, even when we control for same BC success on train. We also show that as we scale the pretraining dataset size for BC and get to higher BC success rates, the improvements from RL-finetuning start to diminish. Finally, we characterize our agent’s failure modes, and find that the largest sources of error are 1) dataset annotation noise, and inability of the agent to 2) navigate across floors, and 3) recognize the correct goal object.

Acknowledgements. We thank Karmesh Yadav for OVRL model weights [30], and Theophile Gervet for answering questions related to the frontier exploration code [24] used to generate demonstrations. The Georgia Tech effort was supported in part by NSF, ONR YIP, and ARO PECASE. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the U.S. Government, or any sponsor.

References

- [1] R. Ramrakhya, E. Undersander, D. Batra, and A. Das, “Habitat-web: Learning embodied object-search strategies from human demonstrations at scale,” in CVPR, 2022.

- [2] T. Winograd, “Understanding natural language,” Cognitive Psychology, 1972.

- [3] L. Smith and M. Gasser, “The development of embodied cognition: six lessons from babies.,” Artificial life, vol. 11, no. 1-2, 2005.

- [4] K. M. Hermann, F. Hill, S. Green, F. Wang, R. Faulkner, H. Soyer, D. Szepesvari, W. Czarnecki, M. Jaderberg, D. Teplyashin, et al., “Grounded language learning in a simulated 3D world,” arXiv preprint arXiv:1706.06551, 2017.

- [5] F. Hill, K. M. Hermann, P. Blunsom, and S. Clark, “Understanding grounded language learning agents,” arXiv preprint arXiv:1710.09867, 2017.

- [6] D. S. Chaplot, K. M. Sathyendra, R. K. Pasumarthi, D. Rajagopal, and R. Salakhutdinov, “Gated-attention architectures for task-oriented language grounding,” in AAAI, 2018.

- [7] P. Anderson, Q. Wu, D. Teney, J. Bruce, M. Johnson, N. Sünderhauf, I. Reid, S. Gould, and A. van den Hengel, “Vision-and-language navigation: Interpreting visually-grounded navigation instructions in real environments,” in CVPR, 2018.

- [8] U. Jain, L. Weihs, E. Kolve, M. Rastegari, S. Lazebnik, A. Farhadi, A. G. Schwing, and A. Kembhavi, “Two body problem: Collaborative visual task completion,” in CVPR, 2019.

- [9] A. Das, Building agents that can see, talk, and act. PhD thesis, Georgia Institute of Technology, 2020.

- [10] J. Abramson, A. Ahuja, I. Barr, A. Brussee, F. Carnevale, M. Cassin, R. Chhaparia, S. Clark, B. Damoc, A. Dudzik, et al., “Imitating interactive intelligence,” arXiv preprint arXiv:2012.05672, 2020.

- [11] L. Weihs, A. Kembhavi, K. Ehsani, S. M. Pratt, W. Han, A. Herrasti, E. Kolve, D. Schwenk, R. Mottaghi, and A. Farhadi, “Learning generalizable visual representations via interactive gameplay,” in ICLR, 2021.

- [12] C. Lynch, A. Wahid, J. Tompson, T. Ding, J. Betker, R. Baruch, T. Armstrong, and P. Florence, “Interactive language: Talking to robots in real time,” arXiv preprint arXiv:2210.06407, 2022.

- [13] P. Anderson, A. X. Chang, D. S. Chaplot, A. Dosovitskiy, S. Gupta, V. Koltun, J. Kosecka, J. Malik, R. Mottaghi, M. Savva, and A. R. Zamir, “On evaluation of embodied navigation agents,” arXiv preprint arXiv:1807.06757, 2018.

- [14] D. Batra, A. Gokaslan, A. Kembhavi, O. Maksymets, R. Mottaghi, M. Savva, A. Toshev, and E. Wijmans, “ObjectNav revisited: On evaluation of embodied agents navigating to objects,” arXiv preprint arXiv:2006.13171, 2020.

- [15] A. Chang, A. Dai, T. Funkhouser, M. Halber, M. Niessner, M. Savva, S. Song, A. Zeng, and Y. Zhang, “Matterport3D: Learning from RGB-D Data in Indoor Environments,” in 3DV, 2017. MatterPort3D dataset license: http://kaldir.vc.in.tum.de/matterport/MP_TOS.pdf.

- [16] K. Yadav, R. Ramrakhya, S. K. Ramakrishnan, T. Gervet, J. Turner, A. Gokaslan, N. Maestre, A. X. Chang, D. Batra, M. Savva, et al., “Habitat-matterport 3d semantics dataset,” arXiv preprint arXiv:2210.05633, 2022.

- [17] O. Maksymets, V. Cartillier, A. Gokaslan, E. Wijmans, W. Galuba, S. Lee, and D. Batra, “THDA: Treasure Hunt Data Augmentation for Semantic Navigation,” in ICCV, 2021.

- [18] S. Schaal, “Learning from demonstration,” in NIPS, 1996.

- [19] A. Das, G. Gkioxari, S. Lee, D. Parikh, and D. Batra, “Neural Modular Control for Embodied Question Answering,” in CoRL, 2018.

- [20] A. Rajeswaran, V. Kumar, A. Gupta, G. Vezzani, J. Schulman, E. Todorov, and S. Levine, “Learning complex dexterous manipulation with deep reinforcement learning and demonstrations,” in RSS, 2018.

- [21] B. Baker, I. Akkaya, P. Zhokhov, J. Huizinga, J. Tang, A. Ecoffet, B. Houghton, R. Sampedro, and J. Clune, “Video pretraining (vpt): Learning to act by watching unlabeled online videos,” arXiv preprint arXiv:2206.11795, 2022.

- [22] A. Gupta, V. Kumar, C. Lynch, S. Levine, and K. Hausman, “Relay policy learning: Solving long horizon tasks via imitation and reinforcement learning,” in CoRL, 2019.

- [23] I. Uchendu, T. Xiao, Y. Lu, B. Zhu, M. Yan, J. Simon, M. Bennice, C. Fu, C. Ma, J. Jiao, S. Levine, and K. Hausman, “Jump-start reinforcement learning,” arXiv preprint arXiv:2204.02372, 2022.

- [24] D. S. Chaplot, D. Gandhi, A. Gupta, and R. Salakhutdinov, “Object goal navigation using goal-oriented semantic exploration,” in NeurIPS, 2020.

- [25] B. Yamauchi, “A frontier-based approach for autonomous exploration,” in Proceedings 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation CIRA’97. ’Towards New Computational Principles for Robotics and Automation’, 1997.

- [26] A. Mousavian, A.toshev, M. Fiser, J. Kosecka, A. Wahid, and J. Davidson, “Visual representations for semantic target driven navigation,” in ICRA, 2019.

- [27] J. Ye, D. Batra, A. Das, and E. Wijmans, “Auxiliary Tasks and Exploration Enable ObjectNav,” in ICCV, 2021.

- [28] Y. Liang, B. Chen, and S. Song, “SSCNav: Confidence-aware semantic scene completion for visual semantic navigation,” in ICRA, 2021.

- [29] S. K. Ramakrishnan, D. S. Chaplot, Z. Al-Halah, J. Malik, and K. Grauman, “PONI: Potential Functions for ObjectGoal Navigation with Interaction-free Learning,” in CVPR, 2022.

- [30] K. Yadav, R. Ramrakhya, A. Majumdar, V.-P. Berges, S. Kuhar, D. Batra, A. Baevski, and O. Maksymets, “Offline visual representation learning for embodied navigation,” arXiv preprint arXiv:2204.13226, 2022.

- [31] W. Yang, X. Wang, A. Farhadi, A. Gupta, and R. Mottaghi, “Visual semantic navigation using scene priors,” in ICLR, 2019.

- [32] S. K. Ramakrishnan, D. Jayaraman, and K. Grauman, “An exploration of embodied visual exploration,” arXiv preprint arXiv:2001.02192, 2020.

- [33] J. Peters and S. Schaal, “Reinforcement learning of motor skills with policy gradients,” Neural Networks, vol. 21, no. 4, pp. 682–697, 2008.

- [34] J. Kober and J. Peters, “Policy search for motor primitives in robotics,” in NeurIPS, 2008.

- [35] A. Nair, M. Dalal, A. Gupta, and S. Levine, “Accelerating online reinforcement learning with offline datasets,” arXiv preprint arXiv:2006.09359, 2020.

- [36] Y. Lu, K. Hausman, Y. Chebotar, M. Yan, E. Jang, A. Herzog, T. Xiao, A. Irpan, M. Khansari, D. Kalashnikov, and S. Levine, “AW-Opt: Learning robotic skills with imitation andreinforcement at scale,” in CoRL, 2021.

- [37] D. Kalashnikov, A. Irpan, P. Pastor, J. Ibarz, A. Herzog, E. Jang, D. Quillen, E. Holly, M. Kalakrishnan, V. Vanhoucke, et al., “Scalable deep reinforcement learning for vision-based robotic manipulation,” in CoRL, 2018.

- [38] X. B. Peng, A. Kumar, G. Zhang, and S. Levine, “Advantage-weighted regression: Simple and scalable off-policy reinforcement learning,” arXiv preprint arXiv:1910.00177, 2019.

- [39] Q. Wang, J. Xiong, L. Han, p. sun, H. Liu, and T. Zhang, “Exponentially weighted imitation learning for batched historical data,” in NeurIPS, 2018.

- [40] S. Kapturowski, G. Ostrovski, J. Quan, R. Munos, and W. Dabney, “Recurrent experience replay in distributed reinforcement learning,” in ICLR, 2019.

- [41] K. Cobbe, J. Hilton, O. Klimov, and J. Schulman, “Phasic policy gradient,” arXiv preprint arXiv:2009.04416, 2020.

- [42] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal policy optimization algorithms,” arXiv preprint arXiv:1707.06347, 2017.

- [43] E. Wijmans, S. Datta, O. Maksymets, A. Das, G. Gkioxari, S. Lee, I. Essa, D. Parikh, and D. Batra, “Embodied Question Answering in Photorealistic Environments with Point Cloud Perception,” in CVPR, 2019.

- [44] K. He, X. Zhang, S. Ren, and J. Sun, “Deep Residual Learning for Image Recognition,” in CVPR, 2016.

- [45] A. Eftekhar, A. Sax, J. Malik, and A. Zamir, “Omnidata: A scalable pipeline for making multi-task mid-level vision datasets from 3d scans,” in ICCV, 2021.

- [46] M. Caron, H. Touvron, I. Misra, H. Jégou, J. Mairal, P. Bojanowski, and A. Joulin, “Emerging properties in self-supervised vision transformers,” in ICCV, 2021.

- [47] D. Yarats, R. Fergus, A. Lazaric, and L. Pinto, “Mastering visual continuous control: Improved data-augmented reinforcement learning,” arXiv preprint arXiv:2107.09645, 2021.

- [48] E. Wijmans, A. Kadian, A. Morcos, S. Lee, I. Essa, D. Parikh, M. Savva, and D. Batra, “DD-PPO: Learning near-perfect pointgoal navigators from 2.5 billion frames,” in ICLR, 2020.

- [49] M. Deitke, E. VanderBilt, A. Herrasti, L. Weihs, J. Salvador, K. Ehsani, W. Han, E. Kolve, A. Farhadi, A. Kembhavi, and R. Mottaghi, “Procthor: Large-scale embodied ai using procedural generation,” in NeurIPS, 2022.

- [50] H. Team, “Habitat challenge, 2022.” https://aihabitat.org/challenge/2022, 2020.

- [51] L. Weihs, U. Jain, I.-J. Liu, J. Salvador, S. Lazebnik, A. Kembhavi, and A. Schwing, “Bridging the imitation gap by adaptive insubordination,” in NeurIPS, 2021. the first two authors contributed equally.

- [52] J. Wasserman, K. Yadav, G. Chowdhary, A. Gupta, and U. Jain, “Last-mile embodied visual navigation,” in CoRL, 2022.

- [53] V. Kumar and E. Todorov, “Mujoco haptix: A virtual reality system for hand manipulation,” in 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), 2015.

- [54] M. Hausknecht and P. Stone, “Deep Recurrent Q-Learning for Partially Observable MDPs,” in AAAI, 2015.

- [55] V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, and M. A. Riedmiller, “Playing atari with deep reinforcement learning,” arXiv preprint arXiv:1312.5602, 2013.

- [56] S. Ross, G. Gordon, and D. Bagnell, “A reduction of imitation learning and structured prediction to no-regret online learning,” in AISTATS, 2011.

- [57] J. Ho and S. Ermon, “Generative adversarial imitation learning,” in NIPS, 2016.

- [58] D. Bahdanau, F. Hill, J. Leike, E. Hughes, A. Hosseini, P. Kohli, and E. Grefenstette, “Learning to understand goal specifications by modelling reward,” in ICLR, 2019.

- [59] P. Abbeel and A. Y. Ng, “Apprenticeship learning via inverse reinforcement learning,” in ICML, 2004.

- [60] B. D. Ziebart, J. A. Bagnell, and A. K. Dey, “Maximum entropy inverse reinforcement learning,” in AAAI, 2008.

- [61] J. Fu, K. Luo, and S. Levine, “Learning robust rewards with adverserial inverse reinforcement learning,” in ICLR, 2018.

- [62] J. Schulman, P. Moritz, S. Levine, M. Jordan, and P. Abbeel, “High-dimensional continuous control using generalized advantage estimation,” in ICLR, 2016.

Appendix A Prior work in RL Finetuning

A.1 DAPG [20]

Preliminaries. Rajeswaran et al. [20] proposed DAPG, a method which incorporates demonstrations in RL, and thus quite relevant to our methodology. DAPG first pretrains a policy using behavior cloning then finetunes the policy using an augmented RL objective (shown in Eq. (4)). DAPG proposes to use different parts of demonstrations dataset during different stages of learning for tasks involving sequence of behaviors. To do so, they add an additional term to the policy gradient objective:

| (4) |

Here is a trajectory obtained by executing the current policy, denotes a trajectory obtained by replaying a demonstration, and is a weighting function to alternate between imitation and reinforcement learning. DAPG uses a heuristic weighting scheme to set to decay the auxiliary objective:

| (5) |

where and are hyperparameters and is the update iteration counter. The decaying weighting term is used to avoid biasing the gradient towards the demonstrations data towards the end of training.

Implementation Details. [20] showed results of using DAPG on dexterous hand manipulation tasks for object relocation, in-hand manipulation, tool use, etc. To train the policy with behavior cloning, they use demonstrations for each task gathered using the Mujoco HAPTIX system [53]. The small size of the demonstrations dataset and the observation input allows DAPG to load the demonstrations dataset in system memory which makes it feasible to compute the augmented RL objective shown above.

Challenges in adopting [20]’s setup. Compared to [20], our setup uses high-dimensional visual input (256256 RGB observations) and ObjectNav demonstrations for training. Following DAPG’s training implementation, storing the visual inputs for demonstrations in system memory would require TB, which is significantly higher than what is possible on today’s systems. An alternative is to leverage on-the-fly demonstration replay during RL training. However, efficiently incorporating demonstration replay with experience collection online requires solving a systems research problem. Naively switching between online experience collection using the current policy and replay demonstrations would require x the current experience collection time, overall hurting the training throughput.

A.2 Feasibility of Off-Policy RL finetuning

There are several methods for incorporating demonstrations with off-policy RL [35, 36, 37, 38, 39]. Algorithm 1 shows the general framework of off-policy RL (finetuning) methods.

Unfortunately, most of these methods use feedforward state encoders, which is ill-posed for partially observable settings. In partially observable settings, the agent requires a state representation that combines information about the state-action trajectory so far with information about the current observation, which is typically achieved using a recurrent network.

To train a recurrent policy in an off-policy setting, the full state-action trajectories need to be stored in a replay buffer to use for training, including the hidden state of the RNN. The policy update requires a sequence input for multiple time steps where is sampled sequence length. Additionally, it is not obvious how the hidden state should be initialized for RNN updates when using a sampled sequence in the off-policy setting. Prior work DRQN[54] compared two training strategies to train a recurrent network from replayed experience:

-

1.

Bootstrapped Random Updates. The episodes are sampled randomly from the replay buffer and the policy updates begin at random steps in an episode and proceed only for the unrolled timesteps. The RNN initial state is initialized to zero at the start of the update. Using randomly sampled experience better adheres to DQN’s [55] random sampling strategy, but, as a result, the RNN’s hidden state must be initialized to zero at the start of each policy update. Using zero start state allows for independent decorrelated sampling of short sequences which is important for robust optimization of neural networks. Although this can help RNN to learn to recover predictions from an initial state that mismatches with the hidden state from the collected experience but it might limit the ability of the network to rely on it’s recurrent state and exploit long term temporal correlations.

-

2.

Bootstrapped Sequential Updates. The full episode replays are sampled randomly from the replay buffer and the policy updates begin at the start of the episode. The RNN hidden state is carried forward throughout the episode. Eventhough this approach avoids the problem of finding the correct initial state it still has computational issues due to varying sequence length for each episode, and algorithmic issues due to high variance of network updates due to highly correlated nature of the states in the trajectory.

Even though using bootstrapped random updates with zero start states performed well in Atari which is mostly fully observable, R2D2[40] found using this strategy prevents a RNN from learning long-term dependencies in more memory critical environments like DMLab. [40] proposed two strategies to train recurrent policies with randomly samples sequences:

-

1.

Stored State. In this strategy, the hidden state is stored at each step in the replay and use it to initialize the network at the time of policy updates. Using stored state partially remedies the issues with initial recurrent state mismatch in zero start state strategy but it suffers from ‘representational drfit’ leading to ‘recurrent state staleness’, as the stored state generated by a sufficiently old network could differ significantly from a state from the current policy.

-

2.

Burn-in. In this strategy the initial part of the replay sequence is used to unroll the network and produce a start state (‘burn-in period’) and update the network on the remaining part of the sequence.

While R2D2 [40] found a combination of these strategies to be effective at mitigating the representational drift and recurrent state staleness, this increases computation and requires careful tuning of the replay sequence length and burn-in period .

Both [40, 54] demonstrate the issues associated with using a recurrent policy in an off-policy setting and present approaches that mitigate issues to some extent. Applying these techniques for Embodied AI tasks and off-policy RL finetuning is an open research problem and requires empirical evaluation of these strategies.

Appendix B Prior work in Imitation Learning

In Imitation Learning (IL), we use demonstrations of successful behavior to learn a policy that imitates the expert (demonstrator) providing these trajectories. The simplest approach to IL is behavior cloning (BC), which uses supervised learning to learn a policy to imitate the demonstrator. However, BC suffers from poor generalization to unseen states, since the training mimics the actions and not their consequences. DAgger [56] mitigates this issue by iteratively aggregating the dataset using the expert and trained policy to learn the policy . Specifically, at each step , the new dataset is generated by:

| (6) |

where, is a queryable expert, and is the trained policy at iteration . Then, we aggregate the dataset and train a new policy on the dataset . Using experience collected by the current policy to update the policy for next iteration enables DAgger [56] to mitigate the poor generalization to unseen states caused by BC. However, using DAgger [56] in our setting is not feasible as we don’t have a queryable human expert for policies being trained with human demonstrations.

Alternative approaches [57, 58, 59, 60, 61] for imitation learning are variants of inverse reinforcement learning (IRL), which learn reward function from expert demonstrations in order to train a policy. IRL methods learn a parameterized reward function, which models the behavior of the expert and assigns a scalar reward to a demonstration. Given the reward , a policy is learned to map states to distribution over actions at each time step. The goal of IRL methods is to learn a reward function such that a policy trained to maximize the discounted sum of the learned reward matches the behavior of the demonstrator. Compared to prior works [57, 58, 59, 60, 61], our setup uses a partially-observable setting and high-dimensional visual input for training. Following training implementation from prior works, storing visual inputs of demonstrations for reward model training would require system memory, which is significantly higher than what is possible on today’s systems. Alternatively, efficiently replaying demonstrations during RL training with reward model learning in the loop requires solving an open systems research problem. In addition, applying these methods for tasks in a partially observable setting is an open research problem and requires empirical evaluation of these approaches.

Appendix C Training Details

C.1 Behavior Cloning

We use a distributed implementation of behavior cloning by [1] for our imitation pretraining. Each worker collects frames of experience from environments parallely by replaying actions from the demonstrations dataset. We then perform a policy update using supervised learning on mini batches. For all of our BC experiments, we train the policy for steps on GPUs using Adam optimizer with a learning rate which is linearly decayed after each policy update. Tab. 6 details the default hyperparameters used in all of our training runs.

| Parameter | Value |

|---|---|

| Number of GPUs | 64 |

| Number of environments per GPU | 8 |

| Rollout length | 64 |

| Number of mini-batches per epoch | 2 |

| Optimizer | Adam |

| Learning rate | |

| Weight decay | |

| Epsilon | |

| DDPIL sync fraction | 0.6 |

C.2 Reinforcement Learning

To train our policy using RL we use PPO with Generalized Advantage Estimation (GAE) [62]. We use a discount factor of and set GAE parameter to 0.95. We do not use normalized advantages. To parallelize training, we use DD-PPO with workers on GPUs. Each worker collects frames of experience from environments parallely and then performs epochs of PPO update with mini batches in each epoch. For all of our experiments, we RL finetune the policy for steps. Tab. 7 details the default hyperparameters used in all of our training runs.

| Parameter | Value |

|---|---|

| Number of GPUs | 16 |

| Number of environments per GPU | 8 |

| Rollout length | 64 |

| PPO epochs | 2 |

| Number of mini-batches per epoch | 2 |

| Optimizer | Adam |

| Weight decay | |

| Epsilon | |

| PPO clip | 0.2 |

| Generalized advantage estimation | True |

| 0.99 | |

| 0.95 | |

| Value loss coefficient | 0.5 |

| Max gradient norm | 0.2 |

| DDPPO sync fraction | 0.6 |

C.3 RL Finetuning using VPT

To compare with RL finetuning approach proposed in VPT [21] we implement the method in DD-PPO framework. Specifically, we initialize the critic weights to zero, replace the entropy term in PPO [42] with a KL-divergence loss between the frozen IL policy and RL policy, and decay the KL divergence loss coefficient, , by a fixed factor after every iteration. This loss term is defined as:

| (7) |

C.4 RL Finetuning Ablations

| Method | Success | SPL |

|---|---|---|

| 21) BC | ||

| 22) BCRL-FT | ||

| 23) BCRL-FT (+ Critic Learning) | ||

| 24) BCRL-FT (+ Critic Learning, Critic Decay) | ||

| 25) BCRL-FT (+ Critic Learning, Actor Warmup) | ||

| 26) PIRLNav |

For ablations presented in Sec. 4.3 of the main paper (also shown in Tab. 8) we use a policy pretrained on human demonstrations using BC and finetuned for steps using hyperparameters from Tab. 7. We try learning rates (, , and ) for both BC RL (row 2) and BC RL (+ Critic Learning) (row 3) and we report the results with the one that works the best. For PIRLNav we use a starting learning rate of and decay it to , consistent with learning rate schedule of our best performing agent. For ablations we do not tune learning rate parameters of PIRLNav, we hypothesize tuning the parameters would help improve performance.

We find BC RL (row 2) works best with a smaller learning rate but the training performance drops significantly early on, due to the critic providing poor value estimates, and recovers later as the critic improves. See Fig. 8. In contrast when using proposed two phase learning setup with the learning rate schedule we do not observe a significant drop in training performance.