Placement is not Enough: Embedding with Proactive Stream Mapping on the Heterogenous Edge

Abstract

Edge computing is naturally suited to the applications generated by Internet of Things (IoT) nodes. The IoT applications generally take the form of directed acyclic graphs (DAGs), where vertices represent interdependent functions and edges represent data streams. The status quo of minimizing the makespan of the DAG motivates the study on optimal function placement. However, current approaches lose sight of proactively mapping the data streams to the physical links between the heterogenous edge servers, which could affect the makespan of DAGs significantly. To solve this problem, we study both function placement and stream mapping with data splitting simultaneously, and propose the algorithm DPE (Dynamic Programming-based Embedding). DPE is theoretically verified to achieve the global optimality of the embedding problem. The complexity analysis is also provided. Extensive experiments on Alibaba cluster trace dataset show that DPE significantly outperforms two state-of-the-art joint function placement and task scheduling algorithms in makespan by % and %, respectively.

I Introduction

Nowadays, widely used stream processing platforms, such as Apache Spark, Apache Flink, Amazon Kinesis Streams, etc., are designed for large-scale data-centers. These platforms are not suitable for real-time and latency-critical applications running on widely spread Internet of Things (IoT) nodes. By contrast, near-data processing within the network edge is a more applicable way to gain insights, which leads to the birth of edge computing. However, state-of-the-art edgy stream processing systems, for example, Amazon Greengrass, Microsoft Azure IoT Edge and so on, do not consider that how the dependent functions of the IoT applications distributed to the resource-constrained edge. To address this limitation, works studying function placement across the distributed edge servers spring up [1, 2, 3]. In these works, the IoT application is structured as a service function chain (SFC) or a directed acyclic graph (DAG) composed of interdependent functions, and the placement strategy of each function is obtained by minimizing the makespan of the application, under the trade-off between node processing time and cross-node communication overhead.

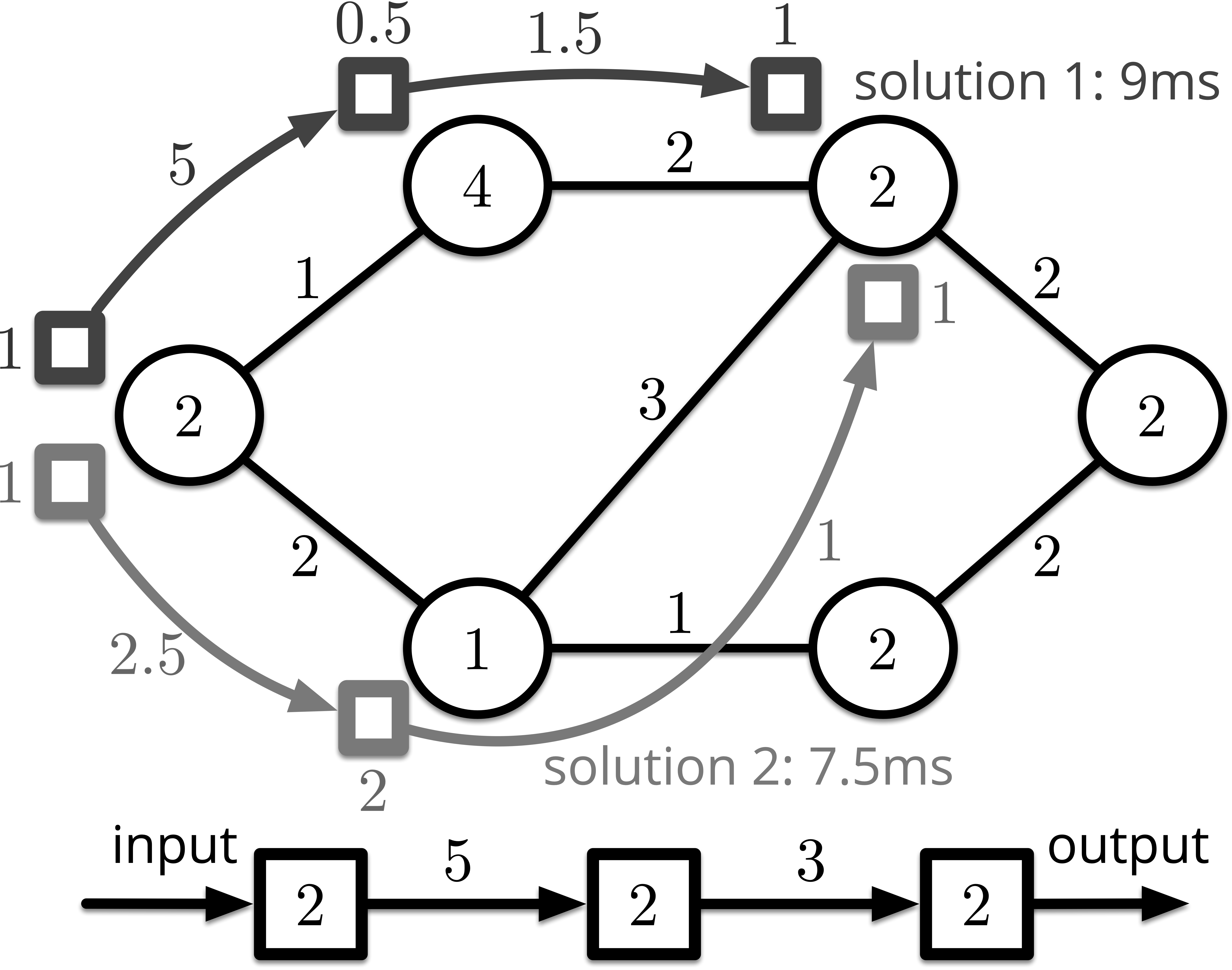

However, when minimizing the makespan of the application, state-of-the-art approaches only optimize the placement of functions, while passively generate the stream mapping. Here the stream mapping refers to mapping the input/output streams to the physical links between edge servers. The passivity here means the routing path between each placed function is not optimized but generated automatically through SDN controllers. Nevertheless, for the heterogenous edge, where each edge server has different processing power and each link has various throughput, better utilization of the stream mapping can result in less makespan even though the corresponding function placement is worse. This phenomenon is illustrated in Fig. 1. The top half of this figure is an undirected connected graph of six edge servers, abstracted from the physical infrastructure of the heterogenous edge. The numbers tagged in each node and beside each link of the undirected graph are the processing power (measured in flop/s) and throughput (measured in bit/s), respectively. The bottom half is a SFC with three functions. The number tagged inside each function is the required processing power (measured in flops). The number tagged beside each data stream is the size of it (measured in bits). Fig. 1 demonstrates two solutions of function placement. The numbers tagged beside nodes and links of each solution are the time consumed (measured in second). Just in terms of function placement, solution 1 enjoys lower function processing time (s s), thus performs better. However, the makespan of solution 2 is s lesser than solution 1 because the path which solution 2 routes through possess a higher throughput.

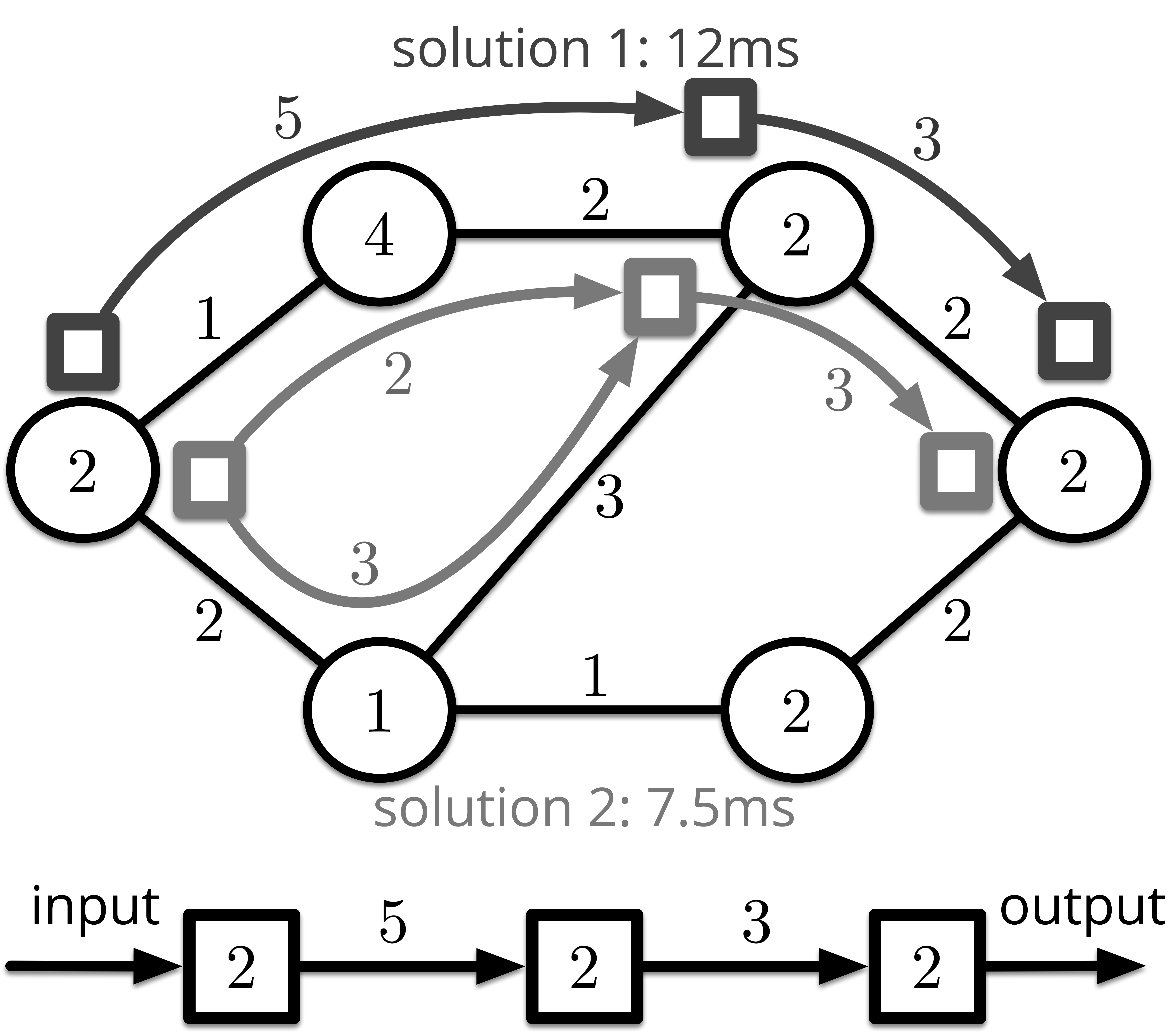

The above example implies that different stream mappings can significantly affect the makespan of the IoT applications. It enlightenes us to take the stream mapping into consideration proactively. In this paper, we name the combination of function placement and stream mapping as function embedding. Moreover, if stream splitting is allowed, i.e., the output data stream of a function can be splitted and route on multiple paths, the makespan can be decreased further. This phenomenon is captured in Fig. 2. The structure of this figure is the same as Fig. 1. It demonstrates two function embedding solutions with stream splitting allowed or not, respectively. In solution 2, the output stream of the first function is divided into two parts, with bits and bits, respectively. Correspondingly, the time consumed on routing are s and s, respectively. Although the two solutions have the same function placement, the makespan of solution 2, which is calculated as s, is s lesser than solution 1. In practice, segment routing (SR) can be applied to split and route data stream to different edge servers by commercial DNS controllers and HTTP proxies [4].

To capture the importance of stream mapping and embrace the future of 5G communications, in this paper we study the dependent function embedding problem with stream splitting on the heterogenous edge. The problem is similar with Virtual Network Embedding (VNE) problem in 5G network slicing for virtualized network functions (VNFs) [5]. The difference lies in that the VNFs are user planes, management planes and control planes with different levels of granularities, which are virtualized for transport and computing resources of telecommunication networks [6], rather than the IoT applications this paper refers to. For a DAG with complicated structure, the problem is combinatorial and difficult to solve when it scales up. In this paper, we firstly find the optimal substructure of the problem. The basic framework of our algorithm is based on Dynamic Programming. For each substructure, we seperate several linear programming sub-problems and solve them optimally. We do not adopt the regular iteration-based solvers such as simplex method and dual simplex method, but derive the optimal results directly. At length, our paper make the following contributions:

-

•

Model contribution: We study the dependent function embedding problem on the heterogenous edge. Other than existing works where only function placement is studied, we novelly take proactive stream mapping and data splitting into consideration and leverage dynamic programming as the approach to embed DAGs of IoT applications onto the constrained edge.

-

•

Algorithm contribution: We present an algorithm that solves the dependent function embedding problem optimally. We firstly find the optimal substructure of the problem. In each substructure, when the placement of each function is fixed, we derive the paths and the data size routes through each path optimally.

- •

The remainder of the paper is organized as follows. In Sec. II, we present the system model and formulate the problem. In Sec. III, we present the proposed algorithms. Performance guarantee and complexity analysis are provided in Sec. IV. The experiment results are demonstrated in Sec. V. In Sec. VI, we review related works on functions placement on the heterogenous edge. Sec. VII concludes this paper.

II System Model

Let us formulate the heterogenous edge as an undirected connected graph , where is the set of edge servers and is the set of links. Each edge server has a processing power , measured in flop/s while each link has the same uplink and downlink throughput , measured in bit/s.

II-A Application as a DAG

The IoT application with interdependent functions is modeled as a DAG. The DAG can have arbitrary shape, not just linear SFC. In addition, multi-entry multi-exit is allowed. We write for the DAG, where is the set of interdependent functions listed in topological order. , if the output stream of is the input of its downstream function , a directed link exists. is the set of all directed links. For each function , we write for the required number of floating point operations of it. For each directed link , the data stream size is denoted as (measured in bits).

II-B Dependent Function Embedding

We write for the chosen edge server which to be placed on, and for the set of paths from to . Obviously, for all path , it consists of links from without duplication. For a function pair and its associated directed link , the data stream can be splitted and route through different paths from . , let us use to represent the allocated data stream size for path . Then, , we have the following constraint:

| (1) |

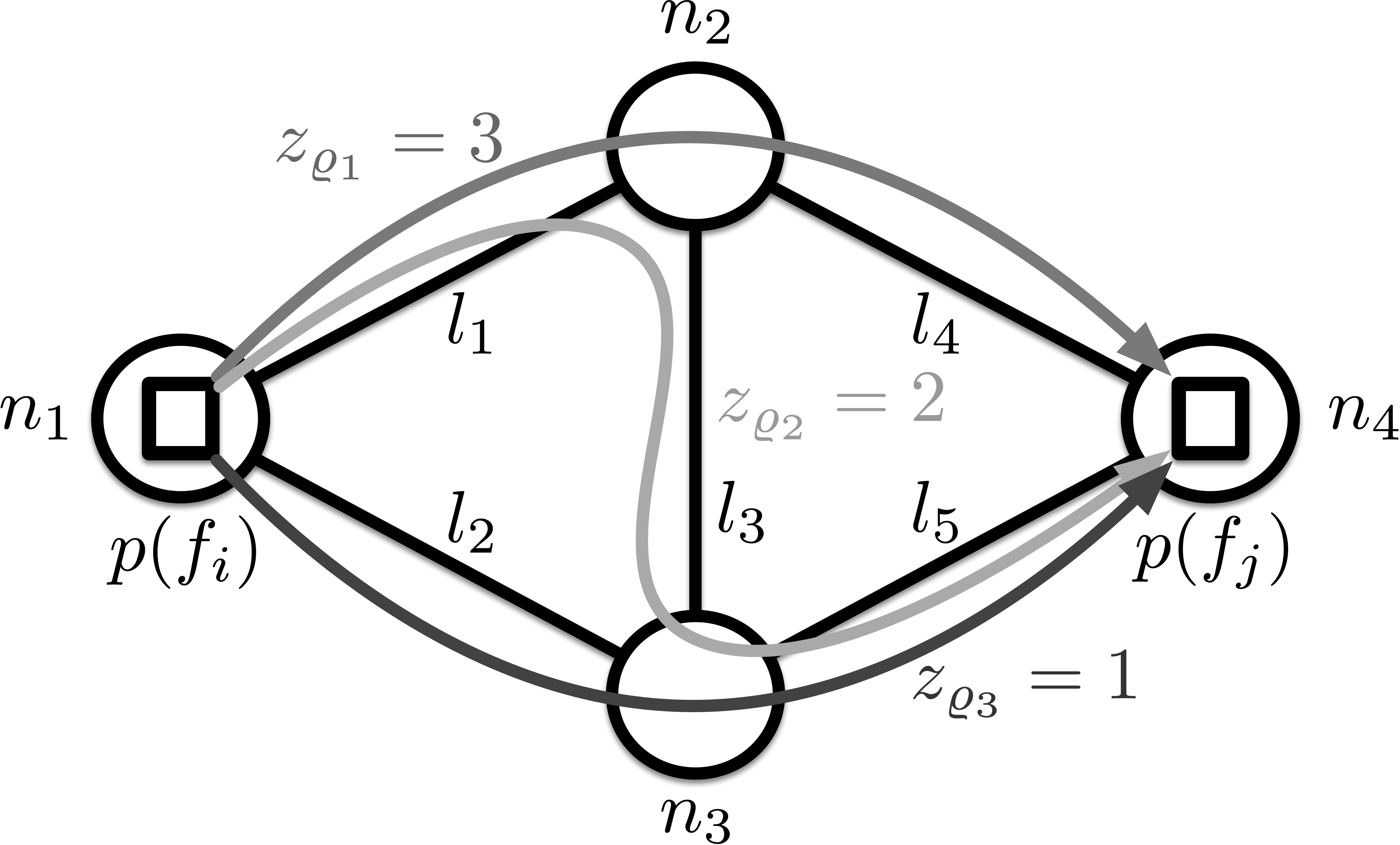

Notice that if , i.e., and are placed on the same edge server, then and the routing time is zero. Fig. 3 gives a working example. The connected graph in it has four edge servers and five links, from to . The two squares represents the source function and the destination function . From the edge server to the edge server , routes through three paths with data size of bits, bits, and bits, respectively. In this example, . On closer observation, we can find that two data streams route through . Each of them is from path and with bits and bits, respectively.

II-C Involution Function of Finish Time

Let us use to denote the finish time of on edge server . Considering that the functions of the DAG have interdependent relations, for each function pair where is defined, should involve according to

| (2) |

where is the routing time of the directed link and is the processing time of on edge server . Corresponding to (2), for each entry function ,

| (3) |

where stores the beginning time for processing . If is the first function which is scheduled on server , is zero. Otherwise, it is the finish time of the last function which is scheduled on server .

, is decided by the paths in . Specifically, when and are not placed on the same edge server,

| (4) |

where is the indicator function. (4) means that the routing time is decided by the slowest branch of data streams. Solution 2 in Fig. 2 is an example.

, is decided by the processing power of the chosen edge server :

| (5) |

To describe the earliest makespan of the DAG, inspired by [1], we add a dummy tail function . As a dummy function, the processing time of is set as zero whichever edge server it is placed on. That is,

| (6) |

Besides, each destination function needs to point to with a link weight of its output data stream size. We write for the set of destination functions and for the set of entry functions of the DAG. In addition, we write for the set of new added links which point to , i.e.,

| (7) |

As such, the DAG is updated as , where and .

II-D Problem Formulation

Our target is to minimize the makespan of the DAG by finding the optimal , , and for all , , and . Let us use to represent the earliest finish time of on edge server . Further, we use to represent the earliest makespan of the DAG when is placed on . Obviously, it is equal to . The dependent function embedding problem can be formulated as:

| (8) |

III Algorithm Design

III-A Finding Optimal Substructure

The set of function embedding problems are proved to be NP-hard [5]. As a special case of these problems, is NP-hard, too. because of the dependency relations of fore-and-aft functions, the optimal placement of functions and optimal mapping of data streams cannot be obtained simultaneously. Nevertheless, we can solve it by finding its optimal substructure.

Let us dig deeper into the involution equation (2). We can find that

| (9) |

because has no impact on . Notice that is replaced by . Then the following expression holds:

| (10) |

Besides, for all the entry functions , is calculated by (3) without change.

With (10), for each function pair where exists, we define the sub-problem :

In , is fixed. We need to decide where shoud be placed and how is mapped. Based on that, can be updated by . As a result, can be solved optimally by calculating the earliest finish time of each function in turn.

The analysis is captured in Algorithm 1, i.e. Dynamic Programming-based Embedding (DPE). Firstly, DPE finds all the simple paths (paths without loops) between any two edge servers and , i.e. (Step 2 Step 6). It will be used to calculate the optimal and . For all the functions who directly point to , DPE firstly fixs the placement of . Then, for the function pair , is solved and the optimal solution is stored in (Step 18 Step 19). If has been decided beforehand, can be directly obtained by finding and between and . The optimal transmission cost is stored in (Step 11 Step 14). The if statement holds for when is a predecessor of multiple functions. Step 12 is actually a sub-problem of with fixed. Notice that the calculation of the finish time of the entry functions and the other functions are different (Step 16 and Step 21, respectively). At last, the global minimal makespan of the DAG is . The optimal embedding of each function can be retrieved from .

III-B Optimal Proactive Stream Mapping

Now the problem lies in that how to find all the simple paths and solve optimally. For the former, we propose a Recursion-based Path Finding (RPF) algorithm, which will be detailed in Sec. III-B1. For the latter, When is fixed, the value of is known and can be viewed as a constant for . Besides, from Step 12 of DPE we can find that, for all , , is already updated in the last iteration. Thus, for solving , the difficulty lies in that how to select the optimal placement of and the optimal mapping of . It will be detailed in Sec. III-B2.

III-B1 Recursion-based Path Finding

We use to represent the set of simple paths from to where no path goes through nodes from the set . The set of simple paths from to we want, i.e. , is equal to . , let us use to represent the set of edge servers adjcent to . Then, can be calculated by the following recursion formula:

where . is a function that joins the node to the path and returns the new joint path . The analysis in this paragraph is summarized in Algorithm 2, i.e. Recursion-based Path Finding (RPF) algorithm.

Before calling RPF, we need to initialize the global variables. Specifically, stores all the simple paths, which is initialized as . , as the set of visited nodes, is initialized as . is allocated for the storage of current path, which is also initialized as . Whereafter, by calling , all the simple paths between and are stored in . is used to replace Step 4 of DPE.

In the following, we calculate the optimal value of for each simple path .

III-B2 Optimal Data Splitting

Since both and are fixed, and are constants. Therefore, solving is equal to solving the following problem:

| (13) | |||||

(13) is reconstructed from (1) and (8). To solve , we define a diagonal matrix

Obviously, all the diagonal elements of are positive real numbers. The variables that need to be determined can be written as . Thus, can be transformed into

| (16) |

is an infinity norm minimization problem. By introducing slack variables and , can be transformed into the following linear programming problem:

is feasible and its optimal objective value is finite. As a result, simplex method and dual simplex method can be applied to obtain the optimal solution efficiently.

However, simplex methods might be time-consuming when the scale of increases. In fact, we can find that the optimal objective value of is

| (18) |

if and only if

| (19) |

where is the -th component of vector and is the -th diagonal element of . Detailed proof of this result is provided in Sec. IV-A. Base on (19), we can infer that the optimal variable , which means that , . Therefore, the assumption in Sec. III-B1 is not violated and the optimal is equivalent to .

Up to now, when is fixed, we have calculate the optimal and for all paths . The analysis in this subsection are summarized in Algorithm 3, Optimal Stream Mapping (OSM) algorithm. In OSM, is the -th objective value of by taking . Similarly, is the -th set of simple paths obtained by RPF. For at most choices of , OSM calculates the optimal objective value (Step 1 Step 5). The procedure is executed in parallel (with different threads) because intercoupling is nonexistent. Then, OSM finds the best placement of and returns the corresponding , . OSM is used to replace Step 18 of DPE.

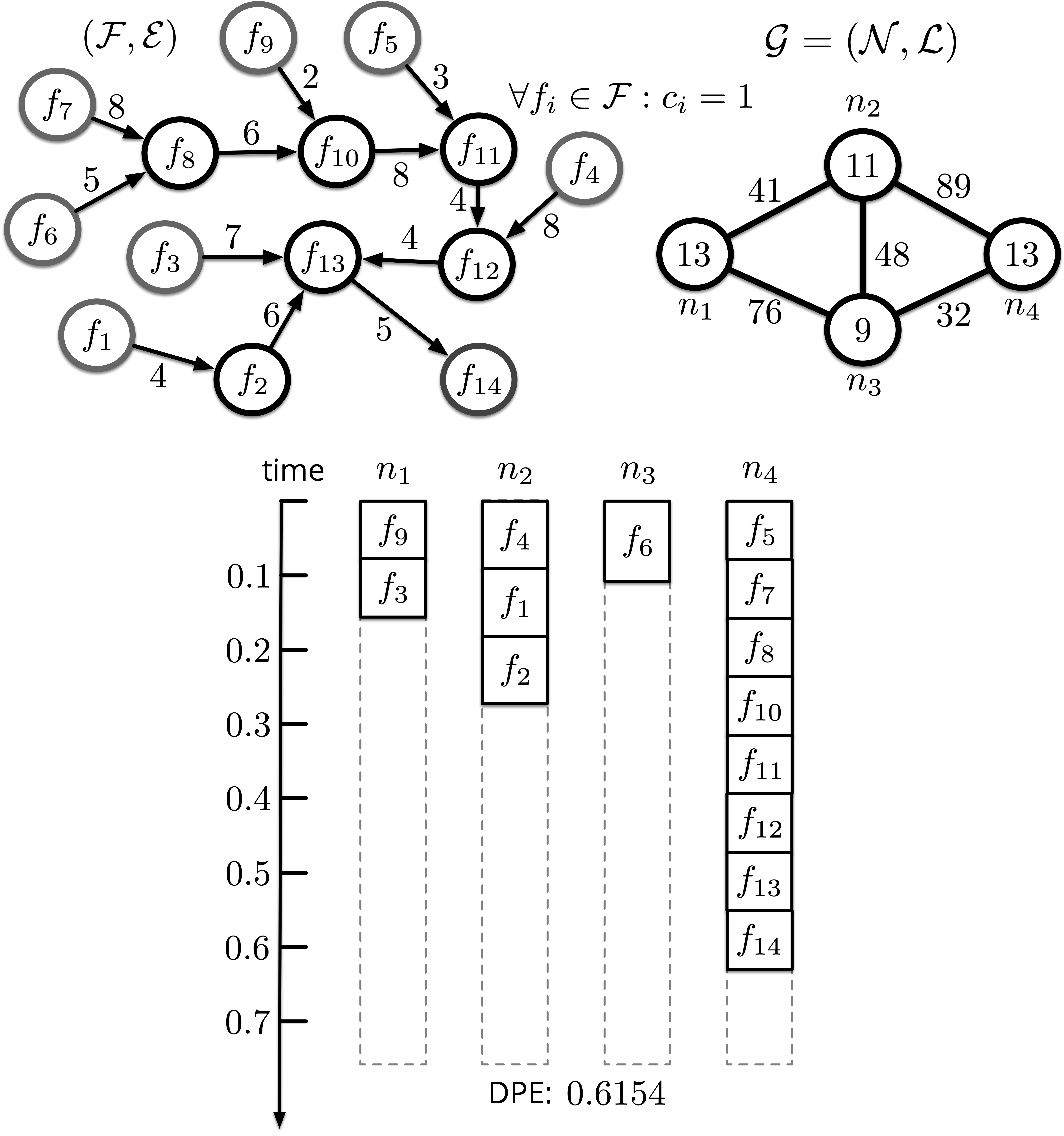

Fig. 4 demonstrates an example on how PDE works. The top left portion of the figure is a DAG randomly sampled from the Alibaba cluster trace, where all the functions are named in the manner of topological order. , is set as . The top right is the edge server cluster . The bottom demonstrates how the functions are placed and scheduled by DPE.

IV Theoretical Analysis

IV-A Performance Guarantee

In this subsection, we analyze the optimality of the proposed algorithm, DPE.

Theorem 1.

Optimality of DPE For a DAG with a given topological order, DPE can achieve the global optimality of by replacing the Step 4 of it with RPF and Step 18 of it with OSM.

Proof.

We firstly prove that OSM solves optimally. Recall that solving is equal to solving , and can be transformed into . For , we have

because . According to the AM–GM inequality, the following inequality always holds:

| (20) |

iff (19) is satisfied. It yields that ,

| (21) |

Multiply both sides of (21) by , we have

| (22) |

By taking the limit of (22), we have

| (23) |

Combining with (16) and (19), the right side of (23) is actually a constant. In other words,

The result shows that (18) and (19) are the optimal objective value and corresponding optimal condition of , respectively, if the topological ordering is given and regarded as an known variable. The theorem is immediate from the optimality of DP. ∎

IV-B Complexity Analysis

In this subsection, we analyze the complexity of the proposed algorithms in the worst case, where is fully connected.

IV-B1 Complexity of RPF

Let us use to denote the flops required to compute all the simple paths between and . If is fully connected,

To simplify notations, we use to replace . We can conclude that ,

| (24) |

Based on (24), we have

| (25) | |||||

which is the maximum flops required to compute all the simple paths between any two edge servers. Before calculating the complexity of RPF, we prove two lemmas.

Lemma 1.

and , .

Proof.

Lemma 2.

and , .

Proof.

We can verify that when , the lamma holds. In the following we prove the lamma holds for by induction. Assume that the lemma holds for , i.e., (induction hypothesis). Then, for , we have

| (28) |

By applying Lemma 1, we get

which means the lemma holds for . ∎

Based on these lemmas, we can obtain the complexity of RPF, as illustrated in the following theorem:

Theorem 2.

Complexity of RPF In worst case, where is a fully connected graph and , the complexity of OSM is .

Proof.

Finding all the simple paths between arbitrary two nodes is a NP-hard problem. To solve it, RPF is based on depth-first search. In real-world edge computing scenario for IoT stream processing, might not be fully connected. Even though, the number of edge servers is small. Thus, the real complexity is much lower.

IV-B2 Complexity of DPE

Notice that RPF is called by OSM times in parallel. It is easy to verify that the complexity of OSM is in worst case, too. OSM is designed to replaced the Step 6 of DPE. Thus, we have the following theorem:

Theorem 3.

Complexity of DPE In worst case, where is a fully connected graph and , the complexity of DPE is

Proof.

From Step 2 to Step 6, DPE calls RPF times. Thus, the complexity of this part (Step 2 Step 6) is according to Theorem 2. The average number of pre-order functions for each non-source function is . As a result, in average, OSM is called times. In OSM, the step required the most flops is Step 8. If the variable substitution method is adopted, the flops required of this step is . Thus, the complexity of Step 7 Step 19 of DPE is . The theorem is immediate by combining the two parts. ∎

Although is of great order of complexity, is not too large in real-world edgy scenario. Even if it is not, as an offline algorithm, it is worth the sacrifice of runtime overhead in pursuit of global optimality.

V Experimental Validation

V-A Experiment Setup

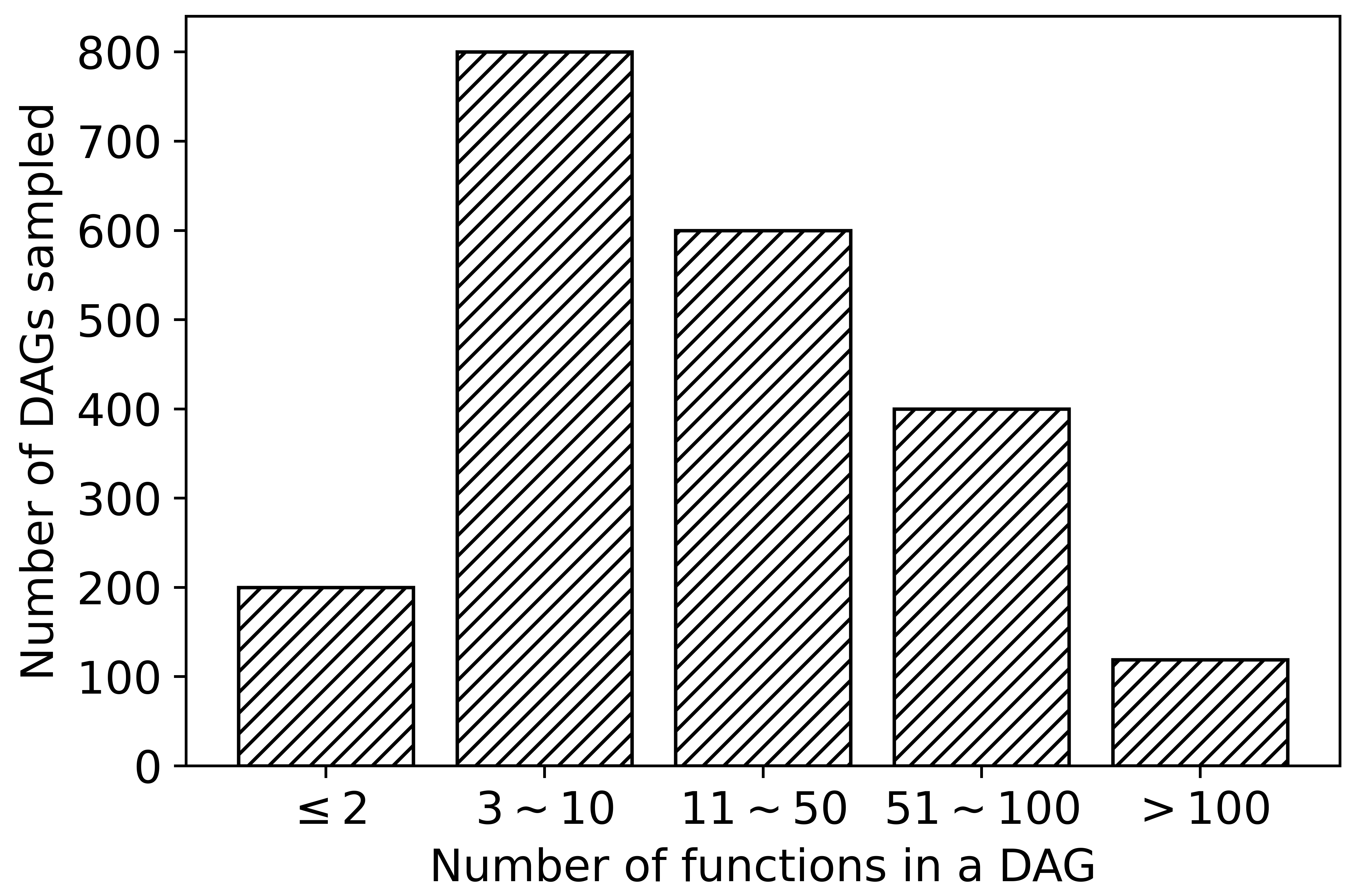

IoT stream processing workloads. The simulation is conducted based on Alibaba’s cluster trace of data analysis. This dataset contains more than 3 million jobs (called applications in this work), and 20365 jobs with unique DAG information. Considering that there are too many DAGs with only single-digit functions, we sampled 2119 DAGs with different size from the dataset. The distribution of the samples is visualized in Fig. 5. For each , the processing power required and output data size are extracted from the corresponding job in the dataset and scaled to giga flop and kbits, respectively.

Heterogenous edge servers. In our simulation, the number of edge servers is in default. Considering that the edge servers are required to formulate a connected graph, the impact of the sparsity of the graph is also studied. The processing power of edge servers and the maximum throughput of physical links are uniformly sampled from giga flop and kbit/s in default, respectively.

Algorithms compared. We compare DPE with the following algorithms.

-

•

FixDoc [1]: FixDoc is a function placement and DAG scheduling algorithm with fixed function configuration. FixDoc places each function onto homogeneous edge servers optimally to minimize the DAG completion time. Actually, [1] also proposes an improved version, GenDoc, with function configuration optimized, too. However, for IoT streaming processing scenario, on-demand function configuration is not applicative. Thus, we only compare DPE with FixDoc.

-

•

Heterogeneous Earliest-Finish-Time (HEFT) [8]: HEFT is a heuristic to schedule a set of dependent functions onto heterogenous workers with communication time taken into account. Starting with the highest priority, functions are assigned to different workers to heuristically minimize the overall completion time. HEFT is an algorithm that stands the test of time.

V-B Experiment Results

All the experiments are implemented in Python 3.7 on macOS Catalina equipped with 3.1 GHz Quad-Core Intel Core i7 and 16 GB RAM.

V-B1 Theoretical Performance Verification

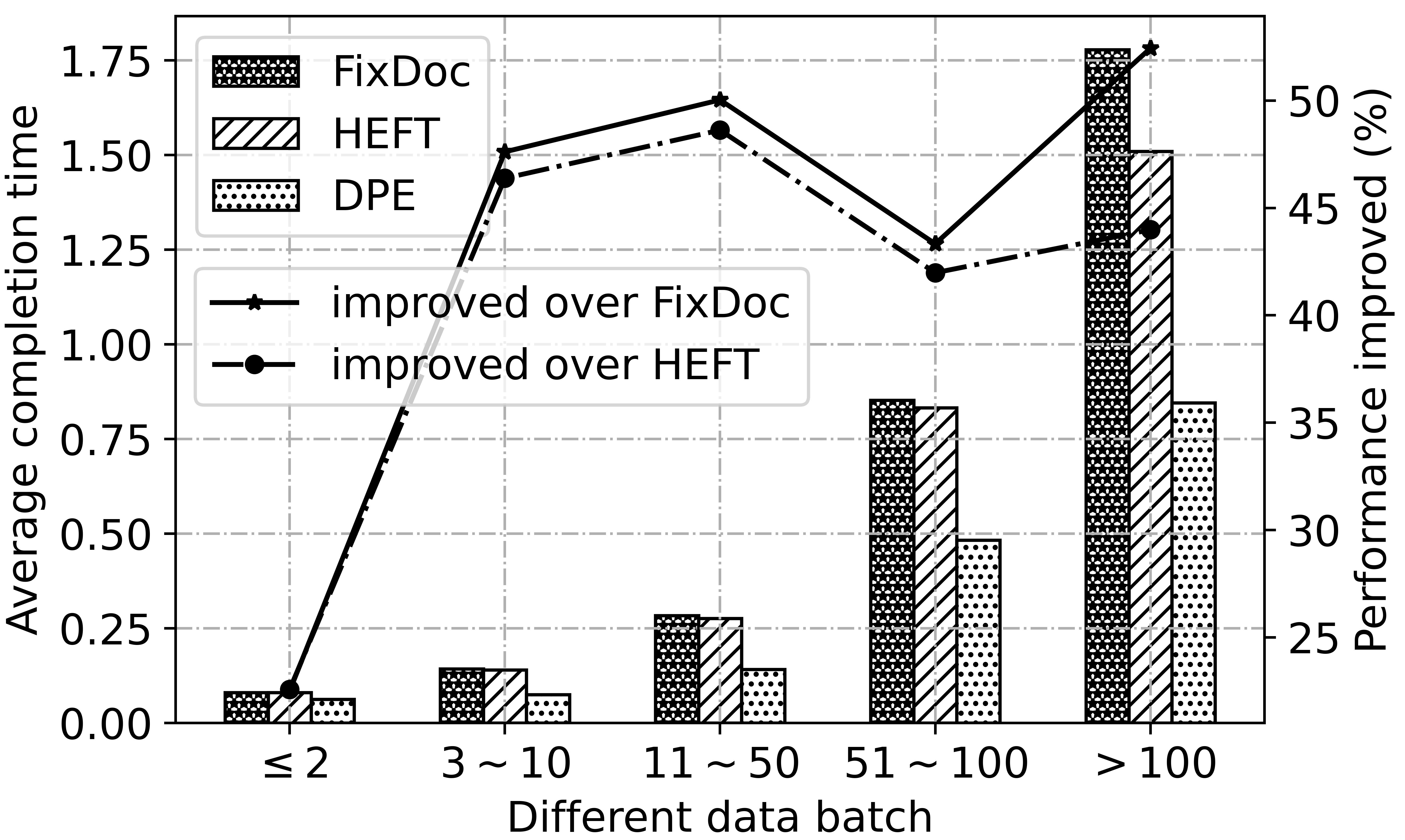

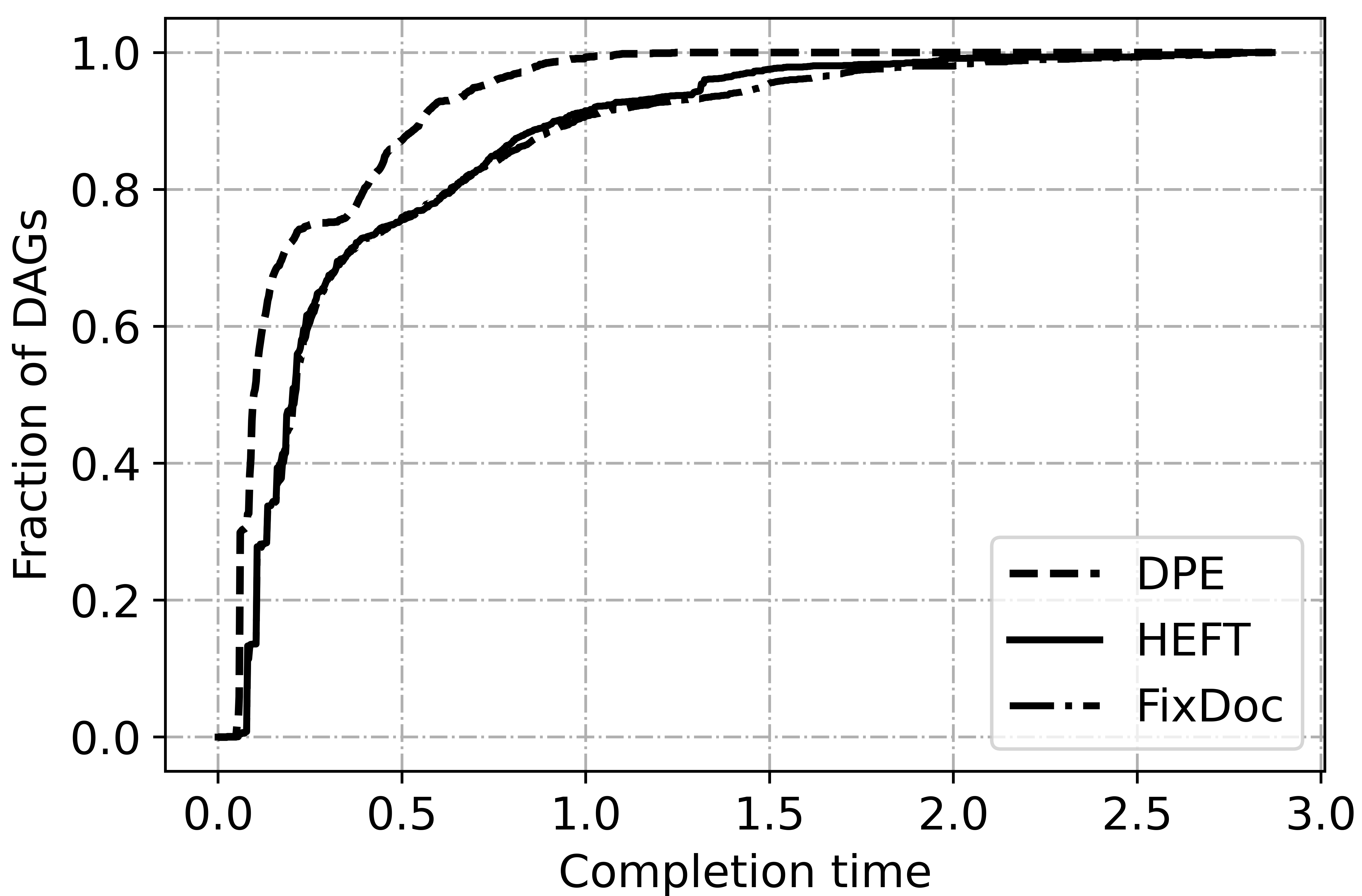

Fig. 6 illustrates the overall performance of the three algorithms. For different data batch, DPE can reduce and of the completion time on average over FixDoc and HEFT on 2119 DAGs. The advantage of DPE is more obvious when the scale of DAG is large because the parallelism is fully guaranteed. Fig. 7 shows the accumulative distribution of 2119 DAGs’ completion time. DPE is superior to HEFT and FixDoc on of the DAGs. Specifically, the maximum completion of DAG achieved by DPE is s. By contrast, only less of DAGs’ completion time achieved by HEFT and FixDoc can make it. The results verify the optimality of DPE.

Fig. 6 and Fig. 7 verify the superiority of proactive stream mapping and data splitting. By spreading data streams over multiple links, transmission time is greatly reduced. Besides, the optimal substructure makes sure DPE can find the optimal placement of each function simultaneously.

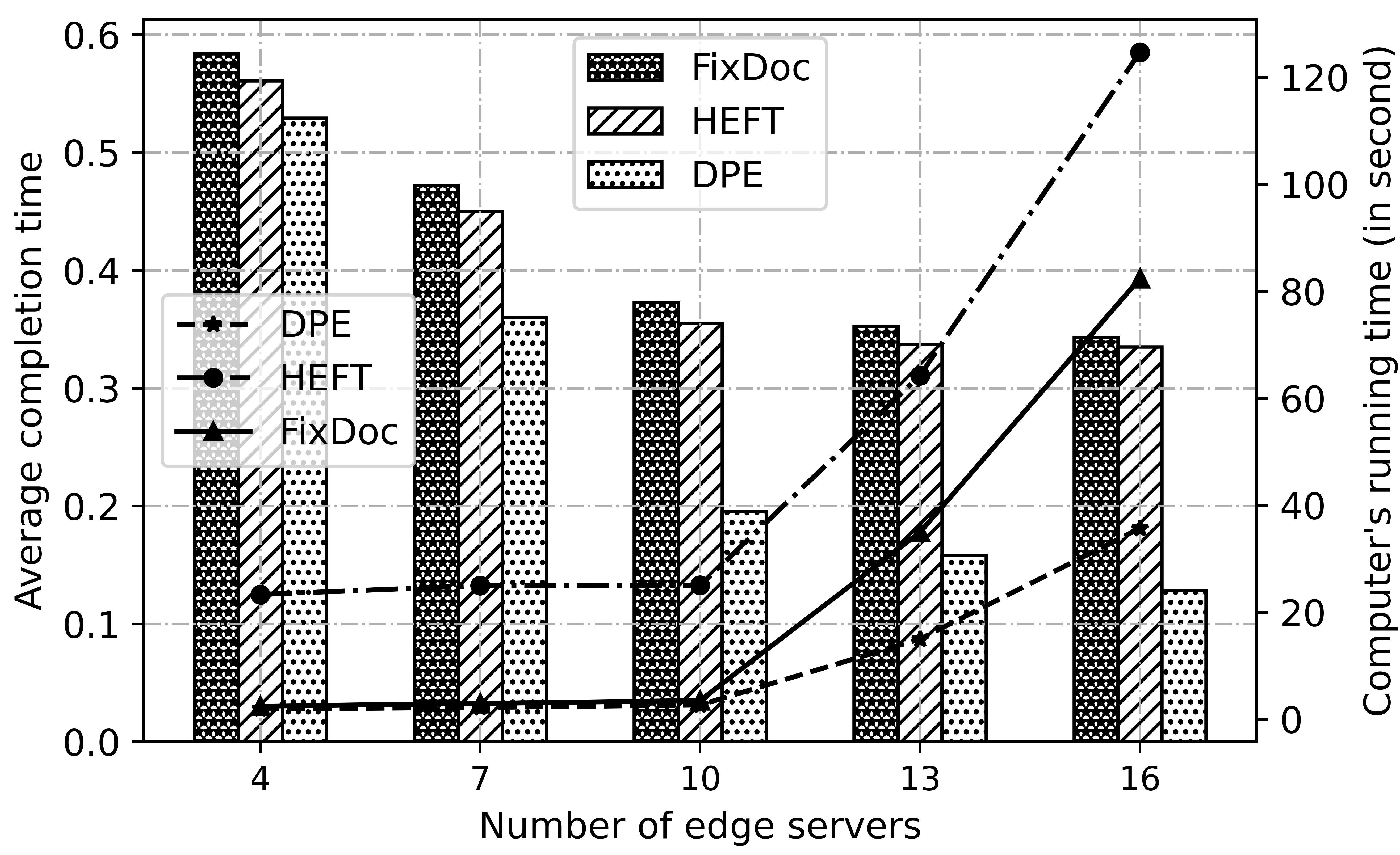

V-B2 Scalability Analysis

Fig. 8 and Fig. 9 shows the impact of the scale of the heterogenous edge . In Fig. 8, we can find that the average completion time achieved by all algorithms decreases as the edge server increases. The result is obvious because more idle servers are avaliable, which ensures that more functions can be executed in parallel without waiting. For all data batches, DPE achieves the best result. It is interesting to find that the gap between other algorithms and DPE get widened when the scale of increases. This is because the avaliable simple paths become more and the data transmission time is reduced even further. Fig. 8 also demonstrates the run time of different algorithms. The results show that DPE has the minimum time overhead.

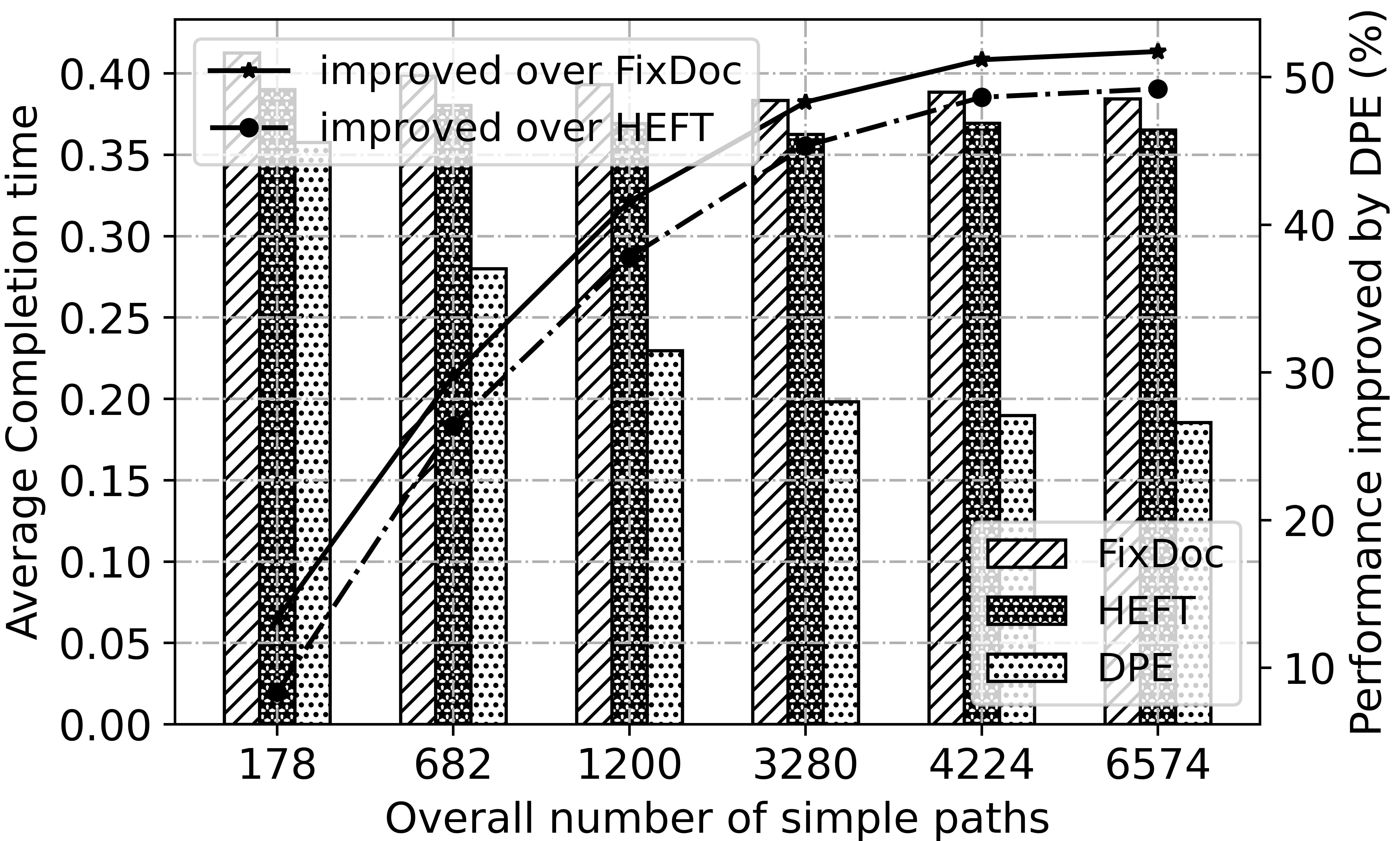

Fig. 9 show the impact of sparsity of . The horizonal axis is the overall number of simple paths . As it increases, becomes more dense. because DPE can reduce transmission time with optimal data splitting and mapping, average completion time achieved by it decreases pretty evident. By contrast, FixDoc and HEFT have no obvious change.

V-B3 Sensitivity Analysis

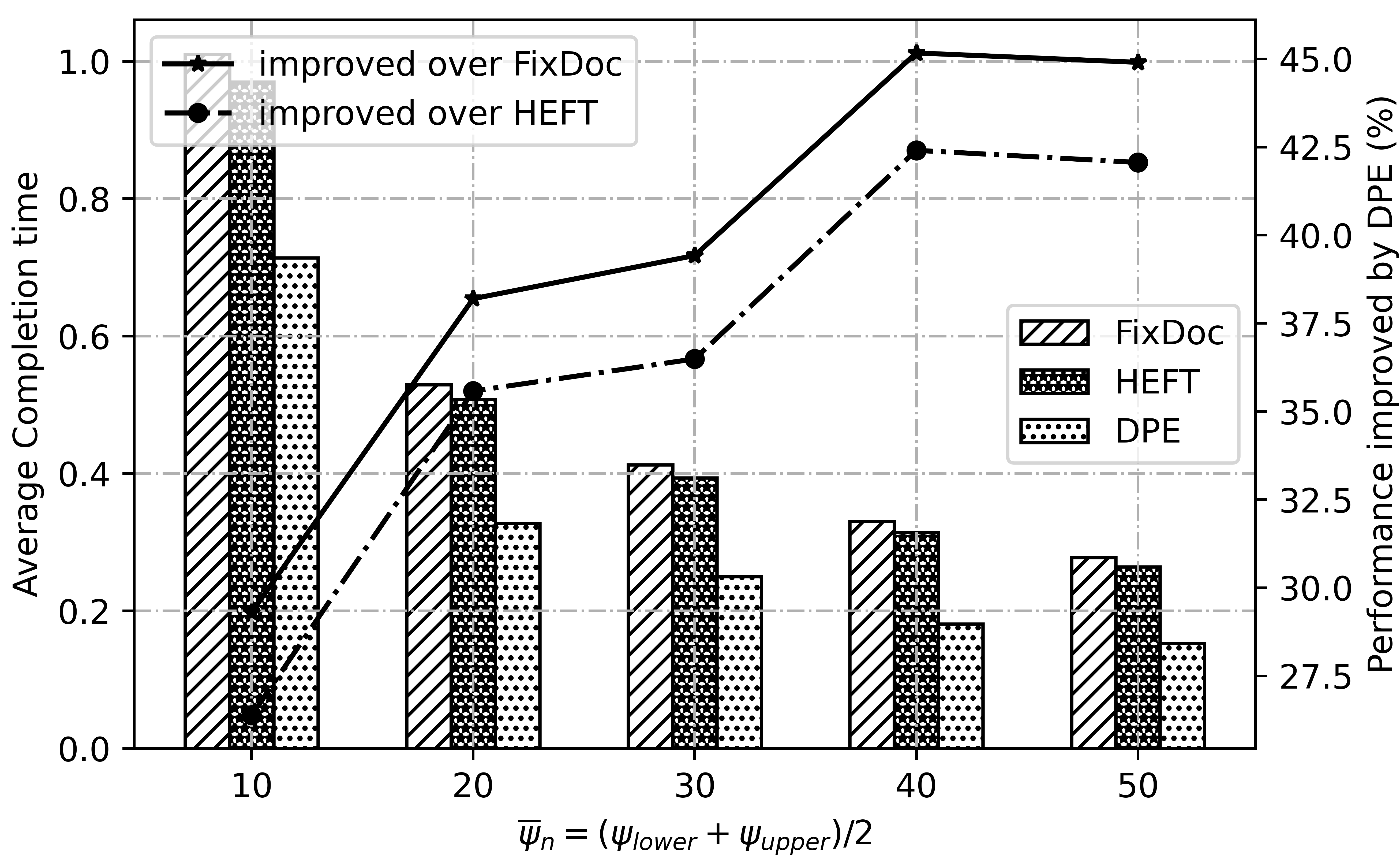

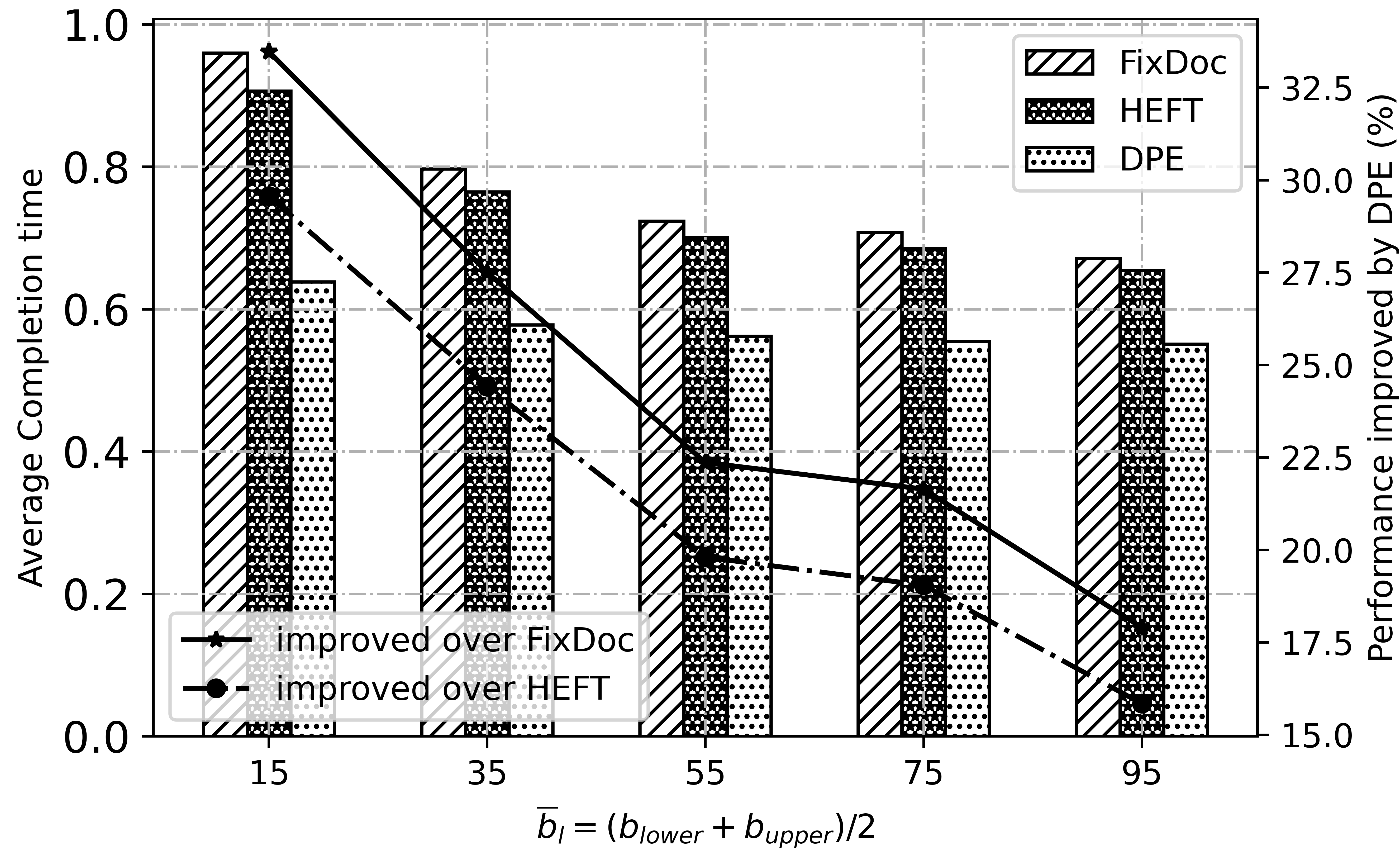

Fig. 10 and Fig. 11 demonstrate the impact of system parameters, and . Notice that , and are sampled from the interval and uniformly, respectively. When the processing power and throughput increase, the computation and transmission time achieved by all algorithms are reduced. Even so, DPE outperforms all the other algorithms, which verifies the robustness of DPE adequately.

VI Related Works

In this section, we review related works on function placement and DAG scheduling at the network edge.

Studying the optimal function placement is not new. Since cloud computing paradigm became popular, it has been extensively studied in the literature [9][10]. When bringing function placement into the paradigm of edge computing, especially for the IoT stream processing, different constraints, such as the response time requirement of latency-critical applications, availability of function instances on the heterogenous edge servers, and the wireless and wired network throughput, etc., should be taken into consideration. In edge computing, the optimal function placement strategy can be used to maximize the network utility [11], minimize the inter-node traffic [12], minimize the makespan of the applications [1, 2, 3], or even minimize the budget of application service providers [13].

The application is either modeled as an individual blackbox or a DAG with complicated composite patterns. Considering that the IoT stream processing applications at the edge usually have interdependent correlations between the fore-and-aft functions, dependent function placement problem has a strong correlation with DAG dispatching and scheduling. Scheduling algorithms for edgy computation tasks have been extensively studied in recent years [8][14, 15, 16]. In edge computing, the joint optimization of DAG scheduling and function placement is usually NP-hard. As a result, many works can only achieve a near optimal solution based on heuristic or greedy policy. For example, Gedeon et al. proposed a heuristic-based solution for function placement across a three-tier edge-fog-cloud heterogenous infrastructure [17]. Cat et al. proposed a greedy algorithm for function placement by estimating the response time of paths in a DAG with queue theory [18]. Although FixDoc [1] can achieve the global optimal function placement, the completion time can be reduced further by optimizing the stream mapping.

VII Conclusion

This paper studies the optimal dependent function embedding problem. We first point out that proactive stream mapping and data splitting could have a strong impact on the makespan of DAGs with several use cases. Based on these observations, we design the DPE algorithm, which is theoretically verified to achieve the global optimality for an arbitrary DAG when the topological order of functions is given. DPE calls the RPF and the OSM algorithm to obtain the candidate paths and optimal stream mapping, respectively. Extensive simulations based on the Alibaba cluster trace dataset verify that our algorithms can reduce the makespan significantly compared with state-of-the-art function placement and scheduling methods, i.e., HEFT and FixDoc. The makespan can be further decreased by finding the optimal topological ordering and scheduling multiple DAGs simultaneously. We leave these extensions to our future work.

References

- [1] L. Liu, H. Tan, S. H.-C. Jiang, Z. Han, X.-Y. Li, and H. Huang, “Dependent task placement and scheduling with function configuration in edge computing,” in Proceedings of the International Symposium on Quality of Service, ser. IWQoS ’19, New York, NY, USA, 2019.

- [2] S. Khare, H. Sun, J. Gascon-Samson, K. Zhang, A. Gokhale, Y. Barve, A. Bhattacharjee, and X. Koutsoukos, “Linearize, predict and place: Minimizing the makespan for edge-based stream processing of directed acyclic graphs,” in Proceedings of the 4th ACM/IEEE Symposium on Edge Computing, ser. SEC ’19, New York, NY, USA, 2019, p. 1–14.

- [3] Z. Zhou, Q. Wu, and X. Chen, “Online orchestration of cross-edge service function chaining for cost-efficient edge computing,” IEEE Journal on Selected Areas in Communications, vol. 37, no. 8, pp. 1866–1880, 2019.

- [4] F. Duchene, D. Lebrun, and O. Bonaventure, “Srv6pipes: Enabling in-network bytestream functions,” Computer Communications, vol. 145, pp. 223 – 233, 2019.

- [5] S. Vassilaras, L. Gkatzikis, N. Liakopoulos, I. N. Stiakogiannakis, M. Qi, L. Shi, L. Liu, M. Debbah, and G. S. Paschos, “The algorithmic aspects of network slicing,” IEEE Communications Magazine, vol. 55, no. 8, pp. 112–119, Aug 2017.

- [6] I. Afolabi, T. Taleb, K. Samdanis, A. Ksentini, and H. Flinck, “Network slicing and softwarization: A survey on principles, enabling technologies, and solutions,” IEEE Communications Surveys Tutorials, vol. 20, no. 3, pp. 2429–2453, thirdquarter 2018.

- [7] “Alibaba cluster trace program,” https://github.com/alibaba/clusterdata.

- [8] H. Topcuoglu, S. Hariri, and Min-You Wu, “Performance-effective and low-complexity task scheduling for heterogeneous computing,” IEEE Transactions on Parallel and Distributed Systems, vol. 13, no. 3, pp. 260–274, 2002.

- [9] G. T. Lakshmanan, Y. Li, and R. Strom, “Placement strategies for internet-scale data stream systems,” IEEE Internet Computing, vol. 12, no. 6, pp. 50–60, 2008.

- [10] L. Tom and V. R. Bindu, “Task scheduling algorithms in cloud computing: A survey,” in Inventive Computation Technologies, S. Smys, R. Bestak, and Á. Rocha, Eds. Cham: Springer International Publishing, 2020, pp. 342–350.

- [11] M. Leconte, G. S. Paschos, P. Mertikopoulos, and U. C. Kozat, “A resource allocation framework for network slicing,” in IEEE INFOCOM 2018 - IEEE Conference on Computer Communications, 2018, pp. 2177–2185.

- [12] J. Xu, Z. Chen, J. Tang, and S. Su, “T-storm: Traffic-aware online scheduling in storm,” in 2014 IEEE 34th International Conference on Distributed Computing Systems, 2014, pp. 535–544.

- [13] L. Chen, J. Xu, S. Ren, and P. Zhou, “Spatio–temporal edge service placement: A bandit learning approach,” IEEE Transactions on Wireless Communications, vol. 17, no. 12, pp. 8388–8401, 2018.

- [14] Y. Kao, B. Krishnamachari, M. Ra, and F. Bai, “Hermes: Latency optimal task assignment for resource-constrained mobile computing,” IEEE Transactions on Mobile Computing, vol. 16, no. 11, pp. 3056–3069, 2017.

- [15] S. Sundar and B. Liang, “Offloading dependent tasks with communication delay and deadline constraint,” in IEEE INFOCOM 2018 - IEEE Conference on Computer Communications, 2018, pp. 37–45.

- [16] J. Meng, H. Tan, C. Xu, W. Cao, L. Liu, and B. Li, “Dedas: Online task dispatching and scheduling with bandwidth constraint in edge computing,” in IEEE INFOCOM 2019 - IEEE Conference on Computer Communications, 2019, pp. 2287–2295.

- [17] J. Gedeon, M. Stein, L. Wang, and M. Muehlhaeuser, “On scalable in-network operator placement for edge computing,” in 2018 27th International Conference on Computer Communication and Networks (ICCCN), 2018, pp. 1–9.

- [18] X. Cai, H. Kuang, H. Hu, W. Song, and J. Lü, “Response time aware operator placement for complex event processing in edge computing,” in Service-Oriented Computing, C. Pahl, M. Vukovic, J. Yin, and Q. Yu, Eds. Cham: Springer International Publishing, 2018, pp. 264–278.