PMSSC: Parallelizable multi-subset based self-expressive model for subspace clustering

Abstract

Subspace clustering methods which embrace a self-expressive model that represents each data point as a linear combination of other data points in the dataset provide powerful unsupervised learning techniques. However, when dealing with large datasets, representation of each data point by referring to all data points via a dictionary suffers from high computational complexity. To alleviate this issue, we introduce a parallelizable multi-subset based self-expressive model (PMS) which represents each data point by combining multiple subsets, with each consisting of only a small proportion of the samples. The adoption of PMS in subspace clustering (PMSSC) leads to computational advantages because the optimization problems decomposed over each subset are small, and can be solved efficiently in parallel. Furthermore, PMSSC is able to combine multiple self-expressive coefficient vectors obtained from subsets, which contributes to an improvement in self-expressiveness. Extensive experiments on synthetic and real-world datasets show the efficiency and effectiveness of our approach in comparison to other methods.

I Introduction

In many real-world cases, approximating high-dimensional data as a union of low-dimensional subspaces is a beneficial technique for reducing computational complexity and the effects of noise. The task of subspace clustering [1, 2], which is the segmentation of a set of data points into those lying on certain subspaces, has been studied in many practical applications such as face clustering [3], image segmentation [4], motion segmentation [5], scene segmentation [6], and homography detection [7]. Recently, self-expressive models [8, 9] have been explored, which embrace the self-expressive property of subspaces to compute an affinity matrix. The self-expressive property states that each data point from a union of subspaces can be represented as a linear combination of other points. Specifically, given a data matrix in which each data point is a column, the self-expressive model of data point can be described as

| (1) |

where is a coefficient vector, and the constraint avoids the trivial solution of representing a point as a linear combination of itself. The feasible solutions of Eq. (1) are generally not unique because the number of data points lying on a subspace is larger than its dimensionality. However, at least one exists where is nonzero only if data points are in the same subspace, and such a state is called subspace-preserving [10]. Previous works have tried to compute subspace-preserving representations by imposing a regularization term on the coefficients . In particular, one algorithm for obtaining a sparse solution to Eq. (1), sparse subspace clustering (SSC) [8, 9], can recover subspaces under mild conditions by regularizing the coefficient matrix corresponding to the coefficient vector of each data point . SSC not only achieves high clustering accuracy for datasets with outliers and missing entries, but also has the useful properties of giving theoretical guarantees and providing modeling flexibility, which have influenced many other approaches such as [11, 12]. However, SSC suffers from high computational and memory costs when dealing with a large-scale dataset because of the need to determine the coefficients of . In light of these problems, there has been much interest in recent years in developing scalable subspace clustering algorithms that can be applied to large-scale datasets, taking advantage of the ease of analyzing computational complexity due to the simplicity of the model.

Several works have attempted to address the problem of computational cost for large-scale datasets using a sampling strategy, motivated by the sparsity assumption that each data point can be represented as a linear combination of a few basis vectors. The self-expressive property with a few sampled data points and classifying of the other data points was proposed in [13]. While this strategy can produce clustering results more efficiently for a large-scale dataset than directly applying SSC to all data, it leads to poor clustering performance when the sampled data is not representative of the original dataset. Although a learning-based sampling method has also been proposed for generating a coefficient matrix that is representative of the original dataset [14], the accuracy and computational complexity still depend largely on the size of the subsets, as these methods attempt to solve for a self-expressive model in a single subset. Also, no effort has been made to explicitly improve the self-expressiveness of the self-expressive coefficient vectors in these methods.

To further improve self-expressiveness without increasing the computational burden, in this paper, we propose a self-expressive model adopting multiple subsets, which is computable in parallel. Specifically, our model obtains a self-expressive coefficient matrix by combining multiple subsets; each subset consists of only a small proportion of the samples. This strategy not only enjoys the benefit of low computational cost like other single subset-based methods, but also is more effectively subspace-preserving because the representation of the original data is a linear combination of multiple self-expressive coefficient vectors.

Our contributions are highlighted as follows:

-

•

a novel clustering approach that exploits a self-expressive model based on multiple subsets,

-

•

a concisely formulated model,

-

•

each subset can be computed independently in parallel without additional computational overhead,

-

•

extensive experiments on both synthetic data and real-world datasets showing that our proposed method can achieve better results without increasing processing time.

II Related Work

II-A Background

In the past few years, there has been a surge of spectral clustering-based algorithms that segment a set of data points by performing spectral clustering. Previous classical methods, such as -subspaces [15] and median -flats [16], assume that the dimensionalities of the underlying subspaces are given in advance. This latent knowledge is generally hard to access in many real-world applications. In addition, these methods are usually non-convex and thus sensitive to initialization [17, 18]. Aiming to relax the limitations of the -subspace algorithm, the majority of modern subspace clustering methods explored have turned to spectral clustering [19, 20], which segment data using an affinity matrix that captures whether a certain pair of data points lie on the same subspace. While many early methods [21, 22, 12, 23] achieve better segmentation than classical clustering algorithms even without the latent knowledge, these methods produce erroneous segmentation results for data points near the intersection of two subspaces due to the dense sampling of points lying on the subspace [24]. We now introduce previous subspace clustering approaches based on spectral clustering, then describe various techniques of scalable subspace clustering methods for dealing with large-scale datasets, which are closer to our proposed method.

II-B Subspace Clustering Using Spectral Clustering

Most subspace clustering approaches based on spectral clustering consist of two phases: (i) computing an affinity matrix based on the nonzero coefficients that appear in the representation of each data point as a combination of other points, and (ii) segmenting data points from the computed affinity matrix by applying spectral clustering. The key to the success of segmentation is the phase of computing the affinity matrix. Therefore, many methods have been proposed to compute the affinity matrix. For example, local subspace affinity [24] and spectral curvature clustering (SCC) [25] find neighborhoods based on the observation that a point and its -nearest neighbors often lie on the same subspace. However, the computational complexity of finding multi-way similarity in these methods grows exponentially with the number of subspace dimensions, motivating the use of a sampling strategy to lower the computational complexity [9]. Recently, the self-expressive model, which employs the self-expressive property in Eq. (1), has become the most popular one. In particular, SSC takes advantage of sparsity [26] by adopting norm regularization of the coefficient vector to achieve high clustering performance. This idea has motivated many methods, using the norm in least squares regression [27], the nuclear norm in low rank representation (LRR) [28], the plus norm in elastic net subspace clustering (EnSC) [29], and the Frobenius norm in efficient dense subspace clustering [30]. In practice, however, solving the norm minimization problem for large-scale data may be prohibitive. Also, the memory required becomes larger as the amount of data increases.

II-C Scalable Subspace Clustering

When constructing the affinity matrix, several methods based on spectral clustering suffer from high computational complexity. To reduce the computational complexity of this phase, a sparse self-expressive model adopting a greedy algorithm was proposed in [31, 10]. However, these approaches lead to unsatisfactory clustering results if the nonzero elements do not contain sufficient connections within each optimized coefficient vector [32]. Other popular approaches to alleviate the computational and memory loads were inspired by a sampling strategy. In [13], scalable sparse subspace clustering (SSSC) is computationally efficient, using a subset generated by random sampling. However, because the random sampling method relies on a single subset, data points from the same subspace will not be represented by the self-expressive model if they are not appropriately sampled. Exemplar-based subspace clustering [33, 12] is an efficient sampling technique that iteratively selects the least well-represented point as a subset to address the problem. Selective sampling-based scalable sparse subspace clustering (S5C) [14], which generates a subset by selective sampling, provides approximation guarantees of the subspace-preserving property. In [34], the subspace-preserving representations are found by solving a consensus problem with multiple subsets to improve the connectivity of the affinity matrix. In [35], a divided-and-conquer framework using multiple subsets obtained by separating the entire dataset is proposed. While this approach can deal with large-scale data, final segmentation results depend on the self-expressive properties of the optimized self-expressive coefficient vectors of each subset. Our method differs significantly from [35] and [34] in that our proposed self-representation model is designed to minimize the difference from the original data points by combining the self-expressive property of multiple subsets. Lastly, in this paper, we limit our discussion to non-deep learning approaches, which are more mathematically straightforward to explain and rely less on parameter tuning.

III Parallelizable Multi-Subset Based Sparse Subspace Clustering

III-A Problem and Approach

As a problem definition, our final goal is to find the self-expressive coefficient vector , which satisfies the subspace-preserving representation in Eq. (1). That is, the self-expressive residual can be obtained by solving the following optimization problem,

| (2) |

where is the pseudo-norm that returns the number of nonzero entries in the vector. This optimization problem has been shown [36, 37] to recover provably subspace-preserving solutions using the orthogonal matching pursuit (OMP) algorithm [38]. is a tuning parameter for the OMP algorithm, which controls the sparsity of the solution by selecting up to entries in the coefficient vector . Although the OMP algorithm is computationally efficient and is guaranteed to give subspace-preserving solutions under mild conditions, it is unable to produce a subspace-preserving solution with a number of nonzero entries exceeding the dimensionality of the subspace [10]. This leads to poor clustering performance with too sparse affinity between data points, especially when the density of data points lying on the subspace is low.

We propose a novel subspace clustering algorithm with a parallelizable multi-subset based self-expressive model, as illustrated in Fig. 1. Sec. III-B introduces our proposed self-expressive model that extends the model in Eq. (2) to multiple subsets via a sampling technique. Sec. III-C then explains the solution of our self-expressive model by the OMP algorithm. Finally, we summarize the proposed subspace clustering algorithm in Algorithm 4.

III-B Parallelizable Multi-Subset based Self-Expressive Model

To deal with large-scale data, we first generate index subsets from the whole dataset by weighted random sampling [39] as follows:

| (3) |

where is the index set of the -th subset that is sampled with probability proportional to the elements of the weight vector , is indices , is the sampling rate, and is the cardinality function that is a measure of the number of elements. The -th selected element of is updated as . Then, in each sampled -th subset, the optimization problem in Eq. (2) can be expressed as follows:

| (4) |

where is the data matrix of the randomly sampled -th subset. is the self-expressive coefficient vector for each data point in the -th subset. Note that to ensure the dimensionality of is , the columns of each data matrix corresponding to the non-sampled indices are replaced by zero-vectors: . From each optimized coefficient vector , each data point can be represented by a self-expressive model, given by:

| (5) |

where is the data point computed by the self-expressive model from the -th subset. In practice, however, the data point in Eq. (5) generally has an error term , i.e., , because of the limitations of using as a dictionary for reconstruction. To minimize , we first represent as a linear combination of , as follows:

| (6) |

where is the weight coefficient vector to represent , and is the -th entry of . The coefficient vector of the linear combination in Eq. (6) can be obtained by solving the following optimization problem,

| (7) |

For simplicity, we introduce a data matrix with each data point from Eq. (5) as columns, and rewrite Eq. (7) as

| (8) |

This is the formulation of the optimization problem for subspace clustering in Eq. (2), and can be further described as:

| (9) |

Unlike in Eq. (1), here is the data matrix computed from each subset to represent . Thus, no constraint is required to avoid the trivial solution of representing a point as a linear combination of itself. To explicitly express Eq. (2), the self-expressive coefficient vector corresponding to is obtained by

| (10) |

It is worth noting that each can be determined independently from each subset, so can be computed in parallel for speed.

III-C Optimization with Orthogonal Matching Pursuit

In this section, we show that the parameters of the proposed PMS model can be determined by dividing the optimization problem into two small optimization problems as summarized in Algorithm 1. Overall, Eq. (10) can be determined by solving the minimization problems in Eqs. (4) and (8). We introduce both of the minimization procedures below. Specifically, initially, subset data matrices are generated based on the sampling set in Eq. (3).

To efficiently solve for in Eq. (4), we introduce Algorithm 2 based on the OMP algorithm. The support set and the residual are initialized to and , respectively. denotes the index set, which is updated on each iteration by adding one index . is computed using

| (11) |

Then, using the updated , the self-expressive coefficient vector is found by solving the following problem:

| (12) |

where is the support function that returns the subgroup of the domain containing the elements not mapped to zero. is updated using:

| (13) |

This process is repeated until the number of iterations reaches its limit or is smaller than the error .

To find , Eq. (8) can also be solved via the OMP algorithm, as shown in Algorithm 3. The input data matrix is generated by Eq. (5) from . Note that the size of depends on the number of subsets and is much smaller than the number of data points. The maximum number of repetitions is , and is updated by finding the index satisying

| (14) |

In addition, the weight coefficient vector and update of are determined by solving

| (15) |

| (16) |

For clarity, we summarize the entire framework of our proposed subspace clustering approach in Algorithm 4, calling it the parallelizable multi-subset based sparse subspace clustering (PMSSC) method. Given and parameters , , , and , the optimal solution can be found using Algorithm 1. We thus define the affinity matrix as using the computed ; the final clustering results can be obtained by applying spectral clustering to via normalized cut [19].

IV Experiments and Results

We have evaluated our approach using both synthetic data and real-world benchmark datasets.

IV-A Baselines and Evaluation Metrics.

We compare our approach to the following eight methods: SCC [25], LRR [28], thresholding-based subspace clustering (TSC) [40], low rank subspace clustering (LRSC) [41], SSSC [13], EnSC [29], SSC-OMP [10], and S5C [14]. Tests for all comparative methods used provided source code, and each parameter was carefully tuned to give the best clustering accuracy. For spectral clustering, except for SCC, S5C, and SSSC, we applied normalized cut [19] to the affinity matrix . (SCC and S5C have their own spectral clustering phase, while SSSC obtains clustering results from the data split into two parts). Unlike SSC-OMP, our method, which involves independent calculation for each subset, can be implemented in parallel with multi-core processing. All algorithms ran on an AMD Ryzen 7 3700x processor with 32 GB RAM. Following [10], as quantitative evaluation metrics, we evaluated each algorithm using clustering accuracy (acc: ), subspace-preserving representation error (sre: ), connectivity (conn: ), and runtime ( seconds). Clustering accuracy represents the percentage of correctly labeled data points:

| (17) |

where is a permutation of the cluster groups. and are the estimated labeling result and ground-truth, respectively, scoring one in the th entry if a data point belongs to the -th cluster and zero otherwise. The subspace-preserving representation error indicates the average fraction of affinities formed from other subspaces in each ,

| (18) |

where is the true affinity, and returns the norm. The connectivity shows the average connection of the affinity matrix with cluster groups as follows:

| (19) |

where is the second smallest eigenvalue of the normalized Laplacian for each of the subgraph, and indicates the algebraic connectivity for each cluster. If , then at least one subgraph is not connected [42].

| Datasets | Ex. Yale B | ORL | GTSRB | BBCSport | MNIST4000 | MNIST10000 | MNIST | EMNIST | CIFAR-10 |

|---|---|---|---|---|---|---|---|---|---|

| 5 | 5 | 3 | 3 | 10 | 10 | 10 | 10 | 3 | |

| 0.6 | 0.6 | 0.2 | 0.4 | 0.3 | 0.2 | 0.1 | 0.2 | 0.2 | |

| 6 | 11 | 8 | 15 | 7 | 10 | 19 | 12 | 18 |

IV-B Experiments on Synthetic Data

IV-B1 Setup

We first report experimental results on data synthesised by randomly generating five linear subspaces of as ground-truth in an ambient space of . Each subspace contains randomly sampled data points. To confirm the statistical results, we conducted the experiments by varying from 100 to 4,000, so the total number of data points varies from 500 to 20,000. We set the parameters , , and . The percentage of in-sample in SSSC is set to of the total number of data points. All experimental results recorded on synthetic data were averaged over 50 trials.

IV-B2 Results

The curve for each metric is shown as a function of in Fig. 2. We can observe from Fig. 2 that PMSSC outperforms SSC-OMP in terms of clustering accuracy. The difference is especially large when the density of data points on the underlying subspace is lower. This could be partly due to the fact that PMSSC succeeds in generating better connectivity than SSC-OMP (see Fig. 2), and achieves lower subspace-preserving representation error (see Fig. 2). On the other hand, as Fig. 2 shows, PMSSC is much faster with parallel implementation, which is advisable for solving problems involving large-scale data. In addition, compared to SSSC which adopts a similar sampling approach to our method, PMSSC outperforms both in clustering accuracy and runtime (using a parallel implementation).

IV-C Experiments on Benchmark Datasets for Real-world Applications

IV-C1 Setup

We conducted experiments on five benchmark datasets: Extended Yale B [43] and ORL [44] for face clustering, BBCSport [45] for text document clustering, German Traffic Sign Recognition Benchmark (GTSRB) [46] for street sign clustering, Modified National Institute of Standards and Technology database (MNIST) [47] and Extended MNIST (EMNIST) [48] for handwritten character clustering, and Canadian Institute For Advanced Research (CIFAR-10) [49] for object clustering. Parameter settings used for our method in these experiments are shown in Table I. Since the sparsity in PMSSC and SSC-OMP is related to the intrinsic dimensionality of the subspace, it is manually determined to be close to the dimensionality of the subspaces. For sampling rate , we picked a smaller for a larger dataset. For the number of subsets , we adopted a value that takes into account the trade-off between runtime and clustering accuracy. All experimental results recorded on all benchmark datasets are averaged over 10 trials. Details of each benchmark dataset and the corresponding clustering results are now given.

| Method | Extended Yale B | |||

|---|---|---|---|---|

| acc () | sre () | conn () | (sec.) | |

| SCC | 9.54 | - | - | 293.01 |

| LRR | 37.58 | 97.44 | 0.8175 | 219.91 |

| TSC | 52.40 | - | 0.0014 | 10.46 |

| LRSC | 56.71 | 91.64 | 0.4360 | 4.37 |

| SSSC | 49.77 | - | - | 18.22 |

| EnSC | 55.76 | 18.90 | 0.0395 | 4.57 |

| SSC-OMP | 73.82 | 20.07 | 0.0364 | 1.27 |

| S5C | 62.99 | 58.74 | 0.2238 | 952.26 |

| PMSSC | 80.24 | 22.35 | 0.0858 | 2.66 |

| Method | ORL | |||

|---|---|---|---|---|

| acc () | sre () | conn () | (sec.) | |

| SCC | 16.40 | - | - | 89.45 |

| LRR | 32.98 | 97.70 | 0.8394 | 3.57 |

| TSC | 68.03 | - | 0.0992 | 0.72 |

| LRSC | 43.12 | 93.72 | 0.5248 | 0.24 |

| SSSC | 65.12 | - | - | 1.78 |

| EnSC | 70.03 | 32.46 | 0.1825 | 0.65 |

| SSC-OMP | 60.12 | 34.14 | 0.0770 | 0.12 |

| S5C | 69.48 | 63.26 | 0.3868 | 54.28 |

| PMSSC | 74.45 | 40.97 | 0.1708 | 0.51 |

| Method | GTSRB | |||

|---|---|---|---|---|

| acc () | sre () | conn () | (sec.) | |

| SCC | 59.68 | - | - | 84.26 |

| LRR | 27.87 | 86.64 | 0.4255 | 725.18 |

| TSC | 56.36 | - | 0.0016 | 242.62 |

| LRSC | 83.97 | 80.14 | 0.6056 | 12.89 |

| SSSC | 88.03 | - | - | 16.86 |

| EnSC | 85.92 | 0.59 | 0.0065 | 10.77 |

| SSC-OMP | 81.28 | 5.38 | 0.0211 | 3.72 |

| S5C | 61.60 | 80.99 | 0.5941 | 422.35 |

| PMSSC | 91.57 | 7.69 | 0.0434 | 3.40 |

| Method | BBCSport | |||

|---|---|---|---|---|

| acc () | sre () | conn () | (sec.) | |

| SCC | 23.12 | - | - | 3.60 |

| LRR | 71.37 | 76.26 | 0.7744 | 7.59 |

| TSC | 73.95 | - | 0.0053 | 0.36 |

| LRSC | 89.53 | 66.38 | 0.5997 | 0.18 |

| SSSC | 50.24 | - | - | 0.26 |

| EnSC | 59.48 | 11.43 | 0.0243 | 0.61 |

| SSC-OMP | 69.85 | 15.96 | 0.0393 | 0.10 |

| S5C | 55.90 | 65.78 | 0.5434 | 17.99 |

| PMSSC | 81.71 | 14.36 | 0.0509 | 0.47 |

| Method | MNIST4000 | MNIST10000 | ||||||

|---|---|---|---|---|---|---|---|---|

| acc () | sre () | conn () | (sec.) | acc () | sre () | conn () | (sec.) | |

| SCC | 67.45 | - | - | 5.93 | 70.43 | - | - | 11.67 |

| LRR | 78.49 | 90.21 | 0.8979 | 43.03 | 77.53 | 90.60 | 0.8818 | 396.45 |

| TSC | 79.57 | - | 0.0009 | 11.76 | 80.62 | - | 0.0005 | 132.08 |

| LRSC | 81.23 | 75.67 | 0.5984 | 1.61 | 80.86 | 77.30 | 0.5983 | 7.58 |

| SSSC | 70.73 | - | - | 3.11 | 84.32 | - | - | 13.20 |

| EnSC | 89.08 | 21.14 | 0.1174 | 12.71 | 88.24 | 17.34 | 0.0975 | 35.63 |

| SSC-OMP | 91.49 | 34.69 | 0.1329 | 1.61 | 91.40 | 32.23 | 0.1169 | 6.44 |

| S5C | 81.52 | 66.28 | 0.4476 | 277.93 | 79.30 | 66.23 | 0.4466 | 683.65 |

| PMSSC | 92.85 | 38.27 | 0.1944 | 1.42 | 93.57 | 36.43 | 0.1817 | 4.55 |

| Method | MNIST70000 | |||

|---|---|---|---|---|

| acc () | sre () | conn () | (sec.) | |

| SCC | 69.08 | - | - | 388.00 |

| LRR | M | - | - | - |

| TSC | M | - | - | - |

| LRSC | M | - | - | - |

| SSSC | 81.57 | - | - | 303.28 |

| EnSC | 93.79 | 11.26 | 0.0596 | 408.62 |

| SSC-OMP | 82.83 | 28.57 | 0.0830 | 248.50 |

| S5C | 72.99 | 66.87 | 0.4437 | 4953.28 |

| PMSSC | 84.45 | 32.63 | 0.1148 | 65.08 |

IV-C2 Extended Yale B

Extended Yale B contains 2,432 facial images in 38 classes; see Fig. 3. In this experiment, following [9], we concatenated the pixels of each image resized to , and used the 2016-dimensional vector as input data.

The results on Extended Yale B are shown in Table II. In each column, the best result is shown in bold, and the second-best result is underlined. They confirm that PMSSC yields the best clustering accuracy, and improves the clustering accuracy over SSC-OMP by . Although the subspace-preserving error and runtime are slightly lower than SSC-OMP, the connectivity is greatly improved compared to SSC-OMP, leading to a better clustering accuracy. LRR, LRSC, and S5C have good connectivity, but poor subspace-preserving errors result in low clustering accuracy.

IV-C3 ORL

ORL contains 400 facial images in 40 classes, as shown in Fig. 3. In this experiment, following [50], we concatenate the pixels of each image resized to , and use a 1024-dimensional vector as input data. Compared to Extended Yale B, ORL is a more difficult problem setting for subspace clustering because the density of data lying near the same subspace (10 vs. 64) is lower due to the small number of images of each subject, and the subspaces have more non-linearity due to changes in facial expressions and details.

The results for ORL are listed in Table III. We can again observe that PMSSC achieves the best clustering accuracy, and improves the connectivity compared to SSC-OMP. However, since PMSSC does not incorporate nonlinear constraints, the subspace-preserving error does not improve along with the improvement of the connectivity.

IV-C4 GTSRB

GTSRB contains over 50,000 street sign images in 43 classes; see Fig. 3. Following [33], we preprocess the dataset represented by a 1568-dimensional HOG feature to get an imbalanced dataset of the 500-dimensional vectors with 12,390 samples in 14 classes.

The results on GTSRB are reported in Table IV. Again PMSSC yields the best clustering accuracy and runtime, and improves the clustering accuracy roughly by compared to SSC-OMP. In particular, PMSSC has both good subspace-preserving error and connectivity. While EnSC and SSSC also achieve competitive clustering accuracy, their computational costs are much higher.

| Method | EMNIST-Letters | |||

|---|---|---|---|---|

| acc () | sre () | conn () | (sec.) | |

| SCC | M | - | - | - |

| LRR | M | - | - | - |

| TSC | M | - | - | - |

| LRSC | M | - | - | - |

| SSSC | 60.62 | - | - | 1538.46 |

| EnSC | 64.15 | 26.20 | 0.0086 | 1575.46 |

| SSC-OMP | 58.71 | 43.93 | 0.0000 | 1214.31 |

| S5C | 60.01 | 83.37 | 0.3517 | 15698.90 |

| PMSSC | 66.52 | 46.76 | 0.0019 | 638.03 |

IV-C5 BBCSport

BBCSport contains 737 documents in five classes. The data provided by the database has been preprocessed by stemming, stop-word removal, and low term frequency filtering. In this experiment, we reduced the dimensionality of feature vectors to 500 by PCA.

The results on BBCSport are summarized in Table V. We can observe that PMSSC yields the second best clustering accuracy and subspace-preserving error. LRSC yields the best clustering accuracy due to good connectivity. For small-scale datasets such as BBCSport and ORL, the speed of PMSSC is slightly lower than for SSC-OMP because the advantage of reducing data size by sampling multiple subsets is diminished.

IV-C6 MNIST and EMNIST-Letters

MNIST contains 70,000 images of handwritten digits (0–9), while EMNIST-Letters contains 145,600 images of handwritten characters in 26 classes, as shown in Figs. 3 and 3. In our experiments, following [34], we generate MNIST4000 and MNIST10000, which are produced by randomly sampling 400 and 1,000 images per class of digit, respectively. Each image is represented as a 3472-dimensional feature vector by using the scattering convolution network [51], and its dimensionality reduced to 500 by PCA.

The results on MNIST and EMNIST-Letters are summarized in Tables VI–VIII. We can observe that PMSSC yields the best clustering accuracy on MNIST4000, MNIST10000, and EMNIST-Letters. In particular, PMSSC is remarkably faster than the comparative methods on MNIST70000 and EMNIST-Letters. In the case of MNIST70000, EnSC yields the best clustering accuracy and subspace-preserving error but its computational cost is high. Similarly, S5C can achieve good connectivity, but is very slow.

IV-C7 CIFAR-10

CIFAR-10 includes 60,000 general objects in 10 classes, as illustrated in Fig. 3. Following [52], we employ the feature representations extracted by MCR2 [53], which learns a union of low-dimensional subspaces representation via self-supervised learning. Each feature is represented by a 128-dimensional feature vector, further normalized to have unit norm.

The comparative results on CIFAR-10 are summarized in Table IX. It can be observed that our method outperforms others in terms of the runtime, while the clustering accuracy is competitive. However, as with SSC-OMP, we see that the connectivity is lower than for S5C, which uses norm regularization.

IV-C8 Summary

Overall, our proposed method becomes significantly faster as the amount of input data increases. In addition, it achieves good clustering accuracy and connectivity, and provides subspace-preserving errors comparable to those of the comparative algorithms.

| Method | CIFAR-10 | |||

|---|---|---|---|---|

| acc () | sre () | conn () | (sec.) | |

| SCC | 37.10 | - | - | 196.40 |

| LRR | M | - | - | - |

| TSC | M | - | - | - |

| LRSC | M | - | - | - |

| SSSC | 63.80 | - | - | 74.36 |

| EnSC | 61.79 | 22.60 | 0.0000 | 178.22 |

| SSC-OMP | 40.86 | 24.92 | 0.0000 | 63.58 |

| S5C | 64.52 | 46.35 | 0.2314 | 2338.55 |

| PMSSC | 63.52 | 26.41 | 0.0000 | 29.60 |

IV-D Analysis

IV-D1 Multi-subset Based Self-Expressive Model

Since our approach aims to minimize the self-expressive residual by the weight coefficient vector solved in Algorithm 3, we show the mean self-expressive residual of data points represented by the coefficient vectors in Fig. 4. This experiment was performed on synthetic data, and we fixed and . Each blue bar indicates the mean self-expressive residual of the data points represented by Eq. (5), computed as

| (20) |

The red bar indicates the mean self-expressive residual of the data points represented by Eq. (9), computed as:

| (21) |

We can clearly observe that the mean self-expressive residual of PMS has lower error than every single subset. To highlight the benefit of , we made a comparison to a variant of our approach, named PMSSC(avg), which replaced by a simple average operation: in PMSSC(avg), Eq. (10) is replaced by

| (22) |

We performed experiments on synthetic data using the same setup as for Fig. 2 and present the results in Fig. 5. As can be seen, incorporating improves clustering performance; in particular, the subspace-preserving representation error is significantly reduced. These experiments indicate that the weight coefficient vector contributes to improving self-expressiveness.

IV-D2 Selection of Parameters

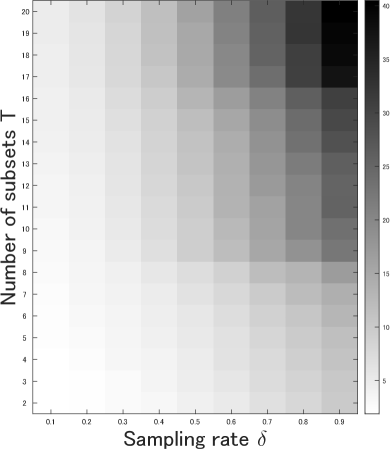

We performed multiple experiments on the GTSRB dataset with various choices of hyperparameters to evaluate the sensitivity of our approach to parameter choice. Changes in clustering accuracy, subspace-preserving representation error, connectivity, and runtime when varying each parameter are illustrated in Fig. 6. We can confirm that high clustering accuracy and low subspace-preserving representation error are maintained in most cases, except when both and are extremely small. This implies that the affinity matrix constructed by PMSSC provides subspace-preserving representations at most data points. We can also see that the connectivity improves as the number of subsets increases, because the affinity matrix contains at most nonzero entries in OMP optimization. Considering runtime, a practical choice of parameters is to increase for small values of , and decrease for large values of . In addition, time taken can be kept low by picking a small value of for large-scale datasets.

IV-D3 Sampling Technique

Our approach adopts weighted random sampling to generate the subset data matrix . To analyze the effect of sampling methods on our approach, we compared weighted random sampling to random sampling with uniform weights. The experimental settings used for synthetic data follow those in Fig. 2. Fig. 7 shows the clustering accuracy and connectivity as functions of . Obviously, weighted random sampling outperforms random sampling in terms of both clustering accuracy and connectivity. In particular, as the density of data points increases, the connectivity of the method with random sampling becomes zero, because imbalanced sampling leads to a disconnected subgraph in an affinity graph.

IV-D4 Computational Complexity

Algorithms 2 and 3 for affinity matrix construction consume most of the processing time. In Algorithm 2, the computational complexity for finding the self-expressive coefficient vector requires time . In Algorithm 3, the computational complexity for finding the weight coefficient vector requires . Because these two algorithms are performed on data points, the computational complexity of PMS requires at least time . However, processing subsets (the part taking ) can be performed in parallel, which reduces the computation time compared to methods that directly deal with the whole dataset. Fig. 2 supports this analysis.

V Conclusions

We have proposed a parallelizable multi-subset based self-expressive model for subspace clustering. A representation of the input data is formulated by combining the solutions of small optimization problems with respect to multiple subsets generated by data sampling. We have shown that this strategy can significantly improve speed with a multi-core approach that can be easily implemented, especially for large-scale data. Moreover, it has been verified that combining multiple subsets can reduce the self-expressive residuals of data compared to a single subset. Extensive experiments on synthetic data and real-world datasets have demonstrated the efficiency and effectiveness of our approach. As a limitation, our method is still unable to handle nonlinear subspaces due to the problem setting. In future, we would like to design a self-expressive model that can handle nonlinear subspaces, with the help of modeling capabilities from neural network architectures.

References

- [1] R. Vidal, “Subspace clustering,” IEEE Signal Processing Magazine, vol. 28, no. 2, pp. 52–68, 2011.

- [2] K. Hotta, H. Xie, and C. Zhang, “Affine subspace clustering with nearest subspace neighbor,” in International Workshop on Advanced Imaging Technology (IWAIT) 2021, vol. 11766, 2021, p. 117661C.

- [3] C. Zhang, “Energy minimization over m-branched enumeration for generalized linear subspace clustering,” IEICE Transactions on Information and Systems, vol. 102, no. 12, pp. 2485–2492, 2019.

- [4] A. Y. Yang, J. Wright, Y. Ma, and S. S. Sastry, “Unsupervised segmentation of natural images via lossy data compression,” Computer Vision and Image Understanding, vol. 110, no. 2, pp. 212–225, 2008.

- [5] R. Vidal, R. Tron, and R. Hartley, “Multiframe motion segmentation with missing data using powerfactorization and gpca,” International Journal of Computer Vision, vol. 79, no. 1, pp. 85–105, 2008.

- [6] S. Tierney, J. Gao, and Y. Guo, “Subspace clustering for sequential data,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2014, pp. 1019–1026.

- [7] C. Zhang, X. Lu, K. Hotta, and X. Yang, “G2mf-wa: Geometric multi-model fitting with weakly annotated data,” Computational Visual Media, vol. 6, pp. 135–145, 2020.

- [8] E. Elhamifar and R. Vidal, “Sparse subspace clustering,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, vol. 6, 2009, pp. 2790–2797.

- [9] ——, “Sparse subspace clustering: Algorithm, theory, and applications,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 11, pp. 2765–2781, 2013.

- [10] C. You, D. Robinson, and R. Vidal, “Scalable sparse subspace clustering by orthogonal matching pursuit,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 3918–3927.

- [11] Y. Guo, S. Tierney, and J. Gao, “Efficient sparse subspace clustering by nearest neighbour filtering,” Signal Processing, vol. 185, p. 108082, 2021.

- [12] C. You, C. Li, D. Robinson, and R. Vidal, “Self-representation based unsupervised exemplar selection in a union of subspaces,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [13] X. Peng, L. Zhang, and Z. Yi, “Scalable sparse subspace clustering,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2013, pp. 430–437.

- [14] S. Matsushima and M. Brbic, “Selective sampling-based scalable sparse subspace clustering,” Advances in Neural Information Processing Systems, vol. 32, pp. 12 416–12 425, 2019.

- [15] P. Tseng, “Nearest q-flat to m points,” Journal of Optimization Theory and Applications, vol. 105, no. 1, pp. 249–252, 2000.

- [16] T. Zhang, A. Szlam, and G. Lerman, “Median k-flats for hybrid linear modeling with many outliers,” in Conference on Computer Vision Workshops, 2009, pp. 234–241.

- [17] J. Lipor, D. Hong, Y. S. Tan, and L. Balzano, “Subspace clustering using ensembles of k-subspaces,” Information and Inference: A Journal of the IMA, vol. 10, no. 1, pp. 73–107, 2021.

- [18] C. Lane, B. Haeffele, and R. Vidal, “Adaptive online k-subspaces with cooperative re-initialization,” in Proceedings of the IEEE International Conference on Computer Vision Workshops, 2019, pp. 678–688.

- [19] J. Shi and J. Malik, “Normalized cuts and image segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, no. 8, pp. 888–905, 2000.

- [20] U. Von Luxburg, “A tutorial on spectral clustering,” Statistics and Computing, vol. 17, no. 4, pp. 395–416, 2007.

- [21] C. Lu, J. Feng, Z. Lin, T. Mei, and S. Yan, “Subspace clustering by block diagonal representation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, no. 2, pp. 487–501, 2018.

- [22] W. Dong, X.-J. Wu, J. Kittler, and H.-F. Yin, “Sparse subspace clustering via nonconvex approximation,” Pattern Analysis and Applications, vol. 22, no. 1, pp. 165–176, 2019.

- [23] K. Hotta, H. Xie, and C. Zhang, “Candidate subspace screening for linear subspace clustering with energy minimization,” in Irish Machine Vision and Image Processing Conference, 2020, pp. 125–128.

- [24] J. Yan and M. Pollefeys, “A general framework for motion segmentation: Independent, articulated, rigid, non-rigid, degenerate and non-degenerate,” in European Conference on Computer Vision, 2006, pp. 94–106.

- [25] G. Chen and G. Lerman, “Spectral curvature clustering (scc),” International Journal of Computer Vision, vol. 81, no. 3, pp. 317–330, 2009.

- [26] D. L. Donoho, “For most large underdetermined systems of linear equations the minimal -norm solution is also the sparsest solution,” Communications on Pure and Applied Mathematics: A Journal Issued by the Courant Institute of Mathematical Sciences, vol. 59, no. 6, pp. 797–829, 2006.

- [27] C.-Y. Lu, H. Min, Z.-Q. Zhao, L. Zhu, D.-S. Huang, and S. Yan, “Robust and efficient subspace segmentation via least squares regression,” in European Conference on Computer Vision, 2012, pp. 347–360.

- [28] G. Liu, Z. Lin, Y. Yu et al., “Robust subspace segmentation by low-rank representation,” in Proceedings of the 27th International Conference on International Conference on Machine Learning, 2010, p. 663–670.

- [29] C. You, C.-G. Li, D. P. Robinson, and R. Vidal, “Oracle based active set algorithm for scalable elastic net subspace clustering,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 3928–3937.

- [30] P. Ji, M. Salzmann, and H. Li, “Efficient dense subspace clustering,” in IEEE Winter Conference on Applications of Computer Vision, 2014, pp. 461–468.

- [31] E. L. Dyer, A. C. Sankaranarayanan, and R. G. Baraniuk, “Greedy feature selection for subspace clustering,” The Journal of Machine Learning Research, vol. 14, no. 1, pp. 2487–2517, 2013.

- [32] B. Nasihatkon and R. Hartley, “Graph connectivity in sparse subspace clustering,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2011, pp. 2137–2144.

- [33] C. You, C. Li, D. P. Robinson, and R. Vidal, “Scalable exemplar-based subspace clustering on class-imbalanced data,” in Proceedings of the European Conference on Computer Vision, 2018, pp. 67–83.

- [34] Y. Chen, C.-G. Li, and C. You, “Stochastic sparse subspace clustering,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 4155–4164.

- [35] C. You, C. Donnat, D. P. Robinson, and R. Vidal, “A divide-and-conquer framework for large-scale subspace clustering,” in Proceedings of 50th Asilomar Conference on Signals, Systems and Computers, 2016, pp. 1014–1018.

- [36] M. A. Davenport and M. B. Wakin, “Analysis of orthogonal matching pursuit using the restricted isometry property,” IEEE Transactions on Information Theory, vol. 56, no. 9, pp. 4395–4401, 2010.

- [37] J. A. Tropp, “Greed is good: Algorithmic results for sparse approximation,” IEEE Transactions on Information Theory, vol. 50, no. 10, pp. 2231–2242, 2004.

- [38] Y. C. Pati, R. Rezaiifar, and P. S. Krishnaprasad, “Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition,” in Proceedings of Asilomar Conference on Signals, Systems and Computers, 1993, pp. 40–44.

- [39] C.-K. Wong and M. C. Easton, “An efficient method for weighted sampling without replacement,” SIAM Journal on Computing, vol. 9, no. 1, pp. 111–113, 1980.

- [40] R. Heckel and H. Bölcskei, “Subspace clustering via thresholding and spectral clustering,” in IEEE International Conference on Acoustics, Speech and Signal Processing, 2013, pp. 3263–3267.

- [41] R. Vidal and P. Favaro, “Low rank subspace clustering (lrsc),” Pattern Recognition Letters, vol. 43, pp. 47–61, 2014.

- [42] F. R. Chung, “Spectral graph theory,” 1997.

- [43] K.-C. Lee, J. Ho, and D. J. Kriegman, “Acquiring linear subspaces for face recognition under variable lighting,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 5, pp. 684–698, 2005.

- [44] F. S. Samaria and A. C. Harter, “Parameterisation of a stochastic model for human face identification,” in Proceedings of the IEEE Workshop on Applications of Computer Vision, 1994, pp. 138–142.

- [45] D. Greene and P. Cunningham, “Practical solutions to the problem of diagonal dominance in kernel document clustering,” in Proc. 23rd International Conference on Machine learning, 2006, pp. 377–384.

- [46] J. Stallkamp, M. Schlipsing, J. Salmen, and C. Igel, “Man vs. computer: Benchmarking machine learning algorithms for traffic sign recognition,” Neural Networks, vol. 32, pp. 323–332, 2012.

- [47] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [48] G. Cohen, S. Afshar, J. Tapson, and A. Van Schaik, “Emnist: Extending mnist to handwritten letters,” in International Joint Conference on Neural Networks, 2017, pp. 2921–2926.

- [49] A. Krizhevsky, “Learning multiple layers of features from tiny images,” University of Toronto, Tech. Rep., 2009.

- [50] D. Cai, X. He, Y. Hu, J. Han, and T. Huang, “Learning a spatially smooth subspace for face recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2007, pp. 1–7.

- [51] J. Bruna and S. Mallat, “Invariant scattering convolution networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 8, pp. 1872–1886, 2013.

- [52] S. Zhang, C. You, R. Vidal, and C.-G. Li, “Learning a self-expressive network for subspace clustering,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 12 393–12 403.

- [53] Y. Yu, K. H. R. Chan, C. You, C. Song, and Y. Ma, “Learning diverse and discriminative representations via the principle of maximal coding rate reduction,” Advances in Neural Information Processing Systems, vol. 33, pp. 9422–9434, 2020.