PNVC: Towards Practical INR-based Video Compression

Abstract

Neural video compression has recently demonstrated significant potential to compete with conventional video codecs in terms of rate-quality performance. These learned video codecs are however associated with various issues related to decoding complexity (for autoencoder-based methods) and/or system delays (for implicit neural representation (INR) based models), which currently prevent them from being deployed in practical applications. In this paper, targeting a practical neural video codec, we propose a novel INR-based coding framework, PNVC, which innovatively combines autoencoder-based and overfitted solutions. Our approach benefits from several design innovations, including a new structural reparameterization-based architecture, hierarchical quality control, modulation-based entropy modeling, and scale-aware positional embedding. Supporting both low delay (LD) and random access (RA) configurations, PNVC outperforms existing INR-based codecs, achieving nearly 35%+ BD-rate savings against HEVC HM 18.0 (LD) - almost 10% more compared to one of the state-of-the-art INR-based codecs, HiNeRV and 5% more over VTM 20.0 (LD), while maintaining 20+ FPS decoding speeds for 1080p content. This represents an important step forward for INR-based video coding, moving it towards practical deployment. The source code will be available for public evaluation.

Introduction

There is an ever-increasing requirement for higher video coding efficiency due to the significantly increased demand for high quality digital video content. Unlike standardized video codecs, such as H.265/HEVC (Sullivan et al. 2012) and H.266/VVC (Bross et al. 2021) that offer impressive performance using evolutions of conventional architectures, neural video compression is enjoying much faster development cycles and rapidly increasing performance benchmarks using an optimized, data-driven end-to-end architecture. Advances in this research area have delivered a wide variety of candidate neural video codecs, some of which (Xiang, Tian, and Zhang 2022; Li, Li, and Lu 2024) are reported to match or outperform the (rate-quality) performance of the latest state-of-the-art standard coding methods.

Although showing promise in terms of coding gain, neural video codecs (primarily those using autoencoder-based backbones) are associated with significant complexity issues, in particular on the decoder side, making them resource-intensive and impractical for many real-world applications. Although common complexity reduction techniques such as pruning and quantization (Peng et al. 2024; Hu and Xu 2023) can alleviate these limitations, this typically results in a nontrivial reduction in coding efficiency.

More recently, implicit neural representation (INR)-based coding methods (Chen et al. 2021; He et al. 2023; Kwan et al. 2024a) have attracted increasing attention as a paradigm-shifting solution to achieving high coding performance and low (decoding) complexity. This type of approach typically utilizes a lightweight neural network to overfit input video data by mapping coordinates directly to pixel values. Although the latest INR-based codecs (Kwan et al. 2024a) have shown consistent coding gains over many conventional and neural video codecs, they have a major limitation in that their compression strategy to represent an entire video sequence or a dateset with a single monolithic model. While this practice maximizes compression efficiency, it requires processing a large number of video frames (e.g., a few hundred to a few thousand) in each encoding session, which conflicts with commonly used coding configurations, where flexible system latency111Here latency is defined as the system delays in the coding process, e.g., the number of consecutive bi-directional predicted frames in a GOP (Group of Pictures)., e.g., Low Delay and Random Access modes in VVC VTM (Bossen et al. 2023), is often required. This issue prevents INR-based video codecs from being as performant on shorter sequences or adopted in many practical applications.

In this paper, we introduce PNVC, a novel (Practical) INR-based Video Compression framework that tackles the aforementioned limitations, which enables flexible coding configurations (low latency) while still achieving competitive coding performance and low encoding/decoding complexity. The proposed PNVC is built upon a hierarchical backbone that generalizes autoregressive models (Lu et al. 2024) and is seamlessly interchangeable with content- or modulation-based INR models (Lee et al. 2024; Kwan et al. 2024a). Our approach leverages a pretrain-then-overfit strategy, enabling the model to generalize across diverse content during pretraining whilst adapting to input-specific contents during overfitting. Further, we develop a novel reparameterization method, among other architectural and optimization innovations, that allows for unconstrained model capacity during training while ensuring low-complexity inference. This decoupling enables more effective optimization without sacrificing efficiency in deployment. The main contributions of this paper are summarized as follows.

-

1)

We propose a novel INR-based video coding model that integrates autoencoder-based with overfitted solutions, offering competitive coding performance, relatively low encoding and decoding complexity and a flexible coding latency configuration.

-

2)

We design a novel reparameterization-based scheme (ModMixer) to sufficiently pretrain as well as overfitting a lightweight backbone with stronger modeling capacity and more diverse optimization directions, without extra inference costs.

-

3)

We further introduce several modifications, including hierarchical quality parameters, modulation-based hierarchical entropy model with asymmetric context grouping, and scale-aware hierarchical positional encoding to enhance the compression performance.

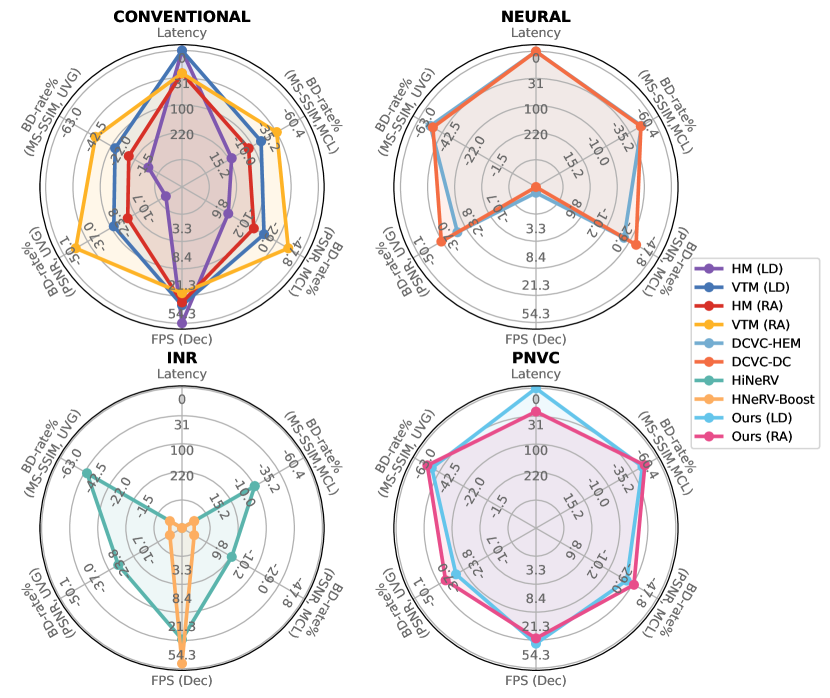

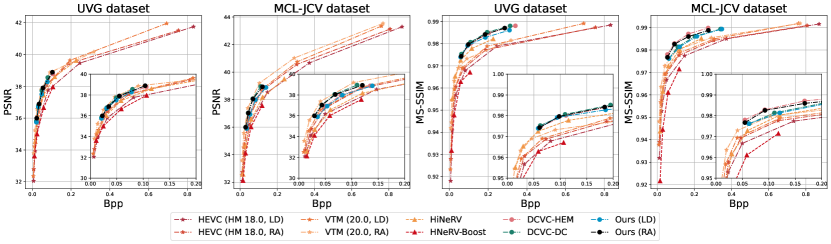

The proposed PNVC demonstrates very competitive rate-distortion performance in both Low Delay and Random Access configurations (as defined in many video coding standards), whilst circumventing the latency and encoding complexity problems associated with existing INR-based video codecs. Specifically, as shown in Figure 1, on both UVG and MCL-JCV, the proposed model evidently outperforms VTM 20.0 (LD), and HiNeRV in BD-rate, measured in both PSNR and MS-SSIM. It is also associated with much lower encoding latency (system delays) compared to existing INR-based videos, and a faster decoding speed over autoencoder-based neural video coding models. We believe that this work yields a major step forward in INR-based video compression, moving it toward practical adoption.

Related Work

Video compression

has been a long-standing research topic, evolving from hand-crafted standard codecs (Wiegand et al. 2003; Sullivan et al. 2012; Bross et al. 2021) to deep learning techniques that enhance conventional tools (Yan et al. 2018; Ma, Zhang, and Bull 2020; Zhang et al. 2021a) and end-to-end optimized frameworks (Lu et al. 2019; Agustsson et al. 2020). Recent learning-based methods have focused on improving motion estimation (Li, Li, and Lu 2023), feature space conditional coding (Hu, Lu, and Xu 2021; Li, Li, and Lu 2021), instance-adaptive overfitting (van Rozendaal, Huijben, and Cohen 2021; Khani, Sivaraman, and Alizadeh 2021; Yang, Oh, and Park 2024), and novel architectures (Ho et al. 2022; Xiang, Tian, and Zhang 2022; Mentzer et al. 2022). Despite competitive coding performance, high computational complexity (in particular in the decoder) has limited practical deployment, with structured pruning approaches (Peng et al. 2024) achieving only a limited reduction in complexity at the cost of significant performance drops.

Implicit Neural Representations

(INRs) have been increasingly employed in recent years to represent and compress various multimedia signals, including images (Dupont et al. 2021; Strümpler et al. 2022), videos (Zhang et al. 2021b; Kwan et al. 2024a) and volumetric content (Ruan et al. 2024; Kwan et al. 2024b). INRs learn a coordinate-based mapping function and encode data in network parameters. Existing implicit neural video representation (NeRV) models can be categorized as: i) index-based methods taking frame (Chen et al. 2021), patch (Bai et al. 2023), or disentangled spatial/grid coordinates (Li et al. 2022) as input, or ii) content-based methods (Chen et al. 2023; Kwan et al. 2024a; Kim et al. 2024; Leguay et al. 2024) with content-specific embeddings as inputs. In these cases, the video coding task is reformulated into a model compression problem, leveraging pruning, quantization, and entropy-constraint optimization (Gomes, Azevedo, and Schroers 2023). However, training NeRV models on entire video sequences or datasets leads to high system latency\footreffn:latency, making them unsuitable for applications requiring quick response times, and leading to less meaningful comparisons with latency-constrained codecs.

Methods

Overview

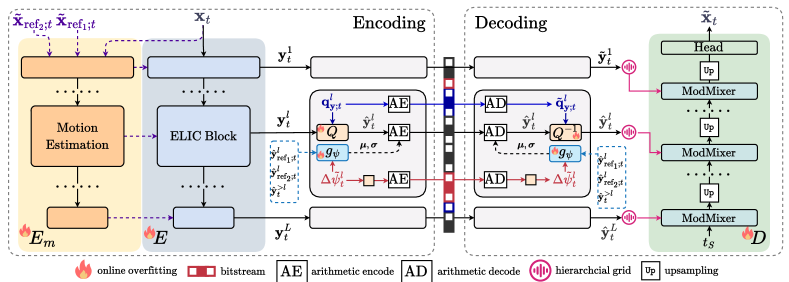

In the proposed PNVC framework, each video sequence , is segmented into Groups of Pictures (GOPs)222We follow the GoP definition in H.266/Versatile Video Coding Test Model (VTM), where the length of a GoP equals the number of consecutive bi-directional predicted (B) frames plus 1. of length that are independently encoded, where , , and represent the length, height, and width of , respectively. Within each GOP, frames are either intra-coded as I frames or inter-coded as P/B frames to exploit spatial and/or temporal redundancy within the video.

Each I frame is encoded into grids of latent tokens by an encoder . For each level , consists of rescale and shift parameters and , concatenated channel-wise, with a resolution of . A learnable quantizer then quantizes these latent grids, producing based on the corresponding quantization parameters , where denotes the channel-wise concatenation.

An entropy model is adopted to estimate the probability mass function (PMF) of the hierarchical latents semi-autoregressively along the spatial, channel, and hierarchical axes. The parameter of the entropy model, , is updated as at each level . Here, represents the pretrained parameters, and are dequantized parameter updates obtained through online overfitting. The bitstream includes , where and are the inverse quantization parameters corresponding to latents and weight updates, respectively. These components are overfitted to the input video frame, entropy encoded, and combined into the bitstream.

At the decoder, the entropy model is updated by decoded from the bitstream. It is used to help entropy decode the hierarchical latent grids that are then dequantized by using to produce . These dequantized latents undergo positional embedding based on an improved scale-aware hierarchical decomposition scheme from (Kwan et al. 2024a), allowing content-specific variations to be queried by spatial-temporal coordinates. A patch-based representation is used, with each element of the smallest corresponding to a patch of the original frame . The INR-based decoder defines a patch-wise neural field as a function of 3D coordinates. It uses stacked ModMixer blocks (proposed in this work) to progressively and conditionally map , which is a learned bias for I-frame and the reference patch for P-/B- frames, to patches of the reconstructed frame , with the intermediate activations of each block modulated by the corresponding latent grid.

Each P or B frame is coded following a workflow similar to that of I frames, but with an additional motion encoder , as shown in Figure 2. With up to two previously reconstructed frames, and , in the decoded frame buffer (they can be I or P/B frames) and the current frame , the multi-resolution latent grids are generated by but with layer-wise conditioning on the motion information extracted from . Here, the entropy model additionally takes into account the decoded reference latent tokens and the reconstructed latent tokens from higher-level layers, , as input to further exploit spatio-temporal and hierarchical redundancy.

Encoder

The ELIC (He et al. 2022) model is adopted here as the image encoder to map I/P/B frames to the latent . For P/B frames, is reconditioned by concatenating the hierarchical optical flow features, extracted by the motion encoder , adapted from SpyNet (Ranjan and Black 2017), to incorporate the estimated motion information. The illustration of its network structure is provided in the Supplementary. Both and are overfitted to generate content-adaptive hierarchical latent representations. The overfitting of the next frame is initialized from the network updated for the current frame to further expedite the encoding process.

Quantization

A hierarchical quality structure (Li, Li, and Lu 2024) is adopted in the quantization module, where the allowable bitrates are adaptively reweighted for each frame based on its distance from reference frames and specific video dynamics. This parameter is referred to as the quality parameter , generated by a ConvLSTM module based on each token’s estimated impact on the GOP-level RD trade-offs. This module is fixed after pretraining to prevent the frame-wise overfitting process from destroying the acquired hierarchical quality structure. For quantization, another set of finer-grained channel-wise quality parameters are used. For inverse quantization, the corresponding are retrieved and encoded into the bitstream as side information. The quantization and inverse quantization steps are exemplified using as:

| (1a) | ||||

| (1b) | ||||

where , , and denote the channel and spatial indices, respectively. Here, we avoid division in both cases to avoid numerical instabilities. The scalar quantization parameters are updated following a similar procedure.

Entropy coding

The discretized hierarchical latents are entropy coded based on arithmetic coding. Here, PMFs are estimated by a Gaussian distribution , where the location and scale parameters of each element are semi-autoregressively predicted by the entropy network based on the spatial, temporal, and hierarchical contexts of the element. We use the quadtree-based factorization (Li, Li, and Lu 2023) to encode , but with two modifications. First, we respectively split the channels of and (they are entropy coded in parallel) into four uneven groups (He et al. 2022), with sizes proportional to 1, 1, 2 and 4, i.e. we double the number of symbols decoded per decoding step due to more contexts available. Moreover, we replace all concatenation operations to aggregate information with element-wise modulation, which we empirically found to be far more efficient in information aggregation, and deploy a new ModMixer module (detailed in the next subsection) to construct and optimize the entropy model. The architecture illustration of the entropy model is provided in the Supplementary. For entropy model’s updates , a fully factorized nonparametric density model (Ballé et al. 2018) is deployed following (Gomes, Azevedo, and Schroers 2023; Zhang et al. 2024).

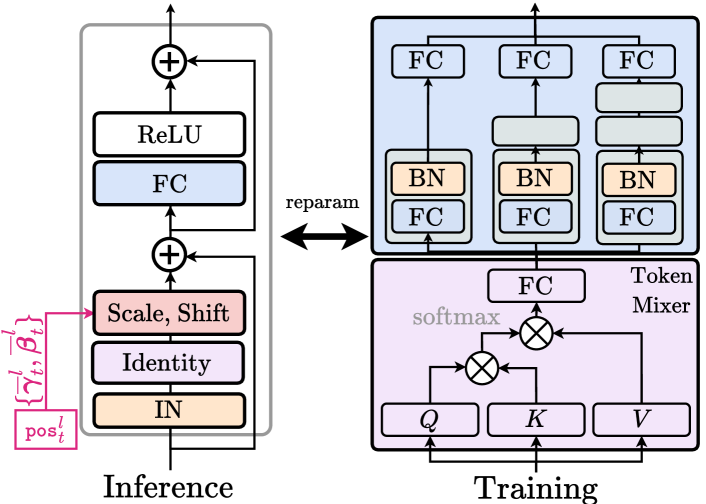

ModMixer

To enhance model performance without introducing extra inference cost, we devise a novel ModMixer module as a basic building block used in our entropy model and decoder . This approach is inspired by reparameterization methods (Ding et al. 2021, 2022; Shi, Zhou, and Gu 2024) that leverage the interconversion between linear architectures to trade training-time complexity for inference-time efficiency. Without loss of generality, we define an arbitrary linear layer (convolutional or fully-connected), , to be contracted algebraically from this general formulation:

| (2) |

where and denote the trainable, parallel basis of and , respectively, and the coefficients denote the channel-wise affine mixand values. Here, each basis may be further expanded serially into (taking as an example) . Compared to standard reparameterization methods, Equation (2) establishes a more generalized visual tuning scheme that better suits the context of instance-adaptive video compression. With the affine transformation applied to both weight and bias terms, it is a superset encompassing both weight and feature space tuning, where the mixands could be flexibly re-casted to feature modulation (as in decoder), weight update (as in entropy model), or any other forms of visual tuning accordingly.

In our approach, to leverage the above reparameterization idea and improve fully connected layers (FC), typically adopted in INR-based methods (Sitzmann et al. 2020; Dupont et al. 2021), a new basic building block, ModMixer, is designed as illustrated in Figure 3. Each FC layer is reparameterized both serially and in parallel through stacks of linear layers with varying depths per branch (one per channel group) to capture the hierarchical representations efficiently. Further, a self-attention-style token-mixer is attached before the FC layer to induce inference-time spatial mixing. During pretraining, the token mixer is progressively degenerated and absorbed into the Instance Normalization (Huang and Belongie 2017) and proceeding FC layer (Lin et al. 2024), by initializing a mask as that is gradually decayed to : .

The mask decaying strategy is inspired by (Wang et al. 2023; Peng et al. 2024), which leverage the more expressive teacher model to distill the smaller student. During overfitting, we optimize the residual updates, w.r.t the pretrained mixands and basis, which are then consolidated into either weight updates for the entropy model or absorbed into the hierarchical latent grids for the decoder (see Supplementary for full derivations and implementation details).

Decoder

In Figure 2, the decoder acting on the grid-based positional encodings incrementally restores spatial information while sequentially composing high-frequency details over low-frequency elements. We follow the hierarchical encoding method proposed by the high-performance HiNeRV (Kwan et al. 2024a), which can be conceptualized as a positional numeral system with power-of-2 bases. This method expresses each coordinate as an ordered set of digits, each of which corresponds to a hierarchy that recursively encodes residuals of the coarser-grained representational level. Specifically, this hierarchical coordinate system decomposes a global coordinate pos into multiple levels of finer detail with base . At each level , the local coordinate for interpolation is computed as:

| (3) |

We make a simple improvement to the original strategy to cope with the increase in patch size at inference time compared to that seen by the model during pretraining. The likely substantial increase in resolution, denoted by , could result in more fine-tuning endeavours. Maintaining the original grid spacing would result in an increase in overall grid numbers, potentially complicating hierarchical pattern capturing and increasing memory/computational demands To address this, we propose to instead mix and rescale the bases non-linearly according to the ratio (). The local coordinate at level is re-calculated as:

| (4) |

subject to and . Solving this constraint yields a set of evenly distributed on a log-scale, as will be detailed in Supplementary. The formulation by Equation (4) essentially distributes the interpolation pressure across different hierarchies. Low frequencies, which are typically less sensitive to resolution changes, can handle more scaling without significant loss of information (sparser grid partition at lower resolution). High frequencies, on the other hand, are preserved more carefully from being scaled less. Based on Equation (4), the -th layer of decoder is given by:

Optimization strategy

The full model is first pretrained offline, based on static training materials, and then overfitted during inference to adapt to the input video sequence to be compressed. Considering the pre-trained parameters, the “meta-initialization” shared between the encoder and the decoder, the iteratively refined parameter updates, relative to this meta-initialization, are quantized alongside associated side information and then entropy coded into the bitstream. We follow (Lu et al. 2020; Li, Li, and Lu 2023) to aggregate the loss over multiple frames to reduce error propagation and establish the hierarchical quality structure. The pretraining involves minimizing the following rate-distortion loss within each GoP:

| (5) |

where denotes the distortion, stands for the rate, and denotes the displaying order of each frame in a GOP. Following (Kim et al. 2024; Leguay et al. 2024), the quantization of hierarchical latents and weight update is approximated by progressively annealed soft-rounding with additive noises when calculating , and by the straight-through estimator (STE) (Minnen and Singh 2020) when rounding them to optimize the distortion metric. Here MSE is used as the distortion metric targeting the best PSNR performance, while additional models are trained by fine-turning MSE models using (Mentzer et al. 2022) as the distortion metric to yield MS-SSIM-based baselines. During frame-wise overfitting, encoding entails searching for the optimal values of the hierarchical latents, network parameters, and quantization parameters:

| (6) |

Detailed hyperparameter configurations could be found in the Supplementary.

| UVG Dataset | MCL-JCV Dataset | Model Complexity (UVG) | |||||||

| Codec (mode) | BD-rate | BD-rate | FPS | Params. (M) | kMACs/pixel | Latency | |||

| (PSNR) | (MS-SSIM) | (PSNR) | (MS-SSIM) | Encoding | Decoding | Full model | Decoding | ||

| *HM 18.0 (LD) | -0.00% | -0.00% | -0.00% | -0.00% | 6.17 | 54.3 | N/A | N/A | 0 |

| HM 18.0 (RA) | -21.21% | -17.02% | -20.36% | -18.10% | 3.48 | 33.2 | N/A | N/A | 31 |

| VTM 20.0 (LD) | -28.94% | -28.90% | -28.47% | -31.35% | 0.05 | 30.7 | N/A | N/A | 0 |

| VTM 20.0 (RA) | -50.08% | -45.94% | -47.78% | -48.02% | 0.001 | 23.8 | N/A | N/A | 31 |

| VCT | +20.12% | -42.06% | +20.70% | -48.57% | 1.10 | 0.39 | 154.2 | 5336 | 0 |

| DCVC-HEM | -34.96% | -63.05% | -33.81% | -60.35% | 0.87 | 1.51 | 48.7 | 1581 | 0 |

| DCVC-DC | -43.94% | -60.83% | -43.46% | -59.65% | 0.96 | 1.29 | 50.8 | 1274 | 0 |

| FFNeRV | +81.50% | +50.21% | +100.93% | +80.31% | Full seq. | ||||

| C3 (Adaptive)333For C3, we adopt the adaptive setting for the UVG dataset, where the patch length ranges from 30 to 75 for different rates yet is fixed to 25 on the MCL-JCV dataset. | -0.75% | -15.53% | +20.64% | +40.76% | 0.0015 | 17.6 | 0.01 | 4.4 | 25-75 |

| HNeRV-Boost | +2.42% | +18.96% | +75.8% | +225.6% | Full seq. | ||||

| HiNeRV | -25.42% | -50.90% | -1.64% | -22.34% | Full seq. | ||||

| PNVC (LD, ours) | -34.35% | -58.97% | -33.56% | -57.73% | 0.014 | 25.3 | 21.8 | 101.1 | 0 |

| PNVC (RA, ours) | -39.98% | -62.72% | -39.23% | -60.21% | 0.011 | 22.6 | 21.8 | 101.1 | 31 |

Experiments

Datasets.

Baselines.

The proposed model is compared with nine open-sourced state-of-the-art conventional and neural video compression baselines including (i) two conventional codecs - H.265/HEVC Test Model HM 18.0 (Sharman, Sjoberg, and Sullivan 2022) and H.266/VVC Test Model VTM 20.0 (Browne, Ye, and Kim 2023); (ii) three neural video codecs - VCT (Mentzer et al. 2022), DCVC-HEM (Li, Li, and Lu 2022), and DCVC-DC (Li, Li, and Lu 2023); (iii) four INR-based codecs - FFNeRV (Lee et al. 2023), HNeRV-Boost (Zhang et al. 2024), C3 (Kim et al. 2024) and HiNeRV (Kwan et al. 2024a).

Test conditions.

Two coding configurations are employed: Low Delay (LD) and Random Access (RA), following the VTM common test conditions (CTC) (Bossen et al. 2023). The LD configuration requires only one intra frame in each sequence (the first one), with subsequent P frames relying solely on previous frames for motion prediction (i.e., GOP=1) as defined in JVET CTC. In RA mode, each GOP consists of one intra frame and 31 B/P frames, allowing a maximum latency of 31 (i.e., GOP=32) with an IntraPeriod of 32. The RA configuration here uses the same hierarchical B frame structure as specified in the JVET CTC. These configurations do not apply to other INR-based benchmarks that encode the entire sequence or dataset.

Metrics.

For each test rate point of a video codec, we calculate the bitrate (in terms of bit/pixel, bpp) and their video quality in terms of PSNR and MS-SSIM in the RGB space. Based on these, we further calculate the Bjøntegaard Delta Rate (BD-rate) (Bjontegaard 2001) using HM (LD) as the anchor. We also measured the complexity figures of each tested codec, including the number of model parameters, the decoding complexity (MACs/pixel) and average encoding and decoding speeds (FPS).

Results

Quantitative results

The BD-rate performance is summarized in Table 1, where it can be seen that PNVC (LD and RA modes) outperforms the anchor HM 18.0 (LD) by 34.35% and 39.98% in BD-rate in terms of PSNR on the UVG and MCL-JCV dataset, respectively. PNVC also significantly outperforms state-of-the-art INR-based codecs and is also competitive against the state-of-the-art conventional and neural video codecs. Furthermore, it should be noted that PNVC embodies latency constraints (31 frames for the RA mode and 0 for the LD mode, the same as HM and VTM), whereas other INR models do not. These demonstrate the strong rate-distortion performance of the proposed model. Among all codecs tested, the next-generation standard VTM (RA) remains to be the best performing baseline.

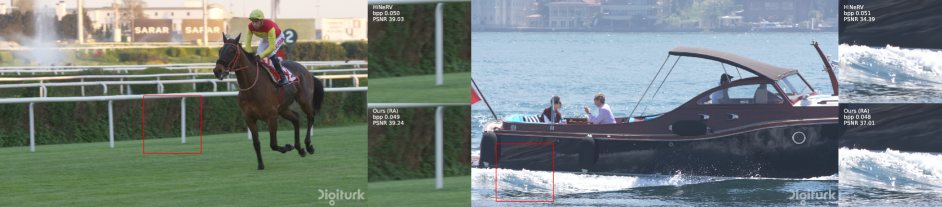

Qualitative results

We present qualitative visual comparisons between frames reconstructed by HiNeRV (the best INR-based video codec) and the proposed PNVC in Figure 5. The visual results show that our method is able to yield visually pleasing reconstructions, accurately capturing the fine texture details and fast/complex motions present in the frame. Please see Supplementary for more thorough comparisons.

Complexity performance

The complexity figures of PNVC and other benchmark codecs, including encoding and decoding speeds (FPS), full model size (number of parameters) and decoding complexity (MACs/pixel), are summarized in Table 1. These are measured by averaging over a GOP of 1080P videos, including a full roll-out of the entropy coding process, on a PC with an NVIDIA 3090 GPU and an Intel Core i7-12700 CPU. It can be observed that our model achieves a higher decoding speed, comparable to the conventional and INR baselines and considerably faster than all the neural video codecs. The PNVC decoder is also similar in size to INR models and much smaller than neural codecs. The well-roundness of our proposed model is illustrated by the Radar plot, as shown in Figure 1, in which decoding speed, encoding latency, BD-rate in PSNR and MS-SSIM of the selected, top-performing baselines are visualized. It is evident that the proposed PNVC yields the most-balanced and also the best overall rate-distortion-complexity trade-off. It is also noted that the encoding speed of PNVC is still relatively low (so as other INR-based codecs), when compared to autoencoder-based neural compression models (and VTM), which limits real-time deployment (e.g., video conferencing). However, the benefits of Low Delay and Random Access compatibility, low decoding complexity, and competitive rate quality performance still offer great potential to support widely used streaming scenarios, which does not require real-time encoding ability.

Ablation studies

To validate the contribution of each design component in the proposed PNVC model, we conducted ablations using the following model variants. The corresponding BD-rate results are summarized in Table 2.

| Version | Ablation option | UVG | MCL-JCV |

|---|---|---|---|

| (V1.1) | ✗ re-param. @ pre-train. | 6.45% | 6.34% |

| (V1.2) | ✗ re-param. @ overfit. | 3.43% | 3.25% |

| (V1.3) | ✗ re-param. @ fully-connected | 2.22% | 2.36% |

| (V1.4) | ✗ re-param. @ token-mix | 4.57% | 4.49% |

| (V2.1) | ✗ adaptive QP (LD) | 1.21% | 1.22% |

| (V2.2) | ✗ adaptive QP (RA) | 2.25% | 2.23% |

| (V2.3) | ✗ overfitting (LD) | 7.95% | 7.95% |

| (V2.4) | ✗ overfitting (RA) | 9.47% | 9.34% |

| (V3.1) | ✗ uneven channel grouping | 1.76% | 1.91% |

| (V3.2) | ✗ modulation ✓ concat. | 3.39% | 3.57% |

| (V4.1) | ✗ scaled hierarchical griding | 2.39% | 2.36% |

| (V4.2) | ✗ rescaling | 0.75% | 0.76% |

| (V4.3) | ✗ shifting | 0.89% | 0.91% |

Reparameterization.

We tested the effectiveness of reparameterization by removing it from pre-training (V1.1), performing online overfitting with the pre-trained model but disregarding the overparameterized basis (V1.2), removing the reparameterization for MLP (V1.3), and removing the degenerated token mixer component (V1.4).

Optimization.

We also validated the adaptive quality control parameter in both the LD and RA coding configurations by removing them from the optimization process (V2.1 and V2.2, respectively). The coding performance of pre-training only without overfitting is ablated in (V2.3).

Entropy model.

We adopted the original quadtree-chunking strategy instead of using uneven channel grouping (V3.1) and replaced temporal feature modulation with concatenation (V3.2) to verify the effectiveness of the improved entropy model.

Decoder.

We respectively replaced the adaptive rescaled hierarchical encoding (V4.1) with the original hierarchical griding scheme (overfitted for the same number of steps), the rescaling feature (V4.2) or the shifting feature (V4.3) to quantify their contributions to the overall performance gain.

It is noted that all the thirteen tested variants perform worse than PNVC on both datasets, as shown in Table 2, demonstrating the effectiveness of each design component.

Conclusion

We propose PNVC, an INR-based video coding framework which 1) combines autoencoder-based compression methods with implicit, coordinate-based overfitting and 2) integrates both offline pretraining and online overfitting. PNVC supports Low Delay and Random Access coding modes and achieves competitive rate-distortion-complexity performance with fast decoding speed. Through innovations including reparameterized visual tuning, hierarchical quality parameters, modulation-based entropy modeling with asymmetric channel partition, and scale-adaptive hierarchical griding, PNVC outperforms VTM 20.0 (LD) and HiNeRV, matches DCVC-DC in compression efficiency, and maintains a low decoding complexity of 200k MACs/pixel and a decoding speed of 20 FPS @1080P. More importantly, PNVC supports flexible latency configurations (LD and RA), which improves the practicality of INR-based video codecs. Future studies must address could exploit meta-learning (Lee et al. 2021) methods to speed up the encoding process.

Acknowledgments.

This work was supported by the UK Research and Innovation (UKRI) MyWorld Strength in Places Program (SIPF00006/1).

References

- Agustsson et al. (2020) Agustsson, E.; Minnen, D.; Johnston, N.; Balle, J.; Hwang, S. J.; and Toderici, G. 2020. Scale-space flow for end-to-end optimized video compression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8503–8512.

- Bai et al. (2023) Bai, Y.; Dong, C.; Wang, C.; and Yuan, C. 2023. PS-NeRV: Patch-wise stylized neural representations for videos. In IEEE International Conference on Image Processing, 41–45. IEEE.

- Ballé et al. (2018) Ballé, J.; Minnen, D.; Singh, S.; Hwang, S. J.; and Johnston, N. 2018. Variational image compression with a scale hyperprior. In International Conference on Learning Representations.

- Bjontegaard (2001) Bjontegaard, G. 2001. Calculation of average PSNR differences between RD-curves. ITU SG16 Doc. VCEG-M33.

- Bossen et al. (2023) Bossen, F.; Boyce, J.; Suehring, K.; Li, X.; and Seregin, V. 2023. VTM Common Test Conditions and Software Reference Configurations for SDR Video. In the JVET meeting, JVET-T2010.

- Bross et al. (2021) Bross, B.; Wang, Y.-K.; Ye, Y.; Liu, S.; Chen, J.; Sullivan, G. J.; and Ohm, J.-R. 2021. Overview of the versatile video coding (VVC) standard and its applications. IEEE Transactions on Circuits and Systems for Video Technology, 31(10): 3736–3764.

- Browne, Ye, and Kim (2023) Browne, A.; Ye, Y.; and Kim, S. H. 2023. Algorithm description for Versatile Video Coding and Test Model 19 (VTM 19). In the JVET meeting, JVET-AC2002. ITU-T and ISO/IEC.

- Chen et al. (2023) Chen, H.; Gwilliam, M.; Lim, S.-N.; and Shrivastava, A. 2023. HNeRV: A hybrid neural representation for videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10270–10279.

- Chen et al. (2021) Chen, H.; He, B.; Wang, H.; Ren, Y.; Lim, S. N.; and Shrivastava, A. 2021. NeRV: Neural representations for videos. Advances in Neural Information Processing Systems, 34: 21557–21568.

- Ding et al. (2021) Ding, X.; Xia, C.; Zhang, X.; Chu, X.; Han, J.; and Ding, G. 2021. Repmlp: Re-parameterizing convolutions into fully-connected layers for image recognition. arXiv preprint arXiv:2105.01883.

- Ding et al. (2022) Ding, X.; Zhang, X.; Han, J.; and Ding, G. 2022. Scaling up your kernels to 31x31: Revisiting large kernel design in cnns. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11963–11975.

- Dupont et al. (2021) Dupont, E.; Golinski, A.; Alizadeh, M.; Teh, Y. W.; and Doucet, A. 2021. COIN: Compression with Implicit Neural representations. In ICLR Workshop on Neural Compression: From Information Theory to Applications.

- Gomes, Azevedo, and Schroers (2023) Gomes, C.; Azevedo, R.; and Schroers, C. 2023. Video compression with entropy-constrained neural representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 18497–18506.

- He et al. (2023) He, B.; Yang, X.; Wang, H.; Wu, Z.; Chen, H.; Huang, S.; Ren, Y.; Lim, S.-N.; and Shrivastava, A. 2023. Towards scalable neural representation for diverse videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6132–6142.

- He et al. (2022) He, D.; Yang, Z.; Peng, W.; Ma, R.; Qin, H.; and Wang, Y. 2022. ELIC: Efficient learned image compression with unevenly grouped space-channel contextual adaptive coding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5718–5727.

- Ho et al. (2022) Ho, Y.-H.; Chang, C.-P.; Chen, P.-Y.; Gnutti, A.; and Peng, W.-H. 2022. CANF-VC: Conditional augmented normalizing flows for video compression. In European Conference on Computer Vision, 207–223. Springer.

- Hu, Lu, and Xu (2021) Hu, Z.; Lu, G.; and Xu, D. 2021. FVC: A new framework towards deep video compression in feature space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1502–1511.

- Hu and Xu (2023) Hu, Z.; and Xu, D. 2023. Complexity-guided slimmable decoder for efficient deep video compression. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 14358–14367.

- Huang and Belongie (2017) Huang, X.; and Belongie, S. 2017. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision, 1501–1510.

- Khani, Sivaraman, and Alizadeh (2021) Khani, M.; Sivaraman, V.; and Alizadeh, M. 2021. Efficient video compression via content-adaptive super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 4521–4530.

- Kim et al. (2024) Kim, H.; Bauer, M.; Theis, L.; Schwarz, J. R.; and Dupont, E. 2024. C3: High-performance and low-complexity neural compression from a single image or video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 9347–9358.

- Kwan et al. (2024a) Kwan, H. M.; Gao, G.; Zhang, F.; Gower, A.; and Bull, D. 2024a. HiNeRV: Video Compression with Hierarchical Encoding-based Neural Representation. Advances in Neural Information Processing Systems, 36: 72692–72704.

- Kwan et al. (2024b) Kwan, H. M.; Zhang, F.; Gower, A.; and Bull, D. 2024b. Immersive Video Compression using Implicit Neural Representations. In Picture Coding Symposium.

- Lee et al. (2021) Lee, J.; Tack, J.; Lee, N.; and Shin, J. 2021. Meta-learning sparse implicit neural representations. Advances in Neural Information Processing Systems, 34: 11769–11780.

- Lee et al. (2023) Lee, J. C.; Rho, D.; Ko, J. H.; and Park, E. 2023. FFNeRV: Flow-guided frame-wise neural representations for videos. In Proceedings of the 31st ACM International Conference on Multimedia, 7859–7870.

- Lee et al. (2024) Lee, J. C.; Rho, D.; Nam, S.; Ko, J. H.; and Park, E. 2024. Coordinate-aware modulation for neural fields. In The Twelfth International Conference on Learning Representations.

- Leguay et al. (2024) Leguay, T.; Ladune, T.; Philippe, P.; and Déforges, O. 2024. Cool-chic video: Learned video coding with 800 parameters. arXiv preprint arXiv:2402.03179.

- Li, Li, and Lu (2021) Li, J.; Li, B.; and Lu, Y. 2021. Deep contextual video compression. Advances in Neural Information Processing Systems, 34: 18114–18125.

- Li, Li, and Lu (2022) Li, J.; Li, B.; and Lu, Y. 2022. Hybrid spatial-temporal entropy modelling for neural video compression. In Proceedings of the 30th ACM International Conference on Multimedia, 1503–1511.

- Li, Li, and Lu (2023) Li, J.; Li, B.; and Lu, Y. 2023. Neural Video Compression with Diverse Contexts. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 22616–22626.

- Li, Li, and Lu (2024) Li, J.; Li, B.; and Lu, Y. 2024. Neural video compression with feature modulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 26099–26108.

- Li et al. (2022) Li, Z.; Wang, M.; Pi, H.; Xu, K.; Mei, J.; and Liu, Y. 2022. E-NeRV: Expedite neural video representation with disentangled spatial-temporal context. In European Conference on Computer Vision, 267–284. Springer.

- Lin et al. (2024) Lin, S.; Lyu, P.; Liu, D.; Tang, T.; Liang, X.; Song, A.; and Chang, X. 2024. MLP Can Be A Good Transformer Learner. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 19489–19498.

- Lu et al. (2020) Lu, G.; Cai, C.; Zhang, X.; Chen, L.; Ouyang, W.; Xu, D.; and Gao, Z. 2020. Content adaptive and error propagation aware deep video compression. In European Conference on Computer Vision, 456–472.

- Lu et al. (2019) Lu, G.; Ouyang, W.; Xu, D.; Zhang, X.; Cai, C.; and Gao, Z. 2019. DVC: An End-To-End Deep Video Compression Framework. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11006–11015.

- Lu et al. (2024) Lu, M.; Duan, Z.; Zhu, F.; and Ma, Z. 2024. Deep Hierarchical Video Compression. In Proceedings of the AAAI Conference on Artificial Intelligence, 8859–8867.

- Ma, Zhang, and Bull (2020) Ma, D.; Zhang, F.; and Bull, D. R. 2020. MFRNet: a new CNN architecture for post-processing and in-loop filtering. IEEE Journal of Selected Topics in Signal Processing, 15(2): 378–387.

- Mentzer et al. (2022) Mentzer, F.; Toderici, G. D.; Minnen, D.; Caelles, S.; Hwang, S. J.; Lucic, M.; and Agustsson, E. 2022. VCT: A Video Compression Transformer. Advances in Neural Information Processing Systems, 35: 13091–13103.

- Mercat, Viitanen, and Vanne (2020) Mercat, A.; Viitanen, M.; and Vanne, J. 2020. UVG dataset: 50/120fps 4K sequences for video codec analysis and development. In Proceedings of the 11th ACM Multimedia Systems Conference, 297–302.

- Minnen and Singh (2020) Minnen, D.; and Singh, S. 2020. Channel-wise autoregressive entropy models for learned image compression. In 2020 IEEE International Conference on Image Processing, 3339–3343. IEEE.

- Peng et al. (2024) Peng, T.; Gao, G.; Sun, H.; Zhang, F.; and Bull, D. 2024. Accelerating Learnt Video Codecs with Gradient Decay and Layer-Wise Distillation. In Picture Coding Symposium.

- Ranjan and Black (2017) Ranjan, A.; and Black, M. J. 2017. Optical flow estimation using a spatial pyramid network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4161–4170.

- Ruan et al. (2024) Ruan, H.; Shao, Y.; Yang, Q.; Zhao, L.; and Niyato, D. 2024. Point Cloud Compression with Implicit Neural Representations: A Unified Framework. arXiv preprint arXiv:2405.11493.

- Sharman, Sjoberg, and Sullivan (2022) Sharman, C. R. K.; Sjoberg, R.; and Sullivan, G. 2022. High Efficiency Video Coding (HEVC) Test Model 16 (HM 16) Improved Encoder Description Update 16. In the JVET meeting, JVET-Y1002. ITU-T and ISO/IEC.

- Shi, Zhou, and Gu (2024) Shi, K.; Zhou, X.; and Gu, S. 2024. Improved Implicity Neural Representation with Fourier Bases Reparameterized Training. arXiv preprint arXiv:2401.07402.

- Sitzmann et al. (2020) Sitzmann, V.; Martel, J.; Bergman, A.; Lindell, D.; and Wetzstein, G. 2020. Implicit neural representations with periodic activation functions. Advances in neural information processing systems, 33: 7462–7473.

- Strümpler et al. (2022) Strümpler, Y.; Postels, J.; Yang, R.; Gool, L. V.; and Tombari, F. 2022. Implicit neural representations for image compression. In European Conference on Computer Vision, 74–91.

- Sullivan et al. (2012) Sullivan, G. J.; Ohm, J.; Han, W.; and Wiegand, T. 2012. Overview of the High Efficiency Video Coding (HEVC) Standard. IEEE Transactions on Circuits and Systems for Video Technology, 22(12): 1649–1668.

- van Rozendaal, Huijben, and Cohen (2021) van Rozendaal, T.; Huijben, I.; and Cohen, T. S. 2021. Overfitting for fun and profit: Instance-adaptive data compression. In International Conference on Learning Representations.

- Wang et al. (2023) Wang, G.-H.; Li, J.; Li, B.; and Lu, Y. 2023. EVC: Towards Real-Time Neural Image Compression with Mask Decay. In International Conference on Learning Representations.

- Wang et al. (2016) Wang, H.; Gan, W.; Hu, S.; Lin, J. Y.; Jin, L.; Song, L.; Wang, P.; Katsavounidis, I.; Aaron, A.; and Kuo, C.-C. J. 2016. MCL-JCV: a JND-based H. 264/AVC video quality assessment dataset. In IEEE international conference on image processing, 1509–1513.

- Wiegand et al. (2003) Wiegand, T.; Sullivan, G. J.; Bjøntegaard, G.; and Luthra, A. 2003. Overview of the H.264/AVC Video Coding Standard. IEEE Transactions on Circuits and Systems for Video Technology, 13(7): 560–576.

- Xiang, Tian, and Zhang (2022) Xiang, J.; Tian, K.; and Zhang, J. 2022. Mimt: Masked image modeling transformer for video compression. In International Conference on Learning Representations.

- Xue et al. (2019) Xue, T.; Chen, B.; Wu, J.; Wei, D.; and Freeman, W. T. 2019. Video enhancement with task-oriented flow. International Journal of Computer Vision, 127: 1106–1125.

- Yan et al. (2018) Yan, N.; Liu, D.; Li, H.; Li, B.; Li, L.; and Wu, F. 2018. Convolutional neural network-based fractional-pixel motion compensation. IEEE Transactions on Circuits and Systems for Video Technology, 29(3): 840–853.

- Yang, Oh, and Park (2024) Yang, H.; Oh, S.; and Park, E. 2024. Parameter-Efficient Instance-Adaptive Neural Video Compression. arXiv preprint arXiv:2405.08530.

- Zhang et al. (2021a) Zhang, F.; Ma, D.; Feng, C.; and Bull, D. R. 2021a. Video compression with CNN-based postprocessing. IEEE MultiMedia, 28(4): 74–83.

- Zhang et al. (2024) Zhang, X.; Yang, R.; He, D.; Ge, X.; Xu, T.; Wang, Y.; Qin, H.; and Zhang, J. 2024. Boosting Neural Representations for Videos with a Conditional Decoder. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2556–2566.

- Zhang et al. (2021b) Zhang, Y.; van Rozendaal, T.; Brehmer, J.; Nagel, M.; and Cohen, T. 2021b. Implicit Neural Video Compression. In ICLR Workshop on Deep Generative Models for Highly Structured Data.