Policy Mirror Descent for Regularized Reinforcement Learning:

A Generalized Framework with Linear Convergence

Abstract

Policy optimization, which finds the desired policy by maximizing value functions via optimization techniques, lies at the heart of reinforcement learning (RL). In addition to value maximization, other practical considerations arise as well, including the need of encouraging exploration, and that of ensuring certain structural properties of the learned policy due to safety, resource and operational constraints. These can often be accounted for via regularized RL, which augments the target value function with a structure-promoting regularizer.

Focusing on discounted infinite-horizon Markov decision processes, we propose a generalized policy mirror descent (GPMD) algorithm for solving regularized RL. As a generalization of policy mirror descent (Lan,, 2022), our algorithm accommodates a general class of convex regularizers and promotes the use of Bregman divergence in cognizant of the regularizer in use. We demonstrate that our algorithm converges linearly to the global solution over an entire range of learning rates, in a dimension-free fashion, even when the regularizer lacks strong convexity and smoothness. In addition, this linear convergence feature is provably stable in the face of inexact policy evaluation and imperfect policy updates. Numerical experiments are provided to corroborate the appealing performance of GPMD.

Keywords: policy mirror descent, Bregman divergence, regularization, nonsmooth, policy optimization

1 Introduction

Policy optimization lies at the heart of recent successes of reinforcement learning (RL) (Mnih et al.,, 2015). In its basic form, the optimal policy of interest, or a suitably parameterized version, is learned by attempting to maximize the value function in a Markov decision processes (MDP). For the most part, the maximization step is carried out by means of first-order optimization algorithms amenable to large-scale applications, whose foundations were set forth in the early works of Williams, (1992); Sutton et al., (2000). A partial list of widely adopted variants in modern practice includes policy gradient (PG) methods (Sutton et al.,, 2000), natural policy gradient (NPG) methods (Kakade,, 2002), TRPO (Schulman et al.,, 2015), PPO (Schulman et al.,, 2017), soft actor-critic methods (Haarnoja et al.,, 2018), to name just a few. In comparison with model-based and value-based approaches, this family of policy-based algorithms offers a remarkably flexible framework that accommodates both continuous and discrete action spaces, and lends itself well to the incorporation of powerful function approximation schemes like neural networks. In stark contrast to its practical success, however, theoretical understanding of policy optimization remains severely limited even for the tabular case, largely owing to the ubiquitous nonconvexity issue underlying the objective function.

1.1 The role of regularization

In practice, there are often competing objectives and additional constraints that the agent has to deal with in conjunction with maximizing values, which motivate the studies of regularization techniques in RL. In what follows, we isolate a few representative examples.

-

•

Promoting exploration. In the face of large problem dimensions and complex dynamics, it is often desirable to maintain a suitable degree of randomness in the policy iterates, in order to encourage exploration and discourage premature convergence to sub-optimal policies. A popular strategy of this kind is to enforce entropy regularization (Williams and Peng,, 1991), which penalizes policies that are not sufficiently stochastic. Along similar lines, the Tsallis entropy regularization (Chow et al., 2018b, ; Lee et al.,, 2018) further promotes sparsity of the learned policy while encouraging exploration, ensuring that the resulting policy does not assign non-negligible probabilities to too many sub-optimal actions.

-

•

Safe RL. In a variety of application scenarios such as industrial robot arms and self-driving vehicles, the agents are required to operate safely both to themselves and the surroundings (Amodei et al.,, 2016; Moldovan and Abbeel,, 2012); for example, certain actions might be strictly forbidden in some states. One way to incorporate such prescribed operational constraints is through adding a regularizer (e.g., a properly chosen log barrier or indicator function tailored to the constraints) to explicitly account for the constraints.

-

•

Cost-sensitive RL. In reality, different actions of an agent might incur drastically different costs even for the same state. This motivates the design of new objective functions that properly trade off the cumulative rewards against the accumulated cost, which often take the form of certain regularized value functions.

Viewed in this light, it is of imminent value to develop a unified framework towards understanding the capability and limitations of regularized policy optimization. While a recent line of works (Agarwal et al.,, 2020; Mei et al., 2020b, ; Cen et al., 2022b, ) have looked into specific types of regularization techniques such as entropy regularization, existing convergence theory remains highly inadequate when it comes to a more general family of regularizers.

1.2 Main contributions

The current paper focuses on policy optimization for regularized RL in a -discounted infinite horizon Markov decision process (MDP) with state space , action space , and reward function . The goal is to find an optimal policy that maximizes a regularized value function. Informally speaking, the regularized value function associated with a given policy takes the following form:

where denotes the original (unregularized) value function, is the regularization parameter, denotes a convex regularizer employed to regularize the policy in state , and the expectation is taken over certain marginal state distribution w.r.t. the MDP (to be made precise in Section 2.1). It is noteworthy that this paper does not require the regularizer to be either strongly convex or smooth.

In order to maximize the regularized value function (9b), Lan, (2022) exhibited a seminal algorithm called Policy Mirror Descent (PMD), which can be viewed as an adaptation of the mirror descent algorithm (Nemirovsky and Yudin,, 1983; Beck and Teboulle,, 2003) to the realm of policy optimization. In particular, PMD subsumes the natural policy gradient (NPG) method (Kakade,, 2002) as a special case. To further generalize PMD (Lan,, 2022), we propose an algorithm called Generalized Policy Mirror Descent (GPMD). In each iteration, the policy is updated for each state in parallel via a mirror-descent style update rule. In sharp contrast to Lan, (2022) that considered a generic Bregman divergence, our algorithm selects the Bregman divergence adaptively in cognizant of the regularizer, which leads to complementary perspectives and insights. Several important features and theoretical appeal of GPMD are summarized as follows.

-

•

GPMD substantially broadens the range of (provably effective) algorithmic choices for regularized RL, and subsumes several well-known algorithms as special cases. For example, it reduces to regularized policy iteration (Geist et al.,, 2019) when the learning rate tends to infinity, and subsumes entropy-regularized NPG methods as special cases if we take the Bregman divergence to be the Kullback-Leibler (KL) divergence (Cen et al., 2022b, ).

-

•

Assuming exact policy evaluation and perfect policy update in each iteration, GPMD converges linearly—in a dimension-free fashion— over the entire range of the learning rate . More precisely, it converges to an -optimal regularized Q-function in no more than an order of

iterations (up to some logarithmic factor). Encouragingly, this appealing feature is valid for a broad family of convex and possibly nonsmooth regularizers.

-

•

The intriguing convergence guarantees are robust in the face of inexact policy evaluation and imperfect policy updates, namely, the algorithm is guaranteed to converge linearly at the same rate until an error floor is hit. See Section 3.2 for details.

-

•

Numerical experiments are provided in Section 5 to demonstrate the practical applicability and appealing performance of the proposed GPMD algorithm.

Finally, we find it helpful to briefly compare the above findings with prior works. As soon as the learning rate exceeds , the iteration complexity of our algorithm is at most on the order of , thus matching that of regularized policy iteration (Geist et al.,, 2019). In comparison to Lan, (2022), our work sets forth a different framework to analyze mirror-descent type algorithms for regularized policy optimization, generalizing and refining the approach in Cen et al., 2022b far beyond entropy regularization. When constant learning rates are employed, the linear convergence of PMD (Lan,, 2022) critically requires the regularizer to be strongly convex, with only sublinear convergence theory established for convex regularizers. In contrast, we establish the linear convergence of GPMD under constant learning rates even in the absence of strong convexity. Furthermore, for the special case of entropy regularization, the stability analysis of GPMD also significantly improves over the prior art in Cen et al., 2022b , preventing the error floor from blowing up when the learning rate approaches zero, as well as incorporating the impact of optimization error that was previously uncaptured. More detailed comparisons with Lan, (2022) and Cen et al., 2022b can be found in Section 3.

1.3 Related works

Before embarking on our algorithmic and theoretic developments, we briefly review a small sample of other related works.

Global convergence of policy gradient methods.

Recent years have witnessed a surge of activities towards understanding the global convergence properties of policy gradient methods and their variants for both continuous and discrete RL problems, examples including Fazel et al., (2018); Bhandari and Russo, (2019); Agarwal et al., (2020); Zhang et al., 2021b ; Wang et al., (2019); Mei et al., 2020a ; Bhandari and Russo, (2020); Khodadadian et al., (2021); Liu et al., (2020); Mei et al., 2020a ; Agazzi and Lu, (2020); Xu et al., (2019); Wang et al., (2019); Cen et al., 2022b ; Mei et al., (2021); Liu et al., (2019); Wang et al., (2021); Zhang et al., 2020a ; Zhang et al., 2021a ; Zhang et al., 2020b ; Shani et al., (2019), among other things. Neu et al., (2017) provided the first interpretation of NPG methods as mirror descent (Nemirovsky and Yudin,, 1983), thereby enabling the adaptation of techniques for analyzing mirror descent to the studies of NPG-type algorithms such as TRPO (Shani et al.,, 2019; Tomar et al.,, 2020). It has been shown that the NPG method converges sub-linearly for unregularized MDPs with a fixed learning rate (Agarwal et al.,, 2020), and converges linearly if the learning rate is set adaptively (Khodadadian et al.,, 2021), via exact line search (Bhandari and Russo,, 2020), or following a geometrically increasing schedule (Xiao,, 2022). The global linear convergence of NPG holds more generally for an arbitrary fixed learning rate when entropy regularization is enforced (Cen et al., 2022b, ). Noteworthily, Li et al., (2023) established a lower bound indicating that softmax PG methods can take an exponential time—in the size of the state space—to converge, while the convergence rates of NPG-type methods are almost independent of the problem dimension. In addition, another line of recent works (Abbasi-Yadkori et al.,, 2019; Hao et al.,, 2021; Lazic et al.,, 2021) established regret bounds for approximate NPG methods—termed as KL-regularized approximate policy iteration therein—for infinite-horizen undiscounted MDPs, which are beyond the scope of the current paper.

Regularization in RL.

Regularization has been suggested to the RL literature either through the lens of optimization (Dai et al.,, 2018; Agarwal et al.,, 2020), or through the lens of dynamic programming (Geist et al.,, 2019; Vieillard et al.,, 2020). Our work is clearly an instance of the former type. Several recent results in the literature merit particular attention: Agarwal et al., (2020) demonstrated sublinear convergence guarantees for PG methods in the presence of relative entropy regularization, Mei et al., 2020b established linear convergence of entropy-regularized PG methods, whereas Cen et al., 2022b derived an almost dimension-free linear convergence theory for NPG methods with entropy regularization. Most of the existing literature focused on the entropy regularization or KL-type regularization, and the studies of general regularizers had been quite limited until the recent work Lan, (2022). The regularized MDP problems are also closely related to the studies of constrained MDPs, as both types of problems can be employed to model/promote constraint satisfaction in RL, as recently investigated in, e.g., Chow et al., 2018a ; Efroni et al., (2020); Ding et al., (2021); Yu et al., (2019); Xu et al., (2020). Note, however, that it is difficult to directly compare our algorithm with these methods, due to drastically different formulations and settings.

1.4 Notation

Let us introduce several notation that will be adopted throughout. For any set , we denote by the cardinality of a set , and let indicate the probability simplex over the set . For any convex and differentiable function , the Bregman divergence generated by is defined as

| (1) |

For any convex (but not necessarily differentiable) function , we denote by the subdifferential of . Given two probability distributions and over , the KL divergence from to is defined as . For any vectors and , the notation (resp. ) means that () for every . We shall also use (resp. ) to denote the all-one (resp. all-zero) vector whenever it is clear from the context.

2 Model and algorithms

2.1 Problem settings

Markov decision process (MDP).

The focus of this paper is a discounted infinite-horizon Markov decision process, as represented by (Bertsekas,, 2017). Here, is the state space, is the action space, is the discount factor, is the probability transition matrix (so that is the transition probability from state upon execution of action ), whereas is the reward function (so that indicates the immediate reward received in state after action is executed). Here, we focus on finite-state and finite-action scenarios, meaning that both and are assumed to be finite. A policy specifies a possibly randomized action selection rule, namely, represents the action selection probability in state .

For any policy , we define the associated value function as follows

| (2) |

which can be viewed as the utility function we wish to maximize. Here, the expectation is taken over the randomness of the MDP trajectory induced by policy . Similarly, when the initial action is fixed, we can define the action-value function (or Q-function) as follows

| (3) |

As a well-known fact, the policy gradient of (w.r.t. the policy ) admits the following closed-form expression (Sutton et al., (2000))

| (4) |

Here, is the so-called discounted state visitation distribution defined as follows

| (5) |

where denotes the probability of when the MDP trajectory is generated under policy given the initial state .

Furthermore, the optimal value function and the optimal Q-function are defined and denoted by

| (6) |

It is well known that there exists at least one optimal policy, denoted by , that simultaneously maximizes the value function and the Q-function for all state-action pairs (Agarwal et al.,, 2019).

Regularized MDP.

In practice, the agent is often asked to design policies that possess certain structural properties in order to be cognizant of system constraints such as safety and operational constraints, as well as encourage exploration during the optimization/learning stage. A natural strategy to achieve these is to resort to the following regularized value function w.r.t. a given policy (Neu et al.,, 2017; Mei et al., 2020b, ; Cen et al., 2022b, ; Lan,, 2022):

| (7) |

where stands for a convex and possibly nonsmooth regularizer for state , denotes the regularization parameter, and is defined in (5). Here, for technical convenience, we assume throughout that () is well-defined over an “-neighborhood” of the probability simplex defined as follows

where can be an arbitrary constant. For instance, entropy regularization adopts the choice for all and , which coincides with the negative Shannon entropy of a probability distribution. Similar, a KL regularization adopts the choice , which penalizes the distribution that deviates from the reference . As another example, a weighted regularization adopts the choice for all and , where is the cost of taking action at state , and the regularizer captures the expected cost of the policy in state . Throughout this paper, we impose the following assumption.

Assumption 1.

Consider an arbitrarily small constant . For for any , suppose that is convex and

| (8) |

Following the convention in prior literature (e.g., Mei et al., 2020b ), we also define the corresponding regularized Q-function as follows:

| (9a) | |||

| As can be straightforwardly verified, one can also express in terms of as | |||

| (9b) | |||

The optimal regularized value function and the corresponding optimal policy are defined respectively as follows:

| (10) |

It is worth noting that Puterman, (2014) asserts the existence of an optimal policy that achieves (10) simultaneously for all . Correspondingly, we shall also define the resulting optimal regularized Q-function as

| (11) |

2.2 Algorithm: generalized policy mirror descent

Motivated by PMD (Lan,, 2022), we put forward a generalization of PMD that selects the Bregman divergence in cognizant of the regularizer in use. A thorough comparison with Lan, (2022) will be provided after introducing our generalized PMD algorithm.

Review: mirror descent (MD) for the composite model.

To better elucidate our algorithmic idea, let us first briefly review the design of classical mirror descent—originally proposed by Nemirovsky and Yudin, (1983)—in the optimization literature. Consider the following composite model:

where the objective function consists of two components. The first component is assumed to be differentiable, while the second component can be more general and is commonly employed to model some sort of regularizers. To solve this composite problem, one variant of mirror descent adopts the following update rule (see also Beck, (2017); Duchi et al., (2010)):

| (12) |

where is the learning rate or step size, and is the Bregman divergence defined in (1). Note that the first term within the curly brackets of (12) can be safely discarded as it is a constant given . In words, the above update rule approximates via its first-order Taylor expansion at the point , employs the Bregman divergence to monitor the difference between the new iterate and the current iterate , and attempts to optimize such (properly monitored) approximation instead. While one can further generalize the Bregman divergence to for a different generator , we shall restrict attention to the case with in the current paper.

The proposed algorithm.

We are now ready to present the algorithm we come up with, which is an extension of the PMD algorithm (Lan,, 2022). For notational simplicity, we shall write

| (13) |

throughout the paper, where denotes our policy estimate in the -th iteration.

To begin with, suppose for simplicity that is differentiable everywhere. In the -th iteration, a natural MD scheme that comes into mind for solving (7)—namely, for a given initial state —is the following update rule:

| (14) |

for every state , which is a direct application of (12) to our setting. Here, we start with a learning rate , and obtain simplification by replacing with . Notably, the update strategy (14) is invariant to the initial state , akin to natural policy gradient methods (Agarwal et al.,, 2020).

This update rule is well-defined for, say, the case when is the negative entropy, since the algorithm guarantees all the time and hence is always differentiable w.r.t. the -th iterate (see Cen et al., 2022b ). In general, however, it is possible to encounter situations when the gradient of does not exist on the boundary (e.g., when represents a certain indicator function). To cope with such cases, we resort to a generalized version of Bregman divergence (e.g., Kiwiel, (1997); Lan et al., (2011); Lan and Zhou, (2018)). To be specific, we attempt to replace the usual Bregman divergence by the following metric

| (15) |

where can be any vector falling within the subdifferential . Here, the non-negativity condition in (15) follows directly from the definition of the subgradient for any convex function. The constraint on can be further relaxed by exploiting the requirement . In fact, for any vector (with some constant and the all-one vector), one can readily see that

| (16) |

where the last line is valid since . As a result, everything boils down to identifying a vector that falls within upon global shift.

Towards this, we propose the following iterative rule for designing such a sequence of vectors as surrogates for the subgradient of :

| (17a) | ||||

| (17b) | ||||

where is updated as a convex combination of the previous and , where more emphasis is put on when the learning rate is large. As asserted by the following lemma, the above vectors we construct satisfy the desired property, i.e., lying within the subdifferential of under suitable global shifts. It is worth mentioning that these global shifts only serve as an aid to better understand the construction, but are not required during the algorithm updates.

Lemma 1.

For all and every , there exists a quantity such that

| (18) |

In addition, for every , there exists a quantity such that

| (19) |

Proof.

See Appendix A.1. ∎

Thus far, we have presented all crucial ingredients of our algorithm. The whole procedure is summarized in Algorithm 1, and will be referred to as Generalized Policy Mirror Descent (GPMD) throughout the paper. Interestingly, several well-known algorithms can be recovered as special cases of GPMD:

-

•

When the Bregman divergence is taken as the KL divergence, GPMD reduces to the well-renowned NPG algorithm (Kakade,, 2002) when (no regularization), and to the NPG algorithm with entropy regularization analyzed in (Cen et al., 2022b, ) when is taken as the negative Shannon entropy.

-

•

When (no divergence), GPMD reduces to regularized policy iteration in Geist et al., (2019); in particular, GPMD reduces to the standard policy iteration algorithm if in addition is also .

| (20a) | |||

| where | |||

| (20b) | |||

| (20c) |

Comparison with PMD (Lan,, 2022).

Before continuing, let us take a moment to point out the key differences between our algorithm GPMD and the PMD algorithm proposed in Lan, (2022) in terms of algorithm designs. Although the primary exposition of PMD in Lan, (2022) fixes the Bregman divergence as the KL divergence, the algorithm also works in the presence of a generic Bregman divergence, whose relationship with the regularizer is, however, unspecified. Furthermore, GPMD adaptively sets this term to be the Bregman divergence generated by the regularizer in use, together with a carefully designed recursive update rule (cf. (17)) to compute surrogates for the subgradient of to facilitate implementation. Encouragingly, this specific choice leads to a tailored performance analysis of GPMD, which was not present in and instead complementary with that of PMD (Lan,, 2022). In truth, our theory offers linear convergence guarantees for more general scenarios by adapting to the geometry of the regularizer ; details to follow momentarily.

3 Main results

This section presents our convergence guarantees for the GPMD method presented in Algorithm 1. We shall start with the idealized case assuming that the update rule can be precisely implemented, and then discuss how to generalize it to the scenario with imperfect policy evaluation.

3.1 Convergence of exact GPMD

To start with, let us pin down the convergence behavior of GPMD, assuming that accurate evaluation of the policy is available and the subproblem (20a) can be solved perfectly. Here and below, we shall refer to the algorithm in this case as exact GPMD. Encouragingly, exact GPMD provably achieves global linear convergence from an arbitrary initialization, as asserted by the following theorem.

Theorem 1 (Exact GPMD).

Suppose that Assumption 1 holds. Consider any learning rate , and set . Then the iterates of Algorithm 1 satisfy

| (21a) | ||||

| (21b) | ||||

for all , where .

In addition, if is -strongly convex w.r.t. the norm for some , then one further has

| (22) |

Our theorem confirms the fast global convergence of the GPMD algorithm, in terms of both the resulting regularized Q-value (if is convex) and the policy estimate (if is strongly convex). In summary, it takes GPMD no more than

| (23a) | |||

| iterations to converge to an -optimal regularized Q-function (in the sense), or | |||

| (23b) | |||

iterations to yield an -approximation (w.r.t. the norm error) of . The iteration complexity (23) is nearly dimension-free—namely, depending at most logarithmically on the dimension of the state-action space —making it scalable to large-dimensional problems.

Comparison with Lan, (2022, Theorems 1-3).

To make clear our contributions, it is helpful to compare Theorem 1 with the theory for the state-of-the-art algorithm PMD in Lan, (2022).

-

•

Linear convergence for convex regularizers under constant learning rates. Suppose that constant learning rates are adopted for both GPMD and PMD. Our finding reveals that GPMD enjoys global linear convergence—in terms of both and —even when the regularizer is only convex but not strongly convex. In contrast, Lan, (2022, Theorem 2) provided only sublinear convergence guarantees (with an iteration complexity proportional to ) for the case with convex regularizers, provided that constant learning rates are adopted.222In fact, Lan, (2022, Theorem 3) suggests using a vanishing strongly convex regularization, as well as a corresponding increasing sequence of learning rates, in order to enable linear convergence for non-strongly-convex regularizers.

-

•

A full range of learning rates. Theorem 1 reveals linear convergence of GPMD for a full range of learning rates, namely, our result is applicable to any . In comparison, linear convergence was established in Lan, (2022) only when the learning rates are sufficiently large and when is -strongly convex w.r.t. the KL divergence. Consequently, the linear convergence results in Lan, (2022) do not extend to several widely used regularizers such as negative Tsallis entropy and log-barrier functions (even after scaling), which are, in contrast, covered by our theory. It is worth noting that the case with small-to-medium learning rates is often more challenging to cope with in theory, given that its dynamics could differ drastically from that of regularized policy iteration.

-

•

Further comparison of rates under large learning rates. (Lan,, 2022, Theorem 1) achieves a contraction rate of when the regularizer is strongly convex and the step size satisfies , while the contraction rate of GPMD is under the full range of the step size, which is slower but approaches the contraction rate of PMD as goes to infinity. Therefore, in the limit , both GPMD and PMD achieve the contraction rate . As soon as , their iteration complexities are on the same order.

Remark 1.

While our primary focus is to solve the regularized RL problem, one might be tempted to apply GPMD as a means to solve unregularized RL; for instance, one might run GPMD with the regularization parameter diminishing gradually in order to approach a policy with the desired accuracy. We leave the details to Appendix C.

3.2 Convergence of approximate GPMD

In reality, however, it is often the case that GPMD cannot be implemented in an exact manner, either because perfect policy evaluation is unavailable or because the subproblem (20a) cannot be solved exactly. To accommodate these practical considerations, this subsection generalizes our previous result by permitting inexact policy evaluation and non-zero optimization error in solving (20a). The following assumptions make precise this imperfect scenario.

Assumption 2 (Policy evaluation error).

Suppose for any , we have access to an estimate obeying

| (24) |

Assumption 3 (Subproblem optimization error).

Consider any policy and any vector . Define

where is defined in (15). Suppose there exists an oracle , which is capable of returning such that

| (25) |

Note that the oracle in Assumption 3 can be implemented efficiently in practice via various first-order methods (Beck,, 2017). Under Assumptions 2 and 3, we can modify Algorithm 1 by replacing with the estimate , and invoking the oracle to solve the subproblem (20a) approximately. The whole procedure, which we shall refer to as approximate GPMD, is summarized in Algorithm 2.

| (27) |

The following theorem uncovers that approximate GPMD converges linearly—at the same rate as exact GPMD—before an error floor is hit.

Theorem 2 (Approximate GPMD).

Suppose that Assumptions 1, 2 and 3 hold. Consider any learning rate . Then the iterates of Algorithm 2 satisfy

| (28a) | ||||

| (28b) | ||||

where , is defined in Theorem 1, and

In addition, if is -strongly convex w.r.t. the norm for any , then we can further obtain

| (29a) | ||||

| (29b) | ||||

| (29c) | ||||

where

| (30) |

In the special case where and , Algorithm 2 reduces to regularized policy iteration, and the convergence result can be simplified as follows

In particular, when is taken as the negative entropy, our result strengthens the prior result established in Cen et al., 2022b for approximate entropy-regularized NPG method with over a wide range of learning rates. Specifically, the error bound in Cen et al., 2022b reads , where the second term in the bracket scales inversely with respect to and therefore grows unboundedly as approaches . In contrast, (29) and (30) suggest a bound , which is independent of the learning rate in use and thus prevents the error bound from blowing up when the learning rate approaches . Indeed, our result improves over the prior art Cen et al., 2022b whenever .

Remark 2 (Sample complexities).

One might naturally ask how many samples are sufficient to learn an -optimal regularized Q-function, by leveraging sample-based policy evaluation algorithms in GPMD. Notice that it is straightforward to consider an expected version of Assumption 2 as following:

where the expectation is with respect to the randomness in policy evaluation, then the convergence results in Theorem 2 apply to and instead. This randomized version makes it immediately amenable to combine with, e.g., the rollout-based policy evaluators in Lan, (2022, Section 5.1) to obtain (possibly crude) bounds on the sample complexity. We omit these straightforward developments.

Roughly speaking, approximate GPMD is guaranteed to converge linearly to an error bound that scales linearly in both the policy evaluation error and the optimization error , thus confirming the stability of our algorithm vis-à-vis imperfect implementation of the algorithm. As before, our theory improves upon prior works by demonstrating linear convergence for a full range of learning rates even in the absence of strong convexity and smoothness.

4 Analysis for exact GPMD (Theorem 1)

In this section, we present the analysis for our main result in Theorem 1, which follows a different framework from Lan, (2022). Here and throughout, we shall often employ the following shorthand notation when it is clear from the context:

| (33) |

in addition to those already defined in (13).

4.1 Preparation: basic facts

In this subsection, we single out a few basic results that underlie the proof of our main theorems.

Performance improvement.

To begin with, we demonstrate that GPMD enjoys a sort of monotonic improvements concerning the updates of both the value function and the Q-function, as stated in the following lemma. This lemma can be viewed as a generalization of the well-established policy improvement lemma in the analysis of NPG (Agarwal et al.,, 2020; Cen et al., 2022b, ) as well as PMD (Lan,, 2022).

Lemma 2 (Pointwise monotonicity).

For any and any , Algorithm 1 achieves

| (34) |

Proof.

See Appendix A.2. ∎

Interestingly, the above monotonicity holds simultaneously for all state-action pairs, and hence can be understood as a kind of pointwise monotonicity.

Generalized Bellman operator.

Another key ingredient of our proof lies in the use of a generalized Bellman operator associated with the regularizer . Specifically, for any state-action pair and any vector , we define

| (35) |

It is worth noting that this definition shares similarity with the regularized Bellman operator proposed in Geist et al., (2019), where the operator defined there is targeted at , while ours is defined w.r.t. .

The importance of this generalized Bellman operator is two-fold: it enjoys a desired contraction property, and its fixed point corresponds to the optimal regularized Q-function. These are generalizations of the properties for the classical Bellman operator, and are formally stated in the following lemma. The proof is deferred to Appendix A.3.

Lemma 3 (Properties of the generalized Bellman operator).

For any , the operator defined in (35) satisfies the following properties:

-

•

is a contraction operator w.r.t. the norm, namely, for any , one has

(36) -

•

The optimal regularized -function is a fixed point of , that is,

(37)

4.2 Proof of Theorem 1

Inspired by Cen et al., 2022b , our proof consists of (i) characterizing the dynamics of errors and establishing a connection to a useful linear system with two variables, and (ii) analyzing the dynamics of this linear system directly. In what follows, we elaborate on each of these steps.

Step 1: error contraction and its connection to a linear system.

With the assistance of the above preparations, we are ready to elucidate how to characterize the convergence behavior of . Recalling the update rule of (cf. (20c)), we can deduce that

with , thus indicating that

| (38) |

Interestingly, there exists an intimate connection between and that allows us to bound the former term by the latter. This is stated in the following lemma, with the proof postponed to Appendix A.4.

Lemma 4.

Set . The iterates of Algorithm 1 satisfy

| (39) |

Step 2: analyzing the dynamics of the linear system (40).

Before proceeding, we note that a linear system similar to (40) has been analyzed in Cen et al., 2022b (, Section 4.2.2). We intend to apply the following properties that have been derived therein:

| (42a) | ||||

| (42b) | ||||

| (42c) | ||||

Substituting (42c) and (42b) into (42a) and rearranging terms, we reach

| (43) |

which taken together with the definition of gives

| (44a) | ||||

| (44b) | ||||

Step 3: controlling and .

5 Numerical experiments

In this section, we provide some simple numerical experiments to corroborate the effectiveness of the GPMD algorithm.

5.1 Tsallis entropy

While Shannon entropy is a popular choice of regularization, the discrepancy between the value function of the regularized MDP and the unregularized counterpart scales as . In addition, the optimal policy under Shannon entropy regularization assigns positive mass to all actions and is hence non-sparse. To promote sparsity and obtain better control of the bias induced by regularization, Lee et al., (2018, 2019) proposed to employ the Tsallis entropy (Tsallis,, 1988) as an alternative. To be precise, for any vector , the associated Tsallis entropy is defined as

where is often referred to as the entropic-index. When , the Tsallis entropy reduces to the Shannon entropy.

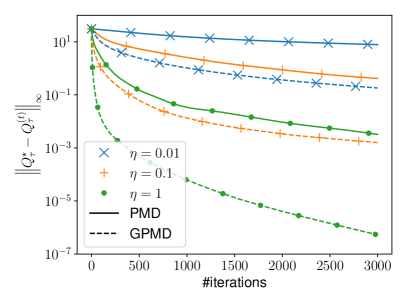

We now evaluate numerically the performance of PMD and GPMD when applied to a randomly generated MDP with and . Here, the transition probability kernel and the reward function are generated as follows. For each state-action pair , we randomly select states to form a set , and set if , and otherwise. The reward function is generated by , where and are independent uniform random variables over . We shall set the regularizer as for all with a regularization parameter . As can be seen from the numerical results displayed in Figure 1(a), GPMD enjoys a faster convergence rate compared to PMD.

|

|

| (a) Tsallis entropy regularization | (b) Log-barrier regularization |

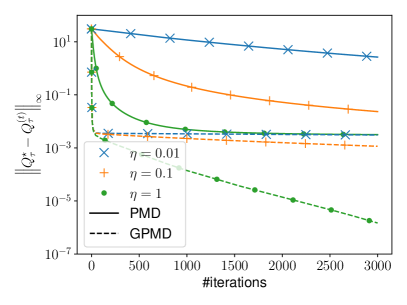

5.2 Constrained RL

In reality, an agent with the sole aim of maximizing cumulative rewards might sometimes end up with unintended or even harmful behavior, due to, say, improper design of the reward function, or non-perfect simulation of physical laws. Therefore, it is sometimes necessary to enforce proper constraints on the policy in order to prevent it from taking certain actions too frequently.

To simulate this problem, we first solve a MDP with and , generated in the same way as in the previous subsection. We then pick 10 state-action pairs from the support of the optimal policy at random to form a set . We can ensure that for all by adding the following log-barrier regularization with :

Numerical comparisons of PMD and GPMD when applied this problem are plotted in Figure 1(b). It is observed that PMD methods stall after reaching an error floor on the order of , while GPMD methods are able to converge to the optimal policy efficiently.

6 Discussion

The present paper has introduced a generalized framework of policy optimization tailored to regularized RL problems. We have proposed a generalized policy mirror descent (GPMD) algorithm that achieves dimension-free linear convergence, which covers an entire range of learning rates and accommodates convex and possibly nonsmooth regularizers. Numerical experiments have been conducted to demonstrate the utility of the proposed GPMD algorithm. Our approach opens up a couple of future directions that are worthy of further exploration. For example, the current work restricts its attention to convex regularizers and tabular MDPs; it is of paramount interest to develop policy optimization algorithms when the regularizers are nonconvex and when sophisticated policy parameterization—including function approximation—is adopted. Understanding the sample complexities of the proposed algorithm—when the policies are evaluated using samples collected over an online trajectory—is crucial in sample-constrained scenarios and is left for future investigation. Furthermore, it might be worthwhile to extend the proposed algorithm to accommodate multi-agent RL, with a representative example being regularized multi-agent Markov games (Cen et al.,, 2021; Zhao et al.,, 2022; Cen et al., 2022a, ; Cen et al., 2022c, ).

Acknowledgements

S. Cen and Y. Chi are supported in part by the grants ONR N00014-19-1-2404, NSF CCF-2106778, DMS-2134080, CCF-1901199, CCF-2007911, and CNS-2148212. S. Cen is also gratefully supported by Wei Shen and Xuehong Zhang Presidential Fellowship, and Nicholas Minnici Dean’s Graduate Fellowship in Electrical and Computer Engineering at Carnegie Mellon University. W. Zhan and Y. Chen are supported in part by the Google Research Scholar Award, the Alfred P. Sloan Research Fellowship, and the grants AFOSR FA9550-22-1-0198, ONR N00014-22-1-2354, NSF CCF-2221009, CCF-1907661, IIS-2218713, and IIS-2218773. W. Zhan and J. Lee are supported in part by the ARO under MURI Award W911NF-11-1-0304, the Sloan Research Fellowship, NSF CCF 2002272, NSF IIS 2107304, and an ONR Young Investigator Award.

Appendix A Proof of key lemmas

In this section, we collect the proof of several key lemmas. Here and throughout, we use to denote the expectation over the randomness of the MDP induced by policy . We shall follow the notation convention in (33) throughout. In addition, to further simplify notation, we shall abuse the notation by letting

| (47a) | ||||

| (47b) | ||||

| (47c) | ||||

for any policy and and any , whenever it is clear from the context.

A.1 Proof of Lemma 1

We start by relaxing the probability simplex constraint (i.e., ) in (20a) with a simpler linear constraint as follows

| (48) |

To justify the validity of dropping the non-negative constraint, we note that for any obeying for some , our assumption on (see Assumption 1) leads to , which cannot possibly be the optimal solution. This confirms the equivalence between (20a) and (48).

Observe that the Lagrangian w.r.t. (48) is given by

where denotes the Lagrange multiplier associated with the constraint . Given that is the solution to (20a) and hence (48), the optimality condition requires that

Rearranging terms and making use of the construction (17), we are left with

thus concluding the proof of the first claim (18).

We now turn to the second claim (19). In view of the property (37), we have

This optimization problem is equivalent to

| (49) |

which can be verified by repeating a similar argument for (48). The Lagrangian associated with (49) is

where denotes the Lagrange multiplier. Therefore, the first-order optimality condition requires that

which immediately finishes the proof.

A.2 Proof of Lemma 2

We start by introducing the performance difference lemma that has previously been derived in Lan, (2022, Lemma 2). For the sake of self-containedness, we include a proof of this lemma in Appendix A.2.1.

Lemma 5 (Performance difference).

Armed with Lemma 5, one can readily rewrite the difference between two consecutive iterates as follows

| (51) |

It then comes down to studying the right-hand side of the relation (A.2), which can be accomplished via the following “three-point” lemma. The proof of this lemma can be found in Appendix A.2.2.

Lemma 6.

For any and any vector , we have

Taking in Lemma 6 and combining it with (A.2), we arrive at

for any , thus establishing the advertised pointwise monotonicity w.r.t. the regularized value function.

When it comes to the regularized Q-function, it is readily seen from the definition (9a) that

for any , where the last line is valid since . This concludes the proof.

A.2.1 Proof of Lemma 5

For any two policies and , it follows from the definition (7) of that

| (52) |

where the penultimate line comes from the definition (9a). To see why the last line of (52) is valid, we make note of the following identity

| (53) |

where the first identity results from the relation (9b), and the last relation holds since . The last line of (52) then follows immediately from the relation (53) and the definition (5) of .

A.2.2 Proof of Lemma 6

For any state , we make the observation that

where the first and the fourth steps invoke the definition (15) of the generalized Bregman divergence and the last line results from the update rule (20c). Rearranging terms, we are left with

Adding the term to both sides of this identity leads to

as claimed, where the last line makes use of the definition (15).

A.3 Proof of Lemma 3

In the sequel, we shall prove each claim in Lemma 3 separately.

Proof of the contraction property (36).

For any , the definition (35) of the generalized Bellman operator obeys

where (a) arises from the elementary fact .

Proof of the fixed point property (37).

Towards this, let us first define

| (54) |

Then it can be easily verified that

| (55) |

where the first identity results from (9), and the second line arises from the maximizing property of (see (54)).

Note that the right-hand side of (55) involves the term , which can be further upper bounded via the same argument for (55). Successively repeating this upper bound argument (and the expansion) eventually allows one to obtain

However, the fact that is the optimal policy necessarily implies the following reverse inequality:

Therefore, one must have

| (56) |

A.4 Proof of Lemma 4

Recall that . In view of the relation (9), one obtains

This combined with the fixed-point condition (37) allows us to derive

| (57) |

In what follows, we control each term on the right-hand side of (A.4) separately.

Step 1: bounding the 1st term on the right-hand side of (A.4).

Lemma 1 tells us that

for some scalar . This important property allows one to derive

| (58) |

where

Recognizing that the function is convex in , we can view as the Lagrangian of the following constrained convex problem with Lagrangian multiplier :

| (59) |

The condition (58) can then be interpreted as the optimality condition w.r.t. the program (59) and , meaning that

or equivalently,

| (60) |

Step 2: bounding the 2nd term on the right-hand side of (A.4).

Step 3: putting all this together.

Appendix B Analysis for approximate GPMD (Theorem 2)

The proof consists of three steps: (i) evaluating the performance difference between and , (ii) establishing a linear system to characterize the error dynamic, and (iii) analyzing this linear system to derive global convergence guarantees. We shall describe the details of each step in the sequel. As before, we adopt the notational convention (47) whenever it is clear from the context.

B.1 Step 1: bounding performance difference between consecutive iterates

When only approximate policy evaluation is available, we are no longer guaranteed to have pointwise monotonicity as in the case of Lemma 2. Fortunately, we are still able to establish an approximate versioin of Lemma 2, as stated below.

Lemma 7 (Performance improvement for approximate GPMD).

For all and all , we have

In addition, if is 1-strongly convex w.r.t. the norm for all , then one further has

In words, while monotonicity is not guaranteed, this lemma precludes the possibility of being be much smaller than , as long as both and are reasonably small.

B.1.1 Proof of Lemma 7

The case when is convex.

Let be the exact solution of the following problem

| (65) |

With this auxiliary policy iterate in mind, we start by decomposing into the following three parts:

| (66) |

where the first identity arises from the performance difference lemma (cf. Lemma 5). To continue, we seek to control each part of (66) separately.

- •

- •

In addition, we note that the term

appears in both (67) and (69), which can be canceled out when summing these two equalities. Specifically, adding (67) and (69) gives

Substituting this into (66) and invoking the elementary inequality thus lead to

| (70) |

where the last line makes use of Assumption 2 and the fact .

Following the discussion in Lemma 1, we can see that with some constant for all . This together with the convexity of (see (15)) guarantees that

| (71) |

for any , thus implying that the first term of (70) is non-negative. It remains to control the second term in (70). Towards this, a little algebra gives

| (72) |

Here, the first and the third lines follow from the definition (15), the second inequality comes from the construction (27), whereas the last step invokes the definition of the oracle (25). Substitution of (71) and (72) into (70) gives

| (73) | ||||

The case when is strongly convex.

When is -strongly convex w.r.t. the norm, the objective function of sub-problem (65) is -strongly convex w.r.t. the norm. Taking this together with the -approximation guarantee in Assumption 3, we can demonstrate that

| (74) |

Additionally, the strong convexity assumption also implies that

where the third line results from Young’s inequality, and the final step follows from (74). We can develop a similar lower bound on as well. Taken together, these lower bounds give

In addition, it is easily seen that

Combining the above two inequalities with (73), we arrive at the advertised bound

B.2 Step 2: connecting the algorithm dynamic with a linear system

Now we are ready to discuss how to control . In short, we intend to establish the connection among several intertwined quantities, and identify a simple linear system that captures the algorithm dynamic.

Bounding .

Bounding .

Bounding .

To begin with, let us decompose into several parts. Invoking the relation (37) in Lemma 3 as well as the property (9), we reach

| (79) |

In the sequel, we control the three terms in (79) separately.

Taken together, the above bounds and the decomposition (79) lead to

| (80) |

A linear system of interest.

B.3 Step 3: linear system analysis

In this step, we analyze the behavior of the linear system (81) derived above. Observe that the eigenvalues and respective eigenvectors of the matrix are given by

| (83) |

| (84) |

Armed with these, we can decompose in terms of the eigenvectors of as follows

| (85) |

where is some constant that does not affect our final result. Also, the vector defined in (82) satisfies

| (86) |

Using the decomposition in (B.3) and (86) and applying the system relation (81) recursively, we can derive

Recognizing that the first two entries of are non-positive, we can discard the term involving and obtain

Making use of the fact that , we can conclude

The above bound essentially says that

and

Appendix C Adaptive GPMD

In this section, we present adaptive GPMD, an adaptive variant of GPMD that computes optimal policies of the original MDP without the need of specifying the regularization parameter in advance. In a nutshell, the proposed adaptive GPMD algorithm is a stage-based algorithm. In the -th stage, we execute GPMD (i.e., Algorithm 1) with regularization parameter for iterations. In what follows, we shall denote by and the -th iterates in the -th stage. At the end of each stage, Adaptive GPMD will halve the regularization parameter , and in the meantime, double (i.e., the auxiliary vector corresponding to some subgradient up to global shift) and use it to as the initial vector for the next stage. To ensure that still lies within the set of subgradients up to global shift, we solve the sub-optimization problem (87) to obtain as the initial policy iterate for the next stage. The whole procedure is summarized in Algorithm 3.

| (87) |

To help characterize the discrepancy of the value functions due to regularization, we assume boundedness of the regularizers as follows.

Assumption 4.

Suppose that there exists some quantity such that holds for all and all .

The following theorem demonstrates that Algorithm 3 is capable of finding an -optimal policy for the unregularized MDP within an order of stages. To simplify notation, we abbreviate , and as , and , respectively, as long as it is clear from the context.

Theorem 3.

As a direct implication of Theorem 3, it suffices to run Algorithm 3 with stages, resulting in a total iteration complexity of at most

| (88) |

In comparison, we recall from Theorem 1 that: directly running GPMD with regularization parameter leads to an iteration complexity of

| (89) |

When focusing on the term , (88) improves upon (89) by a factor of .

Proof of Theorem 3.

To begin with, we make note of the fact that, for any ,

| (90) |

It then follows that

| (91) | ||||

Next, we demonstrate how to control . The definition of implies the existence of some constant such that

| (92) |

By invoking the convergence results of GPMD (cf. (44)), we obtain: for all ,

| (93a) | ||||

| (93b) | ||||

where . To proceed, we follow similar arguments in (45) and show that

where the first step invokes the regularized performance difference lemma (Lemma 5). It then follows that

| (94) |

Substitution of (94) into (93) gives

| (95a) | ||||

| (95b) | ||||

Next, we aim to prove by induction that . Clearly, this claim holds trivially for the base case with . Next, supposing that the claim holds for some , we would like to prove it for as well. Towards this end, observe that

When , we arrive at

which verifies the claim for . Substitution back into (95) leads to

| (96) |

References

- Abbasi-Yadkori et al., (2019) Abbasi-Yadkori, Y., Bartlett, P., Bhatia, K., Lazic, N., Szepesvari, C., and Weisz, G. (2019). Politex: Regret bounds for policy iteration using expert prediction. In International Conference on Machine Learning, pages 3692–3702. PMLR.

- Agarwal et al., (2019) Agarwal, A., Jiang, N., Kakade, S. M., and Sun, W. (2019). Reinforcement learning: Theory and algorithms. Technical report.

- Agarwal et al., (2020) Agarwal, A., Kakade, S. M., Lee, J. D., and Mahajan, G. (2020). Optimality and approximation with policy gradient methods in Markov decision processes. In Conference on Learning Theory, pages 64–66. PMLR.

- Agazzi and Lu, (2020) Agazzi, A. and Lu, J. (2020). Global optimality of softmax policy gradient with single hidden layer neural networks in the mean-field regime. arXiv preprint arXiv:2010.11858.

- Amodei et al., (2016) Amodei, D., Olah, C., Steinhardt, J., Christiano, P., Schulman, J., and Mané, D. (2016). Concrete problems in ai safety.

- Beck, (2017) Beck, A. (2017). First-order methods in optimization. SIAM.

- Beck and Teboulle, (2003) Beck, A. and Teboulle, M. (2003). Mirror descent and nonlinear projected subgradient methods for convex optimization. Operations Research Letters, 31(3):167–175.

- Bertsekas, (2017) Bertsekas, D. P. (2017). Dynamic programming and optimal control (4th edition). Athena Scientific.

- Bhandari and Russo, (2019) Bhandari, J. and Russo, D. (2019). Global optimality guarantees for policy gradient methods. arXiv preprint arXiv:1906.01786.

- Bhandari and Russo, (2020) Bhandari, J. and Russo, D. (2020). A note on the linear convergence of policy gradient methods. arXiv preprint arXiv:2007.11120.

- (11) Cen, S., Chen, F., and Chi, Y. (2022a). Independent natural policy gradient methods for potential games: Finite-time global convergence with entropy regularization. arXiv preprint arXiv:2204.05466.

- (12) Cen, S., Cheng, C., Chen, Y., Wei, Y., and Chi, Y. (2022b). Fast global convergence of natural policy gradient methods with entropy regularization. Operations Research, 70(4):2563–2578.

- (13) Cen, S., Chi, Y., Du, S. S., and Xiao, L. (2022c). Faster last-iterate convergence of policy optimization in zero-sum markov games. arXiv preprint arXiv:2210.01050.

- Cen et al., (2021) Cen, S., Wei, Y., and Chi, Y. (2021). Fast policy extragradient methods for competitive games with entropy regularization. In Advances in Neural Information Processing Systems, volume 34, pages 27952–27964.

- (15) Chow, Y., Nachum, O., Duenez-Guzman, E., and Ghavamzadeh, M. (2018a). A Lyapunov-based approach to safe reinforcement learning. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, pages 8103–8112.

- (16) Chow, Y., Nachum, O., and Ghavamzadeh, M. (2018b). Path consistency learning in Tsallis entropy regularized MDPs. In International Conference on Machine Learning, pages 979–988. PMLR.

- Dai et al., (2018) Dai, B., Shaw, A., Li, L., Xiao, L., He, N., Liu, Z., Chen, J., and Song, L. (2018). SBEED: Convergent reinforcement learning with nonlinear function approximation. In International Conference on Machine Learning, pages 1125–1134. PMLR.

- Ding et al., (2021) Ding, D., Wei, X., Yang, Z., Wang, Z., and Jovanovic, M. (2021). Provably efficient safe exploration via primal-dual policy optimization. In International Conference on Artificial Intelligence and Statistics, pages 3304–3312. PMLR.

- Duchi et al., (2010) Duchi, J. C., Shalev-Shwartz, S., Singer, Y., and Tewari, A. (2010). Composite objective mirror descent. In COLT, pages 14–26. Citeseer.

- Efroni et al., (2020) Efroni, Y., Mannor, S., and Pirotta, M. (2020). Exploration-exploitation in constrained MDPs. arXiv preprint arXiv:2003.02189.

- Fazel et al., (2018) Fazel, M., Ge, R., Kakade, S., and Mesbahi, M. (2018). Global convergence of policy gradient methods for the linear quadratic regulator. In International Conference on Machine Learning, pages 1467–1476.

- Geist et al., (2019) Geist, M., Scherrer, B., and Pietquin, O. (2019). A theory of regularized Markov decision processes. In International Conference on Machine Learning, pages 2160–2169.

- Haarnoja et al., (2018) Haarnoja, T., Zhou, A., Abbeel, P., and Levine, S. (2018). Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In International conference on machine learning, pages 1861–1870. PMLR.

- Hao et al., (2021) Hao, B., Lazic, N., Abbasi-Yadkori, Y., Joulani, P., and Szepesvári, C. (2021). Adaptive approximate policy iteration. In International Conference on Artificial Intelligence and Statistics, pages 523–531. PMLR.

- Kakade, (2002) Kakade, S. M. (2002). A natural policy gradient. In Proceedings of the 14th International Conference on Neural Information Processing Systems, pages 1531–1538.

- Khodadadian et al., (2021) Khodadadian, S., Jhunjhunwala, P. R., Varma, S. M., and Maguluri, S. T. (2021). On the linear convergence of natural policy gradient algorithm. arXiv preprint arXiv:2105.01424.

- Kiwiel, (1997) Kiwiel, K. C. (1997). Proximal minimization methods with generalized Bregman functions. SIAM journal on control and optimization, 35(4):1142–1168.

- Lan, (2022) Lan, G. (2022). Policy mirror descent for reinforcement learning: Linear convergence, new sampling complexity, and generalized problem classes. Mathematical Programming.

- Lan et al., (2011) Lan, G., Lu, Z., and Monteiro, R. D. (2011). Primal-dual first-order methods with iteration-complexity for cone programming. Mathematical Programming, 126(1):1–29.

- Lan and Zhou, (2018) Lan, G. and Zhou, Y. (2018). An optimal randomized incremental gradient method. Mathematical programming, 171(1):167–215.

- Lazic et al., (2021) Lazic, N., Yin, D., Abbasi-Yadkori, Y., and Szepesvari, C. (2021). Improved regret bound and experience replay in regularized policy iteration. In International Conference on Machine Learning, pages 6032–6042. PMLR.

- Lee et al., (2018) Lee, K., Choi, S., and Oh, S. (2018). Sparse Markov decision processes with causal sparse Tsallis entropy regularization for reinforcement learning. IEEE Robotics and Automation Letters, 3(3):1466–1473.

- Lee et al., (2019) Lee, K., Kim, S., Lim, S., Choi, S., and Oh, S. (2019). Tsallis reinforcement learning: A unified framework for maximum entropy reinforcement learning. arXiv preprint arXiv:1902.00137.

- Li et al., (2023) Li, G., Wei, Y., Chi, Y., and Chen, Y. (2023). Softmax policy gradient methods can take exponential time to converge. Mathematical Programming. To appear.

- Liu et al., (2019) Liu, B., Cai, Q., Yang, Z., and Wang, Z. (2019). Neural trust region/proximal policy optimization attains globally optimal policy. In Advances in Neural Information Processing Systems, pages 10565–10576.

- Liu et al., (2020) Liu, Y., Zhang, K., Basar, T., and Yin, W. (2020). An improved analysis of (variance-reduced) policy gradient and natural policy gradient methods. Advances in Neural Information Processing Systems, 33.

- Mei et al., (2021) Mei, J., Gao, Y., Dai, B., Szepesvari, C., and Schuurmans, D. (2021). Leveraging non-uniformity in first-order non-convex optimization. In International Conference on Machine Learning, pages 7555–7564. PMLR.

- (38) Mei, J., Xiao, C., Dai, B., Li, L., Szepesvári, C., and Schuurmans, D. (2020a). Escaping the gravitational pull of softmax. Advances in Neural Information Processing Systems, 33.

- (39) Mei, J., Xiao, C., Szepesvari, C., and Schuurmans, D. (2020b). On the global convergence rates of softmax policy gradient methods. In International Conference on Machine Learning, pages 6820–6829. PMLR.

- Mnih et al., (2015) Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., et al. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540):529–533.

- Moldovan and Abbeel, (2012) Moldovan, T. M. and Abbeel, P. (2012). Safe exploration in markov decision processes.

- Nemirovsky and Yudin, (1983) Nemirovsky, A. S. and Yudin, D. B. (1983). Problem complexity and method efficiency in optimization.

- Neu et al., (2017) Neu, G., Jonsson, A., and Gómez, V. (2017). A unified view of entropy-regularized Markov decision processes. arXiv preprint arXiv:1705.07798.

- Puterman, (2014) Puterman, M. L. (2014). Markov decision processes: discrete stochastic dynamic programming. John Wiley & Sons.

- Schulman et al., (2015) Schulman, J., Levine, S., Abbeel, P., Jordan, M., and Moritz, P. (2015). Trust region policy optimization. In International Conference on Machine Learning, pages 1889–1897.

- Schulman et al., (2017) Schulman, J., Wolski, F., Dhariwal, P., Radford, A., and Klimov, O. (2017). Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347.

- Shani et al., (2019) Shani, L., Efroni, Y., and Mannor, S. (2019). Adaptive trust region policy optimization: Global convergence and faster rates for regularized MDPs. arXiv preprint arXiv:1909.02769.

- Sutton et al., (2000) Sutton, R. S., McAllester, D. A., Singh, S. P., and Mansour, Y. (2000). Policy gradient methods for reinforcement learning with function approximation. In Advances in neural information processing systems, pages 1057–1063.

- Tomar et al., (2020) Tomar, M., Shani, L., Efroni, Y., and Ghavamzadeh, M. (2020). Mirror descent policy optimization. arXiv preprint arXiv:2005.09814.

- Tsallis, (1988) Tsallis, C. (1988). Possible generalization of Boltzmann-Gibbs statistics. Journal of statistical physics, 52(1):479–487.

- Vieillard et al., (2020) Vieillard, N., Kozuno, T., Scherrer, B., Pietquin, O., Munos, R., and Geist, M. (2020). Leverage the average: an analysis of KL regularization in reinforcement learning. In NeurIPS-34th Conference on Neural Information Processing Systems.

- Wang et al., (2019) Wang, L., Cai, Q., Yang, Z., and Wang, Z. (2019). Neural policy gradient methods: Global optimality and rates of convergence. In International Conference on Learning Representations.

- Wang et al., (2021) Wang, W., Han, J., Yang, Z., and Wang, Z. (2021). Global convergence of policy gradient for linear-quadratic mean-field control/game in continuous time. In International Conference on Machine Learning, pages 10772–10782. PMLR.

- Williams, (1992) Williams, R. J. (1992). Simple statistical gradient-following algorithms for connectionist reinforcement learning. Machine learning, 8(3-4):229–256.

- Williams and Peng, (1991) Williams, R. J. and Peng, J. (1991). Function optimization using connectionist reinforcement learning algorithms. Connection Science, 3(3):241–268.

- Xiao, (2022) Xiao, L. (2022). On the convergence rates of policy gradient methods. arXiv preprint arXiv:2201.07443.

- Xu et al., (2019) Xu, P., Gao, F., and Gu, Q. (2019). Sample efficient policy gradient methods with recursive variance reduction. In International Conference on Learning Representations.

- Xu et al., (2020) Xu, T., Liang, Y., and Lan, G. (2020). A primal approach to constrained policy optimization: Global optimality and finite-time analysis. arXiv preprint arXiv:2011.05869.

- Yu et al., (2019) Yu, M., Yang, Z., Kolar, M., and Wang, Z. (2019). Convergent policy optimization for safe reinforcement learning. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, pages 3127–3139.

- (60) Zhang, J., Kim, J., O’Donoghue, B., and Boyd, S. (2020a). Sample efficient reinforcement learning with REINFORCE. arXiv preprint arXiv:2010.11364.

- (61) Zhang, J., Koppel, A., Bedi, A. S., Szepesvári, C., and Wang, M. (2020b). Variational policy gradient method for reinforcement learning with general utilities. In Proceedings of the 34th International Conference on Neural Information Processing Systems, pages 4572–4583.

- (62) Zhang, J., Ni, C., Szepesvari, C., Wang, M., et al. (2021a). On the convergence and sample efficiency of variance-reduced policy gradient method. In Advances in Neural Information Processing Systems, volume 34, pages 2228–2240.

- (63) Zhang, K., Hu, B., and Basar, T. (2021b). Policy optimization for linear control with robustness guarantee: Implicit regularization and global convergence. SIAM Journal on Control and Optimization, 59(6):4081–4109.

- Zhao et al., (2022) Zhao, Y., Tian, Y., Lee, J., and Du, S. (2022). Provably efficient policy optimization for two-player zero-sum markov games. In International Conference on Artificial Intelligence and Statistics, pages 2736–2761. PMLR.