PORA: Predictive Offloading and Resource Allocation in Dynamic Fog Computing Systems

Abstract

In multi-tiered fog computing systems, to accelerate the processing of computation-intensive tasks for real-time IoT applications, resource-limited IoT devices can offload part of their workloads to nearby fog nodes, whereafter such workloads may be offloaded to upper-tier fog nodes with greater computation capacities. Such hierarchical offloading, though promising to shorten processing latencies, may also induce excessive power consumptions and latencies for wireless transmissions. With the temporal variation of various system dynamics, such a trade-off makes it rather challenging to conduct effective and online offloading decision making. Meanwhile, the fundamental benefits of predictive offloading to fog computing systems still remain unexplored. In this paper, we focus on the problem of dynamic offloading and resource allocation with traffic prediction in multi-tiered fog computing systems. By formulating the problem as a stochastic network optimization problem, we aim to minimize the time-average power consumptions with stability guarantee for all queues in the system. We exploit unique problem structures and propose PORA, an efficient and distributed predictive offloading and resource allocation scheme for multi-tiered fog computing systems. Our theoretical analysis and simulation results show that PORA incurs near-optimal power consumptions with queue stability guarantee. Furthermore, PORA requires only mild-value of predictive information to achieve a notable latency reduction, even with prediction errors.

Index Terms:

Internet of Things, fog computing, workload offloading, resource allocation, Lyapunov optimization, predictive offloading.I Introduction

In the face of the proliferation of real-time IoT applications, fog computing has come as a promising complement to cloud computing by extending cloud to the edge of the network to meet the stringent latency requirements and intensive computation demands of such applications [1].

A typical fog computing system consists of a set of geographically distributed fog nodes which are deployed at the network periphery with elastic resource provisioning such as storage, computation, and network bandwidth[2]. Depending on their distance to IoT devices, fog nodes are often organized in a hierarchical fashion, with each layer as a fog tier. In such a way, resource-limited IoT devices, when heavily loaded, can delegate workloads via wireless links to nearby fog nodes, a.k.a., workload offloading, to reduce the power consumption and accelerate workload processing; meanwhile, each fog node can offload workloads to nodes in its upper fog tier. However, along with all the benefits come the extended latency and extra power consumption. Given such a power-latency tradeoff, two interesting questions arise. One is where and how much workloads to offload between successive fog tiers. The other is how to allocate resources for workload processing and offloading. The timely decision making regarding these two questions is critical but challenging, due to temporal variations of system dynamics in wireless environment, uncertainty in the resulting offloading latency, and the unknown traffic statistics.

We summarize the main challenges of dynamic offloading and resource allocation in fog computing as follows:

-

Characterization of system dynamics and the power-latency tradeoff: In practice, a fog system often consists of multiple tiers, with complex interplays between fog tiers and the cloud, not to mention the constantly varying dynamics and intertwined power-latency tradeoffs therein. A model that accurately characterizes the system and tradeoffs is the key to the fundamental understanding of the design space.

-

Efficient online decision making: The decision making must be computationally efficient, so as to minimize the overheads. The difficulties often come from the uncertainties of traffic statistics, online nature of workload arrivals, and intrinsic complexity of the problem.

-

Understanding the benefits of predictive offloading: One natural extension to online decision making is to employ predictive offloading to further reduce latencies and improve quality of service. For example, Netflix preloads videos onto users’ devices based on user behavior prediction[3]. Despite the wide applications of such approaches, the fundamental limits of predictive offloading in fog computing still remain unknown.

| D2D-enabled IoT | IoT-Fog1 | Fog-Fog2 | Fog-Cloud3 | Dynamic | Prior Arrival Distribution | Prediction | |

| [1] | ✓ | ✓ | ✓ | – | |||

| [4] | ✓ | ✓ | – | ||||

| [5] | ✓ | ✓ | ✓ | Poisson | |||

| [6] | ✓ | – | |||||

| [7] | ✓ | ✓ | Poisson | ||||

| [8] | ✓ | ✓ | Not Required | ||||

| [9] | ✓ | ✓ | Not Required | ||||

| [10] | ✓ | ✓ | ✓ | Not Required | |||

| [11] | ✓ | ✓ | Not Required | ||||

| Ours | ✓ | ✓ | ✓ | Not Required | ✓ |

-

1,2,3

“IoT-Fog” means offloading from IoT devices to fog, “Fog-Fog” means offloading between fog tiers, while “Fog-Cloud” means offloading from fog to cloud.

In this paper, we focus on the workload offloading problem for multi-tiered fog systems. We address the above challenges by developing a fine-grained queueing model that accurately depicts such systems and proposing an efficient online scheme that proceeds the offloading on a time-slot basis. To the best of our knowledge, we are the first to conduct systematic study on predictive offloading in fog systems. Our key results and main contributions are summarized as follows:

-

Problem Formulation: We formulate the problem of dynamic offloading and resource allocation as a stochastic optimization problem, aiming at minimizing the long-term time-average expectation of total power consumptions of fog tiers with queue stability guarantee.

-

Algorithm Design: Through a non-trivial transformation, we decouple the problem into a series of subproblems over time slots. By exploiting their unique structures, we propose PORA, an efficient scheme that exploits predictive scheduling to make decisions in an online manner.

-

Theoretical Analysis and Experimental Verification: We conduct theoretical analysis and trace-driven simulations to evaluate the effectiveness of PORA. The results show that PORA achieves a tunable power-latency tradeoff while effectively reducing the average latency with only mild-value of predictive information, even in the presence of prediction errors.

-

New Degree of Freedom in the Design of Fog Computing Systems: We systematically investigate the fundamental benefits of predictive offloading in fog computing systems, with both theoretical analysis and numerical evaluations.

We organize the rest of the paper as follows. Section II discusses the related work. Next, in Section III, we provide an example that motivates our design for dynamic offloading and resource consumption in fog computing systems. Section IV presents the system model and problem formulation, followed by the algorithm design of PORA and performance analysis in Section V. Section VI analyzes the results from trace-driven simulations, while Section VII concludes the paper.

II Related Work

In recent years, a series of works have been proposed to optimize the performance fog computing systems from various aspects [1, 12, 13, 4, 5, 6, 7, 8, 9, 10, 11]. Among such works, the most related are those focusing on the design of effective offloading schemes. For example, by adopting alternating direction method of multipliers (ADMM) methods, Xiao et al.[1] and Wang et al.[4] proposed two offloading schemes for cloud-aided fog computing systems to minimize average task duration and average service response time under different energy constraints, respectively. Later, Liu et al. [5] took the social relationships among IoT users into consideration and developed a socially aware offloading scheme by advocating game theoretic approaches. Misra et al. [6] studied the problem in software-defined fog computing systems and proposed a greedy heuristic scheme to conduct multi-hop task offloading with offloading path selection. Lei et al. [7] considered the joint minimization of delay and power consumption over all IoT devices; they formulated the problem under the settings of continuous-time Markov decision process and solved it via approximate dynamic programming techniques. The above works, despite their effectiveness, generally assume the availability of the statistical information on task arrivals in the systems which is usually unattainable in practice with highly time-varying system dynamics [14].

In the face of such uncertainties, a number of works have applied stochastic optimization methods such as Lyapunov optimization techniques to online and dynamic offloading scheme design [8, 9, 10, 11]. For instance, Mao et al.[8] investigated the tradeoff between the power consumption and execution delay, then developed a dynamic offloading scheme for energy-harvesting-enabled IoT devices. Chen et al. [9] designed an adaptive and efficient offloading scheme to minimize the transmission energy consumption with queueing latency guarantee. Gao et al. [10] investigated efficient offloading and social-awareness-aided network resource allocation for device-to-device-enabled (D2D-enabled) IoT users. Zhang et al. [11] designed an online rewards-optimal scheme for the computation offloading of energy harvesting-enabled IoT devices based on Lyapunov optimization and Vickrey-Clarke-Groves auction. Different from such works that focus on fog computing systems with flat or two-tiered architectures, our solution is applicable to general multi-tiered fog computing systems with time-varying wireless channel states and unknown traffic statistics. Moreover, to the best of our knowledge, our solution is also the first to proactively leverage the predicted traffic information to optimize the system performance with theoretical guarantee. We are also the first to investigate the fundamental benefits of predictive offloading in fog computing systems. We compare our work with the above mentioned works in TABLE I.

III Motivating Example

In this section, we provide a motivating example to show the potential power-latency tradeoff in multi-tiered fog computing systems. The objective is to achieve low power consumptions and short average workload latency (in the unit of packets).

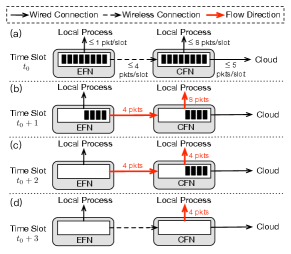

Figure 1 shows an instance of time-slotted fog computing system with two fog tiers, i.e., edge fog tier and central fog tier. Within each fog tier resides one fog node, i.e., an edge fog node (EFN) in edge fog tier and a central fog node (CFN) in central fog tier. The EFN connects to the CFN via a wireless link, while the CFN connects to the cloud data center over wired links. Each fog node maintains one queue to store packets. Figure 1(a) shows that during time slot , both the EFN and the CFN store packets in their queues.

We assume that each fog node sticks to one policy all the time to handle packets, i.e., either processing packets locally or offloading them to its next tier. The local processing capacities of EFN and CFN are and packets per time slot, respectively. The transmission capacities from EFN to CFN and from CFN to cloud are and packets per time slot, respectively. The power consumption is assumed linearly proportional to the number of processed/transmitted packets. In particular, processing one packet locally consumes mW power, while transmitting one packet over wireless link consumes mW. We ignore the processing latency in the cloud due to its powerful processing capacity.

TABLE II lists the total power consumptions and average packet latencies under all four possible settings. Figures 1(b)-1(d) show the case when EFN sticks to offloading and CFN sticks to local processing. In time slot , EFN offloads four packets to CFN at its full transmission capacity, while CFN processes all the eight packets locally. In time slot , EFN offloads the rest four packets to CFN; meanwhile, CFN locally processes the four packets that arrive in previous time slot. In time slot , CFN finishes processing the rest four packets. In this case, the system consumes mW power in local processing and mW power in transmission, with an average packet latency of time slots.

| Policy of | Policy of | Total Power | Average Packet |

| EFN | CFN | Consumptions (mW) | Latency (time slot) |

| Local | Local | 16 | 2.75 |

| Local | Offload | 8 | 2.9375 |

| Offload | Local | 20 | 1.75 |

| Offload | Offload | 4 | 2.125 |

From TABLE II, we conclude that: First, when EFN sticks to offloading and CFN sticks to local processing, the system achieves the lowest average packet latency of slots but the maximum power consumption of mW. Second, with the same offloading policy on EFN, there is a tradeoff between the total power consumptions and the average packet latency when CFN sticks to different policies. The reason is that offloading to the cloud can not only reduce power consumptions but also prolong latency as well. Third, when CFN sticks to local processing, there is a power-latency tradeoff with different policies at EFN, in that offloading to CFN can induce lower processing latency but at the cost of even higher power consumption for wireless transmissions.

IV Model and Problem Formulation

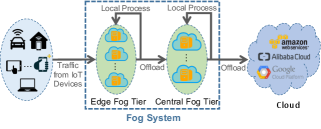

We consider a multi-tiered fog computing system, as shown in Figure 2. The system evolves over time slots indexed by . Each time slot has a length of . Inside the edge fog tier (EFT) are a set of edge fog nodes (EFNs) that offer low-latency access to IoT devices. On the other hand, the central fog tier (CFT) comprises of central fog nodes (CFNs) with greater processing capacities than EFNs. We assume that the workload on each EFN can be offloaded to and processed by any of its accessible CFNs, and that each CFN can offload its workload to the cloud. In our model, we do not consider the power consumptions and latencies within the cloud. We mainly focus on the power consumptions and latencies within fog tiers, as shown in TABLE III. First, the power consumptions we consider include two parts: processing power and transmit power. The processing power consumption is induced by the workload processing on both EFT and CFT. The transmit power is induced by the transmissions from EFT to CFT. We do not consider the transmit power consumption from CFT to cloud because we assume that the CFT communicates with the cloud through wireline connections. Second, the latencies we consider include three parts: queueing latency, processing latency and transmit latency. We focus on the queueing latency on both EFT and CFT. We assume that the workload processing in each time slot can be completed by the end of the same time slot, and then we can ignore the processing latency. Since the EFT communicates with the CFT through high-speed wireless connections and the CFT communicates with the cloud through high-speed wireline connections, we assume that transmission latencies from both EFT to CFT and CFT to Cloud are negligible.

| Power Consumption | Latency | ||||

|---|---|---|---|---|---|

| Processing | Transmit | Queueing | Processing | Transmit | |

| EFT | ✓ | ✓ | |||

| EFT2CFT | ✓ | ||||

| CFT | ✓ | ✓ | |||

| CFT2Cloud | |||||

In the following, we first introduce the basic settings in Section IV-A, then elaborate the queueing models in Section IV-D. Next, we define the optimization objective in Section IV-E while proposing the problem formulation in Section IV-F. We summarize the key notations in TABLE IV.

| Notation | Description |

|---|---|

| Length of each time slot | |

| is the set of EFNs with | |

| is the set of CFNs with | |

| Set of accessible EFNs from CFN | |

| Set of accessible CFNs from EFN | |

| Amount of workload arriving to EFN in time slot | |

| Average workload arriving rate on EFN , | |

| Prediction window size of EFN | |

| Arrival queue backlog of EFN in time slot | |

| Prediction queue backlog of EFN in time slot , such that | |

| Integrate queue backlog of EFN in time slot | |

| Local processing queue backlog of EFN in time slot | |

| Offloading queue backlog of EFN in time slot | |

| Amount of workload to be sent to in time slot | |

| Amount of workload to be sent to in time slot | |

| CPU frequency of EFN in time slot | |

| Wireless channel gain between EFN and CFN | |

| Transmit power from EFN to CFN in time slot | |

| Transmit rate from EFN to CFN in time slot | |

| Arrival queue backlog of CFN in time slot | |

| Local processing queue backlog of CFN in time slot | |

| Offloading queue backlog of CFN in time slot | |

| Amount of workload to be sent to in time slot | |

| Amount of workload to be sent to in time slot | |

| CPU frequency of CFN in time slot | |

| Total power consumptions in time slot |

IV-A Basic Settings

The fog computing system consists of EFNs in EFT and CFNs in CFT. Let and be the sets of EFNs and CFNs. Each EFN has access to a subset of CFNs in their proximities. We denote the subset by . For each CFN , denotes the set of its accessible EFNs. Accordingly, for any we have .

IV-B Queueing Model for Edge Fog Node

During time slot , there is an amount ( for some constant ) of workload generated from IoT devices arrive to be processed on EFN such that . We assume that such arrivals are independent over time slots and different EFNs. Each EFN is equipped with a learning module111We do not specify any particular learning method in this paper, since our work aims to explore the fundamental benefits of predictive offloading. In practice, one can leverage machine learning techniques such as time-series prediction methods [15] for workload arrival prediction. that can predict the future workload within a prediction window of size , i.e. workload will arrive in the next time slots. The predicted arrivals are pre-generated and recorded, then arrive to EFN for pre-serving. Once the predicted arrivals actually arrive after pre-serving, they will be considered finished.

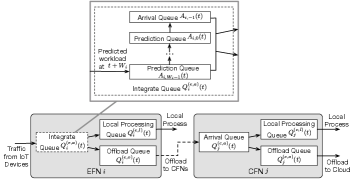

On each EFN, as Figure 3 shows, there are four types of queues: prediction queues with the backlogs as , …, , arrival queue , local processing queue , and offloading queue . In time slot , prediction queue () stores untreated workload that will arrive in time slot . Workload that actually arrives at EFN is stored in the arrival queue , awaiting being forwarded to the local processing queue or the offloading queue . Workload in will be processed locally by EFN , while workload in will be offloaded to CFNs in set .

IV-B1 Prediction Queues and Arrival Queues in EFNs

Within each time slot , in addition to the current arrivals in the arrival queue, EFN can also forward future arrivals in the prediction queues. We define as the amount of output workload from , for . Such workload should be distributed to the local processing queue and offloading queue. We denote the amounts of workloads to be distributed to the local processing queue and offloading queue as and , respectively, such that

| (1) |

where each is a positive constant. As a result, we have

| (2) |

Next, we consider the queueing dynamics for different types of queues in EFN, respectively.

Regarding , it is updated whenever pre-service is finished and the lookahead window moves one slot ahead at the end of each time slot. Therefore, we have

-

(i)

If , then

(3) -

(ii)

If , then

(4)

where for . In time slot , the amount of workload that will arrive after time slots is and it remains unknown until time slot .

Regarding the arrival queue , it records the actual backlog of EFN with the update equation as follows:

| (5) |

Note that denotes the amount of distributed workload that have already being in .

Next, we introduce an integrate queue with a backlog size as the sum of all prediction queues and the arrival queue on EFN , denoted by . Under fully-efficient [16] service policy, is updated as

| (6) |

The input of integrate queue consists of the predicted workload that will arrive at EFN in time slot , while its output consists of workloads being forwarded to the local processing queue and the offloading queue. Note that is the output capacity of integrate queue in time slot . If the capacity is larger than the queue backlog size, the true output amount will be smaller than .

IV-B2 Offloading Queues in EFNs

In time slot , workload in queue will be offloaded to CFNs in set . The transmission capacities are determined by the transmit power decisions , where is the transmit power from EFN to CFN . The transmit power is nonnegative and the total transmit power of each EFN is upper bounded, i.e.,

| (7) | |||

| (8) |

According to Shannon’s capacity formula [17], the transmission capacity from EFN to CFN is

| (9) |

where is the length of each time slot, is the channel bandwidth, is the wireless channel gain between EFN and CFN , and is the system power spectral density of the additive white Gaussian noise. Note that is an uncontrollable environment state with positive upper bound . We do not consider the interferences among fog nodes and tiers. By adjusting the transmit power , we can offload different amounts of workload from EFN to CFN in time slot . Accordingly, the update equation of offloading queue is

| (10) |

where is the total transmission capacity to EFN in time slot . The inequality here means that the actual arrival of may be less than , because is the transmission capacity from integrate queue to offloading queue instead of the amount of truly transmitted workload. Recall that we assume the transmission latency from EFT to CFT is negligible compared to the length of each time slot, the workload transmission in each time slot can be accomplished by the end of that time slot.

IV-C Queueing Model for Central Fog Node

Figure 3 also shows the queueing model on CFN. Each CFN maintains three queues: an arrival queue , a local processing queue , and an offloading queue . Similar to EFNs, workload offloaded from the EFT will be firstly stored in the arrival queue, then distributed to for local processing and to for further offloading.

IV-C1 Arrival Queues in CFNs

The arrivals on CFN consist of workloads offloaded from EFNs in the set . We denote the amounts of workloads distributed to the local processing queue and offloading queue in time slot as and , respectively, such that

| (11) |

where each is a positive constant. Accordingly, is updated as follows:

| (12) |

IV-C2 Offloading Queues in CFNs

For each CFN , its offloading queue stores the workload to be offloaded to the cloud. We define as the transmission capacity of the wired link from CFN to the cloud during time slot , which depends on the network state and is upper bounded by some constant for all and . Then we have the following update function for :

| (13) |

Note that the amount of actually offloaded workload to the cloud is .

IV-D Local Processing Queues on EFNs and CFNs

We assume that all fog nodes are able to adjust their CPU frequencies in each time slot, by applying dynamic voltage and frequency scaling (DVFS) techniques[18]. Next, we define as the number of CPU cycles that fog node requires to process one bit of workload, where is an indicator of fog node ’s type ( if is an EFN, and if is a CFN). is assumed constant and can be measured offline [19]. Therefore, the local processing capacity of fog node is . The local processing queue on fog node evolves as follows:

| (14) |

All CPU frequencies are nonnegative and finite:

| (15) |

where each is a positive constant.

IV-E Power Consumptions

The total power consumptions of fog tiers in time slot consist of the processing power consumption and wireless transmit power consumption. Given a local CPU with frequency , its power consumption per time slot is , where is a parameter depending on the deployed hardware and is measurable in practice [20]. Thus is defined as follows:

| (16) |

where is the vector of all CPU frequencies, and in which is the transmit power allocation of EFN .

IV-F Problem Formulation

We define the long-term time-average expectation of total power consumptions and total queue backlog as follows:

| (17) | |||

| (18) |

In this paper, we aim to minimize the long-term time-average expectation of total power consumptions , while ensuring the stability of all queues in the system, i.e., . The problem formulation is given by

| (19) |

V Algorithm Design

V-A Predictive Algorithm

To solve problem (19), we adopt Lyapunov optimization techniques[16][21] to decouple the problem into a series of subproblems over time slots. We show the detail of this process in Appendix A. By solving each of these subproblems during each time slot, we propose PORA, an efficient and predictive scheme conducts workload offloading in an online and distributed manner. We show the pseudocode of PORA in Algorithm 1. Note that symbol indicates the type of fog node. Specifically, for each fog node , if is an EFN and CFN otherwise.

Next, we introduce PORA in detail.

V-A1 Offloading Decision

In each time slot , under PORA, each fog node decides the amounts of workload scheduled to the offloading queue and the local processing queue, denoted by and , respectively. Such decisions are obtained by solving the following problem:

| (20) |

where . Accordingly, the optimal solution to (20) is

| (21) |

From (21), we see that, to determine the optimal solutions and , each EFN would compare its integrate queue backlog size with its local processing queue backlog size and offloading queue backlog size , respectively. Particularly, if there is too much workload in its integrate queue compared to its local queue (), then it will offload as much workload (up to ) as possible to its local queue. Likewise, if its integrate queue is loaded with more workload than its offloading queue (), it will offload up to an amount of workload to its offloading queue.

Notably, if the backlog size of the EFN ’s integrate queue is larger than both its local queue and offloading queue, then the EFN will transmit the workload one by one unit (e.g. packets); each unit of workload is either sent to the EFN ’s local queue or its offloading queue, such that the amounts of workload distributed to such two queues are no greater than and , respectively. In practice, the workload distributing strategy is left as a degree of freedom to be specified in the implementation of PORA. In our simulation, we adopt the following distributing strategy. When an EFN ’s integrate queue backlog size is greater than both its local queue and its offloading backlog size, then it will transmit workload to its local queue until the amount of transmitted workload reaches . Then the rest workload in the integrate queue is transmitted to the offloading queue until the amount of distributed workload reaches . Such a process terminates whenever the integrate queue becomes empty.

The decision making process is similar for CFNs. Specifically, each CFN determines and by comparing its arrival queue backlog size with its local processing queue backlog size and offloading queue backlog size , respectively.

Remark: For each EFN, we can view the difference between the backlog sizes of its integrate queue and its local processing/offloading queue as its willingness of workload transmission. If such willingness is positive, then the EFN will transmit as much workload as possible from its integrate queue; otherwise, the EFN will leave the workload not distributed in the current time slot. In such a way, PORA always endeavors to balance the integrate queue backlog and the local/offloading queue backlog. Likewise, under PORA, each CFN determines its offloading decisions upon the difference between the backlog sizes of its arrival queue and its local processing/offloading queue to ensure the queue stability.

V-A2 Local CPU Frequency Allocation

Under PORA, in each time slot , each fog node sets its local CPU frequency by solving the following subproblem:

| (22) |

By setting the second derivative of the objective function in (22) to zero, we can obtain the optimal CPU frequency to be set by fog node as

| (23) |

Remark: When , the allocated CPU frequency is proportional to the square root of the backlog size of local processing queue and the inverse of the value of parameter . This shows that, on the one hand, PORA would allocate as much CPU frequency as possible to process the workload in the queues. On the other hand, the value of parameter determines the tradeoff between power consumption and the backlog sizes of queues: a small value of will encourage the fog node to allocate more CPU frequency to process the workload and hence a small queue backlog size; in contrast, a large value of will make the fog node more conservative to allocate resources, leading to less power consumptions but a large queue backlog size as well. In practice, the choice of the value of is dependent on the system design objective.

V-A3 Power Allocations for EFNs

In each time slot , under PORA, each EFN determines its allocated transmit power by solving the following optimization problem.

| (24) |

where and . By applying water-filling algorithm[22], we obtain the optimal solution to problem (24) as

| (25) |

where is the optimal Lagrangian variable that satisfies

| (26) |

The optimality of such solutions is proven in Appendix C. We adopt bisection method (line 15-25 in Algorithm 1) to obtain the value of with its lower and upper bounds as and , respectively. Note that the value of converges asymptotically to the optimum as the tolerance parameter approaches zero, such that .

Remark: PORA tends to allocate more transmit power to the CFN with smaller arrival queue backlog size for load balancing. When , we have and , i.e., EFN allocates no transmit power to CFN unless the backlog size of the arrival queue on CFN is greater than that of the offloading queue on EFN . By increasing the value of , transmit power consumption will be reduced but the backlog size will increase as well.

V-B Computational Complexity of PORA

During each time slot, part of the computational complexity concentrates on the calculation for CPU frequency settings and offloading decision makings. Since the calculation (line 3-11) requires only constant time for each fog node, the total complexity of these steps is . Next, each EFN applies the bisection method (line 15-26) to calculate the optimal dual variable, with a complexity of . After that, EFN determines the transmit power to each CFN in the set . In the worst case, each EFN is potentially connected to all CFNs, thus the total complexity of PORA algorithm is .

V-C Performance Analysis

We conduct theoretical analysis on the relationship between the average power consumption and queue backlog under PORA scheme in the non-predictive case (), and then analyze the benefits of predictive offloading in terms of latency reduction.

V-C1 Time-average Power Consumption and Queue Backlog

Let be the achievable minimum of over all feasible non-predictive polices. We have the following theorem.

Theorem 1

The proof is quite standard and hence omitted here.

Remark: By Little’s theorem[23], the average queue backlog size is proportional to the average queueing latency. Therefore, Theorem 1 implies that by adjusting parameter , PORA can achieve an power-latency tradeoff in the non-predictive case. Furthermore, the average power consumption approaches the optimum asymptotically as the value of increases to infinity.

V-C2 Latency Reduction

We analyze the latency reduction induced by PORA under perfect prediction compared to the non-predictive case. In particular, we denote the prediction window vector by and the corresponding delay reduction by . For each unit of workload on EFN , let denote the steady-state probability that it experiences a latency of time slots in . Without prediction, the average latency on its arrival queues is . Then we have the following theorem.

Theorem 2

Suppose the system steady-state behavior depends only on the statistical behaviors of the arrivals and service processes. Then the latency reduction is

| (27) |

Furthermore, if , as , i.e., with inifinite predictive information, we have

| (28) |

Remark: Theorem 2 implies that predictive offloading conduces to a shorter workload latency; in other words, with predicted information, PORA can break the barrier of power-latency tradeoff. Furthermore, the latency reduction induced by PORA is proportional to the inverse of the prediction window size, and approaches zero as prediction window sizes go to infinity. In our simulations, we see that PORA can effectively shorten the average arrival queue latency with only mild-value of future information.

V-D Impact of Network Topology

Fog computing systems generally proceed in wireless environments, thus the network topology of such systems is usually dynamic and may change over time slots. However, at the beginning of each time slot, the network topology is observed and deemed fixed by the end of the time slot. Therefore, in the following, we put the focus of our discussion on the impact of network topology within each time slot.

Recall that in our settings, each EFN has access to only a subset of CFNs in its vicinity. For each EFN , the subset of its accessible EFNs is denoted by with a size of . From the perspective of graph theory, we can view the interconnection among fog nodes of different tiers as a directed graph, in which each vertex corresponds to a fog node and each edge indicates a directed connection between nodes. Hence, the value of can be regarded as the out-degree of EFN , which is an important parameter of network topology that measures the number of directed connections originating from EFN . Due to time-varying wireless dynamics, the out-degree of each fog node may vary over time slots; consequentially, the resulting topology would significantly affect the system performance. In the following, we discuss such impacts under two channel conditions, respectively.

On the one hand, within each time slot, poor channel conditions (e.g. in terms of low SINR) would often lead to unreliable or even unavailable connections among fog nodes and hence a network topology with a relatively smaller out-degree of nodes. In this case, each fog node may have a very limited freedom to choose the best target node to offload its workloads, further leading to backlog imbalance among fog nodes or even overloading in its upper tier with a large cumulative queue backlog size. Besides, poor channel conditions may also require more power consumptions to ensure reliable communication between successive fog nodes.

On the other hand, within each time slot, good channel conditions allow each fog node to have a broader access to the fog nodes in its upper tier, resulting a network topology with a relatively larger out-degree of nodes. In this case, each fog node is able to conduct better decision-making with more freedom in choosing the fog nodes in its upper fog tier, thereby achieving a better tradeoff between power consumptions and backlog sizes.

| Parameter | Value |

|---|---|

| MHz | |

| +60 a | |

| dBm/Hz | |

| mW | |

| , | cycles/bit |

| G cycles/s | |

| G cycles/s | |

| Ws3/cycle3 | |

| Mb/s | |

| Mb/s | |

| Mb/s |

-

a

is the distance between EFN and CFN .

V-E Use Cases

In practice, PORA can be applied as a theoretical framework to design the offloading schemes for fog computing systems under various use cases, such as public safety systems, intelligent transportation, and smart healthcare systems. For example, in a public safety system, each street is usually deployed with multiple smart cameras (IoT devices). At runtime, such smart cameras would upload real-time vision data to one of their accessible EFNs. Each EFN aggregates such data to extract or even analyze the instant road conditions within multiple streets. Such EFNs can upload some of the workload to their upper-layered CFNs (each taking charge of one community consisting of several streets) with greater computing capacities. Each CFN can further offload the workload to the cloud via optical fiber links. For latency-sensitive applications, the real-time vision data will be processed locally on EFNs or offloaded to CFNs. For latency-insensitive applications with intensive computation demand, the data will be offloaded to the cloud through the fog nodes. PORA conduces to the design of dynamic and online offloading and resource allocation schemes to support such fog systems with various applications.

VI Numerical Results

We conduct extensive simulations to evaluate PORA and its variants. The parameter settings in our simulation are based on the commonly adopted wireless environment settings that have been used in [24, 25]. The simulation is conducted on a MacBook Pro with 2.3 GHz Intel Core i5 processor and 8GB 2133 MHz LPDDR3 memory, and the simulation program is implemented using Python 3.7. This section firstly presents the basic settings of our simulations, and then provides the key results under perfect and imperfect prediction, respectively.

VI-A Basic Settings

We simulate a hierarchal fog computing system with EFNs and CFNs. All EFNs have a uniform prediction window size , which varies from to . Note that refers to the case without prediction. For each EFN , its accessible CFN set is chosen uniformly randomly from the power set of the CFN set with size . We set the time slot length second. During each time slot, workload arrives to the system in the unit of packets, each with a fixed size of bits. The packet arrivals are drawn from previous measurements [26], where the average flow arrival rate is flows/s, and the distribution of flow size has a mean of Kb. Given these settings, the average arrival rate is about Mbps. All results are averaged over time slots. We list all other parameter settings in TABLE V.

VI-B Evaluation with Perfect Prediction

Under the perfect prediction settings, we evaluate how the values of parameter and prediction window size influence the performance of PORA, respectively.

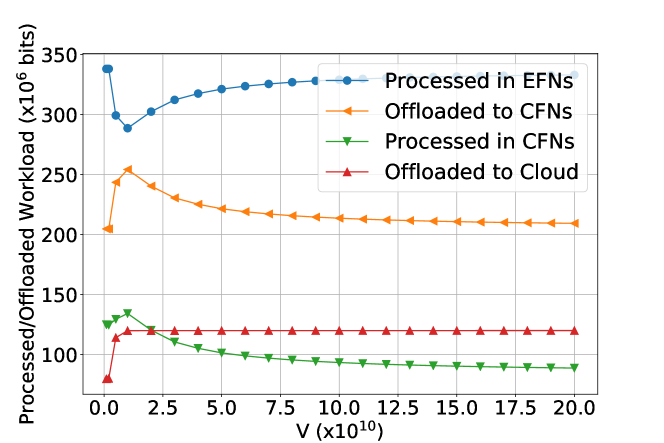

System Performance under Different Values of : Figure 4 shows the impact of parameter on the offloading decisions of PORA: When the value of is around , the time-average amount of locally processed workload on EFNs reaches the bottom of the curve, while other offloading decisions induce the peak workload. The reason is that the offloading decisions are not only determined by the value of , but also influenced by the queue backlog sizes.

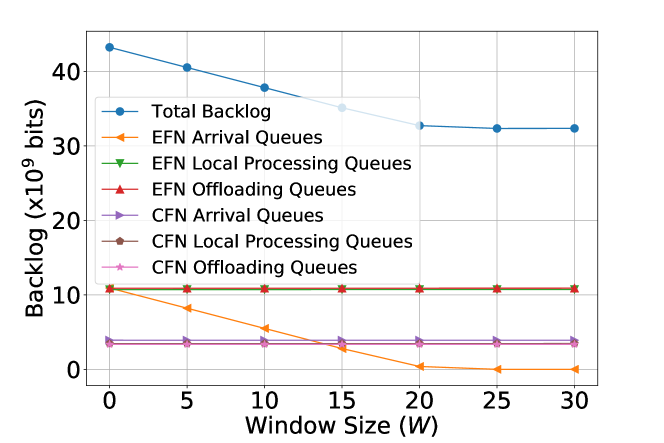

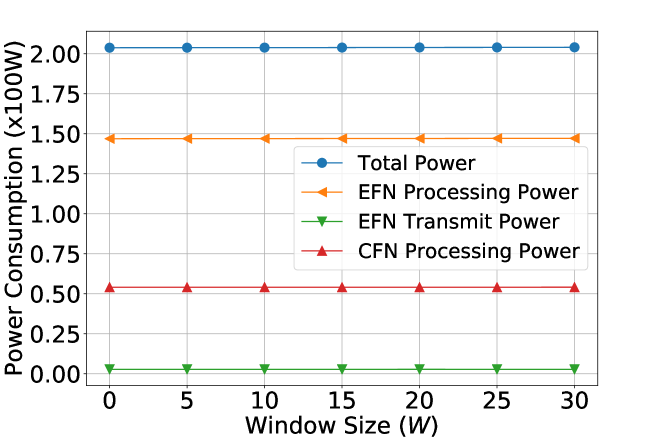

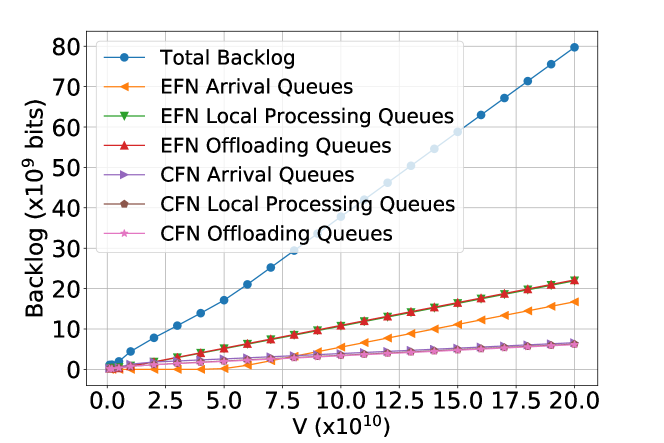

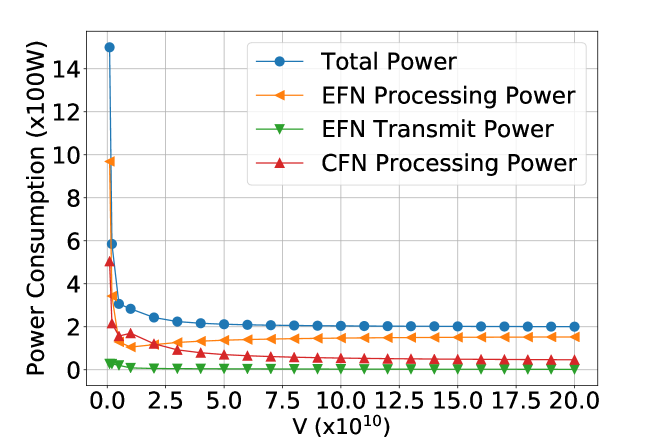

Figure 6 presents the impact of the value of on different types of queues and power consumptions in the system, respectively. As the value of increases, we see a rising trend in the sizes of all types of queue backlogs, and a roughly falling trend in all types of power consumptions.

System Performance with Different Values of Prediction Window Size : Figures 5(a) and 5(b) show the system performance with the prediction window size varying from to . With perfect prediction, PORA effectively shortens the average queueing latencies on EFN arrival queues – eventually close to zero with no extra power consumption and only a mild-value of prediction window size ( in this case).

PORA vs. PORA- (Low-Sampling Variant): In practice, since PORA requires to sample system dynamics across various fog nodes, it may incur considerable sampling overheads. By adopting the idea of randomized load balancing techniques [27], we propose PORA-, a variant of PORA that reduces the sampling overheads by probing () 222When , the scheme degenerates to uniform random sampling. CFNs and conducting resource allocation on which are uniformly chosen for each EFN from its accessible CFN set.

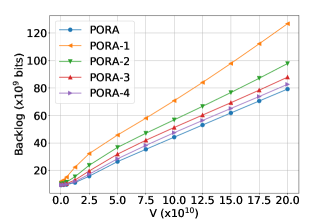

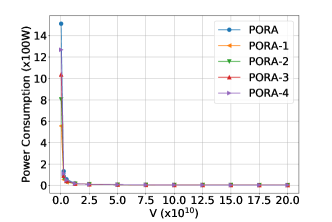

Figure 7 compares the performance of PORA with PORA-. We observe that PORA achieves the smallest queue backlog size. The result is reasonable since each EFN has access to 5 CFNs under PORA, more than the CFNs under PORA-. As a result, each EFN has more chance to access to the CFNs with better wireless channel condition and processing capacity under PORA when compared with PORA-. The observation that the queue backlog size increases as decreases further verifies our analysis. In fact, we can view as the degree of each EFN in the network topology. As decreases, the system performance degrades. However, when the value of is sufficiently large, PORA- achieves the similar power consumptions as PORA and the ratio of increment in the backlog size is small. For example, when , PORA- achieves % larger backlog size than PORA, and PORA- achieves % larger backlog size than PORA. In summary, PORA- (when ) can reduce the sampling overheads by trading off only a little performance degradation under large .

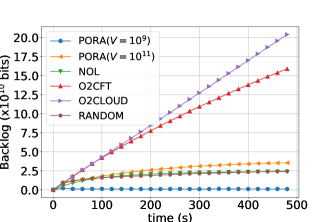

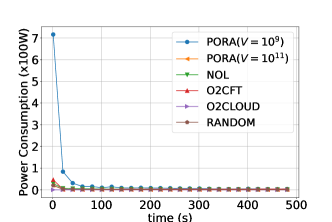

Comparison of PORA and Baselines: We introduce four baselines to evaluate the performance of PORA: (1) NOL (No Offloading): All nodes in the EFT process packets locally. (2) O2CFT (Offload to CFT): All packets are offloaded to the CFT and processed therein. (3) O2CLOUD (Offload to Cloud): All packets are offloaded to the cloud. (4) RANDOM: Each fog node randomly chooses to offload each packet or process it locally with equal chance. Note that all above baselines are also assumed capable of pre-serving future workloads in the prediction window. Figure 8 compares the instant total queue backlog sizes and power consumptions over time slots under the five schemes (PORA, NOL, O2CFT, O2CLOUD, RANDOM), where and .

We observe that scheme O2CLOUD achieves the minimum power consumptions, but incurs constantly increasing queue backlog sizes over time. The reasons are shown as follows. On one hand, in our settings, the mean power consumption for transmitting workload from EFT to CFT is smaller than the mean power consumption of processing the same amount of workload on fog nodes; under scheme O2CLOUD, only wireless transmit power is consumed and hence the minimum is achieved. On the other hand, all the workload must travel through all fog tiers before being offloaded to the cloud, which results in network congestion within fog tiers and thus workload accumulation with increasing queue backlogs.

As Figure 8 illustrates, PORA achieves the maximum power consumptions but the smallest backlog size when . Upon convergence of PORA, the power consumptions under all these schemes reach the same level, but the differences between their queue backlog sizes become more obvious: PORA () reduces % of the queue backlog when compared with NOL and RANDOM. The results demonstrate that with the appropriate choice of the value of , PORA can achieve less latency than the four baselines under the same power consumptions.

VI-C Evaluation with Imperfect Prediction

In practice, prediction errors are inevitable. Hence, we investigate the performance of PORA in the presence of prediction errors [28]. Particularly, we consider two kinds of prediction errors: false alarm and missed detection. A packet is falsely alarmed if it is predicted to arrive but it does not arrive actually. A packet is missed to be detected if it will arrive but is not predicted. We assume that all EFNs have the uniform false-alarm rate and missed-detection rate . In our simulation, we consider different pairs of values of : , , , , and . Note that corresponds to the case when the prediction is perfect.

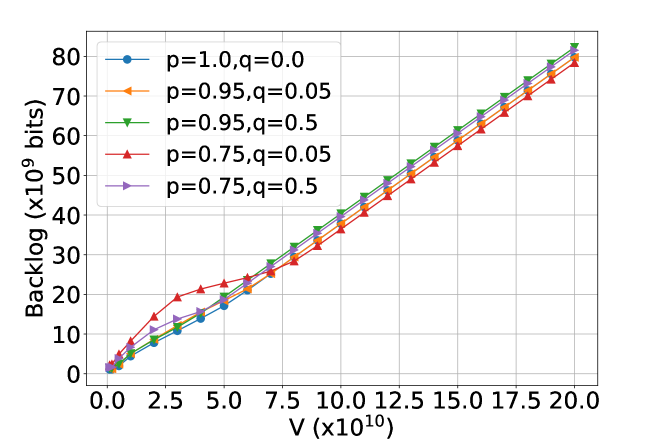

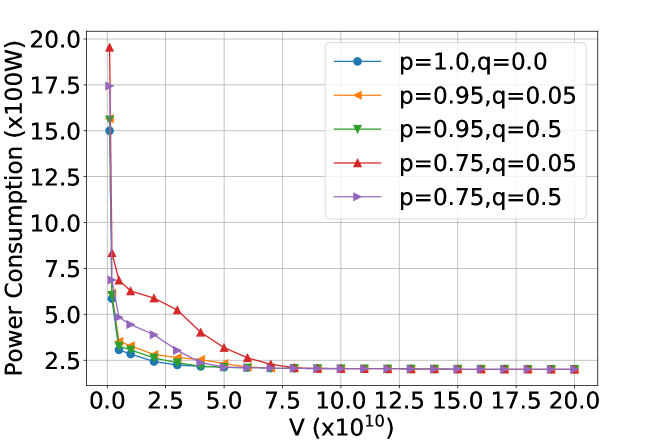

Figure 9 presents the results under prediction window size . We observe when , both the total queue backlog sizes and power consumptions under imperfect prediction are larger than that under perfect prediction. The reason for this performance degradation is twofold: First, arrivals that are missed to be detected cannot be pre-served, thus leading to larger queue backlog sizes. Second, PORA allocates redundant resources to handle the falsely predicted arrivals, thus causing more power consumptions. As the value of increases, this performance degradation becomes negligible. Taking the total queue backlog under as an example, when compared with the case under perfect prediction, it increases by at , and increases by at . Moreover, there is no extra power consumption under imperfect prediction when since PORA tends to reserve resources to reduce power consumptions under large .

In summary, there will be performance degradation in both total queue backlog sizes and power consumptions in the presence of prediction errors. However, as the value of increases, this degradation decreases and becomes negligible. Though a large value of can improve the robustness of PORA and achieve small power consumptions, it brings long workload latencies. In practice, the choice of the value of depends on how the system designer trades off all these criterions.

VII Conclusion

In this paper, we studied the problem of dynamic offloading and resource allocation with prediction in a fog computing system with multiple tiers. By formulating it as a stochastic network optimization problem, we proposed PORA, an efficient online scheme that exploits predictive offloading to minimize power consumption with queue stability guarantee. Our theoretical analysis and trace-driven simulations showed that PORA achieves a tunable power-latency tradeoff, while effectively shortening latency with only mild-value of future information, even in the presence of prediction errors. As for future work, our model can be further extended to more general settings such that the instant wireless channel states may be unknown by the moment of decision making or the underlying system dynamics is non-stationary.

References

- [1] Y. Xiao and M. Krunz, “QoE and power efficiency tradeoff for fog computing networks with fog node cooperation,” in Proceedings of IEEE INFOCOM, 2017.

- [2] S. Yi, Z. Hao, Z. Qin, and Q. Li, “Fog computing: Platform and applications,” in Proceedings of IEEE HotWeb, 2015.

- [3] J. Broughton, “Netflix adds download functionality,” https://technology.ihs.com/586280/netflix-adds-download-support, 2016.

- [4] Y. Wang, X. Tao, X. Zhang, P. Zhang, and Y. T. Hou, “Cooperative task offloading in three-tier mobile computing networks: An ADMM framework,” IEEE Transactions on Vehicular Technology, vol. 68, no. 3, pp. 2763–2776, 2019.

- [5] L. Liu, Z. Chang, and X. Guo, “Socially-aware dynamic computation offloading scheme for fog computing system with energy harvesting devices,” IEEE Internet of Things Journal, vol. 5, no. 3, pp. 1869–1879, 2018.

- [6] S. Misra and N. Saha, “Detour: Dynamic task offloading in software-defined fog for IoT applications,” IEEE Journal on Selected Areas in Communications, vol. 37, no. 5, pp. 1159–1166, 2019.

- [7] L. Lei, H. Xu, X. Xiong, K. Zheng, and W. Xiang, “Joint computation offloading and multi-user scheduling using approximate dynamic programming in NB-IoT edge computing system,” IEEE Internet of Things Journal, vol. 6, no. 3, pp. 5345–5362, 2019.

- [8] Y. Mao, J. Zhang, S. Song, and K. B. Letaief, “Power-delay tradeoff in multi-user mobile-edge computing systems,” in Proceedings of IEEE GLOBECOM, 2016.

- [9] Y. Chen, N. Zhang, Y. Zhang, X. Chen, W. Wu, and X. S. Shen, “Energy efficient dynamic offloading in mobile edge computing for Internet of Things,” IEEE Transactions on Cloud Computing, 2019, doi: 10.1109/TCC.2019.2898657.

- [10] Y. Gao, W. Tang, M. Wu, P. Yang, and L. Dan, “Dynamic social-aware computation offloading for low-latency communications in IoT,” IEEE Internet of Things Journal, 2019, doi: 10.1109/JIOT.2019.2909299.

- [11] D. Zhang, L. Tan, J. Ren, M. K. Awad, S. Zhang, Y. Zhang, and P.-J. Wan, “Near-optimal and truthful online auction for computation offloading in green edge-computing systems,” IEEE Transactions on Mobile Computing, 2019, doi: 10.1109/TMC.2019.2901474.

- [12] M. Taneja and A. Davy, “Resource aware placement of IoT application modules in fog-cloud computing paradigm,” in Proceedings of IFIP/IEEE IM, 2017.

- [13] M. Chen, W. Li, G. Fortino, Y. Hao, L. Hu, and I. Humar, “A dynamic service migration mechanism in edge cognitive computing,” ACM Transactions on Internet Technology, vol. 19, no. 2, p. 30, 2019.

- [14] D. Zhang, Z. Chen, L. X. Cai, H. Zhou, S. Duan, J. Ren, X. Shen, and Y. Zhang, “Resource allocation for green cloud radio access networks with hybrid energy supplies,” IEEE Transactions on Vehicular Technology, vol. 67, no. 2, pp. 1684–1697, 2017.

- [15] N. K. Ahmed, A. F. Atiya, N. E. Gayar, and H. El-Shishiny, “An empirical comparison of machine learning models for time series forecasting,” Econometric Reviews, vol. 29, no. 5-6, pp. 594–621, 2010.

- [16] L. Huang, S. Zhang, M. Chen, and X. Liu, “When backpressure meets predictive scheduling,” IEEE/ACM Transactions on Networking, vol. 24, no. 4, pp. 2237–2250, 2016.

- [17] R. G. Gallager, Principles of Digital Communication. Cambridge University Press, 2008.

- [18] Y. Mao, C. You, J. Zhang, K. Huang, and K. B. Letaief, “A survey on mobile edge computing: The communication perspective,” IEEE Communications Surveys & Tutorials, vol. 19, no. 4, pp. 2322–2358, 2017.

- [19] A. P. Miettinen and J. K. Nurminen, “Energy efficiency of mobile clients in cloud computing,” in Proceedings of ACM HotCloud, 2010.

- [20] Y. Kim, J. Kwak, and S. Chong, “Dual-side optimization for cost-delay tradeoff in mobile edge computing,” IEEE Transactions on Vehicular Technology, vol. 67, no. 2, pp. 1765–1781, 2018.

- [21] M. J. Neely, “Stochastic network optimization with application to communication and queueing systems,” Synthesis Lectures on Communication Networks, vol. 3, no. 1, pp. 1–211, 2010.

- [22] S. Boyd and L. Vandenberghe, Convex Optimization. Cambridge University Press, 2004.

- [23] A. Leon-Garcia, Probability, Statistics, and Random Processes for Electrical Engineering, 3rd ed. Pearson Education, 2017.

- [24] C.-F. Liu, M. Bennis, and H. V. Poor, “Latency and reliability-aware task offloading and resource allocation for mobile edge computing,” in Proceedings of IEEE GLOBECOM, 2017.

- [25] J. Du, L. Zhao, J. Feng, and X. Chu, “Computation offloading and resource allocation in mixed fog/cloud computing systems with min-max fairness guarantee,” IEEE Transactions on Communications, vol. 66, no. 4, pp. 1594–1608, 2017.

- [26] T. Benson, A. Akella, and D. A. Maltz, “Network traffic characteristics of data centers in the wild,” in Proceedings of ACM IMC, 2010.

- [27] M. Mitzenmacher, “The power of two choices in randomized load balancing,” IEEE Transactions on Parallel and Distributed Systems, vol. 12, no. 10, pp. 1094–1104, 2001.

- [28] K. Chen and L. Huang, “Timely-throughput optimal scheduling with prediction,” in Proceedings of IEEE INFOCOM, 2018.

Appendix A Design of Scheme PORA

First, we define Lyapunov function [21] as

| (29) |

Next, we define the drift-plus-penalty as

| (30) |

where is a positive parameter. According to definition (29), the update functions (6), (10), (12), (13), and (14), there exists a positive constant such that

| (31) |

Substituting (A) into the definition of drift-plus-penalty shown in (30) and by , we obtain

| (32) |

Then by the expression of transmission capacity from EFN to CFN shown in (9) and the expression of total power consumptions shown in (16), we have

| (33) |

where and for all .

To solve problem (19), we should minimize the upper bound of in every time slot. However, it is hard to solve a minimization problem with expectation. Thus we approximately solve the problem by considering the following deterministic problem in every time slot :

| (34) |

Problem (34) can be decomposed into subproblems shown in Section V. By solving these subproblems, we develop PORA, an online scheme that indepedently makes predictive offloading decisions , sets CPU frequencies , and allocates transmit powers in every time slot . ∎

Appendix B Proof of Optimal Local CPU Frequency

To solve the optimal solution to subproblem (22), we denote its objective function by

| (35) |

Its first- and second-order derivatives are shown as follows:

| (36) | |||

| (37) |

From the above two derivatives, we conclude that function is convex in interval since its second order derivative satisfies for . On the other hand, its first order derivative satisfies when . Thus the minimum point of over interval is . ∎

Appendix C Proof of Optimal Transmit Power Allocation

We denote the optimal solution to subproblem (24) by and the objective function in subproblem (24) by . Moreover, we define the following function

| (38) |

for each . Then can be expressed as

| (39) |

We denote the minimizer of function in interval by , i.e.,

| (40) |

When , is increasing over interval and . In this case, we have . When , is convex in interval since its second-order derivative satisfies

| (41) |

Thus we obtain by letting its first-order derivative to be zero:

| (42) |

It follows that when ,

| (43) |

If , we have as the constraints in (24) are satisfied. Otherwise, we have the following lemma.

Lemma 1

If , then must satisfy .

Proof: We prove Lemma 1 by contradiction. Suppose that there exists such that . Since , there exist and such that . Note that must hold for since . Now we consider a solution to subproblem (24) which satisfies

| (44) |

where . Then is a feasible solution since

| (45) |

By the definition of in (44), we have

| (46) |

Since is convex and is its unique minimizer, we have

| (47) |

It follows that

| (48) |

which contradicts the fact that is the optimal solution to (24). Thus must equal zero and satisfies . ∎

When , to find the optimal solution to problem (24), we need the following lemma as well.

Lemma 2

For any , if , then .

Proof: By (43), if and only if . Next, we show that if , then the optimal must be zero. Particularly, we prove it by contradiction.

Assume the optimal , then there must exist a feasible solution such that for all and . Then we have

| (49) |

If , according to , we have

| (50) |

If , since is the unique minimizer of over , we have

| (51) |

which contradicts the fact that is the optimal solution of problem (24). Thus for any with , the optimal must be zero. ∎

We define . By applying Lemma 1 and Lemma 2, when , we just need to solve the following problem:

| (52) |

Note that is the optimal solution to problem (52) and it satisfies the following KKT conditions:

| (53) | ||||

| (54) | ||||

| (55) | ||||

| (56) | ||||

| (57) |

where and are the corresponding optimal dual variables. Multiplying both sides of (53) by , we have

| (58) |

It follows by (54) that

| (59) |

On the other hand, according to (53) and (55), we have

| (60) |

for every . Now we consider two cases:

- 1.

-

2.

If , then condition (59) holds if and only if .

In conclusion, we have

| (62) |

or equivalently,

| (63) |

Note that the above expression also applies to the case when . Then by substituting (63) into (56), we obtain

| (64) |

The left-hand side is a piecewise-linear decreasing function of , with the breakpoint at . Therefore, the equation has a unique solution. ∎

Appendix D Proof of Theorem 2

Applying the Corollary 1 in [16], given prediction window size , the average latency of workload in arrival queue of EFN under PORA is

| (65) |

According to Little’s theorem [23], the average arrival queue backlog size of EFN under prediction is

| (66) |

Therefore, the total average arrival queue backlog sizes of all EFNs is

| (67) |

When the prediction window size is zero, i.e., when there is no prediction, the corresponding total average arrival queue backlog size of all EFNs is

| (68) |

Using (67) and (68), we conclude that

| (69) |

Dividing both sides by and using Little’s theorem, we obtain (27).