PR-PL: A Novel Transfer Learning Framework with

Prototypical Representation based Pairwise Learning

for EEG-Based Emotion Recognition

Abstract

Affective brain-computer interfaces based on electroencephalography (EEG) is an important branch in the field of affective computing. However, individual differences and noisy labels seriously limit the effectiveness and generalizability of EEG-based emotion recognition models. In this paper, we propose a novel transfer learning framework with Prototypical Representation based Pairwise Learning (PR-PL) to learn discriminative and generalized prototypical representations for emotion revealing across individuals and formulate emotion recognition as pairwise learning for alleviating the reliance on precise label information. More specifically, a prototypical learning-based adversarial discriminative domain adaptation method is developed to encode the inherent emotion-related semantic structure of EEG data, while pairwise learning with an adaptive pseudo-labeling method is developed to achieve a reliable and stable model learning with noisy labels. Through domain adaptation, feature representations of source and target domains are aligned on a shared feature space, while the feature separability of both source and target domains is also considered. The characterized prototypical representations are evident with a high feature concentration within one single emotion category and a high feature separability across different emotion categories. Extensive experiments are conducted on two benchmark databases under four cross-validation evaluation protocols (cross-subject cross-session, cross-subject within-session, within-subject cross-session, and within-subject within-session). The experimental results demonstrate the superiority of the proposed PR-PL against the state-of-the-arts under all four evaluation protocols, which shows the effectiveness and generalizability of PR-PL in dealing with the ambiguity of EEG responses in affective studies. The source code is available at https://github.com/KAZABANA/PR-PL.

Index Terms:

Electroencephalography; Emotion Recognition; Prototypical Representation; Pairwise Learning; Transfer Learning.I Introduction

Affective computing is a fast growing interdisciplinary research field and is attracting researchers’ attention from different areas including computer science, neuroscience, psychology, and signal processing [1]. Recently, electroencephalography (EEG) based emotion recognition has become an increasingly important topic for affective computing and human sentiment analysis [2, 3]. A proper design of EEG-based emotion recognition models is helpful for facilitating the data processing, benefiting discriminant feature characterization, and lightening the model performance. Currently, there exist two main critical issues in EEG-based emotion recognition. One is individual differences: how to build a generalized affective computing model which could tolerate the remarkable individual differences in the simultaneously collected EEG signals; and another is noisy label learning: how to train a reliable and stable affective computing model which is less reliant on the subjective feedback.

In recent years, more and more researchers have focused on applying transfer learning methods to alleviate the individual differences in EEG signals [4, 5, 6, 7, 8, 9] and improve feature invariant representation [10, 11, 12]. Considering the individuals with and without labels (termed as source domain and target domain), transfer learning tries to minimize the distribution difference between the source and target domains by approximately satisfying the assumption of independent and identical distribution and can consequently realize a higher recognition performance on the target domain. Through a domain-shifting strategy, the invariant feature representations across different domains are learned and the relationships among the learned features, data distribution, and labels are explored. For example, Li et al. [5] proposed a multisource transfer learning method with two transfer learning stages. In the first stage, appropriate samples were selected from the existing source domain. In the second stage, a style transfer mapping was implemented to alleviate the differences between the selected source samples and the unknown target samples. The results showed the proposed transfer method outperformed the non-transfer method with an improvement of 12.72% on the public SEED database [13] with a three-class classification problem (negative, neutral, and positive). Inspired by neuroscience findings that different emotions would lead to different brain reactions, Li et al. [6] proposed a novel R2G-STNN network to integrate the EEG spatial-temporal dynamics at the local and global brain areas and realize an efficient emotion recognition performance together with a domain shift learning. More details about current EEG-based emotion recognition models with the transfer learning algorithms are presented in Section II.

For video-evoking EEG-emotion experiments, subjects may not always be able to accurately react to the intended emotions, and at the same time may not be able to accurately describe and feedback on their emotional changes. This would bring label noise to the emotional information annotation of EEG samples and further lead to a negative impact on the model performance [14]. To tackle this issue, Zhong et al. [8] developed an emotion-aware distribution learning method (RGNN), in which they blurred the label information by changing the one-hot label representation to and trained the model to be less sensitive label noise. However, the model performance would greatly rely on the selection of value, and an optimal value selection could be different for different databases and different individuals. Current EEG-based emotion recognition models are mainly based on pointwise learning, which heavily relies on precisely labeled data. Contrarily, pairwise learning makes it possible to model the relative associations between pairs of instances and to efficiently encode the proximity among samples with less reliance on labeling. Thus, pointwise learning has achieved tremendous success in a number of real-world applications [15, 16, 17, 18].

To further improve the effectiveness and generalizability of EEG-based emotion recognition models and eliminate the negative effects from individual differences and label noises, in this paper, we formulate the emotion recognition tasks as a pairwise learning problem and propose a novel transfer learning framework with prototypical representation based pairwise learning (which is termed as PR-PL below). Here, we model the relative relationship between pairs of EEG samples in terms of prototypical representations, which is advantageous to pointwise learning when the labeling task is difficult and even the provided labels are wrong labels[19]. The major novelties of the proposed PR-PL are summarized as follows. (1) We formulate emotion recognition as pairwise learning to replace the classifier and greatly alleviate the label dependence on emotion labels. The pairwise learning provides us an alternative way to measure whether two EEG signals belong to the same emotion category without the reliance on the precise labeling information. The extensive experimental results on two well-known emotional databases (SEED [13] and SEED-IV [20]) prove the proposed PR-PL is a more accurate model than the state-of-the-arts for solving the EEG-based emotion recognition tasks under different application environments (cross-subject cross-session, cross-subject single-session, within-subject cross-session, and within-subject single-session). (2) We propose a novel prototypical learning-based adversarial discriminative domain adaptation method to explore latent variables of emotion categories, encode the semantic structure of EEG data, and learn subject-generalized prototypical representations for emotion revealing across individuals. The characterized prototypical representations show a high feature concentration within one single emotion category and a high feature separability across different emotion categories. (3) Different from the existing transfer learning methods that only focus on feature separability in the source domain, we consider the feature separability of both source and target domains through the end-to-end domain adversarial training to further enhance the model effectiveness and generalizability.

II Related Work

The existing EEG-based emotion recognition models with the transfer learning algorithms can be generally categorized into two types.

(a) Non-deep transfer learning models. Pan et al. [21] proposed a transfer component analysis (TCA) algorithm to reduce the marginal distribution difference between the source and target domains, in which the transfer information was learned in a reproducing kernel Hilbert space through maximizing mean discrepancy. Zheng and Lu[22] introduced two types of subject-to-subject transfer methods to deal with the challenge of the individual differences in EEG signal processing. One was to explore a shared common feature space underlying source and target domains using TCA and kernel principal analysis (KPCA), and another was to construct multiple personalized classifiers on the source domain and map the classifier parameters to the target domain using transductive parameter transfer (TPT). These non-deep transfer learning strategies show the possibility to bridge the discrepancy across two domains with improved performance on the target domain. However, due to the small capacity and low complexity, the model accuracy and stability are still limited, which fails to satisfy the requirements of affective brain-computer interfaces (aBCI) in practical applications.

(b) Deep transfer learning models. Most of the existing affective models are based on deep transfer learning methods built with domain-adversarial neural network (DANN) proposed in [23]. The main idea of DANN is to find a shared feature representation for source and target domains with indistinguishable distribution differences and also maintain the predictive ability of the estimated features on the source samples for a specific classification task. Li et al. [24] was the first to introduce DANN in aBCI. Benefiting from the powerful feature representation ability of deep networks and the high efficiency of adversarial learning in distributed adaptation, the results showed that DANN based aBCI system was superior to other methods. The following aBCI systems could be considered as a series of DANN-based models, which generally start from two directions to improve the DANN performance in solving EEG-based emotion recognition tasks.

-

•

Incorporating the prior knowledge of neuroscience and brain anatomy with DANN. Inspired by the neuroscience findings of the asymmetry property of the left and right hemispheres in emotional responses, Yang et al. [25] proposed a bi-hemisphere domain adversarial neural network (BiDANN), in which a global and two local domain discriminators were designed to learn discriminant features from each cerebral hemisphere related to emotion perception and also improve the feature stability to the variation of different domains. The experiments on the SEED database demonstrated that BiDANN achieved higher emotion recognition performance than DANN. Considering the emotional responses from different brain regions would be varied, Yang et al. [6] proposed an R2G-STNN (regional to global-spatial-temporal neural network) to integrate the spatial-temporal information from local and global brain regions under importance guidance and characterize hierarchical feature representations. Similarly, under an assumption that not all EEG channels are equally important in emotion recognition tasks, Du et al. [26] integrated attention mechanism, long-short-term memory (LSTM), and DANN to propose an attention-based LSTM with domain discriminator (ATDD-LSTM) and characterize the nonlinear relations among different EEG channels in a data-driven approach and optimally select informative emotion-related channels.

-

•

Incorporating the probability distribution with DANN. To deal with the training instability of DANN, Luo et al. [27] introduced WGAN-GP (Wasserstein generative adversarial network with gradient penalty) to narrow down the distance between the marginal probability distributions of different subjects. The results showed the model stability was improved and a better cross-subject EEG-based emotion recognition was achieved. However, current DANN-based models only consider the marginal distribution differences but ignore the joint distribution differences of different domains. To address this problem, Li et al. [28] introduced a joint domain adaptation network (JLNN), where the joint distribution adaptation (JDA) method was incorporated with a unified framework of task-invariant features (MDA) and task-specific features (CDA).

Although the above models have achieved higher accuracies compared to the original model with DANN in emotion recognition tasks, there still exist three major technical challenges. First, the learned feature representation is susceptible to noise interference from both source and target domains and would further affect the model generalizability [29, 30]. Second, the existing models only focus on the feature separability in the source domain but ignore the feature separability in the target domain. The current DANN-based models mainly concern the emotion classification loss in the source domain, which would lead to the over-fitting of source domain data and the decrease of classification ability on target domain data. Third, the existing algorithms largely rely on a large amount of labeled source domain data. However, in practical EEG applications, it is difficult to collect accurate labels for each single EEG trial.

| Notation | Description |

|---|---|

| source\target domain | |

| source\target feature | |

| source\target label | |

| number of source\target samples | |

| the source\target dataset | |

| sample feature extractor | |

| domain discriminator | |

| bi-linear operation | |

| the parameter of the feature extractor | |

| the parameter of the discriminator | |

| the parameter of the bi-linear operation | |

| centroid | |

| predict label |

III Methodology

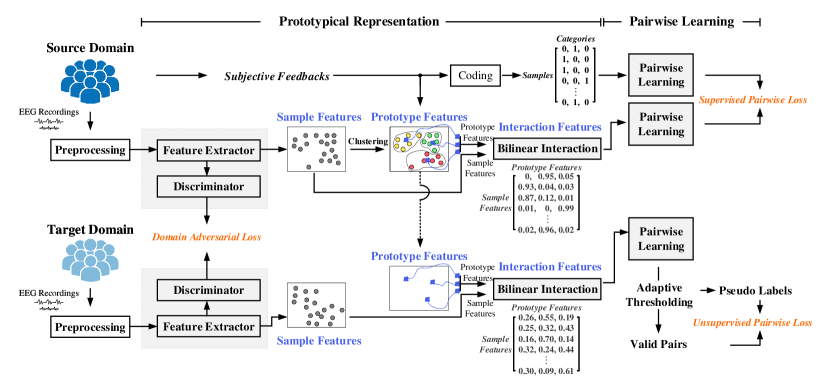

Suppose the EEG trials in the source domain and target domain are given as and , where and . Here, and are EEG samples, and and are the corresponding emotion labels. To make the narrative clearer, the frequently used notations are summarized in Table I. As shown in Fig. 1, the proposed PR-PL includes three losses (domain adversarial loss, pairwise learning loss on source domain, and pairwise learning loss on target domain) and two main parts (prototypical representation and pairwise learning). In the prototypical representation, three types of features are defined. The characterized features or from the EEG samples are termed as sample features, which are forced to be as indistinguishable as possible from the source and target domains. Under an assumption that any emotion could be represented by a prototype via prototypical learning, the prototype features of each emotion category are learned based on the sample features and from the source domain. The interaction relationships between the sample features and prototype features are measured and the interaction features are characterized which will be used in the following pairwise learning. In the pairwise learning, the pair relationships on both source and target domains are explored. As the information about are unknown during model training, an adaptive thresholding method is developed for valid pair selection and pseudo label generation.

III-A Sample feature extraction

To make both source and target data satisfy the assumption of independent and identical distribution and obey the same distribution, we characterize the sample features based on a domain adversarial training introduced in DANN. Here, the distribution difference between the source domain and the target domain is alleviated and the sample features with domain invariant properties are characterized. Through this process, the individual differences in EEG signals could be alleviated and the generalization ability of the models could be improved [25, 27, 6, 26]. Specifically, the domain difference between the source domain sample feature and the target domain sample feature are minimized by adopting domain adversarial training. Here, is the designed feature extractor for extracting the sample features from EEG signals. A discriminator network with the parameter is introduced to distinguish whether the characterized sample features ( or ) come from the source domain () or the target domain (). Its loss function is a standard two-category cross-entropy loss function, given as

| (1) |

In the training process, we adopt the end-to-end training method [23], and implement the domain adversarial training by introducing a gradient reversal layer. The feature extractor maximizes the classification ability to enhance emotion recognition performance and at the same time, the discriminator minimizes the domain discrimination to reduce the distribution difference between the source domain and target domain. The final domain adversarial training objective function is defined as

| (2) |

which is termed as domain adversarial loss in Fig. 1. Here, is the classification loss to measure the classification ability in source domain. In this paper, will be realized by pairwise learning on source and target domains introduced in Section III-D and III-E below. is the adversarial loss for the discriminator to be trained to distinguish the sample features characterized from source and target domains. and are the parameters of and . is a balanced hyperparameter for ensuring the stability of domain adversarial, which is given by a exponential growth method as

| (3) |

Here, is a factor related to the training round, given by a ratio of the current training round to the maximum training round.

III-B Prototype feature extraction

We assume that there exists a prototype for each emotion category. Through prototypical learning, the prototype features are learned to indicate the representation property of every single emotion category. Based on the sample features extracted from different subjects under different emotions, we could consider these sample features are distributed around the prototype features. In other words, for each emotion category, the prototype features could be considered the ”center of mass” of all the sample features. From the perspective of a probability distribution, the prototype feature of an emotion category can be regarded as the mean value of the sample feature distribution of the emotion, and the variance of the distribution is caused by the non-stationary EEG, including but not limited to individual differences. Assume that the sample features under an emotion category obey the Gaussian distribution . The prototype features of the emotion category could be calculated as the mean vector of the distribution. For the source domain data , the corresponding sample features are characterized by the feature extractor , given as (defined in Section III-A). The prototype feature vector of the emotion category can be calculated by averaging all the sample features that belong to this category, given as

| (4) |

where are a collection of source domain data belonging to the emotion category . are the corresponding sample size in this emotion category. In other words, could be expressed as the centroid of the sample features of . The mean value calculation is a widely used, simple, and effective noise reduction strategy, which can make the prototype features stronger than the sample features and help to alleviate the problem of the traditional DANN network being susceptible to related noise interference [31]. It is worth noting that since the calculation of the prototype features needs to use emotional label information, we only use the source domain data here for prototype feature extraction.

III-C Interaction feature extraction

The traditional DANN extracted domain-invariant shared feature representations could be easily contaminated by the shared and related noises in source and target domains. In this paper, we introduce a bilinear interaction to measure the interaction relationships between the sample features and prototype features and extract the interaction features for the following pairwise learning. For a given -dimensional sample feature , its interaction relationship to a certain prototype feature can be measured by a bilinear transformation , defined as

| (5) |

where is a bilinear transformation matrix with trainable parameters and is not restricted by symmetry or positive definiteness. Suppose there are total emotion categories, the interaction measurement between a certain sample feature and different prototype features can be represented as

| (6) |

where are all the prototype features. An introduction of the activation function (softmax) here is to add nonlinear advantages to the interaction measurement, enhance the feature representation ability, and at the same time allow the feature vector to have category prediction capabilities.

III-D Pairwise learning on source domain

Traditional pointwise learning algorithms often regard the feature vector as the predicted label of the sample feature and use the cross-entropy loss function to match the label of based on supervised training. Then, if the sample features of one EEG trial match the prototype features of th emotion category the most, the EEG trial will be assigned to the th emotion category. However, this type of pointwise learning only focuses on the relationship between sample features and prototype features and ignores the relationship between different sample features. For example, the sample features belonging to different emotion categories should be separated from each other, and the sample features belonging to the same emotion category should be gathered together. To tackle this issue, we introduce pairwise learning to capture the inherent relationship of samples.

For the source domain data , the corresponding loss function for pairwise learning is defined as

| (7) |

where is the similarity measurement of the samples and , with the parameter of . According to the assumption of pairwise learning, if , then ; otherwise . The loss function is a difference calculation of and , given by a two-category cross-entropy loss as

| (8) | ||||

In the training process, the label information of source domain data is used to define in a supervised manner. In other words, based on the given information of , if two samples belong to the same emotion category, then , otherwise . A supervised can ensure the stability of the training process and the generalization ability of the model. The next key question is how to define a proper to compute the similarity between and in terms of the characterized interaction features (termed as and ). To make the similarity results locate in the range of and extract better and more robust feature representations for subsequent emotion recognition, we add a norm restriction on as

| (9) |

The similarity of and is calculated as the cosine similarity, given as

| (10) |

where refers to inner product operation. As stated in Chang et al. [32], the above-mentioned norm restriction can make the vector have a clustering function, and the elements in the vector represent the probability that the feature belongs to a certain category cluster. Overall, the objective function of pairwise learning on the source domain is defined as

| (11) |

which is termed as supervised pairwise loss in Fig. 1. Here, , is the parameter of feature extractor , and is the defined bilinear transformation matrix for interaction feature extraction. Besides, to avoid redundant feature extraction, a soft regularization is introduced with a weight parameter of , which is defined as

| (12) |

Here, each row of the matrix refers to the prototype feature belonging to one emotion category, is a norm of the matrix, and is an identity matrix. The above loss function (Eq. 11) could be interpreted as a clustering loss, instead of emotion category classification loss. The main optimization goal is to gather the EEG samples that may belong to the same emotion category and separate the EEG samples that do not belong to the same category. The vector is the characterized interaction feature for mapping to a non-linear informative feature space by measuring the interaction relationship between the sample features and all the available prototype features.

III-E Pairwise learning on target domain

In this paper, besides of source domain, we also introduce the pairwise learning on the target domain to improve the feature separability in the target domain, as

| (13) |

which is termed as unsupervised pairwise loss in Fig. 1. Here, is the interaction features of the target domain data characterized in Eq. 6. The scalar symbolizes the pairing relationship of the samples in the target domain. Since the label information of the target domain is completely missing in the training process, we cannot accurately obtain the pairing relationship as the source domain. To address this issue, we introduce an adaptive thresholding method to generate the valid pseudo labels and define the pairing relationship of the target domain data. Suppose that is defined as

| (14) |

where and are the upper and lower bounds to select valid pairs with high confidence for unsupervised pairwise learning (valid pair selection). Here, if the calculated pairwise similarity is higher or equal to the defined upper bound (), then the corresponding pseudo label would be assigned to 1; while if the calculated pairwise similarity is lower than the defined lower bound (), then the corresponding pseudo label would be assigned to 0. For the other pairs that do not meet the threshold requirement, we would consider that the model is uncertain about whether the sample pair is paired or not and consider these pairs as invalid results. In order to prevent incorrect optimization, the invalid pairs would be temporarily excluded and will not participate in training and loss calculations at the current training round.

In the early training stage, the classification performance in the target domain is not good enough. In order to ensure the training stability, we set a strict upper threshold () and a lower threshold () and exclude most of the pair results in the target domain. Along with the enhancement of model performance in the target domain, we can gradually lower the upper threshold and raise the lower threshold and allow more samples to participate in the training part. In other words, along with the increase in training steps, more pair samples in the target domain will be included for model learning. Here, we form a non-linear dynamic update for thresholding, as

| (15) |

| (16) |

where represents the upper threshold of the current training round , represents the current lower threshold, and is the maximum training round. Based on the given initial values and , the calculations of and could be considered as non-linear changes with respect to the training rounds as

| (17) |

In all, combining the domain adversarial loss (Eq. 2), the pairwise learning loss in the source domain (Eq. 11, and the pairwise learning loss in the target domain (Eq. 13), the final objective function of PR-PL could be given as follows:

| (18) |

where is a hyperparameter to control the importance of the pairing loss of the target domain, given as . is the current training round, and is the maximum training round. Empirically, is set to 2.

IV Experimental Results

IV-A Emotional Databases and Data Preprocessing

To have a fair comparison with the state-of-the-art methods, we validate our proposed model on two well-known public databases: SEED [13] and SEED-IV [20]. In SEED database [13], a total of 15 subjects were invited. Each subject performed three sessions on different days and each session contained 15 trials. A total of three emotions were elicited (negative, neutral, and positive). In SEED-IV database [20], a total of 15 subjects participated in the experiment. For each subject, a total of 3 sessions were performed on different days and each session contained 24 trials. A total of four emotions were elicited ( happiness, sadness, fear, and neutral). The EEG signals of both SEED and SEED-IV databases were simultaneously collected using the 62-channel ESI Neuroscan system.

For EEG preprocessing, the data sampling rate was first downsampled to 200Hz, and the contaminated noises (e.g. EMG and EOG) were manually removed. Then, the data were filtered by a band-pass filter of 0.3 Hz to 50Hz. For each trial, the data was divided into a number of segments with a length of 1s. Based on the pre-defined five frequency bands: Delta (1-3 Hz), Theta (4-7 Hz), Alpha (8-13 Hz), Beta (14-30 Hz), and Gamma (31-50 Hz), the corresponding differential entropy (DE) features were extracted to represent the logarithm energy spectrum in a specific frequency band and total 310 features (5 frequency band 62 channels) were obtained for one EEG segment. Then, all the features were smoothed with the linear dynamic system (LDS) method, which can utilize the time dependency of emotion changes and filter out emotion unrelated and noisy EEG components[33].

IV-B Implementation Results

In our experiments, the feature extractor and discriminator are both made up of multilayer perceptron (MLP) with the Relu activation function. All the parameters are randomly initialized from a uniform distribution. The bilinear operator matrix is also randomly initialized. In the model architecture, the feature extractor structure is designed as 310 (input layer)-64 (hidden layer 1)-Relu activation-64 (hidden layer 2)-Relu activation-64 (output feature layer). The discriminator structure is designed as 64 (input layer)-64 (hidden layer 1)-Relu activation-dropout layer-64 (hidden layer 2)-1 (output layer)-Sigmoid activation. The size of matrix given in Eq. 5 is . Besides, we adopt an RMSprop optimizer for network training, which shows a better performance than the other classic optimizers. The learning rate is set to 1e-3 and the mini-batch size for training is 96. To avoid overfitting problems, we use regularizes (1e-5) in the networks. The regularization coefficient in Eq. 11 is 0.01. The balance parameter for pairwise learning on the target domain in Eq. 18 is controlled by a constant factor of 2. The threshold and are given to 0.9 and 0.5 respectively. All the models are trained on an NVIDIA GeForce RTX 2080 GPU, with CUDA 10.0 using the Pytorch API.

IV-C Experiment Protocols

To fully evaluate the robustness and stability of the proposed model and compare it with the existing literature, we validate PR-PL using four different validation protocols. (1) Cross-subject cross-session leave-one-subject-out cross-validation. We evaluate the model with a strict cross-subject cross-session leave-one-subject-out to fully estimate the model robustness on the unknown subject(s) and session(s). One subject’s all sessions data are used as the target and the remaining subjects’ all sessions are used as the source. We repeat the training validation until each subject’s all sessions are treated as the target for once. Due to the variants in individuals and sessions, this evaluation protocol poses a great challenge to the model’s effectiveness in the EEG-based emotion recognition tasks. (2) Cross-subject single-session leave-one-subject-out cross-validation. It is the most widely used validation scheme in the EEG-based emotion recognition tasks [34, 27, 5, 28]. One subject’s one-session data is treated as the target and the other remaining subjects are used as the source. We repeat the training validation process until each subject is treated as the target for once. Same as the other studies, we only consider the first session in this type of cross-validation. (3) Within-subject cross-session leave-one-session-out cross-validation. Similar to the existing methods, a time-series cross-validation method is adopted here, where the past data is used to predict current or future data. For one subject, the first two sessions are used as the source, and the latter session is used as the target. The average accuracies and standard deviations across subjects are calculated as the final results. (4) Within-subject single-session cross-validation. Following the validation protocol presented in the existing studies [13, 20], for each session of one subject, we use the first 9 (SEED) or 16 (SEED-IV) trials as the source and the rest 6 (SEED) or 8 (SEED-IV) trials as the target. The results are reported as the average performance across all the subjects. In the following performance comparison across four different validation protocols, the model results reproduced by us are indicated by ‘*’.

IV-D Cross-subject cross-session leave-one-subject-out cross-validation results

To verify the model efficiency and stability on both cross-subject and cross-session conditions, we verify the proposed PR-PL using cross-subject cross-session leave-one-subject-out cross-validation on both SEED and SEED-IV databases. As reported in Table II and Table III, the results show our proposed model achieves the highest results, where the emotion recognition performance of PR-PL is 85.56%4.78% for three-class classification task on SEED and 74.92%7.92% for four-class classification task on SEED-IV. Compared to the existing studies, the proposed PR-PL increases the classification accuracy to 3.39% and 1.08% for SEED and SEED-IV, with smaller standard deviations. These results demonstrate the proposed PR-PL has better affective effectiveness with higher recognition accuracy and better generalizability.

| Methods | Methods | ||

| Traditional machine learning methods | |||

| RF*[35] | 69.6007.64 | KNN*[36] | 60.6607.93 |

| SVM*[37] | 68.1507.38 | Adaboost*[38] | 71.8705.70 |

| TCA*[21] | 64.0207.96 | CORAL*[39] | 68.1507.83 |

| SA*[SA2013] | 61.4109.75 | GFK*[40] | 66.0207.59 |

| Deep learning methods | |||

| DCORAL* [41] | 81.9705.16 | DAN*[42] | 81.0405.32 |

| DDC* [43] | 82.1704.96 | DANN* [23] | 81.0805.88 |

| PR-PL | 85.5604.78 | ||

| Methods | Methods | ||

| Traditional machine learning methods | |||

| RF*[35] | 50.9809.20 | KNN*[36] | 40.8307.28 |

| SVM[24] | 51.7812.85 | Adaboost*[38] | 53.4409.12 |

| TCA[44] | 56.5613.77 | CORAL*[39] | 49.4409.09 |

| SA[44] | 64.4409.46 | GFK*[40] | 45.8908.27 |

| KPCA[24] | 51.7612.89 | DNN[24] | 49.3509.74 |

| Deep learning methods | |||

| DGCNN [45] | 52.8209.23 | DAN [24] | 58.8708.13 |

| RGNN [8] | 73.8408.02 | BiHDM [44] | 69.0308.66 |

| BiDANN[25] | 65.5910.39 | DANN[24] | 54.6308.03 |

| PR-PL | 74.9207.92 | ||

IV-E Cross-subject single-session leave-one-subject-out cross-validation results

Table IV summarizes the model results in cross-subject single-session leave-one-subject-out recognition task and compare the performance with the literature. All the results are presented in terms meanstandard deviation. The results show our proposed model (PR-PL) achieves the best performance (93.06%), with a standard deviation of 5.12%. Our PR-PL leads 2.14% against the reported best results in the literature. Especially, compared to the latest proposed DANN based deep transfer learning networks (e.g. ATDD-DANN [26], R2G-STNN[6], BiHDM[44], BiDANN[25], and WGAN-GP[27]), the proposed PR-PL with pairwise learning can avoid the inherent defects of DANN design (e.g. only considers feature separability on source domain) and well address the individual differences and noisy labeling issues in aBCI applications.

| Methods | Methods | ||

| Traditional machine learning methods | |||

| TKL[25] | 63.5415.47 | T-SVM[25] | 72.5314.00 |

| TCA[44] | 63.6414.88 | TPT[24] | 75.1712.83 |

| KPCA[24] | 61.2814.62 | GFK[44] | 71.3114.09 |

| SA[44] | 69.0010.89 | DICA[46] | 69.4007.80 |

| DNN[24] | 61.0112.38 | SVM[24] | 58.1813.85 |

| Deep learning methods | |||

| DGCNN [45] | 79.9509.02 | DAN [24] | 83.8108.56 |

| RGNN [8] | 85.3006.72 | BiHDM [44] | 85.4007.53 |

| WGAN-GP[27] | 87.1007.10 | MMD[28] | 80.8810.10 |

| ATDD-DANN[26] | 90.9201.05 | JDA-Net[28] | 88.2811.44 |

| R2G-STNN[6] | 84.1607.63 | SimNet*[31] | 81.5805.11 |

| BiDANN[25] | 83.2809.60 | DResNet[46] | 85.3008.00 |

| ADA[28] | 84.4710.65 | DANN[28] | 81.6509.92 |

| PR-PL | 93.0605.12 | ||

IV-F Within-subject cross-session cross-validation results

By calculating the average and standard deviation of the experimental results of each subject, the final within-subject cross-session cross-validation results are reported in Table V for the SEED database and Table VI for the SEED-IV database. For both databases, our proposed PR-PL achieves the highest recognition performance compared with the state-of-the-art methods (including both traditional machine learning methods and deep learning methods), where the results are 93.18%6.55% and 74.62%14.15% for SEED (three-class emotion recognition) and SEED-IV (four-class emotion recognition), respectively.

| Methods | Methods | ||

| Traditional machine learning methods | |||

| RF*[35] | 76.4211.15 | KNN*[36] | 75.6813.82 |

| TCA*[21] | 74.2712.88 | CORAL*[39] | 84.1809.81 |

| SA*[SA2013] | 69.8409.46 | GFK*[40] | 78.7909.39 |

| Deep learning methods | |||

| DAN*[42] | 89.1607.90 | SimNet*[31] | 86.8807.83 |

| DDC*[43] | 91.1405.61 | ADA[28] | 89.1307.13 |

| DANN*[23] | 89.4506.74 | MMD[28] | 84.3812.05 |

| JDA-Net[28] | 91.1708.11 | DCORAL*[41] | 88.6706.25 |

| PR-PL | 93.1806.55 | ||

| Methods | Methods | ||

| Traditional machine learning methods | |||

| RF*[35] | 60.2716.36 | KNN*[36] | 54.1816.28 |

| TCA*[21] | 51.8815.84 | CORAL*[39] | 66.0615.13 |

| SA*[SA2013] | 52.8109.53 | GFK*[40] | 56.1412.15 |

| Deep learning methods | |||

| DCORAL [47] | 65.1013.20 | DAN [47] | 60.2010.20 |

| DDC [47] | 68.8016.60 | MEERNet[47] | 72.1014.10 |

| PR-PL | 74.6214.15 | ||

IV-G Within-subject single-session cross-validation results

Consistent with the evaluation method presented in the previous studies that only consider the first two sessions of the SEED database for experiments, we present the within-subject single-session results in Table VII. It shows our proposed model obtains the best recognition performance of 94.84%. Comparing the recognition results between cross-subject single-session (Table IV) and within-subject single-session (Table VII) emotion recognition tasks, the proposed PR-PL achieves the highest accuracies and at the same time perform the closest results on the two cross-validation methods (cross-subject single-session: 93.0605.12; within-subject single-session: 94.8409.16). For the other models, such as DGCNN[45], BiDANN[25], R2G-STNN[6], RGNN[8], and BiHDM[44], there exists a significant difference between cross-subject and within-subject results (9.09% difference on average). This comparison demonstrates the efficiency and reliability of the proposed PR-PL in various emotion recognition applications.

| Methods | Methods | ||

| Traditional machine learning methods | |||

| SVM[44] | 83.9909.72 | GRSLR[48] | 87.3908.64 |

| RF[44] | 78.4611.77 | GSCCA[49] | 82.9609.95 |

| CCA[44] | 77.6313.21 | DBN[13] | 86.0808.34 |

| Deep learning methods | |||

| DGCNN[50] | 90.4008.49 | RGNN[8] | 94.2405.95 |

| ATDD-DANN[26] | 91.0806.43 | BiHDM[44] | 93.1206.06 |

| R2G-STNN[6] | 93.3805.96 | SimNet*[31] | 90.1310.84 |

| BiDANN[25] | 92.3807.04 | STRNN[51] | 89.5007.63 |

| GCNN[44] | 87.4009.20 | DANN[44] | 91.3608.30 |

| PR-PL | 94.8409.16 | ||

For the SEED-IV database, we calculate the performance on all three sessions as reported in the other studies and decode emotions into four categories (happiness, sadness, fear, and neutral). Our proposed model outperforms the existing studies, with the highest accuracy of 83.33%, which leads to a 3.96% increase as compared to the SOTA (79.37%[8]).

| Methods | Methods | ||

| Traditional machine learning methods | |||

| SVM[44] | 56.6120.05 | GRSLR[48] | 69.3219.57 |

| RF[44] | 50.9716.22 | GSCCA[49] | 69.0816.66 |

| CCA[44] | 54.4718.48 | DBN[13] | 66.7707.38 |

| Deep learning methods | |||

| DGCNN[50] | 69.8816.29 | RGNN[8] | 79.3710.54 |

| GCNN[44] | 68.3415.42 | BiHDM[44] | 74.3514.09 |

| A-LSTM[44] | 69.5015.45 | SimNet*[31] | 71.3813.12 |

| BiDANN[25] | 70.2912.63 | DANN[44] | 63.0712.66 |

| PR-PL | 83.3310.61 | ||

V Discussion and Conclusion

To fully study the model performance, we evaluate the effect of different settings in PR-PL. Note that all the results presented in this section are based on the SEED database using the cross-subject single-session cross-validation evaluation protocol.

V-A Ablation Study

We conduct the ablation study to systematically explore the effectiveness of different components in the proposed model and examine the corresponding contributions to the overall performance. As shown in Table IX, it is found that the introduction of domain adversarial training can greatly enhance the emotion recognition performance on the target domain. When the model is without discriminator and target domain information, the recognition accuracy reduces from 93.06% to 83.30%. Such a significant drop shows the significant impact of individual differences problem on model performance and highlights the great potential of transfer learning in aBCI applications. Besides, the results show a combination of pairwise learning on the source and target domain benefits to the model performance, where the recognition accuracy is increased by 6.35% (from 87.5% to 93.06%). For the pseudo-labeling method, the corresponding accuracy increases from 89.92% to 92.46% when the pseudo-labeling method changes from fixed to linear dynamic. The accuracy further increases to 93.06%, when a nonlinear dynamic-based adaptive pseudo-labeling method is adopted. The results show a non-linear dynamic pseudo-labeling could be helpful to screen out the valid paired samples and improve the model trainability. For the final loss function given in Eq. 18, instead of using a fixed weight for the pairwise loss on the target domain, we propose to update the weight gradually along with the training epochs to prevent model learning failures in the early training stage and balance the relationships among different losses. The benefit of a dynamic is also reflected in the ablation study, where the recognition accuracy increases from 89.47% and 93.06%.

| Ablation study about training strategy | |

|---|---|

| w/o discriminator and target information | 83.3004.21 |

| w/o pairwise learning on the source and target | 87.5006.64 |

| w/o pairwise learning on the target | 88.8106.63 |

| w/o prototypical representation | 91.0004.65 |

| w/o thresholding for pseudo label generation | 92.1305.90 |

| About hyperparameter controlling strategy | |

| w/ fixed pseudo-labeling | 89.9207.21 |

| w/ linear dynamic pseudo-labeling | 92.4604.95 |

| w/ fixed for target pairwise loss | 89.4710.22 |

| PR-PL | 93.0605.12 |

V-B Effect of Noisy Labels

To further verify the model robustness during noisy label learning, we randomly contaminate the source labels with % noises and test the corresponding model performance on unknown target data. Specifically, we replace % real labels in using randomly generated labels and train the model in supervised learning. Then, we test the trained model performance on the target domain. Note here that the noisy contamination is only conducted on the source domain, as the target domain needs to be used for model evaluation. In the implementation, % value is adjusted to 10%, 20%, and 30%, respectively. The corresponding model accuracies with the standard deviations are 89.22%6.05%, 88.39%6.73%, and 87.71%5.02%. It shows that, with an increase of label noise ratio from 10% to 30%, the model performance decreases slightly, with a decrease rate of 1.69%. These results demonstrate the proposed PR-PL is a reliable model which has a higher tolerance to noisy labels. In the future works, the recently proposed novel methods, such as [52] and [53], could be incorporated to further eliminate more general noises in EEG signals and improve the model stability in the cross-corpus applications.

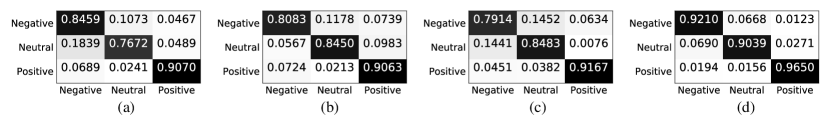

V-C Confusion Matrices

To qualitatively study the model performance in each emotion class, we visually analyze the confusion matrices and compare results with the latest models [25, 44, 8]. As shown in Fig.2, it shows all the models are good at distinguishing positive emotions from other emotions (the recognition rates are all above 90%), but it is relatively poor at distinguishing negative and neutral emotions. For example, the recognition rate of neural in BiDANN [25] is even lower than 80% (76.72%). Compared to the existing methods ((a), (b), and (c)), our proposed model can enhance the model recognition ability, especially for distinguishing neutral and negative emotions. As shown in (d), the recognition rates for negative, neutral, and positive emotions are 92.10%, 90.39%, and 96.50%.

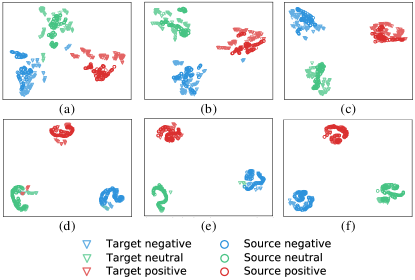

V-D Visualization of Learned Representation

To verify the effectiveness of the proposed model from a more intuitive perspective, we visualize the characterized sample and interaction features of source and target domains using T-SNE[54] in Fig. 3. Here, we randomly select 500 samples from the source and 500 samples from the target for visualization of the learned feature representation. Compared to the representation learned by the other model settings (w/o pairwise learning on the source and target and w/o pairwise learning on the target), the representation learned by the proposed PR-PL forms more separated emotional clusters. Comparing the extracted sample features (c) and interaction features (f) by the proposed PR-PL, the separability of the extracted interaction features from different emotion classes is further enlarged and at the same time, the concentration of the feature distribution for each emotion is also improved.

V-E Conclusion

The paper proposes a novel transfer learning framework with prototypical representation-based pairwise learning (PR-PL), that characterizes EEG data with prototypical representations and formulates the EEG-based emotion recognition task as pairwise learning. We evaluate our proposed model on two well-known emotional databases (SEED and SEED-IV) under four cross-validation protocols (cross-subject single-session, within-subject single-session, within-subject cross-session, and cross-subject cross-session) and compare it with the existing state-of-the-art methods. Our extensive experimental results show PR-PL achieves the best results on all four cross-validation protocols and demonstrate the advantage of PR-PL in tackling individual differences and noisy labeling issues in aBCI systems.

VI Conflicts of Interest

The authors declare that they have no conflicts of interest.

VII Acknowledgment

This work was supported in part by the National Natural Science Foundation of China under Grant 61906122, in part by Shenzhen-Hong Kong Institute of Brain Science-Shenzhen Fundamental Research Institutions (2021SHIBS0003), in part by the Tencent “Rhinoceros Birds”-Scientific Research Foundation for Young Teachers of Shenzhen University, and in part by the High Level University Construction under Grant 000002110133.

References

- [1] S. Siddharth, T.-P. Jung, and T. J. Sejnowski, “Utilizing deep learning towards multi-modal bio-sensing and vision-based affective computing,” IEEE Transactions on Affective Computing, 2019.

- [2] X. Hu, J. Chen, F. Wang, and D. Zhang, “Ten challenges for eeg-based affective computing,” Brain Science Advances, vol. 5, no. 1, pp. 1–20, 2019.

- [3] W. Hu, G. Huang, L. Li, L. Zhang, Z. Zhang, and Z. Liang, “Video-triggered eeg-emotion public databases and current methods: A survey,” Brain Science Advances, vol. 6, no. 3, pp. 255–287, 2020.

- [4] V. Jayaram, M. Alamgir, Y. Altun, B. Scholkopf, and M. Grosse-Wentrup, “Transfer learning in brain-computer interfaces,” IEEE Computational Intelligence Magazine, vol. 11, no. 1, pp. 20–31, 2016.

- [5] J. Li, S. Qiu, Y.-Y. Shen, C.-L. Liu, and H. He, “Multisource transfer learning for cross-subject eeg emotion recognition,” IEEE transactions on cybernetics, vol. 50, no. 7, pp. 3281–3293, 2019.

- [6] Y. Li, W. Zheng, L. Wang, Y. Zong, and Z. Cui, “From regional to global brain: A novel hierarchical spatial-temporal neural network model for eeg emotion recognition,” IEEE Transactions on Affective Computing, 2019.

- [7] H. Cui, A. Liu, X. Zhang, X. Chen, K. Wang, and X. Chen, “Eeg-based emotion recognition using an end-to-end regional-asymmetric convolutional neural network,” Knowledge-Based Systems, vol. 205, p. 106243, 2020.

- [8] P. Zhong, D. Wang, and C. Miao, “Eeg-based emotion recognition using regularized graph neural networks,” IEEE Transactions on Affective Computing, 2020.

- [9] X. Gu, Z. Cao, A. Jolfaei, P. Xu, D. Wu, T.-P. Jung, and C.-T. Lin, “Eeg-based brain-computer interfaces (bcis): A survey of recent studies on signal sensing technologies and computational intelligence approaches and their applications,” IEEE/ACM transactions on computational biology and bioinformatics, 2021.

- [10] O. Özdenizci, Y. Wang, T. Koike-Akino, and D. Erdoğmuş, “Adversarial deep learning in eeg biometrics,” IEEE signal processing letters, vol. 26, no. 5, pp. 710–714, 2019.

- [11] ——, “Learning invariant representations from eeg via adversarial inference,” IEEE access, vol. 8, pp. 27 074–27 085, 2020.

- [12] D. Bethge, P. Hallgarten, T. Grosse-Puppendahl, M. Kari, R. Mikut, A. Schmidt, and O. Özdenizci, “Domain-invariant representation learning from eeg with private encoders,” arXiv preprint arXiv:2201.11613, 2022.

- [13] W.-L. Zheng and B.-L. Lu, “Investigating critical frequency bands and channels for eeg-based emotion recognition with deep neural networks,” IEEE Transactions on Autonomous Mental Development, vol. 7, no. 3, pp. 162–175, 2015.

- [14] Y. Jia, M. Salzmann, and T. Darrell, “Factorized latent spaces with structured sparsity.” in Advances in Neural Information Processing Systems 23: 24th Annual Conference on Neural Information Processing Systems 2010, 01 2010, pp. 982–990.

- [15] H. Bao, G. Niu, and M. Sugiyama, “Classification from pairwise similarity and unlabeled data,” in International Conference on Machine Learning. PMLR, 2018, pp. 452–461.

- [16] H. Bao, T. Shimada, L. Xu, I. Sato, and M. Sugiyama, “Similarity-based classification: Connecting similarity learning to binary classification,” 2020.

- [17] C.-C. Hsu, Y.-X. Zhuang, and C.-Y. Lee, “Deep fake image detection based on pairwise learning,” Applied Sciences, vol. 10, no. 1, p. 370, 2020.

- [18] P. Zhuang, Y. Wang, and Y. Qiao, “Learning attentive pairwise interaction for fine-grained classification,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, 2020, pp. 13 130–13 137.

- [19] L. Yao, S. Li, Y. Li, M. Huai, J. Gao, and A. Zhang, “Representation learning for treatment effect estimation from observational data,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- [20] W.-L. Zheng, W. Liu, Y. Lu, B.-L. Lu, and A. Cichocki, “Emotionmeter: A multimodal framework for recognizing human emotions,” IEEE transactions on cybernetics, vol. 49, no. 3, pp. 1110–1122, 2018.

- [21] S. J. Pan, I. W. Tsang, J. T. Kwok, and Q. Yang, “Domain adaptation via transfer component analysis,” IEEE Transactions on Neural Networks, vol. 22, no. 2, pp. 199–210, 2011.

- [22] W.-L. Zheng and B.-L. Lu, “Personalizing eeg-based affective models with transfer learning,” in Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, ser. IJCAI’16. AAAI Press, 2016, p. 2732–2738.

- [23] Y. Ganin, E. Ustinova, H. Ajakan, P. Germain, H. Larochelle, F. Laviolette, M. Marchand, and V. Lempitsky, “Domain-adversarial training of neural networks,” The journal of machine learning research, vol. 17, no. 1, pp. 2096–2030, 2016.

- [24] H. Li, Y.-M. Jin, W.-L. Zheng, and B.-L. Lu, “Cross-subject emotion recognition using deep adaptation networks,” in Neural Information Processing, L. Cheng, A. C. S. Leung, and S. Ozawa, Eds. Cham: Springer International Publishing, 2018, pp. 403–413.

- [25] Y. Li, W. Zheng, Y. Zong, Z. Cui, T. Zhang, and X. Zhou, “A bi-hemisphere domain adversarial neural network model for eeg emotion recognition,” IEEE Transactions on Affective Computing, 2018.

- [26] X. Du, C. Ma, G. Zhang, J. Li, Y.-K. Lai, G. Zhao, X. Deng, Y.-J. Liu, and H. Wang, “An efficient lstm network for emotion recognition from multichannel eeg signals,” IEEE Transactions on Affective Computing, pp. 1–1, 2020.

- [27] Y. Luo, S. Y. Zhang, W. L. Zheng, and B. L. Lu, “Wgan domain adaptation for eeg-based emotion recognition,” International Conference on Neural Information Processing, 2018.

- [28] J. Li, S. Qiu, C. Du, Y. Wang, and H. He, “Domain adaptation for eeg emotion recognition based on latent representation similarity,” IEEE Transactions on Cognitive and Developmental Systems, vol. 12, no. 2, pp. 344–353, 2020.

- [29] M. Salzmann, C. H. Ek, R. Urtasun, and T. Darrell, “Factorized orthogonal latent spaces,” Journal of Machine Learning Research, vol. 9, pp. 701–708, 2010.

- [30] K. Bousmalis, G. Trigeorgis, N. Silberman, D. Krishnan, and D. Erhan, “Domain separation networks,” ser. NIPS’16, 2016, p. 343–351.

- [31] P. O. Pinheiro, “Unsupervised domain adaptation with similarity learning,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 8004–8013.

- [32] J. Chang, L. Wang, G. Meng, S. Xiang, and C. Pan, “Deep adaptive image clustering,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017, pp. 5880–5888.

- [33] L.-C. Shi and B.-L. Lu, “Off-line and on-line vigilance estimation based on linear dynamical system and manifold learning,” in 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, 2010, pp. 6587–6590.

- [34] W.-L. Zheng, Y.-Q. Zhang, J.-Y. Zhu, and B.-L. Lu, “Transfer components between subjects for eeg-based emotion recognition,” in 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), 2015, pp. 917–922.

- [35] Breiman, “Random forests,” Machine Learning, vol. 45, no. 1, pp. 5–32, 2001.

- [36] “Alternative k-nearest neighbour rules in supervised pattern recognition: Part 1. k-nearest neighbour classification by using alternative voting rules,” Analytica Chimica Acta, vol. 136, pp. 15–27, 1982. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0003267001953590

- [37] J. Suykens and J. Vandewalle, “Least squares support vector machine classifiers,” Neural Processing Letters, vol. 9, no. 3, pp. 293–300, 1999.

- [38] J. Zhu, A. Arbor, and T. Hastie, “Multi-class adaboost,” Statistics & Its Interface, vol. 2, no. 3, pp. 349–360, 2006.

- [39] B. Sun, J. Feng, and K. Saenko, “Return of frustratingly easy domain adaptation,” in Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, ser. AAAI’16. AAAI Press, 2016, p. 2058–2065.

- [40] B. Gong, Y. Shi, F. Sha, and K. Grauman, “Geodesic flow kernel for unsupervised domain adaptation,” in 2012 IEEE Conference on Computer Vision and Pattern Recognition, 2012, pp. 2066–2073.

- [41] B. Sun and K. Saenko, “Deep coral: Correlation alignment for deep domain adaptation,” in Computer Vision – ECCV 2016 Workshops, G. Hua and H. Jégou, Eds. Cham: Springer International Publishing, 2016, pp. 443–450.

- [42] M. Long, Y. Cao, J. Wang, and M. Jordan, “Learning transferable features with deep adaptation networks,” in International conference on machine learning. PMLR, 2015, pp. 97–105.

- [43] E. Tzeng, J. Hoffman, N. Zhang, K. Saenko, and T. Darrell, “Deep domain confusion: Maximizing for domain invariance,” CoRR, vol. abs/1412.3474, 2014. [Online]. Available: http://arxiv.org/abs/1412.3474

- [44] Y. Li, L. Wang, W. Zheng, Y. Zong, L. Qi, Z. Cui, T. Zhang, and T. Song, “A novel bi-hemispheric discrepancy model for eeg emotion recognition,” IEEE Transactions on Cognitive and Developmental Systems, vol. 13, no. 2, pp. 354–367, 2020.

- [45] T. Song, W. Zheng, P. Song, and Z. Cui, “Eeg emotion recognition using dynamical graph convolutional neural networks,” IEEE Transactions on Affective Computing, vol. 11, no. 3, pp. 532–541, 2018.

- [46] B.-Q. Ma, H. Li, W.-L. Zheng, and B.-L. Lu, “Reducing the subject variability of eeg signals with adversarial domain generalization,” in Neural Information Processing, T. Gedeon, K. W. Wong, and M. Lee, Eds. Cham: Springer International Publishing, 2019, pp. 30–42.

- [47] H. Chen, Z. Li, M. Jin, and J. Li, “Meernet: Multi-source eeg-based emotion recognition network for generalization across subjects and sessions,” in 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). IEEE, 2021, pp. 6094–6097.

- [48] Y. Li, W. Zheng, Z. Cui, Y. Zong, and S. Ge, “Eeg emotion recognition based on graph regularized sparse linear regression,” Neural Processing Letters, vol. 49, p. 1–17, 04 2019.

- [49] W. Zheng, “Multichannel eeg-based emotion recognition via group sparse canonical correlation analysis,” IEEE Transactions on Cognitive and Developmental Systems, vol. 9, no. 3, pp. 281–290, 2017.

- [50] T. Song, W. Zheng, P. Song, and Z. Cui, “Eeg emotion recognition using dynamical graph convolutional neural networks,” IEEE Transactions on Affective Computing, vol. 11, no. 3, pp. 532–541, 2020.

- [51] T. Zhang, W. Zheng, Z. Cui, Y. Zong, and Y. Li, “Spatial–temporal recurrent neural network for emotion recognition,” IEEE Transactions on Cybernetics, vol. 49, no. 3, pp. 839–847, 2019.

- [52] X. Xiao, M. Xu, J. Jin, Y. Wang, T.-P. Jung, and D. Ming, “Discriminative canonical pattern matching for single-trial classification of erp components,” IEEE Transactions on Biomedical Engineering, vol. 67, no. 8, pp. 2266–2275, 2019.

- [53] J. Jin, Z. Wang, R. Xu, C. Liu, X. Wang, and A. Cichocki, “Robust similarity measurement based on a novel time filter for ssveps detection,” IEEE Transactions on Neural Networks and Learning Systems, 2021.

- [54] V. D. M. Laurens and G. Hinton, “Visualizing data using t-sne,” Journal of Machine Learning Research, vol. 9, no. 2605, pp. 2579–2605, 2008.