Practical Rateless Set Reconciliation

Abstract.

Set reconciliation, where two parties hold fixed-length bit strings and run a protocol to learn the strings they are missing from each other, is a fundamental task in many distributed systems. We present Rateless Invertible Bloom Lookup Tables (Rateless IBLTs), the first set reconciliation protocol, to the best of our knowledge, that achieves low computation cost and near-optimal communication cost across a wide range of scenarios: set differences of one to millions, bit strings of a few bytes to megabytes, and workloads injected by potential adversaries. Rateless IBLT is based on a novel encoder that incrementally encodes the set difference into an infinite stream of coded symbols, resembling rateless error-correcting codes. We compare Rateless IBLT with state-of-the-art set reconciliation schemes and demonstrate significant improvements. Rateless IBLT achieves – lower communication cost than non-rateless schemes with similar computation cost, and – lower computation cost than schemes with similar communication cost. We show the real-world benefits of Rateless IBLT by applying it to synchronize the state of the Ethereum blockchain, and demonstrate lower end-to-end completion time and lower communication cost compared to the system used in production.

1. Introduction

Recent years have seen growing interest in distributed applications, such as blockchains (Nakamoto, 2008; Wood, 2014; Androulaki et al., 2018), social networks (Raman et al., 2019), mesh messaging (Perry et al., 2022), and file hosting (Trautwein et al., 2022). In these applications, nodes (participating servers) maintain replicas of the entire or part of the application state, and synchronize their replicas by exchanging messages on a peer-to-peer network.

The naive approach to solving this problem requires communication proportional to the size of the set one party holds. For example, some applications send the entire set while other applications have parties exchange the hashes of their items or a Bloom filter of their sets (the Bloom filter’s size is proportional to the set size). A node then requests the items it is missing. All these solutions induce high overhead, especially when nodes have large, overlapping sets. This is a common scenario in distributed applications, such as nodes in a blockchain network synchronizing transactions or account balances, social media servers synchronizing users’ posts, or a name system synchronizing certificates or revocation lists (Summermatter and Grothoff, 2022).

An emerging alternative solution to the state synchronization problem is set reconciliation. It abstracts the application’s state as a set and then uses a reconciliation protocol to synchronize replicas. Crucially, the overhead is determined by the size of the set difference rather than the size of the set, allowing support for applications with very large states. However, existing set reconciliation protocols suffer from at least one of two major caveats. First, most protocols are parameterized by the size of the set difference between the two participating parties. In practice, setting this parameter is difficult because temporary network disconnections or fluctuating system load make it hard to know the exact difference size ahead of time. Thus, application designers often resort to running online estimation protocols, which induce additional latency and only give a statistical estimate of the set difference size. Because such estimators are inaccurate, designers must tailor the parameters to the tail of the potential error, resulting in high communication overhead. Second, some set reconciliation protocols suffer from high decoding complexity, where the recipient has to run a quadratic-time or worse algorithm with relatively expensive operations.

We propose a rateless set reconciliation scheme called Rateless Invertible Bloom Lookup Tables (Rateless IBLT) that addresses these challenges. In Rateless IBLT, a sender generates an infinite stream of coded symbols that encode the set difference, and the recipient can decode the set difference once they have received enough coded symbols. Rateless IBLT has no parameters and does not need an estimate of the set difference size. With overwhelming probability, the recipient can decode the set difference after receiving a number of coded symbols that is proportional to the set difference size rather than the entire set size, resulting in low overhead. Rateless IBLT’s coded symbols are universal: the same sequence of coded symbols can be used to reconcile any number of differences with any other set. Therefore, the sender can create coded symbols once and use them to synchronize with any number of peers. Universality is particularly useful for applications such as blockchain peer-to-peer networks, where a node may synchronize with multiple sources with overlapping states, since it allows the node to recover the union of their states using coded symbols it concurrently receives from all of them.

In summary, we make the following contributions:

(1) The design of Rateless IBLT, the first set reconciliation protocol that achieves low computation cost and near-optimal communication cost across a wide range of scenarios: set differences of one to millions, bit strings of a few bytes to megabytes, and workloads injected by potential adversaries.

(2) A mathematical analysis of Rateless IBLT’s communication and computation costs. We prove that when the set difference size goes to infinity, Rateless IBLT reconciles differences with communication. We show in simulations that the communication cost is between to on average for all values of and that it quickly converges to when is in the low hundreds.

(3) An implementation of Rateless IBLT as a library. When reconciling differences, our implementation can process input data (sets being reconciled) at MB/s using a single core of a 2016-model CPU.

(4) Extensive experiments comparing Rateless IBLT with state-of-the-art solutions. Rateless IBLT achieves – lower communication cost than regular IBLT (Goodrich and Mitzenmacher, 2011) and MET-IBLT (Lázaro and Matuz, 2023), two non-rateless schemes; and – lower computation cost than PinSketch (Dodis et al., 2008).

(5) Demonstration of Rateless IBLT’s real-world benefits by applying our implementation to synchronize the account states of the Ethereum blockchain. Compared to Merkle trie (Yue et al., 2020), today’s de facto solution, Rateless IBLT achieves lower completion time and lower communication cost on historic traces.

2. Motivation and Related Work

We first formally define the set reconciliation problem (Minsky et al., 2003; Eppstein et al., 2011). Let $A$ and $B$ be two sets containing items (bit strings) of the same length $\ell$. $A$ and $B$ are stored by two distinct parties, Alice and Bob. They want to efficiently compute the symmetric difference of $A$ and $B$, i.e., $(A \cup B) \setminus (A \cap B)$, denoted as $A \triangle B$. By convention (Eppstein et al., 2011), we assume that only one of the parties, Bob, wants to compute $A \triangle B$ because he can send the result to Alice afterward if needed.

While straightforward solutions exist, such as exchanging Bloom filters (Bloom, 1970) or hashes of the items, they incur communication and computation costs. The costs can be improved to logarithmic by hashing the sets into Merkle tries (Yue et al., 2020), where a trie node on depth is the hash of a -fraction of the set. Alice and Bob traverse and compare their tries, only descending into a sub-trie (subset) if their roots (hashes) differ. However, the costs are still dependent on , and now takes round trips.

In contrast, the information-theoretic lower bound (Minsky et al., 2003, § 2) of the communication cost is $d\ell$, where $d = |A \triangle B|$. (Footnote 1: More precisely, the lower bound is $d\ell - d\log_2 d$ (Minsky et al., 2003, § 2), but the second term can be neglected when $d \ll 2^{\ell}$.) State-of-the-art solutions get close to this lower bound using techniques from coding theory. On a high level, we can view $B$ as a copy of $A$ with $d$ errors (insertions and/or deletions), and the goal of set reconciliation is to correct these errors. Alice encodes $A$ into a list of $m$ coded symbols and sends them to Bob. Bob then uses the coded symbols and $B$ to decode $A \triangle B$. The coded symbols are the parity data in a systematic error-correcting code that can correct set insertions and deletions (Mitzenmacher and Varghese, 2012). Using appropriate codes, it takes $m = O(d)$ coded symbols, each of length $O(\ell)$, to correct the $d$ errors, resulting in a communication cost of $O(d\ell)$.

The performance of existing solutions varies depending on the codes they use. Characteristic Polynomial Interpolation (CPI) (Minsky et al., 2003) uses a variant of Reed-Solomon codes (Reed and Solomon, 1960), where coded symbols are evaluations of a polynomial uniquely constructed from . CPI has a communication cost of , achieving the information-theoretic lower bound. However, its computation cost is for Alice, and for Bob. The latter was improved to in PinSketch (Dodis et al., 2008; Wuille, 2018) using BCH codes (Bose and Ray-Chaudhuri, 1960) that are easier to decode. Nevertheless, as we show in § 7.2, computation on both Alice and Bob quickly becomes intractable even at moderate , , and , limiting its applicability. For example, Shrec (Han et al., 2020) attempted to use PinSketch to synchronize transactions in a high-throughput blockchain but found that its high computation complexity severely limits system throughput (Han et al., 2020, § 5.2).

Invertible Bloom Lookup Tables (IBLTs) (Goodrich and Mitzenmacher, 2011) use sparse graph codes similar to LT (Luby, 2002) and LDPC (Gallager, 1962) codes. Each set item is summed into coded symbols, denoted as its neighbors in a random, sparse graph. Some variants also consider graphs with richer structures such as varying depending on the set item (Lázaro and Matuz, 2021). The computation cost is for Alice, and for Bob. The communication cost is with a coefficient strictly larger than (e.g., – for small , see § 7.1). Due to their random nature, IBLTs may fail to decode even if properly parameterized (Eppstein et al., 2011). We provide more background on IBLTs in § 3.

The discussion above assumes that the codes are properly parameterized. In particular, we need to decide $m$, the number of coded symbols Alice sends to Bob. Decoding will fail if $m$ is too small compared to $d$, and we incur redundant communication and computation if $m$ is too large. The optimal choice of $m$ is thus a function of $d$. However, accurate prediction of $d$ is usually difficult (Naumenko et al., 2019; Han et al., 2020), and sometimes outright impossible (Ozisik et al., 2019). Existing systems often resort to online estimation protocols (Eppstein et al., 2011) and over-provision $m$ to accommodate the ensuing errors (Eppstein et al., 2011; Ozisik et al., 2019).

The case for rateless reconciliation. A key feature of Rateless IBLT is that it can generate an infinite stream of coded symbols for a set, resembling rateless error-correcting codes (Byers et al., 1998). For any , the first coded symbols can reconcile set differences with a coefficient close to ( in most cases, see § 7.1). This means that Rateless IBLT does not require parameterization, making real-world deployments easy and robust. Alice simply keeps sending coded symbols to Bob, and Bob can decode as soon as he receives enough—which we show analytically (§ 5) and experimentally (§ 7.1) to be about in most cases—coded symbols. Neither Alice nor Bob needs to know beforehand. The encoding and the decoding algorithms have zero parameters.

The concept of incrementally generating coded symbols is first mentioned in CPI (Minsky et al., 2003). However, as mentioned before, its real-world use has been limited due to the high computation cost. We discuss these limitations in § 7, and demonstrate that Rateless IBLT reduces the computation cost by –, while incurring a communication cost of less than the information-theoretic lower bound. Concurrently with our work, MET-IBLT (Lázaro and Matuz, 2023) proposes to simultaneously optimize the parameters of IBLTs for multiple pre-selected values of , e.g., , such that a list of coded symbols for is a prefix/suffix of that for . However, it only considers a few values of due to the complexity of the optimization so still requires workload-dependent parameterization. As we show in § 7.1, its communication cost is – higher for the values that are not optimized for. In addition, MET-IBLT does not provide a practical algorithm to incrementally generate coded symbols. Rateless IBLT does not have any of these issues.

Rateless IBLT offers additional benefits. Imagine that Alice has the canonical system state, and multiple peers wish to reconcile their states with Alice. In a non-rateless scheme, Alice must separately produce coded symbols for each peer depending on the particular number of differences $d$. This incurs additional computation and storage I/Os for every peer she reconciles with. More importantly, Alice must produce the coded symbols on the fly because she does not know $d$ before a peer arrives. In comparison, using Rateless IBLT, Alice simply maintains a universal sequence of coded symbols and streams it to anyone who wishes to reconcile. Rateless IBLT also allows her to incrementally update the coded symbols as she modifies the state (set), further amortizing the encoding costs.

To the best of our knowledge, Rateless IBLT is the first set reconciliation solution that simultaneously achieves the following properties:

• Ratelessness. The encoder generates an infinite sequence of coded symbols, capable of reconciling any number of differences with low overhead.

• Universality. The same algorithm works efficiently for any , , , and without any parameter.

• Low communication cost. The average communication cost peaks at when , and quickly converges to when is at the low hundreds.

• Low computation cost. Encoding costs per set item, and decoding costs per difference. In practice, a single core on a 2016-model CPU can encode (decode) 3.4 million items (differences) per second when and bytes.

We demonstrate these advantages by comparing with all the aforementioned schemes in § 7 and applying Rateless IBLT to a potential application in § 7.3.

3. Background

Our Rateless IBLT retains the format of coded symbols and the decoding algorithm of regular IBLTs, but employs a new encoder that is oblivious to the number of differences to reconcile. In this section, we provide the necessary background on IBLTs (Eppstein et al., 2011; Goodrich and Mitzenmacher, 2011) and explain why regular IBLTs fail to provide the rateless property that we desire. We discuss the new rateless encoder in the next section.

On a high level, an IBLT is an encoding of a set. We call the items (bit strings) in the set the source symbols, and an IBLT comprises a list of $m$ coded symbols. Each source symbol is mapped to $k$ coded symbols uniformly at random, e.g., by using $k$ hash functions. Here, $m$ and $k$ are design parameters.

Coded symbol format. A coded symbol contains two fields: sum, the bitwise exclusive-or (XOR) sum of the source symbols mapped to it; and checksum, the bitwise XOR sum of the hashes of the source symbols mapped to it. In practice, there is usually a third field, count, which we will discuss shortly. Fig. 1 provides an example.

Peeling decoder. To recover source symbols from a list of coded symbols, the decoder runs a recursive procedure called “peeling”. We say a coded symbol is pure when exactly one source symbol is mapped to it; or, equivalently, when its checksum equals the hash of its sum (Eppstein et al., 2011). (Footnote 2: Unless there is a hash collision, which happens with probability negligible in the length of the hash. See § 4.3.) In this case, its sum field is the source symbol itself, which is now recovered. The decoder then removes the recovered source symbol from any other coded symbols it is mapped to (determined by the agreed-upon hash functions), by XOR-ing the source symbol and its hash into their sum and checksum fields, respectively. This process may generate additional pure symbols; the decoder repeats until no pure symbols are left. Decoding fails if it stops before recovering all source symbols (Goodrich and Mitzenmacher, 2011). Fig. 2 shows the example of decoding the IBLT in Fig. 1.

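Subtraction of coded symbols. $a \ominus b$ denotes subtraction of two coded symbols $a$, $b$. For the resulting coded symbol, its sum is the bitwise XOR of $a.\mathrm{sum}$ and $b.\mathrm{sum}$; its checksum is the bitwise XOR of $a.\mathrm{checksum}$ and $b.\mathrm{checksum}$; and its count is $a.\mathrm{count} - b.\mathrm{count}$.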
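The coded symbol format and the subtraction rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions: items are fixed-length byte strings of `ITEM_LEN` bytes, `item_hash` uses BLAKE2b truncated to 64 bits as a stand-in hash, and the names `CodedSymbol`, `add`, and `is_pure` are hypothetical rather than taken from any implementation.

```python
import hashlib
from dataclasses import dataclass

ITEM_LEN = 8  # assumed fixed item length in bytes


def item_hash(item: bytes) -> int:
    """64-bit hash of an item (BLAKE2b is an illustrative stand-in)."""
    return int.from_bytes(hashlib.blake2b(item, digest_size=8).digest(), "big")


@dataclass
class CodedSymbol:
    sum: int = 0       # XOR of the source symbols mapped here
    checksum: int = 0  # XOR of the hashes of those source symbols
    count: int = 0     # number of source symbols mapped here

    def add(self, item: bytes) -> None:
        """Map one source symbol into this coded symbol."""
        self.sum ^= int.from_bytes(item, "big")
        self.checksum ^= item_hash(item)
        self.count += 1

    def __sub__(self, other: "CodedSymbol") -> "CodedSymbol":
        """Subtraction: XOR the sums and checksums, subtract the counts."""
        return CodedSymbol(
            self.sum ^ other.sum,
            self.checksum ^ other.checksum,
            self.count - other.count,
        )

    def is_pure(self) -> bool:
        """Pure: exactly one symbol remains and the checksum matches its hash."""
        if self.count not in (1, -1):
            return False
        return item_hash(self.sum.to_bytes(ITEM_LEN, "big")) == self.checksum
```

For example, if `a` holds items `x` and `y` and `b` holds only `y`, then `a - b` has count 1 and its sum field is `x` itself.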

Set reconciliation using IBLTs. IBLTs with the same parameter configuration ($m$, $k$, and the hash functions mapping from source symbols to coded symbols) can be subtracted (Eppstein et al., 2011). For any two sets $A$ and $B$, $\mathrm{IBLT}(A) \ominus \mathrm{IBLT}(B) = \mathrm{IBLT}(A \triangle B)$, where the $\ominus$ operator subtracts each corresponding pair of coded symbols from the two IBLTs. This is because if a source symbol is present in both $A$ and $B$, then it is XOR-ed twice into each coded symbol it is mapped to in $\mathrm{IBLT}(A) \ominus \mathrm{IBLT}(B)$, resulting in no effect. As a result, $\mathrm{IBLT}(A) \ominus \mathrm{IBLT}(B)$ is an encoding of the source symbols that appear exactly once across $A$ and $B$, i.e., $A \triangle B$.

To reconcile $A$ and $B$, Alice sends $\mathrm{IBLT}(A)$ to Bob, who then computes and decodes $\mathrm{IBLT}(A) \ominus \mathrm{IBLT}(B)$ to recover $A \triangle B$. To determine whether a recovered source symbol belongs to $A$ or $B$, we use the count field. (Footnote 3: Alternatively, Bob may try looking up each item in $A \triangle B$ against $B$, but this requires indexing $B$, which is undesirable when $|B|$ is large.) It records the number of source symbols mapped to a coded symbol. When a coded symbol is pure, $\mathrm{count} = 1$ indicates that the recovered source symbol is exclusive to $A$, and $\mathrm{count} = -1$ indicates $B$ (Eppstein et al., 2011).
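As a rough illustration of the regular (non-rateless) IBLT workflow described above, the following Python sketch encodes two sets, subtracts the tables cell by cell, and runs the peeling decoder, using the sign of the count field to attribute each recovered item. The constants `ITEM_LEN` and `K`, the BLAKE2b-based hash, and the use of a seeded `random.Random` to pick `K` distinct cells are all assumptions made for this example.

```python
import hashlib
import random

ITEM_LEN = 8  # assumed fixed item length in bytes
K = 3         # assumed number of coded symbols per source symbol


def h(data: bytes, salt: bytes = b"") -> int:
    """64-bit hash; the salt separates checksum hashing from cell mapping."""
    return int.from_bytes(
        hashlib.blake2b(data, digest_size=8, salt=salt).digest(), "big")


def cells_of(item: bytes, m: int) -> list:
    """K distinct cell indices chosen deterministically from the item."""
    return random.Random(h(item, b"map")).sample(range(m), K)


def encode(items, m: int) -> list:
    table = [[0, 0, 0] for _ in range(m)]  # each cell: [sum, checksum, count]
    for item in items:
        for j in cells_of(item, m):
            table[j][0] ^= int.from_bytes(item, "big")
            table[j][1] ^= h(item)
            table[j][2] += 1
    return table


def subtract(ta: list, tb: list) -> list:
    return [[a[0] ^ b[0], a[1] ^ b[1], a[2] - b[2]] for a, b in zip(ta, tb)]


def peel(table: list):
    """Return (items only in A, items only in B, whether decoding finished)."""
    only_a, only_b = set(), set()
    progress = True
    while progress:
        progress = False
        for cell in table:
            if cell[2] not in (1, -1):
                continue
            item = cell[0].to_bytes(ITEM_LEN, "big")
            if h(item) != cell[1]:
                continue  # not pure: several symbols are still mixed together
            sign = cell[2]
            (only_a if sign == 1 else only_b).add(item)
            for j in cells_of(item, len(table)):  # remove item from its cells
                table[j][0] ^= int.from_bytes(item, "big")
                table[j][1] ^= h(item)
                table[j][2] -= sign
            progress = True
    done = all(cell == [0, 0, 0] for cell in table)
    return only_a, only_b, done
```

Bob would call `peel(subtract(encode(A, m), encode(B, m)))`, where the first table is the one received from Alice. If the returned flag is false, the table was too small for the actual number of differences.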

Limitations of IBLTs. IBLTs are not rateless. An IBLT with a particular set of parameters only works for a narrow range of difference size . It quickly becomes inefficient to use it for more or fewer differences than parameterized for. In § A, we show Theorems A.1 and A.2, which we summarize informally here. First, with high probability, Bob cannot recover any source symbol in when , making undersized IBLTs completely useless. On the other hand, we cannot simply default to a very large to accommodate a potentially large . If turns out to be small, i.e., , Alice still has to send almost the entire IBLT ( coded symbols) for Bob to decode successfully, leading to high communication cost. Alice cannot dynamically enlarge , either. Each source symbol is already uniformly mapped to out of coded symbols upon encoding. Increasing post hoc would require remapping the source symbols to the expanded space of coded symbols so that the mapping remains uniform. This requires Alice to rebuild and re-send the entire IBLT. Figs. 3(a), 3(b) show an example.

4. Design

For any set , Rateless IBLT defines an infinite sequence of coded symbols. Intuitively, an infinite number of coded symbols for and allows Rateless IBLT to accommodate an arbitrarily large set difference. Every prefix of this infinite sequence functions like a normal IBLT and can reconcile a number of differences proportional to its length. Meanwhile, because these prefixes belong to a common infinite sequence, Alice simply streams the sequence until Bob receives a long enough prefix to decode. For any , on average, reconciling differences requires only the first – coded symbols in the sequence.

4.1. Coded Symbol Sequence

Our main task is to design the algorithm that encodes any set into an infinite sequence of coded symbols, denoted as . It should provide the following properties:

• Decodability. With high probability, the peeling decoder can recover all source symbols in a set using a prefix of with length .

• Linearity. For any sets and , is the coded symbol sequence for .

• Universality. The encoding algorithm does not need any extra information other than the set being encoded.

These properties allow us to build the following simple protocol for rateless reconciliation. To reconcile and , Alice incrementally sends to Bob. Bob computes , and tries to decode these symbols using the peeling decoder. Bob notifies Alice to stop when he has recovered all source symbols in . As we will soon show, the first symbol in Rateless IBLT is decoded only after all source symbols are recovered. This is the indicator for Bob to terminate.
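The streaming protocol can be sketched end to end in Python. This is a minimal sketch under several assumptions: the mapping rule is a simple per-index hash comparison against a mapping probability of the form 1/(1 + αi) with α = 0.5 (chosen here only for brevity, not for efficiency), items are `ITEM_LEN` bytes long, BLAKE2b stands in for the hash, and both parties are simulated in one process. All function names are hypothetical.

```python
import hashlib

ITEM_LEN = 8  # assumed fixed item length in bytes
ALPHA = 0.5   # assumed parameter of the mapping probability


def h(data: bytes) -> int:
    return int.from_bytes(hashlib.blake2b(data, digest_size=8).digest(), "big")


def is_mapped(item: bytes, i: int) -> bool:
    """Consistent mapping rule: the decision depends only on (item, i)."""
    return h(item + i.to_bytes(8, "big")) < 2**64 / (1 + ALPHA * i)


def xor_into(cell: list, item: bytes, sign: int) -> None:
    cell[0] ^= int.from_bytes(item, "big")
    cell[1] ^= h(item)
    cell[2] += sign


def coded_symbol(items, i: int) -> list:
    """The i-th coded symbol of a set, as [sum, checksum, count]."""
    cell = [0, 0, 0]
    for item in items:
        if is_mapped(item, i):
            xor_into(cell, item, +1)
    return cell


def peel(diff: list, only_a: set, only_b: set) -> None:
    progress = True
    while progress:
        progress = False
        for cell in diff:
            if cell[2] not in (1, -1):
                continue
            item = cell[0].to_bytes(ITEM_LEN, "big")
            if h(item) != cell[1]:
                continue  # not pure
            sign = cell[2]
            (only_a if sign == 1 else only_b).add(item)
            for j, other in enumerate(diff):  # remove from received prefix
                if is_mapped(item, j):
                    xor_into(other, item, -sign)
            progress = True


def reconcile(alice: set, bob: set, max_symbols: int = 2000):
    """Bob's loop: consume symbols one at a time until symbol 0 is empty."""
    diff, only_a, only_b = [], set(), set()
    for i in range(max_symbols):
        a_i = coded_symbol(alice, i)  # in a deployment, received from Alice
        b_i = coded_symbol(bob, i)    # computed locally by Bob
        cell = [a_i[0] ^ b_i[0], a_i[1] ^ b_i[1], a_i[2] - b_i[2]]
        for item in only_a:           # strip symbols that were already peeled
            if is_mapped(item, i):
                xor_into(cell, item, -1)
        for item in only_b:
            if is_mapped(item, i):
                xor_into(cell, item, +1)
        diff.append(cell)
        peel(diff, only_a, only_b)
        if diff[0] == [0, 0, 0]:      # every symbol maps to index 0
            return only_a, only_b, i + 1
    raise RuntimeError("did not decode within max_symbols")
```

The third return value is the number of coded symbols Bob consumed before stopping, which is the quantity the protocol tries to keep close to the number of differences.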

Linearity guarantees that is the coded symbol sequence for . Decodability guarantees that Bob can decode after receiving coded symbols and recover all source symbols in . Universality guarantees that Alice and Bob do not need any prior context to run the protocol.

If Alice regularly reconciles with multiple peers, she may cache coded symbols for to avoid recomputing them every session. Universality implies that Alice can reuse the same cached symbols across different peers. Linearity implies that if she updates her set , she can incrementally update the cached symbols by treating the updates as a set and subtracting its coded symbols from the cached ones for .

We now discuss how we design an encoding algorithm that satisfies the three properties we set to achieve.

4.1.1. Linearity & Universality

Our key observation is that to ensure linearity, it is sufficient to define a consistent mapping rule, which, given any source symbol and any index , deterministically decides whether should be mapped to the -th coded symbol when encoding a set that contains . This ensures that if , then it will be mapped to both and or neither; in either case, will not be reflected in . On the other hand, if , and should be mapped to index according to the rule, then it will be mapped to exactly one of or , and therefore will be reflected in . Since the mapping rule makes decisions based only on and , the resulting encoding algorithm also satisfies universality.

4.1.2. Decodability

Whether the peeling decoder can recover all source symbols from a set of coded symbols is fully determined by the mapping between the source and the coded symbols. Let $\rho(i)$ be the probability that a random source symbol maps to the $i$-th coded symbol, which we refer to as the mapping probability. It is the key property that defines the behavior of a mapping rule. In the remainder of this subsection, we constrain $\rho(i)$ by examining two necessary conditions for peeling to succeed. Our key conclusion is that in order for decodability to hold, $\rho(i)$ must be inversely proportional to $i$. This rejects most functions as candidates of $\rho(i)$ and leads us to a concrete instantiation of $\rho(i)$. We will design a concrete algorithm (mapping rule) that realizes the mapping probability in the next subsection, and mathematically prove that it satisfies decodability in § 5.

First, to kick-start the peeling decoder, there must be a coded symbol with exactly one source symbol mapped to it (a pure coded symbol). For a set and index , the probability that this happens decreases quasi-exponentially in . This implies that must decrease quickly with . Otherwise, each of the first coded symbols would have an exponentially small probability of being pure, and it would be likely that none of them is pure, violating decodability.

The following lemma shows that for this reason, the mapping probability $\rho(i)$ cannot decrease slower than $1/i^{1-\epsilon}$ for any positive $\epsilon$, i.e., it must be almost inversely proportional to $i$. We defer the proof to § C.

Lemma 4.1.

For any , any mapping probability such that , and any , if there exists at least one pure coded symbol within the first coded symbols for a random set with probability , then .

Second, to recover all source symbols in a set , we need at least non-empty coded symbols. This is because during peeling, each pure symbol (which must be non-empty) yields at most one source symbol. Intuitively, cannot decrease too fast with index . Otherwise, the probability that a coded symbol is empty would quickly grow towards as increases. The first coded symbols would not reliably contain at least non-empty symbols, violating decodability.

The following lemma shows that for this reason, the mapping probability $\rho(i)$ cannot decrease faster than $1/i$. We defer the proof to § C.

Lemma 4.2.

For any mapping probability such that , and any , if there exist at least non-empty coded symbols within the first coded symbols for a random set with probability , then .

The constraints above reject functions that decrease faster than $1/i$, as well as functions that decrease slower than $1/i^{1-\epsilon}$ for any $\epsilon > 0$. For simplicity, we ignore the degree of freedom stemming from the $i^{\epsilon}$ factor, since for a sufficiently small $\epsilon$ and any practical $i$, it is very close to 1. The remaining candidates for $\rho(i)$ are the ones in between, i.e., functions of order $1/i$. We choose the simplest function in this class:

$$\rho(i) = \frac{1}{1 + \alpha i}, \tag{1}$$

where $\alpha > 0$ is a parameter. We shift the denominator by $1$ because $i$ starts at $0$. In § 5, we prove that this $\rho(i)$ achieves decodability with high efficiency: recovering a set only requires the first – coded symbols on average.

We highlight two interesting properties of our $\rho(i)$. First, $\rho(0) = 1$. This means that for any set, every source symbol is mapped to the first coded symbol. This coded symbol is only decoded after all source symbols are recovered. So, Bob can tell whether reconciliation has finished by checking if the first coded symbol is decoded. Second, among the first $m$ indices, a source symbol is mapped to $\sum_{i=0}^{m-1} \rho(i)$ of them on average, or $O(\log m)$. It means that the density of the mapping, which decides the computation cost of encoding and decoding, decreases quickly as $m$ increases. As we will show in § 7.2, the low density allows Rateless IBLT to achieve – higher throughput than PinSketch.
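The logarithmic growth of the mapping density is easy to check numerically. The sketch below assumes a mapping probability of the form 1/(1 + αi) and compares the exact expected number of mapped indices among the first m with a simple integral bound; the function names and the default α = 0.5 are illustrative choices.

```python
import math


def expected_mappings(m: int, alpha: float = 0.5) -> float:
    """Expected number of indices in [0, m) that one source symbol maps to."""
    return sum(1.0 / (1.0 + alpha * i) for i in range(m))


def log_bound(m: int, alpha: float = 0.5) -> float:
    """Integral upper bound: 1 + (1/alpha) * ln(1 + alpha * (m - 1))."""
    return 1.0 + math.log(1.0 + alpha * (m - 1)) / alpha


for m in (10, 1_000, 100_000):
    print(m, round(expected_mappings(m), 2), round(log_bound(m), 2))
```

Under these assumptions, a symbol maps to about 13 of the first 1,000 indices, so both encoding and peeling touch only a small fraction of the coded symbols.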

4.2. Realizing the Mapping Probability

We now design an efficient deterministic algorithm for mapping a source symbol to coded symbols that achieves the mapping probability rule identified in the previous section.

Recall that for a random source symbol $s$, we want the probability that $s$ is mapped to the $i$-th coded symbol to be $\rho(i)$ in Eq. 1. A simple strawman solution, for example, is to use a hash function that, given $s$, outputs a hash value uniformly distributed in $[0, 1)$. We then compare the hash value to $\rho(i)$, and decide to map $s$ to the $i$-th coded symbol if the hash value is smaller. Given a random $s$, because its hash value distributes uniformly, the mapping happens with probability $\rho(i)$.
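A sketch of this strawman in Python makes its cost visible: deciding the mappings for the first m indices takes m hash evaluations per source symbol. The example assumes a mapping probability of the form 1/(1 + αi) with α = 0.5 and derives an independent uniform value for each (symbol, index) pair by hashing both together with BLAKE2b; these choices and all names are illustrative.

```python
import hashlib


def rho(i: int, alpha: float = 0.5) -> float:
    """Assumed mapping probability for index i."""
    return 1.0 / (1.0 + alpha * i)


def uniform_hash(item: bytes, i: int) -> float:
    """Pseudo-uniform value in [0, 1), independent across indices i."""
    digest = hashlib.blake2b(item + i.to_bytes(8, "big"), digest_size=6).digest()
    return int.from_bytes(digest, "big") / 2**48


def strawman_is_mapped(item: bytes, i: int, alpha: float = 0.5) -> bool:
    return uniform_hash(item, i) < rho(i, alpha)


def strawman_indices(item: bytes, m: int, alpha: float = 0.5) -> list:
    """All mapped indices below m; costs m hash evaluations."""
    return [i for i in range(m) if strawman_is_mapped(item, i, alpha)]
```

Calling `strawman_indices(item, 1000)` evaluates the hash 1,000 times even though only a handful of indices end up mapped.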

However, this approach has major issues. First, it requires comparing hash values and $\rho(i)$ for every pair of source symbol $s$ and index $i$. As mentioned in § 4.1.2, the density of the mapping is $O(\log m)$ for the first $m$ coded symbols. In contrast, generating the $m$ coded symbols using this algorithm would require $m$ comparisons for each source symbol, significantly inflating the computation cost. Another issue is that we cannot use the same hash function when mapping $s$ to different indices $i$ and $j$. Otherwise, the mappings to them would not be independent: if $\rho(i) < \rho(j)$ and $s$ is mapped to $i$, then it will always be mapped to $j$. Using different, independent hash functions when mapping the same source symbol to different indices means that we also need to hash the symbol $m$ times.

We design an algorithm that maps each source symbol to the first $m$ coded symbols using only $O(\log m)$ computation. The strawman solution is inefficient because we roll a die (compare the hash and $\rho(i)$) for every index $i$, even though we end up not mapping to the majority of them ($m - O(\log m)$ out of $m$), so reaching the next mapped index takes many die rolls (roughly $m / \log m$ on average). Our key idea is to directly sample the distance (number of indices) to skip before reaching the next mapped index. We achieve it with constant cost per sample, so we can jump from one mapped index straight to the next in constant time.

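We describe our algorithm recursively. Suppose that, according to our algorithm, a source symbol $s$ has been mapped to the $i$-th coded symbol. We now wish to compute, in constant time, the next index $j$ that $s$ is mapped to. Let $G$ be the random variable such that for a random $s$, $i + G$ is the next mapped index, and let $P(g)$ ($g \ge 1$) be the probability that $G = g$. In other words, $P(g)$ is the probability that a random $s$ is not mapped to any of $i+1, i+2, \dots, i+g-1$, but is mapped to $i+g$, which are all independent events. So,

$$P(g) = \bigl(1 - \rho(i+1)\bigr)\bigl(1 - \rho(i+2)\bigr) \cdots \bigl(1 - \rho(i+g-1)\bigr)\,\rho(i+g).$$

Generating $j$ is then equivalent to sampling $g \leftarrow G$, whose distribution is described by $P(g)$, and then computing $j = i + g$.

However, since there are $g$ (which can go to infinity) terms in $P(g)$, it is still unclear how to sample $G$ in constant time. The key observation is that the cumulative mass function of $G$, denoted as $C(x) = \sum_{g=1}^{x} P(g)$, has a remarkably simple form. In particular,

$$C(x) = 1 - \frac{\Gamma\!\left(i + 1 + \frac{1}{\alpha}\right)\Gamma(i + x + 1)}{\Gamma(i + 1)\,\Gamma\!\left(i + x + 1 + \frac{1}{\alpha}\right)}. \tag{2}$$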

We defer the step-by-step derivation to § B.

Let $C^{-1}(r)$ be the inverse of $C(x)$. The simple form of $C(x)$ allows us to compute $C^{-1}(r)$ easily, which we will soon explain. To sample $G$, we sample $r \leftarrow [0, 1)$ uniformly, and compute $g = \lceil C^{-1}(r) \rceil$. To make the algorithm deterministic, $r$ may come from a pseudorandom number generator seeded with the source symbol $s$. The algorithm outputs $i + g$ as the next index to which $s$ is mapped, updates $i \leftarrow i + g$, and is ready to produce another index. Because every source symbol is mapped to the first coded symbol (recall that $\rho(0) = 1$), we start the recursion with $i = 0$.

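Finally, we explain how to compute $C^{-1}(r)$. It is simplest if we set the parameter $\alpha$ in $\rho(i)$ to $0.5$. Plugging $\alpha = 0.5$ into Eq. 2, we get

$$C(x) = 1 - \frac{(i+1)(i+2)}{(i+x+1)(i+x+2)}.$$

Its inverse is

$$C^{-1}(r) = \sqrt{\frac{(3+2i)^2 - r}{4(1-r)}} - \frac{3+2i}{2}.$$

For a generic $\alpha$, we can use Stirling’s approximation (Robbins, 1955), and get

$$C(x) \approx 1 - \left(\frac{i+1}{i+x+1}\right)^{1/\alpha}.$$

Consequently,

$$C^{-1}(r) \approx (1-r)^{-\alpha}(i+1) - i - 1.$$

In our final design, we set $\alpha = 0.5$. The main reason is that computing $C^{-1}(r)$ when $\alpha = 0.5$ only requires computing square roots, while it otherwise involves raising to other non-integer powers. We observe that the latter is significantly slower on older CPUs. Meanwhile, as we will show in § 5, setting $\alpha = 0.5$ results in negligible extra communication compared to the optimal setting.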
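The skip-sampling idea can be sketched as a Python generator. The sketch assumes α = 0.5 and a mapping probability of 1/(1 + αi), for which the cumulative mass function of the gap is 1 − (i+1)(i+2)/((i+x+1)(i+x+2)); it inverts that expression in closed form with a single square root. Seeding Python's `random.Random` with the symbol is an illustrative stand-in for whatever pseudorandom generator an implementation uses, and `mapped_indices` is a hypothetical name.

```python
import math
import random


def mapped_indices(item: bytes, limit: int):
    """Yield, in increasing order, the indices below `limit` that `item` maps to.

    Each step draws one uniform number and jumps directly to the next
    mapped index, so the cost is proportional to the number of mapped
    indices rather than to `limit`.
    """
    rng = random.Random(item)  # deterministic PRNG seeded with the symbol
    i = 0                      # every symbol maps to index 0
    while i < limit:
        yield i
        r = rng.random()       # uniform in [0, 1)
        c = 2 * i + 3
        # Closed-form inverse of the gap CDF for alpha = 0.5.
        gap = math.sqrt((c * c - r) / (4.0 * (1.0 - r))) - c / 2.0
        i += max(1, math.ceil(gap))  # gaps are integers >= 1


if __name__ == "__main__":
    print(list(mapped_indices(b"example!", 1000)))
```

Because the generator depends only on the symbol, Alice and Bob produce identical index sequences for any item they share, which is what the linearity argument requires.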

4.3. Resistance to Malicious Workload

In some applications, rogue users may inject items into Alice’s or Bob’s sets. For example, in a distributed social media application where servers exchange posts, users can craft any post they like. This setting may create an “adversarial workload,” where the hash of the symbol representing the user’s input does not distribute uniformly. If the user injects into Bob’s set a source symbol that hashes to the same value as another source symbol that Alice has, then Bob will never be able to reconcile his set with Alice. This is because Bob will XOR the malicious symbol into the coded symbol stream he receives from Alice, but doing so only cancels out the hash of Alice’s colliding symbol from the checksum field while corrupting the sum field.

The literature on set reconciliation is aware of this issue, but typically does not specify the required properties from the hash function to mitigate it; most use hash functions with strong properties such as random oracles (Mitzenmacher and Pagh, 2018), which have long outputs (e.g., 256 bits). It is sufficient, however, to use a keyed hash function with uniform and shorter outputs (e.g., 64 bits). This allows Alice and Bob to coordinate a secret key and use it to choose a hash function from the family of keyed hashes. Although with short hashes, an attacker can computationally enumerate enough symbols to find a collision for an item that Alice has, the attacker does not know the key, i.e., the hash function that Alice and Bob use, so she cannot target a collision to one of Alice’s symbols. This allows Rateless IBLT to minimize the size of a coded symbol and save bandwidth, particularly in applications where symbols are short and checksums account for much of the overhead. In practice, we use the SipHash (Aumasson and Bernstein, 2012) keyed hash function. A trade-off we make is that Alice has to compute the checksums separately for each key she uses, which increases her computation load. We believe this is a worthwhile trade-off as SipHash is very efficient, and we find in experiments (§ 7.2) that computing the hashes has negligible cost compared to computing sums, which are still universal. Also, we expect using different keys only in applications where malicious workload is a concern.
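The keyed-checksum idea can be illustrated as follows. Python's standard library does not expose SipHash as a callable function, so this sketch substitutes keyed BLAKE2b truncated to 64 bits; that substitution, the 16-byte key length, and the function names are assumptions made for the example.

```python
import hashlib
import secrets


def new_session_key() -> bytes:
    """A fresh secret key that Alice and Bob would agree on out of band."""
    return secrets.token_bytes(16)


def keyed_checksum(key: bytes, item: bytes) -> int:
    """64-bit keyed hash of an item (keyed BLAKE2b as a SipHash stand-in)."""
    digest = hashlib.blake2b(item, key=key, digest_size=8).digest()
    return int.from_bytes(digest, "big")


if __name__ == "__main__":
    key = new_session_key()
    print(hex(keyed_checksum(key, b"example!")))
```

Without the key, an attacker can still enumerate inputs, but cannot tell which of them collide under the hash function that a particular pair of peers uses.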

5. Analysis

In this section, we use density evolution (Richardson and Urbanke, 2001; Luby et al., 1998) to analyze the behavior of the peeling decoder when decoding coded symbols in Rateless IBLTs. We mathematically prove that as the difference size goes to infinity, the overhead of Rateless IBLTs converges to ; i.e., reconciling differences requires only the first coded symbols. We then use Monte Carlo simulations to show the behavior for finite . In particular, we show that the overhead converges quickly, when is at the low hundreds.

Density evolution is a standard technique for analyzing the iterative decoding processes in error correcting codes based on sparse graphs (Richardson and Urbanke, 2001; Luby et al., 1998), and has been applied to IBLTs with simpler mappings between source and coded symbols (Lázaro and Matuz, 2021). Its high-level idea is to iteratively compute the probability that a random source symbol has not been recovered while simulating the peeling decoder statistically. If this probability keeps decreasing towards as the peeling decoder runs for more iterations, then decoding will succeed with probability converging to (Luby et al., 1998, § 2). The following theorem states our main conclusion. We defer its proof to § C.

Theorem 5.1.

For a random set of source symbols, the probability that the peeling decoder successfully recovers the set using the first coded symbols (as defined in § 4.1) tends to 1 as goes to infinity, provided that is any positive constant that satisfies

| (3) |

Recall that is the exponential integral function; is the parameter in the mapping probability as discussed in § 4.1. We stated Theorem 5.1 with respect to a generic set of source symbols and its corresponding coded symbol sequence; in practice, the set is . The decoder (Bob) knows the coded symbol sequence for because he subtracts the coded symbols for (generated locally) from those for (received from Alice) as defined in § 3.

Theorem 5.1 implies that for any choice of parameter , there exists a corresponding threshold which is the smallest that satisfies Eq. 3. Any also satisfies Eq. 3 because the left-hand side monotonically decreases with respect to . (Intuitively, this must be true as a larger means more coded symbols, which should be strictly beneficial for decoding.) As long as Bob receives more than coded symbols per source symbol, he can decode with high probability. In other words, is the communication overhead of Rateless IBLTs, i.e., the average number of coded symbols required to recover each source symbol. is a function of . As discussed in § 4.2, we set in our final design to simplify the process of generating mappings according to . We solve for when and get the following result.

Corollary 5.2.

The average overhead of Rateless IBLTs converges to as the difference size goes to infinity.

5.1. Monte Carlo Simulations

Theorem 5.1 and Corollary 5.2 from the density evolution analysis state the behavior of Rateless IBLTs when the difference size goes to infinity. To understand the behavior when is finite, we run Monte Carlo simulations, and compare the results with the theorems.
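A simulation of this kind can be sketched on the mapping graph alone, without item contents or checksums. The sketch below is not the authors' simulator: the gap rule in `mapped_indices` is a placeholder that uses one square root per step (the actual rule is defined in § 4.2), and all function names are hypothetical. It counts how many coded symbols the peeling decoder needs before every source symbol is recovered.

```python
import math
import random


def mapped_indices(seed: int, m: int) -> list:
    """Indices below m that one source symbol maps to (placeholder rule)."""
    rng, idx, out = random.Random(seed), 0, []
    while idx < m:
        out.append(idx)
        u = rng.random()
        gap = math.ceil((idx + 1.5) * (1.0 / math.sqrt(1.0 - u) - 1.0))
        idx += max(1, gap)
    return out


def decodable(seeds: list, m: int) -> bool:
    """Run the peeling decoder on the graph of the first m coded symbols."""
    per_source = [mapped_indices(seed, m) for seed in seeds]
    members = [set() for _ in range(m)]  # coded index -> unrecovered sources
    for s, indices in enumerate(per_source):
        for i in indices:
            members[i].add(s)
    pure = [i for i in range(m) if len(members[i]) == 1]
    recovered = 0
    while pure:
        i = pure.pop()
        if len(members[i]) != 1:
            continue  # stale entry: this coded symbol was already used
        (s,) = members[i]
        recovered += 1
        for j in per_source[s]:  # peel source s off every coded symbol
            members[j].discard(s)
            if len(members[j]) == 1:
                pure.append(j)
    return recovered == len(seeds)


def symbols_needed(d: int, rng: random.Random, cap: int = 100) -> int:
    """Smallest prefix length that decodes d random source symbols."""
    seeds = [rng.getrandbits(64) for _ in range(d)]
    m = d  # at least one coded symbol per source symbol is necessary
    while not decodable(seeds, m) and m < cap * d:
        m += 1
    return m


def average_overhead(d: int, trials: int, rng: random.Random) -> float:
    """Mean number of coded symbols per source symbol over several trials."""
    return sum(symbols_needed(d, rng) for _ in range(trials)) / (trials * d)
```

Restarting the decoder for each prefix length keeps the sketch short; an incremental decoder would avoid the repeated work.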

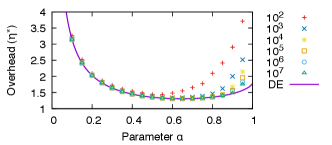

Fig. 4 shows the main results. It compares the overhead predicted by Theorem 5.1 and that observed in simulations. First, notice that as the difference size increases, simulation results converge to the analysis for all . How fast the results converge depends on . For all , convergence happens quickly, and the overhead observed in simulations stays within of the analysis even for the smallest difference size we test. On the other hand, for , simulation results are still higher than the analysis at the largest difference size we test. Second, the figure shows that setting is close to optimal for the communication overhead. Setting results in , while the optimal setting is which results in , a difference of only .

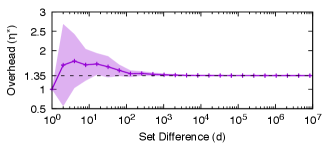

Next, we focus on , the parameter we choose for our final design. Fig. 5 shows the overhead as we vary the difference size . It peaks at when and then converges to as predicted by Corollary 5.2. Convergence happens quickly: for all , the overhead is less than .

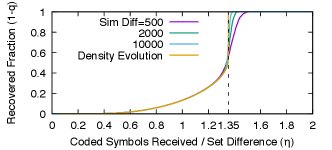

The density evolution analysis also predicts how decoding progresses as the decoder receives more coded symbols. The fixed points of in Eq. 3 represent the expected fraction of source symbols that the peeling decoder fails to recover before stalling, as goes to infinity. Fig. 6 compares this result with simulations (we plot , the fraction that the decoder can recover) and they match closely. There is a sharp increase in the fraction of recovered source symbols towards the end, a behavior also seen in other codes that use the peeling decoder, such as LT codes (Luby, 2002).

6. Implementation

We implement Rateless IBLT as a library in 353 lines of Go code. The implementation is self-contained and does not use third-party code. In this section, we discuss some important optimizations in the implementation.

Efficient incremental encoding. A key feature of Rateless IBLT is that it allows Alice to generate and send coded symbols one by one until Bob can decode. Suppose that Alice has generated coded symbols until index , and now wishes to generate the -th coded symbol. She needs to quickly find the source symbols that are mapped to it. A strawman solution is to store alongside each source symbol the next index it is mapped to, and scan all the source symbols to find the ones mapped to . However, this takes time. In our implementation, we store pointers to source symbols in a heap. It implements a priority queue, where the priority is the index of the next coded symbol that a source symbol is mapped to. A smaller value indicates higher priority. This ensures that source symbols used for generating the next coded symbol are always at the head of the queue so that the encoder can access them efficiently without scanning all the source symbols.
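The priority-queue idea can be sketched as follows. This is an illustration under stated assumptions, not the library's code: items are modelled as integers, the checksum field is omitted, and `next_index` is a placeholder gap rule rather than the mapping defined in § 4.2. Each heap entry is keyed by the index of the next coded symbol its source symbol maps to, so producing a coded symbol touches only the entries at the head of the heap.

```python
import heapq
import math
import random


def next_index(cur: int, rng: random.Random) -> int:
    """Placeholder gap rule (assumption): gaps grow with the current index."""
    u = rng.random()
    gap = math.ceil((cur + 1.5) * (1.0 / math.sqrt(1.0 - u) - 1.0))
    return cur + max(1, gap)


class Encoder:
    """Generates coded symbols one by one as (XOR sum, count) pairs."""

    def __init__(self, items):
        # Entry layout: (next coded index, tie-breaker, item, per-item PRNG).
        # The unique tie-breaker keeps tuple comparison away from the PRNG.
        self.heap = [(0, n, item, random.Random(item))
                     for n, item in enumerate(items)]
        heapq.heapify(self.heap)
        self.index = 0  # index of the next coded symbol to produce

    def produce(self):
        total, count = 0, 0
        # Only source symbols mapped to self.index sit at the head of the heap.
        while self.heap and self.heap[0][0] == self.index:
            idx, n, item, rng = self.heap[0]
            total ^= item
            count += 1
            # Re-insert the item keyed by the next index it maps to.
            heapq.heapreplace(self.heap, (next_index(idx, rng), n, item, rng))
        self.index += 1
        return total, count
```

Each call to `produce` costs one heap operation per source symbol mapped to the current index, instead of a scan over the whole set.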

Variable-length encoding for count. Recall that the count field stores the number of source symbols that are mapped to a coded symbol during encoding. The standard approach is to allocate a fixed number of bytes for it (Eppstein et al., 2011; Lázaro and Matuz, 2023), which inflates the size of each coded symbol by a constant amount. However, in Rateless IBLT, the value stored in count decreases with the index of the coded symbol according to a known pattern: the -th coded symbol for a set is expected to have a count of . This pattern allows us to aggressively compress the count field. Instead of storing the value itself, we can store the difference of the actual value and the aforementioned expected value, which is a much smaller number. The node receiving the coded symbol can reconstruct the actual value of count, because it knows (transmitted with the -th coded symbol) and (assuming a transport that preserves ordering). Instead of allocating a fixed number of bytes, we use variable-length quantity (Wang et al., 2017) to store the difference, which uses bytes to store any number . Using our approach, the count field takes only bytes per coded symbol on average when encoding a set of items into coded symbols, keeping the resulting communication cost to a minimum.
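A minimal sketch of this compression step is shown below. The expected count is passed in by the caller because its formula is not reproduced here, and the zigzag mapping for negative differences is an assumption of this sketch (the text does not say how signs are handled). The byte layout follows the common 7-bits-per-byte scheme with a continuation bit, least significant group first.

```python
def zigzag(n: int) -> int:
    """Map signed integers to unsigned ones: 0, -1, 1, -2, ... -> 0, 1, 2, 3, ..."""
    return 2 * n if n >= 0 else -2 * n - 1


def unzigzag(z: int) -> int:
    return z // 2 if z % 2 == 0 else -(z + 1) // 2


def encode_vlq(value: int) -> bytes:
    """Encode a non-negative integer using 7 payload bits per byte."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def decode_vlq(data: bytes) -> int:
    """Decode a byte string that holds exactly one encoded integer."""
    value = 0
    for position, byte in enumerate(data):
        value |= (byte & 0x7F) << (7 * position)
    return value


def encode_count(actual: int, expected: int) -> bytes:
    """Store only the (usually small) deviation from the expected count."""
    return encode_vlq(zigzag(actual - expected))


def decode_count(data: bytes, expected: int) -> int:
    return expected + unzigzag(decode_vlq(data))
```

For instance, a count of 1000 against an expected value of 998 fits in a single byte, whereas a fixed-width field would spend the same number of bytes on every coded symbol.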

7. Evaluation

We compare Rateless IBLT with state-of-the-art set reconciliation schemes, and demonstrate its low communication (§ 7.1) and computation (§ 7.2) costs across a wide range of workloads (set sizes, difference sizes, and item lengths). We then apply Rateless IBLT to synchronize the account states of Ethereum and demonstrate significant improvements over the production system on real workloads (§ 7.3).

Schemes compared. We compare with regular IBLT (Goodrich and Mitzenmacher, 2011; Eppstein et al., 2011), MET-IBLT (Lázaro and Matuz, 2023), PinSketch (Dodis et al., 2008), and Merkle tries (Yue et al., 2020). For Rateless IBLT, we use our implementation discussed in § 6. For regular IBLT and MET-IBLT, we implement each scheme in Python. We use the recommended parameters (Eppstein et al., 2011, § 6.1; Lázaro and Matuz, 2023, §§ V-A, V-C), and allocate 8 bytes each for the checksum and the count fields. For PinSketch, we use Minisketch (Naumenko et al., 2019, § 6), a state-of-the-art implementation (Wuille, 2018) written in C++ and deployed in Bitcoin. For Merkle tries, we use the implementation in Geth (go-ethereum Authors, 2024a), the most popular client for Ethereum.

7.1. Communication Cost

We first measure the communication overhead, defined as the amount of data transmitted during reconciliation divided by the size of set difference accounted in bytes. We test with set differences of – items. Beyond , the overhead of all schemes stays stable. The set size is 1 million items (recall that it only affects Merkle trie’s communication cost). Each item is 32 bytes, the size of a SHA256 hash, commonly used as keys in open-permission distributed systems (Nakamoto, 2008; Trautwein et al., 2022). For Rateless IBLT and MET-IBLT, we generate coded symbols until decoding succeeds, repeat each experiment times, and then report the average overhead and the standard deviation. Regular IBLTs cannot be dynamically expanded, and tuning the number of coded symbols requires precise knowledge of the size of the set difference. Usually, this is achieved by sending an estimator before reconciliation (Eppstein et al., 2011), which incurs an extra communication cost of at least 15 KB according to the recommended setup (Lázaro and Matuz, 2023, § V-C). We report the overhead of regular IBLT with and without this extra cost. Also, unlike the other schemes, regular IBLTs may fail to decode probabilistically. We gradually increase the number of coded symbols until the decoding failure rate drops below .

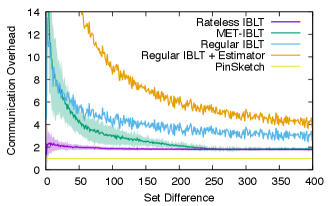

Fig. 7 shows the overhead of all schemes except for Merkle trie, whose overhead is significantly higher than the rest at over across all difference sizes we test. Rateless IBLT consistently achieves lower overhead compared to regular IBLT and MET-IBLT, especially when the set difference is small. For example, the overhead is – lower when the set difference is less than . The improvement is more significant when considering the cost of the estimator for regular IBLTs. On the other hand, PinSketch consistently achieves an overhead of , which is – lower than Rateless IBLT. However, as we will soon show, Rateless IBLT incurs – less computation than PinSketch on both the encoder and the decoder. We believe that the extra communication cost is worthwhile in most applications for the significant reduction in computation cost.

Scalability of Rateless IBLT. We quickly remark on how Rateless IBLT’s communication cost scales to longer or shorter items. Like other schemes based on sparse graphs, the checksum and count fields add a constant cost to each coded symbol. For Rateless IBLT, these two fields together occupy about bytes. Longer items will better amortize this fixed cost. When reconciling shorter items, this fixed cost might become more prominent. However, it is possible to reduce the length of the checksum field if the differences are smaller, because there will be fewer opportunities for hash collisions. We found that hashes of bytes are enough to reliably reconcile differences of tens of thousands. It is also possible to remove the count field altogether; Bob can still recover the symmetric difference as the peeling decoder (§ 3) does not use this field.
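The remark about dropping the count field can be illustrated with a small end-to-end sketch in which coded symbols carry only an XOR sum and a checksum. It is written under several assumptions: items are 64-bit integers, keyed BLAKE2b stands in for SipHash, the gap rule in `mapped_indices` is a placeholder for the mapping of § 4.2, and the key and function names are hypothetical. A coded symbol is treated as pure when the checksum of its sum equals its checksum field.

```python
import hashlib
import math
import random

KEY = b"shared secret key"  # hypothetical key agreed on by Alice and Bob


def checksum(item: int) -> int:
    raw = item.to_bytes(8, "big")
    return int.from_bytes(
        hashlib.blake2b(raw, digest_size=8, key=KEY).digest(), "big")


def mapped_indices(item: int, m: int) -> list:
    """Indices below m that an item maps to (placeholder rule)."""
    rng, idx, out = random.Random(item), 0, []
    while idx < m:
        out.append(idx)
        u = rng.random()
        gap = math.ceil((idx + 1.5) * (1.0 / math.sqrt(1.0 - u) - 1.0))
        idx += max(1, gap)
    return out


def encode(items, m: int) -> list:
    """First m coded symbols of a set, each stored as [sum, checksum]."""
    coded = [[0, 0] for _ in range(m)]
    for item in items:
        c = checksum(item)
        for i in mapped_indices(item, m):
            coded[i][0] ^= item
            coded[i][1] ^= c
    return coded


def subtract(a: list, b: list) -> list:
    """Coded symbols of the symmetric difference, by linearity of XOR."""
    return [[sa ^ sb, ca ^ cb] for (sa, ca), (sb, cb) in zip(a, b)]


def peel(coded: list):
    """Peeling decoder that never consults a count field."""
    m, recovered, todo = len(coded), set(), list(range(len(coded)))
    while todo:
        i = todo.pop()
        total, check = coded[i]
        if total in recovered or checksum(total) != check:
            continue  # not (or no longer) a pure coded symbol
        recovered.add(total)
        for j in mapped_indices(total, m):  # remove the item everywhere
            coded[j][0] ^= total
            coded[j][1] ^= check
            todo.append(j)
    finished = all(s == 0 and c == 0 for s, c in coded)
    return recovered, finished
```

Without counts, Bob learns which items are in the symmetric difference but must check his own set to tell which side each item came from.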

7.2. Computation Cost

There are two potential computation bottlenecks in set reconciliation: encoding the sets into coded symbols, and decoding the coded symbols to recover the symmetric difference. Encoding happens at Alice, and both encoding and decoding happen at Bob. In this experiment, we measure the encoding and decoding throughput for sets of various sizes and differences. We focus on comparing with PinSketch. We fix the item size to bytes, because this is the maximum size that the PinSketch implementation supports. We do not compare with regular IBLT or MET-IBLT as we cannot find high-quality open source implementations, and they have similar complexity to Rateless IBLT. (Footnote 5: The complexity is linear in the average number of coded symbols each source symbol is mapped to. This is for Rateless IBLT and MET-IBLT (Lázaro and Matuz, 2023), and constant for regular IBLT, where is the number of coded symbols. However, the cost is amortized over the size of the set difference, which is . So, in all three IBLT-based schemes, the cost to encode for each set difference decreases quickly as increases.) We will compare with Merkle trie in § 7.3. We run the benchmarks on a server with two Intel Xeon E5-2697 v4 CPUs. Both Rateless IBLT and PinSketch are single-threaded, and we pin the executions to one CPU core using cpuset(1).

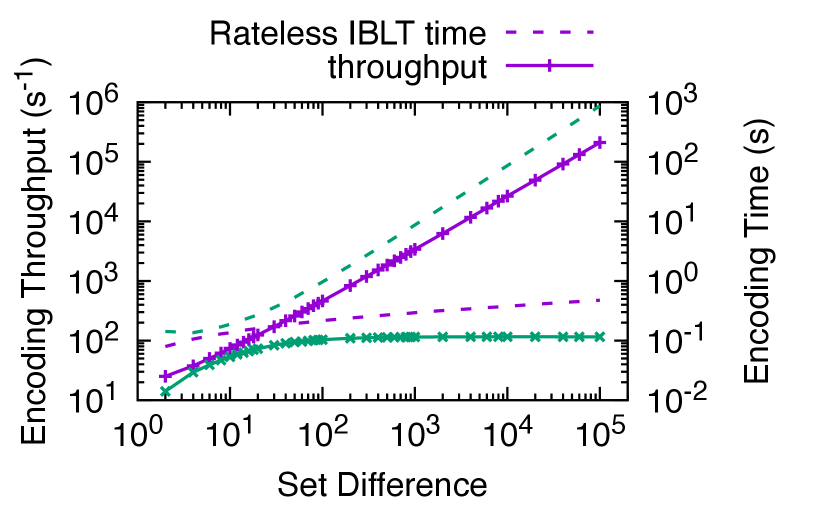

Encoding. Fig. 9 shows in solid lines the encoding throughput, defined as the difference size divided by the time it takes for the encoder to generate enough coded symbols for successful reconciliation. It indicates the number of items that can be reconciled per second with a compute-bound encoder. Rateless IBLT achieves – higher encoding throughput than PinSketch when reconciling differences of – items. The significant gain is because the mapping between source and coded symbols is sparse in Rateless IBLT, and the sparsity increases rapidly with , so the average cost to generate a coded symbol decreases quickly. In comparison, generating a coded symbol in PinSketch always requires evaluating the entire characteristic polynomial, causing the throughput to converge to a constant.

As the difference size increases, the encoding throughput of Rateless IBLT increases almost linearly, enabling the encoder to scale to large differences. In Fig. 9, we plot in dashed lines the time it takes to finish encoding. As the difference size increases by , the encoding time of Rateless IBLT grows by less than . Meanwhile, the encoding time of PinSketch grows by .

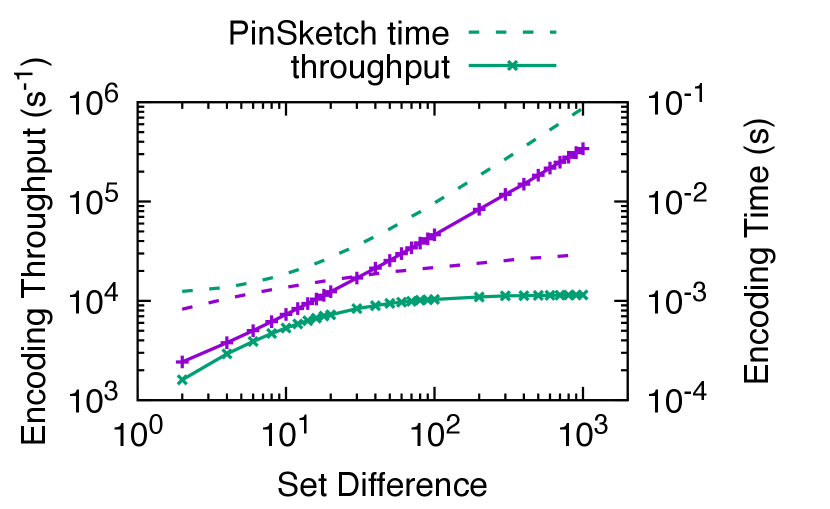

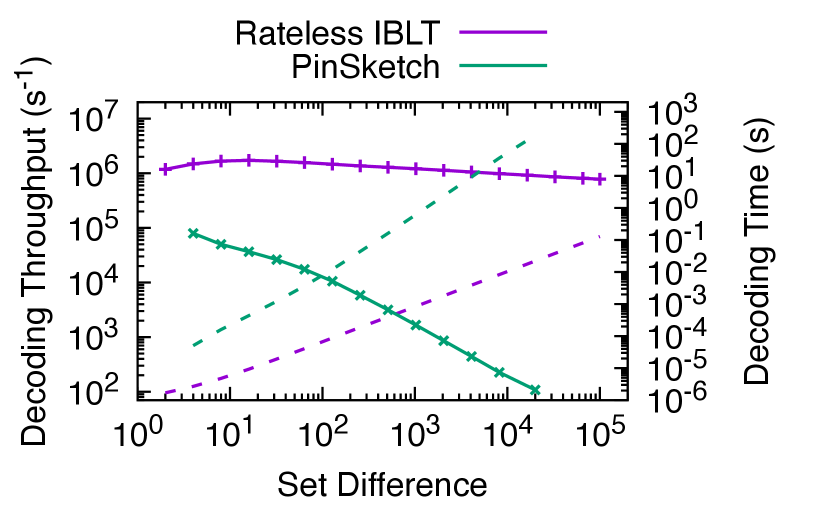

Decoding. Fig. 9 shows the decoding throughput (solid lines) and time (dashed lines), defined similarly as in the encoding experiment. We do not make a distinction of the set size, because it does not affect the decoding complexity. (Recall that decoders operate on coded symbols of the symmetric difference only.) Rateless IBLT achieves – higher decoding throughput than PinSketch. This is because decoding PinSketch is equivalent to interpolating polynomials (Dodis et al., 2008), which has complexity (Wuille, 2018), while decoding Rateless IBLT has only complexity thanks to the sparse mapping between source and coded symbols. As the difference size grows by , the decoding throughput of Rateless IBLT drops by only , allowing it to scale to large differences. For example, it takes Rateless IBLT second to decode differences. In contrast, it takes PinSketch more than a minute to decode differences.

Scalability of Rateless IBLT. We now show that Rateless IBLT preserves its computation efficiency when scaling to larger sets, larger differences, and longer items.

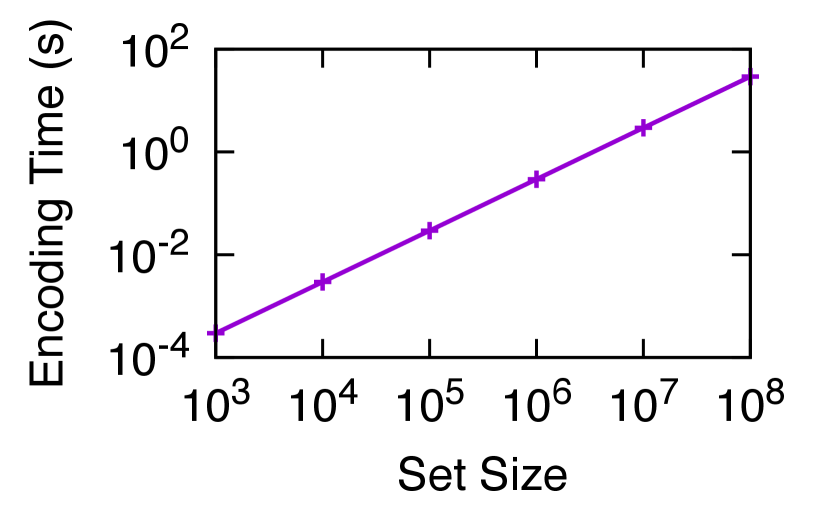

The set size affects encoding, but not decoding, because the decoder operates on coded symbols that represent the symmetric difference. The computation cost of encoding grows linearly with , as each source symbol is mapped to the same number of coded symbols on average and thus adds the same amount of work. For example, in Fig. 9, the encoding time for differences is milliseconds when , and milliseconds when , a difference of that matches the change in . Fig. 11 shows the encoding time measured in experiments with the same configuration for a wider range of .

The difference size affects both encoding and decoding. Recall that Rateless IBLT uses about coded symbols to reconcile differences (§ 5). As increases, the encoder needs to generate more coded symbols. However, unlike PinSketch, where the cost is linear in , the cost of Rateless IBLT grows logarithmically. For example, in Fig. 8(a), the encoding time grows by only as the set difference increases from to . This is because the mapping from source to coded symbols is sparse: each source symbol is only mapped to an average of coded symbols. The same result applies to decoding. For example, in Fig. 9, the decoding throughput drops by as the difference size grows by .

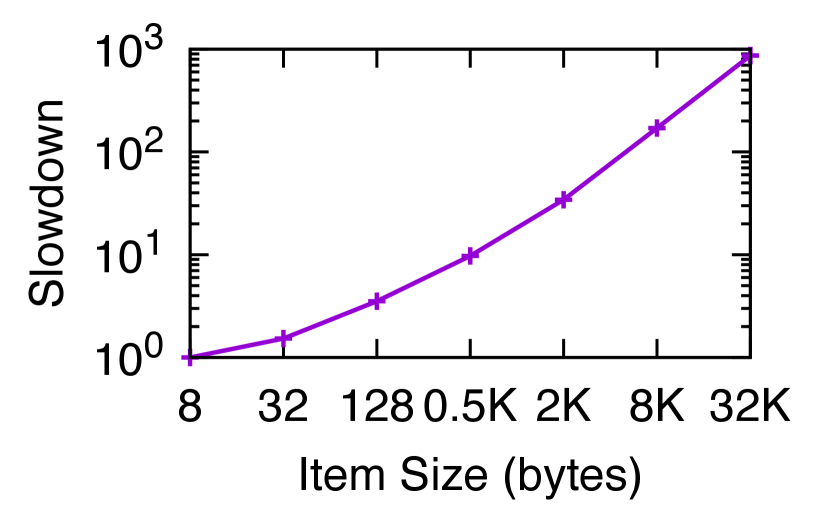

The item size affects both encoding and decoding because it decides the time it takes to compute the XOR of two symbols, which dominates the computation cost in Rateless IBLT. Fig. 11 shows the relative slowdown when as grows from bytes to KB. Initially, the slowdown is sublinear (e.g., less than when grows by from to bytes) because the other costs that are independent of (e.g., generating the mappings) are better amortized. However, after KB, the slowdown becomes linear. This implies that the data rate at which the encoder can process source symbols, measured in bytes per second, stays constant. For example, when encoding for , the encoder can process source symbols at MB/s. The same analysis applies to decoding. In comparison, the encoding complexity of PinSketch increases linearly with , and the decoding complexity increases quadratically (Dodis et al., 2008; Wuille, 2018).

7.3. Application

We now apply Rateless IBLT to a prominent application, the Ethereum blockchain. Whenever a blockchain replica comes online, it must synchronize with others to get the latest ledger state before it can validate new transactions or serve user queries. The ledger state is a key-value table, where the keys are 20-byte wallet addresses, and the values are 72-byte account states such as its balance. There are 230 million accounts as of January 4, 2024. Synchronizing the ledger state is equivalent to reconciling the set of all key-value pairs, a natural application of Rateless IBLT.

Ethereum (as well as most other blockchains) currently uses Merkle tries (§ 2) to synchronize ledger states between replicas. It applies a few optimizations: using a 16-ary trie instead of a binary one, and shortening sub-tries that have no branches. The protocol is called state heal and has been deployed in Geth (go-ethereum Authors, 2024a), the implementation that accounts for 84% of all Ethereum replicas (Sonic, 2024). Variants of Geth also power other major blockchains, such as Binance Smart Chain and Optimism.

State heal retains the issues with Merkle tries despite the optimizations. To discover a differing key-value pair (leaf), replicas must visit and compare every internal node on the branch from the root to the differing leaf. This amplifies the communication, computation, and storage I/O costs by as much as the depth of the trie, i.e., for a set of key-value pairs. In addition, replicas must descend the branch in lockstep, so the process takes round trips. As a result, some Ethereum replicas have reported spending weeks on state heal (e.g., go-ethereum Authors, 2024b). In comparison, Rateless IBLT does not have these issues. Its communication and computation costs depend only on the size of the difference rather than the entire ledger state, and it requires no interactivity between replicas besides streaming coded symbols at line rate.

Setup. We compare state heal with Rateless IBLT in synchronizing Ethereum ledger states. We implement a prototype in 1,903 lines of Go code. The prototype is able to load a snapshot of the ledger state from the disk, and synchronize with a peer over the network using either scheme. For state heal, we use the implementation (go-ethereum Authors, 2024c) in Geth v1.13.10 without modification. For Rateless IBLT, we use our implementation discussed in § 6. We wrap it with a simple network protocol where a replica requests synchronization by opening a TCP connection to the peer, and the peer streams coded symbols until the requesting replica closes the connection to signal successful decoding.
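The shape of this streaming protocol can be sketched with plain sockets. This is not the prototype's code: the fixed record size, the `enough` callback that stands in for "decoding has succeeded", and the function names are all hypothetical. The serving side keeps sending coded symbols and treats a failed send as the signal that the requester has closed the connection.

```python
import socket
import threading

SYMBOL_BYTES = 8  # hypothetical fixed size of one serialized coded symbol


def serve(conn: socket.socket, symbols) -> int:
    """Stream coded symbols until the peer closes the connection."""
    sent = 0
    try:
        for symbol in symbols:
            conn.sendall(symbol)
            sent += 1
    except OSError:
        pass  # the peer hung up, which signals successful decoding
    finally:
        conn.close()
    return sent


def fetch(conn: socket.socket, enough) -> list:
    """Read coded symbols until `enough(received)` is true, then hang up."""
    received, buf = [], b""
    while not enough(received):
        chunk = conn.recv(4096)
        if not chunk:
            break  # the sender went away before decoding finished
        buf += chunk
        while len(buf) >= SYMBOL_BYTES and not enough(received):
            received.append(buf[:SYMBOL_BYTES])
            buf = buf[SYMBOL_BYTES:]
    conn.close()  # closing the connection tells the sender to stop
    return received


def counter_symbols():
    """Stand-in for an endless stream of coded symbols."""
    i = 0
    while True:
        yield i.to_bytes(SYMBOL_BYTES, "big")
        i += 1
```

A quick local check can connect the two sides with `socket.socketpair()`, run `serve` in a `threading.Thread`, and call `fetch` with a callback such as `lambda received: len(received) >= 5`.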

To obtain workload for experiments, we extract snapshots of Ethereum ledger states as of blocks 18908312–18938312, corresponding to a 100-hour time span between December 31, 2023 and January 4, 2024. Each snapshot represents the ledger state when a block was just produced in the live Ethereum blockchain. (Footnote 6: Ethereum produces a block every 12 seconds. Each block is a batch of transactions that update the ledger state.) For each experiment, we set up two replicas: Alice always loads the latest snapshot (block 18938312); Bob loads snapshots of different staleness and synchronizes with Alice. This simulates the scenario where Bob goes offline at some point in time (depending on the snapshot he loads), wakes up when block 18938312 was just produced, and synchronizes with Alice to get the latest ledger state. We run both replicas on a server with two Intel Xeon E5-2698 v4 CPUs running FreeBSD 14.0. We use Dummynet (Rizzo, 1997) to inject a 50 ms one-way propagation delay between the replicas and enforce different bandwidth caps of 10 to 100 Mbps.

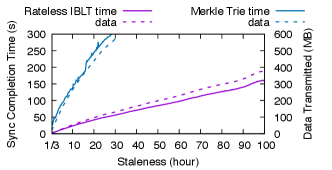

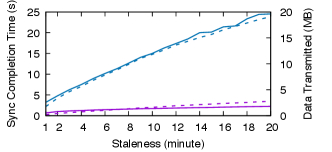

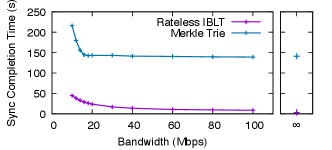

Results. We first vary the state snapshot that Bob loads and measure the completion time and the communication cost for Bob to synchronize with Alice. We fix the bandwidth to 20 Mbps. Fig. 12 shows the results. As Bob’s state becomes more stale, more updates happen between his and the latest states, and the difference between the two grows linearly. As a result, the completion time and the communication cost of both schemes increase linearly. Meanwhile, Rateless IBLT consistently achieves – lower completion time, and – lower communication cost compared to state heal. As discussed previously, state heal has a much higher communication cost because it requires transmitting the differing internal nodes of the Merkle trie in addition to the leaves. For example, this amplifies the number of trie nodes transmitted by when Bob’s state is 30 hours stale. The higher communication cost leads to proportionately longer completion time as the system is throughput-bound.

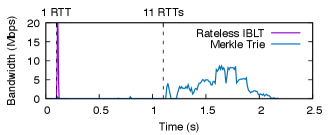

In our experiments, state heal requires at least 11 rounds of interactivity, as Alice and Bob descend from the roots of their tries to the differing leaves in lockstep. Rateless IBLT, in comparison, only requires half of a round because Alice streams coded symbols without waiting for any feedback. This advantage is the most obvious when reconciling a small difference, where the system would be latency-bound. For example, Rateless IBLT is faster than state heal when Bob’s ledger state is only 1 block (12 seconds) stale.

We quickly highlight the impact of interactivity. Fig. 13 shows traces of bandwidth usage when synchronizing one block worth of state difference. For Rateless IBLT, the first coded symbol arrives at Bob in 1 round-trip time (RTT) after his TCP socket opens (0.5 RTT for TCP ACK to reach Alice, and another 0.5 RTT for the first symbol to arrive). Subsequent symbols arrive at line rate, as the peak at 1 RTT indicates. In comparison, for state heal, Alice and Bob reach the bottom of their tries after 11 RTTs; before that, they do not know the actual key-value pairs that differ, and the network link stays almost idle.

Finally, we demonstrate that Rateless IBLT consistently outperforms state heal across different network conditions. We fix Bob’s snapshot to be 10 hours stale and vary the bandwidth cap. Fig. 14 shows the results. Rateless IBLT is faster than state heal at 10 Mbps, and the gain increases to at 100 Mbps. Notice that the completion time of state heal stays constant after 20 Mbps; it cannot utilize any extra bandwidth. We observe that state heal becomes compute-bound: Bob cannot process the trie nodes he receives fast enough to saturate the network. The completion time does not change even if we remove the bandwidth cap. In contrast, Rateless IBLT is throughput-bound, as its completion time keeps decreasing with the increasing bandwidth. If we remove the bandwidth cap, Rateless IBLT takes 2.5 seconds to finish and can saturate a 170 Mbps link using one CPU core on each side.

Before ending, we quickly discuss a few other potential solutions and how Rateless IBLT compares with them. When Bob’s state is consistent with some particular block, he may request Alice to compute and send the state delta from his block to the latest block, which would be as efficient as an optimal set reconciliation scheme. However, this is often not the case when Bob needs synchronization, such as when he recovers from database corruption or he has downloaded inconsistent shards of state snapshots from multiple sources, routine when Geth replicas bootstrap (Taverna and Paterson, 2023). Rateless IBLT (and state heal) does not assume consistent states. Coded symbols in traditional set reconciliation schemes like regular IBLT are tailored to a fixed difference size (§ 3). Alice has to generate a new batch of coded symbols for each peer with a different state. This would add minutes to the latency for large sets like Ethereum, incur significant computation costs, and create denial-of-service vulnerabilities. (Footnote 7: These issues also apply to the aforementioned state delta solution to a lesser degree, because Alice has to compute the state deltas on the fly.) In contrast, Rateless IBLT allows Alice to prepare a single stream of coded symbols that is efficient for all peers. Because of linearity (§ 4), Alice can incrementally update the coded symbols as her ledger state changes. For an average Ethereum block, it takes 11 ms to update 50 million coded symbols (7 GB) using one CPU core to reflect the state changes.
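The incremental update enabled by linearity can be sketched as follows, under the same simplifying assumptions as a toy model: items are integers, coded symbols hold only an XOR sum and a count (the checksum would be updated the same way), and `mapped_indices` uses a placeholder gap rule rather than the mapping of § 4.2. Inserting or deleting an item touches only the coded symbols that item maps to, so a cached prefix never has to be rebuilt from scratch.

```python
import math
import random


def mapped_indices(item: int, m: int) -> list:
    """Indices below m that an item maps to (placeholder rule)."""
    rng, idx, out = random.Random(item), 0, []
    while idx < m:
        out.append(idx)
        u = rng.random()
        gap = math.ceil((idx + 1.5) * (1.0 / math.sqrt(1.0 - u) - 1.0))
        idx += max(1, gap)
    return out


def apply_change(coded: list, item: int, delta: int) -> None:
    """Update cached coded symbols in place.

    delta is +1 when `item` is inserted into the set and -1 when it is
    deleted. XOR is its own inverse, so both cases share the same sum update.
    """
    for i in mapped_indices(item, len(coded)):
        coded[i][0] ^= item
        coded[i][1] += delta


def encode(items, m: int) -> list:
    """Build the first m coded symbols as [sum, count] pairs."""
    coded = [[0, 0] for _ in range(m)]
    for item in items:
        apply_change(coded, item, +1)
    return coded
```

In this model, `apply_change(coded, old_item, -1)` followed by `apply_change(coded, new_item, +1)` reflects a modified key-value pair.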

8. Irregular Rateless IBLTs

When designing Rateless IBLTs (§ 4.1), the key task was to define the mapping rule that decides whether a source symbol should be mapped to the -th coded symbol. Lemmas 4.1 and 4.2 showed that the mapping rule cannot be uniform over coded symbols: the probability that a random source symbol is mapped to the -th coded symbol must decrease with . In other words, different coded symbols are statistically inequivalent. On average, a coded symbol with a smaller index sees more source symbols mapped to it than one with a larger index does.

However, the same is not true for source symbols in our design: every subset of source symbols uses the same mapping probability with the same parameter . This leaves a degree of freedom which we did not explore. We may divide source symbols into multiple subsets and use different (in particular, different ) for each subset. Similar techniques have successfully improved the communication costs of regular IBLTs (Lázaro and Matuz, 2021). In this section, we apply this technique on Rateless IBLTs and discuss the implications.

Concretely, we partition source symbols into mutually exclusive subsets, where is a parameter. A random source symbol belongs to subset () with probability , which is another set of parameters. For a source symbol , we choose the subset it belongs to based on its hash. For example, belongs to subset if assuming the hash is uniformly distributed in . For each subset , we define a parameter and use mapping probability when mapping source symbols in this subset. In other words, we replace with a subset-specific in the algorithm described in § 4.2. By convention, we call this generalized design Irregular Rateless IBLTs. Rateless IBLTs as discussed prior to this section is a special case where , , and .
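The hash-based subset assignment can be sketched as below. The weights in the usage note are made up for illustration and are not the configuration found in the paper, and `choose_subset` and `HASH_BITS` are hypothetical names. The sketch normalizes a uniformly distributed hash to the unit interval and returns the subset whose cumulative-probability interval contains it.

```python
import bisect
import itertools

HASH_BITS = 64  # assumption: hashes are uniform over [0, 2**64)


def choose_subset(item_hash: int, weights) -> int:
    """Return the index of the subset that `item_hash` falls into.

    `weights` are the subset probabilities and are expected to sum to 1.
    Subset j owns the half-open interval between the cumulative sums of
    the weights before it and the cumulative sum including it.
    """
    cumulative = list(itertools.accumulate(weights))
    point = item_hash / 2 ** HASH_BITS
    # Clamp to the last subset to absorb floating-point rounding at the top.
    return min(bisect.bisect_right(cumulative, point), len(weights) - 1)
```

With hypothetical weights `[0.25, 0.75]`, hashes below one quarter of the hash range land in subset 0 and the rest in subset 1; the encoder would then use the subset-specific parameter when generating that symbol's mappings.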

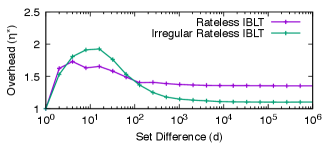

As mentioned, the main benefit of Irregular Rateless IBLTs over Rateless IBLTs is a lower communication cost. Unfortunately, the density evolution analysis does not produce a closed-form result like the one in Theorem 5.1. To find a good configuration of , , and that minimizes the overhead, we use brute force and try different values in simulations. To limit the complexity of the search, we set the number of subsets to and found the following optimal configuration

As shown in Fig. 15, the resulting communication overhead converges to , which is lower than Rateless IBLTs (§ 4) and only above the information-theoretic lower bound. Meanwhile, encoding and decoding are times slower than Rateless IBLTs. As mentioned in § 4.2, the main reason is that computing mappings when requires raising numbers to arbitrary non-integer powers, while the case of only requires computing square roots, which is faster on modern hardware. We leave further optimizations of the parameters and the implementation to future work.

9. Conclusion

We designed, mathematically analyzed, and experimentally evaluated Rateless IBLT. To the best of our knowledge, Rateless IBLT is the first set reconciliation solution with universally low computation cost and near-optimal communication cost across workloads. The distinguishing feature is ratelessness: it encodes any set into an infinitely long codeword, of which any prefix is capable of reconciling a proportional number of differences with another set. Ratelessness simplifies deployment as there is no parameter; reduces overhead as nodes can incrementally send longer prefixes without over- or under-committing resources to fixed-sized codewords; and naturally supports concurrent synchronization with multiple nodes. We mathematically proved its asymptotic efficiency and showed that the actual performance converges quickly with extensive simulations. We implemented Rateless IBLT as a library and benchmarked its performance. Finally, we applied Rateless IBLT to a popular distributed application and demonstrated significant gains in state synchronization over the production system.

We point out a few interesting future directions: optimizing the parameters and the implementation of Irregular Rateless IBLTs; considering scenarios where Alice and Bob’s sets change in the middle of reconciliation; and designing efficient solutions for reconciliation across more than two parties.

Acknowledgments

We thank Francisco Lázaro for fruitful discussions. Lei Yang was supported by a gift from the Ethereum Foundation. Yossi Gilad was partially supported by the Alon Fellowship.

This work does not raise any ethical issues. Appendices are supporting material that has not been peer-reviewed.

References

- Androulaki et al. (2018) Elli Androulaki, Artem Barger, Vita Bortnikov, Christian Cachin, Konstantinos Christidis, Angelo De Caro, David Enyeart, Christopher Ferris, Gennady Laventman, Yacov Manevich, Srinivasan Muralidharan, Chet Murthy, Binh Nguyen, Manish Sethi, Gari Singh, Keith Smith, Alessandro Sorniotti, Chrysoula Stathakopoulou, Marko Vukolić, Sharon Weed Cocco, and Jason Yellick. 2018. Hyperledger fabric: a distributed operating system for permissioned blockchains. In Proceedings of the Thirteenth EuroSys Conference (Porto, Portugal) (EuroSys ’18). Association for Computing Machinery, New York, NY, USA, Article 30, 15 pages. https://doi.org/10.1145/3190508.3190538

- Aumasson and Bernstein (2012) Jean-Philippe Aumasson and Daniel J. Bernstein. 2012. SipHash: A Fast Short-Input PRF. In 13th International Conference on Cryptology in India (INDOCRYPT 2012) (Kolkata, India) (Lecture Notes in Computer Science, Vol. 7668). Springer, New York, NY, USA, 489–508. https://doi.org/10.1007/978-3-642-34931-7_28

- Bloom (1970) Burton H. Bloom. 1970. Space/time trade-offs in hash coding with allowable errors. Commun. ACM 13, 7 (jul 1970), 422–426. https://doi.org/10.1145/362686.362692

- Bose and Ray-Chaudhuri (1960) R. C. Bose and Dwijendra K. Ray-Chaudhuri. 1960. On A Class of Error Correcting Binary Group Codes. Inf. Control. 3, 1 (1960), 68–79. https://doi.org/10.1016/S0019-9958(60)90287-4

- Byers et al. (1998) John W. Byers, Michael Luby, Michael Mitzenmacher, and Ashutosh Rege. 1998. A digital fountain approach to reliable distribution of bulk data. In Proceedings of the ACM SIGCOMM ’98 Conference on Applications, Technologies, Architectures, and Protocols for Computer Communication (Vancouver, British Columbia, Canada) (SIGCOMM ’98). Association for Computing Machinery, New York, NY, USA, 56–67. https://doi.org/10.1145/285237.285258

- Le Cam (1960) Lucien Le Cam. 1960. An approximation theorem for the Poisson binomial distribution. Pacific J. Math. 10, 4 (1960), 1181–1197.

- Dodis et al. (2008) Yevgeniy Dodis, Rafail Ostrovsky, Leonid Reyzin, and Adam D. Smith. 2008. Fuzzy Extractors: How to Generate Strong Keys from Biometrics and Other Noisy Data. SIAM J. Comput. 38, 1 (2008), 97–139. https://doi.org/10.1137/060651380

- Eppstein et al. (2011) David Eppstein, Michael T. Goodrich, Frank Uyeda, and George Varghese. 2011. What’s the difference? efficient set reconciliation without prior context. In Proceedings of the ACM SIGCOMM 2011 Conference (Toronto, Ontario, Canada) (SIGCOMM ’11). Association for Computing Machinery, New York, NY, USA, 218–229. https://doi.org/10.1145/2018436.2018462

- Gallager (1962) Robert G. Gallager. 1962. Low-density parity-check codes. IRE Trans. Inf. Theory 8, 1 (1962), 21–28. https://doi.org/10.1109/TIT.1962.1057683

- go-ethereum Authors (2024a) The go-ethereum Authors. 2024a. go-ethereum: Official Go implementation of the Ethereum protocol. https://geth.ethereum.org.

- go-ethereum Authors (2024b) The go-ethereum Authors. 2024b. State heal phase is very slow (not finished after 2 weeks). https://github.com/ethereum/go-ethereum/issues/23191.

- go-ethereum Authors (2024c) The go-ethereum Authors. 2024c. trie package - go-ethereum. https://pkg.go.dev/github.com/ethereum/go-ethereum/trie.

- Goodrich and Mitzenmacher (2011) Michael T. Goodrich and Michael Mitzenmacher. 2011. Invertible Bloom Lookup Tables. In 49th Annual Allerton Conference on Communication, Control, and Computing (Allerton 2011) (Monticello, IL, USA). IEEE, New York, NY, USA, 792–799. https://doi.org/10.1109/ALLERTON.2011.6120248

- Han et al. (2020) Yilin Han, Chenxing Li, Peilun Li, Ming Wu, Dong Zhou, and Fan Long. 2020. Shrec: bandwidth-efficient transaction relay in high-throughput blockchain systems. In Proceedings of the 11th ACM Symposium on Cloud Computing (Virtual Event, USA) (SoCC ’20). Association for Computing Machinery, New York, NY, USA, 238–252. https://doi.org/10.1145/3419111.3421283

- Lázaro and Matuz (2021) Francisco Lázaro and Balázs Matuz. 2021. Irregular Invertible Bloom Look-Up Tables. In 11th International Symposium on Topics in Coding (ISTC 2021) (Montreal, QC, Canada). IEEE, New York, NY, USA, 1–5. https://doi.org/10.1109/ISTC49272.2021.9594198

- Lázaro and Matuz (2023) Francisco Lázaro and Balázs Matuz. 2023. A Rate-Compatible Solution to the Set Reconciliation Problem. IEEE Trans. Commun. 71, 10 (2023), 5769–5782. https://doi.org/10.1109/TCOMM.2023.3296630

- Luby (2002) Michael Luby. 2002. LT Codes. In 43rd Symposium on Foundations of Computer Science (FOCS 2002) (Vancouver, BC, Canada). IEEE Computer Society, Los Alamitos, CA, USA, 271. https://doi.org/10.1109/SFCS.2002.1181950

- Luby et al. (1998) Michael G. Luby, Michael Mitzenmacher, and M. Amin Shokrollahi. 1998. Analysis of random processes via And-Or tree evaluation. In Proceedings of the Ninth Annual ACM-SIAM Symposium on Discrete Algorithms (San Francisco, California, USA) (SODA ’98). Society for Industrial and Applied Mathematics, USA, 364–373.

- Minsky et al. (2003) Yaron Minsky, Ari Trachtenberg, and Richard Zippel. 2003. Set reconciliation with nearly optimal communication complexity. IEEE Trans. Inf. Theory 49, 9 (2003), 2213–2218. https://doi.org/10.1109/TIT.2003.815784

- Mitzenmacher and Pagh (2018) Michael Mitzenmacher and Rasmus Pagh. 2018. Simple multi-party set reconciliation. Distributed Comput. 31, 6 (2018), 441–453. https://doi.org/10.1007/S00446-017-0316-0

- Mitzenmacher and Varghese (2012) Michael Mitzenmacher and George Varghese. 2012. Biff (Bloom filter) codes: Fast error correction for large data sets. In Proceedings of the 2012 IEEE International Symposium on Information Theory (ISIT 2012) (Cambridge, MA, USA). IEEE, New York, NY, USA, 483–487. https://doi.org/10.1109/ISIT.2012.6284236

- Nakamoto (2008) Satoshi Nakamoto. 2008. Bitcoin: A peer-to-peer electronic cash system.

- Naumenko et al. (2019) Gleb Naumenko, Gregory Maxwell, Pieter Wuille, Alexandra Fedorova, and Ivan Beschastnikh. 2019. Erlay: Efficient Transaction Relay for Bitcoin. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security (London, United Kingdom) (CCS ’19). Association for Computing Machinery, New York, NY, USA, 817–831. https://doi.org/10.1145/3319535.3354237

- Ozisik et al. (2019) A. Pinar Ozisik, Gavin Andresen, Brian N. Levine, Darren Tapp, George Bissias, and Sunny Katkuri. 2019. Graphene: efficient interactive set reconciliation applied to blockchain propagation. In Proceedings of the ACM Special Interest Group on Data Communication (Beijing, China) (SIGCOMM ’19). Association for Computing Machinery, New York, NY, USA, 303–317. https://doi.org/10.1145/3341302.3342082

- Perry et al. (2022) Neil Perry, Bruce Spang, Saba Eskandarian, and Dan Boneh. 2022. Strong Anonymity for Mesh Messaging. arXiv:2207.04145 [cs.CR]

- Raman et al. (2019) Aravindh Raman, Sagar Joglekar, Emiliano De Cristofaro, Nishanth Sastry, and Gareth Tyson. 2019. Challenges in the Decentralised Web: The Mastodon Case. In Proceedings of the Internet Measurement Conference (Amsterdam, Netherlands) (IMC ’19). Association for Computing Machinery, New York, NY, USA, 217–229. https://doi.org/10.1145/3355369.3355572

- Reed and Solomon (1960) Irving S. Reed and Gustave Solomon. 1960. Polynomial Codes Over Certain Finite Fields. J. Soc. Indust. Appl. Math. 8, 2 (1960), 300–304. https://doi.org/10.1137/0108018

- Richardson and Urbanke (2001) Thomas J. Richardson and Rüdiger L. Urbanke. 2001. The capacity of low-density parity-check codes under message-passing decoding. IEEE Trans. Inf. Theory 47, 2 (2001), 599–618. https://doi.org/10.1109/18.910577

- Rizzo (1997) Luigi Rizzo. 1997. Dummynet: a simple approach to the evaluation of network protocols. SIGCOMM Comput. Commun. Rev. 27, 1 (January 1997), 31–41. https://doi.org/10.1145/251007.251012

- Robbins (1955) Herbert Robbins. 1955. A remark on Stirling’s formula. The American Mathematical Monthly 62, 1 (1955), 26–29.

- Sonic (2024) Sonic. 2024. Ethereum Execution Client Diversity. https://execution-diversity.info (retrieved January 22, 2024).

- Steele (1994) J. Michael Steele. 1994. Le Cam’s inequality and Poisson approximations. The American Mathematical Monthly 101, 1 (1994), 48–54.

- Summermatter and Grothoff (2022) E. Summermatter and C. Grothoff. 2022. Byzantine Fault Tolerant Set Reconciliation. https://lsd.gnunet.org/lsd0003/.

- Taverna and Paterson (2023) Massimiliano Taverna and Kenneth G. Paterson. 2023. Snapping Snap Sync: Practical Attacks on Go Ethereum Synchronising Nodes. In 32nd USENIX Security Symposium (USENIX Security 23). USENIX Association, Anaheim, CA, 3331–3348. https://www.usenix.org/conference/usenixsecurity23/presentation/taverna

- Trautwein et al. (2022) Dennis Trautwein, Aravindh Raman, Gareth Tyson, Ignacio Castro, Will Scott, Moritz Schubotz, Bela Gipp, and Yiannis Psaras. 2022. Design and evaluation of IPFS: a storage layer for the decentralized web. In Proceedings of the ACM SIGCOMM 2022 Conference (Amsterdam, Netherlands) (SIGCOMM ’22). Association for Computing Machinery, New York, NY, USA, 739–752. https://doi.org/10.1145/3544216.3544232

- Wang et al. (2017) Jianguo Wang, Chunbin Lin, Yannis Papakonstantinou, and Steven Swanson. 2017. An Experimental Study of Bitmap Compression vs. Inverted List Compression. In Proceedings of the 2017 ACM International Conference on Management of Data (Chicago, Illinois, USA) (SIGMOD ’17). Association for Computing Machinery, New York, NY, USA, 993–1008. https://doi.org/10.1145/3035918.3064007

- Wood (2014) Gavin Wood. 2014. Ethereum: A secure decentralised generalised transaction ledger.

- Wuille (2018) Pieter Wuille. 2018. Minisketch: a library for BCH-based set reconciliation. https://github.com/sipa/minisketch.

- Yue et al. (2020) Cong Yue, Zhongle Xie, Meihui Zhang, Gang Chen, Beng Chin Ooi, Sheng Wang, and Xiaokui Xiao. 2020. Analysis of Indexing Structures for Immutable Data. In Proceedings of the 2020 ACM SIGMOD International Conference on Management of Data (Portland, OR, USA) (SIGMOD ’20). Association for Computing Machinery, New York, NY, USA, 925–935. https://doi.org/10.1145/3318464.3389773

Appendix A Inflexibility of Regular IBLTs

We state and prove Theorems A.1 and A.2, which show that the efficiency of regular IBLTs degrades exponentially fast when they are used to reconcile more or fewer differences than they were parameterized for. We state the theorems with respect to generic sets of source symbols. When using IBLTs for set reconciliation (§ 3), the sets are .

Theorem A.1.

For a random set of source symbols and a corresponding regular IBLT with coded symbols, the probability that the peeling decoder can recover at least one source symbol decreases exponentially in .

Proof.

For the peeling decoder to recover at least one source symbol, there must be at least one pure coded symbol at the beginning. Otherwise, peeling cannot start, and no source symbol can be recovered. We now calculate a lower bound on the probability that no pure coded symbol exists. Note that there is another parameter for regular IBLTs, , which determines the number of coded symbols each source symbol is mapped to (§ 3). However, it can be shown that the probability that no pure coded symbol exists increases with , so we set to get a lower bound.

We consider the equivalent problem: if we throw balls (source symbols) uniformly at random into bins (coded symbols), what is a lower bound on the probability that no bin ends up having exactly one ball? We first count the number of outcomes in which at least one bin has exactly one ball, which is the complement of the event we are interested in. We set aside one of the bins, which will get exactly one ball, and assign one of the balls to this bin. We then throw the remaining balls into the remaining bins freely. This procedure counts some outcomes more than once, so it gives an upper bound

The total number of ways to throw balls into bins is

Each way of throwing is equally likely to happen, so the probability that no bin ends up with exactly one ball has a lower bound

We are interested in the event where peeling can start, which is the complementary event. Its probability has an upper bound for

∎
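The counting argument in this proof can be checked numerically. The following is a minimal sketch, assuming n balls (source symbols) and m bins (coded symbols), with each source symbol mapped to a single coded symbol as in the proof. The names n and m and both function names are introduced here for illustration only. Choosing the bin, choosing its ball, and placing the remaining balls freely gives at most m * n * (m - 1)^(n - 1) outcomes out of m^n, that is, a bound of n * (1 - 1/m)^(n - 1) on the probability that peeling can start. The simulation estimates that probability directly.

```python
import random
from collections import Counter


def peel_start_bound(n, m):
    """Upper bound on P(some bin holds exactly one ball).

    Choose the bin (m ways), choose its ball (n ways), throw the other
    n - 1 balls into the other m - 1 bins, then divide by the m**n
    equally likely outcomes. Overcounting makes this an upper bound,
    and it can exceed 1 when n/m is small.
    """
    return n * (1 - 1 / m) ** (n - 1)


def estimate_peel_start(n, m, trials, seed=0):
    """Monte Carlo estimate of P(at least one bin holds exactly one ball)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        loads = Counter(rng.randrange(m) for _ in range(n))
        if any(count == 1 for count in loads.values()):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    m = 20  # hypothetical table size
    for n in (40, 100, 200, 400):
        est = estimate_peel_start(n, m, trials=2000)
        bound = peel_start_bound(n, m)
        print(f"n/m={n / m:5.1f}  estimate={est:.4f}  bound={bound:.4g}")
```

With m fixed, both the estimate and the bound shrink rapidly as n/m grows, which is the behavior the theorem describes.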

The following theorem says that when dropping a fraction of a regular IBLT to reconcile a smaller number of differences with a constant overhead (the ratio between the number of used coded symbols and the number of source symbols ), the success probability decreases quickly as a larger fraction gets dropped.

Theorem A.2.

Consider a random set of source symbols and a corresponding regular IBLT with coded symbols, where each source symbol is mapped to coded symbols. The peeling decoder tries to recover all source symbols using the first coded symbols. and are constants, and . The probability that it succeeds decreases exponentially in .

Proof.

For the peeling decoder to succeed, each of the source symbols must be mapped at least once to the first coded symbols. Because each source symbol is uniformly mapped to of the coded symbols, the probability that one is only mapped to the remaining coded symbols that the decoder does not use (“missed”) is

The last step approximates with . This does not change the result qualitatively because is a constant.

The probability that no source symbol is missed is

∎
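The two probabilities used in this proof can be evaluated directly. The sketch below assumes that each source symbol is mapped to k distinct coded symbols chosen uniformly at random out of m, and that the decoder keeps only the first `used` coded symbols. The parameter names and function names are hypothetical. Because every source symbol touching the retained prefix is only a necessary condition for decoding, `no_symbol_missed` is an upper bound on the success probability, not the success probability itself.

```python
from math import comb


def miss_probability(m, used, k):
    """P(a source symbol maps only to coded symbols outside the first `used`).

    Assumes the symbol is mapped to k distinct coded symbols chosen
    uniformly at random out of m.
    """
    return comb(m - used, k) / comb(m, k)


def miss_probability_approx(m, used, k):
    """Approximation that treats the k choices as independent."""
    return (1 - used / m) ** k


def no_symbol_missed(n, m, used, k):
    """P(every one of n source symbols touches the first `used` coded symbols)."""
    return (1 - miss_probability(m, used, k)) ** n


if __name__ == "__main__":
    m, k, overhead = 1000, 3, 2  # hypothetical parameters
    for n in (400, 200, 100, 50):
        used = overhead * n  # retained prefix; the other m - used symbols are dropped
        p = no_symbol_missed(n, m, used, k)
        print(f"dropped fraction={1 - used / m:.2f}  P(no symbol missed)={p:.3g}")
```

The exact and approximate miss probabilities stay close for constant k, and the probability that no symbol is missed collapses as the dropped fraction grows.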

Appendix B Calculation of and

Before proceeding to calculate , we first prove a useful identity about quotients of Gamma functions,

We start from the right-hand side,

The identity immediately implies the following

| (4) |

We now calculate .

The second-to-last equality results from applying Eq. 4.

Appendix C Deferred Proofs

Lemma C.1 (Restatement of Lemma 4.1).

For any , any mapping probability such that , and any , if there exists at least one pure coded symbol within the first coded symbols for a random set with probability , then .

Proof.

We need to show . Because , there exists and , such that for all . Let be the smallest non-zero value among for all . Let

where is such that for all ,

Note that , so such always exists.

For any , the -th coded symbol is pure if and only if exactly one source symbol is mapped to it, which happens with probability

The inequality comes from the fact that for any and .

By the definition of , for any and any , either , or and . In either case,

Recall that we want at least one pure symbol among the first coded symbols. Assume for contradiction that . Then, failure happens with probability

∎
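The quantity at the center of this proof, the probability that a given coded symbol is pure, is easy to evaluate numerically. The sketch below assumes that each of n source symbols is mapped to the coded symbol at index i independently with probability rho(i), and that different coded symbols are independent of each other. The function names and the example mapping probability i^(-1/2) are illustrative assumptions, not the paper's parameters.

```python
def pure_probability(n, rho):
    """P(exactly one of n source symbols maps to a coded symbol),
    when each maps to it independently with probability rho."""
    return n * rho * (1 - rho) ** (n - 1)


def prob_some_pure(n, m, rho_fn):
    """P(at least one of the coded symbols at indices 1..m is pure),
    treating different coded symbols as independent."""
    none_pure = 1.0
    for i in range(1, m + 1):
        none_pure *= 1 - pure_probability(n, rho_fn(i))
    return 1 - none_pure


if __name__ == "__main__":
    def slowly_decaying(i):
        # Hypothetical mapping probability that decays more slowly than 1/i.
        return i ** -0.5

    n = 100
    for m in (n, 2 * n, 5 * n, 20 * n):
        p = prob_some_pure(n, m, slowly_decaying)
        print(f"m/n={m // n:3d}  P(some pure symbol)={p:.4f}")
```

For this example mapping probability, a pure symbol is very unlikely to appear within the first n coded symbols and becomes likely only once m is many times n.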

We remark that a stronger result which only requires can be shown with a very similar proof, which we omit for simplicity and lack of practical implications. We may also consider a generalization of this lemma, by requiring there to be at least coded symbols with at most source symbols mapped to each, for every . (Lemma 4.1 is the special case of .) This may lead to an even tighter bound on , which we conjecture to be .

Lemma C.2 (Restatement of Lemma 4.2).

For any mapping probability such that , and any , if there exist at least non-empty coded symbols within the first coded symbols for a random set with probability , then .

Proof.

We need to show that . First, note that for there to be non-empty symbols within the first coded symbols, cannot be smaller than , so the statement is trivially true for . We now prove for the case of .

For any , let . Because , there must exist such that for all . Let

For all , the -th coded symbol is non-empty with probability

The first inequality is because for , and the second inequality is because for any and .

In order to get non-empty symbols among the first coded symbols, there must be at least non-empty symbols from index to index . To derive an upper bound on this probability, we assume that each is non-empty with probability , which, as we just saw, is strictly an overestimate. By Hoeffding’s inequality, the probability that there are at least non-empty symbols has an upper bound

when , which is true for all .

Assume for contradiction. By the definition of , the previous upper bound becomes

The right-hand side monotonically decreases with . So, by the definition of , for all ,

∎
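The Hoeffding step can be sanity-checked against an exact binomial tail. In the sketch below, `trials` stands for the number of coded symbols being counted, `p` for the (overestimated) probability that each one is non-empty, and `threshold` for the required number of non-empty symbols. These names are placeholders introduced for illustration. The bound used is the standard form P(X >= threshold) <= exp(-2 * trials * (threshold/trials - p)^2), valid when the threshold is at or above the mean.

```python
from math import comb, exp


def binomial_upper_tail(trials, p, threshold):
    """Exact P(X >= threshold) for X ~ Binomial(trials, p)."""
    return sum(
        comb(trials, j) * p**j * (1 - p) ** (trials - j)
        for j in range(threshold, trials + 1)
    )


def hoeffding_upper_tail(trials, p, threshold):
    """Hoeffding bound on P(X >= threshold); requires threshold >= trials * p."""
    gap = threshold / trials - p
    if gap < 0:
        raise ValueError("bound only applies at or above the mean")
    return exp(-2 * trials * gap * gap)


if __name__ == "__main__":
    trials, p = 200, 0.1  # hypothetical values
    for threshold in (20, 30, 40, 60):
        exact = binomial_upper_tail(trials, p, threshold)
        bound = hoeffding_upper_tail(trials, p, threshold)
        print(f"threshold={threshold:3d}  exact={exact:.3e}  hoeffding={bound:.3e}")
```

The exact tail always sits below the Hoeffding bound, and both fall off exponentially as the threshold moves away from the mean.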

Theorem C.3 (Restatement of Theorem 5.1).

For a random set of source symbols, the probability that the peeling decoder successfully recovers the set using the first coded symbols (as defined in § 4) goes to 1 as goes to infinity. Here, is any positive constant such that

Before proving the theorem, we introduce the graph representation of a set of source symbols and the corresponding coded symbols. Imagine a bipartite graph where each source or coded symbol is a vertex in the graph, and there is an edge between a source and a coded symbol if and only if the former is mapped to the latter during encoding. Fig. 16 is an example. We define the degree of a symbol as the number of neighbors it has in this bipartite graph, i.e., its degree as in graph theory. For example, in Fig. 16, source symbol has degree 4, and coded symbol has degree 2.
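The graph representation can be made concrete with a small data structure. The sketch below builds the bipartite graph from a mapping of source symbols to coded-symbol indices and computes the degree of every vertex. The symbol names and the example mapping are hypothetical and are not taken from Fig. 16.

```python
from collections import defaultdict


def build_graph(mapping):
    """Return (source_degree, coded_degree, coded_neighbors) for a mapping
    from each source symbol to the indices of coded symbols it is mapped to."""
    source_degree = {}
    coded_neighbors = defaultdict(set)
    for source, coded_indices in mapping.items():
        distinct = set(coded_indices)  # one edge per (source, coded) pair
        source_degree[source] = len(distinct)
        for index in distinct:
            coded_neighbors[index].add(source)
    coded_degree = {index: len(nbrs) for index, nbrs in coded_neighbors.items()}
    return source_degree, coded_degree, dict(coded_neighbors)


if __name__ == "__main__":
    # Hypothetical example: three source symbols and their coded-symbol indices.
    example = {"x0": [0, 1, 3, 6], "x1": [0, 2], "x2": [0, 1, 4]}
    source_degree, coded_degree, neighbors = build_graph(example)
    print("source degrees:", source_degree)
    print("coded degrees:", dict(sorted(coded_degree.items())))
    pure = sorted(i for i, d in coded_degree.items() if d == 1)
    print("degree-one (pure) coded symbols:", pure)
```

In this representation, a pure coded symbol is a coded vertex of degree one, which is where the peeling decoder starts.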