Principal Geodesic Analysis for Probability Measures under the Optimal Transport Metric

Abstract

Given a family of probability measures in , the space of probability measures on a Hilbert space , our goal in this paper is to highlight one ore more curves in that summarize efficiently that family. We propose to study this problem under the optimal transport (Wasserstein) geometry, using curves that are restricted to be geodesic segments under that metric. We show that concepts that play a key role in Euclidean PCA, such as data centering or orthogonality of principal directions, find a natural equivalent in the optimal transport geometry, using Wasserstein means and differential geometry. The implementation of these ideas is, however, computationally challenging. To achieve scalable algorithms that can handle thousands of measures, we propose to use a relaxed definition for geodesics and regularized optimal transport distances. The interest of our approach is demonstrated on images seen either as shapes or color histograms.

1 Introduction

Optimal transport distances (Villani, 2008), a.k.a Wasserstein or earth mover’s distances, define a powerful geometry to compare probability measures supported on a metric space . The Wasserstein space —the space of probability measures on endowed with the Wasserstein distance—is a metric space which has received ample interest from a theoretical perspective. Given the prominent role played by probability measures and feature histograms in machine learning, the properties of can also have practical implications in data science. This was shown by Agueh and Carlier (2011) who described first Wasserstein means of probability measures. Wasserstein means have been recently used in Bayesian inference (Srivastava et al., 2015), clustering (Cuturi and Doucet, 2014), graphics (Solomon et al., 2015) or brain imaging (Gramfort et al., 2015). When is not just metric but also a Hilbert space, is an infinite-dimensional Riemannian manifold (Ambrosio et al. 2006, Chap. 8; Villani 2008, Part II). Three recent contributions by Boissard et al. (2015, §5.2), Bigot et al. (2015) and Wang et al. (2013) exploit directly or indirectly this structure to extend Principal Component Analysis (PCA) to . These important seminal papers are, however, limited in their applicability and/or the type of curves they output. Our goal in this paper is to propose more general and scalable algorithms to carry out Wasserstein principal geodesic analysis on probability measures, and not simply dimensionality reduction as explained below.

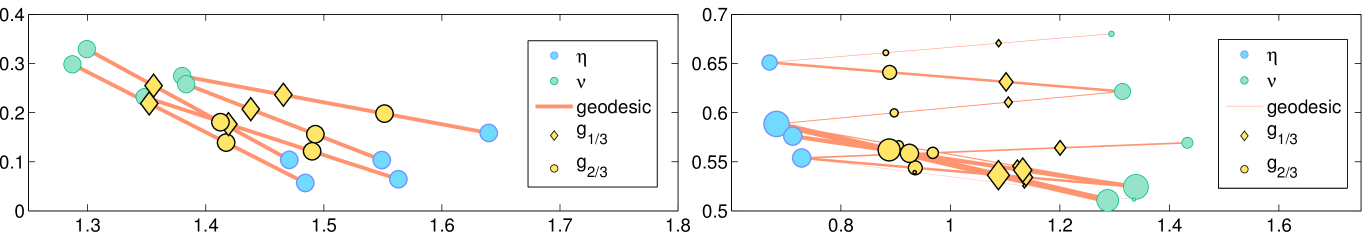

Principal Geodesics in vs. Dimensionality Reduction on

We provide in Fig. 1 a simple example that illustrates the motivation of this paper, and which also shows how our approach differentiates itself from existing dimensionality reduction algorithms (linear and non-linear) that draw inspiration from PCA. As shown in Fig. 1, linear PCA cannot produce components that remain in . Even more advanced tools, such as those proposed by Hastie and Stuetzle (1989), fall slightly short of that goal. On the other hand, Wasserstein geodesic analysis yields geodesic components in that are easy to interpret and which can also be used to reduce dimensionality.

Foundations of PCA and Riemannian Extensions

Carrying out PCA on a family of points taken in a space can be described in abstract terms as: (i) define a mean element for that dataset; (ii) define a family of components in , typically geodesic curves, that contain ; (iii) fit a component by making it follow the ’s as closely as possible, in the sense that the sum of the distances of each point to that component is minimized; (iv) fit additional components by iterating step (iii) several times, with the added constraint that each new component is different (orthogonal) enough to the previous components. When is Euclidean and the ’s are vectors in , the -th component can be computed iteratively by solving:

| (1) |

Since PCA is known to boil down to a simple eigen-decomposition when is Euclidean or Hilbertian (Schölkopf et al., 1997), Eq. (1) looks artificially complicated. This formulation is, however, extremely useful to generalize PCA to Riemannian manifolds (Fletcher et al., 2004). This generalization proceeds first by replacing vector means, lines and orthogonality conditions using respectively Fréchet means (1948), geodesics, and orthogonality in tangent spaces. Riemannian PCA builds then upon the knowledge of the exponential map at each point of the manifold . Each exponential map is locally bijective between the tangent space of and . After computing the Fréchet mean of the dataset, the logarithmic map at (the inverse of ) is used to map all data points onto . Because is a Euclidean space by definition of Riemannian manifolds, the dataset can be studied using Euclidean PCA. Principal geodesics in can then be recovered by applying the exponential map to a principal component , .

From Riemannian PCA to Wasserstein PCA: Related Work

As remarked by Bigot et al. (2015), Fletcher et al.’s approach cannot be used as it is to define Wasserstein geodesic PCA, because is infinite dimensional and because there are no known ways to define exponential maps which are locally bijective between Wasserstein tangent spaces and the manifold of probability measures. To circumvent this problem, Boissard et al. (2015), Bigot et al. (2015) have proposed to formulate the geodesic PCA problem directly as an optimization problem over curves in .

Boissard et al. and Bigot et al. study the Wasserstein PCA problem in restricted scenarios: Bigot et al. focus their attention on measures supported on , which considerably simplifies their analysis since it is known in that case that the Wasserstein space can be embedded isometrically in ; Boissard et al. assume that each input measure has been generated from a single template density (the mean measure) which has been transformed according to one “admissible deformation” taken in a parameterized family of deformation maps. Their approach to Wasserstein PCA boils down to a functional PCA on such maps. Wang et al. proposed a more general approach: given a family of input empirical measures , they propose to compute first a “template measure” using -means clustering on . They consider next all optimal transport plans between that template and each of the measures , and propose to compute the barycentric projection (see Eq. 8) of each optimal transport plan to recover Monge maps , on which standard PCA can be used. This approach is computationally attractive since it requires the computation of only one optimal transport per input measure. Its weakness lies, however, in the fact that the curves in obtained by displacing along each of these PCA directions are not geodesics in general.

Contributions and Outline

We propose a new algorithm to compute Wasserstein Principal Geodesics (WPG) in for arbitrary Hilbert spaces . We use several approximations—both of the optimal transport metric and of its geodesics—to obtain tractable algorithms that can scale to thousands of measures. We provide first in §2 a review of the key concepts used in this paper, namely Wasserstein distances and means, geodesics and tangent spaces in the Wasserstein space. We propose in §3 to parameterize a Wasserstein principal component (PC) using two velocity fields defined on the support of the Wasserstein mean of all measures, and formulate the WPG problem as that of optimizing these velocity fields so that the average distance of all measures to that PC is minimal. This problem is non-convex and non-smooth. We propose to optimize smooth upper-bounds of that objective using entropy regularized optimal transport in §4. The practical interest of our approach is demonstrated in §5 on toy samples, datasets of shapes and histograms of colors.

Notations

We write for the Frobenius dot-product of matrices and . is the diagonal matrix of vector . For a mapping , we say that acts on a measure through the pushforward operator to define a new measure . This measure is characterized by the identity for any Borel set . We write and for the canonical projection operators , defined as and .

2 Background on Optimal Transport

Wasserstein Distances

We start this section with the main mathematical object of this paper:

Definition 1.

(Villani, 2008, Def. 6.1) Let the space of probability measures on a Hilbert space . Let be the set of probability measures on with marginals and , i.e. and . The squared -Wasserstein distance between and in is defined as:

| (2) |

Wasserstein Barycenters

Given a family of probability measures in and weights , Agueh and Carlier (2011) define , the Wasserstein barycenter of these measures:

Wasserstein Geodesics

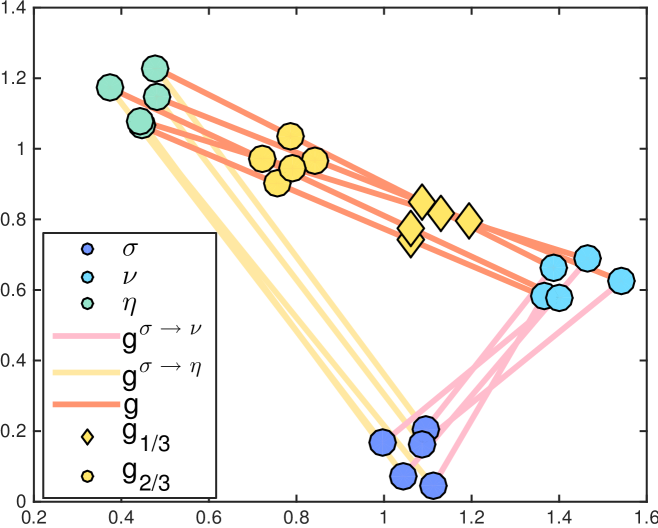

Given two measures and , let be the set of optimal couplings for Eq. (2). Informally speaking, it is well known that if either or are absolutely continuous measures, then any optimal coupling is degenerated in the sense that, assuming for instance that is absolutely continuous, for all in the support of only one point is such that . In that case, the optimal transport is said to have no mass splitting, and there exists an optimal mapping such that can be written, using a pushforward, as . When there is no mass splitting to transport to , McCann’s interpolant (1997):

| (3) |

defines a geodesic curve in the Wasserstein space, i.e. is locally the shortest path between any two measures located on the geodesic, with respect to . In the more general case, where no optimal map exists and mass splitting occurs (for some locations one may have for several ), then a geodesic can still be defined, but it relies on the optimal plan instead: , (Ambrosio et al., 2006, §7.2). Both cases are shown in Fig. 2.

Tangent Space and Tangent Vectors

We briefly describe in this section the tangent spaces of , and refer to (Ambrosio et al., 2006, Chap. 8) for more details. Let be a curve in . For a given time , the tangent space of at is a subset of , the space of square-integrable velocity fields supported on . At any , there exists tangent vectors in such that . Given a geodesic curve in parameterized as Eq. (3), its corresponding tangent vector at time zero is .

3 Wasserstein Principal Geodesics

Geodesic Parameterization

The goal of principal geodesic analysis is to define geodesic curves in that go through the mean and which pass close enough to all target measures . To that end, geodesic curves can be parameterized with two end points and . However, to avoid dealing with the constraint that a principal geodesic needs to go through , one can start instead from , and consider a velocity field which displaces all of the mass of in both directions:

| (4) |

Lemma 7.2.1 of Ambrosio et al. (2006) implies that any geodesic going through can be written as Eq. (4). Hence, we do not lose any generality using this parameterization. However, given an arbitrary vector field , the curve is not necessarily a geodesic. Indeed, the maps are not necessarily in the set of maps that are optimal when moving mass away from . Ensuring thus, at each step of our algorithm, that is still such that is a geodesic curve is particularly challenging. To relax this strong assumption, we propose to use a generalized formulation of geodesics, which builds upon not one but two velocity fields, as introduced by Ambrosio et al. (2006, §9.2):

Definition 2.

Choosing as the base measure in Definition 2, and two fields such that are optimal mappings (in ), we can define the following generalized geodesic :

| (5) |

Generalized geodesics become true geodesics when and are positively proportional. We can thus consider a regularizer that controls the deviation from that property by defining which is minimal when and are indeed positively proportional. We can now formulate the WPG problem as computing, for , the principal (generalized) geodesic component of a family of measures by solving, with :

| (6) |

This problem is not convex in , . We propose to find an approximation of that minimum by a projected gradient descent, with a projection that is to be understood in terms of an alternative metric on the space of vector fields . To preserve the optimality of the mappings and between iterations, we introduce in the next paragraph a suitable projection operator on .

Remark 1.

A trivial way to ensure that is geodesic is to impose that the vector field is a translation, namely that is uniformly equal to a vector on all of . One can show in that case that the WPG problem described in Eq. (6) outputs an optimal vector which is the Euclidean principal component of the family formed by the means of each measure .

Projection on the Optimal Mapping Set

We use a projected gradient descent method to solve Eq. (6) approximately. We will compute the gradient of a local upper-bound of the objective of Eq. (6) and update and accordingly. We then need to ensure that and are such that and belong to the set of optimal mappings . To do so, we would ideally want to compute the projection of in

| (7) |

to update . Westdickenberg (2010) has shown that the set of optimal mappings is a convex closed cone in , leading to the existence and the unicity of the solution of Eq. (7). However, there is to our knowledge no known method to compute the projection of . There is nevertheless a well known and efficient approach to find a mapping in which is close to . That approach, known as the the barycentric projection, requires to compute first an optimal coupling between and , to define then a (conditional expectation) map

| (8) |

Ambrosio et al. (2006, Theorem 12.4.4) or Reich (2013, Lemma 3.1) have shown that is indeed an optimal mapping between and . We can thus set the velocity field as to carry out an approximate projection. We show in the supplementary material that this operator can be in fact interpreted as a projection under a pseudo-metric on .

4 Computing Principal Generalized Geodesics in Practice

We show in this section that when , the steps outlined above can be implemented efficiently.

Input Measures and Their Barycenter

Each input measure in the family is a finite weighted sum of Diracs, described by points contained in a matrix of size , and a (non-negative) weight vector of dimension summing to 1. The Wasserstein mean of these measures is given and equal to , where the nonnegative vector sums to one, and is the matrix containing locations of .

Generalized Geodesic

Two velocity vectors for each of the points in are needed to parameterize a generalized geodesic. These velocity fields will be represented by two matrices and in . Assuming that these velocity fields yield optimal mappings, the points at time of that generalized geodesic are the measures parameterized by ,

The squared 2-Wasserstein distance between datum and a point on the geodesic is:

| (9) |

where is the transportation polytope and stands for the matrix of squared-Euclidean distances between the and column vectors of and respectively. Writing and , we have that

which, by taking into account the marginal conditions on , leads to,

| (10) |

1. Majorization of the Distance of each to the Principal Geodesic

Using Eq. (10), the distance between each and the PC can be cast as a function of :

| (11) |

where we have replaced above by its explicit form in to highlight that the objective above is quadratic convex plus piecewise linear concave as a function of , and thus neither convex nor concave. Assume that we are given and that are approximate arg-minima for . For any in , we thus have that each distance appearing in Eq. (6), is such that

| (12) |

We can thus use a majorization-minimization procedure (Hunter and Lange, 2000) to minimize the sum of terms by iteratively creating majorization functions at each iterate . All functions are quadratic convex. Given that we need to ensure that these velocity fields yield optimal mappings, and that they may also need to satisfy orthogonality constraints with respect to lower-order principal components, we use gradient steps to update , which can be recovered using (Cuturi and Doucet, 2014, §4.3) and the chain rule as:

| (13) |

2. Efficient Approximation of and

As discussed above, gradients for majorization functions can be obtained using approximate minima and for each function . Because the objective of Eq. (11) is not convex w.r.t. , we propose to do an exhaustive 1-d grid search with values in . This approach would still require, in theory, to solve optimal transport problems to solve Eq. (11) for each of the input measures. To carry out this step efficiently, we propose to use entropy regularized transport (Cuturi, 2013), which allows for much faster computations and efficient parallelizations to recover approximately optimal transports .

3. Projected Gradient Update

Velocity fields are updated with a gradient stepsize ,

followed by a projection step to enforce that and lie in in the sense when computing the PC. We finally apply the barycentric projection operator defined in the end of §3. We first need to compute two optimal transport plans,

| (14) |

to form the barycentric projections, which then yield updated velocity vectors:

| (15) |

We repeat steps 1,2,3 until convergence. Pseudo-code is given in the supplementary material.

5 Experiments

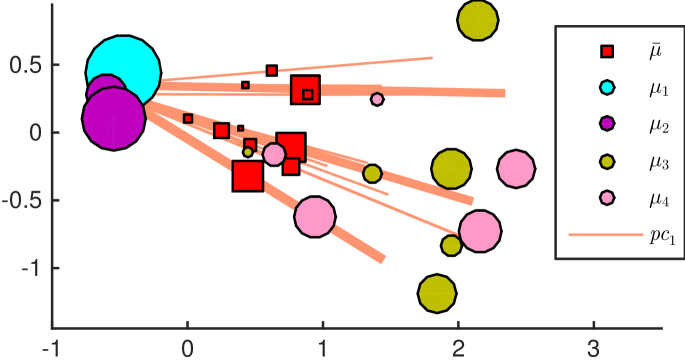

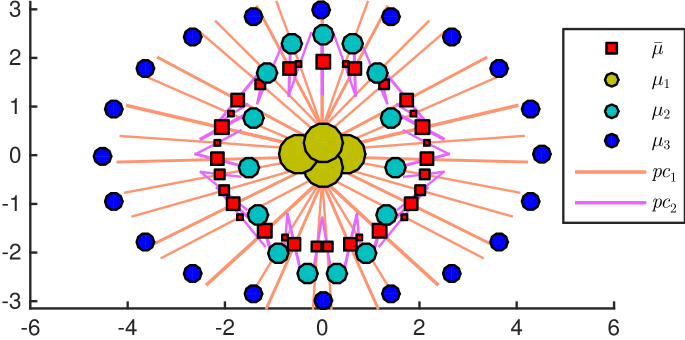

Toy samples: We first run our algorithm on two simple synthetic examples. We consider respectively 4 and 3 empirical measures supported on a small number of locations in , so that we can compute their exact Wasserstein means, using the multi-marginal linear programming formulation given in (Agueh and Carlier, 2011, §4). These measures and their mean (red squares) are shown in Fig. 4. The first principal component on the left example is able to capture both the variability of average measure locations, from left to right, and also the variability in the spread of the measure locations. On the right example, the first principal component captures the overall elliptic shape of the supports of all considered measures. The second principal component reflects the variability in the parameters of each ellipse on which measures are located. The variability in the weights of each location is also captured through the Wasserstein mean, since each single line of a generalized geodesic has a corresponding location and weight in the Wasserstein mean.

MNIST: For each of the digits ranging from to , we sample 1,000 images in the MNIST database representing that digit.

Each image, originally a 28x28 grayscale image, is converted into a probability distribution on that grid by normalizing each intensity by the total intensity in the image. We compute the Wasserstein mean for each digit using the approach of Benamou et al. (2015). We then follow our approach to compute the first three principal geodesics for each digit. Geodesics for four of these digits are displayed in Fig. 5 by showing intermediary (rasterized) measures on the curves. While some deformations in these curves can be attributed to relatively simple rotations around the digit center, more interesting deformations appear in some of the curves, such as the the loop on the bottom left of digit 2. Our results are easy to interpret, unlike those obtained with Wang et al.’s approach (2013) on these datasets, see supplementary material. Fig. 6 displays the first PC obtained on a subset of MNIST composed of 2,000 images of 2 and 4 in equal proportions.

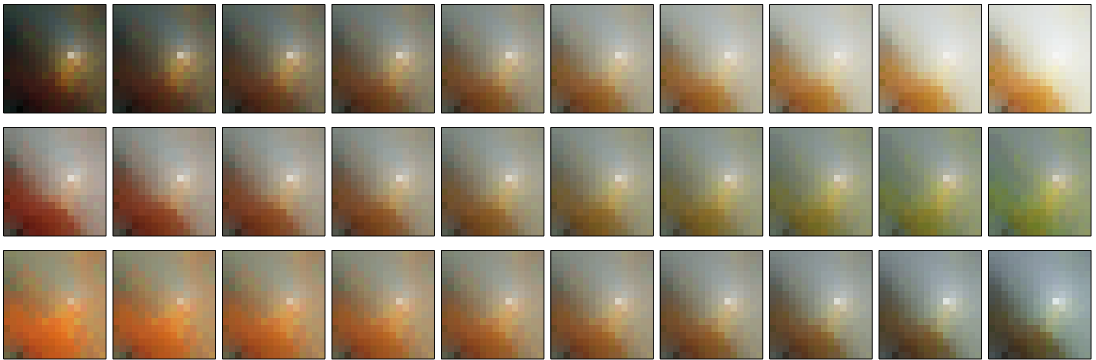

Color histograms: We consider a subset of the Caltech-256 Dataset composed of three image categories: waterfalls, tomatoes and tennis balls, resulting in a set of 295 color images. The pixels contained in each image can be seen as a point-cloud in the RGB color space . We use -means quantization to reduce the size of these uniform point-clouds into a set of weighted points, using cluster assignments to define the weights of each of the cluster centroids. Each image can be thus regarded as a discrete probability measure of atoms in the tridimensional RGB space. We then compute the Wasserstein barycenter of these measures supported on locations using (Cuturi and Doucet, 2014, Alg.2). Principal components are then computed as described in §4. The computation for a single PC is performed within 15 minutes on an iMac (3.4GHz Intel Core i7). Fig. 7 displays color palettes sampled along each of the first three PCs. The first PC suggests that the main source of color variability in the dataset is the illumination, each pixel going from dark to light. Second and third PCs display the variation of colors induced by the typical images’ dominant colors (blue, red, yellow). Fig. 8 displays the second PC, along with three images projected on that curve. The projection of a given image on a PC is obtained by finding first the optimal time such that the distance of that image to the PC at is minimum, and then by computing an optimal color transfer (Pitié et al., 2007) between the original image and the histogram at time .

Conclusion We have proposed an approximate projected gradient descent method to compute generalized geodesic principal components for probability measures. Our experiments suggest that these principal geodesics may be useful to analyze shapes and distributions, and that they do not require any parameterization of shapes or deformations to be used in practice.

Aknowledgements

MC acknowledges the support of JSPS young researcher A grant 26700002.

References

- Agueh and Carlier (2011) Martial Agueh and Guillaume Carlier. Barycenters in the wasserstein space. SIAM Journal on Mathematical Analysis, 43(2):904–924, 2011.

- Ambrosio et al. (2006) Luigi Ambrosio, Nicola Gigli, and Giuseppe Savaré. Gradient flows: in metric spaces and in the space of probability measures. Springer, 2006.

- Benamou et al. (2015) Jean-David Benamou, Guillaume Carlier, Marco Cuturi, Luca Nenna, and Gabriel Peyré. Iterative bregman projections for regularized transportation problems. SIAM Journal on Scientific Computing, 37(2):A1111–A1138, 2015.

- Bigot et al. (2015) Jérémie Bigot, Raúl Gouet, Thierry Klein, and Alfredo López. Geodesic pca in the wasserstein space by convex pca. Annales de l’Institut Henri Poincaré B: Probability and Statistics, 2015.

- Boissard et al. (2015) Emmanuel Boissard, Thibaut Le Gouic, Jean-Michel Loubes, et al. Distribution’s template estimate with wasserstein metrics. Bernoulli, 21(2):740–759, 2015.

- Bonneel et al. (2015) Nicolas Bonneel, Julien Rabin, Gabriel Peyré, and Hanspeter Pfister. Sliced and radon wasserstein barycenters of measures. Journal of Mathematical Imaging and Vision, 51(1):22–45, 2015.

- Cuturi (2013) Marco Cuturi. Sinkhorn distances: Lightspeed computation of optimal transport. In Advances in Neural Information Processing Systems, pages 2292–2300, 2013.

- Cuturi and Doucet (2014) Marco Cuturi and Arnaud Doucet. Fast computation of wasserstein barycenters. In Proceedings of the 31st International Conference on Machine Learning (ICML-14), pages 685–693, 2014.

- Fletcher et al. (2004) P. Thomas Fletcher, Conglin Lu, Stephen M. Pizer, and Sarang Joshi. Principal geodesic analysis for the study of nonlinear statistics of shape. Medical Imaging, IEEE Transactions on, 23(8):995–1005, 2004.

- Fréchet (1948) Maurice Fréchet. Les éléments aléatoires de nature quelconque dans un espace distancié. In Annales de l’institut Henri Poincaré, volume 10, pages 215–310. Presses universitaires de France, 1948.

- Gramfort et al. (2015) Alexandre Gramfort, Gabriel Peyré, and Marco Cuturi. Fast optimal transport averaging of neuroimaging data. In Information Processing in Medical Imaging (IPMI). Springer, 2015.

- Hastie and Stuetzle (1989) Trevor Hastie and Werner Stuetzle. Principal curves. Journal of the American Statistical Association, 84(406):502–516, 1989.

- Hunter and Lange (2000) David R Hunter and Kenneth Lange. Quantile regression via an mm algorithm. Journal of Computational and Graphical Statistics, 9(1):60–77, 2000.

- McCann (1997) Robert J McCann. A convexity principle for interacting gases. Advances in mathematics, 128(1):153–179, 1997.

- Pitié et al. (2007) François Pitié, Anil C Kokaram, and Rozenn Dahyot. Automated colour grading using colour distribution transfer. Computer Vision and Image Understanding, 107(1):123–137, 2007.

- Reich (2013) Sebastian Reich. A nonparametric ensemble transform method for bayesian inference. SIAM Journal on Scientific Computing, 35(4):A2013–A2024, 2013.

- Schölkopf et al. (1997) Bernhard Schölkopf, Alexander Smola, and Klaus-Robert Müller. Kernel principal component analysis. In Artificial Neural Networks—ICANN’97, pages 583–588. Springer, 1997.

- Solomon et al. (2015) Justin Solomon, Fernando de Goes, Pixar Animation Studios, Gabriel Peyré, Marco Cuturi, Adrian Butscher, Andy Nguyen, Tao Du, and Leonidas Guibas. Convolutional wasserstein distances: Efficient optimal transportation on geometric domains. ACM Transactions on Graphics (Proc. SIGGRAPH 2015), to appear, 2015.

- Srivastava et al. (2015) Sanvesh Srivastava, Volkan Cevher, Quoc Tran-Dinh, and David B Dunson. Wasp: Scalable bayes via barycenters of subset posteriors. In Proceedings of the Eighteenth International Conference on Artificial Intelligence and Statistics, pages 912–920, 2015.

- Verbeek et al. (2002) Jakob J Verbeek, Nikos Vlassis, and B Kröse. A k-segments algorithm for finding principal curves. Pattern Recognition Letters, 23(8):1009–1017, 2002.

- Villani (2008) Cédric Villani. Optimal transport: old and new, volume 338. Springer, 2008.

- Wang et al. (2013) Wei Wang, Dejan Slepčev, Saurav Basu, John A Ozolek, and Gustavo K Rohde. A linear optimal transportation framework for quantifying and visualizing variations in sets of images. International journal of computer vision, 101(2):254–269, 2013.

- Westdickenberg (2010) Michael Westdickenberg. Projections onto the cone of optimal transport maps and compressible fluid flows. Journal of Hyperbolic Differential Equations, 7(04):605–649, 2010.