Privacy-Preserving Analytics on Decentralized Social Graphs: The Case of Eigendecomposition

Abstract

Analytics over social graphs allows the extraction of valuable knowledge and insights for many fields such as community detection, fraud detection, and interest mining. In practice, decentralized social graphs frequently arise, where the social graph is not available to a single entity and is decentralized among a large number of users, each holding only a limited local view of the whole graph. Collecting the local views for analytics of decentralized social graphs raises critical privacy concerns, as they encode private information about the social interactions among individuals. In this paper, we design, implement, and evaluate PrivGED, a new system aimed at privacy-preserving analytics over decentralized social graphs. PrivGED focuses on the support for eigendecomposition, a popular and fundamental graph analytics task that produces eigenvalues/eigenvectors over the adjacency matrix of a social graph and benefits various practical applications. PrivGED is built from a delicate synergy of insights on graph analytics, lightweight cryptography, and differential privacy, allowing users to securely contribute their local views on a decentralized social graph for a cloud-based eigendecomposition analytics service while gaining strong privacy protection. Extensive experiments over real-world social graph datasets demonstrate that PrivGED achieves accuracy comparable to the plaintext domain, with practically affordable performance superior to prior art.

Index Terms:

Decentralized social graph analytics, cloud computing, security, privacy preservation

1 Introduction

Analytics over information-rich social graphs allows the extraction of valuable and impactful knowledge and insights for many fields like community detection, fraud detection, and interest mining [1, 2]. Social graph analytics, however, becomes quite challenging when the social graph is not available to a single entity and presented in a decentralized manner, where each user only holds a limited local view about the whole social graph, and the complete social graph is formed by their collective views. Decentralized social graphs can arise in many practical applications [3, 4, 5, 6, 7]. For example, in a phone network, each user has his own contact list and the collective contact lists of all users form a social graph in a decentralized manner [4].

Collecting individual users’ local views for analytics in the setting of decentralized social graphs can raise critical privacy concerns, as these local views encode sensitive information regarding the social interactions among individuals [4, 8]. Users may thus be reluctant to engage in such analytics if their local views gain no protection. It is therefore of critical importance that security be embedded in analytics over decentralized social graphs from the very beginning, so that valuable knowledge and insights can be extracted without compromising the privacy of individual users. Among others, one popular and fundamental graph analytics task is eigendecomposition, which we focus on as a concrete instantiation in this paper. Eigendecomposition-based social graph analytics works on the adjacency matrix associated with a social graph to yield eigenvalues/eigenvectors, and can benefit various applications, such as community structure detection [9], finding important members [10], and social graph partitioning [9] (see Section 2.1 for more details on applications).

In the literature, little work [11, 12, 8] has been done regarding privacy-preserving eigendecomposition on graphs. Some works [11, 12] focus on publishing adjacency matrices with differential privacy while preserving their eigenvalues/eigenvectors. Yet these works operate with centralized social graphs, where the social graph is available to a single entity and processed in the plaintext domain. The most related (state-of-the-art) work to ours is due to Sharma et al. [8], who propose a method PrivateGraph that works under a decentralized social graph setting and aims to provide privacy protection for individuals’ local views. However, PrivateGraph is not quite satisfactory due to the following downsides in functionality and security. Firstly, PrivateGraph only supports eigendecomposition on undirected graphs (via the Lanczos method [13]), but many social graphs in practice are directed [14], which cannot be supported via the Lanczos method. Secondly, it requires some users to expose the number of their friends, posing a threat to their privacy [15]. Thirdly, it requires frequent online interactions between the cloud that coordinates the eigendecomposition based analytics task and the entity that requests the eigenvalues/eigenvectors. Therefore, how to achieve privacy-preserving eigendecomposition-based analytics over decentralized social graphs is still challenging and remains to be fully explored.

In light of the above, in this paper, we design, implement, and evaluate PrivGED, a new system that allows privacy-preserving analytics over decentralized social graphs with eigendecomposition. Leveraging the emerging cloud-empowered graph analytics paradigm, PrivGED allows a set of users to securely contribute their local views on a decentralized social graph for an eigendecomposition analytics service empowered by the cloud, while ensuring strong protection on individual local views.

We start by considering how to enable the individual local views to be securely collected so as to form the (encrypted) adjacency matrix for eigendecomposition on the cloud. Each row vector in the adjacency matrix stores the local view of a user. Targeting security assurance as well as high efficiency, PrivGED resorts to a lightweight cryptographic technique, additive secret sharing (ASS) [16], for fast encryption of the elements in local view vectors. However, simply applying ASS over each user’s complete vector is inefficient because social graphs are usually large-scale and sparse [14], leading to many zero elements in local view vectors that incur undesirable performance overheads. To tackle this problem, PrivGED develops techniques that exploit the benefits of graph sparsity for efficiency while protecting the privacy of users’ private social relationships, through a delicate synergy of sparse representation, local differential privacy (LDP) [4], and function secret sharing (FSS) [17, 18] techniques. As opposed to PrivateGraph [8], PrivGED does not reveal any users’ exact node degree information.

Subsequently, we consider how to enable eigendecomposition to be securely performed on the cloud over the formed encrypted adjacency matrix. We first make two important practical observations: i) Usually only the top-$k$ eigenvalues/eigenvectors ($k \ll N$; $N$ is the number of users and determines the size of the adjacency matrix) are needed in practice [19, 10, 9, 20, 21, 22, 23]; ii) The desired top-$k$ eigenvalues/eigenvectors can be derived from the complete eigenvalues/eigenvectors of a smaller matrix reduced from the original matrix under adequate dimension reduction methods, among which the most popular ones are the Arnoldi method [24] (for general (possibly non-symmetric) matrices) and the Lanczos method [13] (for symmetric matrices). Therefore, PrivGED first introduces effective techniques, which tackle the challenging square root and division operations in the secret sharing domain, to securely realize the Arnoldi method and the Lanczos method. This allows PrivGED to flexibly work on both undirected and directed graphs. PrivGED then further provides techniques for secure realization of the widely used QR algorithm [25] over the dimension-reduced matrix so as to produce the desired eigenvalues/eigenvectors in encrypted form. We reformulate the plaintext QR algorithm to ease computation in the ciphertext domain and optimize the processing of secret-shared matrix multiplications for high performance. We highlight our main contributions below:

• We present PrivGED, a new system supporting privacy-preserving analytics over decentralized social graphs with eigendecomposition.

• We develop techniques for secure collection of individual local views on the decentralized social graph, which exploit the benefits of graph sparsity for efficiency while protecting the privacy of individual’s social relationships.

• We develop techniques for securely realizing the Arnoldi/Lanczos methods and the QR algorithm, so as to fully support the processing pipeline of secure eigendecomposition on the cloud.

• We formally analyze the security of PrivGED, implement it with 2000 lines of Python code, and conduct an extensive evaluation over three real-world datasets. The results demonstrate that PrivGED achieves accuracy comparable to the plaintext domain, with practically affordable performance superior to prior art.

The rest of this paper is organized as follows. Section 2 discusses the related work. Section 3 introduces preliminaries. Section 4 presents the problem statement. Section 5 and Section 6 give the detailed design. The privacy and security analysis is presented in Section 7. We present experiment results in Section 8 and conclude this paper in Section 9.

2 Related Work

2.1 Graph Analytics via Eigendecomposition

Graphs can characterize the complex inter-dependency among entities, and are used in various applications, such as social networks [14] and webpage networks [20]. As one popular and fundamental graph analytics task, eigendecomposition works on the adjacency matrix to yield eigenvalues/eigenvectors, and can benefit various applications [19, 10, 9, 26, 20, 21, 22]. For example, eigendecomposition-based graph analytics can greatly benefit community detection through the following ways: 1) finding the community structure based on the eigenvectors [19]; 2) identifying and characterizing nodes importance to the community according to the relative change in the eigenvalues after removing them [10]; 3) partitioning a social graph based on its top-2 eigenvectors [9]. Another important application is PageRank [26, 20, 21, 22], which is one of the best-known ranking algorithms in web search. PageRank measures the importance of website pages by computing the principal eigenvector of the matrix describing the hyperlinks of the website pages. In addition, the second eigenvector can be used to detect a certain type of link spamming [23]. However, all of them consider the execution of eigendecomposition in the plaintext domain without privacy protection.

2.2 Privacy-Preserving Graph Analytics

There exist a variety of designs that aim to securely perform certain graph analytics tasks. Some works [27, 28, 29] focus on privacy-preserving training of graph neural networks (GNN) based on the federated learning paradigm [30], which aim to train GNN models across multiple clients holding local datasets (e.g., spatio-temporal datasets [27] or graphs [28, 29]) in such a way that the datasets are kept local. The work [27] focuses on GNNs over decentralized spatio-temporal data, and has the clients exchange model updates with the server in cleartext. In contrast, the works [28, 29] focus on graph datasets and design privacy-preserving mechanisms to protect the individual model updates.

There has been a line of work [31, 32, 33, 34, 35] aimed at the support for graph analytics with cryptographic methods like secure multi-party computation techniques and searchable encryption. The main focus of this line of work has been on supporting different kinds of graph queries under different scenarios in a secure manner. Wu et al. [31] propose a protocol for privacy-preserving shortest path queries in a two-party setting, where a client holds a query and a server holds a plaintext graph, based on cryptographic techniques including private information retrieval, garbled circuits, and oblivious transfer. In contrast, the works [34, 35] focus on the support for privacy-preserving shortest path queries in an outsourcing setting, where private queries need to be executed over encrypted graphs outsourced to the cloud. The work [32] designs protocols that can support privacy-preserving shortest distance queries and maximum flow queries over outsourced encrypted graphs. These works rely on the combination of searchable encryption (e.g., order-revealing encryption), homomorphic encryption, and/or garbled circuits. In [33], Araki et al. consider a scenario where all nodes and edges of a graph are secret-shared between three servers and devise protocols for breadth-first search and maximal independent set queries, based on secret sharing and secure shuffling. The above works all target graph analytics tasks that are substantially different from the one considered in this paper.

| Property | PrivateGraph [8] | PrivGED |
| --- | --- | --- |
| Undirected graph supported | ✓ | ✓ |
| Directed graph supported | ✗ | ✓ |
| All users’ degrees protected | ✗ | ✓ |
| Analyst allowed to stay offline | ✗ | ✓ |
| Lightweight cryptography | | ✓ |

Another line of work [11, 12] focuses on publishing graph matrices with differential privacy while preserving their eigenvalues/eigenvectors. These works operate under the setting of centralized social graphs, where the social graph is held by a single entity and processed in the plaintext domain. Some works consider the scenario of decentralized social graphs, and focus on the privacy-preserving support for different tasks with differential privacy, such as estimating subgraph counts [3] and generating representative synthetic social graphs [4].

The state-of-the-art design that is most related to ours is PrivateGraph [8], which is also aimed at eigendecomposition analytics over decentralized social graphs with privacy protection. However, as mentioned above, PrivateGraph is subject to several crucial downsides in terms of functionality and security, which greatly limit its practical usability. In light of this gap, we present a new system design, PrivGED, for privacy-preserving eigendecomposition analytics on decentralized social graphs. Compared to PrivateGraph, PrivGED is advantageous in that it (i) supports both directed and undirected social graphs (via secure realizations of both the Arnoldi method and the Lanczos method), (ii) does not reveal any users’ exact degree information, and (iii) fully exploits the cloud to free the analyst from staying online for active and frequent interactions and from conducting a large amount of local intermediate and post processing. In particular, as reported in [8], to obtain the eigenvalues/eigenvectors, the analyst must spend 0.2 hours as well as communicate 10 GB with the cloud. In contrast, PrivGED allows the analyst to directly receive the final eigenvalues/eigenvectors. Table I summarizes the prominent advantages of PrivGED over PrivateGraph.

3 Preliminaries

3.1 Eigendecomposition-based Graph Analytics

A graph comprises a set of nodes with a corresponding set of edges which connect the nodes. The edges may be directed or undirected and may have weights associated with them as well. Eigendecomposition works on the adjacency matrix associated with a graph to yield eigenvalues/eigenvectors. A complete eigendecomposition on an $N \times N$ matrix poses a considerable time complexity of $O(N^3)$, which indeed also results in unnecessary cost for a large $N$ since only the top-$k$ ($k \ll N$) eigenvalues/eigenvectors are used in most eigendecomposition-based graph analysis tasks [19, 10, 9, 20, 21, 23, 22]. In practice, given a large-scale adjacency matrix $\mathbf{A}$, to calculate its top-$k$ eigenvalues/eigenvectors, the first step is to reduce its dimension from $N \times N$ to $M \times M$ ($M$ is usually slightly larger than $k$), producing a new matrix $\mathbf{T}$ for further processing. The most popular dimension reduction methods are the Arnoldi method (Algorithm 1) [24] and the Lanczos method (Algorithm 2) [13], which work on general (possibly non-symmetric) matrices and symmetric matrices, respectively. After dimension reduction, the QR algorithm [25] is usually used to efficiently calculate the complete eigenvalues/eigenvectors of $\mathbf{T}$. Finally, the top-$k$ eigenvalues of $\mathbf{T}$ are used to represent the top-$k$ eigenvalues of $\mathbf{A}$, and each corresponding eigenvector $\mathbf{y}$ of $\mathbf{T}$ can be transformed to an eigenvector of $\mathbf{A}$ by $\mathbf{V}\mathbf{y}$, where $\mathbf{V}$ is determined by line 11, Algorithm 1 or line 11, Algorithm 2.
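To make the plaintext pipeline concrete, the following is a minimal sketch for the undirected (symmetric) case: a Lanczos reduction followed by an unshifted QR iteration on the reduced matrix. It is only an illustration and not the paper's Algorithm 2. The names `lanczos`, `qr_algorithm`, and `top_k_eigen`, the choice of the first unit vector as the start vector, the full re-orthogonalization, and the fixed iteration count are all assumptions made here for readability.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(A, v):
    return [dot(row, v) for row in A]

def matmul(A, B):
    cols = list(zip(*B))
    return [[dot(row, col) for col in cols] for row in A]

def lanczos(A, m):
    """Reduce a symmetric matrix A to a tridiagonal T (at most m x m).
    Returns T and the list V of orthonormal basis vectors."""
    n = len(A)
    V = [[1.0] + [0.0] * (n - 1)]        # start vector e_1 (an assumption)
    alphas, betas = [], []
    for j in range(m):
        w = matvec(A, V[j])
        alphas.append(dot(w, V[j]))
        for u in V:                       # full re-orthogonalization for stability
            c = dot(w, u)
            w = [wi - c * ui for wi, ui in zip(w, u)]
        beta = math.sqrt(dot(w, w))       # square root, then division below
        if j == m - 1 or beta < 1e-12:
            break
        betas.append(beta)
        V.append([wi / beta for wi in w])
    size = len(alphas)
    T = [[0.0] * size for _ in range(size)]
    for i in range(size):
        T[i][i] = alphas[i]
    for i in range(len(betas)):
        T[i][i + 1] = T[i + 1][i] = betas[i]
    return T, V

def qr_decompose(M):
    """Gram-Schmidt QR factorization of a square, non-singular matrix."""
    n = len(M)
    q_cols, R = [], [[0.0] * n for _ in range(n)]
    for j, col in enumerate(zip(*M)):
        v = list(col)
        for i, q in enumerate(q_cols):
            R[i][j] = dot(q, v)
            v = [vi - R[i][j] * qi for vi, qi in zip(v, q)]
        R[j][j] = math.sqrt(dot(v, v))
        q_cols.append([vi / R[j][j] for vi in v])
    return [list(row) for row in zip(*q_cols)], R

def qr_algorithm(T, iters=300):
    """Unshifted QR iteration T <- R Q; returns eigenvalues and eigenvectors (as columns)."""
    n = len(T)
    Tk = [row[:] for row in T]
    Qacc = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(iters):
        Q, R = qr_decompose(Tk)
        Tk = matmul(R, Q)
        Qacc = matmul(Qacc, Q)
    return [Tk[i][i] for i in range(n)], Qacc

def top_k_eigen(A, k, m):
    """Top-k eigenpairs of symmetric A via an m-step Lanczos reduction."""
    T, V = lanczos(A, m)
    vals, Y = qr_algorithm(T)
    order = sorted(range(len(vals)), key=lambda i: -vals[i])[:k]
    pairs = []
    for i in order:
        y = [row[i] for row in Y]                                  # eigenvector of T
        x = [sum(V[j][r] * y[j] for j in range(len(y))) for r in range(len(A))]  # x = V y
        pairs.append((vals[i], x))
    return pairs
```

For instance, calling `top_k_eigen(A, 1, 4)` on the adjacency matrix of a four-node graph made of a triangle with one pendant node returns its principal eigenpair. The unshifted iteration is chosen for brevity; it assumes the eigenvalues of the reduced matrix are non-zero and have distinct magnitudes, which a production solver would not rely on.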

3.2 Local Differential Privacy

Compared to the traditional differential privacy model [36] that assumes a trusted data collector which can collect and see raw data, the recently emerging LDP model [37] considers the data collector to be untrusted, so each user only reports perturbed data with calibrated noise added. The formal definition of $(\epsilon, \delta)$-LDP is as follows.

Definition 1.

A randomized mechanism $\mathcal{M}$ satisfies $(\epsilon, \delta)$-LDP, if and only if for any inputs $x$ and $x'$, we have: $\forall y \in Range(\mathcal{M}): \Pr[\mathcal{M}(x) = y] \le e^{\epsilon} \cdot \Pr[\mathcal{M}(x') = y] + \delta$,

where $Range(\mathcal{M})$ denotes the set of all possible outputs of $\mathcal{M}$, $\epsilon$ is the privacy budget, and $\delta$ is a privacy parameter.

The Laplace distribution is a widely used choice for drawing the noise, and is formally defined as follows.

Definition 2.

3.3 Additive Secret Sharing

Given a private value $x \in \mathbb{Z}_{2^l}$, ASS in a two-party setting works by splitting it into two secret shares $\langle x \rangle_1$ and $\langle x \rangle_2$ such that $x = \langle x \rangle_1 + \langle x \rangle_2$ [16]. Each share alone reveals no information about $x$. We denote by $[\![x]\!]$ the ASS of $x$ for short. It is noted that if $l = 1$, we say the secret sharing is binary sharing, denoted as $[\![x]\!]^B$, and otherwise arithmetic sharing, denoted as $[\![x]\!]^A$. Given a public constant $c$, and the secret sharings $[\![x]\!]$ and $[\![y]\!]$, addition/subtraction and scalar multiplication can be performed without interaction among the two parties that hold the shares respectively, while multiplication requires the two parties to have one round of online communication with the use of Beaver triples which can be prepared offline. It is noted that addition and multiplication over $\mathbb{Z}_2$ are equivalent to XOR and AND respectively.
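The operations described above can be sketched in a few lines. The following is an illustrative two-party simulation in a single process, not PrivGED's implementation: the ring size `L_BITS = 32` and all function names are assumptions, and the "exchange" of masked values in `mul` is simulated by simply adding both parties' contributions.

```python
import secrets

L_BITS = 32              # assumed bit length l of the ring Z_{2^l}
MOD = 1 << L_BITS

def share(x):
    """Split x into two additive shares with x = s1 + s2 (mod 2^l)."""
    s1 = secrets.randbelow(MOD)
    return s1, (x - s1) % MOD

def reconstruct(s):
    return (s[0] + s[1]) % MOD

def add(x, y):
    """Addition is local: each party adds the two shares it holds."""
    return (x[0] + y[0]) % MOD, (x[1] + y[1]) % MOD

def scale(c, x):
    """Multiplication by a public constant c is local as well."""
    return (c * x[0]) % MOD, (c * x[1]) % MOD

def beaver_triple():
    """Offline phase: shares of random a, b and of c = a * b."""
    a, b = secrets.randbelow(MOD), secrets.randbelow(MOD)
    return share(a), share(b), share(a * b % MOD)

def mul(x, y, triple):
    """Online phase: one round to open e = x - a and f = y - b."""
    a, b, c = triple
    e = (x[0] - a[0] + x[1] - a[1]) % MOD    # both parties exchange and open e
    f = (y[0] - b[0] + y[1] - b[1]) % MOD    # ... and f
    z1 = (e * f + e * b[0] + f * a[0] + c[0]) % MOD   # one party also adds the public e * f
    z2 = (e * b[1] + f * a[1] + c[1]) % MOD
    return z1, z2
```

As a usage example, `reconstruct(mul(share(6), share(7), beaver_triple()))` yields 42, since $xy = (e + a)(f + b) = ef + eb + fa + ab$ and every term on the right is either public or available in shared form.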

3.4 Function Secret Sharing

FSS [39] is a low-interaction secret sharing for secure computation, presenting prominent advantages in online communication and round complexity compared to other alternative techniques, such as garbled circuits [40] or ASS. From a high-level point of view, a two-party FSS-based approach to a private function $f$ consists of a pair of probabilistic polynomial time (PPT) algorithms: (i) $Gen(1^{\lambda}, f)$: given a security parameter $\lambda$ and a function description $f$, output two succinct FSS keys $(k_1, k_2)$, each for one party. (ii) $Eval(k_b, x)$: given an FSS key $k_b$ ($b \in \{1, 2\}$) and an evaluation point $x$, output the share of the function evaluation result. The security of FSS ensures that an adversary learning only one FSS key learns no information about the function $f$ and output $f(x)$.

4 System Overview

4.1 Architecture

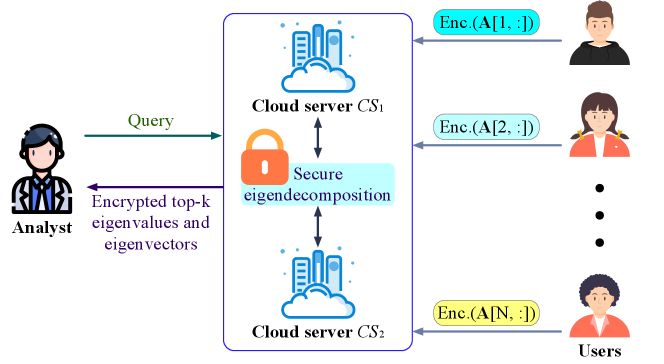

Fig. 1 illustrates PrivGED’s system architecture. There are three kinds of entities: the users $U_i$ ($i \in [1, N]$), the cloud, and the analyst. The users and the social relationship (friendship for simplicity) among them constitute a (decentralized) social graph, where each $U_i$ represents a graph node, the friendship between any two users indicates the existence of an edge, and the number of each $U_i$’s associated friends is the degree of the corresponding graph node. Consider as a concrete example a phone network [4], where each $U_i$ has a limited number of phone numbers in his contact list and thus a limited local view of the social graph.

The decentralized social graph can be characterized by an adjacency matrix $\mathbf{A}$ of size $N \times N$, where each row $\mathbf{A}[i,:]$ ($i \in [1, N]$) indicates $U_i$’s local view. For example, in an unweighted social graph, $\mathbf{A}[i,j] = 1$ may indicate that $U_i$ and $U_j$ are friends; in a weighted social graph, $\mathbf{A}[i,j] = w$ may indicate the degree of intimacy between $U_i$ and $U_j$ by a value $w$. The users are willing to allow analytics over their federated data of local views to produce analytical results for the analyst. Our focus in this paper is the analytics task of eigendecomposition, which plays a vital role in graph analytics and has many applications as aforementioned. However, due to privacy concerns, each $U_i$ is not willing to disclose his private social relationships throughout the analytical process over the data federation, so the enforcement of data protection is demanded.

The graph analytics service is empowered by the cloud for well understood benefits. In PrivGED, the power of the cloud is split into two cloud servers from different trust domains which can be hosted by different service providers in practice. Such a multi-server model has become increasingly popular in recent years for security designs in various domains, in both academia [32, 41, 42, 43, 44, 45, 46, 47] and industry [48, 49]. The adoption of such a model in PrivGED follows this trend. The two cloud servers in PrivGED, denoted as $CS_1$ and $CS_2$, collaboratively perform the analytics task of eigendecomposition without seeing each $U_i$’s data, and produce encrypted results (the top-$k$ eigenvalues/eigenvectors) which are delivered to the analyst on demand. At a high level, PrivGED proceeds through two phases: (i) Secure collection of decentralized social graph data. In this phase, each user $U_i$’s local view data regarding the social graph is collected by the cloud servers in encrypted form so as to form the matrix for eigendecomposition, through sparsity-aware and privacy-preserving techniques developed in PrivGED. (ii) Secure eigendecomposition. After the encrypted adjacency matrix is adequately formed at the cloud, the cloud servers collaboratively perform secure eigendecomposition, through a suite of customized secure protocols developed in PrivGED.

4.2 Threat Model

Along the system workflow in PrivGED, we consider that the primary threats come from the cloud entity empowering the eigendecomposition-based graph analytics service. Similar to most prior security designs in the two-server setting [50, 51, 41], we assume a semi-honest and non-colluding adversary model where $CS_1$ and $CS_2$ honestly follow the protocol specification of PrivGED, but may individually attempt to learn private information about individual users’ social connections in the decentralized social graph and the analytics result. Note that each user’s private social connections with other entities in the graph are reflected by the non-zero elements in his local view vector, as introduced above. So, for the privacy of individual users, PrivGED aims to conceal the sensitive information regarding the non-zero elements in each user $U_i$’s local view vector $\mathbf{A}[i,:]$, namely their positions, values, and number.

5 Secure Collection of Decentralized Social Graph Data

5.1 Overview

In this phase, each user $U_i$ provides his local view data in protected form to the service. For high efficiency, PrivGED resorts to the lightweight ASS technique for encryption of the elements in $\mathbf{A}[i,:]$. A simple method is to have each $U_i$ directly apply ASS over his complete $\mathbf{A}[i,:]$ of length $N$. However, such a simple method is clearly inefficient due to the sparsity of the (decentralized) social graph. According to Facebook’s statistics [14], on average a user has 130 friends in a social network, which is far less than $N$ (e.g., hundreds of thousands). This leads to high sparsity, indicating that the complete $\mathbf{A}[i,:]$ will be filled with many zeros. So the simple method will incur significant cost on the user side as well as pose unnecessary workload in the subsequent secure eigendecomposition process.

To remedy this, a plausible approach is to leverage sparse representation. Specifically, $U_i$ applies ASS only over each nonzero element $\mathbf{A}[i,j]$ at location $j$, and sends the resulting shares together with their locations to $CS_1$ and $CS_2$. Such an approach, however, leads to prominent privacy leakages: (i) The number of nonzero elements (i.e., the degree) indicates the number of $U_i$’s friends, which can be used in inferring $U_i$’s private information [15]. (ii) The presence of a nonzero element $\mathbf{A}[i,j]$ implies the presence of the relationship between $U_i$ and $U_j$, which can also be used in inference attacks [15]. (iii) If the social graph is unweighted (i.e., each element is 0 or 1), the presence of a nonzero element implies $\mathbf{A}[i,j] = 1$, revealing the data to $CS_1$ and $CS_2$.

Therefore, the challenge here is how to preserve the benefits of sparsity while protecting the privacy of individual user’s social relationships. Meanwhile, the effectiveness of subsequent eigendecomposition should not be affected.

Our key insight is to delicately trade off (node degree) privacy for efficiency, by having each $U_i$ blend in some dummy edges with zero weights at random empty locations in $\mathbf{A}[i,:]$, and apply ASS over the weights of both true and dummy edges, inspired by [8]. Under ASS, even encrypting the same (zero) value multiple times will result in different shares (ciphertexts) indistinguishable from uniformly random values. Therefore, the dummy edges cannot be distinguished from the true edges, and they do not affect the effectiveness of the subsequent secure eigendecomposition process.
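The idea of blending zero-weight dummy edges into the sparse representation can be sketched as follows. This is a simplified illustration under stated assumptions: the function name `encrypt_local_view`, the ring $\mathbb{Z}_{2^{32}}$, and the use of Python's non-cryptographic `random` module for choosing dummy locations are all choices made here, and the number of dummy edges is taken as a given parameter rather than drawn from a noise distribution.

```python
import random
import secrets

MOD = 1 << 32    # assumed ring Z_{2^32} for arithmetic sharing

def share(x):
    """Additive secret sharing of x over Z_{2^32}."""
    s1 = secrets.randbelow(MOD)
    return s1, (x - s1) % MOD

def encrypt_local_view(true_edges, n, num_dummy, rng=random):
    """true_edges: {neighbor index: weight}; n: number of users;
    num_dummy: number of zero-weight dummy edges to blend in.
    Returns the (location, share) lists destined for the two cloud servers."""
    empty = [j for j in range(n) if j not in true_edges]
    dummies = rng.sample(empty, num_dummy)            # random empty locations
    entries = list(true_edges.items()) + [(j, 0) for j in dummies]
    rng.shuffle(entries)                              # hide which entries are real
    to_cs1, to_cs2 = [], []
    for j, w in entries:
        s1, s2 = share(w % MOD)
        to_cs1.append((j, s1))
        to_cs2.append((j, s2))
    return to_cs1, to_cs2
```

Each server sees only locations and uniformly random-looking shares, so a share of a dummy zero is indistinguishable from a share of a true weight, while the reconstructed matrix row is unchanged because the dummy entries sum to zero.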

What remains challenging here is how to appropriately set the number of dummy edges so as to balance the trade-off between efficiency and privacy. Too many dummy edges will impair the sparsity and increase the system overhead, while too few dummy edges will result in weaker privacy protection. Therefore, a tailored security design is demanded to provide a theoretically sound approach by which each $U_i$ can select an adequate number of dummy edges. Our main idea is to rely on LDP so as to make the leakage about the node degrees differentially private. In what follows, we start with some basic approaches and discuss their limitations. Then we present our tailored solution.

5.2 Basic Approaches

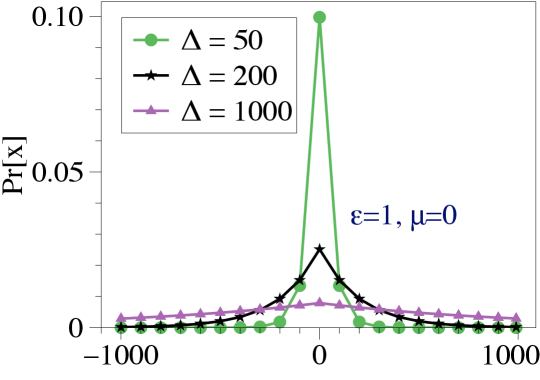

A basic approach based on LDP can work as follows. Each $U_i$ draws a noise $\eta_i$ from the discrete Laplace distribution, and then blends $\eta_i$ dummy edges with zero values at random empty locations in $\mathbf{A}[i,:]$, followed by encrypting the weights of both true and dummy edges by ASS. In this way, a differential privacy guarantee on the node degree can be achieved, and the presence of edges is disguised as well [8]. Here, the sensitivity $\Delta$ needs to be set to $d_{max} - d_{min}$, where $d_{max}$ and $d_{min}$ are the possible maximum and minimum degree in the decentralized social graph, respectively. The above basic approach, however, would result in a very large $\Delta$ (on the order of $N$ in theory) to be applied, leading to a large number of dummy edges to be added and heavily impairing the sparsity. To illustrate why this is the case, Fig. 2 shows the probability density function of the discrete Laplace distribution with different $\Delta$. It is revealed that a larger $\Delta$ leads to the shape of the density function being more uniform. This indicates that the larger the sensitivity is, the larger the probability that $U_i$ draws a large $\eta_i$ will be. In contrast, a small $\Delta$ will make the probability density function concentrated (see Fig. 2), which means that $U_i$ will draw a small $\eta_i$ with a large probability.
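The effect of the sensitivity on the drawn noise can be checked numerically. The sketch below assumes the standard zero-centered discrete Laplace (two-sided geometric) form, with probability mass proportional to $e^{-\epsilon |x| / \Delta}$; the paper's own definition may differ (for example, by shifting the mean), and the function names and the seeded generator are hypothetical choices made for reproducibility.

```python
import math
import random

def discrete_laplace(eps, sensitivity, rng):
    """Sample an integer x with Pr[x] proportional to exp(-eps * |x| / sensitivity)."""
    alpha = math.exp(-eps / sensitivity)

    def geometric():
        u = 1.0 - rng.random()                # uniform in (0, 1]
        return int(math.floor(math.log(u) / math.log(alpha)))

    # The difference of two i.i.d. geometric variables is discrete Laplace.
    return geometric() - geometric()

def mean_abs_noise(eps, sensitivity, trials=20000, seed=0):
    """Empirical mean magnitude of the noise for a given sensitivity."""
    rng = random.Random(seed)
    total = sum(abs(discrete_laplace(eps, sensitivity, rng)) for _ in range(trials))
    return total / trials
```

Comparing `mean_abs_noise(1.0, 1)` with `mean_abs_noise(1.0, 50)` shows the typical noise magnitude growing roughly in proportion to the sensitivity, which is why a graph-wide sensitivity would translate into many dummy edges per user.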

In order to achieve better efficiency, an alternative approach as proposed by [8] is to use a bin-based mechanism that provides bin-wise differential privacy rather than graph-wise differential privacy. The main idea is to partition users into several bins, each of which contains an approximately equal number of users whose degrees are within a small interval. As such, all users in the same bin can use a much smaller sensitivity, namely the width of that interval. We note that while the idea proposed by Sharma et al. [8] is useful, their design to instantiate such an idea is not satisfactory and has crucial downsides. Firstly, it requires some (sampled) users to open their degrees to estimate the degree histogram of the social graph so as to split users into bins, harming the privacy of the sampled users. Secondly, to avoid edge deletion when the drawn noise is negative, it lets all users add a large offset to the drawn noise, making a notable impact on the sparsity. In addition, no formal analysis regarding the differential privacy guarantee is provided.

5.3 Our Approach

Based on the aforementioned idea of bin-based LDP, we design a new protocol for secure collection of decentralized social graph data, which does not expose any users’ exact node degree information, as opposed to PrivateGraph [8]. Meanwhile, our protocol does not require users to add a large offset to the noises, and thus is much more advantageous in maintaining the sparsity. We also later provide formal analysis on the differential privacy guarantee. Our protocol for secure collection of decentralized social graph data is comprised of the following ingredients: (i) secure degree histogram estimation, (ii) secure binning map generation, and (iii) local view data encryption.

5.3.1 Secure Degree Histogram Estimation

It is challenging for $CS_1$ and $CS_2$ to obliviously estimate the degree histogram without knowing any users’ degrees. In particular, given a public degree $d$ and a specific user’s degree $d_i$, $CS_1$ and $CS_2$ need to efficiently check whether $d = d_i$ with both $d_i$ and the checking result being encrypted. To counter this challenge, we devise a tailored construction based on function secret sharing [39], a recently developed cryptographic primitive that allows the generation of compact function shares and secure evaluation with the function shares. In particular, we take advantage of distributed point function (DPF) [17], an FSS scheme for the point function $f_{\alpha, \beta}(x)$ which outputs $\beta$ if $x = \alpha$ and otherwise outputs 0. Formally, a two-party DPF, parameterized by a finite Abelian group $\mathbb{G}$ ($\mathbb{G} = \mathbb{Z}_{2^l}$ in our design), consists of the following algorithms: (i) $Gen(1^{\lambda}, \alpha, \beta)$: given a security parameter $\lambda$, a string $\alpha$, and a value $\beta \in \mathbb{G}$, outputs two succinct DPF keys $(k_1, k_2)$ representing the function shares. (ii) $Eval(k_b, x)$: given a DPF key $k_b$ ($b \in \{1, 2\}$) and an input $x$, outputs the share $\langle f_{\alpha, \beta}(x) \rangle_b$.

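Building on top of DPF, we develop a technique for secure degree histogram estimation, as presented in Algorithm 3. As directly engaging all users for building the degree histogram will lead to high performance overhead, PrivGED adopts a sampling strategy following [8], where a subset $\mathcal{S}$ of the users is randomly sampled to participate. Each $U_i \in \mathcal{S}$ generates DPF keys $(k_1^i, k_2^i)$ based on his degree $d_i$ (line 1 in Algorithm 3), where $\alpha$ and $\beta$ are set to $d_i$ and $1$ respectively. Each $U_i \in \mathcal{S}$ then sends $k_1^i$ to $CS_1$ and $k_2^i$ to $CS_2$. Utilizing the DPF keys, each $CS_b$ ($b \in \{1, 2\}$) can evaluate $Eval(k_b^i, d)$ for all possible degrees $d$. By summing these evaluation results (line 6 in Algorithm 3), $CS_1$ and $CS_2$ can obtain exactly the encrypted number of sampled users whose degree is equal to $d$. Correctness holds since $\sum_{U_i \in \mathcal{S}} \left( Eval(k_1^i, d) + Eval(k_2^i, d) \right) = \sum_{U_i \in \mathcal{S}} f_{d_i, 1}(d) = |\{U_i \in \mathcal{S} : d_i = d\}|$.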
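The data flow of the histogram estimation can be illustrated with a deliberately naive stand-in for the DPF. In the sketch below, a "key" is simply an additive sharing of the whole truth table of the point function, so it is linear in the domain size rather than succinct; a real DPF [17] achieves the same input/output behaviour with much shorter keys. The names `naive_dpf_gen`, `naive_dpf_eval`, and `histogram_share`, the ring $\mathbb{Z}_{2^{32}}$, and the sample degrees are all hypothetical.

```python
import secrets

MOD = 1 << 32    # assumed output group Z_{2^32}

def naive_dpf_gen(alpha, beta, domain_size):
    """Toy stand-in for DPF key generation: additively share the full truth
    table of the point function that maps alpha to beta and everything else to 0."""
    k1 = [secrets.randbelow(MOD) for _ in range(domain_size)]
    k2 = [((beta if x == alpha else 0) - k1[x]) % MOD for x in range(domain_size)]
    return k1, k2

def naive_dpf_eval(key, x):
    """Return this party's share of the point function evaluated at x."""
    return key[x]

def histogram_share(keys, domain_size):
    """Run locally by one cloud server over the keys received from the sampled users."""
    return [sum(naive_dpf_eval(k, d) for k in keys) % MOD for d in range(domain_size)]

degrees = [2, 3, 2, 0, 5]         # degrees of the sampled users (hypothetical)
max_degree = 5
pairs = [naive_dpf_gen(d, 1, max_degree + 1) for d in degrees]
h1 = histogram_share([p[0] for p in pairs], max_degree + 1)    # held by the first server
h2 = histogram_share([p[1] for p in pairs], max_degree + 1)    # held by the second server
histogram = [(a + b) % MOD for a, b in zip(h1, h2)]            # opened here only to check
```

Neither `h1` nor `h2` alone reveals anything about the degrees; only their sum gives the histogram, and in PrivGED that sum stays in secret-shared form for the subsequent binning step.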

5.3.2 Secure Binning Map Generation

With the encrypted degree histogram produced on the cloud side, we now introduce how to enable to generate an encrypted binning map, based on which each user can get the sensitivity value for his use in drawing the noise. The binning strategy underlying our secure design follows prior art [8], leading to each bin containing an approximately equal number of users whose degrees are within a small interval. It is noted that unlike our design operating in the ciphertext domain, [8] operates in plaintext domain with exposed raw degrees of sampled users for building the histogram and producing the binning map.

Our secure design is shown in Algorithm 4, which inputs the encrypted degree histogram , the number of bins, and the number of the sampled users, and then outputs the encrypted binning map (i.e., an encrypted bit-string under binary secret sharing), where indicates the boundary of a bin. For example, given , indicates that the users are partitioned into two bins: users whose degree and users whose degree . After obliviously computing , send it to all users, and then each of them can judge to which bin he belongs based on his own degree locally.

As shown in Algorithm 4, first calculate the public bin size based on and (line 1), and then initialize an accumulator . After that, add () to in turn (line 4). After each addition, obliviously evaluate and add the comparison result (in binary secret sharing) to . Specifically, if , , indicating a bin boundary; otherwise, . After that, based on , obliviously evaluate whether to reset the accumulator or not. Specifically, if , a bin boundary appears, and thus needs to be reset to 0 for the next bin; otherwise, remains unchanged. This step is given in line 6, where “” denotes not operation which can be achieved by letting one of flip its share or locally. Finally, output .
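The control flow just described can be written down as a plaintext reference, which makes clear what the oblivious version has to compute. This is not Algorithm 4 itself: it operates on cleartext values, the comparison is taken to be "accumulator at least the bin size" (an assumption consistent with the later discussion of secure comparison), the bin size is obtained by integer division (also an assumption), and the helper `bin_of` is a hypothetical illustration of how a user might read the map.

```python
def binning_map(histogram, num_bins, num_sampled):
    """Plaintext reference: bit d of the result is 1 when degree d closes a bin."""
    bin_size = num_sampled // num_bins           # public bin size
    acc, bits = 0, []
    for count in histogram:                      # counts for degree 0, 1, 2, ...
        acc += count
        boundary = 1 if acc >= bin_size else 0   # the comparison evaluated obliviously in PrivGED
        bits.append(boundary)
        acc = acc * (1 - boundary)               # reset the accumulator at a boundary
    return bits

def bin_of(degree, bits):
    """A user locates his bin by counting the boundaries strictly below his degree."""
    return sum(bits[:degree])
```

With the histogram `[1, 2, 1, 0, 3, 1]` over eight sampled users and two bins, the map is `[0, 0, 1, 0, 0, 1]`: degrees 0 to 2 form the first bin and degrees 3 to 5 the second. In the secure version both the comparison bit and the reset multiplication are carried out on secret shares, so the servers learn neither the counts nor the boundaries.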

In Algorithm 4, the addition operations are easily supported with secret sharing, but the operation is not directly supported. What we need here essentially is secure comparison in the secret sharing domain. From the very recent works [18, 43], we identify two primitives suited to allow secure comparison in the secret sharing domain, and introduce two approaches accordingly to allow the realization of secure binning map generation. The first approach is based on FSS [18], which is more suited for high-latency network scenarios because it requires minimal rounds of interactions (at the cost of more local computation). The second approach is based on ASS [43], which requires a small amount of local computation but higher online communication and round complexities, fitting better into low-latency network scenarios.

FSS-based approach. We identify the state-of-the-art construction of FSS-based secure comparison from [18], which is referred to as distributed comparison function (DCF). DCF is an FSS scheme for the function which outputs if and otherwise. Formally, a two-party DCF, parameterized by two finite Abelian groups , consists of the following algorithms: (i) , which takes as input a security parameter , , and , and outputs two keys and two random values (), each for one party. (ii) , which takes as input a key and a (masked) input , and outputs . The evaluation process in DCF only requires one round of online communication, in which the two parties send to each other to reveal without leaking . The security of DCF states that if an adversary only learns one of , it learns no information about the private input and output .

We now show how PrivGED builds on the DCF for secure evaluation of . To use the DCF in PrivGED, the related public parameters can be set as follows: , , , and . With these parameters, the DCF keys and the random values can be prepared offline and distributed to respectively. Note that such offline work can be done by a third-party server in practice [18]. For the secure online evaluation of , () first exchanges to each other to reveal , and then evaluates , which will output if and otherwise. However, PrivGED requires to output if , and thus PrivGED further lets one of flip its share locally.
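The online flow (exchange masked shares, reveal the masked input, evaluate locally, flip one share) can be sketched as below. This is not the DCF construction of [18]: as a stand-in, the dealer hands each party an XOR share of the full comparison table shifted by the mask, which gives the same functionality with keys that are linear in the domain size instead of succinct. The domain size, the function names, and the use of XOR-shared output bits are assumptions.

```python
import secrets

N = 2 ** 8  # hypothetical small input domain so the tables stay tiny


def gen(alpha):
    """Dealer (offline): a mask r, shared additively, plus XOR shares of the
    table of f(x) = [x < alpha] indexed by the masked input y = x + r."""
    r = secrets.randbelow(N)
    r0 = secrets.randbelow(N)
    r1 = (r - r0) % N
    table0, table1 = [], []
    for y in range(N):
        bit = 1 if (y - r) % N < alpha else 0
        t0 = secrets.randbelow(2)
        table0.append(t0)
        table1.append(t0 ^ bit)
    return (table0, r0), (table1, r1)


def less_than(x0, x1, alpha):
    """x0, x1 are additive shares of x; returns XOR shares of [x < alpha]."""
    (k0, r0), (k1, r1) = gen(alpha)
    # Single online round: each party sends x_i + r_i, revealing y = x + r.
    y = ((x0 + r0) + (x1 + r1)) % N
    return k0[y], k1[y]


def greater_or_equal(x0, x1, alpha):
    """One party flips its share locally to obtain [x >= alpha]."""
    b0, b1 = less_than(x0, x1, alpha)
    return b0 ^ 1, b1
```

The revealed value is uniformly distributed because the mask is, which mirrors why revealing the masked input leaks nothing about the private input.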

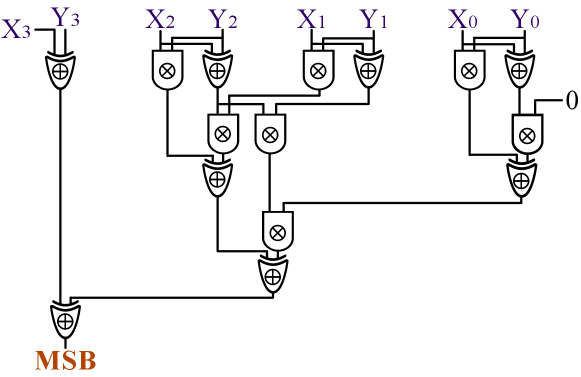

ASS-based approach. This approach is based on the idea of secure bit decomposition in the secret sharing domain [43]. Specifically, given two fixed-point integers under two’s complement representation, the most significant bit (MSB) of can indicate the relationship between and 0. Namely, if , and otherwise . Secure extraction of the MSB in the secret sharing domain can be achieved via secure realization of a parallel prefix adder (PPA) [43], as illustrated in Fig. 3. It is observed that only XOR and AND operations need to be performed in the secret sharing domain. PrivGED leverages the construction from [43], which takes the secret sharings of two values and as input, and outputs . With that construction, are able to obtain , i.e., if and otherwise. However, what is needed in PrivGED instead is that output if and otherwise. PrivGED further lets one of flip its share () locally.
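The MSB-based comparison can be illustrated with a small plaintext sketch. The two parties' shares of the difference are added bit by bit using only XOR and AND gates, and the top bit of the sum gives the comparison result. A ripple-carry adder is used here for brevity in place of the parallel prefix adder of [43]; it computes the same bit with more sequential depth. The bit length and the function names are assumptions, and the gates run in the clear rather than over secret shares.

```python
L_BITS = 16          # hypothetical bit length of the ring
MOD = 1 << L_BITS


def msb_of_sum(x, y):
    """MSB of (x + y) mod 2^L_BITS, computed with XOR and AND gates only."""
    carry = 0
    for i in range(L_BITS - 1):
        xi, yi = (x >> i) & 1, (y >> i) & 1
        carry = (xi & yi) ^ (carry & (xi ^ yi))  # majority(xi, yi, carry)
    top_x = (x >> (L_BITS - 1)) & 1
    top_y = (y >> (L_BITS - 1)) & 1
    return top_x ^ top_y ^ carry


def less_than(a_shares, b_shares):
    """Shares are additive mod 2^L_BITS; valid while |a - b| < 2^(L_BITS - 1)."""
    d0 = (a_shares[0] - b_shares[0]) % MOD  # computed locally by one party
    d1 = (a_shares[1] - b_shares[1]) % MOD  # computed locally by the other
    return msb_of_sum(d0, d1)


def greater_or_equal(a_shares, b_shares):
    """Flip the result, as one party would flip its share locally."""
    return less_than(a_shares, b_shares) ^ 1
```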

Note that the outputs of the above two approaches are in binary secret sharing. However, for the computation in line 6 of Algorithm 4, the binary secret-shared value needs to be multiplied by an arithmetic secret-shared value, i.e., . We note that this can be achieved based on an existing technique from [52], through the following steps: (i) randomly generates ( is the value length of ), and sends two messages to : . (ii) chooses if , and otherwise chooses . Therefore, the share held by is and the share held by is . (iii) For the other secret share , acts as the sender and acts as the receiver to perform steps (i) and (ii) again. At the end of the process, holds . The resulting secret sharing of the binning map can then be sent to all users for use.
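The three steps above can be sketched as follows. The sign convention of the two messages and the names are assumptions, and the receiver's selection is simulated by plain indexing; in a real run the choice would have to stay hidden from the sender, for example through a 1-out-of-2 oblivious transfer.

```python
import secrets

MOD = 2 ** 32  # hypothetical ring for the arithmetic shares


def select_mul(sender_bit, sender_val, receiver_bit):
    """Sender holds one bit share and one value share, receiver the other bit share.

    Returns additive shares (sender's, receiver's) of
    (sender_bit XOR receiver_bit) * sender_val.
    """
    r = secrets.randbelow(MOD)
    messages = [((j ^ sender_bit) * sender_val - r) % MOD for j in (0, 1)]
    chosen = messages[receiver_bit]  # simulated selection (assumption: OT)
    return r, chosen


def bit_times_value(b0, b1, x0, x1):
    """Arithmetic shares of b * x from XOR shares of b and additive shares of x."""
    s0, t1 = select_mul(b0, x0, b1)  # first party sends: shares of b * x0
    s1, t0 = select_mul(b1, x1, b0)  # roles swapped: shares of b * x1
    return (s0 + t0) % MOD, (t1 + s1) % MOD
```

Summing the two outputs gives b * (x0 + x1), so the accumulator is either kept or reset without either party learning the boundary bit.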

5.3.3 Local View Data Encryption

With the binning map recovered at the user side, each then encrypts his local view data, as shown in Algorithm 5. The main problem is that the drawn noise could be negative (recall Fig. 2), which means that should delete some edges. Obviously, this will seriously impair the accuracy of subsequent secure eigendecomposition. To tackle this problem, inspired by [38], PrivGED lets truncate to 0, i.e., . In Section 7.1, we will formally prove that all users’ degrees still satisfy -LDP although the noise may be truncated to 0. Let denote the set of locations of both true edges and dummy edges, and the resulting data of after adding dummy edges with zero weights. Finally, applies ASS over each and sends to the cloud.
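A minimal sketch of the user-side step is given below, under stated assumptions: the noise is drawn from a zero-mean Laplace distribution with scale sensitivity/epsilon (the exact distribution and its parameters are those of the paper's Eq. 1 and are not reproduced here), true edges have weight 1, dummy positions are chosen uniformly among non-neighbors, and all names are hypothetical.

```python
import random
import secrets

MOD = 2 ** 64  # hypothetical ring for additive secret sharing


def laplace(scale, rng=random):
    """Laplace(0, scale) sample as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def encrypt_local_view(neighbors, num_users, sensitivity, epsilon, rng=random):
    """Return two share lists of (position, weight share) in sparse encoding."""
    noise = laplace(sensitivity / epsilon, rng)
    num_dummy = max(0, round(noise))  # negative noise is truncated to 0
    candidates = [j for j in range(num_users) if j not in neighbors]
    dummies = rng.sample(candidates, min(num_dummy, len(candidates)))
    # True edges carry weight 1, dummy edges carry weight 0.
    entries = sorted([(j, 1) for j in neighbors] + [(j, 0) for j in dummies])
    share0, share1 = [], []
    for position, weight in entries:
        r = secrets.randbelow(MOD)
        share0.append((position, r))
        share1.append((position, (weight - r) % MOD))
    return share0, share1
```

Because the dummy weights are zero, they change the number and positions of the submitted entries but not the adjacency matrix that the shares reconstruct to.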

6 Secure Eigendecomposition

6.1 Overview

The encrypted data collected from the users forms the encrypted adjacency matrix under sparse encoding for eigendecomposition at the cloud. We denote it by , where is a multi-set that contains all the locations from , , , . Upon deriving , PrivGED needs to enable to obliviously perform eigendecomposition on , producing the encrypted top- eigenvalues/eigenvectors.

Firstly, PrivGED introduces techniques (Section 6.2) to enable to obliviously transform to (in dense encoding) of a much smaller size , with respect to the Arnoldi method (for general (possibly non-symmetric) matrices) and Lanczos method (for symmetric matrices) as introduced before in Section 3.1. Then, PrivGED provides secure realizations of the QR algorithm so as to achieve secure eigendecomposition over the to produce encrypted top- eigenvalues/eigenvectors. We give a basic design (Section 6.3) for the secure QR algorithm as a starting point, followed by a delicate optimized design (Section 6.4) that further achieves a performance boost.

6.2 Secure Matrix Dimension Reduction

We first consider how to enable to obliviously execute the Arnoldi method on to output . Looking into the operations in Algorithm 1, we note that the operations in lines 1-7, which consist of addition and multiplication, can be naturally and securely realized in the arithmetic secret sharing domain by . However, it remains challenging for to obliviously perform the operations in lines 8 and 9, because square root and division are not directly supported in the secret sharing domain.

Our solution is to approximate the square root and division operations using basic operations (i.e., ), so that they can be securely supported in the secret sharing domain. For secure square root , inspired by the very recent work [53], PrivGED utilizes a roundabout strategy to approximate the inverse square root by the iterative Newton-Raphson algorithm [54]:

| (2) |

which will converge to . Obviously, both subtraction and multiplication operations are naturally supported in the secret sharing domain. After that, PrivGED lets multiply by to derive . For secure division in the secret sharing domain, we note that the main challenge is to compute the reciprocal . However, we also observe that the reciprocal of division in Algorithm 1 is , which is exactly the inverse square root computed in line 8. Therefore, PrivGED lets directly perform the operation in line 9 by multiplying the inverse square root computed in line 8 by .
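The approximation can be sketched in the plaintext domain as follows. The update rule used here is the standard Newton-Raphson iteration for the inverse square root, y ← y(3 − x·y²)/2, which is assumed to be the form Eq. 2 denotes; the initial guess and the function name are also assumptions. The iteration uses only subtraction and multiplication (the factor 1/2 is a public constant) and converges when the initial guess lies between 0 and √(3/x).

```python
def inverse_sqrt(x, y0, iterations=25):
    """Approximate 1/sqrt(x) with subtraction and multiplication only."""
    y = y0
    for _ in range(iterations):
        y = 0.5 * y * (3.0 - x * y * y)
    return y


x = 9.0
inv = inverse_sqrt(x, y0=0.1)  # approximates 1/sqrt(9)
sqrt_x = x * inv               # square root recovered by one more multiplication
quotient = 6.0 * inv           # division by sqrt(x) becomes a multiplication
```

The last line mirrors the observation in the text: since the divisor in line 9 is the square root computed in line 8, the division reduces to multiplying by the already available inverse square root.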

We give our construction for securely realizing the Arnoldi method in Algorithm 6. Regarding the Lanczos method, we note that the operations required to be securely supported are identical to those of the Arnoldi method, so we omit the algorithm description for the secure Lanczos method. It is noted that the encrypted adjacency matrix under sparse encoding can significantly save the cloud-side cost. For example, the secure matrix-vector product between and can be efficiently performed by only securely multiplying the elements and the corresponding . Obviously, the number of multiplications in this example is independent of the number of columns in the original complete and only depends on the number of rows and the number of nonzero elements in .
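The saving from sparse encoding can be seen in a plaintext sketch of the matrix-vector product, where the matrix is given as a list of (row, column, value) entries. The encoding and names are assumptions; in PrivGED the values would be secret shares and each product a secure multiplication.

```python
def sparse_matvec(entries, vector, num_rows):
    """Multiply a sparsely encoded matrix by a dense vector.

    One multiplication is performed per stored entry, regardless of the
    number of columns of the full matrix.
    """
    result = [0] * num_rows
    multiplications = 0
    for row, col, value in entries:
        result[row] += value * vector[col]
        multiplications += 1
    return result, multiplications


# Path graph 0 - 1 - 2 stored as directed entries.
entries = [(0, 1, 1), (1, 0, 1), (1, 2, 1), (2, 1, 1)]
product, cost = sparse_matvec(entries, [1, 2, 3], num_rows=3)
```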

6.3 Secure QR Algorithm

We now introduce, as a basic design, how to enable to obliviously calculate the complete encrypted eigenvalues/eigenvectors of , through a protocol for securely realizing the widely used QR algorithm. The QR algorithm works in an iterative manner and consists of a series of QR decompositions. Formally, given matrix and , in the -th () iteration, on input , we compute the QR decomposition , where is an orthogonal matrix (i.e., ), is an upper Hessenberg matrix, and then output . At the end of the QR algorithm, the diagonal elements of are ’s eigenvalues and are ’s eigenvectors.

In PrivGED, we perform the QR decomposition utilizing the Givens rotations [55]. Formally, given an upper Hessenberg matrix , we create the orthogonal Givens rotation matrix :

| (3) |

where

| (4) | |||

and . At the end of this iteration,

and .
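For reference, a plaintext sketch of the QR algorithm with Givens rotations is given below. It is an illustration under assumptions (unshifted iterations, a fixed iteration count, a small symmetric tridiagonal input, hypothetical function names), not a transcription of Algorithm 7. Each rotation touches only two adjacent rows of the triangular factor, and the rotations are accumulated into the orthogonal factor.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def givens_qr(t):
    """QR decomposition of an upper Hessenberg matrix via Givens rotations."""
    n = len(t)
    r = [row[:] for row in t]
    q = [[float(i == j) for j in range(n)] for i in range(n)]
    for i in range(n - 1):
        norm = math.hypot(r[i][i], r[i + 1][i])
        if norm == 0.0:
            continue
        c, s = r[i][i] / norm, r[i + 1][i] / norm
        for j in range(n):
            # Rotate rows i and i+1 of R to zero out the subdiagonal entry.
            r[i][j], r[i + 1][j] = (c * r[i][j] + s * r[i + 1][j],
                                    -s * r[i][j] + c * r[i + 1][j])
            # Accumulate Q by rotating its columns i and i+1.
            q[j][i], q[j][i + 1] = (c * q[j][i] + s * q[j][i + 1],
                                    -s * q[j][i] + c * q[j][i + 1])
    return q, r


def qr_algorithm(t, iterations=200):
    """Return (diagonal of the final iterate, accumulated orthogonal factors)."""
    n = len(t)
    vectors = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(iterations):
        q, r = givens_qr(t)
        t = matmul(r, q)
        vectors = matmul(vectors, q)
    return [t[i][i] for i in range(n)], vectors


T = [[2.0, 1.0, 0.0], [1.0, 2.0, 1.0], [0.0, 1.0, 2.0]]
values, vectors = qr_algorithm(T)
```

For this symmetric example the diagonal converges to the eigenvalues 2 + √2, 2, and 2 − √2, and the columns of the accumulated matrix are the corresponding eigenvectors. The square roots and divisions inside `givens_qr` are exactly the operations that need the approximations of Section 6.2 in the secret sharing domain.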

Next, we consider how to securely and efficiently perform the above process in the secret sharing domain. The main challenging part of the secure QR algorithm is to let obliviously calculate square root and division in secret sharing. This can be effectively addressed based on the techniques introduced in Section 6.2. In addition, we note that securely realizing the QR algorithm consists mainly of matrix multiplications in the secret sharing domain.

A straightforward method is to perform secret-shared value-wise multiplication, which would require multiplications and online communication of ring elements. We note that a better choice is to work with vectorization [16], where the Beaver triples needed for secure multiplication are represented in a vectorized form, i.e., , where ; and and are used to mask the input matrices during secure multiplication. With this vectorization trick, the online communication is reduced to only ring elements. PrivGED chooses to work with vectorization for secret-shared matrix multiplication.
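A minimal sketch of secret-shared matrix multiplication with a matrix-shaped Beaver triple is shown below. It simulates both parties and a trusted dealer in one process; the ring, the variable names, and the dealer are assumptions. The point to notice is that only the two masked input matrices are opened, rather than one pair of masked values per scalar product.

```python
import secrets

MOD = 2 ** 64  # hypothetical ring


def rand_matrix(rows, cols):
    return [[secrets.randbelow(MOD) for _ in range(cols)] for _ in range(rows)]


def add(a, b):
    return [[(x + y) % MOD for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a, b):
    return [[(x - y) % MOD for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) % MOD
             for j in range(len(b[0]))] for i in range(len(a))]


def share(m):
    s0 = rand_matrix(len(m), len(m[0]))
    return s0, sub(m, s0)


def beaver_matmul(x_sh, y_sh):
    """Shares of X @ Y from shares of X and Y using one matrix triple."""
    p, q, r = len(x_sh[0]), len(y_sh[0]), len(y_sh[0][0])
    # Offline (dealer): random masks U, V and shares of U, V, and Z = U V.
    u, v = rand_matrix(p, q), rand_matrix(q, r)
    u_sh, v_sh, z_sh = share(u), share(v), share(matmul(u, v))
    # Online: open the masked inputs E = X - U and F = Y - V in one round.
    e = add(sub(x_sh[0], u_sh[0]), sub(x_sh[1], u_sh[1]))
    f = add(sub(y_sh[0], v_sh[0]), sub(y_sh[1], v_sh[1]))
    out = []
    for i in (0, 1):
        term = add(add(matmul(e, v_sh[i]), matmul(u_sh[i], f)), z_sh[i])
        if i == 0:
            term = add(term, matmul(e, f))  # public term added by one party
        out.append(term)
    return out


x_sh = share([[1, 2], [3, 4]])
y_sh = share([[5, 6], [7, 8]])
z0, z1 = beaver_matmul(x_sh, y_sh)
product = add(z0, z1)  # reconstruction, only to check the sketch
```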

Algorithm 7 presents our basic design for securely realizing the QR algorithm. With as input, it outputs and . Note that the top- diagonal elements of represent the desired top- eigenvalues of . For the secret-shared eigenvectors of , they can be obliviously transformed to the corresponding eigenvectors of by , where is the output matrix of our secure Arnoldi method (or Lanczos method).

6.4 Optimizing the Secure QR Algorithm

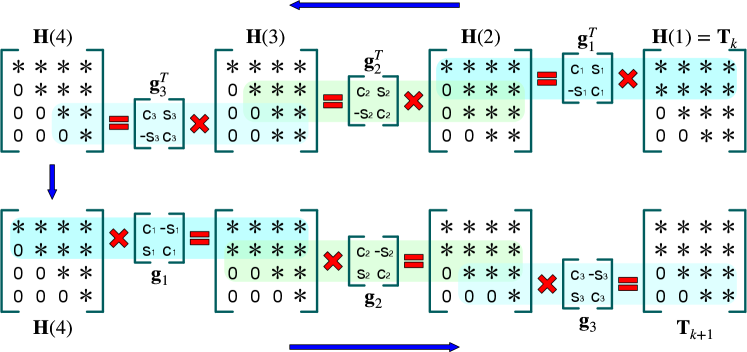

We now show how to further optimize the basic design introduced above to achieve an efficiency boost. Our key insight is to first reformulate the plaintext QR algorithm by simplifying the Givens rotation matrix (i.e., Eq. 3), and then identify the correlated multiplications and extract repetitive multiplicands to further save the cost. We first simplify the Givens rotation matrix based on the observation: in each Givens rotation or , only the -th and -th rows of are updated (recall Eq. 3). Therefore, to save computation, we can reduce the Givens rotation matrix from Eq. 3 to (denoted as ). Fig. 4 illustrates the optimized QR decomposition on a upper Hessenberg matrix utilizing a series of Givens rotations. Similarly, when calculating (i.e., line 15 in Algorithm 7), we can also reduce to .

After the above simplification, we have a new observation: will be used repeatedly in several matrix multiplications. In a recent study [56], Kelkar et al. point out that when one of the multiplicands in a number of (secret-shared) matrix multiplications stays constant, the constant multiplicand can be masked and then opened only once so as to achieve cost savings on communication. For example, suppose we need to multiply with , , , in the secret sharing domain. We only need a single matrix sharing for , rather than matrix sharings as when directly using Beaver’s trick in vectorized form. Therefore, PrivGED can have mask only once for all secret-shared multiplications. In addition, the masked can be directly obtained by letting locally transpose the masked .
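The communication saving from masking the constant multiplicand once can be made concrete with a small counting sketch. It counts the ring elements each party sends when opening masked matrices, for a constant p×q matrix multiplied with m different q×r matrices. The formulas follow from the vectorized Beaver procedure and are an illustration, not the paper's exact cost expressions; the dimensions are hypothetical.

```python
def opened_elements_independent(p, q, r, m):
    """Independent triples: both masked operands are opened in every product."""
    return m * (p * q + q * r)


def opened_elements_shared_mask(p, q, r, m):
    """Correlated triples: the constant operand is masked and opened once."""
    return p * q + m * q * r


# A 2x2 constant matrix multiplied with four 2x5 matrices.
independent = opened_elements_independent(2, 2, 5, 4)
shared = opened_elements_shared_mask(2, 2, 5, 4)
saved = independent - shared
```

The saving grows linearly with the number of multiplications that reuse the same constant operand.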

Remark. Our basic design for the secure QR algorithm requires to online communicate ring elements (we ignore the secure square root computation for simplicity). In contrast, our optimized secure QR algorithm only requires to online communicate ring elements. As later shown in our experiments, the optimized secure QR algorithm can save up to online communication as well as computation cost as compared to the basic design.

6.5 Complexity Analysis

The design of PrivGED comprises several subroutines: 1) secure degree histogram estimation ; 2) secure binning map generation ; 3) local view data encryption ; 4) secure matrix dimension reduction ; 5) secure QR algorithm ; 6) optimized secure QR algorithm . We analyze their theoretical complexities as follows.

- Secure degree histogram estimation. Algorithm 3 describes running between the cloud servers and some sampled users, which requires each of to evaluate sampled users’ DPF keys for all possible degrees , and thus its computation complexity is . does not require to communicate with each other, but requires each of the sampled users to send a pair of DPF keys to , and thus its communication complexity is , where is the size of DPF keys.

- Secure binning map generation. Algorithm 4 describes running at the cloud side, which requires to securely evaluate for all possible degrees , and thus its computation complexity is . For communication, recall that we provide two secure comparison approaches (FSS-based and ASS-based). The FSS-based approach only requires to send a masked value with bit length to each other, but the ASS-based one requires to communicate bits. Therefore, the communication complexity of the FSS-based approach is , and that of the ASS-based approach is .

- Local view data encryption. Algorithm 5 describes running at the user side, which requires each user to encrypt his local view with length , and thus its computation complexity is and communication complexity is , where is the bit length of weight .

- Secure matrix dimension reduction. Algorithm 6 describes running at the cloud side, whose computation complexity is , where is the width (or height) of the original matrix and is the width (or height) of the matrix after dimension reduction. Its communication complexity is . It is noted that the asymptotic computation and communication complexities of the secure Lanczos method are also and because its algorithm is similar to the secure Arnoldi method (recall Algorithms 1 and 2).

- Secure QR algorithm. Algorithm 7 describes running at the cloud side, whose computation complexity is and communication complexity is , where is the number of QR decompositions.

- Optimized secure QR algorithm. It is noted that the asymptotic computation complexity of is identical to that of , i.e., , but its communication complexity is only (recall the Remark in Section 6.4).

7 Privacy and Security Analysis

We now provide analysis regarding the protection PrivGED offers for the users. Note that PrivGED aims to conceal the sensitive information regarding the non-zero elements in each user’s local view vector, which includes the positions, values, and number (i.e., the degree). As PrivGED builds on LDP to perturb the exact degree of users and cryptographic techniques to safeguard the confidentiality of data values, we present our analysis in two parts. The first part is privacy analysis, which is to prove the differential privacy guarantee PrivGED offers for users. Note that blending in dummy edges in each user’s local view vector as per the differential privacy mechanism obfuscates not only the number of non-zero elements but also their positions. The second part is security analysis, where we follow the standard simulation-based paradigm to prove data confidentiality against the cloud servers. Similar treatment also appears in prior works [38, 57] using both DP and cryptography.

7.1 Privacy Analysis

Theorem 1.

PrivGED can achieve -LDP for the degree of users in the same bin according to Definition 1.

Proof.

Consider the degrees of and , who are in the same bin, and let the sensitivity of the bin be . If both the noises drawn from by and are non-negative, the probability of them outputting the same noisy degree is bounded by

In addition, we note that the probability to draw a negative noise from is [38]:

Given Eq. 1 and , we have

which means that the maximum probability of truncation is . Therefore, with , the probability to output the same noisy degree from and is bounded by , which satisfies -LDP in Definition 1. ∎

7.2 Security Analysis

We follow the standard simulation-based paradigm to analyze the security of PrivGED. In this paradigm, a protocol is secure if the view of the corrupted party during the protocol execution can be generated by a simulator given only the party’s input and legitimate output. Let denote the protocol in PrivGED for secure eigendecomposition. Recall that the cloud servers neither provide input nor obtain output in PrivGED.

Definition 3.

Let denote each ’s view during the execution of . We say that is secure in the semi-honest and non-colluding setting, if for each there exists a PPT simulator which can generate a simulated view such that . That is, the simulator can simulate a view for , which is indistinguishable from ’s view during the execution of .

Theorem 2.

PrivGED is secure according to Definition 3.

Proof.

It is noted that PrivGED invokes the subroutines , , , , and / in order. If the simulator for each subroutine exists, then our complete protocol is secure [58, 59, 60]. Since the roles of in these subroutines are symmetric, it suffices to show the existence of simulators for .

- Simulators for in , and . It is easy to see that the simulators for in these subroutines must exist, because they only require basic addition and multiplication over additive secret shares (which can be simulated by random values as per the security of additive secret sharing [16]).

- Simulator for in . only has the public number of sampled users at the beginning, and later receives DPF keys from the sampled users. Since does not receive any other information apart from the DPF keys, the simulator for can be trivially constructed by invoking the DPF simulator. Therefore, from the security of DPF [17], the simulator for exists.

- Simulator for in . In the subroutine (Algorithm 4), the steps that require to interact are in lines 5 and 6. Since each of them is invoked in order as per the processing pipeline and their inputs are secret shares, if the simulator for each of them exists, the simulator exists for in . We first analyze the simulated view about line 5. As aforementioned, we provide two approaches (i.e., FSS-based and ASS-based) to perform the secure comparison operation in line 5. For the FSS-based approach, at the beginning of each execution, has a DCF key , a random value , a share , and later receives a masked share followed by outputting . Since all the information that receives is legitimate in FSS-based DCF, the simulator for can be trivially constructed by invoking the simulator of DCF. From the security of FSS-based DCF [18], the simulator for exists. We then analyze the simulator for the ASS-based approach. It is noted that the ASS-based approach is built on the realization of a PPA in the secret sharing domain, which consists of basic secret-shared and , and thus the simulator for it exists. Similarly, line 6 also consists of basic multiplication, and thus the simulator for it exists [52].

- Simulator for in . Our optimized secure QR algorithm builds on the technique from [56]. Specifically, when computing multiplications of the form , instead of using independent Beaver triples , we can use correlated Beaver triples , where a single matrix sharing is used to mask the constant multiplicand . Next, we analyze the existence of the simulator for the technique. We first show that the view of on the correlated Beaver triples can be simulated. It is noted that the shares of each element in the correlated Beaver triples are generated based on the standard secret sharing. This means that the Beaver triple shares received by are distributed identically to random values in the view of . We then show that the view of on the masked constant multiplicand can be simulated. In this phase, first receives and from , and then outputs . It is noted that is a function of the correlated Beaver triple shares, , and . Therefore, these matrices are uniformly random and independent from one another, and their joint distribution in both real view and simulated view is identical [56]. The simulator for exists.

∎

8 Experiments

8.1 Setup

We implement a prototype system of PrivGED in Python. Our prototype implementation comprises 2000 lines of code (excluding the code of libraries). We also implement a test module with another 500 lines of code. All experiments are performed on a workstation with 16 Intel I7-10700K cores, 64GB RAM, 1TB SSD external storage running Ubuntu 20.04.2 LTS. The network bandwidth and latency are controlled by the local socket protocol.

| Dataset | Type | Nodes | Edges |
| --- | --- | --- | --- |
| Facebook | Undirected | 3,959 | 170,174 |
| Twitter | Directed | 76,244 | 1,768,149 |
| Google+ | Directed | 102,100 | 13,673,453 |

Social graph datasets. We use three social graph datasets: Facebook (http://snap.stanford.edu/data/ego-Facebook.html), Twitter (http://snap.stanford.edu/data/ego-Twitter.html), and Google+ (http://snap.stanford.edu/data/ego-Gplus.html). Their statistics are summarized in Table II. For each node in a tested graph dataset, a vector is extracted representing the node’s local view on the whole graph, based on its social connections with other nodes. Recall that for privacy protection, PrivGED aims to conceal the values, positions, and number of non-zero elements in each node’s local view vector, as such information captures a node’s private social interactions. In particular, in the context of these social graph datasets, the value information reflects whether two users are connected (e.g., they are friends), the position information reflects with which other users a user is connected, and the number information may reflect how many friends/followers a user has (indicating the user’s social skills).

Protocol instantiation. Eigendecomposition usually works on real numbers, while cryptographic computation needs to work with integers. Following prior works on secure computation [16, 52], we use a common fixed-point encoding of real numbers. Specifically, for a private real number , we consider a fixed-point encoding with bits of precision: . In our experiments, we use the ring in the phase of secure degree histogram estimation and secure binning map generation, and the ring with bits of precision in the phase of secure eigendecomposition. The number of iterations of Eq. 2 is set to 25. For DPF and DCF, we set the security parameter to . The size of the output matrix of the Arnoldi method and the Lanczos method is set to 15 × 15, and the top-3 eigenvalues/eigenvectors are used to verify the accuracy of eigendecomposition, because only top-2 eigenvalues/eigenvectors are used in most community detection tasks [19, 10, 9].
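The fixed-point encoding can be sketched as follows. The ring size of 64 bits and the 16 fractional bits are hypothetical values chosen for illustration, not the parameters used in the experiments, and the multiplication is shown on plain encodings rather than on secret shares.

```python
L_BITS = 64   # hypothetical ring bit length
F_BITS = 16   # hypothetical number of fractional bits
MOD = 1 << L_BITS


def encode(x):
    """Map a real number to a ring element (two's complement for negatives)."""
    return round(x * (1 << F_BITS)) % MOD


def to_signed(v):
    return v - MOD if v >= MOD // 2 else v


def decode(v):
    return to_signed(v) / (1 << F_BITS)


def fx_mul(a, b):
    """Multiply two encodings and truncate to restore the scale."""
    product = to_signed(a) * to_signed(b)
    return (product >> F_BITS) % MOD
```

After a multiplication the scale doubles, so the product must be truncated by the number of fractional bits; in the secret sharing domain this truncation is performed on the shares, as in [16].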

Ground truth. We use the standard Python library to store the large-scale social graphs in sparse encoding (plaintext and ciphertext), and then use its standard eigendecomposition library and to calculate the eigenvalues/eigenvectors on symmetric matrix (i.e., Facebook) and non-symmetric matrices (i.e., Twitter and Google+), respectively. Subsequently, we will use the outputs of the standard library as the ground truth.

8.2 Evaluation on Accuracy

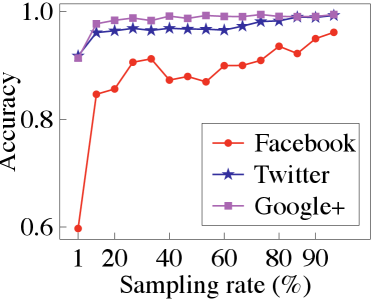

Secure binning map generation. To obtain an appropriate sampling rate when securely estimating the degree histogram, we compare the accuracy of the binning map with varying sampling rates. Fig. 5 summarizes the experiment results, where we set the number of bins to 10 and use the results of sampling rate as the ground truth. It is observed that when the sampling rate is set to , we can obtain a satisfactory accuracy (about ).

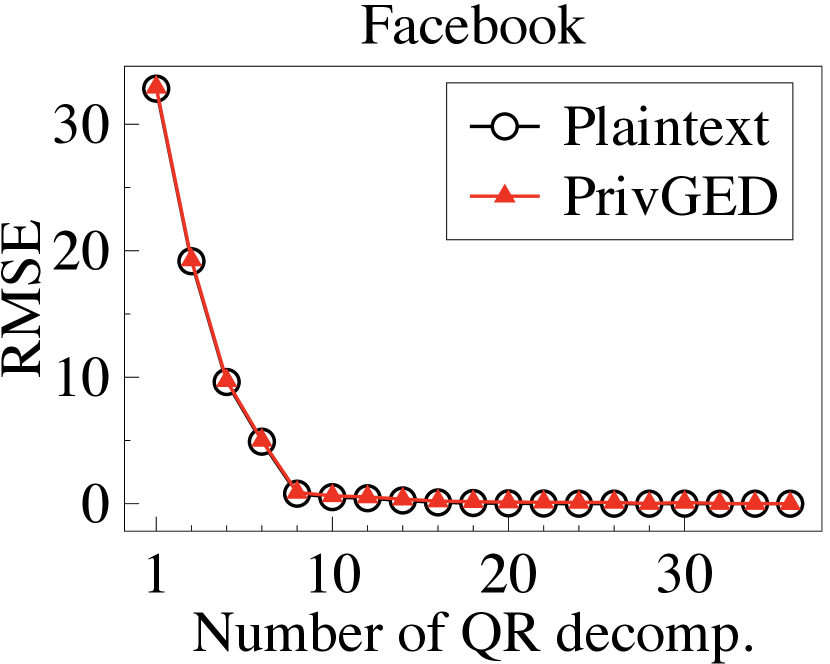

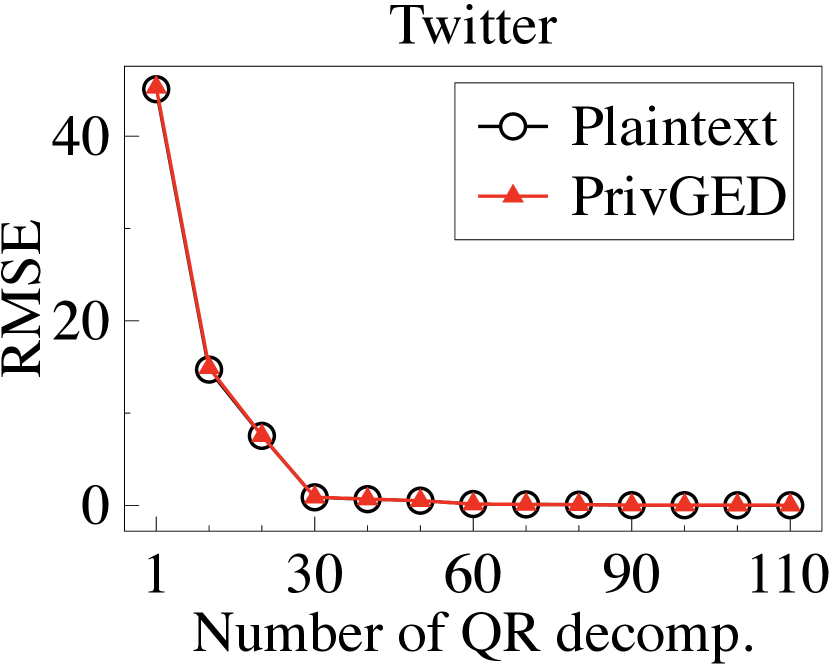

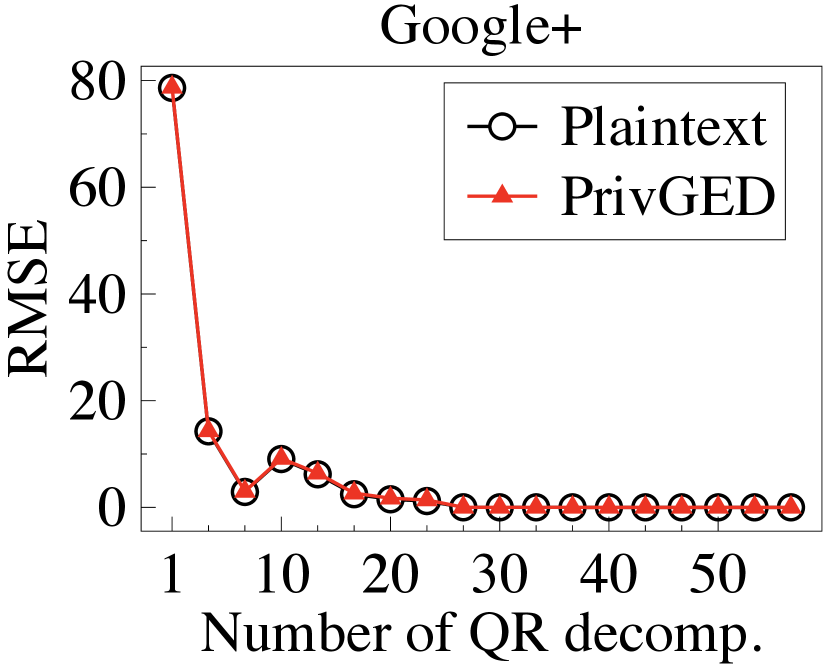

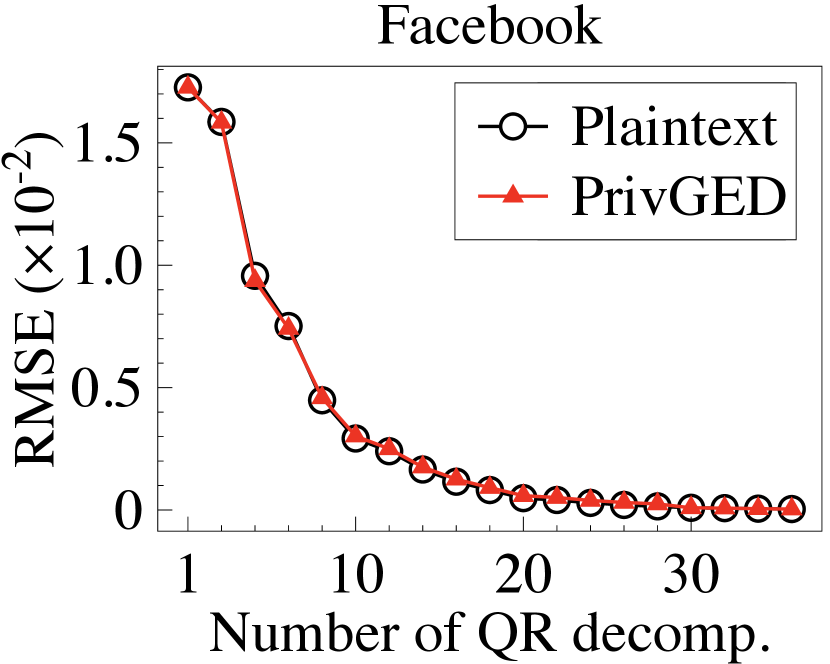

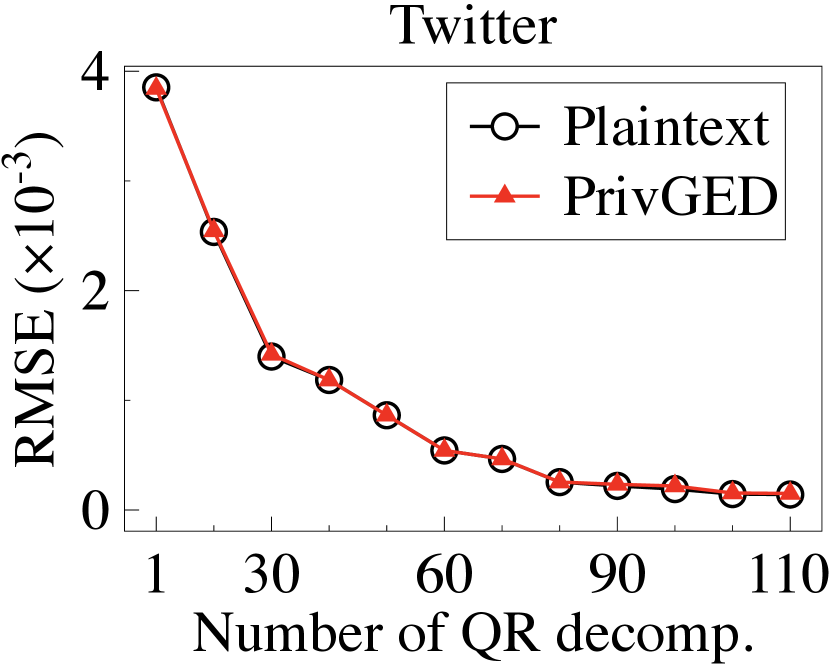

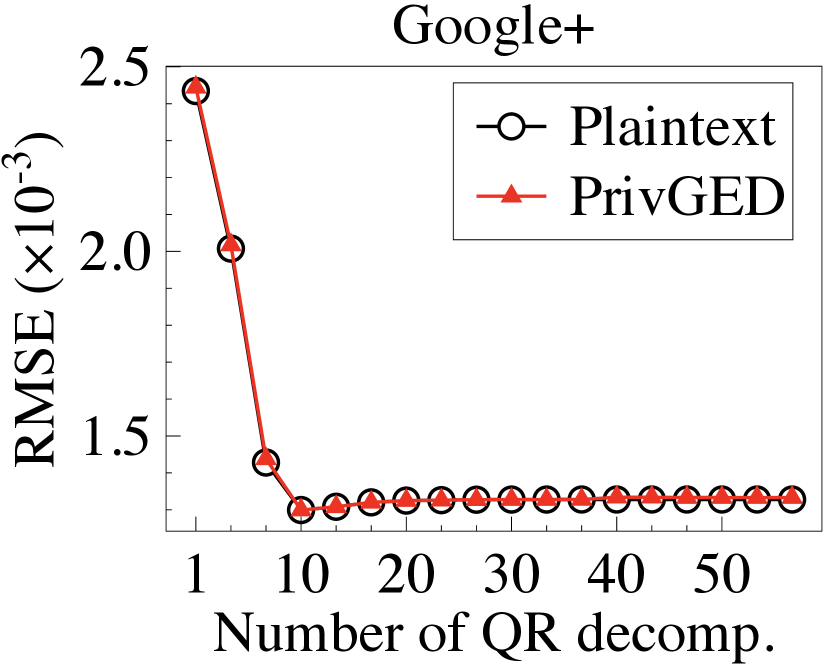

Secure eigendecomposition. We first implement the plaintext Arnoldi method, Lanczos method, and QR algorithm to compute the plaintext eigenvalues/eigenvectors, and then calculate the RMSE in the top-3 eigenvalues/eigenvectors between the plaintext results and the ground truth (output by the standard Python library). We then execute PrivGED to compute the ciphertext eigenvalues/eigenvectors, and calculate the RMSE in the top-3 eigenvalues/eigenvectors between PrivGED’s results and the ground truth. We summarize the results in Fig. 6 and Fig. 7. It is observed that the RMSE of PrivGED is slightly higher than that of plaintext (about ), but they exhibit consistent behavior.
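The accuracy metric can be sketched as follows. The sign alignment for eigenvectors is an assumption added here because an eigenvector is only determined up to sign; whether the evaluation applies such an alignment is not stated in the text. All names are hypothetical.

```python
import math


def rmse(estimate, truth):
    """Root-mean-square error between two equally long sequences."""
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth))


def align_sign(vector, reference):
    """Flip the vector if it points against the reference (assumed convention)."""
    dot = sum(v * r for v, r in zip(vector, reference))
    return [-v for v in vector] if dot < 0 else list(vector)


def eigenvector_rmse(estimate, truth):
    return rmse(align_sign(estimate, truth), truth)
```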

8.3 Evaluation on Performance

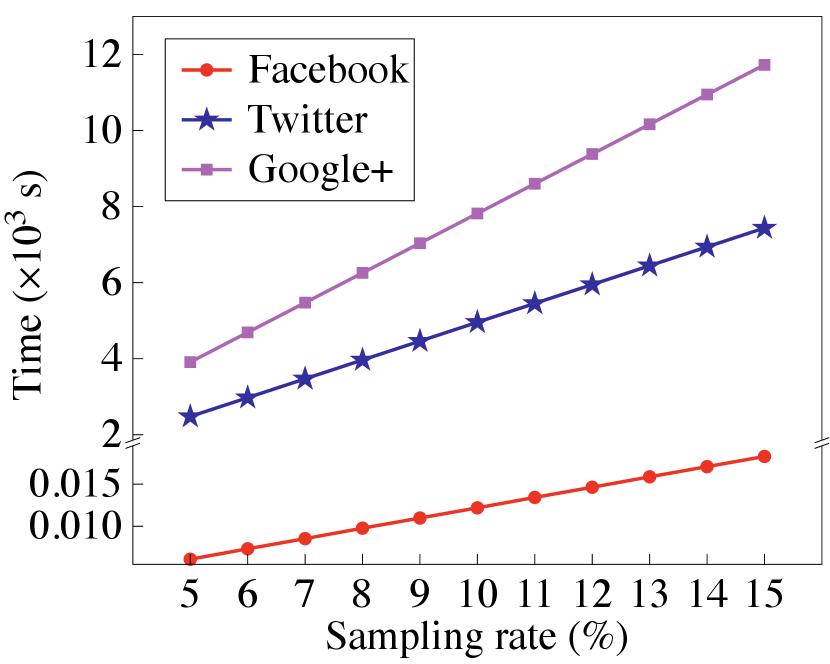

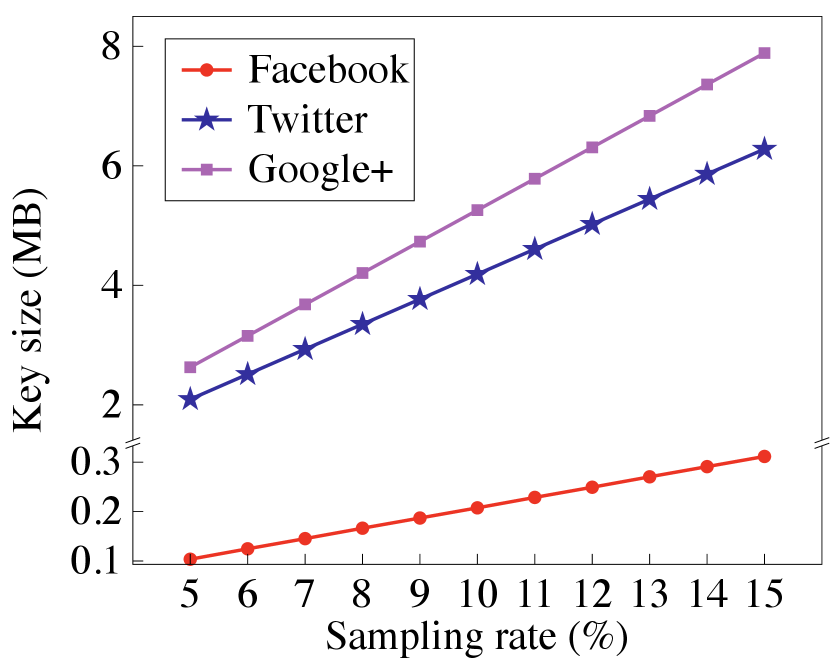

Secure degree histogram estimation. We first report PrivGED’s cost in securely estimating the degree histogram. Fig. 8 illustrates the running time and Fig. 9 shows the key size of DPF involved in the process, where we set the number of bins to 10 and the maximum degree to . It is noted that Algorithm 3 does not require communication between . Over the three social graphs, the total key size of all sampled users only ranges from 0.1 MB to 7.8 MB.

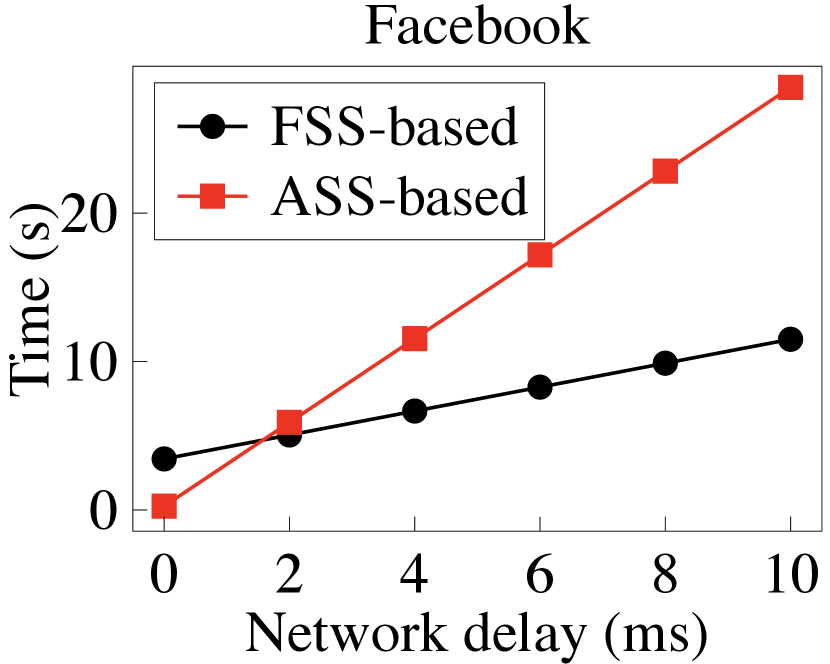

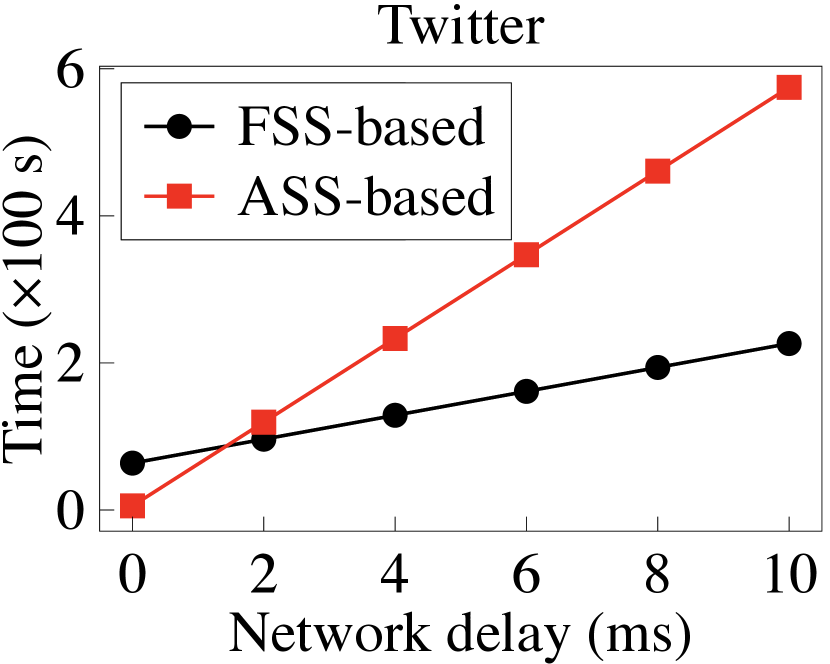

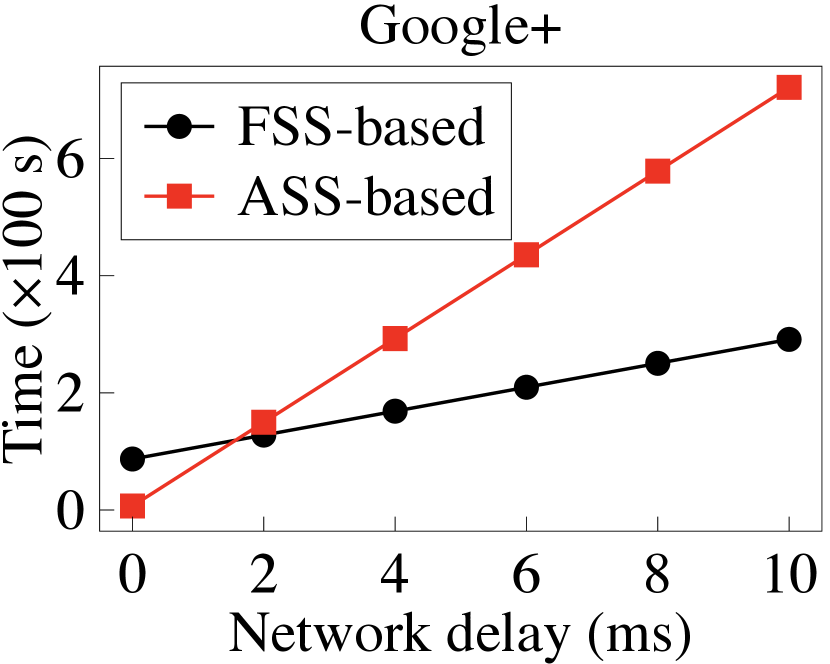

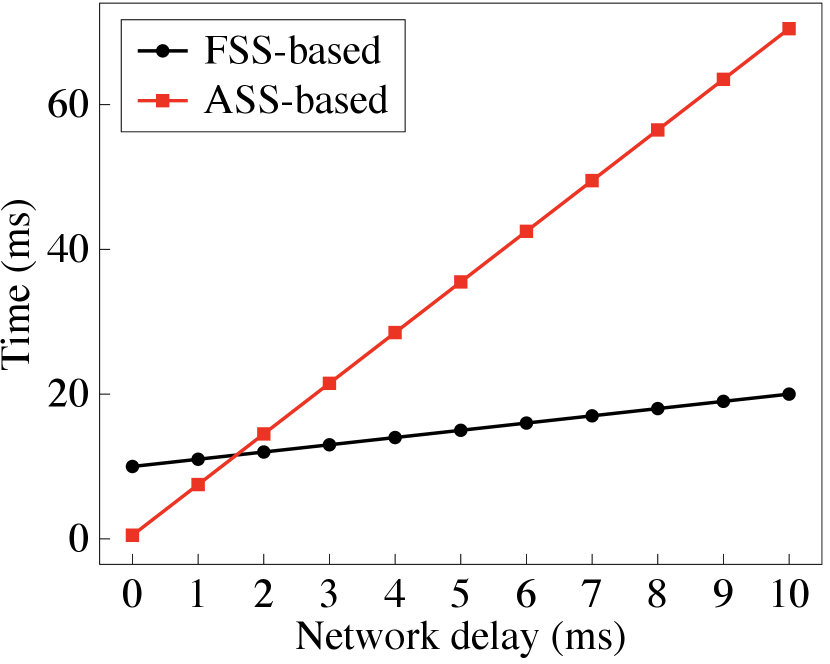

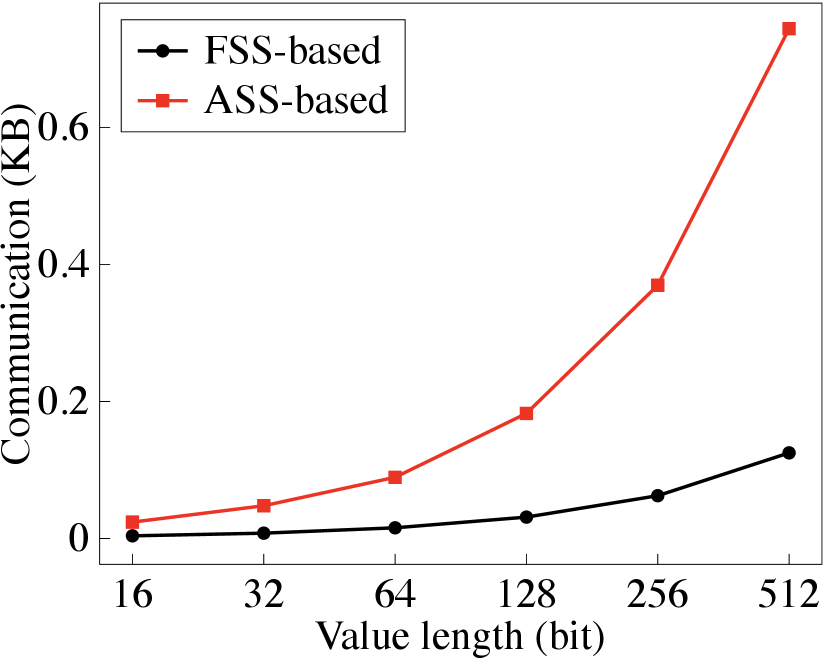

Secure binning map generation. We then report PrivGED’s performance in securely generating the binning map. Recall that in Section 5.3.2, we provide an FSS-based approach and an ASS-based approach to support the secure comparison operation under different network environments. Therefore, we first evaluate the efficiency under different network delays, and report the results in Fig. 10. Additionally, we report the benchmark cost of the two secure comparison approaches under different network delays and bit lengths, in Fig. 11 and Fig. 12. It is observed that when the network delay is low (about 0 ms-2 ms), the ASS-based approach is faster than the FSS-based approach. As the network delay grows (i.e., beyond 2 ms), the FSS-based approach becomes faster.

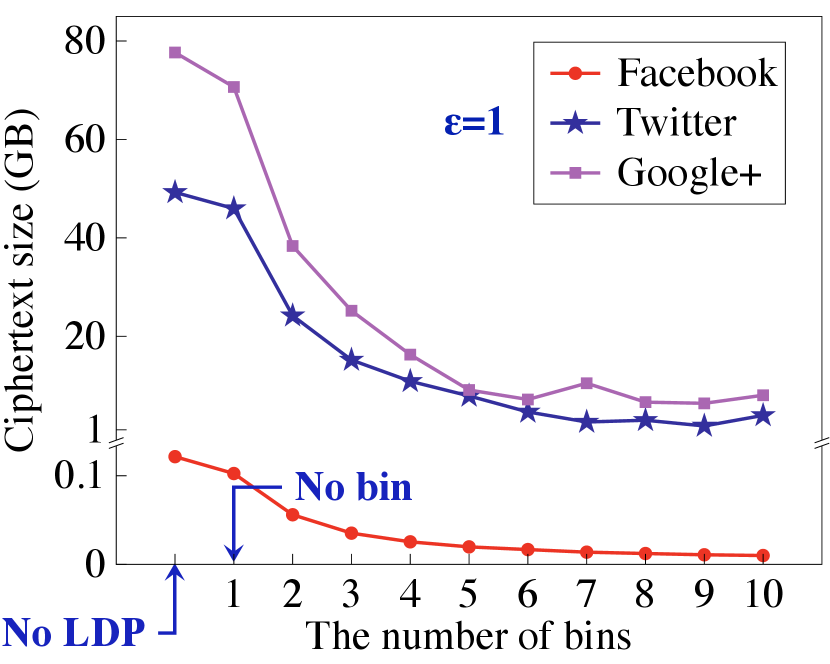

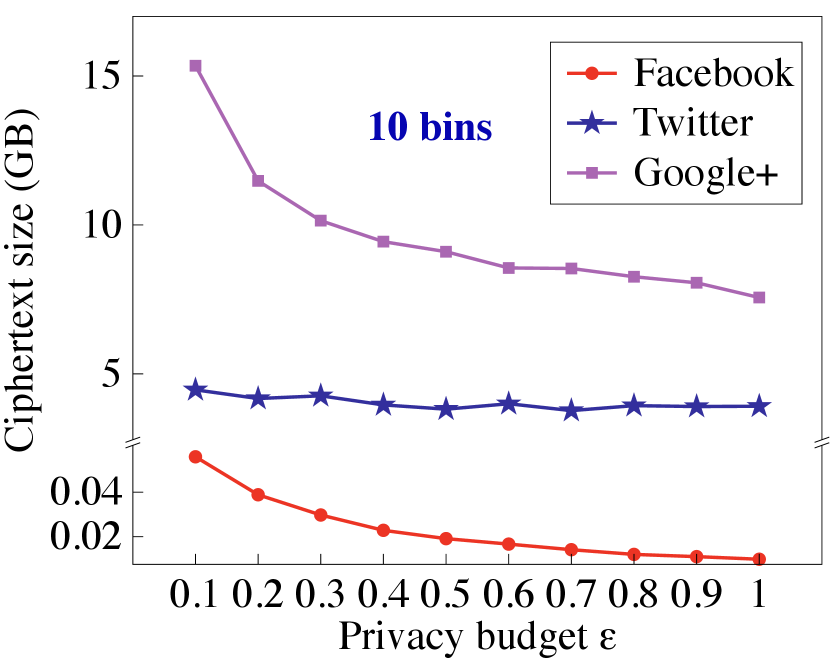

Local view data encryption. We now report the size of the encrypted social graph to show the storage saving of PrivGED, which is shown in Fig. 13. It is observed that compared to direct encryption of all matrix elements by ASS, our protocol achieves a considerable storage saving (up to under , and 10 bins). In addition, compared to standard LDP-based encryption (i.e., 1 bin), our encryption protocol (with 10 bins) still achieves a considerable storage saving (up to ).

| Time (ms): Basic | Time (ms): Optim. | Time (ms): Gain | Online Comm. (KB): Basic | Online Comm. (KB): Optim. | Online Comm. (KB): Gain |
| --- | --- | --- | --- | --- | --- |
| 341 | 339 | 0.6% | 2.3 | 0.2 | 91% |
| 726 | 698 | 3.9% | 19 | 0.6 | 96% |
| 1172 | 1062 | 9.3% | 65 | 1.5 | 97% |

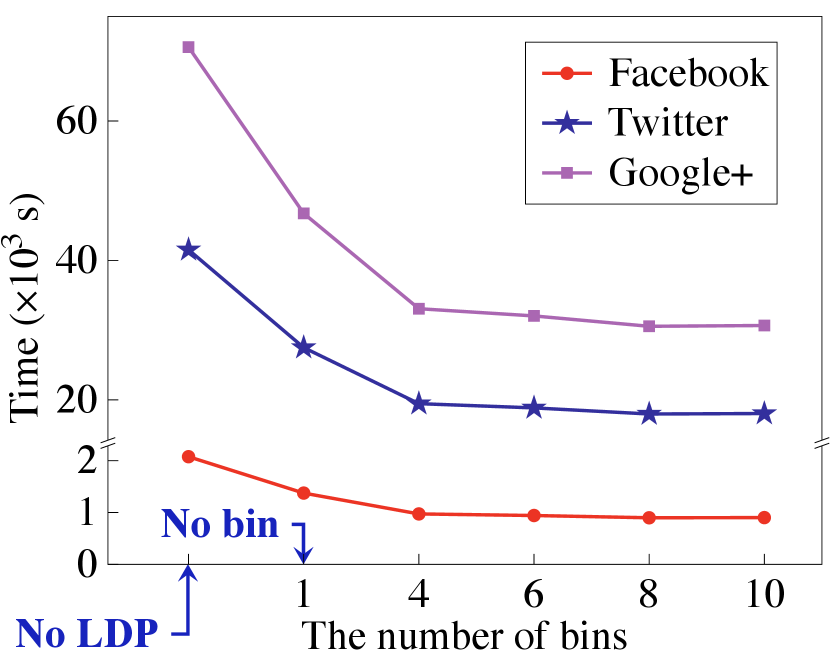

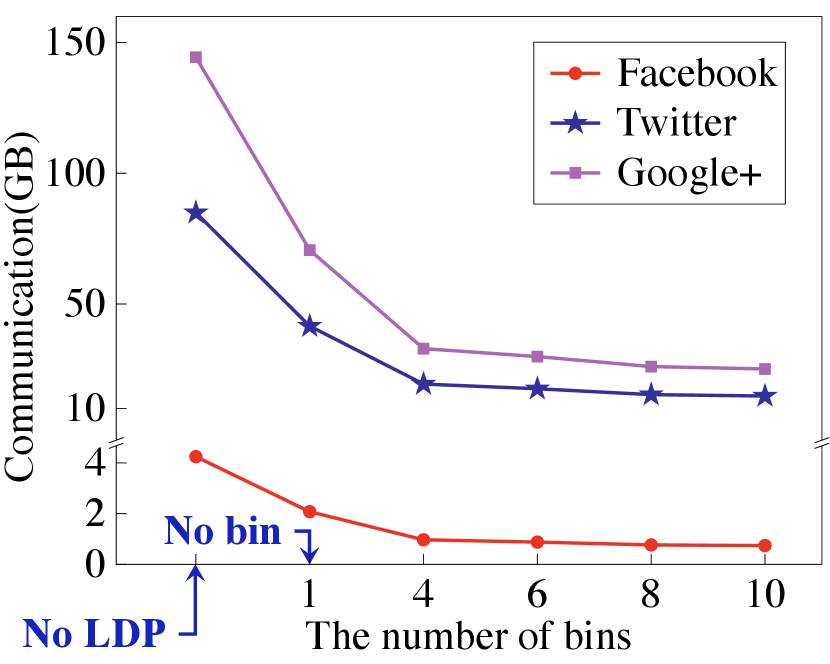

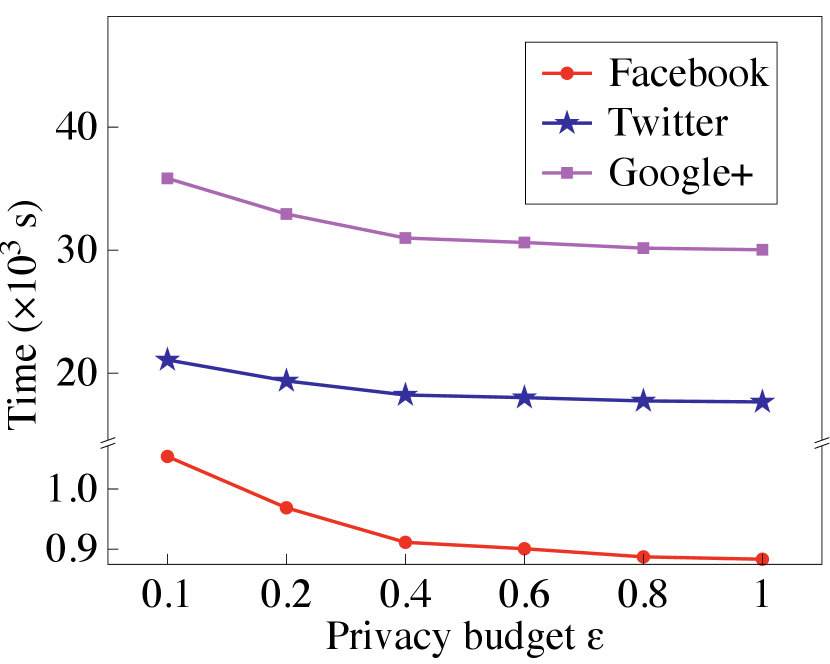

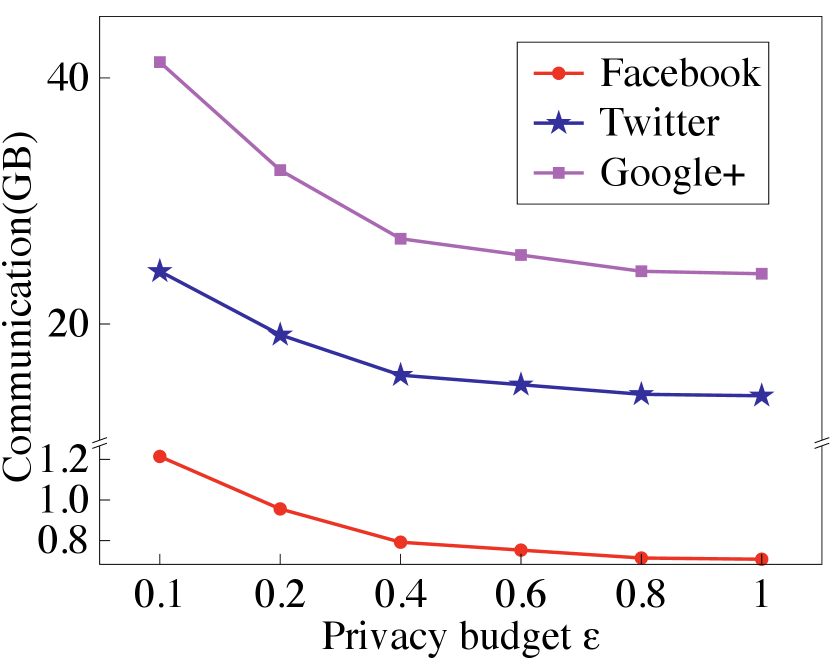

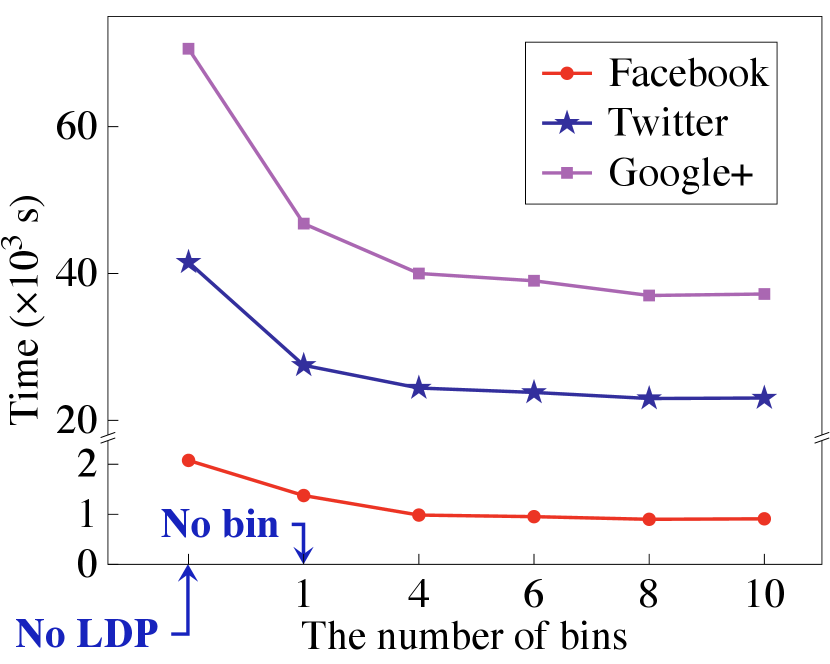

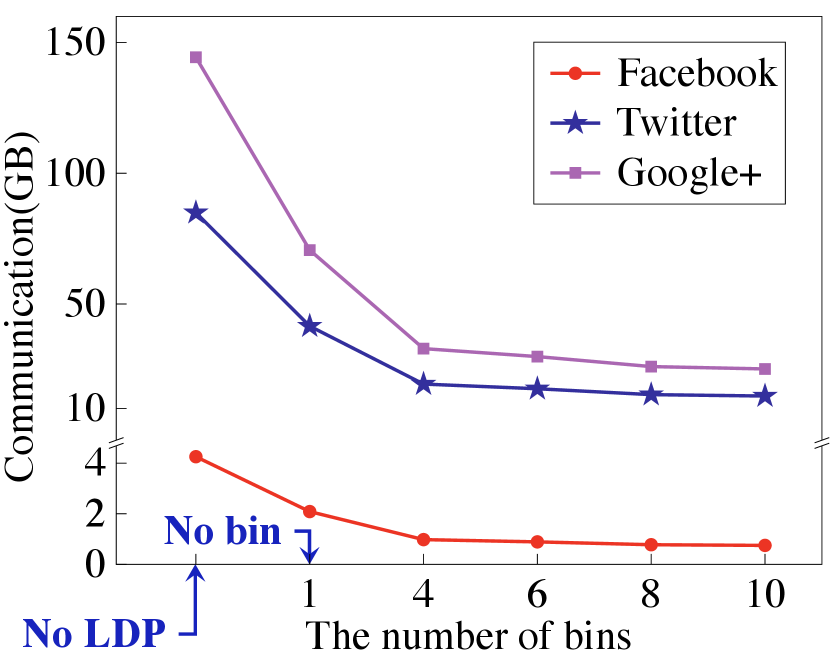

Secure eigendecomposition. We now report PrivGED’s computation and communication performance in secure eigendecomposition. We first examine the performance gain of our optimized secure QR algorithm, and summarize the results in Table III, where we set the matrix dimension output by the Arnoldi method and the Lanczos method to (). Our optimized secure QR algorithm can save up to 97% online communication as well as 9.3% computation time. Meanwhile, the performance gain increases as grows. We then evaluate the overall performance of secure eigendecomposition, and present the results in Fig. 14 and Fig. 15. From the results, we can observe that compared to the encryption without sparse representation, PrivGED can save up to online communication as well as computation time. Meanwhile, compared to the encryption without binning, PrivGED can still save up to online communication and computation time.

Overall performance. We show in Fig. 16 the overall running time and online communication of PrivGED. It is noted that the communication results in Fig. 16 are similar to those in Fig. 14. This is because secure eigendecomposition dominates the online communication at the cloud in PrivGED. Compared to encryption without sparse representation, PrivGED can save up to overall online communication as well as overall computation time. Meanwhile, compared to standard LDP-based encryption, PrivGED can still save up to overall online communication and overall running time.

8.4 Comparison with the State-of-the-Art Prior Work

A fair comparison between PrivGED and the state-of-the-art prior work PrivateGraph [8] is difficult because of the downsides of PrivateGraph analyzed in Section 2.2. Specifically, PrivateGraph has limited security since it generates the binning map in the plaintext domain. Besides, PrivateGraph requires frequent interactions between the cloud and the analyst, who undertakes part of the processing workload. As reported in PrivateGraph, to obtain the eigenvalues/eigenvectors of the dataset Google+, the analyst must spend 0.2 hours as well as communicate 10 GB with the cloud. In contrast, PrivGED allows the analyst to directly receive the final eigenvalues/eigenvectors. We also note that under the same privacy budget , the ciphertext size of PrivateGraph is up to 6.3 TB, but that of PrivGED is only 7 GB.

9 Conclusion

We present PrivGED, a new system that allows privacy-preserving analytics over decentralized social graphs with eigendecomposition. PrivGED leverages the emerging paradigm of cloud-empowered graph analytics and enables the cloud to collect each individual user’s local view in a privacy-friendly manner and perform eigendecomposition to produce the desired encrypted eigenvalues/eigenvectors, which can be delivered to an analyst on demand. PrivGED delicately builds on advances in lightweight cryptographic techniques (ASS and FSS) and local differential privacy to securely support the operations required by eigendecomposition analytics, yielding a customized security design. Extensive experiments demonstrate that PrivGED achieves accuracy comparable to the plaintext domain, with practically affordable performance superior to prior work.

Acknowledgement

This work was supported in part by the Guangdong Basic and Applied Basic Research Foundation (Grant No. 2021A1515110027), in part by the Shenzhen Science and Technology Program (Grant No. RCBS20210609103056041), and in part by the Australian Research Council (ARC) Discovery Project (Grant No. DP180103251).

References

- [1] S. Tabassum, F. S. F. Pereira, S. Fernandes, and J. Gama, “Social network analysis: An overview,” Wiley Interdiscip. Rev. Data Min. Knowl. Discov., vol. 8, no. 5, 2018.

- [2] U. Can and B. Alatas, “A new direction in social network analysis: Online social network analysis problems and applications,” Physica A: Statistical Mechanics and its Applications, vol. 535, p. 122372, 2019.

- [3] H. Sun, X. Xiao, I. Khalil, Y. Yang, Z. Qin, W. H. Wang, and T. Yu, “Analyzing subgraph statistics from extended local views with decentralized differential privacy,” in Proc. of ACM CCS, 2019.

- [4] Z. Qin, T. Yu, Y. Yang, I. Khalil, X. Xiao, and K. Ren, “Generating synthetic decentralized social graphs with local differential privacy,” in Proc. of ACM CCS, 2017.

- [5] M. Xue, B. Carminati, and E. Ferrari, “P3D - privacy-preserving path discovery in decentralized online social networks,” in Proc. of IEEE COMPSAC, 2011.

- [6] L. Zhang, X. Li, K. Liu, T. Jung, and Y. Liu, “Message in a sealed bottle: Privacy preserving friending in mobile social networks,” IEEE Trans. Mob. Comput., vol. 14, no. 9, pp. 1888–1902, 2015.

- [7] X. Ma, J. Ma, H. Li, Q. Jiang, and S. Gao, “ARMOR: A trust-based privacy-preserving framework for decentralized friend recommendation in online social networks,” Future Gener. Comput. Syst., vol. 79, pp. 82–94, 2018.

- [8] S. Sharma, J. Powers, and K. Chen, “PrivateGraph: Privacy-preserving spectral analysis of encrypted graphs in the cloud,” IEEE Trans. Knowl. Data Eng., vol. 31, no. 5, pp. 981–995, 2019.

- [9] M. E. Newman, “Spectral methods for community detection and graph partitioning,” Physical Review E, vol. 88, no. 4, p. 042822, 2013.

- [10] Y. Wang, Z. Di, and Y. Fan, “Identifying and characterizing nodes important to community structure using the spectrum of the graph,” PloS one, vol. 6, no. 11, p. e27418, 2011.

- [11] Y. Wang, X. Wu, and L. Wu, “Differential privacy preserving spectral graph analysis,” in Proc. of PAKDD, 2013.

- [12] F. Ahmed, A. X. Liu, and R. Jin, “Publishing social network graph eigenspectrum with privacy guarantees,” IEEE Trans. Netw. Sci. Eng., vol. 7, no. 2, pp. 892–906, 2020.

- [13] C. Lanczos, “An iteration method for the solution of the eigenvalue problem of linear differential and integral operators,” 1950.

- [14] M. Curtiss, I. Becker, T. Bosman, S. Doroshenko, L. Grijincu, T. Jackson, S. Kunnatur, S. B. Lassen, P. Pronin, S. Sankar, G. Shen, G. Woss, C. Yang, and N. Zhang, “Unicorn: A system for searching the social graph,” Proc. VLDB Endow., vol. 6, no. 11, pp. 1150–1161, 2013.

- [15] B. Zhou, J. Pei, and W. Luk, “A brief survey on anonymization techniques for privacy preserving publishing of social network data,” SIGKDD Explor., vol. 10, no. 2, pp. 12–22, 2008.

- [16] P. Mohassel and Y. Zhang, “Secureml: A system for scalable privacy-preserving machine learning,” in Proc. of IEEE S&P, 2017.

- [17] E. Boyle, N. Gilboa, and Y. Ishai, “Function secret sharing: Improvements and extensions,” in Proc. of ACM CCS, 2016.

- [18] E. Boyle, N. Chandran, N. Gilboa, D. Gupta, Y. Ishai, N. Kumar, and M. Rathee, “Function secret sharing for mixed-mode and fixed-point secure computation,” in Proc. of EUROCRYPT, 2021.

- [19] M. E. Newman, “Finding community structure in networks using the eigenvectors of matrices,” Physical review E, vol. 74, no. 3, p. 036104, 2006.

- [20] S. D. Kamvar, T. H. Haveliwala, C. D. Manning, and G. H. Golub, “Extrapolation methods for accelerating pagerank computations,” in Proc. of ACM WWW, 2003.

- [21] S. Kamvar, T. Haveliwala, and G. Golub, “Adaptive methods for the computation of pagerank,” Linear Algebra and its Applications, vol. 386, pp. 51–65, 2004.

- [22] T. Charalambous, C. N. Hadjicostis, M. G. Rabbat, and M. Johansson, “Totally asynchronous distributed estimation of eigenvector centrality in digraphs with application to the pagerank problem,” in Proc. of IEEE CDC, 2016.

- [23] A. Sangers and M. B. van Gijzen, “The eigenvectors corresponding to the second eigenvalue of the google matrix and their relation to link spamming,” J. Comput. Appl. Math., vol. 277, pp. 192–201, 2015.

- [24] W. E. Arnoldi, “The principle of minimized iterations in the solution of the matrix eigenvalue problem,” Quarterly of applied mathematics, vol. 9, no. 1, pp. 17–29, 1951.

- [25] J. G. F. Francis, “The QR transformation - part 2,” Comput. J., vol. 4, no. 4, pp. 332–345, 1962.

- [26] L. Page, S. Brin, R. Motwani, and T. Winograd, “The pagerank citation ranking: Bringing order to the web,” Stanford InfoLab, Tech. Rep., 1999.

- [27] C. Meng, S. Rambhatla, and Y. Liu, “Cross-node federated graph neural network for spatio-temporal data modeling,” in Proc. of ACM KDD, 2021.

- [28] J. Zhou, C. Chen, L. Zheng, H. Wu, J. Wu, X. Zheng, B. Wu, Z. Liu, and L. Wang, “Vertically federated graph neural network for privacy-preserving node classification,” arXiv preprint arXiv:2005.11903, 2020.

- [29] C. Wu, F. Wu, Y. Cao, Y. Huang, and X. Xie, “Fedgnn: Federated graph neural network for privacy-preserving recommendation,” in International Workshop on Federated Learning for User Privacy and Data Confidentiality, 2021.

- [30] X. Zhang, X. Chen, J. K. Liu, and Y. Xiang, “Deeppar and deepdpa: Privacy preserving and asynchronous deep learning for industrial iot,” IEEE Trans. Ind. Informatics, vol. 16, no. 3, pp. 2081–2090, 2020.

- [31] D. J. Wu, J. Zimmerman, J. Planul, and J. C. Mitchell, “Privacy-preserving shortest path computation,” in Proc. of NDSS, 2016.

- [32] M. Du, S. Wu, Q. Wang, D. Chen, P. Jiang, and A. Mohaisen, “Graphshield: Dynamic large graphs for secure queries with forward privacy,” IEEE Trans. Knowl. Data Eng., 2020.

- [33] T. Araki, J. Furukawa, K. Ohara, B. Pinkas, H. Rosemarin, and H. Tsuchida, “Secure graph analysis at scale,” in Proc. of ACM CCS, 2021.

- [34] M. Shen, B. Ma, L. Zhu, R. Mijumbi, X. Du, and J. Hu, “Cloud-based approximate constrained shortest distance queries over encrypted graphs with privacy protection,” IEEE Transactions on Information Forensics and Security, vol. 13, no. 4, pp. 940–953, 2018.

- [35] C. Liu, L. Zhu, X. He, and J. Chen, “Enabling privacy-preserving shortest distance queries on encrypted graph data,” IEEE Transactions on Dependable and Secure Computing, vol. 18, no. 1, pp. 192–204, 2021.

- [36] C. Dwork, “Differential privacy,” in Proc. of ICALP, 2006.

- [37] S. P. Kasiviswanathan, H. K. Lee, K. Nissim, S. Raskhodnikova, and A. D. Smith, “What can we learn privately?” SIAM J. Comput., vol. 40, no. 3, pp. 793–826, 2011.

- [38] X. He, A. Machanavajjhala, C. J. Flynn, and D. Srivastava, “Composing differential privacy and secure computation: A case study on scaling private record linkage,” in Proc. of ACM CCS, 2017.

- [39] E. Boyle, N. Gilboa, and Y. Ishai, “Function secret sharing,” in Proc. of EUROCRYPT, 2015.

- [40] A. C. Yao, “Protocols for secure computations,” in Proc. of IEEE FOCS, 1982.

- [41] W. Chen and R. A. Popa, “Metal: A metadata-hiding file-sharing system,” in Proc. of NDSS, 2020.

- [42] P. Mohassel, P. Rindal, and M. Rosulek, “Fast database joins and PSI for secret shared data,” in Proc. of ACM CCS, 2020.

- [43] X. Liu, Y. Zheng, X. Yuan, and X. Yi, “Medisc: Towards secure and lightweight deep learning as a medical diagnostic service,” in Proc. of ESORICS, 2021.

- [44] Y. Zheng, H. Duan, X. Tang, C. Wang, and J. Zhou, “Denoising in the dark: Privacy-preserving deep neural network-based image denoising,” IEEE Transactions on Dependable and Secure Computing, vol. 18, no. 3, pp. 1261–1275, 2021.

- [45] L. Xu, J. Jiang, B. Choi, J. Xu, and S. S. Bhowmick, “Privacy preserving strong simulation queries on large graphs,” in Proc. of IEEE ICDE, 2021.

- [46] S. Tan, B. Knott, Y. Tian, and D. J. Wu, “Cryptgpu: Fast privacy-preserving machine learning on the gpu,” in Proc. of IEEE S&P, 2021.

- [47] Y. Zheng, H. Duan, and C. Wang, “Learning the truth privately and confidently: Encrypted confidence-aware truth discovery in mobile crowdsensing,” IEEE Transactions on Information Forensics and Security, vol. 13, no. 10, pp. 2475–2489, 2018.

- [48] TF-Encrypted, “Encrypted deep learning in tensorflow,” https://tf-encrypted.io, 2021, [Online; Accessed 1-Jan-2022].

- [49] Facebook’s CrypTen, “A research tool for secure machine learning in pytorch,” https://crypten.ai/, 2019, [Online; Accessed 1-Jan-2022].

- [50] J. Doerner and A. Shelat, “Scaling ORAM for secure computation,” in Proc. of ACM CCS, 2017.

- [51] N. Agrawal, A. S. Shamsabadi, M. J. Kusner, and A. Gascón, “QUOTIENT: two-party secure neural network training and prediction,” in Proc. of ACM CCS, 2019.

- [52] P. Mohassel and P. Rindal, “ABY3: A mixed protocol framework for machine learning,” in Proc. of ACM CCS, 2018.

- [53] B. Knott, S. Venkataraman, A. Hannun, S. Sengupta, M. Ibrahim, and L. van der Maaten, “Crypten: Secure multi-party computation meets machine learning,” in Proc. of NeurIPS, 2020.

- [54] S. Akram and Q. U. Ann, “Newton raphson method,” International Journal of Scientific & Engineering Research, vol. 6, no. 7, pp. 1748–1752, 2015.

- [55] W. H. Press, B. P. Flannery, S. A. Teukolsky, W. T. Vetterling, and P. B. Kramer, “Numerical recipes: the art of scientific computing,” Physics Today, vol. 40, no. 10, p. 120, 1987.

- [56] M. Kelkar, P. H. Le, M. Raykova, and K. Seth, “Secure poisson regression,” in Proc. of USENIX Security Symposium, 2022.

- [57] B. Kacsmar, B. Khurram, N. Lukas, A. Norton, M. Shafieinejad, Z. Shang, Y. Baseri, M. Sepehri, S. Oya, and F. Kerschbaum, “Differentially private two-party set operations,” in Proc. of IEEE EuroS&P, 2020.

- [58] R. Canetti, “Security and composition of multiparty cryptographic protocols,” J. Cryptol., vol. 13, no. 1, pp. 143–202, 2000.

- [59] J. Katz and Y. Lindell, “Handling expected polynomial-time strategies in simulation-based security proofs,” in Proc. of TCC, 2005.

- [60] M. Curran, X. Liang, H. Gupta, O. Pandey, and S. R. Das, “Procsa: Protecting privacy in crowdsourced spectrum allocation,” in Proc. of ESORICS, 2019.