Privacy-preserving Generative Framework Against Membership Inference Attacks

Abstract

Artificial intelligence and machine learning have been integrated into all aspects of our lives and the privacy of personal data has attracted more and more attention. Since the generation of the model needs to extract the effective information of the training data, the model has the risk of leaking the privacy of the training data. Membership inference attacks can measure the model leakage of source data to a certain degree. In this paper, we design a privacy-preserving generative framework against membership inference attacks, through the information extraction and data generation capabilities of the generative model variational autoencoder (VAE) to generate synthetic data that meets the needs of differential privacy. Instead of adding noise to the model output or tampering with the training process of the target model, we directly process the original data. We first map the source data to the latent space through the VAE model to get the latent code, then perform noise process satisfying metric privacy on the latent code, and finally use the VAE model to reconstruct the synthetic data. Our experimental evaluation demonstrates that the machine learning model trained with newly generated synthetic data can effectively resist membership inference attacks and still maintain high utility.

keywords:

Machine learning, membership inference attack, differential privacy, metric privacy, generative model, variational autoencoder.1 Introduction

With the widespread use of artificial intelligence and machine learning in our daily life, the need to ensure privacy protection in these systems has come to the fore, with an overarching goal that the output and decision do not leak specific secrets of true individual attributes. Nowadays, machine learning has been provided as a service (MLaaS) by many platforms111https://cloud.google.com/automl222 https://azure.microsoft.com/en-us/services/machine-learning333https://aws.amazon.com/cn/. Smart devices access these models through prediction Application Programming Interfaces (APIs) which return a prediction score vector and some companies are willing to deploy these models in smart devices locally. Meanwhile, several architectures have been proposed [7] [24] for distributed and federated learning to protect privacy of the clients’ training data. Each client trains a local model on his own data and periodically exchanges model parameters or partially constructed models with the other clients. However, a series of studies [31, 27, 29, 33, 26, 20, 32, 17, 42, 8] have indicated that the adversary could exploit the prediction output or parameters of machine learning models to perform membership inference attacks to get helpful information about the data used in the machine learning model. Membership inference attack [31], as one of the most important and concerning attack modes, is always used to measure the leakage of the machine learning model [27]. Given an input record and a target model, the membership inference attack tries to determine whether the record was used during the training of the target model or not.

Membership inference attacks cause severe privacy and security threats, especially when the training dataset contains sensitive attributes such as diagnosis and income. For example, if a hacker could determine a patient data is used to train a cancer prediction model which deployed in a wearable device, he was able to judge that the patient have cancer with great probability. As a consequence, this patient’s privacy would be disclosed.

In machine learning, the influence of a user on trained model should be erased at request as the learned model contains private information about its training data. These follow the spirit of the European Union’s General Data Protection Regulation (GDPR) [11]. Meanwhile, the main techniques to achieve the privacy preservation in machine learning are secure multi-party computing (MPC) and fully homomorphic encryption (FHE). However, the MPC scheme applied to protect the security of machine learning cannot effectively resist similar inference attacks because it doesn’t protect the final result, and the FHE scheme is not widely used in practical application environments because of its complex calculations. Therefore, how to design a practical and effective mechanism to resist the membership inference attack is still an unsolved challenge in machine learning.

Overfitting is the major cause for the feasibility of membership inference attack, as the trained model tends to memorize training inputs and perform better on them. As a result, the prediction scores or the parameters’ gradients in the target model are distinguishable for members and non-members of the training data. There are several defenses [31, 27, 29, 33, 26, 32, 36, 39] have been proposed and most of them try to reducing overfitting by various regularization technique, such as L2 regularization [31], min-max game based adversarial regularization [26, 39], and dropout [29]. In addition, Song et al.[32] showed that early stopping outperformed other overfitting-prevention counter-measures against membership inference attacks to classifiers. However, none of them directly reduce the distinguishability of members or non-members and make the big utility loss. Some other mechanisms [1, 2, 35, 25, 41, 18, 19] like Differentially Private - Stochastic Gradient Descent (DP-SGD) leverage differential privacy [9] when training the target model. In addition, some research like MemGuard[20] and Purification framework[38] leverage both the specific differential privacy mechanism and machine learning constraint method to the prediction output of target model. However, all of these work consider that leverage some mechanisms in the training stage or the output of the target model, but actually, data and model reuse is common in practice, and each time we utilize these data to train a new model or apply these machine learning models, we have to adjust the settings of the mechanism for the corresponding situation. Therefore, in this paper, we consider that some transformation mechanisms could apply to the input data to prevent membership inference attacks fundamentally.

In this paper, we propose a privacy-preserving modular generative framework to defend membership inference attacks by transforming the source data into synthetic data to train the target model. In the general situation where the same set of data is used for training different models, the privacy protection mechanism tampering with the training phase of the target model need change according to the different models and the model convergence faces challenges. Meanwhile, the noise mechanism applied to the output will also reduce the accuracy of the target model to a certain extent. While some data generation mechanisms[37, 4, 43, 34] satisfying differential privacy have been proposed, all of these works consider that leverage differential noise mechanism in the training stage as previously stated and the membership inference attacks are not included. The biggest problem of these work is that they should consider more about the model convergence and privacy budget costs in each iteration, which makes the model hard to converge and extend. On the other hand, their purpose is to construct generative models that satisfy differential privacy to generate lots of new data, while this paper focuses on protecting the target model from membership inference attacks by transforming the original data into similar data that satisfy differential privacy.

The goal of this study is to develop a data generation method for machine learning that provides a provable privacy against membership inference attacks, without compromising much utility.

In this paper, the membership inference attack, including black-box attack and white-box attack, is considered a measurement to reflect the privacy leakage of the trained model about its training set.

We achieve the generative framework by generating synthetic data through a well-trained Variational Autoencoder (VAE) generative model on source data. Unlike many other schemes[13, 5, 6, 23, 16] that set the goal of protection as a generative model, we treat VAE as a building component to protect the downstream task model, which is the difference between our work among them. We treat the VAE model as a specific data generation solution because the VAE model creates a continuous and complete latent space locally and the new never seen data could be generated. Compared with the traditional generation model that randomly generates hidden vectors in the hidden space, we generate new data through the hidden vector generated from the existing original data, which can maintain certain distribution characteristics of the data to be generated. And our mechanism can generate new data that is more in line with the target data distribution when facing the scene of partial data generation. Notice that we choose to generate new data rather than stay the latent vectors because of the portability of downstream training tasks.

In conclusion, we make the following contributions in the paper.

-

1.

We propose a privacy-preserving modular generative framework to generate robust representation of the source data for all type of machine learning and this framework can be further easily fine-tuned for many different downstream tasks such as classification as shown in our work.

-

2.

We adopt VAE as a specific extractor and reconstructor in our framework, because of the stochasticity of the generative model and the continuity of the VAE model, which is unlike the aforementioned others set the generative model as the protection target. Moreover, we adopt the metric privacy mechanism in the latent space of VAE rather than the data domain. Note that, we don’t apply the differential privacy mechanism in the model during the training process like many papers mentioned above.

-

3.

We evaluate our system framework against the state-of-art membership inference attack on three real-world datasets. Our results show that our project can protect data resources without much efficiency loss.

In the remainder of this article, we firstly describe preliminaries in Section 2. Then, we present the system model and threat model in Section 3. After that, Section 4 proposes our privacy-preserving generative framework and the related algorithms. Experiments and results are discussed in Section 5 and related works are shown in Section 6. Conclusions of this article with future work are presented in Section 7.

2 Preliminaries

In this section, we review three concepts used in our work, namely, generative model, differential privacy, and membership inference attack. We briefly introduce the variational autoencoder used as transformation function and the metric privacy which is applied in satisfying privacy guarantee.

2.1 Generative Model

Variational Autoencoder[22] (VAE): VAE is a widely used generative framework that consists of an encoder and a decoder, which are cascaded to reconstruct data with pre-defined similarity metrics. The encoder maps data into a latent space, and the decoder maps the encoded latent code back to the data space. The VAE objective function is composed of the reconstruction loss and the prior regularization over the latent code distribution. Formally,

| (1) |

where denote the latent code, denotes the input data, is the probabilistic encoder parameterized by which is used to approximate the true posterior, represents the probabilistic decoder parameterized by , and denotes the KL divergence. In practice, is always constrained to be Gaussian distribution and is sampled via the reparameterization trick, which results in a closed-form derivation of the second term.

2.2 Differential Privacy

Differential privacy [9] is one of the most popular paradigm used to deal with information leak in statistical databases. It provides a formal privacy guarantee, ensuring that sensitive information relative to individuals cannot be easily inferred by disclosing answers to aggregate queries.

A mechanism is -differential private if for any two adjacent databases , , and any property , the probability distributions , differ on at most by , which means .

2.2.1 Metric Privacy

While the standard differential privacy is a rigorous privacy notion, it is only applicable to publishing aggregate statistics. The problem studied in this paper wishes to generate data, rather than aggregate statistics about the data. Therefore, it requires a more general privacy notion for data that belongs to an arbitrary domain of secrets. The authors of [3] extended the principle of differential privacy and proposed a generalized notion, metric privacy. Essentially, it defines a distance metric between secrets and guarantees a level of indistinguishability proportional to the distance. Specifically, given an arbitrary set of secrets with a metric :

Definition 1 (Generalized Privacy [3]).

A mechanism from to is a (probabilistic) function : which satisfies -privacy, iff , or equivalently:

| (2) |

where is a set of query outcomes, is a -algebra over , and is the set of probability measures over .

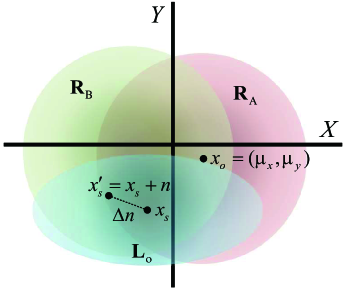

Metric privacy guarantees that the output of a mechanism should be roughly the same, i.e., bounded by the distance , between two inputs and . For an adversary who observes the output space, it is challenging to infer the exact input, thus the privacy of the input is protected. The metric can be extended by scaling a standard metric by a factor , i.e., . As a result, the guarantee of metric privacy also relies on : lower indicates higher indistinguishability, hence stronger privacy.

2.3 Membership Inference Attack

We formulate the membership inference attack as a binary classification task where the attacker aims to classify whether a sample has been used to train the victim machine learning model. Formally, we define

| (3) |

where the attack model output 1 if the attacker infers that the sample is included in the training set, and 0 otherwise. denotes the target model parameters while represents the general model publishing mechanism, i.e., type of access available to the attacker. For example, is an identity function for the white-box access case and can be the inference function for the black-box case.

3 System Model and Threat Model

In this section, we first describe the system model of our framework and then elaborate on the threat model defined in our framework.

3.1 System Model

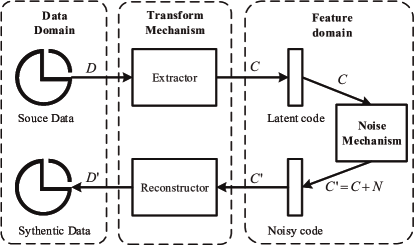

We depict in Figure1 the proposed system model for a privacy-preserving generative framework to train the target machine learning model against membership inference attacks. At the very outset, the data owner builds an extractor and a reconstructor through their data . Then he adds noise which satisfy differential privacy to the output of the extractor and gain synthetic data from reconstructor. At the same time, if the source data has label originally, the label of synthetic data should be created. In the end, synthetic data could be used to train new models.

More specifically, the data owner first trains a fundamental VAE model locally (see 4.2) using the data source . The VAE model maps the original data to the latent space vector through the encoder and then maps the latent space vector back to the original data through the decoder. Because the decoder can rebuild the data in original data through the latent space vector, it shows that the latent space vector contains enough original data information. Some noise (see 4.3) is added to the latent vector generated by model and the data owner leverages the decoder of to reconstruct data . If necessary, implement label migration through label-transfer mechanism (see 4.4). Then we could use this synthetic set to train an application model .

3.2 Threat Model

Attacker’s goal:

Like many studies on membership inference attacks, we consider an attacker’s goal is to determine whether an input sample was used as part of the training set . But in our solution, we focus on protecting the raw dataset rather than the synthetic dataset . Thus, we replace with .

In short, in this paper, we focus on the privacy of the source data .

Attacker’s capability:

Because there are three important entities in our solution, we need to classify attackers (shown in TABLE 1) based on the knowledge of framework. Firstly, we assume that they only have the ability to access the application model after data generated. With the knowledge of model structure and parameters, we can separate them into black-box attack and white-box attack. These two types of attacks are almost the same as the traditional membership inference attack mode: black-box attack and white-box attack except the protected data. In the other situation, the attacker can get the synthetic data. Inspired by the Chen [5], according to the knowledge of the extractor and reconstructor, the attack mode can be divided into a black-box generator attack, partial black-box generator attack, and white-box generator attack. These three types of attacks belong to the attack category facing data release and the generative model release. Note that, in this paper, we just consider the following black-box attack and white-box attack.

| Name | extractor | reconstructor | synthetic data | application model |

| black-box | ● | ● | ● | |

| white-box | ● | ● | ● | ✓ |

| black-box generator | ● | ● | ○ | ✓ |

| partial black-box generator | ◑ | ◑ | ○ | ✓ |

| white-box generator | ○ | ○ | ○ | ✓ |

-

1

:without access; : with access; : full control.

-

2

: white-box; : black-box.

Taxonomy of membership inference attacks discussed in this paper:

-

1.

black-box attack: This is the least knowledgeable setting to attackers where the attacker is only able to send query data samples to the target application model and obtain their output predicted by the target model. Besides, they have no information about the extractor and reconstructor in this framework.

-

2.

white-box attack: The attacker has access to the full application model, including its architecture and parameters, and any hyper-parameter that is needed to predict in the model. At the same time, he can also observe the intermediate computations at hidden layers. However, he also doesn’t know the extractor and reconstructor in this framework.

4 Privacy Preserving Generative Framework

This section details the implementation of our privacy preserving generative framework (PPGF) to enforce both privacy and validity. Our method focuses on preserving privacy and our idea is inspired by the mechanism in [12]. The source data will first be extracted to be feature vectors. Next, add noise into the feature vectors to achieve privacy guarantees and go through the reconstruction function resulting in the synthetic data.

In [12], Fan et al., utilize the SVD to decompose the image matrix and add noise to fuzzy the perceptual similarity of each image. But we want to generate new data that satisfies the distribution of the source data, so the reconstructor needs to have generalized properties. This means that giving suitable input to the reconstructor, we could generate new data that is never seen but satisfy the distribution of source data in some features.

In the following sections, we first discuss the overview of our framework and then elaborate on the three main components. Finally, the privacy analysis of PPGF is described formally.

4.1 Overview of the PPGF

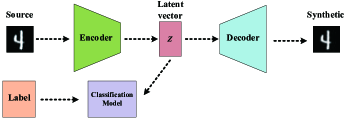

As shown in Figure2, our basic scheme can be divided into two main phases and an optional phase. The initial stage and the data generation stage are the main stages, and the label generation stage is an optional stage. In addition, all of these stages are executed locally.

-

1.

In the initial stage, our main task is to select or generate suitable extractor and reconstructor. In this solution, the VAE model is used as the extractor and reconstructor. As shown in Figure2LABEL:sub@overview1, by training a generator that can generate original data, map the data to the latent space to get specified by the encoder in the VAE model and the decoder maps the latent space data back to the data space. At this time, we treat encoder as the mapping relationship from the source data to the latent space and treat decoder as , which means is reversible.

-

2.

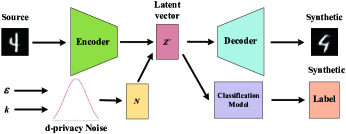

In the data generation stage, our main task is to use the existing mapping relationship and a given privacy budget to generate synthetic data that meets the differential privacy requirements and can resist membership inference attacks. In this scheme, we leverage a noise addition mechanism that satisfies . As shown in Figure2LABEL:sub@overview2, we first map the source data into the latent space through the mapping relationship , and use the noise mechanism to disturb the latent vector , and finally, the mapping relationship is used to map back to the source data space to obtain . For instance, as shown in Figure3, we assume that our latent space is a two-dimensional space. We firstly process an original data point through the VAE encoder to obtain the point in latent space and the corresponding variance , where the data point is mapped to get the red area that satisfies distribution in latent space. Then we randomly select a point in the area to get . After that, the noise mechanism obtains the corresponding blue area that satisfies the probability density function according to the area , and we randomly selects in the noise area . Then VAE decoder decodes into data . Note that, is the green area that another data is mapped in the latent space.

-

3.

In the label generation stage, we mainly provide labels for the newly generated data through the label-transfer mechanism if the original data contains labels. Through the existing mapping relationship , as shown in Fig. 2(a), we put the source data into the latent space: . Then we use the data in the latent space and the corresponding labels to train a classification model in the latent space. Next, as shown in Fig. 2(b), we use in latent space generated in the data generation stage and the label classification model , and we can get the label of the new data .

The components of our basic scheme are mainly included: (1) extractor and reconstructor, (2) noise addition mechanism, and (3) label-transfer mechanism. We will separately describe these three components in detail in the next sections.

4.2 Extractor and Reconstructor

We first describe the components, Extractor and Reconstructor, in detail of our scheme. In general, the extractor and reconstructor appear in pairs. After the extractor gains the features from the input, the paired reconstructor could recover the data from these features as much as possible. In this paper, we consider the type of input data as images. We adopt the VAE or VAE-structure generative model to extract data features and reconstruct new data because VAEs learn encoders that produce probability distributions over the latent space instead of points in the latent space and are easy to converge. In addition, when we sample from these probability distributions during many training iterations, we effectively show the decoder that the entire area around the distribution’s mean produces output that are similar to the input value. In short, we create a continuous and complete latent space locally and we could generate the new never seen data. However, we need to point out that the limitation of the VAE model. We need to treat the prior probability as the Gaussian form and Gaussian distribution is a bad representation of low-dimensional data. At the same time, if an adversary can access the VAE model, the VAE model is vulnerable under the membership inference attack (shown in [15]).

There are an encoder and a decoder in the VAE machine learning model, and we consider the latent layer’s output as the feature information. We treat the VAE model as a combination of extractor and reconstructor. Each time, even we train a VAE model using the same data set and initial hyper parameters, the parameters of the final generated model is different. So, we could treat the generated VAE model as a stochastic extractor and stochastic reconstructor.

We denote the extractor as , which maps an input data to a k-dimensional real vector . We denote VAE model with , which means we consider the VAE encoder as the extractor, and the dimension of the latent layer is . As for reconstructor, it is like an invertible function of extractor in this solution.

If the feature vector is created by the paired extractor, the output of the reconstructor is more similar to the input of the extractor. We denote the reconstructor as , which maps a k-dimensional real vector to a synthetic data. And we denote the VAE model with , which means we consider the VAE decoder as the reconstructor, and the dimension of the latent layer is k. Therefore, we put the mapping relationship as and put the inverse mapping as .

4.3 Noise Addition Mechanism

In this section, we describe the procedure of adding noise in detail. Given the input feature vector , the private mechanism performs random in space according to certain probability distributions which can provide differential privacy for . The following theorem states the privacy guarantee and differential privacy can be generalized to the case of an arbitrary set of secrets , equipped with a metric and privacy budget . Note that in the framework of -privacy, we can express the privacy of the mechanism itself, on its own domain, without the need to consider a query. In our scenario, using the Hamming metric of standard differential privacy, which aims at completely protecting the value of an individual, would be too strong. We are not interested in completely hiding the data characteristics, since some approximate information needs to be revealed to obtain the required service. Hence, using a privacy level that depends on the Euclidean distance between the latent vectors is a natural choice. Privacy proof of the Theorem 1 is described in the paper[3].

Theorem 1.

In a k-dimensional space, a mechanism that samples according to the following probability density function satisfies -privacy

| (4) |

where represents k-dimensional Euclidean distance444Euclidean distance can measure similarity of images in the eyes of humans and encoder can preserve some image perceptual similarity and k is without loss of generality.

| (5) |

The coefficient is derived in Appendix A.

Generating according to (4) can be achieved as follows in Algorithm 1. We first set at the origin of the hyper-spherical coordinate system. Then we sample the radial coordinate according to the marginal distribution and uniformly sampling a point on the unit -sphere. We multiply the results of them and add them with to gain the new feature vector . Note that, the marginal distribution satisfies the -distribution and this proof is shown in Appendix B.

Definition 2.

(-sensitivity): -sensitivity of function indicates the maximum distance between dataset and

| (6) |

For the VAE model, we set the input feature vector as the value obtained by sampling from according to the output of Encoder . Thus, the is set to be the dimension of . As for the , because each dimension in the hidden layer is approximated to a standard Gaussian distribution in the well-trained VAE model, we use the empirical rule [21] to estimate the max distance, namely sensitivity according to Definine 2 where . We set as where and is generated by Encoder .

4.4 Label-Transfer Mechanism

Sometimes, for semi-supervised or supervised learning models, some source data have labels at the beginning. However, through our generative framework, while the raw data is transformed into new data, the labels of the new data may not correspond one-to-one with the source data. Therefore, during semi-supervised or supervised learning, for labeled source data, we need to use label-transfer algorithms to label new data. In this paper, we adopted a model-driven label-transfer mechanism and the specific algorithm in Algorithm 2.

We first use the feature extractor in the framework to extract the feature vectors of the source data set with labels and then train a classification model based on the corresponding labels and these feature vectors . After that, put the previously recorded feature vector used in the newly generated data into this classification model for prediction, and then the label corresponding to the newly generated data can be obtained. Note that, this classification model is sensitive facing a white-box generator attack.

For the VAE model, we use our label-transfer mechanism after the VAE model is well trained. If we gain the well-trained VAE model, the label-transfer mechanism records the feature vector generated by the Encoder according to the source data and the corresponding tag . When the data generative mechanism is running, we record the noised feature vector and the corresponding generated data . The classification model leverages the feature vector and label obtained from the source data set for training, and the well-trained classification model predicts the label of the corresponding data according to the noised feature vector .

4.5 Privacy Analysis of PPGF

In this section, we provide a concise privacy analysis of our framework. In our framework, there are two main stages and we only need to make sure the output of the data generation stage satisfying -privacy privacy. We make the claims that the synthetic data satisfies -privacy privacy. Without loss of generality, we mark the encoder as the mapping relationship and the decoder as the mapping relationship . We process the raw data through the encoder to get and utilize Algorithm 1 to add noise to get new latent code , at this time satisfies -privacy according to the Theorem 1. Finally, the decoder of the VAE model is used to obtain the synthetic data .

We first give the Theorem 2 to show that applying differential privacy mechanism to the data in the latent layer can also make the decoded data meet the same privacy requirements.

Theorem 2.

(Post-Processing [10]): Let be an -differential private function and let be an arbitrary mapping where is any arbitrary space. Then is -differential private.

The above properties based on -differential privacy can also be extended to -privacy, because -privacy is an extension of -differential privacy. The following Corollary can be obtained.

Corollary 1.

(Extended Post-Processing): Let be an -privacy probability density function and let be an arbitrary mapping where is any arbitrary space. Then is also -privacy.

According to Corollary 1, we know that applying -privacy mechanism to latent vector can also make the decoded data meet the same privacy requirements and we get Theorem 3.

Theorem 3.

The synthetic data satisfies -privacy.

5 Experiments

Based on the existing membership inference attacks, we present the most comprehensive evaluation to the proposed privacy-preserving generative framework. Our experiments include verification of the validity and utility of the synthetic data according to the accuracy of the training task, and the analysis of the defense of our framework according to the some metric of the membership inference attack. Moreover, we used three common datasets and utilized two efficient membership inference attack methods.

There are three baselines in our experiments: (1) BS (Source data), (2) N-PPGF (Synthetic data with non-private), and (3) DP-SGD. BS represents training each model based on source data set. N-PPGF where the noise addition mechanism is removed from PPGF is designed to show the upper bound on expression ability of the VAE model used in the PPGF. DP-SGD is designed according to the work of [1] [25] which is the generic privacy protection method and widely used. We should note that although we usually treat as the measure of privacy as we called privacy budget in this paper, we could calculate from in DP-SGD. Therefore, we use to measure the privacy of DP-SGD. The is privacy relaxation parameter[25] for DP-SGD and we set for DP-SGD all the time in this paper.

We implemented our framework in Pytorch. We trained all of the models on a PC equipped with one Titan V GPU with 12 GB of memory. We used Scipy and Numpy for samping from -distribution. The parameters were set to default values according to the databases, i.e., and for MNIST. We used Opacus555https://opacus.ai/ to implement DP-SGD method.

5.1 Setup

This part is mainly the setup phase of the experiment, including the databases, models, and measurement for various tests.

5.1.1 Databases

As stated in TABLE 2, There are three databases MINST666http://yann.lecun.com/exdb/mnist/, Fashion-MNIST777https://github.com/zalandoresearch/fashion-mnist and CelebA888http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html used in our experiments and all of them are widely used in Image learning field.

| Name | Category | Pixel Size | Set Size |

| MNIST | Handwritten digits | 2828 | 70,000 |

| Fashion-MNIST | Zalando’s article | 2828 | 70,000 |

| CelebA | Face attributes | 178 218 | 202,599 |

5.1.2 VAE models

We choose two different VAE generative model for these three datasets. The details of the architecture of the VAE model for MNIST and FashionMNIST is presented in TABLE C1 and the details of the architecture of the VAE model for CelebA is shown in TABLE C2 in Appendix C. Note that, we crop the image size in CelebA database from 178 218 to 128 128 when training the VAE model. And we resize the generated image from 128 128 to 178 218 when training the target model.

5.1.3 Target models

We choose target models with varying capacity, including a small convolution model as CNN used for the MNIST, deep ResNet[14], and SlimCNN[30] used for CelebA. The small convolution model contains 2 convolution layers with kernels 32 and 64, a global pooling layer and two fully connected layer of size 9216 and 128. The small model is trained for 20 epochs with initial learning rate 0.2. The small convolution model CNN, ResNet-18, and ResNet-34 are for MNIST image classification. The FashionMNIST image classification models are ResNet-18, ResNet-34, and ResNet-50. The SlimCNN image classification is specially for CelebA. The detailed configurations and training recipes for deep ResNets and SlimCNN can be found in the original paper. Note that we only use these models for performance testing due to the limitations of the GPU memory size. For security testing of membership inference attacks, we uniformly use the ResNet-18 model, and in particular, we use ResNet-18 on the CelebA dataset to train for gender classification.

5.1.4 Metrics

There are two basic evaluation indicators to measure the validity of the synthetic data and the effectiveness of defense mechanism.

-

1.

Prediction accuracy: In order to reflect the validity of the newly generated image data, we separately use these generated data and source data to train the same training task, and compare the prediction accuracy of the different naturally trained model. Besides, the aforementioned N-PPGF baseline and DP-SGD baseline are used in the same experimental conditions to show that the newly generated data can retain somewhat the domain characteristics of the original dataset.

-

2.

Attack accuracy: The output of the membership inference attack is divided into two categories: member and non-member. The attack accuracy is used to indicate the source of unknown input data for successful prediction. In order to show the effectiveness of defense, we separately use these generated data and source data to train the same target training task for membership inference attacks. Besides, we calculate the attack prediction accuracy, including precision, recall, fscore. At the same time, to show the classification ability of the attack classifier more accurately, we also use the Area Under Curve (AUC) to accurately express the ability of membership inference attack.

5.2 Validity of PPGF

We generate new data through our framework on the three datasets of MNIST, Fashion-MNIST and CelebA respectively. And through the following experiments to illustrate the validity of the generated data for training tasks.

| MNIST | FashionMNIST | CelebA | |||||

| Architecture | CNN | ResNet18 | ResNet34 | ResNet18 | ResNet34 | ResNet50 | SlimCNN[30] |

| TA (PPGF ) | 0.9807 | 0.9938 | 0.9919 | 0.8905 | 0.8685 | 0.8909 | 0.8531 |

| VA (PPGF ) | 0.9741 | 0.9828 | 0.9788 | 0.7823 | 0.7820 | 0.7736 | 0.8016 |

| TA (N-PPGF) | 0.9818 | 0.9838 | 0.9914 | 0.8932 | 0.8897 | 0.8913 | 0.8839 |

| VA (N-PPGF) | 0.9835 | 0.9818 | 0.9857 | 0.8138 | 0.8308 | 0.7737 | 0.8011 |

| TA (BS) | 0.9973 | 0.9955 | 0.9969 | 0.9798 | 0.9757 | 0.9709 | 0.9189 |

| VA (BS) | 0.9919 | 0.9843 | 0.9918 | 0.9115 | 0.9152 | 0.9023 | 0.9117 |

| TA (DP-SGD ) | 0.8042 | 0.9274 | 0.9413 | 0.7766 | 0.7955 | 0.7686 | 0.8067 |

| VA (DP-SGD ) | 0.9291 | 0.9365 | 0.9451 | 0.7716 | 0.7850 | 0.7639 | 0.8092 |

-

1

TA: Train Accuracy; VA: Validity Accuracy.

5.2.1 Validity of the Generated Data

In order to measure the validity of the generated data, we separately train the same classification training task using source data and the newly generated data. We firstly separately use total training data of these three databases to train the VAE generative model and generate corresponding synthetic data for all training data of these databases. The original dataset are tested on these models under three baselines, and the results are shown in TABLE 3. It can be seen that through our framework, under the same classification task and the same model, the newly generated dataset with has little decrease in the accuracy of prediction compared with DP-SGD mechanism (, ).

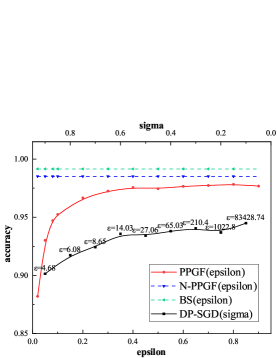

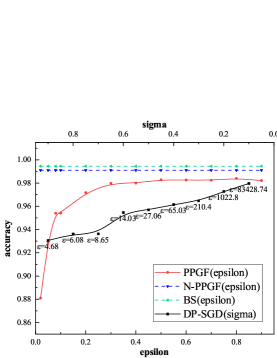

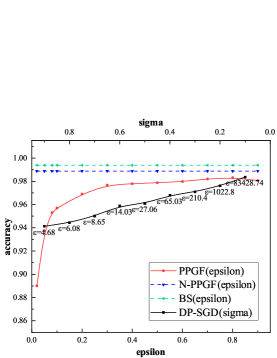

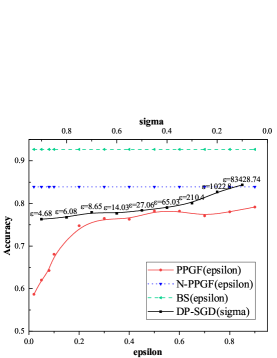

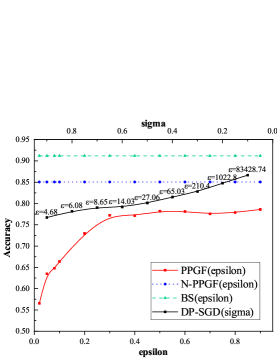

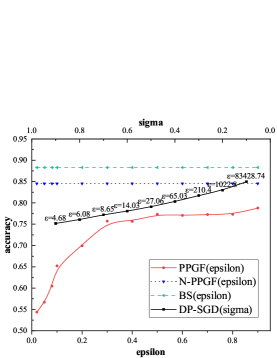

We also present the privacy utility tradeoff by varying the parameter in the following range [0.02, 0.05, 0.08, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]. Recall that can be used to tune the level of privacy as in noise addition mechanism. Lower indicates higher indistinguishability, stronger privacy and vice versa. As for DP-SGD, we set and in the following range [1.1, 1.2 ,…, 11, 12, …, 64]. The parameter is vary from 0.1 to 0.9. We plot the prediction accuracy of different model for MNIST and FashionMNIST in Figure4 and Figure5. The BS baseline, N-PPGF baseline and DP-SGD baseline are included for reference. As can be seen in Figure4, when increasing , from more private to less private, the accuracy of model starts to catch up the BS baseline and N-PPGF baseline. In Figure4LABEL:sub@M1 even the and the , there is a significant gap between PPGF and DP-SGD. Figure5 shows similar trends for the FashionMNIST database. But, we notice that even when , test accuracy yields around 0.8 for all type of model, demonstrating less utility. The ability of VAE generative model is the most significant reason for the performance gap between our method and the source baseline, because there is a certain gap between N-PPGF baseline and the BS baseline. In addition, the performance of DP-SGD is better than PPGF while the of DP-SGD is much larger than the PPGF’s . However, lower indicates higher indistinguishability, stronger privacy and vice versa. In TABLE 4, when is close, there is a huge gap between DP-SGD and PPGF.

| Architecture | PPGF | DP-SGD | |||

| ResNet18 | 0.7 | 0.7709 | 3.9 | 0.7 | 0.6542 |

| 0.8 | 0.7805 | 3.4 | 0.81 | 0.6827 | |

| 0.9 | 0.7917 | 3 | 0.92 | 0.6849 | |

| ResNet34 | 0.7 | 0.7756 | 3.9 | 0.7 | 0.6118 |

| 0.8 | 0.7788 | 3.4 | 0.81 | 0.6809 | |

| 0.9 | 0.7860 | 3 | 0.92 | 0.6860 | |

| ResNet50 | 0.7 | 0.7727 | 3.9 | 0.7 | 0.6045 |

| 0.8 | 0.7733 | 3.4 | 0.81 | 0.6411 | |

| 0.9 | 0.7881 | 3 | 0.92 | 0.6615 |

TABLE 5 shows the performance of the synthetic data generated by PPGF with , which is used in the SlimCNN[30] model, as compared to BS baseline, N-PPGF baseline and the DP-SGD baseline in terms of both accuracy for each of the first 12 attributes. We notice that in some attributes like ”Blurry”, the accuracy of model utilizing synthetic data is almost the same as the accuracy of model using source data. However, in some attributes like ”Attractive”, there is a huge performance gap between our synthetic data and source data. Even this gap exists between N-PPGF baseline and BS baseline, because the ability of our VAE generative model is limited and some features of raw data can not be reconstructed. In addition, in ”Attractive” feature, we also notice that there is also a huge gap between DP-SGD mechanism and source data and we think some feature like ”Attractive” is hard to be extracted. Meanwhile, the performance of DP-SGD with () is similar with the PPGF (). Lower indicates higher indistinguishability, stronger privacy and vice versa. When performance close, PPGF has stronger privacy.

| Features | 5_Clock_Shadow | Arched_Eyebrows | Attractive |

| PPGF () | 0.8819 | 0.7408 | 0.5114 |

| DP-SGD () | 0.8819 | 0.7415 | 0.5245 |

| N-PPGF | 0.8812 | 0.7416 | 0.5303 |

| BS | 0.9296 | 0.8296 | 0.7841 |

| Features | Bags_Under_Eyes | Bald | Bangs |

| PPGF () | 0.7923 | 0.9793 | 0.8416 |

| DP-SGD () | 0.7925 | 0.9793 | 0.8532 |

| N-PPGF | 0.7914 | 0.9793 | 0.8514 |

| BS | 0.8281 | 0.9876 | 0.9474 |

| Features | Big_Lips | Big_Nose | Black_Hair |

| PPGF () | 0.8454 | 0.7509 | 0.7534 |

| DP-SGD () | 0.8467 | 0.7511 | 0.7914 |

| N-PPGF | 0.8363 | 0.7144 | 0.7717 |

| BS | 0.8487 | 0.8153 | 0.8988 |

| Features | Blond_Hair | Blurry | Brown_Hair |

| PPGF () | 0.8443 | 0.9526 | 0.7586 |

| DP-SGD () | 0.8461 | 0.9526 | 0.7587 |

| N-PPGF | 0.8462 | 0.9527 | 0.7587 |

| BS | 0.9449 | 0.9595 | 0.8403 |

5.3 Security of PPGF

We use two membership inference attack methods, namely black-box attack and white-box attack, to attack the target model. For our PPGF, we leverage the generated new data on the three databases to train the target classification model, and utilize the two kinds of membership inference attacks to test. Note that, we choose ResNet-18 model for MNIST, FashionMNIST, CelebA as for the limitation of GPU memory.

5.3.1 Black-box Attacks

We use the black-box attack method in the paper[31, 29]. We just train one shadow models locally as suggested in [29] to learn a two-class model based on the difference between the model’s output for member data and non-member data. Then we use this two-class model to determine whether the data is used for training the target model. The evaluation indicators include the accuracy, precision, recall, fscore, and AUC value of the attack. Specifically, in this type of attack, there is only 1 shadow model with the same training epochs comparing with the target model and we set a Multi-layer Perceptron(MLP) attack model as paper[29] suggested as the two-class attack model. The details of the architecture of the MLP attack model is shown in TABLE D1 in Appendix D. As for the training data, when we set the number of training data as , we select the first data from datasets to train the target model and randomly select data from raw datasets to train the shadow model. Note that there is no intersection between the training data of the target model and the shadow model. For test data, when we set the number of test data as , we select randomly select data from raw datasets to test the target model and randomly choose data from total raw datasets to test the shadow model. All training data and test data of shadow model with corresponding output are used to train our two-class attack model (MLP). In the end, we use data from total training data of target model and data from total test data of target model to test this black-box attack. The attack results are shown in the TABLE 6. Our framework with reduces the membership inference accuracy to the target model to be close to and the AUC of the attack model to be close to , which means our target model could effectively defend this black-box attack.

| Black-Box Attack | |||||||

| BS(Source Data) | |||||||

| Database | Train size | Test size | Accuracy | Precision | Recall | Fscore | AUC |

| MNIST | 15000 | 15000 | 0.5149 | 0.5149 | 0.5149 | 0.5149 | 0.5265 |

| FashionMNIST | 15000 | 15000 | 0.6074 | 0.6347 | 0.6074 | 0.5864 | 0.6580 |

| CelebA | 15000 | 15000 | 0.5969 | 0.7718 | 0.5969 | 0.5197 | 0.5896 |

| PPGF() | |||||||

| Database | Train size | Test size | Accuracy | Precision | Recall | Fscore | AUC |

| MNIST | 15000 | 15000 | 0.4966 | 0.4965 | 0.4966 | 0.4934 | 0.4971 |

| FashionMNIST | 15000 | 15000 | 0.5025 | 0.5025 | 0.5025 | 0.5020 | 0.5045 |

| CelebA | 15000 | 15000 | 0.4967 | 0.4951 | 0.4967 | 0.4522 | 0.4971 |

| White-Box Attack | |||||||

| BS(Source Data) | |||||||

| Database | Train size | Test size | Accuracy | Precision | Recall | Fscore | AUC |

| MNIST | 2000 | 2000 | 0.5415 | 0.5544 | 0.5415 | 0.5126 | 0.5699 |

| FashionMNIST | 2000 | 2000 | 0.5815 | 0.7295 | 0.5815 | 0.5011 | 0.6072 |

| CelebA | 2000 | 2000 | 0.5425 | 0.6197 | 0.5425 | 0.4546 | 0.5639 |

| PPGF() | |||||||

| Database | Train size | Test size | Accuracy | Precision | Recall | Fscore | AUC |

| MNIST | 2000 | 2000 | 0.5015 | 0.5319 | 0.5015 | 0.3452 | 0.4986 |

| FashionMNIST | 2000 | 2000 | 0.5085 | 0.5115 | 0.5085 | 0.4745 | 0.5176 |

| CelebA | 2000 | 2000 | 0.5020 | 0.5459 | 0.5020 | 0.3455 | 0.4944 |

5.3.2 White-box Attacks

We utilize the white-box attack method from the paper [27]. As is shown in this paper, the white-box attack is more effective than the black-box one and considered to be the most effective attack. The adversary controls the target model and could test target models locally, learning a two-class model based on the difference between the model’s layer output, loss values, label values, and layer gradients for member data and non-member data. In this part, we use this two-class model in [27] to determine whether the data is used for training the target model. The evaluation indicators include the accuracy, precision, recall, fscore and AUC value of the attack. Specifically, we only select the gradients of last layer, the model’s output, loss values and label values to train this two-class model. We use 60000 training data to train target model. And we set training size as and test size as . We randomly select data from all raw training data and data from all raw test data to test this white-box attack. As shown in the TABLE 6, our framework with reduces the inference accuracy to the target model to be close to and the AUC of the attack model to be close to , which means our target model could prevent this white-box attack.

6 Related Work

6.1 Membership Inference Attack

The first paper about the membership inference attack was published by Shokri et al. [31] under the assumption of the black-box model. The shadow model was used to train a machine learning model similar to the target model, and the shadow model was used to learn differences of confidence vector about members and non-members. Later, Nasr et al.[27] first proposed the membership inference attack under the white-box model and federated learning model. They classified between members and non-members by distinguishing model data such as the gradient changes of each layer or the output of each layer. Song et al.[33] mainly discussed the practical threat of machine learning in the article. The defensive measures against adversarial samples were to generate a robust machine learning model. But, this robust machine learning model was more sensitive to membership inference attacks. The article [32] set different thresholds, which was used to determine whether the prediction confidence belongs to the member, for a different class label to improve the accuracy of the attack. At the same time, the redefined prediction entropy was used to strengthen the membership inference attack. The paper [40] designed new membership inference algorithms against machine learning models and achieved significantly higher inference accuracy when the augmented data was also used in training but the augmented mechanism was known to the adversary. However, the malicious attacker could only utilize the original image or randomly chosen transformations which yielded a significantly lower inference success rate. There are also some membership inference attacks where the adversary’s advantages are limited. Hui et al.[17] proposed an membership inference attack when the adversary couldn’t collect enough sample with output probabilities and labels as either members or non-members and Choquette et al.[8] introduced label-only membership attacks with the adversary only got access to models’ predicted labels. In addition, there was an new membership inference attack [42] to recommander systems where the adversary could only observe the ordered recommended items.

6.2 Protection Mechanism Against Membership Inference Attack

Membership inference attack defense mechanisms have also developed for many years. As mentioned in the article[29], they used the dropout mechanism and model stacking mechanism to avoid over-fitting of the target model and prevent membership inference attacks. Song et al. [26] introduced adversarial training in the training process, which was abstracted into a maximum and minimum problem. Minimizes the classification loss and maximizes the profit of the inference attack to find the best defensive model against the attack model. Jia et al.[20] studied to fuzzy the confidence score vector to evade the membership classification of the attack model. Wang et al.[36] reduced model storage and computational operation to defend membership inference attacks. Yin et al. [39] set definitions for utility and privacy of target classifier, and formulated the design goal of the defense method as an optimization problem and solved it.

Differential Privacy: For the schemes that apply differential privacy technology to protect privacy, some schemes[2, 35, 18, 19, 28] added noise to the objective function to protect privacy, while others [1, 41, 25] called DP-SGD added noise to the gradient during training. Jayaraman et al. [19] added noise in the objective function, gradient vectors, and output vectors to analyze trade-offs between privacy and utility. Nasr et al. instantiated the hypothetical adversary to analyze the differential private machine learning especially DP-SGD. There are also some schemes[13, 5, 6, 23, 16] that set the goal of protection as a generative model. Hayeset al. [13] first applied the membership inference attack on the generative model and utilized differential privacy during training stage to protect the generative model. Chen et al. [5] Systematically analyzed the membership inference attack on the generative model and made a detailed classification. However, we treat generative model VAE as a building component to protect the downstream task model in this work.

The existing defense schemes basically add noise in the model training process or directly confuse the output results of the model. It is rarely considered to protect the privacy of the source data by processing the raw data.

6.3 Data Generation Mechanism

Some data generation mechanisms[37, 34, 43, 4] satisfying differential privacy have been proposed, all of these works considered that leverage differential noise mechanism in the training process as previously stated and the membership inference attacks were not included. Xie et al. [37] added noise on the gradient of the Wasserstein distance with respect to the training data during the training procedure. The DP-CGAN [34] made the training process private by injecting random Gaussian noise into the optimization process of the discriminator network. DP-GAN [43] was built upon the improved WGAN framework and enforced DP by injecting random noise in updating the discriminator. Chen et al. [4] proposed gradient-sanitized Wasserstein GAN replacing the typical training procedure with the DP-SGD. However, we consider that generating synthetic data to train the target model for source data protection and don’t affect the training process of the generative model. On the one hand, we don’t consider applying the differential privacy mechanism during the training stage because of the model convergence, privacy budget costs in each iteration, and schemes extension. On the other hand, their purpose is to construct generative models satisfying differential privacy to generate lots of new data, while this paper focuses on protecting the target model from membership inference attacks by transforming the original data into similar data that satisfy differential privacy.

7 Conclusion and Future Work

In this paper, we have proposed a privacy-preserving system framework for preventing source data leakage to generate synthetic data satisfying differential privacy without much loss. This is achieved via a well-trained VAE generative model and an extended differential privacy mechanism. We first gain a VAE model through our source data. Next, we can use a VAE encoder to gain data feature from the data source and add some noise which satisfies extended differential privacy. Then we put the result into the VAE decoder to get new data. Finally, we can use synthetic data to train the target machine learning model. Therefore, our proposed framework can apply in any downstream machine learning environment without any change to the target model. Extensive experiment results also show the effectiveness and generalization ability of our framework. Recall that the ability of the generative model to extract data feature limits the validity of the generated data. Thus, an interesting future work is providing an application programming interface to the more complex and effective generative model in our framework and any latent generative model can apply in this framework. Another direction is to discover the fine-grained noise mechanism which can make the synthetic data more realistic in a specific feature.

Appendix A Coefficient of Probability Density Function

The derivation of derivates the coefficient from the pdf is as follows. We convert the Cartesian coordiantes to the hyper-spherical coordinate system and set the at the origin of the hyper-spherical coordinate system without loss generality.

| (A.1) |

From the (A) and , we gain:

| (A.2) |

Appendix B Proof of the Marginal Distribution

We could gain the marginal distribution function of the pdf as follows.

| (A.3) |

The pdf of -distribution is as follows:

| (A.4) |

we could replace with k and replace with . Obliviously, the marginal distribution satisfies -distribution.

Appendix C Architecture of Variational Autoencoder Models

| Name | Layer Type | Details |

| Encoder Component | Fully Connected Layer | Sizes: 768 400 |

| Activation: ReLU | ||

| Fully Connected Layer | Sizes: 400 20 | |

| Decoder Component | Fully Connected Layer | Sizes: 20 400 |

| Activation: ReLU | ||

| Fully Connected Layer | Sizes: 400 768 |

| Encoder Component | |

| Layer Type | Details |

| 5 Convolutional Layers | Kernels: 128, 256, 512, 1024, 2048 |

| Kernel size | |

| Stride: 2 | |

| Batch Normalization | |

| Activation: ReLU | |

| Fully Connected Layer | Size: 32768 512 |

| Decoder Component | |

| Layer Type | Details |

| Fully Connected Layer | Size: 512 32768 |

| Activation: LeakyReLU | |

| 6 Convolutional Layers | Kernels: 2048, 1024, 512, 256, 128, 3 |

| Kernel size | |

| Stride: 2 | |

| Batch Normalization | |

| Activation: LeakyReLU | |

Appendix D Architecture of Black-box Attack Model

| Name | Layer Type | Details |

| MLP Component | Fully Connected Layer | Sizes: len(output) 128 |

| Activation: ReLU | ||

| Fully Connected Layer | Sizes: 128 2 |

References

- [1] Martin Abadi, Andy Chu, Ian Goodfellow, H Brendan McMahan, Ilya Mironov, Kunal Talwar, and Li Zhang. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, pages 308–318, 2016.

- [2] Raef Bassily, Adam Smith, and Abhradeep Thakurta. Private empirical risk minimization: Efficient algorithms and tight error bounds. In 2014 IEEE 55th Annual Symposium on Foundations of Computer Science, pages 464–473. IEEE, 2014.

- [3] Konstantinos Chatzikokolakis, Miguel E Andrés, Nicolás Emilio Bordenabe, and Catuscia Palamidessi. Broadening the scope of differential privacy using metrics. In International Symposium on Privacy Enhancing Technologies Symposium, pages 82–102. Springer, 2013.

- [4] Dingfan Chen, Tribhuvanesh Orekondy, and Mario Fritz. Gs-wgan: A gradient-sanitized approach for learning differentially private generators. arXiv preprint arXiv:2006.08265, 2020.

- [5] Dingfan Chen, Ning Yu, Yang Zhang, and Mario Fritz. Gan-leaks: A taxonomy of membership inference attacks against gans. arXiv preprint arXiv:1909.03935, 2019.

- [6] Junjie Chen, Wendy Hui Wang, Hongchang Gao, and Xinghua Shi. Par-gan: Improving the generalization of generative adversarial networks against membership inference attacks. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pages 127–137, 2021.

- [7] Trishul Chilimbi, Yutaka Suzue, Johnson Apacible, and Karthik Kalyanaraman. Project adam: Building an efficient and scalable deep learning training system. In 11th USENIX Symposium on Operating Systems Design and Implementation (OSDI 14), pages 571–582, 2014.

- [8] Christopher A Choquette-Choo, Florian Tramer, Nicholas Carlini, and Nicolas Papernot. Label-only membership inference attacks. In International Conference on Machine Learning, pages 1964–1974. PMLR, 2021.

- [9] Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam Smith. Calibrating noise to sensitivity in private data analysis. In Theory of cryptography conference, pages 265–284. Springer, 2006.

- [10] Cynthia Dwork, Aaron Roth, et al. The algorithmic foundations of differential privacy. Foundations and Trends in Theoretical Computer Science, 9(3-4):211–407, 2014.

- [11] European union. European union’s general data protection regulation. https://gdpr-info.eu/, 2018.

- [12] Liyue Fan. Practical image obfuscation with provable privacy. In 2019 IEEE International Conference on Multimedia and Expo (ICME), pages 784–789. IEEE, 2019.

- [13] Jamie Hayes, Luca Melis, George Danezis, and Emiliano De Cristofaro. Logan: Membership inference attacks against generative models. In Proceedings on Privacy Enhancing Technologies (PoPETs), volume 2019, pages 133–152. De Gruyter, 2019.

- [14] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [15] Benjamin Hilprecht, Martin Härterich, and Daniel Bernau. Monte carlo and reconstruction membership inference attacks against generative models. Proceedings on Privacy Enhancing Technologies, 2019(4):232–249, 2019.

- [16] Hongsheng Hu, Zoran Salcic, Gillian Dobbie, and Xuyun Zhang. Membership inference attacks on machine learning: A survey. arXiv preprint arXiv:2103.07853, 2021.

- [17] Bo Hui, Yuchen Yang, Haolin Yuan, Philippe Burlina, Neil Zhenqiang Gong, and Yinzhi Cao. Practical blind membership inference attack via differential comparisons. arXiv preprint arXiv:2101.01341, 2021.

- [18] Roger Iyengar, Joseph P Near, Dawn Song, Om Thakkar, Abhradeep Thakurta, and Lun Wang. Towards practical differentially private convex optimization. In 2019 IEEE Symposium on Security and Privacy (SP), pages 299–316. IEEE, 2019.

- [19] Bargav Jayaraman and David Evans. Evaluating differentially private machine learning in practice. In 28th USENIX Security Symposium (USENIX Security 19), pages 1895–1912, 2019.

- [20] Jinyuan Jia, Ahmed Salem, Michael Backes, Yang Zhang, and Neil Zhenqiang Gong. Memguard: Defending against black-box membership inference attacks via adversarial examples. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, pages 259–274, 2019.

- [21] Leonard J Kazmier. Schaum’s outline of theory and problems of business statistics. McGraw-Hill New York, 1976.

- [22] Diederik P Kingma and Max Welling. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114, 2013.

- [23] Kin Sum Liu, Chaowei Xiao, Bo Li, and Jie Gao. Performing co-membership attacks against deep generative models. In 2019 IEEE International Conference on Data Mining (ICDM), pages 459–467. IEEE, 2019.

- [24] Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. Communication-efficient learning of deep networks from decentralized data. In Artificial Intelligence and Statistics, pages 1273–1282. PMLR, 2017.

- [25] H Brendan McMahan, Galen Andrew, Ulfar Erlingsson, Steve Chien, Ilya Mironov, Nicolas Papernot, and Peter Kairouz. A general approach to adding differential privacy to iterative training procedures. arXiv preprint arXiv:1812.06210, 2018.

- [26] Milad Nasr, Reza Shokri, and Amir Houmansadr. Machine learning with membership privacy using adversarial regularization. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, pages 634–646, 2018.

- [27] Milad Nasr, Reza Shokri, and Amir Houmansadr. Comprehensive privacy analysis of deep learning: Passive and active white-box inference attacks against centralized and federated learning. In 2019 IEEE Symposium on Security and Privacy (SP), pages 739–753. IEEE, 2019.

- [28] Milad Nasr, Shuang Songi, Abhradeep Thakurta, Nicolas Papemoti, and Nicholas Carlin. Adversary instantiation: Lower bounds for differentially private machine learning. In 2021 IEEE Symposium on Security and Privacy (SP), pages 866–882. IEEE, 2021.

- [29] Ahmed Salem, Yang Zhang, Mathias Humbert, Pascal Berrang, Mario Fritz, and Michael Backes. Ml-leaks: Model and data independent membership inference attacks and defenses on machine learning models. arXiv preprint arXiv:1806.01246, 2018.

- [30] Ankit Sharma and Hassan Foroosh. Slim-cnn: A light-weight cnn for face attribute prediction. arXiv preprint arXiv:1907.02157, 2019.

- [31] Reza Shokri, Marco Stronati, Congzheng Song, and Vitaly Shmatikov. Membership inference attacks against machine learning models. In 2017 IEEE Symposium on Security and Privacy (SP), pages 3–18. IEEE, 2017.

- [32] Liwei Song and Prateek Mittal. Systematic evaluation of privacy risks of machine learning models. In 30th USENIX Security Symposium (USENIX Security 21), 2021.

- [33] Liwei Song, Reza Shokri, and Prateek Mittal. Privacy risks of securing machine learning models against adversarial examples. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, pages 241–257, 2019.

- [34] Reihaneh Torkzadehmahani, Peter Kairouz, and Benedict Paten. Dp-cgan: Differentially private synthetic data and label generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pages 0–0, 2019.

- [35] Di Wang, Minwei Ye, and Jinhui Xu. Differentially private empirical risk minimization revisited: Faster and more general. In Advances in Neural Information Processing Systems, pages 2722–2731, 2017.

- [36] Yijue Wang, Chenghong Wang, Zigeng Wang, Shanglin Zhou, Hang Liu, Jinbo Bi, Caiwen Ding, and Sanguthevar Rajasekaran. Against membership inference attack: Pruning is all you need. arXiv preprint arXiv:2008.13578, 2020.

- [37] Liyang Xie, Kaixiang Lin, Shu Wang, Fei Wang, and Jiayu Zhou. Differentially private generative adversarial network. arXiv preprint arXiv:1802.06739, 2018.

- [38] Ziqi Yang, Bin Shao, Bohan Xuan, Ee-Chien Chang, and Fan Zhang. Defending model inversion and membership inference attacks via prediction purification. arXiv preprint arXiv:2005.03915, 2020.

- [39] Yu Yin, Ke Chen, Lidan Shou, and Gang Chen. Defending privacy against more knowledgeable membership inference attackers. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pages 2026–2036, 2021.

- [40] Da Yu, Huishuai Zhang, Wei Chen, Jian Yin, and Tie-Yan Liu. Membership inference with privately augmented data endorses the benign while suppresses the adversary. arXiv preprint arXiv:2007.10567, 2020.

- [41] Lei Yu, Ling Liu, Calton Pu, Mehmet Emre Gursoy, and Stacey Truex. Differentially private model publishing for deep learning. In 2019 IEEE Symposium on Security and Privacy (SP), pages 332–349. IEEE, 2019.

- [42] Minxing Zhang, Zhaochun Ren, Zihan Wang, Pengjie Ren, Zhumin Chen, Pengfei Hu, and Yang Zhang. Membership inference attacks against recommender systems. arXiv preprint arXiv:2109.08045, 2021.

- [43] Xinyang Zhang, Shouling Ji, and Ting Wang. Differentially private releasing via deep generative model (technical report). arXiv preprint arXiv:1801.01594, 2018.