Probabilistic 3d regression with projected huber distribution

Abstract

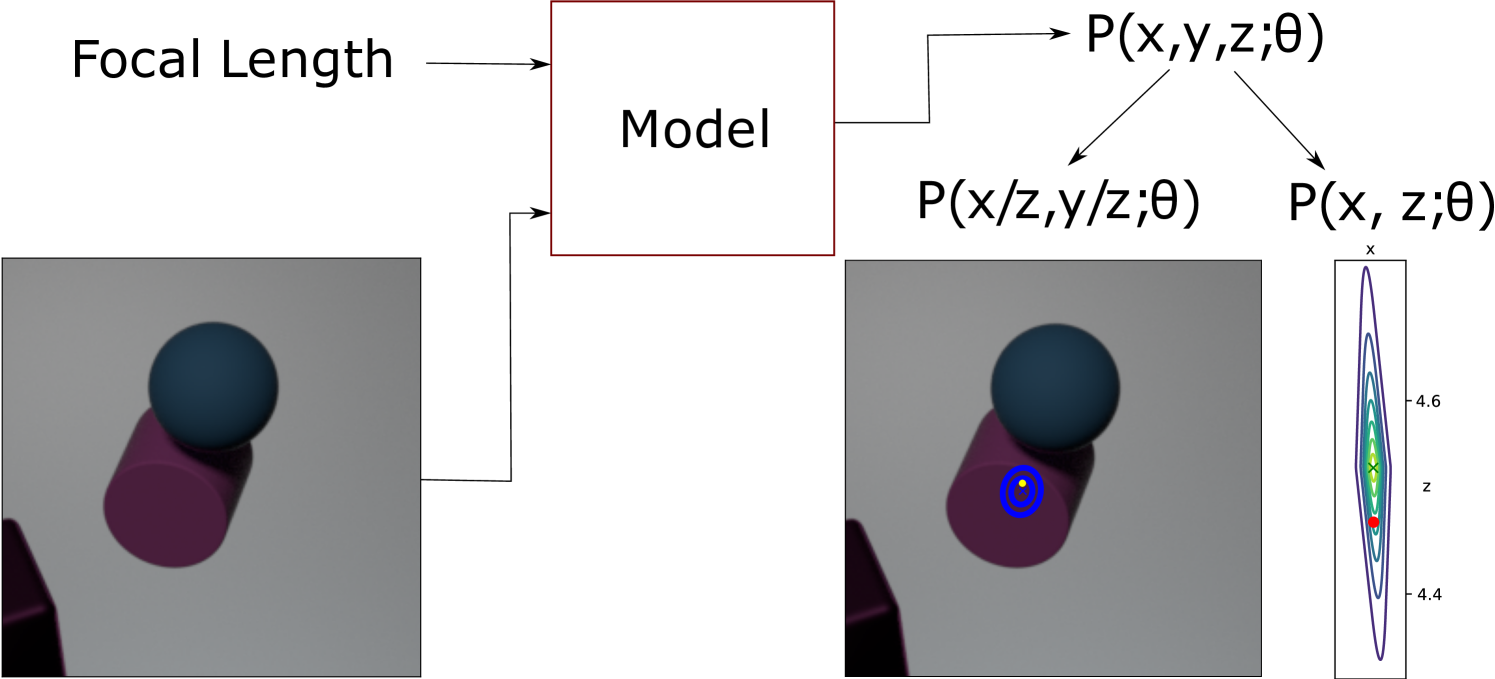

Estimating probability distributions which describe where an object is likely to be from camera data is a task with many applications. In this work we describe properties which we argue such methods should conform to. We also design a method which conform to these properties. In our experiments we show that our method produces uncertainties which correlate well with empirical errors. We also show that the mode of the predicted distribution outperform our regression baselines. The code for our implementation is available online

1 Introduction

Estimating 3d position of object have many use cases. For example 1) Estimating the position of a car or pedestrian is required to construct policies for how to traverse traffic for autonomous driving or ADAS. As a result this task is included in many such datasets, for example KITTI Geiger et al. (2012). 2) Body pose estimation can be seen as trying to find the 3d position of each joint for a human skeleton. Examples of such datasets are von Marcard et al. (2018) and Ionescu et al. (2013). 3) For many robotics applications estimating the 3d position of objects is relevant Bruns & Jensfelt (2022). For industrial robotics the shape of the object is often known, therefore the only source of variation is the six degrees of freedom due to the orientation and position of the object.

Estimating uncertainties associated with the estimated position has applications for fusion of multiple estimates Mohlin et al. (2021). Another application for uncertainty estimates is time filters, such as Kalman filters. Uncertainties can also be used for application specific purposes. For examples in an ADAS setting it makes sense to not only avoid the most likely position of an object, but to also include a margin proportional to the uncertainty of the estimate. In robotic grasping a good policy could be to try to pick up objects directly if the estimated position is certain while doing something else if the estimate is uncertain, such as changing viewing angle.

2 Prior work

When estimating 3d position from camera data there is an inherent ambiguity due to scale/depth ambiguity. Many types of interesting types of objects have a scale ambiguity, such as people, cars and animals.

The existence of scale ambiguity is reflected in the performance on competitive datasets. For example the errors of single view methods which estimate the position of people relative to the camera is on the order of 120mm where 100mm is in the depth direction Moon et al. (2019). This is consistent with the presence of scale/depth ambiguity. In the multi view setting where the depth ambiguity can be eliminated the state of the art is on the order of 17mm Iskakov et al. (2019).

There are many ways to resolve the scale/depth ambiguity.

One way to solve the problem is to add a depth sensor such as a lidar Geiger et al. (2012) or structured light Choi et al. (2016). However adding additional sensors come with several drawbacks such as price, complexity, range among others. For these reasons we will focus on methods which only use camera data.

For camera only methods there are two common ways to resolve the ambiguity, the first is only applicable if the object lies on a known plane, in practice this plane is often the ground plane, but could also be for example the surface of a table. Mills & Goldenberg (1989) Another approach is to do multi-view fusion. In this case the errors due to these ambiguities point in different directions and can therefore be cancelled out with a suitable method.

For this reason it is important if a method to estimate 3d position also fits into a framework which is able to resolve the scale/depth ambiguity.

It is well known that using densities which are log concave are well suited for sensor fusion since computing the optimal combination is a convex problem in this setting An (1997). In our work we show that both the ground plane assumption and multi view fusion turns into a convex optimization problems if model predicts a log concave probability distribution.

There are many other works which treat the problem of estimating 3d position in a probabilistic framework. For example Feng et al. (2019) models the the position of an object with a normal distribution. However they also use a detector on lidar data to avoid large errors and thereby large gradients. Meyer et al. (2019) models the locations of the corners of a bounding box with a laplace distributions, but they also avoid the scale/depth ambiguity by using lidar data. Many works treat depth estimation as a classification problem were a final prediction is constructed by computing the expected value of this discrete distribution Wang et al. (2022), but the probabilities predicted by such methods are in general not concave since it is possible to model a multimodal distribution with these methods. In Bertoni et al. (2019) they model the reciprocal of the depth with a laplace distribution. However they do not model the projected location in a probabilistic manner, which is not well suited for multi-view fusion. In section 3.3 we also show that modelling depth independently of the projected location always result in undesirable properties, which they, like many others do.

3 Motivation

This section will focus on the specific properties which data captured by cameras conform to and therefore which a model should take into account when doing probabilistic position estimation based on camera data.

In practice when applying this method the location of the object is modeled by a probability distribution which is parameterized by the output of a convolutional neural network.

3.1 Notation

We will investigate a setting where the model takes an input image which is produced by capturing a scene on a sensor. The camera is a pin-hole camera without radial distoritions and have the known intrinsics

| (1) |

Coordinates for this camera are denoted where the axis is aligned with the principal axis of the camera and correspond to the center of the camera.

The method will predict a probability distribution parameterized by based on the input image.

3.2 Desired constraints for estimated distribution

In this section we describe constraints which we argue a probability distribution estimated from camera data should conform to. We also motivate why these constaints should exist.

constraint 1: The model can express any variance for the projected coordinates on the image sensor and depth independently.

Formally:

| (2) |

Motivation

For many tasks the error of depth estimation is inherently large due to scale/depth ambiguity. Therefore for objects which have a variation in size, but where the absolute size is hard to estimate there will be an inherent error proportional to the scale error and the distance to the object. Despite this estimating the position in projected coordinates can often be done accurately.

For example assuming that the height of humans is between 1.5-1.9m and assuming that it is intrinsically hard to estimate the size of an unseen person an uncertainty of 13% of the distance to the person is reasonable to expect.

Despite this it is possible to estimate the position of keypoints in the projected coordinate space of an image accurately, often within a few pixels.

constraint 2: The model should not try to estimate the coordinates directly, but instead estimate the depth divided by the focal length and the projected position of the object.

Formally:

| (3) |

Motivation:

It is difficult to separate the the quantities scale, depth and focal length, since the main effect of each variable is to change the apparent size of the object on the sensor. For this reason the focal length needs to be taken into account when estimating the depth of an object, otherwise the variation in focal length adds additional ambiguity. When scale/depth ambiguity is present it is not possible to estimate the absolute position for the x or y coordinate accurately, but it is often possible to do so for the projected position of the object. This motivates why a method should also estimate the location in image space. Methods which conform to these constrants are not uncommon. For example Zhen et al. (2020) estimates the depth after dividing by the focal length. It is also common to estimate the location of objects in image space.

constraint 3: The output probability should have support only for points in front of the camera.

Formally:

| (4) |

Motivation:

Cameras only depict objects in front of them. The output distribution should reflect that.

constraint 4: The output probability should have support for all points in the field of view.

Formally: for a camera with focal length and sensor size

| (5) |

Motivation:

One standard way to optimize neural networks which output probability distributions is to minimize the negative log likelihood. Backpropagation is only possible if the predicted probability is larger than 0 for the ground truth position. Since there are not any guarantees what an untrained network predicts the probability has to be positive for all locations which are reasonable without considering the input data. Since a camera only depict objects in its field of view this is a sufficient condition.

constraint 5: The negative log likelihood of the position is convex. Formally:

| (6) |

is convex with respect to

Motivation:

A common method to combine estimates which are expressed as probability distributions is by assuming that the two estimates have errors which are independent random variables and letting the output of the fusion be the maximum likelihood point under this assumption Mohlin et al. (2021). It is desirable if computing this combination is a convex optimization problem since it guarantees that it is possible to find the fusion quickly. In section 3.3 we show that a sufficient constraint for this property is that the negative log likelihood is convex with respect to position.

constraint 6: The probability for objects infinitely far away from the camera is 0 Formally

| (7) |

| (8) |

Motivation:

Firstly objects of finite size are not visible if they are infinitely far away from the camera. Secondly we need this property to guarantee convergence when doing multi-view fusion.

constraint 7: is continuous with respect to .

Motivation:

It is not reasonable that small changes in position change the density by a large amount.

3.3 Properties given these constraints

Proposition 1: All distributions which are twice differentiable at least at one point and decompose into

| (9) |

Do not fulfill all of the above constraints. Proof in supplementary B

Proposition: 2 In multi view fusion, if the field of view for the cameras have a non-empty intersection then if each camera produce probability estimates conforming to constraints 1-7 then a valid maximum likelihood fusion point exist and can be found by convex optimization. Proof in supplementary C

Proposition 3: Imposing a ground plane constraint is a convex optimization problem. If any point of the ground plane is in the field of view of the camera, then the constraints also guarantee that a solution exist. Proof in supplementary C

4 Method

In this work we first describe the Projected Huber Distribution which we will show fulfills the constraints described in section 3

4.1 Projected Huber Distribution

The distribution can be decomposed into one component which mainly model the probability in the projected coordinates and another component which models the distribution in depth

The component which mainly models the probability over the projected coordinates is

| (10) |

By including in this distribution we can avoid the problem described in proposition 1. Note that conditioning on is necessary to get a proper distribution.

, model the mean position on the camera sensor. models the estimated depth of the object and models the precision. The function is a huber function.

The distribution which models the depth is

| (11) |

Where models the estimated depth. Note that this is the same parameter as for . roughly models the precision, that is a larger reduces the variance.

By combining these distributions we get the Projected Huber Distribution

| (12) |

for x,y,z in the cameras coordinate system.

The normalizing factors are

| (13) | ||||

| (14) | ||||

| (15) |

Where is bounded by

| (16) |

This distribution will conform to all constraints, except constraint 2 which relates to how to predict the parameters of the model. We show how to predict the parameters in a way which conform to constraint 2 in subsection 4.4

proposition 5: the moments of this distribution are

| (17) | ||||

| (18) | ||||

| (19) | ||||

| (20) |

The terms are not very intuitive, but the fraction for the expected value quickly decrease to 1 while the fraction for the variance behaves similar to that is

| (21) | ||||

| (22) |

proof in supplementary F

4.2 Proof for constraints

constraint 1: Proof: From proposition 5 we see that models the uncertainty in projected coordinates, while models the uncertainty in depth coordinates.

constraint 3: if , from definition in equation 12

constraint 4: if since the range of is the and the expression can be evaluated for all values of . The field of view for a camera is a subset of the half plane .

constraint 5: Proof sketch: Show that both and has a convex negative log likelihood. An affine basis change turns the argument of the into a norm which is convex. is also convex. Full proof in supplementary G.1

constraint 6: Proof sketch. For the region the proof is trivial. Then we prove it for the region . Here the term will go to 0. as while the other factors are bounded. For the region the factor goes to 0 while the other factors are bounded. Full proof in supplementary G.2

constraint 7: Proof sketch for the function is trivially continuous. For the function is also trivially continuous. When z approaches 0 the factor goes to 0. Full proof in G.3

4.3 Parameter remapping

When the parameters of Projected Huber Distribution are estimated by a neural network distribution it is necessary to turn the output of the neural network into a valid parameterization for Projected Huber Distribution . Valid in this sense refers to conforming to the constraints , and , that is is positive definite while a and are positive.

Neural networks give outputs in where is decided by the architecture of the network. These outputs are do not conform to our desired parameter constraints, unless a suitable activation is applied.

To construct this activation we start by doing a basis change to conform to constraint 2 from the motivation.

| (23) | ||||

| (24) |

Where is a bias term defined by

| (25) |

For the purpose of proving that our loss has bounded gradients we also need the constant defined by

| (26) |

These values can either be derived from known properties of the dataset or by computing these values for all samples in the training dataset.

proportional to the projected scale of an object. Even in the extreme case where the scale ambiguity is 40% and the projected size varies between 2 and 200 pixels would be less than 20. Furthermore is only used to prove that the loss has bounded gradients.

proposition 5 and . for points in the field of the camera with a depth conforming to equation 26.

proof Proof sketch correspond to the projected image coordinates, scaled to be between -1 and 1. is normalized by defininition 25-26. Full proof in supplementary H

Now correspond to the coordinates in the projected image and is the z coordinate after compensating for the scale change of the focal length and applying a logarithm to map to

Define

| (27) | ||||

| (28) | ||||

| (29) |

Using a negative log likelihood of in the original basis can be written as

| (30) | ||||

| (31) | ||||

| (32) |

Which in the new basis is

| (33) | ||||

| (34) | ||||

| (35) |

In these losses we exclude terms not required for computing gradients such as terms only containing constants, .

and its gradients is computed by numerical integrals. The full method is described in supplementary J

Estimating these parameters will conform to constraint 2 since and models the position and precision for the projected coordinates while and models the depth and precision for the depth estimate.

4.4 Enforcing constraints on distribution parameters

Designing the activation which outputs and is done in the same way as Mohlin et al. (2021).

Estimating and is done by starting with two real numbers ,

| (36) |

| (37) |

With this parameterization the the loss is convex with respect to the network output when . The gradients of the loss will be bounded with respect to . Proof in K and I

Having a loss which is Lipschitz-continous should aid with stability during training since it avoids back-propagating very large gradients.

5 Experiments

In this section we show how the method described in section 4 can be applied in practice to estimate the position of an object.

5.1 Dataset

We construct a synthetic dataset by rendering objects in a similar way as Johnson et al. (2017). We choose our task to be to estimate the location of a rendered cylinder. The cylinder is rendered different reflection material properties, color and with random orientation.

The scene is rendered on a sensor of size pixels, the focal length of the camera is sampled from a uniform distribution The cylinder has a height of 0.2 meters and a radius of 0.1 meter. The depth is uniformly sampled from The x and y coordinates are sampled from for each axis.

In this experimental setup the normalizing constants are

| (38) | ||||

| (39) |

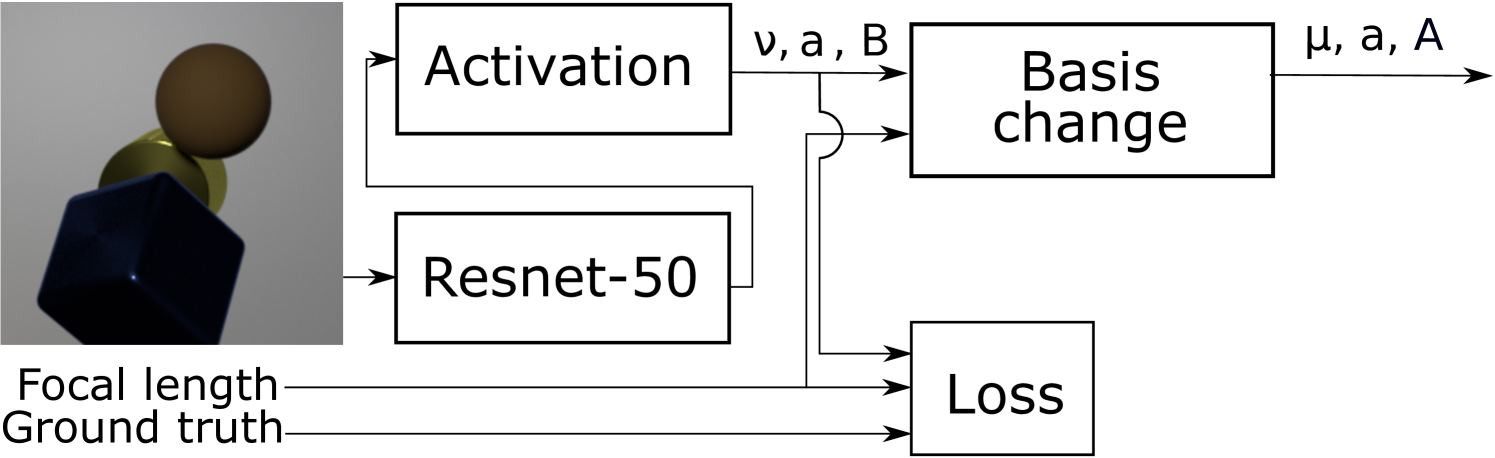

The data is fitted by the method by using the rendered image as input to a standard Resnet-50 with an output dimension of 7. Instead of applying a softmax on the output we use the mapping described in section 4.4 to predict the parameters of Projected Huber Distribution . Applying a negative log likelihood loss gives equation 33 which is used as the loss when fitting the parameters of the network. The parameters are updated using Adam with default parameters. This setup is visualized in figure 2

To showcase different cases we also generate additional datasets where the cylinder is scaled with a factor uniformly sampled from U(0.8,1.2). This dataset is constructed to showcase how the method performs when a scale/depth ambiguity is present.

We also generate a dataset where we also add a cube and a sphere to both add visual complexity and introduce occlusions which incentive the model to predict different uncertainties for the case where the cylinder is occluded compared to when it is not. For this dataset no scale ambiguity is present. The cube and sphere have orientations, material properties and color sampled indepenently from the cylinder and each other.

Finally we generate a dataset where we render a scene with occluding objects and with scale ambiguity. The scaling factor is sampled independently for all objects.

Each dataset containts 8000 training samples and 2000 test samples.

5.2 Experimental result

5.2.1 Empirical error vs. estimated error

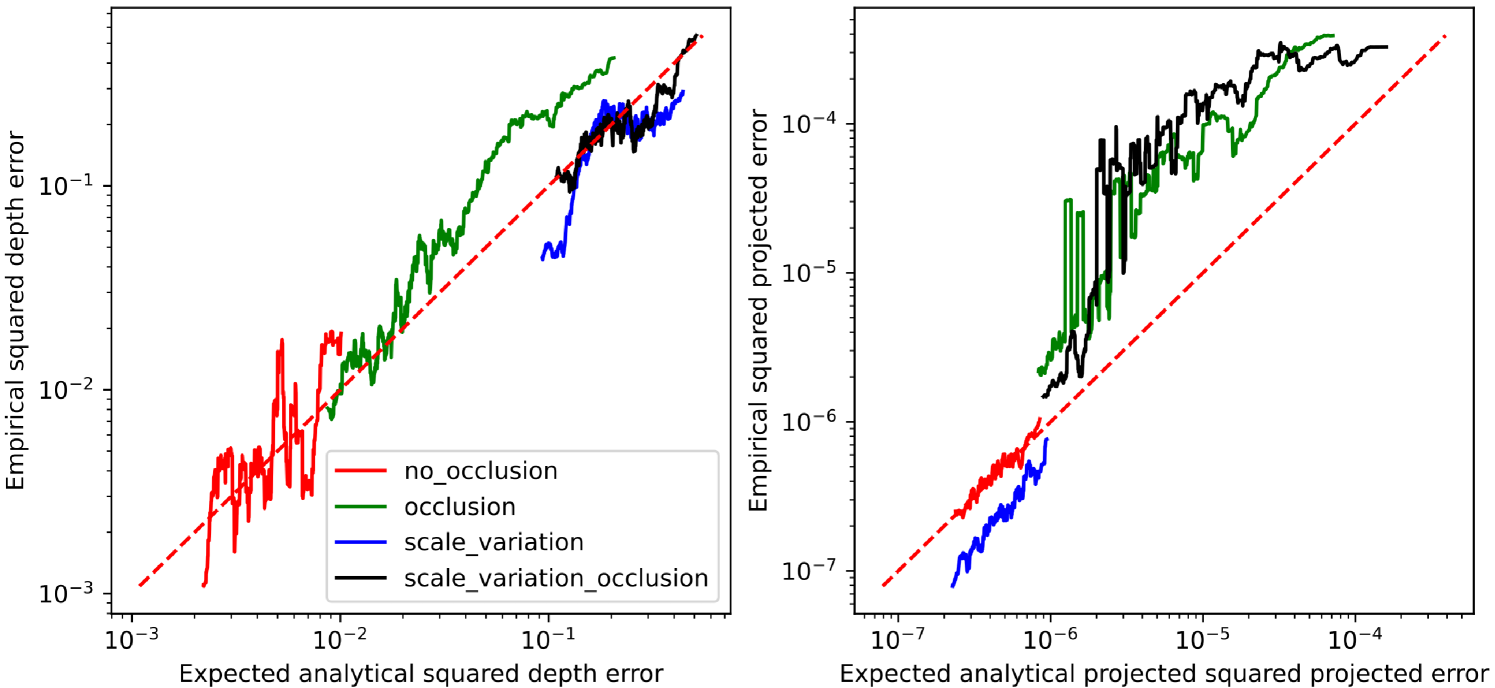

We investigate how the predicted uncertainty correlates with the empirical error by for each sample computing the empirical squared error for the projected coordinates and for the depth. We then compute the predicted variance for each sample based on and by using equation 18 and 20

We check if the estimated uncertainties are well correlated by sorting the samples based on the predicted variance and apply a low pass filter over 200 adjacent samples for both the predicted variance and the empirical squared error. For a perfect model these should be identical. For a good model they should be highly correlated.

From figure 3 we see that the estimated errors correlate well with the empirical errors over all datasets for both the depth and projected component. This indicates that the uncertainty estimates which the model produces are useful. The estimated uncertainties also follow intuition, the dataset without occlusion or scale ambiguity has low predicted uncertainty for both the projected coordinates and for the depth. For the dataset without occlusion but with scale ambiguity the depth uncertainty increases significantly, but the uncertainty for the projected coordinates remains low. When fitting the model on a dataset without scale ambiguity but with other objects which can occlude the cylinder both the predicted uncertainty for the projected cordinates and the depth increases, which is expected since it is harder to predict where objects are if they are partially or fully occluded.

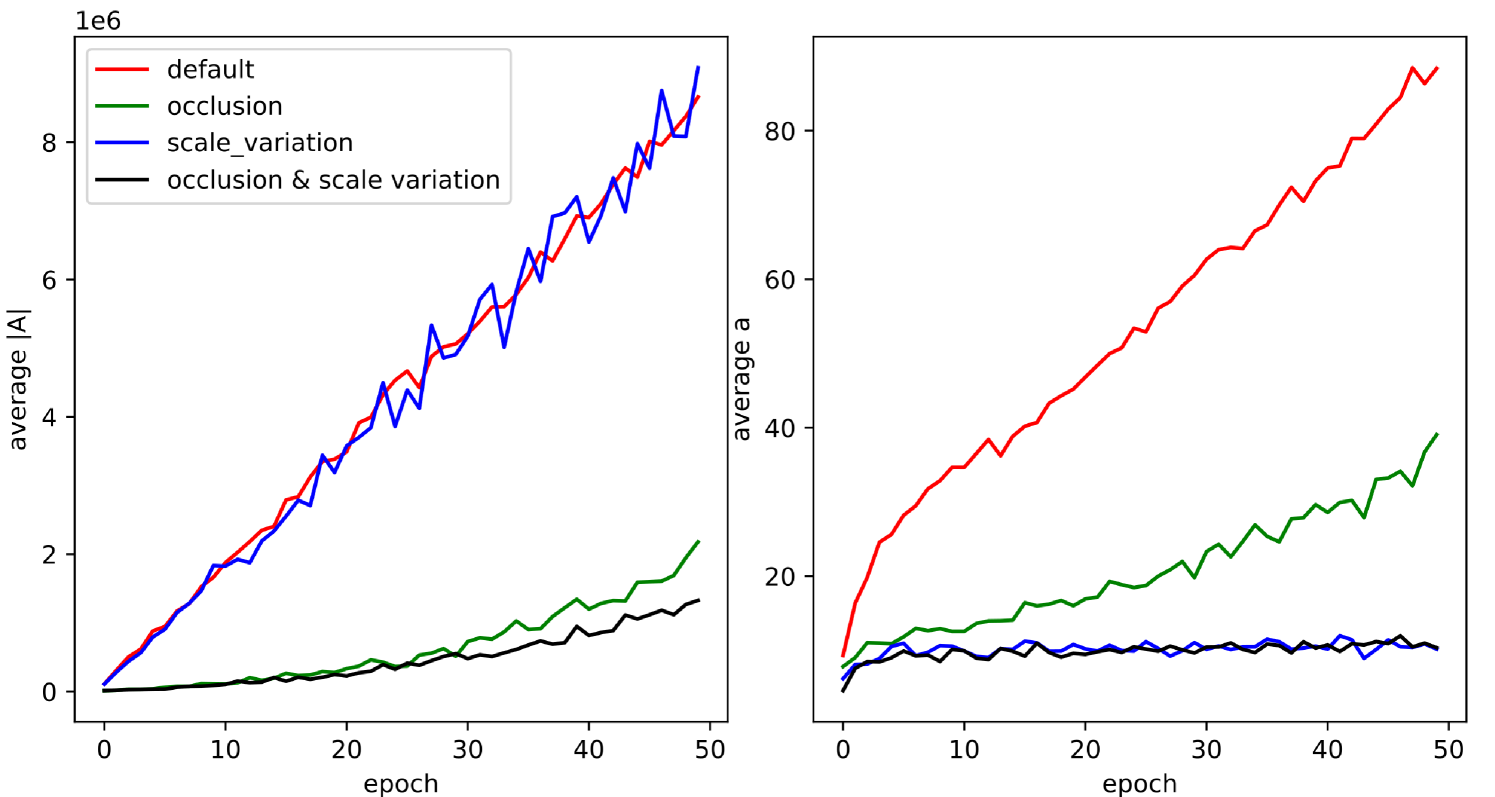

We also show how the average predicted parameter and change over training in figure 4. From this figure we see that the predicted uncertainty decreases as the training proceeds. This is to due to the model adapt to fact that the error decrease when training.

5.2.2 Regression performance

To show that our method is able to estimate 3d position well we compare it against several regression baselines. The reference is to try to regress and directly. Some methods estimate the logarithm of depth instead of the raw depth such as Lin & Lee (2020). For completeness we compare to estimating as well. The results of our method and these baselines is shown in table 1. From this table we see that our method often exceeds the performance of both baselines, however this improvement is not likely to be significant, but does not need to be so either since our contribution is giving a probabilistic prediction with a convex negative log likelihood, not to produce a better single point estimate. The errors are measured in the cameras coordinate system. For the baselines the predicted coordinates are computed by using the mapping in equation 23. For our method we use the mode as our single point prediction

| (40) |

To construct a point estimate. There are other possible single point predictors which could be equally good, such as geometric median or mean we did not evaluate these, but they should produce similar predictions.

We also do ablations where we ignore the focal length of the camera when estimating the position, thereby violating our proposed constraint 2. One such baseline is to let the network predict directly using a MAE loss. Another such baseline is to try to predict and but with a constant focal length of for the mapping between and . Note that the camera used to capture the scene had different focal length for different samples, we only use an incorrect average focal length for the mapping in equation 23 for training and evaluation. The results for the baselines and our method on the dataset without occluding objects nor scale ambiguity is shown in Table 2.

From this table we see that baselines which ignore the focal length perform significantly worse, likely due to the fact that in general it is not possible to measure depth from images, only scale, therefore by ignoring the focal length an irreducible error is introduced.

Finally we try to frame how large our errors are in image space. On average the focal length is approximately 1550 pixels. The object is on average 4000 mm away. Therefore the average error of 2.4mm correspond to approximately pixels which quite good. Objects with a diameter of 0.2m will have a diameter of approximately 80 pixels when rendered with a focal length of 1550 at a depth of 4 meter. If estimating depth was done by the proxy task of estimating the diameter of objects an error of 1 pixel would therefore result in a depth error of 50mm which is on par with our average error when there are no occlusions or scale ambiguities, again indicating that our method works quite well.

Introducing a 20% scale ambiguity of the rendered object should result in irreducible errors which are on average 10% error of the depth. The object was on average 4000mm from the camera, which should give an average error of approximately 400mm. The measured errors which we observe are similar to this, but slightly lower, possibly due to the network being able to infer some information about the scale through some non-intuitive shading effect or due to the fact that does not follow a uniform distribution.

| Method | Metric | default | occl. | scale ambig. | occl. & scale ambig |

|---|---|---|---|---|---|

| MAE, , | projected error (mm) | 3.6 | 22.8 | 3.7 | 37.2 |

| depth error (mm) | 69 | 218 | 359 | 386 | |

| MAE, , | projected error (mm) | 5.0 | 18.0 | 5.1 | 27.6 |

| depth error (mm) | 36 | 178 | 355 | 355 | |

| probabilistic (ours) | projected error (mm) | 2.4 | 13.0 | 1.6 | 17.0 |

| depth error (mm) | 48 | 168 | 347 | 350 |

| Method | Projected error | Depth error |

|---|---|---|

| MAE, (x,y,z) | 13.0 | 327 |

| MAE, , , constant | 10.8 | 325 |

| probabilistic (ours) | 2.4 | 48 |

6 Discussion

The constraints which we described are well motivated for the general case, but there are cases where they are not necessary. For example if scale ambiguity is not present in the dataset then constraint 1 is not necessary. If the focal length is the same for all pictures in the dataset then constraint 2 is not neccessary. It is also possible to infer camera intrinsics from some scenes if this is the case then constraint 2 might not be necessary either. If the uncertainty in depth is small many distributions would assign a small probability that the object is behind the camera even if constraint 3 is not strictly true indicating that this constraint is mainly important when the depth uncertainty is large. Constraint 5 is only necessary if the estimated probability distribution needs to be log concave. we have shown this is useful for multi view fusion and imposing ground plane constraints, but if the goal is only to produce a as good as possible single point prediction of the position this constraint should not be necessary.

Furthermore our ablations indicate that using the correct focal length is important when estimating 3d position from camera data.

The focus of this work is to investigate the theoretical aspects of probabilistic 3d regression from camera data. To be able to highlight this the experiments are on synthetic data. Future work could be to verify that the proposed method works on more challenging real world data as well.

We also show that it should be easy to combine our method with multi-view fusion or the ground plane assumption in theory, but we do not implement it. This could also be future work.

7 Conclusion

In this work we have described constraints which we argue should be taken into account when designing a method to estimating probability distributions over 3d position from camera data. We have also designed a method which conform to these constraints. In our experiments we show that the uncertainty estimates which our method produce correlate well with empirical errors. Our experiments also show that our method perform on par or better than several regression baselines. In our ablations we show that in our experimental setting the performance decreases significantly if the camera intrinsics are ignored.

8 Acknowledgements

DM is an industrial PhD student at Tobii AB. this work was partially supported by the Wallenberg AI, Autonomous Systems and Software Program (WASP) funded by the Knut and Alice Wallenberg Foundation.

References

- An (1997) Mark Yuying An. Log-concave probability distributions: Theory and statistical testing. Duke University Dept of Economics Working Paper, (95-03), 1997.

- Bertoni et al. (2019) Lorenzo Bertoni, Sven Kreiss, and Alexandre Alahi. Monoloco: Monocular 3d pedestrian localization and uncertainty estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019.

- Bruns & Jensfelt (2022) Leonard Bruns and Patric Jensfelt. On the evaluation of rgb-d-based categorical pose and shape estimation. arXiv preprint arXiv:2202.10346, 2022.

- Choi et al. (2016) Sungjoon Choi, Qian-Yi Zhou, Stephen Miller, and Vladlen Koltun. A large dataset of object scans. arXiv preprint arXiv:1602.02481, 2016.

- Feng et al. (2019) Di Feng, Lars Rosenbaum, Claudius Glaeser, Fabian Timm, and Klaus Dietmayer. Can we trust you? on calibration of a probabilistic object detector for autonomous driving. arXiv preprint arXiv:1909.12358, 2019.

- Geiger et al. (2012) Andreas Geiger, Philip Lenz, and Raquel Urtasun. Are we ready for autonomous driving? the kitti vision benchmark suite. In Conference on Computer Vision and Pattern Recognition (CVPR), 2012.

- Ionescu et al. (2013) Catalin Ionescu, Dragos Papava, Vlad Olaru, and Cristian Sminchisescu. Human3. 6m: Large scale datasets and predictive methods for 3d human sensing in natural environments. IEEE transactions on pattern analysis and machine intelligence, 36(7):1325–1339, 2013.

- Iskakov et al. (2019) Karim Iskakov, Egor Burkov, Victor Lempitsky, and Yury Malkov. Learnable triangulation of human pose. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 7718–7727, 2019.

- Johnson et al. (2017) Justin Johnson, Bharath Hariharan, Laurens Van Der Maaten, Li Fei-Fei, C Lawrence Zitnick, and Ross Girshick. Clevr: A diagnostic dataset for compositional language and elementary visual reasoning. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2901–2910, 2017.

- Kumar et al. (2020) Abhinav Kumar, Tim K Marks, Wenxuan Mou, Ye Wang, Michael Jones, Anoop Cherian, Toshiaki Koike-Akino, Xiaoming Liu, and Chen Feng. Luvli face alignment: Estimating landmarks’ location, uncertainty, and visibility likelihood. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8236–8246, 2020.

- Lin & Lee (2020) Jiahao Lin and Gim Hee Lee. Hdnet: Human depth estimation for multi-person camera-space localization. In European Conference on Computer Vision, pp. 633–648. Springer, 2020.

- Meyer et al. (2019) Gregory P Meyer, Ankit Laddha, Eric Kee, Carlos Vallespi-Gonzalez, and Carl K Wellington. Lasernet: An efficient probabilistic 3d object detector for autonomous driving. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 12677–12686, 2019.

- Mills & Goldenberg (1989) James K Mills and Andrew A Goldenberg. Force and position control of manipulators during constrained motion tasks. IEEE Transactions on Robotics and Automation, 5(1):30–46, 1989.

- Mohlin et al. (2021) David Mohlin, Gerald Bianchi, and Josephine Sullivan. Probabilistic regression with huber distributions. arXiv preprint arXiv:2111.10296, 2021.

- Moon et al. (2019) Gyeongsik Moon, Ju Yong Chang, and Kyoung Mu Lee. Camera distance-aware top-down approach for 3d multi-person pose estimation from a single rgb image. In Proceedings of the IEEE/CVF international conference on computer vision, pp. 10133–10142, 2019.

- von Marcard et al. (2018) Timo von Marcard, Roberto Henschel, Michael Black, Bodo Rosenhahn, and Gerard Pons-Moll. Recovering accurate 3d human pose in the wild using imus and a moving camera. In European Conference on Computer Vision (ECCV), sep 2018.

- Wang et al. (2022) Tai Wang, ZHU Xinge, Jiangmiao Pang, and Dahua Lin. Probabilistic and geometric depth: Detecting objects in perspective. In Conference on Robot Learning, pp. 1475–1485. PMLR, 2022.

- Zhen et al. (2020) Jianan Zhen, Qi Fang, Jiaming Sun, Wentao Liu, Wei Jiang, Hujun Bao, and Xiaowei Zhou. Smap: Single-shot multi-person absolute 3d pose estimation. In European Conference on Computer Vision, pp. 550–566. Springer, 2020.

Appendix A Appendix

Appendix B Projected and depth independent result in finite precision

B.1 Variance decreases when reducing size of single tail

This section proves an intermediate result which we use for the main proof Here we show that for random varianbles Y,Z and a variable and then if then

| (41) |

Then decreases in decreases

proof

| (42) | ||||

| (43) | ||||

| (44) |

Where … does not depend on c. The first term is time the variance of Y which is positive, the second term is positive since . The third term is positive since and the negations cancel out.

Therefore if increase when increase, which conclude the proof.

B.2 Main proof

Proof sketch, assume all constraints except constraint 1 are true, show that the smallest possible variance for the projected coordinates is at least a constant which is larger than 0.

By considering the line we get

| (45) |

The negative log likelihood is

| (46) |

For convenience we define

| (47) | ||||

| (48) |

We compute the hessian with respect to and of

| (49) | ||||

| (50) | ||||

| (51) | ||||

| (52) | ||||

| (53) |

If this function is convex then this hessian has a positive determinant. The determinant is

| (54) | ||||

| (55) |

The function is twice differentiable at least for one point, let exist for the value at this point. If Then we reach a contradition, if then Where the function has support. The solution here would be which can not model arbitrary precision.

The solution of

| (56) |

is

| (57) |

If the constraint is not tight, then the resulting function would increase faster than the solution of the differential equation as the distance to the mode increases. If we study the function for which are larger than the mode then the derivative is

| (58) |

Since we are to the right of the mode , is also positive since the logarithm of this value is real. Thus in this region Therefore approach infinity when . Therefore .

The mode has to exist since the distribution is proper. The same analysis holds to show that for

| (59) |

Where and .

The distribution will either give a value less than the mode or larger or equal to the mode We can now apply the proof in B.1 to show and because if that was not the case it would be possible to reduce the variance by making the constraint active.

Furthermore is continuous since it is convex.

Thus the distribution which minimizes the projected variance while being consistent with the constraints has the form

| (60) |

Which obviously can not model an arbitrary small variance for the projected coordinates for a fixed C.

Finally we need to show that

| (61) |

Imposes constraints on what variance for z can be modeled

To maximize variance we minimize g while by keeping the constraint tight. This gives

| (62) |

which gives the distribution after reparameterization

| (63) |

Which is a normal distribution with variance

Therefore a low variance in the projected coordinates requires a large which requires a low variance for the depth estimate. This concludes the proof.

Appendix C Multi-view fusion and ground plane constraints

In this section we prove proposition 2 and 3.

C.1 Multi view

For this section we have cameras which produce predictions where are coordinates in the coordinate system for camera . are the parameters of the estimated probability distribution based on the image captured by camera . The images are in theory captured at the same time or in practice within a very short time period. The multi view fusion is constructed under the assumption that the errors for each estimate are independent. The affine transform transform coordinates from coordinate system to coordinate system

Definition 1: We define the maximum likelihood fusion given the estimates to be

| (64) |

We introduce the notion of a valid fusion as

Definition 2: A valid maximum likelihood fusion point exist if

| (65) |

To exclude the case where either most likely fusioned point exist infinitely far away or when the fusioned distribution is improper.

Proposition: 2 (existance) In multi view fusion, if the field of view for the cameras have a non-empty intersection then if each camera gives probability estimates conforming to constraints 1-7 there will exist a valid maximum likelihood fusion point.

Proof Having a non empty intersection of the field of views for the cameras implies

| (66) |

Constraint 4 implies that for all cameras. since is in the field of view of the camera

Therefore

| (67) |

Constraint 6 implies that there exist a such that

| (68) |

therefore

| (69) | ||||

| (70) |

The last step is due to

1) is continuous since continuity is preserved when multiplying continuous functions.

2) A closed ball is compact.

3) the extreme value theorem states that the maximum over a compact set of a continous function exists. Thus applying the extreme value theorem concludes the proof.

Proposition 2: (convexity) Finding the maximum likelihood fusion is a convex optimization problem.

proof Maximizing a probability is equivalent to minimizing a log likelihood.

Formally, given definition 1

| (71) |

Here we have used the fact that the transform from to has a jacobian with determinant 1.

Maximizing the probability is the same as minimizing a negative log likelihood, since logarithms are increasing and negating an increasing function result in a decreasing function, that is

| (72) | ||||

| (73) |

It is also well known that convexity is preserved under linear transformations of the argument

This means that if is convex with respect to then is convex with respect to since the change in coordinate system is a linear transform.

Convexity is also preserved under addition therefore if is convex with respect to for all , then is convex with respect to .

Finding a minima with respect to a convex function is a convex optimization problem. This concludes the proof.

proposition 3: The constraints makes finding the most likely point while enforcing a ground plane assumption, that is solving

| (74) |

where and define the ground plane is a convex optimization problem. If any point of the ground plane is in the field of view of the camera, then the constraints also guarantee that a solution exists.

proof

| (75) |

optimizing a convex function with an affine constraint in a convex optimization problem.

If a point of the ground plane is in the field of view of the camera, then constraint 4 ensures

Constraint 6 ensures

| (76) |

Therefore

| (77) |

Which exist due to extreme value theorem and constraint 7.

Appendix D Intermediate integrals

D.1 Double exponential integral

For convenience for future proofs we derive the integral of a double exponential

| (78) |

Where is the upper incomplete gamma function

Proof

| (79) | ||||

| (80) | ||||

| (81) | ||||

| (82) |

The first step is rearranging.

The second step comes from the variable substitution

| (83) |

The third step comes from the variable substitution

| (84) |

D.2 Moments depth distribuion

Here we show

| (85) |

proof

| (86) | ||||

| (87) | ||||

| (88) | ||||

| (89) | ||||

| (90) | ||||

| (91) |

The first step is rearranging. The second step is the variable substitution . The third step is separating the range of the integral into positive and negative numbers. The final step is using equation 78.

D.3 Expected value of a positive huber distribution in 1 dimension

For use in future parts we derive the integral of

| (92) |

Proof

| (93) | ||||

| (94) |

The first step is the variable substitution , which has a scale factor of

| (95) | ||||

| (96) |

The first step is integration by parts.

This gives

| (97) | ||||

| (98) |

D.4 Expected cube of positive Huber distribution in 1 dimension

For use in future parts we derive the integral of

| (99) |

Proof

| (100) |

| (101) | ||||

| (102) | ||||

| (103) | ||||

| (104) | ||||

| (105) |

| (106) | ||||

| (107) | ||||

| (108) | ||||

| (109) |

Therefore

| (110) |

D.5 Norm factor of 2d Huber distribution

| (111) |

proof

| (112) |

D.6 Covariance of 2d Huber distribution

| (113) |

proof

| (114) | |||

| (115) |

From rotation symmetry we know the variance will be a scaled identity matrix

Therefore

| (116) |

Appendix E Projected Huber Distribution components

E.1 Depth distribution

| (117) |

with

| (118) |

proof The normalizing factor can be computed as

| (119) | ||||

| (120) | ||||

| (121) |

The first step is from definition. The second step is using equation 85. The third step is

| (122) |

E.1.1 Expected value of depth

| (123) |

This function is decreasing with respect to a with limit as When ,

proof

Follows directly from and equation 85

The limit can be proven by multiplying numerator and denominator by This gives

| (124) |

Since

| (125) |

E.1.2 Variance of depth

The variance of the depth is

| (126) |

This expression is not very intuitive, but it behaves like

| (127) |

when

proof

| (128) |

Follows directly from and equation 85

| (129) |

E.2 Projected distribution

The normalizing factor of the projected part of the distribution is

| (130) |

For a distribution over a given plane where z is constant.

Proof

| (131) | ||||

| (132) | ||||

| (133) |

The second step is the variable change

| (134) |

The last step comes from 92 after a variable change to polar coordinates.

E.3 Expected value of projected components

The expected value

| (135) |

for a fixed plane where is constant.

Proof

| (136) | ||||

| (137) | ||||

| (138) |

where the basis change is the same as when deriving the normalizing factor. The last step is recognizing that the expected value of due to symmetry.

E.4 variance of projected components

The for a constant z value is

| (139) |

proof

| (140) | ||||

| (141) | ||||

| (142) |

Since

| (143) |

We get

| (144) |

Appendix F Projected Huber Distribution

F.1 Normalizing factor

In this section we show

| (145) |

proof

| (146) | ||||

| (147) | ||||

| (148) |

Since the normalizing factor of does not depend on

F.2 Expected projected coordinates

| (149) |

proof

| (150) |

F.3 Variance of projected coordinates

The variance of the projected coordinates is

| (151) |

proof

When integrating this over z we get

| (152) | ||||

| (153) |

| (154) | ||||

| (155) | ||||

| (156) |

Therefore

| (157) |

F.4 Expected depth

The expected depth is

| (158) |

proof

| (159) | ||||

| (160) |

F.5 Depth variance

| (161) |

proof

| (162) |

and

| (163) |

Appendix G Proof constraints

G.1 Proof constraint 5

We want to show that the negative log likelihood is convex with respect to for fixed .

Since the distribution decomposes into

| (164) |

Therefore

| (165) |

Since convexity is preserved under addition it is sufficient to show that the negative log likelihood of and are convex separately.

| (166) |

Doing the affine parameter change

| (167) | ||||

| (168) |

gives

| (169) |

which is convex. Since convexity is preserved under affine transforms this concludes the proof for

The proof for is even simpler

| (170) |

maximum of convex functions is convex. Therefore it is sufficient to show that each expression in the max is convex.

is linear, linear functions are convex

| (171) |

Therefore the second expression is convex as well. This concludes the proof.

G.2 Proof constraint 6

First consider the region . The statement is trivially true here since 0 converges to 0.

Secondly consider the region

in this region

| (172) | ||||

| (173) |

The last step comes from applying the triangle inequality on the term and maximizing the depth and projected terms independently.

For the last expression the first factor converges to 0 since is positive, is positive definite and is finite. The second factor is finite. Therefore for limits in this region the condition holds.

In the region also need to go to infinity.

In this region the following inequalities hold.

| (174) |

Since the depth distribution is decreasing where the first step relaxes the constraint to and does the variable change

The following inequality holds in this region as well.

| (175) | ||||

| (176) |

which goes to infinity as goes to infinity.

Therefore goes to 0 in this region since

| (177) |

goes to 0 while

| (178) |

is bounded by a constant.

For the region z will need to go to infinity. the term is smaller than 1 and

| (179) |

The union of these regions is . The limit is also the same for each region, therefore the limit is the same for the union of the regions. which concludes the proof.

G.3 Proof constraint 7

The function is continuous for all values when due to the fact that the density is a combination of continuous functions for this region. The function is also continuous when since the function is 0 in this region. For the regions we know

| (180) |

The normalizing factor is finite and the exponential term goes to 0 as

Appendix H Ground truth bounded after basis change

| (181) | ||||

| (182) | ||||

| (183) |

and

| (184) | ||||

| (185) | ||||

| (186) |

and are bounded by 1 because for objects in the field of view of the camera. Therefore

| (187) | |||

| (188) |

Which concludes the proof

Appendix I Proof of bounded gradients for loss

This section contains a proof that the gradients are bounded with respect to the network output when the mapping in section 4.4 is used.

The proof for is already done in Mohlin et al. (2021)

The mapping from to is a contraction. Therefore it is sufficient to show that the loss has bounded gradients with respect to and to prove bounded gradients with respect to

For the region

the loss regression part of the loss is

| (189) |

The gradient of the first term is

| (190) |

The gradient of the second expression is

| (191) |

If the loss is

| (192) |

The first expression has gradient norm

| (193) |

The second expression has gradient norm

| (194) |

Which concludes for the regression part of the loss.

The normalizing factor component of the depth loss is

| (195) |

for The gradient of this is

| (196) |

Which is less than when

If the mapping between and is Therefore

| (197) | ||||

| (198) |

Which is less than . Therefore the negative log likelihood of the normalizing factor has bounded gradients with respect to

Appendix J Computing normalizing factor

The normalizing factor can be rewritten as

| (199) |

with gradient

| (200) |

Therefore if can be computed accurately both the function and its gradient can be computed. We show how to do this in section J.1

Proof of function evaluation

| (201) | ||||

| (202) | ||||

| (203) |

Proof of gradient

| (204) | ||||

| (205) | ||||

| (206) | ||||

| (207) | ||||

| (208) |

J.1 numerical integration of

Here we show that

| (209) |

proof

| (210) | ||||

| (211) |

where that basis change is used.

Appendix K Convex loss with respect to network output

Here we show that the network is convex with respect to the network outputs

Proof In the following sections we will prove that both the projected and depth loss are convex with repect to their parameters. To prove the depth loss is convex.

K.1 Projected term

We have the same loss and the same mapping to parameterize and as in Mohlin et al. (2021) In this work they prove that this loss is convex when . We use which concludes the proof.

K.2 Depth Loss

K.2.1 Rewriting argument of logarithm of depth normalizing factor

In this section we show that

| (212) |

proof

| (213) | ||||

| (214) | ||||

| (215) |

K.2.2 Log-convexity of integrand in equation 212

Here we show that for and

| (216) |

is log-convex with respect to

proof

since is a constant for this proof we denote this value as

| (217) | ||||

| (218) | ||||

| (219) |

To show log convexity we need to show that this expression is larger than 0. To make notation slighly easier we denote , since both a and k are positive t is positve as well.

| (220) | ||||

| (221) | ||||

| (222) | ||||

| (223) | ||||

| (224) |

In the last step every term is positive in both numerator and denominator.

K.2.3 log-convex integral for log-convex integrands

For a function

| (225) |

where is continuous and log-convex with respect to a, then g(a) is log-convex as well.

proof First we show

| (226) |

which is equivalent to

| (227) |

Since is increasing

| (228) | ||||

| (229) | ||||

| (230) | ||||

| (231) | ||||

| (232) |

The first inequality is cauchy-schwartz, the second comes from the fact that is log convex.

The proof can be extended for all rational between 0 and 1 where the denominator is a power of 2 by recursive bisection.

| (233) |

Using continuity completes the proof for all

Which concludes the proof.

K.2.4 convexity of

In this section we show that

| (234) |

is convex with respect to

proof

This is the same as

| (235) |

being log convex.

K.2.5 depth loss error term

here we show that

| (236) |

is convex when

| (237) |

proof if then

| (238) | ||||

| (239) |

The first expression is linear therefore convex.

The hessian of the second expression is

| (240) | ||||

| (241) | ||||

| (242) | ||||

| (243) | ||||

| (244) | ||||

| (245) | ||||

| (246) | ||||

| (247) | ||||

| (248) | ||||

which has the eigenvalues 0 and . The denominator is positive since both and are positive. Therefore this expression is positive definite.

If Then the expression which is linear and therefore convex. The expression

| (249) |

doing the variable change turns this in the expression

| (250) |

which we have already shown is convex for . Therefore this expression is convex for this region. max of convex expressions is convex therefore the function is convex in each region. The mapping between and has continous gradient at the boundary Therefore the function is convex for all . Since mapping between network output and is linear for the region this the loss is convex for this region.

This concludes the proof that the loss is convex in the region