Probabilistic Charging Power Forecast of EVCS: Reinforcement Learning Assisted Deep Learning Approach

Abstract

Electric vehicles (EVs) and electric vehicle charging stations (EVCSs) have been widely deployed with the development of large-scale transportation electrification. However, since the charging behaviors of EVs show large uncertainties, forecasting EVCS charging power is non-trivial. This paper tackles this issue by proposing a reinforcement learning assisted deep learning framework for probabilistic EVCS charging power forecasting that captures these uncertainties. Since EVCS charging power data are not standard time-series data like electricity load, they are first converted to the time-series format. On this basis, the long short-term memory (LSTM) network, one of the most popular deep learning models, is trained to obtain the point forecast of EVCS charging power. To further capture the forecast uncertainty, a Markov decision process (MDP) is employed to model the change of LSTM cell states, and it is solved by our proposed adaptive exploration proximal policy optimization (AePPO) algorithm based on reinforcement learning. Finally, experiments are carried out on real charging data from two EVCSs, at Caltech and the Jet Propulsion Laboratory, USA. The results and comparative analysis verify the effectiveness and superiority of the proposed framework.

Index Terms:

Electric vehicle charging station, probabilistic charging power forecasting, reinforcement learning, deep learning, forecast uncertainty.

I Introduction

Reducing carbon emissions is one of the most essential actions for addressing climate change. Therefore, renewable energy sources, such as wind and solar energy, are widely deployed to substitute fossil fuels. Besides, the electrification of transportation systems is another effective approach to reducing carbon emissions [1]. Owing to advances in manufacturing and related key technologies, the reliability and efficiency of electric vehicles (EVs) have improved significantly. Therefore, many countries aim to achieve high EV penetration in the near future. The number of EVs is predicted to keep increasing and may reach approximately 35 million worldwide by the end of 2022 [2].

However, although charging is one of the most important factors in expanding EV adoption, it has brought great challenges to the secure and economic operation of EV charging stations (EVCSs). Because EV charging behavior is intrinsically random, the charging power of an EVCS is uncertain [3]. Consequently, it is regarded as a volatile and uncertain electricity load. Fluctuations of such load may threaten the operational security of the EVCS and the corresponding power systems [4]. To prevent these issues, it is crucial to predict EVCS charging power accurately.

Current works on EVCS or EV charging power forecasting can be divided into two main categories, namely, model-based and data-driven approaches. Unlike wind power and residential load forecasting, which are mainly influenced by the natural environment and the living habits of residents, the first category focuses on the charging behavior of EV users. Ref. [5] has employed trip chains to establish a spatial-temporal behavior model of EVs, which is beneficial for forecasting their charging power. Considering the impact of traffic on charging behavior, Ref. [6] has provided a reliable approach for EVCS charging power forecasting. Based on a case study in Shenzhen, China, the charging behaviors of EVs are considered for the systematic forecasting of EV charging power [7].

Thanks to the advent of cloud services and the Internet of Things, massive charging process data, such as the charging time and energy of EVs, can be collected. Thus, data-driven algorithms can be used to forecast EVCS charging power [8], and several classical data-driven forecasting algorithms are widely employed. For instance, [9] has applied an autoregressive integrated moving average (ARIMA) model to forecast the charging power of a large number of EVCSs distributed in Washington State and San Diego. Ref. [10] has combined the least squares support vector machine algorithm with fuzzy clustering for EVCS charging power forecasting. Based on historical data from Nebraska, USA, the effectiveness of extreme gradient boosting for charging power forecasting has been validated in [11].

The above algorithms rely on structured input data with hand-crafted features, which require cumbersome feature engineering [12]. Hence, deep learning methods [13] have become more popular in this field. For instance, Ref. [12] has reviewed numerous deep learning methods for EV charging load forecasting and concluded that the long short-term memory (LSTM) model could reduce the forecasting error by about 30% compared with the conventional artificial neural network (ANN) model. Considering the temporal dependency of the data, Ref. [14] has implemented an LSTM to capture the peak of charging power and achieved effective results. Ref. [15] has introduced the LSTM network to build a hybrid model for EVCS charging power forecasting, and the experimental results demonstrated its effectiveness. Besides, Ref. [16] has also illustrated the advantage of LSTM over ARIMA and ANN with abundant experiments.

Note that the above algorithms perform point forecasting, which only provides the expected charging power of an EVCS in the future. Probabilistic forecasting addresses this limitation: it forecasts the probability distribution of future charging power and thus provides more information, i.e., both the expected value and the forecast uncertainty [17][18]. Consequently, probabilistic forecasting of EVCS charging power is conducted in this paper. To the best of our knowledge, only a few works exist on this topic. For instance, Ref. [17] has applied four quantile regression algorithms to probabilistic EV load forecasting. In addition, a deep learning method is used in Ref. [18] to capture the uncertainties in probabilistic forecasting.

However, the above quantile regression and deep learning-based methods have disadvantages. For quantile regression, the model must be run separately for each quantile to obtain the forecast distribution, which imposes a heavy computational burden [17]. For deep learning, the forecast uncertainty mainly stems from model training and the input data [18][19]. In practice, stochastic parameters are usually introduced into the deep learning model, and the uncertainty is estimated through statistical indicators of its outputs. The uncertainty of the probabilistic forecast is therefore obtained from an adequate number of samples, which requires running the deep learning model repeatedly on the input data. In other words, these traditional approaches may incur high computational complexity.
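To make this cost concrete, the sketch below estimates a forecast mean and variance from repeated stochastic forward passes, in the style of Monte Carlo dropout. It is an illustration under stated assumptions, not the method of [18] or [19]: the linear model, its weights, the keep probability and the sample count are all hypothetical. The point is that every sample costs one full model call.

```python
import numpy as np

def stochastic_forward(x, w, rng, keep_prob=0.9):
    """One forward pass of a toy linear model with dropout kept active at inference."""
    mask = rng.random(w.shape) < keep_prob       # randomly drop input weights
    return float(x @ (w * mask) / keep_prob)     # inverted-dropout scaling keeps the mean unbiased

def sampling_uncertainty(x, w, n_samples=200, seed=0):
    """Estimate mean and variance from repeated stochastic passes (n_samples model calls)."""
    rng = np.random.default_rng(seed)
    outputs = np.array([stochastic_forward(x, w, rng) for _ in range(n_samples)])
    return outputs.mean(), outputs.var(), n_samples

x = np.array([1.0, 2.0, 0.5])   # hypothetical input features
w = np.array([0.8, 0.3, 1.2])   # hypothetical trained weights
mean, var, calls = sampling_uncertainty(x, w)
print(f"mean={mean:.3f}, var={var:.3f}, model calls={calls}")
```

Reducing the sample count lowers the cost but makes the variance estimate noisier, which is the trade-off the proposed approach aims to avoid.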

Consequently, we propose an approach to solve the above issue: a reinforcement learning assisted deep learning forecast approach that directly obtains the forecast uncertainty. It is designed to calculate the uncertainty in a single pass instead of repeated runs. In detail, the LSTM is used to obtain the point prediction of EVCS charging power, which is regarded as the expected value of the forecast probability distribution. Furthermore, note that the cell state is one of the core inherent parameters of the LSTM and can represent important information about both the model and the input data. Therefore, the forecast uncertainty can be obtained from the cell state (this issue is explained in Section IV). As the cell state varies with the input data, the forecast uncertainty also changes along the time series, and this change can be modeled as a Markov decision process (MDP). In this way, we introduce a reinforcement learning algorithm to assist the LSTM in obtaining the forecast uncertainty. Reinforcement learning is selected because it is a powerful technique for optimizing a given objective through autonomous learning without any prior knowledge [20]. Here, the objective is to obtain an effective probabilistic forecast, and reinforcement learning is used to learn the forecast uncertainty by observing the cell states of the LSTM.

The contributions of this paper are shown as follows:

1) A reinforcement learning assisted deep learning framework is proposed for probabilistic EVCS charging power forecasting. To the best of the authors' knowledge, this is the first paper that uses a reinforcement learning algorithm to obtain forecast uncertainty in the field of EV charging power forecasting.

2) We model the variation of the LSTM cell state as an MDP, which is solved by proximal policy optimization (PPO) to obtain the forecast uncertainty. In this case, the expected value of the EVCS charging power is forecasted by the LSTM, while the forecast uncertainty is yielded by the PPO.

3) An adaptive exploration PPO (AePPO) is further proposed. It adaptively balances exploration and exploitation during PPO training, which improves its performance and helps prevent it from getting trapped in local optima.

4) A data transformer method is developed to obtain EVCS-level information from the records of distributed chargers, i.e., charging sessions, for LSTM training. It aggregates the charging sessions and transforms them into a time-series format that includes the information of the charging process, i.e., the charging power, utilization time and demand satisfaction rate.

The remainder of this paper is organized as follows. Section II presents our proposed framework for EVCS charging power forecasting. In Section III, a data transformer method is proposed to preprocess the charging data. Section IV introduces the LSTM, the MDP model of the cell state variation and the original PPO. In Section V, the AePPO is proposed, and the case studies are conducted in Section VI. Finally, Section VII concludes this paper.

II Framework

II-A Problem Formulation

The probabilistic forecast problem studied in this paper is formulated as follows.

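$$\hat{y}_t = \mu_t + \epsilon_t \tag{1}$$

$$\epsilon_t \sim \mathcal{N}(0, \sigma_t^2) \tag{2}$$

$$\mu_t = f(\boldsymbol{X}_t) \tag{3}$$

$$\sigma_t^2 = g(\boldsymbol{X}_t) \tag{4}$$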

where $\hat{y}_t$ is the probabilistic forecast of EVCS charging power at time $t$. $\mu_t$ is the expectation of the forecast values, which is obtained by the point forecast function $f(\cdot)$, as shown in (3) [19]. $\epsilon_t$ represents the noise, which stands for the uncertainty between the forecast and real values. In this paper, $\epsilon_t$ is assumed to be Gaussian distributed with variance $\sigma_t^2$, which is obtained by the variance forecast function $g(\cdot)$, as presented in (4). Time-series models based on the Gaussian distribution assumption have been shown to achieve satisfactory performance [21]. Note that $f(\cdot)$ and $g(\cdot)$ represent the LSTM and our proposed AePPO, respectively. In addition, $\boldsymbol{X}_t$ is the vector of input features of the two functions. It stands for the features of the $T$ timestamps before $t$, where $T \in \mathbb{Z}^{+}$.
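Once $\mu_t$ and $\sigma_t^2$ are available, Eqs. (1)-(2) fully specify the forecast distribution. A minimal sketch using Python's standard-library `statistics.NormalDist` follows; the numerical values are hypothetical, and the central prediction interval is only one example of what can be read off the distribution.

```python
from statistics import NormalDist
from typing import Tuple

def predictive_distribution(mu_t: float, var_t: float) -> NormalDist:
    """Build N(mu_t, var_t) from a point forecast and a variance forecast."""
    if var_t <= 0:
        raise ValueError("variance must be positive")
    return NormalDist(mu=mu_t, sigma=var_t ** 0.5)

def central_interval(dist: NormalDist, coverage: float = 0.9) -> Tuple[float, float]:
    """Central prediction interval with the given nominal coverage."""
    alpha = 1.0 - coverage
    return dist.inv_cdf(alpha / 2), dist.inv_cdf(1 - alpha / 2)

# Hypothetical values: mu_t from f (LSTM) in kW, sigma_t^2 from g (AePPO) in kW^2.
dist = predictive_distribution(mu_t=40.0, var_t=25.0)
low, high = central_interval(dist, coverage=0.9)
print(f"90% interval: [{low:.2f}, {high:.2f}] kW")
```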

Generally, $f(\cdot)$ provides a point forecast that represents the mean value of our target [22]. However, the result might be unreliable, since its inherent parameters are learned by stochastic gradient-based optimization methods such as Adam [18], and its input data are related to a stochastic process, i.e., the charging behavior of EV users. Therefore, it is necessary to quantify the uncertainty of $f(\cdot)$ and $\boldsymbol{X}_t$, and introducing the noise $\epsilon_t$ is one of the most effective approaches [21, 23].

Usually, $\sigma_t^2$ is estimated through statistical indicators, which requires running $f(\cdot)$ many times and thus leads to high computational complexity [19]. Consequently, it is desirable to design a function $g(\cdot)$ that forecasts the variance without repeated runs. $g(\cdot)$ should be able to capture the uncertainty of both $f(\cdot)$ and $\boldsymbol{X}_t$, which means its input has to contain the characteristics of both the point forecast model and the data. Compared with other deep learning algorithms, such as ANN and convolutional neural networks, the LSTM has a unique structural parameter, i.e., the cell state, which is the core parameter of the LSTM and is also determined by $\boldsymbol{X}_t$. Therefore, the uncertainties coming from both $f(\cdot)$ and $\boldsymbol{X}_t$ change the value of the cell state, which can be captured by $g(\cdot)$ to forecast $\sigma_t^2$. Besides, since the value of the cell state is affected by its previous value, its change is modeled as an MDP in this paper. The cell state is thus taken as the state of the MDP, and $\sigma_t^2$ is defined as the action. In this way, a reinforcement learning model, AePPO, is developed as $g(\cdot)$ to observe the cell state and generate the action, i.e., $\sigma_t^2$. In summary, when the LSTM is applied to produce $\mu_t$, it also produces a cell state, which the AePPO observes to determine $\sigma_t^2$. The probabilistic forecast is then constructed from $\mu_t$ and $\sigma_t^2$.

II-B The Probabilistic Forecast Framework of EVCS Charging Power

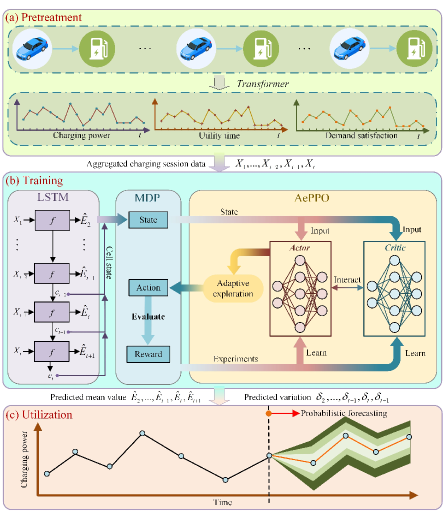

As discussed above, the LSTM and AePPO are trained and utilized to obtain the mean and variance, respectively, of the predicted probability distribution of EVCS charging power. To implement this procedure, we propose the probabilistic forecast framework shown in Fig. 1. It contains three parts, namely preprocessing, training, and utilization, which are presented in sub-figures (a), (b) and (c) of Fig. 1, respectively.

As illustrated in Fig. 1(a), an EVCS has numerous chargers, each of which records the charging power during the period between the arrival and departure of an EV [17]. Since the chargers may work simultaneously, the charging information of the EVCS has to be derived from the records of all chargers. In this paper, such a record is termed a charging session. It consists of the time information of the EV charging process, i.e., the arrival and departure times of the EV, and the related energy information, for instance, the demand and remaining energy of the corresponding EV. Note that one charging session only contains partial charging information of the EVCS, and the periods of different charging sessions may overlap. The information in charging sessions during overlapping periods should be gathered to derive the EVCS data for LSTM training. Therefore, the charging session data are pre-processed by aggregating the information and transforming it into a time-series format with several features, such as the charging power, utilization time and demand satisfaction rate.

During the training process shown in Fig. 1(b), the LSTM and AePPO are trained separately. First, the EVCS data are used to train the LSTM. Then, for the well-trained LSTM, the variation of its cell state is modeled as an MDP. By doing so, AePPO can produce actions according to the state and interact with the LSTM. Afterwards, the reward is calculated based on the state and action and is used to train AePPO.

Finally, the last part of this framework is utilization, as shown in Fig. 1(c), where the trained LSTM and AePPO models are applied. That is, the LSTM provides the mean value $\mu_t$ of the probabilistic forecast distribution, while AePPO determines the variance $\sigma_t^2$ based on the cell state of the LSTM. In this way, the predicted probability distribution is obtained by Eq. (1).
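The utilization stage can be sketched as plain function composition. In the sketch below, `toy_lstm` and `toy_actor` are hypothetical stand-ins for the trained LSTM and the AePPO actor (their internals are not the paper's), and the actor output is taken directly as the variance, which is an assumption. The point is that each forecast needs one LSTM pass and one actor pass, with no repeated sampling.

```python
from statistics import NormalDist
from typing import Callable, Sequence, Tuple

# Hypothetical interfaces: the trained LSTM returns (mean forecast, final cell state),
# and the trained actor maps a cell state to a variance.
LSTMFn = Callable[[Sequence[Sequence[float]]], Tuple[float, Sequence[float]]]
ActorFn = Callable[[Sequence[float]], float]

def forecast_distribution(window, lstm: LSTMFn, actor: ActorFn) -> NormalDist:
    """One LSTM pass gives the mean and cell state; one actor pass gives the variance."""
    mu_t, cell_state = lstm(window)
    var_t = actor(cell_state)
    return NormalDist(mu=mu_t, sigma=max(var_t, 1e-12) ** 0.5)  # guard against var <= 0

# Toy stand-ins used only to make the sketch runnable.
def toy_lstm(window):
    last = window[-1]
    return 10.0 * last[0], [0.2, -0.1]            # (mean, fake cell state)

def toy_actor(cell_state):
    return 1.0 + sum(abs(c) for c in cell_state)  # variance grows with |cell state|

window = [[3.0, 0.8, 0.5], [3.5, 0.9, 0.6]]        # two hourly feature vectors (dummy data)
dist = forecast_distribution(window, toy_lstm, toy_actor)
print(dist.mean, dist.variance)
```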

III Data Transformer Method

In this section, the data transformer method is introduced to extract the charging information of the EVCS from charging sessions, including the delivery energy, utilization time and demand satisfaction rate. These features are then aggregated into the time-series format for LSTM training.

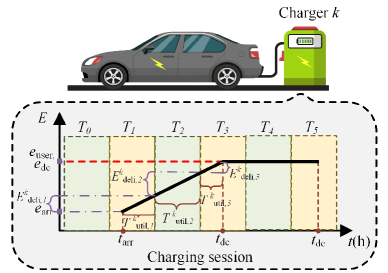

Usually, charging session data consist of a series of time and energy records collected during the charging process of an EV. An example of a charging session recorded by the $i$th charger is illustrated in Fig. 2. The time information includes $t^{\mathrm{con}}_i$, $t^{\mathrm{done}}_i$ and $t^{\mathrm{dep}}_i$, which denote the times when the EV is connected to the charger, finishes charging, and departs, respectively. Besides, the energy information is recorded, including $E^{\mathrm{con}}_i$, $E^{\mathrm{done}}_i$ and $E^{\mathrm{dep}}_i$, which represent the remaining energy in the EV battery at $t^{\mathrm{con}}_i$, $t^{\mathrm{done}}_i$ and $t^{\mathrm{dep}}_i$, respectively. Furthermore, the charging session also contains the user demand, denoted as $D_i$. Note that, in addition to the above information, the energy at the end of each timestamp $t$, $E_{i,t}$, is also recorded.

However, it is difficult to use the original charging session data directly for forecasting EVCS charging power. Each charging session focuses on a single EV and thus contains only partial information of the EVCS, where numerous EVs may charge at the same time.

Therefore, each charging session is split into timestamps, each of which represents 1 hour, as illustrated in Fig. 2. Three features are defined to summarize the charging information in each timestamp $t$, i.e., the delivery energy $e_{i,t}$, the demand satisfaction rate $d_{i,t}$ and the utilization time $u_{i,t}$. They are formulated as follows.

| (5) |

| (6) |

| (7) |

where $i$ indicates the charger. Examples of these features are shown in Fig. 2, where the charging session is split into 6 timestamps.

Then, considering that $N$ chargers are installed in the EVCS, the features of each timestamp are aggregated by the following equations:

| (8) |

After aggregation, the three features are used for LSTM training. They summarize the time and energy information contained in the charging session data. On the one hand, the delivery energy measures the service of the EVCS in terms of energy. On the other hand, the time information of the charging process is summarized by the demand satisfaction rate and utilization time. These features are therefore selected for LSTM training to improve its performance. The number of features is thus 3 in this paper, and the input feature vector at timestamp $t$ is denoted by $\boldsymbol{x}_t$.
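The exact forms of Eqs. (5)-(8) are not reproduced here, so the following is only a sketch of a data transformer under explicit assumptions: energy is delivered at constant power between connection and the end of charging, utilization time is the number of hours within each timestamp during which an EV is connected, the demand satisfaction rate is the cumulative delivered energy divided by the user demand, and aggregation sums energy and utilization over chargers while averaging the satisfaction rate. The `Session` class and all field names are hypothetical.

```python
import math
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Session:
    """One charging session; times in hours from a common origin, energy in kWh."""
    t_con: float    # EV connected
    t_done: float   # charging finished
    t_dep: float    # EV departed
    energy: float   # total energy delivered in the session
    demand: float   # user-requested energy D_i

def overlap(a0, a1, b0, b1):
    """Length of the intersection of [a0, a1) and [b0, b1)."""
    return max(0.0, min(a1, b1) - max(a0, b0))

def split_session(s: Session):
    """Per-hour (e, d, u) of one session, ASSUMING constant power between t_con and t_done."""
    power = s.energy / (s.t_done - s.t_con) if s.t_done > s.t_con else 0.0
    feats, delivered = {}, 0.0
    for t in range(math.floor(s.t_con), math.ceil(s.t_dep)):
        e = power * overlap(s.t_con, s.t_done, t, t + 1)    # delivery energy in hour t
        delivered += e
        u = overlap(s.t_con, s.t_dep, t, t + 1)             # hours occupied in hour t
        d = delivered / s.demand if s.demand > 0 else 1.0   # cumulative demand satisfaction
        feats[t] = (e, d, u)
    return feats

def aggregate(sessions):
    """Sum e and u over chargers; average d over sessions active in each hour (assumption)."""
    e_sum, u_sum, d_vals = defaultdict(float), defaultdict(float), defaultdict(list)
    for s in sessions:
        for t, (e, d, u) in split_session(s).items():
            e_sum[t] += e
            u_sum[t] += u
            d_vals[t].append(d)
    return {t: (e_sum[t], sum(d_vals[t]) / len(d_vals[t]), u_sum[t]) for t in sorted(e_sum)}

sessions = [Session(8.5, 10.5, 12.0, 10.0, 12.0), Session(9.0, 10.0, 10.5, 6.0, 6.0)]
for hour, (e, d, u) in aggregate(sessions).items():
    print(hour, round(e, 2), round(d, 2), round(u, 2))
```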

IV Reinforcement Learning Assisted Deep Learning Algorithm

This section presents the reinforcement learning assisted deep learning algorithm. In the first subsection, the LSTM is introduced to forecast the mean value of charging power from the aggregated time-series data. Then, the variation of the LSTM cell state is modeled as an MDP in the second subsection. In the last subsection, PPO is used to solve the MDP and obtain the variance of the forecasts.

IV-A Long Short-Term Memory

In this paper, the LSTM is applied to obtain the mean value of EVCS charging power for forecasting the probability distribution. Compared with other deep learning algorithms, such as the fully connected network, convolutional neural network and vanilla recurrent neural network, the LSTM is more capable of learning the long-term dependencies inherent in time-series data and is much less prone to vanishing gradients [23]. Overall, the LSTM is formulated as follows.

$$\boldsymbol{h}_t = A(\boldsymbol{x}_t, \boldsymbol{h}_{t-1}, \boldsymbol{c}_{t-1}) \tag{9}$$

where $\boldsymbol{h}_t$ represents the output of the LSTM at time $t$, which stands for the predicted mean value of EVCS charging power. The LSTM model $A$ takes $\boldsymbol{x}_t$, $\boldsymbol{h}_{t-1}$ and $\boldsymbol{c}_{t-1}$ as inputs, which denote the input data at time $t$ and the output and cell state of the LSTM at time $t-1$, respectively.

For clarity, the LSTM equations are given as follows.

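$$\boldsymbol{i}_t = \sigma\left(\boldsymbol{W}_i[\boldsymbol{h}_{t-1}, \boldsymbol{x}_t] + \boldsymbol{b}_i\right) \tag{10}$$

$$\boldsymbol{f}_t = \sigma\left(\boldsymbol{W}_f[\boldsymbol{h}_{t-1}, \boldsymbol{x}_t] + \boldsymbol{b}_f\right) \tag{11}$$

$$\boldsymbol{o}_t = \sigma\left(\boldsymbol{W}_o[\boldsymbol{h}_{t-1}, \boldsymbol{x}_t] + \boldsymbol{b}_o\right) \tag{12}$$

$$\tilde{\boldsymbol{c}}_t = \tanh\left(\boldsymbol{W}_c[\boldsymbol{h}_{t-1}, \boldsymbol{x}_t] + \boldsymbol{b}_c\right) \tag{13}$$

$$\boldsymbol{c}_t = \boldsymbol{f}_t \odot \boldsymbol{c}_{t-1} + \boldsymbol{i}_t \odot \tilde{\boldsymbol{c}}_t \tag{14}$$

$$\boldsymbol{h}_t = \boldsymbol{o}_t \odot \tanh(\boldsymbol{c}_t) \tag{15}$$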

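where $\boldsymbol{W}_i$, $\boldsymbol{W}_f$, $\boldsymbol{W}_o$, $\boldsymbol{W}_c$ are the learnable weight matrices and $\boldsymbol{b}_i$, $\boldsymbol{b}_f$, $\boldsymbol{b}_o$, $\boldsymbol{b}_c$ are the learnable bias vectors. $\sigma(\cdot)$ and $\tanh(\cdot)$ are the sigmoid and hyperbolic tangent activation functions, whose output ranges are $(0, 1)$ and $(-1, 1)$, respectively. $\odot$ indicates elementwise multiplication. $\boldsymbol{i}_t$, $\boldsymbol{f}_t$ and $\boldsymbol{o}_t$ stand for the input, forget and output gates, respectively. $\tilde{\boldsymbol{c}}_t$ represents the candidate information of the inputs to be written into $\boldsymbol{c}_t$, i.e., the cell state.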
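For reference, a single step of Eqs. (10)-(15) can be written in a few lines of NumPy. This is a minimal sketch with random, untrained weights and an arbitrary hidden size of 4; it only shows how the cell state, later used as the MDP state, is propagated over a 12-hour window of 3 features.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step following Eqs. (10)-(15).

    W maps each gate name ('i', 'f', 'o', 'c') to a (hidden, hidden + n_features)
    matrix applied to the concatenation [h_{t-1}, x_t]; b maps each name to a bias vector.
    """
    z = np.concatenate([h_prev, x_t])
    i_t = sigmoid(W["i"] @ z + b["i"])        # input gate, Eq. (10)
    f_t = sigmoid(W["f"] @ z + b["f"])        # forget gate, Eq. (11)
    o_t = sigmoid(W["o"] @ z + b["o"])        # output gate, Eq. (12)
    c_tilde = np.tanh(W["c"] @ z + b["c"])    # candidate information, Eq. (13)
    c_t = f_t * c_prev + i_t * c_tilde        # cell state, Eq. (14)
    h_t = o_t * np.tanh(c_t)                  # output, Eq. (15)
    return h_t, c_t

rng = np.random.default_rng(0)
hidden, n_features, T = 4, 3, 12              # 3 features per hour, 12-hour window
W = {k: rng.normal(scale=0.3, size=(hidden, hidden + n_features)) for k in "ifoc"}
b = {k: np.zeros(hidden) for k in "ifoc"}
h, c = np.zeros(hidden), np.zeros(hidden)
for x in rng.random((T, n_features)):         # random stand-in for hourly feature vectors
    h, c = lstm_step(x, h, c, W, b)
print("final cell state (the MDP state):", np.round(c, 3))
```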

It can be seen from Eqs. (14) and (15) that the value of the cell state strongly influences $\boldsymbol{h}_t$. Besides, these equations also show that the cell state is determined by the learnable parameters and the input data $\boldsymbol{x}_t$, which are affected by the uncertainty of the model and of user behavior, respectively. The uncertainty of the LSTM model comes from the learnable parameters, which are determined by a stochastic gradient-based optimization method. In addition, since $\boldsymbol{x}_t$ is aggregated from charging session data, it is uncertain as well because it is correlated with the behavior of users.

IV-B The Modeling of LSTM Cell State Variation

As described above, the input of the LSTM is time-series data that reflect the behavior of users. Besides, because of the recurrent structure of the LSTM, the cell state is influenced by its previous value. Therefore, the variation of the LSTM cell state is caused by the uncertainty that comes from both the model and the data. In this case, the variation of the cell state should be modeled to extract the variance of the probabilistic forecast distribution from the LSTM cell state. Since the cell state is determined by its previous state, its variation can be modeled as an MDP. Normally, an MDP is represented by a tuple $(\mathcal{S}, \mathcal{A}, \mathcal{P}, \mathcal{R})$. $\mathcal{S}$ is the state space, which contains all possible states of the environment. $\mathcal{A}$ represents the action space, i.e., the agent interacts with the environment by producing actions that guide the state transition. $\mathcal{P}$ stands for the set of transition probabilities, and $\mathcal{R}$, which depends on the state and action, is termed the reward. In our problem, the MDP is formulated as follows:

1) Environment: The environment produces the state and computes the reward based on the action of the agent. In this paper, the variance of the probabilistic forecast distribution is obtained from the variation of the cell state, which is the most representative parameter of the LSTM. This definition requires a premise, namely, that the LSTM model is well-trained and its learnable parameters are fixed. The premise ensures the decisive role of the cell state in the model; therefore, a well-trained LSTM is defined as the environment of the MDP.

2) Agent: Here, the agent stands for a policy that obtains the action by observing the state. Besides, the agent can learn from its own experience to gradually improve the policy.

3) State: The state $s_t$ at time $t$ should satisfy two criteria. First, it should be the most representative parameter of the environment. Second, it should depend only on the previous state and not be affected by the next state, namely, the non-aftereffect (Markov) property. According to the LSTM computation in Eqs. (10)-(15), the most suitable parameter is the cell state, which not only determines the output of the LSTM but is also obtained from the cell state at the previous time. Therefore, $s_t$ is set as $\boldsymbol{c}_t$, and the state space consists of all possible values of $\boldsymbol{c}_t$.

4) Action: As the uncertainties of the model and input data are contained in $s_t$, the agent is expected to capture this uncertainty to produce the variance of the forecast distribution, i.e., the action $a_t = \sigma_t^2$.

5) Reward: The reward $r_t$ evaluates $a_t$ on the basis of $s_t$. It gives the agent feedback on the performance of its action, which is used to update the agent. Since our target is the probability distribution of future charging power, which is constructed from the output of the environment (i.e., the LSTM) and the agent's action, the reward is designed to evaluate the performance of the predicted distribution.

To quantify this performance, the continuous ranked probability score (CRPS) is introduced as a measure of probabilistic forecast performance, i.e., the accuracy of the predicted probability distribution [24]. The details of the CRPS calculation are given in Appendix A.

Note that a lower CRPS indicates a more accurate probabilistic forecast. Therefore, the reward $r_t$ is defined as a decreasing function of the CRPS, which should be maximized by the agent; $\lambda$ stands for its shrinkage coefficient, which is set as 0.75.
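For a Gaussian forecast, the CRPS has a well-known closed form, $\mathrm{CRPS}(\mathcal{N}(\mu,\sigma^2), y) = \sigma\,[z(2\Phi(z)-1) + 2\varphi(z) - 1/\sqrt{\pi}]$ with $z = (y-\mu)/\sigma$, where $\Phi$ and $\varphi$ are the standard normal CDF and PDF. The sketch below implements it with the standard library. Because the exact reward expression is not reproduced here, `reward` uses a hypothetical shape $r_t = -\lambda\,\mathrm{CRPS}$ with $\lambda = 0.75$ purely for illustration.

```python
import math
from statistics import NormalDist

_STD = NormalDist()  # standard normal, for pdf/cdf

def crps_gaussian(mu: float, sigma: float, y: float) -> float:
    """Closed-form CRPS of N(mu, sigma^2) against the observation y (lower is better)."""
    z = (y - mu) / sigma
    return sigma * (z * (2 * _STD.cdf(z) - 1) + 2 * _STD.pdf(z) - 1 / math.sqrt(math.pi))

def reward(mu: float, var: float, y: float, lam: float = 0.75) -> float:
    """HYPOTHETICAL reward shape r_t = -lam * CRPS; not the paper's exact expression."""
    return -lam * crps_gaussian(mu, math.sqrt(var), y)

# A well-centered, tight forecast earns a higher reward than an overly wide one.
print(reward(mu=40.0, var=4.0, y=41.0), reward(mu=40.0, var=100.0, y=41.0))
```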

IV-C Proximal Policy Optimization

Based on the MDP, a recent reinforcement learning algorithm, PPO, is introduced to train the agent to generate the optimal policy $\pi^*$. PPO contains two deep neural networks, termed the actor and the critic. The actor works as the agent and produces the action by a policy $\pi_\theta$ with parameter $\theta$. The critic, parameterized by $\phi$, is used to evaluate the performance of the actor. It approximates the value function $V_\phi(s_t)$, which evaluates the value of the state $s_t$, i.e., the expected reward from $s_t$ to the end of the MDP, formulated as follows.

$$V_\phi(s_t) = \mathbb{E}\left[G_t \mid s_t\right] \tag{16}$$

where $G_t$ is the return function, which is defined as the discounted cumulative reward from state $s_t$.

$$G_t = \sum_{k=t}^{H} \gamma^{k-t} r_k \tag{17}$$

where $\gamma$ stands for the discount factor, $r_k$ denotes the reward at time $k$, and $H$ is the finite time horizon of the MDP.
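Eq. (17) can be evaluated for a whole trajectory in one backward pass using the recursion $G_t = r_t + \gamma G_{t+1}$. A minimal sketch, where the default $\gamma = 0.99$ is an arbitrary choice rather than a value from this paper:

```python
def discounted_returns(rewards, gamma=0.99):
    """Compute G_t = sum_{k=t}^{H} gamma^(k-t) r_k for every t with one backward pass."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running   # G_t = r_t + gamma * G_{t+1}
        returns[t] = running
    return returns

print(discounted_returns([1.0, 0.0, 2.0], gamma=0.5))  # [1.5, 1.0, 2.0]
```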

The main target of PPO is to train the actor and critic by learning from experience tuples $(s_t, a_t, r_t, s_{t+1})$, which are obtained through the interaction between the actor and the environment. After observing $s_t$, the actor generates a Normal distribution, and the action is sampled from it, i.e., $a_t \sim \mathcal{N}(m_t, \delta_t^2)$. This introduces randomness into the produced action and thus creates more diverse actions for the same state. Since the rewards depend on the actions, the rewards become diverse as well. This mechanism is termed the exploration of PPO, and it is used to enrich the experience tuples to prevent the actor from getting trapped in local optima.

Then, based on the mean value $\mu_t$ predicted by the LSTM, the predicted probability distribution $\mathcal{N}(\mu_t, a_t)$ is constructed. Afterwards, $r_t$ is calculated by the reward function to evaluate $a_t$, and the next state $s_{t+1}$ is also stored. With the experience tuples, the parameters of both networks are updated by the following equations.

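$$\theta \leftarrow \theta - \alpha_a \nabla_\theta L_a(\theta) \tag{18}$$

$$\phi \leftarrow \phi - \alpha_c \nabla_\phi L_c(\phi) \tag{19}$$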

where $\alpha_a$ and $\alpha_c$ denote the learning rates of the actor and critic, respectively. $L_a(\theta)$ and $L_c(\phi)$ stand for the loss functions of the two networks, which are given by

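$$L_a(\theta) = -\mathbb{E}_t\left[\min\Big(\rho_t(\theta) A(s_t, a_t),\ \mathrm{clip}\big(\rho_t(\theta), 1-\varepsilon, 1+\varepsilon\big) A(s_t, a_t)\Big)\right], \quad \rho_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_k}(a_t \mid s_t)} \tag{20}$$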

$$L_c(\phi) = \mathbb{E}_t\left[\big(G_t - V_\phi(s_t)\big)^2\right] \tag{21}$$

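where $\pi_{\theta_k}$ denotes the policy at the $k$th iteration, and $\varepsilon$ is the clipping parameter. $\mathrm{clip}(\cdot)$ is the clip function: $\mathrm{clip}(\rho, 1-\varepsilon, 1+\varepsilon)$ returns $1-\varepsilon$ if $\rho < 1-\varepsilon$, $1+\varepsilon$ if $\rho > 1+\varepsilon$, and $\rho$ otherwise. $A(s_t, a_t)$ is the advantage of action $a_t$ under state $s_t$, which is the difference between $Q(s_t, a_t)$ and the average performance of the actor $V(s_t)$, formulated as follows.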

$$A(s_t, a_t) = Q(s_t, a_t) - V(s_t) \tag{22}$$

The Q-function $Q(s_t, a_t)$ evaluates the value of executing action $a_t$ at state $s_t$, i.e., the expected reward from $t$ to $H$ after selecting the action $a_t$. It is formulated as follows.

$$Q(s_t, a_t) = \mathbb{E}\left[G_t \mid s_t, a_t\right] \tag{23}$$

Then, the advantage is calculated by the following equation in the PPO algorithm.

| (24) |
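The actor and critic losses can be sketched in NumPy for the Gaussian policy used here. This is an illustration under assumptions, not the authors' implementation: the advantage is estimated simply as $G_t - V(s_t)$ (Eq. (24) may use a different estimator), the critic is fit by mean squared error against the return, and $\varepsilon = 0.2$ is a common default rather than this paper's setting.

```python
import numpy as np

def gaussian_log_prob(a, mean, std):
    """Log-density of a ~ N(mean, std^2), elementwise."""
    return -0.5 * ((a - mean) / std) ** 2 - np.log(std) - 0.5 * np.log(2 * np.pi)

def ppo_actor_loss(a, mean_new, std_new, mean_old, std_old, adv, eps=0.2):
    """Clipped surrogate loss, averaged over a batch (to be minimized)."""
    ratio = np.exp(gaussian_log_prob(a, mean_new, std_new)
                   - gaussian_log_prob(a, mean_old, std_old))   # pi_theta / pi_theta_k
    unclipped = ratio * adv
    clipped = np.clip(ratio, 1 - eps, 1 + eps) * adv
    return -np.mean(np.minimum(unclipped, clipped))

def critic_loss(returns, values):
    """Mean squared error between returns G_t and critic estimates V(s_t)."""
    return np.mean((returns - values) ** 2)

# Toy batch; advantages use the simple estimate A_t = G_t - V(s_t) (an assumption).
a = np.array([1.0, 1.5, 0.5])
returns, values = np.array([-0.4, -0.2, -0.9]), np.array([-0.5, -0.5, -0.5])
adv = returns - values
loss_a = ppo_actor_loss(a, mean_new=1.1, std_new=0.3, mean_old=1.0, std_old=0.3, adv=adv)
print(loss_a, critic_loss(returns, values))
```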

V Adaptive Exploration Proximal Policy Optimization

The actor of PPO generates $m_t$ and $\delta_t$ based on the state $s_t$. As discussed in the above section, the action is sampled from the distribution $\mathcal{N}(m_t, \delta_t^2)$, so the value of $\delta_t$ determines the exploration of PPO. With the same $m_t$, a larger $\delta_t$ yields a wider distribution, which brings a higher degree of exploration and more diverse experiences. On the contrary, a smaller $\delta_t$ provides less diverse experience, because the action distribution is more concentrated and the probability of deviating from $m_t$ is lower. In this situation, PPO trains its actor and critic mainly on its previously generated experience, which is termed exploitation.

Note that exploration and exploitation usually conflict. Ideally, a reinforcement learning algorithm focuses on exploration in the early stage of training to obtain sufficient experience. However, if the algorithm keeps a high level of exploration, it is hard to converge because of its high randomness. Hence, exploration should shrink as training proceeds, and the degree of exploitation increases accordingly, so that the explored experience is fully utilized to help the actor and critic converge. However, the practical training process of PPO differs from this ideal one. Since $\delta_t$ is generated by the actor, the degree of exploration is not directly controllable: $\delta_t$ depends on both the manually set initial condition and the inherent parameters of the actor, which are difficult to converge. Thus, it may cause excessive exploitation prematurely. Without enough exploration, the diversity of the experience is low, and PPO may get trapped in local optima.

To tackle this issue, we propose an adaptive exploration mechanism for PPO that dynamically balances its exploration and exploitation. The resulting algorithm is termed adaptive exploration proximal policy optimization (AePPO). In AePPO, the action is sampled from $\mathcal{N}(m_t, \tilde{\delta}^2)$, where $m_t$ is generated by the actor and $\tilde{\delta}$ is determined by the proposed adaptive exploration mechanism.

With this mechanism, the exploration of AePPO is increased in the early training stage and then gradually shrunk to enhance the exploitation of experience. It is inadvisable to achieve this target by simply reducing $\tilde{\delta}$ as training proceeds, because different actions may produce the same performance, i.e., reward, which may limit the expansion of experience. Instead, $\tilde{\delta}$ should be adjusted according to the reward performance.

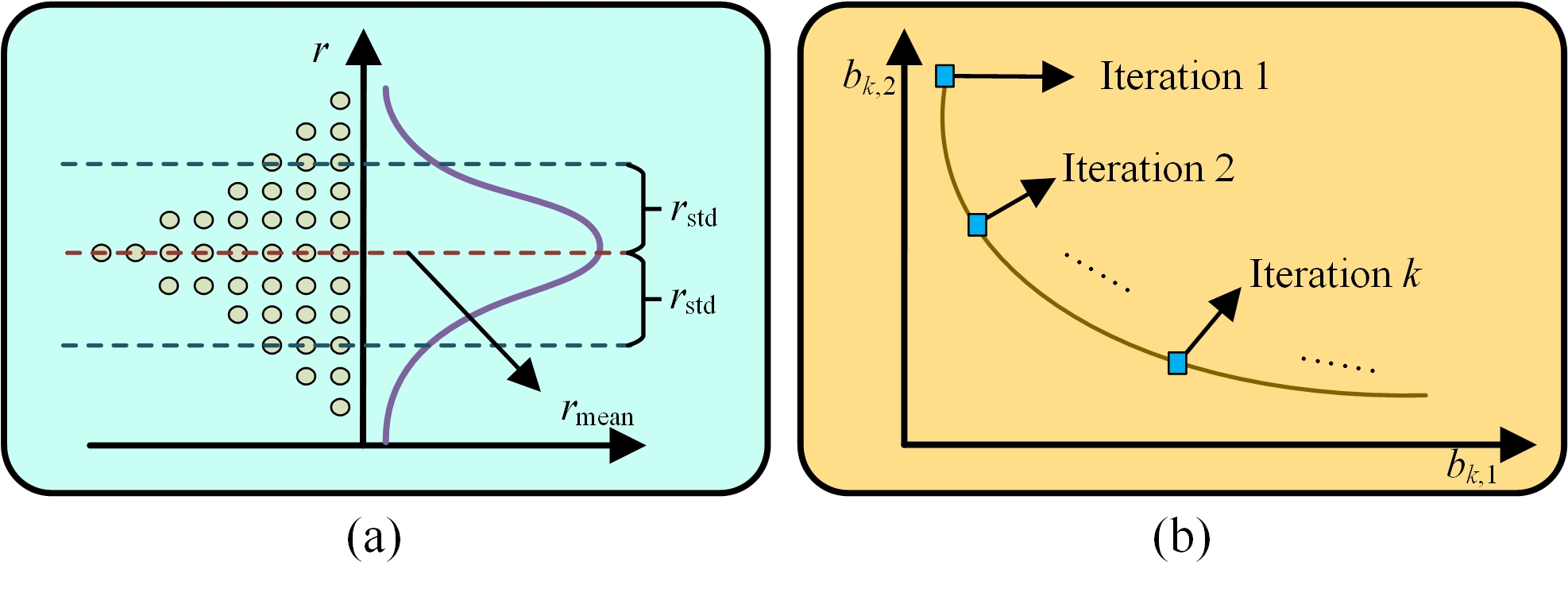

In PPO, the actions are sampled from $\mathcal{N}(m_t, \tilde{\delta}^2)$, and the reward is determined by both the action and state, i.e., $r_t = \mathcal{R}(s_t, a_t)$. Then, considering Bayes' theorem, since $r_t$ corresponds one-to-one to the $(s_t, a_t)$ pair, when $s_t$ is fixed, $r_t$ should also follow a Normal distribution, whose mean and variance are denoted by $\mu_r$ and $\sigma_r^2$, respectively. As shown in Fig. 3(a), the two parameters can be estimated by the following equations.

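$$a_t^{(j)} \sim \mathcal{N}(m_t, \tilde{\delta}^2), \quad j = 1, \dots, M \tag{25}$$

$$\mu_r = \frac{1}{M} \sum_{j=1}^{M} \mathcal{R}\big(s_t, a_t^{(j)}\big) \tag{26}$$

$$\sigma_r^2 = \frac{1}{M} \sum_{j=1}^{M} \Big(\mathcal{R}\big(s_t, a_t^{(j)}\big) - \mu_r\Big)^2 \tag{27}$$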

where $a_t^{(j)}$ indicates the $j$th of the $M$ actions sampled from $\mathcal{N}(m_t, \tilde{\delta}^2)$.
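The estimation of $\mu_r$ and $\sigma_r^2$ can be sketched by Monte Carlo sampling. The reward function below is hypothetical (a quadratic penalty around a best action of 2.0 for one fixed state), chosen only to make the sketch runnable; it shows that widening $\tilde{\delta}$ raises $\sigma_r^2$ while lowering $\mu_r$. Sampled actions may be negative here, whereas a real variance action would need to be kept positive.

```python
import numpy as np

def reward_statistics(reward_fn, m_t, delta, n_samples=1000, seed=0):
    """Estimate (mu_r, sigma_r^2) of rewards for actions a ~ N(m_t, delta^2) at a fixed state."""
    rng = np.random.default_rng(seed)
    actions = rng.normal(m_t, delta, size=n_samples)   # sampled actions a_t^(j)
    rewards = np.array([reward_fn(a) for a in actions])
    return rewards.mean(), rewards.var()

def toy_reward(a):
    """HYPOTHETICAL reward for one fixed state: best when the action equals 2.0."""
    return -(a - 2.0) ** 2

for delta in (0.1, 0.5, 1.0):
    mu_r, var_r = reward_statistics(toy_reward, m_t=1.8, delta=delta)
    print(f"delta={delta}: mu_r={mu_r:.3f}, sigma_r^2={var_r:.3f}")
```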

Note that $\sigma_r^2$ reflects the exploration of PPO and is influenced by $\tilde{\delta}$. If $\sigma_r^2$ is enlarged by changing $\tilde{\delta}$, the diversity of experience is enriched, i.e., the exploration ability of PPO is enhanced. Besides, since $\mu_r$ evaluates the performance of PPO, a higher $\mu_r$ indicates improved convergence as well. Therefore, the focus on exploration and exploitation should shift during training, which can be represented by the following equation.

$$J(\tilde{\delta}) = w_1 \sigma_r^2 + w_2 \mu_r \tag{28}$$

where $J$ represents the degree of exploration, and $w_1$ and $w_2$ are two weights that represent the focus on $\sigma_r^2$ and $\mu_r$, respectively. As shown in Fig. 3(b), the values of these weights vary with the training iteration. In the early stage of training, $w_1$ is larger than $w_2$, which means $J$ is highly influenced by $\sigma_r^2$. Then, $w_2$ increases as training proceeds, and $w_1$ gradually decreases. When $w_1 > w_2$, $J$ is more influenced by $\sigma_r^2$, which means a larger $\tilde{\delta}$ causes a higher $\sigma_r^2$ and thus strengthens exploration. On the other hand, when $w_1 < w_2$, an increase in $\mu_r$ leads to an increase in $J$; the focus of $J$ shifts from raising exploration to increasing the average performance of PPO, i.e., enhancing exploitation. Therefore, $J$ should be maximized by adjusting $\tilde{\delta}$ during the training of AePPO, i.e., $-J$ should be minimized. In this paper, this problem is solved by particle swarm optimization (PSO), a well-known optimization algorithm [25].
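The PSO step can be sketched as follows. Both the weight schedule ($w_2 = k/K$, $w_1 = 1 - w_2$) and the reward model are assumptions made for illustration: with the hypothetical reward $r = -(a - a^*)^2$ and $a \sim \mathcal{N}(a^* + b, \tilde{\delta}^2)$, the statistics have the closed forms $\mu_r = -(b^2 + \tilde{\delta}^2)$ and $\sigma_r^2 = 2\tilde{\delta}^4 + 4b^2\tilde{\delta}^2$. The PSO routine is a textbook one-dimensional variant with common coefficient choices, not the configuration of [25].

```python
import numpy as np

def pso_minimize(objective, lower, upper, n_particles=20, n_iters=50,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal 1-D particle swarm optimization over the interval [lower, upper]."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(lower, upper, n_particles)             # particle positions
    v = np.zeros(n_particles)                               # particle velocities
    p_best, p_val = x.copy(), np.array([objective(xi) for xi in x])
    g_best = p_best[np.argmin(p_val)]
    for _ in range(n_iters):
        r1, r2 = rng.random(n_particles), rng.random(n_particles)
        v = inertia * v + c1 * r1 * (p_best - x) + c2 * r2 * (g_best - x)
        x = np.clip(x + v, lower, upper)                    # keep particles feasible
        vals = np.array([objective(xi) for xi in x])
        improved = vals < p_val
        p_best[improved], p_val[improved] = x[improved], vals[improved]
        g_best = p_best[np.argmin(p_val)]
    return float(g_best), float(p_val.min())

def neg_J(delta, w1, w2, bias=0.2):
    """-J = -(w1 * sigma_r^2 + w2 * mu_r) under the HYPOTHETICAL quadratic reward model."""
    mu_r = -(bias ** 2 + delta ** 2)
    var_r = 2 * delta ** 4 + 4 * bias ** 2 * delta ** 2
    return -(w1 * var_r + w2 * mu_r)

K = 100  # total training iterations (hypothetical)
for k in (0, 50, 100):
    w2 = k / K            # ASSUMED linear schedule standing in for Fig. 3(b)
    w1 = 1.0 - w2
    delta, _ = pso_minimize(lambda d: neg_J(d, w1, w2), lower=0.01, upper=1.0)
    print(f"iteration {k}: adaptive delta = {delta:.3f}")
```

Under these assumptions, the optimizer pushes $\tilde{\delta}$ to its upper bound early in training (exploration) and to its lower bound at the end (exploitation).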

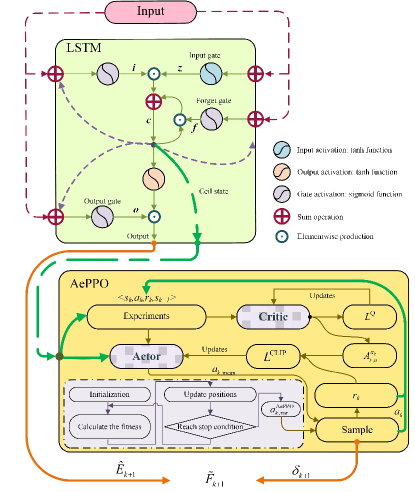

In summary, after separate training, the LSTM and the proposed AePPO are interconnected as follows. As illustrated in the upper part of Fig. 4, the LSTM takes the input data obtained by the data transformer and performs its internal calculation according to Eqs. (10)-(15). The output of the LSTM is the predicted mean value of EVCS charging power. Then, the cell state of the LSTM is extracted as the state of AePPO to fill its experience. The lower part of Fig. 4 shows the process of an AePPO training iteration. The yellow square in this figure represents the update mechanism of the actor and critic according to Eqs. (19)-(24), while the violet area represents the adaptive exploration procedure. Then, PSO is applied to minimize $-J$ and obtain $\tilde{\delta}$, which represents the adaptive exploration of AePPO. Afterwards, the variance of the predicted distribution, $a_t = \sigma_t^2$, is sampled from $\mathcal{N}(m_t, \tilde{\delta}^2)$, where $m_t$ is determined by the actor of AePPO. Finally, based on Eqs. (1)-(4), the probabilistic prediction distribution of EVCS charging power is represented by $\mathcal{N}(\mu_t, \sigma_t^2)$. The pseudocode of LSTM-AePPO is provided in Appendix B.

VI Case Study

VI-A Data Description and Experimental Initialization

In this part, we conduct a case study to verify the effectiveness of the proposed algorithm, LSTM-AePPO. The training data are collected from the ACN dataset [26], an open dataset for EVCS charging research. The charging sessions in the ACN dataset are recorded at two EVCSs: one at Caltech, Pasadena, with 54 chargers, and the other at the Jet Propulsion Laboratory (JPL), La Cañada Flintridge, USA, with 50 chargers. In our experiments, the charging sessions from 1 June 2018 to 1 June 2020 are used to train LSTM-AePPO, comprising 25916 and 22128 charging sessions for Caltech and JPL, respectively.

To demonstrate the effectiveness of LSTM-AePPO, several algorithms, namely support vector quantile regression (QRSVM) [27], linear quantile regression (QR) [28], and gradient boosting quantile regression (GBQR) [29], are used for comparison. Considering the seasonal variations of charging behavior, the performance and metric comparisons are conducted for each of the four seasons. Moreover, to further verify the superiority of the proposed AePPO, the traditional PPO is used to generate the from the cell state; this baseline is termed LSTM-PPO.

Then, CRPS, Winkler [23], and Pinball [30] are introduced as evaluation metrics. The three metrics focus on the accuracy, variation, and reliability of the forecast distribution, respectively. Their definitions are given in Appendices A and C.

In this paper, the features of the former hours are used in the probabilistic forecasting of EVCS charging power at hour . The value of is set to 12, and the other hyperparameters of LSTM-AePPO are given in Table I. The experiments are conducted on a computer with 8 GB RAM and an Intel i5-8265U CPU, and are implemented in Python.
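As a minimal sketch of how a 12-hour history window turns an hourly charging-power series into supervised samples, the hypothetical helper `make_windows` pairs each hour with the 12 preceding values. It assumes the only input feature is the past hourly charging power; the feature set used in the paper may contain more.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], history: int = 12
                 ) -> Tuple[List[List[float]], List[float]]:
    """Pair each hour t >= history with the `history` preceding hourly values."""
    if history < 1 or len(series) <= history:
        raise ValueError("series must be longer than the history window")
    inputs, targets = [], []
    for t in range(history, len(series)):
        inputs.append(list(series[t - history:t]))  # hours t-history .. t-1
        targets.append(series[t])                    # hour t, the forecast target
    return inputs, targets


if __name__ == "__main__":
    X, y = make_windows([float(h) for h in range(30)])
    print(len(X), X[0], y[0])
```

A series of length N yields N - 12 samples, so the first 12 hours of the test period serve only as history.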

TABLE I: Hyperparameters of LSTM-AePPO

| Symbol | Description | Value |
|---|---|---|
|  | The learning rate of actor and critic | 0.0001 |
|  | The learning rate of LSTM | 0.001 |
|  | The clip value of actor loss | 0.1 |
|  | The discount factor | 0.99 |
|  | The maximum number of training iterations of AePPO | 10000 |
|  | The population of PSO | 20 |
|  | The maximum iteration of PSO | 100 |
|  | The learning factor of PSO | 2 |
|  | The inertia weight of PSO | 0.7 |

TABLE II: Winkler and Pinball values of the compared algorithms at 30%, 60% and 90% PIs

| Station | Season | PI (%) | Winkler QR | Winkler QRSVM | Winkler GBQR | Winkler LSTM-PPO | Winkler LSTM-AePPO | Pinball QR | Pinball QRSVM | Pinball GBQR | Pinball LSTM-PPO | Pinball LSTM-AePPO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Caltech | Spring | 30 | 144.565 | 113.104 | 116.499 | 54.882 | 30.099 | 18.922 | 17.335 | 17.715 | 15.412 | 15.158 |
| Caltech | Spring | 60 | 85.686 | 75.070 | 73.747 | 45.429 | 21.036 | 18.023 | 16.762 | 17.095 | 18.512 | 13.311 |
| Caltech | Spring | 90 | 40.323 | 39.088 | 38.607 | 34.876 | 23.966 | 16.656 | 15.783 | 15.940 | 23.966 | 14.057 |
| Caltech | Summer | 30 | 203.099 | 160.325 | 191.611 | 65.351 | 26.914 | 26.366 | 21.962 | 24.434 | 24.424 | 23.475 |
| Caltech | Summer | 60 | 99.963 | 93.854 | 102.781 | 40.923 | 16.665 | 24.387 | 20.960 | 22.590 | 23.475 | 15.835 |
| Caltech | Summer | 90 | 44.193 | 45.280 | 45.503 | 27.592 | 13.074 | 21.359 | 18.935 | 20.003 | 21.815 | 18.664 |
| Caltech | Autumn | 30 | 131.399 | 83.484 | 93.647 | 37.247 | 67.858 | 18.495 | 16.616 | 18.910 | 22.154 | 14.480 |
| Caltech | Autumn | 60 | 76.467 | 42.663 | 47.674 | 22.865 | 35.791 | 17.788 | 16.595 | 18.434 | 15.039 | 14.683 |
| Caltech | Autumn | 90 | 34.970 | 28.657 | 28.326 | 18.342 | 21.510 | 16.446 | 15.818 | 16.988 | 19.850 | 14.870 |
| Caltech | Winter | 30 | 131.210 | 137.102 | 124.088 | 55.526 | 19.342 | 19.777 | 18.939 | 18.630 | 13.498 | 13.328 |
| Caltech | Winter | 60 | 76.665 | 87.910 | 77.862 | 45.158 | 15.463 | 18.984 | 18.101 | 17.884 | 17.004 | 14.124 |
| Caltech | Winter | 90 | 28.660 | 30.142 | 30.627 | 21.798 | 14.666 | 17.271 | 16.590 | 16.401 | 24.813 | 14.378 |
| JPL | Spring | 30 | 367.828 | 292.015 | 278.605 | 71.544 | 27.800 | 36.793 | 30.789 | 32.484 | 23.128 | 18.072 |
| JPL | Spring | 60 | 171.225 | 157.093 | 135.559 | 26.264 | 19.512 | 34.360 | 29.845 | 30.738 | 31.896 | 20.312 |
| JPL | Spring | 90 | 54.291 | 49.069 | 49.749 | 21.784 | 18.296 | 30.001 | 26.917 | 27.372 | 46.840 | 20.760 |
| JPL | Summer | 30 | 210.133 | 237.387 | 208.853 | 37.048 | 24.952 | 32.200 | 26.701 | 27.833 | 25.464 | 25.464 |
| JPL | Summer | 60 | 103.983 | 112.102 | 100.350 | 27.096 | 21.368 | 31.269 | 27.156 | 27.538 | 31.480 | 22.680 |
| JPL | Summer | 90 | 52.745 | 49.958 | 50.535 | 24.824 | 19.608 | 27.807 | 25.061 | 25.105 | 37.528 | 22.584 |
| JPL | Autumn | 30 | 157.871 | 123.457 | 94.360 | 29.240 | 23.064 | 21.857 | 21.287 | 21.325 | 22.712 | 21.016 |
| JPL | Autumn | 60 | 54.617 | 57.052 | 52.259 | 21.976 | 19.416 | 23.676 | 23.167 | 23.110 | 25.400 | 21.464 |
| JPL | Autumn | 90 | 47.000 | 47.033 | 47.040 | 18.232 | 18.584 | 22.834 | 22.420 | 22.123 | 28.856 | 21.656 |
| JPL | Winter | 30 | 524.566 | 569.323 | 505.956 | 38.328 | 32.440 | 45.037 | 43.167 | 40.867 | 22.680 | 22.136 |
| JPL | Winter | 60 | 258.636 | 314.319 | 265.607 | 23.416 | 23.256 | 41.205 | 39.586 | 37.381 | 24.920 | 24.888 |
| JPL | Winter | 90 | 51.263 | 76.256 | 73.966 | 19.032 | 18.776 | 34.898 | 33.815 | 32.077 | 31.512 | 29.400 |

VI-B The Performance of Probabilistic Forecasting Obtained by LSTM-AePPO

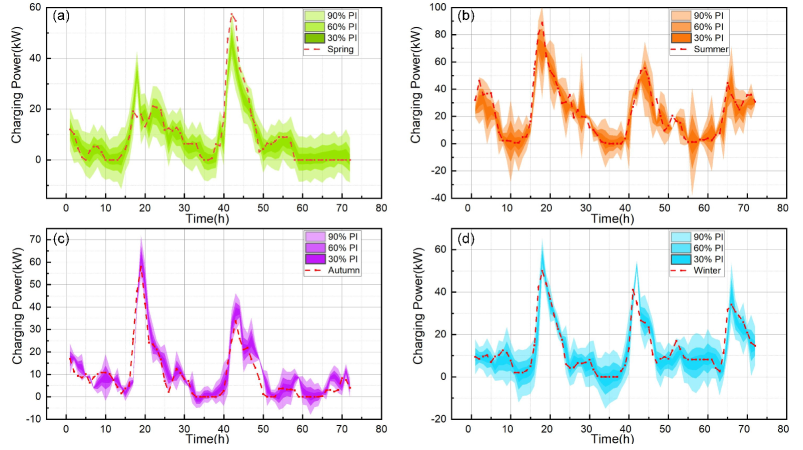

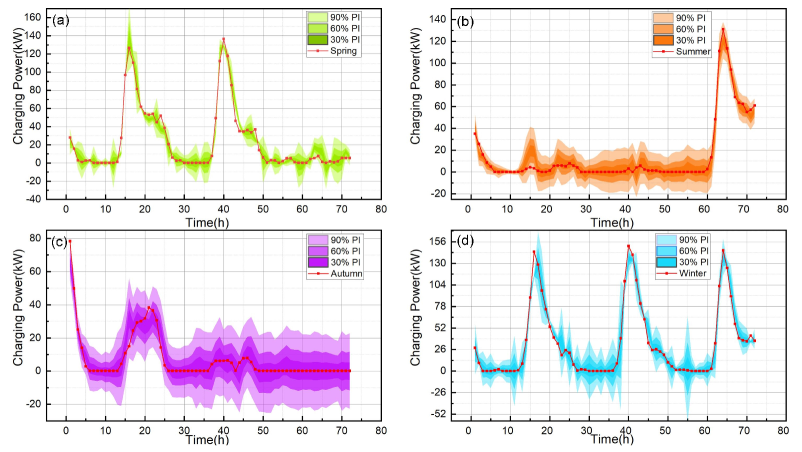

The probabilistic forecasts of EVCS charging power obtained by LSTM-AePPO are shown in Figs. 5 and 6, which illustrate the results for the Caltech and JPL cases over 3 test days (72 hours). Subfigures (a), (b), (c) and (d) show the forecasts for Spring, Summer, Autumn, and Winter, respectively. Specifically, the prediction interval (PI) denotes the interval between the lower and upper quantiles at probability , and a darker color denotes a PI with a smaller . Consequently, the 30% PI is shown with the darkest color and the 90% PI with the lightest. The real charging power is represented by the red line in these figures.

One of the biggest challenges in charging power forecasting is predicting the peaks, which vary with the seasons. For instance, in the Spring and Autumn cases of Caltech, the peaks emerge around the 18th and 42nd hours, as shown in Figs. 5(a) and (c). However, as shown in Figs. 5(b) and (d), the peaks in Summer and Winter appear 3 times, i.e., more frequently. Besides, as shown in Fig. 6, the peaks are more irregular in the JPL case: they appear twice in the Spring and Autumn cases, once in the Summer case and 3 times in the Winter case. Despite these irregular peaks, the probabilistic forecast distribution of LSTM-AePPO follows the fluctuation of the EVCS charging power. As shown in Figs. 5 and 6, most of the values on the red lines are covered by the 90% PI, which means that the probabilistic forecast distribution represents the variation of the real charging power well.
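The PI definition and the coverage statement above can be made concrete with a short sketch. It assumes a Gaussian forecast per hour and central (equal-tailed) PIs; the helpers `central_pi` and `pi_coverage` are hypothetical, since the paper reports coverage only visually.

```python
from statistics import NormalDist
from typing import Sequence, Tuple


def central_pi(mu: float, sigma: float, p: float) -> Tuple[float, float]:
    """Lower/upper quantiles of the central PI with probability p (e.g. 0.9)."""
    tail = (1.0 - p) / 2.0
    dist = NormalDist(mu, sigma)
    return dist.inv_cdf(tail), dist.inv_cdf(1.0 - tail)


def pi_coverage(y_true: Sequence[float], mus: Sequence[float],
                sigmas: Sequence[float], p: float) -> float:
    """Fraction of hours whose real charging power falls inside the p-PI."""
    if not (len(y_true) == len(mus) == len(sigmas)) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = 0
    for y, mu, sigma in zip(y_true, mus, sigmas):
        lower, upper = central_pi(mu, sigma, p)
        if lower <= y <= upper:
            hits += 1
    return hits / len(y_true)
```

For a well-calibrated forecaster, the empirical coverage of the 90% PI over the 72 test hours should be close to 0.9; a much lower value signals intervals that are too narrow.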

VI-C Metrics Comparison Among Different Algorithms

To verify the effectiveness of LSTM-AePPO, the Winkler, Pinball and CRPS metrics are used for comparison. Since the values of Winkler and Pinball depend on the PI, the proposed algorithm is compared with the other algorithms under the 30%, 60% and 90% PIs, as shown in Table II.

In this table, the best metric value of each case is marked in bold. It can be seen that LSTM-AePPO outperforms the other algorithms on Winkler and Pinball in most cases. For example, in the Spring case of Caltech, the (Winkler, Pinball) values of LSTM-AePPO at the 30%, 60% and 90% PIs are (30.099, 15.158), (21.036, 13.311) and (23.966, 14.057), which are lower than those of the other algorithms. Besides, in the Summer and Autumn cases of Caltech, LSTM-AePPO always performs the best at the 60% PI. Moreover, in the Winter case of Caltech, QR, QRSVM, GBQR and LSTM-PPO also perform worse than LSTM-AePPO, since their Winkler and Pinball values are larger. Generally, the forecast distribution obtained by LSTM-AePPO has better variation and reliability in the Caltech case. The effectiveness is also verified comprehensively in the JPL case. As shown in Table II, the Winkler and Pinball values obtained by LSTM-AePPO in the JPL case are marked in bold, which means that QR, QRSVM, GBQR and LSTM-PPO are all surpassed in terms of both the variation and the reliability of the forecast distribution.

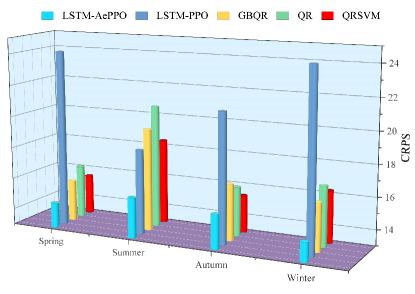

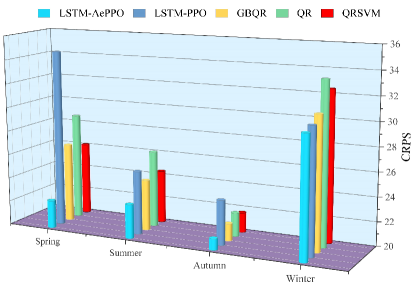

Note that LSTM-AePPO does not perform the best in the following two cases: the Winkler of the Caltech Autumn case at the 90% PI (21.510 for LSTM-AePPO versus 18.342 for LSTM-PPO) and the Pinball of the Caltech Summer case at the 30% PI (23.475 for LSTM-AePPO versus 21.962 for QRSVM). A possible reason is that both Winkler and Pinball depend on the chosen PI. Therefore, CRPS, a metric that is independent of the PI, is introduced to evaluate the accuracy of the whole forecast distribution; a lower CRPS indicates a more accurate forecast distribution. The CRPS comparisons for the Caltech and JPL cases are illustrated in Figs. 7 and 8. Both figures show that the proposed LSTM-AePPO achieves the lowest CRPS in all cases, indicating that its accuracy surpasses that of the comparison algorithms. Based on the Winkler, Pinball and CRPS values under different cases, it can be concluded that LSTM-AePPO is more effective than QR, GBQR, QRSVM and LSTM-PPO.

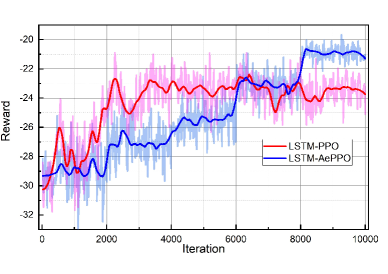

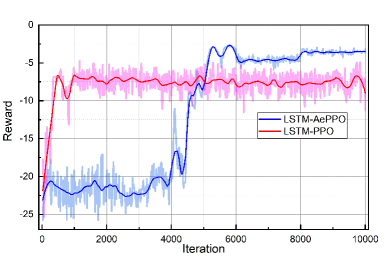

VI-D The Effectiveness of AePPO

To illustrate the effectiveness of the proposed adaptive exploration mechanism in LSTM-AePPO, the reward curves of PPO and AePPO over 10000 training iterations are compared for the Caltech and JPL cases in Figs. 9 and 10. In Fig. 9, the reward of PPO increases during the first 3000 iterations and then stays steady. Similarly, as shown in Fig. 10, the reward of PPO rises continuously from -20 to -7.5 in the first 1000 iterations and then remains at a relatively stable level.

Owing to the adaptive exploration mechanism, AePPO focuses on exploration in the early stage, which enriches its experience at the cost of a lagging reward. For instance, in the Caltech case, the reward of PPO increases from around -30 to -23 during the first 3000 iterations, while that of AePPO rises only from -29 to -27. The same situation appears in the JPL case: the reward of PPO goes up from -25 to -7 in the early training, while the corresponding reward of AePPO hardly increases. However, the exploration of PPO decreases due to the premature convergence of its actor, so its experience is not diverse enough, which may trap PPO in a local optimum. As a result, the rewards of AePPO increase and surpass those of PPO after around the 8000th and 5000th iterations in the Caltech and JPL cases, respectively.

Therefore, the adaptive exploration mechanism strengthens the accumulation of AePPO experience in the early stage. AePPO then makes full use of this experience during training and finally achieves a higher reward than PPO. In addition, the reward curves obtained by LSTM-AePPO fluctuate less in both cases. This indicates that the proposed AePPO provides more stable and superior performance compared with the original PPO.

VII Conclusion

This paper has proposed a reinforcement learning assisted deep learning probabilistic forecast framework for the charging power of EVCSs, which contains a data transformer method and a probabilistic forecast algorithm termed LSTM-AePPO. After being preprocessed by the data transformer, the charging session data are used to train the LSTM, which provides the mean value of the forecast distribution. Then, the variation of the LSTM cell state is modeled as an MDP, and a reinforcement learning algorithm, AePPO, is applied to solve it. In this way, the variance of the forecast distribution is provided by AePPO. In addition, to balance exploration and exploitation, an adaptive exploration mechanism is proposed to enrich the diversity of the experience, thereby preventing the premature convergence of AePPO. Finally, case studies are conducted on the charging session data of Caltech and JPL. The experiments show the superior performance of the proposed LSTM-AePPO compared with QR, QRSVM, GBQR and LSTM-PPO on the CRPS, Winkler and Pinball metrics. Moreover, the comparison between the reward curves of PPO and AePPO indicates that the adaptive exploration mechanism balances the exploration and exploitation of reinforcement learning more effectively.

Two challenges remain in our work and are worth investigating in the future. First, better point forecasting methods should be investigated to achieve higher prediction accuracy; such a method should also retain parameters that simultaneously carry the uncertainties of the model and the data, such as the cell state in LSTM. Second, the training process of the reinforcement learning algorithm, i.e., AePPO, should be accelerated while preserving the diversity of exploration and the convergence performance.

References

- [1] Z. Wei, Y. Li, and L. Cai, “Electric vehicle charging scheme for a park-and-charge system considering battery degradation costs,” IEEE Transactions on Intelligent Vehicles, vol. 3, no. 3, pp. 361–373, 2018.

- [2] L. Liu, F. Kong, X. Liu, Y. Peng, and Q. Wang, “A review on electric vehicles interacting with renewable energy in smart grid,” Renewable and Sustainable Energy Reviews, vol. 51, pp. 648–661, 2015.

- [3] Y. Shi, H. D. Tuan, A. V. Savkin, T. Q. Duong, and H. V. Poor, “Model predictive control for smart grids with multiple electric-vehicle charging stations,” IEEE Transactions on Smart Grid, vol. 10, no. 2, pp. 2127–2136, 2019.

- [4] K. Chaudhari, N. K. Kandasamy, A. Krishnan, A. Ukil, and H. B. Gooi, “Agent-based aggregated behavior modeling for electric vehicle charging load,” IEEE Transactions on Industrial Informatics, vol. 15, no. 2, pp. 856–868, 2019.

- [5] S. Cheng, Z. Wei, D. Shang, Z. Zhao, and H. Chen, “Charging load prediction and distribution network reliability evaluation considering electric vehicles’ spatial-temporal transfer randomness,” IEEE Access, vol. 8, pp. 124084–124096, 2020.

- [6] L. Chen, F. Yang, Q. Xing, S. Wu, R. Wang, and J. Chen, “Spatial-temporal distribution prediction of charging load for electric vehicles based on dynamic traffic information,” in 2020 IEEE 4th Conference on Energy Internet and Energy System Integration, 2020, pp. 1269–1274.

- [7] Y. Zheng, Z. Shao, Y. Zhang, and L. Jian, “A systematic methodology for mid-and-long term electric vehicle charging load forecasting: The case study of Shenzhen, China,” Sustainable Cities and Society, vol. 56, p. 102084, 2020.

- [8] H. J. Feng, L. C. Xi, Y. Z. Jun, Y. X. Ling, and H. Jun, “Review of electric vehicle charging demand forecasting based on multi-source data,” in 2020 IEEE Sustainable Power and Energy Conference, 2020, pp. 139–146.

- [9] H. M. Louie, “Time-series modeling of aggregated electric vehicle charging station load,” Electric Power Components and Systems, vol. 45, no. 14, pp. 1498–1511, 2017.

- [10] X. Zhang, “Short-term load forecasting for electric bus charging stations based on fuzzy clustering and least squares support vector machine optimized by wolf pack algorithm,” Energies, vol. 11, no. 6, p. 1449, 2018.

- [11] A. Almaghrebi, F. Aljuheshi, M. Rafaie, K. James, and M. Alahmad, “Data-driven charging demand prediction at public charging stations using supervised machine learning regression methods,” Energies, vol. 13, no. 16, p. 4231, 2020.

- [12] J. Zhu, Z. Yang, M. Mourshed, Y. Guo, Y. Zhou, Y. Chang, Y. Wei, and S. Feng, “Electric vehicle charging load forecasting: A comparative study of deep learning approaches,” Energies, vol. 12, no. 14, p. 2692, 2019.

- [13] Z. Li, Y. Li, Y. Liu, P. Wang, R. Lu, and H. B. Gooi, “Deep learning based densely connected network for load forecasting,” IEEE Transactions on Power Systems, vol. 36, no. 4, pp. 2829–2840, 2021.

- [14] G. Guo, W. Yuan, Y. Lv, W. Liu, and J. Liu, “Traffic forecasting via dilated temporal convolution with peak-sensitive loss,” IEEE Intelligent Transportation Systems Magazine, in press.

- [15] M. Xue, L. Wu, Q. P. Zhang, J. X. Lu, X. Mao, and Y. Pan, “Research on load forecasting of charging station based on XGBoost and LSTM model,” Journal of Physics: Conference Series, vol. 1757, no. 1, p. 012145, 2021.

- [16] Y. Kim and S. Kim, “Forecasting charging demand of electric vehicles using time-series models,” Energies, vol. 14, no. 5, p. 1487, 2021.

- [17] L. Buzna, P. De Falco, G. Ferruzzi, S. Khormali, D. Proto, N. Refa, M. Straka, and G. van der Poel, “An ensemble methodology for hierarchical probabilistic electric vehicle load forecasting at regular charging stations,” Applied Energy, vol. 283, p. 116337, 2021.

- [18] X. Zhang, K. W. Chan, H. Li, H. Wang, J. Qiu, and G. Wang, “Deep-learning-based probabilistic forecasting of electric vehicle charging load with a novel queuing model,” IEEE Transactions on Cybernetics, vol. 51, no. 6, pp. 3157–3170, 2021.

- [19] L. Zhu and N. Laptev, “Deep and confident prediction for time series at Uber,” in 2017 IEEE International Conference on Data Mining Workshops, 2017, pp. 103–110.

- [20] Y. Li, G. Hao, Y. Liu, Y. Yu, Z. Ni, and Y. Zhao, “Many-objective distribution network reconfiguration via deep reinforcement learning assisted optimization algorithm,” IEEE Transactions on Power Delivery, in press.

- [21] C. Wan, Z. Xu, P. Pinson, Z. Y. Dong, and K. P. Wong, “Probabilistic forecasting of wind power generation using extreme learning machine,” IEEE Transactions on Power Systems, vol. 29, no. 3, pp. 1033–1044, 2014.

- [22] A. Khosravi, S. Nahavandi, D. Creighton, and A. F. Atiya, “Comprehensive review of neural network-based prediction intervals and new advances,” IEEE Transactions on Neural Networks, vol. 22, no. 9, pp. 1341–1356, 2011.

- [23] M. Sun, T. Zhang, Y. Wang, G. Strbac, and C. Kang, “Using Bayesian deep learning to capture uncertainty for residential net load forecasting,” IEEE Transactions on Power Systems, vol. 35, no. 1, pp. 188–201, 2020.

- [24] Y. Chu and C. F. Coimbra, “Short-term probabilistic forecasts for direct normal irradiance,” Renewable Energy, vol. 101, pp. 526–536, 2017.

- [25] S. Kachroudi, M. Grossard, and N. Abroug, “Predictive driving guidance of full electric vehicles using particle swarm optimization,” IEEE Transactions on Vehicular Technology, vol. 61, no. 9, pp. 3909–3919, 2012.

- [26] Z. J. Lee, T. Li, and S. H. Low, “ACN-data: Analysis and applications of an open EV charging dataset,” in Proceedings of the Tenth International Conference on Future Energy Systems, 2019, pp. 139–149.

- [27] Y. He, R. Liu, H. Li, S. Wang, and X. Lu, “Short-term power load probability density forecasting method using kernel-based support vector quantile regression and copula theory,” Applied Energy, vol. 185, pp. 254–266, 2017.

- [28] T. Hong, P. Wang, and H. L. Willis, “A naïve multiple linear regression benchmark for short term load forecasting,” in 2011 IEEE Power and Energy Society General Meeting, 2011, pp. 1–6.

- [29] Y. Wang, N. Zhang, Y. Tan, T. Hong, D. S. Kirschen, and C. Kang, “Combining probabilistic load forecasts,” IEEE Transactions on Smart Grid, vol. 10, no. 4, pp. 3664–3674, 2019.

- [30] Y. Wang, D. Gan, M. Sun, N. Zhang, Z. Lu, and C. Kang, “Probabilistic individual load forecasting using pinball loss guided LSTM,” Applied Energy, vol. 235, pp. 10–20, 2019.

- [31] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal policy optimization algorithms,” arXiv preprint arXiv:1707.06347, 2017.

Appendix A The Calculation of Continuous Ranked Probability Score

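Normally, the CRPS is defined as follows.

$$
\mathrm{CRPS}(F, y) = \int_{-\infty}^{+\infty} \big( F(x) - \mathbb{1}\{x \ge y\} \big)^2 \, \mathrm{d}x \tag{A.1}
$$

where $F$ is the cumulative distribution function of the predicted distribution obtained by the probabilistic forecasting model and $y$ is the real value. Since the noise in the studied probabilistic forecast problem is assumed to follow a Gaussian distribution, as shown in Eqs. (1)-(4), Eq. (A.1) can be derived in closed form as follows.

$$
\mathrm{CRPS}\big(\mathcal{N}(\mu, \sigma^2), y\big) = \sigma \left[ \frac{y - \mu}{\sigma} \left( 2\Phi\!\left(\frac{y - \mu}{\sigma}\right) - 1 \right) + 2\varphi\!\left(\frac{y - \mu}{\sigma}\right) - \frac{1}{\sqrt{\pi}} \right] \tag{A.2}
$$

where $\mu$ and $\sigma^2$ are the mean and variance of the predicted distribution. The functions $\varphi$ and $\Phi$ are the probability density function and the cumulative distribution function of the standard normal distribution, formulated as follows.

$$
\varphi(z) = \frac{1}{\sqrt{2\pi}} e^{-z^2/2} \tag{A.3}
$$

$$
\Phi(z) = \int_{-\infty}^{z} \varphi(t) \, \mathrm{d}t \tag{A.4}
$$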
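The Gaussian CRPS can be checked numerically. The sketch below implements the well-known closed-form CRPS of a normal distribution (the form Eq. (A.2) is assumed to take) and compares it with a midpoint-rule evaluation of the integral in Eq. (A.1). The function names are hypothetical and chosen for illustration.

```python
import math
from statistics import NormalDist
from typing import Callable


def crps_gaussian(mu: float, sigma: float, y: float) -> float:
    """Closed-form CRPS of N(mu, sigma^2) against the observation y."""
    if sigma <= 0.0:
        raise ValueError("sigma must be positive")
    z = (y - mu) / sigma
    std = NormalDist()  # standard normal: pdf is phi, cdf is Phi
    return sigma * (z * (2.0 * std.cdf(z) - 1.0)
                    + 2.0 * std.pdf(z) - 1.0 / math.sqrt(math.pi))


def crps_numeric(cdf: Callable[[float], float], y: float,
                 lo: float, hi: float, n: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of (F(x) - 1{x >= y})^2."""
    h = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * h
        step = 1.0 if x >= y else 0.0
        total += (cdf(x) - step) ** 2
    return total * h


if __name__ == "__main__":
    mu, sigma, y = 2.0, 1.5, 3.1
    print(crps_gaussian(mu, sigma, y))
    print(crps_numeric(NormalDist(mu, sigma).cdf, y, mu - 12 * sigma, mu + 12 * sigma))
```

The closed form avoids numerical integration entirely, which is why it is convenient when the predicted distribution is Gaussian; for a perfect mean forecast ($y = \mu$) it reduces to $\sigma(2\varphi(0) - 1/\sqrt{\pi}) \approx 0.2337\,\sigma$.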

Appendix B The Pseudocode of LSTM-AePPO Training

Algorithm 1 The training of LSTM.

Algorithm 2 The training of AePPO.

Appendix C Comparative Metrics in Experiments

To compare the performance of the algorithms in this paper, suitable metrics are required. A comprehensive evaluation of a probabilistic prediction should consider its accuracy, variation, and reliability. Here, we adopt three metrics, i.e., CRPS, Winkler [23] and Pinball [30], to evaluate these three aspects, respectively. The definition of CRPS is given in Eq. (A.2). As a well-known metric, the Winkler score is used to evaluate the variation of the probabilistic distribution, and it is expressed as follows.

| (C.1) |

where and denote the predicted distribution and the real charging power at time . and indicate the lower and upper quantiles at probability . and are two parameters of this metric, which are set to 0.1 and 1, respectively. A lower Winkler value indicates a better variation.
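The exact parameterization of Eq. (C.1), with its two parameters set to 0.1 and 1, cannot be recovered from this text. The sketch below therefore uses the textbook Winkler interval score for a central PI with nominal probability p (so α = 1 - p), which may differ in scaling from the variant used in the paper; `winkler_score` and `mean_winkler` are hypothetical names.

```python
from typing import Sequence


def winkler_score(y: float, lower: float, upper: float, p: float) -> float:
    """Textbook Winkler score of one interval [lower, upper] with nominal coverage p."""
    alpha = 1.0 - p
    width = upper - lower
    if y < lower:                 # observation below the interval: width + penalty
        return width + 2.0 * (lower - y) / alpha
    if y > upper:                 # observation above the interval: width + penalty
        return width + 2.0 * (y - upper) / alpha
    return width                  # observation covered: only the width is charged


def mean_winkler(ys: Sequence[float], lowers: Sequence[float],
                 uppers: Sequence[float], p: float) -> float:
    """Average Winkler score over a test horizon (lower is better)."""
    if not (len(ys) == len(lowers) == len(uppers)) or not ys:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(winkler_score(y, lo, hi, p)
               for y, lo, hi in zip(ys, lowers, uppers)) / len(ys)
```

The score rewards narrow intervals but penalizes misses in proportion to 1/α, so a 90% PI is punished far more heavily for a miss than a 30% PI.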

In addition, considering the reliability of the predicted probabilistic distribution, Pinball is introduced as a metric, and its mathematical formulation is expressed as follows.

| (C.2) |

where stands for the probability of and indicates the predicted value at time . The average Pinball value of the probabilistic prediction is used to represent its performance. A lower Pinball value indicates better performance.
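A minimal sketch of the averaged Pinball value, assuming the standard pinball (quantile) loss for a quantile level τ, averaged over all quantile levels and all hours. Which quantile levels enter the average (for example, the bounds of the 30%, 60% and 90% PIs) is an assumption, and the helper names are hypothetical.

```python
from typing import Dict, Sequence


def pinball_loss(y: float, q_pred: float, tau: float) -> float:
    """Pinball loss of the tau-quantile forecast q_pred for observation y."""
    diff = y - q_pred
    # Under-prediction is weighted by tau, over-prediction by (1 - tau).
    return tau * diff if diff >= 0.0 else (tau - 1.0) * diff


def mean_pinball(ys: Sequence[float], quantiles: Dict[float, Sequence[float]]) -> float:
    """Average pinball loss over all quantile levels tau and all hours."""
    losses = []
    for tau, preds in quantiles.items():
        if len(preds) != len(ys):
            raise ValueError("each quantile series must match the observations")
        losses.extend(pinball_loss(y, q, tau) for y, q in zip(ys, preds))
    return sum(losses) / len(losses)
```

The asymmetric weighting is what makes the loss minimized by the true τ-quantile, so it checks each quantile of the forecast distribution rather than only the interval width.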

Appendix D The Derivatives of Learnable Parameters in LSTM-AePPO

In this section, we provide the derivatives of the learnable parameters and of LSTM-AePPO. Based on Eqs. (10)-(15), the LSTM takes as its input and outputs as its forecast. Its loss function is denoted as . The is taken as an example to describe its derivative (see Footnote 1).

Footnote 1: During the intermediate training of LSTM, the may be equal to 0 because the loss function has not been calculated at time . In this case, to avoid the vanishing gradient caused by a zero derivative, we use instead of .

| (D.1) |

where is the derivative of the error at with respect to . In this paper, the loss function is the mean squared error, which is formulated as

| (D.2) |

Then, the other derivatives are given in the following explicit formulas.

| (D.3) |

| (D.4) |

| (D.5) |

| (D.6) |

| (D.7) |

Similarly, the derivative of and could be represented by the following equations.

| (D.8) |

| (D.9) |

| (D.10) |

| (D.11) |

| (D.12) |

| (D.13) |

| (D.14) |

where

| (D.15) |

| (D.16) |

| (D.17) |

| (D.18) |

| (D.19) |

Next, the derivatives of the learnable parameters of AePPO are provided. To simplify the notation, we denote the probability ratio by . Then, Eq. (20) can be rewritten as

| (D.20) |

The derivative of could be derived from the above formula.

| (D.21) |

where indicates the actor at iteration with parameter . Note that the term in the above formula is clipped into the range [31]. The is formulated as follows.

| (D.22) |

where is obtained by the adaptive exploration mechanism rather than by AePPO during the training, which differs from traditional PPO. Besides, is obtained by AePPO with learnable parameters .
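For reference, the standard clipped surrogate of PPO [31], on which the rewritten Eq. (20) and the clipping in Eq. (D.21) are based, can be sketched for a scalar Gaussian policy with the clip value 0.1 from Table I. This is the generic PPO objective, not the authors' AePPO code: the adaptive standard deviation from the exploration mechanism and the advantage estimation are not reproduced, and the function names are hypothetical.

```python
import math
from typing import Sequence


def gaussian_log_prob(action: float, mean: float, std: float) -> float:
    """Log-density of a scalar Gaussian policy N(mean, std^2) at `action`."""
    return (-0.5 * ((action - mean) / std) ** 2
            - math.log(std) - 0.5 * math.log(2.0 * math.pi))


def ppo_clip_objective(logp_new: Sequence[float], logp_old: Sequence[float],
                       advantages: Sequence[float], clip_eps: float = 0.1) -> float:
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A) with r = pi_new / pi_old."""
    if not (len(logp_new) == len(logp_old) == len(advantages)) or not advantages:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)                   # probability ratio
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(ratio * adv, clipped * adv)            # pessimistic bound
    return total / len(advantages)
```

The actor maximizes this quantity (equivalently, minimizes its negative as a loss); taking the minimum of the clipped and unclipped terms removes any incentive to move the ratio outside [1 - ε, 1 + ε].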

With these derivatives, the learnable parameters can be updated through the following equation with learning rate .

| (D.23) |

where indicates the learnable parameters of LSTM-AePPO and the is represented by

| (D.24) |

where denotes the derivative of .

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c227360f-8cb4-4eaa-87e4-324e086ad2f9/YZLi.png)

Yuanzheng Li received the M.S. degree and Ph.D. degree in Electrical Engineering from Huazhong University of Science and Technology (HUST), Wuhan, China, and South China University of Technology (SCUT), Guangzhou, China, in 2011 and 2015, respectively. Dr. Li is currently an Associate Professor at HUST. He has published several peer-reviewed papers in international journals. His current research interests include electric vehicles, deep learning, reinforcement learning, smart grids, optimal power system/microgrid scheduling and decision making, stochastic optimization considering large-scale integration of renewable energy into the power system, and multi-objective optimization.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c227360f-8cb4-4eaa-87e4-324e086ad2f9/SYHe.jpg)

Shangyang He received the B.S. degree in Automation from the Wuhan University of Technology in 2020. He is currently working toward the M.S. degree at Huazhong University of Science and Technology (HUST), Wuhan, China. His research interests include electric vehicle charging power forecasting, deep learning, deep reinforcement learning and graph neural networks.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c227360f-8cb4-4eaa-87e4-324e086ad2f9/YLi.png)

Yang Li received the Ph.D. degree in electrical engineering from North China Electric Power University, Beijing, China, in 2014. He is an Associate Professor with the School of Electrical Engineering, Northeast Electric Power University, Jilin, China. He is currently also a China Scholarship Council-funded Postdoc with Argonne National Laboratory, Lemont, IL, USA. His research interests include power system stability and control, integrated energy systems, renewable energy integration, and smart grids.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c227360f-8cb4-4eaa-87e4-324e086ad2f9/LJGe.png)

Leijiao Ge received the bachelor's degree in electrical engineering and its automation from Beihua University, Jilin, China, in 2006, the master's degree in electrical engineering from Hebei University of Technology, Tianjin, China, in 2009, and the Ph.D. degree in Electrical Engineering from Tianjin University, Tianjin, China, in 2016. He is currently an associate professor in the School of Electrical and Information Engineering at Tianjin University. His main research interests include situational awareness of smart distribution networks, cloud computing and big data.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c227360f-8cb4-4eaa-87e4-324e086ad2f9/SHLou.jpg)

Suhua Lou received her B.E., M.Sc. and Ph.D. degrees in electrical engineering from Huazhong University of Science and Technology (HUST), Wuhan, China, in 1996, 2001 and 2005, respectively. She is currently working as a Professor at HUST. Her research interests include power system planning, energy economics and renewable energy generation.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c227360f-8cb4-4eaa-87e4-324e086ad2f9/ZGZeng.png)

Zhigang Zeng (IEEE Fellow) received the Ph.D. degree in systems analysis and integration from Huazhong University of Science and Technology, Wuhan, China, in 2003. He is currently a Professor with the School of Automation and the Key Laboratory of Image Processing and Intelligent Control of the Education Ministry of China, Huazhong University of Science and Technology. He has published more than 100 international journal articles. His current research interests include the theory of functional differential equations and differential equations with discontinuous right-hand sides and their applications to the dynamics of neural networks, memristive systems, and control systems. Dr. Zeng was an Associate Editor of the IEEE TRANSACTIONS ON NEURAL NETWORKS AND LEARNING SYSTEMS from 2010 to 2011. He has been an Associate Editor of the IEEE TRANSACTIONS ON CYBERNETICS since 2014 and the IEEE TRANSACTIONS ON FUZZY SYSTEMS since 2016, and a member of the Editorial Board of Neural Networks since 2012, Cognitive Computation since 2010, and Applied Soft Computing since 2013.