Probabilistic Deduction: an Approach to Probabilistic Structured Argumentation

Abstract

This paper introduces Probabilistic Deduction (PD) as an approach to probabilistic structured argumentation. A PD framework is composed of probabilistic rules (p-rules). Like rules in classical structured argumentation frameworks, p-rules form deduction systems. In addition, p-rules represent conditional probabilities that define joint probability distributions. With PD frameworks, one performs probabilistic reasoning by solving Rule-Probabilistic Satisfiability (Rule-PSAT). At the same time, one can obtain an argumentative reading of the probabilistic reasoning in terms of arguments and attacks. In this work, we introduce a probabilistic version of the Closed-World Assumption (P-CWA) and prove that, under P-CWA and with maximum entropy reasoning, our probabilistic approach coincides with the complete extension in classical argumentation. We present several approaches to computing the joint probability distribution from p-rules, providing a practical proof theory for PD. PD provides a framework that unifies probabilistic reasoning with argumentative reasoning. This is the first work in probabilistic structured argumentation in which the joint distribution is not assumed to come from external sources.

keywords:

Probabilistic Structured Argumentation, Epistemic Probabilistic Argumentation, Probabilistic Satisfiability

1 Introduction

The field of argumentation has developed rapidly over the past three decades. In argumentation, information forms arguments, and one argument attacks another if the former is in conflict with the latter. As Dung’s landmark paper [17] states, “whether or not a rational agent believes in a statement depends on whether or not the argument supporting this statement can be successfully defended against the counterarguments”; accordingly, argumentation analyses statement acceptability by studying attack relations amongst arguments. As a reasoning paradigm in multi-agent settings, especially for reasoning under uncertainty or with conflicting information, argumentation has been applied in, e.g., medical (see e.g. [9, 24, 42, 21, 41, 10, 25]), legal (see [5] for an overview), and engineering (see e.g. [60] for an overview) domains.

Arguments in Dung’s abstract argumentation (AA) are atomic, without internal structure. Also, in AA, there is no specification of what an argument or an attack is, as these notions are assumed to be given. To obtain a more detailed formalisation of arguments than AA offers, one turns to structured argumentation: using some form of logic, arguments are built from a formal language, which serves as a representation of information, and attacks are derived from some notion representing conflicts in the underlying logic and language [3]. Both abstract and structured argumentation are seen as powerful reasoning paradigms with extensive theoretical results and practical applications (see e.g. [2] for an overview).

As reasoning with probabilistic information is a pertinent issue in many application areas, several probabilistic argumentation frameworks have been developed in the literature to combine probability with argumentation. As summarised by Hunter [31], two main approaches to probabilistic argumentation exist today: the epistemic and the constellations approaches. Quoting Hunter & Thimm [37] on this distinction:

In the constellations approach, the uncertainty is in the topology of the graph [of arguments]. …In the epistemic approach, the topology of the argument graph is fixed, but there is uncertainty about whether an argument is believed.

In other words, in a constellations approach, probabilities are defined over sets (extensions) of arguments, representing the uncertainty on whether sets of arguments exist in a given context; whereas in an epistemic approach, probabilities are defined over arguments, representing uncertainty on whether arguments are true. Both approaches have seen many successful developments. For instance, [18, 43, 52, 30, 16, 49, 15, 54, 22, 23, 12] are works taking the constellations approach; and [55, 36, 40, 32, 31, 37, 35, 33] are works taking the epistemic approach.

As in non-probabilistic (classical) argumentation, arguments in probabilistic argumentation can be either atomic or structured. For instance, amongst the works mentioned above, [18, 23, 52, 31, 32, 33, 12] study structured arguments, whereas the rest study non-structured arguments. Within the group of works studying probabilistic structured argumentation with the epistemic approach, i.e. [31, 32, 33], it is assumed that a probability distribution over the language is given. An implication of this assumption is that the logic component is detached from the probability component, in the sense that one first performs logic operations to form arguments and then views them through a lens of probability. In other words, there is a “logic information” component describing some knowledge that is used to construct arguments; separately, there is “probability information”, which acts as a perspective filter to augment arguments.

| | Abstract | Structured |
|---|---|---|
| Constellations | [43, 30, 16, 49, 15, 54, 22, 12] | [18, 52, 23] |
| Epistemic | [55, 36, 40, 37, 35] | [31, 32, 33] |

This work aims to provide an alternative approach to epistemic probabilistic structured argumentation. Instead of assuming the duality of logic and probability, we consider all information to be probabilistic and represented in the form of Probabilistic Deduction (PD) frameworks composed of probabilistic rules (p-rules). As the sole representation in our work, p-rules describe both probability and logic information at the same time, since they can be read both as conditional probabilities and as production rules. Instead of taking a probability distribution from some external source, p-rules define probability distributions; at the same time, when read as production rules, p-rules form a deduction system that can be used to build arguments and attacks as in classical structured argumentation.

Example 1.1.

Consider a hypothetical university admission example with the following information.

-

•

A student is likely to receive good exam scores if he studies hard.

:[] -

•

A student is likely to receive good exam scores if he has high IQ.

:[] -

•

A student is likely to be admitted to university if he has good exam scores.

:[] -

•

A student is likely not to be admitted if he does not have extracurricular experience.

:[] -

•

A student will have extracurricular experience if he has both time and interest for it.

:[] -

•

A student may or may not have time for extracurricular experience.

:[] -

•

A student is likely to be interested in having extracurricular experience.

:[] -

•

A student may or may not have high IQ.

:[] -

•

A student will not study hard if he is lazy.

:[]

Each of these statements is represented with a probabilistic rule (p-rule) denoting conditional probability. For instance,

:[]

is read as

;

and

:[]

is read as

.

From these p-rules, we can build arguments and specify attacks using the approach we will describe in Section 3. Some arguments and their attacks are shown in Figure 1, and readings of these arguments are summarised in Table 2. Using the probability calculation approach we will introduce in Section 2, we compute the probabilities of literals as follows:

| , | , |

| , | , |

| , | , |

| , | . |

With the PD framework we will introduce in Section 3, we compute argument probabilities as follows.

| , | , | , | . |

| Argument | Reading |
|---|---|
| | With hard study, a student will score well in exams. Thus they will be admitted to university. |
| | With high IQ, a student will score well in exams. Thus they will be admitted to university. |
| | Without extracurricular experience, a student will not be admitted to university. |
| | With time and interest, a student will have extracurricular experience. |

As illustrated in Example 1.1, one can view PD as a representation for probabilistic information supported by a well-defined probability semantics. (We will show in Section 2 that this probability semantics builds on Nilsson’s probabilistic satisfiability (PSAT) [47].) At the same time, PD frameworks admit an argumentative interpretation in which information can be arranged for presentation with the notions of arguments and attacks. This spirit is in line with contemporary approaches to argumentation for explainable AI (see e.g., [13] for a survey). In these works, there is a “computational layer” that carries out the computation using any suitable (numerical) technique, such as machine learning or optimization, and an “argumentation layer” built on top of it, which is responsible for producing explanations with argumentation notions such as arguments and attacks. A key property of our work, as we will show in Section 3, is that the two layers are reconciled: when there is no uncertainty in the p-rules, i.e. when all p-rules derived from classical argumentation have probability 1, the probability computation coincides with the complete semantics [17] of classical abstract argumentation.

As we intend PD frameworks to have practical value, we have put effort into proof theories for PD, which we present in Section 4. In a nutshell, our approach views each p-rule as a constraint imposed on the probability space defined by the language. We then take a solution in the feasible region as the joint probability distribution. For each literal, we define its probability as the marginal probability computed from the joint probability distribution. For each argument, we define its probability as the sum of the probabilities of all models entailing all literals in the argument. The core of our probability computation is thus finding the joint probability distribution. To this end, we have developed approaches using linear programming, quadratic programming and stochastic gradient descent.

A quick summary of the rest of this paper is as follows.

We introduce the notion of probabilistic rules (p-rules) in Section 2.1, describing their syntax and how p-rules define joint probability distributions. We introduce the probability computation of literals in Section 2.2 with two key concepts, the probabilistic open-world assumption (P-OWA) and the probabilistic closed-world assumption (P-CWA), mimicking their counterparts in non-probabilistic logic. We introduce maximum entropy reasoning in Section 2.3, which gives a unique joint probability distribution for each consistent set of p-rules. As maximum entropy solutions distribute probability “as equally as possible”, we also show an important result (Lemma 2.1): the probability of a possible world is not zero unless zero is the only value it can take. As both P-CWA and maximum entropy reasoning impose constraints on the joint distribution, we clarify their relation in Section 2.4.

In Section 3, we first give an overview of AA in Section 3.1. We then formally introduce the PD framework in Section 3.2, presenting definitions of arguments and attacks in PD frameworks. We connect PD with AA in Section 3.3, showing how AA frameworks can be mapped to PD frameworks in which all p-rules have probability 1. We also show that, under such a mapping, the probability semantics of PD (under P-CWA and maximum entropy reasoning) coincides with the complete extension (Theorem 3.1).

Section 4 presents proof theories for PD frameworks, focusing on the calculation of the joint probability distribution. Section 4.1 gives the basic approach using linear programming, modelled after Nilsson’s PSAT approach [47, 20]. Sections 4.2 and 4.3 present approaches for computing joint distributions under P-CWA and with maximum entropy reasoning, respectively; their chief contributions are, respectively, an algorithm that computes P-CWA “locally” (Theorem 4.2) and the use of linear entropy in place of von Neumann entropy. Section 4.4 presents the stochastic gradient descent (SGD) approach for computing joint probabilities. As the performance study in Section 4.5 shows, SGD (with its GPU implementation) is the most practical approach for reasoning with PD frameworks.

This paper builds upon our prior work [20] as follows. The concepts of p-rules and Rule-PSAT (Section 2.1), as well as the linear programming approach for calculating joint probabilities (Section 4.1), have been presented in [20]. In this paper, we significantly expand the theoretical presentation of [20] by introducing the PD framework with a probabilistic version of the closed-world assumption and connecting PD to existing argumentation frameworks. We also present several scalable techniques for calculating joint probabilities, which pave the way to practical probabilistic structured argumentation.

Proofs of all theoretical results are given in Appendix A.

2 Probabilistic Rules

In this section, we introduce probabilistic rules (p-rules) and satisfiability as the cornerstone of this work. We introduce probabilistic versions of the closed-world and open-world assumptions and show how probabilities of literals can be computed from p-rules under these two assumptions. We also introduce the concept of maximum entropy reasoning in the context of p-rules.

2.1 Probabilistic Rules and Satisfiability

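Given atoms forming a language $\mathcal{L}$, we let $\overline{\mathcal{L}}$ be the closure of $\mathcal{L}$ under classical negation (namely, if $\sigma \in \mathcal{L}$, then $\neg\sigma \in \overline{\mathcal{L}}$). The core representation of this work, the probabilistic rule (p-rule), is defined as follows.

Footnote 1: In this work, logical symbols such as $\neg$ take their standard meaning as in classical logic.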

Definition 2.1.

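A probabilistic rule (p-rule) is of the form $\sigma_0 \leftarrow \sigma_1, \ldots, \sigma_k : [\theta]$, where $k \geq 0$, $\sigma_0, \ldots, \sigma_k \in \overline{\mathcal{L}}$ and $\theta \in [0, 1]$. $\sigma_0$ is referred to as the head of the p-rule, $\sigma_1, \ldots, \sigma_k$ the body, and $\theta$ the probability.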

The p-rule in Definition 2.1 states that the probability of $\sigma_0$, when all of $\sigma_1, \ldots, \sigma_k$ hold, is $\theta$. In other words, this rule states that $P(\sigma_0 \mid \sigma_1 \wedge \cdots \wedge \sigma_k) = \theta$. Without loss of generality, we only consider in this work. In other words, for , one writes .

Footnote 2: Throughout, $\_$ stands for an anonymous variable as in Prolog.

Definition 2.2.

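[28] Given a language $\mathcal{L}$ with $n$ atoms, the Complete Conjunction Set (CC set) $\Omega$ of $\mathcal{L}$ is the set of $2^n$ conjunctions of literals such that each conjunction contains $n$ distinct atoms.

Each $\omega \in \Omega$ is referred to as an atomic conjunction.

$\Omega$ represents the set of all possible worlds, and each $\omega \in \Omega$ is one of them. For instance, for $\mathcal{L} = \{a, b\}$, the CC set of $\mathcal{L}$ is

$$\Omega = \{a \wedge b,\ a \wedge \neg b,\ \neg a \wedge b,\ \neg a \wedge \neg b\}.$$

The four atomic conjunctions are $a \wedge b$, $a \wedge \neg b$, $\neg a \wedge b$ and $\neg a \wedge \neg b$.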
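For small languages the CC set can be enumerated directly. The Python sketch below is illustrative only and is not taken from the paper: it represents each atomic conjunction as a dictionary mapping every atom to True (the atom occurs positively) or False (it occurs negated), and the helper names `cc_set` and `show` are hypothetical.

```python
from itertools import product


def cc_set(atoms):
    """Enumerate the Complete Conjunction Set (CC set) of a language.

    Each atomic conjunction is a dict mapping every atom to True (positive
    literal) or False (negated literal); n atoms give 2**n conjunctions.
    """
    return [dict(zip(atoms, values))
            for values in product([True, False], repeat=len(atoms))]


def show(omega):
    """Render an atomic conjunction, e.g. {'a': True, 'b': False} -> 'a ∧ ¬b'."""
    return " ∧ ".join(atom if value else "¬" + atom
                      for atom, value in omega.items())


for omega in cc_set(["a", "b"]):
    print(show(omega))
```

For the atoms a and b this prints a ∧ b, a ∧ ¬b, ¬a ∧ b and ¬a ∧ ¬b, one per line. Because the CC set has 2^n elements, explicit enumeration like this only suits small languages.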

Definition 2.3.

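[20] Given a language $\mathcal{L}$ and a set of p-rules $\mathcal{R}$, let $\Omega$ be the CC set of $\mathcal{L}$. A function $\pi$ is a consistent probability distribution with respect to $\mathcal{R}$ on $\mathcal{L}$ for $\Omega$ if and only if:

1. For all $\omega \in \Omega$,

$$0 \leq \pi(\omega) \leq 1. \qquad (1)$$

2. It holds that:

$$\sum_{\omega \in \Omega} \pi(\omega) = 1. \qquad (2)$$

3. For each p-rule $\sigma_0 \leftarrow\ : [\theta] \in \mathcal{R}$ (i.e. with an empty body), it holds that:

$$\sum_{\omega \in \Omega,\ \omega \models \sigma_0} \pi(\omega) = \theta. \qquad (3)$$

4. For each p-rule $\sigma_0 \leftarrow \sigma_1, \ldots, \sigma_k : [\theta] \in \mathcal{R}$, $k > 0$, it holds that:

$$\sum_{\omega \in \Omega,\ \omega \models \sigma_0 \wedge \sigma_1 \wedge \cdots \wedge \sigma_k} \pi(\omega) = \theta \times \sum_{\omega \in \Omega,\ \omega \models \sigma_1 \wedge \cdots \wedge \sigma_k} \pi(\omega). \qquad (4)$$

Our notion of consistency as given in Definition 2.3 consists of two parts. Equations 1 and 2 assert that $\pi$ is a probability distribution over the CC set of $\mathcal{L}$: each $\pi(\omega)$ lies between 0 and 1, and all $\pi(\omega)$ sum to 1. Equations 3 and 4 assert that each p-rule defines a conditional probability: the probability of the head conditioned on the body is the probability of the p-rule. When the body is empty, the head is conditioned on the universe. In other words, Equation 3 asserts $P(\sigma_0) = \theta$, whereas Equation 4 asserts $P(\sigma_0 \mid \sigma_1 \wedge \cdots \wedge \sigma_k) = \theta$.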

Example 2.1.

With consistency defined, we are ready to define Rule-PSAT as follows.

Definition 2.4.

[20] The Rule Probabilistic Satisfiability (Rule-PSAT) problem is to determine, for a set of p-rules $\mathcal{R}$ on a language $\mathcal{L}$, whether there exists a consistent probability distribution for the CC set of $\mathcal{L}$ with respect to $\mathcal{R}$.

If a consistent probability distribution exists, then $\mathcal{R}$ is Rule-PSAT; otherwise, it is not.
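Definitions 2.3 and 2.4 turn a set of p-rules into a system of linear constraints over the probabilities of the atomic conjunctions, and Rule-PSAT asks whether that system has a solution. The Python sketch below builds the system and checks a candidate distribution against it; it is an illustration under our own encoding, not the paper's implementation. Literals are strings such as `"a"` or `"~a"` (`~` is an ASCII stand-in for ¬), a p-rule is a hypothetical `(head, body, theta)` tuple, and the distribution is a list aligned with the enumeration order of the CC set. Each conditional p-rule is written in the linear form P(head ∧ body) − θ · P(body) = 0.

```python
from itertools import product


def cc_set(atoms):
    """All atomic conjunctions, each a dict atom -> truth value."""
    return [dict(zip(atoms, v)) for v in product([True, False], repeat=len(atoms))]


def entails(omega, literals):
    """True iff atomic conjunction omega entails every literal ('a' or '~a')."""
    return all(not omega[lit[1:]] if lit.startswith("~") else omega[lit]
               for lit in literals)


def rule_psat_system(rules, atoms):
    """Build (A, b) such that consistency means A @ pi == b with 0 <= pi <= 1.

    Row 0: the probabilities sum to 1. A p-rule with an empty body gives
    P(head) = theta; otherwise it gives P(head ∧ body) - theta * P(body) = 0.
    """
    worlds = cc_set(atoms)
    A, b = [[1.0] * len(worlds)], [1.0]
    for head, body, theta in rules:
        if body:
            A.append([float(entails(w, (head, *body))) - theta * float(entails(w, body))
                      for w in worlds])
            b.append(0.0)
        else:
            A.append([float(entails(w, (head,))) for w in worlds])
            b.append(theta)
    return A, b


def satisfies(pi, A, b, tol=1e-9):
    """Check a candidate distribution against the bounds and every row of A."""
    in_bounds = all(-tol <= p <= 1 + tol for p in pi)
    rows_ok = all(abs(sum(a * p for a, p in zip(row, pi)) - rhs) <= tol
                  for row, rhs in zip(A, b))
    return in_bounds and rows_ok


# Hypothetical p-rules: a holds with probability 0.6; b holds with probability 0.7 given a.
rules = [("a", (), 0.6), ("b", ("a",), 0.7)]
A, b = rule_psat_system(rules, ["a", "b"])
# Candidate distributions in the order a∧b, a∧¬b, ¬a∧b, ¬a∧¬b.
print(satisfies([0.42, 0.18, 0.20, 0.20], A, b))  # True
print(satisfies([0.25, 0.25, 0.25, 0.25], A, b))  # False: P(a) would be 0.5
```

To decide Rule-PSAT itself rather than check a given candidate, the same rows can be passed as equality constraints, with bounds (0, 1), to a linear programming solver such as SciPy's `scipy.optimize.linprog` with a zero objective; an infeasibility status then indicates that the p-rules are not Rule-PSAT.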

We illustrate Rule-PSAT with Example 2.2.

Example 2.2.

(Example 2.1 continued.) To test whether is Rule-PSAT on , we need to solve Equations 5-8 for as is Rule-PSAT if and only if a solution exists. It is easy to see that this is the case as:

Since , we have . We can let , and obtain one solution for . As the system is underdetermined, with four unknowns and three equations, there are infinitely many solutions for and in the range [0, ].

The next example gives a set of p-rules that is not Rule-PSAT.

Example 2.3.

From , we have

Thus, , which does not satisfy .

Note that no restriction is imposed on the form of p-rules other than those given in Definition 2.1. As illustrated in the next two examples, Examples 2.4 and 2.5, a set of p-rules can be consistent even if some of its rules form cycles or share the same head.

Example 2.4.

Example 2.5.

Consider a set of p-rules

There are two p-rules with head , namely,

and .

These two p-rules have different bodies and probabilities. We set up the equations as follows.

Footnote 3: To simplify the presentation, Boolean values are used as shorthand for atomic conjunctions. E.g., 111, 011, and 001 denote , , and , respectively.

Solving these, we find the following solution:

| , | , | , | , |

| , | , | , | . |

2.2 Probability of Literals

So far, we have defined probability distributions over the CC set of a language. To discuss probabilities of literals in the language, we can take two distinct views: the probabilistic open-world assumption (P-OWA) and the probabilistic closed-world assumption (P-CWA), explained as follows.

With P-OWA, given a set of p-rules, we take the stance that:

The probability of a literal is determined by the p-rules deducing the literal in conjunction with some unspecified factors that are not described by any of the known p-rules.

P-CWA is the opposite of P-OWA:

The probability of a literal is determined solely by the known p-rules deducing the literal.

P-OWA and P-CWA can be viewed as probabilistic counterparts to Reiter’s classic OWA and CWA [50] in the following way.

• P-OWA and OWA both assume that things which cannot be deduced from known information can still be true;

• P-CWA and CWA both assume that the available information is “complete” for reasoning.

We start our discussion with P-OWA. To define literal probability with P-OWA, we need the following result.

Proposition 2.1.

Given a set of p-rules over a language , if there is a consistent probability distribution for with respect to , then for any , it is the case that:

| (11) | ||||

| (12) |

With Proposition 2.1, we can define the probability of literals under P-OWA. Given a set of p-rules $\mathcal{R}$, if there is a consistent probability distribution $\pi$ for the CC set $\Omega$ with respect to $\mathcal{R}$, then for any $\sigma \in \overline{\mathcal{L}}$, the probability of $\sigma$ under P-OWA is:

$$P(\sigma) = \sum_{\omega \in \Omega,\ \omega \models \sigma} \pi(\omega). \qquad (13)$$

Under P-OWA, literal probability is as defined in [20]. From a Rule-PSAT solution, which characterises a probability distribution over the CC set, one can compute the probability of a literal by summing up the probabilities of the atomic conjunctions that entail it. We illustrate literal probability computation under P-OWA in the example below.
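Literal probability under P-OWA is a single marginalisation over the CC set. The Python sketch below assumes, for illustration only, hypothetical p-rules stating that a holds with probability 1 whenever ¬b holds, and that b holds with probability 1, together with one consistent joint distribution for them. The encoding (`"~"` for ¬, distributions as lists in CC-set order) and the function names are our own.

```python
from itertools import product


def cc_set(atoms):
    """All atomic conjunctions, each a dict atom -> truth value."""
    return [dict(zip(atoms, v)) for v in product([True, False], repeat=len(atoms))]


def entails(omega, literal):
    """True iff atomic conjunction omega entails literal ('a' or '~a')."""
    if literal.startswith("~"):
        return not omega[literal[1:]]
    return omega[literal]


def literal_probability(pi, atoms, literal):
    """P-OWA literal probability: sum pi(omega) over omega entailing the literal."""
    return sum(p for p, omega in zip(pi, cc_set(atoms)) if entails(omega, literal))


atoms = ["a", "b"]
# One consistent distribution (hypothetical), in the order a∧b, a∧¬b, ¬a∧b, ¬a∧¬b.
pi = [0.5, 0.0, 0.5, 0.0]
print(literal_probability(pi, atoms, "b"))   # 1.0
print(literal_probability(pi, atoms, "a"))   # 0.5
print(literal_probability(pi, atoms, "~a"))  # 0.5
```

Here a receives probability 0.5 even though its only deducing p-rule needs ¬b, which has probability 0. In fact these p-rules only force the mass of a∧¬b and ¬a∧¬b to 0, so any split between a∧b and ¬a∧b is consistent and P(a) could take any value in [0, 1]; this is the kind of "open world" behaviour P-OWA permits.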

Example 2.6.

Consider the set of p-rules

which states that holds if does, and holds. With P-OWA, we have the following equations:

| (14) | ||||

| (15) | ||||

| (16) |

Solving these, we obtain the following solution for the joint distribution:

| , | , |

| , | . |

Using Equation 13 to calculate literal probabilities, we have

Thus, we read these as:

-

1.

holds as we have asserted with the p-rule

-

2.

yet there is a 50/50 chance that holds as well, despite that we have the knowledge that would hold if does not, captured with the p-rule

Thus, we see that the world is “open” as has a chance to hold, even though we have no way to deduce that with the p-rules we have.

P-CWA imposes more constraints on literal probabilities than P-OWA. With P-CWA, the probability of a literal is determined by all ways of deducing the literal. To define literal probability under P-CWA, we formalise deduction with p-rules using the same notion as in Assumption-based Argumentation (ABA) [56], as follows.

Definition 2.5.

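Given a language $\mathcal{L}$ and a set of p-rules $\mathcal{R}$, a deduction for $\sigma \in \overline{\mathcal{L}}$ with $S \subseteq \overline{\mathcal{L}}$, denoted $S \vdash \sigma$, is a finite tree with nodes labelled by literals in $\overline{\mathcal{L}}$ or by $\top$, the root labelled by $\sigma$, leaves either $\top$ or literals in $S$, and each non-leaf $\sigma'$ having, as children, the elements of the body of some rule in $\mathcal{R}$ with head $\sigma'$.

Footnote 4: $\top$ represents “true” and stands for the empty body of rules. In other words, each rule $\sigma_0 \leftarrow\ : [\theta]$ can be interpreted as $\sigma_0 \leftarrow \top : [\theta]$ for the purpose of presenting deductions as trees.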
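For acyclic sets of p-rules, the leaves of the maximal deductions of a literal can be collected by expanding every literal that heads some p-rule. The Python sketch below is a simplification under stated assumptions and not the paper's algorithm: p-rules are hypothetical `(head, body, theta)` tuples, `"~"` stands for ¬, the string `"T"` stands for ⊤, and we read a deduction as maximal when every leaf that heads some p-rule has been expanded, with one alternative per such p-rule. Cyclic rule sets, which are allowed in general, are rejected by this sketch.

```python
def deduction_leaves(literal, rules, visiting=frozenset()):
    """Leaf sets of the maximal deductions for `literal`, one frozenset each.

    `rules` is a list of (head, body, theta) triples; theta plays no role here.
    A literal that heads no p-rule is a leaf; an empty body contributes 'T'.
    Raises ValueError on cyclic rules, which this sketch does not handle.
    """
    if literal in visiting:
        raise ValueError(f"cycle through {literal!r} is not handled by this sketch")
    bodies = [body for head, body, _theta in rules if head == literal]
    if not bodies:
        return [frozenset([literal])]              # nothing to expand: a leaf
    visiting = visiting | {literal}
    result = []
    for body in bodies:                            # one alternative per p-rule for `literal`
        partial = [frozenset(["T"])] if not body else [frozenset()]
        for child in body:                         # pick one sub-deduction per body literal
            partial = [done | more
                       for done in partial
                       for more in deduction_leaves(child, rules, visiting)]
        result.extend(partial)
    return result


# Hypothetical rules: c <- a, b [0.8];  a <- (empty body) [0.5];  a <- d [0.9]
rules = [("c", ("a", "b"), 0.8), ("a", (), 0.5), ("a", ("d",), 0.9)]
for leaves in deduction_leaves("c", rules):
    print(sorted(leaves))
```

This prints `['T', 'b']` and `['b', 'd']`: c can be deduced either from b together with the body-less rule for a, or from b and d. Under P-CWA, such leaf sets describe the ways of deducing a literal that determine its probability.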

With deduction defined, we can define literal probabilities under P-CWA. Formally, let be all maximal deductions for ,555A deduction is maximal when there is no such that . where . Let

| (17) |

Then,

| (18) |

The difference between P-OWA and P-CWA is illustrated in the following example.

Example 2.7.

(Example 2.6 continued.) There are maximal deductions

and

for and , respectively. With P-CWA, in addition to Equation 14 - 16, we have an additional constraint derived from :

| (19) |

This is the case as the LHS sums up probabilities on atomic conjunctions that entail ; and the RHS is the atomic conjunction that entails . The (unique) solution to is

| , | , |

| , | . |

With these, we have .

These results match our intuition:

• With P-CWA, we assume that the only way to obtain is by having (with the p-rule :[]). However, since we know without any doubt (from the p-rule :[]), there is no room to believe ; thus we cannot deduce .

• On the other hand, with P-OWA, we assume that although we can obtain from , there are other possible ways of deriving that we are unaware of; thus not having does not allow us to rule out the possibility of .

Another way to look at P-OWA and P-CWA is from Equation 18. Given , let be as defined in Equation 17, then for any , and , it holds that:

| (20) |

From Equation 20, as demonstrated in Example 2.7, it is clear that P-CWA imposes additional constraints on the joint distribution . Thus, from a set of p-rules that is Rule-PSAT, literal probabilities under P-CWA may be undefined. Consider the following example.

Example 2.8.

We introduce the concept of P-CWA consistency as follows.

Definition 2.6.

A set of p-rules defined with a language is P-CWA consistent if and only if it is consistent and for each ,

P-CWA differs from several standard assumptions made in handling probabilistic systems, such as independence (discussed in e.g., [28]) or mutual exclusivity (discussed in e.g., [59]), as illustrated in Example 2.9 below.

Example 2.9.

Consider a p-rule:

:[].

By the definition of p-rule, it holds that

-

•

With P-CWA, from the deduction , we have

Thus, .

-

•

With the independence assumption, assuming that and are independent, we have

Thus, .

-

•

With the mutual exclusivity assumption, assuming that and are mutually exclusive, we have

Thus, .

It is easy to see that P-CWA also differs from conditional independence [14], the main assumption underlying Bayesian networks [53], as illustrated in Example 2.10 below.

Example 2.10.

Consider two p-rules:

:[], :[].

By the definition of p-rule, we have

Use the chain rule,

With conditional independence, assuming that and are conditionally independent given , we have Thus,

| (21) |

With P-CWA, from the deduction we have

From , we have

Thus, . From , we have

Since ,

Since , we have

Therefore, with P-CWA we also obtain

as with the conditional independence assumption.

However, with P-CWA, from the deduction , we also have

which implies that

These do not hold in general with the conditional independence assumption.

2.3 Maximum Entropy Solutions

When the system derived from the p-rules for computing the joint distribution is underdetermined, multiple solutions exist, as illustrated in the next example.

Example 2.11.

(Example 2.5 continued.) This example shows a system with five equations and eight unknowns. Thus the system is underdetermined. In addition to the solution shown previously, the following is another solution:

| , | , | , | , |

| , | , | , | . |

If a set of p-rules is satisfiable (or P-CWA consistent), but the solution to is not unique, then the range of the probability of any literal in can be found with optimization. The upper bound of the probability of a literal can be found by maximising as defined in Equation 13 (with P-OWA) or 18 (with P-CWA), subject to constraints given by the systems derived from the p-rules. The lower bound of can be found by minimising these equations accordingly.

Example 2.12.

In addition to choosing a solution that maximizes or minimizes the probability of a literal, we can also choose the solution that maximizes the entropy of the joint distribution. The principle of maximum entropy is commonly used in probabilistic reasoning [39, 48], including in probabilistic argumentation as discussed in e.g., [32] and [55]. It states that

amongst the set of distributions that characterize the known information equally well, the distribution with the maximum entropy should be chosen [38].

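The entropy of a discrete probability distribution $P$ over outcomes $x_1, \ldots, x_m$ is

$$H(P) = -\sum_{i=1}^{m} P(x_i) \log P(x_i).$$

In our context, given a language $\mathcal{L}$ with $n$ atoms, whose CC set consists of the atomic conjunctions $\omega_1, \ldots, \omega_{2^n}$, the maximum entropy distribution can be found by maximising

$$-\sum_{i=1}^{2^n} \pi(\omega_i) \log \pi(\omega_i) \qquad (22)$$

subject to the system derived from p-rules.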
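The paper computes maximum entropy solutions with its own techniques (Section 4.3 uses linear entropy and Section 4.4 uses SGD). Purely as a self-contained illustration, the Python sketch below swaps in a different, standard method: gradient descent on the convex dual of the entropy maximisation, whose optimum has the exponential-family form π(ω) ∝ exp(Σⱼ λⱼ fⱼ(ω)), with one linear constraint row fⱼ per p-rule. It assumes the p-rules admit a strictly positive solution; when some probabilities are forced to 0 (e.g. by p-rules with probability 1), the dual optimum lies at infinity and this sketch only converges asymptotically. The encoding and all names are hypothetical.

```python
import math
from itertools import product


def cc_set(atoms):
    """All atomic conjunctions, each a dict atom -> truth value."""
    return [dict(zip(atoms, v)) for v in product([True, False], repeat=len(atoms))]


def entails(omega, literals):
    """True iff omega entails every literal ('a' or '~a')."""
    return all(not omega[lit[1:]] if lit.startswith("~") else omega[lit]
               for lit in literals)


def constraint_rows(rules, worlds):
    """One (f, target) pair per p-rule, meaning sum_w f[w] * pi[w] == target."""
    rows = []
    for head, body, theta in rules:
        if body:   # P(head ∧ body) - theta * P(body) = 0
            f = [float(entails(w, (head, *body))) - theta * float(entails(w, body))
                 for w in worlds]
            rows.append((f, 0.0))
        else:      # P(head) = theta
            rows.append(([float(entails(w, (head,))) for w in worlds], theta))
    return rows


def entropy(pi):
    """Shannon entropy -sum p log p (natural log), treating 0 log 0 as 0."""
    return -sum(p * math.log(p) for p in pi if p > 0)


def max_entropy(rules, atoms, lr=0.5, steps=5000):
    """Approximate the maximum entropy distribution by gradient descent on the dual."""
    worlds = cc_set(atoms)
    rows = constraint_rows(rules, worlds)

    def distribution(lam):
        # pi[w] is proportional to exp(sum_j lam[j] * f_j[w]); normalising
        # makes every entry lie in [0, 1] and the entries sum to 1.
        scores = [sum(lam_j * f[i] for lam_j, (f, _t) in zip(lam, rows))
                  for i in range(len(worlds))]
        top = max(scores)                  # shift by the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        z = sum(weights)
        return [w / z for w in weights]

    lam = [0.0] * len(rows)
    for _ in range(steps):
        pi = distribution(lam)
        # Gradient of the dual: expected value of each row minus its target.
        grad = [sum(p * fw for p, fw in zip(pi, f)) - target for f, target in rows]
        lam = [lam_j - lr * g for lam_j, g in zip(lam, grad)]
    return distribution(lam)


# Hypothetical p-rules: a holds with probability 0.6; b holds with probability 0.7 given a.
pi = max_entropy([("a", (), 0.6), ("b", ("a",), 0.7)], ["a", "b"])
print([round(p, 3) for p in pi])   # order: a∧b, a∧¬b, ¬a∧b, ¬a∧¬b
```

These p-rules fix P(a) = 0.6 and P(b | a) = 0.7 but say nothing about b when ¬a holds, so the maximum entropy solution splits the remaining 0.4 evenly and the sketch prints `[0.42, 0.18, 0.2, 0.2]`. Any other consistent distribution, such as `[0.42, 0.18, 0.4, 0.0]`, has lower entropy.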

Example 2.13.

(Example 2.12 continued.) The maximum entropy solution for this example is:

| , | , | , | , |

| , | , | , | . |

With this , .

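For a Rule-PSAT (or P-CWA consistent) set of p-rules, the constraints on the joint distribution are linear, so the feasible region is non-empty, closed, bounded and convex; since entropy is strictly concave on this region, the maximum entropy solution exists and is unique for satisfiable p-rules. Formally,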

Proposition 2.2.

Given a set of consistent p-rules, the maximum entropy solution exists and is uniquely determined.

A maximum entropy solution is as unbiased as possible amongst all solutions [55]. A useful result on maximum entropy solution that we use in Section 3 is the following.

Lemma 2.1.

Given a set of consistent p-rules, for each , consider some constant such that is the feasible region for . Let be the maximum entropy solution. If , then .

Lemma 2.1 states that a maximum entropy solution assigns a non-zero probability to each whenever the constraints given by the p-rules allow such an allocation. In other words, with maximum entropy reasoning, is 0 only if no other solution exists. Consequently, with the maximum entropy solution, literal probabilities are not 0 unless explicitly set by p-rules. Formally,

Corollary 2.1.

Given a set of consistent p-rules, for each , let be a constant such that (for ). With the maximum entropy solution , if , then

2.4 Relation between P-CWA and Maximum Entropy Solutions

Although both P-CWA and maximum entropy reasoning restrict the joint probability distribution we can take on the CC set, they are orthogonal concepts: one can apply P-CWA or maximum entropy reasoning individually, or both at the same time, and each choice can lead to a different distribution. We illustrate this with the following example.

Example 2.14.

Given a language and a set of two p-rules:

.

With P-OWA, to compute the joint probability distribution on the CC set, we set up three equations over the unknowns:

With P-CWA, we must consider two deductions:

and .

These assert that:

which translate to

The resulting distributions over the CC set are summarised in Table 3. Note that, unlike maximum entropy reasoning, which gives unique solutions, the two systems used to compute P-OWA and P-CWA without maximum entropy reasoning are underdetermined, so infinitely many solutions exist; Table 3 shows one solution for each.

| Setting | $\omega_1$ | $\omega_2$ | $\omega_3$ | $\omega_4$ | $\omega_5$ | $\omega_6$ | $\omega_7$ | $\omega_8$ |
|---|---|---|---|---|---|---|---|---|
| P-OWA without ME | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| P-OWA with ME | 0.125 | 0.125 | 0.125 | 0.125 | 0.125 | 0.125 | 0.125 | 0.125 |
| P-CWA without ME | 0 | 0.5 | 0 | 0.25 | 0 | 0 | 0 | 0.25 |
| P-CWA with ME | 0 | 0.293 | 0.207 | 0.146 | 0 | 0 | 0.207 | 0.146 |

In this section, we have introduced the p-rule as the core building block of this work. Its probability semantics is defined with the joint probability distribution over the CC set of the language. We introduced Rule-PSAT to describe consistent sets of p-rules. Literal probability is defined as the sum of the probabilities of the conjunctions that are models of the literal.

We then introduced the probabilistic open-world and closed-world assumptions, P-OWA and P-CWA, respectively, modelling their counterparts in non-monotonic logic. We showed how literal probability can be computed under both P-OWA and P-CWA, and remarked that P-CWA differs from other common probabilistic assumptions such as independence, mutual exclusivity and conditional independence. We finished this section by introducing maximum entropy solutions and showing that, when the joint distribution is computed with maximum entropy reasoning, literals do not take probability 0 unless 0 is the only value they can take.

3 Argumentation with P-Rules

Thus far, we have introduced p-rules as the basic building block in probabilistic deduction. In this section, we show how probabilistic arguments can be built with p-rules and how attacks can be defined between arguments. To this end, we formally define the Probabilistic Deduction (PD) framework, composed of p-rules. We then show how PD admits Abstract Argumentation [17] as an instance.

Note that in this section, we assume p-rules in discussion are Rule-PSAT consistent. Thus there exists a consistent joint probability distribution for the CC set of . We will discuss several methods for computing joint distributions from a set of p-rules in Section 4. We also assume that P-CWA can be imposed. Thus, unless specified otherwise, we use to denote in this section.

3.1 Background: Abstract Argumentation

We briefly review concepts from abstract argumentation (AA).

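An Abstract Argumentation (AA) framework [17] is a pair $AF = \langle Args, Att \rangle$ consisting of a set of abstract arguments, $Args$, and a binary attack relation, $Att \subseteq Args \times Args$. Given an AA framework $AF = \langle Args, Att \rangle$, a set of arguments (or extension) $E \subseteq Args$ is

• admissible (in $AF$) if and only if there are no $A, B \in E$ such that $(A, B) \in Att$ (i.e. $E$ is conflict-free), and for any $A \in E$, if $(B, A) \in Att$, then there exists some $C \in E$ such that $(C, B) \in Att$;

• complete if and only if $E$ is admissible and contains all arguments it defends, where $E$ defends some $A \in Args$ iff $E$ attacks all arguments that attack $A$.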
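The definitions of admissible and complete extensions can be checked by brute force on small frameworks. The Python sketch below (helper names are our own) enumerates every subset of arguments and keeps the complete ones; it is exponential in the number of arguments and only meant to make the definitions concrete, not to be an efficient solver.

```python
from itertools import combinations


def attackers(arg, attacks):
    """All arguments attacking `arg`."""
    return {x for (x, y) in attacks if y == arg}


def conflict_free(ext, attacks):
    """No member of ext attacks another member (or itself)."""
    return not any((x, y) in attacks for x in ext for y in ext)


def defends(ext, arg, attacks):
    """ext defends arg iff ext attacks every attacker of arg."""
    return all(any((d, x) in attacks for d in ext) for x in attackers(arg, attacks))


def is_complete(ext, args, attacks):
    """Admissible, and containing every argument it defends."""
    admissible = conflict_free(ext, attacks) and all(defends(ext, a, attacks) for a in ext)
    return admissible and all(a in ext for a in args if defends(ext, a, attacks))


def complete_extensions(args, attacks):
    """Brute force over all subsets; exponential, so only for tiny frameworks."""
    ordered = sorted(args)
    return [set(subset)
            for k in range(len(ordered) + 1)
            for subset in combinations(ordered, k)
            if is_complete(set(subset), ordered, attacks)]


# a attacks b, and b attacks c.
print(complete_extensions({"a", "b", "c"}, {("a", "b"), ("b", "c")}))
```

For the chain in which a attacks b and b attacks c, the only complete extension is {a, c}: a is unattacked and defends c against b. For a mutual attack between a and b, the function returns the three complete extensions ∅, {a} and {b}.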

Given $Args$ and a set of labels $\{\textsf{in}, \textsf{out}, \textsf{undec}\}$, a labelling is a total function $Lab: Args \rightarrow \{\textsf{in}, \textsf{out}, \textsf{undec}\}$. Given a labelling on some argumentation framework,

• an $\textsf{in}$-labelled argument is said to be legally $\textsf{in}$ if and only if all its attackers are labelled $\textsf{out}$;

• an $\textsf{out}$-labelled argument is said to be legally $\textsf{out}$ if and only if it has at least one attacker that is labelled $\textsf{in}$;

• an $\textsf{undec}$-labelled argument is said to be legally $\textsf{undec}$ if and only if not all its attackers are labelled $\textsf{out}$ and it does not have an attacker that is labelled $\textsf{in}$.

A complete labelling is a labelling where every $\textsf{in}$-labelled argument is legally $\textsf{in}$, every $\textsf{out}$-labelled argument is legally $\textsf{out}$ and every $\textsf{undec}$-labelled argument is legally $\textsf{undec}$. An important result connecting complete extensions and complete labellings is that the arguments labelled $\textsf{in}$ by a complete labelling form a complete extension [1].
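Because the three legality conditions are mutually exclusive and exhaustive, each argument has exactly one label it may legally carry given its attackers' labels. The Python sketch below (names are our own) uses this to check whether a labelling is complete and to read off its in-labelled arguments.

```python
def legal_label(arg, labelling, attacks):
    """The unique label `arg` may legally carry, given its attackers' labels."""
    labels = [labelling[x] for (x, y) in attacks if y == arg]
    if all(label == "out" for label in labels):   # also true when there are no attackers
        return "in"
    if any(label == "in" for label in labels):
        return "out"
    return "undec"


def is_complete_labelling(labelling, attacks):
    """Every argument carries the label it is legally entitled to."""
    return all(label == legal_label(arg, labelling, attacks)
               for arg, label in labelling.items())


def in_arguments(labelling):
    """Arguments labelled 'in'."""
    return {arg for arg, label in labelling.items() if label == "in"}


chain = {("a", "b"), ("b", "c")}              # a attacks b, b attacks c
lab = {"a": "in", "b": "out", "c": "in"}
print(is_complete_labelling(lab, chain), sorted(in_arguments(lab)))  # True ['a', 'c']
```

For the chain a → b → c, the complete labelling marks a and c as in, which matches the single complete extension {a, c} of that framework. In a mutual attack between a and b, labelling both arguments undec is also complete and corresponds to the empty complete extension.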

3.2 Probabilistic Deduction Framework

We define a Probabilistic Deduction framework as a set of P-CWA consistent p-rules constructed on a language, as follows.

Definition 3.1.

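A Probabilistic Deduction (PD) framework is a pair $\langle \mathcal{L}, \mathcal{R} \rangle$ where $\mathcal{L}$ is the language and $\mathcal{R}$ is a set of p-rules such that

• for all $r \in \mathcal{R}$, literals in $r$ are in $\overline{\mathcal{L}}$, and

• $\mathcal{R}$ is P-CWA consistent.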

With a PD framework, we can build arguments as deductions.

Definition 3.2.

Given a PD framework , an argument for supported by , , denoted is such that there is a deduction in which for each leaf node in , either

-

1.

is labelled by , or

-

2.

is labelled by some , and .

The condition in Definition 3.2 is put in place to rule out “negative singleton arguments” resulting from p-rules. For instance, consider a PD framework with . Without this condition, we would admit as an argument, because

-

1.

forms a tree by itself in which the root and the leaf are both ,

-

2.

and is a p-rule in .

Intuitively, with this definition of argument, we want to assert that

1. if there is only a single literal in the deduction, then there must exist a p-rule in the set of rules; otherwise,
2. there must be some reason to acknowledge each leaf, either directly through a rule without a body, or through the existence of some information about the negation of the leaf.

Example 3.1 presents a few deductions for illustration.

Example 3.1.

Consider a language and four sets of p-rules , where

-

•

,

-

•

,

-

•

,

-

•

.

Figure 2 shows some examples of arguments built with these sets of p-rules.

Example 3.2.

(Example 2.14 continued.) With these two p-rules,

.

although both and are deductions, only is a PD argument.

Definition 3.3.

For two arguments and in some PD framework, attacks if .

Example 3.3.

Consider a PD framework with

Two arguments and can be built with such that attacks , as illustrated in Figure 3.
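Definition 3.3 is purely syntactic, so it can be sketched directly over sets of literals. The Python fragment below is illustrative only: it assumes a hypothetical string syntax in which `!x` denotes the negation of atom `x`, and represents an argument simply by its claim and the set of literals occurring in its deduction.

```python
from dataclasses import dataclass
from typing import FrozenSet


def neg(lit: str) -> str:
    """Negate a literal written as 'x' or '!x' (hypothetical syntax)."""
    return lit[1:] if lit.startswith("!") else "!" + lit


@dataclass(frozen=True)
class Argument:
    claim: str
    literals: FrozenSet[str]  # all literals in the deduction, claim included


def attacks(a: Argument, b: Argument) -> bool:
    """a attacks b iff the claim of a is the negation of some literal in b."""
    return neg(a.claim) in b.literals
```

Because attacks only inspect literals, an argument containing both a literal and its negation attacks itself, which is the situation covered by Proposition 3.2.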

At the core of PD semantics is the argument probability, defined as follows.

Definition 3.4.

Given an argument , in which , the probability of is:

| (23) |

Trivially, . We illustrate argument probability in Example 3.4.

Example 3.4.

(Example 3.3 continued.) Consider the following joint distribution computed from the p-rules:

Note that the joint distribution is unique. With P-CWA, from , we have

This implies .
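The formula of Definition 3.4 was lost in extraction. Assuming that Equation 23 defines the probability of an argument as the probability of the conjunction of all literals appearing in it (a reading consistent with Propositions 3.1 and 3.3), argument probabilities can be read off a joint distribution over possible worlds as in this minimal Python sketch. The world encoding, the `!x` negation syntax and the numbers are hypothetical.

```python
from itertools import product
from typing import Dict, Iterable, Sequence, Tuple

World = Tuple[bool, ...]  # truth value of each atom, in the order of `atoms`


def holds(world: World, atoms: Sequence[str], lit: str) -> bool:
    """Truth value of a literal ('x' or '!x') in a possible world."""
    if lit.startswith("!"):
        return not world[atoms.index(lit[1:])]
    return world[atoms.index(lit)]


def prob_of_conjunction(joint: Dict[World, float], atoms: Sequence[str],
                        lits: Iterable[str]) -> float:
    """Sum the probabilities of all worlds in which every literal holds."""
    lits = list(lits)
    return sum(p for w, p in joint.items()
               if all(holds(w, atoms, l) for l in lits))


# Hypothetical joint distribution over the atoms (a, b).
atoms = ("a", "b")
worlds = list(product((True, False), repeat=len(atoms)))
joint = dict(zip(worlds, [0.4, 0.1, 0.2, 0.3]))

p_argument = prob_of_conjunction(joint, atoms, ["a", "b"])  # argument with literals {a, b}
p_claim = prob_of_conjunction(joint, atoms, ["a"])          # its claim a
```

Here the argument gets 0.4 and its claim 0.5, and any literal set containing both `a` and `!a` gets 0.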

Probabilities of arguments that form attack cycles can be computed without any special treatment, as illustrated in the following example.

Example 3.5.

Consider a PD framework with a set of p-rules

Two arguments and can be built such that attacks and attacks , as illustrated in Figure 4.

We compute the joint probability distribution as:

Note that the joint probability distribution is again unique. With P-CWA, we have

and

Both imply . Thus there are four equations and four unknowns, so the solution is unique.

With these, we compute literal and argument probabilities:

We make a few observations about our notions of arguments and attacks in PD frameworks.

- Arguments are defined syntactically: arguments are deductions, which are trees whose nodes are literals and whose edges are defined by p-rules.
- Attacks are also defined syntactically, without referring to either literal or argument probabilities: argument attacks argument if and only if the claim of is the negation of some literal in . In this process, we make no distinction between “undercut” and “rebuttal” as done in some other constructions (see e.g., [19] for some discussion of these concepts).
- The probability semantics of PD frameworks given in Equation 23 is based on solving the joint distribution over the CC set of the language under the P-CWA assumption. Thus, it is by design a “global” semantics in that it requires a sense of “global consistency” as given in Definition 2.6. We give a brief discussion in Appendix B.1 about reasoning with PD frameworks that are not P-CWA consistent.
- Since the joint distribution of a PD framework may not be unique (unless maximum entropy reasoning is enforced), argument probabilities may not be unique either (subject to the same maximum entropy reasoning condition). We discuss the calculation of the joint distribution in detail in Section 4.

A few results concerning the argument probability semantics are as follows. Arguments containing both a literal and its negation have 0 probability.

Proposition 3.1.

For any argument , if , then .

Self-attacking arguments have 0 probability.

Proposition 3.2.

For any argument , if , then .

An argument’s probability is no higher than the probability of its claim.

Proposition 3.3.

For any argument , .

If an argument’s probability equals the probability of its claim, then there is one and only one argument for the claim.

Proposition 3.4.

For any argument , if and only if there is no such that and .

If an argument is attacked by another argument, then the sum of the probabilities of the two arguments is no more than 1.

Proposition 3.5.

For any two arguments and , if attacks , then .

Proposition 3.5 is the first of the two conditions of p-justifiability introduced in [55] (Definition 4), also known as the “coherence criterion” introduced in [36]. Note that the second condition of p-justifiability, that the sum of the probabilities of an argument and its attackers must be no less than 1 (also known as the “optimistic criterion” in [36]), does not hold in PD in general, as illustrated in the following example.

Example 3.6.

Consider a PD framework with two p-rules

Let and . We compute and . Clearly, attacks , yet . This example represents a case where a weak argument attacks another weak argument, and the sum of the probabilities of the two is less than 1.

With p-rules, PD naturally supports reasoning with both uncertain knowledge and “hard” facts in a single framework. A classic example used in defeasible reasoning, the “Nixon diamond” introduced by Reiter and Criscuolo [51], can be modelled with a PD framework as shown in Table 4. We can see that both arguments

and

can be drawn such that they attack each other. Calculating their probabilities, we have .

| Defeasible Knowledge | |
|---|---|
| usually, Quakers are pacifist | :[] |
| usually, Republicans are not pacifist | :[] |
| “Hard” Facts | |
| Richard Nixon is a Quaker | :[] |
| Richard Nixon is a Republican | :[] |
| Nixon exists | :[] |

3.3 PD Frameworks and Abstract Argumentation

To show relations between PD and AA [17], we first explore a few classic examples studied with AA to illustrate PD’s probabilistic semantics. We then present a few intermediate results (Propositions 3.6-3.10), with which we show that PD generalises AA (Theorem 3.1).

We start by presenting Examples 3.7-3.11. The arguments and attacks shown in these examples are used in [1] to illustrate differences between several classical (non-probabilistic) argumentation semantics. The characteristics of these examples are summarised in Table 5.

| Example | Description | Source |
|---|---|---|
| Example 3.7 | Three arguments and two attacks | Figure 2 in [1] |
| Example 3.8 | Two arguments attack each other | Figure 3 in [1] |
| Example 3.9 | “Floating Acceptance” example | Figure 5 in [1] |
| Example 3.10 | “Cycle of three attacking arguments” example | Figure 6 in [1] |
| Example 3.11 | Stable extension example | Figure 8 in [1] |

Example 3.7.

Let be a PD framework with three p-rules:

Let , , . Arguments and attacks are shown in Figure 5. The joint distribution is such that

| , | , | , | , |

| , | , | , | . |

With these, we have , , and .

Example 3.8.

Let be a PD framework with two p-rules:

Let , and . The arguments and attacks are shown in Figure 3. A joint distribution is such that

With these, we have .

Example 3.9.

Let be a PD framework with four p-rules:

| , | , | , | . |

Let , , , and . The arguments and attacks are shown in Figure 6. The joint distribution is such that

Computing argument probabilities, we have , , and .

Example 3.10.

Let be a PD framework with three p-rules:

Let , , and . Arguments and attacks are shown in Figure 7. Although is Rule-PSAT, it is not P-CWA consistent. Thus, there is no consistent joint distribution over the CC set. , and are undefined.

Example 3.11.

Let be a PD framework with five p-rules:

Let , , , , and . Arguments and attacks are shown in Figure 8. The joint distribution is such that

Computing argument probabilities, we have , and .

We make two observations from these examples.

- Syntactically, it is straightforward to map AA frameworks to PD frameworks such that there is a one-to-one mapping between the arguments and attacks in an AA framework and their counterparts in the mapped PD framework. Thus, for any AA framework , there is a counterpart represented as a PD framework.
- Semantically, the probability semantics of PD frameworks in these examples behaves intuitively, in the sense that:
  - winning arguments have probability 1;
  - losing arguments have probability 0;
  - arguments that can either win or lose have probability between 0 and 1; and
  - arguments that can be labelled neither winning nor losing result in inconsistency.

More formally, as we show below, when argument probabilities are viewed as a labelling, they represent a complete labelling.

Starting with the syntactic aspect, we define a mapping from AA frameworks to PD frameworks as follows.

Definition 3.5.

The function is a mapping from AA frameworks to PD frameworks such that for an AA framework , , where:

-

•

,

-

•

, .

Proposition 3.6 below guarantees that the arguments and attacks in AA frameworks are mapped unambiguously to their counterparts in PD frameworks.

Proposition 3.6.

Given an AA framework , there exists a function that maps arguments in to arguments in such that for any , attacks in .

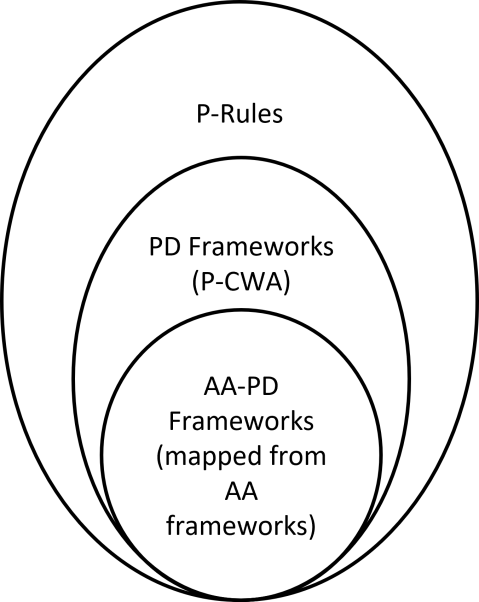

To establish semantics connections between AA frameworks and PD frameworks, we first introduce AA-PD frameworks as the set of PD frameworks that are mapped from AA frameworks, i.e., let be the set of AA frameworks, then the set of AA-PD frameworks is . We observe the following with AA-PD frameworks:

1. unattacked arguments have probability 1 (note that this is the “founded” criterion in [36]), and
2. if an argument with probability 1 attacks another argument , will have probability 0.

Proposition 3.7.

Given an AA-PD framework , for an argument in , if is not attacked in , then .

Proposition 3.8.

Given an AA-PD framework , let and be two arguments in such that attacks . If then .

Extending Propositions 3.7 and 3.8, we can show that if all attackers of an argument have probability 0, then the argument has probability 1; moreover, if an argument that has been attacked has probability 1, then all of its attackers must have probability 0. Formally,

Proposition 3.9.

Given an AA-PD framework , let be an argument in and the set of arguments attacking , .

1. If for all , , then .
2. If , then for all , .

An argument has probability 0 if and only if it has an attacker with probability 1.

Proposition 3.10.

Given an AA-PD framework , let be an argument in and the set of arguments attacking , . With maximum entropy reasoning,

1. if , then there exists , such that ;
2. if there exists , such that , then .

Maximum entropy reasoning is a key condition for Proposition 3.10: the proposition does not hold without it, as illustrated in the following example.

Example 3.12.

Consider an AA-PD framework with five p-rules:

| :[], | :[], | :[], |

| :[], | :[]. |

The arguments and attacks are shown in Figure 9. Calculating the joint probability distribution with maximum entropy reasoning, we obtain the solution

This solution gives and .

Without maximum entropy reasoning, a possible solution is

This joint distribution gives and . Thus, even though neither of the two arguments ( and ) attacking has probability 1.

Propositions 3.7-3.10 describe attack relations between PD arguments that are similar to attacks in AA (or other non-probabilistic argumentation frameworks). For instance, if we consider arguments with probability 0 as out and arguments with probability 1 as in, then we obtain a labelling-based semantics as shown in [1]. Formally,

Theorem 3.1.

Given an AA-PD framework , let be the set of arguments in . With maximum entropy reasoning, the Probabilistic Labelling function defined as

in which , is a complete labelling.

Theorem 3.1 bridges PD and AA semantically, as relations between the complete labelling and argument extensions have been studied extensively in e.g., [1, 7, 8]. In short, labellings can be mapped to extensions such that, given some semantics , the arguments labelled in by an -labelling form an -extension. Moreover, the complete labelling can be viewed as central to defining labellings for other semantics [57]. For instance, a grounded labelling is a complete labelling such that the set of arguments labelled in is minimal with respect to set inclusion among all complete labellings; a stable labelling is a complete labelling such that the set of undecided arguments is empty; a preferred labelling is a complete labelling such that the set of arguments labelled in is maximal with respect to set inclusion among all complete labellings [57].
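The Probabilistic Labelling of Theorem 3.1 maps arguments with probability 1 to in, arguments with probability 0 to out, and all others to undec. A small Python sketch follows; the tolerance `tol` is our own addition to cope with floating-point solver output and is not part of the theorem, and the function names are hypothetical.

```python
from typing import Dict, Set


def probabilistic_labelling(probs: Dict[str, float],
                            tol: float = 1e-9) -> Dict[str, str]:
    """Label each argument in/out/undec from its probability."""
    labels = {}
    for arg, p in probs.items():
        if abs(p - 1.0) <= tol:
            labels[arg] = "in"
        elif abs(p) <= tol:
            labels[arg] = "out"
        else:
            labels[arg] = "undec"
    return labels


def in_arguments(labels: Dict[str, str]) -> Set[str]:
    """In-labelled arguments; for a complete labelling they form a complete extension."""
    return {a for a, l in labels.items() if l == "in"}
```

Solver output such as 1 - 1e-12 is treated as 1 by the tolerance; without it, numerically exact probabilities of 0 and 1 would rarely be produced.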

One last result we would like to present on AA-PD framework is the following.

Proposition 3.11.

Given an AA-PD framework , let be an argument in and the set of arguments attacking , . .

This is the “optimistic criterion” introduced in [36, 37]. We discuss the relation between AA-PD and probabilistic abstract argumentation further in Section 5.1.

In this section, we have introduced arguments built with p-rules and attacks between arguments in PD frameworks. In PD frameworks, arguments are deductions, as in ABA frameworks [11]. Attacks are defined syntactically such that an argument attacks another argument if the claim of is the negation of some literal in . We have compared PD with AA and shown that AA frameworks can be mapped to PD frameworks containing only rules with probability 1. The key insight is that the probability semantics given by PD can be viewed as a complete labelling as defined in AA.

4 Probability Calculation

So far we have introduced the probability semantics of p-rules in Section 2 and argument construction in Section 3. In both sections, we have assumed that the joint probability distribution for the CC set can be computed. In this section, we study methods for computing from p-rules. We look at methods for computing exact solutions as well as their approximations.

4.1 Compute Joint Distribution with Linear Programming

We begin with methods for computing exact solutions. Given a set of p-rules such that contains literals, to test whether is Rule-PSAT, we set up a linear system

| (24) |

where is an -by- matrix, , an -by- matrix. [Footnote 7: We let be the CC set of . We consider the elements of this set to be ordered by their Boolean values. E.g., for , the four elements in the CC set are ordered such that .] We construct and such that is Rule-PSAT if and only if has a solution in , as follows.

For each rule , if has an empty body, then

| (25) |

and

| (26) |

Otherwise, , then

| (27) |

| (28) |

Row in and are and , respectively.
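Since the entries of Equations 25-28 were lost in extraction, the Python sketch below shows one standard way to encode p-rules as linear constraints, which we assume matches the construction above: a rule with an empty body and probability θ contributes the row P(h) = θ, and a rule h ← B with probability θ contributes P(h ∧ B) - θ·P(B) = 0. A final row of ones makes the probabilities sum to 1. It uses SciPy's `linprog` with a zero objective as a pure feasibility check. The rule encoding `(head, body, theta)`, the `!x` negation syntax and the world ordering are hypothetical.

```python
from itertools import product

import numpy as np
from scipy.optimize import linprog


def holds(world, atoms, lit):
    """Truth value of a literal ('x' or '!x') in a world (tuple of booleans)."""
    if lit.startswith("!"):
        return not world[atoms.index(lit[1:])]
    return world[atoms.index(lit)]


def build_system(atoms, rules):
    """Build A, b so the rules are satisfiable iff A p = b has a solution in [0, 1]."""
    worlds = list(product((True, False), repeat=len(atoms)))
    A = np.zeros((len(rules) + 1, len(worlds)))
    b = np.zeros(len(rules) + 1)
    for i, (head, body, theta) in enumerate(rules):
        for j, w in enumerate(worlds):
            h = holds(w, atoms, head)
            if not body:                                 # fact: P(h) = theta
                A[i, j] = 1.0 if h else 0.0
            elif all(holds(w, atoms, l) for l in body):  # P(h & B) - theta * P(B) = 0
                A[i, j] = 1.0 - theta if h else -theta
        b[i] = theta if not body else 0.0
    A[-1, :] = 1.0                                       # probabilities sum to one
    b[-1] = 1.0
    return A, b, worlds


def solve_rule_psat(atoms, rules):
    """Return one joint distribution satisfying the rules, or None if none exists."""
    A, b, worlds = build_system(atoms, rules)
    res = linprog(c=np.zeros(len(worlds)), A_eq=A, b_eq=b,
                  bounds=(0, 1), method="highs")
    return (res.x if res.status == 0 else None), worlds


# Hypothetical p-rules: a : [0.6] and b <- a : [0.5].
p, worlds = solve_rule_psat(("a", "b"), [("a", (), 0.6), ("b", ("a",), 0.5)])
```

With these two rules, any solution must give the world where both a and b hold probability 0.6 × 0.5 = 0.3, while asserting both a : [0.6] and ¬a : [0.6] makes the system infeasible.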

Example 4.1.

4.2 Compute Joint Distribution under P-CWA

To reason with P-CWA, additional equations must be introduced as constraints. To this end, we revise the construction of matrices and in Equation 24 as given in Equations 25 & 27 and Equations 26 & 28, respectively, to meet the requirement given in Equation 18.

In revising constructions of these two matrices, one useful observation we can make is that the P-CWA constraint

can be computed “locally”, in the sense that one does not need to explicitly identify , the disjunction of conjunctions of literals that are in deductions for (Equation 17), when computing for each literal . This matters because identifying explicitly when computing for each would require computing all deductions of , which is both repetitive and computationally expensive. We first illustrate the “local computation” idea with two examples and then present the algorithm along with a formal proof.

Consider three p-rules:

.

Directly applying the definition of P-CWA, we have

- from the deduction [Footnote 8: To simplify the presentation, we use to denote . E.g., denotes .], and
- from the deduction .

However, if we were to take the “global” view and directly encode

with the equation

| (29) |

then we must traverse all three rules to find the deduction . Instead of doing this traversal, we can simply encode

- from the p-rule with Equation 30, and
- from the p-rule with Equation 31.

Equations 30 and 31 are “local” because, given a rule , we simply assert . No deduction construction or multi-rule traversal is needed, unlike for Equation 29. To see their equivalence, we show that they assign the same set of to 0.

Example 4.2.

We examine the assigned to 0 from each equation. To simplify the presentation, we again use the Boolean string representation introduced in Example 2.5 for literals. E.g., “110” denotes .

- asserts that . [Footnote 9: These are easy to see: the first bit of the three-bit string must be 1 so that the conjunction represented by the string satisfies ; the remaining two bits cannot both be 1, as that would make the conjunction satisfy . So 100, 101 and 110 are produced in this case.]
- asserts that . [Footnote 10: Similarly, in this case the second bit must be 1 to satisfy , and the third bit must be 0 to not satisfy . There is no constraint on the first bit, so 010 and 110 are produced in this case.]
- asserts that .

We see that the only difference between

and

lies in setting . Yet, this is asserted by , which is available in both the “global” and the “local” versions of the constraint.

The above example illustrates the case where p-rules with different heads are chained. When there are two p-rules with the same head, e.g., there exist

:[] and :[],

then we assert

Example 4.3.

Consider five p-rules:

:[], :[], :[], :[], :[].

With a direct application of the P-CWA definition, we have

and .

We show that this is the same as asserting

and .

Using the Boolean string representation (e.g., “1001” denotes ),

- with , we assert
- with , we assert
- with , we assert

We can see that the difference between the “global” constraint

and the “local” one

lies in asserting . However, this is asserted by . Thus the “global” constraint is indeed satisfied by the “local” version.

Summarising these two examples, additional rows of matrices and describing P-CWA can be constructed as follows.

Given a set of p-rules such that are heads of p-rules in , for each , let

be the p-rules in with head . We construct

| (32) |

For each , append a new row to such that

| (33) |

and

| (34) |

where are the column indices of , all atomic conjunctions in . Here, we again consider that is ordered with the Boolean values of its elements as in footnote 7.
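The local construction of Equations 32-34 can be sketched as follows: for every head h whose p-rules all have non-empty bodies, add one row with a 1 in every column (world) where h holds but none of its rule bodies holds, and 0 on the right-hand side, which forces those worlds to probability 0. This is our reading of the construction, written as a hypothetical Python helper; skipping heads that also have a rule with an empty body is an assumption, since such a head is derivable in every world. The check uses the rules a ← b, c and b ← c suggested by the bit strings in Example 4.2, with made-up probabilities.

```python
from collections import defaultdict
from itertools import product

import numpy as np


def holds(world, atoms, lit):
    """Truth value of a literal ('x' or '!x') in a world (tuple of booleans)."""
    if lit.startswith("!"):
        return not world[atoms.index(lit[1:])]
    return world[atoms.index(lit)]


def pcwa_rows(atoms, rules):
    """Extra rows (A_cwa, b_cwa) encoding the local P-CWA constraints."""
    worlds = list(product((True, False), repeat=len(atoms)))
    bodies = defaultdict(list)                 # head -> list of rule bodies
    for head, body, _theta in rules:
        bodies[head].append(body)
    rows = []
    for head, bs in bodies.items():
        if any(len(body) == 0 for body in bs):
            continue                           # head also has a fact: no constraint
        row = [1.0 if holds(w, atoms, head) and
               not any(all(holds(w, atoms, l) for l in body) for body in bs)
               else 0.0
               for w in worlds]
        rows.append(row)
    A_cwa = np.array(rows).reshape(len(rows), len(worlds))
    return A_cwa, np.zeros(len(rows)), worlds


def as_bits(world):
    """Boolean string representation, e.g. (True, True, False) -> '110'."""
    return "".join("1" if v else "0" for v in world)


atoms = ("a", "b", "c")
rules = [("a", ("b", "c"), 0.9), ("b", ("c",), 0.8), ("c", (), 0.5)]
A_cwa, b_cwa, worlds = pcwa_rows(atoms, rules)
zeroed = [{as_bits(w) for w, v in zip(worlds, row) if v == 1.0} for row in A_cwa]
```

The two rows zero out exactly {100, 101, 110} and {010, 110}, matching the sets listed in Example 4.2; appending them to A and b of Equation 24 restricts the feasible distributions to those satisfying P-CWA.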

Theorem 4.2.

Theorem 4.2 establishes the correctness of encoding the P-CWA criterion with local constraints. The proof, given in Appendix A, is lengthy, but the idea is simple. We first observe that the “global” constraints given by the P-CWA definition, which are defined with respect to deductions, require setting for some ; and the “local” constraints, given by Equations 33 and 34, which only need information at the level of p-rules, also set for some . The theorem states that the s and the s are the same set of atomic conjunctions. This holds, as shown by our induction proof, because:

1. when each deduction contains a single p-rule, it is easy to see that the set of s is the same as the set of s;
2. when a deduction contains multiple p-rules, assuming s = s, introducing a new p-rule does not break the equality. This is because for any that is set to by the global constraint but not by the local constraint defined by the new p-rule, we can find an existing p-rule that sets for the same .

To see this, we observe that for a new p-rule of the form

with deduction

the that is set to by but not is of the form

in which . However, will be set for such by the p-rule

and must both exist in , as without them there would be no . With the local constraint, will set .

4.3 Compute Maximum Entropy Solutions

To compute the maximum entropy distribution introduced in Section 2.3, we use

Maximize:

| (35) |

subject to:

As the objective function is nonlinear, the problem can no longer be solved with linear programming techniques. One possibility is to use a quadratic programming (QP) approach, such as a trust-region method [61], which can optimise nonlinear objective functions subject to linear constraints and allows variable bounds to be specified.
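As an illustration of the nonlinear route, the sketch below maximises the entropy -Σ p log p subject to A p = b and 0 ≤ p ≤ 1 using SciPy's `minimize` with the `trust-constr` method. It is a hedged example, not the implementation behind the reported experiments: the small A and b (a single fact a : [0.6] over the atoms a and b, plus normalisation) are hypothetical, and the clipping constant `EPS` is our own safeguard against log 0.

```python
import numpy as np
from scipy.optimize import Bounds, LinearConstraint, minimize

EPS = 1e-12  # keeps log() finite near the boundary


def neg_entropy(p):
    q = np.clip(p, EPS, None)
    return float(np.sum(q * np.log(q)))


def neg_entropy_grad(p):
    return np.log(np.clip(p, EPS, None)) + 1.0


def neg_entropy_hess(p):
    return np.diag(1.0 / np.clip(p, EPS, None))


def max_entropy(A, b):
    """Maximise -sum(p log p) subject to A p = b and 0 <= p <= 1."""
    n = A.shape[1]
    res = minimize(neg_entropy, x0=np.full(n, 1.0 / n), method="trust-constr",
                   jac=neg_entropy_grad, hess=neg_entropy_hess,
                   constraints=[LinearConstraint(A, b, b)],
                   bounds=Bounds(np.zeros(n), np.ones(n)))
    return res.x


# Worlds over (a, b) ordered TT, TF, FT, FF; rows: P(a) = 0.6 and sum(p) = 1.
A = np.array([[1, 1, 0, 0], [1, 1, 1, 1]], dtype=float)
b = np.array([0.6, 1.0])
p = max_entropy(A, b)
```

The solver spreads each constrained mass evenly, so p is approximately (0.3, 0.3, 0.2, 0.2).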

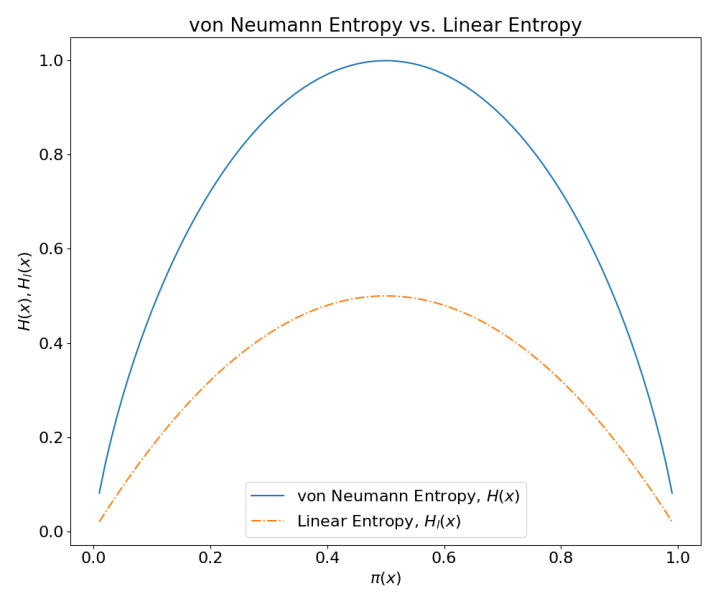

Alternatively, to keep the resulting system linear and hence reduce complexity, instead of maximising the von Neumann entropy (Equation 35), we can use the linear entropy [6], which approximates with (the first term in the Taylor series of ), and maximise

| (36) |

with Lagrange multipliers [4] as follows.

Consider as a column vector of row vectors

and . Define an auxiliary function :

are the Lagrange multipliers. We need to solve

This amounts to solving the following equations:

For :

| (37) |

For :

| (38) |

Together with the remaining equations (Equation 37), we have a new system that gives a maximum linear entropy solution to .

In a matrix form, we have

| (39) |

where is the -by- identity matrix, and .
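Because Equations 37-39 lost their symbols in extraction, here is our own derivation as a hedged sketch: maximising Σᵢ pᵢ(1 - pᵢ) subject to A p = b with multipliers λ gives the stationarity condition 2p + Aᵀλ = 1 together with A p = b, i.e. the block system [[2I, Aᵀ], [A, 0]] [p; λ] = [1; b]. The sign convention for λ may differ from Equation 39. Like any equality-only Lagrangian approach, this ignores the bounds 0 ≤ pᵢ ≤ 1, so the sketch checks them afterwards. The NumPy code solves the system by least squares so that redundant rows in A do not make it fail.

```python
import numpy as np


def max_linear_entropy(A, b):
    """Solve [[2I, A^T], [A, 0]] [p; lam] = [1; b] and return p."""
    m, n = A.shape
    K = np.block([[2.0 * np.eye(n), A.T],
                  [A, np.zeros((m, m))]])
    rhs = np.concatenate([np.ones(n), b])
    sol, *_ = np.linalg.lstsq(K, rhs, rcond=None)
    p = sol[:n]
    if np.any(p < -1e-9) or np.any(p > 1 + 1e-9):
        raise ValueError("stationary point violates 0 <= p <= 1; use a bounded solver")
    return p


# Worlds over (a, b) ordered TT, TF, FT, FF; rows: P(a) = 0.6 and sum(p) = 1.
A = np.array([[1, 1, 0, 0], [1, 1, 1, 1]], dtype=float)
b = np.array([0.6, 1.0])
p = max_linear_entropy(A, b)
```

For this small system the stationary point is (0.3, 0.3, 0.2, 0.2), the same distribution that maximises the von Neumann entropy.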

Example 4.4.

Consider a p-rule set with two p-rules

and .

To find the maximum linear entropy solution of using Lagrange multipliers, we start by constructing matrices and as:

. Thus, and

As illustrated in Figure 10, linear entropy gives a lower bound to von Neumann entropy. More importantly, both attain their maxima when the probabilities are distributed as evenly as possible. Hence the distribution that maximizes linear entropy can be expected to be close to, though not in general identical to, the one that maximizes von Neumann entropy; the experiments in Section 4.5 (Table 6) indicate that little accuracy is lost by maximizing linear entropy in practice.

4.4 Compute Solutions with Stochastic Gradient Descent

So far, our discussion of calculating the joint distribution has centred on solving the linear system



with different constructions of and . Although linear programming with linprog computes solutions, it is computationally expensive. For a more efficient approach, we consider a stochastic gradient descent (SGD) method for solving , as follows.

Let , for , define

Consider the squared loss function :

Solving is then equivalent to finding such that



We use SGD to find the minimum point. Starting from some initial , we loop over , and each in is updated iteratively with

| (40) |

in which

where is the learning rate (a small positive number).

This root-finding process can be viewed as training an unthresholded perceptron [53] (i.e., without an activation function) using SGD. Each is kept bounded in in the updating step (Equation 40). Note that this is a generic method for solving linear systems: it does not rely on any specific construction of or . Thus, to compute maximum entropy solutions, we can use this approach to solve the linear system composed of Equations 37 and 38.

A prominent advantage of SGD-based approaches is the ability to control the error at run time, so the gradient descent loop terminates when is “small enough”. Moreover, as SGD can easily be parallelized on GPUs, its performance can be improved significantly.
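A minimal NumPy sketch of the SGD procedure described above follows. Each step picks one row i of A p = b in random order, computes the residual rᵢ = Aᵢ·p - bᵢ, moves p against the gradient of rᵢ² (the factor 2 is absorbed into the learning rate) and clips p back into [0, 1]. The learning rate, tolerance, epoch limit and uniform initialisation are hypothetical choices, not the settings used in the experiments.

```python
import numpy as np


def sgd_solve(A, b, lr=0.05, tol=1e-10, max_epochs=20000, seed=0):
    """Find p in [0, 1]^n with A p ~= b by per-row stochastic gradient steps."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    p = np.full(n, 1.0 / n)                    # start from the uniform distribution
    for _ in range(max_epochs):
        for i in rng.permutation(m):           # visit rows in random order
            r = A[i] @ p - b[i]                # residual of constraint i
            p = np.clip(p - lr * r * A[i], 0.0, 1.0)
        if np.sum((A @ p - b) ** 2) < tol:     # squared loss small enough
            break
    return p


# Worlds over (a, b) ordered TT, TF, FT, FF; rows: P(a) = 0.6 and sum(p) = 1.
A = np.array([[1, 1, 0, 0], [1, 1, 1, 1]], dtype=float)
b = np.array([0.6, 1.0])
p = sgd_solve(A, b)
```

The stopping test is the run-time error control mentioned above: the loop ends once the squared loss falls below `tol`.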

4.5 Computational Performance Studies

To study the performance of the presented Rule-PSAT algorithms, we run them on randomly generated p-rules. Given a language , to ensure that p-rule sets defined over are Rule-PSAT, we first generate a random distribution over the CC set of by drawing samples from a discrete uniform distribution. We then generate p-rules by randomly selecting literals from to be the head and body of p-rules. The length of each p-rule is randomly selected between 1 and the size of the language. The probability of each generated p-rule is then computed from with Equations 3 and 4.
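The benchmark generation can be sketched as follows. This is a hedged reading of the text, not the authors' generator: it draws integer weights from a discrete uniform distribution (starting at 1 so that every world has positive probability, an assumption that avoids conditioning on zero-probability bodies), normalises them into a joint distribution, picks distinct atoms with random signs for each p-rule, and sets the rule probability to P(head | body), which is how we read Equations 3 and 4. Because every rule is computed from the same distribution, the generated set is Rule-PSAT by construction.

```python
from itertools import product

import numpy as np


def holds(world, atoms, lit):
    """Truth value of a literal ('x' or '!x') in a world (tuple of booleans)."""
    if lit.startswith("!"):
        return not world[atoms.index(lit[1:])]
    return world[atoms.index(lit)]


def prob(joint, worlds, atoms, lits):
    """Probability that all literals hold under the joint distribution."""
    return sum(q for w, q in zip(worlds, joint)
               if all(holds(w, atoms, l) for l in lits))


def random_benchmark(atoms, n_rules, max_weight=100, seed=0):
    rng = np.random.default_rng(seed)
    worlds = list(product((True, False), repeat=len(atoms)))
    weights = rng.integers(1, max_weight + 1, size=len(worlds))
    joint = weights / weights.sum()
    rules = []
    for _ in range(n_rules):
        length = int(rng.integers(1, len(atoms) + 1))   # head plus body literals
        picked = rng.choice(len(atoms), size=length, replace=False)
        lits = [atoms[k] if rng.random() < 0.5 else "!" + atoms[k] for k in picked]
        head, body = lits[0], tuple(lits[1:])
        theta = (prob(joint, worlds, atoms, (head,) + body)
                 / prob(joint, worlds, atoms, body))
        rules.append((head, body, theta))
    return joint, worlds, rules


joint, worlds, rules = random_benchmark(("a", "b", "c", "d"), n_rules=8)
```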

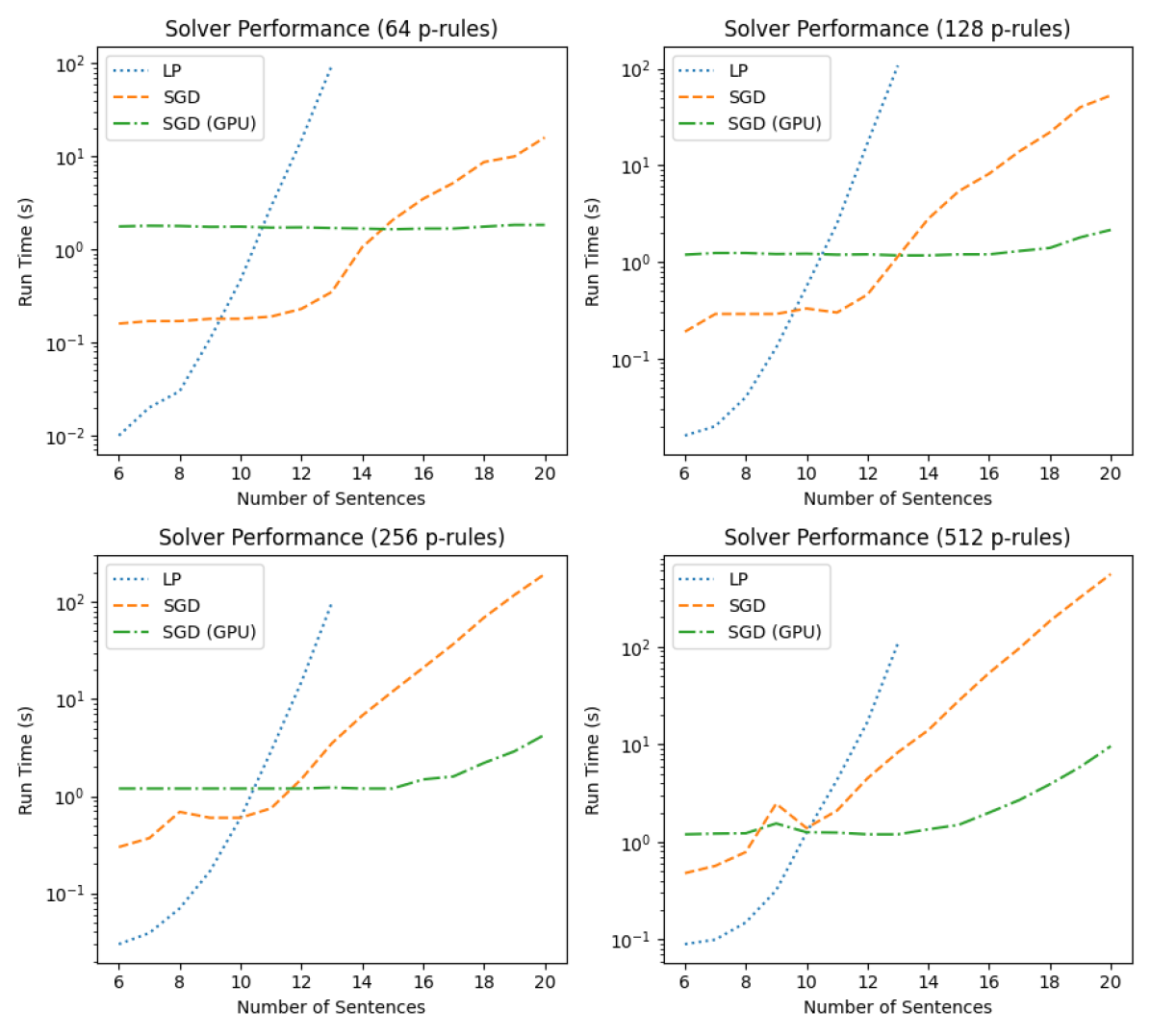

We separate our experiments into two groups: approaches that find a solution to and approaches that find maximum entropy solutions. Figure 11 shows the solver performance for the first group: LP, SGD (CPU) and SGD (GPU). In these experiments, the size of the language ranges from to ; the sizes of the p-rule sets are 64, 128, 256 and 512, respectively. The termination condition for SGD (with and without GPU) is . All experiments are conducted on a desktop PC with a Ryzen 2700 CPU (16 cores), 64GB RAM and an Nvidia 3090 GPU (24GB RAM). From this figure, we observe that although LP is faster than SGD when the size of the language is smaller than 10, SGD is significantly faster as the size of grows, especially when the GPU implementation is used.

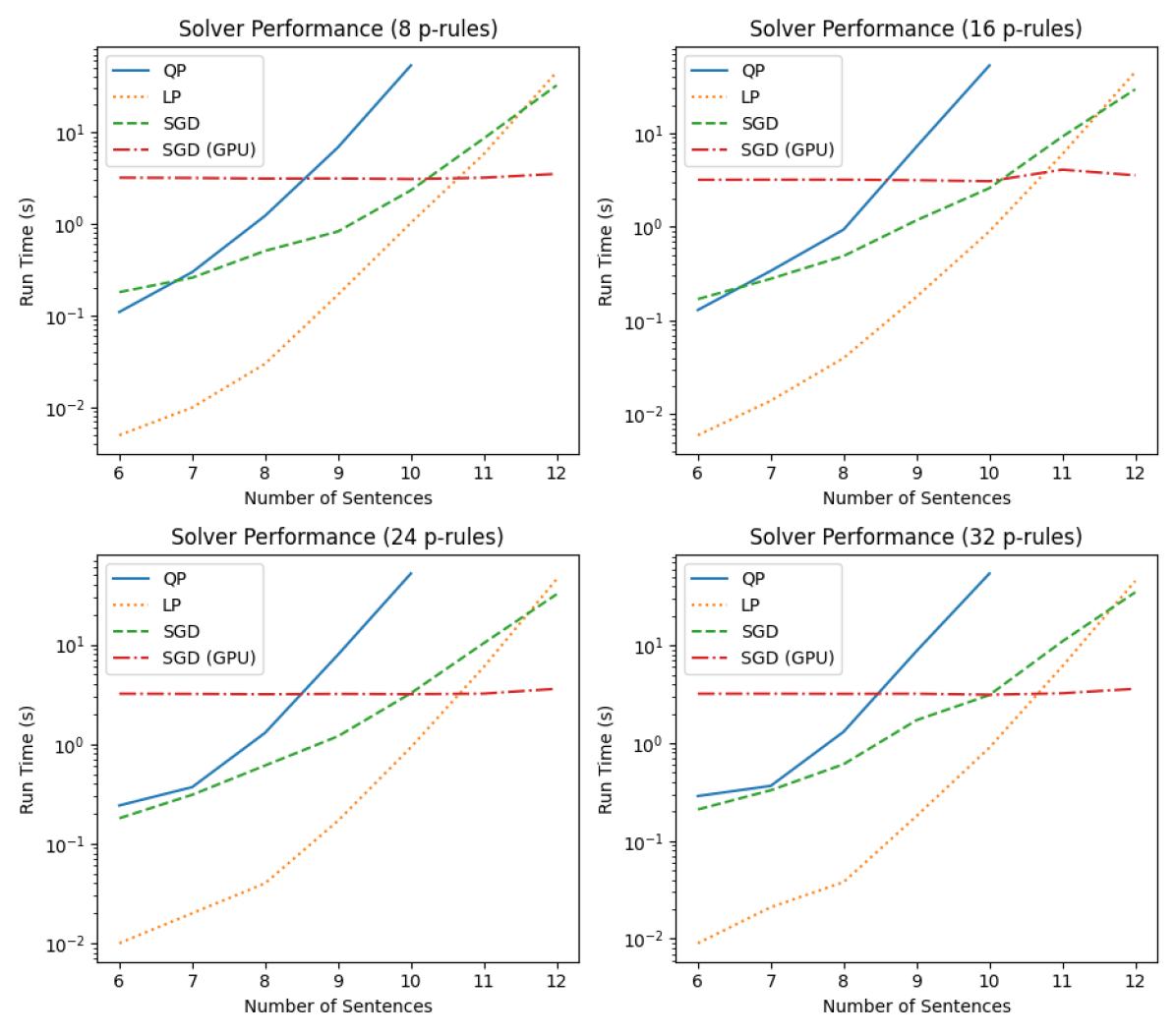

To study the performance of approaches that compute maximum entropy solutions, we first compare the entropy of the solutions they find; the results are shown in Table 6. We observe that the approaches that optimize linear entropy find the same solutions as the QP approach that maximizes von Neumann entropy, as expected; the small differences between these methods are likely caused by numerical errors. For these experiments, the size of the p-rule sets is 16. Figure 12 presents the solver performance in terms of speed. We see that although SGD is initially slower than LP and QP, it overtakes them as the size of grows.

| Method | size = 6 | size = 7 | size = 8 | size = 9 | size = 10 |
|---|---|---|---|---|---|
| QP maximize | 4.101 | 4.820 | 5.529 | 6.235 | 6.928 |
| LP maximize | 4.103 | 4.821 | 5.529 | 6.235 | 6.928 |
| SGD maximize | 4.101 | 4.824 | 5.529 | 6.235 | 6.929 |

In all experiments, the learning rate in our SGD implementations is set to , where is the size of the language. Momentum [45], a common technique for training neural networks, has been applied to speed up SGD convergence. Namely, in each iteration, is updated with

where is the momentum used in all experiments. on the RHS is calculated in the previous iteration.
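The momentum variant adds a velocity term that carries over part of the previous update. The sketch below is a hypothetical heavy-ball version of the per-row SGD loop; the momentum coefficient `mu`, the learning rate and the stopping rule are illustrative values, not those used in the experiments.

```python
import numpy as np


def sgd_momentum(A, b, lr=0.02, mu=0.5, tol=1e-10, max_epochs=20000, seed=0):
    """Per-row SGD on ||A p - b||^2 with a momentum (velocity) term."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    p = np.full(n, 1.0 / n)
    v = np.zeros(n)                            # update from the previous iteration
    for _ in range(max_epochs):
        for i in rng.permutation(m):
            grad = (A[i] @ p - b[i]) * A[i]
            v = mu * v - lr * grad             # blend previous update with new gradient
            p = np.clip(p + v, 0.0, 1.0)
        if np.sum((A @ p - b) ** 2) < tol:
            break
    return p


A = np.array([[1, 1, 0, 0], [1, 1, 1, 1]], dtype=float)
b = np.array([0.6, 1.0])
p = sgd_momentum(A, b)
```

Setting `mu=0` recovers the plain update; larger values reuse more of the previous step.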

In summary, Table 7 presents characteristics of the Rule-PSAT solving approaches studied in this work. We see that SGD approaches with GPU implementation significantly outperform LP and QP methods in terms of scalability.

| Method | Exact Solution | Maximum Entropy Solution |
|---|---|---|
| LP solve | Yes | No |
| QP maximize | Yes | Yes (von Neumann) |
| LP maximize | Yes | Yes (Linear) |
| SGD solve | No | No |
| SGD maximize | No | Yes (Linear) |
| SGD solve (GPU) | No | No |
| SGD maximize (GPU) | No | Yes (Linear) |

5 Discussion and Conclusion

In this work, we have presented a novel probabilistic structured argumentation framework, Probabilistic Deduction (PD). Syntactically, PD frameworks are defined with probabilistic rules (p-rules) in the form of

which is read as conditional probabilities . To reason with p-rules, we solve the rule probabilistic satisfiability problem to find the joint probability distribution over the language defining the p-rules and then compute literal probabilities for literals in the language. We have introduced two different formulations of this process, the probabilistic open-world assumption (P-OWA) and the probabilistic closed-world assumption (P-CWA). With P-OWA, the joint probability distribution is solved based on the conditional probabilities defined by p-rules; with P-CWA, additional constraints are introduced to assert that the probability of a literal is the sum of the probabilities of all possible worlds that contain a deduction of the literal.

From p-rules, we build arguments as deductions such that the leaves of a deduction are either literals that are heads of p-rules with empty bodies or literals whose negations are heads of p-rules. One argument attacks another when the claim of the former is the negation of some literal in the latter. The main technical result in this part is that, with maximum entropy reasoning, our probability semantics under P-CWA coincides with the complete semantics defined for non-probabilistic argumentation. We prove this for abstract argumentation, using the mapping from AA frameworks to PD frameworks presented in Section 3.3.

Solving Rule-PSAT is at the core of reasoning with PD. We have investigated several different approaches for doing this using linear programming, quadratic programming and stochastic gradient descent. We have conducted experiments with these approaches on p-rule sets built on different sizes of languages and with different numbers of p-rules. We observe that stochastic gradient descent with GPU implementation outperforms all other approaches as the size of the language grows.

5.1 Relations with some Existing Works

As discussed in [20], Rule-PSAT is a variation of the probabilistic satisfiability (PSAT) problem introduced by Nilsson in [47]. Nilsson considered knowledge bases in Conjunctive Normal Form. A modus ponens example, [Footnote 11: This example is used in [47]. The figure on the left-hand side of Table 8 is a reproduction of Figure 2 in [47].]

If , then . . Therefore, .

is shown in Table 8. The probability of the conditional claim is , that of the antecedent is and that of the consequent is . With Nilsson’s probabilistic logic, this is interpreted as:

| , | , | , |

which gives rise to equations

| (41) | ||||

| (42) | ||||

| (43) |

With the probabilistic rules discussed in this work, modus ponens is interpreted as the following three p-rules:

| , | , | , |

which gives rise to Equation 44

| (44) |

together with Equations 42 and 43. The two shaded polyhedra shown in Table 8 illustrate the probabilistically consistent regions for and , with probabilistic logic and probabilistic rules, respectively, as defined by their corresponding equations together with Equations 1 and 2. The consistent region in the probabilistic logic case is a tetrahedron, with vertices (0,0,1), (1,0,0), (1,1,0) and (1,1,1). The consistent region in the probabilistic rule case is an octahedron, with vertices (0,0,0), (0,0,1), (0,1,0), (1,0,0), (1,1,0) and (1,1,1). It is argued in [46] that the conditional probability interpretation of modus ponens is more reasonable than the probabilistic logic interpretation in practical settings.

| Probabilistic Logic | Probabilistic Rule |
|---|---|
| , , . | , , . |
| ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5ba3cdd0-1f49-4e16-b183-0d04449ffda5/x4.png) | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5ba3cdd0-1f49-4e16-b183-0d04449ffda5/x5.png) |

From this example, we observe that both methods simply impose constraints on the feasible regions of the spaces defined by clauses (in the case of probabilistic logic) or p-rules (in the case of probabilistic rules). In this sense, reasoning with such combinations of probability and logic amounts to identifying the feasible regions determined by solutions to in . [Footnote 12: The constructions of differ between Nilsson’s probabilistic logic and this work. However, both are designed for solving the joint probability distribution over the CC set.]
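The feasible-region view can be made concrete on the modus ponens example. The sketch below is our own illustration with hypothetical numbers: over the four worlds of atoms a and b, it uses SciPy's `linprog` to find the smallest and largest values of P(b) compatible with (i) Nilsson's reading, P(a) = x and P(¬a ∨ b) = y, and (ii) the p-rule reading, P(a) = x and P(b | a) = c. Analytically, the first gives P(b) in [x + y - 1, y] and the second P(b) in [cx, cx + 1 - x].

```python
import numpy as np
from scipy.optimize import linprog

# Worlds over (a, b) in the order (T,T), (T,F), (F,T), (F,F).
P_B = np.array([1.0, 0.0, 1.0, 0.0])  # indicator of the worlds where b holds


def bounds_on_b(A_eq, b_eq):
    """Minimum and maximum of P(b) over all distributions with A_eq p = b_eq."""
    lo = linprog(P_B, A_eq=A_eq, b_eq=b_eq, bounds=(0, 1), method="highs")
    hi = linprog(-P_B, A_eq=A_eq, b_eq=b_eq, bounds=(0, 1), method="highs")
    return lo.fun, -hi.fun


def logic_interpretation(x, y):
    """P(a) = x and P(not a or b) = y (material implication)."""
    A = np.array([[1, 1, 0, 0], [1, 0, 1, 1], [1, 1, 1, 1]], dtype=float)
    return bounds_on_b(A, np.array([x, y, 1.0]))


def rule_interpretation(x, c):
    """P(a) = x and P(b | a) = c, encoded as P(a & b) - c * P(a) = 0."""
    A = np.array([[1, 1, 0, 0], [1 - c, -c, 0, 0], [1, 1, 1, 1]], dtype=float)
    return bounds_on_b(A, np.array([x, 0.0, 1.0]))
```

For example, with x = 0.8 and y = c = 0.9, the logic reading gives P(b) in [0.7, 0.9] and the rule reading gives P(b) in [0.72, 0.92].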

Hunter and Liu [34] make an interesting observation on representing scientific knowledge by combining probabilistic reasoning with logical reasoning. Quoting from [34]:

A key shortcoming of extending classical logic in order to handle probabilistic or statistical information, either by using a possible worlds approach or by adding a probability distribution to each model, is the computational complexity that it involves.

They suggest that one may circumvent the computation of the joint probability distribution by considering approaches such as Bayesian networks. We certainly agree that computational difficulty is a major challenge. On the other hand, as [26] has shown that PSAT is NP-complete, there is no shortcut (unless P = NP) that performs probabilistic reasoning “correctly” in general. Thus, any probabilistic reasoning approach that does not require the computation of the joint probability distribution imposes either probabilistic assumptions on the underlying model, such as independence (e.g., [44, 28]), or topological constraints, such as the conditional independence structure [14] of Bayesian networks. In this work, we choose not to make such assumptions and study computational approaches based on optimization techniques.

In the landscape of probabilistic argumentation, [55, 36, 37] give a detailed account of probabilistic abstract argumentation with the epistemic approach. They describe some “desirable” properties of probability semantics, which can be viewed as properties imposed on the joint probability distribution. As discussed in Section 1, the main difference between this work and existing ones is that we do not assume a given joint probability distribution. Yet, with our approach, we can still compute argument probabilities and thus compare against some of the properties they propose, as follows.

-

•

COH A probability distribution is coherent if for arguments and , if attacks , then .

As shown in Proposition 3.5, COH holds in PD frameworks in general.

- SFOU: $P$ is semi-founded if $P(A) \geq 0.5$ for every argument $A$ that is not attacked.

This does not hold in general PD frameworks, as one can use a p-rule to build an un-attacked argument $A$ with $P(A) < 0.5$. However, SFOU holds for AA-PD frameworks, as shown by Proposition 3.7.

- FOU: $P$ is founded if $P(A) = 1$ for every un-attacked argument $A$.

This is not true in general PD frameworks, but true for AA-PD frameworks, as shown by Proposition 3.7.

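- SOPT: $P$ is semi-optimistic if $P(A) \geq 1 - \sum_{B \in \mathrm{Att}(A)} P(B)$ for every argument $A$ with $\mathrm{Att}(A) \neq \emptyset$, where $\mathrm{Att}(A)$ is the set of arguments attacking $A$.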

- OPT: $P$ is optimistic if $P(A) \geq 1 - \sum_{B \in \mathrm{Att}(A)} P(B)$ for every argument $A$, where $\mathrm{Att}(A)$ is the set of arguments attacking $A$.

As in the previous case, this does not hold in general PD frameworks but holds for AA-PD frameworks by Proposition 3.11.

- JUS: $P$ is justifiable if $P$ is coherent and optimistic.

Since PD frameworks are not optimistic in general, they are not justifiable in general, whereas AA-PD frameworks are justifiable.

- TER: $P$ is ternary if $P(A) \in \{0, 0.5, 1\}$ for all arguments $A$.

This comparison is encouraging, as one can take these properties introduced by Hunter and Thimm as a benchmark for probabilistic argumentation semantics. Observing that AA-PD frameworks, a subclass of PD frameworks, conform to these properties helps us see the underlying connection between PD frameworks and existing work on probabilistic argumentation. At the same time, since general PD frameworks do not conform to the founded and optimistic properties, we observe the hierarchical structure shown in Figure 13.
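The properties listed above are simple to check mechanically for any given assignment of probabilities to arguments, for instance argument probabilities computed from a PD framework. The sketch below restates them as we read them from Hunter and Thimm, with $\mathrm{Att}(A)$ the attackers of $A$; the encoding and the helper names `attackers` and `check_properties` are hypothetical. Exact `Fraction` values avoid spurious failures of the equality tests in FOU and TER.

```python
from fractions import Fraction

# Hypothetical encoding: arguments are names, attacks are (attacker, target)
# pairs, and prob maps each argument to an exact Fraction.

def attackers(attacks, a):
    """Att(a): the arguments attacking a."""
    return {b for (b, target) in attacks if target == a}

def check_properties(args, attacks, prob):
    """Check Hunter and Thimm's properties for the argument probabilities prob."""
    half = Fraction(1, 2)
    unattacked = [a for a in args if not attackers(attacks, a)]

    def optimistic_at(a):
        # P(a) >= 1 - sum of P(b) over attackers b (the empty sum is 0)
        return prob[a] >= 1 - sum(prob[b] for b in attackers(attacks, a))

    coh = all(prob[a] <= 1 - prob[b] for (a, b) in attacks)  # a attacks b
    opt = all(optimistic_at(a) for a in args)
    return {
        "COH": coh,
        "SFOU": all(prob[a] >= half for a in unattacked),
        "FOU": all(prob[a] == 1 for a in unattacked),
        "SOPT": all(optimistic_at(a) for a in args if attackers(attacks, a)),
        "OPT": opt,
        "JUS": coh and opt,                                   # coherent and optimistic
        "TER": all(prob[a] in (0, half, 1) for a in args),
    }

# A attacks B, B attacks C, with the grounded-style assignment 1, 0, 1.
chain = check_properties(["A", "B", "C"], {("A", "B"), ("B", "C")},
                         {"A": Fraction(1), "B": Fraction(0), "C": Fraction(1)})
print(all(chain.values()))  # True

# A single un-attacked argument with probability 3/10.
print(check_properties(["A"], set(), {"A": Fraction(3, 10)}))
# {'COH': True, 'SFOU': False, 'FOU': False, 'SOPT': True, 'OPT': False, 'JUS': False, 'TER': False}
```

The second call shows the pattern discussed for general PD frameworks: an un-attacked argument with probability below 0.5 violates SFOU, FOU, OPT and hence JUS, while COH still holds.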

Syntactically, PD frameworks share some similarities with Probabilistic Assumption-based Argumentation (PABA) [18, 29, 12]. [20] has presented the differences between p-rules and PABA: PABA disallows rules forming cycles and requires that, if two rules have the same head, the body of one be a subset of the other, whereas p-rules, and hence the PD and AA-PD frameworks introduced in this work, have neither constraint. More fundamentally, PABA is a constellation approach to probabilistic argumentation [12], whereas PD is an epistemic approach.

5.2 Future Work

Moving forward, there are three main research directions we will explore. Firstly, as briefly explained in Section B.1, the current approaches for computing literal probabilities require either a Rule-PSAT solution (for reasoning with P-OWA) or a P-CWA consistent solution (for reasoning with P-CWA) for the joint probability distribution. However, this requirement renders "local" reasoning impossible, in the sense that one cannot deduce the probability of any literal from an inconsistent set of p-rules, even if the literal of interest is independent of the subset of p-rules that is inconsistent. (This is not much different from inconsistency in classical logic: in a classical knowledge base, having both a formula and its negation trivializes the knowledge base.) In the future, we would like to explore probability semantics for such inconsistent sets of p-rules as well as their computational counterparts.

Secondly, even though we have shown that solving Rule-PSAT with SGD is a promising direction when compared with other approaches such as LP and QP, we are aware that the number of unknowns grows exponentially with the size of the language. Thus, we would like to explore techniques that do not explicitly compute the unknowns defining the joint probability distribution, as suggested by, e.g., [34]. To this end, we will explore two directions: (1) inspired by the column generation method commonly used in optimization, we would like to see whether a similar technique can be developed for reasoning with p-rules; and (2) we will investigate whether equivalent "local" semantics exist, in addition to the "global" semantics given in this work, for literal probability computation, especially in cases where the given PD framework can be assumed to be P-CWA consistent and P-CWA can be assumed.
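The scaling issue is easy to see in a minimal sketch. Under an illustrative reading of p-rules as linearised conditional constraints, $P(h \wedge \text{body}) - \theta \, P(\text{body}) = 0$ (an assumption, not necessarily the paper's exact formulation), the code below keeps one unknown per possible world, so its cost grows as $2^n$ in the number of atoms, and fits the world probabilities by minimising the sum of squared constraint residuals. It uses plain full-batch gradient descent with a softmax parameterisation rather than the SGD set-up evaluated in this work; the functions, the learning rate and the step count are hypothetical choices, not the authors' implementation.

```python
import math
from itertools import product

# Hypothetical encoding: a p-rule (head, body, theta) means P(head | body) = theta.

def worlds(atoms):
    """All 2**n possible worlds: one unknown probability per world."""
    return [dict(zip(atoms, vals)) for vals in product([False, True], repeat=len(atoms))]

def rule_rows(atoms, p_rules):
    """Row r: c[r][w] = [w |= head and body] - theta * [w |= body]."""
    ws = worlds(atoms)
    rows = []
    for head, body, theta in p_rules:
        row = []
        for w in ws:
            sat_body = all(w[b] for b in body)
            row.append(float(sat_body and w[head]) - theta * float(sat_body))
        rows.append(row)
    return ws, rows

def softmax(z):
    m = max(z)                      # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]

def solve_rule_psat(atoms, p_rules, lr=1.0, steps=3000):
    """Minimise L(pi) = sum_r (sum_w c[r][w] * pi[w])**2 with pi = softmax(z)
    by full-batch gradient descent. Returns (worlds, pi, final loss)."""
    ws, rows = rule_rows(atoms, p_rules)
    z = [0.0] * len(ws)             # start from the uniform distribution
    for _ in range(steps):
        pi = softmax(z)
        s = [sum(c * p for c, p in zip(row, pi)) for row in rows]      # residuals
        g = [2 * sum(s_r * row[w] for s_r, row in zip(s, rows))       # dL/dpi_w
             for w in range(len(ws))]
        g_bar = sum(gw * pw for gw, pw in zip(g, pi))
        # Chain rule through softmax: dL/dz_k = pi_k * (g_k - g_bar)
        z = [zk - lr * pk * (gk - g_bar) for zk, pk, gk in zip(z, pi, g)]
    pi = softmax(z)
    loss = sum(sum(c * p for c, p in zip(row, pi)) ** 2 for row in rows)
    return ws, pi, loss

# Consistent rules: P(a) = 0.5 and P(b | a) = 0.8; the loss is driven towards 0.
ws, pi, loss = solve_rule_psat(["a", "b"], [("a", [], 0.5), ("b", ["a"], 0.8)])
p_a = sum(p for w, p in zip(ws, pi) if w["a"])
p_ab = sum(p for w, p in zip(ws, pi) if w["a"] and w["b"])
print(loss, p_a, p_ab / p_a)

# Inconsistent rules: P(a) = 0.5 and P(a) = 0.9 cannot both hold, so the
# loss cannot drop below 0.08 (the minimum, attained at P(a) = 0.7).
_, _, bad_loss = solve_rule_psat(["a"], [("a", [], 0.5), ("a", [], 0.9)])
print(bad_loss)
```

For an inconsistent set of p-rules the residual loss stays bounded away from zero, which is the situation described in the first direction above: no joint distribution satisfies all the rules, so no literal probability can be read off from it.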

Lastly, we would like to explore applications of PD. As PD is a generic structured probabilistic argumentation framework, we believe its practical limits can best be understood by experimenting with applications from different domains. Just as ABA has seen applications in areas such as decision making and planning, we believe PD, with its ability to handle probabilistic information, could be suitable for solving problems in such domains. We would like to explore these potentials in the future.

References

- [1] P. Baroni, M. Caminada, and M. Giacomin. An introduction to argumentation semantics. Knowl. Eng. Rev., 26(4):365–410, 2011.

- [2] P. Baroni, D. Gabbay, M. Giacomin, and L. Van der Torre. Handbook of formal argumentation. College Publications, 2018.

- [3] P. Besnard, A. Garcia, A. Hunter, S. Modgil, H. Prakken, G. Simari, and F. Toni. Special issue: Tutorials on structured argumentation. Argument & Computation, 5(1), 2014.

- [4] G.S.G. Beveridge, S.G. Beveridge, and R.S. Schechter. Optimization: Theory and Practice. Chemical Engineering Series. McGraw-Hill, 1970.

- [5] G. Bongiovanni, G. Postema, A. Rotolo, G. Sartor, C. Valentini, and D. Walton. Handbook of Legal Reasoning and Argumentation. Springer Netherlands, 2018.

- [6] F. Buscemi, P. Bordone, and A. Bertoni. Linear entropy as an entanglement measure in two-fermion systems. Physical Review A, 75(3), March 2007.

- [7] M. Caminada. Argumentation semantics as formal discussion. FLAP, 4(8), 2017.

- [8] M. Caminada and D. Gabbay. A Logical Account of Formal Argumentation. Studia Logica, 93(2):109–145, December 2009.

- [9] R. Craven, F. Toni, C. Cadar, A. Hadad, and M. Williams. Efficient argumentation for medical decision-making. In Proc. of AAAI. AAAI Press, 2012.

- [10] K. Čyras, B. Delaney, D. Prociuk, F. Toni, M. Chapman, J. Domínguez, and V. Curcin. Argumentation for explainable reasoning with conflicting medical recommendations. In Proc. of MedRACER 2018, pages 14–22. CEUR-WS.org, 2018.

- [11] K. Čyras, X. Fan, C. Schulz, and F. Toni. Assumption-based argumentation: Disputes, explanations, preferences. IfCoLog JLTA, 4(8), 2017.

- [12] K. Čyras, Q. Heinrich, and F. Toni. Computational complexity of flat and generic assumption-based argumentation, with and without probabilities. Artificial Intelligence, 293:103449, 2021.

- [13] K. Čyras, A. Rago, E. Albini, P. Baroni, and F. Toni. Argumentative XAI: A survey. In Proc. of IJCAI, pages 4392–4399. ijcai.org, 2021.

- [14] A. P. Dawid. Conditional independence in statistical theory. Journal of the Royal Statistical Society: Series B (Methodological), 41(1):1–15, 1979.

- [15] D. Doder and S. Woltran. Probabilistic argumentation frameworks - A logical approach. In Proc. of SUM, pages 134–147. Springer, 2014.

- [16] P. Dondio. Multi-valued and probabilistic argumentation frameworks. In Proc. of COMMA, volume 266, pages 253–260. IOS Press, 2014.

- [17] P. M. Dung. On the acceptability of arguments and its fundamental role in nonmonotonic reasoning, logic programming and n-person games. Artificial Intelligence, 77(2):321–357, 1995.

- [18] P.M. Dung and P.M. Thang. Towards (probabilistic) argumentation for jury-based dispute resolution. In Proc. of COMMA, pages 171–182. IOS Press, 2010.

- [19] F. H. Van Eemeren and B. Verheij. Argumentation theory in formal and computational perspective. FLAP, 4(8), 2017.

- [20] X. Fan. Rule-PSAT: Relaxing rule constraints in probabilistic assumption-based argumentation. In Proc. of COMMA, 2022.

- [21] X. Fan, R. Craven, R. Singer, F. Toni, and M. Williams. Assumption-based argumentation for decision-making with preferences: A medical case study. In Proc. of CLIMA, pages 374–390, 2013.

- [22] B. Fazzinga, S. Flesca, and F. Parisi. On efficiently estimating the probability of extensions in abstract argumentation frameworks. Int. J. Approx. Reason., 69:106–132, 2016.

- [23] B. Fazzinga, S. Flesca, F. Parisi, and A. Pietramala. Computing or estimating extensions’ probabilities over structured probabilistic argumentation frameworks. FLAP, 3(2):177–200, 2016.

- [24] J. Fox, D. Glasspool, D. Grecu, S. Modgil, M. South, and V. Patkar. Argumentation-based inference and decision making–a medical perspective. IEEE Intelligent Systems, 22(6):34–41, 2007.

- [25] J. Gainsburg, J. Fox, and L. M. Solan. Argumentation and decision making in professional practice. Theory Into Practice, 55(4):332–341, 2016.

- [26] G. F. Georgakopoulos, D. J. Kavvadias, and C. H. Papadimitriou. Probabilistic satisfiability. Journal of Complexity, 4(1):1–11, 1988.

- [27] P. Hansen and B. Jaumard. Algorithms for the maximum satisfiability problem. Computing, 44(4):279–303, 1990.

- [28] T. C. Henderson, R. Simmons, B. Serbinowski, M. Cline, D. Sacharny, X. Fan, and A. Mitiche. Probabilistic sentence satisfiability: An approach to PSAT. Artificial Intelligence, 278, 2020.

- [29] N. D. Hung. Inference procedures and engine for probabilistic argumentation. International Journal of Approximate Reasoning, 90:163–191, 2017.

- [30] A. Hunter. Some foundations for probabilistic abstract argumentation. In Proc. of COMMA, volume 245, pages 117–128. IOS Press, 2012.

- [31] A. Hunter. A probabilistic approach to modelling uncertain logical arguments. International Journal of Approximate Reasoning, 2013.

- [32] A. Hunter. Reasoning with inconsistent knowledge using the epistemic approach to probabilistic argumentation. In Proc. of KR, pages 496–505, 2020.

- [33] A. Hunter. Argument strength in probabilistic argumentation based on defeasible rules. Int. J. Approx. Reason., 146:79–105, 2022.

- [34] A. Hunter and W. Liu. A survey of formalisms for representing and reasoning with scientific knowledge. Knowl. Eng. Rev., 25(2):199–222, 2010.

- [35] A. Hunter, S. Polberg, and M. Thimm. Epistemic graphs for representing and reasoning with positive and negative influences of arguments. Artificial Intelligence, 281:103236, 2020.

- [36] A. Hunter and M. Thimm. Probabilistic argumentation with incomplete information. In Proc. of ECAI, pages 1033–1034. IOS Press, 2014.

- [37] A. Hunter and M. Thimm. Probabilistic reasoning with abstract argumentation frameworks. J. Artif. Intell. Res., 59:565–611, 2017.

- [38] E. T. Jaynes. Information theory and statistical mechanics. Phys. Rev., 106:620–630, May 1957.

- [39] E.T. Jaynes and G.L. Bretthorst. Probability Theory: The Logic of Science. Cambridge University Press, 2003.

- [40] N. Käfer, C. Baier, M. Diller, C. Dubslaff, S. Alice Gaggl, and H. Hermanns. Admissibility in probabilistic argumentation. J. Artif. Intell. Res., 74, 2022.

- [41] N. Kökciyan, I. Sassoon, E. Sklar, S. Modgil, and S. Parsons. Applying metalevel argumentation frameworks to support medical decision making. IEEE Intelligent Systems, 36(2):64–71, 2021.

- [42] N. Labrie and P. J. Schulz. Does argumentation matter? A systematic literature review on the role of argumentation in doctor–patient communication. Health Communication, 29(10):996–1008, 2014.

- [43] H. Li, N. Oren, and T. Norman. Probabilistic argumentation frameworks. In Proc. of TAFA, 2011.

- [44] J. Ma, W. Liu, and A. Hunter. Inducing probability distributions from knowledge bases with (in)dependence relations. In Proc. of AAAI. AAAI Press, 2010.

- [45] T. M. Mitchell. Machine Learning. McGraw-Hill, Inc., New York, NY, USA, 1 edition, 1997.

- [46] N. Nilsson. Probabilistic logic revisited. Artificial Intelligence, 59(1-2):39–42, 1993.

- [47] N. J. Nilsson. Probabilistic logic. Artificial Intelligence, 28(1):71–87, 1986.

- [48] J. B. Paris. The uncertain reasoner’s companion: a mathematical perspective. Cambridge University Press, 1994.

- [49] S. Polberg and D. Doder. Probabilistic abstract dialectical frameworks. In Eduardo Fermé and João Leite, editors, Proc. of JELIA, volume 8761, pages 591–599. Springer, 2014.

- [50] R. Reiter. On closed world data bases. In Logic and Data Bases, Symposium on Logic and Data Bases, Centre d'études et de recherches de Toulouse, France, 1977, pages 55–76, New York, 1977. Plenum Press.