Progressive Graph Convolutional Networks

for Semi-Supervised Node Classification

Abstract

Graph convolutional networks have been successful in addressing graph-based tasks such as semi-supervised node classification. Existing methods use a network structure defined by the user based on experimentation with fixed number of layers and neurons per layer and employ a layer-wise propagation rule to obtain the node embeddings. Designing an automatic process to define a problem-dependant architecture for graph convolutional networks can greatly help to reduce the need for manual design of the structure of the model in the training process. In this paper, we propose a method to automatically build compact and task-specific graph convolutional networks. Experimental results on widely used publicly available datasets show that the proposed method outperforms related methods based on convolutional graph networks in terms of classification performance and network compactness.

Index Terms:

Graph-based Learning, Graph Convolutional Networks, Progressive Learning, Semi-Supervised LearningI Introduction

Convolutional Neural Networks (CNNs) [1], as an end-to-end deep learning paradigm, have been very successful in many machine learning and computer vision tasks [2, 3, 4]. While CNNs have high ability to extract latent representations and local meaningful statistical patterns from data, they can only operate on Euclidean data structures such as audio, images and videos which have a form based on D, D and D regular grids, respectively. Recently, there has been an increasing research interest in applying deep learning approaches on non-euclidean data structures, like graphs, which lack geometrical properties, but have high ability to model complex irregular data such as social networks [5, 6] citation networks and knowledge graphs [7].

Motivated by CNNs, the notion of convolution was generalized from grid data to graph structures, which correspond to locally connected data items, by aggregating the node’s neighbors’ features with its own features [8]. Combining such convolutional operator with a data transformation process and hierarchical structures Graph Convolutional Networks (GCNs) are obtained, which can be trained in an end-to-end fashion to optimize an objective defined on individual graph nodes, or the graph as a whole. As an example, the GCN method proposed in [6] is a semi-supervised node classification method consisting of two convolutional layers. Each graph convolutional layer learns nodes’ features by applying aggregation rule on their corresponding first-order neighbors. The graph structure can also be learned dynamically [9]. GCNs, have been successful in various graph mining tasks like node classification, [10, 11], graph classification [12], link prediction [13], and visual data analysis [14].

One of the main drawbacks of existing GCN-based methods is that they require an extensive hyper-parameter search process to determine a good topology of the network. This process is commonly based on extensive experimentation involving the training of multiple GCN topologies and monitoring their performance on a hold-out set. To avoid such a computationally demanding process, a common practice is to empirically define a network topology and use it for all examined problems (data sets). As an example, a two layer GCN model cannot gain sufficient global information by aggregating the information from just a two-hop neighborhood for each node. Meanwhile, in the reported results of [6] it has been shown that adding more layers makes the training process harder and does not necessarily improve the classification performance. It was also shown that the topology of the GCN plays a crucial role in performance which is related to the underlying difficulty of the problem to be solved [15].

Problem-specific design of the neural networks’ architecture contributes in improving the performance and the efficiency of a learning system. Recently, methods of finding an optimized network topology have been receiving much attention and many works were proposed to define compact network topologies by employing various learning strategies, such as compressing pre-trained networks, adding neurons progressively to the network, pruning the network’s weights and applying weight quantization [16, 17, 18, 19]. However, all these methods work with grid data in Euclidean spaces. In this regard, learning a compact topology for GCNs makes a step towards increasing the training efficiency and reducing the computational complexity and storage needed while achieving comparable performance with existing methods.

In this paper, we propose a method to jointly define a problem-specific GCN topology and optimize its parameters, by progressively growing the network’s structure both in width and depth. The contributions of our work are:

-

•

We propose a method to learn an optimized and problem-specific GCN topology progressively without user intervention. The resulting networks are compact in terms of number of parameters, while performing on par, or even better, compared to other recent GCN models.

-

•

We provide a convergence analysis for the proposed approach, showing that the progressively building GCN topology is guaranteed to converge to a (local) minimum.

-

•

We conduct experiments on widely-used graph datasets and compare the proposed method with recently proposed GCN models to demonstrate both the efficiency and competitive performance of the proposed method. Our experiments include an analysis of the effect of the network’s complexity with respect to the underlying complexity of the classification problem, highlighting the importance of network’s topology optimization.

The rest of the paper is organized as follows: Section II provides a description of graph-based semi-supervised classification, along with the terminology used in this paper. Section III reviews the GCN method [6] as the baseline method of our work. The proposed method is described in detail in section IV. The conducted experiments are described in section V, and conclusions are drawn in Section VI.

II Semi-supervised graph-based classification

Let be an undirected graph where and denote the set of nodes and the set of edges , respectively. denotes the adjacency matrix of encoding the node connections. The elements can be either binary values, indicating presence or absence of an edge between nodes and , or real values encoding the similarity between and , based on a similarity measure. Using , we define the degree matrix which is a diagonal matrix with elements equal to . Each node of the graph is also equipped with a representation , which is used to form the feature vector matrix . When such a feature vector for each graph node is not readily available for the problem at hand (e.g. in the case of processing citation graphs), vector-based node representations are learned by using some node embedding method, like [20, 21].

Traditional graph-based semi-supervised node classification methods [22, 23, 24], learn a mapping from the nodes’ feature vectors to labels, which for a -class classification problem are usually represented by -dimensional vectors following the -of- encoding scheme. This mapping exploits a graph-based regularization term and it commonly has the form:

| (1) |

where denotes the learnable function mapping the -dimensional node representations to the -dimensional class vectors, is a matrix formed by the class label vectors of the labelled nodes which form the matrix , and is the unnormalized graph Laplacian matrix. The first term in (1) is the classification loss of the trained model measured on the labelled graph nodes, and the second term corresponds to graph Laplacian-based regularization incorporating a smoothness prior to the optimization function . expresses the relative importance of the two terms. By following this approach the labels’ information of the labelled graph nodes is propagated over the entire graph.

III Graph Convolutional Networks

GCNs are mainly categorized into spatial-based and spectral-based methods. The spatial-based methods update the features of each node by aggregating its spatially close neighbors’ feature vectors. In these methods, the convolution operation is defined on the graph with a specified neighborhood size which propagates the information locally [10, 25, 26, 5].

The spectral-based GCN methods follow a graph signal processing approach [6]. Let us denote by a filter and by a multi-dimensional signal defined over the nodes of the graph. The signal transformation using is given by:

| (2) |

where denotes the convolution operator, is the matrix of eigenvectors of the normalized graph Laplacian with being a diagonal matrix having as elements the corresponding eigenvalues, and being the graph Fourier transform of . Since computing the eigen-decomposition of is computationally expensive, low-rank approximations using truncated Chebyshev polynomials have been proposed [27]. The transformation in (2) corresponds to the building block of a GCN. Followed by an element-wise activation it forms a GCN layer.

The multilayer GCN for semi-supervised node classification was proposed in [6] by stacking multiple GCN layers. To achieve a fast and scalable operation a first-order approximation of the spectral graph convolution is proposed leading to:

| (3) |

where and . Let us denote by the graph node representations at layer of the multi-layer GCN. The propagation rule for calculating the graph node representations at layer is given by:

| (4) |

where is the layer weight matrix and denotes the activation function, such as , or used for the output layer. For a two layer GCN model this leads to:

| (5) |

where and denotes the predicted feature vectors for all the graph nodes in classes. The model parameters () are finetuned by minimizing the cross entropy loss over the labeled nodes.

One of the drawbacks of all existing GCN methods is that they use a predefined network topology, which is selected either based on the user’s experience, or empirically by testing multiple network topologies. In the next section, we describe a method for automatically determining a problem-specific compact GCN topology based on a data-driven approach.

IV Progressive Graph Convolutional Network

PGCN follows a data-driven approach to learn a problem-dependant compact network topology, in terms of both depth and width, by progressively building the network’s topology based on a process guided by its performance. That is, the learning process of GCN jointly determines the network’s topology and estimates its parameters to optimize the cost function defined at the output of the network.

The learning process starts with a single hidden layer formed by one block of neurons equipped with an activation function (e.g. ) and an output layer with neurons. At iteration , the synaptic weight matrix connecting the input layer to the hidden-layer neurons is initialized randomly. The graph nodes’ representations defined at the outputs of the hidden layer , where the index indicates that the one block of hidden-layer neurons is used, are obtained by using graph convolution:

| (6) |

By setting , the network’s output for all graph nodes is calculated using a linear transformation as follows:

| (7) |

where denotes the weight matrix connecting hidden layer to the output layer. The transformation matrix can be calculated by minimizing the regression problem:

| (8) |

where denotes the trace operator, denotes the relative importance of model loss, denotes the hidden layer representations of the labeled graph nodes and is a matrix formed by the labeled nodes’ target vectors.

To exploit the information in both the labeled and unlabeled nodes’ feature vectors in , we can replace the linear regression problem in (8) by a semi-supervised regression problem exploiting the smoothness assumption of semi-supervised learning [28, 24] expressed by the term:

| (9) |

Minimization of (9) with respect to leads to node feature vectors at the output of the network which are similar for nodes connected in the graph. To incorporate this property in the optimization problem of (8) the term in (9) is added as a regularizer. Thus, is obtained by minimizing:

| (10) | |||

where is the number of all (labeled and unlabeled) graph nodes and denotes the relative importance of Laplacian regularization and is set to which is the natural scale factor to estimate the Laplacian operator empirically [22].

The optimal solution of (10) is obtained by setting , and is given by:

| (11) |

where and are identity matrices. denotes the feature dimension of the first hidden layer which is equal to in the first iteration.

After the initialization of both the hidden layer and output layer weights, these are finetuned based on Backpropagation using the loss of the model on the labeled graph nodes. Finally, the model’s performance (classification accuracy on the labeled nodes) is recorded.

At iteration , the network’s topology grows by adding a second block of hidden layer neurons. We initialize the weights connecting the input layer to the first block of hidden layer neurons with finetuned values obtained at iteration , while the weight matrix of the newly added block is initialized randomly. The hidden layer representations corresponding to the newly added neurons are calculated as in (6) by replacing with . Then, we combine and the output weight matrix is calculated by using (11). At this iteration, and , are of size and , respectively.

After finetuning all the parameters , , , and recording the model’s performance, the network’s progression is evaluated based on the rate of performance improvement given by:

| (12) |

where and denote the model’s performance before and after adding the second block of hidden layer neurons. If the addition of the second GCN block does not improve the model’s performance, i.e. when , the newly added block is removed, and all the model parameters are set to the finetuned values which were obtained at previous iteration. At this point, the progression in the current hidden layer terminates.

After stopping the progressive learning in the first hidden layer, a new hidden layer is formed which takes as input the previous hidden layers’ output. The block-based progression of the newly added hidden layer starts by using a single block of neurons and repeats in the same way as for the first hidden layer until model’s performance converges.

Let us assume that at iteration , the network’s topology comprises of layers (the input layer corresponds to ) and it is growing at the layer giving as outputs . Before adding the block formed by neurons, the weights of all the existing blocks in the network are set to the finetuned values obtained in the previous step. The newly added block in the hidden layer takes the output of the previous hidden layer as input, and the graph convolutional operation for the block is given by:

| (13) |

where and denote the hidden representation of the newly added block and the randomly initialized synaptic weights connecting the hidden layer to block of layer, respectively. Given which denotes the hidden representations formed by using both the existing blocks and the newly added block in the hidden layer, the models’ output is calculated using a linear transformation as follows:

| (14) |

where denotes the transformation matrix which is calculated based on semi-supervised linear regression:

| (15) |

Similar to other GCN-based methods, the linear transformation for calculating the models’ output in (14) (and (7), respectively) can also be followed by softmax activation (on a node basis) as follows:

| (16) |

and instead of Mean Squared Error (MSE), the Cross-Entropy (CE) loss function can be employed for finetuning the model.

After the initialization step, the synaptic weights of the existing blocks and all the weight parameters of the newly added block are finetuned with respect to the labeled data using (8). The model diagram is shown in Fig. 1 which indicates the progressive learning process in layer where a new block is added and after initializing its weights (dashed lines), all the model parameters are finetuned together. Fig. 2 indicates the graph convolution process which is applied on graph node by a block of the neurons. This convolution process gets as input the hidden representation matrix of nodes at layer , , and outputs the representation matrix at layer .

To evaluate the network’s progression, the model’s performance is recorded and the rate of the improvement is given by:

| (17) |

where and denote the classification accuracy before and after adding the block, respectively. If the addition of a new GCN block in step does not improve the model’s performance, i.e. when , the progression of the layer terminates and all the network parameters are set to the finetuned values obtained in the previous step with blocks. After stopping the block progression for the layer, the algorithm evaluates whether the network’s performance converged using the rate of the performance before and after adding the new hidden layer:

| (18) |

When , the last hidden layer is removed and the algorithm stops growing the network’s topology. Here we should note that it is also possible to use other performance metrics, such as model loss, to evaluate the network progression process in (17) and (18).

Algorithm 1 summarizes the PGCN algorithm. In A, we show that the proposed approach of building GCN layers in a progressive manner converges.

V Experiments

V-A Datasets

We evaluated the proposed method for semi-supervised node classification task following transductive setting, on three widely-used benchmark datasets, Citeseer, Cora and Pubmed [29], which are standard citation networks.

The citation networks represent published documents as nodes and the citation links between them as undirected edges. Each node in a citation network is represented by a sparse binary Bag-of-Words (BoW) feature vector extracted from articles’ abstract and a class label which represents the articles’ subject. The symmetric binary adjacency matrix is built using the list of undirected edges between the nodes and the task to be solved is the prediction of the articles’ subject based on the BoW features and their citations to other articles.

To perform a fair comparison, we follow the same experimental setup as in [30, 6] for data configuration and preprocessing. The detailed datasets statistics are summerized in Table. I. For training the model, 20 labeled nodes per class are used for each citation network. In Table. I, label rate denotes the number of training labeled nodes divided by the total number of nodes for each dataset. In all datasets, the validation set contains 500 randomly selected samples and the trained model is evaluated on 1000 test nodes. The labels of validation set are not used for training the model. Following the transductive learning setup, only the labeled nodes of the training set but all the feature vectors are used for training. The feature vectors are row-normalized.

| Dataset | Citeseer | Cora | Pubmed | |

|---|---|---|---|---|

| Type | Citation | Citation | Citation | |

| Nodes | 3327 | 2708 | 19717 | |

| Edges | 4732 | 5429 | 44338 | |

| Classes | 6 | 7 | 3 | |

| Features | 3703 | 1433 | 500 | |

| Label rate | 0.036 | 0.052 | 0.003 |

V-B Competing Methods

We compared the proposed method with the baseline GCN [6] and related methods N-GCN [31], GAT [32], GraphNAS [33] and Auto-GNN [34]. N-GCN [31] trains a network of GCNs over the neighboring nodes discovered at different distances in random walk. Each GCN module uses a different power of adjacancy matrix like , where indicates the statistics collected from the step of a random walk on the graph. It combines the information from different graph scales by using the weighted sum or the concatenation of all the GCNs’ outputs into a final classification layer and finetunes the entire model for semi-supervised node classification. The graph attention network (GAT) [32] introduces attention mechanism to GNNs. It assigns different weights to different neighboring nodes implicitly by employing self-attention through an end-to-end neural network architecture. The attention coefficients are shared across all graph edges, so there is no need to capture global graph structure.

GraphNAS [33] and Auto-GNN [34] are the most related works to ours which employ neural architecture search (NAS) mechanism to find the best graph neural network architecture automatically. GraphNAS utilizes a recurrent neural network (RNN) as a controller which generates the descriptions of different GNN architectures. The RNN is trained with reinforcement learning which gives feedback, like reward or penalty, to the controller in order to maximize the expected accuracy of the generated GNN architecture. Auto-GNN [34] is also a NAS-based method which designed a novel parameter sharing method for GNN homogeneous architectures and uses an efficient RNN controller to capture the variations of data representation in GNN which are produced by small modifications made in GNN architecture during the search process.

V-C Experimental settings

We implemented our method in tensorflow [35] and trained it using Adam optimizer [36] for epochs with learning rate of . The network weight parameters are initialized randomly using uniform distribution. To handle the effect of randomness on network performance, we ran it times on each dataset. For each dataset, the set of hyper-parameters which leads to best validation performance is selected and the corresponding performance on test set and architectural information are reported. To avoid overfitting, dropout [37] and regularization techniques are employed. The regularization factor is set to . The dropout rate is selected from and we apply dropout on the output of hidden layers, not on input features. The regularizer is selected from and the size of the block is selected from . The maximum topology of the network is limited to layers with neurons per layer and the threshold values are set to .

The baseline and competing methods also optimized the hyper-parameters on the same data splits. The test performance of these methods are reported directly from their papers. GCN method optimized the hyper-parameters on Cora dataset and used the same set of hyper-parameters for Citeseer and Pubmed datasets too. The two layer GCN is trained for 200 epochs using Adam optimizer with a learning rate of and early stoping of step size . GCN with dropout rate of , hidden layer of neurons and regularization of is applied on citation datasets.

N-GCN uses Adam optimizer with learning rate of for epochs and regularization factor is set to . This method uses a fixed architecture for all datasets. It has blocks of GCN which use different powers of : , , , . Each GCN, as a two layer network with hidden neurons, is replicated three times and the weighted sum of the outputs is introduced to the final classification layer. Similar to our experimental settings, N-GCN is run using 20 different random initializations and the test performance of the model with the highest accuracy on the validation set is reported.

In GAT method, the hyper-parameters are optimized on Cora dataset and then reused for Citeseer dataset. The regularization factor and the dropout rate are set to , respectively for Citeseer and Cora datasets. For pubmed dataset the regularization factor is set to . The dropout is applied on both input feature vectors and hidden layers output. GAT uses a two layer network architecture. The first layer consists of attention heads, each computing features, followed by ELU [38] activation function and the second layer is a single attention head computing features followed by softmax activation function. The reported results for GAT method is the average of the classification accuracy on the test set after 100 runs.

The NAS-based methods, GraphNAS and Auto-GNN, train a one-layer LSTM with 100 neurons as a controller using Adam optimizer with a learning rate of . The models which are sampled by the controller are trained for 200 epochs with the same hyper-parameter setting used in N-GCN. These methods explore only 2-layer GCN models in their predefined search space which leads to a constrained representation learning capacity for model. Both methods totally explore different architectures to find the optimal graph neural architecture which achieves the best classification accuracy on the validation set. The reported results for GraphNAS method is the average performance of the top architectures on test set and the Auto-GNN method randomly initializes the best found architecture times and reports the average classification accuracy on test set. The Auto-GNN method is able to train the models with parameter sharing by transferring the finetuned weights, to a newly sampled architecture while the GraphNAS doesn’t employ parameter sharing mechanism and it has a high computational and time complexity for training all 1000 different models from scratch. The results of Auto-GNN method both with and without using parameter sharing mechanism are reported in Table. II.

V-D Results

Table. II shows the performance in terms of classification accuracy for all the methods on the datasets. The best performance is shown in bold fonts for each dataset. We evaluate our method with both Cross Entropy (CE) and Mean Squared Error (MSE) loss functions. The obtained results in Table. (II) indicate that the proposed method has outperformed the baseline GCN method and other competing methods on Citeseer and Pubmed datasets and it has competitive performance with competing methods on Cora dataset. In order to compare the efficiency of our method with competing methods, especially the NAS-based methods, the model sizes, i.e., the number of model parameters, of all trained models are reported in Table. III. Table. IV also shows the model architectures which are learned by our proposed method and the fixed model architectures used by the baseline method GCN. The results indicate that the network topologies which are learned by the proposed method on all datasets are much more compact compared to the fixed network topologies used by baseline methods, while the classification performance of our compact model is similar or better than others. Although the NAS-based methods achieve competitive performance, exploring different architectures even by using a parameter sharing mechanism leads to an extremely high computation and time cost. To make their application tractable, their practical implementations restrict the search space by fixing the number of hidden layers to . Thus, there is no guarantee that they can reveal an optimal topology of the network for achieving a good classification performance on large and complex graph datasets. Contrary to that, the proposed method can efficiently determine the network’s topology by determining both the number of layers and neurons per layer.

| Method | Citeseer | Cora | Pubmed |

|---|---|---|---|

| GCN [6] | |||

| N-GCN [31] | |||

| GAT [32] | |||

| GraphNAS [33] | |||

| Auto-GNN [34] | |||

| PGCN (CE) | 74.3 | 80.2 | |

| PGCN (MSE) |

| Method | Citeseer | Cora | Pubmed | |

| GCN | 59.4k | 23.1k | 8.1k | |

| N-GCN | 445.4k | 173k | 60.5k | |

| GAT | 237.5k | 92.3k | 32.3k | |

| GraphNAS [33] | 230k | 188k | 30k | |

| Auto-GNN [34] | 710k | 50k | 70k | |

| PGCN (CE) | 56k | 14.7k | 5.04k | |

| PGCN (MSE) | 74.3k | 22.1k | 7.5k |

| Method | GCN | PGCN (CE) | PGCN (MSE) |

|---|---|---|---|

| Citeseer | [D, h1: 16, 6] | [D, h1: 15, h2: 20, 6] | [D, h1: 20, 6] |

| Cora | [D, h1: 16, 7] | [D, h1: 10, h2: 20, 7] | [D, h1: 15, h2: 25, 7] |

| Pubmed | [D, h1: 16, 3] | [D, h1: 10, 3] | [D, h1: 15, 3] |

| denotes the dimensionality of the input data |

| hX denotes the hidden layer. |

Fig. 3 illustrates the t-SNE visualization of learned feature vectors of the Cora dataset from last layer of network before applying the softmax activation.

V-E Analysis on Dataset Statistics

The results of the previous section indicate that all the competing methods perform on par with the baseline GCN which has a simple network architecture. This can be explained by the benchmark dataset statistics. The dataset complexity is defined by the number of labeled nodes and the dimentionality of nodes’ feature vectors , and the ratio is extremely small for all the benchmark datasets of Table. I. It has been recently shown that even the heavily regularized linear methods can obtain high performance on classification problems on datasets with low complexity [39, 40, 41]. Therefore, all the GCN-based methods, with simple or sophisticated network structures, can lead to comparable performance on these widely used benchmark datasets. In [15], it has been experimentally shown that the performance of the GCN-based methods heavily depends on the underlying difficulty of the problem and non-linear models with more complex structure perform significantly better on datasets with higher ratio. That is, it is expected that the difference in performance of various methods will increase when the underlying semi-supervised classification problem becomes more complex.

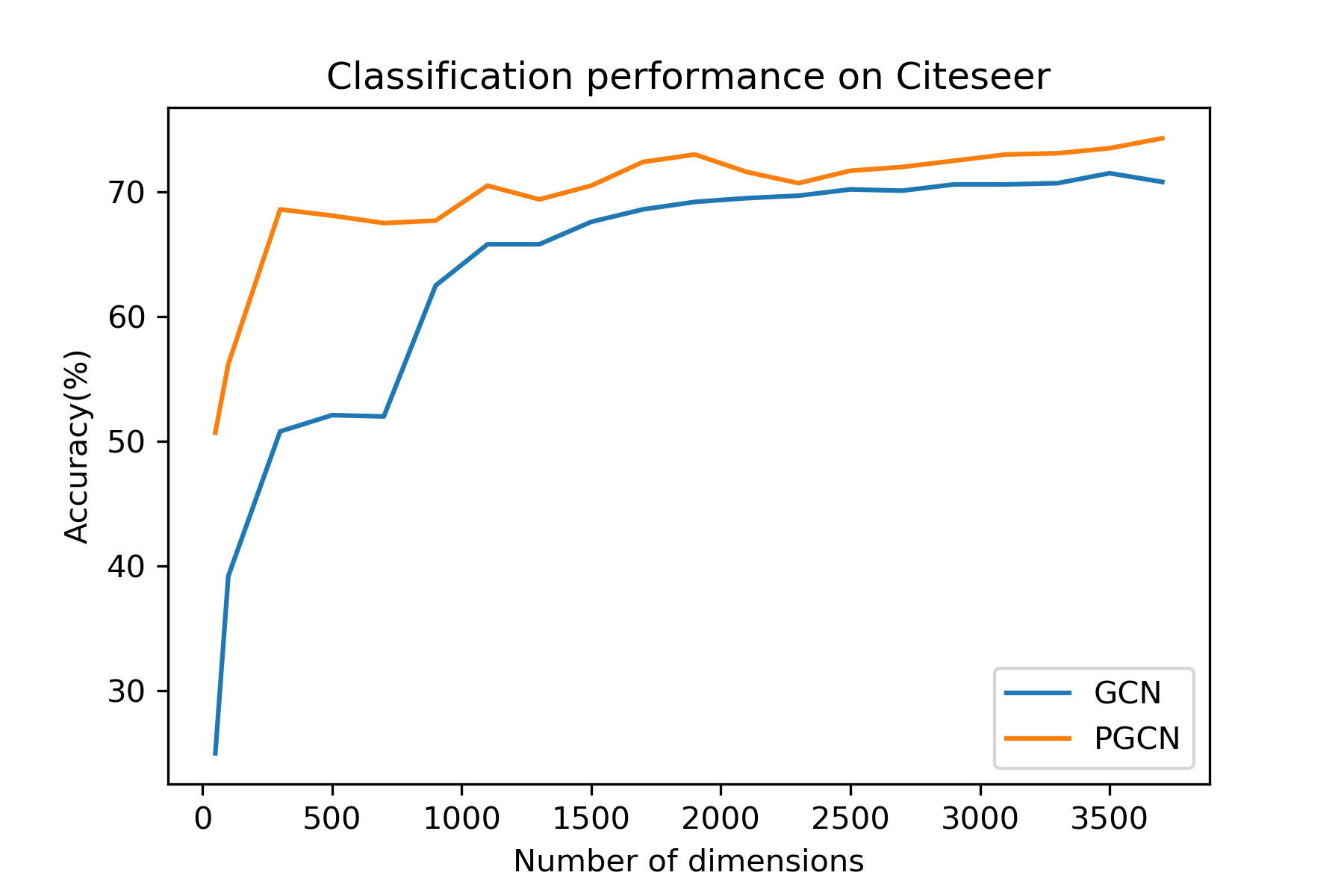

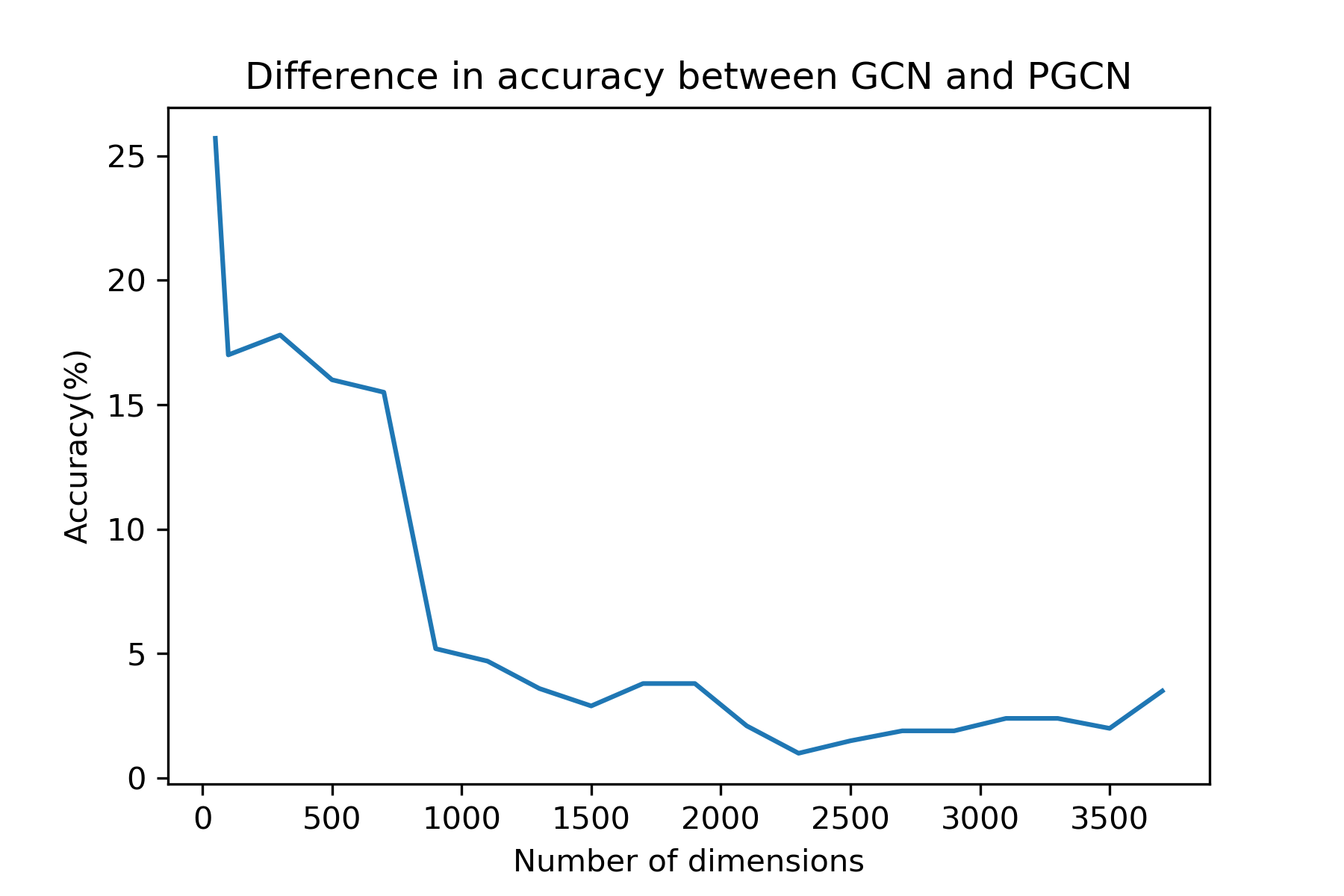

Here we highlight the importance of optimizing the network structure based on the problem’s complexity. We compare the performance of the proposed method with the baseline method GCN [6], by tuning the ratio using different input data dimensionalities when of nodes are labeled. We use the same data splits for both methods and follow the same approach as in [15] to control the ratio by mapping the input data representations to a subspace through random projections. Specifically, we use a random sketching matrix with , which is drawn from a Normal distribution, to obtain new data representations as follows:

| (19) |

To avoid bias of the performance values obtained for different values of , we first randomly sample a square matrix and subsequently we use its first columns to map the input data from to its subspace . Such an approach guarantees that when a subspace of a higher dimensionality is used, it corresponds to an augmented version of the initial (lower-dimensional) subspace. We applied three experiments for each choice of on each dataset and we report the performance on the test data corresponding to the best validation performance.

Fig. 4 compares the classification performance of GCN and PGCN methods on all datasets when problems of different difficulty are considered. It can be observed that for all data dimensionalities, our method performs better than the baseline method, while they have a larger difference in classification accuracy in lower dimensionalities, i.e. when the ratio is higher. This is in line with the findings of [15] indicating that in high-dimensional feature spaces neural network structures tend to perform in similar manners irrespectively to their complexity. On the other hand, when the classification problem is encoded in lower-dimensional feature spaces and, thus, becomes more complex, the structure of the neural network’s topology is important. Indeed, as can be observed in Fig. 4b, PGCN outperforms GCN with a high margin when the dimensionality of the node representations is low. The comparison of model complexity with respect to number of trainable parameters in Fig. 4c shows that both methods have similar complexity when lower-dimensional feature spaces are used on Citeseer and Cora datasets, while PGCN outperforms GCN in terms of classification perfromance. For Pubmed dataset, PGCN outperforms GCN in terms of both model complexity and classification performance in all cases.

VI Conclusion

In this paper, we proposed a method for progressively training graph convolutional networks for semi-supervised node classification which jointly defines a problem-dependant compact topology and optimizes its parameters. The proposed method employs a learning process which utilizes the input to each layer data to grow the network’s structure both in terms of depth and width. This is achieved by operating an efficient layer-wise propagation rule leading to a self-organized network structure exploiting data relationships expressed by their vector representations and the adjacency matrix of the corresponding graph structure. Experimental results on three commonly used datasets for evaluating graph convolutional networks on semi-supervised classification indicate that the proposed method outperforms the baseline method GCN and performs on par, or even better, compared to more complex recently proposed methods in terms of classification performance and efficiency.

Appendix A Proof of Convergence

Here we show that the progressive learning in each layer of the PGCN method converges. Lets assume that denotes the hidden representations of data produced by using the first blocks in GCN layer and denotes the finetuned weights connecting all the blocks of layer to the output layer. We prove that the sequence of graph-regularized MSE, obtained with and is monotonically decreasing while it is bounded below by .

Given the fixed hidden representation , the finetuned output weights are not necessarily the optimized weights in terms of MSE. It can be explained by the following relation:

| (20) |

where denotes the optimized output weights which are obtained by solving the semi-supervised linear regression problem as follows:

| (21) |

In the next step, when the block is added to the layer, the new hidden representation of layer would be in which is fixed from previous step and is generated by new randomly initialized weights. The new optimal output weights which connect the layer to output layer is initialized according to (21) by substituting by . The MSE after adding the block would be as follows:

| (22) |

Since (22) holds for all , we can replace , with , respectively to obtain the following relation:

| (23) |

After finetuning the network parameters, the output weights are denoted by and the MSE would be . It has been proven that stochastic gradient descent converges to a local optimum [42] with small enough learning rate, so the following relation holds for the MSE:

| (24) |

According to (24), (25) we have the following relation:

| (25) |

which indicates that the sequence is monotonically decreasing.

Based on the connection of the linear activation function combined with the mean-square error to the soft-max activation function combined with the cross-entropy criterion and maximum likelihood optimization [43], an analysis following the same steps as above can be used to show that (when the latter is employed) the sequence is also monotonically decreasing.

References

- [1] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, no. 7553, pp. 436–444, 2015.

- [2] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems, 2012, pp. 1097–1105.

- [3] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich, “Going deeper with convolutions,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015, pp. 1–9.

- [4] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778.

- [5] W. Hamilton, Z. Ying, and J. Leskovec, “Inductive representation learning on large graphs,” in Advances in Neural Information Processing Systems, 2017, pp. 1024–1034.

- [6] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in International Conference on Learning Representations (ICLR), 2017.

- [7] T. Hamaguchi, H. Oiwa, M. Shimbo, and Y. Matsumoto, “Knowledge transfer for out-of-knowledge-base entities : A graph neural network approach,” in International Joint Conference on Artificial Intelligence, (IJCAI), 2017, pp. 1802–1808.

- [8] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, and P. S. Yu, “A comprehensive survey on graph neural networks,” IEEE Transaction on Neural Networks and Learning Systems, vol. 32, no. 1, pp. 4–24, 2021.

- [9] F. Manessi, A. Rozza, and M. Manzo, “Dynamic graph convolutional networks,” Pattern Recognition, vol. 97, p. 107000, 2020.

- [10] J. Atwood and D. Towsley, “Diffusion-convolutional neural networks,” in Advances in Neural Information Processing Systems, 2016, pp. 1993–2001.

- [11] Y. Luo, R. Ji, T. Guan, J. Yu, P. Liu, and Y. Yang, “Every node counts: Self-ensembling graph convolutional networks for semi-supervised learning,” Pattern Recognition, vol. 106, p. 107451, 2020.

- [12] M. Zhang, Z. Cui, M. Neumann, and Y. Chen, “An end-to-end deep learning architecture for graph classification,” in AAAI Conference on Artificial Intelligence, 2018, pp. 4438–4445.

- [13] M. Zhang and Y. Chen, “Link prediction based on graph neural networks,” in Advances in Neural Information Processing Systems, 2018, pp. 5165–5175.

- [14] Y. Chen, G. Ma, C. Yuan, B. Li, H. Zhang, F. Wang, and W. Hu, “Graph convolutional network with structure pooling and joint-wise channel attention for action recognition,” Pattern Recognition, vol. 103, p. 107321, 2020.

- [15] C. Vignac, G. Ortiz-Jiménez, and P. Frossard, “On the choice of graph neural network architectures,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020, pp. 8489–8493.

- [16] Y. Cheng, D. Wang, P. Zhou, and T. Zhang, “A survey of model compression and acceleration for deep neural networks,” arXiv:1710.09282, 2017.

- [17] A. G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto, and H. Adam, “Mobilenets: Efficient convolutional neural networks for mobile vision applications,” arXiv:1704.04861, 2017.

- [18] D. T. Tran, A. Iosifidis, and M. Gabbouj, “Improving efficiency in convolutional neural networks with multilinear filters,” Neural Networks, vol. 105, pp. 328–339, 2018.

- [19] D. T. Tran, S. Kiranyaz, M. Gabbouj, and A. Iosifidis, “Heterogeneous multilayer generalized operational perceptron,” IEEE Transactions on Neural Networks and Learning Systems, vol. 31, no. 3, pp. 710–724, 2019.

- [20] S. Abu-El-Haija, B. Perozzi, R. Al-Rfou, and A. Alemi, “Watch your step: Learning graph embeddings through attention,” arXiv:1710.09599, 2017.

- [21] A. Grover and J. Leskovec, “node2vec: Scalable feature learning for networks,” in International Conference on Knowledge Discovery and Data Mining, 2016, pp. 855–864.

- [22] M. Belkin, P. Niyogi, and V. Sindhwani, “Manifold regularization: A geometric framework for learning from labeled and unlabeled examples,” Journal of Machine Learning Research, vol. 7, no. Nov, pp. 2399–2434, 2006.

- [23] J. Weston, F. Ratle, H. Mobahi, and R. Collobert, “Deep learning via semi-supervised embedding,” in Neural Networks: Tricks of the trade, 2012, pp. 639–655.

- [24] A. Iosifidis, A. Tefas, and I. Pitas, “Regularized extreme learning machine for multi-view semi-supervised action recognition,” Neurocomputing, vol. 145, pp. 250–262, 2014.

- [25] C. Zhuang and Q. Ma, “Dual graph convolutional networks for graph-based semi-supervised classification,” in World Wide Web Conference, 2018, pp. 499–508.

- [26] F. Monti, D. Boscaini, J. Masci, E. Rodola, J. Svoboda, and M. M. Bronstein, “Geometric deep learning on graphs and manifolds using mixture model cnns,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 5115–5124.

- [27] D. K. Hammond, P. Vandergheynst, and R. Gribonval, “Wavelets on graphs via spectral graph theory,” Applied and Computational Harmonic Analysis, vol. 30, no. 2, pp. 129–150, 2011.

- [28] S. Yan, D. Xu, B. Zhang, H.-J. Zhang, Q. Yang, and S. Lin, “Graph embedding and extensions: A general framework for dimensionality reduction,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 29, no. 1, pp. 40–51, 2006.

- [29] P. Sen, G. Namata, M. Bilgic, L. Getoor, B. Galligher, and T. Eliassi-Rad, “Collective classification in network data,” AI Magazine, vol. 29, no. 3, pp. 93–93, 2008.

- [30] Z. Yang, W. W. Cohen, and R. Salakhutdinov, “Revisiting semi-supervised learning with graph embeddings,” in International Conference on Machine Learning (ICML), 2016, pp. 40–48.

- [31] S. Abu-El-Haija, A. Kapoor, B. Perozzi, and J. Lee, “N-GCN: multi-scale graph convolution for semi-supervised node classification,” in Conference on Uncertainty in Artificial Intelligence (UAI), 2019, p. 310.

- [32] P. Velickovic, G. Cucurull, A. Casanova, A. Romero, P. Liò, and Y. Bengio, “Graph attention networks,” in International Conference on Learning Representations (ICLR), 2018.

- [33] Y. Gao, H. Yang, P. Zhang, C. Zhou, and Y. Hu, “Graphnas: Graph neural architecture search with reinforcement learning,” arXiv preprint arXiv:1904.09981, 2019.

- [34] K. Zhou, Q. Song, X. Huang, and X. Hu, “Auto-gnn: Neural architecture search of graph neural networks,” arXiv preprint arXiv:1909.03184, 2019.

- [35] M. Abadi, A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. S. Corrado, A. Davis, J. Dean, M. Devin et al., “Tensorflow: Large-scale machine learning on heterogeneous distributed systems,” arXiv:1603.04467, 2016.

- [36] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv:1412.6980, 2014.

- [37] N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov, “Dropout: a simple way to prevent neural networks from overfitting,” Journal of Machine Learning Research, vol. 15, no. 1, pp. 1929–1958, 2014.

- [38] D.-A. Clevert, T. Unterthiner, and S. Hochreiter, “Fast and accurate deep network learning by exponential linear units (elus),” arXiv:1511.07289, 2015.

- [39] F. Wu, A. H. S. Jr., T. Zhang, C. Fifty, T. Yu, and K. Q. Weinberger, “Simplifying graph convolutional networks,” in International Conference on Machine Learning (ICML), 2019, pp. 6861–6871.

- [40] H. NT and T. Maehara, “Revisiting graph neural networks: All we have is low-pass filters,” arXiv:1905.09550, 2019.

- [41] J. Klicpera, A. Bojchevski, and S. Günnemann, “Predict then propagate: Graph neural networks meet personalized pagerank,” in International Conference on Learning Representations (ICLR), 2019.

- [42] H. Robbins and S. Monro, “A stochastic approximation method in: Herbert robbins selected papers,” Annals of Mathematical Statistics, vol. 22, no. 3, pp. 400–407, 1951.

- [43] C. M. Bishop, Pattern Recognition and Machine Learning. Springer, 2007.