Projective Embedding of Dynamical Systems:

uniform mean field equations

Abstract

We study embeddings of continuous dynamical systems in larger dimensions via projector operators. We call this technique PEDS, projective embedding of dynamical systems, as the stable fixed points of the original system dynamics are recovered via projection from the higher dimensional space. In this paper we provide a general definition and prove that for a particular type of rank-1 projector operator, the uniform mean field projector, the equations of motion become a mean field approximation of the dynamical system. While in general the embedding depends on a specified variable ordering, the same is not true for the uniform mean field projector. In addition, we prove that the original stable and saddle-node fixed points retain this feature in the embedding dynamics, while unstable fixed points become saddles. Direct applications of PEDS include non-convex optimization and machine learning.

keywords:

projective embedding, projector operators, dynamical systems, fixed points, PEDS

1 Introduction

The past decades witnessed an increased interest in physics- or neuro-inspired algorithms for the analysis of dynamical systems, with the main area of application being problems that can be mapped onto optimization ones, whether continuous or discrete [2, TraversaSOLG, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14]. Among the most important neuro-inspired algorithms, we mention neural networks, which have received a large amount of attention given their wide applicability and remarkable achievements: this is an active area of research at the boundary between complex systems, neuromorphic computing and nonlinear dynamics, dating back at least to Turing [15]. In the study of neural networks, one of the most important open problems is the acceleration of the training phase, a problem connected to the roughness of the energy landscape [16, 17]. Network training is one of the most difficult tasks, requiring in general huge computational power and a vast number of samples. Many algorithms attempt to modify the energy function to reduce the time spent on saddle points [18, 19]. Changing the landscape is however challenging in general, as it requires a priori knowledge of which type of local extrema should be modified. Thus, finding valuable alternatives to and/or generalizations of gradient descent has been a topic of intense study. In addition, analog models of computation are an active area of research [20] with several applications.

From the point of view of a dynamical system, however, there are not many strategies that one can attempt to employ. One possibility, the one we explore in this paper, is to increase the dimensionality of the system while attempting to preserve some properties of the original dynamical system, aiming at a trade-off between convergence optimality and the curse of dimensionality. The basic rationale for this strategy is that, in higher dimensions, there are more pathways that a system can take in order to reach a certain target point. As a simple example, consider a one dimensional barrier between two minima in a potential: following gradients, one could never move from one local minimum to the other, while in a higher dimensional system, pathways around that confinement barrier are, at least in principle, possible.

The technique we propose here is inspired by recent results in the context of memristive circuits [21, 22, 23, 24, 25, 26]. In circuits, Kirchhoff laws are manifestations of the conservation of physical quantities such as charge or energy. Mathematically, these can be expressed via the introduction of projection operators, i.e. idempotent matrices (matrices equal to their own square), which are directly connected to circuit topology. For instance, for a resistive circuit made of identical unitary resistances in series with impressed voltage generators, Ohm’s law for the network can be expressed as

| (1) |

where is the collection of voltage generators connected in series with each resistance, while contains the branch currents. The underlying assumption of (1) is that the voltage generators ’s are in series with the resistances ’s, while the circuit can be represented as a graph with edges. Given the branch currents and a certain orientation of the graph loops , we can obtain the so-called loop matrix of the circuit , of size , such that , where t denotes the transpose. The details of the derivation of from the circuit topology are beyond the scope of this paper, where will be kept generic and unrelated to any underlying graph or conservation law.

We assume a continuous dynamical system, but the technique can in principle be extended to vector maps, and thus works also for numerical implementations of a dynamical system. Let us consider a dynamical system expressed in vector form as a first-order differential system

| (2) |

where functions are assumed known, and analytic. We are in general interested in recovering the stable fixed points of (2), i.e. the values such that , if they exist.

To this aim we consider another dynamical system, of size , written in the form

| (3) |

where for each value we define an augmented vector of size . The question we aim to answer in this contribution is whether functions and exist such that the dynamical system (2) is contained, in a sense we will make more precise in the next section, in the extended system (3). The answer we provide in this paper is affirmative, as we will explicitly construct such a system along with the technique to recover the original dynamical system.

From a mathematical perspective, these generalizations can be investigated by studying the properties of the fixed points of the embedded system in terms of the original ones, which is the strategy we use in this paper. A fixed point is a particular point of the phase space satisfying . We dub the method developed in this paper Projective Embedding of Dynamical Systems (PEDS), as the technique involves the embedding of a target dynamical system of dimension into one of dimension ; ultimately, we recover the fixed points of the original dynamical system by projecting back onto a chosen set (of size ) of observables. We will prove that the fixed points of the original target system are related to the fixed points of the reduced observables. As we will see, the dynamical system in which the embedding is contained is a nontrivial and nonlinear extension of the original dynamical system, obtained via a map between the original system and an extended one. Although the projection operator may be quite general, we prove most of the results here for a specific operator, which we call the uniform mean-field projector, as in this simplified case mostly analytical proofs are available.

The structure of the paper is as follows. In Section 2 we introduce the PEDS procedure formally, and provide various examples to intuitively grasp why these definitions make sense. In Section 3 we study the uniform mean field projector, and both for 1-dimensional and -dimensional dynamical systems we prove exact results about the properties of the asymptotic stable fixed points and their Jacobians. In Section 4 we provide numerical examples alongside analytical analysis, to further corroborate the bulk of the paper. Finally, conclusions follow.

2 The PEDS procedure: key definitions and examples

In order to clarify the techniques developed in this paper, we now construct the simplest example of the embedding, before introducing the necessary definitions. Notation-wise, we will denote with the identity matrix, while is a column vector with elements equal to 1.

Example 2.1.

Exponential dynamics.

Let us consider the following one dimensional dynamical system:

| (4) |

with , whose analytical solution is given by

| (5) |

Considering an projector matrix such that and, thus, , we define the following enlarged (size ) dynamical system

| (6) |

where , and is an arbitrary vector, satisfying the only requirement .

Since the system above is linear, we do know the analytical solution, which is given by

| (7) |

where the approximation holds for , i.e. for . As for any projector the following identity holds

| (8) |

the asymptotic solution reads

| (9) |

Therefore, projecting (9)

| (10) |

i.e., the asymptotic solution of (7) is contained as a common factor in all the modes of , the “replicated” dynamics.

As a last comment, we recover the solution of the original dynamical system by averaging the elements of (10)

| (11) |

where T represents the transpose. Therefore, choosing vector such that

| (12) |

we find

| (13) |

i.e., the projected dynamics recovers the original one dimensional system.
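The mechanism of Example 2.1 can be checked numerically. The following is a minimal sketch under stated assumptions, not a reproduction of the equations above: it assumes the uniform mean-field projector (all entries equal to 1/n), and it assumes the enlarged linear system has the form dx/dt = [a P − alpha (I − P)] x, with `a` the scalar rate and `alpha` a positive decay rate. The names `uniform_projector`, `expm_sym`, `a` and `alpha`, and all numerical values, are illustrative choices of ours.

```python
import numpy as np

def uniform_projector(n):
    """Rank-1 projector with all entries equal to 1/n (assumed form)."""
    return np.full((n, n), 1.0 / n)

def expm_sym(m):
    """Matrix exponential of a symmetric matrix via its eigendecomposition."""
    w, v = np.linalg.eigh(m)
    return (v * np.exp(w)) @ v.T

n, a, alpha, t = 4, -0.7, 5.0, 1.3   # illustrative values only
omega = uniform_projector(n)
eye = np.eye(n)

# Identity valid for any projector P (P @ P == P):
# exp(a t P) = I + (exp(a t) - 1) P
lhs = expm_sym(a * t * omega)
rhs = eye + (np.exp(a * t) - 1.0) * omega
identity_error = np.max(np.abs(lhs - rhs))

# Assumed enlarged linear system: dx/dt = [a P - alpha (I - P)] x
x0 = np.array([1.0, -2.0, 0.5, 3.0])
xt = expm_sym(t * (a * omega - alpha * (eye - omega))) @ x0

# The average of the components follows the scalar solution
# started from the average of the initial condition.
mean_error = abs(xt.mean() - np.exp(a * t) * x0.mean())
print(identity_error, mean_error)
```

In this sketch the averaged components reproduce the scalar exponential exactly, while the components orthogonal to the uniform vector are damped at rate `alpha`.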

The main goal of this paper is to extend the results of Example 2.1 to arbitrary dynamical systems. Let us now identify the key steps of the procedure. First, we begin with a dynamical system in the standard form.

Definition 2.2.

Embedding procedure: PEDS. We explicitly define here the steps involved in developing the PEDS procedure.

1. We begin with a tuple , where is a size projector operator. We call the target dynamical system, while represent the target variables. represents an ordering, relevant for the case of a multi-dimensional target system if the embedding is non-commutative. Vector is constant and such that . Finally, is the dimension of the embedding for each scalar variable. As such, it can also be interpreted as the number of dimensions into which each scalar variable is expanded.

-

2.

Given the target dynamical system of dimension , we build an extended dynamical system of size , represented by a set of canonical equations of the form

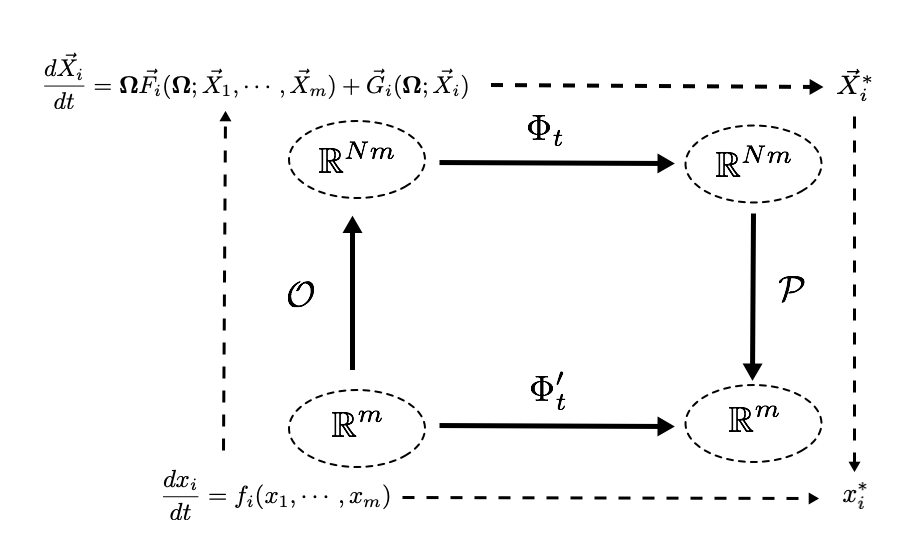

(14) This step is represented by the arrow in Fig. 1, being it a mapping between each scalar functions to the vector function . Thus, for each dimension of the original dynamical system, we obtain an extended dimensional subspace in the dynamical system, so that

(15) We call the specific map the embedding, while is the extended system and the extended variable (i.e., a set of scalar variables in the extended system). We also dub the set , the decay functions. In each extended subspace, is a vector of components , while diagonal matrix is made of elements , where represents Kronecker symbol. The original vector can be easily recovered from the diagonal matrix as . We stress that in principle can be a non-trivial function of , as we shall discuss later on.

3.

4. Arrow in Fig. 1, finally, projects the extended dynamical system from size to an dimensional system, that is required to coincide with the trajectory of the target dynamical system. For each variable, the projection is derived from the projector operator : given a certain extended variable , we obtain

2.1 Extended variable ordering

Before delving into the details of the construction of (14), let us clarify what we mean by ordering. During the development of the PEDS procedure, commuting variable products such as will be mapped onto matrix products of the form . As matrix products do not commute, the ordering of the variables will have a role.
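A small numerical sketch shows why the ordering matters. It assumes, purely for illustration, that each scalar variable is replaced by the product of a projector with the diagonal matrix of the corresponding extended variable, and it uses the projector with all entries equal to 1/n discussed in Sec. 2.4. The variable names and numerical values are ours.

```python
import numpy as np

n = 3
omega = np.full((n, n), 1.0 / n)      # assumed projector: all entries 1/n
x1 = np.diag([1.0, 2.0, 4.0])         # extended variable for the first scalar (illustrative)
x2 = np.diag([0.5, -1.0, 3.0])        # extended variable for the second scalar (illustrative)

# Diagonal matrices commute, so without the projector the product is order independent.
diag_commutator = x1 @ x2 - x2 @ x1

# With the projector interleaved, the two orderings give different matrices.
m12 = (omega @ x1) @ (omega @ x2)
m21 = (omega @ x2) @ (omega @ x1)
ordering_gap = np.max(np.abs(m12 - m21))
print(ordering_gap)
```

The gap is nonzero for generic non-uniform extended variables, which is why a prescription for the order of the factors has to be part of the embedding.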

Definition 2.3.

Ordering. Within the context of PEDS, an ordering is a map between commuting monomials of the form and non-commuting matrix monomials of the form .

In general, an ordering can be written in terms of a certain set of coefficients. We will use the following notation

| (16) |

where is an element of the permutation group over variables, the coefficients are zero if at least two indices are equal, and

| (17) |

Definition 2.4.

Given the monomial , the standard ordering is given by , i.e. a matrix monomial where the matrix products strictly follow the same sequence as in the scalar case.

Definition 2.5.

Given the monomial , the balanced ordering is given by

Notice that

and that, given an order-independent function , i.e. a function satisfying

| (18) |

for any permutation , then

| (19) |

Example 2.6.

The standard ordering is characterized by

| (20) |

while for the balanced ordering, .

Considering the case of two scalar variables (i.e., ), choosing and , we get . Another possible choice is , where and , so that

| (21) |

2.2 Decay functions

We discuss now the decay functions . The choice made in Example 2.1 was

| (22) |

where . This particular choice corresponds to a precise definition:

Definition 2.7.

Standard decay function. The decay function in (22) is called standard decay function.

As seen in Example 2.1, the standard decay function allowed us to recover the dynamics of the target dynamical system, which in turn was reconstructed in the Span. The role played by the decay functions is to enforce that, in each extended subspace, the modal components associated with the Ker are asymptotically vanishing.

Definition 2.8.

A decay function is -eligible if

| (23) |

and if the solution of the dynamical system obtained projecting (14) onto the Ker (i.e., projecting the extended equation via and defining )

| (24) |

is decaying, i.e. if .

Obviously, the standard decay functions are -eligible.

2.3 Embedding map

We are now ready to state the exact definition of the map. However, this step requires expressing the nonlinear scalar functions defining the target dynamical system as a power series. From this standpoint, it is convenient to formulate the Taylor expansion of an variable, scalar analytic function as a superposition of monomials exploiting the Kronecker symbol:

| (25) |

where .

Definition 2.9.

Matrix map.

Given a scalar analytic function with Taylor expansion as in (25), we call a matrix map for the following construction

| (26) |

where is a properly defined ordering.

Definition 2.10.

Let us now provide three examples of matrices which will be used in the following. Each target function is analytical, with series representation as in (25):

Definition 2.11.

We define the following three possible matrix embeddings:

1. the standard commutative map is

| (28) |

2. the mixed commutative map is

| (29) |

where denotes the constant term of the series expansion for function

3. the standard non-commutative map is

| (30) |

where is the chosen ordering.

Clearly, since diagonal matrices commute, defining an ordering for the standard and the mixed commutative maps is unnecessary. As we will see, such difference is important for embeddings of vector dynamical systems in the case of the mixed commutative map, but not for a scalar system. Notice also that

1. the standard commutative map is a linear mix of the dynamical systems functions , since a direct calculation shows

| (31) |

which drastically simplifies the evaluation

2. for scalar dynamical systems, the mixed commutative map and the standard non-commutative map reduce to the same quantity

3. in the case of vector dynamical systems, the mixed commutative map preserves the commutativity of the target variables, since diagonal matrices commute among themselves.

As a consequence, for scalar dynamical systems we will study only the standard commutative and non-commutative maps, while the result for the mixed commutative map will follow from the non-commutative one. However, we will have to be more careful in the vector case.

2.4 Projection operator

We provide here a few definitions on the projection operators of size we will consider in the following.

Definition 2.12.

A projector is called trivial if rank, or, equivalently, if Span.

A simple proof shows that the only trivial projector is the identity matrix .
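The argument can be sketched in one line. Here P is our placeholder symbol for a generic projector (a notational assumption for this sketch only): if P has full rank it is invertible, and idempotence then forces it to be the identity,

```latex
P^2 = P
\quad\Longrightarrow\quad
P = P^{-1} P^2 = P^{-1} P = I .
```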

Definition 2.13.

The uniform mean-field projector is defined as the square matrix whose elements are all equal to the reciprocal of its size

Let us consider to be a diagonal matrix, as in the PEDS embedding procedure. Projection using the uniform mean-field operator yields

| (32) |

therefore, the powers of appearing in the PEDS procedure, are neither trivial expressions nor sparse matrices, and indeed contain non linear components in the variables.

Example 2.14.

For we have

| (33) |

where .

The previous example can easily be generalized to size , showing that , thus justifying the definition of as the uniform mean-field projector.

A similar property applies to vectors, as .
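The averaging properties of the uniform mean-field projector are easy to verify numerically. The sketch below assumes the projector is the matrix with all entries equal to 1/n and checks, for a random diagonal matrix, that sandwiching it between two projectors returns the projector scaled by the mean of the diagonal, and that applying the projector to a vector returns the mean times the vector of ones. Variable names are ours.

```python
import numpy as np

n = 5
ones = np.ones(n)
omega = np.outer(ones, ones) / n           # matrix with all entries 1/n

rng = np.random.default_rng(0)
x = rng.normal(size=n)                     # arbitrary extended variable
X = np.diag(x)
mean_x = x.mean()

# Idempotence: omega @ omega == omega
idempotent_err = np.max(np.abs(omega @ omega - omega))
# Sandwich property: omega @ X @ omega == mean(x) * omega
sandwich_err = np.max(np.abs(omega @ X @ omega - mean_x * omega))
# Vector property: omega @ x == mean(x) * ones
vector_err = np.max(np.abs(omega @ x - mean_x * ones))
# Powers acting on the ones vector: (omega X)^3 @ ones == mean(x)^3 * ones
power_err = np.max(np.abs(np.linalg.matrix_power(omega @ X, 3) @ ones - mean_x**3 * ones))
rank = np.linalg.matrix_rank(omega)
print(idempotent_err, sandwich_err, vector_err, power_err, rank)
```

The last check illustrates why matrix powers built from this projector reduce to powers of the mean once they act on a uniform vector.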

Example 2.15.

We would like to stress the fact that the PEDS mapping is, in general, highly non-trivial, as long as the projection operator is not the trivial one: in this case the matrix powers of the form couple all the subspace variables in a nonlinear way.

On the other hand, for the trivial projector, the standard decay function is identically zero, and the extended system as well as any extended monomial are ordering independent. In fact, as the diagonal matrices commute among themselves, we have that

| (35) |

if and only if . As a consequence, (27) becomes

| (36) |

thus showing that the PEDS procedure for the trivial projector decouples into identical copies of the original system.

3 Embedding via the uniform mean field projector

We derive here in a more rigorous way the key results presented above. We focus on the uniform mean field projector , as the proofs are easier to carry out. Nevertheless, several results are actually valid even for a more general projection operator : these will be explicitly denoted by using the general projector in place of the uniform mean field operator.

3.1 Simple case: Scalar target system, embedding without decay function

We start from the case of a one dimensional target dynamical system

| (37) |

where is analytic, so that

| (38) |

Following the PEDS procedure, we introduce the projector operator . The extended variable is thus an -dimensional vector with components , and the matrix map associated to takes either the standard commutative form (28) so that

| (39) |

where we have chosen , or the standard non-commutative form (30) (recall that for scalar target systems, the mixed commutative and the standard non-commutative forms coincide)

| (40) |

Before we begin our discussion on the embedding, it is worth giving a definition of what we mean when we say that a dynamical system is contained in another one. Taking Fig. 1 as a reference, let our target system be described by the evolution map (the solution of the dynamical system) , while the PEDS evolution is instead a map .

Definition 3.1.

A dynamical system of size is contained in a dynamical system of dimensions if a linear operator exists such that, for

| (41) |

where has size .

Given the definition above, we can now prove the following

Proposition 3.2.

Banality of mean value. Let be a PEDS tuple of a target dynamical system as in (37), where the matrix map can take either the standard commuting or standard non-commuting forms. Then, the extended dynamical system (39) or (40) contains the dynamics of (38) for a generic projection operator satisfying .

Proof.

Let us consider an extended variable subject to the condition . Then, as and , for both the standard commuting and non-commuting maps we have:

| (42) |

Therefore, projecting the previous relation onto the span of , i.e. evaluating , we obtain

| (43) |

It follows that as , then . In order to prove that the dynamical system is contained, we can project on any component , obtaining

| (44) |

if , we have then proven that an initial condition exists for which (41) applies. ∎

Proposition 3.2 is a warm-up for the type of proofs that will follow. It shows that if the initial conditions for the variables are chosen homogeneously, then the extended dynamical system will follow the one dimensional dynamics of (37). However, condition is a strong requirement for the dynamical system. In principle, a dynamically obtained convergence towards a state of the form would be far preferable. To this aim, we introduce the decay functions.
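The content of the banality of the mean value can be illustrated numerically. The sketch below is a hedged reading of the construction, not the paper's exact equations: it assumes a hypothetical target vector field f(x) = x − x³, the uniform projector with entries 1/n, and an extended vector field obtained by evaluating f on the matrix product of the projector with the diagonal matrix of the extended variable and applying the result to the vector of ones, with no decay term. Starting from a homogeneous initial condition, every component should follow the scalar dynamics.

```python
import numpy as np

def f_scalar(x):
    """Hypothetical target vector field, chosen only for illustration."""
    return x - x**3

def f_extended(x, omega):
    """Assumed embedding without decay: f evaluated on omega @ diag(x), applied to ones."""
    m = omega @ np.diag(x)
    return (m - np.linalg.matrix_power(m, 3)) @ np.ones(x.size)

def rk4(rhs, y, dt, steps):
    """Classical fourth-order Runge-Kutta integrator."""
    for _ in range(steps):
        k1 = rhs(y)
        k2 = rhs(y + 0.5 * dt * k1)
        k3 = rhs(y + 0.5 * dt * k2)
        k4 = rhs(y + dt * k3)
        y = y + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    return y

n, dt, steps = 4, 0.01, 500
omega = np.full((n, n), 1.0 / n)
x_bar0 = 0.2                               # common initial value of all components

scalar_end = rk4(f_scalar, x_bar0, dt, steps)
extended_end = rk4(lambda x: f_extended(x, omega), x_bar0 * np.ones(n), dt, steps)
max_gap = np.max(np.abs(extended_end - scalar_end))
print(scalar_end, max_gap)
```

Under these assumptions the homogeneous state is preserved by the flow, so the extended trajectory is the scalar one replicated on each component.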

3.2 Scalar target system: Enforcing the convergence to the mean via decay functions

We consider now the following form for the extended system (14) based on the uniform mean field projector:

| (45) |

where and . Notice that because of the properties of discussed in Sec. 2.4. The second term on the right hand side of (45) is an “elastic” force compelling the extended trajectories to remain close to the mean. The relative strength of the two addends determines the behavior of the system.

Proposition 3.3.

Proof.

Considering the complementary projection, we have

| (47) |

or, defining ,

| (48) |

Equation (48) represents the dynamics of the modes that make non-uniform. The above implies that any non-uniform mode of decays exponentially, and thus in a time . This concludes the proof. ∎
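The exponential decay of the non-uniform modes can be observed in a short simulation. As before, this is a sketch under assumptions of ours: a hypothetical target f(x) = x − x³, the uniform projector with entries 1/n, and an extended system made of the projected drive minus a linear decay term alpha (I − projector) x. The initial condition is deliberately non-uniform.

```python
import numpy as np

def rhs(x, omega, alpha):
    """Assumed uniform mean-field embedding of f(x) = x - x**3 with a linear decay term."""
    n = x.size
    m = omega @ np.diag(x)
    drive = (m - np.linalg.matrix_power(m, 3)) @ np.ones(n)
    decay = alpha * (np.eye(n) - omega) @ x
    return drive - decay

def rk4_step(fun, y, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = fun(y)
    k2 = fun(y + 0.5 * dt * k1)
    k3 = fun(y + 0.5 * dt * k2)
    k4 = fun(y + dt * k3)
    return y + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0

n, alpha, dt, steps = 4, 2.0, 0.01, 500
omega = np.full((n, n), 1.0 / n)
x0 = np.array([0.9, -0.4, 0.3, 0.2])       # non-uniform start (illustrative)
x = x0.copy()
for _ in range(steps):
    x = rk4_step(lambda y: rhs(y, omega, alpha), x, dt)

t_end = dt * steps
deviation = x - x.mean()                                  # component outside the uniform direction
predicted = np.exp(-alpha * t_end) * (x0 - x0.mean())     # pure exponential decay of the initial deviation
decay_gap = np.max(np.abs(deviation - predicted))
spread = np.max(np.abs(deviation))
print(decay_gap, spread)
```

In this reading the deviations from the mean obey a linear equation that is decoupled from the drive, so they shrink at rate `alpha` regardless of the nonlinearity.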

In conclusion, using the uniform mean field projector and the standard decay functions as in (45), converges to the right mean, and thus to the same fixed points as the target system. Let us now provide some technical results to support the idea that the decay functions project back on the subspace of our interest. The result is in fact not strictly limited to the standard decay functions. We now prove the decay of the modes in Ker for a generalized set of decay functions. Let us consider

| (49) |

where is a positive diagonal matrix with diagonal elements . If , both generalizations reduce to the standard decay function. In the general case, their difference becomes evident projecting via

| (50) |

i.e., the PEDS embeddings

and

Clearly, the first part of the proof of the banality lemma remains valid also in these cases. On the other hand, projecting via , we obtain for both generalizations the following governing equation for the non-uniform modes

| (51) |

whose solution is

| (52) |

We show now that the two generalizations A and B are -eligible, i.e. that asymptotically approaches the zero vector. We prove the following proposition for general projectors:

Proposition 3.4.

Given the governing equation (51) written for a general projector , assuming then .

Proof.

The solution of (51) takes the form

| (53) |

where . Expanding the exponential, we get

that, projecting through , becomes

| (54) |

We notice that if , then we can express where are eigenvectors associated to the eigenvalue equal to 1 of . Thus, . This implies that

| (55) |

Thus, we have , i.e. . ∎

As a result of the proposition above, vector is contained in the subspace spanned by at all times and for any projector, and thus also for .

Proof.

We consider the dynamics for the modes from (51), and we use a Lyapunov stability argument. Let us consider the following functional: . Then,

| (56) |

Using the fact that from the previous Proposition, we obtain that

| (57) |

Since the only minimum of is , then for . This proves that , and thus , for . ∎

Propositions 3.2, 3.3 and 3.4, along with Corollary 3.5 imply that for a one-dimensional dynamical system, the PEDS contains the fixed points of the original dynamical system. In particular, Corollary 3.5 implies that the extended system converges to an asymptotic state of the form . Therefore, through the banality of the mean value Lemma, the PEDS embedding will contain the original dynamical system.

For practical purposes, it is sufficient to consider the observable in order to recover the location of the fixed points. Clearly, this example applies only to a one-dimensional dynamical system. However, the result can be extended to the vector case following similar considerations.

Example 3.6.

As an example of a one dimensional dynamical system embedded in dimensions, let us consider the dynamical system

| (58) |

whose stable fixed point is given by . A PEDS embedding is given by the two coupled differential equations:

| (59) |

whose components can be made explicit introducing and evaluating the matrix expressions. The result is

| (60) | |||

| (61) |

whose stable fixed point is easily seen to be .

Example 3.6 highlights an important property of the uniform mean field embedding. We gain some intuition on how the uniform mean field projector works by exploiting a direct evaluation of the matrix powers. For instance, for the PEDS , then

| (62) |

Using the properties

we obtain the equivalent form for (62)

| (63) |

Left-multiplying by and by yields

| (64) | ||||

| (65) |

Therefore, the mean value in (64) follows exactly the target system dynamics, while (65) asymptotically determines for . It follows that for scalar target systems, because of the identity , the standard commutative and non-commutative maps are identical. This is no longer true for vector target systems, as we discuss below.

3.3 General case: Vector target system

We will now focus on the generalization of the previous results to the case of a vector target dynamical system of arbitrary dimension. This generalization is more involved because the ordering becomes important (at least for the standard non-commutative map), since matrix terms such as and do not commute. Nevertheless, exploiting the properties of the uniform mean field projector, certain exact results can be obtained.

We consider an dimensional target system as in (2), where a Taylor expansion of the defining functions takes the form (25) after choosing the ordering . Following the PEDS procedure, we first construct the embedding map in the absence of decay functions. Given the extended variables () of size , we write the embedding maps (28)–(30) as

| standard commuting map | (66a) |

| mixed commuting map | (66b) |

| standard noncommuting map | (66c) |

where is the chosen ordering of the variables. The question is whether also in this case the banality of the mean value Proposition 3.2 still holds.

An easy proof shows that assuming a solution , the banality of the mean value lemma applies also in the vector case, irrespective of the chosen extended variable ordering.

Corollary 3.7.

Multivariate banality of the mean value. Let be the uniform mean field projector, and the embedding with an arbitrary ordering. Following the PEDS procedure, the dynamics of the variables is determined by

| (67) |

where is the vector constructed following the PEDS procedure defined in (66). We define the projection operator , and the projected variables . Then, the following two statements hold true

| (68) |

and (b) if the extended system approaches a fixed point for times , then the projection is a fixed point of the target system.

Proof.

Let us first prove statement (a), which is a corollary of the banality of the mean value Proposition 3.2. We set . In all cases of the standard commutative, mixed commutative and non-commutative maps, the standard decay function is identically zero, and it is not hard to see that, for an arbitrary ordering , we have in all cases the same expression for each term of the expansion:

| (72) | ||||

| (73) |

As a consequence, a relatively simple calculation shows

| (74) |

or

| (75) |

Replacing with , we obtain that the fixed points of the extended system under the assumption must be the same as for the target dynamical system.

We now turn to statement (b). The initial condition is now arbitrary. Left-multiplying (67) by , we get

| (76) |

where we define . As Span, can be interpreted as a deviation from the average, since . Following almost the same steps as in the proof of the convergence of the mean for the one dimensional system, we arrive at

| (77) |

where the second expression follows from the first being , so that for . Essentially, this implies that the extended system fixed points are those of the target dynamical system: for long enough times the system converges exponentially to the mean in each variable, for which the banality lemma applies. Thus, if the projected PEDS given by approaches a fixed point, it has to be a fixed point of the target system. Alternatively, the system must not converge. ∎

The results of this section show that at least one type of PEDS exists which preserves the fixed points of the target system, thus justifying the entire construction of the PEDS embedding. We now focus the attention on how the PEDS procedure modifies the properties of the fixed points, by analyzing their stability. Therefore, we need to look at the spectral properties of the Jacobian at the embedding fixed points.

3.4 Properties of the Jacobian and fixed points

Let us now investigate the properties of the Jacobian.

We begin with the one dimensional case

| (78) |

For the sake of generality, we consider also the generalized decay functions in (49)

| (79) |

As usual, we shall derive the results for a general projector whenever possible.

3.4.1 Simple case: scalar target system

We will focus first on the PEDS of a one dimensional target system, as in this case the ordering is immaterial and proofs are easier to carry out. Initially we consider the PEDS map , i.e. the standard decay function. The embedding thus takes the form

| (80) |

We prove the following:

Proposition 3.8.

One dimensional PEDS Jacobian

For a scalar target system, a PEDS of the form is characterized by the following functional form of the Jacobian

1. for the standard non-commuting map

| (81) |

2. for the standard commuting map

| (82) |

Proof.

The Jacobian elements are defined as

| (83) |

As we need to evaluate the derivatives of the matrix maps, we consider first the derivatives of the matrix quantities depending on . We have

| (84) | |||||

where a matrix to zero power coincides with the identity matrix.

Taking into account definition (30) we find

| (85) | |||||

Thus, the first term of the Jacobian is simply given by

| (86) |

In the case of the standard commutative map, the result can be easily derived exploiting (31) if function is known in closed form. On the other hand, making use of the power expansion of the function, we can directly calculate the derivatives noticing that

| (87) |

so that

| (88) | |||||

where denotes the derivative of .

Similarly, for the standard decay function , it is not hard to see that the second term of the Jacobian is the same irrespective of the chosen matrix embedding

| (89) | |||||

Summing and , we find the expression to be proven. ∎

As a direct Corollary of Proposition 3.8, we obtain that for and , the Jacobian takes a simpler form.

Corollary 3.9.

Consider the uniform mean field PEDS with standard decay function of a scalar dynamical system characterized by a fixed point . The Jacobian of the PEDS in its fixed point is given by

| (90) |

both for the standard commutative and non-commutative maps.

Proof.

We exploit Proposition 3.8, considering and , from which, because of Proposition 3.2, we have . Substituting into (85), we obtain

| (91) | |||||

Since , we find

| (92) | |||||

therefore, in these conditions the first part of the Jacobian takes the same expression as for the standard commutative map. Summing the second term (89), finally yields for both maps

| (93) | |||||

∎

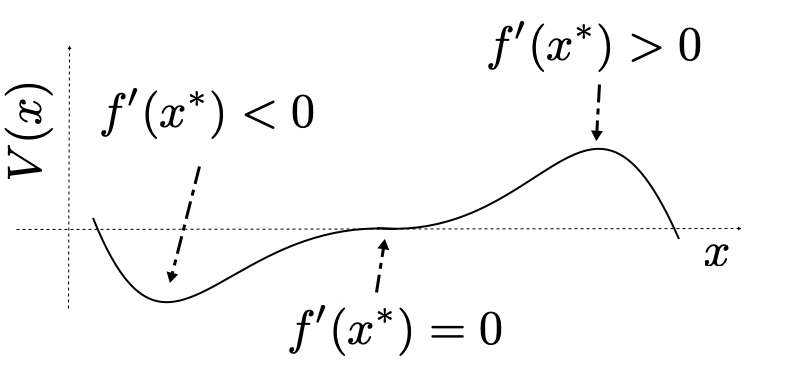

At this point we can start to draw some partial conclusions. In fact, we can use the properties of the fixed points of the target system to understand how these are transformed by the embedding procedure. The target system equilibrium is unstable if , while it is stable for . Finally, corresponds to a saddle. Since any scalar dynamical system is conservative, it can be expressed as

| (94) |

where is a potential function. The extrema of correspond to minima, maxima and saddles, as we show in Fig. 2.

For the PEDS , Corollary 3.9 implies that the Jacobian spectrum follows from the spectral properties of . In fact, has one eigenvalue equal to , and identical, null eigenvalues. Thus, the spectrum of the Jacobian at is given by

| (95) |

i.e. with multiplicity , and with multiplicity 1.

We can therefore carry out a stability analysis of the PEDS fixed point as follows:

| (96) |

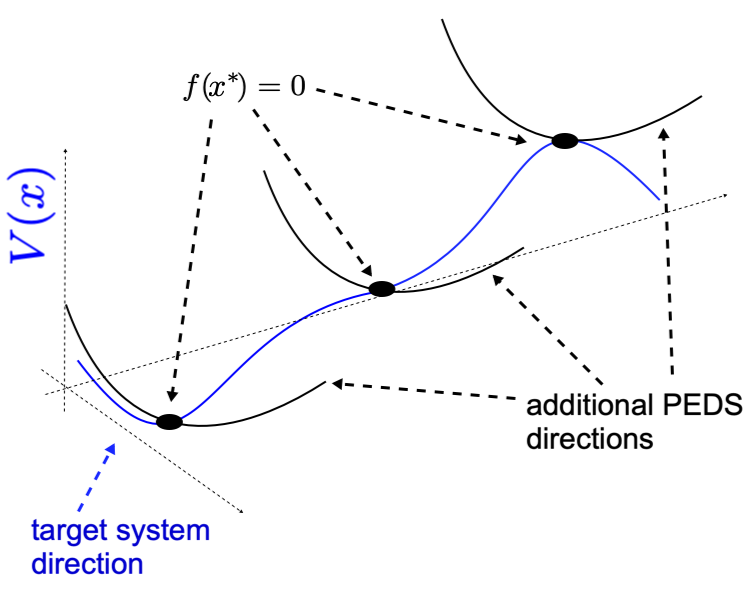

We thus see the benefits of the PEDS from the point of view of the target system. While in the scalar case “barriers” can be present, these can be made to disappear via the PEDS. Although this specific feature is peculiar to scalar target systems, this result will later be useful also for vector target systems. A graphical representation is shown in Fig. 3.

We can conclude that the PEDS procedure preserves stable and saddle fixed points of the target dynamics, while it turns unstable fixed points into saddle points.
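The classification above can be checked numerically. The following is a minimal sketch, assuming the fixed-point Jacobian of Corollary 3.9 has the form J = f'(x*) Ω − α(I − Ω), with Ω the N×N uniform mean field projector (all entries equal to 1/N) and α > 0 the decay constant; the function names `peds_scalar_jacobian` and `classify` are illustrative and do not come from the paper.

```python
import numpy as np

def peds_scalar_jacobian(fprime, alpha, N):
    """Assumed form J = f'(x*) * Omega - alpha * (I - Omega)."""
    omega = np.ones((N, N)) / N  # uniform mean field projector (rank 1)
    return fprime * omega - alpha * (np.eye(N) - omega)

def classify(eigs, tol=1e-10):
    """Label a fixed point from the signs of the (real) Jacobian eigenvalues."""
    if np.all(eigs < -tol):
        return "stable"
    if np.all(eigs > tol):
        return "unstable"
    return "saddle"

N, alpha = 5, 1.0
for fprime in (-2.0, 2.0):
    # J is symmetric, so eigvalsh returns real eigenvalues in ascending order
    eigs = np.linalg.eigvalsh(peds_scalar_jacobian(fprime, alpha, N))
    print(fprime, np.round(eigs, 12), classify(eigs))
```

Under this assumption, a stable target fixed point (f' < 0) yields only negative eigenvalues, while an unstable one (f' > 0) yields N − 1 negative eigenvalues and a single positive one, i.e. a saddle.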

Let us now consider the Jacobian properties in presence of the generalized decay functions in (79). A simple generalization of (89) yields

| (97) |

Therefore, the full Jacobian for and , becomes

| (98) |

We wish, now, to determine the spectrum . We consider the two generalizations separately:

1. Generalization B. As a consequence of the identities , we can deduce . Thus, both Jacobian components can be diagonalized in the same basis assembled in matrix , such that and . Then the eigenvalues are given by the elements of the diagonal matrix . Since it is not hard to see that and with , we can focus on the eigenvalues of the two addends of . For , there are eigenvalues equal to 0 and identical eigenvalues . For , there are null eigenvalues, while the remaining eigenvalues satisfy . Clearly, is determined by the cardinality of , equal to 1 for .

2. Generalization A. This case is slightly more complicated, since the Jacobian is not symmetric and, thus, its eigenvalues can be complex. We can provide some results exploiting Gerschgorin’s theorem

| (99) |

Since and , we have

Let and . It follows that the eigenvalues must be enclosed, in the complex plane, in circles of radius and center .
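As a reminder of how Gerschgorin's theorem localizes the spectrum of a non-symmetric matrix, the sketch below computes the disc centers (diagonal entries) and radii (off-diagonal absolute row sums) and checks that every eigenvalue lies in at least one disc. The matrix `A` is an arbitrary illustrative example, not the Jacobian of Generalization A.

```python
import numpy as np

def gershgorin_discs(A):
    """Return the centers and radii of the Gerschgorin row discs of A."""
    A = np.asarray(A, dtype=float)
    centers = np.diag(A).copy()
    radii = np.sum(np.abs(A), axis=1) - np.abs(centers)
    return centers, radii

def in_some_disc(z, centers, radii, tol=1e-9):
    """True if the complex number z lies in at least one disc."""
    return bool(np.any(np.abs(z - centers) <= radii + tol))

# Arbitrary non-symmetric example matrix
A = np.array([[-2.0, 0.5, 0.1],
              [0.3, -1.0, 0.2],
              [0.0, 0.4, -3.0]])
centers, radii = gershgorin_discs(A)
eigs = np.linalg.eigvals(A)
print("centers:", centers)
print("radii:  ", radii)
print("all eigenvalues enclosed:", all(in_some_disc(z, centers, radii) for z in eigs))
```

When all discs lie strictly in the left half of the complex plane, as in this example, the theorem alone guarantees that every eigenvalue has negative real part.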

3.4.2 General case: Vector target system

The analysis of the PEDS embedding for scalar target systems carried out in the previous section showed that exploiting the uniform mean field projector and the standard or generalized decay functions, the embedding Jacobian can be fully characterized on the basis of the features of the target system fixed points (stable, unstable, or neutral). We have obtained this result both for the standard commutative map, in which essentially one “mixes” linearly the dynamical systems, and in the case of the standard non-commuting map, in which the mix is non-trivial. The difference between the two is, thus, essentially contained in the embedding intermediate dynamics.

While in higher dimensions the nature of the questions to be answered is quite similar, the derivations are technically more challenging. The reason lies in the ordering, which, as mentioned earlier, does play a role in how the PEDS is defined. However, the case still makes it possible to carry out an almost entirely analytical derivation, even if at least some results can be proved for a more general projector structure.

Let us focus on the following PEDS for an -dimensional target system

Therefore we consider an extended system as in (14) characterized by the mean field projector and any eligible decay functions.

Similarly to the scalar case, we also consider here the two generalizations to the standard decay functions defined in (49). The corresponding PEDS take the form and , which in terms of PEDS equations, read

| (100) |

where

| (101) |

being positive, diagonal matrices that make the corresponding decay function -eligible.

For any eligible decay function, we can obtain the vector target system Jacobian for the PEDS system as follows. Let us consider first the standard commuting map. We use the representation in (31), so that

| (102) |

as . We evaluate the Jacobian in blocks, starting from component 1 as in the scalar case

| (103) |

thus, in the fixed point (see the multivariate banality of the mean Corollary 3.7) we find

| (104) |

where . In other words, the Jacobian in the equilibria can be built as

| (105) |

where each block is of size .

Surprisingly, the same result holds also for the mixed and standard non-commutative maps. We start by proving this in the mixed commuting case, where ordering is immaterial.

Proposition 3.10.

Let be the PEDS built on a mixed commutative map, considering the decay functions assumed to be -eligible. Then, for any ordering , the Jacobian matrix, evaluated at the equilibrium being an equilibrium of the target system, is given by

| (106) |

Proof.

As usual, we use a general projection operator wherever possible. We aim at evaluating the Jacobian of

| (107) |

as it is needed for the estimation of the first Jacobian component

| (108) | |||||

Therefore, we need to evaluate

| (109) |

We exploit the identity

| (110) |

valid for any matrix , obtaining

| (111) | |||||

where, since are diagonal, we have

Now, taking into account that and that the fixed points are defined by (because of the multivariate banality of the mean Corollary 3.7), we find

| (112) | |||||

| (113) |

thus, substituting into (111)

| (114) |

where each element of is equal to . Substituting into (108) we recognize the series expansion of . This leads to the final expression

| (115) |

Taking into account the second Jacobian component, i.e. the Jacobian of the decay functions, finally yields the result to be proved

| (116) |

∎

Finally, let us turn to the non-commutative map Jacobian, for which we have the following result.

Proposition 3.11.

Let be the PEDS built on a non-commutative map, considering the decay functions assumed to be -eligible. Then, for any ordering , the Jacobian matrix, evaluated at the equilibrium , is given by

| (117) |

Proof.

As we aim at demonstrating the independence of the Jacobian from the ordering (at the fixed points), we consider the formalism introduced in Section 2.1. Given the matrix map definition (26), we use the general form

| (118) |

that forms the basis for the evaluation of the first Jacobian component. Writing, to simplify the notation, , we compute the derivatives

| (119) |

Applying identity (110) to matrix we find

| (120) |

At this point, the multivariate banality of the mean Corollary 3.7 proves that the PEDS fixed points are given by , therefore for the mean field projector we find

| (121) |

Substituting this expression into the derivative of the ordered product yields

| (122) |

where and are vectors made of the -th column and row of , respectively. As the matrix products all collapse into the same quantity, the previous expression is independent of the ordering . Therefore, we can write

| (123) |

This means that, for any ordering , at the fixed point the sum of the terms for the derivative with respect to the elements of each extended variable leads, once the factor in (100) is taken into account, to a scalar factor corresponding to , i.e. the corresponding element of the Jacobian of the target system multiplied by matrix .

Concerning the second part of the Jacobian, i.e. the derivatives of , the result is a block diagonal matrix of the type .

In summary, even in this case the full Jacobian at the fixed points follows the block structure claimed in the proposition. ∎

We are now ready to discuss the Jacobian spectral properties irrespective of the chosen map, as the matrix is the same for all of the three maps that we consider. For the sake of simplicity, we limit the discussion to the standard decay functions , so that

| (124) |

that can be cast in the following form

| (125) |

where is the Jacobian of the target system functions evaluated at the target system equilibrium , denotes the matrix Kronecker product (according to the definition, the block of the matrix Kronecker product is ), and

The Jacobian (125) is a generalization of the scalar case (see Proposition 3.8). We are interested in assessing the properties of its eigenvalues.

For the time being, we discuss the simpler case , and since it will be useful later, let us think of this Jacobian for a general , only to then consider as a special case. (To motivate this generalized discussion, we briefly anticipate the result of an upcoming paper, in which we show that (126) is in fact the first term of the representation obtained for the Jacobian of a general projector . This general case is, however, beyond the scope of this paper.) Let us therefore discuss the spectrum of

| (126) |

where is the identity matrix of size . Being the eigenvalues of , the eigenvalues of are given by , and are the eigenvalues of for the whole matrix. Let us assume that has unitary eigenvalues ( for ), while the remaining eigenvalues are equal to . Then matrix has eigenvalues [27]

1. , with multiplicity

2. with multiplicity .

As a consequence, if , then a stable equilibrium point for the target system is still stable in the PEDS embedding. Similarly, if the equilibrium point is unstable, or if at least some values exist for which , then it becomes a saddle point for the extended system, being characterized by negative eigenvalues and positive eigenvalues. Thus, the following classification holds

| (127) |

This analysis suggests that the presence of “barriers” in the target system, characterized by unstable equilibria, can (in principle) be overcome in the PEDS embedding via their transformation into saddle points in the extended system.
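The eigenvalue count can be illustrated numerically. The sketch below assumes that the Jacobian in (126) has the Kronecker form J = J_f ⊗ Ω − α I_n ⊗ (I_N − Ω), with J_f the n×n target Jacobian and Ω the N×N uniform mean field projector; the symmetric matrix `Jf` is a hypothetical example with one negative and one positive eigenvalue. The spectrum does not depend on the ordering convention of the Kronecker product.

```python
import numpy as np

def vector_peds_jacobian(Jf, omega, alpha):
    """Assumed form J = kron(Jf, Omega) - alpha * kron(I_n, I_N - Omega)."""
    n, N = Jf.shape[0], omega.shape[0]
    return np.kron(Jf, omega) - alpha * np.kron(np.eye(n), np.eye(N) - omega)

N, alpha = 4, 0.5
omega = np.ones((N, N)) / N            # rank-1 uniform projector
Jf = np.array([[-1.0, 0.3],            # hypothetical target Jacobian:
               [0.3, 2.0]])            # one stable and one unstable direction
J = vector_peds_jacobian(Jf, omega, alpha)

eigs = np.linalg.eigvalsh(J)           # J is symmetric in this example
target = np.linalg.eigvalsh(Jf)
print("target eigenvalues:", target)
print("PEDS eigenvalues:  ", np.round(eigs, 12))
print("positive:", int(np.sum(eigs > 0)), "negative:", int(np.sum(eigs < 0)))
```

In this example the extended Jacobian inherits the two eigenvalues of `Jf` once each (the projector has rank 1) and acquires n(N − 1) = 6 eigenvalues equal to −α, so the unstable direction of the target system appears as a single positive eigenvalue of a saddle.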

3.5 Dynamical ordering-equivalence for the uniform mean field projector

The uniform mean field projector has various properties that are interesting per se. In particular, we wish to show here that not only the fixed points, but also the embedding dynamics is ordering independent.

For this purpose, consider a PEDS of the form

and for arbitrary -eligible decay functions. Given the PEDS above, the standard non-commutative matrix embedding is given by

| (128) |

We prove the following

Proposition 3.12.

The quantity

is independent of the ordering , for any .

Proof.

The proof relies on the following observation. We have in general that

| (129) |

with . The previous result can be easily shown as follows

| (130) | |||||

Because of (129), we can always write the following

| (131) |

where function is scalar. To gain an intuition about the scalar , consider for instance . Using (129), the previous expression can be written as

| (132) |

so that . At this point we have

| (133) | |||||

which follows from the fact that the scalar variables do commute. ∎

Proposition 3.12 is important because it implies the following

Corollary 3.13.

Dynamical ordering independence for the uniform mean field projector. For any analytic functions , we have

| (134) | |||||

or, alternatively, the uniform mean field PEDS are ordering independent.

Proof.

The proof follows directly from the fact that any analytic function can be written in the form of a series expansion as in Proposition 3.12. ∎

This implies essentially that for any dynamical system, we can write the dynamics with the most convenient ordering, without affecting the dynamics.
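The mechanism behind the ordering independence can be verified directly: for the rank-1 uniform projector Ω, any sandwich Ω A Ω equals a scalar multiple of Ω, namely (1ᵀA1/N) Ω, so products of such sandwiches commute even when the matrices themselves do not. The sketch below checks this for two random matrices; the names `A`, `B` and `scalar_factor` are illustrative. When A = diag(x), the scalar reduces to the mean of the entries of x.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 6
omega = np.ones((N, N)) / N            # uniform mean field projector
A = rng.normal(size=(N, N))
B = rng.normal(size=(N, N))

def scalar_factor(M):
    """Scalar s such that Omega @ M @ Omega == s * Omega, i.e. 1^T M 1 / N."""
    return M.sum() / M.shape[0]

# The sandwich collapses onto the projector
print(np.allclose(omega @ A @ omega, scalar_factor(A) * omega))

# Consequently the two orderings coincide, although A and B do not commute
order_ab = omega @ A @ omega @ B @ omega
order_ba = omega @ B @ omega @ A @ omega
print(np.allclose(order_ab, order_ba), np.allclose(A @ B, B @ A))
```

This is only a check of the underlying linear-algebra identity, not of the full statement of Proposition 3.12.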

4 Numerical Examples

We present here some numerical examples of application of the PEDS procedure.

4.1 Implementation remarks

For the sake of implementation, it would be convenient to define the PEDS transformation without having to evaluate the Taylor expansion, as for the theoretical developments in the previous Sections. This can be easily carried out for factorized vector target systems (or linear combinations of factorized terms of the same type). For such factorized target systems, the matrix map can be built as the function (and or similar expressions).

The question is therefore how to efficiently evaluate such matrix functions. This can be done defining the matrix maps as the Taylor expansion evaluated in matrix . We can write

| (135) |

where always exists since is diagonal. Notice that (135) defines a similarity transformation, i.e. it conserves the spectrum of the similar matrices. An important point is to verify that if the spectrum of is real and is diagonal, then the spectrum of is also real. This can be shown using the fact that, based on the definition of the Cayley polynomial and on the determinant properties, the eigenvalue problem for is equivalent to the generalized eigenvalue problem , assuming invertible. Then, a proof similar to the spectral theorem shows that if and are symmetric and real, then . This guarantees that an extension to the complex field of the scalar target system functions is not required. In fact, (135) implies

| (136) |

Since is symmetric, exists such that

where the real matrix is made of the elements of the spectrum of . As a result, we can write

| (137) |

where

| (138) |

Thus, evaluating the matrix maps boils down to the knowledge of the eigenvalues and eigenvectors of . The question is whether these two must be evaluated at every time step, as is a dynamical variable: unfortunately this is the case.
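A minimal sketch of this evaluation strategy is given below. It assumes that the matrix map is f(ΩX) with X = diag(x) and, for simplicity, that all entries of x are strictly positive, so that X^{1/2} is real; ΩX is then similar to the symmetric matrix S = X^{1/2} Ω X^{1/2}, which can be diagonalized with a symmetric eigensolver. The function name `matrix_map` and the test function are hypothetical.

```python
import numpy as np

def matrix_map(f, omega, x):
    """Evaluate f(Omega @ diag(x)) for x > 0 through the symmetric similar matrix."""
    s = np.sqrt(x)
    S = (s[:, None] * omega) * s[None, :]   # X^{1/2} Omega X^{1/2}, symmetric
    lam, U = np.linalg.eigh(S)              # real spectrum, orthogonal eigenvectors
    fS = (U * f(lam)) @ U.T                 # U f(Lambda) U^T
    return (fS * s[None, :]) / s[:, None]   # X^{-1/2} f(S) X^{1/2}

N = 4
omega = np.ones((N, N)) / N
x = np.array([0.5, 1.0, 1.5, 2.0])
f = lambda z: z**3 - z                      # illustrative polynomial target function

via_eig = matrix_map(f, omega, x)
M = omega @ np.diag(x)
direct = M @ M @ M - M                      # same polynomial evaluated by matrix powers
print(np.allclose(via_eig, direct))
```

Since x changes along the trajectory, a routine of this kind would have to be called at every integration step, in line with the remark above.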

4.2 Uniform mean field projector

We now provide several examples to show the applications of the theory developed in this paper.

4.2.1 One dimensional potential

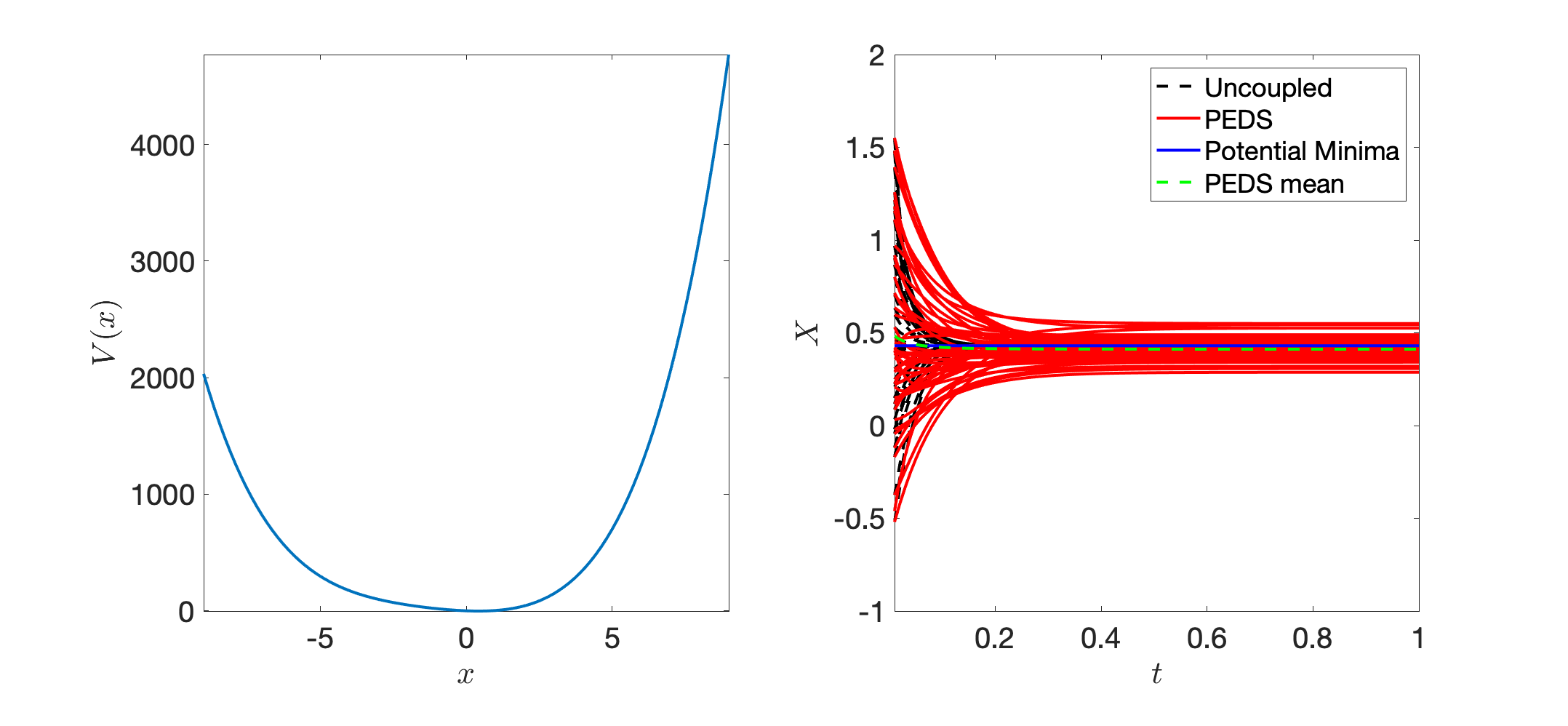

Our first example is a one dimensional nonlinear dynamical system, written as:

| (139) |

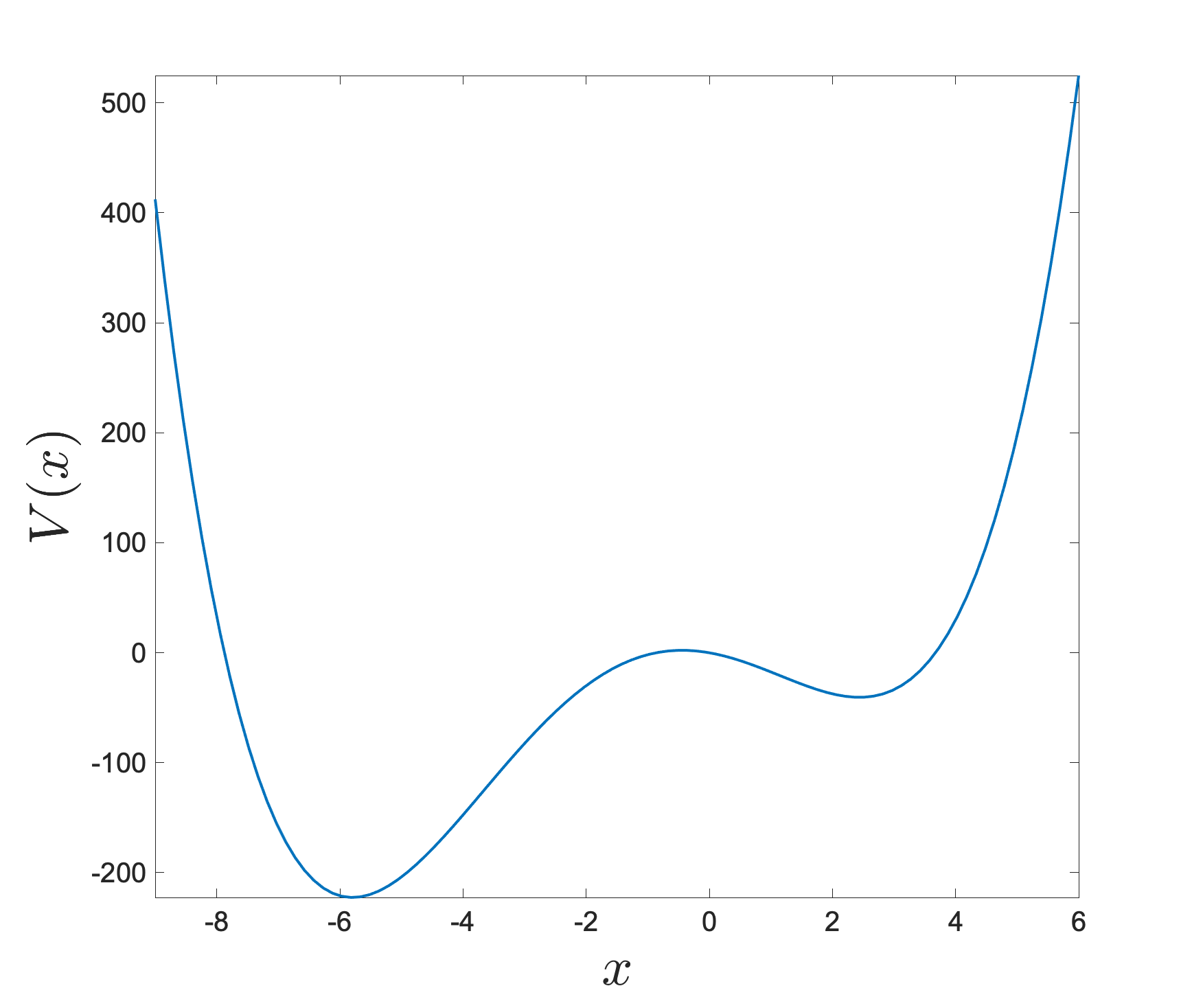

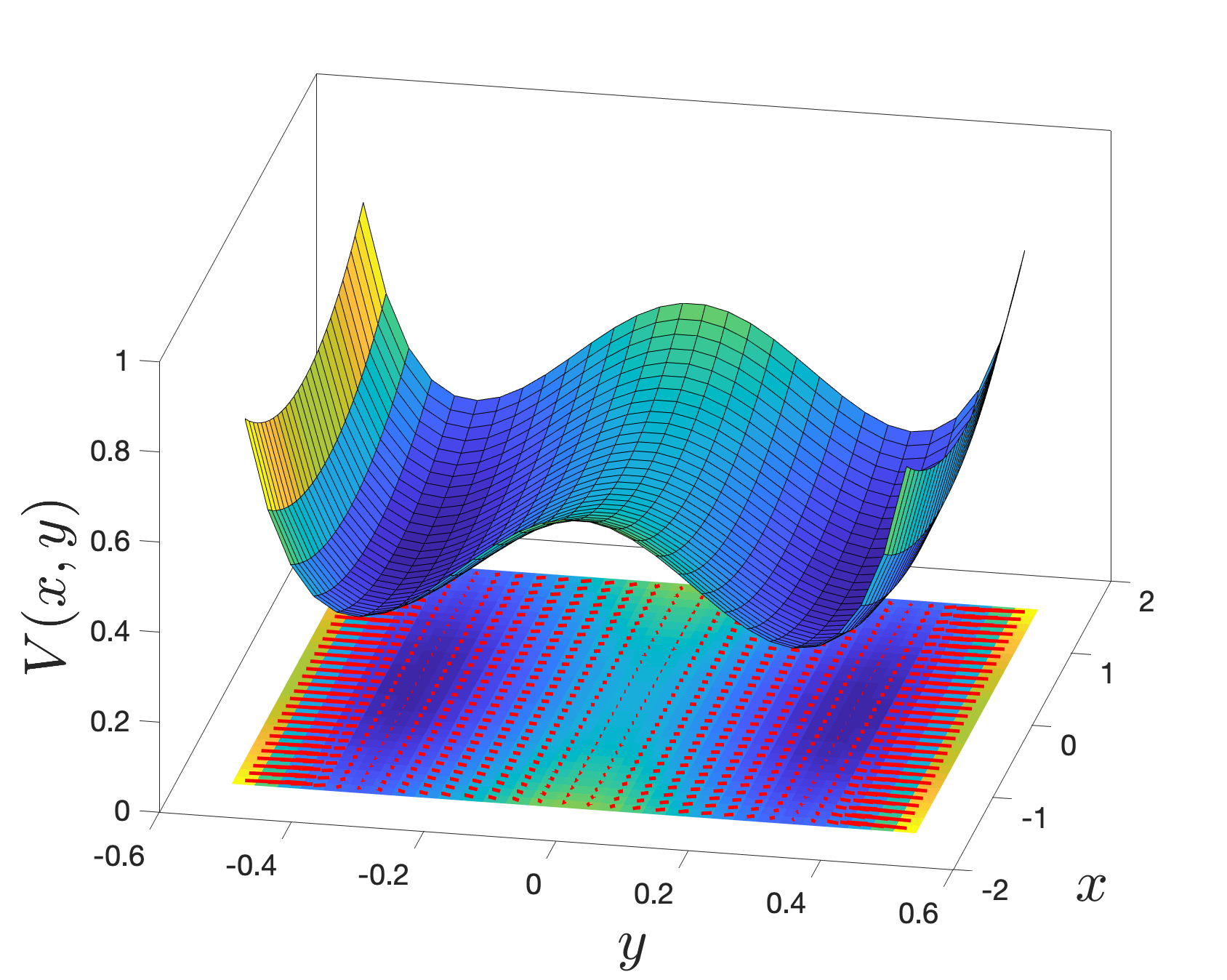

Let us thus analyze numerically the PEDS , with a potential of the form

| (140) |

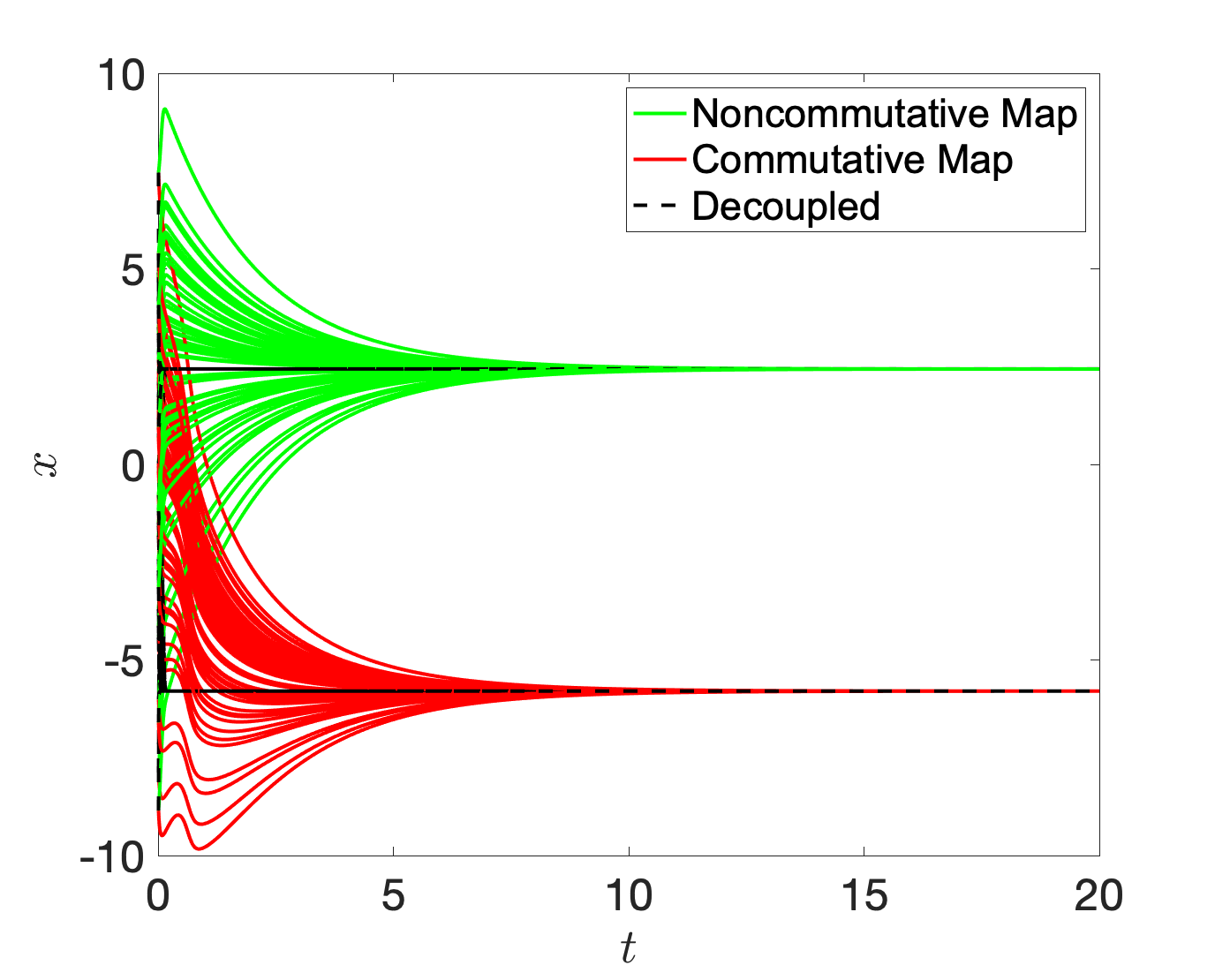

for a set of parameters for which two minima are present, as shown in Fig. 4. First, we compare the standard commutative and non-commutative maps. The difference is shown in Fig. 5 for identical initial conditions.

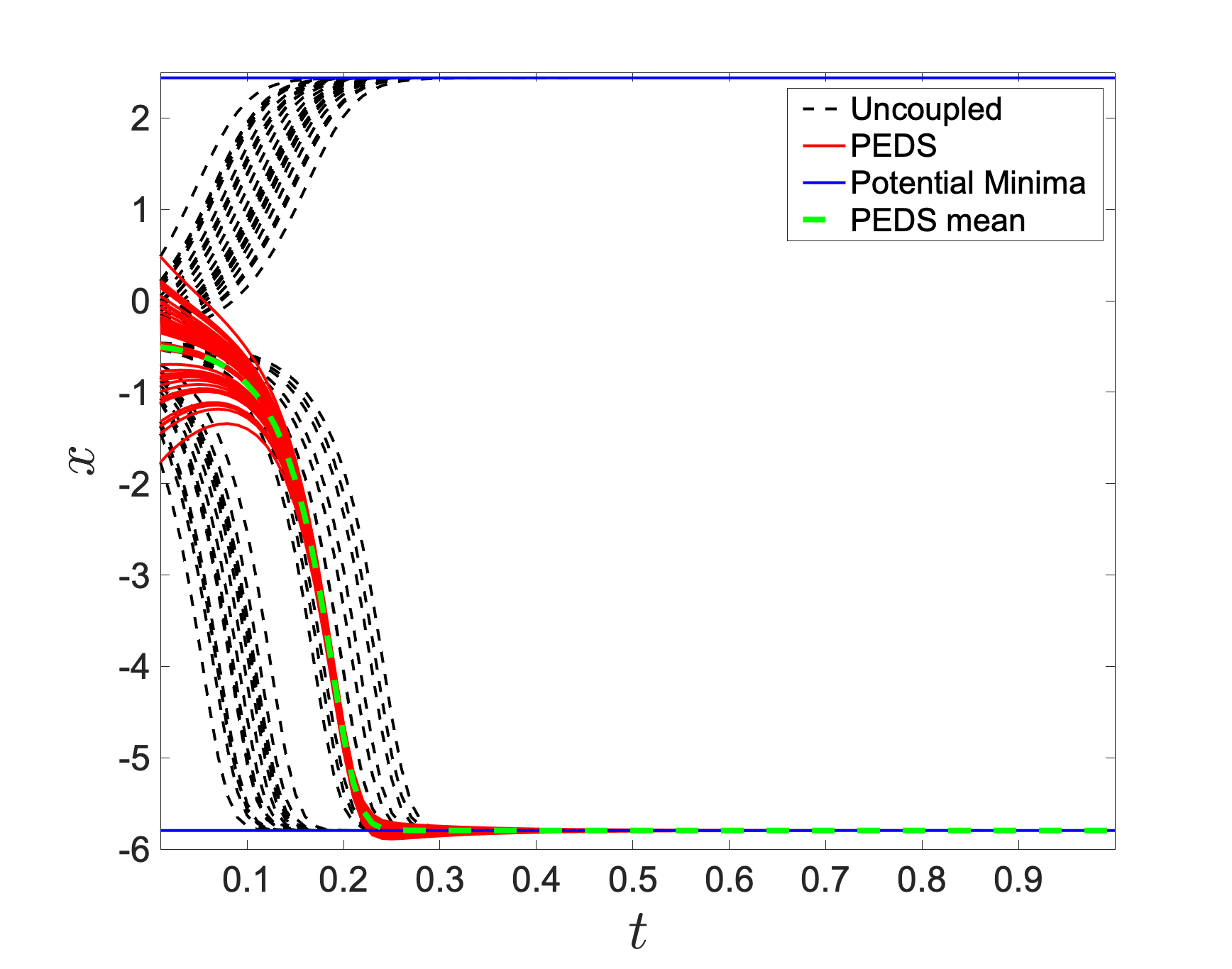

The associated PEDS is given by the differential system

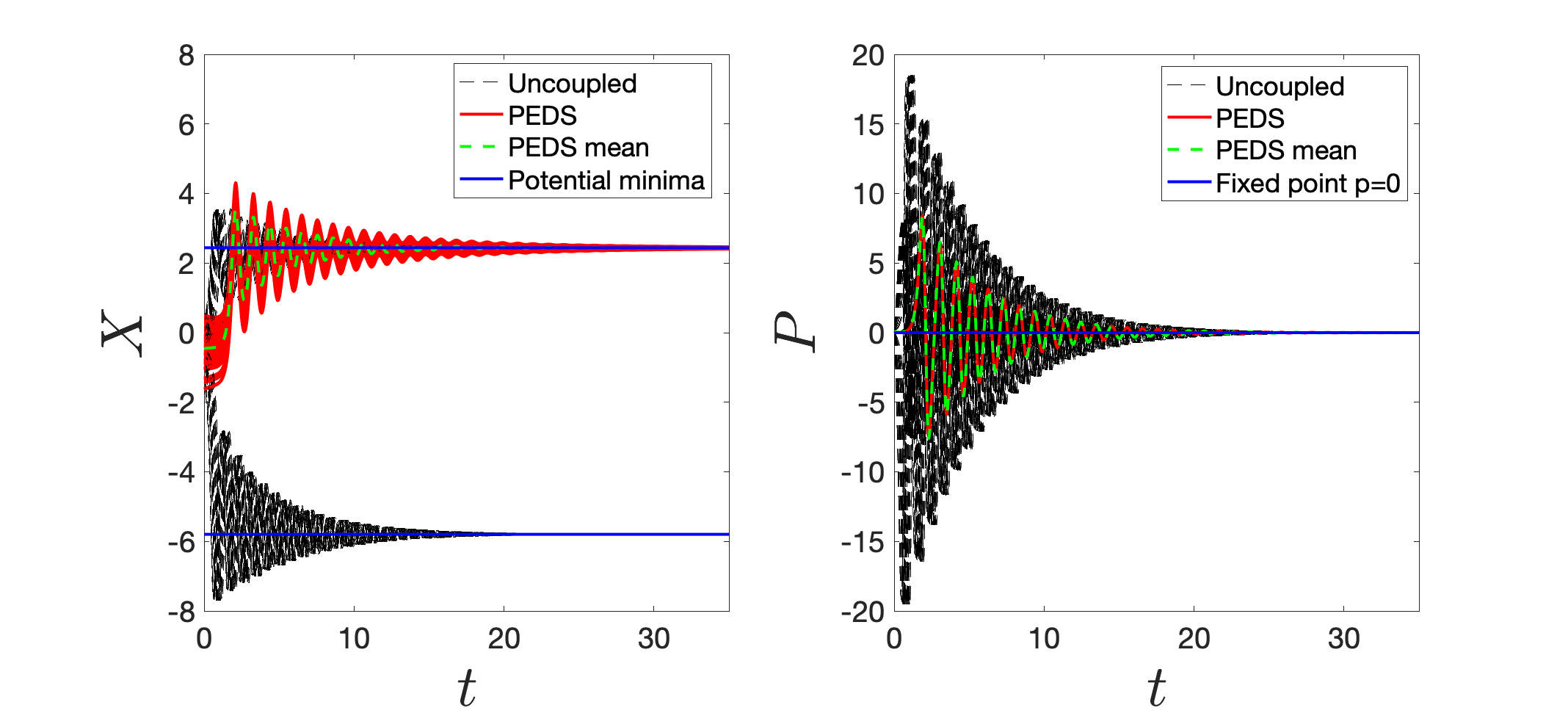

| (141) | |||||

We integrate numerically, using a simple Euler scheme with Gaussian-distributed initial conditions around the potential maximum, at , both the target system and the PEDS embedding with the standard non-commutative map. The PEDS trajectories all reach the global minimum of the potential , while the uncoupled trajectories split between the two stable equilibria.
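A minimal Euler sketch of such a comparison follows. It rests on two assumptions that are not taken from the equations above: the tilted double-well potential V(x) = x⁴/4 − x²/2 + 0.1x is a hypothetical stand-in for (140), and the uniform mean field PEDS is assumed to reduce to dx_i/dt = f(m) − α(x_i − m), with m the mean of the components and f = −dV/dx.

```python
import numpy as np

def f(x):
    """Hypothetical force f = -dV/dx for V(x) = x**4/4 - x**2/2 + 0.1*x."""
    return -x**3 + x - 0.1

def peds_rhs(x, alpha):
    """Assumed uniform mean field PEDS: mean-driven force plus decay towards the mean."""
    m = x.mean()
    return f(m) - alpha * (x - m)

def euler(rhs, x0, dt, steps):
    """Explicit Euler integration of dx/dt = rhs(x)."""
    x = x0.copy()
    for _ in range(steps):
        x = x + dt * rhs(x)
    return x

rng = np.random.default_rng(1)
N, alpha, dt, steps = 20, 1.0, 0.01, 5000
x0 = 0.1 + 0.1 * rng.normal(size=N)     # Gaussian cloud near the potential maximum

x_peds = euler(lambda x: peds_rhs(x, alpha), x0, dt, steps)
x_free = euler(f, x0, dt, steps)        # N uncoupled copies of the target system

print("PEDS spread:", np.ptp(x_peds), "common value:", x_peds.mean())
print("uncoupled end points:", np.unique(np.round(x_free, 6)))
```

In this sketch the PEDS components collapse onto a single trajectory driven by the mean and end at one equilibrium of the target system, whereas each uncoupled copy relaxes to the minimum of its own basin of attraction.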

4.2.2 Vector target system

As an example of vector target system, we consider a two-dimensional dynamical system embedded with the standard non-commutative map. The target system is:

| (142) | |||||

| (143) |

where

| (144) |

which is characterized by two local minima,. The equations of motion define the gradient descent dynamics

| (145) | |||||

| (146) |

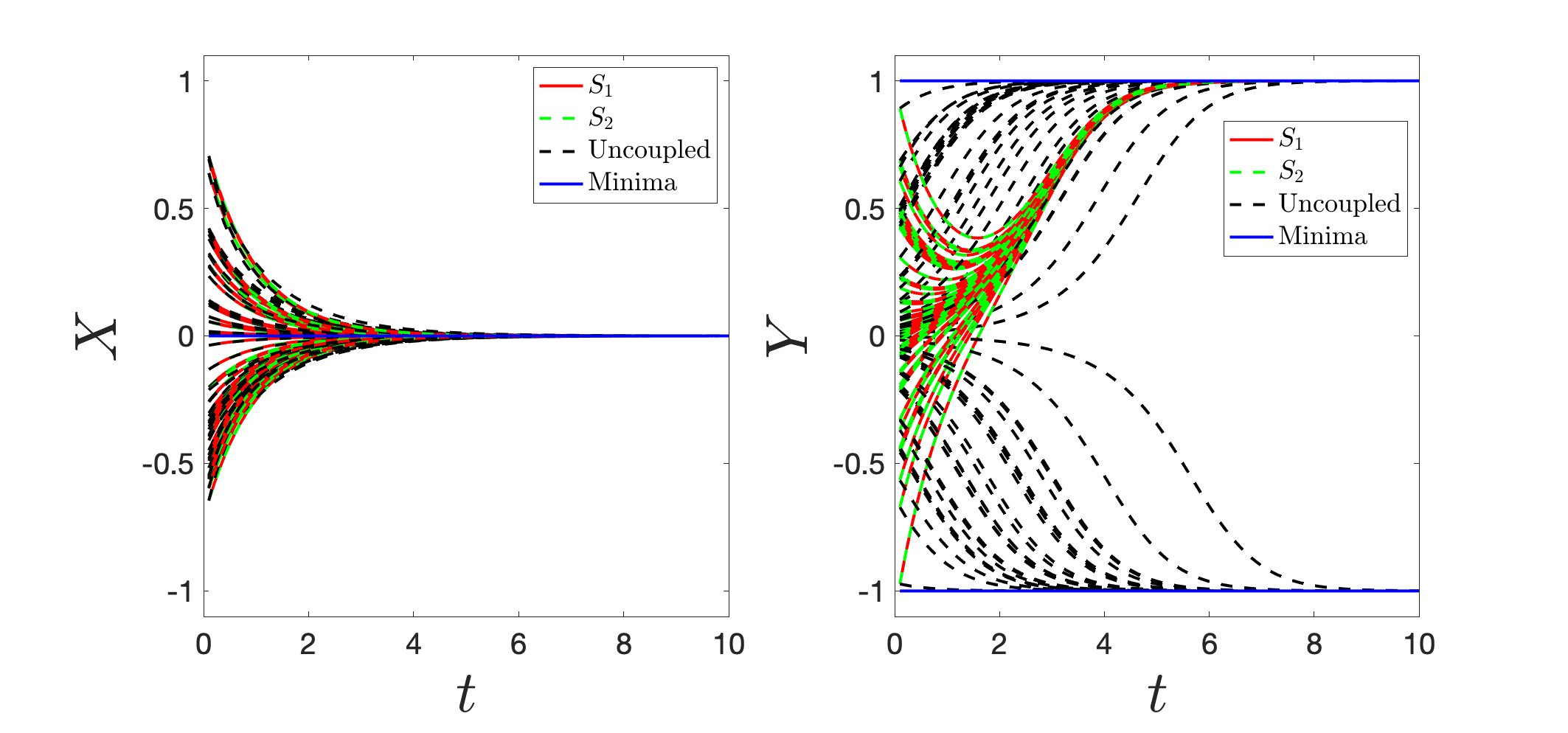

The interest in this example lies in the fact that the PEDS equations of motion depend on the ordering prescription considered. We discuss here the two cases defined below:

| (147) | |||||

| (148) |

where

| (149) | |||||

| (150) |

where since . Thus, choosing one versus the other is equivalent to a different ordering choice.

We embed this system of equations via and , obtaining

| (151) | |||||

| (152) |

where is the label for . The numerical solutions are shown in Fig. 8 using and . The two dynamic behaviours are essentially identical, as per Corollary 3.13. Interestingly, even if in general , for this example we can work out the full details leading to the independence on the ordering.

First, let us note that . Using this expression, we can write

therefore

| (153) |

We can now apply again the same formula

| (154) |

yielding, after taking into account the multiplication times

| (155) |

In other words, we have shown that

| (156) |

i.e. the equivalence of the dynamics of and .

4.2.3 Hamiltonian equations with dissipation

As a third example, let us consider another two-dimensional vector target system: the description of a dissipative Hamiltonian system for a single particle of mass . The target system reads

| (157) | |||||

| (158) |

where denotes the dissipation and we define the force . Following the prescription of the previous sections, we write the PEDS

The extended system equations are given by

| (159) | |||||

| (160) |

which is thus a set of equations. Let us focus on the potential defined in (140). The results are shown in Fig. 9.

4.3 Beyond the uniform mean field projector

This paper is focused mostly on the uniform mean field projection . Before concluding, we wish to numerically simulate also the case of a PEDS with a different projection operator. Let us consider a PEDS where , where is a random square matrix (uniformly distributed on ) of size . The scalar target dynamical system we are interested in is again

| (161) |

with the potential in (140), choosing parameters , , and that guarantee a single potential minimum, as shown in Fig. 10 (left). We then consider the PEDS , with , and follow the observable . The results are shown in Fig. 10 (right). The PEDS embedding converges also in this case to the potential absolute minimum, thus suggesting that a generalization of the results of this paper to arbitrary projectors is possible.

As a last comment, one of the main motivations for this study is that in circuits, conservation laws can be expressed in terms of projector operators. An example is the volatile but (almost) ideal memristor. A resistor with memory can be described, at the lowest level of approximation for a current controlled device, by an effective dynamical resistance depending on an internal parameter . In this sense, memristors are approximately described by the functional form , where are the boundary resistances, and . We assume that the internal memory parameter evolves according to a simple equation of the form . The parameters and are the decay constant and the effective activation voltage per unit time, respectively. For a recent paper which inspired this study, consider [30], where transitions between effective minima of a lower dimensional potential were observed. Using Ohm’s law, we define voltage , so as to obtain a normalized equation for

| (162) |

where and , with in the physically relevant cases, and as an effective potential, where is the voltage applied to the circuit, and is a normalized quantity with units of inverse time.

The dynamics of a single memristor (162) is fully characterized by the gradient following the dynamics of the effective potential

| (163) |

with ; the constant also acts as the learning rate in (162).

For a network of memristors, the differential equation for is a set of coupled ODEs of the form [23]:

| (164) |

where . The matrix is the projection operator on the vector space of cycles of , the graph representing the circuit [23], and is, as discussed in the Introduction, a mathematical consequence of Kirchhoff’s conservation laws. Now, we note that we can write (164) as:

| (165) |

which is exactly in the form of a PEDS, with a standard decay function. Thus, the results of [30] can be interpreted as the relaxation of the system towards the minima defined by the embedding function. If , then using the results of this paper we know that the potential (163) determines the effective minima of the system. However, in order to justify the presence of the rumbling transitions shown in [30], a deeper understanding of the PEDS properties for a general projector is required.

5 Conclusions and perspective

In the present paper we presented and studied a map between dynamical systems of size and dynamical systems in a higher number of variables. This is the first of a series of papers formally investigating the projective embeddings of dynamical systems (PEDS) paradigm that we defined here. The purpose of this work was to formally show the properties of this type of embeddings, within the context of a particular projector matrix. As we have seen, their structure is such that for long times, the asymptotic equilibria of the target dynamical system can be recovered.

We have discussed in particular the case of the uniform mean field projector operator . For this choice, we have been able to prove analytically that the asymptotic equilibria are strictly connected to those of the original system. Aside from establishing the formalism, this paper also established some exact results about how the embedding changes the properties of the critical points of the dynamics, including the cases of unstable equilibria and saddle points.

Specifically, we have studied the embedding of dimensional dynamical systems in -dimensional systems. The purpose of such an embedding is to modify the nature of the fixed points of the dynamics, i.e. those satisfying . In particular, we have shown that stable and saddle type fixed points retain their properties, while unstable fixed points become saddles. This observation justifies future work in this direction, in particular exploiting types of decay functions, matrix embeddings and projectors different from those considered in this contribution. It is worth mentioning that a follow-up of this work is in preparation, in which we discuss the behavior of PEDS for general projectors; many of the results on the Jacobian obtained in this paper do actually apply also in the general case [PEDS2].

An important aspect of interest of future works will be to focus on how to further modify the spectral properties of the fixed points, i.e. the nature of the Jacobian once evaluated at . What we have shown in the present paper is that, for the uniform projector, the PEDS Jacobian is always symmetric, and thus characterized by real eigenvalues, that in particular are negative if the corresponding fixed point of the target system is stable. This implies that the dynamics near stable fixed points is always laminar, e.g. slowly decaying towards the fixed point. As we will see in future works, this is not the case for general projectors, for which approximate but special techniques will have to be employed.

As discussed, the spectral signature is in part inherited by the original, target dynamical system, but modified through the extended number of dimensions. The idea of generalizing the space of solutions to higher dimensions is not new. In a way, the PEDS technique is in spirit close to both Markov Chain Monte Carlo methods [28] and the notion of lifts in convex optimization [29], but is specifically developed for the fixed points of dynamical systems.

In particular, in [30] it was observed that memristive circuits have an effective lower dimensional representation in terms of an effective potential, and that they can exhibit a “rumbling” transition, i.e. a transient chaotic tunneling between local minima of a properly defined potential. As it turns out, such dynamics is only a particular case of the PEDS introduced here, in which the projector operator was given by random circuit connections.

The rumbling transition in [30] was pinpointed numerically to be due to an effective “Lyapunov force”, shown to be present in connection with the rumbling transition phenomenon. Such force was defined essentially as a deviation from a mean field theory, and we provided evidence of an athermal and novel mechanism in which barrier escapes emerge in the effective description of a multi-particle system. This paper is a continuation of that work, attempting to generalize those findings to general systems, although focusing specifically on a particular type of projector: in this case, these “Lyapunov” forces are not present. Similar yet different types of behavior were also observed previously within the context of memory-based computing (memcomputing) solutions [31, 32, 33, 34, 35, 36].

The main focus of this paper represents a first step towards a clarification of the general reasons why the introduction of hidden variables in a dynamical system can lead to transitions between local and global minima of the effective description via instabilities in the full system. Since maxima can be turned into saddle points, generically there can be no “barriers” when the target system is a gradient descent. However, as we will show formally in future works, in order to obtain the rumbling transitions, one has to go beyond the uniform mean field approximation and study a more general type of projector.

Clearly, the projective embedding studied in this paper can be employed in a variety of dynamical systems, including all sorts of gradient-based dynamics, with applicability to machine learning and neural networks. These applications will also be the subject of future studies. In particular, we hope that the introduction of “hidden variables” in dynamical systems [37] can be further investigated for the purpose of machine learning and optimization applications [38]. In general, the study of transient chaos in dynamical systems and optimization [39, 40] is an interesting area of research with possible applications also in memristor-based algorithms [41].

Acknowledgments. The work of F.C. was carried out under the auspices of the NNSA of the U.S. DoE at LANL under Contract No. DE-AC52-06NA25396, and in particular grant PRD20190195 from the LDRD. F. C. would also like to thank W. Bruinsma for various comments and observations on the paper.

References

- [1] M. Di Ventra, Y. V. Pershin, The parallel approach, Nature Physics 9 (4) (2013) 200–202. doi:10.1038/nphys2566.

- [2] M. Di Ventra, F. L. Traversa, Perspective: Memcomputing: Leveraging memory and physics to compute efficiently, Journal of Applied Physics 123 (18) (2018) 180901. doi:10.1063/1.5026506.

- [3] S. Kirkpatrick, C. D. Gelatt, M. P. Vecchi, Optimization by simulated annealing, Science 220 (4598) (1983) 671–680. doi:10.1126/science.220.4598.671.

- [4] G. E. Santoro, Theory of quantum annealing of an ising spin glass, Science 295 (5564) (2002) 2427–2430. doi:10.1126/science.1068774.

- [5] C. Baldassi, R. Zecchina, Efficiency of quantum vs. classical annealing in nonconvex learning problems, Proceedings of the National Academy of Sciences 115 (7) (2018) 1457–1462. doi:10.1073/pnas.1711456115.

- [6] J. L. Hennessy, D. A. Patterson, A new golden age for computer architecture, Communications of the ACM 62 (2) (2019) 48–60. doi:10.1145/3282307.

- [7] S. K. Vadlamani, T. P. Xiao, E. Yablonovitch, Physics successfully implements lagrange multiplier optimization, Proceedings of the National Academy of Sciences 117 (43) (2020) 26639–26650. doi:10.1073/pnas.2015192117.

- [8] F. L. Traversa, M. Di Ventra, Universal memcomputing machines, IEEE Transactions on Neural Networks and Learning Systems 26 (11) (2015) 2702–2715. doi:10.1109/tnnls.2015.2391182.

- [9] B. Sutton, K. Y. Camsari, B. Behin-Aein, S. Datta, Intrinsic optimization using stochastic nanomagnets, Scientific Reports 7 (1). doi:10.1038/srep44370.

- [10] F. Böhm, G. Verschaffelt, G. Van der Sande, A poor man’s coherent ising machine based on opto-electronic feedback systems for solving optimization problems, Nature Communications 10 (1). doi:10.1038/s41467-019-11484-3.

- [11] D. Pierangeli, G. Marcucci, C. Conti, Large-scale photonic ising machine by spatial light modulation, Physical Review Letters 122 (21) (2019) 213902. doi:10.1103/physrevlett.122.213902.

- [12] G. Csaba, W. Porod, Coupled oscillators for computing: A review and perspective, Applied Physics Reviews 7 (1) (2020) 011302. doi:10.1063/1.5120412.

- [13] H. Goto, K. Endo, M. Suzuki, Y. Sakai, T. Kanao, Y. Hamakawa, R. Hidaka, M. Yamasaki, K. Tatsumura, High-performance combinatorial optimization based on classical mechanics, Science Advances 7 (6). doi:10.1126/sciadv.abe7953.

- [14] M. Dorigo, T. Stützle, Ant colony optimization, MIT Press, Cambridge, Mass, 2004.

- [15] A. M. Turing, The Essential Turing: Seminal Writings in Computing, Logic, Philosophy, Artificial Intelligence, and Artificial Life Plus the Secrets of Enigma, Oxford University Press, 2004. URL https://www.ebook.de/de/product/3612004/alan_m_turing_the_essential_turing_seminal_writings_in_computing_logic_philosophy_artificial_intelligence_and_artificial_life_plus_the_secrets_of_eni.html

- [16] D. J. C. MacKay, Information Theory, Inference and Learning Algorithms, Cambridge University Press, 2003. URL https://www.ebook.de/de/product/3259882/david_j_c_mackay_information_theory_inference_and_learning_algorithms.html

- [17] D. Barber, Bayesian Reasoning and Machine Learning, Cambridge University Press, 2019. URL https://www.ebook.de/de/product/13930073/david_barber_bayesian_reasoning_and_machine_learning.html

- [18] J. D. Lee, M. Simchowitz, M. I. Jordan, B. Recht, Gradient descent only converges to minimizers, in: V. Feldman, A. Rakhlin, O. Shamir (Eds.), 29th Annual Conference on Learning Theory, Vol. 49 of Proceedings of Machine Learning Research, PMLR, Columbia University, New York, New York, USA, 2016, pp. 1246–1257. URL https://proceedings.mlr.press/v49/lee16.html

- [19] C. Jin, P. Netrapalli, M. I. Jordan, Accelerated gradient descent escapes saddle points faster than gradient descent, in: S. Bubeck, V. Perchet, P. Rigollet (Eds.), Proceedings of the 31st Conference On Learning Theory, Vol. 75 of Proceedings of Machine Learning Research, PMLR, 2018, pp. 1042–1085. URL https://proceedings.mlr.press/v75/jin18a.html

- [20] O. Bournez, A. Pouly, A survey on analog models of computation, in: Theory and Applications of Computability, Springer International Publishing, 2021, pp. 173–226. doi:10.1007/978-3-030-59234-9_6.

- [21] L. Chua, Memristor-the missing circuit element, IEEE Transactions on Circuit Theory 18 (5) (1971) 507–519. doi:10.1109/tct.1971.1083337.

- [22] D. B. Strukov, G. S. Snider, D. R. Stewart, R. S. Williams, The missing memristor found, Nature 453 (7191) (2008) 80–83. doi:10.1038/nature06932.

- [23] F. Caravelli, F. L. Traversa, M. Di Ventra, The complex dynamics of memristive circuits: Analytical results and universal slow relaxation, Physical Review E 95 (2) (2017) 022140. doi:10.1103/physreve.95.022140.

- [24] F. Caravelli, The mise en scéne of memristive networks: effective memory, dynamics and learning, International Journal of Parallel, Emergent and Distributed Systems 33 (4) (2017) 350–366. doi:10.1080/17445760.2017.1320796.

- [25] F. Caravelli, Locality of interactions for planar memristive circuits, Physical Review E 96 (5) (2017) 052206. doi:10.1103/physreve.96.052206.

- [26] A. Zegarac, F. Caravelli, Memristive networks: From graph theory to statistical physics, EPL (Europhysics Letters) 125 (1) (2019) 10001. doi:10.1209/0295-5075/125/10001.

- [27] D. S. Bernstein, Scalar, Vector, and Matrix Mathematics: Theory, Facts, and Formulas - Revised and Expanded Edition, Princeton University Press, 2018. URL https://www.ebook.de/de/product/28983788/dennis_s_bernstein_scalar_vector_and_matrix_mathematics_theory_facts_and_formulas_revised_and_expanded_edition.html

- [28] S. Asmussen, P. W. Glynn, Stochastic Simulation: Algorithms and Analysis, Stochastic Modelling and Applied Probability, Vol. 57, Springer, 2007.

- [29] H. Fawzi, J. Gouveia, P. A. Parrilo, J. Saunderson, R. R. Thomas, Lifting for simplicity: Concise descriptions of convex sets, arXiv:2002.09788.

- [30] F. Caravelli, F. Sheldon, F. L. Traversa, Global minimization via classical tunneling assisted by collective force field formation, accepted for publication in Sci. Adv. arxiv:2102.03385 (2021).

- [31] F. Caravelli, J. Carbajal, Memristors for the curious outsiders, Technologies 6 (4) (2018) 118. doi:10.3390/technologies6040118.

- [32] F. Sheldon, F. L. Traversa, M. Di Ventra, Taming a nonconvex landscape with dynamical long-range order: Memcomputing ising benchmarks, Physical Review E 100 (5) (2019) 053311. doi:10.1103/physreve.100.053311.

- [33] F. L. Traversa, M. Di Ventra, Polynomial-time solution of prime factorization and NP-complete problems with digital memcomputing machines, Chaos: An Interdisciplinary Journal of Nonlinear Science 27 (2) (2017) 023107. doi:10.1063/1.4975761.

- [34] F. L. Traversa, C. Ramella, F. Bonani, M. Di Ventra, Memcomputing NP-complete problems in polynomial time using polynomial resources and collective states, Science Advances 1 (6) (2015) e1500031. doi:10.1126/sciadv.1500031.

- [35] M. D. Ventra, F. L. Traversa, I. V. Ovchinnikov, Topological field theory and computing with instantons, Annalen der Physik 529 (12) (2017) 1700123. doi:10.1002/andp.201700123.

- [36] S. R. B. Bearden, H. Manukian, F. L. Traversa, M. Di Ventra, Instantons in self-organizing logic gates, Physical Review Applied 9 (3) (2018) 034029. doi:10.1103/physrevapplied.9.034029.

- [37] D. Bohm, A suggested interpretation of the quantum theory in terms of ”hidden” variables. II, Physical Review 85 (2) (1952) 180–193. doi:10.1103/physrev.85.180.

- [38] B. Poole, S. Lahiri, M. Raghu, J. Sohl-Dickstein, S. Ganguli, Exponential expressivity in deep neural networks through transient chaos, in: D. Lee, M. Sugiyama, U. Luxburg, I. Guyon, R. Garnett (Eds.), Advances in Neural Information Processing Systems, Vol. 29, Curran Associates, Inc., 2016, pp. 3360–3368. URL https://proceedings.neurips.cc/paper/2016/file/148510031349642de5ca0c544f31b2ef-Paper.pdf

- [39] M. Ercsey-Ravasz, Z. Toroczkai, The chaos within sudoku, Scientific Reports 2 (1). doi:10.1038/srep00725.

- [40] T. Tél, The joy of transient chaos, Chaos: An Interdisciplinary Journal of Nonlinear Science 25 (9) (2015) 097619. doi:10.1063/1.4917287.

- [41] K. Yang, Q. Duan, Y. Wang, T. Zhang, Y. Yang, R. Huang, Transiently chaotic simulated annealing based on intrinsic nonlinearity of memristors for efficient solution of optimization problems, Science Advances 6 (33) (2020) eaba9901. doi:10.1126/sciadv.aba9901.