Proposal Distribution Calibration for Few-Shot Object Detection

Abstract

Adapting object detectors learned with sufficient supervision to novel classes under low-data regimes is appealing yet challenging. In few-shot object detection (FSOD), the two-step training paradigm is widely adopted to mitigate severe sample imbalance, i.e., holistic pre-training on base classes followed by partial fine-tuning in a balanced setting with all classes. Since unlabeled instances are suppressed as background in the base training phase, the learned RPN is prone to producing biased proposals for novel instances, resulting in dramatic performance degradation. Worse, extreme data scarcity aggravates the proposal distribution bias, hindering the RoI head from evolving toward novel classes. In this paper, we introduce a simple yet effective proposal distribution calibration (PDC) approach that enhances the localization and classification abilities of the RoI head by recycling the localization ability acquired in base training and enriching high-quality positive samples for semantic fine-tuning. Specifically, we sample proposals based on the base proposal statistics to calibrate the distribution bias and impose additional localization and classification losses on the sampled proposals to quickly extend the base detector to novel classes. Experiments on the commonly used Pascal VOC and MS COCO datasets, with state-of-the-art performance, justify the efficacy of our PDC for FSOD. Code is available at github.com/Bohao-Lee/PDC.

Index Terms:

Distribution Calibration, Few-shot Object Detection, Object Detection, Proposal Distribution Calibration

I Introduction

With the prohibitive cost of large-scale dataset collection and annotation and the desire to imitate human cognition, deep learning under low-data regimes has attracted growing attention. To simulate data-scarce scenarios, such as identifying rare diseases, species, or military targets, few-shot learning (FSL) has been proposed to pursue fast knowledge adaptation from base classes with sufficient labeled data to novel classes with limited instances and annotations. Coupling classification and localization tasks, few-shot object detection (FSOD) is a more practical yet challenging problem that is far from well studied.

To avoid overfitting under extreme data imbalance, FSOD methods [1] typically adopt a two-step training paradigm of base training and fast adapting. TFA [5] further reveals that novel classes can be well represented based on base features, inspiring actively studied topics in the spirit of maximizing base knowledge usage. Specifically, Halluc [22] introduces a data hallucinator via an EM-style training procedure based on the base detector to improve the model variation of novel classes by transferring the shared within-class variation from base classes. FADI [10] imitates specific base class feature space for novel class embedding by explicitly associating similar base-novel class pairs, then scatters them by disentangling the base and novel classifiers.

Since novel instances may be suppressed as background during base training, the learned RPN is typically inadequate for recognizing novel instances. Worse, the biased RPN proposals can hardly be rectified by the RoI head with limited novel annotations, resulting in sub-optimal classification and localization adaptation [26]. This intrinsic contradiction is far from satisfactorily solved, which essentially hinders the development of FSOD. We therefore ask whether there is a “free lunch” [17] that can remedy it.

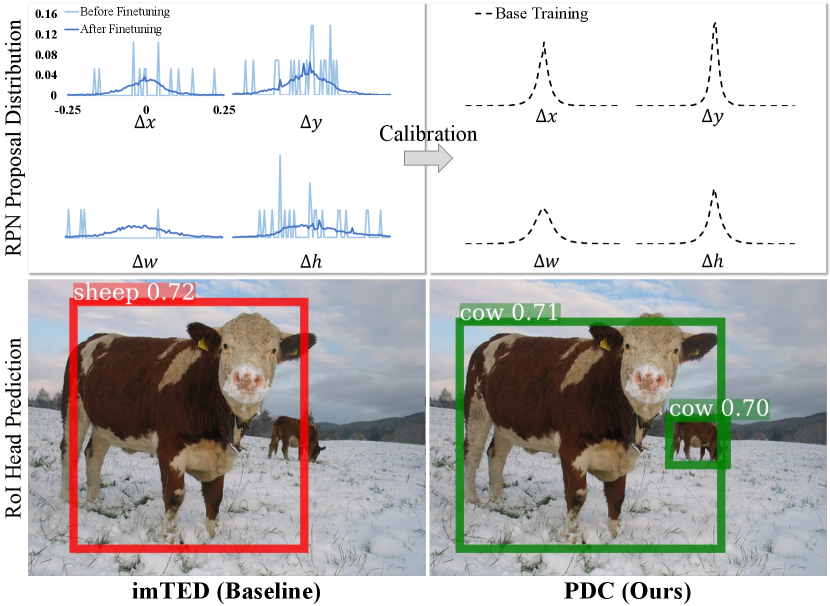

To investigate this issue, we plot the bias distributions of RPN proposals against ground truths in both learning stages and observe a noticeable shift, Fig. 1 (top). It seems impracticable to reverse the deviation with scarce and low-quality novel proposals. Since comprehensive coverage of the training data typically ensures better model generalization, we mitigate the data scarcity in fine-tuning by reusing the proposal distribution statistics from base training to crop sufficient high-quality novel proposals. In this way, the augmented novel proposals involve less context and artifact noise, enabling sufficient and correct assimilation of novel semantics. Also, as localization is relatively independent of classification, the de-biased high-IoU proposals facilitate a milder repurposing of the RoI head by recycling the localization ability acquired in the base training stage. Additionally, we impose objective functions, i.e., a supervised contrastive loss [44], on the sampled proposal set to enhance the classification and localization adaptation of the detection head toward novel classes.

In summary, we propose a simple yet effective proposal distribution calibration (PDC) approach to alleviate the critical target contradiction and data scarcity in the current two-step FSOD paradigm. With its plug-and-play nature, the proposed PDC can be easily inserted into seminal two-stage few-shot detectors to enhance both classification and localization generalization. With theoretical and experimental analyses, we justify the efficacy of our PDC and achieve new SOTA performance on the commonly used benchmarks, i.e., Pascal VOC 2007 [41], VOC 2012 [42], and MS COCO [43].

II Related Works

II-A Few-Shot Learning

FSL methods can be roughly divided into three categories: 1) the meta-learning-based ones [36, 35, 34, 39] typically design specific models or optimization for fast model adaption; 2) the metric learning-based ones [28, 29, 30, 31, 32, 33, 7] pursue optimizing the embedding manifold for feature discrimination enhancement; 3) the data augmentation-based ones [38, 37] target augmenting novel data to improve the model generalizability.

As FSL shares similar intrinsic challenges with FSOD while being relatively simpler, seminal FSL works continually inspire the development of FSOD [40]. Concretely, MVT [16] transfers factors of variation across classes via semantic transformations to alleviate the data requirement. DC [17] goes a step further by economically migrating distribution statistics from base classes to their most similar novel classes for data sampling. These valuable explorations encourage in-depth attempts in FSOD to exploit the base knowledge at a low cost.

II-B Few-Shot Object Detection

General object detection methods can be commonly categorized as one-stage and two-stage detectors, which are continually evolving with the tremendous progress in deep neural architectures [21]. One-stage detectors [18, 19] locate and classify objects simultaneously to achieve better efficiency. Two-stage detectors [20] propose suspicious objectness regions, then attempt to discriminate and rectify each proposal for superior accuracy. However, these achievements are severely data-hungry for generalization, which limits their real-world application.

Few-shot object detection, initially inspired by meta-learning [1, 2], targets expanding the object vocabulary from label-rich scenarios to the open world with limited supervised examples. TFA [5] advocates keeping the backbone learned during base training frozen and fine-tuning only the box regressor and classifier. Such a simple two-step training scheme reports surprisingly strong performance, which encourages the FSOD community to maximize the usage of base knowledge for fast adaptation.

Concretely, subsequent fine-tuning-based few-shot detectors typically delve into optimizing embedding space via metric learning [8, 7], relation reasoning [27], and feature interaction [11], alleviating the essential objective contradiction across training stages via base knowledge retention [13], detection head decoupling [9, 10], and annotation rectification [26], and augmenting novel instances via object pyramids [6], data hallucination [22], etc. In this work, we intend to bridge the semantic gap in repurposing the class-aware RoI head for novel classes by applying PDC on the class-agnostic RPN with base proposal statistics as a “free lunch.”

III Proposal Distribution Calibration

III-A Background

Task definition

Given a dataset, the objects are divided into base and novel classes. Each base class contains sufficient bounding-box annotations, while each novel class has only limited ones. Following FSRW [1], few-shot detectors usually adopt a two-step paradigm to avoid overfitting. Specifically, in the base-training stage, the whole detector is sufficiently trained on the base-class dataset with only base-class annotations. In the fine-tuning stage, the detection head is fine-tuned on both base and novel classes in a balanced data setting, while the backbone is kept frozen. In the balanced fine-tuning set, each class has $K$ annotated instances. When the number of novel classes is $N$, this is the so-called $N$-way $K$-shot FSOD setting.
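The balanced fine-tuning set described above can be illustrated with a minimal sketch. The flat `(image_id, class_name, box)` annotation layout and the helper name `build_k_shot_set` are hypothetical choices made for this example only; actual benchmarks follow the fixed splits of FSRW [1].

```python
import random
from collections import defaultdict


def build_k_shot_set(annotations, k, seed=0):
    """Keep at most k annotated instances per class.

    `annotations` is a list of (image_id, class_name, box) tuples; this flat
    layout is a hypothetical format chosen only for the example.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for ann in annotations:
        by_class[ann[1]].append(ann)

    subset = []
    for cls in sorted(by_class):
        pool = list(by_class[cls])
        rng.shuffle(pool)
        subset.extend(pool[:k])  # classes with fewer than k instances keep all
    return subset


# Toy annotations: one label-rich class and two scarce classes.
toy = [
    (i, cls, (0, 0, 10, 10))
    for cls, n in (("dog", 40), ("bird", 3), ("bus", 1))
    for i in range(n)
]
few_shot = build_k_shot_set(toy, k=3)
counts = {c: sum(a[1] == c for a in few_shot) for c in ("dog", "bird", "bus")}
print(counts)
```

Capping every class at the same budget is what removes the base/novel imbalance during fine-tuning, at the price of discarding most base annotations.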

Baseline detector

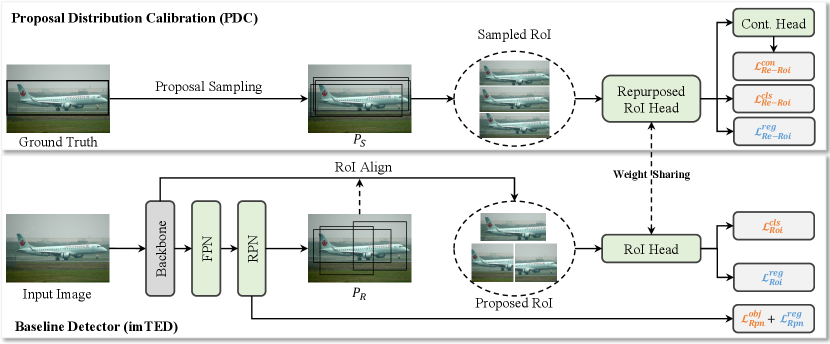

We use imTED [12] as our baseline detector, which is built by holistically introducing the MAE [15] pre-trained model into Faster R-CNN [20], Fig. 2 (lower branch). It uses the MAE encoder as the detector’s backbone and the MAE decoder as the RoI head. For proposal generation, imTED uses an FPN and an RPN following the settings defined in Faster R-CNN. For proposal feature extraction, imTED skips the FPN and simply extracts RoI features from the feature map of the last backbone layer. In this way, imTED maximally utilizes the pre-trained parameters of MAE to improve the generalization ability of object representation. The proposal features are integrally migrated from the pre-trained MAE to imTED, which is observed to be important for few-shot object detection.

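Note that our PDC only attaches an additional branch to imTED after base training, so we focus on the fine-tuning stage modifications in the following. Denote the detection head parameters of imTED as $\theta$. The baseline object detection loss can be defined as

$$\mathcal{L}_{det}(\theta) = \mathcal{L}_{reg}^{roi} + \mathcal{L}_{cls}^{roi} + \mathcal{L}_{reg}^{rpn} + \mathcal{L}_{obj}^{rpn}, \tag{1}$$

where $\theta_{roi}$, $\theta_{rpn}$, and $\theta_{fpn}$ respectively denote the RoI, RPN, and FPN parameters, and $\theta = \{\theta_{roi}, \theta_{rpn}, \theta_{fpn}\}$. $\mathcal{L}_{reg}^{roi}$ and $\mathcal{L}_{cls}^{roi}$ are the regression and classification losses for the RoI head. $\mathcal{L}_{reg}^{rpn}$ and $\mathcal{L}_{obj}^{rpn}$ are the regression and objectness losses for the RPN head.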

III-B Proposal Sampling

Distribution statistics in base training

Given an image for base training, denote its annotations as , where ; , , and are the center position, width, and height of the - bounding-box; is the class label of . We omit for brevity.

We calculate the scale-normalized offset statistics of RPN proposals for distribution fit. Concretely, for the -th proposal () with its corresponding ground truth bounding box , define its four-dimensional offset as , where is the scale coefficient.

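According to the law of large numbers, we assume the RPN proposal offset distribution of base classes after base training is Gaussian. For simplicity, we denote it as $\mathcal{N}(\mu, \Sigma)$, where $\mu$ is the mean vector and $\Sigma = \mathrm{diag}(\sigma^2)$ is the covariance matrix. $\mu$ and $\sigma^2$ can be calculated as follows:

$$\mu = \frac{1}{n}\sum_{j=1}^{n} \delta_j, \qquad \sigma^2 = \frac{1}{n}\sum_{j=1}^{n} (\delta_j - \mu)^2, \tag{2}$$

where $\delta_j$ is the four-dimensional offset of the $j$-th proposal, $n$ is the number of proposals, and the square is taken element-wise.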
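As an illustration of how such offset statistics could be gathered, the sketch below fits a diagonal Gaussian to proposal offsets. It is not the released implementation: boxes are assumed to be `(cx, cy, w, h)` tuples, and normalizing each offset by the ground-truth width or height is an assumption standing in for the scale coefficient; `box_offset` and `fit_diagonal_gaussian` are hypothetical helper names.

```python
import statistics


def box_offset(proposal, gt):
    """Offset of a proposal relative to its ground-truth box.

    Boxes are (cx, cy, w, h). Dividing by the ground-truth width/height is an
    assumed normalization, used here only for illustration.
    """
    px, py, pw, ph = proposal
    gx, gy, gw, gh = gt
    return ((px - gx) / gw, (py - gy) / gh, (pw - gw) / gw, (ph - gh) / gh)


def fit_diagonal_gaussian(offsets):
    """Per-dimension mean and (population) variance of the offsets."""
    dims = list(zip(*offsets))
    mean = [statistics.fmean(d) for d in dims]
    var = [statistics.pvariance(d, mu) for d, mu in zip(dims, mean)]
    return mean, var


# Two toy (proposal, ground-truth) pairs.
pairs = [
    ((52.0, 49.0, 105.0, 78.0), (50.0, 50.0, 100.0, 80.0)),
    ((48.0, 51.0, 95.0, 82.0), (50.0, 50.0, 100.0, 80.0)),
]
offsets = [box_offset(p, g) for p, g in pairs]
mean, var = fit_diagonal_gaussian(offsets)
print(mean, var)
```

Only eight numbers (four means and four variances) need to be stored after base training, which is why reusing these statistics in fine-tuning comes at negligible cost.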

Distribution calibration for fine-tuning

With the Gaussian offset distribution , we can perturb the object location annotations for proposal sampling. Concretely, we sample proposals for , which all share the same category . The sample proposal set can be expressed as,

| (3) |

| (4) |

Denote the RPN proposal set as . We apply the calibrated proposal set for fine-tuning. Especially, is used to assist in repurposing the RoI head for novel classes.
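The sampling step can be sketched as perturbing each ground-truth box with Gaussian offsets. The snippet is a hedged illustration only: the `(cx, cy, w, h)` box format, the width/height-normalized offset parameterization, the helper name `sample_calibrated_proposals`, and the numeric mean and standard deviation are all assumptions, not values from the paper. The count of 50 samples per instance follows the default reported in the ablation study.

```python
import random


def sample_calibrated_proposals(gt, mean, std, num_samples, rng):
    """Perturb a ground-truth box (cx, cy, w, h) with Gaussian offsets.

    Offsets are interpreted as fractions of the ground-truth width/height,
    which is an assumed parameterization for this sketch.
    """
    gx, gy, gw, gh = gt
    proposals = []
    for _ in range(num_samples):
        dx, dy, dw, dh = (rng.gauss(m, s) for m, s in zip(mean, std))
        w = max(gw * (1.0 + dw), 1e-6)  # keep sizes strictly positive
        h = max(gh * (1.0 + dh), 1e-6)
        proposals.append((gx + dx * gw, gy + dy * gh, w, h))
    return proposals


rng = random.Random(0)
mean = [0.0, 0.0, 0.0, 0.0]
std = [0.03, 0.02, 0.04, 0.03]  # illustrative values, not from the paper
gt_box = (50.0, 50.0, 100.0, 80.0)
samples = sample_calibrated_proposals(gt_box, mean, std, 50, rng)
print(len(samples), samples[0])
```

Every sampled box inherits the class label of the ground-truth box it was drawn around, so the sampled set provides additional labeled positives for the RoI head.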

III-C Repurposed RoI Head

Classification

For a mini-batch of images, we apply supervised contrastive loss [44] on the sampled proposal set , which can be defined as

| (5) |

| (6) | ||||

where denotes the sampled proposal number of class ; , , and are contrastive head encoded RoI features of sampled proposals , , and respectively; equals 1 if the input sentence is true, otherwise 0; is the temperature hyper-parameter.

Combining the origin RoI classification loss, the updated classification loss for sampled proposals on the repurposed RoI head can be defined as

| (7) |
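For intuition, a generic supervised contrastive loss in the spirit of [44] can be sketched in plain Python as below. It is an assumption-laden illustration, not necessarily the exact form of Eq. 5-6: features are L2-normalized, every other sample in the batch acts as a contrast, and anchors without a same-class partner are skipped. The temperature of 0.2 matches the best setting reported in the ablation study.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def supervised_contrastive_loss(features, labels, temperature=0.2):
    """Mean over anchors of the negative log-likelihood of same-class pairs."""
    z = [l2_normalize(f) for f in features]
    n = len(z)
    total, num_anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor with no positive contributes nothing
        logits = {
            k: sum(a * b for a, b in zip(z[i], z[k])) / temperature
            for k in range(n)
            if k != i
        }
        log_denom = math.log(sum(math.exp(v) for v in logits.values()))
        total += -sum(logits[j] - log_denom for j in positives) / len(positives)
        num_anchors += 1
    return total / max(num_anchors, 1)


feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
loss_clustered = supervised_contrastive_loss(feats, [0, 0, 1, 1])
loss_mixed = supervised_contrastive_loss(feats, [0, 1, 0, 1])
print(loss_clustered < loss_mixed)
```

The loss is small when features of the same class already lie close together and large when same-class features are far apart, which is the pressure that shapes the embedding space of the sampled proposals.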

Localization

The sampled proposal set is also fed into the localization branch in the repurposed RoI head to recycle the localization ability endowed in the base training for generalization enhancement. The regression loss in the Re-RoI head can be calculated as

| (8) |

Hence, the total fine-tuning loss function of our PDC can be defined as

| (10) | ||||

| , |

where is a hyper-parameter to balance each loss item and set to 0.1 by default.

III-D Generalization Analysis

Assume the input proposal of the RoI head after base training is denoted by and the input proposal during fine-tuning is denoted by , where and are the corresponding proposal distributions, respectively. They are sampled from different data distributions as . Let denote proposal distribution after sufficient dataset training and is approximately equal to .

We can determine the relationship between the true risk and the empirical risk as follow:

| (11) |

where is the loss function of fine-tuning for the RoI head, and . is the number of proposals with probability at least . denotes the true risk and denotes the empirical risk for available labeled data.

Following [23], we can obtain the empirical risk upper bound as follow:

| (12) | ||||

Note that and are constants when the dataset and the model are given. As is minimized during model finetuning, Eq. 12 can be approximated as

| (13) |

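where MMD [24] is the maximum mean discrepancy term between the base-training proposal distribution $P_b$ and the fine-tuning proposal distribution $P_f$, which is given by

$$\mathrm{MMD}(P_b, P_f) = \Big\| \frac{1}{n_b}\sum_{i=1}^{n_b}\phi(p_i^b) - \frac{1}{n_f}\sum_{j=1}^{n_f}\phi(p_j^f) \Big\|_{\mathcal{H}}, \tag{14}$$

where $n_b$ and $n_f$ denote the numbers of input proposals of the RoI head during base training and fine-tuning, respectively, $\phi(\cdot)$ maps a proposal into $\mathcal{H}$, and $\mathcal{H}$ denotes the reproducing kernel Hilbert space (RKHS).

According to Eq. 14, when the mean and variance differences between the base-training and fine-tuning proposal distributions decrease, their maximum mean discrepancy is reduced at the same time. Combining Eq. 13 and Eq. 14, the empirical risk upper bound is effectively reduced.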
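The effect of calibration on the discrepancy term can be checked numerically with a small sketch. It uses the standard biased estimator of squared MMD with an RBF kernel; the kernel choice, the bandwidth `gamma`, and the synthetic offset distributions are assumptions made for illustration and are not taken from the paper.

```python
import math
import random


def rbf_kernel(a, b, gamma):
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_kernel(xs, ys, gamma):
    total = sum(rbf_kernel(a, b, gamma) for a in xs for b in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(xs, ys, gamma=50.0):
    """Biased estimate of squared MMD with an RBF kernel."""
    return (
        mean_kernel(xs, xs, gamma)
        + mean_kernel(ys, ys, gamma)
        - 2.0 * mean_kernel(xs, ys, gamma)
    )


def draw_offsets(rng, mean, std, n):
    """n synthetic four-dimensional offsets with i.i.d. Gaussian entries."""
    return [[rng.gauss(mean, std) for _ in range(4)] for _ in range(n)]


rng = random.Random(0)
base = draw_offsets(rng, 0.0, 0.03, 100)        # stands in for base-training proposals
biased = draw_offsets(rng, 0.10, 0.08, 100)     # shifted and wider, as for novel classes
calibrated = draw_offsets(rng, 0.0, 0.03, 100)  # resampled from the base statistics
mmd_biased = mmd_squared(base, biased)
mmd_calibrated = mmd_squared(base, calibrated)
print(mmd_biased > mmd_calibrated)
```

Matching the mean and variance of the fine-tuning proposals to the base statistics drives the estimate toward zero, which is the mechanism the bound relies on.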

| Proposal Sampling | Classification | Localization | Novel set 1 (AP50) | avg. | ||||||||||

| {Distribution} | {Number} | {} | {Dim.} | {} | {} | 1 | 2 | 3 | 5 | 10 | ||||

| - | - | - | - | 43.4 | 51.0 | 58.1 | 67.6 | 66.6 | - | |||||

| - | 0.2 | 128 | - | ✓ | - | - | 45.3 | 49.4 | 57.6 | 67.4 | 66.0 | -0.2 | ||

| 10 | - | ✓ | ✓ | 46.3 | 51.8 | 56.5 | 67.8 | 66.0 | +0.3 | |||||

| - | ✓ | ✓ | 47.8 | 49.3 | 60.5 | 67.3 | 66.7 | +1.0 | ||||||

| - | ✓ | ✓ | 49.3 | 50.0 | 56.4 | 69.8 | 67.2 | +1.2 | ||||||

| - | ✓ | ✓ | 47.2 | 50.4 | 57.5 | 67.9 | 67.3 | +0.9 | ||||||

| - | ✓ | ✓ | 47.8 | 47.7 | 57.5 | 68.6 | 67.0 | +0.4 | ||||||

| 20 | - | ✓ | ✓ | 46.8 | 52.7 | 57.6 | 69.5 | 66.7 | +1.3 | |||||

| 50 | - | ✓ | ✓ | 46.5 | 52.7 | 58.3 | 68.7 | 67.9 | +1.5 | |||||

| 100 | - | ✓ | ✓ | 45.4 | 51.5 | 57.8 | 69.4 | 67.3 | +0.9 | |||||

| 50 | - | ✓ | ✓ | 48.4 | 53.4 | 58.1 | 67.9 | 67.2 | +1.6 | |||||

| 50 | 0.07 | 128 | ✓ | - | ✓ | ✓ | 47.4 | 52.6 | 57.6 | 69.6 | 67.9 | +1.7 | ||

| 0.2 | ✓ | - | ✓ | ✓ | 50.1 | 52.3 | 60.2 | 70.7 | 68.4 | +3.0 | ||||

| 0.7 | ✓ | - | ✓ | ✓ | 47.8 | 51.0 | 58.4 | 69.7 | 67.9 | +1.6 | ||||

| 0.2 | 64 | ✓ | - | ✓ | ✓ | 47.4 | 57.0 | 57.8 | 69.5 | 69.2 | +2.8 | |||

| 256 | ✓ | - | ✓ | ✓ | 45.8 | 49.4 | 60.1 | 69.0 | 67.3 | +1.0 | ||||

| 128 | - | ✓ | ✓ | ✓ | 48.2 | 54.0 | 59.4 | 69.4 | 68.7 | +2.6 | ||||

| ✓ | ✓ | ✓ | ✓ | 49.6 | 48.5 | 50.7 | 69.0 | 67.9 | +1.8 | |||||

| ✓ | - | - | - | 42.4 | 49.6 | 58.5 | 68.1 | 67.6 | -0.1 | |||||

| ✓ | - | ✓ | - | 43.5 | 54.3 | 62.7 | 69.1 | 66.9 | +2.0 | |||||

| ✓ | - | - | ✓ | 45.4 | 49.0 | 58.8 | 68.0 | 66.8 | +0.3 | |||||

| 50 | 0.2 | 128 | ✓ | - | ✓ | ✓ | 50.1 | 52.3 | 60.2 | 70.7 | 68.4 | +3.0 | ||

IV Experiments

IV-A Experimental Setting

Datasets. Pascal VOC 2007 [41], VOC 2012 [42], and MS COCO [43] are commonly used for FSOD evaluation. They are typically split into fully annotated base classes and $K$-shot novel classes in accordance with the settings in FSRW [1]. Specifically, the Pascal VOC dataset is divided into three splits for cross-validation, where 5 classes are chosen as novel classes and the remaining 15 classes are base classes. The number of annotated instances $K$ is set to 1, 2, 3, 5, and 10. For MS COCO, 20 categories are chosen as novel classes with $K$ set to 10 and 30, and the remaining 60 categories are treated as base classes.

Implementation Details. We mainly conduct the ablation study and model analysis of PDC based on the baseline detector imTED [12]. imTED [12] with the ViT-S [45] backbone is implemented with PyTorch 1.8.0, mmcv 1.4.0, and mmdetection 2.11.0 on 8 NVIDIA A40 GPUs. We feed each GPU 2 images with data augmentations of random cropping, horizontal flipping, random resizing, and size normalization, and use the AdamW optimizer for both base training and fine-tuning.

| Method | Novel set 1 | | | | | Novel set 2 | | | | | Novel set 3 | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Shots | 1 | 2 | 3 | 5 | 10 | 1 | 2 | 3 | 5 | 10 | 1 | 2 | 3 | 5 | 10 |
| FSRW [ICCV2019] [1] | 14.8 | 15.5 | 26.7 | 33.9 | 47.2 | 15.7 | 15.3 | 22.7 | 30.1 | 40.5 | 21.3 | 25.6 | 28.4 | 42.8 | 45.9 |
| Meta R-CNN [ICCV2019] [3] | 19.9 | 25.5 | 35.0 | 45.7 | 51.5 | 10.4 | 19.4 | 29.6 | 34.8 | 45.4 | 14.3 | 18.2 | 27.5 | 41.2 | 48.1 |
| Viewpoint [ECCV2020] [4] | 24.2 | 35.3 | 42.2 | 49.1 | 57.4 | 21.6 | 24.6 | 31.9 | 37.0 | 45.7 | 21.2 | 30.0 | 37.2 | 43.8 | 49.6 |
| TFA w/cos [ICML2020] [5] | 39.8 | 36.1 | 44.7 | 55.7 | 56.0 | 23.5 | 26.9 | 34.1 | 35.1 | 39.1 | 30.8 | 34.8 | 42.8 | 49.5 | 49.8 |
| MPSR [ECCV2020] [6] | 41.7 | 42.5 | 51.4 | 52.2 | 61.8 | 24.4 | 29.3 | 39.2 | 39.9 | 47.8 | 35.6 | 41.8 | 42.3 | 48.0 | 49.7 |
| MPSR+PDC (Ours) | 41.6 | 44.2 | 50.8 | 56.7 | 61.5 | 31.1 | 30.6 | 41.1 | 43.1 | 48.6 | 34.6 | 43.0 | 43.0 | 49.5 | 52.2 |
| CME [CVPR2021] [7] | 41.5 | 47.5 | 50.4 | 58.2 | 60.9 | 27.2 | 30.2 | 41.4 | 42.5 | 46.8 | 34.3 | 39.6 | 45.1 | 48.3 | 51.5 |
| FSCE [CVPR2021] [8] | 44.2 | 43.8 | 51.4 | 61.9 | 63.4 | 27.3 | 29.5 | 43.5 | 44.2 | 50.2 | 37.2 | 41.9 | 47.5 | 54.6 | 58.5 |
| DeFRCN [ICCV2021] [9] | 40.2 | 53.6 | 58.2 | 63.6 | 66.5 | 29.5 | 39.7 | 43.4 | 48.1 | 52.8 | 35.0 | 38.3 | 52.9 | 57.7 | 60.8 |
| FADI [NIPS2021] [10] | 50.3 | 54.8 | 54.2 | 59.3 | 63.2 | 30.6 | 35.0 | 40.3 | 42.8 | 48.0 | 45.7 | 49.7 | 49.1 | 55.0 | 59.6 |
| FCT [CVPR2022] [11] | 49.9 | 57.1 | 57.9 | 63.2 | 67.1 | 27.6 | 34.5 | 43.7 | 49.2 | 51.2 | 39.5 | 54.7 | 52.3 | 57.0 | 58.7 |
| LVC [CVPR2022] [26] | 54.5 | 53.2 | 58.8 | 63.2 | 65.7 | 32.8 | 29.2 | 50.7 | 49.8 | 50.6 | 48.4 | 52.7 | 55.0 | 59.6 | 59.6 |
| DC [NIPS2022] [47] | 45.8 | 59.1 | 62.1 | 66.8 | 68.0 | 31.8 | 41.7 | 46.6 | 50.3 | 53.7 | 39.6 | 52.1 | 56.3 | 60.3 | 63.3 |
| imTED-S* [Arxiv2022] [12] | 43.4 | 51.0 | 58.1 | 67.6 | 66.6 | 23.2 | 26.9 | 39.4 | 44.2 | 52.7 | 49.9 | 48.8 | 56.4 | 61.4 | 61.1 |
| imTED-S+PDC (Ours) | 50.1 | 52.3 | 60.2 | 70.7 | 68.4 | 23.3 | 28.5 | 43.2 | 48.4 | 54.6 | 53.0 | 50.8 | 57.7 | 63.8 | 62.9 |
| imTED-B* [Arxiv2022] [12] | 56.8 | 64.8 | 69.4 | 80.1 | 76.8 | 37.4 | 38.1 | 59.1 | 57.6 | 60.9 | 60.9 | 59.3 | 70.0 | 73.9 | 75.7 |
| imTED-B+PDC (Ours) | 61.8 | 69.1 | 70.2 | 78.7 | 79.6 | 42.9 | 41.2 | 60.0 | 56.3 | 65.9 | 60.3 | 63.1 | 70.6 | 73.3 | 76.7 |

IV-B Ablation Study

The validity of PDC components with proper hyper-parameters on the Pascal VOC split-1 is justified in Table I. The average PDC improvement with the proposal sampling module over the baseline method is 1.6%. With contrastive learning, the performance gain further rises to 3.0%, demonstrating the significant advancement brought by our PDC.

Proposal Sampling. In Table I, we show that sampling proposals from distributions similar to that produced by the base-trained RPN, i.e., the Gaussian distribution defined in Eq. 2, ensures superior novel-class detection adaptation. Specifically, we define the optimal uniform distribution as the one of maximum union with this Gaussian, with per-dimension ranges [U(-0.055,0.055), U(-0.036,0.036), U(-0.077,0.077), U(-0.057,0.057)]. As shown in rows 3-7 of Table I, when sampling from uniform distributions with different perturbation intensities, the performance gain progressively improves as the sampling distribution approaches the optimal one, justifying the use of base training statistics to calibrate proposals for fine-tuning. From rows 5 and 8-10, one can see that with more high-quality positives sampled (up to 50 per instance), the average performance gain increases from 1.0 to 1.5, while it drops to 0.9 when too many positives break the balance of positive and negative samples for RoI head repurposing. As the Gaussian distribution reports slightly higher performance than the optimal uniform distribution, i.e., a 0.1 “avg.” gain (rows 9 and 11), we sample 50 proposals from the Gaussian distribution by default.
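To get a feel for the perturbation magnitudes involved, the per-dimension standard deviation of a uniform distribution U(-a, a) is a/√3, a standard identity. The short sketch below evaluates it for the four ranges quoted above; it only characterizes the uniform ranges and makes no claim about the fitted Gaussian parameters, which are not listed in the text.

```python
import math

# Half-widths of the four uniform ranges quoted in the ablation study.
half_widths = [0.055, 0.036, 0.077, 0.057]

# Standard deviation of U(-a, a) is a / sqrt(3).
uniform_std = [a / math.sqrt(3.0) for a in half_widths]
rounded_std = [round(s, 4) for s in uniform_std]
print(rounded_std)
```

The resulting values are a few percent of the normalized box scale, so the sampled boxes stay tightly concentrated around the ground truth.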

Contrastive Learning. Following FSCE [8], we introduce an MLP head for contrastive learning. From rows 1-2 in Table I, one can see that it is unwise to directly impose the contrastive loss on the biased RPN proposals. In contrast, according to rows 12-16, with the contrastive loss applied to the proposals sampled from the calibrated distribution, the detection performance improves consistently across different temperatures and output dimensions of the contrastive MLP head. With the best setting of temperature 0.2 and output dimension 128, we confirm again from the results in rows 13, 17, and 18 that it is better to learn discriminative representations by applying contrastive learning only to high-quality proposals from the calibrated distribution. These results underline the importance of the proposed PDC approach for current FSOD with the two-step training paradigm.

Losses for Repurposing RoI Head. As defined in Eq. 7, 8, and 9, our PDC attaches three loss terms to repurpose the RoI head for novel class detection. From rows 19–22 in Table I, the combination of the three losses on the sampled proposal set reports the best performance (+3.0 “avg.” gain), indicating that high-quality proposals with these losses facilitate the classification and localization abilities of the detector.

| Method | Shots | AP | AP50 | AP75 |
|---|---|---|---|---|
| FSRW [ICCV2019] [1] | 10 | 5.6 | 12.3 | 4.6 |
| Meta R-CNN [ICCV2019] [3] | 10 | 8.7 | 19.1 | 6.6 |
| TFA w/cos [ICML2020] [5] | 10 | 10.0 | - | 9.3 |
| Viewpoint [ECCV2020] [4] | 10 | 12.5 | 27.3 | 9.8 |
| MPSR [ECCV2020] [6] | 10 | 9.8 | 17.9 | 9.7 |
| FSCE [CVPR2021] [8] | 10 | 11.9 | - | 10.5 |
| FADI [NIPS2021] [10] | 10 | 12.2 | 22.7 | 11.9 |
| AirDet [ECCV2022] [46] | 10 | 13.0 | 23.9 | 12.4 |
| CME [CVPR2021] [7] | 10 | 15.1 | 24.6 | 16.4 |
| DeFRCN [ICCV2021] [9] | 10 | 16.8 | - | - |
| FCT [CVPR2022] [11] | 10 | 17.1 | 30.2 | 17.0 |
| DC [NIPS2022] [47] | 10 | 18.0 | - | - |
| imTED-S [Arxiv2022] [12] | 10 | 15.0 | 25.7 | 15.2 |
| imTED-S+PDC (Ours) | 10 | 15.7 | 26.8 | 15.8 |
| imTED-B [Arxiv2022] [12] | 10 | 22.5 | 36.6 | 23.7 |
| imTED-B+PDC (Ours) | 10 | 23.4 | 38.1 | 24.5 |
| FSRW [ICCV2019] [1] | 30 | 9.1 | 19.0 | 7.6 |
| Meta R-CNN [ICCV2019] [3] | 30 | 12.4 | 25.3 | 10.8 |
| TFA w/cos [ICML2020] [5] | 30 | 13.7 | - | 13.4 |
| Viewpoint [ECCV2020] [4] | 30 | 14.7 | 30.6 | 12.2 |
| MPSR [ECCV2020] [6] | 30 | 14.1 | 25.4 | 14.2 |
| FSCE [CVPR2021] [8] | 30 | 16.4 | - | 16.2 |
| FADI [NIPS2021] [10] | 30 | 16.1 | 29.1 | 15.8 |
| CME [CVPR2021] [7] | 30 | 16.9 | 28.0 | 17.8 |
| DeFRCN [ICCV2021] [9] | 30 | 21.2 | - | - |
| FCT [CVPR2022] [11] | 30 | 21.4 | 35.5 | 22.1 |
| DC [NIPS2022] [47] | 30 | 22.2 | - | - |
| imTED-S [Arxiv2022] [12] | 30 | 21.0 | 34.5 | 21.8 |
| imTED-S+PDC (Ours) | 30 | 22.1 | 35.8 | 23.4 |
| imTED-B [Arxiv2022] [12] | 30 | 30.2 | 47.4 | 32.5 |
| imTED-B+PDC (Ours) | 30 | 30.8 | 47.3 | 33.5 |

IV-C Model Analysis

In this subsection, we quantitatively and qualitatively reveal the specific advantages of our PDC based on imTED [12] with the 5-shot setting, including aspects of the RoI head’s localization and classification capabilities, embedding space, and detection results.

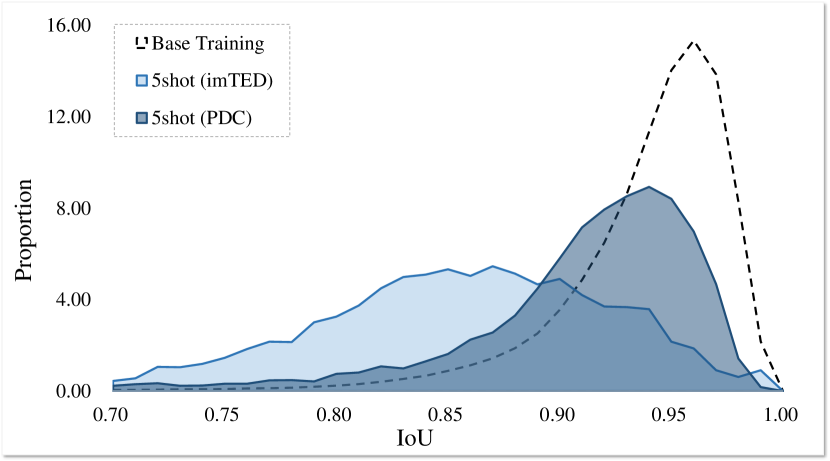

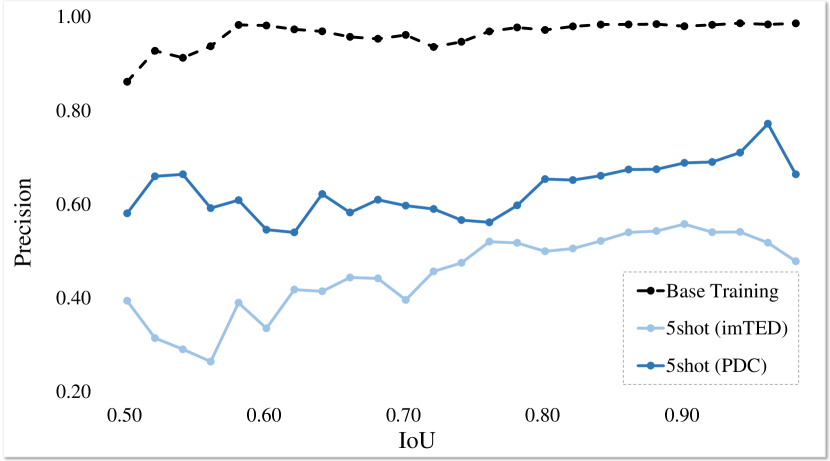

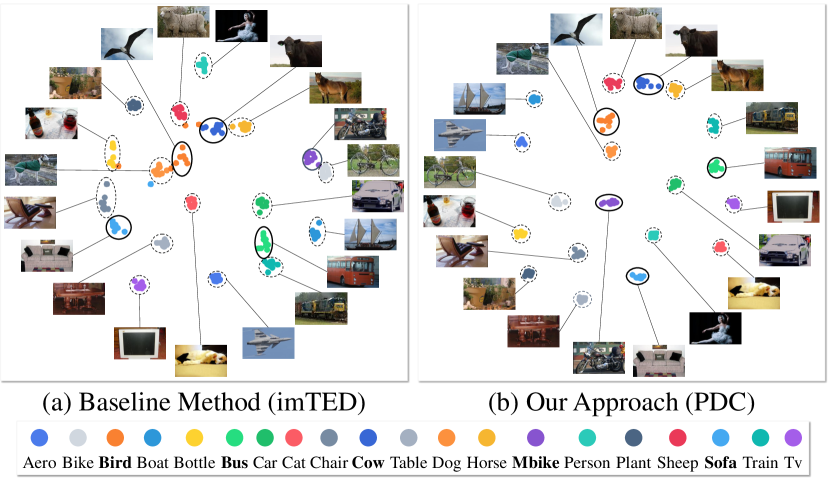

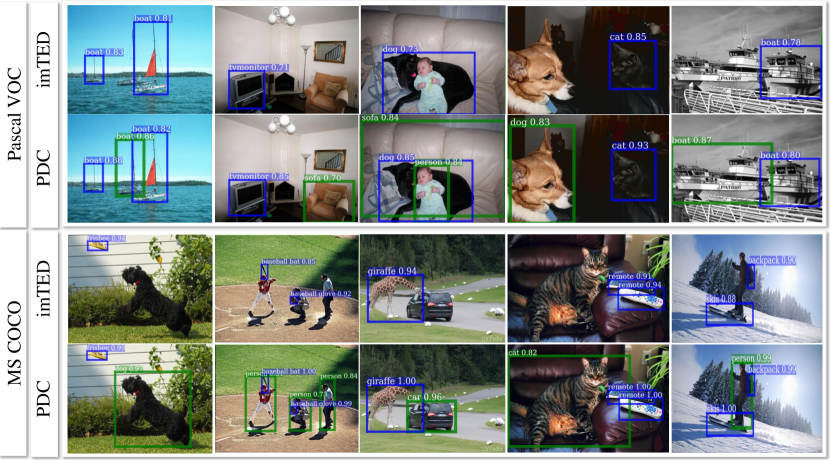

Concretely, we visualize the IoU histograms of bounding boxes predicted by the RoI head of the baseline method, i.e., imTED [12], with and without our PDC after fine-tuning in Fig. 3. PDC clearly improves the localization ability of the RoI head, which predicts more accurate bounding boxes (high IoU with the ground truth) whose distribution approaches the base class statistics produced with sufficient supervision. In Fig. 4, we examine the classification precision of the RoI head for localized instances with bounding boxes of different IoUs. PDC achieves better results than the baseline not only for high-IoU proposals, which suggests that fine-tuning with the calibrated proposal distribution yields improved classification ability that generalizes to low-IoU cases, cf. Sec. III-D. The figure thus supports the improvement in classification ability brought by PDC.
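The quantity behind such histograms is the standard intersection-over-union between a predicted box and its ground truth. A minimal sketch follows, assuming corner-format boxes `(x1, y1, x2, y2)` and ten equal-width bins; both choices, as well as the helper names, are illustrative and not taken from the paper.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def iou_histogram(ious, num_bins=10):
    """Count IoU values in equal-width bins over [0, 1]."""
    hist = [0] * num_bins
    for value in ious:
        index = min(int(value * num_bins), num_bins - 1)  # IoU of 1.0 goes to the last bin
        hist[index] += 1
    return hist


ground_truth = (0, 0, 10, 10)
predictions = [(0, 0, 10, 10), (5, 0, 15, 10), (20, 20, 30, 30)]
ious = [iou(p, ground_truth) for p in predictions]
hist = iou_histogram(ious)
print(ious, hist)
```

A detector with better localization shifts mass toward the right-hand bins of this histogram.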

In Fig. 5, we visualize the RoI feature embeddings of the baseline method and PDC after 5-shot fine-tuning. PDC produces a superior feature space with better discrimination, especially for novel classes. Fig. 6 showcases some detection results of the baseline and PDC on the Pascal VOC and MS COCO datasets. Since the calibrated proposal distribution encourages recalling novel-class instances suppressed in base training, PDC significantly reduces missed samples, i.e., green boxes in the figure.

IV-D Performance Comparison

IV-D1 Pascal VOC

In Table II, our PDC achieves average performance improvements of 1.0%, 2.8%, and 1.0% on split-1, split-2, and split-3 of Pascal VOC, respectively, with the MPSR [6] baseline detector. Compared with imTED [12] with the ViT-S backbone, PDC reports average gains of 3.0%, 2.3%, and 2.1% on the three splits. With the ViT-B backbone, PDC outperforms imTED by 2.3%, 2.6%, and 0.8% on average, respectively. The consistent advantages of PDC on different detectors justify its efficacy and generalization.
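The per-split gains quoted above are averages over the five shot settings (1, 2, 3, 5, 10) and can be reproduced from the Table II entries with a few lines of Python. The dictionary layout and variable names are arbitrary choices for this check; the numbers are copied from the table.

```python
from statistics import fmean

# AP50 on novel sets 1-3 for shots (1, 2, 3, 5, 10), copied from Table II.
baseline = {
    "MPSR": [[41.7, 42.5, 51.4, 52.2, 61.8],
             [24.4, 29.3, 39.2, 39.9, 47.8],
             [35.6, 41.8, 42.3, 48.0, 49.7]],
    "imTED-S": [[43.4, 51.0, 58.1, 67.6, 66.6],
                [23.2, 26.9, 39.4, 44.2, 52.7],
                [49.9, 48.8, 56.4, 61.4, 61.1]],
    "imTED-B": [[56.8, 64.8, 69.4, 80.1, 76.8],
                [37.4, 38.1, 59.1, 57.6, 60.9],
                [60.9, 59.3, 70.0, 73.9, 75.7]],
}
with_pdc = {
    "MPSR": [[41.6, 44.2, 50.8, 56.7, 61.5],
             [31.1, 30.6, 41.1, 43.1, 48.6],
             [34.6, 43.0, 43.0, 49.5, 52.2]],
    "imTED-S": [[50.1, 52.3, 60.2, 70.7, 68.4],
                [23.3, 28.5, 43.2, 48.4, 54.6],
                [53.0, 50.8, 57.7, 63.8, 62.9]],
    "imTED-B": [[61.8, 69.1, 70.2, 78.7, 79.6],
                [42.9, 41.2, 60.0, 56.3, 65.9],
                [60.3, 63.1, 70.6, 73.3, 76.7]],
}

# Average gain per split: mean over shots with PDC minus mean over shots without.
gains = {
    name: [round(fmean(p) - fmean(b), 1) for p, b in zip(with_pdc[name], baseline[name])]
    for name in baseline
}
print(gains)
```

Note that the averages hide some per-shot variation: a few individual entries, such as MPSR on split-1 with 1 shot, are slightly lower with PDC.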

IV-D2 MS COCO

Compared with Pascal VOC, the MS COCO dataset is more challenging, with more categories and images. In Table III, with ViT-S, imTED-S+PDC shows performance improvements of 0.7% AP, 1.1% AP50, and 0.6% AP75 in the 10-shot setting and 1.1% AP, 1.3% AP50, and 1.6% AP75 in the 30-shot setting. Gains can also be found with the ViT-B backbone (e.g., 0.9% for AP, 1.5% for AP50, and 0.8% for AP75 in the 10-shot setting), indicating that our approach generalizes well to stronger baseline models.

V Conclusion

In this paper, we alleviate the intrinsic learning objective contradiction across the two-step learning phases via a simple yet effective proposal distribution calibration (PDC) approach. PDC samples proposals via base class statistics during fine-tuning to offer additional calibrated, high-quality samples for mild novel class adaptation. With additional classification and localization losses, PDC achieves strong generalization ability toward novel classes in a plug-and-play fashion. Experimental results reported on Pascal VOC [41, 42] and MS COCO [43] with two baseline detectors, i.e., MPSR [6] and imTED [12], justify the efficacy and generalizability of our PDC. Although developed for general two-stage FSOD, we also hope our PDC can inspire creative insights for one-stage FSOD.

References

- [1] B. Kang, Z. Liu, X. Wang, F. Yu, J. Feng, and T. Darrell, “Few-shot object detection via feature reweighting,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), 2019, pp. 8420–8429.

- [2] Y.-X. Wang, D. Ramanan, and M. Hebert, “Meta-learning to detect rare objects,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), 2019, pp. 9925–9934.

- [3] X. Yan, Z. Chen, A. Xu, X. Wang, X. Liang, and L. Lin, “Meta r-cnn: Towards general solver for instance-level low-shot learning,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), 2019, pp. 9577–9586.

- [4] Y. Xiao and R. Marlet, “Few-shot object detection and viewpoint estimation for objects in the wild,” in Proc. ECCV, 2020, pp. 192–210.

- [5] X. Wang, T. E. Huang, T. Darrell, J. E. Gonzalez, and F. Yu, “Frustratingly simple few-shot object detection,” in Proc. ICML, 2020, pp. 9919–9928.

- [6] J. Wu, S. Liu, D. Huang, and Y. Wang, “Multi-scale positive sample refinement for few-shot object detection,” in Proc. ECCV, 2020, pp. 456–472.

- [7] B. Li, B. Yang, C. Liu, F. Liu, R. Ji, and Q. Ye, “Beyond max-margin: Class margin equilibrium for few-shot object detection,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2021, pp. 7363–7372.

- [8] B. Sun, B. Li, S. Cai, Y. Yuan, and C. Zhang, “Fsce: Few-shot object detection via contrastive proposal encoding,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2021, pp. 7352–7362.

- [9] L. Qiao, Y. Zhao, Z. Li, X. Qiu, J. Wu, and C. Zhang, “Defrcn: Decoupled faster r-cnn for few-shot object detection,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), 2021, pp. 8681–8690.

- [10] Y. Cao, J. Wang, Y. Jin, T. Wu, K. Chen, Z. Liu, and D. Lin, “Few-shot object detection via association and discrimination,” Proc. NIPS, vol. 34, pp. 16 570–16 581, 2021.

- [11] G. Han, J. Ma, S. Huang, L. Chen, and S.-F. Chang, “Few-shot object detection with fully cross-transformer,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2022, pp. 5321–5330.

- [12] X. Zhang, F. Liu, Z. Peng, Z. Guo, F. Wan, X. Ji, and Q. Ye, “Integral migrating pre-trained transformer encoder-decoders for visual object detection,” arXiv:2205.09613, 2022.

- [13] Z. Fan, Y. Ma, Z. Li, and J. Sun, “Generalized few-shot object detection without forgetting,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2021, pp. 4527–4536.

- [14] F. Li, H. Zhang, S. Liu, J. Guo, L. M. Ni, and L. Zhang, “Dn-detr: Accelerate detr training by introducing query denoising,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2022, pp. 13 619–13 627.

- [15] K. He, X. Chen, S. Xie, Y. Li, P. Dollár, and R. Girshick, “Masked autoencoders are scalable vision learners,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2022, pp. 16 000–16 009.

- [16] S.-J. Park, S. Han, J.-W. Baek, I. Kim, J. Song, H. B. Lee, J.-J. Han, and S. J. Hwang, “Meta variance transfer: Learning to augment from the others,” in Proc. ICML, 2020, pp. 7510–7520.

- [17] S. Yang, L. Liu, and M. Xu, “Free lunch for few-shot learning: Distribution calibration,” in Proc. ICLR, 2020.

- [18] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2016, pp. 779–788.

- [19] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, and A. C. Berg, “Ssd: Single shot multibox detector,” in Proc. ECCV, 2016, pp. 21–37.

- [20] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” Proc. NIPS, vol. 28, 2015.

- [21] N. Carion, F. Massa, G. Synnaeve, N. Usunier, A. Kirillov, and S. Zagoruyko, “End-to-end object detection with transformers,” in Proc. ECCV, 2020, pp. 213–229.

- [22] W. Zhang and Y.-X. Wang, “Hallucination improves few-shot object detection,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2021, pp. 13 008–13 017.

- [23] Z. Wang and J. Ye, “Querying discriminative and representative samples for batch mode active learning,” Trans. TKDD, vol. 9, no. 3, pp. 1–23, 2015.

- [24] K. Muandet, K. Fukumizu, B. Sriperumbudur, B. Schölkopf et al., “Kernel mean embedding of distributions: A review and beyond,” Foundations and Trends® in Machine Learning, vol. 10, no. 1-2, pp. 1–141, 2017.

- [25] Y. Li, H. Mao, R. Girshick, and K. He, “Exploring plain vision transformer backbones for object detection,” arXiv:2203.16527, 2022.

- [26] P. Kaul, W. Xie, and A. Zisserman, “Label, verify, correct: A simple few shot object detection method,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2022, pp. 14 237–14 247.

- [27] C. Zhu, F. Chen, U. Ahmed, Z. Shen, and M. Savvides, “Semantic relation reasoning for shot-stable few-shot object detection,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2021, pp. 8782–8791.

- [28] O. Vinyals, C. Blundell, T. Lillicrap, K. Kavukcuoglu, and D. Wierstra, “Matching networks for one shot learning,” Proc. NIPS, pp. 3630–3638, 2016.

- [29] F. Sung, Y. Yang, L. Zhang, T. Xiang, P. H. S. Torr, and T. M. Hospedales, “Learning to compare: Relation network for few-shot learning,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2018, pp. 1199–1208.

- [30] C. Zhang, Y. Cai, G. Lin, and C. Shen, “Deepemd: Few-shot image classification with differentiable earth mover’s distance and structured classifiers,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2020, pp. 12 200–12 210.

- [31] B. Yang, C. Liu, B. Li, J. Jiao, and Q. Ye, “Prototype mixture models for few-shot semantic segmentation,” in Proc. ECCV, vol. 12353, 2020, pp. 763–778.

- [32] B. Liu, Y. Ding, J. Jiao, J. Xiangyang, and Q. Ye, “Anti-aliasing semantic reconstruction for few-shot semantic segmentation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), June 2021.

- [33] B. Liu, J. Jiao, and Q. Ye, “Harmonic feature activation for few-shot semantic segmentation,” IEEE Trans. Image Process., vol. 30, pp. 3142–3153, 2021.

- [34] C. Finn, P. Abbeel, and S. Levine, “Model-agnostic meta-learning for fast adaptation of deep networks,” in Proc. ICML, 2017, pp. 1126–1135.

- [35] S. Ravi and H. Larochelle, “Optimization as a model for few-shot learning,” in Proc. ICLR, 2017.

- [36] Y. Wang and M. Hebert, “Learning to learn: Model regression networks for easy small sample learning,” in Proc. ECCV, 2016, pp. 616–634.

- [37] Y. Wang, R. B. Girshick, M. Hebert, and B. Hariharan, “Low-shot learning from imaginary data,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2018, pp. 7278–7286.

- [38] B. Hariharan and R. B. Girshick, “Low-shot visual recognition by shrinking and hallucinating features,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), 2017, pp. 3037–3046.

- [39] M. A. Jamal and G.-J. Qi, “Task agnostic meta-learning for few-shot learning,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), 2019, pp. 111 719–111 727.

- [40] M. Köhler, M. Eisenbach, and H.-M. Gross, “Few-shot object detection: A survey,” arXiv:2112.11699, 2021.

- [41] M. Everingham, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman, “The pascal visual object classes (voc) challenge,” International Journal of Computer Vision, vol. 88, no. 2, pp. 303–338, 2010.

- [42] M. Everingham, S. Eslami, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman, “The pascal visual object classes challenge: A retrospective,” International Journal of Computer Vision, vol. 111, no. 1, pp. 98–136, 2015.

- [43] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in Proc. ECCV, 2014, pp. 740–755.

- [44] P. Khosla, P. Teterwak, C. Wang, A. Sarna, Y. Tian, P. Isola, A. Maschinot, C. Liu, and D. Krishnan, “Supervised contrastive learning,” Proc. NIPS, vol. 33, pp. 18 661–18 673, 2020.

- [45] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly et al., “An image is worth 16x16 words: Transformers for image recognition at scale,” Proc. ICLR, 2020.

- [46] B. Li, C. Wang, P. Reddy, S. Kim, and S. Scherer, “Airdet: Few-shot detection without fine-tuning for autonomous exploration,” in Proc. ECCV, 2022, pp. 427–444.

- [47] B.-B. Gao, X. Chen, Z. Huang, C. Nie, J. Liu, J. Lai, G. Jiang, X. Wang, and C. Wang, “Decoupling classifier for boosting few-shot object detection and instance segmentation,” in Proc. NIPS, 2022.