Prostate Cancer Malignancy Detection and Localization from mpMRI Using Auto-Deep Learning: One Step Closer to Clinical Utilization

Supported by the American Cancer Society.

Abstract

Automatic diagnosis of malignant prostate cancer from mpMRI has been studied extensively in recent years. Model interpretability and domain drift have been the main roadblocks to clinical utilization. In our previous work, we trained a customized convolutional neural network on a public cohort of 201 patients, using cropped 2D patches around the region of interest as input. In this extension, cropped 2.5D slices of the prostate gland were used as input, and the optimal model was searched for in the model space using AutoKeras. Unlike our previous work, the peripheral zone (PZ) and central gland (CG) were trained and tested separately. The PZ-detector and CG-detector effectively highlighted the most suspicious slices in a sequence, which could greatly ease the workload of physicians.

Keywords:

MRI sequence input · Sub-region separation · Malignancy probability for each slice · Lesion localization.

1 Introduction

In this section, we review the literature on diagnosing prostate cancer (PCa) from medical images, the current state of the art in using deep learning algorithms to automate the diagnostic process, and the challenges in closing the gap between research and clinical utilization.

1.1 Background

Prostate cancer (PCa) is the most common cancer in men in the United States, and it is the second leading cause of cancer death among these men (https://www.cancer.org/cancer/prostate-cancer/about/key-statistics.html). The use of multiparametric magnetic resonance imaging (mpMRI) for PCa diagnosis has increased significantly over the past decade. MR sequences including T2-weighted (T2W) imaging, diffusion-weighted imaging (DWI), dynamic contrast-enhanced imaging (DCE), and MR spectroscopy have shown promise for the detection and localization of PCa. Combining these sequences into a multiparametric format has improved PCa detection and localization, as measured by area under the curve (AUC), sensitivity, specificity, and positive predictive value [2, 1]. Prostate biopsy is still considered the gold standard for determining whether a suspicious lesion is benign or malignant. However, biopsy is an invasive procedure prone to complications such as hemorrhage, dysuria, and infection. Furthermore, in a small number of cases, prostate biopsies fail to establish the diagnosis despite magnetic resonance-guided and transrectal ultrasound-guided approaches [11].

According to PI-RADS™ v2, which aims to standardize the reporting of mpMRI and to correlate imaging findings with pathology results, clinically significant cancer is defined on histopathology as Gleason score ≥ 7 (including 3+4) [10]. In addition, PCa is a multifocal disease in up to 87% of cases; therefore, the ability to distinguish malignant from benign foci within the prostate is crucial for optimal diagnosis and treatment. This has led to interest in applying machine learning and computer vision to mpMRI to obtain, non-invasively, accurate radiologic diagnoses that correlate with histopathology [8]. Unfortunately, data scarcity is one of the major challenges in applying deep learning algorithms to mpMRI interpretation, owing to tightly regulated data acquisition as well as the high cost of MRI acquisition and of labelling by medical experts. In addition, health records cannot be shared without consent because of privacy laws and related healthcare policies and regulations. Recently, efforts have been made [12, 6] to transfer knowledge learned from publicly available large-scale datasets, containing millions of natural object or scene images [3], to medical applications with small-scale data.

Compared with the recent progress in applying deep neural networks to 2D images such as chest X-rays [13] and mammograms [9], classification using prostate MRI [4, 7] faces additional challenges: relatively small patient sample sizes due to the high screening cost, and much more complex information, such as morphological, diffusion, and perfusion imaging characteristics.

1.2 Previous Work

The data used in this study originated from the SPIE-AAPM-NCI Prostate MR Gleason Grade Group Challenge [5], which aimed to develop quantitative mpMRI biomarkers for determining malignant lesions in PCa patients. The patient data had been de-identified beforehand by SPIE-AAPM-NCI and The Cancer Imaging Archive (TCIA).

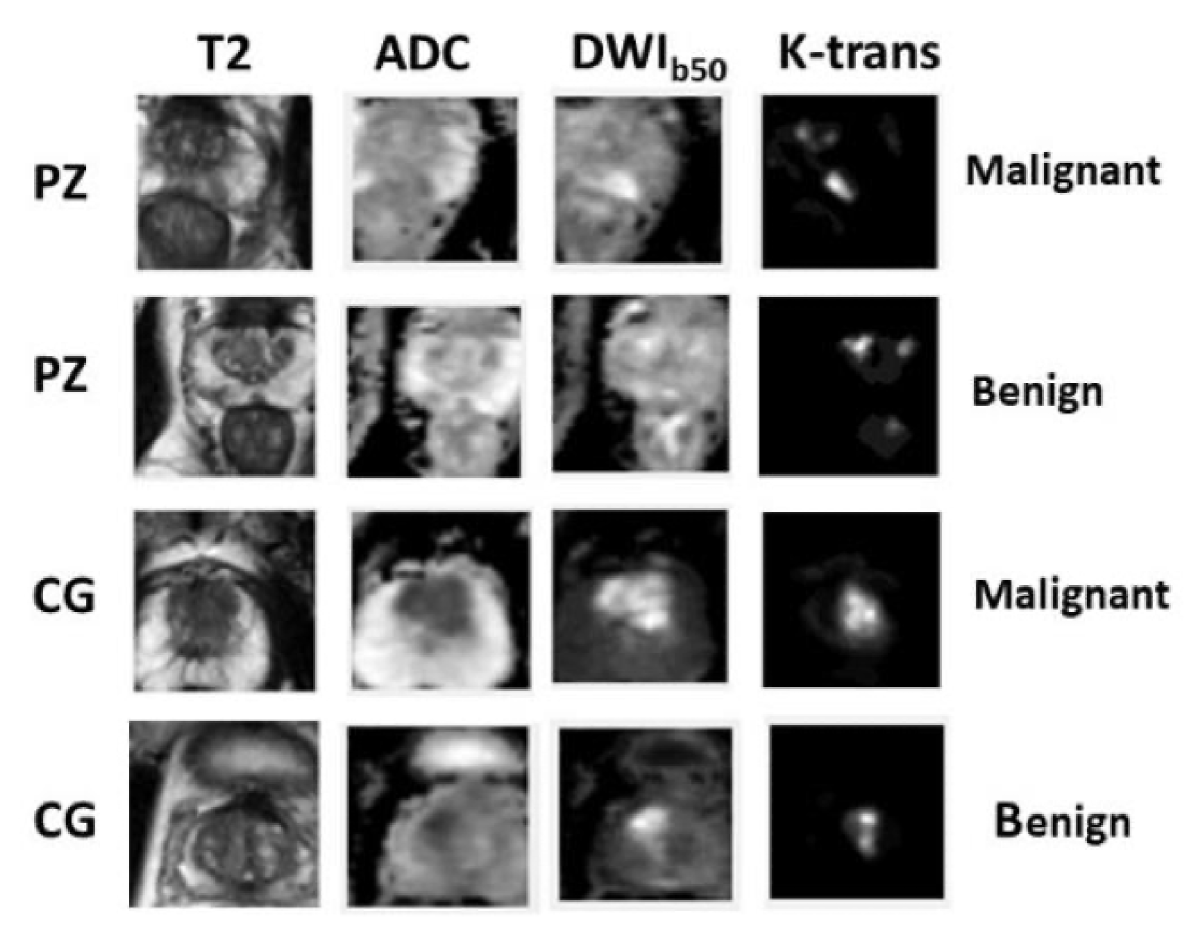

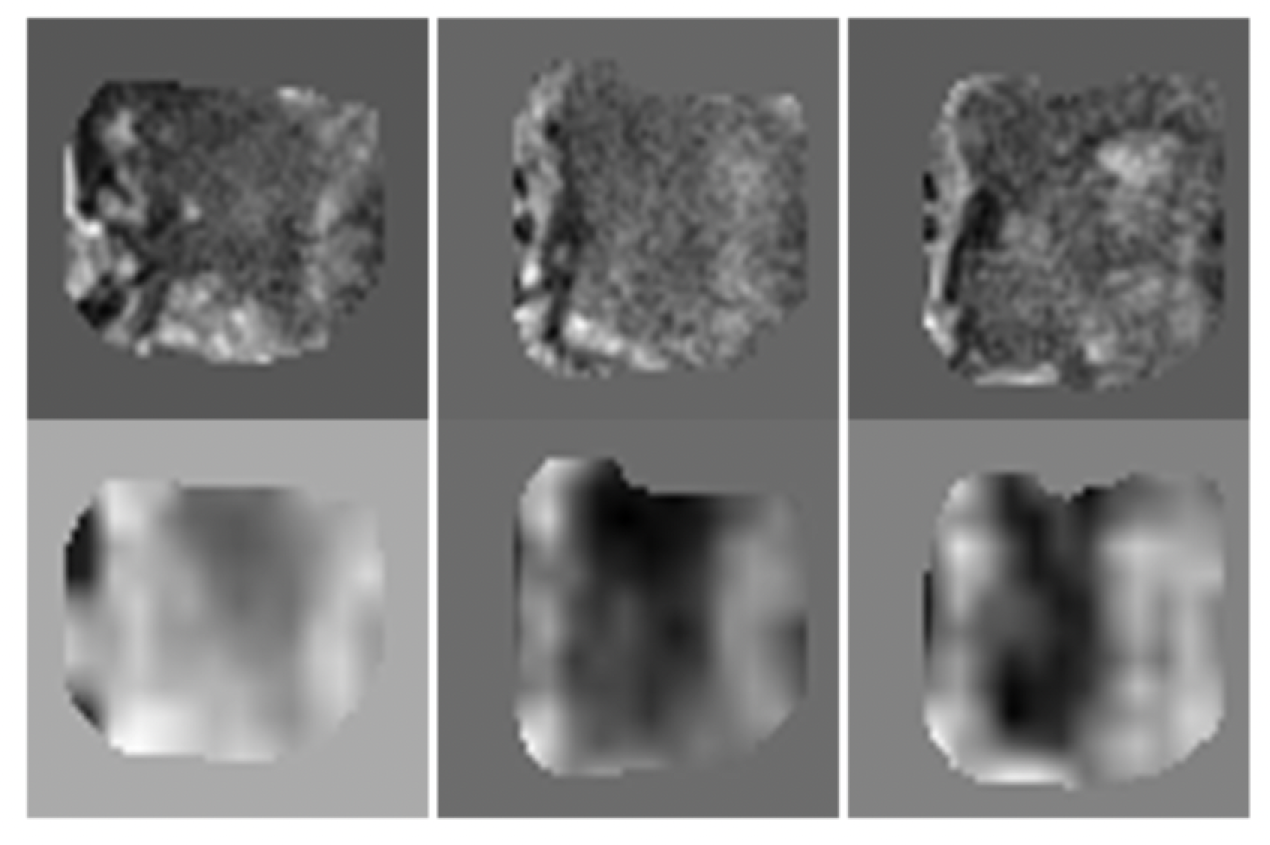

Each patient came with four modalities, as displayed in Figure 1. Lesions exhibited hypointense signals on T2-weighted images and the apparent diffusion coefficient (ADC) map, and hyperintense signals on diffusion-weighted imaging with low b values (b = 50). On K-trans maps, lesions and other disease can be difficult to tell apart, especially in the CG, so K-trans was excluded from this study.

In our previous work, we found that multi-modality input contributed significantly to accurate classification. In most cases, the class activation map (CAM) [14] helped reveal where the model was looking when making predictions. The center point of each potential lesion was provided in [5].

The focus and contribution of this paper is to move one step closer to clinical utilization by easing the workload of medical experts. (1) We input the entire prostate gland (PZ and CG separately) rather than a cropped region of interest (ROI). (2) As Figure 1 shows, lesions can exhibit very different characteristics depending on whether they reside in the PZ or the CG; we therefore further improve upon our previous work by training and testing separate models for the PZ and the CG. (3) To verify the robustness of the trained models, we test them on an independent cohort from our own institution, which yields high accuracy. (4) As a bonus finding, the trained PZ-detector and CG-detector are able to rank the probability of malignancy for each slice and highlight the suspicious slices in the sequence, even though the test samples were acquired on different scanners with different parameters. This finding brings the approach one step closer to clinical utilization.

2 Data

In this section, imaging acquisition parameters and image pre-processing steps are described in detail.

2.1 Scanning Parameters

The images were acquired on two types of Siemens 3T MR scanners, the MAGNETOM Trio and the MAGNETOM Skyra. T2W images were acquired using a turbo spin echo sequence, with an in-plane resolution of around 0.5 mm and a slice thickness of 3.6 mm. The DWI series were acquired with a single-shot echo planar imaging sequence, with an in-plane resolution of 2 mm, a slice thickness of 3.6 mm, and diffusion-encoding gradients in three directions. Three b-values were acquired (50, 400, and 800 s/mm²), and the ADC map was subsequently calculated by the scanner software.
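How the scanner software computes the ADC is not described here. A common approach, assumed in the sketch below, is a voxel-wise least-squares fit of the mono-exponential model S(b) = S0·exp(-b·ADC) to the log signal across the acquired b-values (here 50, 400, and 800 s/mm²). The function name `fit_adc` and the small `eps` clipping are illustrative choices, not part of the original pipeline.

```python
import numpy as np

def fit_adc(signals, b_values, eps=1e-6):
    """Voxel-wise ADC by log-linear least squares (hypothetical helper).

    Assumes the mono-exponential model S(b) = S0 * exp(-b * ADC).
    signals: array of shape (n_b, ...) holding one DWI volume per b-value.
    b_values: sequence of n_b b-values in s/mm^2.
    Returns the ADC in mm^2/s with the spatial shape of one DWI volume.
    """
    b = np.asarray(b_values, dtype=float)
    s = np.asarray(signals, dtype=float)
    # log S = log S0 - b * ADC, so ADC is minus the slope of log S versus b.
    # Clipping avoids log(0) in background voxels.
    log_s = np.log(np.clip(s, eps, None)).reshape(len(b), -1)
    b_centered = b - b.mean()
    # Ordinary least-squares slope, computed for all voxels at once.
    slope = b_centered @ (log_s - log_s.mean(axis=0)) / (b_centered @ b_centered)
    return (-slope).reshape(s.shape[1:])
```

With noise-free synthetic signals generated from a known ADC map, the fit recovers that map exactly; on real data, noise and non-mono-exponential decay make the estimate approximate.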

To test the cross-institutional generalization of our model, an independent cohort (test cohort 2) of 40 patients was collected from our own institution with IRB approval. An ultrasound-guided needle biopsy was performed to confirm the diagnosis. Two image modalities were acquired for each patient on a 3.0 T MR scanner (Ingenia, Philips Medical System, Best, the Netherlands) with a small field of view: T2W images acquired with a fast spin echo sequence (TR/TE: 4389/110 ms, flip angle: 90°, image resolution: 0.42 × 0.42 × 2.4 mm³) and DWI with two b values (0 and 1,000 s/mm²). The voxel-wise ADC map was constructed from these two DWIs.
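Under the mono-exponential decay model S(b) = S0·exp(-b·ADC) (an assumption; the exact reconstruction is not described here), two DWIs determine the ADC in closed form at each voxel x:

```latex
\mathrm{ADC}(\mathbf{x}) = \frac{\ln S_{0}(\mathbf{x}) - \ln S_{1000}(\mathbf{x})}{1000\ \mathrm{s/mm^2} - 0\ \mathrm{s/mm^2}}
```

For example, a voxel whose b = 1,000 signal is e^(-1) (about 37%) of its b = 0 signal has ADC = 1/1000 = 1.0 × 10^-3 mm²/s.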

2.2 Data Pre-Processing

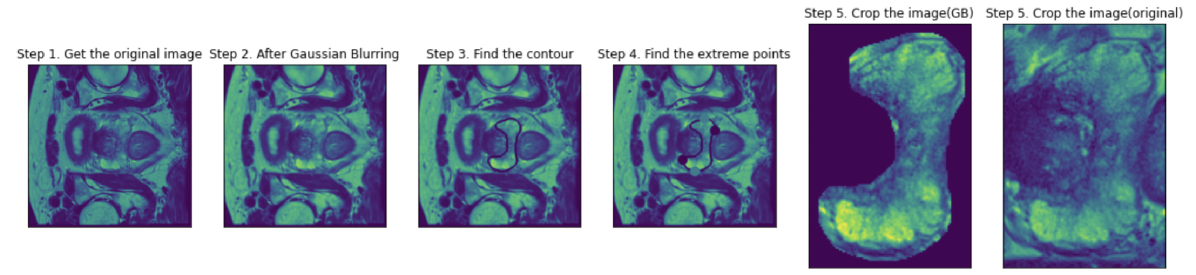

All images were registered to the axial T2 image sets and resampled to 1 mm isotropic resolution. N4 bias-field correction was applied to the T2 and ADC images to correct intensity non-uniformity. The sub-regions (PZ or CG) were then cropped from the sequence based on contours drawn by our team of medical experts. Pre-processing and cropping of one slice are shown in Figure 2. Gaussian blurring was applied to increase the contrast. The four extreme points (leftmost, rightmost, topmost, and bottommost) of the annotated contour were located, and the surrounding rectangle, with a margin of 5 pixels, was cropped.
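The cropping step can be sketched with NumPy, assuming each annotated contour is available as a filled binary mask aligned with the slice. The extreme points of a filled region are the minimum and maximum of its row and column indices, so the rectangle follows directly from them. The helper name `crop_with_margin` is hypothetical, and the original pipeline may have located the points by contour tracing instead.

```python
import numpy as np

def crop_with_margin(image, mask, margin=5):
    """Crop `image` to the rectangle spanned by the extreme points of `mask`.

    The leftmost, rightmost, topmost and bottommost mask pixels define the
    rectangle, which is enlarged by `margin` pixels on every side and
    clipped to the image border. Returns the crop and (r0, r1, c0, c1).
    """
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask is empty")
    r0 = max(rows.min() - margin, 0)
    r1 = min(rows.max() + margin + 1, image.shape[0])  # +1: slice end is exclusive
    c0 = max(cols.min() - margin, 0)
    c1 = min(cols.max() + margin + 1, image.shape[1])
    return image[r0:r1, c0:c1], (r0, r1, c0, c1)
```

Clipping at the border matters for glands annotated near the edge of the field of view, where a fixed margin would otherwise produce negative indices.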

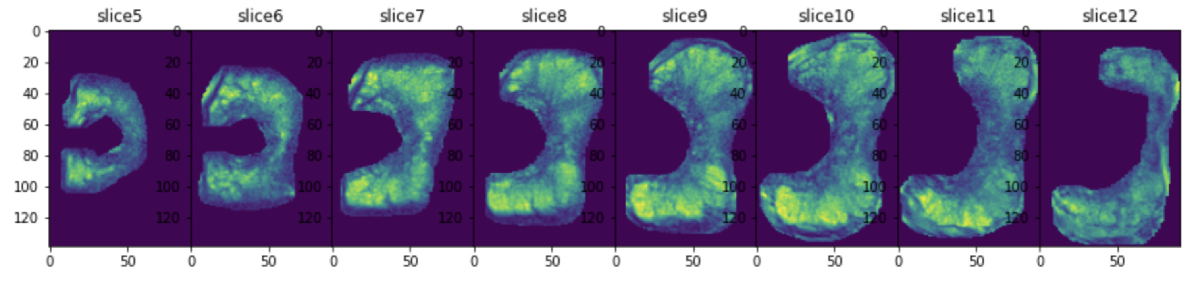

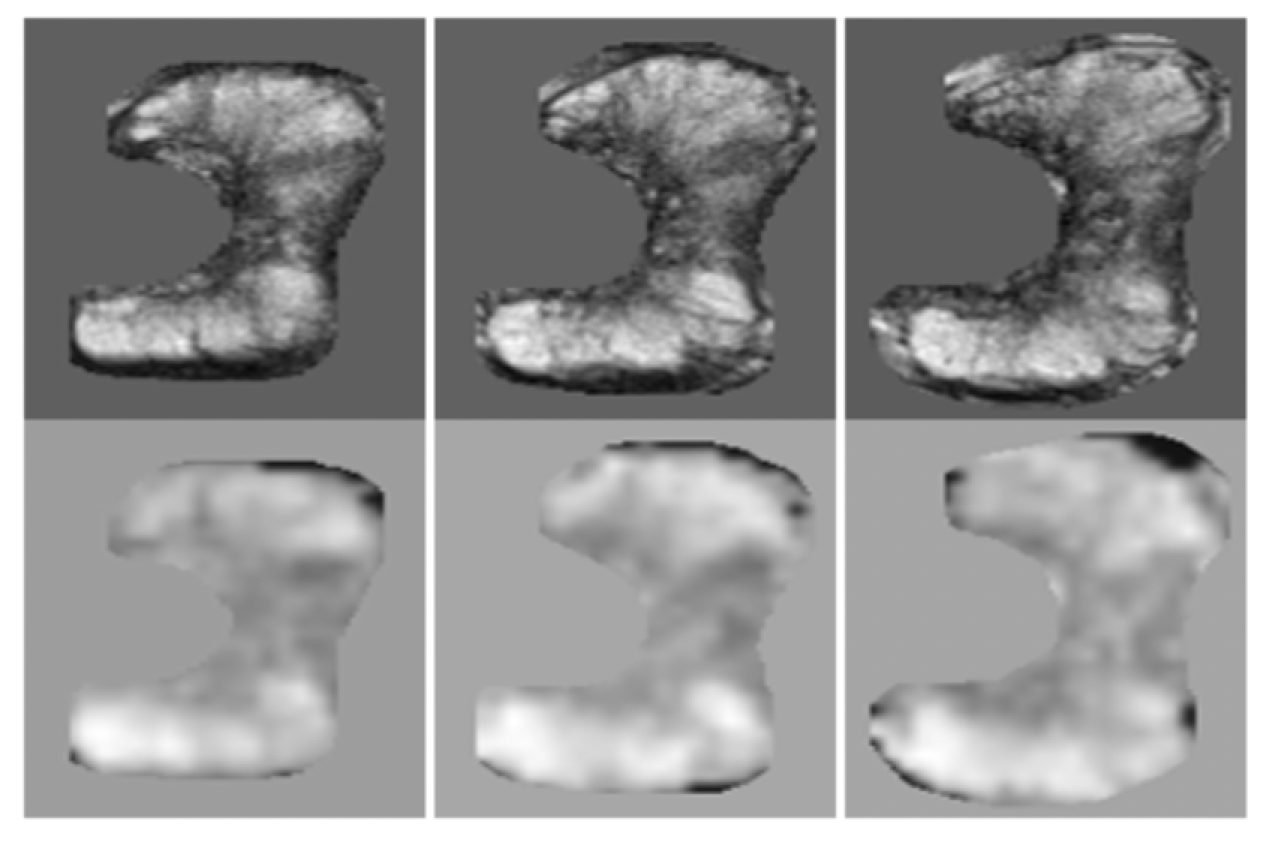

Because training and testing operate on the whole sequence of slices representing one patient, the extreme points were searched across the entire sequence so as not to miss any part of the PZ or CG, as shown in Figure 3 and Figure 4.
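Searching the extreme points over the whole sequence amounts to taking the union of the per-slice masks before computing the rectangle, so that a single crop covers the sub-region on every slice. A minimal sketch, assuming a (slices, H, W) volume with matching binary masks; `crop_sequence` is a hypothetical name.

```python
import numpy as np

def crop_sequence(volume, masks, margin=5):
    """Crop all slices of a (slices, H, W) volume with one shared rectangle.

    The per-slice masks are combined with a logical OR along the slice
    axis, so the extreme points are found over the whole sequence and no
    part of the annotated region is cut off on any slice.
    """
    footprint = np.any(masks, axis=0)  # (H, W) union over all slices
    rows, cols = np.nonzero(footprint)
    if rows.size == 0:
        raise ValueError("no annotated region in the sequence")
    r0 = max(rows.min() - margin, 0)
    r1 = min(rows.max() + margin + 1, volume.shape[1])
    c0 = max(cols.min() - margin, 0)
    c1 = min(cols.max() + margin + 1, volume.shape[2])
    return volume[:, r0:r1, c0:c1]
```

Using one rectangle for the whole sequence also keeps all slices of a patient the same size, which simplifies forming inputs from neighbouring slices.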

3 Method and Results

3.1 Auto-Keras

Automated deep learning models for medical image analysis have not been studied extensively. To take full advantage of this technique, the implementation details are described below.

To increase the sample size and to leverage the 3D information between consecutive slices, the 2-channel input was augmented to include T2-ADC pairs, consecutive T2 pairs, and consecutive ADC pairs. The performance of using only T2-ADC pairs was compared with that of using the mixed pairs for both the PZ-detector and the CG-detector.
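The three kinds of 2-channel input can be assembled from co-registered T2 and ADC volumes as sketched below. The channel-last layout, the function name `make_pairs`, and returning the source slice indices (so that labels can be attached afterwards) are assumptions made for illustration.

```python
import numpy as np

def make_pairs(t2, adc, mixed=True):
    """Build 2-channel inputs from co-registered (slices, H, W) volumes.

    Always includes the T2-ADC pair of each slice. With mixed=True it also
    adds consecutive T2 pairs (slice i, i+1) and consecutive ADC pairs.
    Returns an array of shape (n_pairs, H, W, 2) and, for each pair, the
    indices of the two slices it was built from.
    """
    if t2.shape != adc.shape:
        raise ValueError("T2 and ADC volumes must be co-registered")
    n = t2.shape[0]
    pairs = [np.stack([t2[i], adc[i]], axis=-1) for i in range(n)]
    sources = [(i, i) for i in range(n)]
    if mixed:
        for vol in (t2, adc):
            for i in range(n - 1):
                pairs.append(np.stack([vol[i], vol[i + 1]], axis=-1))
                sources.append((i, i + 1))
    return np.asarray(pairs), sources
```

For a sequence of n slices, the mixed setting yields n + 2(n - 1) samples instead of n, which is how the pairing enlarges the training set.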

Benign lesions outnumbered malignant lesions, so the classes were re-weighted accordingly. After shuffling, one third of the data was randomly selected for validation. Early stopping with a patience value of and the validation area under the curve (AUC) as the optimization goal was used to prevent overfitting. A Bayesian tuner was used as the search strategy, and the maximum number of search epochs was set as . Auto-augmentation searched the augmentation space over operations such as random flips, translations, and contrast changes.
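The exact re-weighting formula is not stated. One common choice, assumed here, is inverse-frequency ("balanced") weighting, n_samples / (n_classes × count_c), returned as a dictionary in the form Keras' `Model.fit(class_weight=...)` expects. The shuffled one-third validation split is sketched alongside; both helper names are hypothetical.

```python
import numpy as np

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_c)."""
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)
    weights = len(labels) / (len(classes) * counts)
    return {int(c): float(w) for c, w in zip(classes, weights)}

def shuffled_split(n_samples, val_fraction=1 / 3, seed=0):
    """Shuffle sample indices and hold out a fraction for validation.

    Returns (train_indices, validation_indices).
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_val = int(round(n_samples * val_fraction))
    return idx[n_val:], idx[:n_val]
```

With 75 benign and 25 malignant samples, each malignant sample receives weight 2.0 and each benign sample about 0.67, so both classes contribute equally to the loss.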

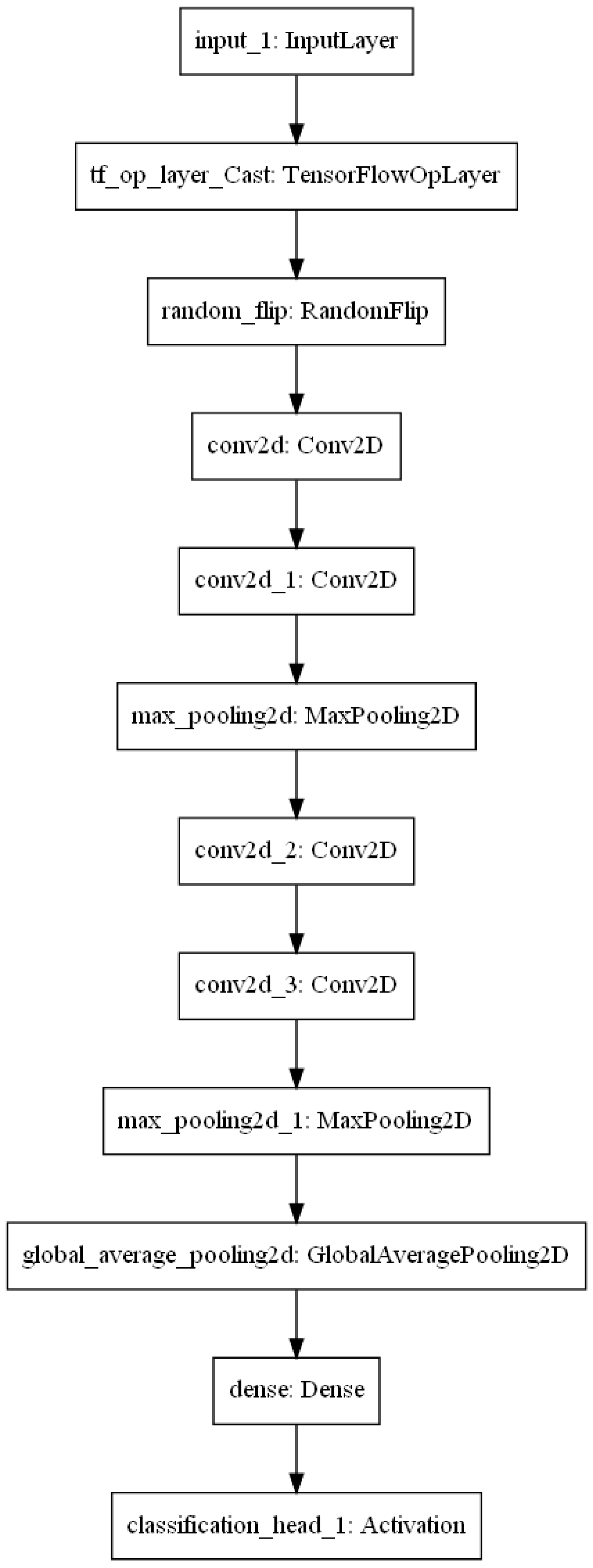

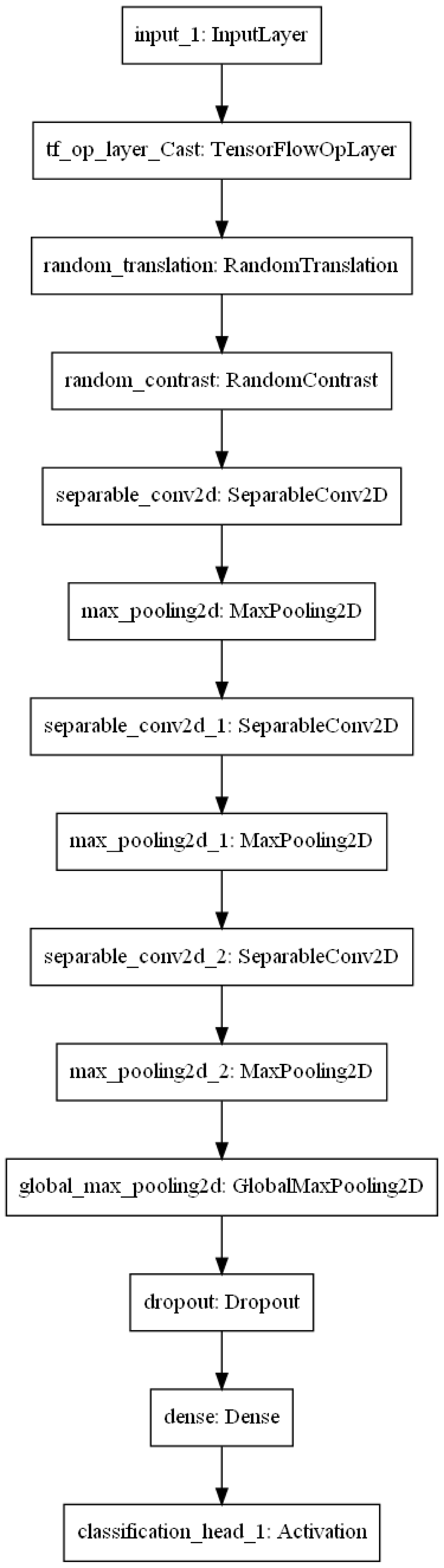

The optimal models learned to detect lesions in the PZ (Figure 5(a)) and the CG (Figure 5(b)) are displayed in Figure 5. Compared with the PZ-detector, the data augmentation and network architecture found for recognizing malignant lesions in the CG were more complicated. However, the CG-detector achieved the best validation AUC of 0.94 when only T2-ADC pairs were used as input. As Figure 1 shows, lesions in the CG are visually difficult to detect from a single modality, which may explain the preference for T2-ADC pairs as input. The best validation AUC for the PZ-detector, on the other hand, was 0.90, obtained when the input was a mixture of pair types.
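The validation AUC used above equals the probability that a randomly chosen malignant sample receives a higher score than a randomly chosen benign one (the Mann-Whitney statistic, counting ties as one half). The sketch below computes it exactly by pairwise comparison; it is an illustration of the metric, not the implementation used during the search (Keras' streaming `AUC` metric approximates it with a fixed set of thresholds).

```python
import numpy as np

def roc_auc(labels, scores):
    """Exact ROC AUC via pairwise comparison, ties counted as one half.

    labels: 1 for malignant, 0 for benign. scores: predicted probabilities.
    """
    labels = np.asarray(labels).astype(bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one sample of each class")
    # O(n_pos * n_neg) comparisons, acceptable for validation-set sizes.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)
```

For labels [0, 0, 1, 1] and scores [0.1, 0.4, 0.35, 0.8], three of the four malignant-benign pairs are ordered correctly, giving an AUC of 0.75.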

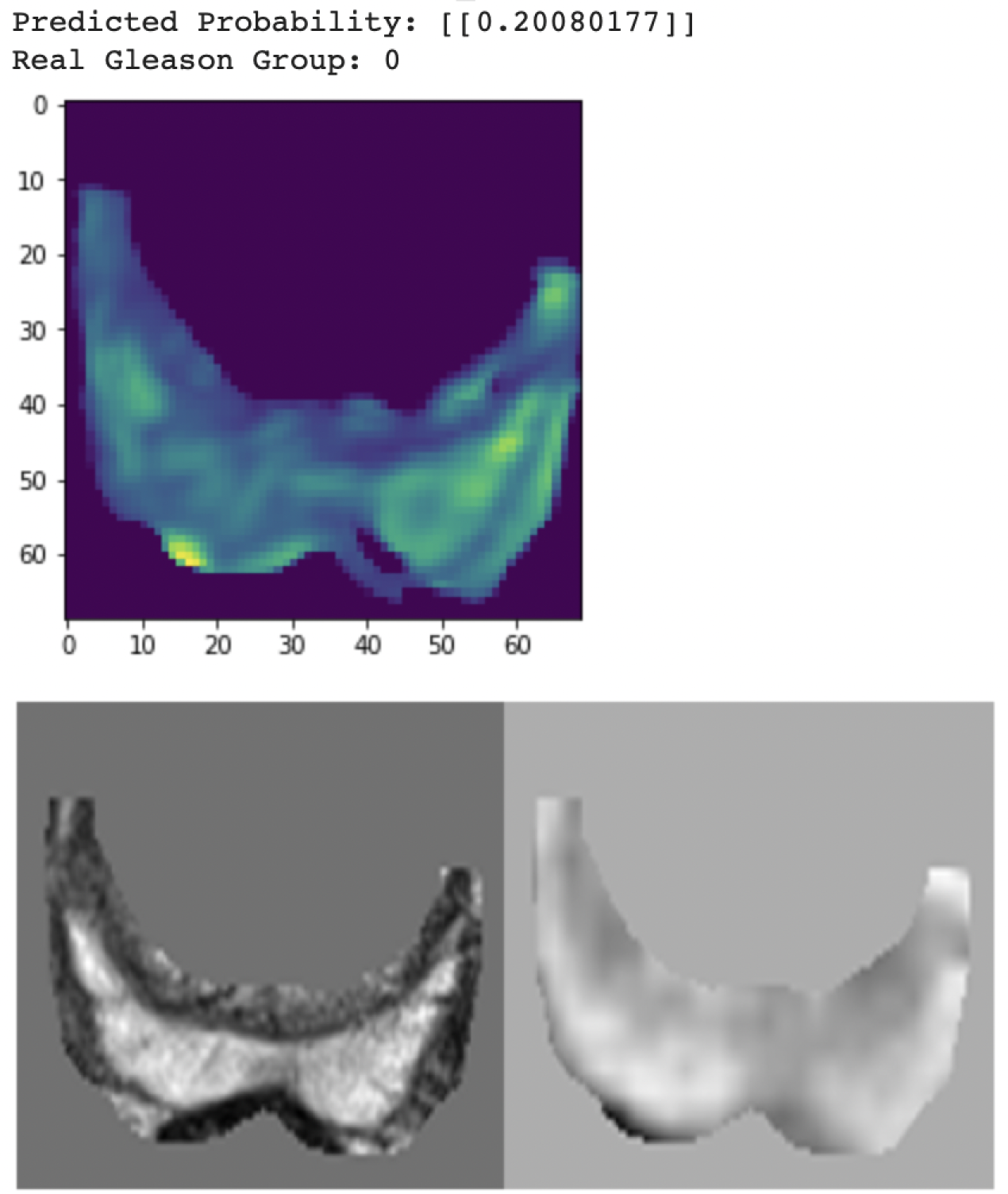

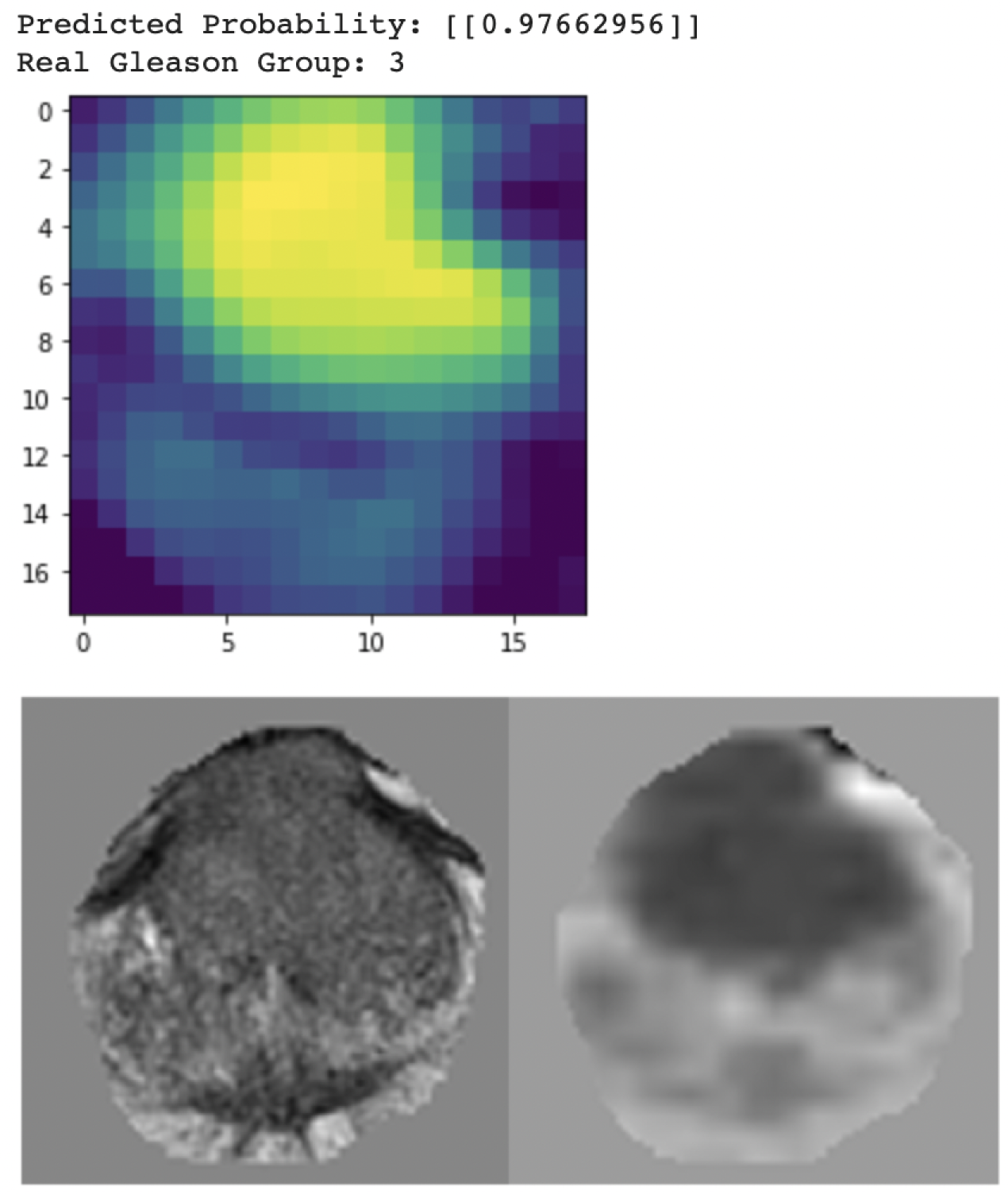

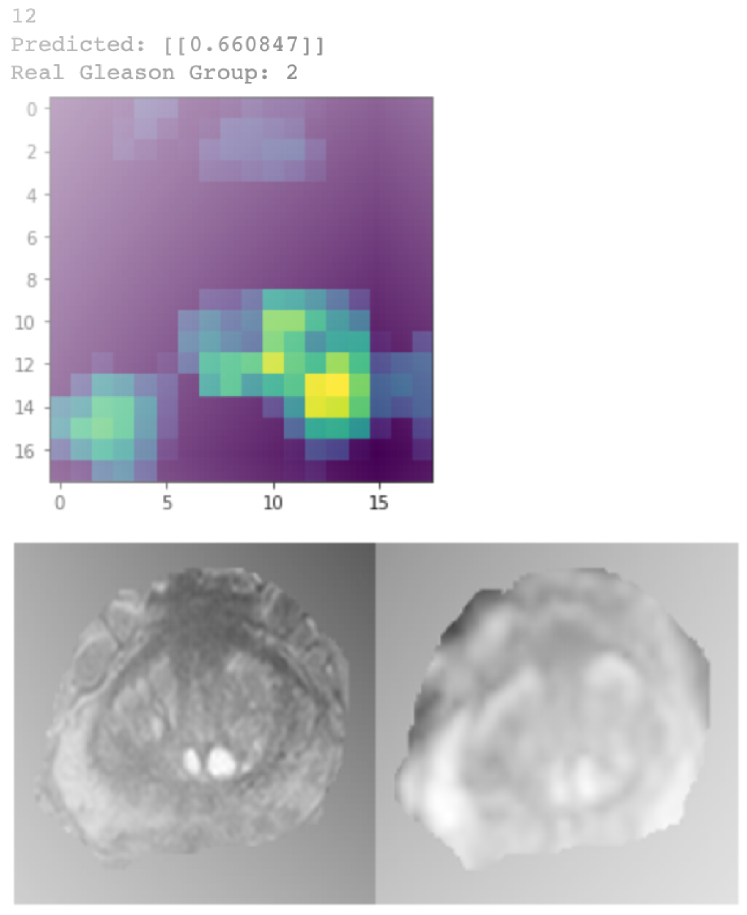

In Figure 6, gradient-weighted class activation mapping (Grad-CAM) was used to visualize the learned PZ-detector (Figure 6(a)) and CG-detector (Figure 6(b)). The prediction probability is the value of the output node. In both cases, when a slice was predicted malignant, the lesion aligned well with the hyperintense region of the Grad-CAM; otherwise, neither a hyperintense nor a hypointense signal was visible.
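Grad-CAM weights each feature map A^k of the last convolutional layer by its spatially averaged gradient α_k, sums the weighted maps, and keeps only the positive part: ReLU(Σ_k α_k A^k). The sketch below shows only this combination step and assumes the activations and gradients have already been obtained from the framework's automatic differentiation (for example `tf.GradientTape`) in channel-last layout. The nearest-neighbour upsampling is a simplification; bilinear interpolation is more common.

```python
import numpy as np

def grad_cam(activations, gradients, out_shape=None):
    """Combine feature maps into a Grad-CAM heat map.

    activations, gradients: arrays of shape (H, W, K) holding the feature
    maps A^k and d(score)/dA^k for one input. Returns a map in [0, 1].
    """
    weights = gradients.mean(axis=(0, 1))  # alpha_k: spatially averaged gradients
    cam = np.maximum((activations * weights).sum(axis=-1), 0.0)  # ReLU(sum_k alpha_k A^k)
    if cam.max() > 0:
        cam = cam / cam.max()  # scale to [0, 1] for overlay
    if out_shape is not None:
        # Nearest-neighbour upsampling to the input slice size.
        rows = np.arange(out_shape[0]) * cam.shape[0] // out_shape[0]
        cols = np.arange(out_shape[1]) * cam.shape[1] // out_shape[1]
        cam = cam[np.ix_(rows, cols)]
    return cam
```

The ReLU keeps only regions whose features push the score up, which is why a malignant prediction shows a bright spot over the lesion while a benign prediction can leave the map nearly flat.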

3.2 Challenges of Domain Drift

The test cohort was collected at our institution and posed several challenges during inference.

(1) Unlike in the training cohort, contours of the PZ and CG were not available; this is, however, a more realistic setting.

(2) The ADC maps were calculated from the two DWIs acquired at our institution (b = 0 and 1,000 s/mm²). They look slightly different from the ADC maps in the training cohort.

(3) The number of slices varied across patients in the test cohort.

3.3 Solutions

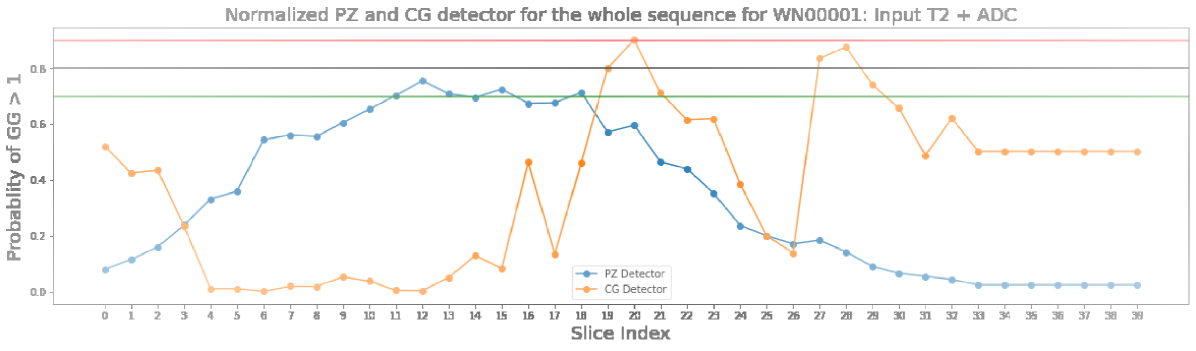

(1) We nevertheless tested the PZ-detector and CG-detector on the test cohort, using the T2-ADC pair as input and, because sub-region contours were unavailable, the whole prostate gland. The PZ-detector was able to accurately highlight slices with suspicious malignant lesions. The CG-detector detected lesions in the CG most of the time but was prone to false-positive traps, as shown in Figure 7, such as the marginal Slice .

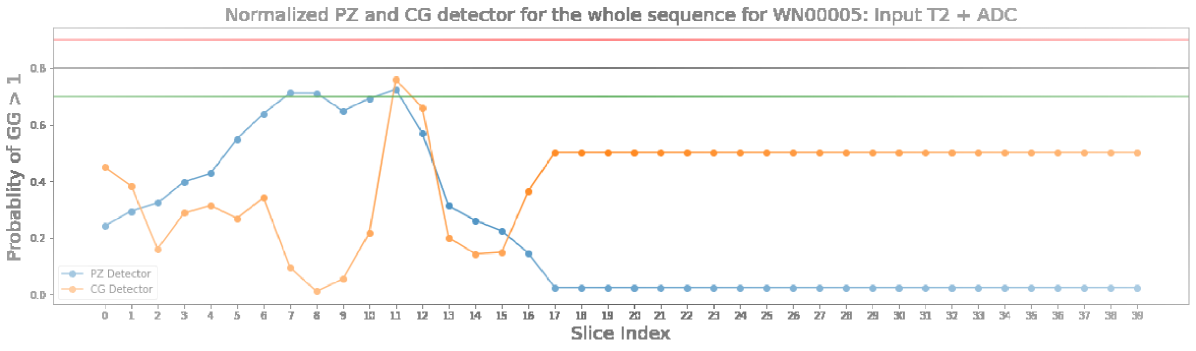

Figure 8 shows one of the successful cases, in which both the CG-detector and the PZ-detector picked out the suspicious slice, and the Grad-CAM on that slice aligned well with the location of the lesion.

(2) The trained models were found to be robust to the domain drift caused by ADC maps calculated from DWIs with different b values.

(3) By making a prediction for each slice and assembling the slice-level predictions back into the sequence, the PZ-detector and CG-detector worked together to highlight the suspicious slices in the sequence, whatever its number of slices.
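A minimal sketch of slice-level scoring over a variable-length sequence follows. Each detector is treated as a hypothetical callable that maps a batch of whole-gland T2-ADC pairs (assumed already resized to the model's input size) to malignancy probabilities. The rule combining the two detectors, taking the maximum so that a slice is as suspicious as its most suspicious sub-region, is an assumption for illustration, not a rule stated in this work.

```python
import numpy as np

def rank_slices(t2, adc, pz_detector, cg_detector, top_k=3):
    """Score every slice of a sequence and return the most suspicious ones.

    t2, adc: co-registered (slices, H, W) volumes of the whole gland.
    pz_detector, cg_detector: hypothetical callables mapping an
    (n, H, W, 2) batch of T2-ADC pairs to n malignancy probabilities.
    Returns per-slice scores and the indices of the top_k slices.
    """
    pairs = np.stack([t2, adc], axis=-1)  # (slices, H, W, 2)
    p_pz = np.asarray(pz_detector(pairs), dtype=float).ravel()
    p_cg = np.asarray(cg_detector(pairs), dtype=float).ravel()
    # Assumed combination rule: max over the two sub-region detectors.
    scores = np.maximum(p_pz, p_cg)
    order = np.argsort(-scores, kind="stable")  # highest score first
    return scores, order[:top_k]
```

Because scores are computed per slice, the same code handles sequences of any length, and plotting `scores` against slice index gives the kind of per-slice probability profile used to point a reader to the most suspicious slices.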

4 Conclusions

This work extended our previous research on using deep learning models to read mpMRI and suggest a diagnosis of lesion malignancy. The aim was to move one step closer to clinical utilization by eliminating the manual effort of lesion localization, using an automated deep learning framework to search for the optimal augmentation strategy, network architecture, and parameters, and finally making predictions over the sequence to find the most suspicious slice and localizing the lesion on that slice with a saliency map. The code has been made public and is ready to be deployed by anyone who is interested.

References

- [1] Dickinson, L., et al.: Magnetic Resonance Imaging for the Detection, Localisation, and Characterisation of Prostate Cancer: Recommendations from a European Consensus Meeting. European Urology 59(4), 477–494 (Apr 2011)

- [2] Delongchamps, N.B., Rouanne, M., Flam, T., Beuvo, F., Liberatore, M., Zerbib, M., Cornud, F.: Multiparametric magnetic resonance imaging for the detection and localization of prostate cancer: combination of T2-weighted, dynamic contrast-enhanced and diffusion-weighted imaging. BJU International 107(9), 1411–1418 (May 2011)

- [3] Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: ImageNet: A large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition. pp. 248–255. IEEE, Miami, FL (Jun 2009)

- [4] Armato, S.G., Huisman, H., Drukker, K., Hadjiiski, L., Kirby, J.S., Petrick, N., Redmond, G., Giger, M.L., Cha, K., Mamonov, A., Kalpathy-Cramer, J., Farahani, K.: PROSTATEx Challenges for computerized classification of prostate lesions from multiparametric magnetic resonance images. Journal of Medical Imaging 5(4), 044501 (Nov 2018)

- [5] Litjens, G., Debats, O., Barentsz, J., Karssemeijer, N., Huisman, H.: Computer-Aided Detection of Prostate Cancer in MRI. IEEE Transactions on Medical Imaging 33(5), 1083–1092 (May 2014)

- [6] Litjens, G., Kooi, T., Bejnordi, B.E., Setio, A.A.A., Ciompi, F., Ghafoorian, M., van der Laak, J.A., van Ginneken, B., Sánchez, C.I.: A survey on deep learning in medical image analysis. Medical Image Analysis 42, 60–88 (Dec 2017)

- [7] Liu, S., Zheng, H., Feng, Y., Li, W.: Prostate cancer diagnosis using deep learning with 3D multiparametric MRI. In: Armato, S.G., Petrick, N.A. (eds.) Medical Imaging 2017: Computer-Aided Diagnosis. p. 1013428. Orlando, Florida, United States (Mar 2017)

- [8] Meiers, I., Waters, D.J., Bostwick, D.G.: Preoperative Prediction of Multifocal Prostate Cancer and Application of Focal Therapy: Review 2007. Urology 70(6), S3–S8 (Dec 2007)

- [9] Wu, N., Phang, J., Park, J., Shen, Y., Huang, Z., Zorin, M., Jastrzębski, S., Févry, T., Katsnelson, J., Kim, E., Wolfson, S., Parikh, U., Gaddam, S., Lin, L.L.Y., Ho, K., Weinstein, J.D., Reig, B., Gao, Y., Toth, H., Pysarenko, K., Lewin, A., Lee, J., Airola, K., Mema, E., Chung, S., Hwang, E., Samreen, N., Kim, S.G., Heacock, L., Moy, L., Cho, K., Geras, K.J.: Deep Neural Networks Improve Radiologists’ Performance in Breast Cancer Screening. IEEE Transactions on Medical Imaging 39(4), 1184–1194 (Apr 2020)

- [10] Sadeghi, Z., Abboud, R., Abboud, B., Mahran, A., Buzzy, C., Yang, J., Gulani, V., Ponsky, L.: MP77-02 A New Versus an Old Notion: Is There Any Correlation Between Multi-Parametric MRI (mpMRI) PI-RADS (Prostate Imaging-Reporting and Data System) Score and PSA (Prostate Specific Antigen) Kinetics? Journal of Urology 199(4S) (Apr 2018)

- [11] Schouten, M.G., van der Leest, M., Pokorny, M., Hoogenboom, M., Barentsz, J.O., Thompson, L.C., Fütterer, J.J.: Why and Where do We Miss Significant Prostate Cancer with Multi-parametric Magnetic Resonance Imaging followed by Magnetic Resonance-guided and Transrectal Ultrasound-guided Biopsy in Biopsy-naïve Men? European Urology 71(6), 896–903 (Jun 2017)

- [12] Tajbakhsh, N., Shin, J.Y., Gurudu, S.R., Hurst, T.R., Kendall, C.B., Gotway, M.B., Liang, J.: Convolutional Neural Networks for Medical Image Analysis: Full Training or Fine Tuning? IEEE Transactions on Medical Imaging 35(5), 1299–1312 (May 2016)

- [13] Tsai, M.J., Tao, Y.H.: Machine Learning Based Common Radiologist-Level Pneumonia Detection on Chest X-rays. In: 2019 13th International Conference on Signal Processing and Communication Systems (ICSPCS). pp. 1–7. IEEE, Gold Coast, Australia (Dec 2019)

- [14] Zhang, Y., Chan, W., Jaitly, N.: Very deep convolutional networks for end-to-end speech recognition. In: 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). pp. 4845–4849. IEEE, New Orleans, LA (Mar 2017)