Protégé: Learn and Generate Basic Makeup Styles with Generative Adversarial Networks (GANs)

Abstract

Makeup is no longer confined to physical application: people now use mobile apps to apply makeup digitally to their photos, which they then share on social media. While this shift has made makeup more accessible, designing diverse makeup styles tailored to individual faces remains a challenge, and this task is still performed manually. Existing systems, such as makeup recommendation engines and makeup transfer techniques, are limited in their ability to create innovative makeup for different individuals “intuitively”: they require significant user effort and knowledge, and they offer only the limited makeup options available in the app. To address this challenge, we propose Protégé, a new makeup application that leverages a recent generative model, generative adversarial networks (GANs), to learn makeup styles and generate them automatically. This is a task that existing makeup applications (i.e., makeup recommendation systems using expert systems and makeup transfer methods) cannot perform. Extensive experiments demonstrate Protégé’s capability to learn and create diverse makeups in a convenient and intuitive way, marking a significant leap in digital makeup technology.

Index Terms— Makeup, Makeup Application, Inpainting, Image Inpainting, Face Inpainting

1 Introduction

Existing digital makeup applications have shown their capabilities in photo editing and beauty applications. They generally fall into three categories: (1) manual makeup applications such as Meitu [1], ModiFace [2], Perfect365 [3], BeautyPlus [4], YouCamMakeup [5], and FaceTune2 [6], (2) makeup recommendation systems using expert systems [12, 13, 14, 15, 16], and (3) makeup transfer methods [7, 8, 9, 10, 11].

These three categories offer makeup functionality, but they share a common drawback: they fail to replicate the capabilities and characteristics of a human makeup artist. Specifically, a human makeup artist creates innovative, tailored makeup for different individuals in an intuitive manner. “Intuitive” in this context means that a makeup artist decides on and creates makeup based on their experience and aesthetic instincts. Existing technologies lack this intuition and capacity for innovation, which are necessary to meet the increasingly sophisticated demands of today’s consumers.

This shortfall is particularly evident in conventional digital makeup systems, namely manual makeup enhancement applications, makeup recommendation systems, and makeup transfer systems. Manual makeup applications such as MeiTu, FaceTune, and YouCam Perfect offer users an array of makeup styles to experiment with. Yet they require significant user effort and knowledge, since finding a suitable look involves trial and error in mixing and matching makeup for different facial features, which is not intuitive. Moreover, this approach is often hampered by the limited makeup options available within the apps, which restricts innovation. Consequently, users frequently settle for unsatisfactory makeup choices.

Makeup recommendation systems based on expert systems aim to streamline the user experience by automating makeup selection through rule-based algorithms. These algorithms operate on predefined, expert-derived rules, for example, recommending peach and coral shades for users with a warm undertone, or suggesting specific eyeliner or lipstick options based on facial features. To be effective, such a system would need rules covering every kind of cosmetic option available. While this automation simplifies the procedure for end users, it shifts the burden to industry experts, who must develop extensive, detailed rules for makeup combinations; the required effort is relocated rather than reduced, and building such an exhaustive expert system is impractical in the first place. Moreover, these systems lack the intuitive judgment that characterizes professional artistry, where decisions rest on experience and instinct rather than rigid rules.
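The rule-based approach can be illustrated with a toy lookup table built from the kind of expert rule quoted above. The specific rules, the attribute keys (undertone and lip shape), and the function name `recommend` are hypothetical and not drawn from any cited system; the sketch only shows that each attribute combination needs its own hand-written entry and that uncovered combinations receive no recommendation.

```python
from typing import Dict, Optional, Tuple

# Hypothetical expert rules keyed by (skin undertone, lip shape).
# Every combination a user might present needs its own hand-written entry.
RULES: Dict[Tuple[str, str], Dict[str, str]] = {
    ("warm", "full"): {"blush": "peach", "lipstick": "coral"},
    ("warm", "thin"): {"blush": "peach", "lipstick": "light coral"},
    ("cool", "full"): {"blush": "rose", "lipstick": "berry"},
}


def recommend(undertone: str, lip_shape: str) -> Optional[Dict[str, str]]:
    """Return the expert-defined makeup for this face, or None if no rule covers it."""
    return RULES.get((undertone, lip_shape))
```

Adding one more attribute with k possible values multiplies the number of entries an expert must write by k, which is the maintenance burden described above.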

Makeup transfer, on the other hand, was designed to let users preview makeup on their faces digitally before actual application. These systems are often integrated into existing mobile makeup applications, allowing users to transfer an existing makeup directly onto their faces. However, as discussed above, this approach offers users only a limited choice of makeup options. Moreover, it merely replicates existing makeup from a reference image rather than automatically creating adaptive, tailored makeup, and thus lacks the intuition and innovation that a professional artist offers.

To respond to these challenges, we introduce Protégé, to our knowledge the first basic makeup application of its kind. Unlike existing systems, Protégé learns makeup styles and intuitively generates distinct makeup for each individual.

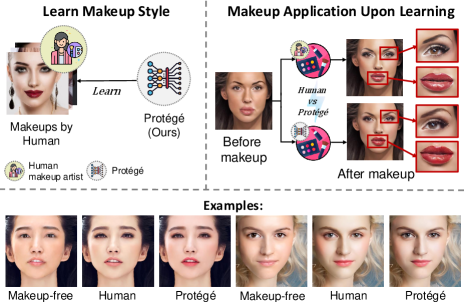

As depicted in Fig. 1, ‘Learn’ refers to training our makeup model, Protégé, to become a makeup artist that can do basic makeup like a human. Following our methodology, Protégé learns basic makeup styles from a basic makeup dataset. This mirrors the training phase that every human makeup artist undergoes at the beginning of their career. After training, the model is expected to be equipped with the makeup styles and knowledge it obtained from the dataset. ‘Generate’, in turn, means that Protégé applies makeup to an individual’s face based on what it has learned. This makeup is generated intuitively, akin to the approach of a human artist, and it differs for each input face.

To facilitate this learning and generation process, Protégé is equipped with our makeup inpainting algorithm, which repurposes existing image inpainting techniques. The makeup inpainting algorithm allows Protégé to learn the overall makeup style and makeup knowledge from a makeup dataset that embodies the style we want it to absorb. During makeup application, the algorithm leads Protégé to use information from the input face and its surroundings to fill in makeup on the face, completing the makeup generation process. This method opens a new avenue for the community: it goes beyond makeup applications that offer only a limited choice of makeup on digital platforms, and it proposes a human-like makeup application technique. It also makes it possible for the makeup industry to train customized digital makeup artists with their specific cosmetic styles. Our key contributions are:

1. We propose Protégé, a basic makeup generation model that mimics a human makeup artist to intuitively generate distinct and innovative makeup for different individuals.

2. We introduce a GAN-based makeup inpainting algorithm that learns and applies makeup complementing the input face while preserving the original facial identity.

| Criterion | Manual Selection | Rule-based | Makeup Transfer | Protégé |
|---|---|---|---|---|
| Requires Makeup Dataset for Training | ✓ | ✓ | ✓ | ✓ |
| Independent of User’s Knowledge | ✗ | ✓ | ✓ | ✓ |
| Independent of Expert Knowledge | ✓ | ✗ | ✓ | ✓ |
| Independent of Rule Quality | ✓ | ✗ | ✓ | ✓ |
| Independent of Reference Image | ✗ | ✓ | ✗ | ✓ |
| Easy Maintenance and Trend Updates | ✓ | ✗ | ✓ | ✓ |
| Flexible & Intuitive Makeup Generation | ✗ | ✗ | ✗ | ✓ |

2 Related Work

Makeup Applications. As summarized in Tab. 1, digital makeup applications have evolved through several stages: from simple filter-based manual makeup enhancement applications [17, 1, 4, 2, 3, 5, 6] that rely heavily on user input, through rule-based systems [12, 14, 15, 16] that automate some decisions but lack flexibility, to more sophisticated makeup transfer techniques [7, 8, 13, 9, 10, 11]. Although each stage improves on the previous one, none fully addresses the need for intuitive and innovative makeup application, which limits the range and diversity of makeups available to end users. An intuitive and innovative makeup application reduces the human effort required in digital makeup and provides a useful tool for creating tailored makeup aligned with a specified makeup style.

Image Inpainting. Image inpainting [18, 19, 20, 21, 22, 23, 24] is a technique originally developed to restore damaged parts of images or to fill in missing areas of digital photographs. The primary objective of traditional image inpainting is to reconstruct coherent image regions with plausible textures and details that blend seamlessly with the surrounding areas. We adopt this concept for facial makeup application, repurposing image inpainting as a new makeup algorithm that mimics a human makeup artist by creating innovative makeup intuitively for different individuals.

3 Methodology

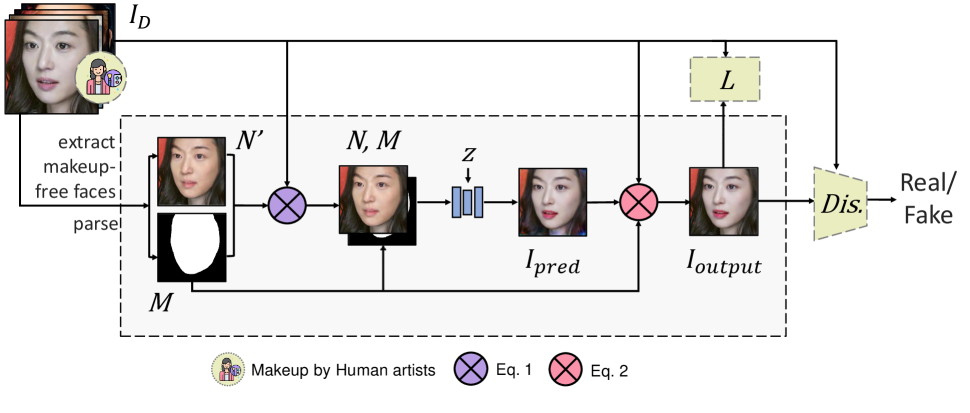

Our goal is to develop a digital basic makeup application system, Protégé, that autonomously learns from a large dataset of basic makeups and then applies this knowledge to generate distinct, dataset-aligned makeups tailored to individual facial features. As illustrated in Fig. 2, the system integrates generative adversarial networks (GANs) [25] and repurposes an image inpainting method [24] to bring to digital makeup application a level of intuitiveness and innovation that mirrors human makeup capabilities. We use GANs rather than Stable Diffusion models because a GAN trained on our data learns solely from the given dataset. In contrast, Stable Diffusion models typically build on pre-trained models that incorporate knowledge from outside the dataset, and this external knowledge can affect the quality of the basic makeup generation we aim to achieve.

3.1 Preliminary

The foundation of Protégé’s capabilities is its dataset of makeup images. These images, all created by professional human makeup artists, cover a wide range of makeups with different artistic expressions, poses, and lighting. The dataset is pivotal for our model to analyze and internalize the makeup distribution, which represents the style we want the model to learn (in our case, the basic makeup style). This allows the model to synthesize bespoke makeups that are innovative yet aligned with that style.

3.2 Makeup learning and generation via makeup inpainting

Protégé begins its learning process by analyzing a dataset of basic makeup images. This initial stage is crucial because it allows the system to absorb our specified makeup style and makeup knowledge, including the interplay of colors and textures in the dataset. The goal is a framework that captures the essence of the makeup style, so that it can generate innovative yet style-aligned makeup on new subjects. Next, we describe the makeup inpainting algorithm used for makeup learning and generation.

3.2.1 Identify the area for makeup

To determine where makeup should be applied, the first step of our makeup inpainting algorithm defines the regions of interest on the face. We extract a binary Region of Interest (ROI) mask $M$ using a face-parsing network [26], which delineates the facial features relevant to personalized makeup application. In this mask, facial areas designated for makeup are marked with 1, indicating active regions for makeup application. Non-facial areas and regions not targeted for makeup, such as the hair, neck, and background, are marked with 0, indicating that they should remain unaffected during makeup application. This binary distinction confines makeup generation to the intended regions, ensuring precision in the final output.
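Building the ROI mask can be sketched as a label lookup on the face-parsing output. The label ids below are hypothetical placeholders: a face parser built on BiSeNet [26] predicts an integer class per pixel, but which ids correspond to skin, eyes, lips, and so on depends on the label map of the data the parser was trained on, and the text does not list which classes Protégé includes. The name `roi_mask` is likewise hypothetical.

```python
import numpy as np

# Hypothetical ids of parsing classes that should receive makeup (face skin,
# brows, eyes, nose, lips, ...). Replace with the ids of your parser's label map.
MAKEUP_LABELS = (1, 2, 3, 4, 5, 6, 10, 11, 12, 13)


def roi_mask(parsing, makeup_labels=MAKEUP_LABELS):
    """Turn an (H, W) integer parsing map into a binary ROI mask.

    1 marks pixels whose class is in `makeup_labels` (makeup is applied);
    0 marks everything else, e.g. hair, neck and background (left untouched).
    """
    return np.isin(parsing, makeup_labels).astype(np.uint8)
```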

3.2.2 Comprehend makeup by applying makeup on makeup-free face and assessing the makeup

At the core of Protégé’s functionality is its makeup comprehension process. This process begins by extracting a makeup-free version of the face from each dataset image using LADN [27], a network for facial makeup and de-makeup. This step strips the original makeup from the face, revealing its natural state and providing a clean slate for makeup application. However, the LADN output is not only free of makeup on the face; it also removes makeup traces from non-facial areas such as the neck, hair, and background, which is undesired because those areas should match the original image.

To preserve the non-facial areas of the original image during makeup-free face extraction, the de-makeup result $I_{\text{dm}}$ is blended with the corresponding dataset image $I$ using the ROI mask $M$ defined in Sec. 3.2.1. The resulting composite $I_{\text{mf}}$, our desired makeup-free image, is then concatenated with $M$ along the channel dimension to form the four-channel input $N$ used in the subsequent inpainting stages:

$$I_{\text{mf}} = M \odot I_{\text{dm}} + (1 - M) \odot I, \qquad N = \operatorname{concat}(I_{\text{mf}}, M) \tag{1}$$

where $\odot$ denotes element-wise multiplication.
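Eq. (1) can be sketched with array operations, assuming images are float arrays in channels-last (H, W, 3) layout and the ROI mask is an (H, W) array of 0s and 1s. The function name `makeup_free_input` is hypothetical; a PyTorch implementation would more likely use channels-first tensors and `torch.cat` along the channel dimension.

```python
import numpy as np


def makeup_free_input(image, demakeup, mask):
    """Build the four-channel input described by Eq. (1).

    image:    (H, W, 3) float array, original dataset image with makeup
    demakeup: (H, W, 3) float array, de-makeup output (e.g. from LADN)
    mask:     (H, W) binary ROI mask, 1 = face region that receives makeup
    """
    m = mask[..., None].astype(image.dtype)         # (H, W, 1), broadcasts over RGB
    composite = m * demakeup + (1.0 - m) * image    # de-makeup inside ROI, original outside
    return np.concatenate([composite, m], axis=-1)  # (H, W, 4): RGB + mask channel
```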

With the preparatory stages complete, Protégé proceeds to the actual makeup application. Emulating the techniques of human makeup artists, our model repurposes image inpainting to apply makeup intuitively across the defined facial areas. In the following stages, Protégé mirrors the procedure a human would follow on the target face.

(a) Process the prime information in N for makeup. This stage reflects how a human artist studies the target facial features before applying makeup. With the inputs prepared, the makeup comprehension process continues with the encoding of $N$, the concatenation of the makeup-free face and the ROI mask $M$. This initial encoding is crucial because it ensures that all relevant information, especially the information inside the masked region (i.e., the face), is taken into account during makeup application.

Note: Conventional image inpainting techniques treat the masked region as a void, filling it in solely with information from the surrounding areas, as they do not expect any usable data from within the masked region itself. In contrast, our Makeup Inpainting algorithm considers the masked region as a crucial source of information for the makeup application process. It leverages both the surrounding details from the unmasked areas and the data within the masked region itself.
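At the input level, the difference can be sketched as follows. A common convention in conventional inpainting, assumed here for illustration rather than taken from a specific baseline’s code, is to erase the masked pixels before concatenating the mask; makeup inpainting instead passes the makeup-free face inside the mask through unchanged. Both function names are hypothetical, and arrays are assumed channels-last.

```python
import numpy as np


def conventional_inpainting_input(image, mask):
    """Typical inpainting input: pixels inside the mask are erased (treated as a hole)."""
    m = mask[..., None].astype(image.dtype)
    return np.concatenate([(1.0 - m) * image, m], axis=-1)


def makeup_inpainting_input(makeup_free, mask):
    """Makeup inpainting input: the face inside the mask is kept as usable information."""
    m = mask[..., None].astype(makeup_free.dtype)
    return np.concatenate([makeup_free, m], axis=-1)
```

With a mask covering the whole face, the conventional input carries no facial information at all, which is why such models cannot preserve identity.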

Throughout input processing, Protégé retains the facial identity information. This mirrors what a makeup artist does before starting: assessing the client’s facial features and choosing enhancements that suit the client.

(b) Fine-grained and diverse makeup synthesis. Protégé uses a mapping network to convert a noise latent vector into an intermediate latent space that captures the diversity of makeup styles in the training dataset. A StyleGAN2-based generator then turns these inputs into a coherent makeup application in a coarse-to-fine manner. This progressive refinement applies each layer of makeup with increasing precision, reflecting the intricate details of professional makeup. The result is a high-quality rendering of how the makeup looks on the user’s face.
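As a rough illustration of the mapping step, the toy network below normalizes a noise vector and passes it through a stack of fully connected layers with leaky ReLU, in the style of StyleGAN2’s mapping network. The weights are random and untrained, and the default depth (8) and width (512) are StyleGAN2’s usual settings rather than values confirmed for Protégé; `ToyMappingNetwork` is a hypothetical name.

```python
import numpy as np


class ToyMappingNetwork:
    """Toy StyleGAN2-style mapping network z -> w with random, untrained weights."""

    def __init__(self, dim: int = 512, depth: int = 8, seed: int = 0):
        rng = np.random.default_rng(seed)
        # He-style initialization keeps activation magnitudes roughly stable.
        self.weights = [rng.normal(0.0, np.sqrt(2.0 / dim), size=(dim, dim)) for _ in range(depth)]
        self.biases = [np.zeros(dim) for _ in range(depth)]

    def __call__(self, z: np.ndarray) -> np.ndarray:
        # Normalize each latent to unit mean squared value ("pixel norm").
        x = z / np.sqrt(np.mean(z ** 2, axis=1, keepdims=True) + 1e-8)
        for w, b in zip(self.weights, self.biases):
            h = x @ w + b
            x = np.where(h > 0, h, 0.2 * h)  # leaky ReLU with slope 0.2
        return x
```

Different noise samples map to different latent codes, which is the source of diversity: sampling a new noise vector for the same face yields a different makeup.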

Moreover, during makeup synthesis Protégé maintains the facial identity, so that makeup enhancements complement rather than obscure the subject’s face, just as a makeup artist applies makeup without altering the client’s identity. This capability is achieved with GANs, which learn detailed and nuanced representations of facial features. Because the generator receives the makeup-free face inside the mask rather than an empty hole, it can retain the unique characteristics of each face and keep the original facial structure intact. As a result, Protégé enhances the individual’s appearance while staying true to their natural identity, much like a skilled human makeup artist.

(c) Preserve the integrity of non-facial areas after makeup application. Note: this step has no counterpart in human makeup application, but it is necessary in the digital setting. After makeup is generated, it must be integrated seamlessly with the non-facial areas of the original image $I$. We achieve this by using the ROI mask $M$ to blend the makeup-applied regions of the generator output $I_{\text{gen}}$ with the untouched areas of $I$, so that the non-targeted regions remain identical to $I$. The final composite image $I_{\text{out}}$ is ready for evaluation and, if necessary, further refinement:

$$I_{\text{out}} = M \odot I_{\text{gen}} + (1 - M) \odot I \tag{2}$$

where $\odot$ denotes element-wise multiplication.
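The blend in Eq. (2), and the guarantee it provides, can be sketched as follows under a channels-last assumption; `composite_output` and `outside_roi_unchanged` are hypothetical names. The check confirms that pixels with mask value 0 are copied from the original image unchanged, which is the property step (c) is meant to ensure.

```python
import numpy as np


def composite_output(generated, original, mask):
    """Keep generated pixels inside the ROI mask and original pixels elsewhere (Eq. (2))."""
    m = mask[..., None].astype(generated.dtype)  # (H, W, 1), broadcasts over RGB
    return m * generated + (1.0 - m) * original


def outside_roi_unchanged(output, original, mask):
    """True if every pixel outside the ROI (mask == 0) equals the original image."""
    outside = mask == 0
    return bool(np.array_equal(output[outside], original[outside]))
```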

3.3 Makeup checking and guarding

The final step of Protégé’s process is quality assurance: ensuring that the applied makeup meets the intended aesthetic standards and remains true to the makeup style of the dataset. This is accomplished through a set of loss functions during training. A non-saturating cross-entropy (adversarial) loss encourages the generated makeup to follow the makeup style of the dataset; the discriminator $D$ receives either a dataset image $I$ or the final output $I_{\text{out}}$, depending on the training phase:

$$\mathcal{L}_{D} = -\mathbb{E}\left[\log D(I)\right] - \mathbb{E}\left[\log\left(1 - D(I_{\text{out}})\right)\right], \qquad \mathcal{L}_{\text{adv}} = -\mathbb{E}\left[\log D(I_{\text{out}})\right]$$

A high receptive field perceptual loss $\mathcal{L}_{\text{HRFPL}}$ refines details by measuring the distance between $I_{\text{out}}$ and $I$ in the feature space of a pretrained network $\phi$:

$$\mathcal{L}_{\text{HRFPL}} = \lVert \phi(I_{\text{out}}) - \phi(I) \rVert_2^2$$

Additionally, a pixel-wise reconstruction loss between $I_{\text{out}}$ and $I$ assesses overall fidelity, ensuring that the final product is both visually appealing and faithful to the desired style:

$$\mathcal{L}_{\text{rec}} = \lVert I_{\text{out}} - I \rVert_1$$

The total generator loss is:

$$\mathcal{L} = \mathcal{L}_{\text{adv}} + \lambda_{\text{HRFPL}}\,\mathcal{L}_{\text{HRFPL}} + \lambda_{\text{rec}}\,\mathcal{L}_{\text{rec}}$$

The generator and discriminator are trained adversarially, with the loss weights $\lambda_{\text{HRFPL}}$ and $\lambda_{\text{rec}}$ set empirically following [24].
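The non-saturating cross-entropy loss and the weighted total can be sketched in terms of raw discriminator logits, where the discriminator’s probability is the sigmoid of the logit and $-\log \sigma(x) = \operatorname{softplus}(-x)$. Using `np.logaddexp` keeps these terms numerically stable. The perceptual and reconstruction terms are passed in as precomputed scalars, and the weights are left as parameters because their values follow [24] and are not reproduced here; all function names are hypothetical.

```python
import numpy as np


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)


def discriminator_loss(real_logits, fake_logits):
    """Non-saturating GAN loss for D: -log sigmoid(D(real)) - log(1 - sigmoid(D(fake)))."""
    return float(np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits)))


def generator_adv_loss(fake_logits):
    """Non-saturating GAN loss for G: -log sigmoid(D(fake))."""
    return float(np.mean(softplus(-fake_logits)))


def generator_total_loss(fake_logits, hrfpl, rec, lambda_hrfpl, lambda_rec):
    """Adversarial term plus weighted perceptual (HRFPL) and reconstruction terms."""
    return generator_adv_loss(fake_logits) + lambda_hrfpl * hrfpl + lambda_rec * rec
```

The non-saturating form gives the generator strong gradients even when the discriminator confidently rejects its outputs, which is why it is preferred over minimizing $\log(1 - D(I_{\text{out}}))$ directly.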

4 Experiments

Baselines. We evaluate our method from two perspectives. (i) Makeup style learning: to check whether the makeup applied to the target face is aligned with the desired style (i.e., the basic makeup style of the dataset), we compare our set of generated makeups against the dataset containing that style. (ii) Face identity preservation: to check whether the target subject’s identity is preserved after Protégé applies makeup, we compare each made-up face with the corresponding makeup-free face. This evaluation is critical because we repurpose a typical image inpainting technique, and such techniques are unable to preserve facial identity. Identity preservation is essential for makeup application because, in reality, a person’s facial features remain the same after makeup. We therefore benchmark identity preservation against conventional image inpainting methods, namely FcF [24] and LaMa [18]. For a fair comparison, both baselines were trained on the BeautyFace dataset [11] with the same training configuration as our method.

Evaluation Metrics. We use two metrics for quantitative evaluation. (i) Fréchet inception distance (FID) [28] measures the quality of images generated by machine learning models, particularly GANs, by measuring the similarity between two sets of images, typically real images and images generated by the model (lower is better). We use it to evaluate how well our model learns the makeup style of the dataset, by comparing the distribution of the generated makeup images with the distribution of the dataset containing our desired style. (ii) ArcFace (Additive Angular Margin Loss) [29] is typically used where faces must be verified or recognized with high accuracy, such as in security systems, smartphone unlocking, or identification processes. We use it to evaluate our model’s identity preservation, measuring how likely our results are to match the identity of the person in the makeup-free images (higher is better).
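Both metrics reduce to simple computations once features are available. The sketch below assumes feature vectors have already been extracted (for FID, conventionally Inception-v3 pooling features; for identity, ArcFace embeddings), and it assumes the ArcFace score is the cosine similarity between paired embeddings, a common choice that the text does not state explicitly. The FID follows the standard formula $\lVert\mu_a - \mu_b\rVert^2 + \operatorname{Tr}(\Sigma_a + \Sigma_b - 2(\Sigma_a\Sigma_b)^{1/2})$, computing the matrix square root through a symmetric eigendecomposition; the function names are hypothetical.

```python
import numpy as np


def _sqrtm_psd(a):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(a)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def frechet_distance(feats_a, feats_b):
    """FID between two (n_samples, dim) feature sets, each modeled as a Gaussian."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((cov_a cov_b)^(1/2)) equals Tr((R cov_b R)^(1/2)) with R = cov_a^(1/2),
    # and R cov_b R is symmetric PSD, so the stable eigh-based root applies.
    root_a = _sqrtm_psd(cov_a)
    cross = _sqrtm_psd(root_a @ cov_b @ root_a)
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * np.trace(cross))


def identity_similarity(emb_a, emb_b):
    """Row-wise cosine similarity between paired face embeddings."""
    a = emb_a / np.linalg.norm(emb_a, axis=1, keepdims=True)
    b = emb_b / np.linalg.norm(emb_b, axis=1, keepdims=True)
    return np.sum(a * b, axis=1)
```

Averaging `identity_similarity` over all test pairs and reporting its standard deviation would give a summary in the format of the ArcFace column of Tab. 2, assuming the parenthesized values there are standard deviations.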

Dataset. We trained Protégé on the BeautyFace dataset [11], which contains 2,719 high-resolution images covering various facial characteristics, expressions, poses, and lighting conditions. We use this dataset to represent a specific makeup style (i.e., basic makeup) that we expect our model to learn and follow when generating makeups. We partitioned the dataset into a training set of 2,447 images and a test set of 272 images.

Implementation Details. Not all data augmentation techniques suit our setting. We resize all images to 256 × 256 and use only random flipping as data augmentation. The flip is applied jointly to the input image and the ROI mask: whenever the image is flipped, the mask must be flipped in the same direction, because the mask must stay aligned with the image to indicate the correct region for makeup application. We use the Adam optimizer with a learning rate of 0.00001 and a batch size of 2 on an NVIDIA RTX 3070 GPU. Our model is implemented in PyTorch [30].
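The paired flip can be sketched as follows. The text does not state the flip axis, so horizontal flipping is assumed here, and `paired_random_flip` is a hypothetical name. The key point is that a single random draw decides the flip for both arrays, so the mask stays aligned with the face.

```python
import numpy as np


def paired_random_flip(image, mask, rng, p=0.5):
    """Horizontally flip an (H, W, C) image and its (H, W) mask together with probability p."""
    if rng.random() < p:
        # One draw for both arrays: flipping along the width axis keeps
        # the mask covering exactly the same face pixels as before.
        return image[:, ::-1], mask[:, ::-1]
    return image, mask
```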

4.1 Qualitative Results

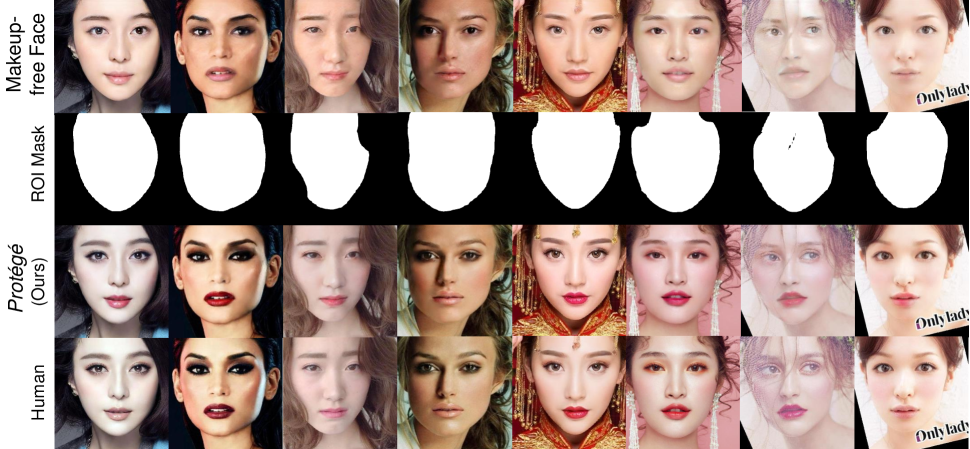

Visual comparisons in makeup application between Protégé and human-artist makeup. We visually compared Protégé’s makeup with makeup applied by human makeup artists. As shown in Fig. 3, our model generates makeup that is close to the general style of the human artists’ makeup, as also quantified by FID in Sec. 4.2.

A notable observation is Protégé’s inclination towards pinkish hues, reflecting the overall style it learned from the human makeup artists’ makeup set. This is evident in the lipstick shades of Protégé’s makeups: it prefers bright red to dark red and favors pink over nude lipstick, showing a preference for vibrant shades. Nonetheless, Protégé occasionally selects more subdued nude tones, as these also occur in the learned makeup distribution.

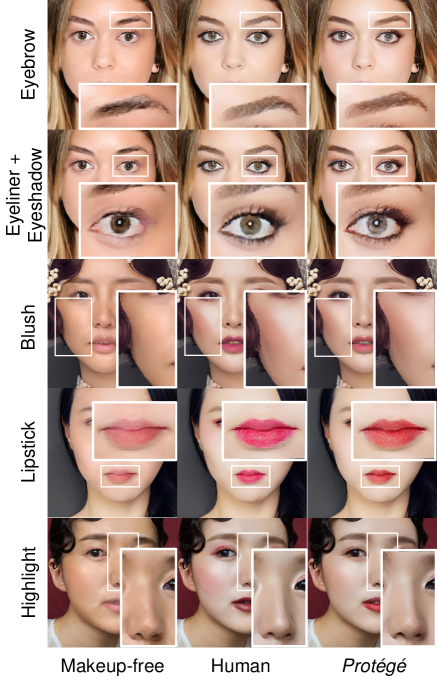

Fig. 4 highlights Protégé’s proficiency in detailed makeup application, showing its ability to capture fine details from makeups done by human makeup artists.

Visual Comparisons in Face Identity Preservation. Which of these faces belongs to the same person as the one shown in the makeup-free image?

We compared Protégé’s post-makeup results with those of the baseline models, FcF [24] and LaMa [18]. The results in Fig. 5 demonstrate Protégé’s superior ability to maintain facial identity after makeup application, just as a human makeup artist keeps the client’s identity intact. This capability sets Protégé apart from conventional image inpainting models.

4.2 Quantitative Results

| Methods | FID (↓) | ArcFace (↑) |
|---|---|---|
| LaMa [18] | 91.03 | 0.33 (0.14) |
| FcF [24] | 40.00 | 0.59 (0.14) |
| Protégé (Ours) | 27.26 | 0.89 (0.07) |

Apart from qualitative evaluation, we further evaluate our model in the makeup style learning capability and facial identity preservation.

How well did Protégé learn the makeup style from the dataset? As shown in Tab. 2, Protégé outperforms LaMa and FcF in generating style-aligned makeups, achieving the lowest FID (27.26).

How well did Protégé preserve face identity during makeup application? As shown in Tab. 2, Protégé achieves the highest ArcFace score (0.89), indicating that it effectively maintains face identity despite slight variations caused by the different makeup applied.

Together with the qualitative evaluations, these results demonstrate that our model effectively learns the makeup style and proficiently generates makeup while preserving facial identity.

4.3 User Study

| Methods | Prefer Makeup by? | Identical to Makeup-free Face? |
|---|---|---|
| LaMa [18] | - | 0.00% |
| FcF [24] | - | 5.00% |
| Human Artists | 25.00% | 100.00% |
| Protégé (Ours) | 75.00% | 100.00% |

We evaluated human preferences and perceptions of Protégé’s generated makeup. Participants answered 20 questions; each presented a face alongside two makeup versions, one done by Protégé and the other by a human makeup artist. Participants were not told which version came from Protégé and which from the human artist; they believed that both options were produced by makeup application models.

As shown in Tab. 3, Protégé’s makeup was preferred in 75.00% of cases, which surprised us. Participants made this choice believing that both options were generated by Protégé, i.e., without expecting one of them to be the work of a human makeup artist. We also interviewed the participants about the reasons for their choices. Most explained that Protégé’s makeup looked more natural and more closely resembled common everyday makeup styles. This preference suggests that Protégé not only captures and learns effectively from the dataset of human-artist makeup, but also exceeded our expectations.

We also surveyed Protégé’s ability to preserve face identity during makeup application. Each makeup-free face was shown alongside four options: (i) the makeup image by Protégé, (ii) the makeup image by a human makeup artist, (iii) the makeup image by FcF, and (iv) the makeup image by LaMa. Participants had to identify which makeup images showed the same person as the makeup-free face. As Tab. 3 shows, participants linked our makeup images with the original face in 100.00% of cases, matching the human artists and indicating our model’s success in preserving face identity.

5 Conclusion

This study addresses the limitations of conventional makeup application methods, such as manual makeup application, makeup transfer, and rule-based makeup recommendation systems using expert systems, which often cannot create intuitive and innovative makeup and therefore fall short of users’ increasing demands on digital platforms. Traditional approaches not only require extensive user interaction and makeup knowledge, but are also constrained by static rules and pre-existing makeup styles, which hinder the creation of innovative makeup looks. Our model, Protégé, introduces a new approach to makeup application. By leveraging our makeup inpainting algorithm, Protégé simplifies the learning and application processes, enabling intuitive and bespoke makeup that maintains the user’s facial identity. Comprehensive experiments on the BeautyFace dataset demonstrate Protégé’s ability to deliver personalized, diverse, and high-quality makeup on a subject’s face, redefining the user experience in digital basic makeup application.

References

- [1] Meitu, Inc., “Meitu,” https://www.meitu.com/, 2024, [Mobile app].

- [2] L’Oréal, “ModiFace,” https://www.modiface.com/, 2024, [Mobile app].

- [3] Perfect365 Technology Inc., “Perfect365,” https://www.perfect365.com/, 2024, [Mobile app].

- [4] Meitu, Inc., “BeautyPlus,” https://www.beautyplus.com/, 2024, [Mobile app].

- [5] Perfect Corp., “YouCam Makeup,” https://www.perfectcorp.com/consumer/apps/ymk, 2024, [Mobile app].

- [6] Lightricks Ltd., “Facetune2,” https://www.facetuneapp.com/, 2024, [Mobile app].

- [7] Wai-Shun Tong, Chi-Keung Tang, Michael S Brown, and Ying-Qing Xu, “Example-based cosmetic transfer,” in PG’07, 2007.

- [8] Dong Guo and Terence Sim, “Digital face makeup by example,” in CVPR, 2009.

- [9] Si Liu, Xinyu Ou, Ruihe Qian, Wei Wang, and Xiaochun Cao, “Makeup like a superstar: Deep localized makeup transfer network,” arXiv preprint arXiv:1604.07102, 2016.

- [10] Kshitij Gulati, Gaurav Verma, Mukesh Mohania, and Ashish Kundu, “BeautifAI: Personalised occasion-based makeup recommendation,” in ACML, 2023.

- [11] Qixin Yan, Chunle Guo, Jixin Zhao, Yuekun Dai, Chen Change Loy, and Chongyi Li, “BeautyREC: Robust, efficient, and component-specific makeup transfer,” in CVPR, 2023.

- [12] Taleb Alashkar, Songyao Jiang, Shuyang Wang, and Yun Fu, “Examples-rules guided deep neural network for makeup recommendation,” in AAAI, 2017.

- [13] Kristina Scherbaum, Tobias Ritschel, Matthias Hullin, Thorsten Thormählen, Volker Blanz, and Hans-Peter Seidel, “Computer-suggested facial makeup,” in CGF, 2011.

- [14] Luoqi Liu, Junliang Xing, Si Liu, Hui Xu, Xi Zhou, and Shuicheng Yan, “Wow! you are so beautiful today!,” TOMM, 2014.

- [15] Taleb Alashkar, Songyao Jiang, and Yun Fu, “Rule-based facial makeup recommendation system,” in FG 2017, 2017.

- [16] Tam V Nguyen and Luoqi Liu, “Smart mirror: Intelligent makeup recommendation and synthesis,” in ACMMM, 2017.

- [17] PRH Perera, ESS Soysa, HRS De Silva, ARP Tavarayan, MP Gamage, and KMLP Weerasinghe, “Virtual makeover and makeup recommendation based on personal trait analysis,” in ICAC, 2021.

- [18] Roman Suvorov, Elizaveta Logacheva, Anton Mashikhin, Anastasia Remizova, Arsenii Ashukha, Aleksei Silvestrov, Naejin Kong, Harshith Goka, Kiwoong Park, and Victor Lempitsky, “Resolution-robust large mask inpainting with fourier convolutions,” in WACV, 2022.

- [19] Wenbo Li, Zhe Lin, Kun Zhou, Lu Qi, Yi Wang, and Jiaya Jia, “MAT: Mask-aware transformer for large hole image inpainting,” in CVPR, 2022.

- [20] Xiaoguang Li, Qing Guo, Di Lin, Ping Li, Wei Feng, and Song Wang, “MISF: Multi-level interactive Siamese filtering for high-fidelity image inpainting,” in CVPR, 2022.

- [21] Qiankun Liu, Zhentao Tan, Dongdong Chen, Qi Chu, Xiyang Dai, Yinpeng Chen, Mengchen Liu, Lu Yuan, and Nenghai Yu, “Reduce information loss in transformers for pluralistic image inpainting,” in CVPR, 2022.

- [22] Chuanxia Zheng, Tat-Jen Cham, Jianfei Cai, and Dinh Phung, “Bridging global context interactions for high-fidelity image completion,” in CVPR, 2022.

- [23] Andreas Lugmayr, Martin Danelljan, Andres Romero, Fisher Yu, Radu Timofte, and Luc Van Gool, “RePaint: Inpainting using denoising diffusion probabilistic models,” in CVPR, 2022.

- [24] Jitesh Jain, Yuqian Zhou, Ning Yu, and Humphrey Shi, “Keys to better image inpainting: Structure and texture go hand in hand,” in WACV, 2023.

- [25] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio, “Generative adversarial nets,” NeurIPS, 2014.

- [26] Changqian Yu, Jingbo Wang, Chao Peng, Changxin Gao, Gang Yu, and Nong Sang, “BiSeNet: Bilateral segmentation network for real-time semantic segmentation,” in ECCV, 2018.

- [27] Qiao Gu, Guanzhi Wang, Mang Tik Chiu, Yu-Wing Tai, and Chi-Keung Tang, “LADN: Local adversarial disentangling network for facial makeup and de-makeup,” in ICCV, 2019.

- [28] Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter, “GANs trained by a two time-scale update rule converge to a local Nash equilibrium,” NeurIPS, 2017.

- [29] Jiankang Deng, Jia Guo, Niannan Xue, and Stefanos Zafeiriou, “ArcFace: Additive angular margin loss for deep face recognition,” in CVPR, 2019.

- [30] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al., “PyTorch: An imperative style, high-performance deep learning library,” arXiv preprint arXiv:1912.01703, 2019.