
Published in: 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC).

Please cite this paper as:

Zhaobin Mo, Yongjie Fu, and Xuan Di. “Quantifying Uncertainty In Traffic State Estimation Using Generative Adversarial Networks.”

2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2022.

Quantifying Uncertainty In Traffic State Estimation Using Generative Adversarial Networks

Abstract

This paper aims to quantify uncertainty in traffic state estimation (TSE) using generative adversarial network based physics-informed deep learning (PIDL). The uncertainty of interest arises from fundamental diagrams, that is, the mapping from traffic density to velocity. Quantifying uncertainty for the TSE problem characterizes the robustness of the predicted traffic states. Since their inception, generative adversarial networks (GANs) have become a popular probabilistic machine learning framework. In this paper, we inform GAN-based predictions using stochastic traffic flow models and develop a GAN-based PIDL framework for TSE, named “PhysGAN-TSE”. Experiments on a real-world dataset, the Next Generation SIMulation (NGSIM) dataset, show that this method is more robust for uncertainty quantification than the pure GAN model or pure traffic flow models. Two physics models, the Lighthill-Whitham-Richards (LWR) and the Aw-Rascle-Zhang (ARZ) models, are compared as the physics components of the PhysGAN, and the results show that the ARZ-based PhysGAN outperforms the LWR-based one.

I Introduction

Traffic states, represented by traffic velocity or density, can contain uncertainty from various sources, including model errors, measurement noise, inherent stochasticity in driving behavior, and random initial or boundary conditions. Imposed with these stochastic terms, traffic states are essentially spatiotemporal (ST) random fields. In this paper, we are primarily focused on uncertainty arising from driving behaviors and their resultant effect on traffic states. We aim to infer the distribution of traffic state random fields using sparse data collected from fixed-location sensors, i.e., loop detectors.

Traffic state estimation (TSE), a classical traffic problem, has long been studied with the aim of estimating traffic states from various sensor data [1]. The methods that predict traffic states and characterize the robustness of the prediction can be categorized into model-based and data-driven methods. The model-based methods assume that observed data are generated by underlying models, so that filtering methods can be applied to these models to propagate uncertainty. The data-driven methods learn distributions of uncertainty directly from data without any prior assumption about the underlying physics. The model-based methods suffer from limitations such as non-Gaussian likelihoods and high-dimensional posterior distributions [2], while the data-driven approaches require a huge amount of data to learn the posterior distributions from high-dimensional random inputs. Thus, the integration of model-based and data-driven methods, namely physics-informed deep learning (PIDL), has become a rapidly growing research area [3, 4, 5, 6].

Recent years have seen a growing trend of learning random fields using machine learning models, including but not limited to Gaussian Processes [7], Bayesian neural networks [8], and most recently, generative models [2]. Among the generative models, generative adversarial networks (GANs) have demonstrated their robustness in various applications, from image or video generation [9] to uncertainty quantification (UQ) [10]. For UQ problems, physics-informed GANs (PhysGANs) are becoming increasingly popular for quantifying uncertainty in stochastic differential equations [11, 2, 12, 13]. Little research, though, has been documented for cases where randomness arises from human behaviors or inherent dynamics. In other words, the existing literature on PhysGANs integrates a deterministic physics model into GANs, because it assumes that the equations that generate data are deterministic and that the prediction is stochastic only because of random initial or boundary conditions. In this paper, in contrast, we assume that the models that generate data are themselves stochastic due to randomness in parameters, and thus stochastic physics models need to be integrated to capture the randomness from these sources.

The contributions of this paper include:

(1) Physics-informed GANs are used to quantify uncertainty in TSE. Because traffic, constituted by human drivers, is inherently stochastic due to randomness in driving behaviors, stochastic traffic flow models are incorporated into GANs to help regularize the training process;

(2) Parameters in physics are estimated jointly with traffic state prediction;

(3) PhysGAN-TSE is applied to the real-world dataset, Next Generation SIMulation (NGSIM), to demonstrate its predictive accuracy compared to other baselines.

The rest of the paper is organized as follows: Section II reviews the state-of-the-art of PIDL on TSE. Section III introduces the preliminaries of GAN based UQ. Section IV introduces how we integrate PIDL and GAN into the framework of PhysGAN for TSE problems. Section V demonstrates how PhysGAN is applied to NGSIM to characterize uncertainty from the real-world data. Section VI concludes our work and projects future research directions in this promising arena.

II Related Work

For studies that use model-based UQ for TSE, a prior assumption is usually made about the distribution of randomness in inputs or traffic states. Existing practice follows two approaches: one adds Brownian motion on top of deterministic traffic flow models, leading to Gaussian stochastic traffic flow models [14]; the other derives intrinsically stochastic traffic flow models with more complex probabilistic distributions [15]. The former is more amenable to standard filtering methods, while the latter is more challenging to deal with and requires a large population approximation to extract the mean and variance before any filtering can be applied.

If the noise in the output traffic states is non-Gaussian and follows complex distributions, the filtering methods could fail. GANs have been used to learn complex distributions of stochasticity residing in random fields from ST data [11]. Key issues with GANs include mode collapse and training instability [16]. One solution is to regularize the training of GANs by incorporating physics into the structure of generators (and potentially discriminators), where unlabeled data are generated from generators that are guided by physics-based models, so that the search space of parameters is narrowed down.

PhysGANs have been applied to quantify uncertainty in real-world data from various domains, including flood prediction [17], blood alcohol concentration prediction [18], and porous media flow modeling [19]. However, PhysGANs have not been used to quantify uncertainty in traffic state estimation. Traffic, unlike physical systems, can exhibit highly nonlinear randomness arising from inherent human driving behavior. Thus, it poses new challenges when PhysGANs are applied.

III Preliminaries

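Let us start with a partial differential equation (PDE). Define location $x \in [0, L]$ and time $t \in [0, T]$, with $L, T \in \mathbb{R}^{+}$. Then the ST domain of interest is a continuous set of points: $\mathcal{D} = \{(x,t) \mid x \in [0,L],\ t \in [0,T]\}$. A PDE defined over the ST domain is written as:

$$\mathcal{N}[s(x,t); \lambda] = 0,\ (x,t) \in \mathcal{D}; \qquad \mathcal{B}[s(x,t)] = 0,\ (x,t) \in \partial\mathcal{D}, \tag{1}$$

where $\mathcal{N}$ is the nonlinear differential operator, $\mathcal{B}$ is the boundary condition operator, $s(x,t)$ is the exact solution of the PDE, and $\lambda$ is the physics parameter vector.

Now we approximate the PDE solution, $s(x,t)$, by a (deep) neural network (DNN) parametrized by $\theta$, i.e. $f_\theta(x,t)$, which is called a “physics uninformed neural network (PUNN).” If this PUNN is exactly equivalent to the PDE solution, then we have

$$\mathcal{N}[f_\theta(x,t); \lambda] = 0. \tag{2}$$

Otherwise we define a residual function:

$$r_\theta(x,t) := \mathcal{N}[f_\theta(x,t); \lambda]. \tag{3}$$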

If PUNN is well trained, the residual needs to be as close to zero as possible. Since NNs are normally trained with discrete data points, below we will define the potentially accessible training data in the context of TSE.

Assume the dataset consists of (1) (labeled) observation data $\{(x_o^{(i)}, t_o^{(i)}), s^{(i)}\}_{i=1}^{N_o}$, and (2) (unlabeled) collocation points $\{(x_c^{(j)}, t_c^{(j)})\}_{j=1}^{N_c}$. Here $i$ and $j$ are the indexes of observation points and collocation points, respectively, and the numbers of observation data and collocation points are denoted as $N_o$ and $N_c$, respectively. Observation data are limited to the times and locations where traffic sensors are placed. In contrast, collocation points have neither measurement requirements nor location limitations, and thus are controllable. The target values associated with collocation points are used to regularize the loss function. Accordingly, we define a loss function as the weighted average of data discrepancy and physics discrepancy. Denote the weight of the data discrepancy in the loss function as $\alpha$. The loss function is:

| (4) |
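As a rough illustration of the weighted loss described above, the sketch below combines a mean squared data discrepancy on observation points with a mean squared physics residual on collocation points. The function name `pidl_loss`, the use of mean squared errors, and the choice of `1 - alpha` as the physics weight are assumptions made for illustration; the exact form of Eq. 4 may differ.

```python
import numpy as np

def pidl_loss(pred_obs, target_obs, residual_col, alpha):
    """Weighted average of data and physics discrepancies (illustrative form).

    pred_obs, target_obs: network predictions and labels at observation points.
    residual_col: PDE residuals evaluated at collocation points.
    alpha: weight of the data discrepancy; the physics term gets 1 - alpha
           (an assumption, not taken from the paper).
    """
    pred_obs = np.asarray(pred_obs, dtype=float)
    target_obs = np.asarray(target_obs, dtype=float)
    residual_col = np.asarray(residual_col, dtype=float)
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    data_mse = np.mean((pred_obs - target_obs) ** 2)   # data discrepancy
    physics_mse = np.mean(residual_col ** 2)           # physics discrepancy
    return float(alpha * data_mse + (1.0 - alpha) * physics_mse)

# Toy numbers only; they do not come from the NGSIM experiments.
loss_example = pidl_loss([0.5, 0.7], [0.4, 0.7], [0.1, -0.1, 0.0], alpha=0.6)
```

Setting `alpha = 1` recovers a purely data-driven loss, while smaller values put more weight on the collocation-point residuals.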

We now introduce stochasticity into the PDE solution. Below we discuss how probabilistic physics-informed neural networks [2] can be used for UQ. When considering the stochasticity of a PDE, we assume the uncertainty mainly comes from two sources, the intrinsic uncertainty of the physics parameter $\lambda$ and the measurement uncertainty of the variable $s$. Accordingly, we treat the physics parameter as a random variable, i.e. $\lambda \sim p(\lambda)$, and assume the variable $s$ follows a distribution conditioned on the spatial-temporal coordinates, i.e. $s \sim p(s \mid x, t)$.

After formulating the uncertainty of the physics parameter $\lambda$ and the variable $s$, a UQ problem further assumes that there exists a mapping between the variable $s$ and a latent random variable $z$, i.e. $s = f(x, t, z)$ with $z \sim p(z)$, such that the PDE holds in a stochastic manner:

| (5) | |||

where the latent variable $z$ encodes possible randomness sources that lead to stochasticity in the observable output $s$.

With Eq. 5 generating noisy outputs that are constrained by a PDE, the UQ problem based on adversarial inference is to match the conditional distribution of the generated data, denoted as $p_\theta(s \mid x, t)$ and parametrized by $\theta$, to the conditional distribution of the observed output, which is $p(s \mid x, t)$. One widely used metric to match two distributions is the reverse Kullback-Leibler (KL) divergence.

Now we formulate the UQ problem in the context of conditional GANs. The generator is a neural network surrogate model, denoted as $G_\theta$, that approximates the ground-truth mapping mentioned before. The objective of the generator is to fool an adversarially trained discriminator $D_\phi$. Then the adversarial UQ problem becomes a min-max game:

| (6) | |||

where $\theta$ and $\phi$ are the parameters of the generator and the discriminator, respectively. $p(x, t, s)$ is the joint distribution of the spatial-temporal coordinates and the output $s$, and $p(x, t)$ is the marginal distribution of the spatial-temporal coordinates. $z$ is a standard normally distributed random variable.
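To make the min-max game concrete, the following sketch evaluates the two sides of the standard conditional GAN log-loss from discriminator outputs. This is the textbook GAN objective, used here as an assumption; the exact objective of Eq. 6 (for example, any additional entropy or physics terms) is not reproduced. The function names are illustrative.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Negative of the value function that the discriminator maximizes.

    d_real: discriminator outputs in (0, 1) on observed tuples (x, t, s).
    d_fake: discriminator outputs in (0, 1) on generated tuples (x, t, G(x, t, z)).
    """
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(-(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake))))

def generator_loss(d_fake, eps=1e-12):
    """Generator side of the min-max game: minimize E[log(1 - D(fake))]."""
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(np.mean(np.log(1.0 - d_fake)))

# An undecided discriminator (output 0.5 everywhere) gives a loss of 2 ln 2.
undecided = discriminator_loss([0.5, 0.5], [0.5, 0.5])
# A discriminator that separates real from fake well gets a lower loss.
confident = discriminator_loss([0.9, 0.95], [0.1, 0.05])
```

The generator loss decreases as the discriminator assigns higher scores to generated samples, which is the sense in which the generator tries to fool the discriminator.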

With the physics introduced into the generator, the loss functions of the generator and the discriminator can be decoupled and defined below, respectively:

| (7) | ||||

| (8) | ||||

| (9) |

where $\hat{s}_o$ and $\hat{s}_c$ are predictions of the generator on observation and collocation points, respectively. Note that the discriminator loss only depends on the observation points, the physics loss only depends on the collocation points, and the generator loss depends on both the observation and the collocation points.

IV GAN based PIDL framework for TSE (PhysGAN-TSE)

In this section, we describe how PhysGANs are applied to solve the UQ problem with latent variables.

IV-A Problem Statement

Let $\mathcal{N}_1$ and $\mathcal{N}_2$ be two general nonlinear differential operators. The problem of interest is to quantify uncertainty in the traffic density $\rho(x,t)$ and velocity $u(x,t)$ fields at each point $(x,t)$ in the ST domain, such that the following PDEs of a traffic flow model are satisfied:

| (10) |

where is the exact solution of PDEs. and are potential intrinsic and observation uncertainties, respectively, represented by random fields.

We aim to develop PUNNs to approximate $\rho$ and $u$, with time and location as inputs. We denote the approximations from the PUNNs as $\hat{\rho}$ and $\hat{u}$, respectively. To use physics to guide the training of the PUNNs, we customize the residuals on the collocation points as:

| (11a) | |||

| (11b) | |||

which are defined according to the traffic flow model in Eq. 10.

IV-B Architecture Design

We adopt two types of traffic model as the physics component, the Lighthill-Whitham-Richards (LWR) model [20] and the Aw–Rascle–Zhang (ARZ) model [21], to construct the LWR-based PhysGAN (LWR-PhysGAN) and the ARZ-based PhysGAN (ARZ-PhysGAN).

LWR-PhysGAN. The LWR model is depicted as:

$$\rho_t + (\rho u)_x = 0, \tag{12a}$$

$$u = u_{max}\left(1 - \frac{\rho}{\rho_{max}}\right). \tag{12b}$$

Eq. 12a is the conservation law; Eq. 12b defines the relation between the traffic density $\rho$ and the traffic velocity $u$, where $\rho_{max}$ and $u_{max}$ are the maximum traffic density and the maximum traffic velocity, respectively. Note that the time and locations of $\rho$ and $u$ are omitted for simplicity. Applying Eq. 12a on the collocation points, we can rewrite Eq. 11a as below:

$$\hat{r}_1 = \hat{\rho}_t + (\hat{\rho}\,\hat{u})_x. \tag{13}$$

With this residual, we can rewrite the physics loss function in the form of the LWR model as:

| (14) |
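The LWR residual and the resulting physics loss can be sketched numerically as follows. This is a minimal illustration that assumes the conservation law $\rho_t + (\rho u)_x = 0$ with a Greenshields speed-density relation, replaces automatic differentiation with finite differences on a regular grid, and takes the physics loss to be the mean squared residual. The function names, grid, and parameter values are hypothetical.

```python
import numpy as np

def greenshields_velocity(rho, rho_max, u_max):
    """Velocity implied by density under the (assumed) Greenshields relation."""
    return u_max * (1.0 - rho / rho_max)

def lwr_residual(rho, x, t, rho_max, u_max):
    """Finite-difference residual rho_t + (rho * u)_x; rho[i, j] sits at (x[i], t[j])."""
    u = greenshields_velocity(rho, rho_max, u_max)
    rho_t = np.gradient(rho, t, axis=1)       # derivative along the time axis
    flux_x = np.gradient(rho * u, x, axis=0)  # derivative of the flux along space
    return rho_t + flux_x

def lwr_physics_loss(rho, x, t, rho_max, u_max):
    """Mean squared LWR residual over the grid (assumed form of the physics loss)."""
    return float(np.mean(lwr_residual(rho, x, t, rho_max, u_max) ** 2))

# Hypothetical grid and parameters, chosen only for illustration.
x = np.linspace(-10.0, 10.0, 201)
t = np.linspace(1.0, 2.0, 101)
X, T = np.meshgrid(x, t, indexing="ij")
rho_max, u_max = 1.0, 20.0

# A rarefaction fan is an exact LWR solution, so its residual is near zero.
rho_wave = 0.5 * rho_max * (1.0 - X / (u_max * T))
# A time-independent density ramp is not a solution, so its residual is not.
rho_bad = 0.5 + 0.01 * X

loss_wave = lwr_physics_loss(rho_wave, x, t, rho_max, u_max)
loss_bad = lwr_physics_loss(rho_bad, x, t, rho_max, u_max)
```

In a PhysGAN the derivatives would typically come from automatic differentiation of the generator output with respect to its inputs; the finite-difference version here only serves to show which quantity is being penalized.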

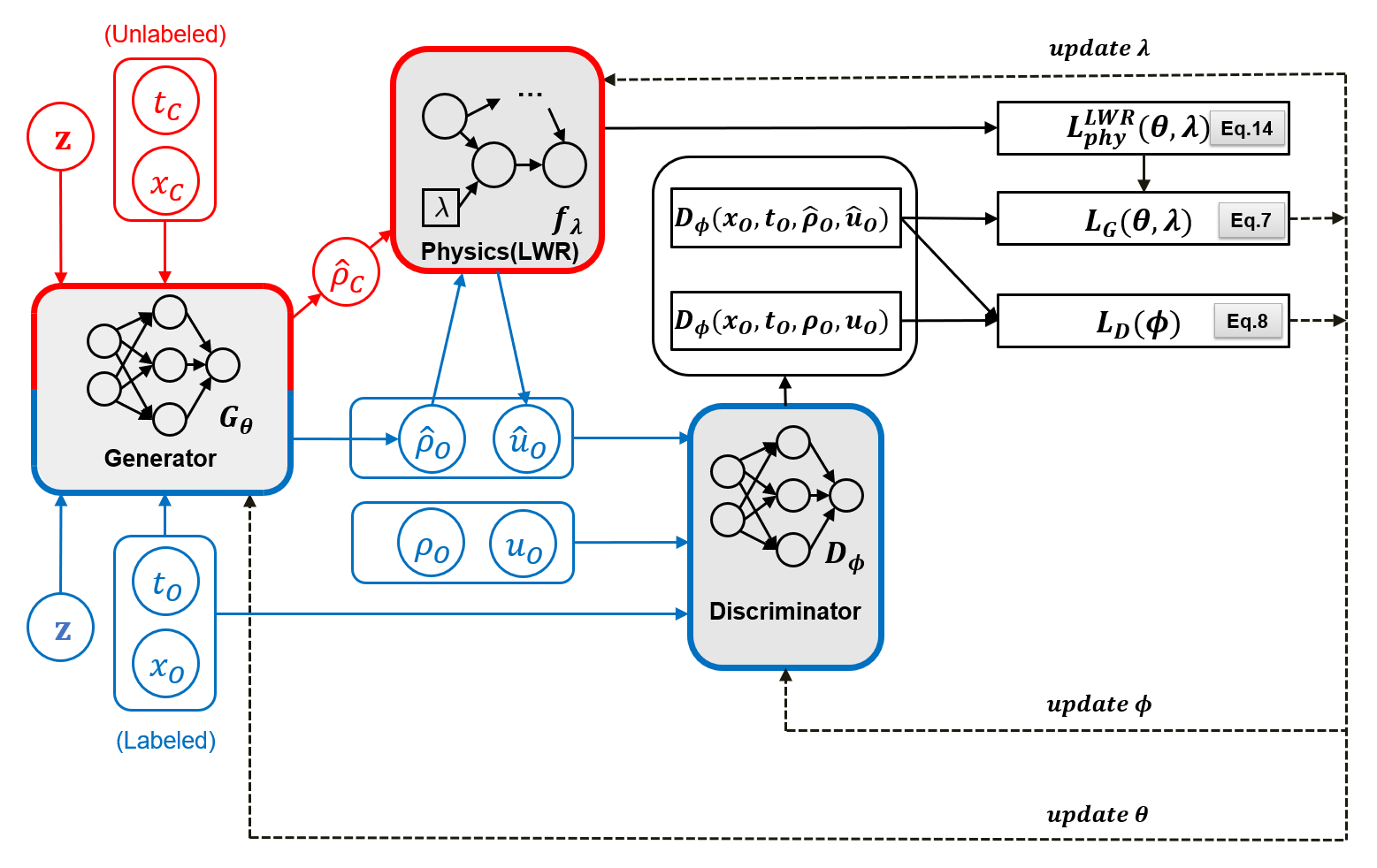

The architecture of the LWR-PhysGAN is illustrated in Fig. 1. It consists of three sub-models, namely the generator, the discriminator, and the physics, which are emphasized by shaded boxes. The blue and red colors illustrate the processes of calculating the data discrepancy and the physics discrepancy, respectively. The dashed lines indicate the process of updating parameters.

As for calculating the data discrepancy, both the observation points and the collocation points are fed into the generator along with the random number $z$. The outputs of the generator are the predicted traffic densities $\hat{\rho}_o$ and $\hat{\rho}_c$, which correspond to the observation and collocation points, respectively. Then the physics takes the predicted traffic density as input and outputs the predicted traffic velocity according to the LWR equation depicted in Eq. 12b. The prediction tuple and the ground-truth tuple are then fed into the discriminator to calculate the discriminator loss function.

As for calculating the physics discrepancy, the prediction on the collocation points, $\hat{\rho}_c$, is used to calculate the physics loss function using Eq. 14. The physics loss is then added to the generator loss function as a regularization term.

As for updating the parameters, the discriminator loss function is used to update the discriminator parameters $\phi$. The generator loss function, which is regularized by the physics loss, is used to update both the generator parameters $\theta$ and the physics parameter $\lambda$.

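ARZ-PhysGAN. The ARZ model is depicted as:

$$\rho_t + (\rho u)_x = 0, \tag{15a}$$

$$(u + h(\rho))_t + u\,(u + h(\rho))_x = \frac{U_{eq}(\rho) - u}{\tau}, \tag{15b}$$

$$h(\rho) = U_{eq}(0) - U_{eq}(\rho), \tag{15c}$$

$$U_{eq}(\rho) = u_{max}\left(1 - \frac{\rho}{\rho_{max}}\right). \tag{15d}$$

Eq. 15a is the conservation law, which is the same as Eq. 12a; $\rho_{max}$ and $u_{max}$ are the maximum traffic density and the maximum traffic velocity, respectively; $\tau$ is the relaxation time. Applying Eq. 15b on the collocation points, we can rewrite Eq. 11b as below:

$$\hat{r}_2 = (\hat{u} + h(\hat{\rho}))_t + \hat{u}\,(\hat{u} + h(\hat{\rho}))_x - \frac{U_{eq}(\hat{\rho}) - \hat{u}}{\tau}. \tag{16}$$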

Thus, we can rewrite the physics loss function in the form of the ARZ model as:

| (17) | ||||

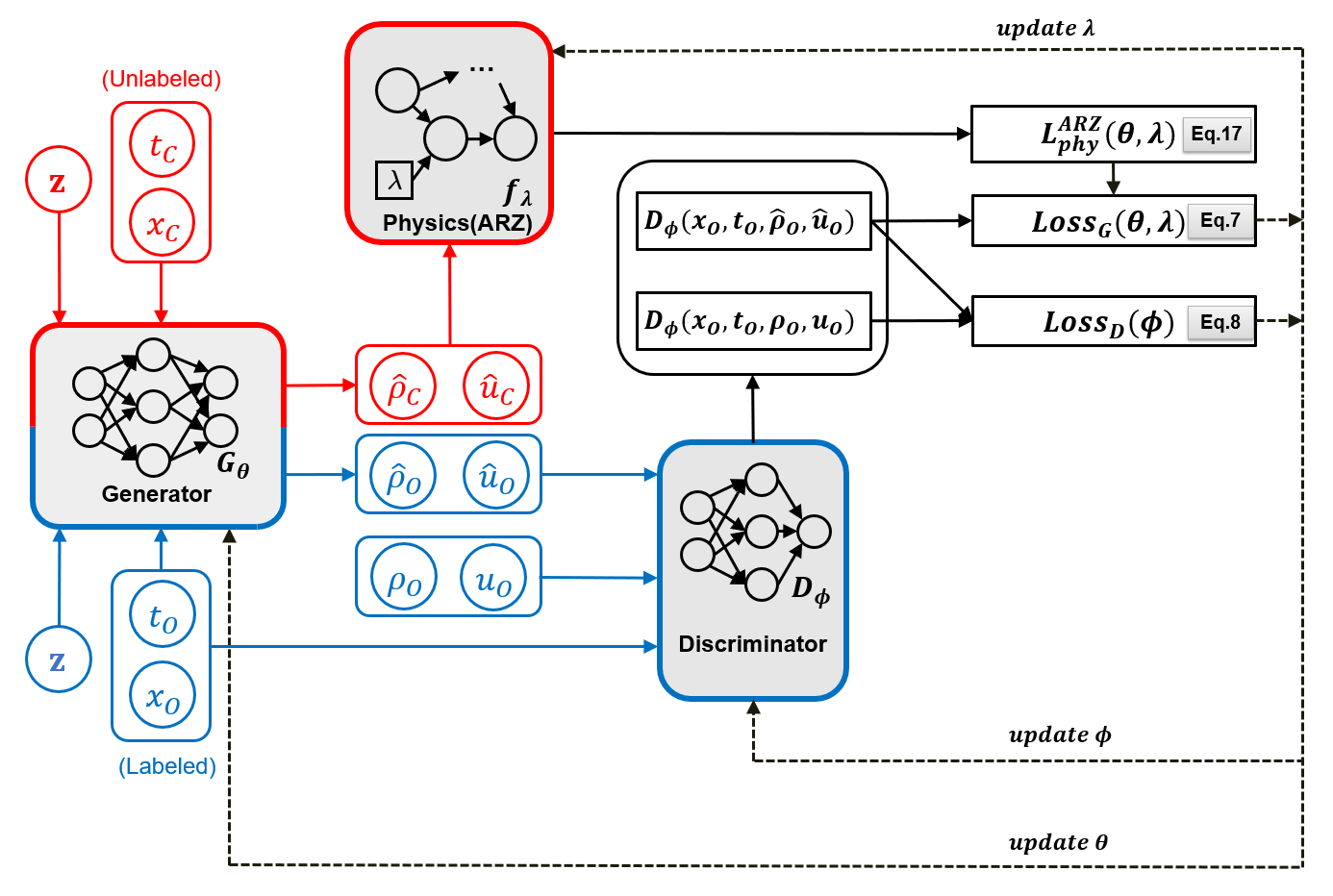

The structure of the ARZ-PhysGAN is illustrated in Fig. 2, which is similar to the LWR-PhysGAN except for two modifications due to the different physics component used:

1. The generator of the ARZ-PhysGAN has two outputs, the predicted traffic density $\hat{\rho}$ and the predicted traffic velocity $\hat{u}$.

2. To compute the physics loss as shown in Eq. 17, both $\hat{\rho}$ and $\hat{u}$ are fed into the physics.
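Because the ARZ physics takes both density and velocity as inputs, its residuals can be sketched as a function of two fields. The example below assumes a commonly used ARZ form: the conservation law plus a momentum equation in $u + h(\rho)$ with relaxation time $\tau$, a Greenshields equilibrium velocity, and $h(\rho) = U_{eq}(0) - U_{eq}(\rho)$. It uses finite differences rather than automatic differentiation, and all names and values are hypothetical.

```python
import numpy as np

def equilibrium_velocity(rho, rho_max, u_max):
    """Assumed Greenshields equilibrium velocity U_eq(rho)."""
    return u_max * (1.0 - rho / rho_max)

def arz_residuals(rho, u, x, t, rho_max, u_max, tau):
    """Finite-difference ARZ residuals; fields are indexed as [space, time].

    r1: conservation law, rho_t + (rho * u)_x.
    r2: momentum equation, w_t + u * w_x - (U_eq(rho) - u) / tau with w = u + h(rho).
    """
    u_eq = equilibrium_velocity(rho, rho_max, u_max)
    h = equilibrium_velocity(0.0, rho_max, u_max) - u_eq  # traffic pressure term
    w = u + h
    r1 = np.gradient(rho, t, axis=1) + np.gradient(rho * u, x, axis=0)
    r2 = (np.gradient(w, t, axis=1) + u * np.gradient(w, x, axis=0)
          - (u_eq - u) / tau)
    return r1, r2

# Hypothetical grid and parameters, chosen only for illustration.
x = np.linspace(0.0, 100.0, 51)
t = np.linspace(0.0, 10.0, 21)
X, _ = np.meshgrid(x, t, indexing="ij")
rho_max, u_max, tau = 1.0, 20.0, 2.0

# A uniform flow at equilibrium satisfies both equations.
rho_eq = np.full_like(X, 0.3)
u_at_eq = equilibrium_velocity(rho_eq, rho_max, u_max)
r1_eq, r2_eq = arz_residuals(rho_eq, u_at_eq, x, t, rho_max, u_max, tau)

# Driving 1 unit faster than equilibrium violates only the momentum equation.
r1_off, r2_off = arz_residuals(rho_eq, u_at_eq + 1.0, x, t, rho_max, u_max, tau)
```

The second case shows how the relaxation term alone produces a nonzero residual of size $1/\tau$ when the velocity field departs from equilibrium.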

IV-C Training Algorithm

We use Adam [22], a widely used gradient-based optimization algorithm, to update our models. The training algorithm is shown in Algorithm 1.

Algorithm 1

Initialization:

- Initial physics parameters $\lambda$.

- Initial network parameters $\theta$, $\phi$.

- Number of training iterations.

- Batch size.

- Learning rate.

- Weights of the loss functions.

Require: Adam optimizer.

Input: The observation data and collocation points.
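For readers unfamiliar with the optimizer required by Algorithm 1, a single Adam update can be sketched in a few lines. This is a generic NumPy version of the update rule from [22] with its usual default hyperparameters, applied to a toy quadratic; it is not the training loop of PhysGAN-TSE, and the helper names are hypothetical.

```python
import numpy as np

def init_adam_state(param):
    """Zero-initialized first and second moment estimates and step counter."""
    return {"t": 0, "m": np.zeros_like(param), "v": np.zeros_like(param)}

def adam_step(param, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; mutates `state` and returns the new parameter value."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1.0 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1.0 - beta2) * grad ** 2
    m_hat = state["m"] / (1.0 - beta1 ** state["t"])  # bias-corrected mean
    v_hat = state["v"] / (1.0 - beta2 ** state["t"])  # bias-corrected variance
    return param - lr * m_hat / (np.sqrt(v_hat) + eps)

# Toy problem: minimize (p - 3)^2, whose gradient is 2 * (p - 3).
p = np.array([0.0])
state = init_adam_state(p)
first_p = adam_step(p, 2.0 * (p - 3.0), state, lr=0.05)
p = first_p
for _ in range(1999):
    p = adam_step(p, 2.0 * (p - 3.0), state, lr=0.05)
```

In an adversarial setup, one such state would be kept per parameter group, for example one for the discriminator parameters and one for the generator and physics parameters, with the two updates alternating across iterations.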

V PhysGAN-TSE on Real World Data

To validate the performance and effectiveness of our proposed model and algorithm, in this section, we will apply LWR-PhysGAN and ARZ-PhysGAN to the real-world dataset.

V-A NGSIM Dataset

The Next Generation SIMulation (NGSIM) dataset [23] is an open dataset that is widely used to evaluate transportation models. It contains high-resolution vehicular information, including the position, velocity, occupied lane, and spacing, recorded every 0.1 seconds. We focus on trajectories of automobiles from all five lanes in the mainline.

V-B Baseline and Metrics

Two baselines are used for comparison:

1. Pure GAN. The pure GAN shares the same architecture with the PhysGAN except for the physics component. We adopt this baseline to verify the effectiveness of adding the physics component.

2. Extended Kalman Filter (EKF). The EKF applies a nonlinear version of the Kalman filter and is widely used in nonlinear systems like TSE. We use the ARZ-based EKF, the details of which can be found in [6].

We adopt the relative error (RE) to measure the difference between the mean of the prediction and that of the ground-truth. Also, we use the KL divergence to quantify the distributional difference between the prediction and the ground-truth.
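The two metrics can be sketched as follows. The relative error is written here as a ratio of L2 norms between predicted and ground-truth means, and the KL divergence is estimated from samples with shared histogram bins, in the direction KL(ground truth || prediction). The norm, the bin count, the smoothing constant, and the direction of the divergence are all assumptions made for illustration, and the function names are hypothetical.

```python
import numpy as np

def relative_error(pred_mean, true_mean):
    """L2 relative error between predicted and ground-truth mean fields."""
    pred_mean = np.asarray(pred_mean, dtype=float)
    true_mean = np.asarray(true_mean, dtype=float)
    return float(np.linalg.norm(pred_mean - true_mean) / np.linalg.norm(true_mean))

def kl_divergence_from_samples(true_samples, pred_samples, bins=20, eps=1e-10):
    """Histogram estimate of KL(true || pred) at one spatial-temporal coordinate."""
    true_samples = np.asarray(true_samples, dtype=float)
    pred_samples = np.asarray(pred_samples, dtype=float)
    lo = min(true_samples.min(), pred_samples.min())
    hi = max(true_samples.max(), pred_samples.max())
    p, _ = np.histogram(true_samples, bins=bins, range=(lo, hi))
    q, _ = np.histogram(pred_samples, bins=bins, range=(lo, hi))
    # Normalize counts to probabilities; eps avoids division by zero in empty bins.
    p = p / p.sum() + eps
    q = q / q.sum() + eps
    p, q = p / p.sum(), q / q.sum()
    return float(np.sum(p * np.log(p / q)))

# Synthetic samples only; they are not drawn from NGSIM.
rng = np.random.default_rng(0)
truth = rng.normal(0.0, 1.0, size=1000)
shifted = rng.normal(2.0, 1.0, size=1000)
kl_same = kl_divergence_from_samples(truth, truth)
kl_shifted = kl_divergence_from_samples(truth, shifted)
```

A prediction whose samples match the ground truth yields a divergence of zero, while a shifted prediction yields a clearly positive value, which is the behavior the metric relies on.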

V-C Experiment Setup

Experiments are conducted on an AWS cloud workstation with 8 Intel Xeon E5-2686 v4 processors and an NVIDIA V100 Tensor Core GPU with 16 GB memory in Ubuntu 18.04.3. The initial value of and are 1.0 and 50.0 , respectively. The learning rate for the Adam optimizer is , and other configurations are kept as default.

V-D Results and Discussion

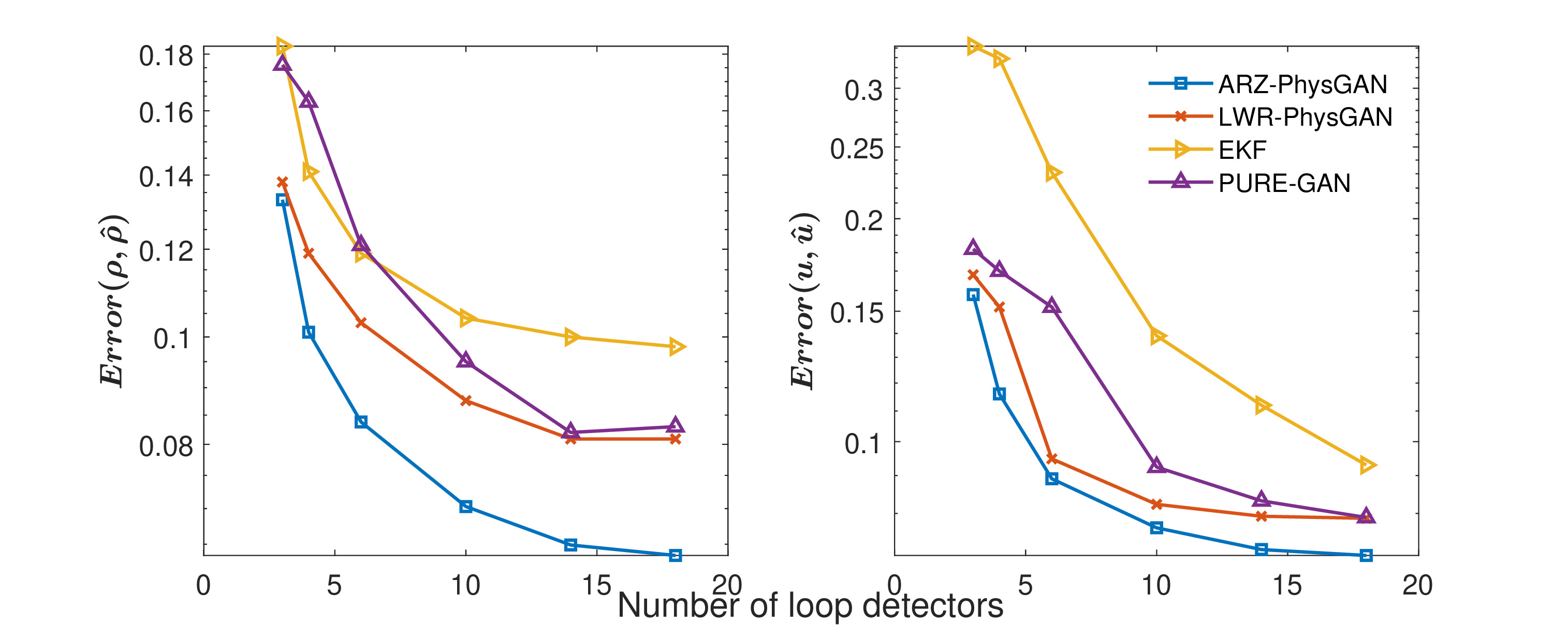

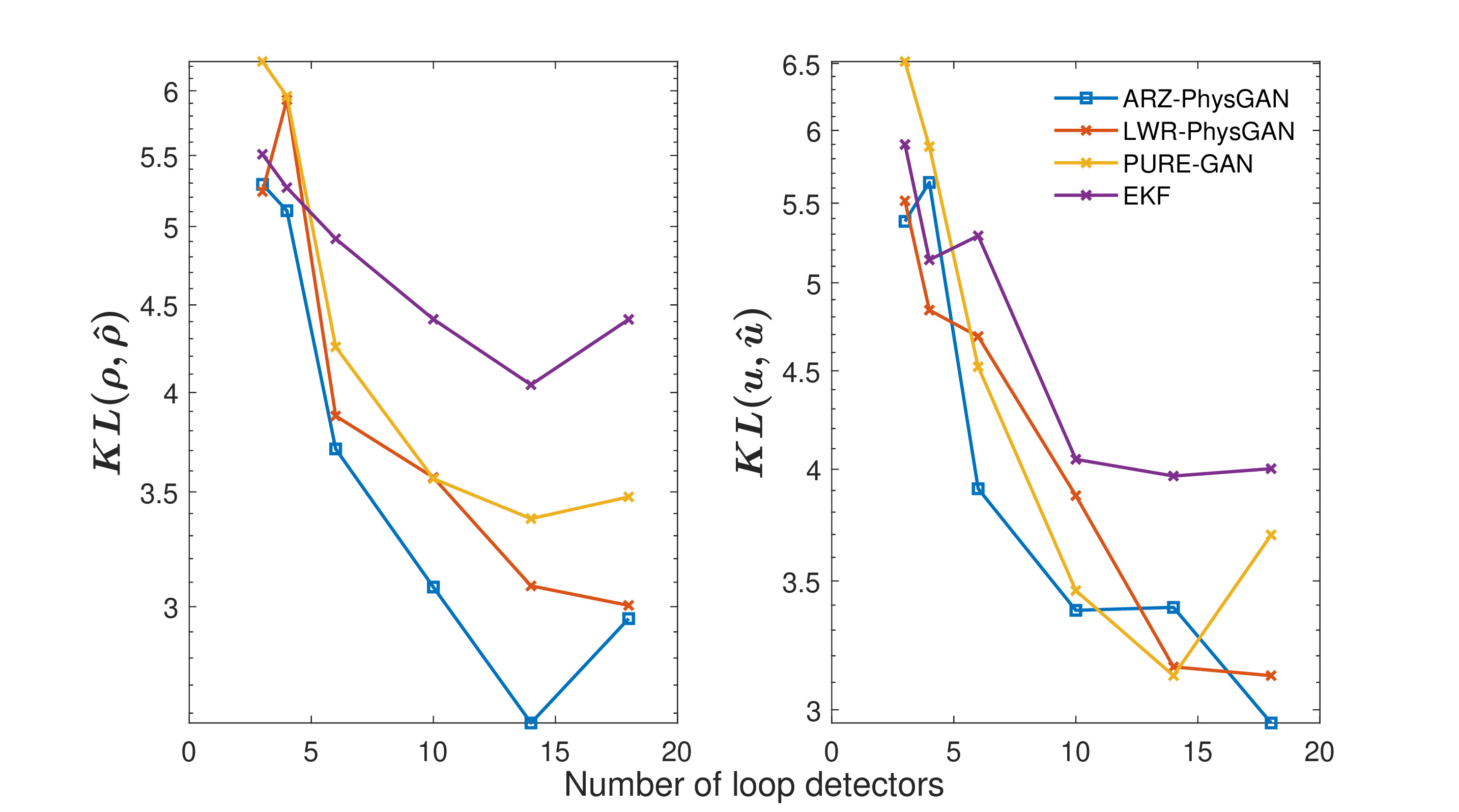

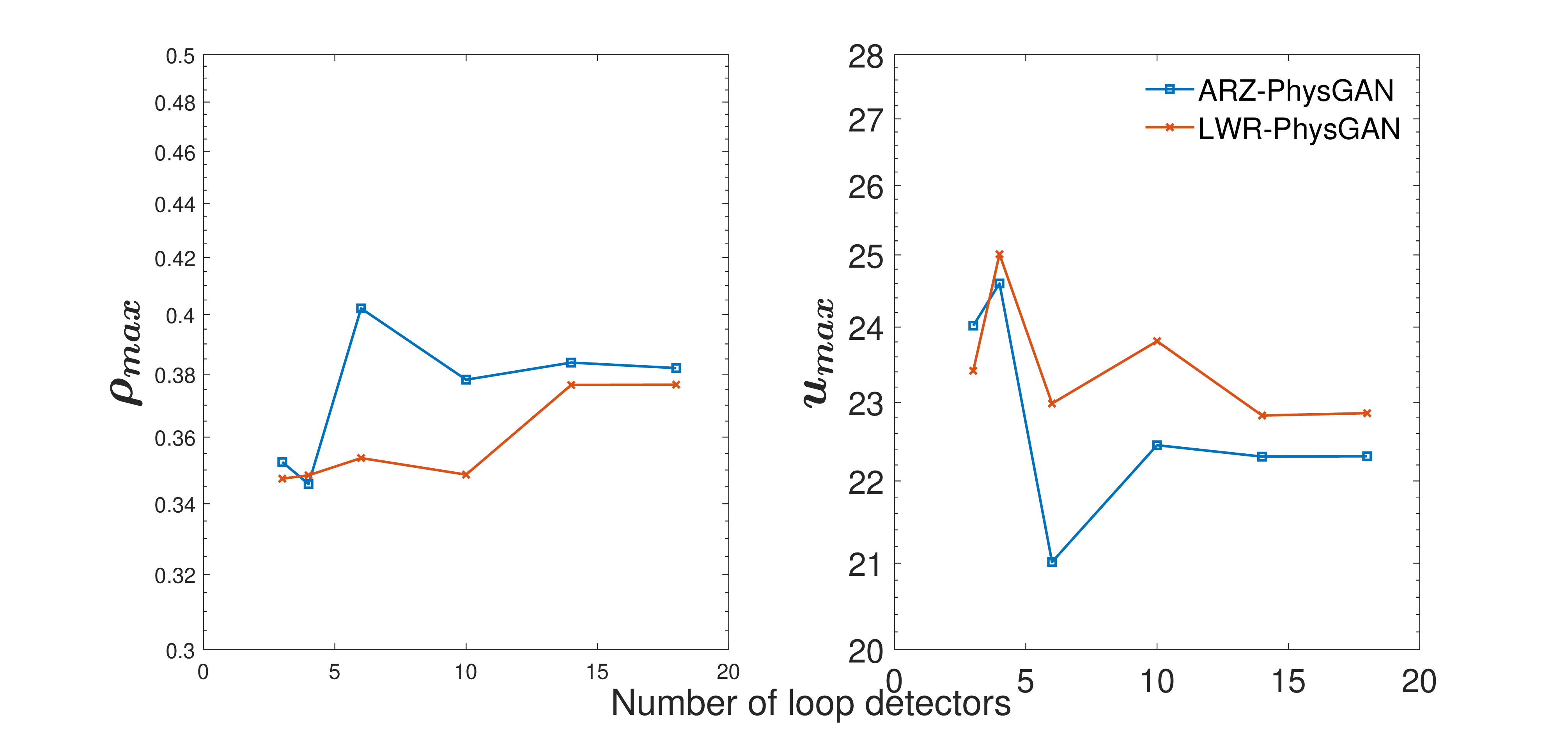

Effect of training sizes. Fig. 3 shows the REs of our proposed PhysGANs and the baselines, with the left column for the RE of the traffic density and the right column for the RE of the traffic velocity. The x-axis is the number of loop detectors, and the y-axis is the RE. Different marker types and colors distinguish the models: blue lines with squares for the ARZ-PhysGAN, red lines with crosses for the LWR-PhysGAN, yellow lines with rotated triangles for the EKF, and purple lines with triangles for the pure GAN. From this figure, we can see that the ARZ-PhysGAN outperforms the others across all numbers of loop detectors, followed by the LWR-PhysGAN. The superior performance of the ARZ-PhysGAN compared to the LWR-PhysGAN can be explained by the better compatibility of the ARZ model with the NGSIM data. In addition, both the ARZ- and LWR-PhysGAN outperform the pure GAN by a significant margin, which demonstrates the effectiveness of exploiting physics information.

Fig. 4 shows the KL divergences under different numbers of loop detectors. The x-axis is the number of loop detectors, and the y-axis is the value of the KL divergence for the prediction of the traffic density (left) and the traffic velocity (right). A lower KL divergence indicates better performance. Although the LWR-PhysGAN, the ARZ-PhysGAN, and the pure GAN achieve similar KL divergences for the traffic velocity, the ARZ-PhysGAN outperforms the other models by a significant margin in terms of the KL divergence of the traffic density.

Estimation of physics parameters. Fig. 5 shows the mean of the estimated physics parameters, the left for and the right for , where the x-axis is the number of loop detectors. The line specification is the same as Fig. 3. From the figure we can see that both and converge as the number of loop detectors increase. The converged values for , of LWR and ARZ model are and , respectively, when the number of loop detectors is 18. The units of and are and , respectively. The converged values of , are reasonable for the highway scenario.

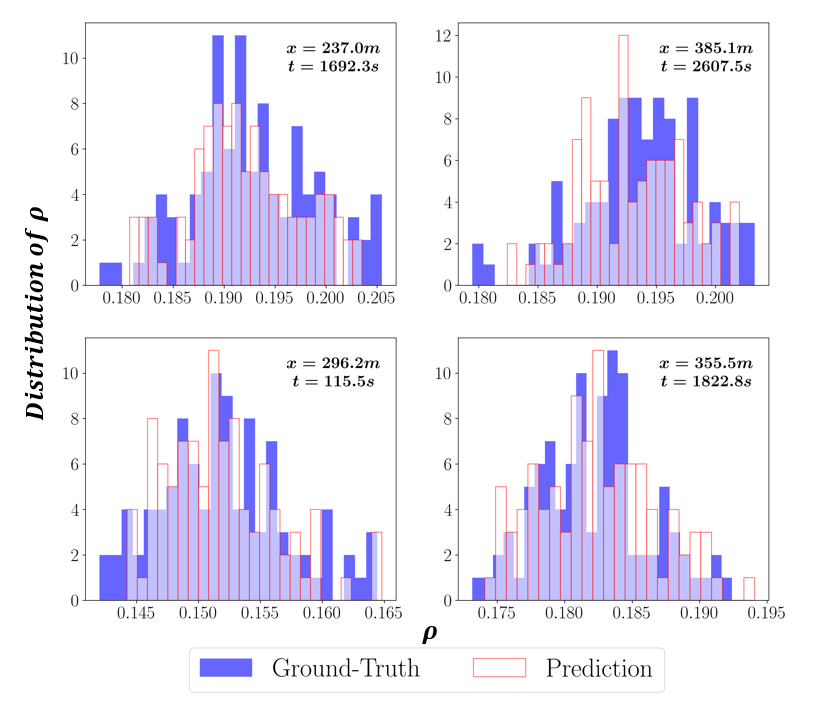

Visualization of prediction distribution. Fig. 6 compares the ground-truth traffic density distribution with that predicted by the ARZ-PhysGAN, with each subfigure corresponding to a randomly sampled spatial-temporal coordinate. The x-axis is the traffic density and the y-axis is the corresponding distribution, where blue is for the ground truth and the transparent one is for the prediction. Most parts of the predicted and ground-truth distributions overlap, which demonstrates that our proposed model can estimate the uncertainty of real-world traffic states well.

VI Conclusions

This paper proposes PhysGANs to quantify the uncertainty in the TSE problem. The effectiveness of the proposed model is verified by conducting experiments on the real-world NGSIM dataset, where the ARZ-PhysGAN has the best performance, followed by the LWR-PhysGAN. In summary, we show that the GAN model can achieve an enhanced prediction accuracy in terms of the RE and KL divergence if physics information is used to guide the training. We also show that the ARZ-PhysGAN outperforms the LWR-PhysGAN because the ARZ model can better capture the real-world traffic dynamics.

This work can be further improved in two directions. First, although adding physics can help stabilize the training process, the GAN is still hard to train due to the min-max game. One possible solution is to use a normalizing flow [24] as the generator, which can explicitly compute the data likelihood, so that the discriminator is not needed. Second, the proposed model needs to be re-trained if it is applied to other roads, or to the same road within a new time slot, which limits its real-world application. Thus, the generalizability of the model to unseen scenarios is worth investigating.

References

- [1] T. Seo, A. M. Bayen, T. Kusakabe, and Y. Asakura, “Traffic state estimation on highway: A comprehensive survey,” Annual Reviews in Control, vol. 43, pp. 128–151, 2017.

- [2] Y. Yang and P. Perdikaris, “Adversarial uncertainty quantification in physics-informed neural networks,” Journal of Computational Physics, vol. 394, pp. 136–152, 2019.

- [3] M. Raissi, “Deep hidden physics models: Deep learning of nonlinear partial differential equations,” Journal of Machine Learning Research, vol. 19, no. 1, pp. 932–955, Jan. 2018.

- [4] R. Shi, Z. Mo, and X. Di, “Physics-informed deep learning for traffic state estimation: A hybrid paradigm informed by second-order traffic models,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 1, pp. 540–547, May 2021.

- [5] Z. Mo, R. Shi, and X. Di, “A physics-informed deep learning paradigm for car-following models,” Transportation Research Part C: Emerging Technologies, vol. 130, p. 103240, 2021.

- [6] R. Shi, Z. Mo, K. Huang, X. Di, and Q. Du, “A physics-informed deep learning paradigm for traffic state and fundamental diagram estimation,” IEEE Transactions on Intelligent Transportation Systems, 2021.

- [7] Y. Yuan, Z. Zhang, X. T. Yang, and S. Zhe, “Macroscopic traffic flow modeling with physics regularized gaussian process: A new insight into machine learning applications in transportation,” Transportation Research Part B: Methodological, vol. 146, pp. 88–110, 2021.

- [8] P. L. McDermott and C. K. Wikle, “Bayesian recurrent neural network models for forecasting and quantifying uncertainty in spatial-temporal data,” Entropy, vol. 21, no. 2, p. 184, 2019.

- [9] M. Chu, Y. Xie, J. Mayer, L. Leal-Taixé, and N. Thuerey, “Learning temporal coherence via self-supervision for gan-based video generation,” ACM Transactions on Graphics (TOG), vol. 39, no. 4, pp. 75–1, 2020.

- [10] D. V. Patel and A. A. Oberai, “Gan-based priors for quantifying uncertainty in supervised learning,” SIAM/ASA Journal on Uncertainty Quantification, vol. 9, no. 3, pp. 1314–1343, 2021.

- [11] D. Zhang, L. Lu, L. Guo, and G. E. Karniadakis, “Quantifying total uncertainty in physics-informed neural networks for solving forward and inverse stochastic problems,” Journal of Computational Physics, vol. 397, p. 108850, 2019.

- [12] L. Yang, D. Zhang, and G. E. Karniadakis, “Physics-informed generative adversarial networks for stochastic differential equations,” SIAM Journal on Scientific Computing, vol. 42, no. 1, pp. A292–A317, 2020.

- [13] A. Daw, M. Maruf, and A. Karpatne, “Pid-gan: A gan framework based on a physics-informed discriminator for uncertainty quantification with physics,” in Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 2021, pp. 237–247.

- [14] Y. Wang, M. Papageorgiou, A. Messmer, P. Coppola, A. Tzimitsi, and A. Nuzzolo, “An adaptive freeway traffic state estimator,” Automatica, vol. 45, no. 1, pp. 10–24, 2009.

- [15] S. E. Jabari and H. X. Liu, “A stochastic model of traffic flow: Theoretical foundations,” Transportation Research Part B: Methodological, vol. 46, no. 1, pp. 156–174, 2012.

- [16] M. Wiatrak, S. V. Albrecht, and A. Nystrom, “Stabilizing generative adversarial networks: A survey,” arXiv preprint arXiv:1910.00927, 2019.

- [17] K. Qian, A. Mohamed, and C. Claudel, “Physics informed data driven model for flood prediction: application of deep learning in prediction of urban flood development,” arXiv preprint arXiv:1908.10312, 2019.

- [18] C. Oszkinat, S. E. Luczak, and I. Rosen, “Uncertainty quantification in estimating blood alcohol concentration from transdermal alcohol level with physics-informed neural networks,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [19] B. Siddani, S. Balachandar, W. C. Moore, Y. Yang, and R. Fang, “Machine learning for physics-informed generation of dispersed multiphase flow using generative adversarial networks,” Theoretical and Computational Fluid Dynamics, vol. 35, no. 6, pp. 807–830, 2021.

- [20] M. J. Lighthill and G. B. Whitham, “On kinematic waves II. A theory of traffic flow on long crowded roads,” Proceedings of the Royal Society of London. Series A. Mathematical and Physical Sciences, vol. 229, no. 1178, pp. 317–345, 1955.

- [21] A. Aw and M. Rascle, “Resurrection of “second order” models of traffic flow,” SIAM Journal on Applied Mathematics, vol. 60, no. 3, pp. 916–938, 2000.

- [22] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [23] V. Punzo, M. T. Borzacchiello, and B. Ciuffo, “On the assessment of vehicle trajectory data accuracy and application to the next generation simulation (ngsim) program data,” Transportation Research Part C: Emerging Technologies, vol. 19, no. 6, pp. 1243–1262, 2011.

- [24] L. Dinh, J. Sohl-Dickstein, and S. Bengio, “Density estimation using real nvp,” arXiv preprint arXiv:1605.08803, 2016.