Quantiled conditional variance, skewness, and kurtosis by Cornish-Fisher expansion

Abstract

The conditional variance, skewness, and kurtosis play a central role in time series analysis. These three conditional moments (CMs) are often studied by some parametric models but with two big issues: the risk of model mis-specification and the instability of model estimation. To avoid the above two issues, this paper proposes a novel method to estimate these three CMs by the so-called quantiled CMs (QCMs). The QCM method first adopts the idea of Cornish-Fisher expansion to construct a linear regression model, based on different estimated conditional quantiles. Next, it computes the QCMs simply and simultaneously by using the ordinary least squares estimator of this regression model, without any prior estimation of the conditional mean. Under certain conditions, the QCMs are shown to be consistent with the convergence rate . Simulation studies indicate that the QCMs perform well under different scenarios of Cornish-Fisher expansion errors and quantile estimation errors. In the application, the study of QCMs for three exchange rates demonstrates the effectiveness of financial rescue plans during the COVID-19 pandemic outbreak, and suggests that the existing “news impact curve” functions for the conditional skewness and kurtosis may not be suitable.

keywords:

and

t1Address correspondence to Ke Zhu: Department of Statistics and Actuarial Science, University of Hong Kong, Hong Kong. E-mail: mazhuke@hku.hk

1 Introduction

Learning the conditional variance, skewness, and kurtosis of a univariate time series is the core issue in many financial and economic applications. The classical tools to study the conditional variance are the generalized autoregressive conditional heterosecdasticity (GARCH) model and its variants. See Engle (1982), Bollerslev (1986), Francq and Zakoïan (2019), and references therein. However, except for some theoretical works on parameter estimation in Escanciano (2009) and Francq and Thieu (2019), the GARCH-type models commonly assume independent and identically distributed (i.i.d.) innovations, resulting in the constant conditional skewness and kurtosis. As argued by Samuelson (1970) and Rubinstein (1973), the higher moments like skewness and kurtosis are nonnegligible, since they are not only exemplary evidence of the non-normal returns but also relevant to the investor’s optimal decision. Along this way, a large body of literature has demonstrated the importance of conditional skewness and kurtosis in portfolio selection (Chunhachinda et al., 1997), asset pricing (Harvey and Siddique, 2000), risk management (Bali et al., 2008), return predictability (Jondeau et al., 2019), and many others. These empirical successes indicate the necessity to learn the dynamic structures of the conditional skewness and kurtosis simultaneously with the conditional variance.

Although there is a large number of studies on the conditional variance, only a few of them have taken account of the conditional skewness and kurtosis. The pioneer works towards this goal are Hansen (1994) and Harvey and Siddique (1999), followed by Jondeau and Rockinger (2003), Brooks et al. (2005), León et al. (2005), Grigoletto and Lisi (2009), and León and N̄íguez (2020). All of these works assume a peculiar conditional distribution on the innovations of GARCH-type model, where the conditional skewness or kurtosis either directly has an analogous GARCH-type dynamic structure rooting in re-scaled shocks or indirectly depends on dynamic structure of distribution parameters. See also Francq and Sucarrat (2022) and Sucarrat and Grønneberg (2022) for a different investigation of conditional skewness and kurtosis via the GARCH-type model with a time-varying probability of zero returns. However, the aforementioned parametric methods have two major shortcomings: First, they inevitably have the risk of using wrongly specified parametric models or innovation distributions; Second, they usually have unstable model estimation results in the presence of dynamic structure of skewness and kurtosis.

This paper proposes a new novel method to simultaneously learn the conditional variance, skewness, and kurtosis by the so-called quantiled conditional variance, skewness, and kurtosis, respectively. Our three quantiled conditional moments (QCMs) are formed based on the spirit of the Cornish-Fisher expansion (Cornish and Fisher, 1938), which exhibits a fundamental relationship between the conditional quantiles and CMs. By replacing the unknown conditional quantiles with their estimators at quantile levels, the QCMs (with respect to variance, skewness, and kurtosis) are simply computed at each fixed timepoint by using the ordinary least squares (OLS) estimator of a linear regression model, which stems naturally from the Cornish-Fisher expansion. Surprisingly, our way to compute the QCMs does not require any estimator of the conditional mean. The precision of the QCMs is controlled by the errors of the proposed linear regression model, which comprises two components: First, the expansion error encompasses higher-order conditional moments in the Cornish-Fisher expansion that are not taken as the regressors in the linear regression model; Second, the approximation error arises from the use of estimated conditional quantiles (ECQs). Under certain conditions on the regression model error, we show that the QCMs are consistent estimators of the corresponding CMs with the convergence rate . Simulation studies reveal that, when considering various scenarios of approximation errors caused by the biased ECQs from the use of contaminated or mis-specified conditional quantile models, (i) the quantiled conditional variance and skewness exhibit robust and satisfactory performance, regardless of the non-negligibility of expansion error; (ii) the quantiled conditional kurtosis has a larger dispersion for heavier-tailed data with non-negligible expansion error, which is unavoidable due to the less accuracy of CF expansion on the heavier-tailed distributions (Lee and Lin, 1992).

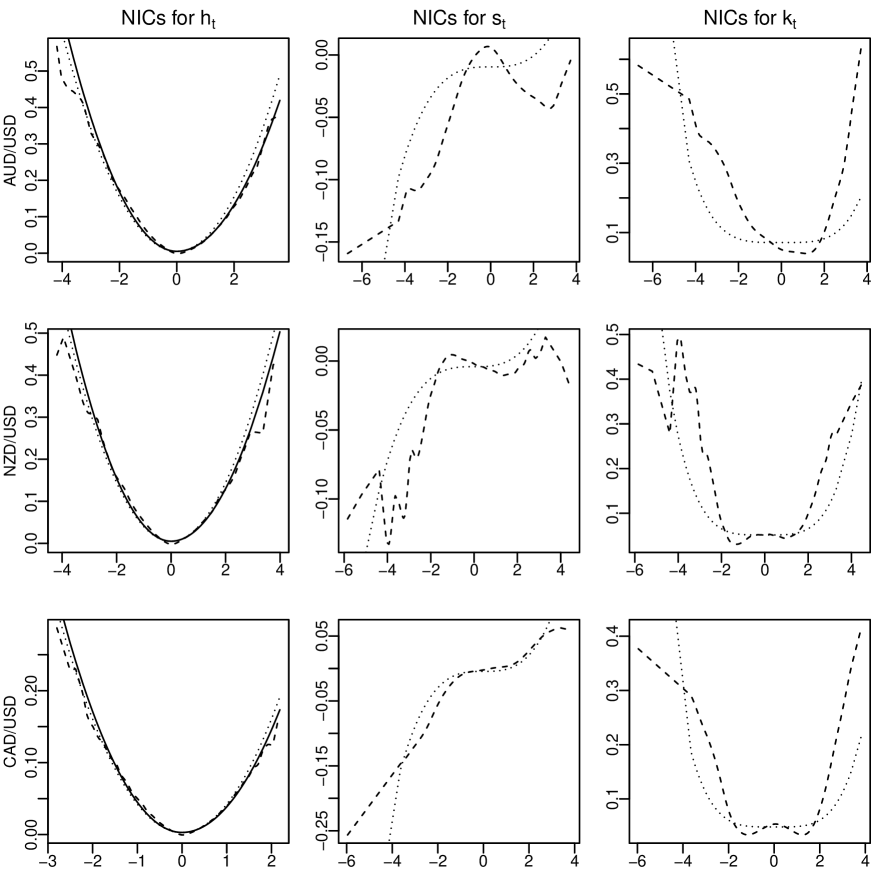

In the application, we study the QCMs of return series for three exchange rates. During the COVID-19 pandemic outbreak in March 2020, we find that the values of quantiled conditional variance and kurtosis increased rapidly, and the values of quantiled conditional skewness decreased sharply before March 19 or 20 in all of examined exchange rates, shedding light on the worldwide perilous financial crisis at that time. After March 19 or 20, we find that the values of quantiled conditional variance, skewness, and kurtosis exhibited totally opposite trends, so demonstrating the effectiveness of financial rescue plans issued by governments. Moreover, since the existing parametric forms of “news impact curve” (NIC) functions for the CMs are chosen in an ad-hoc way (Engle and Ng, 1993; Harvey and Siddique, 1999; León et al., 2005), we give a data-driven method to scrutinize the parametric forms of the NIC functions by using the QCMs. Our findings suggest that the parametric forms of NIC functions for conditional variance are appropriate, while those for conditional skewness and kurtosis may be unsuitable.

It is worth noting that our QCM method essentially transforms the problem of CM estimation to that of conditional quantile estimation. This brings us two major advantages over the aforementioned parametric methods, although we need do the conditional quantile estimation different times to implement the QCM method, and could face the risk of getting inaccurate estimate of conditional kurtosis for the very heavy-tailed data.

First, the QCM method can largely reduce the risk of model mis-specification, since the QCMs are simultaneously computed without any prior estimation of the conditional mean, and their consistency holds even when the specifications of conditional quantiles are mis-specified. This advantage is attractive and unexpected, since we usually have to estimate conditional mean, variance, skewness (or kurtosis) successively via some correctly specified parametric models. The reason of this advantage is that the QCM method is regression-based. Specifically, the conditional mean formally becomes one part of the intercept parameter, so it has no impact on the QCMs that are computed only from the OLS estimator of all non-intercept parameters; meanwhile, the impact of biased ECQs from the use of wrongly specified conditional quantile models can be aggregately offset by another part of the intercept parameter, ensuring the consistency of QCMs to a large extent. In a sense, without specifying any parametric forms of CMs, this important feature allows us to view the QCMs as the “observed” CMs, and consequently, many intriguing but hardly implemented empirical studies could become tractable based on the QCMs (see, e.g., our empirical studies on NICs for the CMs).

Second, the QCM method can numerically deliver more stable estimators of the CMs than the parametric methods. As shown in Jondeau and Rockinger (2003), there exists a moment issue that places a necessary nonlinear constraint on the conditional skewness and kurtosis, leading to a complex restriction on the admission region of model parameters. This restriction not only raises the computational burden of parameter estimation but also makes the estimation result unstable, so it has been rarely considered in the existing parametric methods. In contrast, the QCM method directly computes the QCMs at each fixed timepoint, and this interesting feature ensures that the nonlinear constraint on the conditional skewness and kurtosis can be simply examined using the computed QCMs at each timepoint. Particularly, if this nonlinear constraint is violated at some timepoints, it is straightforward to replace the OLS estimator with a constrained least squares estimator to propose the QCMs which then satisfy the constraint automatically.

The remaining paper is organized as follows. Section 2 proposes the QCMs based on the linear regression, and discusses the issues of conditional mean and moment constraints. Section 3 establishes the asymptotics of the QCMs. Section 4 provides the practical implementations of the QCMs. Simulation studies are given in Section 5. An application to study the QCMs for three exchange rates and their related NICs is offered in Section 6. Concluding remarks are presented in Section 7. Proofs and some additional simulation results are deferred into the supplementary materials.

2 Quantiled Conditional Moments

2.1 Definition

Let be a time series of interest with length , and be its available information set up to time . Given , the conditional mean, variance, skewness, and kurtosis of at timepoint are defined as and

| (2.1) |

respectively. Below, we show how to estimate these three conditional moments in (2.1) by using the Cornish-Fisher expansion (Cornish and Fisher, 1938) at a fixed timepoint .

Let be the conditional quantile of at the quantile level . According to the Cornish-Fisher expansion, we have

| (2.2) |

where with being the distribution function of , and contains all remaining terms on the higher-order conditional moments. Taking quantile levels , , equation (2.2) entails the following regression model with deterministic explanatory variables but random coefficients :

| (2.3) |

where , with ,

| (2.4) |

and . We call the expansion error, since it comes from the Cornish-Fisher expansion but can not be explained by adequately.

Next, we aim to obtain the estimators of , , and through the estimator of in (2.4). To achieve this goal, we replace the unobserved with its estimator , and then rewrite model (2.3) as follows:

| (2.5) |

where with being an estimator of , and with . Clearly, quantifies the error caused by using to approximate , so it can be termed as the approximation error. Consequently, considering the total number of and can be viewed as the gross error. We should mention that any two quantile levels and in (2.5) are allowed to be the same, as long as and are different due to the use of two different conditional quantile estimation methods. In other words, model (2.5) allows us to simply pool different information of conditional quantiles from different channels of estimation methods at any fixed quantile level.

Although is expected to have values oscillating around zero, it may not always have mean zero. Therefore, for the purpose of identification, we add a deterministic term into model (2.5) to form the following regression model:

| (2.6) |

where , , and with the intercept parameter .

Let be an vector with entries , be an matrix with rows , and be an vector with entries . Then, the ordinary least squares (OLS) estimator of in (2.6) is

| (2.7) |

According to (2.4), we rationally use in (2.7) to propose the estimators , , and for , , and , respectively, where

| (2.8) |

We call , , and the quantiled conditional variance, skewness, and kurtosis of , since they are estimators of , , and , by using the estimated conditional quantiles (ECQs) of . Clearly, provided different ECQs (that is, different in (2.6)), our three quantiled conditional moments (QCMs) in (2.8) are easy-to-implement, since their computation only relies on the OLS estimator .

2.2 The conditional mean issue

Using in (2.7), we can estimate but not due to the presence of . Hence, we are unable to form the quantiled conditional mean of to estimate . Interestingly, our way to compute , , and does not require an estimator of . This is far beyond our expectations, since normally we have to first estimate (or model) and then , , and .

Although the estimation of is not required to compute , , and , knowing the dynamic structure of is still important in practice. Note that is often assumed to be an unknown constant in accordance with the efficient market hypothesis, and this constant assumption can be examined by the consistent spectral tests for the martingale difference hypothesis (MDH) in Escanciano and Velasco (2006). If the constant assumption is rejected by these tests, the dynamic structure of manifests and is usually specified by a linear model (e.g., the autoregressive moving-average model) or a nonlinear model (e.g., the threshold autoregressive model); see Fan and Yao (2003) and Tsay (2005) for surveys. In this case, the model correctly specifies the dynamic structure of if and only if its model error is an MD sequence, a statement which can be consistently checked by two spectral tests for the MDH on unobserved model errors in Escanciano (2006). Hence, it is usually tractable for practitioners to come up with a valid parametric model for in most of applications.

2.3 The moment constraints issue

Note that , , , and can be expressed in terms of the first four non-central moments , , , and of , where . Therefore, the existence of , , , and is equivalent to that of , , , and , and the latter requires the existence of a non-decreasing function such that

To ensure this existence, Theorem 12.a in Widder (1946) indicates that the following condition must hold for , , , and :

| (2.9) |

By some direct calculations, it is not hard to see that condition (2.9) is equivalent to

| (2.10) |

Condition (2.10) above places two necessary moment constraints on , , and . When , , and are specified by some parametric models with unknown parameters, the first moment constraint usually can be easily handled, but the second moment constraint restricts the admission region of unknown parameters in a very complex way, so that the model estimation becomes quite inconvenient and unstable. This is the reason why the second moment constraint has been rarely taken into account in the literature except Jondeau and Rockinger (2003).

Impressively, the moment constraints issue above is not an obstacle for our QCMs, since the QCMs estimate the CMs directly at each fixed timepoint . In view of the relationship between the QCMs and in (2.8), we know that the QCMs satisfy two constraints in (2.10) if and only if and . Since the constraint holds automatically, we indeed only need to check whether

| (2.11) |

In practice, the constraint in (2.11) can be directly examined after the QCMs are computed. Our applications in Section 6 below show that this constraint holds at all examined timepoints. In other applications, if the constraint in (2.11) does not hold at some timepoints , we can easily re-estimate in (2.6) by the constrained least squares estimation method with the constraint , so that the resulting QCMs satisfy the constraint in (2.11) automatically at these timepoints.

3 Asymptotics

This section studies the asymptotics of , , and at a fixed timepoint . Let denote convergence in probability. To derive the consistency of , , and in (2.8), the following assumptions are needed.

Assumption 3.1.

is uniformly positive definite.

Assumption 3.2.

as .

We offer some remarks on the aforementioned assumptions. Assumption 3.1 is regular for linear regression models, and it holds as long as is fully ranked (i.e., Rank()=4). Because , Assumption 3.2 is equivalent to

| (3.1) |

where , , and . By law of large numbers for dependent and heteroscedastic data sequence (Andrews, 1988), it is reasonable to assert that and . Then, since , condition (3.1) holds if

| (3.2) |

Condition (3.2) reveals an important fact that the role of is to offset the possible non-identification effect caused by the non-zero mean of . In other words, to achieve the identification, should automatically tend to minimize the absolute difference

for large . Clearly, if for large , Condition (3.2) holds automatically, so then Assumption 3.2 most likely holds.

Next, we study the behavior of in different cases. For the first case that for all , we have with . For the second case that for most of , we also have with . For the third case that and for large , we again have with . For other cases, we still have the chance to ensure , depending on the behavior of across . In summary, the condition that for all is not necessary for the validity of Assumption 3.2. This implies that the QCMs are able to have robust performances across diverse error scenarios, including situations where has the large non-zero absolute values across (that is, the large biases of ECQs caused by the use of mis-specified conditional quantile models).

As expected, the condition that for large may not always hold, and therefore, under certain circumstances, Assumption 3.2 may fail. For example, when the tail of becomes heavier, the impact of higher-order conditional moments in the Cornish-Fisher expansion becomes larger. In this case, tends to have a more non-negligible exotic behavior across , so that it is harder to offset the non-identification effect via , with the value of farther away from zero. Indeed, our simulation studies in Section 5 below indicate that the presence of non-negligible has a larger impact on the consistency of than and , which perform robustly in terms of the heavy-tailedness of .

The following theorem establishes the consistency of , , and .

Remark 3.1.

Remark 3.2.

Let denote convergence in distribution. We raise the following higher order assumption to replace Assumption 3.2:

Assumption 3.3.

as , where is an identity matrix, and is bounded and uniformly positive definite.

Assumption 3.3 is regular for proving the asymptotic normality of OLS estimator (see White (2001)). The theorem below shows that , , and are -consistent but not asymptotically normal.

Theorem 3.2.

Remark 3.3.

Although , , and are not asymptotically normal, the asymptotic normality of demonstrates that the quantiled volatility (the second entry of ), denoted by , has the asymptotic normality property: as , where .

As shown before, the asymptotics of QCMs in Theorems 3.1–3.2 hold with no need to consider the specification of conditional mean and choose the specifications of conditional quantiles correctly. This important feature guarantees that the QCM method can largely reduce the risk of model mis-specification. The reason of this feature is that the QCM method is regression-based. Specifically, the conditional mean is absorbed into the intercept parameter , so that it has no impact on the QCMs; meanwhile, the biases of ECQs from the use of wrongly specified conditional quantile models can be aggregately offset by the term , which is also nested in .

The aforementioned feature is accompanied by two limitations. The first limitation is that we need to estimate conditional quantiles different times. Fortunately, this limitation seems mild, since the quantile estimation commonly can be computed easily by the linear programming method and the resulting estimation biases can be tolerated by the QCM method to a large extent. The second limitation of the QCMs is that when the data are very heavy-tailed, the expansion error could have a non-negligible impact to cause the identification problem, particularly for the conditional kurtosis. This limitation seems an unavoidable consequence of the Cornish-Fisher expansion, and it could not be addressed by simply increasing the order of expansion (Lee and Lin, 1992).

4 Practical Implementations

To compute three QCMs in (2.8), we only need to input different ECQs , which can be computed in many different ways; see, for example, McNeil and Frey (2000), Kuester et al. (2006), Xiao and Koenker (2009) and the references therein for earlier works, and Koenker et al. (2017) and Zheng at al. (2018) for more recent ones. Without assuming any parametric specifications of CMs, Engle and Manganelli (2004) propose a general class of CAViaR models, which can decently specify the dynamic specification of conditional quantiles. Hence, the CAViaR models are appropriate choices for us to compute . Following Engle and Manganelli (2004), we consider four CAViaR models below:

-

(1)

Symmetric Absolute Value (SAV) model: ;

-

(2)

Asymmetric Slope (AS) model: , where and ;

-

(3)

Indirect GARCH (IG) model: ;

-

(4)

Adaptive (ADAP) model: , where is a positive finite number.

Each CAViaR model above can be estimated via the classical quantile estimation method (Koenker and Bassett, 1978). For simplicity, we take the SAV model as an illustrating example. Let be the unknown parameter of SAV model, and be its true value. As in Engle and Manganelli (2004), we estimate by the quantile estimator , where is the check function, and is defined in the same way as in the SAV model with replaced by . Once is obtained, we then take as our ECQ.

Using the above CAViaR models, we can obtain different . However, at some quantile levels , some of models may be inadequate to specify the dynamic structure of , resulting in invalid . To screen out those invalid before computing the QCMs, we consider the in-sample dynamic quantile (DQ) test in Section 6 of Engle and Manganelli (2004). This test aims to detect the inadequacy of CAViaR models by examining whether has mean zero, where and . The testing idea of relies on the fact that has mean zero when the CAViaR model specifies the dynamic structure of correctly. Based on the sequence from a given CAViaR model, we can compute and then its p-value , where (a Chi-squared distribution with degrees of freedom). If the p-value of is less than , the corresponding ECQs are deemed to be invalid, so they are not included to compute the QCMs.

Below, we summarize our aforementioned procedure to compute the QCMs:

- Procedure 4.1. (The steps to compute , , and )

-

-

1.

Obtain at any quantile level in based on any CAViaR model in , where is a sequence of real numbers from to incrementing by , and .

-

2.

Apply the DQ test to each from Step 1, and discard those with p-values of less than .

- 3.

- 4.

-

1.

In Procedure 4.1, the value of decreases with that of , and it achieves the upper bound when (i.e., no ECQs are discarded). Clearly, the choice of reveals a trade-off between estimation reliability and estimation efficiency in the QCM method, since a large value of enhances the reliability of , but meanwhile, it reduces the efficiency of as the value of becomes small. So far, how to choose optimally is unclear. Our additional simulation results in the supplementary materials show that is a good choice to balance the bias and variance of the estimation error of the QCMs. Hence, we recommend to take for the practical use.

5 Simulations

This section examines the finite sample performance of three QCMs , , and . For saving the space, some additional simulation results on the selection of are reported in the supplementary materials.

5.1 Simulations on the GARCH model

We examine the performance of QCMs when the data are generated by the benchmark GARCH model. Specifically, we generate 100 replications of sample size from the following GARCH model (Bollerslev, 1986)

| (5.1) |

where the parameters are chosen as , , and , and is an i.i.d. sequence with and . Here, is the standardized distribution with mean zero and variance one, and is generated from the Uniform distribution over the interval . For each replication, we can easily see that when , the true values of CMs and conditional quantiles of in (5.1) are

when , those of are

Note that the expansion errors from the Cornish-Fisher expansion in regression model (2.6) are negligible when the conditional distribution of is normal in the case of , whereas are non-negligible when the conditional distribution of is heavy-tailed in the case of ; see Lee and Lin (1992).

Next, we generate the sequence at each to compute , , and in four different cases:

| (5.2) | ||||

where in Cases 1–3, and are defined as in Procedure 4.1, is the true value of conditional quantile of at level , with , and . Under the setting in Case 1, there has no approximation errors in regression model (2.6). Under the settings in Cases 2 and 3, the approximation errors are considered with different variances across , where their means are zeros (Case 2) or non-zero values (Case 3) to mimic the scenario that is an unbiased or biased estimator of , respectively. Under the setting in Case 4, are computed by the CAViaR models, and this case mimics the real application scenario that the dynamic structures of are unknown and modelled by the CAViaR models.

Using the values of generated by (5.2), we compute three QCMs , , and for each replication, and then we measure their precision at each by considering

| (5.3) |

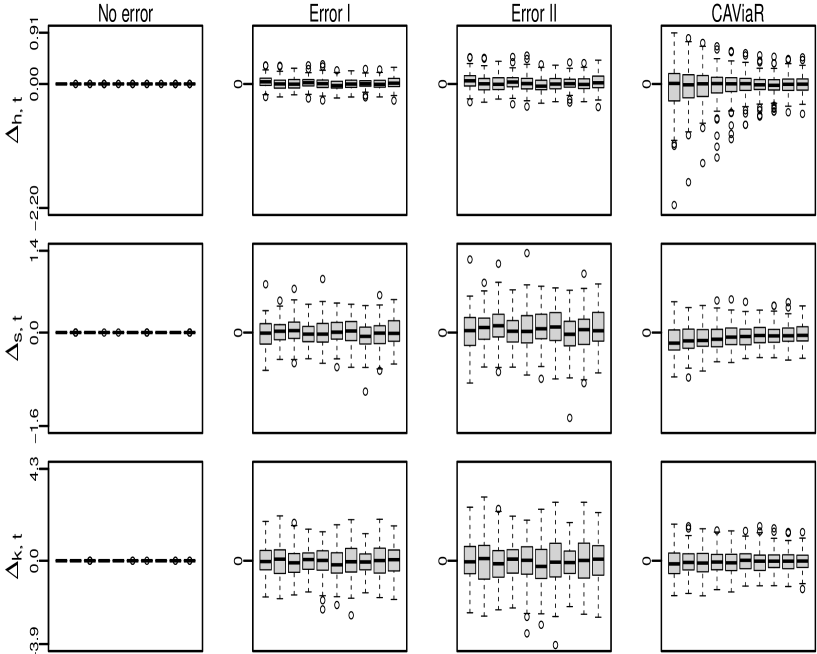

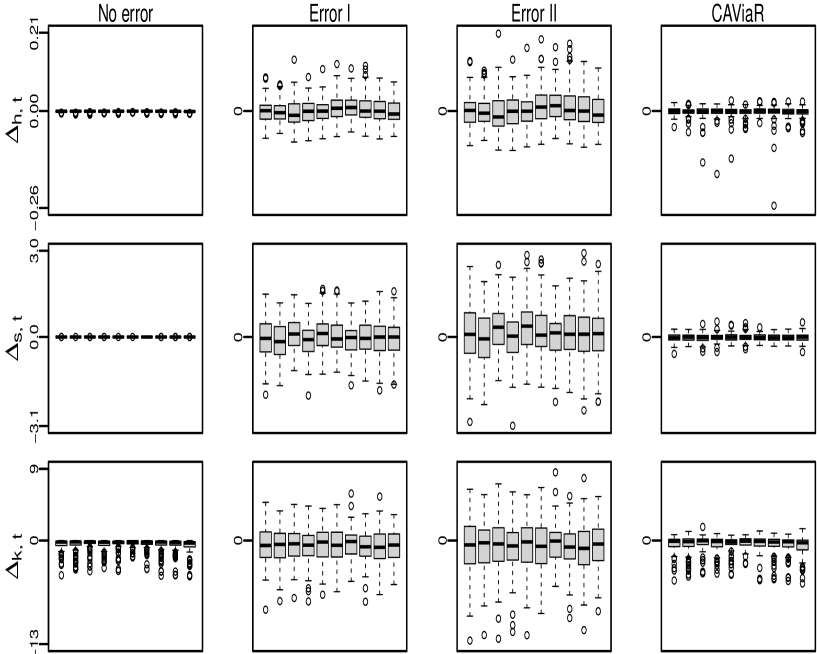

where , , and are the true values of three CMs of . Based on the results of 100 replications, Figs 1 and 2 exhibit the boxplots of , , and for under four different cases in (5.2), with and , respectively. The corresponding boxplots for are similar and they thus are not reported here to make the figure more visible. From these two figures, our findings are as follows:

-

1.

When there has no approximation errors as in Case 1, and are very accurate estimators of and , regardless of the negligibility of the expansion errors. However, exhibits a large dispersion when the expansion errors are non-negligible in the case of , even though it has a very small dispersion when the expansion errors are negligible in the case of . This indicates that the expansion errors typically have a minor effect on and , while their impact on could be more significant.

-

2.

When there has approximation errors with zero means (or nonzero means) as in Case 2 (or Case 3), the median lines in the boxplots of , , and are generally close to zero, irrespective of the negligibility of the expansion errors. These results suggest that the three QCMs are still consistent, even when are biased estimators of and the expansion errors are present. Compared with the results in the case of , it is expected to see that the dispersion of all QCMs becomes larger in the case of , and this phenomenon is more evident for .

-

3.

When are estimated by using the CAViaR method as in Case 4, the boxplots of , , and show that the three QCMs are consistent across all considered situations. Surprisingly, when , the performance of , , and in Case 4 is even better than that in Case 2 or 3. This observation is probably because the approximation errors and expansion errors are canceled out in Case 4, leading to smaller gross errors in regression model (2.6) and so more accurate QCMs consequently. Therefore, our QCM method can effectively accommodate the co-existence of approximation errors and expansion errors, which is frequently encountered in real data analysis.

5.2 Simulation on the ARMA–MN–GARCH model

Let denote a mixed normal (MN) distribution, the density of which is a mixture of two normal densities of and with the weighting probabilities and , respectively, where , , and . To examine the performance of QCMs in the presence of conditional mean specification and time-varying CMs, we generate 100 replications of sample size from the following ARMA–MN–GARCH model (Haas et al., 2004)

| (5.4) |

where with , , and , and the parameters are chosen as , , , , , , , , , , and . For each replication, we compute the true values of CMs and conditional quantiles of in model (5.4) as follows:

and

where satisfies , and represents the normal distribution function with mean and variance .

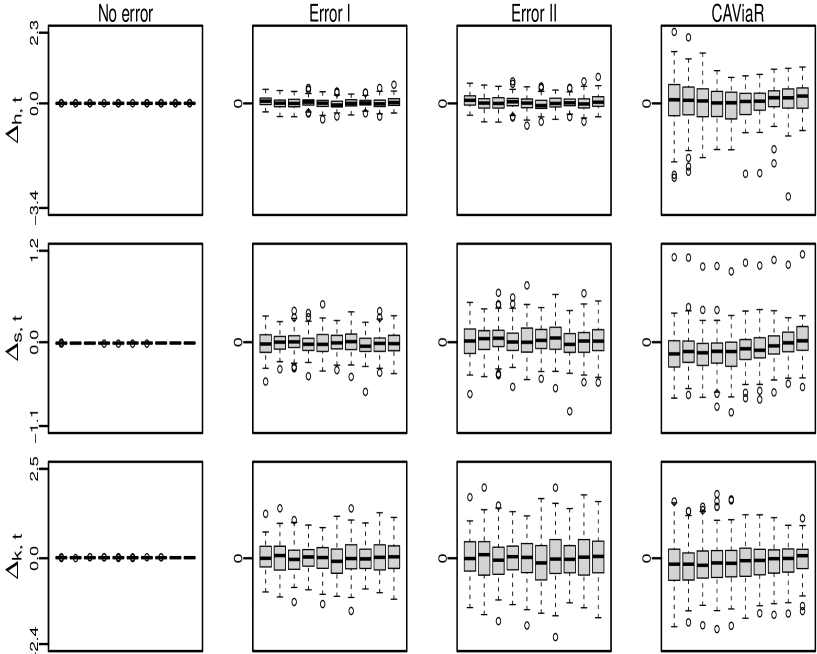

Based on the results of 100 replications, Fig 3 exhibits the boxplots of , , and for under four different cases in (5.2). From this figure, we can reach the similar conclusions as in Fig 1. Hence, although has a heavier tail than normal under model (5.4), the presence of conditional mean specification does not affect the performance of QCMs.

6 A Real Application

In our empirical work, we consider the log-return (in percentage) series of three exchange rates, including the AUD to USD (AUD/USD), NZD to USD (NZD/USD), and CAD to USD (CAD/USD). We denote each log-return series by , which are computed from January 1, 2009 to April 20, 2023. See Table 1 for some basic descriptive statistics of all three log-return series. Below, we compute the three QCMs of each log-return series, and then use these QCMs to study the “News impact curve” (NIC).

| AUD/USD | NZD/USD | CAD/USD | |

|---|---|---|---|

| Sample size | 3730 | 3730 | 3730 |

| Sample mean | 0.0012 | 0.0015 | 0.0027 |

| Sample variance | 0.4374 | 0.4612 | 0.2056 |

| Sample skewness | 0.4160 | 0.4485 | 0.0533 |

| Sample kurtosis | 7.0748 | 7.1818 | 5.3841 |

6.1 The three QCMs of return series

Following the steps in Procedure 4.1, we compute the three QCMs , , and of each log-return series, and report their basic descriptive statistics in Table 2, where the constraint (2.11) holds for all of the computed QCMs. From Tables 1 and 2, we find that for each return series, the mean of (or ) is close to the corresponding sample variance (or skewness), whereas the mean of is much smaller than the corresponding sample kurtosis. These findings are expected, since extreme returns can affect the sample kurtosis for a prolonged period of time, but their impact on decays exponentially over time.

| AUD/USD | NZD/USD | CAD/USD | ||

|---|---|---|---|---|

| Mean | 0.5400 | 0.5821 | 0.2951 | |

| Maximum | 3.0661 | 2.7224 | 1.2098 | |

| Minimum | 0.1380 | 0.1537 | 0.0784 | |

| Ljung-Box† | 0.0000 | 0.0000 | 0.0000 | |

| Mean | 0.2051 | 0.1274 | 0.1149 | |

| Maximum | 0.0463 | 0.1251 | 0.1230 | |

| Minimum | 0.5176 | 0.4867 | 0.3951 | |

| Ljung-Box | 0.0000 | 0.0000 | 0.0000 | |

| Mean | 3.7057 | 3.5129 | 3.6003 | |

| Maximum | 5.0147 | 4.7127 | 4.6095 | |

| Minimum | 2.8841 | 2.6247 | 2.8776 | |

| Ljung-Box | 0.0000 | 0.0000 | 0.0000 |

-

•

The results are the p-values of Ljung-Box test (Ljung and Box, 1978).

Next, we check the validity of QCMs via a similar method as in Gu et al. (2020). Denote , , and , where , , and . Based on the estimates , , and , we utilize the Student’s t tests , , and to test the null hypotheses : , : , and : , respectively. Here, is the estimate of conditional mean, and it is computed based on the mean specifications in Section 6.2 below. If is not rejected by at the significance level , then it is reasonable to conclude that is valid. Similarly, the validity of and can be examined by using and . Table 3 reports the p-values of , , and for all three exchange rates, and the results imply that all QCMs are valid at the significance level .

| AUD/USD | NZD/USD | CAD/USD | |

|---|---|---|---|

| 0.4543 | 0.6207 | 0.1306 | |

| 0.3250 | 0.2634 | 0.3901 | |

| 0.8321 | 0.3900 | 0.6425 |

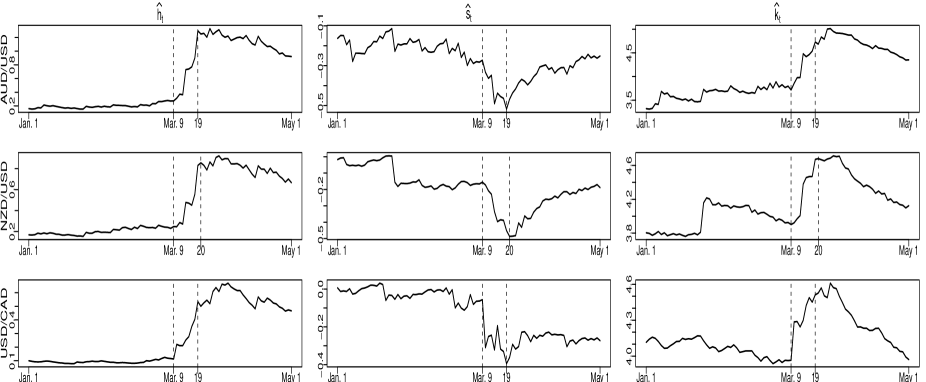

After checking the validity of the QCMs, we further plot the QCMs of all three return series during a sub-period from January 1, 2020 to May 1, 2020 in Fig 4. This sub-period deserves a detailed study, since it covers the 2020 stock market crash caused by the COVID-19 pandemic. For ease of visualization, the plots of all computed QCMs for the entire examined time period are not given here but available upon request. From Fig 4, we can have the following interesting findings:

-

1.

Starting from March 9, there is a rapid rising trend for both and while an apparent decline trend for in all of examined exchange rates. These observed trends demonstrate that the volatility risk kept rising sharply in currency markets, and synchronically, the tail risk to have extremely negative returns kept increasing substantially to make things even worse. The aforementioned phenomenon is not surprising, given the global impact of the COVID-19 pandemic outbreak in early 2020, which led to the depreciation of exchange rates across almost all countries afterwards.

-

2.

After March 19 or March 20, all of , , and exhibited opposite trends. This is very likely because the Federal Reserve and many central banks announced their financial rescue plans on March 17. Hence, the trend reversal sheds light on the effectiveness of the issued financial plans in rescuing stock markets.

Overall, we find that the COVID-19 pandemic has a perilous impact on the examined three exchange rates, and the financial rescue plans are effective in reducing the values of conditional variance and kurtosis, while increasing the values of conditional skewness.

6.2 The study on NICs

The NIC initiated by Engle and Ng (1993) aims to study how past shocks (or news) affect the present conditional variance by assuming

| (6.1) |

where is the collective shock at , is an unknown parameter to measure the persistence of , and is the NIC function for that has a specific parametric form. For example, researchers commonly assume that

| (6.2) | ||||

| (6.3) |

where the specifications of in (6.2) and (6.3) lead to the standard GARCH (Bollerslev, 1986) and GJR-GARCH (Glosten et al., 1993) models for , respectively. Similar to the NIC for in (6.1), we can follow the ideas of Harvey and Siddique (1999) and León et al. (2005) to consider the NICs for and :

| (6.4) | |||

| (6.5) |

where is the re-scaled collective shock at , and are two unknown parameters to measure the persistence of and , respectively, and and are the NIC functions for and , respectively. As for , and are often assumed to have certain parametric forms, such as

| (6.6) | ||||

| (6.7) |

see, for example, Harvey and Siddique (1999) and León et al. (2005). Since , , , , and are generally unobserved, all of the unknown parameters in (6.1) and (6.4)–(6.5) have to be estimated by specifying some parametric models on that account for the conditional variance, skewness, and kurtosis simultaneously.

However, so far the parametric forms of , , and are chosen in an ad-hoc rather than a data-driven manner. Intuitively, if , , and can be estimated non-parametrically, we are able to get some useful information on their parametric forms. Motivated by this idea, we replace , , , , and in (6.1) and (6.4)–(6.5) with , , , , and , respectively, where and with being an estimator of . After this replacement, we can get the following models:

| (6.8) | ||||

| (6.9) | ||||

| (6.10) |

where , , and are model errors caused by the replacement. Since the QCMs are most likely consistent estimators of CMs, all model errors are expected to have desirable properties for valid model estimations when the parametric form of is correctly specified.

To get the correct specification of , we apply two Cramér-von Mises tests and in Escanciano (2006) to check whether the assumed form of is correctly specified, where the p-values of and are computed via the bootstrap method in Escanciano (2006). Since the strong autocorrelations are detected for the three return series, we adopt an order threshold autoregressive (TAR()) model (Tong, 1978) with threshold variable being zero and delay variable being one to fit these three return series. After dismissing insignificant parameters, the AUD/USD, NZD/USD, and CAD/USD exchange rates are fitted by the TAR(5), TAR(9), and TAR(6) models, respectively. The p-values of and in Table 4 indicate that these TAR models are correct specifications for the three return series at the significance level 5%.

| AUD/USD | NZD/USD | CAD/USD | |

|---|---|---|---|

| 0.7700 | 0.5400 | 0.4200 | |

| 0.7200 | 0.4500 | 0.4100 |

After estimating the chosen specifications of above by the least squares method, we are able to obtain and . Define , where is Gaussian kernel function and is the bandwidth. Then, based on the sample sequence , we use the method in Robinson (1988) to estimate by

where

and the values of and are chosen by the conventional cross-validation method. Next, we estimate non-parametrically by , where , and the value of is chosen by the cross-validation method. Similarly, based on the sample sequences and , we estimate and non-parametrically by and , respectively.

Fig 5 plots the non-parametric fitted models , , and for all three return series. From this figure, we find that the form of in (6.2) or (6.3) matches quite well, whereas the forms of in (6.6) and in (6.7) exhibit a large deviation from and , respectively, in all three cases. The same conclusion can be reached in view of the results of adjusted R2 for all fitted models in Table 5.

7 Concluding Remarks

This paper estimates the three CMs (with respect to variance, skewness, and kurtosis) by the corresponding QCMs, which are easily computed from the OLS estimator of a linear regression model constructed by the ECQs. The QCM method builds on the Cornish-Fisher expansion, which essentially transforms the estimation of CM to that of conditional quantile. Such transformation brings us two attractive advantages over the parametric GARCH-type methods: First, the QCM method bypasses the investigation of conditional mean and allows the mis-specified conditional quantile models, due to its regression-based feature; Second, the QCM method has stable estimation results, since it does not involve any complex nonlinear constraint on the admission region of parameters in conditional quantile models. These two advantages come with two limitations. The first limitation is that we need to do the conditional quantile estimation different times. But this seems neither a computational burden nor a theoretical obstruct. The second limitation is that when the data are more heavy-tailed, the CF expansion is unavoidable to be less accurate, leading to more non-negligible expansion error. Although this limitation does not affect the consistency of the QCMs in general, it makes the QCMs (especially the quantiled conditional kurtosis) have a larger dispersion when the data are more heavy-tailed.

Notably, the QCM method is a supervised learning procedure without assuming any distribution on the returns. This important supervised learning feature makes the QCM method have a substantially computational advantage over the GARCH-type methods to estimate conditional variance, skewness, and kurtosis, when the dimension of return is large. See Zhu et al. (2023) for an in-depth discussion on this context and an innovative method for big portfolio selections based on the learned conditional higher-moments from the QCM method.

Finally, we should mention that the existing parametric methods commonly only work for stationary data and their extension to deal with more complex data environments seems challenging in terms of methodology and computation. In contrast, the QCM method could be applicable in complex data environments, as long as the ECQs are fairly provided. For example, the QCMs could adapt to the mixed categorical and continuous data or locally stationary data when the ECQs are computed by the method in Li and Racine (2008) or Zhou and Wu (2009), respectively. In addition, the useful information from exogenous variables and conditional quantiles of other variables can be easily embedded to the QCMs through the channels of the ECQs as done in Härdle et al. (2016) and Tobias and Brunnermeier (2016). Since the QCMs are computed at each fixed timepoint, the QCM method also allows us to pay special attention to the CMs during a specific time period by employing the methods in Cai (2002) and Xu (2013) to compute the ECQs. On the whole, the QCM method exhibits a much wider application scope than the parametric ones, which so far have not offered a clear feasible manner to study the CMs under the above complex data environments.

References

- Andrews (1988) Andrews, D. W. K. (1988). Laws of large numbers for dependent nonidentically distributed random variables. Econometric Theory 4, 458–467.

- Bali et al. (2008) Bali, T. G., Mo, H. and Tang, Y. (2008). The role of autoregressive conditional skewness and kurtosis in the estimation of conditional VaR. Journal of Banking & Finance 32, 269–282.

- Bollerslev (1986) Bollerslev, T. (1986). Generalized autoregressive conditional heteroskedasticity. Journal of Econometrics 31, 307–327.

- Brooks et al. (2005) Brooks, C., Burke, S. P., Heravi, S. and Persand, G. (2005). Autoregressive conditional kurtosis. Journal of Financial Econometrics 3, 399–421.

- Cai (2002) Cai, Z. (2002). Regression quantiles for time series. Econometric Theory 18, 169–192.

- Chunhachinda et al. (1997) Chunhachinda, P., Dandapani, K., Hamid, S. and Prakash, A. J. (1997). Portfolio selection and skewness: Evidence from international stock markets. Journal of Banking & Finance 21, 143–167.

- Cornish and Fisher (1938) Cornish, E. A. and Fisher, R. A. (1938). Moments and cumulants in the specification of distributions. Revue de l’Institut international de Statistique 5, 307–320.

- Engle (1982) Engle, R. F. (1982). Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica 50, 987–1007.

- Engle and Manganelli (2004) Engle, R. F. and Manganelli, S. (2004). CAViaR: Conditional autoregressive value at risk by regression quantiles. Journal of Business & Economic Statistics 22, 367–381.

- Engle and Ng (1993) Engle, R. F. and Ng, V. K. (1993). Measuring and testing the impact of news on volatility. Journal of Finance 48, 1749–1778.

- Escanciano (2006) Escanciano, J. C. (2006). Goodness-of-fit tests for linear and nonlinear time series models. Journal of the American Statistical Association 101, 140–149.

- Escanciano (2009) Escanciano, J. C. (2009). Quasi-maximum likelihood estimation of semi-strong GARCH models. Econometric Theory 25, 561–570.

- Escanciano and Velasco (2006) Escanciano, J. C. and Velasco, C. (2006). Generalized spectral tests for the martingale difference hypothesis. Journal of Econometrics 134, 151–185.

- Fan and Yao (2003) Fan, J. and Yao, Q. (2003). Nonlinear Time Series: Nonparametric and Parametric Methods. Springer, New York.

- Francq and Sucarrat (2022) Francq, C. and Sucarrat, G. (2022). Volatility estimation when the zero-process is nonstationary. Journal of Business & Economic Statistics 41, 53–66.

- Francq and Thieu (2019) Francq, C. and Thieu, L. Q. (2019). QML inference for volatility models with covariates. Econometric Theory 35, 37–72.

- Francq and Zakoïan (2019) Francq, C. and Zakoïan, J. M. (2019). GARCH Models: Structure, Statistical Inference and Financial Applications. John Wiley & Sons.

- Glosten et al. (1993) Glosten, L. R., Jagannathan, R. and Runkle, D. E. (1993). On the relation between the expected value and the volatility of the nominal excess return on stocks. Journal of Finance 48, 1779–1801.

- Grigoletto and Lisi (2009) Grigoletto, M. and Lisi, F. (2009). Looking for skewness in financial time series. Econometrics Journal 12, 310–323.

- Gu et al. (2020) Gu, S., Kelly, B. and Xiu, D. (2020). Empirical asset pricing via machine learning. Review of Financial Studies 33, 2223–2273.

- Haas et al. (2004) Haas, M., Mittnik, S. and Paolella, M. S. (2004). Mixed normal conditional heteroskedasticity. Journal of Financial Econometrics 2, 211–250.

- Hansen (1994) Hansen, B. E. (1994). Autoregressive conditional density estimation. International Economic Review 35, 705–730.

- Härdle et al. (2016) Härdle, W. K., Wang, W. and Yu, L. (2016). TENET: Tail-Event driven NETwork risk. Journal of Econometrics 192, 499–513.

- Harvey and Siddique (1999) Harvey, C. R. and Siddique, A. (1999). Autoregressive conditional skewness. Journal of Financial and Quantitative Analysis 34, 465–487.

- Harvey and Siddique (2000) Harvey, C. R. and Siddique, A. (2000). Conditional skewness in asset pricing tests. Journal of Finance 55, 1263–1295.

- Jondeau and Rockinger (2003) Jondeau, E. and Rockinger, M. (2003). Conditional volatility, skewness, and kurtosis: existence, persistence, and comovements. Journal of Economic Dynamics and Control 27, 1699–1737.

- Jondeau et al. (2019) Jondeau, E., Zhang, Q. and Zhu, X. (2019). Average skewness matters. Journal of Financial Economics 134, 29–47.

- Koenker and Bassett (1978) Koenker, R. and Bassett, G. (1978). Regression quantiles. Econometrica 46, 33–50.

- Koenker et al. (2017) Koenker, R., Chernozhukov, V., He, X. and Peng, L. (2017). Handbook of Quantile Regression. Chapman & Hall/CRC.

- Kuester et al. (2006) Kuester, K., Mittnik, S. and Paolella, M. S. (2006). Value-at-risk prediction: A comparison of alternative strategies. Journal of Financial Econometrics 4, 53–89.

- Lee and Lin (1992) Lee, Y. S. and Lin, T. K. (1992). Algorithm AS 269: High order Cornish-Fisher expansion. Journal of the Royal Statistical Society: Series C 41, 233–240.

- León and N̄íguez (2020) León, Á. and N̄íguez, T. M. (2020). Modeling asset returns under time-varying semi-nonparametric distributions. Journal of Banking & Finance 118, 105870.

- León et al. (2005) León, Á., Rubio, G. and Serna, G. (2005). Autoregresive conditional volatility, skewness and kurtosis. Quarterly Review of Economics and Finance 45, 599–618.

- Li and Racine (2008) Li, Q. and Racine, J. S. (2008). Nonparametric estimation of conditional CDF and quantile functions with mixed categorical and continuous data. Journal of Business & Economic Statistics 26, 423–434.

- Ljung and Box (1978) Ljung, G. M. and Box, G. E. (1978). On a measure of lack of fit in time series models. Biometrika 65, 297–303.

- McNeil and Frey (2000) McNeil, A. J. and Frey, R. (2000). Estimation of tail-related risk measures for heteroscedastic financial time series: an extreme value approach. Journal of Empirical Finance 7, 271–300.

- Robinson (1988) Robinson, P. M. (1988). Root-N-consistent semiparametric regression. Econometrica 56, 931–954.

- Rubinstein (1973) Rubinstein, M. E. (1973). A comparative statics analysis of risk premiums. Journal of Business 46, 605–615.

- Samuelson (1970) Samuelson, P. A. (1970). The fundamental approximation theorem of portfolio analysis in terms of means, variances and higher moments. Review of Economic Studies 37, 537–542.

- Sucarrat and Grønneberg (2022) Sucarrat, G. and Grønneberg, S. (2022). Risk estimation with a time-varying probability of zero returns. Journal of Financial Econometrics 20, 278–309.

- Tobias and Brunnermeier (2016) Tobias, A. and Brunnermeier, M. K. (2016). CoVaR. American Economic Review 106, 1705–1741.

- Tong (1978) Tong, H. (1978). On a threshold model. Pattern recognition and signal processing. (ed. C.H.Chen). Sijthoff and Noordhoff, Amsterdam.

- Tsay (2005) Tsay, R. S. (2005). Analysis of Financial Time Series. John Wiley & Sons.

- White (2001) White, H. (2001). Asymptotic Theory for Econometricians, Revised edition. San Diego: Academic Press.

- Widder (1946) Widder, D. V. (1946). The Laplace Transform. Princeton University Press, Princeton, NJ.

- Xiao and Koenker (2009) Xiao, Z. and Koenker, R. (2009). Conditional quantile estimation for generalized autoregressive conditional heteroscedasticity models. Journal of the American Statistical Association 104, 1696–1712.

- Xu (2013) Xu, K. L. (2013). Nonparametric inference for conditional quantiles of time series. Econometric Theory 29, 673–698.

- Zheng at al. (2018) Zheng, Y., Zhu, Q., Li, G. and Xiao, Z. (2018). Hybrid quantile regression estimation for time series models with conditional heteroscedasticity. Journal of the Royal Statistical Society: Series B 80, 975–993.

- Zhou and Wu (2009) Zhou, Z. and Wu, W. B. (2009). Local linear quantile estimation for nonstationary time series. Annals of Statistics 37, 2696–2729.

- Zhu et al. (2023) Zhu, Z., Zhang, N. and Zhu, K. (2023). Big portfolio selection by graph-based conditional moments method. Working paper. Available at “https://arxiv.org/abs/2301.11697”.