Quantum Light Detection and Ranging

Abstract

Single-photon light detection and ranging (LiDAR) is a key technology for depth imaging through complex environments. Despite recent advances, an open challenge is the ability to isolate the LiDAR signal from other spurious sources including background light and jamming signals. Here we show that a time-resolved coincidence scheme can address these challenges by exploiting spatio-temporal correlations between entangled photon pairs. We demonstrate that a photon-pair-based LiDAR can distill desired depth information in the presence of both synchronous and asynchronous spurious signals without prior knowledge of the scene and the target object. This result enables the development of robust and secure quantum LiDAR systems and paves the way to time-resolved quantum imaging applications.

daniele.faccio@glasgow.ac.uk

edoardo.charbon@epfl.ch

Light detection and ranging (LiDAR) systems that reach long distances at high speed and accuracy have emerged as a key technology in autonomous driving, robotics, and remote sensing Schwarz (2010). Today, miniaturised LiDARs are integrated in many consumer electronics devices, e.g. smartphones. Moving beyond depth sensing, the LiDAR technique has also been used for non-line-of-sight imaging Velten et al. (2012); Gariepy et al. (2016); O’Toole et al. (2018); Liu et al. (2019a); Faccio et al. (2020), imaging through scattering media Lyons et al. (2019) and biophotonics applications Bruschini et al. (2019). A typical LiDAR system records the time-of-flight, $t$, of light back-reflected from a scene, from which the distance $d = ct/2$ can be estimated, where $c$ is the speed of light Sun et al. (2016). Thanks to their single-photon sensitivity, picosecond temporal resolution and low cost, single-photon avalanche diodes (SPADs) are widely used as detectors in LiDAR Shin et al. (2016); Tachella et al. (2019). Two well-established techniques provide timing information at picosecond resolution: time-correlated single-photon counting (TCSPC), which records a time-stamp for each individual photon Zhang et al. (2018); Ronchini Ximenes et al. (2019); Seo et al. (2021), and time gating, in which a gate window is finely shifted Ulku et al. (2018); Morimoto et al. (2020); Ren et al. (2018); Chan et al. (2019).
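As a minimal sketch of the ranging arithmetic, the standard round-trip relation (distance equals the speed of light times the time-of-flight, divided by two) can be written as follows. The function names and the numerical values in the demo are illustrative assumptions and are not taken from the experiment.

```python
# Speed of light in vacuum (exact SI value), in metres per second.
C_M_PER_S = 299_792_458.0


def tof_to_distance_m(tof_s: float) -> float:
    """One-way distance for a round-trip time of flight: d = c * t / 2."""
    return C_M_PER_S * tof_s / 2.0


def gate_step_to_depth_step_m(gate_step_s: float) -> float:
    """Depth increment that corresponds to one shift of the gate window."""
    return tof_to_distance_m(gate_step_s)


if __name__ == "__main__":
    # Hypothetical values chosen only to illustrate the orders of magnitude.
    print(tof_to_distance_m(10e-9))           # round trip of 10 ns
    print(gate_step_to_depth_step_m(20e-12))  # gate step of 20 ps
```

The same conversion applies to the gate step of a time-gated camera: a step of a few tens of picoseconds corresponds to a depth increment of a few millimetres.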

Despite recent advances, interference is a major challenge for robust and secure LiDAR applications through complex environments. In our work, the term ‘interference’ refers to the detection by the LiDAR sensor of any optical signals other than those emitted by the LiDAR source. These may originate from ambient light, other LiDAR systems operating concurrently and intentional spoofing signals. In addition to depth distortion such as degradation in accuracy and precision, interference could result in misleading information, causing the system to make incorrect decisions. Over the past several years, some approaches addressing LiDAR interference have been proposed. One technique isolates the signal based on temporal correlations between two or more photons Niclass et al. (2013), which effectively suppresses noise due to ambient light. Another technique based on laser phase modulation can reduce both ambient light and mutual interference Ronchini Ximenes et al. (2019); Seo et al. (2021). However, these approaches have limitations. For example, an external signal can still spoof the LiDAR detector if it copies the temporal correlation or phase modulation of the illumination source, which is easily achievable by placing a photodiode close to the target object. To date, there is no LiDAR system immune to all types of interference.

The use of non-classical optical states can also improve object detection in the presence of spurious light and noise. In a quantum illumination protocol, a single photon is sent out towards a target object while its entangled partner is retained and used as an ancilla Lloyd (2008). Coincidence detection between the returned photon and its twin increases the effective signal-to-noise ratio compared to classical illumination, an advantage that persists even in the presence of noise and losses. Recently, practical quantum illumination schemes have been experimentally demonstrated using spatially entangled photon pairs for target detection Lopaeva et al. (2013); Zhang et al. (2020) and for imaging objects England et al. (2019); Defienne et al. (2019); Gregory et al. (2020) in the presence of background light and spurious images. These approaches rely on the ability to measure photon coincidences between many spatial positions, which is conventionally performed using single-photon sensitive cameras such as electron-multiplying charge-coupled device (EMCCD) cameras Moreau et al. (2012); Edgar et al. (2012); Defienne et al. (2018), intensified CCD (iCCD) cameras Chrapkiewicz et al. (2014, 2016) and SPAD cameras Eckmann et al. (2020); Ianzano et al. (2020); Ndagano et al. (2020); Defienne et al. (2021). However, while a handful of works have reported the use of photon pairs for target detection at a distance Liu et al. (2019b); Frick et al. (2020); Ren et al. (2020), no imaging LiDAR experiments with absolute range distance and interference or background rejection have been reported.

In this work, we demonstrate a quantum LiDAR system immune to any type of classical interference by using a pulsed light source of spatially entangled photon pairs and a time-resolved SPAD camera. We use spatial anti-correlations between photon pairs as a unique identifier to distinguish them from any other light sources in the target scene. In particular, we show that our LiDAR system successfully images objects and retrieves their depths in two different interference scenarios mimicking the presence of spoofing or additional classical LiDAR signals. In the first case, spurious light from a synchronised laser is used to demonstrate robustness against intentional spoofing attacks. In the second case, the interference takes the form of asynchronous pulses imitating the presence of multiple background LiDAR systems running in parallel. The results show that our approach enables imaging with high depth resolution while offering immunity to classical light interference.

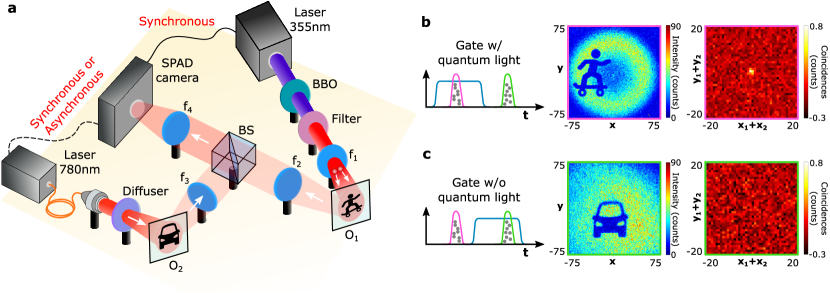

Imaging system. The experimental setup is shown in Fig. 1a. Spatially entangled photon pairs are produced by type-I spontaneous parametric down conversion (SPDC) in a β-Barium Borate (BBO) nonlinear crystal pumped by a nm pulsed laser with a repetition frequency of MHz. The objects to be imaged are masks placed on a reflective mirror. One object (“skater”) is placed in the far field of the crystal, so that the down-converted photon pairs are spatially anti-correlated at the object plane. Another object (“car”) is illuminated by a diffused nm laser, also pulsed at MHz, to produce the interference. Both objects are imaged onto the SPAD camera SwissSPAD2 Ulku et al. (2018) (see Methods). As in a typical LiDAR scheme, the pump laser is synchronised with the camera, while the spoofing signal generated by a classical pulsed laser can be synchronous or asynchronous.

As in conventional time-gated LiDAR, backscattered photons with specific time-of-flight are detected by scanning a gate window ( ns wide) using ps time steps, which corresponds to a depth resolution of mm Ulku et al. (2018); Morimoto et al. (2020). At each gate position, a set of 8-bit frames ( frames) is acquired to reconstruct two different types of images: (i) a classical intensity image, obtained by summing all frames, and (ii) a spatially-averaged photon correlation image computed by identifying photon coincidences in the frame set using a technique detailed in Defienne et al. (2018) (see Methods). The intensity image retrieves the shape of the objects in the scene at a given depth, while the spatially-averaged correlation image measures spatial correlations between detected photons to identify the presence of photon pairs. For example, if only reflected photon pairs are captured within the gate window (Fig. 1b), the intensity image shows the “skater” object and an intense peak is observed at the center of the spatially-averaged correlation image. The presence of such a correlation peak above the noise level confirms the presence of photon pairs among the detected photons. If only classical light is detected (Fig. 1c), the intensity images show the “car” object illuminated by the pulse laser and the spatially-averaged correlation image is flat.

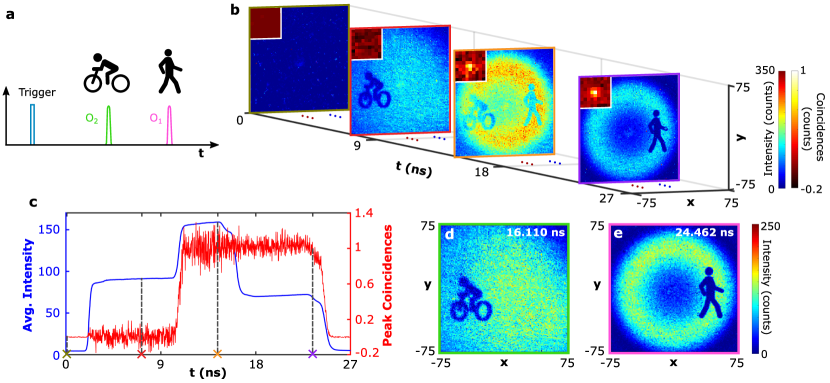

Synchronous classical light interference. First, we consider the case of a spurious classical source of light that is synchronised with the SPAD camera i.e. photons reflected by both (“person”) and (“bike”) are synchronous with the camera (Fig. 2a). This scenario corresponds to a spoofing attack. To operate the LiDAR, the gate window is continuously shifted over a range of ns, which corresponds to 1500 gate positions. Figure 2b shows the intensity and spatially-averaged correlation images (zoom pixels in inset) measured at four specific gate positions ns, ns, ns and ns. At the early gate position ( ns), there is only noise recorded by the camera such as dark count, crosstalk, afterpulsing and ambient light. As the gate window is shifted, appears in the intensity image ( ns) and the absence of a peak in the spatially-averaged correlation image shows that it originates from classical light alone. When the reflected quantum light starts to be collected in the gate window together with the classical light ( ns), and are superposed in the intensity image and a correlation peak becomes visible. For the late gate window ( ns) the classical laser pulse vanishes while only quantum light is detected, as shown by the peak persisting in the spatially-averaged correlation image, and only is visible in the intensity image.

To acquire depth information and distinguish classical interference, we analyse the spatially-averaged intensity and the correlation peak value as functions of the gate position (Fig. 2c). The two-step average intensity profile reflects the two reflections from and , while the correlation peak profile reveals only the trend of the quantum light over the given time range. By locating the falling edges of the intensity profile, the arrival times of all the objects can be obtained Morimoto et al. (2021); Chan et al. (2019), whereas searching for the last falling edge of the correlation peak profile yields the arrival time of the quantum object alone. As shown in Fig. 2d, the arrival time of the classical object of ns and its intensity image are obtained. The arrival time of the quantum object, ns, is located at the last fitted falling edge of the correlation peak profile, and the corresponding intensity image, obtained by subtraction, is shown in Fig. 2e. Refer to the Supplementary Video for the results scanned over the entire detected range. The proposed dual-profile method thus locates objects and distinguishes those illuminated by quantum light from those illuminated by classical light.

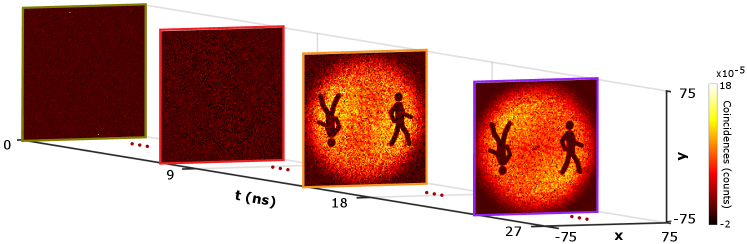

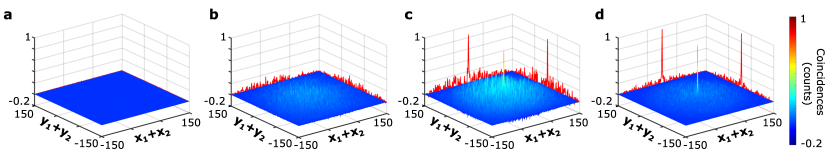

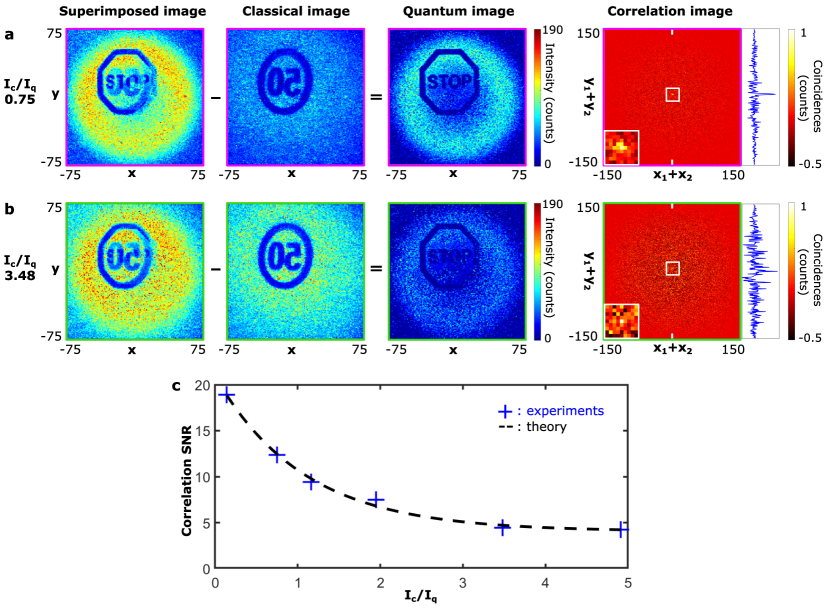

The anti-spoofing capability works as described as long as the time delay between the two objects is larger than the temporal resolution of the SPAD camera. When the interference overlaps temporally with the signal, its removal can be enhanced by increasing the number of frames (e.g. up to 8-bit frames) for each gate delay so as to retrieve a spatially-resolved correlation image instead of a spatially-averaged correlation image Defienne et al. (2018, 2019); Gregory et al. (2020); Defienne et al. (2021). An example is shown in Fig. 3: a spatially-resolved correlation image directly retrieves the shape of the object illuminated by photon pairs and remains insensitive to classical interference (classical background noise added in the experiment). In principle, such a spatially-resolved correlation image could be measured at all gate positions of the LiDAR scan. However, the acquisition time is much longer than that required to retrieve a spatially-averaged correlation image (a few hours instead of seconds for a single time gate delay), and it is therefore better to limit its use to gate positions for which the objects cannot be distinguished otherwise. In addition, because of the anti-symmetric spatial structure of the photon pairs illuminating the object, each spatially-resolved correlation image contains both the object and its symmetric image, which means that the object must interact with only half of the photon pair beam to be imaged through correlations without ambiguity.

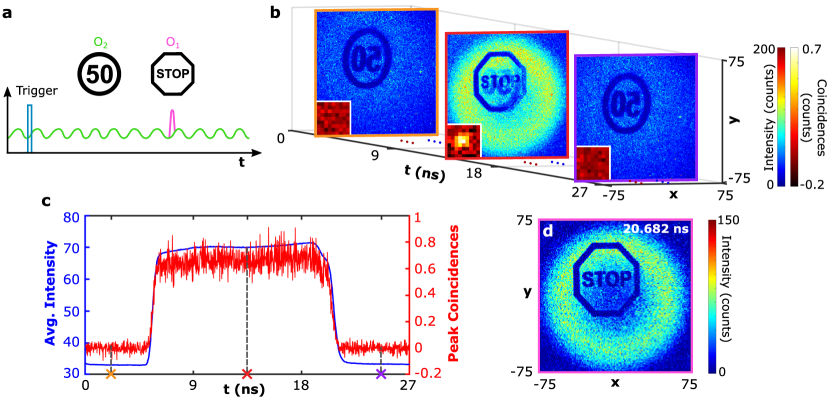

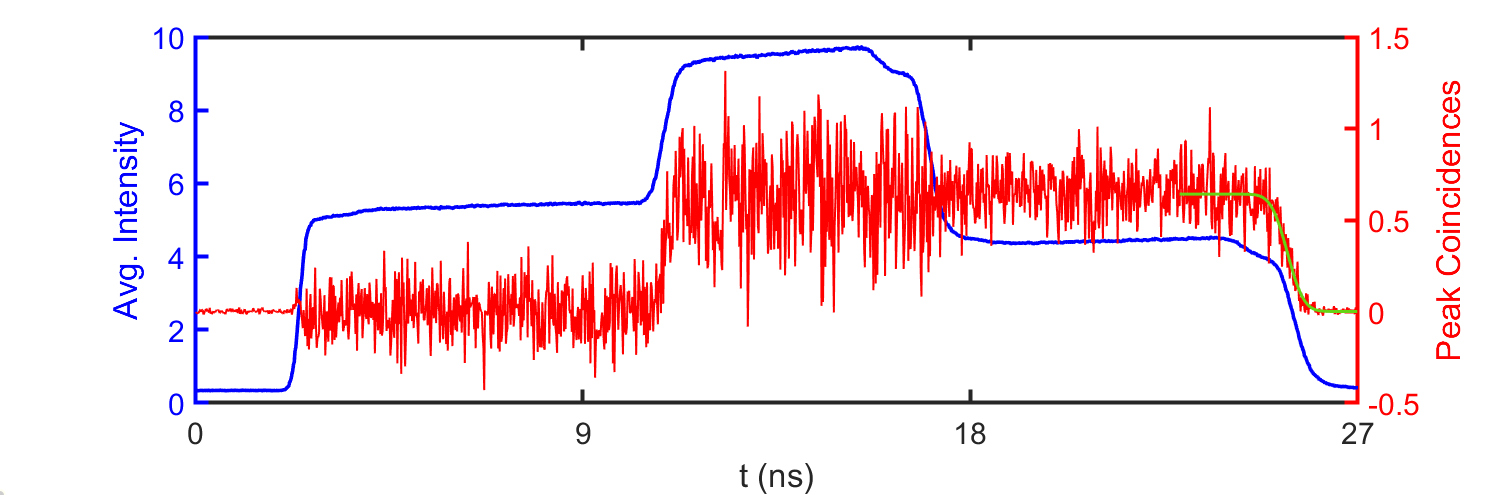

Asynchronous classical light interference. In real-world applications, another possible scenario is interference from ambient light and other LiDAR systems. We therefore consider a classical source of light that is not synchronised with the SPAD camera but still runs at the same repetition frequency ( MHz) and illuminates the object “50 traffic sign” (Fig. 4a). Fig. 4b shows the intensity and correlation images at three example gate positions ns, ns and ns. The object is visible in the intensity images at all gate positions as background noise. When the gate is shifted to ns, the SPAD also captures photon pairs reflected by (“STOP traffic sign”) and both objects are superimposed in the intensity image. We now also observe a peak in the spatially-averaged correlation image, which highlights the presence of photon pairs. By locating the falling edge of the spatially-averaged correlation peak shown in Fig. 4c, the arrival time of the quantum object is found to be ns, and its intensity image is also obtained by subtraction, as shown in Fig. 4d. See the Supplementary Video for the complete set of measurements.

Discussion. We demonstrated a LiDAR system based on spatially entangled photon pairs that is robust against interference from classical sources of light. In particular, we showed its successful use in the presence of (i) a spoofing attack (synchronous classical light interference) and (ii) background light and another LiDAR system operating in parallel (asynchronous classical light interference). Note also that because the quantum LiDAR harnesses anti-correlations between photon pairs to retrieve images, it is also immune to classically-correlated sources of light such as thermal and pseudo-thermal sources in which photons are position-correlated Valencia et al. (2005).

In our current implementation, each time gate position is acquired in several seconds, so that the full scan takes several hours (5.6 hours for the synchronous case and 3.4 hours for the asynchronous case). This total acquisition time can however be significantly decreased by reducing (i) the acquisition time per gate position and (ii) the number of gate positions needed to detect the falling edge of the quantum light (currently steps scanned linearly). For example, in the case of the synchronous classical light shown in Fig. 2, the quantum illuminated object could be located by measuring only frames for different gate positions by using a correlation-driven scanning and falling edge fitting algorithm, which would reduce the total acquisition time to seconds (see details in the Supplementary Information). In addition, the speed of the SPAD camera in our experiment was limited to fps by the readout architecture, but it has been demonstrated that similar cameras can be operated at frame rates up to fps Gasparini et al. (2018), which would further reduce the total acquisition time and potentially allow real-time acquisition. Furthermore, in the current quantum LiDAR prototype the target object is a two-dimensional ‘co-operative’ object attached to a mirror, which ensures that enough photon pairs are reflected and collected by the camera. However, the proposed scheme can be extended to scattering materials with three-dimensional profiles by using brighter photon pair sources and more sensitive SPAD cameras, which are currently under development. Looking forward, these results could enable the development of robust and secure LiDAR systems and more general time-resolved quantum imaging applications.

Methods

Details of the experimental setup. The nm pump laser used in this work is a VisUV-355-HP (Picoquant GmbH) with mW average output power at a repetition frequency of MHz. The nonlinear crystal cut for type-I SPDC is a β-Barium Borate crystal of size mm with a half opening angle of 3 degrees (Newlight Photonics). A nm bandpass filter is placed after the BBO crystal to filter out the spurious pump light. The nm classical laser (PiL079XSM, ALS GmbH) running at a repetition rate of MHz with ps (FWHM) pulse width is coupled into a fiber and then connected to a collimator, the output power of which can be finely tuned. A diffuser (ED1-C50-MD, Thorlabs) is used to create even illumination of the classical light over the object. The nm pump laser always operates as the master: it triggers only the camera in the asynchronous measurement, and both the camera and the nm laser in the synchronous experiment.

Details of the SPAD camera. The camera used in our study is the SwissSPAD2 with on-chip microlenses. It is composed of pixels with a pitch of m, a native fill factor of and photon detection probability (PDP) of approximately at nm. The camera runs in time-gating mode by scanning gate windows ( ns wide) continuously with a time step of ps. The starting gate position is tuned to be prior to the first synchronous light reflection by adding an appropriate initial offset to the laser trigger. No initial calibration is implemented in this work to compensate for the arrival-time skew of the electrical signal (cable length, trigger circuit) and the optical signal (fiber), as we focus only on the relative range of different objects. At each gate window, N 8-bit frames are transferred to the computer over a USB connection and photon correlations are processed on a GPU before the gate shifts to the next position, where the same operation is repeated. Each 8-bit frame is accumulated from 255 successive 1-bit measurements with ns exposure time. The overall acquisition speed is fps (with s readout time for each bit) and the post-processing time is less than ms per 8-bit frame when running on a GPU. The central pixels are selected for all experiments to minimize the influence of skew due to electrical propagation across the SPAD array. The gate control signal is injected at the middle of the pixel array, which results in a symmetrical time propagation towards the right and left pixels. To remove the hot pixels, we define a threshold at dark counts and set all pixel values above this threshold in each frame to 0, as described in Defienne et al. (2021). The hot pixels are then smoothed using their neighboring pixels Shin et al. (2016) for all intensity images shown in this work. Crosstalk effects are also removed by setting the correlation values from direct neighbour pixels to 0.
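The hot-pixel handling described above can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions: hot pixels are identified from a separate dark-count map with a user-chosen threshold, and the neighbour smoothing is a plain mean over the non-hot 8-neighbours rather than the method of Shin et al. (2016). All function names are hypothetical.

```python
import numpy as np


def find_hot_pixels(dark_counts: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean (H, W) mask of pixels whose dark counts exceed the threshold."""
    return dark_counts > threshold


def zero_hot_pixels(frames: np.ndarray, hot_mask: np.ndarray) -> np.ndarray:
    """Copy of a (n_frames, H, W) stack with hot pixels set to 0 in every frame."""
    cleaned = frames.copy()
    cleaned[:, hot_mask] = 0
    return cleaned


def fill_from_neighbours(image: np.ndarray, hot_mask: np.ndarray) -> np.ndarray:
    """Replace each hot pixel by the mean of its non-hot 8-neighbours."""
    filled = image.astype(float)
    height, width = image.shape
    for y, x in zip(*np.nonzero(hot_mask)):
        y0, y1 = max(y - 1, 0), min(y + 2, height)
        x0, x1 = max(x - 1, 0), min(x + 2, width)
        patch = image[y0:y1, x0:x1]
        good = ~hot_mask[y0:y1, x0:x1]
        if good.any():  # leave the pixel untouched if all neighbours are hot
            filled[y, x] = patch[good].mean()
    return filled
```

In this sketch the zeroed stack would feed the correlation calculation, while the neighbour-filled sum of frames would only be used for displaying intensity images.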

Spatial correlation image calculation. The photon coincidence processing model used in this study is detailed in Defienne et al. (2018). The spatial joint probability distribution (JPD) $\Gamma(\mathbf{r}_i, \mathbf{r}_j)$ of entangled photon pairs is measured by multiplying the value measured at pixel $i$ in each frame by the difference of the values measured at pixel $j$ between two successive frames:

$$\Gamma(\mathbf{r}_i, \mathbf{r}_j) = \frac{1}{N} \sum_{\ell=1}^{N} \left[ I_\ell(\mathbf{r}_i)\, I_\ell(\mathbf{r}_j) - I_\ell(\mathbf{r}_i)\, I_{\ell+1}(\mathbf{r}_j) \right] \qquad (1)$$

where $N$ is the number of frames acquired at each gate position, and $I_\ell(\mathbf{r}_i)$ and $I_\ell(\mathbf{r}_j)$ represent the photon-count values at any pixels $i$ and $j$ (at positions $\mathbf{r}_i$ and $\mathbf{r}_j$) of the $\ell$-th frame ($\ell \in [1, N]$). The genuine coincidences that originate only from correlations between entangled photon pairs are obtained by removing the accidental coincidences resulting from dark counts, after-pulsing, hot pixels, crosstalk, detection of multiple photon pairs and stray light. Both (i) the spatially-averaged correlation image and (ii) the spatially-resolved correlation image used in our study can be extracted from the JPD:

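(i) The spatially-averaged correlation image (noted $\Gamma_+$) is calculated from the JPD using the formula:

$$\Gamma_+(\mathbf{r}_+) = \sum_{\mathbf{r}} \Gamma(\mathbf{r}_+ - \mathbf{r}, \mathbf{r}) \qquad (2)$$

This represents the average number of photon coincidences detected between all pairs of pixels $\mathbf{r}_1$ and $\mathbf{r}_2$ with sum coordinate $\mathbf{r}_1 + \mathbf{r}_2 = \mathbf{r}_+$.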

(ii) The spatially-resolved correlation image is defined as the anti-diagonal component of the JPD, $\Gamma(\mathbf{r}, -\mathbf{r})$. It represents the number of photon coincidences detected between symmetric pairs of pixels.
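For illustration, the frame-difference coincidence estimate and the two images derived from it can be sketched in NumPy for a one-dimensional line of pixels. This is not the GPU implementation used in the experiment. The function names are hypothetical, the last frame has no successor so the sketch averages over the N−1 successive-frame pairs, and the projection is a plain sum over pixel pairs sharing the same sum coordinate. All three choices are assumptions.

```python
import numpy as np


def joint_probability_distribution(frames: np.ndarray) -> np.ndarray:
    """Estimate the JPD gamma[i, j] from a (n_frames, n_pixels) stack.

    The value at pixel i in each frame multiplies the difference between
    the values at pixel j in that frame and in the next frame, so that
    accidental coincidences cancel on average.
    """
    frames = frames.astype(float)
    current, following = frames[:-1], frames[1:]
    # gamma[i, j] = sum over frames of I_l(i) * (I_l(j) - I_{l+1}(j))
    gamma = current.T @ (current - following)
    return gamma / (frames.shape[0] - 1)


def sum_coordinate_projection(gamma: np.ndarray) -> np.ndarray:
    """Sum gamma[i, j] over all pixel pairs sharing the same i + j."""
    n = gamma.shape[0]
    i, j = np.indices(gamma.shape)
    return np.bincount((i + j).ravel(), weights=gamma.ravel(), minlength=2 * n - 1)


def anti_diagonal(gamma: np.ndarray) -> np.ndarray:
    """Coincidences between mirror-symmetric pixels i and n - 1 - i."""
    return np.diag(np.fliplr(gamma)).copy()
```

For anti-correlated photon pairs, coincidences concentrate on mirror-symmetric pixels, so the projection shows a peak at its central element (index n − 1 for n pixels), whereas uncorrelated light gives a flat projection on average.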

References

- Schwarz (2010) B. Schwarz, Nature Photonics 4, 429 (2010).

- Velten et al. (2012) A. Velten, T. Willwacher, O. Gupta, A. Veeraraghavan, M. G. Bawendi, and R. Raskar, Nature Communications 3, 1 (2012).

- Gariepy et al. (2016) G. Gariepy, F. Tonolini, R. Henderson, J. Leach, and D. Faccio, Nature Photonics 10, 23 (2016).

- O’Toole et al. (2018) M. O’Toole, D. B. Lindell, and G. Wetzstein, Nature 555, 338 (2018).

- Liu et al. (2019a) X. Liu, I. Guillén, M. La Manna, J. H. Nam, S. A. Reza, T. H. Le, A. Jarabo, D. Gutierrez, and A. Velten, Nature 572, 620 (2019a).

- Faccio et al. (2020) D. Faccio, A. Velten, and G. Wetzstein, Nature Reviews Physics 2, 318 (2020).

- Lyons et al. (2019) A. Lyons, F. Tonolini, A. Boccolini, A. Repetti, R. Henderson, Y. Wiaux, and D. Faccio, Nature Photonics 13, 575 (2019).

- Bruschini et al. (2019) C. Bruschini, H. Homulle, I. M. Antolovic, S. Burri, and E. Charbon, Light: Science & Applications 8, 1 (2019).

- Sun et al. (2016) M.-J. Sun, M. P. Edgar, G. M. Gibson, B. Sun, N. Radwell, R. Lamb, and M. J. Padgett, Nature Communications 7, 1 (2016).

- Shin et al. (2016) D. Shin, F. Xu, D. Venkatraman, R. Lussana, F. Villa, F. Zappa, V. K. Goyal, F. N. Wong, and J. H. Shapiro, Nature Communications 7, 1 (2016).

- Tachella et al. (2019) J. Tachella, Y. Altmann, N. Mellado, A. McCarthy, R. Tobin, G. S. Buller, J.-Y. Tourneret, and S. McLaughlin, Nature Communications 10, 1 (2019).

- Zhang et al. (2018) C. Zhang, S. Lindner, I. M. Antolović, J. M. Pavia, M. Wolf, and E. Charbon, IEEE Journal of Solid-State Circuits 54, 1137 (2018).

- Ronchini Ximenes et al. (2019) A. Ronchini Ximenes, P. Padmanabhan, M. Lee, Y. Yamashita, D. Yaung, and E. Charbon, IEEE Journal of Solid-State Circuits 54, 3203 (2019).

- Seo et al. (2021) H. Seo, H. Yoon, D. Kim, J. Kim, S. J. Kim, J. H. Chun, and J. Choi, IEEE Journal of Solid-State Circuits , 1 (2021).

- Ulku et al. (2018) A. C. Ulku, C. Bruschini, I. M. Antolović, Y. Kuo, R. Ankri, S. Weiss, X. Michalet, and E. Charbon, IEEE Journal of Selected Topics in Quantum Electronics 25, 1 (2018).

- Morimoto et al. (2020) K. Morimoto, A. Ardelean, M.-L. Wu, A. C. Ulku, I. M. Antolovic, C. Bruschini, and E. Charbon, Optica 7, 346 (2020).

- Ren et al. (2018) X. Ren, P. W. Connolly, A. Halimi, Y. Altmann, S. McLaughlin, I. Gyongy, R. K. Henderson, and G. S. Buller, Optics Express 26, 5541 (2018).

- Chan et al. (2019) S. Chan, A. Halimi, F. Zhu, I. Gyongy, R. K. Henderson, R. Bowman, S. McLaughlin, G. S. Buller, and J. Leach, Scientific Reports 9, 1 (2019).

- Niclass et al. (2013) C. Niclass, M. Soga, H. Matsubara, S. Kato, and M. Kagami, IEEE Journal of Solid-State Circuits 48, 559 (2013).

- Lloyd (2008) S. Lloyd, Science 321, 1463 (2008).

- Lopaeva et al. (2013) E. Lopaeva, I. R. Berchera, I. P. Degiovanni, S. Olivares, G. Brida, and M. Genovese, Physical Review Letters 110, 153603 (2013).

- Zhang et al. (2020) Y. Zhang, D. England, A. Nomerotski, P. Svihra, S. Ferrante, P. Hockett, and B. Sussman, Physical Review A 101, 053808 (2020).

- England et al. (2019) D. G. England, B. Balaji, and B. J. Sussman, Physical Review A 99, 023828 (2019).

- Defienne et al. (2019) H. Defienne, M. Reichert, J. W. Fleischer, and D. Faccio, Science Advances 5, eaax0307 (2019).

- Gregory et al. (2020) T. Gregory, P.-A. Moreau, E. Toninelli, and M. J. Padgett, Science Advances 6, eaay2652 (2020).

- Moreau et al. (2012) P.-A. Moreau, J. Mougin-Sisini, F. Devaux, and E. Lantz, Physical Review A 86, 010101 (2012).

- Edgar et al. (2012) M. P. Edgar, D. S. Tasca, F. Izdebski, R. E. Warburton, J. Leach, M. Agnew, G. S. Buller, R. W. Boyd, and M. J. Padgett, Nature Communications 3, 1 (2012).

- Defienne et al. (2018) H. Defienne, M. Reichert, and J. W. Fleischer, Physical Review Letters 120, 203604 (2018).

- Chrapkiewicz et al. (2014) R. Chrapkiewicz, W. Wasilewski, and K. Banaszek, Optics Letters 39, 5090 (2014).

- Chrapkiewicz et al. (2016) R. Chrapkiewicz, M. Jachura, K. Banaszek, and W. Wasilewski, Nature Photonics 10, 576 (2016).

- Eckmann et al. (2020) B. Eckmann, B. Bessire, M. Unternährer, L. Gasparini, M. Perenzoni, and A. Stefanov, Optics Express 28, 31553 (2020).

- Ianzano et al. (2020) C. Ianzano, P. Svihra, M. Flament, A. Hardy, G. Cui, A. Nomerotski, and E. Figueroa, Scientific Reports 10, 1 (2020).

- Ndagano et al. (2020) B. Ndagano, H. Defienne, A. Lyons, I. Starshynov, F. Villa, S. Tisa, and D. Faccio, npj Quantum Information 6, 1 (2020).

- Defienne et al. (2021) H. Defienne, J. Zhao, E. Charbon, and D. Faccio, Physical Review A 103, 042608 (2021).

- Liu et al. (2019b) H. Liu, D. Giovannini, H. He, D. England, B. J. Sussman, B. Balaji, and A. S. Helmy, Optica 6, 1349 (2019b).

- Frick et al. (2020) S. Frick, A. McMillan, and J. Rarity, Optics Express 28, 37118 (2020).

- Ren et al. (2020) X. Ren, S. Frick, A. McMillan, S. Chen, A. Halimi, P. W. Connolly, S. K. Joshi, S. McLaughlin, J. G. Rarity, J. C. Matthews, et al., in CLEO: Applications and Technology (Optical Society of America, 2020) pp. AM3K–6.

- Morimoto et al. (2021) K. Morimoto, M.-L. Wu, A. Ardelean, and E. Charbon, Physical Review X 11, 011005 (2021).

- Valencia et al. (2005) A. Valencia, G. Scarcelli, M. D’Angelo, and Y. Shih, Physical Review Letters 94, 063601 (2005).

- Gasparini et al. (2018) L. Gasparini, M. Zarghami, H. Xu, L. Parmesan, M. M. Garcia, M. Unternährer, B. Bessire, A. Stefanov, D. Stoppa, and M. Perenzoni, in 2018 IEEE International Solid - State Circuits Conference - (ISSCC) (2018) pp. 98–100.

- Kim et al. (2021) B. Kim, S. Park, J.-H. Chun, J. Choi, and S.-J. Kim, in 2021 IEEE International Solid-State Circuits Conference (ISSCC), Vol. 64 (IEEE, 2021) pp. 108–110.

Acknowledgements. D.F. is supported by the Royal Academy of Engineering under the Chairs in Emerging Technologies scheme and acknowledges financial support from the UK Engineering and Physical Sciences Research Council (grants EP/T00097X/1 and EP/R030081/1). This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No. 754354. H.D. acknowledges support from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No. 840958.

Author contributions. D.F. and E.C. conceived the research. H.D., A.L. and J.Z. designed the experimental setup. J.Z. and A.L. performed the experiment. J.Z. and A.L. analysed the data. A.U. and E.C. developed SwissSPAD2 and H.D. developed the coincidence counting algorithm. E.C. supervised the project. All authors contributed to the manuscript.

Data availability. The experimental data and codes that support the findings presented here are available from the corresponding authors upon reasonable request.

Supplementary Information

.1 Details on data processing

The intensity images shown in this work are obtained by summing the N acquired frames pixel-wise. The value of hot pixels is set to 0 for the correlation calculation and then interpolated from the neighboring pixels to produce a cleaner intensity image. The spatially-averaged correlation images shown in the main text are cropped from the full data for better visualization. Fig. 5 shows the full spatially-averaged correlation data corresponding to the 4 selected gate positions in Fig. 2. In Fig. 5c and d, the correlation peaks are clearly visible, while the background fluctuation in Fig. 5d is smaller as no classical light is collected at that gate position Defienne et al. (2019).

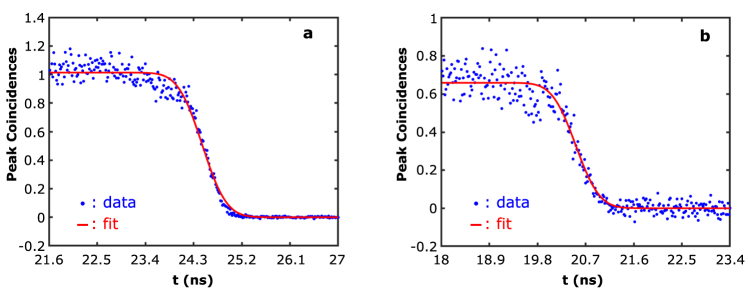

The arrival time of the quantum illuminated object can be located by finding the falling edge of the correlation peak profile. To locate the middle point of the falling edge, we fitted the fluctuating correlation peak data with the error function Chan et al. (2019). From the fitted curves shown in Fig. 6a and b, we obtained the falling time of ns for the synchronous case and ns for the asynchronous case (from to ). The small difference (1 gate position) also supports the reliability of the correlation peak profile for object ranging. As the falling edge is not perfectly sharp, we recovered the intensity images of the classical object and quantum object by subtracting the intensity image at the gate position gates before the middle point from the one gates after. This yields a subtracted intensity image with better contrast by avoiding the images acquired on the falling edge itself. The falling edge profile could be further improved by optimizing the electrical gate shape of the camera and decreasing the width of the laser pulse.
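A minimal sketch of the falling-edge localisation is given below, assuming an error-function edge model with four parameters (midpoint, width, amplitude and offset). Rather than a general nonlinear optimiser, it searches the midpoint and width on a grid and solves for amplitude and offset by linear least squares. The function names, the grid of candidate widths and the sign convention of the subtraction (image before the edge minus image after it, so that the object leaving the gate appears with positive contrast) are assumptions made for illustration.

```python
import math

import numpy as np

_erf = np.vectorize(math.erf)


def falling_edge_model(t: np.ndarray, t0: float, sigma: float) -> np.ndarray:
    """Unit-height falling edge: about 1 well before t0, about 0 well after."""
    return 0.5 * (1.0 - _erf((t - t0) / (math.sqrt(2.0) * sigma)))


def fit_falling_edge(t: np.ndarray, y: np.ndarray, sigmas) -> tuple:
    """Return the (t0, sigma) pair that minimises the squared residual.

    Candidate midpoints are the sampled gate positions themselves; for each
    candidate the amplitude and offset follow from linear least squares.
    """
    best = (math.inf, None, None)
    for sigma in sigmas:
        for t0 in t:
            edge = falling_edge_model(t, t0, sigma)
            basis = np.column_stack([edge, np.ones_like(t)])
            coeffs, *_ = np.linalg.lstsq(basis, y, rcond=None)
            if coeffs[0] <= 0:  # reject rising edges
                continue
            err = float(np.sum((basis @ coeffs - y) ** 2))
            if err < best[0]:
                best = (err, float(t0), float(sigma))
    return best[1], best[2]


def subtract_around_edge(intensity_stack: np.ndarray, edge_index: int, margin: int) -> np.ndarray:
    """Image `margin` gates before the edge minus the image `margin` gates after."""
    before = intensity_stack[edge_index - margin].astype(float)
    after = intensity_stack[edge_index + margin].astype(float)
    return before - after
```

Taking the two images a few gates away from the fitted midpoint, rather than at the midpoint itself, avoids the partially gated frames on the edge.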

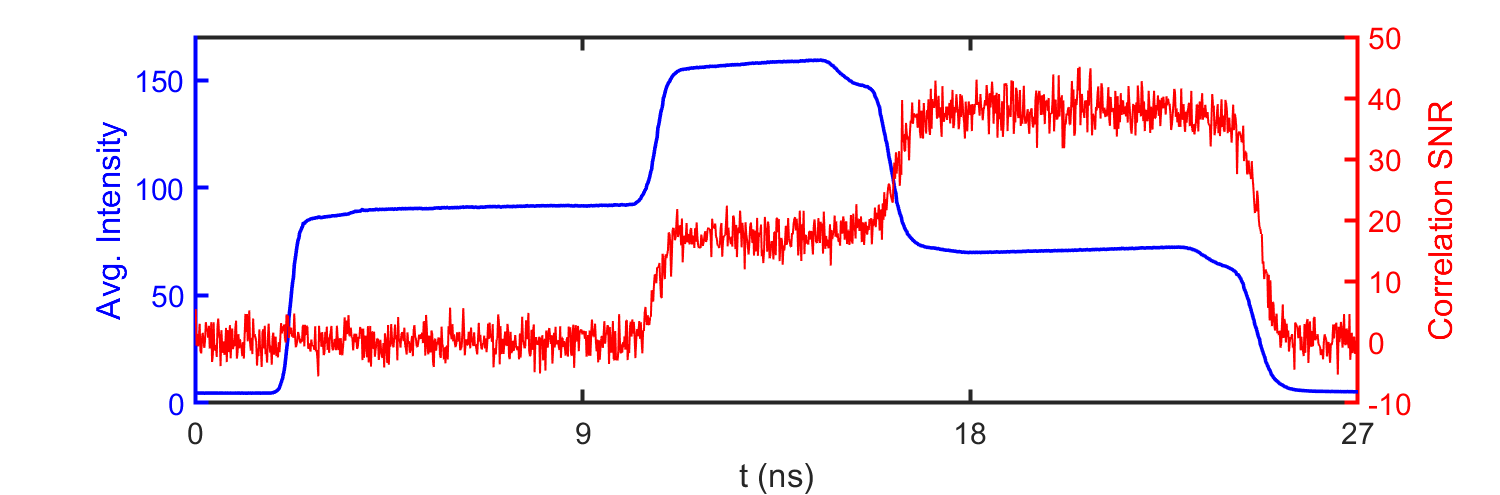

.2 Correlation peak SNR analysis

To analyse the visibility of the correlation peak in the spatially-averaged correlation image, we calculate the signal-to-noise ratio (SNR), defined as the correlation peak value divided by the standard deviation of the background noise surrounding it. Fig. 7 shows the SNR for the result in Fig. 2, with the average intensity plotted as a reference. We observe that the SNR increases above when quantum light arrives at the camera ns (classical light already present). In addition, the SNR is further improved when the classical light disappears at ns because the background noise decreases.
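The SNR definition above can be sketched as follows, assuming that the peak sits at the central element of the spatially-averaged correlation image and that the background is every pixel outside a small square around it. The function name and the `exclude_radius` parameter are hypothetical.

```python
import numpy as np


def correlation_peak_snr(corr_image: np.ndarray, exclude_radius: int = 1) -> float:
    """Central peak value divided by the standard deviation of the background."""
    cy, cx = corr_image.shape[0] // 2, corr_image.shape[1] // 2
    peak = corr_image[cy, cx]
    yy, xx = np.indices(corr_image.shape)
    # Background: everything outside a (2 * exclude_radius + 1)-pixel square
    # centred on the peak.
    background = (np.abs(yy - cy) > exclude_radius) | (np.abs(xx - cx) > exclude_radius)
    return float(peak / corr_image[background].std())
```

Excluding a small neighbourhood around the peak keeps its wings from inflating the background estimate; the appropriate radius depends on the correlation width and would need to be chosen per setup.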

To further evaluate the visibility of the correlation peak in the spatially-averaged correlation image, we measure the correlation peak SNR for various ratios of classical to quantum light intensity () by keeping the pump laser power constant and tuning the classical laser power. The experiments are performed with the same camera configuration as in Fig. 4. As shown in Fig. 8a and b, the superimposed images are acquired at the ns gate position, where the gate window captures both classical light and quantum light. It is difficult to distinguish the traffic stop sign in the superimposed image when is high (), while it is better resolved after subtracting the classical light acquired at the gate position of ns. The profiles across the center of the spatially-averaged correlation image show that the background is noisier when the classical light intensity is higher. Fig. 8c shows the measured correlation peak SNR values for various and the fit to the theoretical model described in Defienne et al. (2019). All the results are based on measurements with fixed 8-bit frames.

.3 Improving the quantum LiDAR acquisition speed

.3.1 Reducing the number of frames

In Figure 2, frames are acquired in s at each gate position to measure a spatially-averaged correlation image and identify the peak with an SNR on the order of . As shown in Figure 9, the peak is still clearly visible (SNR on the order of ) if the number of frames acquired per gate is reduced to , which lowers the acquisition time to s per gate.

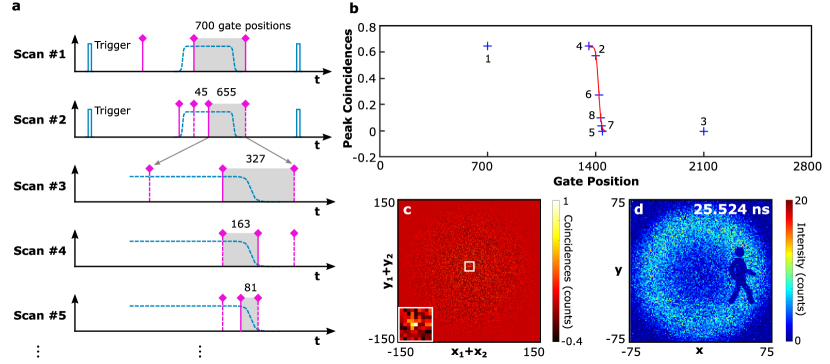

.3.2 Using a correlation-driven algorithm

To further improve the quantum LiDAR speed, we developed a coincidence-driven algorithm inspired by the binary search process used in successive-approximation register (SAR) analog-to-digital converters. To cover the range of the object, the scanning time range should be larger than the laser pulse period. Here, we use gate positions corresponding to ns. As shown in Fig. 10a, we initially scan gate positions at intervals of gates ( ns), dividing the scanning range into equal parts. As the width of the gate window is ns, at least one of the initially scanned gates is guaranteed to capture the reflected quantum light pulse, and a correspondingly higher correlation peak is obtained there. Since the falling edge is not perfectly sharp, a subsequent scan checks whether the gate position with the higher correlation peak lies on the falling edge. To avoid such false locating, we scanned the gate positions gates before and gates after the target position from the last scan. The target range defined for the next scan lies between the last gate position with a higher correlation peak and the scanned gate position just after it. When the target range is narrow enough, the fitting method can be applied to the discrete scanned points to obtain the falling edge. An example is depicted in Fig. 10b, where only seconds are needed, with scanning points, to locate the range of the object. Fig. 10c shows the projected coincidences at scanning point 2, in which the coincidence peak is clearly visible. Fig. 10d is the subtracted intensity image, and the measured relative range is ns. Note that the initial offsets for the measurements in Fig. 2 and Fig. 10 are different, resulting in different relative time ranges.
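The coarse-to-fine idea can be illustrated with a simplified bisection sketch. It is not the exact procedure described above: the extra scans before and after the target position and the final error-function fit are omitted. The callable `has_peak`, which stands in for acquiring frames at a gate position and testing whether a correlation peak is present, and all other names are hypothetical. The sketch also assumes that the coarse step is no larger than the gate width and that no peak is present at the end of the scan range.

```python
from typing import Callable, Tuple


def locate_falling_edge(
    has_peak: Callable[[int], bool],
    n_gates: int,
    coarse_step: int,
    min_width: int = 1,
) -> Tuple[int, int, int]:
    """Bracket the falling edge of the correlation peak profile.

    Returns (low, high, n_measurements): a peak is present at gate `low`
    and absent at gate `high`, with high - low <= min_width.
    """
    measurements = 0
    low = None
    # Coarse pass: remember the last coarse gate position that shows a peak.
    for gate in range(0, n_gates, coarse_step):
        measurements += 1
        if has_peak(gate):
            low = gate
    if low is None:
        raise ValueError("no correlation peak found in the coarse scan")
    high = min(low + coarse_step, n_gates - 1)
    # Fine pass: bisect between "peak present" and "peak absent".
    while high - low > min_width:
        mid = (low + high) // 2
        measurements += 1
        if has_peak(mid):
            low = mid
        else:
            high = mid
    return low, high, measurements
```

With a scan of 1500 gate positions and a coarse step of 100 gates (an illustrative choice), such a search needs a few tens of measurements instead of 1500, which is the source of the speed-up.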

By reducing the number of acquired frames to and using a correlation-driven algorithm, we retrieve the quantum-illuminated object and its depth in seconds. In the current implementation, it is not necessary to consider the variation of the gating profile across pixels since the object is a two-dimensional mask, which simplifies the processing and makes the proposed algorithm effective. However, the correlation-driven algorithm can also be extended to quantum LiDAR applications with three-dimensional objects by applying in-pixel successive approximation. A similar approach has been implemented for conventional TCSPC-based LiDAR to reduce the output bandwidth Kim et al. (2021).

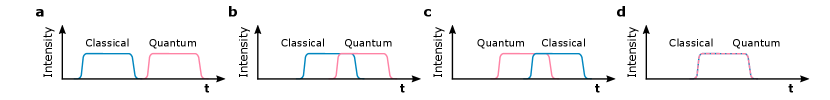

.4 Analysis of different scenarios

Depending on the arrival times of the reflected photons from the classical light and the quantum light, different scenarios arise, as shown in Fig. 11. For the first three scenarios shown in Fig. 11a, b and c, the target quantum object can be located from the falling edge of the correlation peak profile and recovered by subtracting the intensity image after the falling edge, whether the interference is background light (a and b) or classical light (c). However, if the distance between the classical and quantum objects is smaller than the depth resolution of the camera, a spatially-resolved correlation image has to be acquired for distillation, which takes more time.