Random Isn’t Always Fair: Candidate Set Imbalance and Exposure Inequality in Recommender Systems

Abstract.

Traditionally, recommender systems operate by returning a user a set of items, ranked in order of estimated relevance to that user. In recent years, methods relying on stochastic ordering have been developed to create “fairer” rankings that reduce inequality in who or what is shown to users. Complete randomization–ordering candidate items randomly, independent of estimated relevance–is largely considered a baseline procedure that results in the most equal distribution of exposure. In industry settings, recommender systems often operate via a two-step process in which candidate items are first produced using computationally inexpensive methods and then a full ranking model is applied only to those candidates. In this paper, we consider the effects of inequality at the first step and show that, paradoxically, complete randomization at the second step can result in a higher degree of inequality relative to deterministic ordering of items by estimated relevance scores. In light of this observation, we then propose a simple post-processing algorithm in pursuit of reducing exposure inequality that works both when candidate sets have a high level of imbalance and when they do not. The efficacy of our method is illustrated on both simulated data and a common benchmark data set used in studying fairness in recommender systems.

1. Introduction

Recommender and ranking systems organize vast troves of information to help people make decisions, like what to buy or who to hire. They also help people find relevant information, like content produced on social media or web pages. These recommender systems have historically followed the Probability Ranking Principle (Robertson, 1977): to maximize utility to a user of the system, recommended items should appear in decreasing order of their probability of relevance to the user. However, this approach completely ignores who or what is recommended despite the economic and social impacts to them. For example, a recommender system that does not return an individual’s content can preclude that content creators’ access to the $10 billion creator economy (Wee, 2021). Image searches that only return the images with the highest relevance can perpetuate occupational gender stereotypes in images by only, for example, returning images of men when queried for professional roles traditionally associated with men. (Kay et al., 2015). This over-indexing on the consumers of the system can lead to extreme inequality in who or what gets exposure.

These inequalities arise for reasons related to the algorithm itself and how the results of the recommendation algorithm are displayed and interacted with by users. On the non-algorithmic end, due to user interface design choices such as limited recommendation spots and human behavior such as limited attention or preference for highly ranked items regardless of relevance (Collins et al., 2018; Granka et al., 2004), a relatively small number of items are recommended and even fewer engaged with. On the algorithmic end, the Probability Ranking Principle exacerbates the disparities of outcomes between items that are predicted to be better than others since they will always be ranked in a better position even when differences in predicted relevance is negligible. In the worst case, one item can receive high levels of exposure while a similar item with only marginally worse estimated relevance can receive none. Because the estimated relevance itself can have high statistical uncertainty, it is possible that this “winners-take-all” dynamic could allocate much more exposure to an item that is, in reality, less relevant.

Stochastically ranking the items–instead of deterministically ranking them–has emerged as one standard approach to mitigate exposure inequality (Singh and Joachims, 2019; Diaz et al., 2020; Wu et al., 2022; Bower et al., 2021; Oosterhuis, 2021; Do et al., 2021; Singh and Joachims, 2018; Usunier et al., 2022; Kuhlman et al., 2021; Gorantla et al., 2022; García-Soriano and Bonchi, 2021; Singh et al., 2021; Heuss et al., 2022; Pandey et al., 2005). Stochastic rankings provide flexibility in how expected exposure is allocated to items in comparison to the rigid allocation of exposure under deterministic rankings. For example, one popular approach samples rankings from the Plackett-Luce distribution (Singh and Joachims, 2019; Diaz et al., 2020; Wu et al., 2022; Bower et al., 2021; Oosterhuis, 2021). Under this sampling approach, for each ranking request, items with higher estimated relevance are more likely to appear lower in the ranking, though there is randomness in the exact order in which items occur. In contrast to the deterministic ranking setting, under the Plackett-Luce sampling, items with similar predicted relevance will receive similar amounts of exposure in expectation. Another common approach used as a baseline for comparison of fair ranking models is to order the candidate items (Diaz et al., 2020; Wu et al., 2022) uniformly at random , i.e. every item has equal probability of appearing in each position. This approach is often described as leading to “fairer” ranking models that more equally distribute exposure across producers. However, in practice we have found that a pure randomization approach can actually result in an increase of exposure inequality.

In this paper, we explore how randomized ranking can increase inequality. In short, ranking in industry settings typically operates in a two-step process since it would be infeasible to rank every single possible item for each user (Wang and Joachims, 2022). In the first stage, a computationally inexpensive algorithm generates a relatively small candidate set, and then a more computationally expensive approach ranks the candidates. The phenomenon we identified in which randomization across the candidates increases inequality can occur when candidate sets are imbalanced, i.e. when some items are much more likely to appear in the candidate sets than others.

While there are several aspects to consider when building responsible recommender systems, like demographic biases in model performance, in this paper we specifically focus on inequality of exposure of the items being recommended. We offer two main contributions. First, we demonstrate that when striving to reduce exposure inequality in recommender systems, candidate set imbalance cannot be ignored. In particular, despite the widespread assumption that randomizing reduces inequality, we demonstrate that for recommender systems with imbalanced candidate sets, this need not be the case. Second, we propose a modification to the class of Plackett-Luce distributions that have been used as a post-processing step to reduce inequality of exposure on the individual ranking level while balancing consumer-utility (Diaz et al., 2020; Wu et al., 2022). This modification uses the system level information of how often an item appears in the candidate sets to reduce exposure inequality. Similar to Plackett-Luce post-processing, our approach is simple as it does not require re-training the candidate generation nor ranking model. Moreover, since post-processing methods like ours operate on the relevance scores regardless of how they were learned or obtained, our approach is appropriate in industry settings where the outputs of several models may be combined to produce a ranking of candidates. We illustrate the effectiveness of this approach on synthetic and real data.

2. Related Work

Over the past few years, the fair ranking literature has rapidly grown. See (Ekstrand et al., 2022; Patro et al., 2022; Li et al., 2022; Zehlike et al., 2021; Sonboli et al., 2022) for survey papers. While there are many ethical aspects to consider when building recommender systems, in this work, we are interested in the popular problem of inequality of exposure allocation to the items being recommended. In particular, we are interested in the relationship between equity of exposure at the individual ranking level and at the system level. Exposure at the individual ranking level comes from the exposure items receive in a single ranking request from a user at a single point in time. Exposure at the system level comes from the cumulative exposure items receive over all users and all ranking requests over a time period.

There are several papers that focus on distributing exposure more equitably on the individual ranking level (Singh and Joachims, 2018, 2019; Diaz et al., 2020; Heuss et al., 2022; Wu et al., 2022) which do not take candidate set inequality into account. For example, the canonical work in (Singh and Joachims, 2018) requires that the ratio of exposure that an item receives to its relevance should be constant over all items for each ranking request. One reason to focus on exposure at the individual ranking level is that the associated optimization problems become easier to solve since the dependent nature of system level exposure can be ignored. In light of candidate set imbalance, as we show in our experiments, these type of approaches do not necessarily guarantee equity of exposure at the system level.

On the other hand, there are group-fairness notions of equality of exposure on the individual ranking level where exposure equality does generalize to the system level. In particular, since these approaches put constraints on the proportions of items in the “top ” belonging to each demographic group for each ranking, each group at the system level will receive the same level of exposure as they do in each individual ranking (Yang and Stoyanovich, 2017; Zehlike et al., 2017; Celis et al., 2018; Geyik et al., 2019; Celis et al., 2020; Yang et al., 2019). However, these approaches do not naturally extend to individual fairness, the main case we consider.

Another line of work focuses on distributing and measuring exposure more equitably at the system level, which implicitly takes candidate set imbalance into account (Sürer et al., 2018; Biega et al., 2018; Do et al., 2021; Do and Usunier, 2022; Lazovich et al., 2021; Zhu and Lerman, 2016). Instead of turning to stochastic rankings, the work in (Biega et al., 2018) amortizes equity of exposure on the system level over time. However, their approach as well as (Sürer et al., 2018) requires solving an integer linear program.

Recently, the works in (Do et al., 2021; Do and Usunier, 2022) overcame the previously mentioned optimization challenges that arise when reducing system level exposure directly via the Franke-Wolfe algorithm and leveraging tools in non-smooth optimization. Their approach learns a stochastic ranking model given relevance scores by optimizing welfare functions that take into account user utility and item side inequality. Since our algorithm does not require training a model, our approach can more quickly adapt to dynamic environments that effect candidate set imbalance although recently a fast online algorithm was proposed (Usunier et al., 2022) applicable to differentiable models like in (Do et al., 2021) but not (Do and Usunier, 2022). Furthermore, along the way, the authors of (Do et al., 2021) also note a similar finding as ours wherein a class of stochastic rankings theoretically behave unexpectedly in terms of producer inequality and consumer utility, albeit in a different setting.

In either case, stochastic rankings are one standard way of achieving system level or individual-level equity of exposure (Singh and Joachims, 2019; Diaz et al., 2020; Wu et al., 2022; Bower et al., 2021; Oosterhuis, 2021; Do et al., 2021; Singh and Joachims, 2018; Usunier et al., 2022; Kuhlman et al., 2021; Gorantla et al., 2022; García-Soriano and Bonchi, 2021; Singh et al., 2021; Heuss et al., 2022; Pandey et al., 2005). The work in (Diaz et al., 2020; Wu et al., 2022) proposes sampling rankings from a class of Plackett-Luce distributions as a post-processing step to achieve individual-level equity of exposure. They make the observation that as stochasticity is increased, inequality of exposure decreases. In the case of a random ranking then, expected exposure is equalized for all items in a single ranking request. However, in our work, due to candidate set inequality, we argue that this post-processing algorithm does not guarantee system level equality of exposure. We make a simple modification to this algorithm (Diaz et al., 2020; Wu et al., 2022) by leveraging system level information.

Finally, very recently, methods to change the candidate sets themselves have emerged. For example, the authors in (Wang and Joachims, 2022) propose algorithms to obtain group-fair candidate sets. Instead of modifying the candidate sets, our work leverages information about the candidate sets to achieve more equitable rankings in cases where candidate set inequality is significant but not insurmountable.

3. Motivating Toy Example

In this section, we illustrate how system level inequality of exposure can actually increase even when the final ranking a user sees is drawn uniformly at random from all permutations of their candidate set.

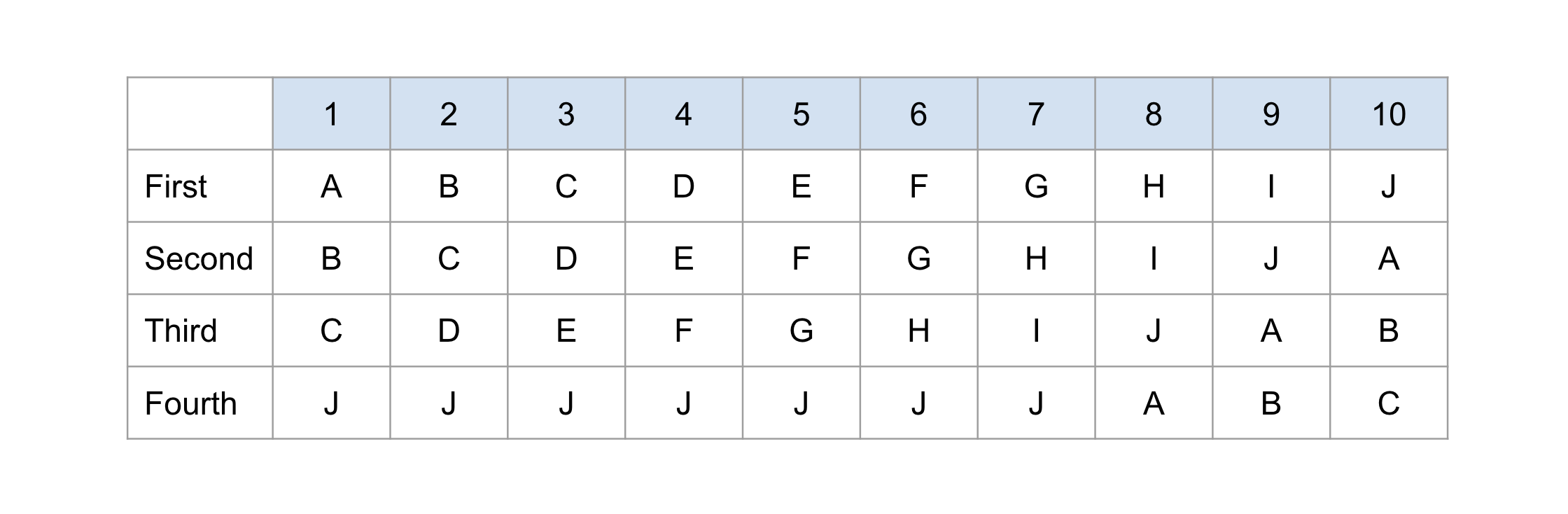

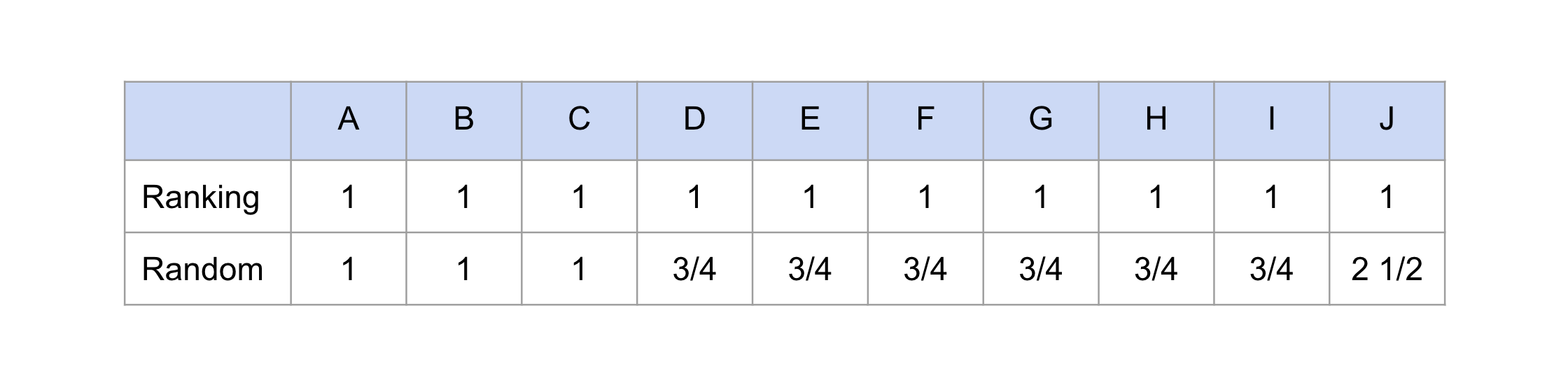

To illustrate how completely randomizing each ranking request can increase system level inequality, we present the following toy example. Consider a case where we have consumers {1, 2, …, 10} and producers {A, B, C, …, J}. For each consumer, the candidate generation process returns four candidates, which are ranked as shown in Figure 1. Suppose for simplicity that each consumer stops after the first item that is returned.

Then, if we use deterministic ranking, every producer receives exactly the same number of impressions–exactly one. However, if we first randomize the order among the four candidates, then producer J has a 1/4 chance of being shown to every consumer, resulting in 2.5 impressions on expectation overall. The expected number of impressions are shown in Figure 2 under both scenarios. In this case, it is clear that randomization would increase exposure inequality relative to ranking because of the imbalanced nature of the candidate sets.

While this example may seem contrived, this phenomenon, where lower ranked content tends to appear much more often in candidate sets overall, can occur in practice. One such reason is to prevent empty or small candidate sets. When the candidate sets that are returned by the first stage model are small, popular items may be used to backfill candidate sets. These popular items may not be the most relevant items to a user, resulting in low relevance scores, but could appear in many candidate sets to ensure every candidate set is of an appropriate size.

4. Post-Processing Algorithm: Plackett-Luce With Inverse Candidate Frequency Weights

We now present a post-processing algorithm to mitigate the effect of candidate set inequality. While it is reasonable to directly intervene on the first stage of candidate generation like in (Wang and Joachims, 2022), we argue it is also valid to intervene directly at the second stage of ranking the candidates for a couple reasons. First, candidate sets are populated from many different sources, like popular accounts, accounts that are followed by accounts a user follows, etc. If we directly intervene at the candidate generation stage, we need to take into account dependencies between these multiple sources that would otherwise be independent from one another, which may increase latency to an unacceptable level and may result in small candidate sets for some users since some sources like popular account sources are used to ensure each user has a reasonable number of candidates. Second, intervening on the second step arguably has more of a direct impact on what recommendation a user ultimately sees as there may be hundreds of candidates but only a handful of candidates actually selected to be recommended.

In (Diaz et al., 2020; Wu et al., 2022), the authors propose a simple post-processing algorithm to achieve equity of exposure on the individual ranking level by taking the relevance scores output by a model, transforming the scores either by exponentiation by a constant or multiplying them by a constant, and then sampling a ranking from the Plackett-Luce distribution. Their transformation of scores allows them to smoothly interpolate between completely randomizing the candidate sets (constant is zero) and deterministically ranking the items in decreasing order of relevance score (constant is large).

We propose a modification to this post-processing algorithm by incorporating the frequency that each item appears in candidate sets in pursuit of achieving system level equity of exposure. We call our approach “Plackett-Luce With Inverse Candidate Frequency Weights.” In particular, suppose there are users and items . For every user , let be the set of candidate items that need to be ranked for user . Let be the set of relevance scores corresponding to the items. For simplicity, we assume each user requests just one ranking. Let . Let be the number of candidate sets that item appears in. Then for user , the probability that the ranking is sampled is

| (1) |

Considering Equation (1) for all possible rankings, it is easy to see that it is more likely to sample rankings such that items corresponding to larger, respectively smaller, are highly, respectively lowly, ranked. When and , the hyperparameter allows this distribution to smoothly interpolate between the deterministic ranking given by sorting the items by decreasing relevance score (when ) and stochastic rankings that are more likely to highly (lowly) rank items that appear less (more) frequently among all candidate sets (when ). When each item appears in the candidate sets at equal rates and is large, our algorithm behaves like the algorithm in (Diaz et al., 2020; Wu et al., 2022). For a fixed , controls the level of stochasticity. To efficiently sample rankings from the Plackett-Luce distribution, we utilize “Algorithm-A” in (Efraimidis and Spirakis, 2006), which is an algorithm that solves the “weighted sampling” problem when there are items to rank.

Furthermore, we can easily extend our individual fairness type method to group fairness by replacing , which is the number of times user appears in the candidate sets, with defined as the number of times a candidate from group appears in the candidate sets where is the demographic group user belongs to.

5. Experimental Results

We consider experiments on a synthetic data set where ground truth relevance scores are known as well as the German credit data set (Dua and Graff, 2017) where relevance scores need to be learned from features of the candidates to test our approach under a more realistic scenario. The German credit data set is a real binary classification data set that is commonly repurposed for ranking tasks in the fair ranking literature (Zehlike et al., 2021).

5.1. Set-up

We now describe the common set-up in both experiments. Motivated by the example in Section 3, we simulate scenarios where some candidates who have relatively low relevance scores are significantly more likely to appear in a candidate set than others. This can be achieved if the rank at which these candidates appear in the final recommendation is negatively correlated with their scores.

5.1.1. Basics

Recall that we consider a two-stage process for recommendations. First, for each user requesting a ranking, a candidate set is selected. Then the items in the candidate set are ranked. We assume that the size of each candidate set, , is constant over all users and that each user views exactly the first candidates per ranking presented to them. We assume each user makes exactly one ranking request. Each candidate has a relevance score that is constant over the users. These scores are either given as ground truth in the synthetic experiments or learned from a model in the German credit experiments.

5.1.2. Candidate Set Construction

The candidate sets are sampled from a certain probability distribution over all candidate sets of size . In particular, each candidate is assigned a candidate score . For all but 10 of the candidates, the candidate score for each candidate is sampled from a beta distribution . The other 10 are assigned a relatively large candidate score of 5 compared to the scores of the other items so that these items will be more likely to be selected in the candidate sets. Then we build each candidate set iteratively by sampling one candidate out of all possible remaining candidates without replacement until we have selected candidates. The chance that a candidate is selected at any step is the ratio of its candidate score to the sum of the candidate scores of all remaining potential candidates.

5.1.3. Algorithms

We evaluate our post-processing algorithm “Plackett-Luce with Inverse Candidate Frequency Weights” from Section 4 against a handful of other baselines. We refer to it as “PL-ICFW” for short. We call the special case of in our post-processing algorithm “Inverse Weighted.” The algorithm that ranks candidates in decreasing order of relevance score is called “Deterministic.” As we stated in the introduction, this type of algorithm has historically been used in recommender systems based on the Probability Ranking Principle (Robertson, 1977). We also compare our approach to sampling rankings from the Plackett-Luce distribution as a function of the relevance scores multiplied by a constant as proposed in (Diaz et al., 2020; Wu et al., 2022). We refer to this approach as “Scaled Plackett-Luce” or “Scaled PL” for short. Our approach is similar except we include the extra terms in Equation (1) and our hyperparameter . The algorithm that uniformly at random samples a ranking of the candidates is called “Randomized”, which is a special case of Scaled Plackett-Luce. In the German credit experiments, we also compare to the stochastic rankings learned via policy-gradients with individual fairness constraints in (Singh and Joachims, 2019), and refer to it as “PG-Rank”. Because PG-Rank is an in-processing algorithm that learns relevance scores, we do not consider this approach in the synthetic experiment case where the relevance scores are already known. All algorithms except Deterministic are stochastic in nature.

Furthermore, we illustrate our algorithm under several choices of and two different choices of as a scalar multiple of in Equation (1). Similarly, we vary the one hyperparameter in Scaled Plackett-Luce that multiplies the relevance scores by a constant before sampling from the Plackett-Luce distribution. See the Appendix in Section B for the specific choices of hyperparameters for PL-ICFW and Scaled PL for both experiments.

5.1.4. Evaluation Metrics

We measure performance by looking at the relationship between item-side inequality of exposure and content quality of items recommended to users. We make the normative assumption that viewed recommendations being concentrated to a small number of users is inherently unfair. Therefore, we evaluate system-level inequality by evaluating what percent of viewed recommendations belongs to the top 1% of users. We refer to this metric as “T1PS.” This metric for measuring system-level inequality has been recently proposed in (Lazovich et al., 2021) and has been used in the empirical work in (Zhu and Lerman, 2016) for measuring inequality on social media. This metric is related to the Gini coefficient, which has also recently been used to measure inequality in recommender systems (Do and Usunier, 2022; Do et al., 2021). We opt to use T1PS since it is more interpretable than the Gini coefficient. We call the performance of the ranking algorithm on the users receiving recommendations the “content quality.” We measure content quality by the sum of the ground truth relevance scores of items that have been recommended and viewed by users divided by the number of users . Note that a candidate’s ground truth score may contribute to content quality multiple times if that candidate was viewed by multiple users. To be clear, for the German credit experiments, the ground truth relevance score of a candidate is its ground truth binary label. For the synthetic experiments, the relevance score is a continuous number, not a binary label.

5.2. Synthetic Data

5.2.1. Set-up

In our synthetic data set-up, there are users and unique candidates. The candidate set size is , and each user views the first candidates they are recommended. For each candidate with candidate score , we assign a ground truth relevance score of where .

5.2.2. Results

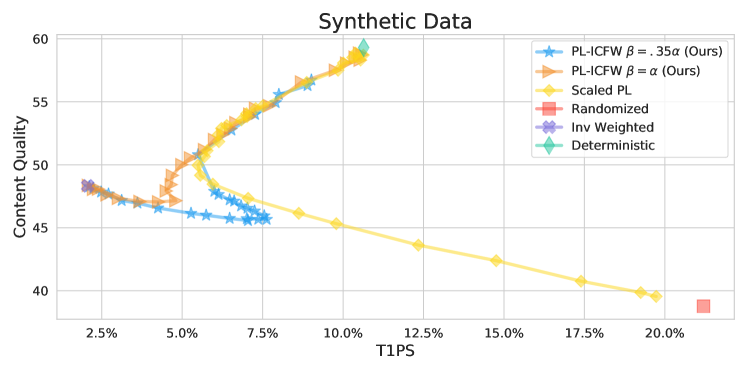

See Figure 3 for the performance of all the algorithms on the synthetic data set. There are several behaviors in this plot we would expect. First, our algorithm smoothly interpolates between Inverse Weighted and Deterministic. Second, we see the expected behavior that Scaled Plackett-Luce can smoothly interpolate between Randomized and Deterministic. Third, as expected due to our simulation set-up, we see that the Randomized scenario results in the most unequal outcome for producer-side exposure: more than 20% of viewed recommendations are attributed to the top 1% of recommended items. Fourth, the Deterministic ranking has the highest content quality as expected since by definition it recommends items with the highest relevance scores.

Interestingly, for the range of hyperparameter choices for Scaled PL111Recall this hyperparameter is a constant factor such that each score is multiplied by it before sampling from the Plackett-Luce model., while content quality monotonically decreases as the hyperparameter decreases, T1PS initially decreases but then gravely increases again, far beyond Deterministic. On the other hand, for our approach when , while T1PS almost monotonically decreases as gets smaller, content quality does not and actually increases as . We see that for relatively large hyperparameter choices that result in the sampled rankings being close to the Deterministic ranking, both our PL-ICFW method and Scaled PL behave similarly. However, the two methods diverge with relatively small hyperparameter choices, and by far outperforms . Our PL-ICFW method under both choices of significantly outperforms Scaled PL since there are several instances where PL-ICFW has the same or better content quality and the same or better T1PS. The minimum T1PS that Scaled PL can achieve is 6% whereas our approach achieves 2%. We do not expect to achieve the perfect case of T1PS being 1% since in general it is impossible to achieve equality of exposure among all producers. See Section A in the Appendix.

5.3. German Credit Data

5.3.1. Set-up

The German credit data set contains 1000 individuals seeking a loan from a bank. Each are labeled as good or bad credit risk and have features relating to demographic information as well as employment, education, credit history, and so on (Dua and Graff, 2017). We follow the pre-processing steps of Singh. et. al.222Using the notebook here: https://github.com/ashudeep/Fair-PGRank/blob/master/GermanCredit/ to allow for comparison with the PG-Rank work (Singh and Joachims, 2019). Nine features from the dataset are used as inputs to a linear model: age, sex, amount and duration of credit history, job and housing status, savings and checking account balances, and purpose of the loan. The numerical features age, credit amount, and credit duration are all shifted and scaled to a standard normal distribution. The remaining categorical features are encoded as one-hot vectors. Ultimately, this leads to a 29-dimensional input to the linear model.

We train a linear model with no fairness regularization based on the code in the notebook referenced above to obtain predicted relevance scores of the candidates for all algorithms except PG-Rank. Our algorithm and all the other baseline algorithms except PG-Rank require relevance scores since these algorithms do not learn the scores themselves. We also train several individually fair PG-Rank models based on their code by varying their hyperparameter that controls disparities in exposure at the individual level. See the Appendix in Section B for the specific hyperparameter choices.

The ten candidates whose candidate scores are 5 as discussed in Section 5.1.2 are the ten candidates with the 50-60 lowest learned relevance scores. There are , candidates, users who request recommendations, the candidate sets have size , and each user requesting recommendations sees recommendations.

5.3.2. Results

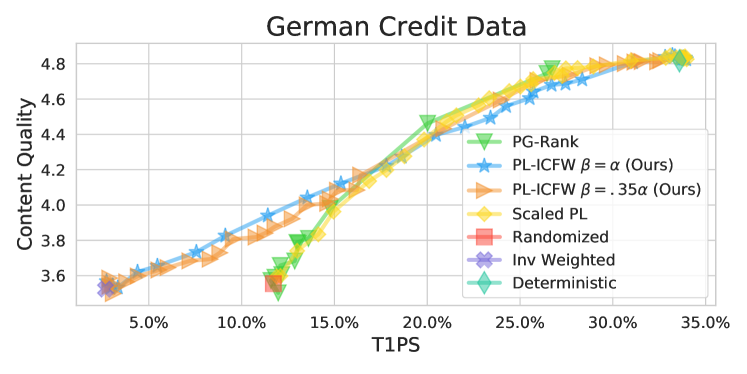

See Figure 4 for a comparison of the algorithms on the German credit data set. Unsurprisingly, similar to the synthetic data set case, we observe the same four expected behaviors as discussed before. When T1PS is larger than 16%, the models all perform similarly with PL-ICFW with slightly underperforming the other three algorithms. However, for content quality less than 4.2, our algorithm PL-ICFW under both choices of significantly outperforms the other algorithms since for the same level of content quality, our method has a significantly lower T1PS. All other methods cannot achieve T1PS lower than 12% while our method achieves values of T1PS less than 3% for the same level of content quality.

6. Conclusion And Limitations

6.1. Limitations

In many settings, the set of items to rank is continually changing and we may not know the exact frequency with which items will occur in candidate sets. We may need to estimate these frequencies based on a prior time period’s data. It is possible that the estimates are sensitive to the choice of time period. Although our post-processing is simple, the optimality of the distribution in Equation (1) is unknown. There may be better distributions that trade-off consumer-utility and system level exposure. Additionally, because our algorithm requires hyperparameters and the ranking distribution is a function of the relevance scores, any change to the distribution of the item scores due to a new model will require a new hyperparameter search. This issue is not limited to our approach, but any post-processing approach with hyperparameters, like score boosting approaches (Ha-Thuc et al., 2020; Nandy et al., 2021). Finally, if the candidate sets contain high levels of inequality, it may be infeasible for any approach that does not directly modify the candidate sets to achieve equitable exposure.

6.2. Conclusion

There are several aspects to consider when building responsible recommender systems, like demographic disparities in model performance. Our work specifically focuses on one aspect: inequality of exposure of the items being recommended. We show that even common-sense solutions to “fair ranking” can behave unexpectedly if they do not take into account candidate set inequality. We showed that even when individual ranking level exposure is completely equal for a given set of candidates, system level exposure measuring the cumulative exposure items receive over all users may not be due to candidate set inequalities. Although others have pointed out the distinction between individual level exposure and system level exposure (Sonboli et al., 2022; Raj et al., 2020), we provide a concrete example of the seemingly paradoxical relationship between system and individual ranking level exposure. We proposed a simple, computationally inexpensive post-processing algorithm that trades-off consumer-utility and producer-side exposure both in cases with that do and do not exhibit high candidate set imbalance. Additionally, our post-processing algorithm provides a baseline for producer-side experimentation, where the effects of algorithmic changes are measured on the producers of content (Ha-Thuc et al., 2020; Nandy et al., 2021), since we can allocate exposure to each item relatively evenly.

References

- (1)

- Biega et al. (2018) Asia J. Biega, Krishna P. Gummadi, and Gerhard Weikum. 2018. Equity of Attention: Amortizing Individual Fairness in Rankings. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval (SIGIR ’18). Association for Computing Machinery, Ann Arbor, MI, USA, 405–414. https://doi.org/10.1145/3209978.3210063

- Bower et al. (2021) Amanda Bower, Hamid Eftekhari, Mikhail Yurochkin, and Yuekai Sun. 2021. Individually Fair Rankings. In International Conference on Learning Representations. https://openreview.net/forum?id=71zCSP_HuBN

- Celis et al. (2020) L. Elisa Celis, Anay Mehrotra, and Nisheeth K. Vishnoi. 2020. Interventions for Ranking in the Presence of Implicit Bias. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (Barcelona, Spain) (FAT* ’20). Association for Computing Machinery, New York, NY, USA, 369–380. https://doi.org/10.1145/3351095.3372858

- Celis et al. (2018) L. Elisa Celis, Damian Straszak, and Nisheeth K. Vishnoi. 2018. Ranking with Fairness Constraints. International Colloquium on Automata, Languages, and Programming (2018). arXiv:1704.06840 http://arxiv.org/abs/1704.06840

- Collins et al. (2018) Andrew Collins, Dominika Tkaczyk, Akiko Aizawa, and Joeran Beel. 2018. Position Bias in Recommender Systems for Digital Libraries in Transforming Digital Worlds. 335–344. https://doi.org/10.1007/978-3-319-78105-1_37

- Diaz et al. (2020) Fernando Diaz, Bhaskar Mitra, Michael D Ekstrand, Asia J Biega, and Ben Carterette. 2020. Evaluating stochastic rankings with expected exposure. In Proceedings of the 29th ACM international conference on information & knowledge management. 275–284.

- Do et al. (2021) Virginie Do, Sam Corbett-Davies, Jamal Atif, and Nicolas Usunier. 2021. Two-sided fairness in rankings via Lorenz dominance. Advances in Neural Information Processing Systems 34 (2021), 8596–8608.

- Do and Usunier (2022) Virginie Do and Nicolas Usunier. 2022. Optimizing Generalized Gini Indices for Fairness in Rankings. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval (Madrid, Spain) (SIGIR ’22). Association for Computing Machinery, New York, NY, USA, 737–747. https://doi.org/10.1145/3477495.3532035

- Dua and Graff (2017) Dheeru Dua and Casey Graff. 2017. UCI Machine Learning Repository. https://archive.ics.uci.edu/ml/datasets/statlog+(german+credit+data). http://archive.ics.uci.edu/ml

- Efraimidis and Spirakis (2006) Pavlos S Efraimidis and Paul G Spirakis. 2006. Weighted random sampling with a reservoir. Information processing letters 97, 5 (2006), 181–185.

- Ekstrand et al. (2022) Michael D Ekstrand, Anubrata Das, Robin Burke, and Fernando Diaz. 2022. Fairness in recommender systems. In Recommender systems handbook. Springer, 679–707.

- García-Soriano and Bonchi (2021) David García-Soriano and Francesco Bonchi. 2021. Maxmin-fair ranking: individual fairness under group-fairness constraints. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining. 436–446.

- Geyik et al. (2019) Sahin Cem Geyik, Stuart Ambler, and Krishnaram Kenthapadi. 2019. Fairness-aware ranking in search & recommendation systems with application to linkedin talent search. In Proceedings of the 25th acm sigkdd international conference on knowledge discovery & data mining. 2221–2231.

- Gorantla et al. (2022) Sruthi Gorantla, Amit Deshpande, and Anand Louis. 2022. Sampling Random Group Fair Rankings. arXiv preprint arXiv:2203.00887 (2022).

- Granka et al. (2004) Laura A Granka, Thorsten Joachims, and Geri Gay. 2004. Eye-tracking analysis of user behavior in WWW search. In Proceedings of the 27th annual international ACM SIGIR conference on Research and development in information retrieval. 478–479.

- Ha-Thuc et al. (2020) Viet Ha-Thuc, Avishek Dutta, Ren Mao, Matthew Wood, and Yunli Liu. 2020. A counterfactual framework for seller-side a/b testing on marketplaces. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval. 2288–2296.

- Heuss et al. (2022) Maria Heuss, Fatemeh Sarvi, and Maarten de Rijke. 2022. Fairness of Exposure in Light of Incomplete Exposure Estimation. arXiv preprint arXiv:2205.12901 (2022).

- Kay et al. (2015) Matthew Kay, Cynthia Matuszek, and Sean A Munson. 2015. Unequal representation and gender stereotypes in image search results for occupations. In Proceedings of the 33rd annual acm conference on human factors in computing systems. 3819–3828.

- Kuhlman et al. (2021) Caitlin Kuhlman, Walter Gerych, and Elke Rundensteiner. 2021. Measuring group advantage: A comparative study of fair ranking metrics. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society. 674–682.

- Lazovich et al. (2021) Tomo Lazovich, Luca Belli, Aaron Gonzales, Amanda Bower, Uthaipon Tantipongpipat, Kristian Lum, Ferenc Huszar, and Rumman Chowdhury. 2021. Measuring Disparate Outcomes of Content Recommendation Algorithms with Distributional Inequality Metrics. (2021).

- Li et al. (2022) Yunqi Li, Hanxiong Chen, Shuyuan Xu, Yingqiang Ge, Juntao Tan, Shuchang Liu, and Yongfeng Zhang. 2022. Fairness in Recommendation: A Survey. arXiv preprint arXiv:2205.13619 (2022).

- Nandy et al. (2021) Preetam Nandy, Divya Venugopalan, Chun Lo, and Shaunak Chatterjee. 2021. A/B Testing for Recommender Systems in a Two-sided Marketplace. Advances in Neural Information Processing Systems 34 (2021), 6466–6477.

- Oosterhuis (2021) Harrie Oosterhuis. 2021. Computationally efficient optimization of plackett-luce ranking models for relevance and fairness. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval. 1023–1032.

- Pandey et al. (2005) Sandeep Pandey, Sourashis Roy, Christopher Olston, Junghoo Cho, and Soumen Chakrabarti. 2005. Shuffling a Stacked Deck: The Case for Partially Randomized Ranking of Search Engine Results. In Proceedings of the 31st International Conference on Very Large Data Bases, Trondheim, Norway, August 30 - September 2, 2005, Klemens Böhm, Christian S. Jensen, Laura M. Haas, Martin L. Kersten, Per-Åke Larson, and Beng Chin Ooi (Eds.). ACM, 781–792. http://www.vldb.org/archives/website/2005/program/paper/thu/p781-pandey.pdf

- Patro et al. (2022) Gourab K. Patro, Lorenzo Porcaro, Laura Mitchell, Qiuyue Zhang, Meike Zehlike, and Nikhil Garg. 2022. Fair Ranking: A Critical Review, Challenges, and Future Directions. In 2022 ACM Conference on Fairness, Accountability, and Transparency (Seoul, Republic of Korea) (FAccT ’22). Association for Computing Machinery, New York, NY, USA, 1929–1942. https://doi.org/10.1145/3531146.3533238

- Raj et al. (2020) Amifa Raj, Connor Wood, Ananda Montoly, and Michael D Ekstrand. 2020. Comparing fair ranking metrics. 3rd FAccTrec Workshop on Responsible Recommendation (2020).

- Robertson (1977) Stephen E Robertson. 1977. The probability ranking principle in IR. Journal of documentation (1977).

- Singh and Joachims (2018) Ashudeep Singh and Thorsten Joachims. 2018. Fairness of Exposure in Rankings. Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining - KDD ’18 (2018), 2219–2228. https://doi.org/10.1145/3219819.3220088 arXiv:1802.07281

- Singh and Joachims (2019) Ashudeep Singh and Thorsten Joachims. 2019. Policy Learning for Fairness in Ranking. In Advances in Neural Information Processing Systems 32. Curran Associates, Inc., 5426–5436. http://papers.nips.cc/paper/8782-policy-learning-for-fairness-in-ranking.pdf

- Singh et al. (2021) Ashudeep Singh, David Kempe, and Thorsten Joachims. 2021. Fairness in ranking under uncertainty. Advances in Neural Information Processing Systems 34 (2021), 11896–11908.

- Sonboli et al. (2022) Nasim Sonboli, Robin Burke, Michael Ekstrand, and Rishabh Mehrotra. 2022. The multisided complexity of fairness in recommender systems. AI Magazine 43, 2 (2022), 164–176.

- Sürer et al. (2018) Özge Sürer, Robin Burke, and Edward C Malthouse. 2018. Multistakeholder recommendation with provider constraints. In Proceedings of the 12th ACM Conference on Recommender Systems. 54–62.

- Usunier et al. (2022) Nicolas Usunier, Virginie Do, and Elvis Dohmatob. 2022. Fast online ranking with fairness of exposure. In 2022 ACM Conference on Fairness, Accountability, and Transparency. 2157–2167.

- Wang and Joachims (2022) Lequn Wang and Thorsten Joachims. 2022. Fairness in the First Stage of Two-Stage Recommender Systems. arXiv preprint arXiv:2205.15436 (2022).

- Wee (2021) Edwin Wee. 2021. Indexing the creator economy. (2021). https://stripe.com/blog/creator-economy

- Wu et al. (2022) Haolun Wu, Bhaskar Mitra, Chen Ma, Fernando Diaz, and Xue Liu. 2022. Joint Multisided Exposure Fairness for Recommendation. 703–714. https://doi.org/10.1145/3477495.3532007

- Yang et al. (2019) Ke Yang, Vasilis Gkatzelis, and Julia Stoyanovich. 2019. Balanced ranking with diversity constraints. IJCAI International Joint Conference on Artificial Intelligence (2019).

- Yang and Stoyanovich (2017) Ke Yang and Julia Stoyanovich. 2017. Measuring Fairness in Ranked Outputs. In Proceedings of the 29th International Conference on Scientific and Statistical Database Management (Chicago, IL, USA) (SSDBM ’17). Association for Computing Machinery, New York, NY, USA, Article 22, 6 pages. https://doi.org/10.1145/3085504.3085526

- Zehlike et al. (2017) Meike Zehlike, Francesco Bonchi, Carlos Castillo, Sara Hajian, Mohamed Megahed, and Ricardo Baeza-Yates. 2017. Fa* ir: A fair top-k ranking algorithm. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management. 1569–1578.

- Zehlike et al. (2021) Meike Zehlike, Ke Yang, and Julia Stoyanovich. 2021. Fairness in ranking: A survey. arXiv preprint arXiv:2103.14000 (2021).

- Zhu and Lerman (2016) Linhong Zhu and Kristina Lerman. 2016. Attention inequality in social media. arXiv preprint arXiv:1601.07200 (2016).

Appendix A Impossibility of Equal Exposure

In this section, we show theoretically why it is impossible to achieve equality of exposure among all producers in general.

A.1. Notation and Model

Suppose there are consumers receiving recommendations of producers . Each consumer has a candidate set of producers such that some of these candidates are recommended to the consumer in a ranked list. We assume each producer can show up only once in these sets. We will also make the simplifying assumption that for all . Let be the set of consumers such that there exists where producer . Let be the set of producers that consumer impresses on. Let be the exposure the producer receives measured in number of impressions, where an impression means a consumer saw being recommended to them.

A.1.1. Model for How Consumers Impress on Recommendations: Subset Selection

Analyzing how much exposure producers receive requires a model for how consumers impress on recommendations. For each consumer, we pick candidates to recommend and assume each consumer impresses on all candidates. The ordering of the candidates is irrelevant since all are impressed on. This model is reasonable when is small. For example, if a small module recommended three new accounts to follow on a social media platform, it is likely that a user would see all three recommendations.

Proposition 1.

In general, it is impossible to rank the candidates so that for all .

Proof.

Let be 1 if for consumer , producer is selected as one of the selected producers and 0 otherwise. Clearly under the -subset selection recommendation model, a valid ranking requires

for all Therefore, we have linear equations with variables.

However, in order to satisfy for all , we now must satisfy an additional linear equations of the same variables since can be written as

Altogether, we must satisfy linear equations, in variables. Clearly since there cannot be more candidates than there are producers to recommend. It is also reasonable to assume since every consumer can be a producer and vice versa. Therefore, there are more than linear equations and less than variables. This system of linear equations cannot be satisfied in general. ∎

Intuitively, it is clear that in general the exposure each producer receives cannot be equal. For example, if one candidate set contains producers that do not appear in any other candidate set, then clearly one producer will necessarily get 0 exposure. Furthermore, if a producer shows up in exactly one candidate set, they can only receive one impression at most.

Appendix B Hyperparameter Choices in Experiments

For the synthetic experiments, for both PL-ICFW (our method) and Scaled Plackett-Luce, we vary in our model and the single hyperparameter for PL in and the numbers starting at .05 to 1 in increments of .05. We set to be either or

For the German experiments, for both PL-ICFW (our method) and Scaled Plackett-Luce, we vary in our model and the single hyperparameter for PL in as well as the numbers starting at .05 to 1 in increments of .05 and 1 to 7 in increments of . For PG-Rank, we vary their single hyperparameter in . We set to be either or