Rapid heuristic projection on simplicial cones ††thanks: 1991 A M S Subject Classification. Primary 90C33; Secondary 15A48, Key words and phrases. Metric projection on simplicial cones

Abstract

A very fast heuristic iterative method of projection on simplicial cones is presented. It consists in solving two linear systems at each step of the iteration. The extensive experiments indicate that the method furnishes the exact solution in more then 99.7 percent of the cases. The average number of steps is 5.67 (we have not found any examples which required more than 13 steps) and the relative number of steps with respect to the dimension decreases dramatically. Roughly speaking, for high enough dimensions the absolute number of steps is independent of the dimension.

1 Introduction

Projection on polyhedral cones is one of the important problems applied optimization is confronted with. Many applied numerical optimization methods uses projection on polyhedral cones as the main tool.

In most of them, projection is part of an iterative process which involve its repeated application (see e. g. problems of image reconstruction [1], nonlinear complementarity [4, 9], etc.). Hence, it is important to get fast projection algorithms.

The main streems of the current methods in use rely on the classical von Neumann algorithm (see e.g. the Dykstra algorithm [3, 2, 10]), but they are rather expensive for a numerical handling (see the numerical results in [7] and the remark preceding section 6.3 in [5]).

Finite methods of projections are of combinatorial nature which reduces their applicability to low dimensional ambient spaces.

Recently we have given a simple projection method exposed in note [8] for projecting on so called isotone projection cones. Isotone projection cones are special simplicial cones, and due to their good properties we can project on them in steps, where is the dimension of the ambient space. In the first part of that note we have explained our approach by considering the problem of projection on simplicial cones by giving an exact method based on duality. This method has combinatorial character and therefore it is inefficient. More recently we observed that a heuristic method based on the same ideas gives surprisingly good results. This note describes the theoretical foundation of the heuristic method and draws conclusions based on millions of numerical experiments.

Projection on polyhedral cones is a problem of high impact on scientific community.111see the popularity of the Wikimization page Projection on Polyhedral Cone at http://www.convexoptimization.com/wikimization/index.php/Special:Popularpages.

2 The simplicial cone and its polar

Let be an -dimensional Euclidean space endowed with a Cartesian reference system. We assume that each point of is a column vector.

We shall use the term cone in the sense of closed convex cone. That is, the nonempty closed subset in our terminology is a cone, if , and whenever .

Let and be elements in . Denote

the cone engendered by . Then,

| (1) |

where is the matrix with columns and is the non-negative orthant in .

Suppose that are linearly independent elements. Then, the cone

| (2) |

with the matrix from (1) for , is called simplicial cone. Denote .

The polar of is the set

| (3) |

is called the dual of . is called subdual, if . This is equivalent to the condition

Lemma 1

The polar of the simplicial cone (2) can be represented in the form

| (4) |

where is a solution of the system

( is normal to the hyperplane in the opposite direction to the halfspace that contains ). Thus,

with and

| (5) |

For simplicity we shall call the polar matrix of . The columns of are : .

Proof. Let and for any non-negative real numbers and . The inner produce of and is non-positive because

But is an arbitrary element of the right hand side of (4) and is an arbitrary element of , thus we can conclude that the righ hand side of (4) is a subset of .

The vectors are linearly independent. (This can be verified by assuming the contrary, and by multiplying the subsequent relation by to get a contradiction.) Hence for we have the representation

By (3),

so which

prove that is an element of the right hand side of (4).

Thus we can conclude that is a subset of the right hand side of

(4).

Corollary 1

For each subset of indices in , the vectors (where the complement of with respect to ) are linearly independent.

Proof. Assume that

| (6) |

for some reals and By the mutual orthogonality of the vectors and it follows, by multiplication of the relation (6) with and respectively with , that

and

Hence, must hold.

The cone is called a face of if from and it follows that The face is called a proper face of , if

Lemma 2

If is the cone (2) and is the cone (4), then for every subset of indices the set

| (7) |

(with if ) is a face of . If whenever , then is for a nonempty set in of dimension . (In the sense that spans a subspace of of dimension .)

Every face of is equal to for some If then is a proper face.

Proof.

The relation in (7) follows from the definition of the vectors in Lemma 1, while the assertion on the dimension of is obvious.

Suppose that and with .

Then , and , because . Thus , hence showing that is a face.

Suppose that for an arbitrary proper face of . Since , by the definition of the vectors for

If , then there exists a positive scalar with . Hence, and thus . But then and since is a cone, . This means that , that is, cannot be a proper face.

We have to show that has a representation like (7). By the above reasoning, for each there exist some index with with

If we have the representation (7) with

If , take in the relative interior of and let be the complement in of the maximal set of indices with ( must be a nonempty, proper subset of since )

Take arbitrarily. By the definition of , for some sufficiently small . Hence,

| (8) |

By we also have If for some , then (8) would imply

which is a contradiction. Hence, we must have ; and accordingly

| (9) |

Suppose that and . From definition we have for each , whereby for a sufficiently large ,

Hence, is in the polar of , which by Farkas’ lemma is (This follows in fact, in our case, also by the symmetry of the vectors and in the formulae of Lemma 1.) Thus , whereby Since is a face of , we have and since it is also a cone, This proves the converse of the inclusion in (9) and completes the proof.

Thus a maximal proper face of is of the form

hence it is also called the face of orthogonal to . Similarly, we have a maximal proper face of orthogonal to some .

An equivalent result to the one presented in Lemma 2 is given by the Cone Table 1 on page 179 of [1].

Thus a maximal proper face of is of the form

hence it is also called the face of orthogonal to . Similarly, we have a maximal proper face of orthogonal to some .

Let and Then, from the above results it follows that

and

The faces and of the above form are called a pair of orthogonal faces where is called the orthogonal face of and is called the orthogonal face of .

3 Finite method of projection on a simplicial cone

For an arbitrary denote . Let be an arbitrary cone and its polar, and an arbitrary closed convex set. Recall that the projection mapping on is well defined by and

Then, Moreau’s decomposition theorem asserts:

Theorem 1

(Moreau, [6]) For the following statements are equivalent:

-

(i)

and

-

(ii)

and .

Suppose now, that is a simplicial cone in . We shall use the representation (2) for and the representation (4) for . Hence,

where the Kronecker symbol. As a direct implication of Moreau’s decomposition theorem and the constructions in the preceding section we have:

Theorem 2

Let . For each subset of indices , can be represented in the form

| (10) |

with the complement of with respect to , and with and real numbers. Among the subsets of indices, there exists exactly one (the cases and are not excluded) with the property that for the coefficients in (10) one has and For this representation it holds that

| (11) |

and

| (12) |

Proof. The first assertion is the consequence of Corollary 1.

The projections and as elements of and , respectively can be represented as

| (13) |

and

| (14) |

To prove existence, let and let be the complement of in the set of indices. For an arbitrary element , denote . If would hold in (14), for some , then by Lemma 1 it would follow that which contradicts the theorem of Moreau. Hence, (13) can be written in the form (11) and (14) can be written in the form (12). Therefore, Theorem 1 implies

where , and , .

To prove uniqueness, suppose that in the representation (10) of we have for and for , where is a subset of , and is the complement of in (the cases and are not excluded). Then representations (11) and (12) follow from Theorem 1 by using the mutual orthogonality of the vectors and . From (11) and the uniqueness of the projection it follows that is unique.

From this theorem it follows that a given simplicial cone determines a partition of the space in cones in the sense that

and for two different sets of indices the respective cones do not contain common interior points. The cones in the above union are exactly the sums of orthogonal faces.

This theorem suggests the following algorithm for finding the projection :

Step 1. For the subset we solve the following linear system in

| (15) |

Step 2. Then, we select from the family of all subsets in the subfamily of subsets for which the system possesses non-negative solutions.

Step 3. For each we solve the linear system in

| (16) |

By Theorem 2 among these systems there exists exactly one with non-negative solutions. By this theorem, for corresponding and for the solution of the system (15), we must have

This algorithm requires that we solve linear systems of at most equations in Step 1 (15) and another systems in Step 2 (16). (Observe that all these systems are given by Gram matrices, hence they have unique solutions.) Perhaps this great number of systems can be substantially reduced, but it still remains considerable.

Remark 1

If is subdual; that is, if , the above algorithm can be reduced as follows: By supposing that we have got the representation (10) of with non-negative coefficients, we multiply both sides of (10) by an arbitrary . If then cannot be in , otherwise the relations and would furnish a contradiction. Thus, we have to look for the set of indices (for which we have to solve the system (15)) among the subfamilies of (Arguments like this can be used, as it was done e. g. in [7] for the Dykstra algorithm, to eliminate some hyperplanes while comkputing successive approximations of the solution.)

Obviously, the proposed method is inefficient. It was presented by A. B. Németh and S. Z. Németh in [8] as a preparatory matherial for an efficient algorithm for so called isotone projection cones only. For isotone projection cones we can obtain the projection of a point in at most steps, where is the dimension of the space. Isotone projection cones are special simplicial cones. Even if there are important isotone projection cones in applications, they are rather particular in the family of simplicial cones.

4 Heuristic method for projection onto a simplicial cone

Regardless the inconveniences of the above presented exact method, which follow from its combinatorial character, it suggests an interesting heuristic algorithm. To explain its intuitive background we consider again the simplicial cone

and its polar

given by Lemma 1.

Take an arbitrary . We are seeking the projection

If , then , hence

If , then , and hence .

We can assume that . Hence, projects in a proper face of and in a proper face of

Take an arbitrary family of indices. Then, the vectors

entgender by Corollary 1 a reference system in . Then,

| (17) |

with some (As far as the family of indices is given, we can determine the coefficients and , according to Theorem 2, by solving the systems (15) and (16).)

In this case is projected onto face ortogonally along the subspace engendered by the elements , roughly speaking, along the orthogonal face of .

Suppose that for some . Then, considering the reference system entgendered by , lies in its orthant with negative coordinate, that is in the direction of the vector . By construction, and form an obtuse angle. Hence the angle of and is an acute one. Thus there is a real chance that in a new reference system in which replaces , the coordinate of with respect to has the same sign as its coordinate with respect to , that is positive (or at least non-negative).

If we have for some , then by similar reasoning it seems to be advantageous to replace with , and so on.

Thus, we arrive to the following step in our algorithm:

Substitute with if and substitute with if and solve the systems (15) and (16) for the new configuration of indices . We shall call this step an iteration of the heuristic algorithm.

Then, repeat the procedure for the new configuration of and so on, until we obtain a representation (17) of with all the coefficients non-negative.

5 Experimental results

The heuristic algorithm was programmed in Scilab, an open source platform for numerical computation.222http://www.scilab.org/ Experiments were performed on numerical examples for dimensional cones. The algorithm was performed on random examples for each of the problem sizes . Statistical analysis on a subset of examples from the set of examples for size indicates no significant difference in overall results and performance, therefore we subsequenlty reduced the number of experiments on larger problem sizes. random examples were used for sizes and and examples for size , as the time needed by the algorithm increases with size.

| Size | Changes | Iterations | Iterations with | Loops |

|---|---|---|---|---|

| increases [%] | [%] | |||

Table 1 shows the experimental results. For each problem size, the averages of all runs are shown, together with a confidence interval at confidence level where appropriate.333Any difference less than for integers and for percentages, respectively, is not shown, as deemed irrelevant for the analysis. The Changes column indicates the total number of swaps for and for , respectively, before reaching the solution. The Iterations column indicates the number of iterations (as defined in Section 4) the algorithm performed before reaching a solution. The Iterations with increases column shows the percentage of iterations where the number of changes increased from the previous iteration. We noticed that in the majority of iterations the number of changes decreased, which led to the quick convergence of the algorithm in the vast majority of cases. In all examples the starting point for the search was the base. The final column shows the percentage of problems where the algorithm was aborted due to going in a loop by allocating in some iteration a set of s and s that were encountered in a previous iteration. The percentage of loops was exponentially decreasing as the size increased and we did not observe any loops in any experiments on problem sizes of or above. Overall, loops were observed in of the experiments, so the heuristic algorithm was successful of the time. A solution that we see for solving the problems that lead to a loop is to restart the algorithm from a different initial set of s and s. The problems ending in a loop were excluded from the detailed analysis that follows.

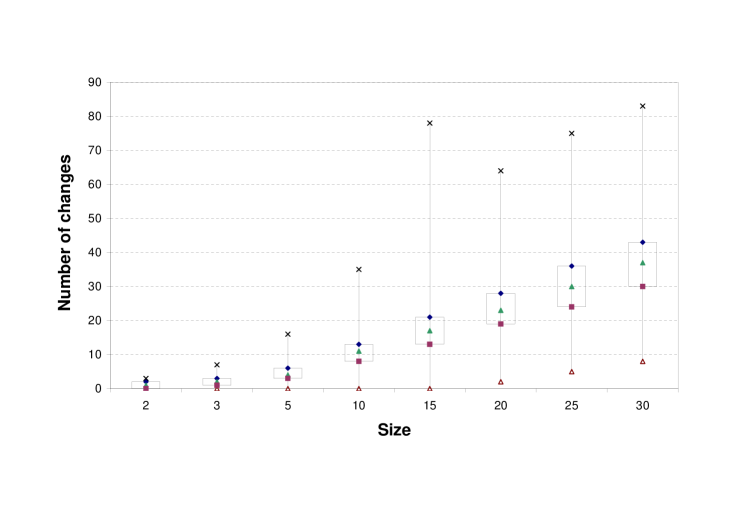

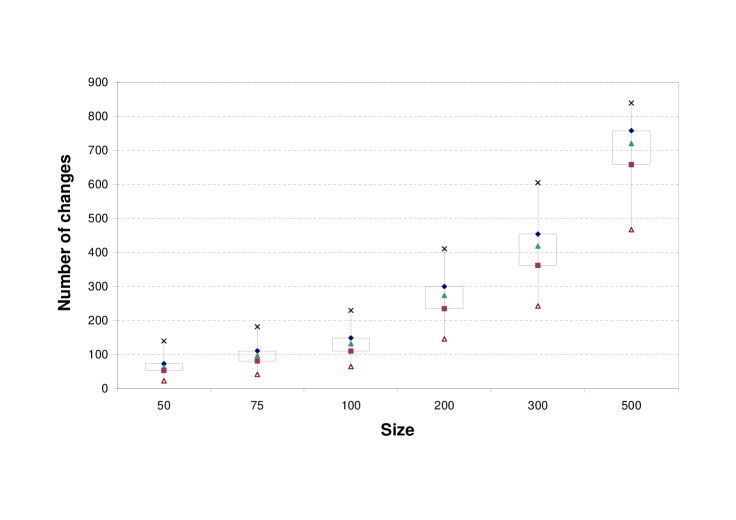

More detailed analysis of the three main performance indicators of changes, iterations and iterations with increases was performed using boxplots as shown in Figures 1, 2 and 3. Although the total number of changes performed increases linearly with problem size (at a rate of less than , even if considering maximum numer of changes), this does not affect the performance substantially (see Figure 1, where the results were split into two parts for a clearer view).

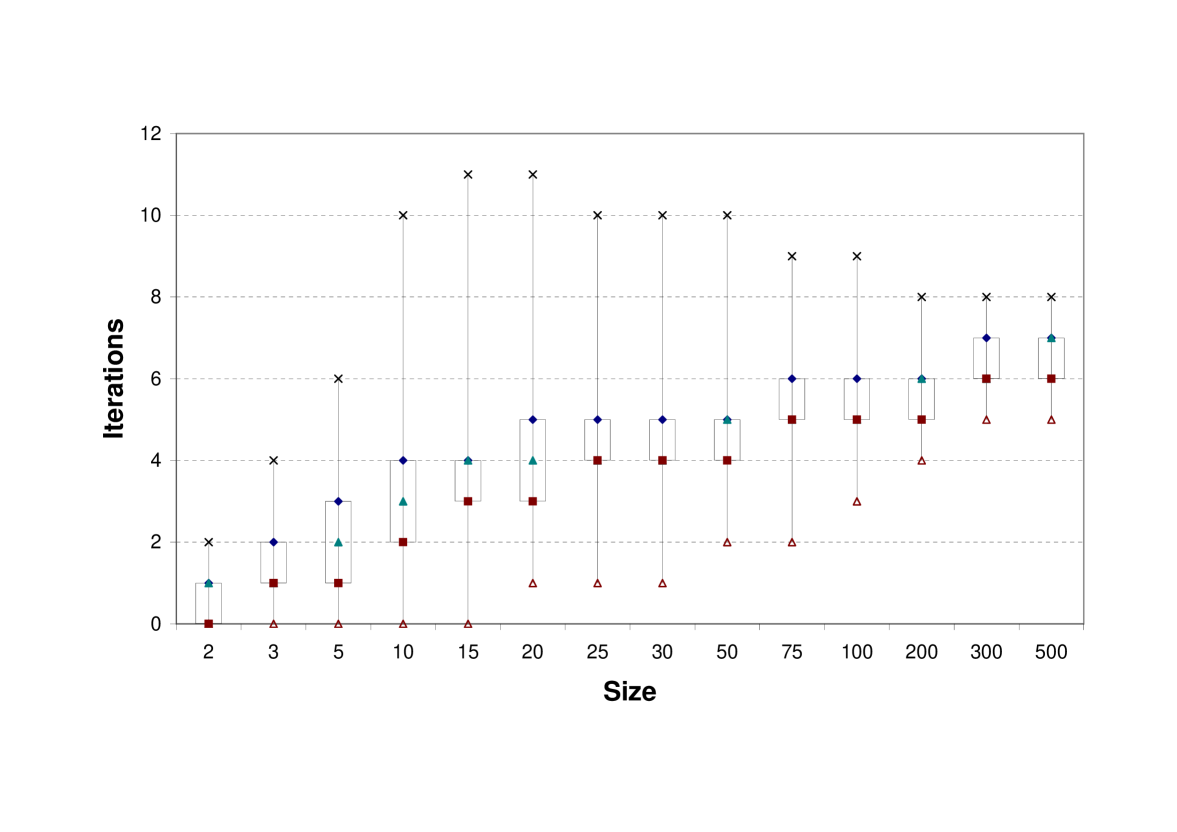

The number of iterations is the crucial indicator of performance. As shown in Figure 2 the number of iterations reaches at most (for sizes and ), but in of cases has a value of or below. We ran a few experiments on larger sizes, up to , and the largest number of iterations we obeserved was . Running experiments on very large problem sizes is problematic due to computer memory limitations and the Scilab built-in solving of linear systems.444 Note that the time needed by one iteration substantially increases with problem size as one iteration involves solving a linear system with equations and variables. The major benefit of this heuristic algorithm is the small number of iterations even for very large number of cone dimensions.

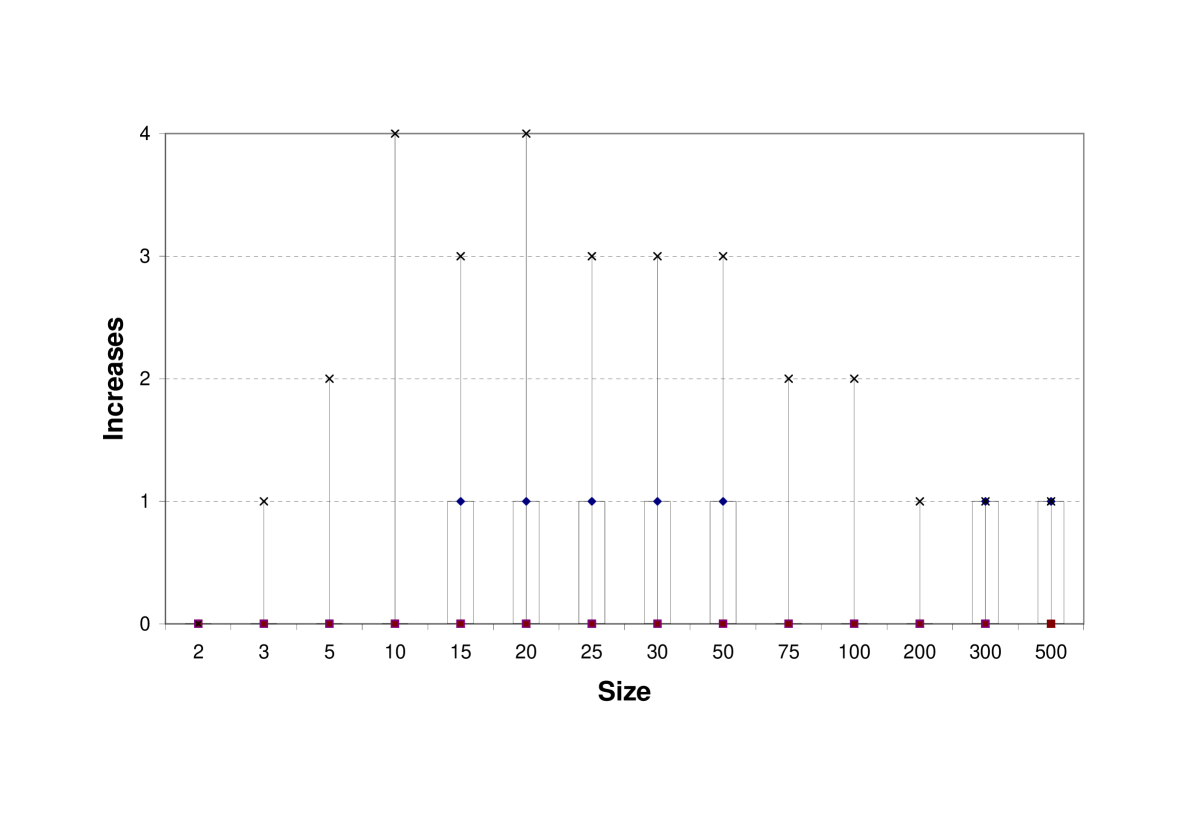

As our heuristic algorithm seems to converge quickly, we wanted to know how frequently it deviates from the optimal path. An optimal path would consist of iterations with decreasing number of changes. Figure 3 shows the boxplots for the number of iterations where an increase in the number of changes took place. The maximum number of such iterations over all experiments is , but of examples involved only one or no such iteration. This provides an explanation for the fast convergence: the algorithm very rarely deviates from the optimal path.

6 Conclusion

We presented a heuristic method of projection on simplicial cones based on Moreau’s decomposition theorem. The heuristic algorithm presented in this note iteratively finds the projection onto a simplicial cone in a surprisingly small number of steps even for large cone dimensions in of the cases. We attribute the success to the fact that the algorithm rarely deviates from the optimal path, in every iteration it usually has to change less base values than in the previous iteration. We are planning to further extend the algorithm with random restart hoping to achieve success rate.

Acknowledgements

S. Z. Németh was supported by the Hungarian Research Grant OTKA 60480. The authors are grateful to J. Dattorro for many helpful conversations.

References

- [1] J. Dattorro. Convex Optimization Euclidean Distance Geometry. , 2005, v2009.04.11.

- [2] F. Deutsch and H. Hundal. The rate of convergence of Dykstra’s cyclic projections algorithm: the polyhedral case. Numer. Funct. Anal. Optim., 15(5-6):537–565, 1994.

- [3] R. L. Dykstra. An algorithm for restricted least squares regression. J. Amer. Stat. Assoc., 78(384):273–242, 1983.

- [4] G. Isac and A. B. Németh. Projection methods, isotone projection cones, and the complementarity problem. J. Math. Anal. Appl., 153(1):258–275, 1990.

- [5] T. Ming, T. Guo-Liang, F. Hong-Bin, and Ng. Kai Wang. A fast EM algorithm for quadratic optimization subject to convex constraints. Statist. Sinica, 17(3):945–964, 2007.

- [6] J. J. Moreau. Décomposition orthogonale d’un espace hilbertien selon deux cônes mutuellement polaires. C. R. Acad. Sci., 255:238–240, 1962.

- [7] P. M. Morillas. Dykstra’s algorithm with strategies for projecting onto certain polyhedral cones. Applied Mathematics and Computation, 167(1):635–649, 2005.

- [8] A. B. Németh and S. Z. Németh. How to project onto an isotone projection cone. Linear Algebra Appl., submitted.

- [9] S. Z. Németh. Iterative methods for nonlinear complementarity problems on isotone projection cones. J. Math. Anal. Appl., 350(1):340–347, 2009.

- [10] X. Shusheng. Estimation of the convergence rate of Dykstra’s cyclic projections algorithm in polyhedral case. Acta Math. Appl. Sinica (English Ser.), 16(2):217–220, 2000.