ruledlabelfont=normalfont,labelsep=colon,strut=off

RARE: Robust Masked Graph Autoencoder

Abstract

Masked graph autoencoder (MGAE) has emerged as a promising self-supervised graph pre-training (SGP) paradigm due to its simplicity and effectiveness. However, existing efforts perform the mask-then-reconstruct operation in the raw data space as is done in computer vision (CV) and natural language processing (NLP) areas, while neglecting the important non-Euclidean property of graph data. As a result, the highly unstable local connection structures largely increase the uncertainty in inferring masked data and decrease the reliability of the exploited self-supervision signals, leading to inferior representations for downstream evaluations. To address this issue, we propose a novel SGP method termed Robust mAsked gRaph autoEncoder (RARE) to improve the certainty in inferring masked data and the reliability of the self-supervision mechanism by further masking and reconstructing node samples in the high-order latent feature space. Through both theoretical and empirical analyses, we have discovered that performing a joint mask-then-reconstruct strategy in both latent feature and raw data spaces could yield improved stability and performance. To this end, we elaborately design a masked latent feature completion scheme, which predicts latent features of masked nodes under the guidance of high-order sample correlations that are hard to be observed from the raw data perspective. Specifically, we first adopt a latent feature predictor to predict the masked latent features from the visible ones. Next, we encode the raw data of masked samples with a momentum graph encoder and subsequently employ the resulting representations to improve predicted results through latent feature matching. Extensive experiments on seventeen datasets have demonstrated the effectiveness and robustness of RARE against state-of-the-art (SOTA) competitors across three downstream tasks.

Index Terms:

incomplete multi-view learning, classification, masked graph autoencoder, robustness.I Introduction

Masked autoencoders (MAEs) have emerged as the dominant technique for self-supervised vision and language pre-training. The objective of MAEs is to learn generalized sample representations from massive unlabeled data by recovering partially masked content (e.g., image patches or word embeddings) from observations. Due to their simplicity and powerful local structure modeling capabilities, advanced efforts in this field [1, 2, 3] have garnered significant interest among researchers. These methods have demonstrated impressive performance across a wide range of real-world applications, including medical image analysis [4], natural language understanding [5], and 3D object detection [6].

Recent studies have shown that applying MAEs to facilitate graph-based learning has become a topic of increasing interest. The success of masked graph autoencoders (MGAEs) lies in the mask-then-reconstruct operation. In this setting, a portion of visible nodes or edges are randomly masked and then adopted as self-supervision signals to guide the model learning, so as to allow the network to explore the underlying structural information for downstream evaluations [7, 8, 9, 10, 11, 12, 13]. Despite their promising performance on various graph-oriented tasks [14, 15, 16], existing MGAEs overlook the inherent distinction between images (or texts) and graphs, i.e., images (or texts) are Euclidean while graphs are non-Euclidean. In other words, the nearby structure of an image patch or a sub-sentence has higher semantic certainty and is more stable than that of a sub-graph. Therefore, the quality of the self-supervision guidance provided by a masked image patch or a masked word embedding would be much higher than that provided by a masked node (or edge). Specifically, as shown in Fig. 1(a), since the relative spatial distribution of organs on a dog is quite certain, people can easily imagine the masked image patches based on the observed content within an incomplete dog photo. Similarly, in Fig. 1(b), the strong context correlation among words helps us involuntarily fill an incomplete sentence with meaningful words. Comparatively, in a social network where nodes are entities and edges are interactions, the neighborhood structure of an entity within a graph varies a lot, as shown in Fig. 1(c). When a node or an edge is entirely masked, it is hard to identify the removed entity or ascertain whether two entities keep in contact directly, since it is common for two unconnected entities to have conjoint neighbors or valuable higher-order relationships that are hard to be observed from the raw data perspective in a real-world social graph. Consequently, although in most cases, the mask-then-reconstruct principle is effective for learning valuable node representations, directly recovering the masked nodes and edges driven by the low-level raw data would put the corresponding model at risk of being confused by the local structural ambiguity. Based on these observations, we argue that the non-Euclidean property of graph data could to some extent trigger uncertainty in inferring masked data and may negatively affect the reliability of the self-supervision mechanism. In this circumstance, the robustness of the model may be compromised when applying a masked autoencoder to process graphs straightforwardly.

To address the above issue, we propose a novel method termed Robust mAsked gRaph autoEncoder (RARE) for self-supervised graph pre-training. The main idea of RARE is to integrate implicit and explicit self-supervision mechanisms for masked content recovery by performing a joint mask-then-reconstruct strategy in both latent feature and raw data spaces. The effectiveness of our method lies in the fact that unlike the model optimization driven by the low-level raw data only [8, 9], the self-supervision mechanism of RARE could be further enhanced by incorporating more informative high-order sample correlations that are hard to be observed from the raw data perspective [17, 18, 19]. To this end, we design a masked latent feature completion scheme that includes two steps. Specifically, we first adopt a latent feature predictor to assist the graph encoder in extracting more compressed features by predicting the latent features of masked samples based on observations. To further enhance the integrity and accuracy of predicted representations, we encode the raw data of masked samples using a momentum graph encoder and leverage the resultant representations to guide the latent feature prediction through information matching. With such persistent and informative signals as self-supervision guidance, each masked sample in the latent space is encouraged to explore reliable information from available features. As the implicit self-supervision signals become more accurate and the masked content becomes more reliable, the model is enforced to promote greater information encoding capability, thereby generating more robust and generalized node representations for downstream evaluations. The main contributions of this work are summarized as below:

-

•

We propose a novel SGP framework termed RARE to enhance the robustness of masked graph autoencoders. It not only eases the instability of the self-supervision mechanism driven by the non-Euclidean raw graph data, but also achieves a good accuracy-efficiency trade-off.

-

•

We incorporate a simple but effective masked latent feature completion scheme into a masked graph autoencoder. This design can enhance the certainty in inferring masked data and the reliability of the self-supervision mechanism by exploiting more informative high-order sample correlations to drive the model training.

-

•

Extensive experiments on seventeen datasets across three downstream tasks demonstrate the effectiveness and robustness of RARE over competitors. Moreover, a series of elaborate ablation studies also verify that RARE can indeed unleash the full potential of MAEs to provide a comprehensive understanding of graphs.

The remainder of this paper is organized as follows. In Section II, we review related work in areas of self-supervised graph pre-training, masked graph autoencoders, and self-distillation on graphs. Section III presents defined notations, the proposed network designs, loss functions, and discussions. In Section IV, we conduct experiments and analyze the results. Section V draws a final conclusion.

II Related Work

II-A Self-supervised Graph Pre-training

Self-supervised graph pre-training (SGP), whose goal is to learn representations from supervised signals derived from the graph data itself, has made significant progress recently. With the powerful learning capability of graph neural networks (GNNs) [20, 21, 22, 23], advanced studies in this field have recently achieved great success in anomaly detection [24], feature selection [25], multi-view clustering [26, 27], etc. One of the most representative self-supervised learning paradigms is contrastive SGP, where discriminative features are learned by pulling together the representations of semantically similar samples while pushing away the ones of unrelated samples [28, 29, 30, 31, 32, 33, 34, 35]. However, the impressive performance of these methods heavily relies on careful data augmentations, large amounts of negative samples, or relatively complicated optimization strategies, which usually causes time- and resource-consuming issues. Comparatively, generative SGP methods [36, 37, 38, 39, 9, 40] could naturally avoid the low-efficiency problem, as their optimization target is to reconstruct the input (or masked) information directly. In particular, masked graph autoencoders (MGAEs) [9], which aim to predict the masked content from visible one, have significantly advanced classical graph autoencoders and shown their potential to achieve better performance against contrastive learning-based competitors.

II-B Masked Graph Autoencoders

Masked signal modeling (MSM), which models masked signals locally to facilitate the extraction of significant features, has recently gained popularity in self-supervised vision and language applications, such as natural language understanding [5] and medical image analysis [4]. Inspired by the successes of existing masked autoencoders (MAEs) [41, 1, 3, 2], researchers pose a natural question regarding the potential of utilizing MAEs to handle large amounts of unlabelled graph data. To this end, MGAE [7] first applies an undirected edge-masking strategy to the original graph structure, and then utilizes a tailored cross-correlation decoder to predict the masked edges via a standard graph-based loss function. Similarly, MaskGAE [8] incorporates random corruption into the graph structure from both edge-wise level and path-wise level, and then utilizes edge-reconstruction and node-regression loss functions to match the predicted information with the original data. Another important research line in this field is node-masking-based MGAEs [42, 9, 10]. For example, GMAE [42] utilizes a graph transformer-based backbone [43] to pre-train a masked autoencoder, and applies a cross-entropy loss to compare the reconstructed attributes with their ground truths. Similarly, GraphMAE [9] reconsiders the reconstruction loss functions of previous graph autoencoders and proposes an improved scaled cosine error to boost the masked attribute recovery. More recently, some studies first randomly mask a portion of node attributes and connections of given graphs simultaneously, and then learn to predict the removed content from available information via two specific reconstruction objectives [12, 13]. However, the aforementioned methods usually learn representations by directly minimizing a reconstruction loss in the raw data space, which may mislead the model into a local structural ambiguity situation caused by the non-Euclidean property of graphs. In contrast, RARE can effectively enhance the certainty in inferring masked data and the reliability of the self-supervision mechanism by reconciling the reconstructions of masked raw attributes and latent features, unleashing the potential of MAEs for graph analysis while mitigating the aforementioned negative effects.

II-C Self-distillation on Graphs

Self-distillation has emerged as a powerful technique for self-supervised learning, as evidenced by its successful applications in various domains[44, 45, 46, 47, 48]. This technique involves using the outputs of a target network as pseudo labels to guide the representation learning of an online network, which encourages the feature extractor to learn more generalized features. Self-distillation has also been widely developed and employed in multiple graph machine learning tasks, including graph structure learning [48] and augmentation-free node clustering [49]. While previous studies have focused on complete graphs, it is crucial to explore the effectiveness of self-distillation in boosting MGAEs in scenarios where the graph data is incomplete. This motivates us to investigate the feasibility of enhancing the robustness of MGAEs with the aid of self-distillation learning, as well as uncovering the key factors that contribute to the success of RARE.

III Method

| Notation | Description |

|---|---|

| Original graph | |

| , | Masked graphs |

| Edge set | |

| , , | All/visible/masked node set |

| , | Token-masked node set of the visible/masked part |

| Token-masked representation set of the masked part | |

| , , | The number of all/visible/masked nodes |

| The number of data categories | |

| , | Node attribute/representation dimension |

| Mask ratio | |

| Random binary mask vector | |

| Attribute vector of -th visible node | |

| Attribute vector of -th masked node | |

| Reconstructed attribute vector of -th masked node | |

| Predicted representation vector of -th masked node | |

| Representation vector of -th masked node | |

| Token-masked attribute vector of -th visible node | |

| Token-masked attribute vector of -th masked node | |

| Token-masked representation vector of -th masked node | |

| Normalized adjacency matrix | |

| Raw attribute matrix of all nodes | |

| Raw attribute matrix of visible nodes | |

| Raw attribute matrix of masked nodes | |

| Reconstructed raw attribute matrix of masked nodes | |

| Token-masked attribute matrix of visible nodes | |

| Token-masked attribute matrix of masked nodes | |

| Token-masked representation matrix of masked nodes | |

| Representation matrix of visible nodes | |

| Representation matrix of masked nodes | |

| Recomposed representation matrix | |

| Predicted representation matrix of masked nodes | |

| , , | Union symbol/intersection symbol/empty set |

| Inner product |

III-A Task Definition and Overall Framework

III-A1 Task Definition

In this study, we mainly focus on the task of self-supervised masked graph pre-training for unlabeled graphs. Our model is designed to learn two graph encoding functions (i.e., and ), along with a hidden predicting function (i.e., ) to recover masked latent features from observations. Subsequently, a decoding function (i.e., ) is employed to reconstruct the raw attributes of masked samples based on the predicted representations. The learned graph embedding can be saved and utilized for various downstream tasks, such as node classification and graph classification.

III-A2 Overall Framework

As shown in Fig. 2, the pre-training procedure of RARE could be mainly grouped into three parts. The goal of the data masking part is to generate two complementary masked graphs by randomly masking some nodes with tokens under a mask ratio . The masked latent feature completion part is the core of RARE, which aims to enhance the reliability of the self-supervision mechanism by leveraging more informative high-order sample correlations to drive the model training. It consists of three components. Firstly, the graph encoder maps the visible nodes into node representations. Secondly, the latent feature predictor performs a latent feature prediction from visible nodes to masked ones. Thirdly, the momentum graph encoder receives the raw data of masked nodes as inputs and takes the resultant representations as implicit self-supervision signals for matching with the predicted representations. In the data decoding part, the decoder only maps the representations of masked nodes into the raw data space. Finally, and are integrated to minimize the loss error in both latent feature and raw data spaces. After pre-training, only the backbone of the graph encoder is adopted for downstream evaluations. The following subsections present the technical details of the corresponding components.

III-B Data Masking

Before pre-training RARE, we first generate two complementary masked graphs as inputs. These graphs are then fed into the graph encoder and the momentum graph encoder , respectively. To begin, we denote an undirected graph that contains nodes with categories. Here, and indicate the node set and the edge set, respectively. Generally, can be characterized by its normalized adjacency matrix and raw attribute matrix , where refers to the dimension of node attributes. To perform the data-masking operation on a given graph, we initially draw a random binary mask vector , where if is masked with a node token, and otherwise. The probability of drawing 0 is , which represents the mask ratio. Based on , we obtain the raw attribute matrix of visible nodes and the raw attribute matrix of masked nodes :

| (1) |

Accordingly, the nodes in are randomly divided into two sets, i.e., the visible node set and the masked node set , where = , = . and denotes the numbers of visible nodes and masked nodes, respectively, where = + . Similarly, we define token-masked node sets of the visible part and the masked part as and , respectively, where (or ) refers to a stochastic learnable vector. With these mathematical formulations, two complementary masked graphs for pre-training can be denoted as and , respectively. All frequently used notations are listed in Table I.

III-C Masked Latent Feature Completion

As discussed in the previous section, although the masked auto-encoder has proven effective and efficient, applying it to process non-Euclidean graphs directly may not always provide the required expressive capability for feature extraction. To address this issue, we propose a simple yet effective Masked Latent Feature Completion (MLFC) scheme. It facilitates model learning by incorporating more informative high-order sample correlations that are hard to be observed from the raw data perspective, leading to enhanced certainty in inferring masked content and a more reliable self-supervision mechanism for greater information encoding capability. The learning process of MLFC includes the following four main steps.

III-C1 Graph Encoding

The graph encoder is responsible for transforming the masked graph into a low-dimension latent space. To achieve this, we employ a graph neural network (GNN)-based architecture that consists of a sequence of graph attention layers [22] or graph isomorphism layers [23] as the encoder backbone. Inspired by BYOL [46], we incorporate a multilayer perception (MLP) layer as a projector following the backbone to form the graph encoder. This encoder generates the visible node representation matrix , where represents the latent dimension.

III-C2 Latent Feature Predicting

Following the graph encoder , an autoencoder-style latent feature predictor is elaborately designed, which consists of two parts, i.e., a graph attention (or graph isomorphism) layer that recovers the masked content from observations and an MLP layer that predicts the latent features of masked nodes based on recovered information. Specifically, we utilize a Concat function to integrate and a token-masked representation matrix , where denotes a -dimensional stochastic learnable vector. It is worth noting that the information concatenation used here to construct is not the classic channel-wise or row-wise concatenation. Instead, we fill the visible part with and the masked part with to create the recomposed representation matrix . Finally, we apply to process and obtain a predicted representation matrix of masked nodes .

III-C3 Momentum Graph Encoding

Since we have obtained the predicted representations of masked nodes, a natural question arises: how to provide effective supervision to guide the masked latent feature completion in unsupervised scenarios? Our answer is to acquire the self-supervision signals from the data itself via self-distillation learning. To this end, we introduce a momentum graph encoder that has the same architecture as the graph encoder. This encoder is responsible for encoding the raw data of masked samples and utilizing their complete representations to provide the predicted ones with stable optimization guidance. Concretely, we take the masked graph as an input and feed it into . The resultant representation matrix preserves high-order sample correlations and serves as implicit self-supervision signals to refine . It is worth noting that is detached from the gradient back-propagation and its parameters are updated by exponential moving average (EMA) [46]. The parameters of and are denoted as and , respectively, and the parameters of are updated by:

| (2) |

where denotes the momentum factor that has been determined empirically and fixed as 0.1. Since both graph encoders involve training on multiple subsets of a common graph, the EMA can provide with a smooth estimate of the underlying graph data distribution from , thus promoting to encode reliable information.

III-C4 Latent Feature Matching

This operation acts as an implicit form of self-supervision for recovering masked content at the feature level. Rather than aiming to maintain similarity between the reconstructed nodes (or edges) and the raw information of the original graphs, our method focuses on ensuring that the predicted representations precisely match with the underlying structural statistics calculated by the momentum graph encoder. To this end, we approximate to by minimizing the following formulation:

| (3) |

where and indicate the representation vectors of -th masked node within and , respectively. According to Eq. (3), we recover masked information within the latent space by constraining the predicted representations to match with the ones that preserve more informative underlying structural information of graphs. By taking these implicit self-supervision signals as model learning guidance, we could ensure the integrity and accuracy of predicted representations, resulting in a higher quality of the learned graph embedding.

III-D Data Decoding

To complete the raw attributes of masked nodes, we employ a simple MLP layer as a decoder to map into the raw data space. Once the reconstructed attribute matrix of masked nodes has been processed by the decoder, we take as explicit self-supervision signals and reconstruct the raw data for masked nodes by minimizing the distance between and . Inspired by the SCE loss [9], we design an improved scaled cosine error to boost the stability of network training, formulated as:

| (4) |

where refers to an inner product operation. and denotes the attribute vectors of -th masked node within and , respectively. is a scaling factor and we empirically set in most cases.

III-E Loss Function and Complexity Analysis

III-E1 Loss Function

By integrating implicit and explicit self-supervision mechanisms in a united pre-training framework, the total loss of the proposed RARE can be formulated as a weighted combination of the latent feature matching loss and the raw attribute reconstruction loss :

| (5) |

where is a balanced coefficient. In the inference phase, the input graph with and is fed into RARE without any data-masking operations. The resultant graph embedding can be saved and used for downstream evaluations, such as graph classification and image recognition tasks. The detailed pre-training procedure of RARE is illustrated in Algorithm 1.

III-E2 Complexity Analysis

The time complexity of the proposed RARE could be discussed from the following two perspectives: the graph auto-encoder framework and the loss error computation. For two graph encoders, the complexities of and are , where , , , and are the numbers of nodes, edges, encoder layers, and attention heads, respectively. and are the dimension of input and output features, respectively. For the latent feature predictor, the complexity of is . For the decoder, the complexity of is . For the computation of loss error, the time complexities of and are , where and are the numbers of token-masked samples and the dimension of node attributes. Therefore, the overall time complexity of RARE for each training iteration is . We can observe that the complexity of RARE is linear with both the numbers of nodes and edges of the graph, making the proposed RARE theoretically efficient and scalable.

III-F Discussion

In this section, we aim to explain the reasons why the proposed MLFC scheme is effective and why RARE works better than existing MGAEs, respectively.

III-F1 Why The Proposed MLFC Scheme Is Effective

We start from a more intuitive masked signal modeling perspective to revisit MLFC. As aforementioned, we can obtain the latent features of visible and masked nodes from two complementary masked views (i.e., and ), respectively:

| (6) |

| (7) |

After that, outputs the predicted representations for masked nodes from visible ones , and then match with by Eq. (3), which can be rewritten as:

| (8) |

Based on Eq. (8), we observe that the MLFC scheme actually learns to pair two complementary views (i.e., and ) through a latent feature matching task. Inspired by the previous work [50, 51], we denote a bipartite graph to model the corresponding learning problem, where and denotes the sets of visible views and masked views, respectively. is represented as an adjacency matrix whose normalized version is . Here, both and are diagonal degree matrices. Consequently, we can derive an asymmetric instance alignment loss between and to lower bounded .

Theorem 1. We assume that any autoencoder-style architecture satisfies , where represents either visible content or masked content , therefore, the MLFC loss on the bipartite graph can be lower bounded by:

| (9) |

where denotes the output matrix of on whose -row is =, and denotes the output matrix of on whose -row is =. Note that both and are normalized.

According to Eq. (9), it is evident that the MLFC scheme aims to minimize by conducting an implicit similarity-based alignment between connected visible and masked samples in the latent space through an autoencoder-style predictor. Intuitively, as illustrated in Fig. 3(b), we consider a pair of second-order neighbor visible views (e.g., and ) that share a common complementary masked view (e.g., ). By enforcing , to recover simultaneously, the latent features of visible views are implicitly correlated through the MLFC scheme. In other words, the second-order neighbors act as positive sample pairs that are pulled closer as the instance alignment (i.e., positive samples should remain close in the latent space) in contrastive learning. From this perspective, the proposed MLFC scheme serves as a hidden regularization that helps encode discriminative features, thereby promoting greater information encoding capability for better downstream performance.

III-F2 Why RARE Works Better Than Existing MGAEs

To better understand the superiority of RARE compared to its competitors, we conduct a comparison of the self-supervision mechanism between the proposed RARE and state-of-the-art (SOTA) GraphMAE [9] via a toy example illustrated in Fig. 3. As previously mentioned, completing masked content within a masked graph can be regarded as a complementary view pairing problem between the visible and masked data. To solve this problem, GraphMAE [9] adopts an explicit self-supervision (i.e., Exp.-SS) mechanism by matching predicted nodes with raw ones directly, which may mislead the model into a local structural ambiguity situation. For example, as shown in Fig. 3(c), two masked samples (e.g., Node 4 and Node 5) belonging to different categories share a common visible sample (e.g., Node 1). By enforcing Node 1 to reconstruct Node 4 and Node 5, GraphMAE subconsciously reduces their distance in the latent space. Consequently, the model may struggle to differentiate Node 4 and Node 5 accurately in unsupervised scenarios. This is because the self-supervision signals provided by GraphMAE only preserve raw data information that is insufficient to ascertain whether two nodes belong to the same category or not. In contrast, RARE employs both implicit and explicit self-supervision mechanisms for masked content recovery by performing a joint mask-then-reconstruct strategy in both latent feature and raw data spaces. Particularly, in RARE, the MLFC scheme could provide more informative high-order sample correlations as implicit self-supervision signals, which are not readily available in the raw data space. As shown in Fig. 3(d), by incorporating prior knowledge that 1) Node 3 or Node 6 is closely related to Node 4 or Node 5; and 2) Node 3 and Node 6 are unconnected, it would become easier for the model to infer the relationship between Node 4 and Node 5. As a result, the two nodes are pushed apart from each other in the latent space. Empirical results in Section IV-G support our claim that the proposed RARE indeed works better than GraphMAE by making the learned samples belonging to different categories more distinguishable.

IV Experiments

In this section, we evaluate the effectiveness of RARE against advanced SGP methods. The experiments aim to answer six research questions: How does RARE perform compared to baselines in various downstream tasks? How does each component influence the model performance? How is the robustness of RARE against noisy graphs? How do key hyper-parameters influence the performance of RARE? How does the running time of RARE compare to other competitors? How do the learned node representations and reconstructed data of RARE compare to those of baselines?

In the following, we begin with a brief introduction to experimental setups, including benchmark datasets, implementation procedures, training settings, and baseline methods. Then we report experimental results with corresponding analysis.

IV-A Evaluation Setups

IV-A1 Benchmark Datasets

We conduct experiments to compare the proposed RARE with several baseline methods on seventeen datasets in total, including seven node classification datasets (i.e., Cora, Citeseer, Pubmed, WikiCS, Corafull, Flickr, and Yelp [52]), seven graph classification datasets (i.e., IMDB-B, IMDB-M, PROTEINS, COLLAB, MUTAG, PTC-MR, and NCI1 [53]), and three image datasets (i.e., Usps [54], Mnist [55], and Fashion-mnist [56]). Please note that all graph datasets contain both the raw attribute matrix and the adjacency matrix, while all image datasets only have the attribute matrix.

IV-A2 Implementation Procedures

The learning procedure of RARE mainly includes two steps: 1) in the pre-training task, all nodes of datasets except for Flickr and Yelp are fed into the proposed RARE for at least 20 training iterations by minimizing Eq. (5). Since Flickr and Yelp are commonly used for inductive evaluations, we follow the public data split as GraphSAINT [57], where 50%/75%, 25%/10%, and 25%/15% nodes are randomly sampled to form the train, validation, and test sets on Flickr and Yelp, respectively; and 2) in the downstream tasks related to nodes and images, we use the publicly available train/validation/test data split for Cora, Citeseer, Pubmed, Flickr, and Yelp. For WikiCS, Corafull, Usps, Mnist, and Fashion-mnist, since these datasets have no publicly available data split, we perform a random data split where 7%, 7%, and 86% nodes are randomly sampled to form the train, validation, and test sets, respectively. We train a simple linear classifier with Adam optimizer until convergence by optimizing a cross-entropy loss by 10 times. After 10 separate runs, we report average accuracy values with standard deviations for each model. In the graph-related downstream task, as is done in GraphMAE [9], we take the support vector machine as a classifier and record the results with 10-fold cross-validation after 5 separate runs. To mitigate the adverse impact of randomness, we report average accuracy (ACC) values with standard deviations for all downstream evaluations.

| Learning Type | Method | Cora | Citeseer | Pubmed | WikiCS | Corafull | Flickr | Yelp |

|---|---|---|---|---|---|---|---|---|

| Supervised | GCN [21] | 81.5 | 70.3 | 79.0 | 66.70.5 | 48.60.5 | 42.90.1 | 57.30.1 |

| GAT [22] | 83.00.7 | 72.50.7 | 79.00.3 | 69.41.0 | 50.70.2 | 43.90.1 | 57.60.1 | |

| Self-supervised | GAE [36] | 71.50.4 | 65.80.4 | 72.10.5 | 67.30.3 | 52.00.1 | - | - |

| DGI [28] | 82.30.6 | 71.80.7 | 76.80.6 | 64.80.6 | 48.20.5 | 45.00.2 | 57.40.1 | |

| MVGRL [31] | 83.50.4 | 73.30.5 | 80.10.7 | 64.80.7 | 52.60.5 | - | - | |

| GRACE [32] | 81.90.4 | 71.20.5 | 80.60.4 | 68.00.7 | 45.20.1 | - | - | |

| BGRL [47] | 82.70.6 | 71.10.8 | 79.60.5 | 65.51.5 | 47.40.5 | 39.40.1 | - | |

| InfoGCL [58] | 83.50.3 | 73.50.4 | 79.10.2 | - | - | - | - | |

| CCA-SSG[34] | 84.00.4 | 73.10.3 | 81.00.4 | 67.40.9 | 53.50.4 | 49.10.1 | 59.60.1 | |

| SeeGera [13] | 82.80.3 | 71.60.2 | 79.20.3 | 65.80.2 | - | - | - | |

| MaskGAE [8] | 82.60.3 | 73.40.6 | 81.00.3 | 66.00.2 | 52.20.1 | 49.10.1 | 68.10.1 | |

| GraphMAE [9] | 84.20.4 | 73.40.4 | 81.10.4 | 65.70.7 | 53.40.1 | 49.60.2 | 69.40.2 | |

| Ours | 84.20.2 | 74.10.3 | 81.80.2 | 69.00.6 | 55.50.1 | 50.60.1 | 72.10.6 |

| Learning Type | Method | IMDB-B | IMDB-M | PROTEINS | COLLAB | MUTAG | PTC-MR | NCI1 |

|---|---|---|---|---|---|---|---|---|

| Supervised | GIN [23] | 75.15.1 | 52.32.8 | 76.22.8 | 80.21.9 | 89.45.6 | 63.78.2 | 82.71.7 |

| DiffPool [59] | 72.63.9 | - | 75.13.5 | 78.92.3 | 85.010.3 | - | - | |

| Graph Kernels | WL [60] | 72.33.4 | 47.00.5 | 72.90.6 | - | 80.73.0 | 58.00.5 | 80.30.5 |

| DGK [53] | 67.00.6 | 44.60.5 | 73.30.8 | - | 87.42.7 | 60.12.6 | 80.30.5 | |

| Self-supervised | Graph2vec [61] | 71.10.5 | 50.40.9 | 73.32.1 | - | 83.29.3 | 60.26.9 | 73.21.8 |

| Infograph [33] | 73.00.9 | 49.70.5 | 74.40.3 | 70.71.1 | 89.01.1 | 61.76.4 | 76.21.1 | |

| GraphCL [29] | 71.10.4 | 48.60.7 | 74.40.5 | 71.41.2 | 86.81.3 | - | 77.90.4 | |

| JOAO [62] | 70.23.1 | 49.20.8 | 74.60.4 | 69.50.3 | 87.41.0 | - | 78.10.5 | |

| GCC [30] | 72.0 | 49.4 | - | 78.9 | - | - | - | |

| MVGRL [31] | 74.20.7 | 51.20.5 | - | - | 89.71.1 | 62.51.7 | - | |

| InfoGCL [58] | 75.10.9 | 51.40.8 | - | 80.01.3 | 91.21.3 | 59.41.6 | 80.20.6 | |

| GraphMAE [9] | 75.50.7 | 51.60.5 | 75.30.4 | 80.30.5 | 88.21.3 | 57.60.8 | 80.40.3 | |

| Ours | 76.20.1 | 53.10.1 | 76.40.2 | 81.20.6 | 88.60.6 | 59.30.7 | 81.30.4 |

IV-A3 Training Settings

To ensure a fair comparison, all experiments are conducted on the same device and under an identical configuration environment. For all compared baselines, we directly report the results listed in the existing literature if available. Otherwise, we implement their official source codes and report the reproduced performance. For our method, we perform a grid search to select hyper-parameters on the following searching space: the mask ratio is selected between {0.5, 0.75}; the balanced coefficient is searched from 1 to 9; the scale factor is selected between {1, 2}; the hidden size of latent features is selected from {256, 512, 1024}; the number of feature extractor layers is selected from {1, 2, 3, 4, 5}; by default, the momentum rate is empirically fixed to 0.1 by default; the learning rate of the Adam optimizer is selected from {1.5e-4, 5e-4, 1e-3}; the maximum epoch is determined according to the results of model convergence. Particularly, in the graph classification task, we 1) choose the batch size from {32, 64}, similar to GraphMAE [9]; 2) consistently adopt a batch normalization operation to regularize the model learning; and 3) follow the sample pooing setups in GraphMAE, where a non-parameterized graph pooling function (e.g., max-pooling, mean-pooing or sum-pooling) is employed to generate graph-level representations. Please note that we employ similar hyper-parameter setups as reported in GraphMAE [9], and most hyper-parameters are not carefully tuned for ease of model learning.

IV-A4 Baseline Methods

In this work, we compare the performance of the proposed RARE with several baseline methods on three different tasks: node classification, graph classification, and image classification. For node classification, we compare RARE with two supervised methods (i.e., GCN [21] and GAT [22]) and ten self-supervised methods (i.e., GAE [36], DGI [28], MVGRL [31], GRACE [32], BGRL [47], InfoGCL [58], CCA-SSG [34], SeeGera [13], MaskGAE [8], and GraphMAE [9]). For graph classification, we consider twelve baseline methods from three different aspects: 1) two supervised methods (i.e., GIN [23] and DiffPool [59]); 2) two graph kernels-based methods (i.e., WL [60] and DGK [53]); and 3) eight self-supervised methods (i.e., Graph2vec [61], Infograph [33], GraphCL [29], JOAO [62], GCC [30], MVGRL [31], InfoGCL [58], and GraphMAE [9]). For image classification, we investigate two image classification methods (i.e., VGG16 [63] and ResNet18 [64]) and three graph autoencoder methods (i.e., GAE [36], MaskGAE [8], and GraphMAE [9].

IV-B Overall Performance (RQ1)

IV-B1 Evaluation on Node Classification

As shown in Table II, we report the node classification performance of thirteen compared methods on seven datasets. From these results, several major observations can be concluded: 1) the proposed RARE consistently outperforms two supervised methods on all datasets, with margins going up to 7.7%-14.8% on Flickr and Yelp. These improvements demonstrate the great potential of masked graph autoencoders for effectively handling massive unlabeled graph data; 2) InfoGCL is one of the strongest contrastive self-supervised methods, while the proposed RARE improves it by 0.7%, 0.6%, 2.7% accuracy on Cora, Citeseer, and Pubmed, respectively. This phenomenon indicates that RARE can effectively boost the learned representations by conducting a mask-then-reconstruct mechanism instead of relying on a relatively complicated contrastive mechanism; 3) taking the results on WikiCS for example, RARE significantly outperforms SeeGera, MaskGAE, and GraphMAE by 3.2%, 3.0%, and 3.3%, respectively. These benefits are attributed to the novel idea of integrating implicit and explicit self-supervision mechanisms to drive model learning by performing a joint mask-then-reconstruct strategy in both latent feature and raw data spaces; and 4) on Cora, RARE achieves competitive results compared to the most powerful masked graph autoencoder, i.e., GraphMAE. However, it is possible that the full potential of model optimization was not demonstrated due to the insufficient size of the test dataset. Increasing the size of the test dataset, such as WikiCS and Yelp, could reveal that RARE has the potential to yield even more substantial performance gains.

IV-B2 Evaluation on Graph Classification

Table III summarizes graph classification results of thirteen methods on seven datasets. The results reveal several key observations that are similar to those obtained from the node classification task: 1) the performance of RARE is highly competitive compared to both supervised methods and graph kernels methods, indicating that the masked graph autoencoder has the potential to be a promising alternative for self-supervised graph pre-training; 2) compared to GraphCL and JOAO, our method achieves significant performance improvements (up to 1.8%-11.7%) over them on almost all datasets. However, some contrastive learning methods, such as InfoGCL and MVGRL, demonstrate better performance than masked graph autoencoders on MUTAG and PTC-MR. This may be because in some cases, the partitioning of small-scale graph data can be easily achieved through multi-view contrastive learning; and 3) RARE achieves an approximate 1.1% average performance gain over GraphMAE on all datasets, which further indicates that RARE can effectively leverage both implicit and explicit self-supervision signals to improve the quality of the learned graph embedding.

| Learning Type | Method | Usps | Mnist | Fashion-mnist |

|---|---|---|---|---|

| Supervised | VGG16 [63] | 94.50.5 | 95.60.8 | 85.60.6 |

| ResNet18 [64] | 94.21.6 | 96.11.3 | 85.4 1.5 | |

| Self-supervised | GAE [36] | 75.80.2 | - | - |

| MaskGAE [8] | 92.20.2 | 87.10.1 | 78.50.1 | |

| GraphMAE [9] | 93.00.5 | 91.70.1 | 79.80.2 | |

| Ours | 94.30.3 | 94.20.2 | 85.70.1 |

IV-B3 Evaluation on Image Classification

To verify the superiority of RARE in-depth, Table IV reports the image classification performance of six methods on three datasets. From those results, we can obtain the following observations: 1) the proposed RARE shows a significant advantage against existing state-of-the-art MGAEs and other baselines on all image benchmarks. For example, on Mnist and Fashion-mnist datasets, RARE consistently outperforms the best edge-masking-based MaskGAE and node-masking-based GraphMAE by 7.1%/2.5% and 7.2%/5.9% accuracy, respectively. These improvements once again demonstrate the effectiveness of introducing implicit self-supervision signals for model learning; and 2) it is interesting to note that RARE can achieve competitive or slightly better results than typical supervised classification methods, such as VGG16 and ResNet18. This implies that improving the reliability of the self-supervision mechanism can facilitate RARE to unleash its potential for SGP. Thus, the learned representations show good robustness and generalization across a wide range of downstream tasks.

IV-C Ablation Study (RQ2)

IV-C1 Impact of The MLFC Scheme

To demonstrate the effectiveness of the proposed masked latent feature completion scheme, we compare RARE with its three variants on eight datasets. Concretely, “w/o-Pred.” implies that RARE removes the latent feature predictor. “w/o-Mome.” indicates that RARE discards the momentum graph encoder. “w-()” denotes that the momentum graph encoder of RARE accepts rather than . As shown in Table V, some major observations can be summarized: 1) when compared to “w/o-Pred.”, the latent feature predictor produces a performance gain of 1.4%-5.2% on eight datasets, indicating that this component plays a vital role in our SGP solution. By iteratively conducting the mask-then-reconstruct operation on incomplete graphs, the latent feature predictor could be regarded as a hidden regularization that assists the graph encoder in extracting more compressed features; 2) RARE consistently outperforms “w/o-Mome.” on all eight datasets. Taking the results on Corafull and IMDB-M for example, RARE achieves 3.6% and 2.3% accuracy gains respectively, demonstrating the effectiveness of providing implicit self-supervision signals to ensure the integrity and accuracy of predicted representations. Similar observations can be concluded from the results on other datasets; and 3) although “w-()” can also achieve competitive performance, it suffers from 0.3%-1.3% accuracy degradation compared to our method. The reason behind this may be that since is a sub-graph of the original graph , a large amount of redundant information between two-source encoded representations would overwhelm the latent space, resulting in inferior representations for downstream tasks.

| Dataset | w/o-Pred. | w/o-Mome. | w-() | Ours |

|---|---|---|---|---|

| Cora | 82.5 (1.7 ) | 83.4 (0.8 ) | 83.7 (0.5 ) | 84.2 |

| Citeseer | 72.7 (1.4 ) | 70.5 (3.6 ) | 73.6 (0.5 ) | 74.1 |

| Pubmed | 79.5 (2.3 ) | 78.8 (3.0 ) | 81.4 (0.4 ) | 81.8 |

| Corafull | 52.2 (3.3 ) | 51.9 (3.6 ) | 55.2 (0.3 ) | 55.5 |

| IMDB-B | 74.6 (1.6 ) | 74.7 (1.5 ) | 75.6 (0.6 ) | 76.2 |

| IMDB-M | 51.7 (1.4 ) | 50.8 (2.3 ) | 52.3 (0.8 ) | 53.1 |

| PROTEINS | 74.1 (2.3 ) | 75.6 (0.8 ) | 75.4 (1.0 ) | 76.4 |

| PTC-MR | 54.1 (5.2 ) | 57.5 (1.8 ) | 58.0 (1.3 ) | 59.3 |

| Method | Loss Function | WikiCS | Flickr | IMDB-M | MUTAG |

|---|---|---|---|---|---|

| (A) | (MSE) & (MSE) | 66.2 | 49.7 | 51.0 | 85.7 |

| (B) | (ISCE) & (MSE) | 66.5 | 49.8 | 50.0 | 87.3 |

| (C) | (ISCE) & (ISCE) | 68.2 | 49.2 | 51.4 | 87.2 |

| (D) | (MAE) & (ISCE) | 68.8 | 49.9 | 53.2 | 87.6 |

| Ours | (MSE) & (ISCE) | 69.0 | 50.6 | 53.1 | 88.6 |

IV-C2 Impact of The Loss Function

In this subsection, we conduct ablation studies to investigate the effect of different loss functions. Table VI reports the accuracy results of RARE and its four variants on WikiCS, Corafull, IMDB-M, and MUTAG. and indicate the loss functions used for implicit and explicit self-supervision mechanisms, respectively. Moreover, MAE, MSE, and ISCE are abbreviations for mean absolute error, mean square error, and improved scaled cosine error, respectively. From the results presented in Table VI, we can observe that 1) our method achieves better performance than method (A) and method (B) by 2.8%/2.5% and 2.1%/3.1% accuracy improvements on WikiCS and IMDB-M, respectively. The reason behind this is that the MSE loss is better at modeling detailed information from the data itself, while the ISCE loss focuses more on estimating the similarity between two entities. Therefore, minimizing MSE in the noisy raw data space may mislead the network to overly preserve redundant graph details, which may not always result in the required expressive encoding capability for downstream tasks; and 2) RARE and method (D) consistently outperform method (C) by 0.8%/0.6%, 1.4%/0.7%, 1.7%/1.8%, and 1.4%/0.4% on WikiCS, Flickr, IMDB-M, and MUTAG, respectively. This is because the latent features contain much category-related information that needs to be carefully preserved. As a result, completing the masked content in the latent space with MAE or MSE contributes more to the performance than that with ISCE. Moreover, when one has to choose a loss function for masked latent feature completion, both MAE and MSE are suitable for guiding the model learning implicitly.

| Method | Data-masking Setup | Pubmed | Flickr | IMBD-M | NCI1 |

|---|---|---|---|---|---|

| (A) | M-Node (Z) & M-Rep. (Z) | 81.2 | 49.6 | 51.7 | 80.2 |

| (B) | M-Node (Z) & M-Rep. (T) | 80.4 | 49.4 | 52.0 | 80.6 |

| (C) | M-Node (T) & M-Rep. (Z) | 81.2 | 49.3 | 52.5 | 80.0 |

| (D) | M-Node (T) & w/o M-Rep. | 80.0 | 49.0 | 52.2 | 79.5 |

| Ours | M-Node (T) & M-Rep. (T) | 81.8 | 50.6 | 53.1 | 81.3 |

| Method | Predictor Setup | Pubmed | Corafull | PROTEINS | PTC-MR |

|---|---|---|---|---|---|

| (A) | GCL | 78.8 | 49.9 | 74.6 | 56.6 |

| (B) | GAL (or GIL) | 80.2 | 50.9 | 75.3 | 57.5 |

| (C) | GCL + MLP | 80.9 | 54.7 | 75.2 | 57.8 |

| Ours | GAL (or GIL) + MLP | 81.8 | 55.5 | 76.4 | 59.3 |

IV-C3 Impact of Data-masking Setups

To investigate the effect of different data-masking setups, we conduct ablation studies to make a comparison among RARE and its four variants on Pubmed, Flickr, IMBD-M, and NCI1. Specifically, “M-Node (Z)” and “M-Rep. (Z)” means that nodes and representations are masked with zero values, and “M-Node (T)” and “M-Rep. (T)” means that nodes and representations are masked with tokens (i.e., stochastic learnable vectors). “w/o M-Rep.” denotes a variant of RARE without masking representations. Some major conclusions can be summarized from the results in Table VII: 1) our method consistently achieves better accuracy performance than method (A), method (B), and method (C) on four datasets, indicating that using parameterized tokens for masked samples as initialization is more effective; and 2) the last two rows reveal the advantage of implementing the data-masking operation over latent features. It is worth noting that method (D) refines the encoded representations of masked nodes via latent feature matching directly, while RARE first recovers the masked representations from scratch via a latent feature predictor and then conducts the sample matching in the high-order latent feature space. The latter makes the self-supervised task more challenging, which further encourages the model to enhance its local modeling capability. As seen, RARE consistently outperforms method (D) by 1.8%, 1.6%, 0.9%, and 1.8% accuracy on all datasets.

IV-C4 Impact of Latent Feature Predictor Setups

To investigate how different latent feature predictor setups influence the performance of RARE, we compare RARE with its three variants and report their performance on Pubmed, Corafull, PROTEINS, and PTC-MR in Table VIII. The abbreviations used in the table are as follows: “GCL”, “GAL”, “GIL”, and “MLP” denote the graph convolution layer, graph attention layer, graph isomorphism layer, and multilayer perception, respectively. Firstly, we observe that our method produces better performance than method (C) on all datasets, indicating that GAL (or GIL) is a better option than GCL for recovering masked latent features due to its more powerful graph modeling capability. Similar observations can be obtained from the comparison between method (A) and method (B). Secondly, we also observe that our method consistently outperforms method (B) by 1.6%, 4.6%, 1.1%, and 1.8% on all datasets. Similarly, method (C) performs better than method (A) in almost all cases. These results demonstrate that MLP plays an important role in predicting the latent features of masked nodes in the MLFC scheme.

IV-D Robustness Against Outliers (RQ3)

To provide a more comprehensive understanding of our motivations, we conduct an experiment to make a comparison between the proposed RARE and state-of-the-art (SOTA) GraphMAE on adversarial WikiCS. We also include results from the original WikiCS as a reference. In our settings, we randomly divide all nodes of the original WikiCS into 5% marked nodes and 95% normal nodes. To construct an adversarial WikiCS, within the raw attribute matrix, we row-wise shuffle the attributes of 5% marked nodes to generate outliers (i.e., noisy samples). From the sub-figures in Fig. 4, some key observations can be concluded: 1) the proposed RARE achieves an approximate 2.5% accuracy gain against SOTA GraphMAE on both adversarial and original datasets, which demonstrates the superiority and effectiveness of our method; 2) GraphMAE suffers from obvious performance degradation after around 400 iterations until convergence, while RARE substantially enhances the training stability of masked graph autoencoder with performance continually reaching a plateau. We attribute this to the integration of implicit and explicit self-supervision mechanisms, which can regularize each other to provide more reliable guidance for model training and produce better performance than only minimizing a raw data reconstruction loss; 3) when GraphMAE processes a noisy graph, the average data loss value of outliers (i.e., the red curve) is generally smaller than that of normal nodes (i.e., the green curve), indicating that GraphMAE fits outliers better than normal nodes. This phenomenon is opposite to that of our method, implying that RARE has stronger robustness against adversarial attacks than GraphMAE. These results solidly support our claim that the robustness of the model would be compromised when lacking of reliable self-supervision guidance for model learning; and 4) we also notice an interesting phenomenon that the latent loss curves of outliers and normal nodes almost overlap each other on adversarial WikiCS. We guess that this is because our implicit self-supervision mechanism is driven by the MSE loss, which could carefully collect and preserve the informative features but is not selective enough to focus RARE on those outliers that are hard to tell. We will investigate this phenomenon thoroughly in our further work.

IV-E Hyper-parameter Sensitivity (RQ4)

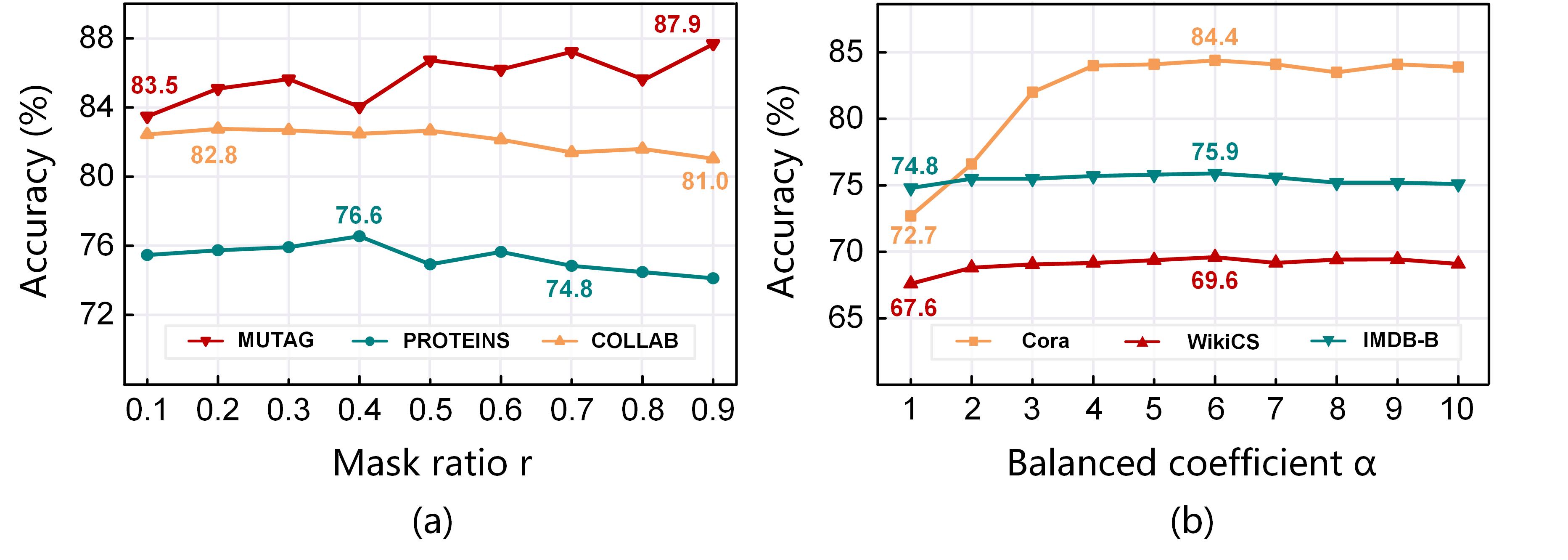

IV-E1 Impact of Mask Ratio

To further illustrate the superiority of RARE, we investigate its performance variation with respect to different mask ratios. Concretely, we pre-train RARE by varying the mask ratio from 0.1 to 0.9 with a step size of 0.1. From the results shown in Fig. 5(a), some key observations can be obtained: 1) taking the results on PROTEINS for example, continually increasing the value of first improves the model accuracy and then leads to relatively poor performance. This indicates that the masking-then-reconstructing mechanism is indeed effective for self-supervised graph pre-training, but a proper is required to balance the visible and masked information; 2) increasing by more than 0.6 would cause a performance drop in most cases, while the proposed RARE can still perform well within a wide range of high mask ratios. For example, the optimal masked ratio (i.e., 90%) for MUTAG is surprisingly high, indicating that in some cases, the model with a high mask ratio largely eliminates redundancy and thus yields a nontrivial and meaningful self-supervision task; and 3) the performance of RARE is relatively stable across a wide range of on COLLAB. This once again implies that RARE can also learn useful representations with limited observed information, indicating its potential to achieve a good accuracy-efficiency trade-off.

IV-E2 Impact of Hyper-parameters

Eq. (5) introduces a hyper-parameter to balance the importance of two loss functions. To show its influence in-depth, Fig. 5(b) presents the accuracy variation of RARE on three datasets when varies from 1 to 10 with a step size of 1. Our observations from this sub-figure are as follows: 1) the effect of tuning on model performance varies across different datasets, but the stability of the model performance is higher in the range of [5, 10]. This indicates that searching from a reasonable hyper-parameter region could positively influence the model performance; 2) the accuracy variation is relatively stable across a wide range of on IMDB-B and WikiCS, while on Cora, it shows a trend of first rising and then dropping slightly. This suggests that RARE requires a suitable to ensure the quality of learned representations when reconstructing the raw attributes; and 3) RARE tends to perform well by setting according to the results on all datasets.

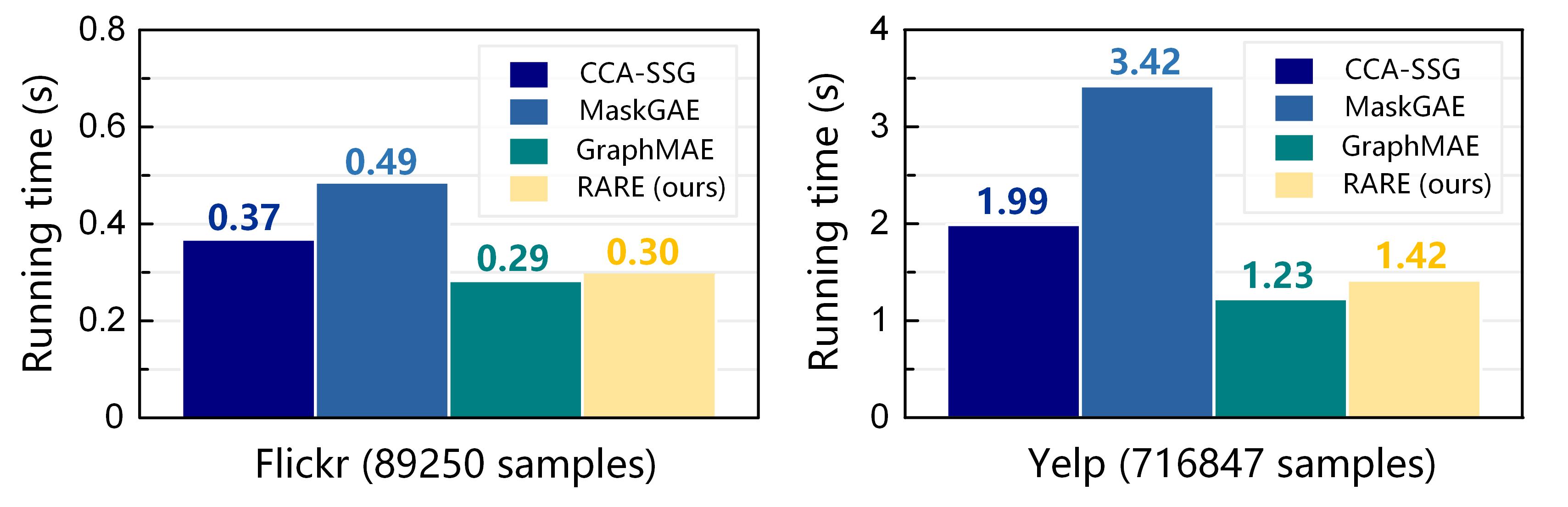

IV-F Running Time Consumption (RQ5)

Fig. 6 shows a comparison of the running time consumption among a scalable contrastive SGP method (i.e., CCA-SSG) and three MGAEs on Flickr and Yelp datasets. All methods are evaluated on the same device with one NVIDIA-3090 GPU card, and the reported result refers to an average time of 10 iterations. As evidenced by the results, since RARE outperforms CCA-SSG in terms of speed on both Flickr and Yelp datasets, the computational efficiency of utilizing a masked graph autoencoder to handle large-scale graphs is promising. Moreover, RARE can achieve better accuracy than MaskGAE and GraphMAE without considerably increasing the computation cost. These experimental results are consistent with previous complexity analyses, demonstrating that our method can scale to large-scale scenarios with linear scalability.

IV-G Visualization (RQ6)

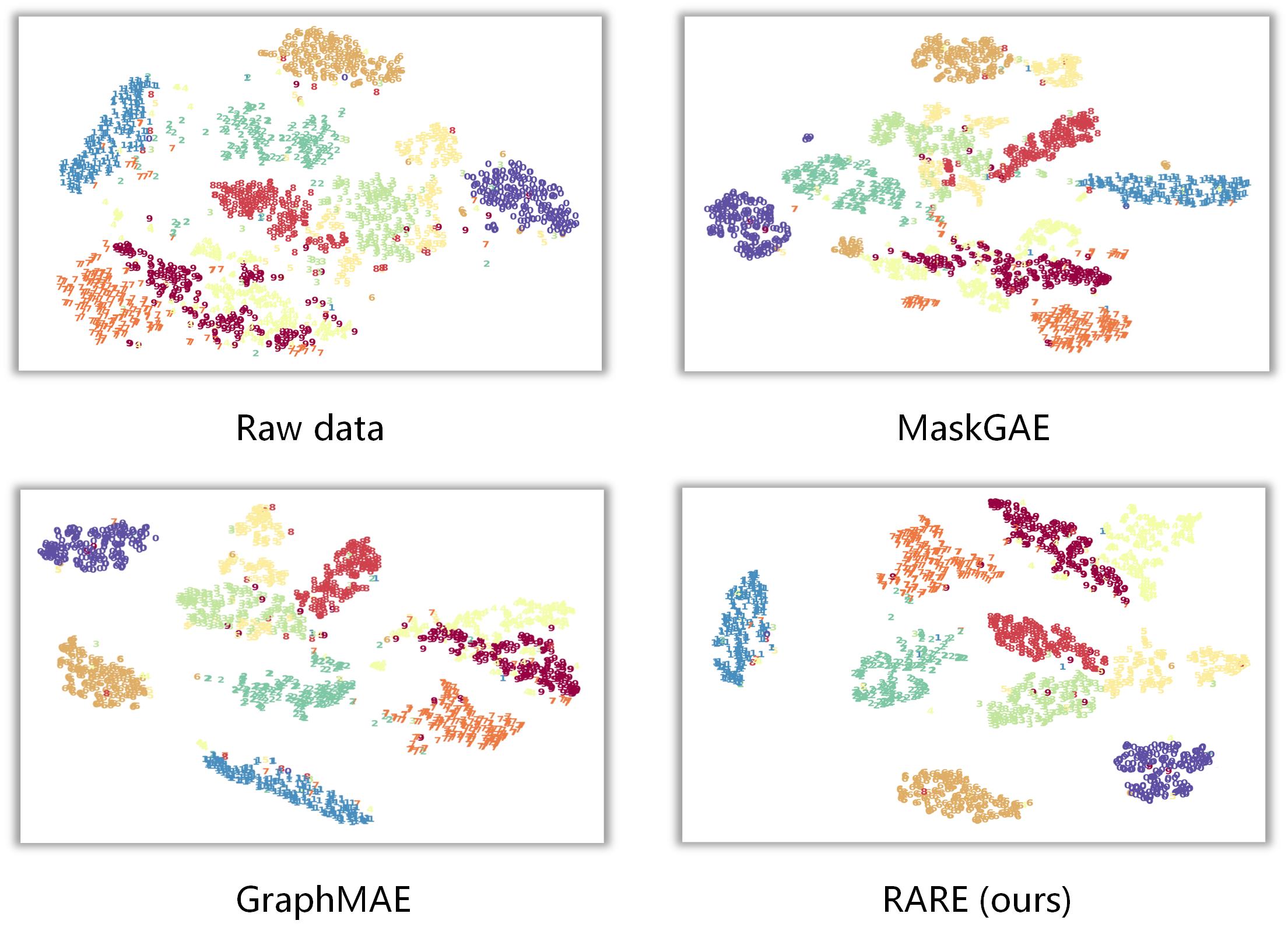

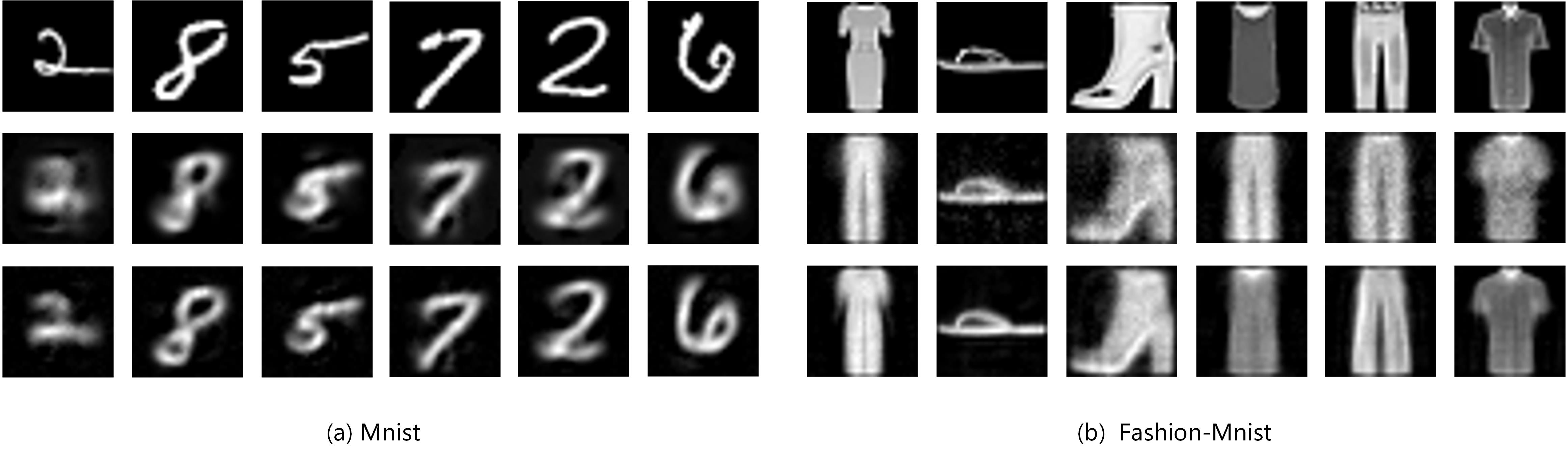

In this subsection, we present comparisons of T-SNE and reconstructed images among several methods, respectively. In Fig. 7, the samples with different colors indicate different categories predicted by methods. As seen, RARE presents clearer partitions and denser cluster structures than MaskGAE and GraphMAE, especially for Category 3 and Category 5. This verifies that RARE can learn more compact and discriminative node representations. This is attributed to integrating implicit and explicit self-supervision mechanisms for masked content recovery by performing a joint mask-then-reconstruct strategy in both latent feature and raw data spaces. To intuitively understand the superiority of our method, we utilize the Pillow toolkit to visualize the raw data of masked images, the masked images reconstructed by GraphMAEA and RARE, respectively, as shown in Fig. 8. These results once again illustrate that our method can achieve a better reconstruction in the raw data space with clearer boundaries and semantics than baselines. This verifies that RARE can indeed provide a more comprehensive understanding of the original data.

V Conclusion and Future Work

In this work, we revisit the inherent distinction between traditional data formats (e.g., images and texts) and graphs for masked signal modeling, and investigate the applicability problem of leveraging masked autoencoders to process graph data. This motivates us to propose a novel framework called RARE for self-supervised graph pre-training. In our method, we implement both implicit and explicit self-supervision mechanisms for masked content recovery by performing a joint mask-then-reconstruct strategy in both latent feature and raw data spaces. Particularly, the designed masked latent feature completion scheme can improve the certainty in inferring masked data and the reliability of the self-supervision mechanism. We also provide theoretical analyses to explain why RARE can work well and how the designed components contribute to the model performance. Extensive experiments on seventeen datasets have demonstrated the effectiveness and superiority of RARE on three downstream tasks.

However, there are still some limitations of existing masked graph autoencoders that have not been fully explored. For instance, existing MGAEs assume that all samples within a graph are available and complete, which may not always hold in practice since it is hard to collect all information from real-world graph data. Future work may extend the proposed RARE to the data-incomplete circumstance, and investigate the connections and differences between self-supervised masked graph pre-training and self-supervised incomplete graph pre-training. Moreover, in the current version, RARE only supports reconstructing each masked node from its adjacent neighbors via graph attention layers. In the future, developing a more effective and efficient MGAE to explore global features for information recovery is another interesting direction.

References

- [1] K. He, X. Chen, S. Xie, Y. Li, P. Dollár, and R. B. Girshick, “Masked autoencoders are scalable vision learners,” in In CVPR, 2022, pp. 15 979–15 988.

- [2] A. Baevski, W.-N. Hsu, Q. Xu, A. Babu, J. Gu, and M. Auli, “data2vec: A general framework for self-supervised learning in speech, vision and language,” in In ICML, 2022, pp. 1298–1312.

- [3] X. Chen, M. Ding, X. Wang, Y. Xin, S. Mo, Y. Wang, S. Han, P. Luo, G. Zeng, and J. Wang, “Context autoencoder for self-supervised representation learning,” in ArXiv preprint arXiv:2202.03026, 2022.

- [4] Z. Chen, Y. Du, J. Hu, Y. Liu, G. Li, X. Wan, and T.-H. Chang, “Multi-modal masked autoencoders for medical vision-and-language pre-training,” in In MICCAI, 2022, pp. 679–689.

- [5] Z. Fu, C. Wang, J. Xu, H. Zhou, and L. Li, “Contextual representation learning beyond masked language modeling,” in In ACL, 2022, pp. 2701–2714.

- [6] A. Chen, K. Zhang, R. Zhang, Z. Wang, Y. Lu, Y. Guo, and S. Zhang, “Pimae: Point cloud and image interactive masked autoencoders for 3d object detection,” in In CVPR, 2023.

- [7] Q. Tan, N. Liu, X. Huang, S.-H. Choi, L. Li, R. Chen, and X. Hu, “S2gae: Self-supervised graph autoencoders are generalizable learners with graph masking,” in In WSDM, 2023, pp. 787–795.

- [8] J. Li, R. Wu, W. Sun, L. Chen, S. Tian, L. Zhu, C. Meng, Z. Zheng, and W. Wang, “Maskgae: Masked graph modeling meets graph autoencoders,” in ArXiv preprint arXiv:2205.10053, 2022.

- [9] Z. Hou, X. Liu, Y. Cen, Y. Dong, H. Yang, C. Wang, and J. Tang, “Graphmae: Self-supervised masked graph autoencoders,” in In KDD, 2022, p. 594–604.

- [10] Y. Tian, K. Dong, C. Zhang, C. Zhang, and N. V. Chawla, “Heterogeneous graph masked autoencoders,” in In AAAI, 2023.

- [11] C. Liu, Y. Zhan, X. Ma, D. Tao, B. Du, and W. Hu, “Masked graph auto-encoder constrained graph pooling,” in In ECML-PKDD, 2022.

- [12] Z. Wang, Z. Feng, Y. Li, B. Li, Y. Wang, C. Sha, M. He, and X. Li, “Batmannet: Bi-branch masked graph transformer autoencoder for molecular representation,” ArXiv preprint arXiv:2211.13979, 2022.

- [13] X. Li, T. Ye, C. Shan, D. Li, and M. Gao, “Seegera: Self-supervised semi-implicit graph variational auto-encoders with masking,” In WWW, 2023.

- [14] W. Yu, M. Huang, S. Wu, and Y. Zhang, “Ensembled masked graph autoencoders for link anomaly detection in a road network considering spatiotemporal features,” Information Sciences, vol. 622, pp. 456–475, 2023.

- [15] J. Feng, Z. Wang, Y. Li, B. Ding, Z. Wei, and H. Xu, “Mgmae: Molecular representation learning by reconstructing heterogeneous graphs with a high mask ratio,” in In CIKM, 2022, pp. 509–519.

- [16] K. Jing, J. Xu, and P. Li, “Graph masked autoencoder enhanced predictor for neural architecture search,” in In IJCAI, 2022, pp. 3114–3120.

- [17] C. Morris, M. Ritzert, M. Fey, W. L. Hamilton, J. E. Lenssen, G. Rattan, and M. Grohe, “Weisfeiler and leman go neural: Higher-order graph neural networks,” in In AAAI, 2019, pp. 4602–4609.

- [18] Z. Lin, Z. Kang, L. Zhang, and L. Tian, “Multi-view attributed graph clustering,” IEEE TKDE, vol. 35, no. 2, pp. 1872–1880, 2023.

- [19] J. Li, H. Peng, Y. Cao, Y. Dou, H. Zhang, P. S. Yu, and L. He, “Higher-order attribute-enhancing heterogeneous graph neural networks,” IEEE TKDE, vol. 35, no. 1, pp. 560–574, 2023.

- [20] Y. Liu, M. Jin, S. Pan, C. Zhou, Y. Zheng, F. Xia, and P. Yu, “Graph self-supervised learning: A survey,” In TKDE, 2022.

- [21] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in In ICLR, 2017.

- [22] P. Velickovic, G. Cucurull, A. Casanova, A. Romero, P. Liò, and Y. Bengio, “Graph attention networks,” in In ICLR, 2018.

- [23] K. Xu, W. Hu, J. Leskovec, and S. Jegelka, “How powerful are graph neural networks?” in In ICLR, 2019.

- [24] L. Huang, Y. Zhu, Y. Gao, T. Liu, C. Chang, C. Liu, Y. Tang, and C.-D. Wang, “Hybrid-order anomaly detection on attributed networks,” IEEE TKDE, 2021.

- [25] M. You, A. Yuan, M. Zou, D. He, and X. Li, “Robust unsupervised feature selection via multi-group adaptive graph representation,” IEEE TKDE, vol. 35, no. 3, pp. 3030–3044, 2023.

- [26] K. Zhan, C. Niu, C. Chen, F. Nie, C. Zhang, and Y. Yang, “Graph structure fusion for multiview clustering,” IEEE TKDE, vol. 31, no. 10, pp. 1984–1993, 2019.

- [27] S. Huang, I. W. Tsang, Z. Xu, and J. Lv, “Measuring diversity in graph learning: A unified framework for structured multi-view clustering,” IEEE TKDE, vol. 34, no. 12, pp. 5869–5883, 2022.

- [28] P. Velickovic, W. Fedus, W. L. Hamilton, P. Liò, Y. Bengio, and R. D. Hjelm, “Deep graph infomax,” in In ICLR, 2019.

- [29] Y. You, T. Chen, Y. Sui, T. Chen, Z. Wang, and Y. Shen, “Graph contrastive learning with augmentations,” in In NeurIPS, 2020, pp. 5812–5823.

- [30] J. Qiu, Q. Chen, Y. Dong, J. Zhang, H. Yang, M. Ding, K. Wang, and J. Tang, “Gcc: Graph contrastive coding for graph neural network pre-training,” in In KDD, 2020, pp. 1150–1160.

- [31] K. Hassani and A. H. Khasahmadi, “Contrastive multi-view representation learning on graphs,” in In ICML, 2020, pp. 4116–4126.

- [32] Y. Zhu, Y. Xu, F. Yu, Q. Liu, S. Wu, and L. Wang, “Deep graph contrastive representation learning,” in ArXiv preprint arXiv:2006.04131, 2020.

- [33] F.-Y. Sun, J. Hoffman, V. Verma, and J. Tang, “Infograph: Unsupervised and semi-supervised graph-level representation learning via mutual information maximization,” in In ICLR, 2020.

- [34] H. Zhang, Q. Wu, J. Yan, D. Wipf, and P. S. Yu, “From canonical correlation analysis to self-supervised graph neural networks,” in In NeurIPS, 2021, pp. 76–89.

- [35] Z. Hou, Y. Cen, Y. Dong, J. Zhang, and J. Tang, “Automated unsupervised graph representation learning,” IEEE TKDE, vol. 35, no. 3, pp. 2285–2298, 2023.

- [36] T. N. Kipf and M. Welling, “Variational graph auto-encoders,” in ArXiv preprint arXiv:1611.07308, 2016.

- [37] D. Bo, X. Wang, C. Shi, M. Zhu, E. Lu, and P. Cui, “Structural deep clustering network,” in In WWW, 2020, pp. 1400–1410.

- [38] W. Tu, S. Zhou, X. Liu, X. Guo, Z. Cai, E. Zhu, and J. Cheng, “Deep fusion clustering network,” in In AAAI, 2021, pp. 9978–9987.

- [39] W. Tu, S. Zhou, X. Liu, Y. Liu, Z. Cai, E. Zhu, C. Zhang, and J. Cheng, “Initializing then refining: A simple graph attribute imputation network,” in In IJCAI, 2022, pp. 3494–3500.

- [40] X. Gao, W. Hu, and G.-J. Qi, “Self-supervised graph representation learning via topology transformations,” IEEE TKDE, vol. 35, no. 4, pp. 4202–4215, 2023.

- [41] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “Bert: Pre-training of deep bidirectional transformers for language understanding,” in NAACL-HLT, 2019, pp. 4171–4186.

- [42] H. Chen, S. Zhang, and G. Xu, “Graph masked autoencoders with transformers,” in ArXiv preprint arXiv:2202.08391, 2022.

- [43] S. Yun, M. Jeong, R. Kim, J. Kang, and H. J. Kim, “Graph transformer networks,” in In NeurIPS, 2019, pp. 11 960–11 970.

- [44] A. Tarvainen and H. Valpola, “Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results,” in In NeurIPS, 2017, pp. 1195–1204.

- [45] M. Caron, P. Bojanowski, A. Joulin, and M. Douze, “Deep clustering for unsupervised learning of visual features,” in In ECCV, 2018, pp. 139–156.

- [46] J.-B. Grill, F. Strub, F. Altché, C. Tallec, P. Richemond, E. Buchatskaya, C. Doersch, B. A. Pires, Z. Guo, M. G. Azar, B. Piot, koray kavukcuoglu, R. Munos, and M. Valko, “Bootstrap your own latent - a new approach to self-supervised learning,” in In NeruIPS, 2020, pp. 21 271–21 284.

- [47] S. Thakoor, C. Tallec, M. G. Azar, M. Azabou, E. L. Dyer, R. Munos, P. Veličković, and M. Valko, “Large-scale representation learning on graphs via bootstrapping,” in In ICLR, 2021.

- [48] Y. Liu, Y. Zheng, D. Zhang, H. Chen, H. Peng, and S. Pan, “Towards unsupervised deep graph structure learning,” in In WWW, 2022, pp. 1392–1403.

- [49] N. Lee, J. Lee, and C. Park, “Augmentation-free self-supervised learning on graphs,” in In AAAI, 2022, pp. 7372–7380.

- [50] Q. Zhang, Y. Wang, and Y. Wang, “How mask matters: Towards theoretical understandings of masked autoencoders,” in In NeurIPS, 2022.

- [51] J. Zhu, L. Tao, H. Yang, and F. Nie, “Unsupervised optimized bipartite graph embedding,” IEEE TKDE, vol. 35, no. 3, pp. 3224–3238, 2023.

- [52] M. Wang, D. Zheng, Z. Ye, Q. Gan, M. Li, X. Song, J. Zhou, C. Ma, L. Yu, Y. Gai, T. Xiao, T. He, G. Karypis, J. Li, and Z. Zhang, “Deep graph library: A graph-centric, highly-performant package for graph neural networks,” in ArXiv preprint arXiv:1909.01315, 2019.

- [53] P. Yanardag and S. Vishwanathan, “Deep graph kernels,” in In KDD, 2015, pp. 1365–1374.

- [54] Y. LeCun, O. Matan, B. E. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. E. Hubbard, and L. D. Jacket, “Handwritten zip code recognition with multilayer networks,” in In ICPR, 1990, pp. 35–40.

- [55] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffne, “Gradient-based learning applied to document recognition,” In IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [56] H. Xiao, K. Rasul, and R. Vollgraf, “Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms,” in ArXiv preprint arXiv:1708.07747, 2017.

- [57] H. Zeng, H. Zhou, A. Srivastava, R. Kannan, and V. K. Prasanna, “Graphsaint: Graph sampling based inductive learning method,” in In ICLR, 2020.

- [58] D. Xu, W. Cheng, D. Luo, H. Chen, and X. Zhang, “Infogcl: Information-aware graph contrastive learning,” in In NeurIPS, 2021, pp. 30 414–30 425.

- [59] Z. Ying, J. You, C. Morris, X. Ren, W. Hamilton, and J. Leskovec, “Hierarchical graph representation learning with differentiable poolings,” in In NeurIPS, 2018.

- [60] N. Shervashidze, P. Schweitzer, E. J. van Leeuwen, K. Mehlhorn, and K. M. Borgwardt, “Weisfeiler-lehman graph kernels,” JMLR, vol. 12, no. 9, pp. 2539–2561, 2011.

- [61] R. V. L. C. Y. L. Annamalai Narayanan, Mahinthan Chandramohan and S. Jaiswal, “Learning distributed representations of graphs,” in ArXiv preprint arXiv:1707.05005, 2017.

- [62] Y. You, T. Chen, Y. Shen, and Z. Wang, “Graph contrastive learning automated,” in In ICML, 2021, pp. 12 121–12 132.

- [63] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in In ICLR, 2015.

- [64] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in In CVPR, 2016, pp. 770–778.