Rationally Inattentive Utility Maximization for Interpretable Deep Image Classification

Abstract

Are deep convolutional neural networks (CNNs) for image classification explainable by utility maximization with information acquisition costs? We demonstrate that deep CNNs behave equivalently (in terms of necessary and sufficient conditions) to rationally inattentive utility maximizers, a generative model used extensively in economics for human decision making. Our claim is based by extensive experiments on 200 deep CNNs from 5 popular architectures. The parameters of our interpretable model are computed efficiently via convex feasibility algorithms. As an application, we show that our economics-based interpretable model can predict the classification performance of deep CNNs trained with arbitrary parameters with accuracy exceeding . This eliminates the need to re-train the deep CNNs for image classification. The theoretical foundation of our approach lies in Bayesian revealed preference studied in micro-economics. All our results are on GitHub and completely reproducible.

Index Terms:

Interpretable Machine Learning, Bayesian Revealed preference, Rational Inattention, Deep Neural Networks, Image Classification1 Introduction

This paper considers interpretable deep image classification.111This paper builds substantially on our existing arXiv preprint https://arxiv.org/abs/2102.04594 uploaded in January, 2021. We show that image classification using deep Convolutional Neural Networks (CNNs) can be interpreted as a generative human decision-making model developed in microeconomics.

In micro- and behavioral economics222Micro-economics models the interaction of individual agents pursuing their private interests. Behavioral economics models human decision making in terms of subjective probabilities via prospect theory and framing. In the rest of this paper, we will use the term ‘agent’ to denote a human decision-maker., a fundamental question relating to human decision making is: How to model attention spans in humans (agents)? The area of rational inattention [1, 2], pioneered by Nobel laureate Christopher Sims models human attention in information-theoretic terms. The key hypothesis is that agents are “boundedly rational”- their perception of the environment is modeled as a Shannon capacity limited channel. In simple terms, rational inattention assigns a mutual information cost for human attention spans.

Building on the rational inattention model, the next key concept is that of a Bayesian agent with rational inattention that maximizes its expected utility. Such models are studied extensively in [3, 4, 5]. The intuition is this: more attentive decisions yield a higher expected utility at the expense of a larger attention cost. Hence, the Bayesian agent optimally trades off between minimizing its sensing cost and maximizing its expected utility. An important question is: How to test for rationally inattentive utility maximization given the decisions of a Bayesian agent? In the last decade, necessary and sufficient conditions have been developed in the area of Bayesian revealed preference [6, 7] to test if the decisions of a Bayesian agent are consistent with rationally inattentive utility maximization.

Summary of Results.

The question we address is: Can the decisions of deep CNNs in image classification be explained by a rationally inattentive Bayesian utility maximizer?

This paper uses a data-driven Bayesian revealed preference approach to interpretable deep CNN image classification. Bayesian revealed preference performs a post-hoc analysis of agent decisions. It constructs a generative333A generative model is image-independent, and hence provides a global explanation for deep image classification. In contrast, local approximation models for deep image classification are image-specific; they approximate model decisions via tractable functionals in a -neighborhood of every input. explanatory model for the agent decisions, parameterized by a utility function and an information acquisition cost. Our approach draws important parallels between human decision making and deep neural networks; namely that deep neural networks satisfy economics based rationality.

By very nature, Bayesian revealed preference reconstructs a set of feasible utility functions and information acquisition costs. Every element in the feasible set explains the deep CNN decisions equally well. The computed utility function induces a preference ordering on the set of image classes. That is, how much a deep CNN prioritizes accurate classification over an inaccurate classification. The information acquisition cost abstracts the penalty incurred by the deep CNN to ‘learn’ an accurate latent feature representation. The key results in this paper are:

-

1.

We show that the image classification decisions of deep CNNs satisfy the necessary and sufficient conditions for rationally inattentive utility maximization by a large margin. The margin by which the decisions satisfy these conditions are displayed in Table I. Hence, we establish that rationally inattentive utility maximization is a robust fit to deep image classification. This result is schematically shown in Fig. 1.

-

2.

To aid visualization of our interpretable model, we provide a sparsity-enhanced decision test that computes the sparsest utility function and information acquisition cost which rationalizes deep CNN decisions. The sparsest solution yields a parsimonious representation of hundreds of thousands of layer weights of the deep CNNs in terms of a few hundred parameters. The utility function of the sparsest interpretable model also induces a useful preference ordering amongst the set of hypotheses (image labels) considered by the CNN; for example, how much additional priority is allocated to the classification of a cat as a cat compared to a cat as a dog. In classical deep learning, this preference ordering is not explicitly generated. The sparsity results for various deep CNN architectures are displayed in Table II and Fig. 2.

-

3.

Our final result demonstrates the usefulness of our interpretable model. We show that the interpretable model computed from CNN decisions can predict the classification accuracy of a CNN trained with arbitrary parameters with accuracy exceeding 94%. This by-passes the need to re-train the deep CNN when its accuracy is observed for a finite number of training parameters. The prediction results are displayed in Table III.

The above results are backed by experiments performed on several deep CNN architectures using the benchmark image dataset, namely, CIFAR-10 [8]. The first two results use deep CNN decisions aggregated over varying training epochs. The third (prediction) result uses deep CNN decisions trained on noisy image datasets parameterized by the noise variance.

Related Works

Since we study interpretable deep learning using behavioral and micro- economics, we briefly discuss related works in these areas.

Bayesian revealed preference and Rational inattention. Estimating utility functions given a finite sequence of decisions and budget constraints is the central theme of revealed preference in micro-economics. The seminal work of [9, 10] (see also [11]) give necessary and sufficient conditions for the existence of a utility function that rationalizes a finite time series of consumption bundles of a decision-maker. Rationally inattentive models for Bayesian decision making have been studied extensively in [3, 4, 5]. In the last decade, the area of Bayesian revealed preference [6, 7] develops necessary and sufficient conditions to test for rationally inattentive Bayesian utility maximization.

Interpretable ML. Providing transparent models for de-obfuscating ‘black-box’ ML algorithms under the area of interpretable machine learning is a subject of extensive research [12, 13, 14]. Interpretable machine learning is defined in [15] as “the use of machine-learning models for the extraction of relevant knowledge about domain relationships contained in data”.

Since the literature is enormous, we only discuss a subset of works pertaining to interpretability of deep neural networks for image classification [16, 17, 18]. One prominent approach, namely, saliency maps, reconstructs the most preferred or typical image pertaining to each image class the deep neural network has learned [19, 20]. Related work includes creating hierarchical models for determining the importance of image features that determine its label [21]. In this paper, this feature importance is encoded into the utility function that parameterizes our interpretable model. Another approach seeks to provide local approximations to the trained model, local w.r.t the input image [22, 23]. In contrast, our generative interpretable model provides a global black-box approximation for deep image classification. A third approach approximates the decisions of the deep neural networks by a linear function of simplified individual image features [24, 25, 26, 23]. In contrast, our interpretable model fits a stochastic non-linear map that relates the true and predicted image labels. The parameters of the map are obtained by solving a convex feasibility problem parameterized by the deep CNN decisions. Finally, deep neural networks have also been modeled by Bayesian inference frameworks using probabilistic graphical methods [27].

To the best of our knowledge, an economics based approach for the post-hoc analysis of deep neural networks has not been explored in literature. However, we note that behavioral economics based interpretable models have been applied to domains outside interpretable machine learning, for example, in online finance platforms for efficient advertising [28, 29], training neural networks [30] and more recently in YouTube to rationalize user commenting behavior [31]. Finally, due to our recent equivalence result [32], our behavioral economics approach to interpretable deep image classification can be related to classical revealed preference methods [9, 10] in microeconomics.

2 Bayesian Revealed preference with Rational Inattention

This section describes the key ideas behind Bayesian revealed preference. Despite the abstract formulation below, the reader should keep in mind the deep learning context. In Sec. 3, we will use Bayesian revealed preference theory to construct an interpretable deep learning representation by showing that deep CNNs are equivalent to rationally inattentive Bayesian utility maximizers.

2.1 Utility Maximization with Rational Inattention (UMRI)

Bayesian revealed preference aims to determine if the decisions of a Bayesian agent are consistent with expected utility maximization subject to a rational inattention sensing cost. We start by describing the utility maximization model with rational inattention (henceforth called UMRI) for a collection of Bayesian decision makers/agents.

Abstractly, the UMRI model is parameterized by the tuple

| (1) |

With respect to the abstract parametrization of the UMRI model for a collection of Bayesian agents, the following elements constitute the tuple defined in (1).

Agents: indexes the finite set of Bayesian agents.

State: is the finite set of ground truths with prior probability distribution . With respect to our image classification context, is the set of image classes in the CIFAR-10 dataset and is the empirical probability distribution of the image classes in the test dataset of CIFAR-10.

Observation and attention strategy: Agent chooses attention strategy ,

a stochastic mapping from to a finite set of observations . Given state and attention strategy , the agent samples observation with probability .

The agent then computes the posterior probability distribution via Bayes formula as

| (2) |

The observation and attention strategy are latent variables that abstractly represent the learned feature representations in the deep image classification context. Bayesian revealed preference theory tests their existence via the convex feasibility test in Theorem 1 below.

Action: Agent chooses action from a finite set of actions after computing the posterior probability distribution . In the image classification context, is the image class predicted by the neural network, hence .

Utility function: Agent has a utility function , and aims to maximize its expected value, with the expectation taken wrt the random state and random observation . A key feature in our approach is to show that the utility function rationalizes the decisions of the deep CNNs (made precise in Definition 1).

Information Acquisition Cost: The information acquisition cost depends on attention strategy and prior pmf . It is the sensing cost the agent incurs in order to

estimate the underlying state (2). In the context of machine learning, abstractly captures the ‘learning’ cost incurred during training of the deep neural networks. In rational inattention theory from behavioral economics,

a higher information acquisition cost is incurred for more accurate attention strategies (equivalently, more accurate state estimates (2) given observation ).

We refer the reader to the influential work of [1, 2].

Each Bayesian agent , aims to maximize its expected utility while minimizing its cost of information acquisition. Hence, the action given observation , and attention strategy are chosen as follows:

Definition 1 (Rationally Inattentive Utility Maximization).

Consider a collection of Bayesian agents parameterized by in (1) under the UMRI model. Then,

(a) Expected Utility Maximization:

Given posterior probability distribution , every agent chooses action that maximizes its expected utility. That is, with denoting mathematical expectation, the action satisfies

| (3) |

(b) Attention Strategy Rationality: For agent , the attention strategy optimally trades off between maximizing the expected utility and minimizing the information acquisition cost.

| (4) |

Eq. 3,4 in Definition 1 constitute a nested optimization problem. The lower-level optimization task is to choose the the ‘best’ action for any observation based on the computed posterior belief of the state. The upper-level optimization task is to sample the observations optimally by choosing the ‘best’ attention strategy.

Remark. The multiple Bayesian agents in have the same state space , observation space , action space , prior and cost of information acquisition , but only differ in their utility functions. Bayesian revealed preference theory relies on this crucial constraint on the optimization variables in (3), (4) for detecting optimal behavior in a finite number of agents.

2.2 Bayesian Revealed Preference (BRP) Test for Rationally Inattentive Utility Maximization

Having described the UMRI model (collection of rationally inattentive utility maximizers), we are now ready to state our key result. Theorem 1 below says that the decisions of a collection of Bayesian agents is rationalized by a UMRI tuple if and only if a set of convex inequalities have a feasible solution. These inequalities comprise our Bayesian Revealed Preference (henceforth called BRP) test for rationally inattentive utility maximization.

For notational convenience, the decisions of the Bayesian agents in the UMRI model are compacted into the dataset defined as:

| (5) |

In (5), denotes the prior pmf over the set of states in (1). The variable is the conditional probability that agent takes action given state . characterizes the input-output behavior of the collection of Bayesian agents and serves as the input for BRP feasibility test described below.

Theorem 1 (BRP Test for Rationally Inattentive Utility Maximization [7]).

Given the dataset (5) obtained from a collection of Bayesian agents . Then,

1. Existence: There exists a UMRI tuple (1) that rationalizes dataset if and only if there exists a feasible solution that satisfies the set of convex inequalities

| (6) |

In (6), BRP corresponds to a set of convex (in the variables ) inequalities, stated in Algorithm 1.

2. Reconstruction: Given a feasible solution to , is the Bayesian agent’s utility function in the feasible model tuple . The set of observations , the set of actions in . The feasible cost of information acquisition in is defined in terms of as:

| (7) |

The proof of Theorem 1 is in Appendix A.1. Before launching into a detailed discussion, we stress the “iff” in Theorem 1. Put simply: if the inequalities in (6) are not feasible, then the Bayesian agents that generate the dataset are not rationally inattentive utility maximizers. If (6) has a feasible solution, then there exists a reconstructable family of viable utility functions and information acquisition costs that rationalize 444In terms of interpretable deep learning, of all parameters in the UMRI tuple, we are only interested in the utility functions of the agents and the cost of information acquisition, since the remaining parameters are immediately deduced from the decision dataset .. A key feature of Theorem 1 is that the estimated utilities (and information costs) are set-valued; every utility and cost function in the feasible set explains equally well. The estimated UMRI model parameters are set-valued due to the finite number of Bayesian agents whose decisions constitute the dataset . The estimated parameter set converges to a point if and only if the inequality (6) holds as .

Computational Aspects of BRP Test. Suppose the dataset is obtained from Bayesian agents. Then, BRP comprises

a feasibility test with free variables and

convex inequalities. Thus, the number of free variables and inequalities in the BRP feasibility test scale linearly and quadratically, respectively, with the number of observed Bayesian agents.

| (8) | ||||

| (9) | ||||

2.3 Relating UMRI and BRP test to Interpretable Deep Image Classification

We now discuss how the above BRP test relates to interpretable image classification using deep CNNs. The BRP convex feasibility test in Theorem 1 comprises two sets of inequalities, namely, the NIAS (No-Improving-Action-Switches) (8) and NIAC (No-Improving-Action-Cycles) (9) inequalities (Algorithm 1). NIAS ensures that the agent takes the best action given a posterior pmf. NIAC ensures that every agent chooses the best attention strategy. BRP test checks if there exist utility functions and positive reals that, together with , satisfy the NIAS and NIAC inequalities.

Toy Example with 2 CNNs

The following discussion gives additional insight into our approach. Consider the simplest case involving two trained deep CNNs and ; so in the above notation. Assume and have the same network architecture. Suppose an analyst observes that makes accurate decisions on a rich input image dataset while makes less accurate decisions on the same dataset.

Our UMRI model first abstracts the accuracy of the feature representations of the input image data learned by and via attention strategies and in (4). Second, the information acquisition cost function abstracts the computational resources expended for learning the representations. The rationale is that learning an accurate latent feature representation is costly, and this is abstracted by the information acquisition cost.

Let the training cost incurred by and be and respectively. If the decisions of and can be explained by the UMRI model (and Theorem 1 below will give necessary and sufficient conditions for this), then there exist utility functions and for and , that satisfy:

| (10) |

The above inequality says that CNNs and would be worse off (in an expected utility sense) if they make decisions based on swapping each other’s learned representations. That is, both and learn the ‘best’ feature representation of the input images given their training parameters.

Discussion

(i) Parsimonious Interpretable Representation of deep CNNs. In the deep image classification context, due to the UMRI model’s parsimonious parametrization in (1), the decisions of CNNs can be rationalized by just utility functions and an information acquisition cost function, thus bypassing the need of several million parameters to describe the deep CNNs.

(ii) Identifiability.

The BRP feasibility test requires the dataset to be generated from Bayesian agents. If , then (6) holds trivially since any information acquisition cost satisfies the convex inequalities of BRP. Another intuitive way of motivating a collection of agents for the BRP is as follows. Reconstructing a feasible UMRI model tuple that rationalizes the decisions of the deep CNNs is analogous to fitting a line to a finite number of points. One can fit infinitely many lines through a single point. The task becomes non-trivial if the number of points exceeds . In the Bayesian revealed preference context, the points correspond to the decisions from each Bayesian agent. The slope and intercept of the fitted line, in our case, corresponds to the utility function and cost of information acquisition that rationalize the agent decisions.

(iii) Relative Optimality implies Global Optimality. In the setting involving deep CNNs (agents), the NIAS and NIAC inequalities of BRP test check for relative optimality -

given utility function , does deep CNN performs at least as well as any other observed deep CNN in ? Clearly, testing for relative optimality is weaker than testing for global optimality (4) which ideally requires access to decisions from an infinite number of deep CNNs. Setting the cost of information acquisition as a free variable bridges this gap.

The proof of Theorem 1 shows that if the deep CNN decisions satisfy relative optimality, then there exists a cost of information acquisition such that the decisions are globally optimal. That is, Theorem 1 ensures relative optimality is sufficient for global optimality.

(iii) Generalization of [7]. Theorem 1 generalizes [7, Theorem 1] in two ways. (1) In [7], the utilities in UMRI model tuple are assumed known, and only the information acquisition costs are estimated, whereas Theorem 1 estimates both parameters. (2) The expression for the reconstructed model tuple is novel; the discussion in [7] is only confined to the existence of such a tuple.

(vi) Single Utility UMRI (S-UMRI ). In Appendix A.2, we propose a sparse version of UMRI , namely, the S-UMRI model in (21). The key distinction of this model is that all agents have the same utility function and thus can be represented with substantially fewer parameters. In complete analogy to Theorem 1, we outline a decision test in Theorem 3 that states necessary and sufficient conditions for agent decisions to be consistent with the S-UMRI model of rationally inattentive utility maximization. We discuss this sparse parametrization in the appendix so as not to interrupt the flow of the main text.

(vii) Degenerate solution to BRP and S-BRP tests. The degenerate utility function of all zeros and cost of information acquisition trivially satisfy the BRP and S-BRP tests and lie at the boundary of the feasible set of parameters.

Summary

This section formulated an economics-based decision-making model. Since this model may not be familiar to a machine learning reader, we summarize the main ideas. We introduced the rationally inattentive utility maximization model, namely, the UMRI model for a collection of Bayesian agents (decision makers). Our main result Theorem 1 outlines a decision test BRP for rationally inattentive utility maximization given decisions from a collection of agents. This BRP test comprises a set of convex inequalities that have a feasible solution if and only if the collection of agents are rationally inattentive utility maximizers. Theorem 1 also provides an explicit reconstruction of the feasible UMRI model parameters that rationalize input agent decisions. The set of feasible utility functions and information acquisition costs thus parsimoniously explain the decisions generated by the Bayesian agents. In Appendix A.2, we propose a single utility version of the UMRI model with fewer parameters. Due to fewer parameters, the decision test for this sparse model, given in Theorem 3, is computationally less expensive yet more restrictive than the BRP test for rationality in Theorem 1.

The rest of the paper focuses on computing interpretable UMRI models that rationalize deep CNN decisions. We will investigate through extensive experiments how well the UMRI fits the deep CNN decisions via robustness tests. We will also investigate how well the computed interpretable models, namely, UMRI and S-UMRI , predict the deep CNNs’ decisions when the training parameters are varied.

3 Bayesian Revealed Preference explains CIFAR-10 Image Classification by Deep CNNs

The experimental results in this section are divided into two parts: First, we show that the deep CNNs decisions pass the BRP and S-BRP tests formulated in Theorems 1 and 3 by a large margin. This implies that the rationally inattentive utility maximization model is a robust fit to the deep CNN decisions.

Our second result demonstrates an application of the reconstructed interpretable model. Training datasets are often noisy. We show that in such a noisy setting, the reconstructed interpretable model from Theorem 1 can accurately predict (with accuracy exceeding 94%) the image classification performance of the deep CNNs. This bypasses the need to train the deep CNN for various noise variances that corrupt the training dataset.

Experimental Setup: Deep CNN Architectures, Training Parameters and Construction of Dataset

Image Dataset. In our experiments, we trained and validated the deep CNNs using the CIFAR-10 benchmark image dataset [8]. This public dataset consists of x colour images in distinct classes (for example, airplane, automobile, ship, cat, dog etc.), with images per class. There are training images and test images. We will use the terms image classes and image labels interchangeably.555Our experiments are confined to the CIFAR-10 dataset for clarity of exposition. Our approach to interpretable deep learning can be easily extended to richer benchmark image datasets like ImageNet and CIFAR-100 (that comprise over a 100 image labels).

Network Architecture and Training Parameters. In this paper, we use well-known deep CNN architectures for our experiments. 1. LeNet [33], 2. AlexNet [34] 3. VGG16 [35] 4. ResNet-50 [18] 5. Network-in-Network (NiN) [36] The deep CNNs are trained and validated on the CIFAR-10 image dataset, using learning rate schedules, namely, L.R. 1, L.R. 2 and L.R. 3. All schedules use the RMSprop optimizer [37] with the decay parameter and maximum training epochs (full passes of the training dataset) set to and , respectively, and initial step size set to . The step size is halved every epochs, respectively, for L.R. 1, 2 and 3.

Relation to Bayesian revealed preference. We now relate the deep CNN setup to the Bayesian revealed preference framework in Sec. 2. For each CNN architecture, we use the decisions of CNNs, i.e. , Bayesian agents in the terminology of Sec. 2, for our BRP and S-BRP decision tests. The CNN decisions from CNNs on the test image dataset of CIFAR-10 are aggregated into dataset (5). The results of the decision tests are discussed below. In the deep image classification context, the parameter in (5) is the probability that the deep CNN classifies an image from category into category in the CIFAR-10 test image dataset. The prior in (5) is the empirical pmf over the set of image categories in the CIFAR-10 test dataset. Constructing from raw CNN decisions is discussed in Appendix A.3.

3.1 BRP and S-BRP Tests for deep CNN datasets. Results and Insights

| Network Architecture | Learning Rate | ||

|---|---|---|---|

| LeNet | L. R. 1 | ||

| L. R. 2 | |||

| L. R. 3 | |||

| AlexNet | L. R. 1 | ||

| L. R. 2 | |||

| L. R. 3 | |||

| VGG16 | L. R. 1 | ||

| L. R. 2 | |||

| L. R. 3 | |||

| ResNet-50 | L. R. 1 | ||

| L. R. 2 | |||

| L. R. 3 | |||

| Network-In-Network (NiN) | L. R. 1 | ||

| L. R. 2 | |||

| L. R. 3 |

A. Robustness Results on Deep CNN datasets

Our first key result is that image classifications of all deep CNN architectures listed in Sec. 3 pass the BRP and S-BRP tests by a large margin. The results are tabulated in Table I. The robustness values and in Table I are defined in Definition 2 below which formalizes the notion of margin for the decision tests.

| Network Architecture | Learning Rate (L.R.) | airplane | auto | bird | cat | deer | dog | frog | horse | ship | truck |

|---|---|---|---|---|---|---|---|---|---|---|---|

| LeNet | L.R. 1 | ||||||||||

| L.R. 2 | |||||||||||

| L.R. 3 | |||||||||||

| AlexNet | L.R. 1 | ||||||||||

| L.R. 2 | |||||||||||

| L.R. 3 | |||||||||||

| VGG16 | L.R. 1 | ||||||||||

| L.R. 2 | |||||||||||

| L.R. 3 | |||||||||||

| ResNet-50 | L.R. 1 | ||||||||||

| L.R. 2 | |||||||||||

| L.R. 3 | |||||||||||

| Network-in-Network | L.R. 1 | ||||||||||

| L.R. 2 | |||||||||||

| L.R. 3 |

Definition 2 (Robustness (Goodness-of-fit) of BRP and S-BRP Tests.).

Given dataset (5) aggregated from a collection of Bayesian agents, and measure the largest perturbation so that passes the BRP and S-BRP decision tests:

| (11) | |||

| (12) |

In Definition 2, robustness values and measure, respectively, the smallest perturbation needed for to fail the BRP and S-BRP decisions tests. Both and are normalized wrt the row-wise norm of the feasible utility functions. Higher robustness values imply a better fit of the UMRI , S-UMRI models to the decision dataset 666The robustness value for the non-informative dataset of uniformly distributed pmfs is . Hence, the robustness value measures the informativeness of the attention strategies in relative to the uniform probability distribution..

Discussion and Insights. Robustness Results of Table I

(i) Deep CNN dataset: The deep CNN datasets used for the robustness tests (11), (12) comprise decisions of deep CNNs for every network architecture, where CNN was trained for training epochs, .

(ii) Comparison between and values for deep CNN datasets: The average value of (12) over all learning rate schedules and network architectures was found to be . In contrast, the average value of (11) was found to be , almost times the average value of . This result shows that the UMRI model fits deep CNN decisions substantially better than the S-UMRI model. This result is expected since S-UMRI is parameterized using much fewer variables compared to the UMRI and hence, S-BRP test is more restrictive than BRP .

(iii) Sensitivity of to Network Architecture: The average value of is for the LeNet and AlexNet architectures, which is approximately times the the average value of for the VGG16, ResNet-50 and NiN architectures which is . The variation of with network architecture is negligible compared to . This shows the robustness test for UMRI model is more sensitive to network architecture compared to that for the S-UMRI model.

(iv) Computational aspects of and . The computation time for is almost times that for . This is expected since the UMRI model is parameterized by utility functions compared to a single utility function in S-UMRI .

B. Sparsity-enhanced Interpretable Model

Our next task is to determine the sparsest possible interpretable model that satisfies the decision tests BRP and S-BRP. The motivation is three fold:

-

1.

The sparsest interpretable model explains the deep CNN decisions using the fewest number of parameters.

-

2.

The sparsest interpretable model induces a useful preference ordering amongst the set of hypotheses (image labels) considered by the CNN; for example, how much additional priority is allocated to the classification of a cat as a cat compared to a cat as a dog. In classical deep learning, this preference ordering is not explicitly generated.

-

3.

Third, the sparsest solution is a point valued estimate. Recall the BRP and S-BRP decision tests yield a set-valued estimate of feasible utility functions and cost of information acquisition that explain the deep CNN datasets. While every element in the set explains the dataset equally well, it is useful to have a single representative point.

Theorem 2 below computes the sparsest utility function out of all feasible utility functions.

Theorem 2 (Sparsity Enhanced BRP and S-BRP Tests for Deep CNN datasets).

Given dataset (5) from a collection of Bayesian agents. The sparsest solutions to the BRP and S-BRP tests minimize the sum of row-wise norm of the feasible utility functions of the agents that generate .

| (13) |

where denotes the row-wise norm.

Results and Discussion. Sparsity Test for deep CNN datasets

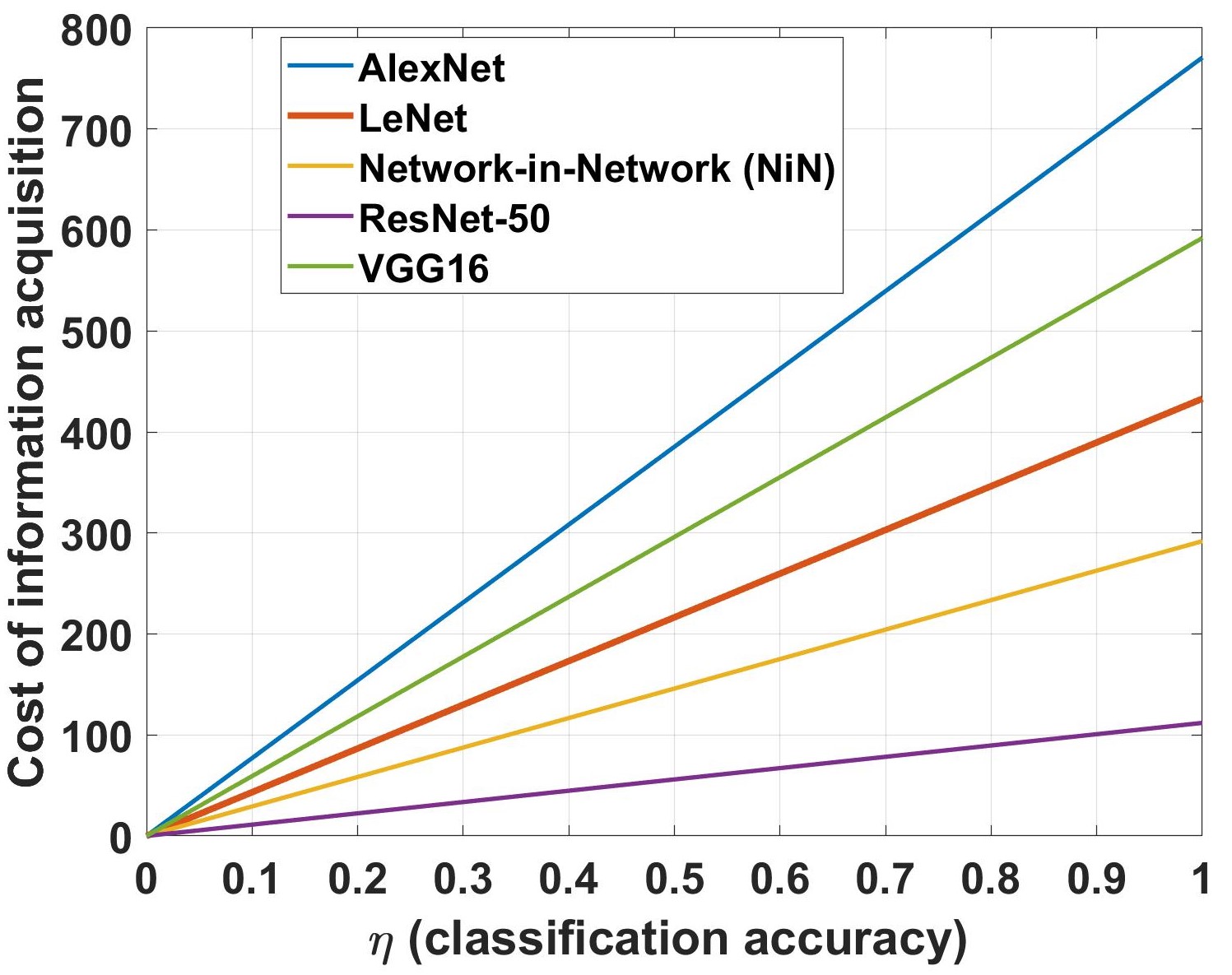

The sparsest utility function from the S-BRP test are tabulated in Table II for all deep CNN architectures. The corresponding information acquisition cost for all architectures averaged over learning rates are shown in Fig. 2. Together, the sparsest utility and information cost constitute the sparsest S-UMRI interpretable model777For brevity, we have only included the sparsity results for the S-UMRI model. The sparsest utility functions of the UMRI model that explains deep CNN decisions are included in our public GitHub repository that contains all test results and codes. for the deep CNN decisions.

(i) The sparsest S-UMRI model is comprised of variables.

(ii) Preference ordering induced from sparsest utility. The sparsest utility function for the S-UMRI model induces a useful preference ordering among the predicted image classes. That is, they measure how the deep CNN’s priority for accurate classification varies across image classes.

For instance, consider the VGG16 architecture trained using learning rate schedule . Of all image categories, the maximum utility is observed for trucks () and the minimum for ships (). This shows the VGG16 architecture prioritizes classifying trucks correctly about times more than classifying ships.

(ii) Penalty for learning image features accurately. The computed information acquisition costs in Fig. 2 can be understood as the training cost the CNN incurs to learn latent image features accurately. The interpretable model cannot explain the variation in CNN classification accuracy versus variation in training parameters without an information acquisition cost.

From Fig. 2, we can conclude that learning accurate image features is the most and least costly, respectively, for the AlexNet and ResNet architectures, respectively.

| Network Architecture | airplane | auto | bird | cat | deer | dog | frog | horse | ship | truck |

| LeNet | ||||||||||

| AlexNet | ||||||||||

| VGG16 | ||||||||||

| ResNet-50 | ||||||||||

| Network-in-Network |

3.2 Predicting deep CNN classification accuracy using our Interpretable Models

Training datasets are often noisy; for example, [38] considers noisy datasets for hand-written character recognition. We now exploit the proposed interpretable model to predict how the deep CNN will perform with a noisy training dataset without actually implementing the deep CNN.

Our predictive procedure is as follows. We first train the CNNs on noisy datasets that are generated by adding simulated Gaussian noise with noise variances chosen from a finite set. 888Injecting artificial noise in training datasets is also used in variational autoencoders for robust feature learning [39, 40]. Then given the CNN decisions, we compute our interpretable model over this finite set of noise variances. Finally, to predict how the CNN will perform for a noise variance not in the set, we interpolate the utility function of the interpretable model at this noise variance. Then given the interpolated utility function and information acquisition cost from our interpretable model, the predicted classification performance is computed by solving convex optimization problem (4). The above procedure is formalized in Algorithm 2. Hence, our interpetable model serves as a computationally efficient method for predicting the performance of a CNN without implementing the CNN. The interpretable model can be viewed as a low dimension projection of the high-dimension CNN with predictive accuracy exceeding 94%.

Remark. An alternative procedure is to directly interpolate the performance over the space of CNN weights (several hundreds of thousands). Due to the high dimensionality, this is an intractable interpolation. In comparison, interpolation over the utility functions in our interpretable model is over a few hundred variables.

Prediction Results of Algorithm 2 on Deep CNN Performance

Table III displays the prediction errors (difference between the true and predicted classification accuracy) for the deep CNNs for all architectures and all image classes in CIFAR-10. For a fixed CNN architecture and noise variance , the prediction error for image class is defined as:

| (14) |

In (14), is the predicted CNN performance generated from Algorithm 2 and is the true CNN performance obtained by implementing the CNN. Recall that is the probability that the CNN correctly classifies an image belonging to class .

| (15) |

Discussion and Insights

(i) Our interpretable model can predict CNN classification performance with accuracy exceeding 94% (see below).

(ii) The interpretable model (utility functions and information acquisition cost) for our predictive procedure (Algorithm 2) is evaluated on the set of noise variances . The predictive procedure of Algorithm 2 is applied on the the set of noise variances given by . Table III displays the prediction errors averaged over all .

(iii) From Table III, the prediction error averaged over all image classes for the CNN architectures are:

-

1.

LeNet -

-

2.

AlexNet -

-

3.

VGG16 -

-

4.

ResNet-50 -

-

5.

NiN-

So the least accuracy is 95.9%, and highest accuracy is 97.3%.

(iv) The prediction error averaged over the network architectures was observed to be minimum

for image class ‘cat’ (98.1%) and maximum for image class ‘deer’ (95.7%) over all image classes.

(iv) Statistical Similarity between Deep CNNs and Interpretable Model. We computed the Kullback-Leibler (KL) divergence between the true and predicted classification performances and . Recall is computed from the interpretable model via Algorithm 2 and is obtained from the CNN.

The KL divergence values for the 5 CNN architectures are:

-

1.

LeNet -

-

2.

AlexNet -

-

3.

VGG16 -

-

4.

Resnet-50 -

-

5.

NiN - .

Thus the decisions made by the deep CNNs are statistically similar to decisions generated by our interpretable model.

4 Conclusions and Extensions

This paper has developed an interpretable model for deep image classification using micro- and behavioral economics theory. By extensive analysis of the decisions of CNNs over popular CNN architectures, we showed that deep CNNs can be explained by rationally inattentive Bayesian utility maximization.

Our main results were the following:

1. Using the theory of Bayesian revealed preference, Theorem 1 gave a necessary and sufficient condition for the actions of a collection of decision makers to be consistent with rationally inattentive Bayesian utility maximization. We showed that deep CNNs operating on the CIFAR-10 dataset satisfy these necessary and sufficient conditions.

2. Next we studied the robustness margin by which the deep CNNs satisfy Theorem 1; we found that the margins were sufficiently large implying robustness of the results. Our robustness results are summarized in Table I.

3. In Theorem 2, we constructed the sparsest interpretable model from the feasible set generated using Theorem 1. The sparsest interpretable model explains deep CNN decisions using the least number of parameters. The sparsest interpretable model introduces a useful preference ordering amongst

the set of hypotheses (image labels) considered by the deep

neural network; for example, how much additional priority

is allocated to the classification of a cat as a cat compared

to a cat as a dog. In classical deep learning, this preference

ordering is not explicitly generated

4. Finally, we showed that our interpretable model can predict CNN performance with accuracy exceeding 94%, and the decisions generated by our interpretable model are statistically similar to that of a deep CNN.

At a more conceptual level, our results suggest that deep CNNs for image classification are equivalent to an economics-based constrained Bayesian decision system (used in micro-economics to model human decision making).

Extensions. An immediate extension of this work is to construct appropriately designed image features to replace the image class label as the state. This would result in a richer descriptive model of the CNN due to more degrees of freedom in the utility function.

Our proposed interpretable model generates a concave utility function by design. This is an important feature of the revealed preference framework; even though the actual deep learner’s utility may not be convex. To quote Varian [11]: ”If data can be rationalized by any non-trivial utility function, then it can be rationalized by a nice utility function. Violations of concavity cannot be detected with only a finite number of observations.” A more speculative extension is to investigate the asymptotic behavior of the BRP and S-BRP decision tests for rationally inattentive utility maximization-do the tests pass when the number of deep CNNs tend to infinity? Recent results [41] show that an infinite dataset can at best be rationalized by a quasi-concave utility function.

Reproducibility: The computer programs and deep image classification datasets needed to reproduce all the results in this paper can be obtained from the public GitHub repository https://github.com/KunalP117/DL_RI.

Acknowledgement

This research was supported in part by the Army Research Office under grants W911NF-21-1-0093 and W911NF-19-1-0365, and the National Science Foundation under grant CCF-2112457.

References

- [1] C. Sims. Implications of rational inattention. Journal of monetary Economics, 50(3):665–690, 2003.

- [2] C. Sims. Rational inattention and monetary economics. Handbook of Monetary Economics, 3:155–181, 2010.

- [3] M. Woodford. Inattentive valuation and reference-dependent choice. 2012.

- [4] F. Matějka and A. McKay. Rational inattention to discrete choices: A new foundation for the multinomial logit model. American Economic Review, 105(1):272–98, 2015.

- [5] H. De Oliveira, T. Denti, M. Mihm, and K. Ozbek. Rationally inattentive preferences and hidden information costs. Theoretical Economics, 12(2):621–654, 2017.

- [6] A. Caplin and D. Martin. A testable theory of imperfect perception. The Economic Journal, 125(582):184–202, 2015.

- [7] A. Caplin and M. Dean. Revealed preference, rational inattention, and costly information acquisition. The American Economic Review, 105(7):2183–2203, 2015.

- [8] A. Krizhevsky, G. Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- [9] S. N. Afriat. The construction of utility functions from expenditure data. International economic review, 8(1):67–77, 1967.

- [10] W. E. Diewert. Afriat and revealed preference theory. The Review of Economic Studies, 40(3):419–425, 1973.

- [11] H. R. Varian. The nonparametric approach to demand analysis. Econometrica: Journal of the Econometric Society, pages 945–973, 1982.

- [12] S. Chakraborty, R. Tomsett, R. Raghavendra, D. Harborne, M. Alzantot, F. Cerutti, M. Srivastava, A. Preece, S. Julier, R. M. Rao, et al. Interpretability of deep learning models: a survey of results. In 2017 IEEE smartworld, ubiquitous intelligence & computing, advanced & trusted computed, scalable computing & communications, cloud & big data computing, Internet of people and smart city innovation (smartworld/SCALCOM/UIC/ATC/CBDcom/IOP/SCI), pages 1–6. IEEE, 2017.

- [13] F. Doshi-Velez and B. Kim. Towards a rigorous science of interpretable machine learning. arXiv preprint arXiv:1702.08608, 2017.

- [14] R. Guidotti, A. Monreale, S. Ruggieri, F. Turini, F. Giannotti, and D. Pedreschi. A survey of methods for explaining black box models. ACM Computing Surveys (CSUR), 51(5):1–42, 2018.

- [15] W. J. Murdoch, C. Singh, K. Kumbier, R. Abbasi-Asl, and B. Yu. Interpretable machine learning: definitions, methods, and applications. arXiv preprint arXiv:1901.04592, 2019.

- [16] D. Ciregan, U. Meier, and J. Schmidhuber. Multi-column deep neural networks for image classification. In 2012 IEEE conference on computer vision and pattern recognition, pages 3642–3649. IEEE, 2012.

- [17] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, et al. ImageNet large scale visual recognition challenge. International journal of computer vision, 115(3):211–252, 2015.

- [18] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [19] K. Simonyan, A. Vedaldi, and A. Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034, 2013.

- [20] A. Nguyen, A. Dosovitskiy, J. Yosinski, T. Brox, and J. Clune. Synthesizing the preferred inputs for neurons in neural networks via deep generator networks. arXiv preprint arXiv:1605.09304, 2016.

- [21] P. Hase, C. Chen, O. Li, and C. Rudin. Interpretable image recognition with hierarchical prototypes. In Proceedings of the AAAI Conference on Human Computation and Crowdsourcing, volume 7-1, pages 32–40, 2019.

- [22] T. Lei, R. Barzilay, and T. Jaakkola. Rationalizing neural predictions. arXiv preprint arXiv:1606.04155, 2016.

- [23] S. Lundberg and S.-I. Lee. A unified approach to interpreting model predictions. arXiv preprint arXiv:1705.07874, 2017.

- [24] S. Bach, A. Binder, G. Montavon, F. Klauschen, K.-R. Müller, and W. Samek. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS one, 10(7):e0130140, 2015.

- [25] M. T. Ribeiro, S. Singh, and C. Guestrin. Model-agnostic interpretability of machine learning. arXiv preprint arXiv:1606.05386, 2016.

- [26] A. Shrikumar, P. Greenside, A. Shcherbina, and A. Kundaje. Not just a black box: Learning important features through propagating activation differences. arXiv preprint arXiv:1605.01713, 2016.

- [27] H. Wang and D.-Y. Yeung. Towards Bayesian deep learning: A framework and some existing methods. IEEE Transactions on Knowledge and Data Engineering, 28(12):3395–3408, 2016.

- [28] P. Milgrom. Good news and bad news: Representation theorems and applications. Bell Journal of Economics, 12(2):380–391, 1981.

- [29] L. Huang and H. Liu. Rational inattention and portfolio selection. The Journal of Finance, 62(4):1999–2040, 2007.

- [30] S. E. Mirsadeghi, A. Royat, and H. Rezatofighi. Unsupervised image segmentation by mutual information maximization and adversarial regularization. IEEE Robotics and Automation Letters, 2021.

- [31] W. Hoiles, V. Krishnamurthy, and K. Pattanayak. Rationally Inattentive Inverse Reinforcement Learning Explains YouTube commenting behavior. The Journal of Machine Learning Research, 21(170):1–39, 2020.

- [32] K. Pattanayak and V. Krishnamurthy. Unifying classical and bayesian revealed preference, 2021.

- [33] Y. LeCun, B. Boser, J. Denker, D. Henderson, R. Howard, W. Hubbard, and L. Jackel. Handwritten digit recognition with a back-propagation network. Advances in neural information processing systems, 2, 1989.

- [34] A. Krizhevsky, I. Sutskever, and G. E. Hinton. Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems, 25:1097–1105, 2012.

- [35] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- [36] M. Lin, Q. Chen, and S. Yan. Network in network. arXiv preprint arXiv:1312.4400, 2013.

- [37] G. Hinton, N. Srivastava, and K. Swersky. Neural networks for machine learning lecture 6a overview of mini-batch gradient descent. Cited on, 14(8), 2012.

- [38] R. Anand, T. Shanthi, R. Sabeenian, and S. Veni. Real time noisy dataset implementation of optical character identification using cnn. International Journal of Intelligent Enterprise, 7(1-3):67–80, 2020.

- [39] Y. Bengio. Learning deep architectures for AI. Now Publishers Inc, 2009.

- [40] P. Vincent, H. Larochelle, Y. Bengio, and P.-A. Manzagol. Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th international conference on Machine learning, pages 1096–1103, 2008.

- [41] P. J. Reny. A characterization of rationalizable consumer behavior. Econometrica, 83(1):175–192, 2015.

- [42] D. Blackwell. Equivalent comparisons of experiments. The annals of mathematical statistics, pages 265–272, 1953.

Appendix

A.1 Proof of Theorem 1

Proof of necessity of NIAS and NIAC:

-

1.

NIAS (8): For agent , define the subset so that for any observation , given posterior pmf , the optimal choice of action is (3). We define the revealed posterior pmf given action as . The revealed posterior pmf is a stochastically garbled version of the actual posterior pmf , that is,

(16) Since the optimal action is for all , (3) implies:

This is precisely the NIAS inequality (8).

-

2.

NIAC (9): Let , where denotes the information acquisition cost of the collection of agents . Also, let denote the expected utility of the agent given attention strategy (first term in RHS of (4)). Here, the expectation is taken wrt both the state and observation . It can be verified that is convex in the first argument. Finally, for the agent, we define the revealed attention strategy over the set of actions as

where the variable is obtained from the dataset . Clearly, the revealed attention strategy is a stochastically garbled version of the true attention strategy since

(17) From Blackwell dominance [42] and the convexity of the expected utility functional , it follows that:

(18) when Blackwell dominates . The above relationship holds with equality if (this is due to NIAS (8)). We now turn to condition (4) for optimality of attention strategy. The following inequalities hold for any pair of agents :

(19) This is precisely the NIAC inequality (9).

Proof for sufficiency of NIAS and NIAC: Let denote a feasible solution to the NIAS and NIAC inequalities of Theorem 1. To prove sufficiency, we construct an UMRI tuple as a function of dataset and the feasible solution that satisfies the optimality conditions (3),(4) of Definition 1.

Consider the following UMRI model tuple:

| (20) |

In (20), is a convex cost since it is a point-wise maximum of monotone convex functions. Further, since NIAC is satisfied, (20) implies . It only remains to show that inequalities (3) and (4) in Definition 1 are satisfied for all agents in .

-

1.

NIAS implies (3) holds. This is straightforward to show since the observation and action sets are identical.

- 2.

A.2 S-UMRI (Sparse UMRI ) Model for Rationally Inattentive Bayesian Utility Maximization

In Sec. 2.1, we outlined the UMRI model for rationally inattentive utility maximization of Bayesian agents parameterized by utility functions and a cost of information acquisition. This section proposes a sparse version of the UMRI model, namely, the S-UMRI model that is parameterized by a single utility function that rationalizes the decisions of Bayesian agents. Abstractly, the S-UMRI model is described by the tuple

| (21) |

All parameters in (21) are identical to that in (1) except for the additional parameter . can be interpreted as the sensitivity to information acquisition of the agent. We discuss the significance of in more detail below. In complete analogy to Definition 1, Definition 3 below specifies the optimal action and attention strategy policy of the Bayesian agents in .

Definition 3 (Rationally Inattentive Utility Maximization for S-UMRI ).

Consider a collection of Bayesian agents parameterized by in (21) under the S-UMRI model. Then,

(a) Expected Utility Maximization:

Given posterior pmf , agent chooses action that maximizes its expected utility.

| (22) |

(b) Attention Strategy Rationality: Agent chooses attention strategy that optimally trades off between utility maximization and cost minimization.

| (23) |

Remarks. 1. Role of . In (23), is the differentiating parameter across agents. Even though all agents have the same utility function, different values of result in different optimal strategies (23).

2. Sparsity of S-UMRI . The UMRI model tuple for Bayesian agents is parameterized using variables. In comparison, the S-UMRI tuple is parameterized via variables. The difference in variables for parametrization is linear in .

Finally, in complete analogy to Theorem 1, we now state Theorem 3 that states necessary and sufficient conditions for the decisions of a collection of Bayesian agents to be rationalized by the S-UMRI model.

Theorem 3 (S-BRP Test for Rationally Inattentive Utility Maximization).

Given the dataset (5) obtained from a collection of Bayesian agents . Then,

1. Existence: There exists a S-UMRI tuple (1) that rationalizes dataset if and only if there exists a feasible solution that satisfies the set of inequalities

| (24) |

In (6), S-BRP corresponds to a set of inequalities stated in Algorithm 3 below. The set-valued estimate of that rationalizes is the set of all feasible solutions to (6).

2. Reconstruction: Given a feasible solution to , is the Bayesian agent’s utility function, for all . The set of observations , the set of actions in . The feasible cost of information acquisition in is defined in terms of the feasible variables as:

| (25) |

The proof of Theorem 3 closely follows the proof of Theorem 1 and hence, omitted. In comparison to the BRP test of Theorem 1, the S-BRP test has the same number of inequalities but fewer decision variables. Hence, the set of feasible parameters generated from Algorithm 3 is smaller compared to Algorithm 1.

A.3 Construction of Deep CNN Dataset

We now explain how the decisions of the deep CNNs are incorporated into our main theorems Theorems 1 and 3. Suppose deep CNNs indexed by with different training parameters are trained on the CIFAR-10 dataset. For every trained deep CNN , given test image from CIFAR-10 test dataset with image class , let the vector denote the corresponding softmax output of the deep CNN. The vector is a -dimensional probability vector where is the probability that deep CNN classifies test image into image class .

The decisions of all deep CNNs on the CIFAR-10 test dataset are aggregated into dataset for compatibility with Theorems 1 and 3 as follows:

| (26) |

Here is the empirical probability that the image class of a test image in the CIFAR-10 test dataset is . Since the output of the CNN is a probability vector, we compute for the CNN by averaging the component of the output over all test images in image class . Finally, is the number of test images in the CIFAR-10 test dataset, and the set of true and predicted image classes are the same, i.e., . Although implicit in the above description, our Bayesian revealed preference approach to interpretable deep image classification assumes the deep CNN’s (agent’s) ground truth is the true image label, and its decision is the predicted image label.