Rawlsian Fairness in Online Bipartite Matching: Two-Sided, Group, and Individual

Abstract

Online bipartite-matching platforms are ubiquitous and find applications in important areas such as crowdsourcing and ridesharing. In the most general form, the platform consists of three entities: two sides to be matched and a platform operator that decides the matching. The design of algorithms for such platforms has traditionally focused on the operator’s (expected) profit. Since fairness has become an important consideration that was ignored in the existing algorithms, a collection of online matching algorithms has been developed that gives a fair-treatment guarantee for one side of the market at the expense of a drop in the operator’s profit. In this paper, we generalize the existing work to offer fair-treatment guarantees to both sides of the market simultaneously, at a calculated worst-case drop in the operator’s profit. We consider group and individual Rawlsian fairness criteria. Moreover, our algorithms have theoretical guarantees and have adjustable parameters that can be tuned as desired to balance the trade-off between the utilities of the three sides. We also derive hardness results that give clear upper bounds on the performance of any algorithm.

1 Introduction

Online bipartite matching has been used to model many important applications such as crowdsourcing (Ho and Vaughan 2012; Tong et al. 2016; Dickerson et al. 2019b), rideshare (Lowalekar, Varakantham, and Jaillet 2018; Dickerson et al. 2021; Ma, Xu, and Xu 2021), and online ad allocation (Goel and Mehta 2008; Mehta 2013). In the most general version of the problem, there are three interacting entities: two sides of the market to be matched and a platform operator which assigns the matches. For example, in rideshare, riders on one side of the market submit requests, drivers on the other side of the market can take requests, and a platform operator such as Uber or Lyft matches the riders’ requests to one or more available drivers. In the case of crowdsourcing, organizations offer tasks, workers look for tasks to complete, and a platform operator such as Amazon Mechanical Turk (MTurk) or Upwork matches tasks to workers.

Online bipartite matching algorithms are often designed to optimize a performance measure—usually, maximizing overall profit for the platform operator or a proxy of that objective. However, fairness considerations were largely ignored. This is troubling especially given that recent reports have indicated that different demographic groups may not receive similar treatment. For example, in rideshare platforms once the platform assigns a driver to a rider’s request, both the rider and the driver have the option of rejecting the assignment and it has been observed that membership in a demographic group may cause adverse treatment in the form of higher rejection. Indeed, (Cook 2018; White 2016; Wirtschafter 2019) report that drivers could reject riders based on attributes such as gender, race, or disability. Conversely, (Rosenblat et al. 2016) reports that drivers are likely to receive less favorable ratings if they belong to certain demographic groups. A similar phenomenon exists in crowdsourcing (Galperin and Greppi 2017). Moreover, even in the absence of such evidence of discrimination, as algorithms become more prevalent in making decisions that directly affect the welfare of individuals (Barocas, Hardt, and Narayanan 2019; Dwork et al. 2012), it becomes important to guarantee a standard of fairness. Also, while much of our discussion focuses on the for-profit setting for concreteness, similar fairness issues hold in not-for-profit scenarios such as the fair matching of individuals with health-care facilities, e.g., in the time of a pandemic.

In response, a recent line of research has been concerned with the issue of designing fair algorithms for online bipartite matching. (Lesmana, Zhang, and Bei 2019; Ma and Xu 2022; Xu and Xu 2020) present algorithms which give a minimum utility guarantee for the drivers at a bounded drop to the operator’s profit. Conversely, (Nanda et al. 2020) give guarantees for both the platform operator and the riders instead. Finally, (Sühr et al. 2019) shows empirical methods that achieve fairness for both the riders and drivers simultaneously but lacks theoretical guarantees and ignores the operator’s profit.

Nevertheless, the existing work has a major drawback in terms of optimality guarantees. Specifically, the two sides being matched along with the platform operator constitute the three main interacting entities in online matching and despite the significant progress in fair online matching none of the previous work considers all three sides simultaneously. In this paper, we derive algorithms with theoretical guarantees for the platform operator’s profit as well as fairness guarantees for the two sides of the market. Unlike the previous work we not only consider the size of the matching but also its quality. Further, we consider two online arrival settings: the KIID and the richer KAD setting (see Section 3 for definitions). We consider both group and individual notions of Rawlsian fairness and interestingly show a reduction from individual fairness to group fairness in the KAD setting. Moreover, we show upper bounds on the optimality guarantees of any algorithm and derive impossibility results that show a conflict between group and individual notions of fairness. Finally, we empirically test our algorithms on a real-world dataset.

2 Related Work

It is worth noting that similar to our work, (Patro et al. 2020) and (Basu et al. 2020) have considered two-sided fairness as well, although in the setting of recommendation systems where a different model is applied—and, critically, a separate objective for the operator’s profit was not considered.

Fairness in bipartite matching has seen significant interest recently. The fairness definition employed has consistently been the well-known Rawlsian fairness (Rawls 1958) (i.e. max-min fairness) or its generalization, Leximin fairness. Like max-min fairness, Leximin fairness maximizes the minimum utility; however, it then proceeds to maximize the second-worst utility, and so on until the list is exhausted. We note that the objective to be maximized (other than the fairness objective) represents operator profit in our setting.
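To make the difference between the two criteria concrete, the following is a minimal sketch in Python. The helper names `maxmin_value` and `leximin_key` and the two example utility vectors are illustrative assumptions and do not come from the cited works. Max-min fairness only looks at the smallest utility, whereas Leximin compares the sorted utility vectors lexicographically.

```python
from typing import Sequence, Tuple


def maxmin_value(utilities: Sequence[float]) -> float:
    """Rawlsian (max-min) score: the utility of the worst-off party."""
    return min(utilities)


def leximin_key(utilities: Sequence[float]) -> Tuple[float, ...]:
    """Leximin key: utilities sorted in increasing order.

    Comparing two keys lexicographically first compares the worst-off
    utility, then the second worst, and so on.
    """
    return tuple(sorted(utilities))


# Two hypothetical outcomes with the same worst-off utility.
outcome_a = [1.0, 2.0, 5.0]
outcome_b = [1.0, 3.0, 3.0]

# Max-min cannot separate them, Leximin prefers outcome_b (second worst 3 > 2).
tie_under_maxmin = maxmin_value(outcome_a) == maxmin_value(outcome_b)
b_preferred_under_leximin = leximin_key(outcome_b) > leximin_key(outcome_a)
```

In this example both outcomes are equally good under max-min fairness, while Leximin breaks the tie in favour of the outcome with the larger second-worst utility.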

The case of offline and unweighted maximum cardinality matching is addressed by (García-Soriano and Bonchi 2020), who give an algorithm with Leximin fairness guarantees for one side of the market (one side of the bipartite graph) and show that this can be achieved without sacrificing the size of the match. Motivated by fairness considerations for drivers in ridesharing, (Lesmana, Zhang, and Bei 2019) considers the problem of offline and weighted matching. Specifically, they show an algorithm with a provable trade-off between the operator’s profit and the minimum utility guaranteed to any vertex on one side of the market.

Recently, (Ma, Xu, and Xu 2020) considered fairness for the online part of the graph through a group notion of fairness. In particular, the utility for a group is added across the different types and is minimized for the group worst off; in rough terms, their notion translates to maximizing the minimum utility accumulated by a group throughout the matching. Their notion of fairness is very similar to the one we consider here. However, (Ma, Xu, and Xu 2020) considers fairness only on one side of the graph and ignores the operator’s profit. Further, only the matching size is considered to measure utility, i.e. edges are unweighted.

A new notion of group fairness in online matching is considered in (Sankar et al. 2021). In rough terms, their group fairness criterion amounts to establishing a quota for each group and ensuring that the matching does not exceed that quota. This notion can be seen as ensuring that the system is not dominated by a specific group and is in some sense an opposite to max-min fairness as the utility is upper bounded instead of being lower bounded. Further, the fairness guarantees considered are one-sided as well.

On the empirical side of fair online matching, (Mattei, Saffidine, and Walsh 2017) and (Lee et al. 2019) give application-specific treatments in the context of deceased-donor organ allocation and food bank provisioning, respectively. More related to our work is that of (Sühr et al. 2019; Zhou, Marecek, and Shorten 2021), which consider the rideshare problem and provide algorithms to achieve fairness for both sides of the graph simultaneously; however, both papers lack theoretical guarantees, and in the case of (Sühr et al. 2019) the operator’s profit is not considered.

3 Online Model & Optimization Objectives

Our model follows that of (Mehta 2013; Feldman et al. 2009; Bansal et al. 2010; Alaei, Hajiaghayi, and Liaghat 2013) and others. We have a bipartite graph where represents the set of static (offline) vertices (workers) and represents the set of online vertex types (job types) which arrive dynamically in each round. The online matching is done over rounds. In a given round , a vertex of type is sampled from with probability with the probability is known beforehand for each type and each round . This arrival setting is referred to as the known adversarial distribution (KAD) setting (Alaei, Hajiaghayi, and Liaghat 2013; Dickerson et al. 2021). When the distribution is stationary, i.e. , we have the arrival setting of the known independent identical distribution (KIID). Accordingly, the expected number of arrivals of type in rounds is , which reduces to in the KIID setting. We assume that for KIID (Bansal et al. 2010). Every vertex () has a group membership, with being the set of all group memberships; for any vertex , we denote its group memberships by (similarly, we have for ). (For a clearer presentation we assume each vertex belongs to one group, although our algorithms apply to the case where a vertex can belong to multiple groups.) Conversely, for a group , () denotes the subset of () with group membership . A vertex () has a set of incident edges () which connect it to vertices in the opposite side of the graph. In a given round, once a vertex (job) arrives, an irrevocable decision has to be made on whether to reject or assign it to a neighbouring vertex (where ) which has not been matched before. Suppose that is assigned to ; then the assignment is not necessarily successful, rather it succeeds with probability . This models the fact that an assignment could fail for some reason such as the worker refusing the assigned job.
Furthermore, each vertex has patience parameter which indicates the number of failed assignments it can tolerate before leaving the system, i.e. if receives failed assignments then it is deleted from the graph. Similarly, a vertex has patience , if a vertex arrives in a given round, then it would tolerate at most many failed assignments in that round before leaving the system.

For a given edge , we let each entity assign its own utility to that edge. In particular, the platform operator assigns a utility of , whereas the offline vertex assigns a utility of , and the online vertex assigns a utility of . This captures entities’ heterogeneous wants. For example, in ridesharing, drivers may desire long trips from nearby riders, whereas the platform operator would not be concerned with the driver’s proximity to the rider, although this may be the only consideration the rider has. Similar motivations exist in crowdsourcing as well. We finally note that most of the details of our model, such as the KIID and KAD arrival settings as well as the vertex patience, follow well-established and practically motivated model choices in online matching; see Appendix (A) for more details.

Letting denote the set of successful matchings made in the rounds, then we consider the following optimization objectives:

• Operator’s Utility (Profit): The operator’s expected profit is simply the expected sums of the profits across the matched edges, this leads to .

• Rawlsian Group Fairness:

  – Offline Side: Denote by the subset of edges in the matching that are incident on . Then our fairness criterion is equal to ; this value equals the minimum average expected utility received by a group in the offline side .

  – Online Side: Similarly, we denote by the subset of edges in the matching that are incident on vertex , and define the fairness criterion to be ; this value equals the minimum average expected utility received throughout the matching by any group in the online side .

• Rawlsian Individual Fairness:

  – Offline Side: The definition here follows from the group fairness definition for the offline side by simply considering that each vertex belongs to its own distinct group. Therefore, the objective is .

  – Online Side: Unlike the offline side, the definition does not follow as straightforwardly. Here we cannot obtain a valid definition by simply assigning each vertex type its own group. Rather, we note that a given individual is actually a given arriving vertex at a given round , accordingly our fairness criterion is the minimum expected utility an individual receives in a given round, i.e. , where is the vertex that arrived in round .
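As a hedged illustration of the offline-side objectives above, the sketch below computes the minimum per-group average utility for a single realized matching. It is only an empirical stand-in: the objectives in the text are defined in expectation, so in practice one would average such values over many runs. The function name `rawlsian_group_fairness`, the input layout, and the example data are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple


def rawlsian_group_fairness(
    matched: Iterable[Tuple[Hashable, float]],
    group_of: Dict[Hashable, Hashable],
) -> float:
    """Minimum, over groups, of the average utility per group member.

    matched:  (offline_vertex, utility) pairs for the successful matches.
    group_of: maps EVERY offline vertex (matched or not) to its group, so
              that unmatched vertices still count in the group average.
    """
    total = defaultdict(float)
    size = defaultdict(int)
    for vertex, group in group_of.items():
        size[group] += 1
        total[group] += 0.0  # make sure groups with no matches appear
    for vertex, utility in matched:
        total[group_of[vertex]] += utility
    return min(total[g] / size[g] for g in size)


# Hypothetical example: three drivers in two groups, two successful matches.
groups = {"u1": "A", "u2": "A", "u3": "B"}
matches = [("u1", 4.0), ("u3", 1.0)]

group_value = rawlsian_group_fairness(matches, groups)
# Individual fairness on the offline side: every vertex is its own group.
individual_value = rawlsian_group_fairness(matches, {u: u for u in groups})
```

Here group A averages 2.0 and group B averages 1.0, so the group objective is 1.0, while the individual objective is 0.0 because `u2` is unmatched. The online side is not covered by this sketch, since its definition depends on arrivals per round.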

4 Main Results

Performance Criterion:

We note that we are in the online setting, therefore our performance criterion is the competitive ratio. Denote by the distribution for the instances of matching problems, then where is the optimal value of the sampled instance . Similarly, for a given algorithm , we define the value of its objective over the distribution by where the expectation is over the randomness of the instance and the algorithm. The competitive ratio is then defined as .
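The ratio described above can be estimated empirically for one fixed input distribution. The sketch below is a minimal illustration under stated assumptions: `empirical_ratio` is a hypothetical helper, the two input lists are objective values collected from sampled instances, and the optimal value may in practice be replaced by an upper bound on it. It computes a ratio of averages rather than an average of per-instance ratios, and it does not capture the worst case over input distributions.

```python
from statistics import mean
from typing import Sequence


def empirical_ratio(alg_values: Sequence[float], opt_values: Sequence[float]) -> float:
    """Estimate E[ALG] / E[OPT] from sampled objective values.

    alg_values: objective values achieved by the algorithm on sampled runs.
    opt_values: optimal (or benchmark) values on sampled instances.
    """
    if len(alg_values) == 0 or len(opt_values) == 0:
        raise ValueError("both samples must be non-empty")
    opt_mean = mean(opt_values)
    if opt_mean <= 0:
        raise ValueError("the benchmark mean must be positive")
    return mean(alg_values) / opt_mean


# Hypothetical numbers: the algorithm averages 2.0 against a benchmark of 4.0.
example_ratio = empirical_ratio([1.0, 3.0], [4.0, 4.0])
```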

In our work, we address optimality guarantees for each of the three sides of the matching market by providing algorithms with competitive ratio guarantees for the operator’s profit and the fairness objectives of the static and online side of the market simultaneously. Specifically, for the KIID arrival setting we have:

Theorem 4.1.

For the KIID setting, algorithm achieves a competitive ratio of simultaneously over the operator’s profit, the group fairness objective for the offline side, and the group fairness objective for the online side, where and . (Here, $e$ denotes Euler’s number, not an edge in the graph.)

The following two theorems hold under the condition that . Specifically for the KAD setting we have:

Theorem 4.2.

For the KAD setting, algorithm achieves a competitive ratio of simultaneously over the operator’s profit, the group fairness objective for the offline side, and the group fairness objective for the online side, where and .

Moreover, for the case of individual fairness whether in the KIID or KAD arrival setting we have:

Theorem 4.3.

For the KIID or KAD setting, we can achieve a competitive ratio of simultaneously over the operator’s profit, the individual fairness objective for the offline side, and the individual fairness objective for the online side, where and .

We also give the following hardness results. In particular, for a given arrival (KIID or KAD) setting and fairness criterion (group or individual), the competitive ratios for all sides cannot exceed 1 simultaneously:

Theorem 4.4.

For all arrival models and given the three objectives of operator’s profit, offline side group (individual) fairness, and online side group (individual) fairness, no algorithm can achieve a competitive ratio of over the three objectives simultaneously such that .

It is natural to wonder if we can combine individual and group fairness. Though it is possible to extend our algorithms to this setting, the following theorem shows that the two notions can conflict with one another:

Theorem 4.5.

Ignoring the operator’s profit and focusing either on the offline side alone or the online side alone, with and denoting the group and individual fairness competitive ratios, respectively, no algorithm can achieve competitive ratios over the group and individual fairness objectives of one side simultaneously such that .

Finally, we carry out experiments on real-world datasets in Section 6.

5 Algorithms and Theoretical Guarantees

Our algorithms use linear programming (LP) based techniques (Bansal et al. 2010; Nanda et al. 2020; Xu and Xu 2020; Brubach et al. 2016b) where first a benchmark LP is set up to upper bound the optimal value of the problem, then an LP solution is sampled from to produce an algorithm with guarantees. Due to space constraints, all proofs and the technical details of Theorems (4.4 and 4.5) are in Appendix (B).

5.1 Group Fairness for the KIID Setting:

Before we discuss the details of the algorithm, we note that for a given vertex type , the expected arrival rate could be greater than one. However, it is not difficult to modify the instance by “fragmenting” each type with such that in the new instance for each type. This can be done with the operator’s profit, offline group fairness, and online group fairness having the same values. Therefore, in what remains for the KIID setting and therefore for any round , each vertex arrives with probability . It also follows that for a given group , .

For each edge we use to denote the expected number of probes (i.e., assignments from to type not necessarily successful) made to edge in the LP benchmark. We have a total of three LPs each having the same set of constraints of (4), but differing by the objective. For compactness we do not repeat these constraints and instead write them once. Specifically, LP objective (1) along with the constraints of (4) gives the optimal benchmark value of the operator’s profit. Similarly, with the same set of constraints (4), LP objective (2) and LP objective (3) give the optimal group max-min fair assignment for the offline and online sides, respectively. Note that the expected max-min objectives of (2) and (3) can be written in the form of a linear objective. For example, the max-min objective of (2) can be replaced with an LP with objective subject to the additional constraints that , . Having introduced the LPs, we will use LP(1), LP(2), and LP(3) to refer to the platform’s profit LP, the offline side group fairness LP, and the online side group fairness LP, respectively.

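(1) LP objective: operator’s profit.

(2) LP objective: group max-min fairness for the offline side.

(3) LP objective: group max-min fairness for the online side.

(4a) to (4e) Constraints shared by LP(1), LP(2), and LP(3).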

Now we prove that LP(1), LP(2) and LP(3) indeed provide valid upper bounds (benchmarks) for the optimal solution for the operator’s profit and expected max-min fairness for the offline and online sides of the matching.

Lemma 5.1.

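Our algorithm makes use of the dependent rounding subroutine (Gandhi et al. 2006). We mention the main properties of dependent rounding. In particular, given a fractional vector $\vec{x} = (x_1, \dots, x_k)$ where each $x_i \in [0,1]$, let $s = \sum_{i=1}^{k} x_i$; dependent rounding rounds $x_i$ (possibly fractional) to $X_i \in \{0,1\}$ for each $i \in [k]$ such that the resulting vector $\vec{X} = (X_1, \dots, X_k)$ has the following properties: (1) Marginal Distribution: The probability that $X_i = 1$ is equal to $x_i$, i.e. $\Pr[X_i = 1] = x_i$ for each $i \in [k]$. (2) Degree Preservation: The sum of the $X_i$’s should be equal to either $\lfloor s \rfloor$ or $\lceil s \rceil$ with probability one, i.e. $\Pr[\sum_{i=1}^{k} X_i \in \{\lfloor s \rfloor, \lceil s \rceil\}] = 1$. (3) Negative Correlation: For any $S \subseteq [k]$, (1) $\Pr[\bigwedge_{i \in S} (X_i = 0)] \le \prod_{i \in S} \Pr[X_i = 0]$ and (2) $\Pr[\bigwedge_{i \in S} (X_i = 1)] \le \prod_{i \in S} \Pr[X_i = 1]$. It follows that for any $i \neq j$, $\mathbb{E}[X_i \mid X_j = 1] \le x_i$.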
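The marginal and degree-preservation properties can be illustrated with a short sketch. The code below is not the paper's subroutine: it is a simplified pairwise rounding scheme for a single vector, written under the assumption that only one set of edges (those incident on one arriving vertex) is rounded at a time. The function name `dependent_round` and the tolerance `EPS` are illustrative.

```python
import random
from typing import List, Optional, Sequence

EPS = 1e-9  # tolerance for treating a value as integral


def dependent_round(x: Sequence[float], rng: Optional[random.Random] = None) -> List[int]:
    """Round a vector in [0, 1]^k to {0, 1}^k.

    Repeatedly picks two fractional entries and shifts mass between them so
    that each entry keeps its expectation and their sum stays fixed. Each
    step makes at least one of the two entries integral.
    """
    rng = rng or random.Random()
    y = [float(v) for v in x]
    frac = [i for i, v in enumerate(y) if EPS < v < 1 - EPS]
    while len(frac) >= 2:
        i, j = frac[0], frac[1]
        a = min(1 - y[i], y[j])  # mass that can move from j to i
        b = min(y[i], 1 - y[j])  # mass that can move from i to j
        if rng.random() < b / (a + b):
            y[i], y[j] = y[i] + a, y[j] - a
        else:
            y[i], y[j] = y[i] - b, y[j] + b
        frac = [k for k in frac if EPS < y[k] < 1 - EPS]
    if frac:  # one fractional entry is left when the sum is not an integer
        k = frac[0]
        y[k] = 1.0 if rng.random() < y[k] else 0.0
    return [int(round(v)) for v in y]


# Usage: the sum of [0.5, 0.3, 0.7, 0.5] is 2, so exactly two entries become 1.
rounded = dependent_round([0.5, 0.3, 0.7, 0.5], random.Random(0))
```

Because the expected change of each entry in a step is zero, every entry equals 1 with probability equal to its original fractional value, and since each step preserves the sum, the number of ones is the floor or ceiling of the original sum.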

Going back to the LPs (1,2,3), we denote the optimal solutions to LP (1), LP (2), and LP (3) by , and respectively. Further, we introduce the parameters where and each of these parameters decides the “weight” the algorithm places on each objective (the operator’s profit, the offline group fairness, and the online group fairness objectives). We note that our algorithm makes use of the subroutine PPDR (Probe with Permuted Dependent Rounding) shown in Algorithm 1.

The procedure of our parameterized sampling algorithm is shown in Algorithm 2. Specifically, when a vertex of type arrives at any time step we run PPDR(), PPDR(), or PPDR() with probabilities , , and , respectively. We do not run any of the PPDR subroutines and instead reject the vertex with probability . The LP constraint (4e) guarantees that where could be . Therefore, when PPDR is invoked by the degree preservation property of dependent rounding the number of edges probed will not exceed , i.e. it would be within the patience limit.
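The selection step described above can be sketched as follows. This is an illustration only: the names `w_profit`, `w_offline`, and `w_online` stand in for the three weights, and the sketch assumes they are non-negative and sum to at most one, with the remaining probability mass used to reject the arriving vertex. The returned labels say which LP solution the PPDR subroutine would be invoked with; the subroutine itself is not implemented here.

```python
import random
from typing import Optional


def choose_subroutine(
    w_profit: float,
    w_offline: float,
    w_online: float,
    rng: Optional[random.Random] = None,
) -> Optional[str]:
    """Pick which LP solution to probe with, or None to reject the arrival."""
    total = w_profit + w_offline + w_online
    if min(w_profit, w_offline, w_online) < 0 or total > 1 + 1e-12:
        raise ValueError("weights must be non-negative and sum to at most 1")
    rng = rng or random.Random()
    r = rng.random()  # uniform in [0, 1)
    if r < w_profit:
        return "profit"
    if r < w_profit + w_offline:
        return "offline"
    if r < total:
        return "online"
    return None  # reject with the remaining probability


# Usage with hypothetical weights: reject roughly 10% of arrivals.
choice = choose_subroutine(0.5, 0.3, 0.1, random.Random(1))
```

Raising one weight shifts probability mass toward the corresponding LP solution, which is the mechanism behind the tunable trade-off between the three objectives.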

Now we analyze to prove Theorem 4.1. It would suffice to prove that for each edge the expected number of successful probes is at least , and . And finally from the linearity of expectation we show that the worst case competitive ratio of the proposed online algorithm with parameters and is at least for the operator’s profit and group fairness objectives on the offline and online sides of the matching, respectively.

A critical step is to lower bound the probability that a vertex is available (safe) at the beginning of round . Let us denote the indicator random variable for that event by . The following lemma enables us to lower bound the probability of .

Lemma 5.2.

.

Now that we have established a lower bound on , we lower bound the probability that an edge is probed by one of the PPDR subroutines conditioned on the fact that is available (Lemma 5.3). Let be the indicator that is probed by the Algorithm at time . Note that event occurs when (1) a vertex of type arrives at time and (2) is sampled by PPDR(), PPDR(), or PPDR().

Lemma 5.3.

,,

Given the above lemmas Theorem 4.1 can be proved.

5.2 Group Fairness for the KAD Setting:

For the KAD setting, the distribution over is time dependent and hence the probability of sampling a type in round is with . Further, we assume for the KAD setting that for every edge we have . This means that whenever an incoming vertex is assigned to a safe-to-add vertex the assignment is successful. This also means that any non-trivial values for the patience parameters and become meaningless and hence we can WLOG assume that . From the above discussion, we have the following LP benchmarks for the operator’s profit, the group fairness for the offline side and the group fairness for the online side:

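(5) LP objective: operator’s profit.

(6) LP objective: group fairness for the offline side.

(7) LP objective: group fairness for the online side.

(8a) to (8c) Constraints shared by LP(5), LP(6), and LP(7).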

Lemma 5.4.

Note that in the above LP we have as the probability for successfully assigning an edge in round (with an explicit dependence on ), unlike in the KIID setting where we had instead to denote the expected number of times edge is probed through all rounds. Similar to our solution for the KIID setting, we denote by , , and the optimal solutions of the LP benchmarks for the operator’s profit, offline side group fairness, and online side group fairness, respectively.

Having the optimal solutions to the LPs, we use algorithm shown in Algorithm 3. In new parameters are introduced, specifically and where is the probability that edge is safe to add in round , i.e. the probability that is unmatched at the beginning of round . For now we assume that we have the precise values of for all rounds and discuss how to obtain these values at the end of this subsection. Now conditioned on arriving at round and being safe to add, it follows that is sampled with probability which would be a valid probability (positive and not exceeding 1) if . This follows from the fact that and and also by constraint (8c) which leads to . Further, if then by constraint (8c) we have and therefore the distribution is valid. Clearly, the value of is important for the validity of the algorithm, the following lemma shows that leads to a valid algorithm.

Lemma 5.5.

Algorithm is valid for .

We now return to the issue of how to obtain the values of for all rounds. This can be done by using the simulation technique as done in (Dickerson et al. 2021; Adamczyk, Grandoni, and Mukherjee 2015). To elaborate, we note that we first solve the LPs (5,6,7) and hence have the values of , , and . Now, for the first round , clearly . To obtain for , we simulate the arrivals and algorithm a collection of times, and use the empirically estimated probability. More precisely, for we sample the arrival of vertex from with ( values are given as part of the model), then we run our algorithm for the values of that we have chosen. Accordingly, at some vertex in might be matched. We do this simulation a number of times and then we take for to be the average of all runs. Now having the values of for and , we further simulate the arrivals and the algorithm to obtain for and so on until we get for the last round . We note that using the Chernoff bound (Mitzenmacher and Upfal 2017) we can rigorously characterize the error in this estimation; however, by doing this simulation a number of times that is polynomial in the problem size, the error in the estimation would only affect the lower-order terms in the competitive ratio analysis (Dickerson et al. 2021) and hence for simplicity it is ignored. Now, with Lemma 5.5, Theorem 4.2 can be proved (see Appendix (B)).
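The round-by-round estimation can be sketched in code. The version below is a simplified illustration under several assumptions: assignments always succeed (as assumed for the KAD setting), the decision rule is passed in as a hypothetical callback `choose_offline` that may depend on the estimates for the current round, and the same set of simulated runs is carried forward from round to round instead of being re-simulated from scratch. None of these names come from the paper.

```python
import random
from typing import Callable, Dict, Hashable, List, Optional, Sequence


def estimate_safe_probs(
    offline: Sequence[Hashable],
    arrival_probs: Sequence[Dict[Hashable, float]],
    choose_offline: Callable[[Hashable, int, Dict[Hashable, float], random.Random], Optional[Hashable]],
    n_sims: int = 1000,
    seed: int = 0,
) -> List[Dict[Hashable, float]]:
    """Estimate, per round, the probability that each offline vertex is unmatched.

    arrival_probs[t][v] is the probability that type v arrives in round t.
    choose_offline(v, t, rho_t, rng) returns the offline vertex the algorithm
    tries to assign to v (or None), given the current round's estimates rho_t.
    """
    rng = random.Random(seed)
    matched = [set() for _ in range(n_sims)]  # one state per simulated run
    rho: List[Dict[Hashable, float]] = []
    for t, dist in enumerate(arrival_probs):
        # Fraction of runs in which u is still unmatched at the start of round t.
        rho_t = {u: sum(u not in m for m in matched) / n_sims for u in offline}
        rho.append(rho_t)
        types = list(dist)
        weights = [dist[v] for v in types]
        for m in matched:  # advance every run by one round
            v = rng.choices(types, weights=weights, k=1)[0]
            u = choose_offline(v, t, rho_t, rng)
            if u is not None and u not in m:
                m.add(u)
    return rho


# Usage: one driver who is always chosen is safe in round 0 and never afterwards.
rho = estimate_safe_probs(
    ["u0"], [{"v0": 1.0}] * 3, lambda v, t, rho_t, rng: "u0", n_sims=200
)
```

The estimate for the first round is exactly one for every vertex, matching the observation in the text, and later rounds only use estimates that have already been computed.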

5.3 Individual Fairness KIID and KAD Settings:

For the case of Rawlsian (max-min) individual fairness, we consider each vertex of the offline side to belong to its own distinct group and the definition of group max-min fairness would lead to individual max-min fairness. On the other hand, for the online side a similar trick would not yield a meaningful criterion, we instead define the individual max-min fairness for the online side to equal where is the utility received by the vertex arriving in round . If we were to denote by the probability that the algorithm would successfully match in round , then it follows straightforwardly that . We consider this definition to be the valid extension of max-min fairness for the online side as we are now concerned with the minimum utility across the online individuals (arriving vertices) which are many. The following lemma shows that we can solve two-sided individual max-min fairness by a reduction to two-sided group max-min fairness in the KAD arrival setting:

Lemma 5.6.

Whether in the KIID or KAD setting, a given instance of two-sided individual max-min fairness can be converted to an instance of two-sided group max-min fairness in the KAD setting.

6 Experiments

In this section, we verify the performance of our algorithm and our theoretical lower bounds for the KIID and group fairness setting using algorithm (Section 5.1). We note that none of the previous work considers our three-sided setting. We use rideshare as an application example of online bipartite matching (see also, e.g., Dickerson et al. 2021; Nanda et al. 2020; Xu and Xu 2020; Barann, Beverungen, and Müller 2017). We expect similar results and performance to hold in other matching applications such as crowdsourcing.

Experimental Setup:

As done in previous work, the drivers’ side is the offline (static) side whereas the riders’ side is the online side. We run our experiments over the widely used New York City (NYC) yellow cabs dataset (Sekulić, Long, and Demšar 2021; Nanda et al. 2020; Xu and Xu 2020; Alonso-Mora, Wallar, and Rus 2017) which contains records of taxi trips in the NYC area from 2013. Each record contains a unique (anonymized) ID of the driver, the coordinates of start and end locations of the trip, distance of the trip, and additional metadata.

Similar to (Dickerson et al. 2021; Nanda et al. 2020), we bin the starting and ending latitudes and longitudes by dividing the latitudes from to and longitudes from to into equally spaced grids of step size . This enables us to define each driver and request type based on its starting and ending bins. We pick out the trips between 7pm and 8pm on January 31, 2013, which is a rush hour with 10,814 drivers and 35,109 trips. We set driver patience to 3. Following (Xu and Xu 2020), we uniformly sample rider patience from .

Since the dataset does not include demographic information, for each vertex we randomly sample the group membership (Nanda et al. 2020). Specifically, we randomly assign of the riders and drivers to be advantaged and the rest to be disadvantaged. The value of for depends on whether the vertices belong to the advantaged or disadvantaged group. Specifically, if both vertices are advantaged, if both are disadvantaged, and for other cases.

In addition to this, a key component of our work is the use of driver and rider specific utilities. We follow the work of (Sühr et al. 2019) to set the utilities. We adopt the Manhattan distance metric rather than the Euclidean distance metric since the former is a better proxy for length of taxi trips in New York City. We set the operator’s utility to the rider’s trip length (a rough proxy for profit). In addition, the rider’s utility over an edge is set to where dist is the distance between the rider and the driver. The driver’s utility is set to . Whereas the trip length tripLength is available in the dataset, the distance between the rider and the driver dist is not. We therefore simulate the distance by creating an equally spaced grid with step size around the starting coordinates of the trip. This results in 81 possible coordinates in the vicinity of the starting coordinates of the trip. We then randomly choose one of these 81 coordinates to be the location of the driver when the trip was requested. Then is the distance from this coordinate to the start coordinate of the trip. This is a valid approximation since the platform would not assign drivers unreasonably far away to pick up a rider. Lastly, we scale the utilities by a constant to prevent them from being negative.
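The distance simulation described above might look roughly like the sketch below. It assumes that the 81 candidate coordinates form a 9 by 9 grid centred on the trip's starting point, which is one natural reading of the description; the step size `0.005` and the example coordinates are placeholders, and the Manhattan distance is computed directly on coordinate differences without converting degrees to kilometres.

```python
import random
from typing import List, Optional, Tuple

Coord = Tuple[float, float]


def manhattan(p: Coord, q: Coord) -> float:
    """Manhattan (L1) distance between two coordinate pairs."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def candidate_locations(start: Coord, step: float, half_width: int = 4) -> List[Coord]:
    """Equally spaced grid around `start`; half_width=4 gives 9 x 9 = 81 points."""
    lat, lon = start
    offsets = range(-half_width, half_width + 1)
    return [(lat + i * step, lon + j * step) for i in offsets for j in offsets]


def sample_pickup_distance(start: Coord, step: float, rng: Optional[random.Random] = None) -> float:
    """Pick one grid point uniformly as the driver's location and return dist."""
    rng = rng or random.Random()
    driver = rng.choice(candidate_locations(start, step))
    return manhattan(driver, start)


# Usage with placeholder values for the start coordinate and step size.
start = (40.75, -73.98)
grid = candidate_locations(start, 0.005)
dist = sample_pickup_distance(start, 0.005, random.Random(0))
```

With this layout the simulated distance is at most eight grid steps, which reflects the assumption that the platform does not assign drivers who are far from the rider.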

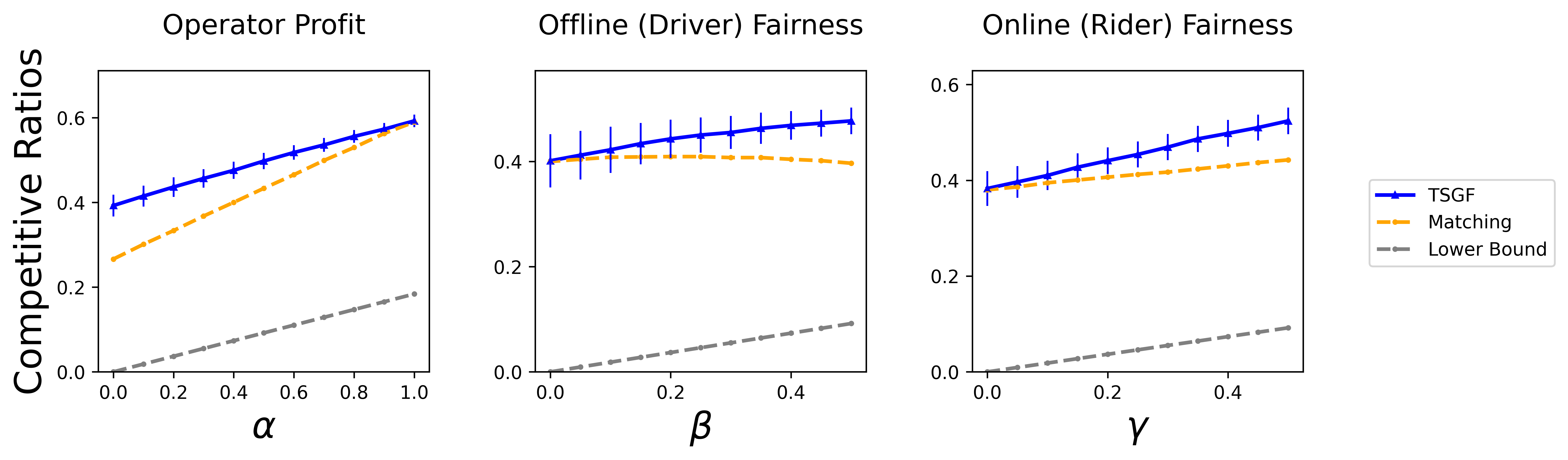

We run at the scale of , for 100 trials. During each trial, we randomly sample 49 drivers and 172 requests between 7 and 8pm, and run 100 times to measure the expected competitive ratios of this trial. We then averaged the competitive ratios over all trials, and the results are reported in figure 1. Code to reproduce our experiments is available in blinded format at https://github.com/anonymousUser634534/TSGF; we will release that code in deblinded form upon acceptance.

Performance of with Varied Parameters:

Figure 1 shows the performance of our algorithm over the three objectives: operator’s profit, offline (driver) group fairness, and online (rider) group fairness. It is clear that the algorithm behaves as expected with all objectives being steadily above their theoretical lower bound. More importantly, we see that increasing the weight for an objective leads to better performance for that objective. I.e., a higher weight for leads to better performance for the offline side fairness and similar observations follow in the case of for the operator’s objective and in the case of for the online-fairness. This also indicates the limitation in previous work which only considered fairness for one-side since their algorithms would not be able to improve the fairness for the other ignored side.

Furthermore, previous work (e.g., Nanda et al. 2020; Xu and Xu 2020; Ma and Xu 2022) only considered the matching size when optimizing the fairness objective for the offline (drivers) or online (riders) side. This is in contrast to our setting where we consider the matching quality. To see the effect of ignoring the matching quality and only considering the size, we run the same experiments with , i.e. the quality is ignored. The results are shown in the graph labelled “Matching” in figure 1; it is clear that ignoring the match quality leads to noticeably worse results.

Comparison to Heuristics:

We also compare the performance of against three other heuristics. In particular, we consider Greedy-O which is a greedy algorithm that upon the arrival of an online vertex (rider) picks the edge with maximum value of until it either results in a match or the patience quota is reached. We also consider Greedy-R which is identical to Greedy-O except that it greedily picks the edge with maximum value of instead, therefore maximizing the rider’s utility in a greedy fashion. Moreover, we consider Greedy-D which is a greedy algorithm that upon the arrival of an online vertex , first finds the group on the offline side with the lowest average utility so far, then it greedily picks an offline vertex (driver) from this group (if possible) which has the maximum utility until it either results in a match or the patience limit is reached. We carried out 100 trials to compare the performance of with the greedy algorithms, where each trial contains 49 randomly sampled drivers and 172 requests and is repeated 100 times. The aggregated results are displayed in table 1. We see that outperforms the heuristics with the exception of a small under-performance in comparison to Greedy-D. However, using Greedy-D we cannot tune the weights (, , and ) to balance the objectives as we can in the case of .

| | Profit | Driver Fairness | Rider Fairness |
|---|---|---|---|
| Greedy-O | 0.431 | 0.549 | 0.503 |
| () | 0.595 | 0.398 | 0.384 |
| Greedy-D | 0.371 | 0.609 | 0.563 |
| () | 0.517 | 0.571 | 0.44 |
| Greedy-R | 0.316 | 0.504 | 0.513 |
| () | 0.252 | 0.353 | 0.574 |
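The greedy baselines described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: the edge representation, the function name `greedy_probe`, the `score` callback, and the field names `driver`, `p`, and `w_op` are all hypothetical, and offline patience is not modelled.

```python
import random


def greedy_probe(edges, score, patience, available, rng):
    """Probe the arriving rider's edges in decreasing order of score.

    edges:     list of dicts with hypothetical keys 'driver' and 'p'
               (success probability of a probe) plus whatever utility
               fields the score function reads.
    score:     function mapping an edge to the value ranked greedily.
    patience:  number of failed probes the rider tolerates.
    available: set of drivers that can still be probed (mutated on a match).
    Returns the matched driver, or None if no probe succeeds.
    """
    candidates = sorted(
        (e for e in edges if e["driver"] in available),
        key=score,
        reverse=True,
    )
    failures = 0
    for e in candidates:
        if failures >= patience:
            break  # the rider has left the system
        if rng.random() < e["p"]:
            available.discard(e["driver"])  # successful match
            return e["driver"]
        failures += 1
    return None
```

Under these assumptions, a Greedy-O style rule would pass a score that reads the operator's utility and a Greedy-R style rule one that reads the rider's utility. A Greedy-D style rule would additionally restrict the candidates to the currently worst-off driver group, which is omitted here.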

Acknowledgments

This research was supported in part by NSF CAREER Award IIS-1846237, NSF Award CCF-1918749, NSF Award CCF-1852352, NSF Award SMA-2039862, NIST MSE Award #20126334, DARPA GARD #HR00112020007, DARPA SI3-CMD #S4761, DoD WHS Award #HQ003420F0035, ARPA-E DIFFERENTIATE Award #1257037, ARL Award W911NF2120076, and gifts by research awards from Amazon, and Google. We are grateful to Pan Xu for advice and comments on earlier versions of this work.

References

- Adamczyk, Grandoni, and Mukherjee (2015) Adamczyk, M.; Grandoni, F.; and Mukherjee, J. 2015. Improved approximation algorithms for stochastic matching. In Algorithms-ESA 2015, 1–12. Springer.

- Alaei, Hajiaghayi, and Liaghat (2012) Alaei, S.; Hajiaghayi, M.; and Liaghat, V. 2012. Online prophet-inequality matching with applications to ad allocation. In Proceedings of the 13th ACM Conference on Electronic Commerce, 18–35.

- Alaei, Hajiaghayi, and Liaghat (2013) Alaei, S.; Hajiaghayi, M.; and Liaghat, V. 2013. The online stochastic generalized assignment problem. In Approximation, Randomization, and Combinatorial Optimization. Algorithms and Techniques, 11–25. Springer.

- Alonso-Mora, Wallar, and Rus (2017) Alonso-Mora, J.; Wallar, A.; and Rus, D. 2017. Predictive routing for autonomous mobility-on-demand systems with ride-sharing. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 3583–3590.

- Bahmani and Kapralov (2010) Bahmani, B.; and Kapralov, M. 2010. Improved bounds for online stochastic matching. In European Symposium on Algorithms, 170–181. Springer.

- Bansal et al. (2010) Bansal, N.; Gupta, A.; Li, J.; Mestre, J.; Nagarajan, V.; and Rudra, A. 2010. When LP is the cure for your matching woes: Improved bounds for stochastic matchings. In European Symposium on Algorithms, 218–229. Springer.

- Barann, Beverungen, and Müller (2017) Barann, B.; Beverungen, D.; and Müller, O. 2017. An open-data approach for quantifying the potential of taxi ridesharing. Decision Support Systems, 99: 86–95.

- Barocas, Hardt, and Narayanan (2019) Barocas, S.; Hardt, M.; and Narayanan, A. 2019. Fairness and Machine Learning. fairmlbook.org. Accessed: 2022-08-01.

- Basu et al. (2020) Basu, K.; DiCiccio, C.; Logan, H.; and Karoui, N. E. 2020. A Framework for Fairness in Two-Sided Marketplaces. arXiv preprint arXiv:2006.12756.

- Brubach et al. (2016a) Brubach, B.; Sankararaman, K. A.; Srinivasan, A.; and Xu, P. 2016a. New algorithms, better bounds, and a novel model for online stochastic matching. In 24th Annual European Symposium on Algorithms (ESA 2016). Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik.

- Brubach et al. (2016b) Brubach, B.; Sankararaman, K. A.; Srinivasan, A.; and Xu, P. 2016b. Online Stochastic Matching: New Algorithms and Bounds. arXiv:1606.06395.

- Cook (2018) Cook, G. 2018. Woman Says Uber Driver Denied Her Ride Because of Her Wheelchair. NBC4-Washington. Available at https://www.nbcwashington.com/news/local/woman-says-uber-driver-denied-her-ride-because-of-her-wheelchair/2029780/, Accessed: 2023-04-23.

- Dickerson et al. (2019a) Dickerson, J.; Sankararaman, K.; Sarpatwar, K.; Srinivasan, A.; Wu, K.-L.; and Xu, P. 2019a. Online resource allocation with matching constraints. In International Conference on Autonomous Agents and Multiagent Systems (AAMAS).

- Dickerson et al. (2019b) Dickerson, J. P.; Sankararaman, K. A.; Srinivasan, A.; and Xu, P. 2019b. Balancing relevance and diversity in online bipartite matching via submodularity. In AAAI.

- Dickerson et al. (2021) Dickerson, J. P.; Sankararaman, K. A.; Srinivasan, A.; and Xu, P. 2021. Allocation Problems in Ride-sharing Platforms: Online Matching with Offline Reusable Resources. ACM Transactions on Economics and Computation (TEAC), 9(3): 1–17.

- Dwork et al. (2012) Dwork, C.; Hardt, M.; Pitassi, T.; Reingold, O.; and Zemel, R. 2012. Fairness through awareness. In ITCS.

- Feldman et al. (2009) Feldman, J.; Mehta, A.; Mirrokni, V.; and Muthukrishnan, S. 2009. Online stochastic matching: Beating 1-1/e. In 2009 50th Annual IEEE Symposium on Foundations of Computer Science, 117–126. IEEE.

- Galperin and Greppi (2017) Galperin, H.; and Greppi, C. 2017. Geographical discrimination in the gig economy. Available at SSRN 2922874.

- Gandhi et al. (2006) Gandhi, R.; Khuller, S.; Parthasarathy, S.; and Srinivasan, A. 2006. Dependent rounding and its applications to approximation algorithms. Journal of the ACM (JACM), 53(3): 324–360.

- García-Soriano and Bonchi (2020) García-Soriano, D.; and Bonchi, F. 2020. Fair-by-design matching. Data Mining and Knowledge Discovery, 1–45.

- Goel and Mehta (2008) Goel, G.; and Mehta, A. 2008. Online budgeted matching in random input models with applications to Adwords. In SODA.

- Ho and Vaughan (2012) Ho, C.-J.; and Vaughan, J. 2012. Online task assignment in crowdsourcing markets. In AAAI.

- Karp, Vazirani, and Vazirani (1990) Karp, R. M.; Vazirani, U. V.; and Vazirani, V. V. 1990. An optimal algorithm for on-line bipartite matching. In Proceedings of the twenty-second annual ACM symposium on Theory of computing, 352–358.

- Lee et al. (2019) Lee, M. K.; Kusbit, D.; Kahng, A.; Kim, J. T.; Yuan, X.; Chan, A.; See, D.; Noothigattu, R.; Lee, S.; Psomas, A.; and Procaccia, A. D. 2019. WeBuildAI: Participatory framework for algorithmic governance. In CSCW.

- Lesmana, Zhang, and Bei (2019) Lesmana, N. S.; Zhang, X.; and Bei, X. 2019. Balancing efficiency and fairness in on-demand ridesourcing. In NeurIPS.

- Lowalekar, Varakantham, and Jaillet (2018) Lowalekar, M.; Varakantham, P.; and Jaillet, P. 2018. Online spatio-temporal matching in stochastic and dynamic domains. Artificial Intelligence (AIJ), 261: 71–112.

- Ma and Xu (2022) Ma, W.; and Xu, P. 2022. Group-level fairness maximization in online bipartite matching. In AAMAS.

- Ma, Xu, and Xu (2020) Ma, W.; Xu, P.; and Xu, Y. 2020. Group-level fairness maximization in online bipartite matching. arXiv preprint arXiv:2011.13908.

- Ma, Xu, and Xu (2021) Ma, W.; Xu, P.; and Xu, Y. 2021. Fairness Maximization among Offline Agents in Online-Matching Markets. In WINE.

- Manshadi, Gharan, and Saberi (2012) Manshadi, V. H.; Gharan, S. O.; and Saberi, A. 2012. Online stochastic matching: Online actions based on offline statistics. Mathematics of Operations Research, 37(4): 559–573.

- Mattei, Saffidine, and Walsh (2017) Mattei, N.; Saffidine, A.; and Walsh, T. 2017. Mechanisms for Online Organ Matching. In IJCAI.

- Mehta (2013) Mehta, A. 2013. Online Matching and Ad Allocation. Foundations and Trends in Theoretical Computer Science, 8(4): 265–368.

- Mitzenmacher and Upfal (2017) Mitzenmacher, M.; and Upfal, E. 2017. Probability and computing: Randomization and probabilistic techniques in algorithms and data analysis. Cambridge university press.

- Nanda et al. (2020) Nanda, V.; Xu, P.; Sankararaman, K. A.; Dickerson, J. P.; and Srinivasan, A. 2020. Balancing the Tradeoff between Profit and Fairness in Rideshare Platforms During High-Demand Hours. In AAAI.

- Patro et al. (2020) Patro, G. K.; Biswas, A.; Ganguly, N.; Gummadi, K. P.; and Chakraborty, A. 2020. FairRec: Two-Sided Fairness for Personalized Recommendations in Two-Sided Platforms. In Proceedings of The Web Conference 2020, 1194–1204.

- Rawls (1958) Rawls, J. 1958. Justice as fairness. The philosophical review, 67(2): 164–194.

- Rosenblat et al. (2016) Rosenblat, A.; Levy, K. E.; Barocas, S.; and Hwang, T. 2016. Discriminating tastes: Customer ratings as vehicles for bias. Available at SSRN 2858946.

- Sankar et al. (2021) Sankar, G. S.; Louis, A.; Nasre, M.; and Nimbhorkar, P. 2021. Matchings with Group Fairness Constraints: Online and Offline Algorithms. arXiv preprint arXiv:2105.09522.

- Sekulić, Long, and Demšar (2021) Sekulić, S.; Long, J.; and Demšar, U. 2021. A spatially aware method for mapping movement-based and place-based regions from spatial flow networks. Transactions in GIS, 25(4): 2104–2124.

- Sühr et al. (2019) Sühr, T.; Biega, A. J.; Zehlike, M.; Gummadi, K. P.; and Chakraborty, A. 2019. Two-sided fairness for repeated matchings in two-sided markets: A case study of a ride-hailing platform. In KDD.

- Tong et al. (2016) Tong, Y.; She, J.; Ding, B.; Wang, L.; and Chen, L. 2016. Online mobile micro-task allocation in spatial crowdsourcing. In ICDE.

- White (2016) White, G. B. 2016. Uber and Lyft Are Failing Black Riders. The Atlantic. Available at https://www.theatlantic.com/business/archive/2016/10/uber-lyft-and-the-false-promise-of-fair-rides/506000/, Accessed: 2023-04-22.

- Wirtschafter (2019) Wirtschafter, E. 2019. Driver discrimination still a problem as Uber and Lyft prepare to go public. KALW. Available at https://www.kalw.org/post/driver-discrimination-still-problem-uber-and-lyft-prepare-go-public, Accessed: 2023-04-23.

- Xu and Xu (2020) Xu, Y.; and Xu, P. 2020. Trade the System Efficiency for the Income Equality of Drivers in Rideshare. In IJCAI.

- Zhou, Marecek, and Shorten (2021) Zhou, Q.; Marecek, J.; and Shorten, R. N. 2021. Subgroup Fairness in Two-Sided Markets. arXiv preprint arXiv:2106.02702.

Appendix A Online Matching Model Details

A.1 Arrival Setting (KIID and KAD):

The modelling choices we have made follow standard settings in online matching (Mehta 2013; Alaei, Hajiaghayi, and Liaghat 2013). To elaborate, the seminal paper on online matching (Karp, Vazirani, and Vazirani 1990) does not assume any prior knowledge of the arrival of the online vertices and uses adversarial analysis to establish theoretical guarantees on the competitive ratio. Besides yielding overly pessimistic theoretical results, the lack of prior knowledge is often an unrealistic assumption. Most decision makers in online matching settings can learn the arrival rates of the online vertices, and this knowledge can be used to build a more realistic probabilistic model of the arrivals.

Specifically, the Known Independent and Identically Distributed (KIID) model is an established model in online matching (Feldman et al. 2009; Mehta 2013; Bahmani and Kapralov 2010; Manshadi, Gharan, and Saberi 2012; Dickerson et al. 2019b). In this model, the arriving vertices on the online side belong to a finite set of known types, where the type of a vertex determines its edges to the offline vertices along with the weights of those edges. Further, a vertex of a given type arrives with the same probability in every round. These arrival probabilities can be estimated from historical data based on previous matchings.

While the KIID model utilizes prior knowledge that is frequently available in practical applications, it is still restrictive since it assumes that the probabilities do not vary over time. The Known Adversarial Arrival (KAD) model (also known as prophet inequality matching), on the other hand, takes into account the dynamic variation in the probabilities: the probability that a vertex of a given type arrives may differ from round to round instead of being constant. This model is also well established in the matching literature and has been used in a collection of papers (Alaei, Hajiaghayi, and Liaghat 2012; Brubach et al. 2016a; Dickerson et al. 2021, 2019a). Although the KAD model is well motivated and richer than the KIID model, it was not used in the one-sided online fair matching papers of Nanda et al. (2020) and Xu and Xu (2020).
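The difference between the two arrival models can be illustrated with a short sampling sketch. The function names, the dictionary representation of the type distributions, and the assumption that the probabilities in each round sum to one (so exactly one vertex arrives per round) are ours, introduced only for illustration.

```python
import random


def sample_kiid(type_probs, horizon, rng):
    """KIID: every round draws a type from the same known distribution."""
    types = list(type_probs)
    weights = [type_probs[v] for v in types]
    return [rng.choices(types, weights=weights)[0] for _ in range(horizon)]


def sample_kad(round_probs, rng):
    """KAD: each round has its own known distribution over the types."""
    arrivals = []
    for probs in round_probs:
        types = list(probs)
        weights = [probs[v] for v in types]
        arrivals.append(rng.choices(types, weights=weights)[0])
    return arrivals
```

In this sketch a KIID instance is the special case of a KAD instance in which every entry of `round_probs` is the same distribution.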

A.2 Patience:

The patience parameter of an offline vertex (or an online vertex) models its tolerance for unsuccessful probes (match attempts) before leaving the system. This is an important detail in the online matching model, since the vertices in online matching applications (such as advertising, crowdsourcing, and ridesharing) frequently represent human participants who would only tolerate a fixed number of failed matching attempts before leaving the system. Like the KIID and KAD arrival models, the patience parameter is well established in online matching; see, for example, (Mehta 2013; Bansal et al. 2010; Adamczyk, Grandoni, and Mukherjee 2015). Despite the importance of this parameter, previous work in fair online matching did not consider the patience issue for both sides simultaneously (Nanda et al. 2020; Xu and Xu 2020). Handling both parameters at the same time is more challenging and leads to more tedious derivations.

We further elaborate on the meaning of the patience for both the online and offline sides. Again, this follows the research literature on online matching:

Offline Patience:

An offline vertex remains on the offline side unless it is successfully matched or it receives as many failed matching attempts as its patience. As a concrete example, consider an offline vertex with a patience of two. In the first round the vertex is on the offline side. Suppose an unsuccessful matching attempt (unsuccessful probe) is made in this round; then in the next round the vertex will still be there. Suppose that the next time the vertex is probed is in the fifth round. If the probe is successful, then the vertex is matched and is removed from the offline side in all subsequent rounds. If the probe is unsuccessful, then the vertex is not matched but is still removed from all subsequent rounds, since it has a patience of two and can therefore only take two failed matching attempts before leaving.

Online Patience:

Unlike the offline side, an online vertex arrives in a round and must be matched or rejected in that round. While in a round we can match at most one online vertex (the arriving vertex) to some offline vertex, we can make multiple match attempts (probes) from the arriving vertex to the offline vertices it is connected to in that round. The patience of the online vertex is the upper limit on the number of failed attempts we can make in that round before it leaves the system and can no longer be matched, even if a possible match was still not attempted. As a concrete example, suppose an online vertex arrives and is connected to a total of four offline vertices, all of which are still available (i.e., unmatched and not yet out of patience). Suppose we probe three of them in turn and every probe fails, which exhausts the patience of the online vertex. The online vertex has then left the system, and we can no longer even attempt to match it to the fourth offline vertex despite the fact that it is available. Further, if any probe succeeds, then no further probes are made for the online vertex; e.g., if the first probe in the above discussion was successful, then its two endpoints are matched to each other and we cannot attempt to match the online vertex to any other offline vertex.
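The interaction of the two patience parameters within a single round can be sketched as follows. This is an illustrative simulation under our own assumptions: the function name `probe_round`, the argument names, and the dictionary that tracks the remaining failed probes of each offline vertex are hypothetical and do not come from the algorithms in the paper.

```python
import random


def probe_round(neighbors, success_prob, online_patience, offline_budget, rng):
    """Probe the arriving online vertex's neighbors in the given order.

    neighbors:       offline vertices in the order they are probed.
    success_prob:    dict mapping an offline vertex to its probe success
                     probability.
    online_patience: failed probes the arriving vertex tolerates this round.
    offline_budget:  dict of remaining failed probes each offline vertex
                     tolerates; a vertex is deleted once it is matched or
                     runs out of patience.
    Returns the matched offline vertex, or None.
    """
    failures = 0
    for u in neighbors:
        if failures >= online_patience:
            break  # the online vertex has left the system
        if u not in offline_budget:
            continue  # u was matched or ran out of patience earlier
        if rng.random() < success_prob[u]:
            del offline_budget[u]  # matched: u leaves the offline side
            return u
        failures += 1
        offline_budget[u] -= 1
        if offline_budget[u] == 0:
            del offline_budget[u]  # u has used up its patience
    return None
```

With four available neighbors, an online patience of three, and three failed probes, the loop stops before the fourth neighbor is ever probed, which mirrors the online-patience example in the text.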

Appendix B Proofs

Here we include the missing proofs. Each lemma/theorem is restated followed by its proof.

B.1 Proofs for Section 5.1

See 5.1

Proof.

We follow a proof similar to that used in (Bansal et al. 2010). We focus on the operator’s profit objective since the other objectives follow by very similar arguments. First, we note that LP(1) uses the expected values of the problem parameters: in a specific graph realization the number of arrivals of each vertex type is a random quantity, and LP(1) uses its expectation over the randomness of the instance. We shall therefore refer to the value of LP(1) as the LP value of the expected instance.

To prove that the value of LP(1) is a valid upper bound, it suffices to show that it is at least the expected value of the optimal LP value of a realized instance. Let us then consider a specific realization; its corresponding LP is the following:

| (9) |

| (10a) | |||

| (10b) | |||

| (10c) | |||

| (10d) | |||

| (10e) | |||

where is the realization of the online side. It is clear that for a given realization the above LP(9) is an upper bound on the operator’s objective value for that realization.

Now we prove that . The dual of the LP for the realization is the following:

| (11) |

| (12a) | |||

| (12b) | |||

Consider the graph with the expected number of arrivals. It has a dual of the above form; take an optimal solution of this dual. By strong LP duality, this solution has the same value as LP(1). Now, for the realized instance, we use the dual solution that is set as follows:

-

•

.

-

•

of type : .

-

•

of type : .

Note that the new solution is a feasible dual solution since it satisfies constraints (12a) and (12b). By weak duality, the value of this solution upper bounds the optimal LP value of the realized instance. In terms of the number of vertices of each type that arrived in the realized instance, the value of the solution satisfies:

Now taking the expectation, we get:

For the offline and online group fairness objectives, we use the same steps. The difference would be in the constraints of the dual program, however following a similar assignment as done from to is sufficient to prove the lemma for both fairness objectives. ∎

Before we prove the probability lower bound of Lemma 5.2, we first introduce the following two lemmas. Specifically, consider the number of successful assignments that a vertex received and accepted before a given round. Then the following lemma holds.

Lemma B.1.

For any given vertex at time , .

Proof.

Let be the indicator random variable for receiving an arrival request of type where and . Let be the indicator random variable that the edge gets sampled by the algorithm at time . Let be the indicator random variable that assignment is successful (a match) at time . Then .

∎

Now we lower bound the probability that was probed less than times prior to . Denote the number of probes received by before by , then the following lemma holds:

Lemma B.2.

.

Proof.

First it is clear that .

The inequality before the last follows from . Now using Markov’s inequality: , we get . ∎
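For reference, the generic form of Markov's inequality invoked in this step is stated below, together with the complementary form that yields a lower bound on the probability of staying below a threshold. The symbols $X$ and $a$ are placeholders and are not the paper's notation.

```latex
\Pr[X \ge a] \;\le\; \frac{\mathbb{E}[X]}{a}
\quad\Longrightarrow\quad
\Pr[X < a] \;\ge\; 1 - \frac{\mathbb{E}[X]}{a},
\qquad \text{for any nonnegative random variable } X \text{ and any } a > 0 .
```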

Proof.

Consider a given edge where

The above inequality is due to the fact that .

Therefore the expected number of assignments (probes) to vertex until time is at most . Therefore, we have:

It is to be noted that is the total number of probes received before round . Thus, we have that the events and are positively correlated. Therefore,

∎

See 5.3

Proof.

In this part we prove that the probability that edge is probed at time is at least . Note that the probability that a vertex of type arrives at time and that Algorithm 2 calls the subroutine PPDR() is . Let be the set of edges in excluding . For each edge let be the indicator for being before in the random order of (in algorithm 1) and let be the probability that the assignment is successful for . It is clear that and that . Now we have:

| (13) | |||

| (14) | |||

| (15) | |||

| (16) | |||

| (17) | |||

| (18) | |||

| (19) |

Applying Markov’s inequality, we get inequality (16). By linearity of expectation, we get inequality (17). Since and are negatively correlated to each other from the Negative Correlation property of Dependent Rounding we have and we get (18). The last inequality (19) is due to the fact that for any feasible solution the constraints imply that for all . Using similar analysis we can also prove that and . ∎

Proof.

Denote the expected number of probes on each edge resulting from PPDR() by . It follows that:

Denote the optimal solution for the operator’s profit LP by . Let be operator’s profit obtained by our online algorithm. Using the linearity of expectation we get: . Similarly, we can obtain and competitive ratios for the expected max-min group fairness guarantees on the offline and online sides, respectively. ∎

B.2 Proofs for Section 5.2

See 5.4

Proof.

We shall consider only the operator’s profit objective as the other objectives follow through an identical argument. Let be the indicator random variable for the arrival for vertex type in round . Then we can obtain a realization and solve the corresponding LP and then take the expected value of LP as an upper bound on the operator’s profit objective, i.e. the value where is an expectation with respect to the randomness of the problem. This means replacing by its realization in the LP below:

| (20) |

| (21a) | |||

| (21b) | |||

| (21c) | |||

If we replace the random variables by their expected values, we retrieve LP(5). It suffices to show that the value of LP(5), which is the LP value over the “expected” graph (the parameters replaced by their expected values), is an upper bound on the expected optimal LP value over the realizations. Take the optimal solution of each realization and consider its expectation over the realizations of the random variables. This expected solution is feasible for LP(5): the constraints that depend on the arrivals hold in expectation, and the rest of the constraints are satisfied as well since they are the same in every realization. Moreover, by linearity the objective value of the expected solution equals the expected optimal LP value, which is therefore at most the optimal value of LP(5) over the “expected” graph, since the value of a feasible solution cannot exceed the optimal value. ∎

See 5.5

Proof.

We prove the validity of the algorithm for by induction. For the base case, it is clear that , hence . Assume for , that , then at round we have:

where we used the fact that from constraint (8a) and the fact that . From the above, it follows that . ∎

Proof.

For an edge the probability that it is matched (successfully probed) is the following:

Setting , it follows from the above that is successfully matched with probability at least , at least , and at least . Hence, the guarantees on the competitive ratios follow by linearity of the expectation. ∎

B.3 Proofs for Section 5.3

Proof.

Given an instance with individual fairness, define as the set of all groups, thus , i.e. one group for each time round and one group for each offline vertex. Further given the online side types , create a new online side where and where consists of the same types as . Moreover, and , finally . For the offline side , we let each vertex have its own distinct group membership, i.e. for vertex , .

Based on the above, it is not difficult to see that both problems have the same operator profit, and that the individual max-min fairness objectives of the original instance equal the group max-min fairness objectives of the new instance. ∎
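The offline half of this reduction can be checked with a small sketch: when every vertex forms its own group, the group max-min objective coincides with the individual one. The function names and the normalization of a group's utility by its size are assumptions made for illustration, and the online side, where one group is created per round, is not modelled here.

```python
def group_max_min(utility, groups):
    """Minimum over groups of the average utility of the group's members."""
    return min(sum(utility[u] for u in g) / len(g) for g in groups)


def individual_max_min(utility):
    """Minimum utility over all individuals."""
    return min(utility.values())


def singleton_groups(vertices):
    """Reduction step for the offline side: one group per vertex."""
    return [[u] for u in vertices]
```

For any assignment of utilities, evaluating `group_max_min` on the output of `singleton_groups` returns the same value as `individual_max_min`, which is the property the reduction relies on.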

From the above Lemma, applying algorithm to the reduced instance leads to the following corollary:

Corollary B.1.

Given an instance of two-sided individual max-min fairness, applying to the reduction from Theorem 5.6 leads to a competitive ratio of simultaneously over the operator’s profit, the individual fairness objective for the offline side, and the individual fairness objective for the online side, where and .

The proof of Theorem 4.3 is immediate from the above corollary.

B.4 Proofs for Theorems 4.4 and 4.5

Proof.

We prove the result for group fairness in the KIID setting. Since the KIID setting is a special case of the KAD setting, this also proves the upper bound for the KAD setting.

Consider the graph which consists of three offline vertices and three online vertex types, i.e. . Each vertex in () belongs to its own distinct group. The time horizon is set to an arbitrarily large value. The arrival rate for each is uniform and independent of time, i.e. KIID with . Further, the bipartite graph is complete, i.e. each vertex of is connected to all of the vertices of with for all . We also let for each , and for each . We represent the utilities on the edges of with matrices where the element gives the utility of the edge connecting vertex and vertex . The utility matrices for the platform operator, offline, and online sides are following, respectively:

It can be seen that the utility assignments in the above example conflict between the three entities.

Let and be the optimal values for the operator’s profit, offline group fairness, and online group fairness, respectively. It is not difficult to see that , , and . Now, denote by and the edges with values of 1 for and in the graph, respectively. Further, for a given online algorithm, let and be the expected number of probes received by edges and , respectively. Moreover, denote the algorithm’s expected value over the operator’s profit, expected fairness for offline vertices, and expected fairness for online vertices by , and , respectively. We can upper bound the sum of the competitive ratios as follows:

In the above, the second inequality follows since the minimum value is upper bounded by the average. The last inequality follows since the expected number of probes any offline vertex receives cannot exceed 1 and there are three offline vertices.
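The averaging step used for the second inequality is the elementary fact below, written with placeholder values $a_1, \dots, a_n$ that are not the paper's notation.

```latex
\min_{1 \le i \le n} a_i \;\le\; \frac{1}{n} \sum_{i=1}^{n} a_i
\qquad \text{for any real numbers } a_1, \dots, a_n .
```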

To prove the same result for individual fairness we use the same graph. We note that the arrival of vertices in is KAD instead with the vertex having and . Then we follow an argument similar to the above. ∎

The following proves Theorem 4.5, thereby showing that there is indeed a conflict between achieving group and individual fairness, even if we consider only one side of the graph.

See 4.5

Proof.

Let us focus on the offline side, i.e. we consider and that are the competitive ratios for the group and individual fairness of the offline side.

Consider a graph which consists of two offline vertices and one online vertex, i.e. and . Further, there is only one group. Let and . has two vertices and both connected to the same vertex . For edge , we let and for edge , we let where is an arbitrarily large number. Note that both of these weights are for the utility of the offline side. Finally, we only have one round so .

Let and be the expected number of probes edges and receive, respectively. Note that . It follows that the optimal offline group fairness objective is . Further, the optimal offline individual fairness objective is , it is not difficult to show that . Now consider the sum of competitive ratios, we have:

this proves the result for the offline side of the graph.

To prove the result for the online side, we reverse the graph construction, i.e. having one vertex in and two vertex types in which arrive with equal probability. It now holds that and by setting to an arbitrarily large value . Then we follow an identical argument to the above. ∎

Appendix C Additional Experimental Results

As mentioned before, one of the major contributions of our work is that we consider the operator’s profit and fairness for both sides simultaneously instead of fairness for only one side. To further see the effects of ignoring one side, we run our algorithm with one side ignored (see table 2). The fairness objective for the ignored side is indeed lower than what can be achieved in figure 1. More precisely, the offline (driver) and online (rider) fairness can be improved simultaneously (figure 1), whereas each is lower when its optimization weight is set to zero (see table 2).

| Profit | Driver Fairness | Rider Fairness |
|---|---|---|
| 0.43 | 0.387 | 0.509 |
| 0.564 | 0.498 | 0.41 |