RDP-GAN: A Rényi-Differential Privacy based Generative Adversarial Network

Abstract

Generative adversarial networks (GANs) have attracted increasing attention recently owing to their impressive ability to generate realistic samples with high privacy protection. Without directly interacting with training examples, the generative model can be used to estimate the underlying distribution of an original dataset, while the discriminative model can examine the quality of the generated samples by comparing the label values with the training examples. However, when GANs are applied to sensitive or private training examples, such as medical or financial records, they may still divulge individuals’ sensitive and private information. To mitigate this information leakage and construct a private GAN, in this work we propose a Rényi-differentially private GAN (RDP-GAN), which achieves differential privacy (DP) in a GAN by carefully adding random noise to the value of the loss function during training. Moreover, we derive analytical results for the total privacy loss under the subsampling method and cumulated iterations, which show its effectiveness on the privacy budget allocation. In addition, to mitigate the negative impact of the injected noise, we enhance the proposed algorithm by adding an adaptive noise tuning step, which changes the volume of added noise according to the testing accuracy. Through extensive experimental results, we verify that the proposed algorithm can achieve a better privacy level while producing high-quality samples compared with a benchmark DP-GAN scheme based on noise perturbation of training gradients.

Index Terms:

Generative Adversarial Network, Rényi-Differential Privacy, Adaptive Noise Tuning Algorithm

I Introduction

Recent technological advancements are transforming the ways in which data are created and processed. With the advent of the Internet-of-things (IoT), the number of intelligent devices in the world has grown rapidly in the last couple of years. Many of these devices are equipped with various sensors and increasingly powerful hardware, which allow them not just to collect but, more importantly, to process data at unprecedented scales. Concurrently, artificial intelligence (AI) has revolutionized the ways in which information is utilized, with groundbreaking successes in areas such as computer vision, natural language processing, and voice recognition [1]. Therefore, there is a high demand for harnessing the rich data provided by distributed devices to improve machine learning models.

In the past few years, deep learning has demonstrated largely improved performance over traditional machine learning methods in various applications, e.g., image understanding [2], speech recognition [3], cancer analysis [4], and the game of Go [5]. The great success of deep learning is owing to the development of powerful computing processors and the availability of massive data for training the neural networks. However, there exist domains where access to such large amounts of data is not fully granted. For example, sensitive medical data are usually not open-access in most countries. Thus, building a high-quality analytical model remains challenging at present. At the same time, data privacy has become a growing concern for clients. In particular, the emergence of centralized searchable data repositories has made the leakage of private information, e.g., health conditions, travel information, and financial data, an urgent social problem. Furthermore, the diverse set of open data applications, such as census data dissemination and social networks, places more emphasis on privacy concerns. In such practices, access to real-life datasets may cause information leakage even in pure research activities. Consequently, privacy preservation has become a critical issue.

Fortunately, generative models [6] provide us with a promising solution to alleviate the data scarcity issue. By sketching the data distribution from a small set of training data, it is feasible to sample from the input distribution and further generate more synthetic samples. By combining the complexity of deep neural networks and game theory, Generative Adversarial Networks (GANs) [7] and their variants have demonstrated impressive performance in modelling the underlying data distribution, generating high-quality “fake” samples, i.e., synthetic samples, that are hard to distinguish from real ones. In this way, GANs make it possible to exploit the generated data while protecting the privacy of individuals.

However, GANs can still implicitly disclose private information about the training examples. The adversarial training procedure and the high model complexity of deep neural networks jointly encourage a distribution that is concentrated around training samples. By repeated sampling from the distribution, there is a considerable chance of recovering the training examples. For example, the authors in [8] introduced an active inference attack model that can reconstruct training samples from generated data. Therefore, there is a strong demand for generative models that not only generate high-quality samples but also protect the privacy of the training data. A private GAN has the potential to address this challenging problem.

To preserve data privacy, one way is to make the synthetic data differentially private with respect to the original data. To do this, the authors in [9] modified the training procedure of the discriminator to be differentially private by using the Private Aggregation of Teacher Ensembles (PATE) framework. The post-processing theorem then guarantees that the generator of the GAN will also be differentially private. Unlike [9], the work in [10] introduced a subsampled randomized algorithm that first draws a subsample of the dataset through a subsampling procedure, and then applies a known randomized mechanism to the subsampled data points. It is important to exploit the randomness in subsampling because, if the original mechanism is differentially private, then the subsampled mechanism also obeys differential privacy, a result referred to as the “privacy amplification lemma”. To further exploit subsampling, [11, 12] introduced the key idea of adding random noise to the updating gradients of the discriminator during training, and provided privacy guarantees. Moreover, norm clipping is used to bound the parameters, which also controls the maximum influence of the added noise. However, bounding the added noise will always violate the privacy level, as the maximum scale of noise is limited to one in their assumptions. To keep track of privacy loss, differential privacy (DP), which measures the difference in output between two input databases differing by at most one element, was developed in [13]. By ensuring that each gradient descent step is differentially private, the final output model satisfies a certain level of DP given by the strong composition property. Moreover, to obtain a tighter estimation, Abadi et al. [14] proposed the moment accountant method, which tracks the log moments of the privacy loss variable under random sampling.

Nevertheless, the training of GANs usually suffers from instability, and directly adding random noise to the updating gradients will definitely harm the learning performance. Therefore, a more subtle design of the privacy protection scheme is needed. In addition, the training of a GAN involves multiple iterations. In more detail, the popular Wasserstein GAN [15] trains the discriminator multiple times for each generator update to obtain better results. Thus, to conduct the privacy analysis, it is necessary to give an accurate estimation for each iteration. To sum up, there are three key tasks to solve in the design of a private GAN:

- Design a differentially private algorithm for GAN while guaranteeing its performance.

- Obtain a tight privacy loss estimation under subsampling.

- Obtain an accurate privacy budget calculation for cumulated iterations.

Accordingly, in this work, we propose a differentially private GAN. To accurately estimate the privacy loss of the proposed algorithm, we elaborate on the definition of Rényi-DP which can achieve a tighter privacy analysis compared to the moment accountant method. Furthermore, we improve the proposed algorithm by designing an adaptive noise tuning algorithm, which can achieve better learning performance. Specifically, the contributions of this work are listed as follows:

- To achieve a differentially private GAN, we propose a method in which random noise is added to the value of the loss function of the discriminator in each iteration. Different from previous works in [11, 12], the proposed algorithm does not need an additional norm clipping method, as the chosen loss function can be bounded naturally.

- To investigate the privacy loss of the proposed algorithm, we first elaborate on the effectiveness of Rényi-DP in the subsampling step, which is proved to achieve a tighter analysis compared with the moment accountant method. Then we obtain the expression of the total privacy cost for cumulated iterations.

- To obtain better learning performance, we further improve the proposed algorithm by adding an adaptive noise tuning step, called the RDP-ANT algorithm. The improved algorithm adaptively changes the scale of the added noise according to the testing accuracy, thereby refining the algorithm.

- We conduct extensive numerical experiments to verify the effectiveness of the analytical results. Compared with other algorithms, the proposed algorithm injects less noise and in turn obtains better performance when achieving the same privacy level. Moreover, we also show the superiority of the RDP-ANT algorithm compared with other noise decay methods.

The rest of the paper is structured as follows. We introduce the background in Section II, and propose the RDP-GAN algorithm with a comprehensive privacy loss analysis in Section III. In Section IV, we show the details of the improved adaptive noise tuning algorithm. Then, we validate the privacy analysis through extensive simulations and discuss the network performance in Section V, and conclude the paper in Section VI.

II Preliminary

In this section, we present some background knowledge of DP and GAN.

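II-A $(\epsilon,\delta)$-Differential Privacy

We first recall the standard definition of $(\epsilon,\delta)$-DP [16].

Definition 1.

($(\epsilon,\delta)$-DP). A randomized mechanism $f:\mathcal{X}\to\mathcal{R}$ offers $(\epsilon,\delta)$-DP if for any adjacent $\mathcal{D},\mathcal{D}'\in\mathcal{X}$ and $\mathcal{S}\subset\mathcal{R}$,

$$\Pr[f(\mathcal{D})\in\mathcal{S}] \le e^{\epsilon}\,\Pr[f(\mathcal{D}')\in\mathcal{S}] + \delta, \tag{1}$$

where $f(\mathcal{D})$ denotes a random function of $\mathcal{D}$.

The above definition is contingent on the notion of adjacent inputs $\mathcal{D}$ and $\mathcal{D}'$, which is domain-specific and is typically chosen to capture the contribution to the mechanism’s input by a single individual. Moreover, to avoid the worst-case scenario of always violating privacy of a $\delta$ fraction of the dataset, the standard recommendation is to choose $\delta \ll 1/N$ or even $\delta = \mathrm{negl}(1/N)$, where $N$ is the data size.

The definition of $(\epsilon,\delta)$-DP is initially proposed to capture privacy guarantees of the Gaussian mechanism, defined as follows:

$$\mathbf{G}_{\sigma} f(\mathcal{D}) \triangleq f(\mathcal{D}) + \mathcal{N}(0,\sigma^{2}), \tag{2}$$

where $\mathcal{N}(0,\sigma^{2})$ represents a random noise, which follows the Gaussian distribution with mean $0$ and standard deviation $\sigma$. Elementary analysis shows that the Gaussian mechanism satisfies a continuum of incomparable $(\epsilon,\delta)$-differential privacy guarantees [16], for all combinations of $\epsilon<1$ and $\sigma > \sqrt{2\ln(1.25/\delta)}\,\Delta_{2}f/\epsilon$, where the $\ell_{2}$-sensitivity of $f$ is defined as

$$\Delta_{2}f \triangleq \max_{\mathcal{D},\mathcal{D}'} \lVert f(\mathcal{D}) - f(\mathcal{D}') \rVert_{2}, \tag{3}$$

and taken over all adjacent inputs $\mathcal{D}$ and $\mathcal{D}'$.

We also list the proposition that will be used in the proof.

Proposition 1.

(Post-processing.) Let $f:\mathcal{X}\to\mathcal{R}$ be an $(\epsilon,\delta)$-differentially private algorithm and let $g:\mathcal{R}\to\mathcal{R}'$, where $\mathcal{R}'$ is any arbitrary space. Then $g\circ f:\mathcal{X}\to\mathcal{R}'$ is $(\epsilon,\delta)$-differentially private.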
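To make the calibration of the Gaussian mechanism concrete, the following Python sketch computes a noise scale from the classical condition $\sigma \ge \sqrt{2\ln(1.25/\delta)}\,\Delta_{2}f/\epsilon$ (valid for $\epsilon<1$) and then perturbs a scalar query. It is only an illustration: the function names `gaussian_sigma` and `gaussian_mechanism` and the example values are assumptions, not part of the original work.

```python
import math
import random


def gaussian_sigma(epsilon: float, delta: float, l2_sensitivity: float) -> float:
    """Noise scale from the classical bound
    sigma = sqrt(2 ln(1.25/delta)) * Delta_2 f / epsilon, which assumes epsilon < 1."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("the classical bound assumes 0 < epsilon < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return math.sqrt(2.0 * math.log(1.25 / delta)) * l2_sensitivity / epsilon


def gaussian_mechanism(value: float, sigma: float, rng: random.Random) -> float:
    """Release value + N(0, sigma^2)."""
    return value + rng.gauss(0.0, sigma)


if __name__ == "__main__":
    # Hypothetical example values: epsilon = 0.5, delta = 1e-5, sensitivity 1.
    sigma = gaussian_sigma(epsilon=0.5, delta=1e-5, l2_sensitivity=1.0)
    print(sigma, gaussian_mechanism(3.0, sigma, random.Random(0)))
```

Doubling the sensitivity doubles the required noise scale, while halving $\epsilon$ has the same effect, which is why a bounded (and small) sensitivity matters for utility.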

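II-B $(\alpha,\epsilon)$-Rényi Differential Privacy

The original DP has been investigated in several works to estimate the privacy level of typical machine learning algorithms, such as Deep Learning [14], Federated Learning [17] and GAN [11, 12]. However, the original DP needs strong assumptions, and its accuracy degrades when the composition theorem is used, as pointed out in [18]. Thus, we elaborate a generalization of the notion of DP based on the concept of the Rényi divergence, which can avert this inaccuracy. The Rényi divergence is classically defined as follows:

Definition 2.

(Rényi divergence). For two probability distributions $P$ and $Q$ defined over $\mathcal{R}$, the Rényi divergence of order $\alpha>1$ is

$$D_{\alpha}(P\|Q) \triangleq \frac{1}{\alpha-1}\log \mathbb{E}_{x\sim Q}\left(\frac{P(x)}{Q(x)}\right)^{\alpha}, \tag{4}$$

where $P(x)$ denotes the density of $P$ at $x$.

It motivates exploring a relaxation of DP based on the Rényi divergence.

Definition 3.

($(\alpha,\epsilon)$-RDP). A randomized mechanism $f:\mathcal{X}\to\mathcal{R}$ is said to have $\epsilon$-Rényi DP of order $\alpha$, or $(\alpha,\epsilon)$-RDP for short, if for any adjacent $\mathcal{D},\mathcal{D}'\in\mathcal{X}$ it holds that

$$D_{\alpha}\left(f(\mathcal{D})\,\|\,f(\mathcal{D}')\right) \le \epsilon. \tag{5}$$

Remark 1.

Similar to the definition of DP, a finite value for $\epsilon$-RDP implies that feasible outcomes of $f(\mathcal{D})$ for some $\mathcal{D}\in\mathcal{X}$ are feasible, i.e., have a non-zero density, for all inputs from $\mathcal{X}$ except for a set of measure 0. Assuming that this is the case, we let the event space be the support of the distribution.

We then list some propositions that will be used in deriving the analytical results in the following.

Proposition 2.

Let $f:\mathcal{X}\to\mathcal{R}_{1}$ be $(\alpha,\epsilon_{1})$-RDP and $g:\mathcal{R}_{1}\times\mathcal{X}\to\mathcal{R}_{2}$ be $(\alpha,\epsilon_{2})$-RDP, then the mechanism defined as $(X,Y)$, where $X\sim f(\mathcal{D})$ and $Y\sim g(X,\mathcal{D})$, satisfies $(\alpha,\epsilon_{1}+\epsilon_{2})$-RDP.

Proposition 3.

(Probability preservation). Let $\alpha>1$, and let $P$ and $Q$ be two distributions defined over $\mathcal{R}$ with identical support. Let $A\subset\mathcal{R}$ be an arbitrary event. Then

$$P(A) \le \left(\exp\left[D_{\alpha}(P\|Q)\right]\cdot Q(A)\right)^{(\alpha-1)/\alpha}. \tag{6}$$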
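The following Python sketch illustrates how RDP is typically used for accounting. It relies on three standard facts that are assumptions relative to this text: a Gaussian mechanism with sensitivity $\Delta$ and noise scale $\sigma$ satisfies $(\alpha, \alpha\Delta^{2}/(2\sigma^{2}))$-RDP, RDP budgets of the same order add up under composition (Proposition 2), and $(\alpha,\epsilon)$-RDP implies $(\epsilon + \log(1/\delta)/(\alpha-1), \delta)$-DP. The sketch deliberately ignores subsampling, so it is not the bound derived later in this paper; all function names are hypothetical.

```python
import math


def gaussian_rdp(alpha: float, sigma: float, sensitivity: float = 1.0) -> float:
    """RDP epsilon of order alpha for one release of the Gaussian mechanism."""
    if alpha <= 1.0:
        raise ValueError("alpha must be greater than 1")
    return alpha * sensitivity ** 2 / (2.0 * sigma ** 2)


def compose(rdp_epsilons):
    """RDP budgets of the same order add up under composition."""
    return sum(rdp_epsilons)


def rdp_to_dp(alpha: float, rdp_epsilon: float, delta: float) -> float:
    """Convert (alpha, eps)-RDP into an (eps', delta)-DP guarantee."""
    return rdp_epsilon + math.log(1.0 / delta) / (alpha - 1.0)


def best_dp_epsilon(sigma, sensitivity, steps, delta, orders=range(2, 65)):
    """Try several orders alpha and keep the smallest resulting DP epsilon."""
    return min(
        rdp_to_dp(a, compose([gaussian_rdp(a, sigma, sensitivity)] * steps), delta)
        for a in orders
    )
```

Because the conversion holds for every order $\alpha$, scanning a range of orders and keeping the minimum is a common way to tighten the final $(\epsilon,\delta)$ guarantee.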

II-C Generative Adversarial Networks

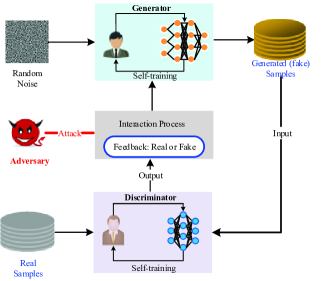

GAN is a recently developed generative model that produces synthetic images or texts after being trained [7]. The learning process in the model is based on one generator (g) and one discriminator (d) neural network playing the following zero-sum minimax (i.e., adversarial) game:

$$\min_{G}\max_{D} V(G,D) = \mathbb{E}_{x\sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z\sim p_{z}(z)}\left[\log\left(1-D(G(z))\right)\right], \tag{7}$$

where $p_{z}(z)$ is a prior distribution of latent vector $z$, $G(\cdot)$ is a generator function, and $D(\cdot)$ is a discriminator function whose output spans $[0,1]$. $D(x)=0$ (resp. $D(x)=1$) indicates that the discriminator classifies a sample $x$ as generated (resp. real).

In Fig. 1, we show a typical structure of GAN. $G$ and $D$ can be any form of neural networks. The discriminator attempts to maximize the objective, whereas the generator attempts to minimize the objective. In other words, the discriminator attempts to distinguish between real and generated samples, while the generator attempts to generate real-looking fake samples that the discriminator cannot distinguish from real samples. According to the feedback in the interaction process, the generator updates its network to enhance the quality of the generated samples. Adversaries exist in this environment and may attack the interaction process and obtain private information from clients. In order to avoid this privacy leakage, in the next section we propose a method to improve the privacy performance of GAN.
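As a small numerical illustration of the adversarial game, the Python sketch below estimates the standard GAN objective of [7], $V(G,D) = \mathbb{E}[\log D(x)] + \mathbb{E}[\log(1-D(G(z)))]$, from discriminator outputs on a batch of real and generated samples. The function name `gan_value` and the clipping constant are assumptions made for this example.

```python
import math


def gan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(G, D) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs in [0, 1] on real samples x.
    d_fake: discriminator outputs in [0, 1] on generated samples G(z).
    eps:    small constant that keeps the logarithm finite at 0.
    """
    real_term = sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return real_term + fake_term
```

A discriminator that separates the two batches well (outputs near 1 on real data and near 0 on generated data) drives this value towards 0, whereas a generator that fools it pushes the value down, which matches the max/min roles of the two players.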

III Design of RDP-GAN

In this section, we elaborate the design of RDP-GAN, a Rényi differentially private adversarial network to mitigate information leakage, and maintain desirable utility in the generated data.

III-A RDP-GAN Framework

In most real-world problems, the true data distribution is unknown and needs to be estimated empirically. Since we are primarily interested in data synthesis, we will use GANs as the mechanism to estimate this distribution and draw samples from it. If trained properly, an RDP-GAN will mitigate inference attacks during the data analysis.

The framework of RDP-GAN is structured as follows. Sensitive data is first fed into a discriminator with a privacy-preserving layer. This discriminator is used to train a differentially private generator to produce a private artificial dataset . Different from the work on differentially private deep learning (e.g., [14, 11, 12, 19]), the proposed algorithm achieves DP by injecting random noise into the value of the loss function in the interaction procedure. In more detail, the rationale behind our design is as follows:

- Different from existing works, which add noise to the gradients, the proposed algorithm focuses on the interaction between the generator and discriminator. This is because, from the perspective of an adversary, the interaction process is more vulnerable to attack than the network architecture if the generator and discriminator are not assembled. Moreover, it is not trivial to obtain information from inside the discriminator or generator.

- Adding noise to the value of the loss function is a more direct method than those in [11, 12, 19], and brings explicit privacy protection. This is because the loss values, not the parameters, are exchanged between the generator and discriminator, and the perturbation on the loss value directly influences the updates of the generator. In addition, the privacy estimation in previous works is less accurate, as the change in privacy level from parameters to loss values is ignored.

- Adding noise to the value of the loss function does not need an extra norm clipping function, as we naturally use a bounded activation function as the last layer of the neural network. Manually bounding the loss function is a non-trivial task, as its value is sensitive and needs adjustment based on empirical results.

Despite the fact that the generator does not have access to the real data in the training process, one cannot guarantee DP because of the information passed through with the loss value from the discriminator. A simple high level example will illustrate such breach of privacy. Let the dataset , contain some small real numbers. The only difference between these two datasets is the number , which happens to be extremely large. Since the impact of is large enough to influence the performance of the discriminator, specifically on its loss value, it will lead to a large difference whether is used to train or not. In this case, this difference on the loss value will be propagated to the generator that will break privacy.

III-B The Implementation of RDP-GAN

Our method focuses on preserving the privacy during the training procedure, and we add noise on the loss value of the discriminator as follows:

| (8) |

where we use to represent the value of the loss function of all data samples, and denotes the sample size of the discriminator, respectively.

We first explain why this perturbation on the loss value does not vanish during back propagation. Considering a layers network, the perturbed loss value of the last layer (the -th layer) can be represented by

| (9) |

where denotes the activation function of the -th layer, denotes the correct label, expresses the XNOR function that outputs a logical one or true only if two inputs are the same, and is the input of the -th layer, respectively [20]. During back propagation, this perturbation is involved as follows:

| (10) |

where . Thus, if adding noise to the loss value of the discriminator can achieve DP, this perturbation will be propagated during back propagation when updating the discriminator, which ensures that the discriminator is DP. Moreover, the loss value (parameters) of the generator also satisfies DP because of the post-processing property in [16], which states that any mapping (operation) applied to a differentially private output will not weaken the privacy guarantee. Here the mapping is in fact the computation of the parameters of the generator.

The RDP-GAN procedure is summarized in Algorithm 1.
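The core step described in this subsection, perturbing the discriminator's loss value with Gaussian noise, can be sketched in Python as follows. This is a minimal illustration rather than the authors' Algorithm 1: it assumes that the noise is added once to the batch-averaged cross-entropy loss computed from the discriminator's sigmoid outputs, and the names `bce_loss` and `perturbed_discriminator_loss` are hypothetical.

```python
import math
import random


def bce_loss(prob_real: float, label: int, eps: float = 1e-12) -> float:
    """Cross-entropy loss of one sample given the discriminator's sigmoid output."""
    p = min(max(prob_real, eps), 1.0 - eps)
    return -math.log(p) if label == 1 else -math.log(1.0 - p)


def perturbed_discriminator_loss(probs, labels, sigma, rng):
    """Average the per-sample losses over the batch, then add N(0, sigma^2).

    Assumption: one noise draw per batch, applied to the averaged loss value.
    """
    losses = [bce_loss(p, y) for p, y in zip(probs, labels)]
    mean_loss = sum(losses) / len(losses)
    return mean_loss + rng.gauss(0.0, sigma)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical batch: two real samples (label 1) and two generated ones (label 0).
    print(perturbed_discriminator_loss([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0], 0.5, rng))
```

In a deep learning framework the same idea would be applied to the loss tensor before calling the backward pass, so that the gradients of the discriminator are computed from the perturbed value.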

III-C Privacy Analysis of RDP-GAN

Because the proposed algorithm consists of multiple iterations, to analyze the total privacy loss we first prove that adding noise to the value of the loss function in the discriminator ensures Rényi differential privacy, and then show that the generator satisfies DP.

III-C1 Sensitivity on the loss function

According to the definition of DP, we consider two adjacent databases and of the same size that differ by only one sample.

Consequently, for the discriminator in one iteration, the training process can be written as:

| (11) |

where denotes the training process. Therefore, the sensitivity on the loss function can be expressed as

| (12) |

To make the loss function bounded, we can choose a bounded activation as the last network layer, so that the value of the -th sample is bounded. Hence the loss function has a bounded input with an unchanged label, and its output is naturally bounded according to the forward processing property. In addition, we denote the bound of the loss function by (Footnote 1: Note here can be different for different datasets and loss functions. In our experimental results, we will conduct experiments to obtain empirically.). Thus, following the same logic of [17], the sensitivity of the loss function can be expressed as

| (13) |

From Eq. (13), we can observe that when the batch size increases, the sensitivity decreases accordingly. The intuition is that the maximum difference a single data sample can make is set to and such a maximum difference is scaled down by a factor of since every data sample contributes equally to the resulting model. For example, if a model is trained on patient records with a certain disease, learning that an individual’s record is among them directly affects that individual’s privacy. If we increase the size of the dataset, it becomes more difficult to determine whether this record is part of the training data or not.

III-C2 Privacy analysis on the discriminator

With the sensitivity, we can design the Gaussian mechanism with -RDP requirement in terms of the sampling rate under iterations. In Theorem 1, we present the privacy level of the output of discriminator as follows.

Theorem 1.

Given the sampling rate and the sensitivity , adding random noise on the loss function, the output of discriminator for each iteration can satisfy -RDP, and .

Proof.

Please find the proof in the Appendix A. ∎

We then provide the following lemma, which will be used to prove the output of discriminator after iterations can satisfy -DP. For simplicity, we rewrite the loss function as in the following.

Lemma 1.

Let be an adaptive composition of mechanisms all satisfying -RDP. Let and be two adjacent inputs. Then for any subset :

| (14) |

Proof.

Please find the proof in the Appendix B. ∎

Remark 2.

Theorem 2.

Given the number of discriminator iterations , the sampling rate and the sensitivity , adding random noise on the value of the loss function of discriminator, the output guarantees -DP under a composition of RDP mechanisms, when . The expression of can be derived as

| (15) |

where .

Proof.

Please find the proof in the Appendix C. ∎

III-C3 Privacy analysis of the generator

We next provide the analytical privacy results on the generator according to the post processing property.

Theorem 3.

The output of generator in the proposed algorithm guarantees -DP, where

| (16) |

Proof.

The result is a direct consequence of Proposition 1, i.e., the post-processing property of DP. ∎

III-C4 Privacy analysis of the total training process

Finally, we come to our final results that the proposed algorithm after iterations satisfies the DP guarantee.

Theorem 4.

The proposed algorithm satisfies -DP, where , and denotes the total iterations of generator in this algorithm.

Remark 3.

For the calculation of the total privacy budget, we cannot directly use the composition property (Lemma 1), as there is a strict condition in Theorem 2, i.e., . For a typical DP setting, we can choose and , then we can obtain . Thus this condition cannot be satisfied in GAN scenarios, as we always set a large number of iterations, usually . So we directly sum all the privacy budgets of every generator iteration in Theorem 4.

IV Adaptive Noise Tuning Algorithm

Although the loss value is bounded naturally, adding random noise may reduce the learning performance. In Algorithm 1, the privacy budget is evenly distributed over the iterations, which implies the same noise scale of the Gaussian mechanism in every generator iteration. In general, the final model performance, such as convergence and accuracy, is largely dependent on the amount of noise added over the training process [19]. Thus, in order to obtain better learning performance, we can optimize the allocation of the privacy budget and design the amount of noise added in each iteration. The details are described as follows.

IV-A Adaptive Noise Tuning Algorithm

Our adaptive noise tuning algorithm follows the idea that, as the training continues, less noise should be added to the loss function, which allows the model to converge faster and obtain better results. Similar ideas have been applied to adjusting the learning rate in common practice [21]. Therefore, we propose an algorithm that can adaptively tune the noise scale over the training iterations, and in turn effectively improve the model accuracy.

Our approach for adaptively tuning the noise scale is to monitor the testing accuracy and reduce the noise scale when the accuracy stops improving. To do this, we first add an extra testing process after the generator finishes training in each iteration. Every time the improvement of the testing accuracy is less than a predefined threshold , the noise scale is reduced by a factor of until the total privacy budget runs out. Although this requires recalculating the privacy cost, the experimental results show that the improvement in performance is convincing despite the higher energy cost.

In our approach, with a validation dataset, the testing accuracy is checked periodically during the training process in the generator to determine whether the noise scale needs to be reduced for subsequent iterations. Let be the noise scale in the th iteration obtained by Theorem 4 when the total privacy budget and total iterations are given, and be the corresponding testing accuracy. The noise scale for the subsequent iterations is adjusted based on the accuracy difference between the current and previous iteration.

| (17) |

where denotes the threshold for the testing accuracy.

Initially we set and . Then the updated noise scale will be applied to the next iteration.

In addition, we find that the testing accuracy may not increase monotonically as the training progresses, and its fluctuation may cause unnecessary reduction of the noise scale and thus waste the privacy budget. This motivates us to use the average of the testing accuracy to improve the algorithm: at the current testing iteration , we define an average testing accuracy over the previous iterations as follows:

| (18) |

The average testing value will replace the previous one in Eq.(17) and determine whether the noise scale should be reduced or not.

To sum up, we formally present our adaptive noise tuning DP-GAN framework in Algorithm 2.
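The tuning rule described in this subsection can be sketched in Python as follows. It is a minimal illustration under stated assumptions, not the authors' Algorithm 2: the decay factor, the threshold, and the averaging window are hypothetical parameters, the averaged accuracy is taken as a simple moving average, and the privacy budget accounting that stops the decay once the budget runs out is left out.

```python
from collections import deque


class AdaptiveNoiseTuner:
    """Shrink the noise scale when the averaged testing accuracy stops improving."""

    def __init__(self, sigma0, decay=0.9, threshold=0.01, window=3):
        self.sigma = sigma0          # current noise scale
        self.decay = decay           # hypothetical multiplicative factor in (0, 1)
        self.threshold = threshold   # hypothetical minimum accuracy improvement
        self.history = deque(maxlen=window)  # most recent testing accuracies
        self.prev_avg = None         # averaged accuracy at the previous check

    def update(self, accuracy):
        """Record one testing accuracy and return the noise scale for the next iteration."""
        self.history.append(accuracy)
        avg = sum(self.history) / len(self.history)
        # Reduce the noise only if the averaged accuracy improved by less than the threshold.
        if self.prev_avg is not None and avg - self.prev_avg < self.threshold:
            self.sigma *= self.decay
        self.prev_avg = avg
        return self.sigma
```

With `window=1` the rule falls back to comparing consecutive testing accuracies; a larger window smooths out fluctuations so that a single noisy evaluation does not trigger an unnecessary reduction.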

IV-B Pre-defined Noise Decay Schedules

In this subsection, we list several predefined noise decay schedules, and provide comprehensive comparison results with our proposed algorithm in Sec. V-E.

IV-B1 Time-Based Decay

According to [22], it is defined with the mathematical form

| (19) |

where is the initial noise scale, is the decay rate and is the current iteration, respectively.

IV-B2 Exponential Decay

It has the mathematical form that

| (20) |

IV-B3 Step Decay

Step decay reduces the noise scale by some factor every few iterations. The mathematical form is expressed as

| (21) |

where decides how often to reduce noise in terms of the number of iterations.
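For reference, the three schedules can be sketched in Python as below. Since the exact expressions are not reproduced here, the sketch assumes the forms commonly used for learning-rate schedules, with `sigma0` the initial noise scale, `k` the decay rate (or decay factor for step decay), `t` the current iteration, and `period` the number of iterations between two reductions; these names are illustrative.

```python
import math


def time_based_decay(sigma0: float, k: float, t: int) -> float:
    """Assumed form: sigma_t = sigma0 / (1 + k * t)."""
    return sigma0 / (1.0 + k * t)


def exponential_decay(sigma0: float, k: float, t: int) -> float:
    """Assumed form: sigma_t = sigma0 * exp(-k * t)."""
    return sigma0 * math.exp(-k * t)


def step_decay(sigma0: float, k: float, t: int, period: int) -> float:
    """Assumed form: sigma_t = sigma0 * k ** floor(t / period), with 0 < k < 1."""
    return sigma0 * k ** (t // period)
```

Unlike the adaptive rule, these schedules depend only on the iteration index, so the noise is reduced whether or not the testing accuracy has stalled.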

IV-C Privacy Preserving Parameter Selection

The proposed algorithms require a set of pre-defined hyperparameters, such as the decay rate . This value will definitely influence the training time and affect the final model accuracy. Intuitively speaking, a smaller decay rate will lead to better performance but more training iterations. An appropriate decay rate should strike a good tradeoff between the learning performance and the training time. However, this decay rate depends on multiple factors, such as the specific neural network, the training set and other factors. Thus, in this subsection, we only propose a straightforward method to obtain an appropriate value: we list candidate values, train a neural network with each of them, and directly choose the one that achieves the highest accuracy. The corresponding experimental results are shown in Sec. V-E.

V Experimental Results

In this section, we first evaluate the performance of our analytical results for different privacy budgets. Then, we evaluate our proposed RDP-GAN method on the tabular and image datasets, respectively. In addition, we demonstrate the effectiveness of the improved algorithm with various noise scaling methods and parameter settings.

V-A Experimental Setting

V-A1 Dataset

In our experiments, we use two benchmark datasets for different tasks:

-

•

Adult, which consists of individual information, has attributes ( selected in the experiments) for each individual and split into training and test samples. We show an Adult dataset sample with some attributes in Table I.

TABLE I: Some examples of the Adult dataset Age Occupation Education Gender Work Class Marital Status Work Hour Income 39 Sales Bachelors Male State-gov Never-married 30 38 Tech-support HS-grad Male Private Divorced 35 44 Prof-specialty Masters Male Private Married-civ-spouse 42 43 Other-service Some-college Female State-gov Separated 26 -

•

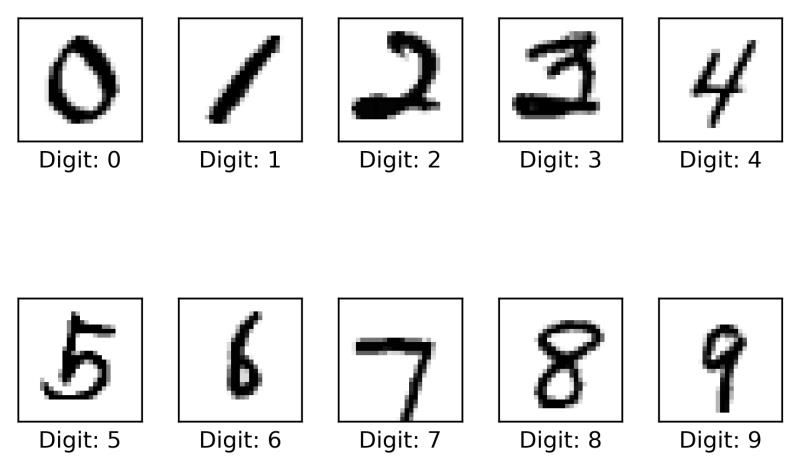

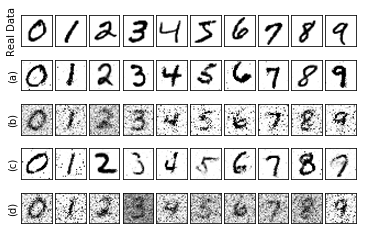

MNIST, which consists of handwritten digit images of size , split into training and test samples. We show the typical samples of the standard MNIST dataset in Fig. 2.

Figure 2: Some samples of the MNIST dataset

V-A2 GAN

The neural network architecture is adapted from the deep convolutional GAN (DCGAN), where the discriminator is a convolutional neural network (CNN) that contains layers. In each layer, a list of learnable filters is applied to the entire input matrix, where data samples are converted into square matrices in our method. To make the loss value bounded, we choose the Sigmoid activation function as the last layer, which generates the probability of being real or fake. The loss function of the discriminator is the cross-entropy function. The generator is also a neural network that consists of de-convolutional layers.

In the experiment, we set the learning rate of discriminator and generator to be . The sampling rate is set according to different batch sizes, and the number of iterations on discriminator () and generator () are and , respectively.

V-A3 Privacy and Utility Level Estimation

In the experimental results, we set up two privacy levels: and , respectively. To verify the generated results, we use an additional classifier to test the accuracy with the right labels in the MNIST dataset. The classifier is trained on the test samples in the MNIST dataset and is used to determine whether an input digit with a predefined label belongs to the right label. Moreover, the classifier uses a multilayer perceptron (MLP) neural network with layers. Except for the input nodes, each node is a neuron that uses the Sigmoid activation function. For the Adult dataset, we compute the probability mass function (PMF) and the absolute average error with respect to the true data for each attribute. For the relationship among different attributes, we use a trained classifier to test the accuracy. In addition, the absolute average error is calculated by the following equation:

| (22) |

where denotes the total number of labels, and denote the PMF of the generated and real data, respectively.
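A possible way to compute this metric is sketched below in Python. The sketch assumes that the absolute average error is the mean, over all labels of an attribute, of the absolute difference between the PMF of the generated data and the PMF of the real data; the function names `pmf` and `absolute_average_error` are hypothetical.

```python
from collections import Counter


def pmf(values, labels):
    """Empirical probability mass function of `values` over the given labels."""
    counts = Counter(values)
    n = len(values)
    return [counts[label] / n for label in labels]


def absolute_average_error(generated, real, labels):
    """Mean absolute difference between the PMFs of generated and real data."""
    p_gen = pmf(generated, labels)
    p_real = pmf(real, labels)
    return sum(abs(g - r) for g, r in zip(p_gen, p_real)) / len(labels)


if __name__ == "__main__":
    # Hypothetical attribute with two labels.
    real = ["Male", "Female", "Female", "Female"]
    generated = ["Male", "Male", "Female", "Female"]
    print(absolute_average_error(generated, real, ["Male", "Female"]))
```

A value of zero means that the generated data reproduce the marginal distribution of the attribute exactly; the metric does not capture dependencies between attributes, which is why a trained classifier is used for that purpose.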

V-B Analytical Results on the Privacy Level

V-B1 Investigation on the Sensitivity

Before we show the analytical results of the proposed RDP-GAN, we need to investigate the sensitivity of the loss function. According to Eq. (13), we can first estimate the bound of the loss function and then calculate the sensitivity. We conduct experiments on the Adult and MNIST datasets, respectively, and record the maximum loss value without injecting noise in Table II.

| | Batch size | Batch size |
| Maximum value of loss function on Adult | 17.852 | 21.242 |
| Maximum value of loss function on MNIST | 23.534 | 26.823 |
| Bound of loss function on Adult | 20 | 23 |
| Bound of loss function on MNIST | 25 | 28 |
| Sensitivity on the Adult dataset | 0.3125 | 0.1796 |
| Sensitivity on the MNIST dataset | 0.1953 | 0.2187 |

From the results, we find that a larger batch size leads to a larger maximum loss value on both datasets. Accordingly, we set for different situations in Table II. (Note that the value of is only used to estimate the privacy loss; nevertheless, we will show that the proposed privacy analysis achieves a lower bound than other methods under the same noise.) The results also indicate that one individual has less influence in a larger dataset.

V-B2 Analytical Results on the Privacy Level of Generator

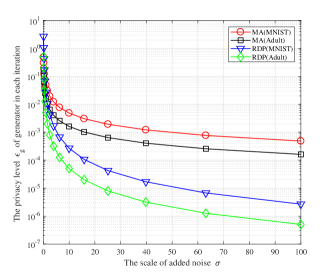

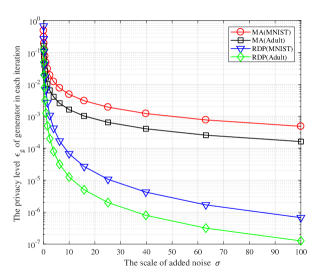

To show the effectiveness of the RDP-GAN, we compare it with the method using the Moment Accountant (MA) algorithm [12, 14], as shown in the following figures. In [12, 14], the privacy level of the generator in each iteration can be expressed as

| (23) |

where the sensitivity is omitted in the formula. In addition, the expression of in MA has an order of , whereas our analysis method (RDP) achieves an order of with respect to the injected noise. This leads to a higher privacy level in RDP when the noise scale is relatively large, e.g., .

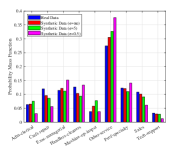

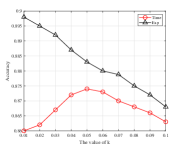

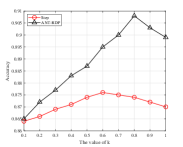

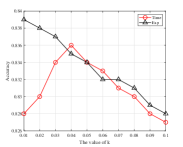

In Fig. 3, we investigate the privacy level for two batch sizes. As can be seen in these figures, the proposed RDP method achieves a better privacy level when larger noise is added, as expected. Moreover, the privacy level in the Adult dataset is always higher (a smaller ) than that in the MNIST dataset. The intuition behind this is that the sensitivity in the Adult dataset is lower than that in the MNIST dataset, as it has fewer attributes.

V-C Experimental Results on the MNIST Dataset

In this subsection, we execute the proposed algorithm on the MNIST dataset. In Fig. 4, we first show the real and generated data samples with different noise scales. We also show the results generated by the DP-GAN [12, 11] for comparison, which adds noise on the gradients. From the figure, we find that the digits generated at a low privacy level preserve the characteristics of the true ones well, and as the privacy level increases, the quality begins to drop as unclear pixels appear. For example, the digit and digit are hard to recognize in the generated samples when . Moreover, the quality of the samples generated by DP-GAN is clearly worse than that of the proposed RDP at both privacy levels.

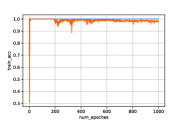

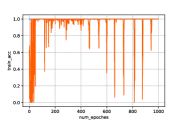

In order to further verify the qualities of the generated samples, we use an additional classifier to test the accuracy. We show the test accuracy along with the training process (i.e., iterations) in the following figures.

From Fig. 5, we first find that the samples generated without privacy protection, i.e., , achieve relatively high classification accuracy. Second, as the noise scale increases, the accuracy begins to fluctuate, which is caused by the injected noise. In addition, a few collapse cases happen when large noise is added. For example, in Fig. 5(d) the accuracy repeatedly drops suddenly to or less. This is because a large scale of is likely to bring about noise on the loss function that is too large for the system to tolerate. Therefore, it leads to unacceptable results in the generated samples, e.g., the digit in Fig. 4 when .

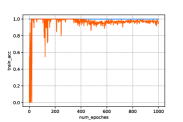

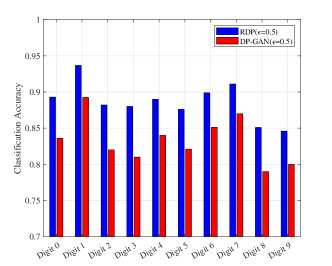

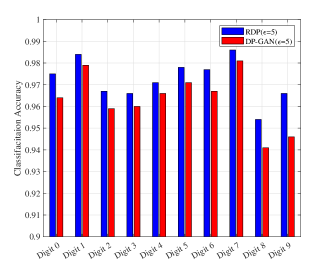

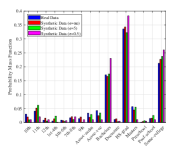

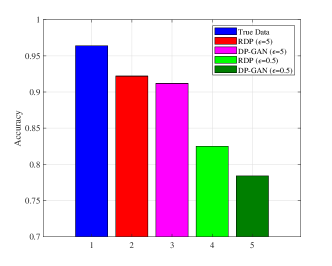

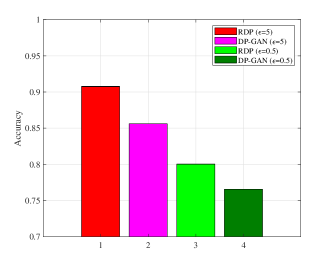

In addition, we compare our algorithm (RDP) with the method that adds noise on the parameters, i.e., DP-GAN, at two privacy levels, and , respectively. As can be seen in Fig. 6, the proposed algorithm performs better than DP-GAN at both privacy levels, with the biggest performance gap being around when classifying digit at the high privacy level. As explained in Sec. 3.1, the superior performance of the proposed RDP-GAN over DP-GAN arises because adding noise on the loss function can bring more explicit privacy protection.

V-D Experimental Results on the Adult Dataset

In this subsection, we show the performance of the proposed algorithm on the Adult dataset.

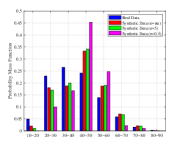

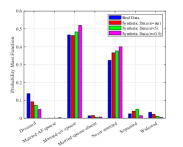

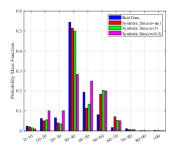

In Fig. 7, we show the probability mass function (PMF) of the generated attributes. Taking the attribute Age as an example, we use binning to divide the age range into regions, as shown in Fig. 7(a), to increase the training speed. As can be seen in Fig. 7(a), the generated samples achieve a distribution similar to that of the true ones, while the samples with larger noise differ more. Moreover, the largest differences are concentrated at the edges of the domain. The main reason may be that the generator tends to produce samples that are likely to appear in the true dataset, i.e., those close to the average value. A similar phenomenon can be found in other attributes as well. To further investigate the quality of the generated samples, we show the absolute average error for these attributes. As can be seen in Table III, when more noise is injected, the absolute average error increases. Thus, the proposed algorithm may not be able to achieve high performance in maintaining the characteristics of unique attributes, especially in tabular datasets. Further enhancing this performance may require a more intelligent design of the generator, which is left as our future work.

| | Age | Occ. | Edu. | Gen. | Work C | Mar. | Work H | Inc. |
|---|---|---|---|---|---|---|---|---|
| | 1.09 | 0.68 | 0.92 | 0.01 | 0.834 | 0.36 | 1.66 | 0.06 |
| | 1.1 | 1.23 | 1.23 | 0.009 | 1.11 | 0.57 | 1.65 | 0.17 |
| | 3. | 1.75 | 1.87 | 0.048 | 2.21 | 0.69 | 2.55 | 0.44 |

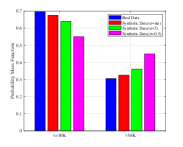

To verify the correlation among different attributes, we use a trained classifier to determine whether the income of an individual is larger than or not. Experimental results on the comparison of the proposed RDP-GAN with DP-GAN can be found in Fig. 8.

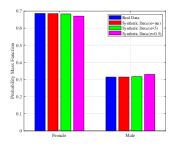

From Fig. 8(a), we find that the classifier trained on the true data samples obtains a fairly high accuracy of around . Moreover, compared with DP-GAN, the proposed RDP algorithm better captures the relationship among attributes at both privacy levels. For example, when , the performance gap is around ( vs. ). In addition, in Fig. 8(b) we train the classifier using the synthetic data and test its accuracy using the true data. The results also show that the proposed algorithm achieves better performance than DP-GAN at the same privacy level.

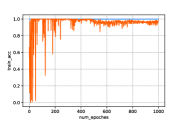

V-E Experimental Results on the Adaptive Noise Tuning Algorithm

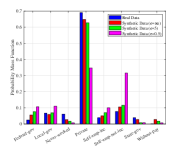

In this subsection, we first conduct experiments to find the appropriate decay rate for different algorithms. Specifically, we choose and iterations, which leads to adding to the discriminator in each iteration, and the testing accuracy is set to to prevent model collapse. In addition, we denote the time-based decay, exponential decay and step decay as Time, Exp and Step, respectively, and the in Step is set to . In the following, we show the trend of the testing accuracy with different values of the decay rate in the Adult dataset.
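For intuition on how the three baseline schedules shrink the noise scale over iterations, the sketch below uses the textbook forms of time-based, exponential and step decay known from learning-rate scheduling. These closed forms, the parameter names `sigma0`, `k` and `period`, and the numeric values are assumptions for illustration; they may differ from the exact definitions used for the experiments, and the accuracy-driven ANT-RDP rule is not modeled here.

```python
import math


def time_based_decay(sigma0, k, t):
    """Noise scale after t iterations: sigma0 / (1 + k * t)."""
    return sigma0 / (1.0 + k * t)


def exponential_decay(sigma0, k, t):
    """Noise scale after t iterations: sigma0 * exp(-k * t)."""
    return sigma0 * math.exp(-k * t)


def step_decay(sigma0, k, t, period):
    """Noise scale multiplied by the factor k once every `period` iterations.

    Assumption: here k is a multiplicative factor in (0, 1).
    """
    return sigma0 * (k ** (t // period))


# Illustrative values only (not the settings used in the experiments).
sigma0 = 1.0
time_schedule = [time_based_decay(sigma0, 0.01, t) for t in range(0, 100, 10)]
exp_schedule = [exponential_decay(sigma0, 0.01, t) for t in range(0, 100, 10)]
step_schedule = [step_decay(sigma0, 0.5, t, 25) for t in range(0, 100, 10)]
```

A smaller noise scale spends more privacy budget per iteration, so under a fixed total budget a faster-decaying schedule reaches the budget limit in fewer iterations.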

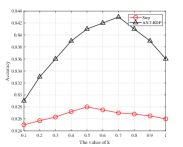

From Fig. 9 and Fig. 10, we find that there exists an optimal decay rate in the current system setting. For example, in the ANT-RDP algorithm, the best accuracy occurs when in the Adult dataset, and in the MNIST dataset, respectively. In addition, the performance of the Time, Step and ANT-RDP schedules seems to have a convex relationship with the value of . Intuitively, this may be because a lower decay rate leads to a longer training time and produces a worse accuracy, since most iterations suffer from noise-perturbed parameters. On the other hand, a higher decay rate makes the noise scale decay sharply, resulting in an insufficient training time, which may also degrade the accuracy. Moreover, we show comparative results for these algorithms in Table IV and Table V.

| | No Privacy | RDP-GAN | Time | Step | Exp | ANT-RDP |
|---|---|---|---|---|---|---|
| Iterations | 1000 | 1000 | 657 | 564 | 902 | 868 |
| Accuracy | 0.995 | 0.863 | 0.874 | 0.876 | 0.898 | 0.908 |

| | No Privacy | RDP-GAN | Time | Step | Exp | ANT-RDP |
|---|---|---|---|---|---|---|
| Iterations | 1000 | 1000 | 632 | 532 | 894 | 845 |
| Accuracy | 0.964 | 0.825 | 0.836 | 0.828 | 0.839 | 0.843 |

From the tables, we find that all the dynamic schedules achieve better performance than RDP-GAN, at a smaller cost in training iterations. The reason is that a dynamic algorithm adjusts the scale of the added noise according to the testing performance, and it consumes more privacy budget in each iteration as the noise scale becomes smaller, thus leading to fewer iterations. Moreover, the proposed ANT-RDP obtains the highest accuracy on both datasets while not requiring the largest number of iterations. For example, compared with the Exp algorithm, ANT-RDP has a better accuracy, i.e., there is a performance gain in the Adult dataset, and in the MNIST dataset, respectively, while needing fewer iterations, i.e., and fewer in each dataset.
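The comparison between Exp and ANT-RDP can be checked directly against the tabulated values. The short sketch below copies the Exp and ANT-RDP columns from the two tables above and computes the absolute accuracy difference and the difference in iterations; the dictionary keys and the helper name `compare` are hypothetical, and absolute (rather than relative) differences are an assumption about how the gain is measured.

```python
# (iterations, accuracy) pairs copied from the Exp and ANT-RDP columns
# of the two tables above, in the order in which the tables appear.
results = {
    "first table": {"Exp": (902, 0.898), "ANT-RDP": (868, 0.908)},
    "second table": {"Exp": (894, 0.839), "ANT-RDP": (845, 0.843)},
}


def compare(table):
    """Return (accuracy gain of ANT-RDP over Exp, iterations saved)."""
    exp_iters, exp_acc = table["Exp"]
    ant_iters, ant_acc = table["ANT-RDP"]
    return round(ant_acc - exp_acc, 3), exp_iters - ant_iters


gaps = {name: compare(table) for name, table in results.items()}
```

With these inputs, ANT-RDP gains 0.010 and 0.004 in accuracy while using 34 and 49 fewer iterations than Exp in the first and second tables, respectively.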

VI Related Work

In this section, we provide a brief literature review of relevant topics: differential privacy and differentially private deep learning.

VI-A Differential Privacy

Differential privacy (DP) [16] and related algorithms have been widely studied in the literature. The work [23] laid the theoretical foundations of many DP studies by calibrating the added noise to the maximum change of data-related functions. Other related frameworks that add noise to gradients are [24] and its follow-up work [25], which studied how to learn from data under DP requirements using stochastic gradient descent. In [26], a comprehensive and structured overview of DP data publishing and analysis presents several possible future directions and applications. In addition, to tightly analyze the composition, especially on the tails of the privacy loss, the work [18] gave a new privacy definition, named Rényi DP. Rényi DP is not only a natural generalization of pure DP, sharing many of its properties, but also captures a more accurate aggregate privacy loss through its composition theorem.

VI-B Differentially Private Deep Learning

Abadi et al. [14] proposed a differentially private SGD algorithm for deep learning to offer provable privacy guarantees on the output model, and the work [19] used the concentrated DP [13], which is similar in spirit to RDP, to provide cumulative privacy loss estimation over computations. Moreover, the applications of DP in GANs, such as [11, 12], have addressed information leakage by adding noise to gradients during training. They can also produce data samples of good quality under reasonable privacy budgets.

Different from these solutions, our work obtains a more accurate estimation of the privacy loss. The properties of RDP are applied to GANs for the first time. Moreover, we provide a method that works naturally without artificial clipping of the noise-added gradients, which may degrade the learning performance.

VII Conclusion

In this work, we proposed a Rényi-differentially private GAN (RDP-GAN), which achieves differential privacy (DP) under the framework of GAN by carefully adding random Gaussian noise to the value of the loss function during training. The advantages of applying perturbation on the loss function are three-fold: i) loss values are exchanged directly between the generator and discriminator, and thus need more protection than parameters; ii) adding noise on the loss value can provide explicit privacy protection; and iii) it does not need value clipping on the parameters, so that the stability can be enhanced. We theoretically obtained the analytical results of the total privacy loss considering the subsampling method and multiple iterations of training with equal privacy budget allocation to each iteration. In addition, in order to alleviate the negative impact brought by the noise injection, we improved the proposed algorithm by designing an adaptive noise tuning algorithm, which adaptively changes the scale of the added noise according to the testing accuracy. Through comprehensive experimental results, we verified that the proposed algorithm achieves a higher data utility than the existing DP-GAN algorithm at the same DP protection level.

Appendix A Proof of Theorem 1

Let denote the loss function of the discriminator, and are adjacent datasets that differ in only one element . Thus, the loss values of these two adjacent datasets after noise perturbation are expressed as:

| (24) |

where denotes the parameters of the neural network.

From Eq. (24), we know is a summation of two independent random variables. Using the Gaussian approximation approach in [27], the summation of these two RVs can be treated as another RV which also follows a Gaussian distribution. Moreover, without loss of generality, we assume that . Thus and are distributed identically except for the first coordinate and hence we have a one-dimensional problem [14]. Let denote the pdf of and let denote the pdf of . Thus we have

| (25) |

where is the sampling rate and denotes the sensitivity bound on the loss function. In addition, , where , denote the sample size and the total size of the dataset, respectively.

According to the definition of Rényi differential privacy in Eq. (5), of one iteration can be expressed as . For simplicity, we rewrite the as .

To obtain , we first calculate as

| (26) |

where step (a) uses the binomial expansion, is denoted by , and step (b) is obtained by . Step (c) uses Taylor series expansion, and step (d) is obtained by Taylor series expansion.

We then calculate as

| (27) |

where step (a) is obtained by , and is denoted by . Step (b) is obtained by , and is denoted by .

As a result, which concludes the proof.

Appendix B Proof of Lemma 1

By applying Proposition 2 to their composition, we can obtain that for all ,

| (28) |

Denote by and by and consider two cases.

Case I: . According to the Proposition 3 and choosing , we have

| (29) |

where step (a) is obtained by substituting into the equation.

Case II: . This case follows trivially, since the right-hand side of Eq. (14) is larger than 1, as:

| (30) |

Appendix C Proof of Theorem 2

Let and be two adjacent inputs, and be some subset of the results of the loss function . To establish the -differential privacy of , we need to verify that

| (31) |

According to Lemma 1, we know that

| (32) |

Denote by and by and we can obtain that

| (33) |

Eq. (33) can be divided into two cases as follows.

Case I: . Then Eq. (33) can be derived by

| (34) |

Case II: . Then Eq. (33) can be derived by

| (35) |

where step (a) is obtained by substituting the condition into the equation.

This concludes the proof.
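To make the step from an RDP guarantee to an (ε, δ)-DP guarantee concrete, the sketch below uses standard results from [18]: a Gaussian mechanism with sensitivity Δ and noise standard deviation σ satisfies (α, αΔ²/(2σ²))-RDP, RDP guarantees of the same order add up under composition, and (α, ε)-RDP implies (ε + log(1/δ)/(α − 1), δ)-DP. It deliberately ignores the subsampling amplification analyzed in Theorem 1, so it is a simplified baseline rather than the bound derived here; all function names and numeric values are illustrative assumptions.

```python
import math


def gaussian_rdp(alpha, sensitivity, sigma):
    """RDP epsilon of order alpha for the Gaussian mechanism (no subsampling)."""
    return alpha * sensitivity ** 2 / (2.0 * sigma ** 2)


def compose(rdp_epsilon, num_iterations):
    """RDP of a fixed order composes additively over identical iterations."""
    return num_iterations * rdp_epsilon


def rdp_to_dp(alpha, rdp_epsilon, delta):
    """Standard conversion from (alpha, rdp_epsilon)-RDP to (epsilon, delta)-DP."""
    return rdp_epsilon + math.log(1.0 / delta) / (alpha - 1.0)


def best_dp_epsilon(sensitivity, sigma, num_iterations, delta, alphas):
    """Smallest (epsilon, delta)-DP epsilon over a grid of RDP orders."""
    return min(
        rdp_to_dp(
            a, compose(gaussian_rdp(a, sensitivity, sigma), num_iterations), delta
        )
        for a in alphas
    )


# Illustrative values only (not the settings used in the experiments).
alphas = [1.5, 2, 4, 8, 16, 32, 64]
epsilon = best_dp_epsilon(
    sensitivity=0.2, sigma=5.0, num_iterations=1000, delta=1e-5, alphas=alphas
)
```

Searching over several orders α matters because small orders inflate the log(1/δ)/(α − 1) term while large orders inflate the composed RDP term, so the tightest ε usually sits at an intermediate order.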

References

- [1] X. Wang, Y. Han, C. Wang, Q. Zhao, X. Chen, and M. Chen, “In-edge AI: Intelligentizing mobile edge computing, caching and communication by federated learning,” arXiv preprint arXiv:1809.07857, 2018.

- [2] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- [3] A. Graves, A. Mohamed, and G. Hinton, “Speech recognition with deep recurrent neural networks,” in 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, May 2013, pp. 6645–6649.

- [4] M. Liang, Z. Li, T. Chen, and J. Zeng, “Integrative data analysis of multi-platform cancer data with a multimodal deep learning approach,” IEEE/ACM Transactions on Computational Biology and Bioinformatics, vol. 12, no. 4, pp. 928–937, July 2015.

- [5] D. Silver, A. Huang, C. J. Maddison, A. Guez, L. Sifre, G. Van Den Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot et al., “Mastering the game of Go with deep neural networks and tree search,” Nature, vol. 529, no. 7587, p. 484, 2016.

- [6] A. Makhzani, J. Shlens, N. Jaitly, I. Goodfellow, and B. Frey, “Adversarial autoencoders,” arXiv preprint arXiv:1511.05644, 2015.

- [7] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in neural information processing systems, 2014, pp. 2672–2680.

- [8] B. Hitaj, G. Ateniese, and F. Perez-Cruz, “Deep models under the GAN: information leakage from collaborative deep learning,” in Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security. ACM, 2017, pp. 603–618.

- [9] J. Jordon, J. Yoon, and M. van der Schaar, “PATE-GAN: generating synthetic data with differential privacy guarantees,” 2018.

- [10] Y.-X. Wang, B. Balle, and S. Kasiviswanathan, “Subsampled Rényi differential privacy and analytical moments accountant,” arXiv preprint arXiv:1808.00087, 2018.

- [11] L. Xie, K. Lin, S. Wang, F. Wang, and J. Zhou, “Differentially private generative adversarial network,” arXiv preprint arXiv:1802.06739, 2018.

- [12] C. Xu, J. Ren, D. Zhang, Y. Zhang, Z. Qin, and K. Ren, “GANobfuscator: Mitigating information leakage under GAN via differential privacy,” IEEE Transactions on Information Forensics and Security, vol. 14, no. 9, pp. 2358–2371, Sep. 2019.

- [13] C. Dwork and G. N. Rothblum, “Concentrated differential privacy,” arXiv preprint arXiv:1603.01887, 2016.

- [14] M. Abadi, A. Chu, I. Goodfellow, H. B. McMahan, I. Mironov, K. Talwar, and L. Zhang, “Deep learning with differential privacy,” in Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security. ACM, 2016, pp. 308–318.

- [15] M. Arjovsky, S. Chintala, and L. Bottou, “Wasserstein GAN,” arXiv preprint arXiv:1701.07875, 2017.

- [16] C. Dwork, A. Roth et al., “The algorithmic foundations of differential privacy,” Foundations and Trends® in Theoretical Computer Science, vol. 9, no. 3–4, pp. 211–407, 2014.

- [17] K. Wei, J. Li, M. Ding, C. Ma, H. Su, B. Zhang, and H. V. Poor, “Performance analysis and optimization in privacy-preserving federated learning,” arXiv preprint arXiv:2003.00229, 2020.

- [18] I. Mironov, “Rényi differential privacy,” in 2017 IEEE 30th Computer Security Foundations Symposium (CSF). IEEE, 2017, pp. 263–275.

- [19] L. Yu, L. Liu, C. Pu, M. E. Gursoy, and S. Truex, “Differentially private model publishing for deep learning,” arXiv preprint arXiv:1904.02200, 2019.

- [20] S. Ruder, “An overview of gradient descent optimization algorithms,” arXiv preprint arXiv:1609.04747, 2016.

- [21] F. Chollet, “Xception: Deep learning with depthwise separable convolutions,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1251–1258.

- [22] C. Darken and J. E. Moody, “Note on learning rate schedules for stochastic optimization,” in Advances in neural information processing systems, 1991, pp. 832–838.

- [23] C. Dwork, F. McSherry, K. Nissim, and A. Smith, “Calibrating noise to sensitivity in private data analysis,” in Theory of cryptography conference. Springer, 2006, pp. 265–284.

- [24] S. Song, K. Chaudhuri, and A. D. Sarwate, “Stochastic gradient descent with differentially private updates,” in 2013 IEEE Global Conference on Signal and Information Processing. IEEE, 2013, pp. 245–248.

- [25] S. Song, K. Chaudhuri, and A. Sarwate, “Learning from data with heterogeneous noise using SGD,” in Artificial Intelligence and Statistics, 2015, pp. 894–902.

- [26] T. Zhu, G. Li, W. Zhou, and P. S. Yu, “Differentially private data publishing and analysis: A survey,” IEEE Transactions on Knowledge and Data Engineering, vol. 29, no. 8, pp. 1619–1638, 2017.

- [27] M. Ding, D. López-Pérez, G. Mao, Z. Lin, and S. K. Das, “DNA-GA: A tractable approach for performance analysis of uplink cellular networks,” IEEE Transactions on Communications, vol. 66, no. 1, pp. 355–369, 2017.