Reachability Embeddings: Scalable Self-Supervised Representation Learning from Spatiotemporal Motion Trajectories for Multimodal Computer Vision

1 Introduction

Graphs are natural data structures for representing geospatial datasets (e.g., road networks, point clouds, 3D object meshes) with natural definitions of nodes and edges. Instead of hand-engineering task-specific and domain-specific features for nodes in graphs, recent methods metapath2vec ; node2vec ; deepwalk have focused on automatically learning low-dimensional, feature vector representations called node embeddings. In parallel, self-supervised learning has been an area of active and promising research. Self-supervised representation learning techniques utilize large datasets without semantic annotations to learn meaningful, universal features that can be conveniently transferred to solve a wide variety of downstream supervised tasks. In ganguli2021reachability , we propose a novel self-supervised method for learning representations of geographic locations from observed unlabeled GPS trajectories that can be used by itself or can be combined with other image-like data modalities to solve downstream geospatial computer vision tasks. A spatial proximity-preserving graph representation of the earth surface is inferred from observed mobility trajectories that is used to cast the geospatial representation learning task into a task of learning self-supervised node embeddings. Reachability embeddings serve as task-agnostic, feature representations of geographic locations. Using reachability embeddings as pixel representations for five different downstream geospatial tasks, cast as supervised semantic segmentation problems, we quantitatively demonstrate that reachability embeddings are semantically meaningful representations and result in 4–23% gain in performance, as measured using area under the precision-recall curve (AUPRC) metric, when compared to baseline models that use pixel representations (called Local Aggregate Representations (LAR)) that do not account for the spatial connectivity between tiles syntheticdata . Reachability embeddings transform sequential, spatiotemporal motion trajectory data into semantically meaningful image-like tensor representations that can be combined (multimodal fusion) with other data modalities (on machine learning platforms such as Trinity trinity ) that are or can be transformed into image-like tensor representations (for e.g., RBG imagery, graph embeddings of road networks, passively collected imagery like SAR, etc.) to facilitate multimodal learning in geospatial computer vision. Multimodal computer vision is critical for training machine learning models for geospatial feature detection to keep a geospatial mapping service up-to-date in real-time and can significantly improve user experience and above all, user safety.

2 The Reachability Embeddings Algorithm Proposed in ganguli2021reachability

A GPS trajectory encodes spatiotemporal movement of an object as a chronologically ordered sequence of GPS records (a tuple of timestamp and the location’s zoom-24 tile z24tiledefinition ). Let represent the set of all available GPS trajectories during the time interval such that all GPS records in each trajectory are associated with the same motion modality (e.g., driving, walking, biking). The Earth Surface Graph (ESG), , is defined as the inferred graph obtained from a raster representation of the earth’s surface based on zoom-24 tiles with these tiles as nodes, .

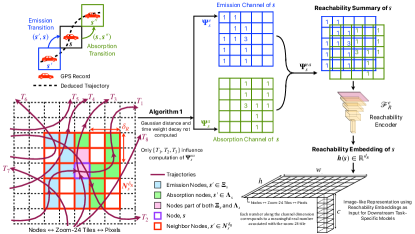

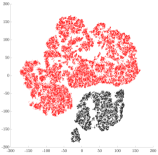

GPS trajectories are modeled as allowed Markovian paths on the ESG thereby defining the edges of the ESG, , as the observed transitions between nodes in . In summary, the following equivalences are defined: (i) zoom-24 tile Markovian state space , (ii) GPS trajectory Markovian trajectory in Path in . In ganguli2021reachability , a two stage algorithm is proposed to learn self-supervised representations for each node, , in the ESG based on the frequency of transitions from neighboring nodes to (emission transitions) and transitions from to its neighboring nodes (absorption transitions). With the intuition that transitions occurring over large spatial distances should not influence the learned representations of nodes, transitions occurring only within a user-specified neighborhood () are considered valid. The first stage comprises of a scalable, distributed, and data-parallel algorithm that exploits the Cartesian grid structure of to generate spatial proximity-preserving, image-like, tensor representations, called the reachability summary, of the transitions associated with a node (Figure 1) in for every node . In the second stage, reachability summaries are compressed to a -dimensional vector representation for each tile/node, called reachability embeddings, using the encoder of a contractive, fully-convolutional autoencoder trained to reconstruct reachability summaries. Reachability summary generation and its contractive reconstruction can together be viewed as the self-supervision pretext task to obtain reachability embeddings. The contractive regularization incentivizes robustness and invariance of embeddings to small perturbations in the summary leading embeddings of geographic locations with similar mobility activity to be close to each other (Figure 2) in the learned, low-dimensional manifold. Reachability summary generation is the expensive stage influencing the runtime of the algorithm at test time. We demonstrate the scalability of the proposed distributed, data-parallel algorithm for stage 1 using strong scaling analysis grama03 on the publicly available T-Drive dataset msrtdrive . Reachability summaries are shown to capture spatial connectivity patterns based on frequency, distance traveled, and time taken during transitions. A theoretical motivation of reachability summaries and reachability embeddings using the Chapman-Kolmogorov Equations (CKE) for Markov chains is also provided in ganguli2021reachability . Reachability embeddings are designed to be easy for storage and management by ML feature stores for efficient multimodal modeling of downstream tasks. We use the Trinity Feature Store and the Trinity ML platform trinity to store the generated embeddings and for the experiments described in the next section.

3 Qualitative and Quantitative Results

The impact of reachability embeddings on the performance of downstream tasks is evaluated by training supervised, pixel-wise, semantic segmentation models for five important downstream geospatial tasks, viz. (i) detection of overpasses, (ii) detection of pedestrian crosswalks, (iii) detection of driving access (entry/exit) points, (iv) detection of locations with traffic lights, and (v) detection of locations with stop signs. For all tasks, the UNet ronneberger2015 architecture is trained to minimize the pixel-wise binary cross-entropy between the predicted segmentation map and labels. A 60-20-20 randomly chosen training-validation-testing set is created and fixed for all subsequent experimentation. Table 1 shows the quantitative comparison of the performance for all 5 downstream tasks, quantified using AUPRC on the test set after model training converges (100 epochs), between two variants each of baseline (varying observation intervals) and reachability-based (varying embedding dimension) models. Precision-Recall curves for all tasks will be shown. Three key observations emerge: (i) increasing the observation interval (increasing signal-to-noise ratio) for computing LAR and increasing increases AUPRC — for most values of recall, precision increases due to reduction in false positives; (ii) reachability-based models outperform the baseline models, including those using LAR computed by observing 3 times more trajectories: the AUPRC gain by the inferior reachability-based model () over the superior baseline model (observation interval ) varies from 1.6% for stop signs detection task to 18.4% for the access point detection task; (iii) for the same observation time interval, , simply replacing the LAR-based inputs by reachability embeddings () results in an AUPRC gain that varies from 4.1% for the stop signs detection task to 23.3% for the access point detection task. These observations conclusively demonstrate that reachability embeddings are more informative, denser representations of trajectory data requiring lesser trajectories (upto 67% less) to compute. Thus, reachability embeddings may be used to compute semantically meaningful representations of trajectories in geographical areas with less traffic or to build computer vision-based models for low-resource geospatial tasks. Additional results of using reachability embeddings in multimodal settings (combining with satellite imagery and road network graph via early fusion) and qualitative comparison of model predictions using reachability embeddings as inputs ganguli2021reachability will be shown.

| Input Channels | Observation Interval | Overpass Detection | Crosswalk Detection | Access Point Detection | Traffic Lights Detection | Stop Signs Detection |

|---|---|---|---|---|---|---|

| LAR Baseline | 0.782 | 0.922 | 0.663 | 0.890 | 0.921 | |

| LAR Baseline | 0.785 (+0.4%) | 0.923 (+0.1%) | 0.684 (+3.0%) | 0.925 (+3.8%) | 0.924 (+0.3%) | |

| Reachability, | 0.899 (+15.0%) | 0.959 (+4.0%) | 0.843 (+21.4%) | 0.974 (+8.6%) | 0.938 (+1.9%) | |

| Reachability, | 0.925 (+18.3%) | 0.976 (+5.9%) | 0.864 (+23.3%) | 0.985 (+9.6%) | 0.959 (+4.1%) |

References

- [1] Definition of zoom-24 tiles. A tile resulting from viewing the spherical Mercator projection coordinate system (EPSG:3857) [3] of earth as a grid. This corresponds to a spatial resolution of approximately 2.38 m at the equator. use of finite area tiles mitigate handling real-valued latitude-longitude gps location pairs. the tile represents all such latitude-longitude pairs within itself.

- [2] Y. Dong, N. V. Chawla, and A. Swami. metapath2vec: Scalable representation learning for heterogeneous networks. In Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, pages 135–144, 2017.

- [3] EPSG Geodetic Parameter Registry. EPSG:3857. https://epsg.io/3857.

- [4] S. Ganguli, C. Iyer, and V. Pandey. Reachability Embeddings: Scalable self-supervised representation learning from mobility trajectories for geospatial computer vision. In Proceedings of the 23nd IEEE International Conference on Mobile Data Management (MDM) 2022, pages 44–53, 2022.

- [5] A. Grama et al. Introduction to parallel computing. Pearson Education, 2003.

- [6] A. Grover and J. Leskovec. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining, pages 855–864, 2016.

- [7] C. V. K. Iyer, F. Hou, H. Wang, Y. Wang, K. Oh, S. Ganguli, and V. Pandey. Trinity: A no-code AI platform for complex spatial datasets. In Proceedings of the 4th ACM SIGSPATIAL International Workshop on AI for Geographic Knowledge Discovery, page 33–42, 2021.

- [8] B. Perozzi, R. Al-Rfou, and S. Skiena. DeepWalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, page 701–710, 2014.

- [9] O. Ronneberger et al. U-Net: CNNs for biomedical image segmentation. arXiv:1505.04597, 2015.

- [10] X. Xiao, S. Ganguli, and V. Pandey. VAE-Info-cGAN: Generating synthetic images by combining pixel-Level and feature-Level geospatial conditional inputs. In Proceedings of the 13th ACM SIGSPATIAL International Workshop on Computational Transportation Science, 2020.

- [11] J. Yuan, Y. Zheng, X. Xie, and G. Sun. Driving with knowledge from the physical world. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, page 316–324, 2011.