Recovering Accurate Labeling Information from Partially Valid Data for Effective Multi-Label Learning

Abstract

Partial Multi-label Learning (PML) aims to induce a multi-label predictor from datasets with noisy supervision, where each training instance is associated with several candidate labels of which only some are valid. To address the noise, existing PML methods basically recover the ground-truth labels by leveraging the ground-truth confidence of each candidate label, i.e., the likelihood of a candidate label being a ground-truth one. However, they neglect the information from non-candidate labels, which potentially contributes to the ground-truth label recovery. In this paper, we propose to recover the ground-truth labels, i.e., to estimate the ground-truth confidences, from the label enrichment, which is composed of the relevance degrees of candidate labels and the irrelevance degrees of non-candidate labels. Building on this idea, we develop a novel two-stage PML method, namely Partial Multi-Label Learning with Label Enrichment-Recovery (PML3er): in the first stage, it estimates the label enrichment with unconstrained label propagation; in the second stage, it jointly learns the ground-truth confidence and the multi-label predictor given the label enrichment. Experimental results validate that PML3er outperforms the state-of-the-art PML methods.

1 Introduction

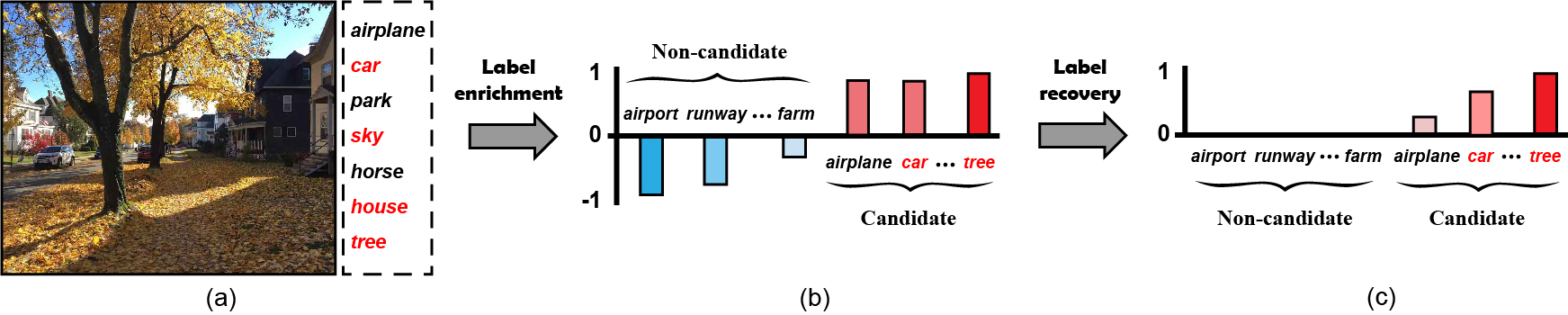

Partial Multi-label Learning (PML), a novel learning paradigm with noisy supervision, has drawn increasing attention from the machine learning community Fang and Zhang (2019); Sun et al. (2019). It aims to induce a multi-label predictor from PML datasets, in which each training instance is associated with multiple candidate labels that are only partially valid. Such datasets arise in many real-world applications where collecting accurate supervision is expensive, e.g., crowdsourced annotation. To visualize this, we illustrate a PML image instance in Fig.1(a): an annotator may roughly select more candidate labels so as to cover all ground-truth labels, but inevitably includes several irrelevant ones, which poses a major challenge for learning from such noisy PML training instances.

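Formally speaking, we are given a PML dataset $\mathcal{D}=\{(\mathbf{x}_i,\mathbf{y}_i)\}_{i=1}^{n}$ with $n$ instances and $l$ labels, where $\mathbf{x}_i \in \mathbb{R}^{d}$ denotes the feature vector and $\mathbf{y}_i \in \{0,1\}^{l}$ the candidate label set of $\mathbf{x}_i$. For $\mathbf{y}_i$, a value of 0 or 1 indicates that the corresponding label is a non-candidate or a candidate label, respectively. Let $\mathbf{y}^{*}_i \in \{0,1\}^{l}$ denote the (unknown) ground-truth label set of instance $\mathbf{x}_i$. Specifically, for each instance $\mathbf{x}_i$, its ground-truth label set $\mathbf{y}^{*}_i$ is covered by the candidate label set $\mathbf{y}_i$, i.e., $\mathbf{y}^{*}_i \subseteq \mathbf{y}_i$. Accordingly, the task of PML is to induce a multi-label predictor $f: \mathbb{R}^{d} \rightarrow \{0,1\}^{l}$ from $\mathcal{D}$.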

To solve the problem, several attempts have been made Xie and Huang (2018); Yu et al. (2018); Fang and Zhang (2019); Sun et al. (2019). Their basic idea is to recover the ground-truth labels by leveraging the ground-truth confidence of each candidate label, i.e., the likelihood of a candidate label being a ground-truth one, and to learn with this confidence instead of the raw candidate labels. For example, an early PML framework Fang and Zhang (2019) estimates the ground-truth confidences via label propagation over an instance neighbor graph, following the intuition that neighboring instances tend to share the same labels; another work Sun et al. (2019) recovers the ground-truth confidences by decomposing the candidate labels under a low-rank scheme.

Revisiting the existing PML methods, we find that they basically estimate the ground-truth confidence from candidate label annotations alone, and neglect the information from non-candidate labels, which potentially contributes to the ground-truth label recovery. The irrelevance degree of a non-candidate label, i.e., the degree to which a non-candidate label is irrelevant to the instance, helps distinguish the irrelevant noisy labels within candidate label sets, since a candidate label tends to be an irrelevant noisy one if its highly correlated labels are given high irrelevance degrees. For example, in Fig.1(b) and (c), the candidate label “airplane” is more likely to be an irrelevant noisy label, since its highly correlated labels, e.g., “airport” and “runway”, have high irrelevance degrees for the example image instance.

Based on the above analysis, we propose to estimate the ground-truth confidences over both candidate and non-candidate labels. In particular, we develop a novel two-stage PML method, namely Partial Multi-Label Learning with Label Enrichment-Recovery (PML3er). In the first stage, we estimate the label enrichment, composed of the relevance degrees of candidate labels (i.e., the complement of the irrelevance degree) and the irrelevance degrees of non-candidate ones, using an unconstrained label propagation procedure. In the second stage, we jointly train the ground-truth confidence and the multi-label predictor given the label enrichment learned in the first stage. We conduct extensive experiments to validate the effectiveness of PML3er.

The contributions of this paper are summarized below:

- We propose PML3er, which leverages the information from both candidate and non-candidate labels.
- PML3er estimates the label enrichment using unconstrained label propagation, and then trains the multi-label predictor and recovers the labels simultaneously.
- We conduct extensive experiments to validate the effectiveness of PML3er.

2 Related Work

2.1 Partial Multi-label Learning

Abundant research has been devoted to Partial Label Learning (PLL), where each training instance is annotated with a candidate label set of which only one label is valid Cour et al. (2011); Liu and Dietterich (2012); Chen et al. (2014); Zhang et al. (2017); Yu and Zhang (2017); Wu and Zhang (2018); Gong et al. (2018); Chen et al. (2018); Feng and An (2018, 2019b, 2019a); Wang et al. (2019). The core idea of PLL follows the spirit of disambiguation, i.e., identifying the ground-truth label from the candidate label set of each instance. PLL can be deemed a special case of PML in which the number of ground-truth labels is fixed to one. Naturally, PML is more challenging than PLL, since even the number of ground-truth labels is unknown.

The existing PML methods mainly recover the ground-truth labels by estimating the ground-truth confidences Xie and Huang (2018); Yu et al. (2018); Fang and Zhang (2019); Sun et al. (2019). Two PML methods are proposed in Xie and Huang (2018), i.e., Partial Multi-label Learning with label correlation (PML-lc) and with feature prototype (PML-fp), both of which build on a ranking loss objective weighted by ground-truth confidences. Another method Sun et al. (2019), namely Partial Multi-label Learning by Low-Rank and Sparse decomposition (PML-LRS), trains the predictor with ground-truth confidences under a low-rank assumption. Besides them, the two-stage PML framework of Fang and Zhang (2019), i.e., PARTIal multi-label learning via Credible Label Elicitation (Particle), estimates the ground-truth confidences by label propagation, and then trains the predictor over only the candidate labels with high confidences. Two traditional methods, Virtual Label Splitting (VLS) and Maximum A Posteriori (MAP), are used in its second stage, leading to two versions, i.e., Particle-Vls and Particle-Map.

Orthogonal to those methods, our PML3er estimates the ground-truth confidence using the label enrichment involving both candidate and non-candidate labels to recover more accurate supervision.

2.2 Learning with Label Enrichment

Learning with label enrichment, also known as label enhancement, explores richer label information, e.g., the label membership degrees of instances, to enhance the performance Gayar et al. (2006); Jiang et al. (2006); Li et al. (2015); Hou et al. (2016); Xu et al. (2018). The existing methods achieve the label enrichment using the similarity knowledge of instances by various schemes, such as fuzzy clustering Gayar et al. (2006), label propagation Li et al. (2015), manifold learning Hou et al. (2016) etc. They have been applied to the paradigms of multi-label learning and label distribution learning with accurate annotations.

Our PML3er also relies on label enrichment, but it targets the PML scenario with noisy supervision.

3 The PML3er Algorithm

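Following the notations in Section 1, we denote by $\mathbf{X} = [\mathbf{x}_1, \ldots, \mathbf{x}_n]^{\top} \in \mathbb{R}^{n \times d}$ and $\mathbf{Y} = [\mathbf{y}_1, \ldots, \mathbf{y}_n]^{\top} \in \{0,1\}^{n \times l}$ the instance matrix and candidate label matrix, respectively. For each instance $\mathbf{x}_i$, $y_{ij}=1$ means that the $j$-th label is a candidate label; otherwise, it is a non-candidate one.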

Given a PML dataset , our PML3er first estimates the label enrichment, which describes the relevance degrees of candidate labels and irrelevance degrees of non-candidate ones. Specifically, for each instance , we denote the corresponding label enrichment as follows:

| (1) |

referring to the example image instance in Fig.1(b). Further, we denote by the label enrichment matrix. Then, PML3er induces the multi-label predictor from the enrichment version of PML dataset .

We now describe the two stages of PML3er, i.e., label enrichment by unconstrained propagation and jointly learning the ground-truth confidence and multi-label predictor.

3.1 Label Enrichment by Unconstrained Propagation

In the first stage, PML3er estimates the label enrichment matrix . Inspired by Li et al. (2015); Fang and Zhang (2019), we develop an unconstrained label propagation procedure, which estimates the labeling degrees by progressively propagating annotation information over a weighted k-Nearest Neighbor (kNN) graph over the instances. The intuition is that the candidate and non-candidate labels with more accumulations during the kNN propagation tend to be with higher relevance degrees and lower irrelevance degrees, respectively.

We describe the detailed steps of the unconstrained label propagation procedure below.

[Step 1]: After constructing the kNN graph , for each instance , we compute its reconstruction weight vector of kNNs, i.e., , by minimizing the following objective:

| (2) |

where denotes the kNNs of . This objective can be efficiently solved by any off-the-shelf Quadratic Programming (QP) solver (we apply the public QP solver of mosek, downloaded at https://www.mosek.com/). Solving the problem of Eq.(2) for each instance yields the reconstruction weight matrix. Then, we normalize the reconstruction weight matrix by row.
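Step 1 can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that the objective of Eq.(2) is the non-negative least-squares reconstruction of each instance from its k nearest neighbours (as in the cited label-propagation works), it replaces the QP solver by a simple projected-gradient loop, and it assumes that row normalization means dividing each row by its sum. All function names are hypothetical.

```python
import numpy as np

def knn_indices(X, k):
    """Indices of the k nearest neighbours (Euclidean) of every row of X, excluding itself."""
    sq = np.sum(X ** 2, axis=1)
    dist = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    np.fill_diagonal(dist, np.inf)          # an instance is never its own neighbour
    return np.argsort(dist, axis=1)[:, :k]

def nonneg_reconstruction_weights(x, neighbours, n_steps=500):
    """Projected gradient for min_w 0.5 * ||x - neighbours.T @ w||^2 subject to w >= 0."""
    A = neighbours.T                         # shape (d, k)
    gram = A.T @ A
    step = 1.0 / (np.linalg.norm(gram, 2) + 1e-12)   # 1 / Lipschitz constant of the gradient
    w = np.full(A.shape[1], 1.0 / A.shape[1])
    for _ in range(n_steps):
        grad = gram @ w - A.T @ x
        w = np.maximum(w - step * grad, 0.0)  # gradient step, then projection onto w >= 0
    return w

def reconstruction_weight_matrix(X, k):
    """Row-normalized n x n matrix of kNN reconstruction weights (assumed normalization)."""
    n = X.shape[0]
    W = np.zeros((n, n))
    nbrs = knn_indices(X, k)
    for i in range(n):
        W[i, nbrs[i]] = nonneg_reconstruction_weights(X[i], X[nbrs[i]])
    row_sums = W.sum(axis=1, keepdims=True)
    return W / np.maximum(row_sums, 1e-12)
```

A dedicated QP or non-negative least-squares solver would normally be preferable to the fixed-step loop used here; the loop only keeps the sketch dependency-free.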

[Step 2]: Following the idea that the relationships of instances can be translated to their associated labels, we can enrich the labeling information by propagating over with . Formally, we denote by the label propagation solution, which is initialized by the candidate label matrix , i.e., . Then, can be iteratively updated by propagating over with until convergence. At each iteration , the update equation is given by:

| (3) |

where is the propagation rate. To avoid overestimating non-candidate labels, we normalize each row of :

| (4) |

where denotes the minimum of , and the maximum over candidate labels in .

[Step 3]: After obtaining the optimal , denoted by , we compute the label enrichment matrix as follows:

| (5) |

For clarity, we summarize the unconstrained label propagation for in Algorithm 1.
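The iterative propagation of Step 2 can be illustrated with a short sketch. It assumes the standard label-propagation update, in which the current solution is mixed with the initial candidate labels as `alpha * V @ F + (1 - alpha) * Y`; the exact form of Eq.(3), the row normalization of Eq.(4) and the mapping to the enrichment matrix in Eq.(5) are not reproduced here. The function name, tolerance and iteration cap are hypothetical.

```python
import numpy as np

def propagate_labels(V, Y, alpha, tol=1e-6, max_iter=1000):
    """Iterate F <- alpha * V @ F + (1 - alpha) * Y until the update is smaller than tol.

    V     : (n, n) row-normalized reconstruction weight matrix
    Y     : (n, l) binary candidate label matrix, used as the initial solution
    alpha : propagation rate in (0, 1)
    """
    Y = np.asarray(Y, dtype=float)
    F = Y.copy()
    for _ in range(max_iter):
        F_next = alpha * (V @ F) + (1.0 - alpha) * Y
        if np.max(np.abs(F_next - F)) < tol:
            return F_next
        F = F_next
    return F
```

Because no entry is clamped to zero, non-candidate labels can also accumulate mass from neighbouring instances, which is the information the label enrichment is meant to capture. For a row-stochastic `V`, the iteration converges to `(1 - alpha) * inv(I - alpha * V) @ Y`.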

3.2 Jointly Learning the Ground-truth Confidence and Multi-label Predictor

In the second stage, PML3er jointly trains the ground-truth confidence matrix and multi-label predictor given the enrichment version of PML dataset .

First, we aim to recover from by leveraging the following minimization:

| (6) |

where denotes the label correlation matrix, and the all zero matrix. Specifically for capturing local label correlations, we utilize the nuclear regularizer for , i.e., , with the regularization parameter .

Second, we aim to train a linear multi-label predictor with by leveraging a least square minimization with a squared Frobenius norm regularization:

| (7) |

where is the predictive parameter matrix, and the regularization parameter.

Discussion on Recovering from .

Referring to Eq.(6), we jointly learn the ground-truth confidence matrix and label correlation matrix by minimizing their reconstruction error of . By omitting the regularizers, it can be roughly re-expressed below:

Obviously, for each component , it contributes that any tends to be larger or smaller given larger value of (i.e., a higher correlation between label and ), if corresponds to a candidate label () or a non-candidate one (). That is, we actually recover using the information from candidate and non-candidate labels simultaneously.

3.2.1 Optimization

As directly solving the objective of Eq.(8) is intractable, we optimize the variables of interest, i.e., , in an alternating fashion, updating one variable with the other two fixed. This process is repeated until convergence or until the maximum number of iterations is reached. We describe the update equations of one by one.

[Update ] When {} are fixed, the sub-objective with respect to can be reformulated as follows:

| (9) |

This sub-problem is convex, which yields the following truncated update equation:

| (10) |

where :

[Update ] When {} are fixed, the sub-objective with respect to can be reformulated as follows:

| (11) |

Following the spirit of Alternating Direction Method of Multipliers (ADMM) Boyd et al. (2011), we convert Eq.(11) into an augmented Lagrange problem with an auxiliary matrix and Lagrange parameter matrix :

| (12) |

where is the penalty parameter. We perform an inner iteration to alternately optimize each of with the other two fixed. After some simple algebra, the update equations are formulated as follows:

| (13) |

For , it can be directly solved by

| (14) |

where is the singular value thresholding operator with Cai et al. (2010). Then, for , it can be updated by:

| (15) |
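The singular value thresholding operator of Cai et al. (2010) used in the auxiliary-matrix update can be sketched as below. The threshold `tau` is left as a generic argument; in the ADMM update it would be determined by the nuclear-norm regularization parameter and the penalty parameter, whose exact combination is not reproduced here. The function name is hypothetical.

```python
import numpy as np

def singular_value_threshold(A, tau):
    """Soft-threshold the singular values of A by tau.

    This is the proximal operator of tau * (nuclear norm) evaluated at A.
    """
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)      # shrink each singular value, clip at zero
    return (U * s_shrunk) @ Vt               # scale the columns of U, then recombine
```

Singular values below `tau` are set to zero, so the operator lowers the rank of its input, which is how the nuclear-norm regularizer encourages a low-rank label correlation matrix.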

[Update ] When {} are fixed, the sub-objective with respect to can be reformulated as follows:

| (16) |

The problem has an analytic solution as follows:

| (17) |

For clarity, we summarize the procedure of this predictive model induction in Algorithm 2.
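The analytic update of the predictive parameter matrix is a ridge-regression solution. The sketch below assumes the sub-objective has the form `||C - X @ W||_F^2 + lam * ||W||_F^2`, where `C` stands for the current ground-truth confidence matrix; the scaling constants of the paper's Eq.(16) may differ, and the names `ridge_predictor`, `C` and `lam` are hypothetical.

```python
import numpy as np

def ridge_predictor(X, C, lam):
    """Closed-form minimizer of ||C - X @ W||_F^2 + lam * ||W||_F^2.

    X   : (n, d) instance matrix
    C   : (n, l) target matrix (here, the current confidence estimates)
    lam : positive regularization parameter
    """
    d = X.shape[1]
    # Solve (X^T X + lam * I) W = X^T C without forming an explicit inverse.
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ C)
```

Using `np.linalg.solve` rather than an explicit matrix inverse is a numerical-stability choice; both correspond to the same closed form.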

3.3 PML3er Summary

We describe some implementation details of PML3er. First, following Fang and Zhang (2019), we fix the parameters of the unconstrained label propagation as: and . Second, we empirically fix the penalty parameter of ADMM as 1. Third, the maximum iteration number of both ADMM loops is set to 5, since ADMM is widely known to converge quickly in practice. Overall, the PML3er algorithm is outlined in Algorithm 3.

Time Complexity.

We also discuss the time complexity of PML3er. First, in the unconstrained label propagation procedure, we require time to construct the weighted kNN graph, and time to obtain the label enrichment matrix, referring to Eq.(3), where denotes its iteration number. Second, in predictive model induction, the major computational costs include matrix inversion and SVD, requiring roughly time, where denotes the iteration number of the outer loop (here, we omit the iteration number of the inner ADMM loop for , since it converges very fast). Therefore, the total time complexity of PML3er is .

4 Experiment

4.1 Experimental Setup

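Table 1: Statistics of the original multi-label datasets (#AL: average number of labels per instance).

| Dataset | #Instances | #Features | #Labels | #AL | Domain |
|---|---|---|---|---|---|
| Genbase | 662 | 1186 | 27 | 1.252 | biology |
| Medical | 978 | 1449 | 45 | 1.245 | text |
| Arts | 5000 | 462 | 26 | 1.636 | text |
| Corel5k | 5000 | 499 | 374 | 3.522 | images |
| Bibtex | 7395 | 1836 | 159 | 2.406 | text |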

Table 2: Experimental results (mean ± standard deviation) in terms of RLoss, AP and Macro-F1.

| Dataset | a | PML3er (Ours) | Particle-Map | PML-LRS | PML-fp | ML-kNN | Lift |
|---|---|---|---|---|---|---|---|
| RLoss | | | | | | | |
| Genbase | 50 | .005 ± .002 | .010 ± .002 | .006 ± .002 | .019 ± .006 | .012 ± .003 | .009 ± .003 |
| | 100 | .005 ± .003 | .010 ± .002 | .005 ± .003 | .018 ± .007 | .014 ± .006 | .008 ± .004 |
| | 150 | .008 ± .003 | .010 ± .001 | .009 ± .002 | .019 ± .010 | .019 ± .006 | .012 ± .004 |
| | 200 | .007 ± .003 | .009 ± .001 | .010 ± .003 | .014 ± .004 | .017 ± .005 | .012 ± .005 |
| Medical | 50 | .028 ± .005 | .048 ± .005 | .033 ± .006 | .042 ± .009 | .075 ± .009 | .044 ± .005 |
| | 100 | .030 ± .006 | .050 ± .006 | .032 ± .006 | .043 ± .011 | .078 ± .012 | .046 ± .007 |
| | 150 | .034 ± .007 | .054 ± .005 | .035 ± .007 | .042 ± .009 | .094 ± .012 | .055 ± .006 |
| | 200 | .031 ± .005 | .049 ± .004 | .035 ± .007 | .043 ± .015 | .088 ± .012 | .056 ± .008 |
| Arts | 50 | .154 ± .003 | .142 ± .002 | .162 ± .002 | .132 ± .002 | .165 ± .003 | .137 ± .003 |
| | 100 | .162 ± .004 | .152 ± .002 | .170 ± .005 | .131 ± .002 | .166 ± .003 | .143 ± .005 |
| | 150 | .175 ± .002 | .158 ± .003 | .186 ± .003 | .140 ± .004 | .174 ± .005 | .155 ± .003 |
| | 200 | .180 ± .002 | .165 ± .003 | .192 ± .003 | .146 ± .001 | .172 ± .004 | .156 ± .004 |
| Corel5k | 50 | .174 ± .002 | .128 ± .002 | .206 ± .002 | .198 ± .006 | .146 ± .002 | .144 ± .001 |
| | 100 | .179 ± .003 | .132 ± .002 | .216 ± .003 | .178 ± .008 | .152 ± .001 | .154 ± .002 |
| | 150 | .185 ± .003 | .134 ± .002 | .230 ± .004 | .169 ± .003 | .156 ± .002 | .164 ± .002 |
| | 200 | .186 ± .002 | .135 ± .002 | .232 ± .003 | .176 ± .005 | .161 ± .003 | .175 ± .003 |
| Bibtex | 50 | .094 ± .002 | .190 ± .004 | .126 ± .003 | .112 ± .004 | .240 ± .002 | .121 ± .003 |
| | 100 | .100 ± .002 | .187 ± .003 | .138 ± .002 | .107 ± .003 | .250 ± .003 | .130 ± .004 |
| | 150 | .112 ± .002 | .187 ± .002 | .157 ± .002 | .109 ± .003 | .260 ± .003 | .143 ± .003 |
| | 200 | .116 ± .001 | .189 ± .005 | .165 ± .001 | .111 ± .001 | .266 ± .009 | .145 ± .002 |
| AP | | | | | | | |
| Genbase | 50 | .991 ± .005 | .978 ± .005 | .988 ± .004 | .981 ± .006 | .968 ± .007 | .982 ± .006 |
| | 100 | .992 ± .004 | .978 ± .004 | .989 ± .004 | .981 ± .007 | .967 ± .008 | .984 ± .003 |
| | 150 | .988 ± .003 | .978 ± .004 | .979 ± .002 | .976 ± .011 | .958 ± .011 | .979 ± .008 |
| | 200 | .989 ± .003 | .979 ± .003 | .981 ± .005 | .979 ± .006 | .964 ± .014 | .977 ± .011 |
| Medical | 50 | .882 ± .014 | .798 ± .018 | .853 ± .021 | .852 ± .023 | .742 ± .029 | .830 ± .017 |
| | 100 | .881 ± .018 | .791 ± .021 | .861 ± .021 | .855 ± .022 | .741 ± .029 | .822 ± .015 |
| | 150 | .867 ± .022 | .781 ± .024 | .858 ± .018 | .845 ± .019 | .720 ± .033 | .804 ± .006 |
| | 200 | .870 ± .016 | .768 ± .013 | .855 ± .020 | .853 ± .029 | .715 ± .034 | .797 ± .020 |
| Arts | 50 | .598 ± .003 | .528 ± .004 | .588 ± .003 | .577 ± .007 | .488 ± .005 | .595 ± .005 |
| | 100 | .597 ± .004 | .513 ± .004 | .584 ± .005 | .578 ± .005 | .486 ± .005 | .591 ± .007 |
| | 150 | .577 ± .005 | .499 ± .006 | .564 ± .005 | .558 ± .005 | .478 ± .006 | .577 ± .003 |
| | 200 | .572 ± .003 | .491 ± .006 | .557 ± .005 | .554 ± .005 | .477 ± .003 | .578 ± .005 |
| Corel5k | 50 | .295 ± .004 | .263 ± .005 | .282 ± .003 | .240 ± .003 | .233 ± .003 | .244 ± .005 |
| | 100 | .293 ± .004 | .260 ± .003 | .276 ± .004 | .242 ± .003 | .229 ± .003 | .217 ± .004 |
| | 150 | .289 ± .004 | .264 ± .005 | .266 ± .003 | .241 ± .003 | .226 ± .003 | .194 ± .003 |
| | 200 | .288 ± .004 | .260 ± .004 | .266 ± .004 | .241 ± .003 | .224 ± .003 | .185 ± .005 |
| Bibtex | 50 | .567 ± .004 | .383 ± .007 | .532 ± .003 | .517 ± .003 | .295 ± .004 | .487 ± .007 |
| | 100 | .555 ± .004 | .380 ± .006 | .509 ± .005 | .522 ± .005 | .282 ± .005 | .467 ± .007 |
| | 150 | .536 ± .004 | .369 ± .003 | .476 ± .006 | .519 ± .004 | .270 ± .004 | .448 ± .007 |
| | 200 | .528 ± .006 | .365 ± .004 | .460 ± .005 | .511 ± .006 | .266 ± .005 | .440 ± .007 |
| Macro-F1 | | | | | | | |
| Genbase | 50 | .710 ± .029 | .543 ± .053 | .680 ± .015 | .598 ± .023 | .622 ± .022 | .619 ± .044 |
| | 100 | .722 ± .044 | .522 ± .017 | .710 ± .027 | .594 ± .056 | .594 ± .022 | .600 ± .039 |
| | 150 | .649 ± .033 | .536 ± .034 | .618 ± .039 | .603 ± .030 | .540 ± .016 | .566 ± .046 |
| | 200 | .652 ± .054 | .533 ± .019 | .559 ± .033 | .603 ± .026 | .562 ± .058 | .579 ± .039 |
| Medical | 50 | .405 ± .027 | .270 ± .013 | .301 ± .016 | .296 ± .015 | .243 ± .034 | .309 ± .022 |
| | 100 | .363 ± .017 | .254 ± .015 | .314 ± .021 | .320 ± .020 | .235 ± .020 | .293 ± .015 |
| | 150 | .348 ± .026 | .238 ± .006 | .316 ± .013 | .294 ± .014 | .208 ± .016 | .264 ± .010 |
| | 200 | .373 ± .024 | .227 ± .016 | .315 ± .015 | .315 ± .036 | .192 ± .019 | .266 ± .020 |
| Arts | 50 | .244 ± .012 | .201 ± .007 | .220 ± .008 | .159 ± .008 | .123 ± .007 | .249 ± .009 |
| | 100 | .251 ± .012 | .190 ± .004 | .227 ± .006 | .156 ± .004 | .119 ± .008 | .247 ± .006 |
| | 150 | .240 ± .007 | .180 ± .005 | .229 ± .006 | .129 ± .004 | .112 ± .010 | .237 ± .009 |
| | 200 | .226 ± .006 | .184 ± .007 | .210 ± .006 | .126 ± .004 | .108 ± .005 | .217 ± .005 |
| Corel5k | 50 | .040 ± .001 | .027 ± .001 | .039 ± .001 | .005 ± .000 | .020 ± .001 | .046 ± .002 |
| | 100 | .038 ± .000 | .028 ± .002 | .038 ± .001 | .004 ± .000 | .020 ± .002 | .040 ± .002 |
| | 150 | .037 ± .001 | .034 ± .002 | .037 ± .001 | .004 ± .000 | .019 ± .002 | .035 ± .002 |
| | 200 | .036 ± .000 | .032 ± .002 | .032 ± .000 | .004 ± .000 | .018 ± .001 | .033 ± .001 |
| Bibtex | 50 | .375 ± .002 | .163 ± .004 | .359 ± .004 | .299 ± .006 | .133 ± .001 | .299 ± .008 |
| | 100 | .360 ± .002 | .157 ± .004 | .332 ± .004 | .311 ± .002 | .120 ± .004 | .271 ± .008 |
| | 150 | .340 ± .002 | .146 ± .003 | .296 ± .006 | .306 ± .007 | .109 ± .001 | .247 ± .009 |
| | 200 | .331 ± .004 | .145 ± .005 | .278 ± .004 | .301 ± .002 | .103 ± .005 | .232 ± .010 |

4.1.1 Datasets

In the experiment, we downloaded five public multi-label datasets from the Mulan website (http://mulan.sourceforge.net/datasets-mlc.html), including Genbase, Medical, Arts, Corel5k and Bibtex. The statistics of those datasets are outlined in Table 1.

To conduct experiments under the scenario of noisy supervision, we generate synthetic PML datasets from each original dataset by randomly drawing irrelevant noisy labels. Specifically, for each instance, we create the candidate label set by adding randomly drawn irrelevant labels, whose number equals a% of the number of its ground-truth labels, where we vary a over {50, 100, 150, 200} (the noise levels listed in Table 2). Besides, to avoid useless PML instances annotated with all labels, we cap the size of each candidate label set below the total number of labels. Accordingly, a total of twenty synthetic PML datasets are generated.
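The noise-injection protocol can be sketched as follows. Two details are assumptions made only for this illustration: the number of noisy labels is rounded up to the next integer, and the candidate set is capped at one fewer than the total number of labels. The function name is hypothetical.

```python
import numpy as np

def make_candidate_labels(Y_true, a, rng):
    """Build candidate label sets by adding a% (of the ground-truth count) irrelevant labels.

    Y_true : (n, l) binary ground-truth label matrix
    a      : integer noise level in percent, e.g. 50, 100, 150 or 200
    rng    : numpy random Generator
    """
    Y_true = np.asarray(Y_true, dtype=int)
    n, l = Y_true.shape
    Y_cand = Y_true.copy()
    for i in range(n):
        relevant = np.flatnonzero(Y_true[i] == 1)
        irrelevant = np.flatnonzero(Y_true[i] == 0)
        n_noise = (a * relevant.size + 99) // 100             # integer ceiling (assumed rounding)
        room = max(l - 1 - relevant.size, 0)                   # assumed cap: at most l - 1 candidates
        n_noise = min(n_noise, irrelevant.size, room)
        if n_noise > 0:
            noisy = rng.choice(irrelevant, size=n_noise, replace=False)
            Y_cand[i, noisy] = 1
    return Y_cand
```

By construction every ground-truth label remains a candidate, so the covering property of PML datasets holds for the generated data.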

4.1.2 Baseline Methods

We employed five baseline methods for comparison, including three PML methods and two traditional methods of Multi-label Learning (ML). For the ML ones, we directly train them over the synthetic PML datasets by considering all candidate labels as ground-truth ones. We outline the method-specific settings below.

- PML-fp Xie and Huang (2018): A PML method with a ranking loss objective weighted by ground-truth confidences. We utilize the code provided by its authors, and tune the parameters following the original paper. The other version of Xie and Huang (2018), i.e., PML-lc, is omitted, since it performed worse than PML-fp in our early experiments.
- Particle-Map Fang and Zhang (2019): A two-stage PML method with label propagation. We employ the public code (http://palm.seu.edu.cn/zhangml/files/PARTICLE.rar), and tune the parameters following the original paper. The other version of Fang and Zhang (2019), i.e., Particle-Vls, is omitted, since it performed worse than Particle-Map in our early experiments.
- PML-LRS Sun et al. (2019): A PML method with candidate label decomposition. We utilize the code provided by its authors, and tune the regularization parameters using cross-validation.
- Multi-Label k Nearest Neighbor (ML-kNN) Zhang and Zhou (2007): A kNN ML method. We employ the public code (http://palm.seu.edu.cn/zhangml/files/ML-kNN.rar) implemented by its authors, and tune its parameters following the original paper.
- Multi-label learning with Label specIfic FeaTures (Lift) Zhang and Wu (2015): A binary relevance ML method. We employ the public code (http://palm.seu.edu.cn/zhangml/files/LIFT.rar) implemented by its authors, and tune its parameters following the original paper.

For our PML3er, the regularization parameter is fixed to 1, and is tuned over using 5-fold cross validation results.

4.1.3 Evaluation Metrics

We employed seven evaluation metrics Zhang and Zhou (2014), including Subset Accuracy (SAccuracy), Hamming Loss (HLoss), One Error (OError), Ranking Loss (RLoss), Average Precision (AP), Macro Averaging F1 (Macro-F1), Micro Averaging F1 (Micro-F1), where both instance-based and label-based metrics are covered. For SAccuracy, AP, Macro-F1 and Micro-F1, the higher value is better, while for HLoss, OError and RLoss, a smaller value is better.
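As an illustration of two of these metrics, the sketch below computes Ranking Loss from real-valued label scores and Hamming Loss from binary predictions. It follows the common definitions (ties between a relevant and an irrelevant label are counted as mis-ordered in Ranking Loss); the function names are hypothetical and the evaluation code actually used in the experiments is not shown in the paper.

```python
import numpy as np

def ranking_loss(Y_true, scores):
    """Average fraction of (relevant, irrelevant) label pairs that are mis-ordered."""
    Y_true = np.asarray(Y_true)
    scores = np.asarray(scores, dtype=float)
    losses = []
    for y, s in zip(Y_true, scores):
        pos, neg = s[y == 1], s[y == 0]
        if pos.size == 0 or neg.size == 0:
            continue                          # the loss is undefined for such instances
        losses.append(np.mean(pos[:, None] <= neg[None, :]))
    return float(np.mean(losses)) if losses else 0.0

def hamming_loss(Y_true, Y_pred):
    """Fraction of instance-label entries that are predicted incorrectly."""
    return float(np.mean(np.asarray(Y_true) != np.asarray(Y_pred)))
```

Both are losses, so smaller values indicate better performance.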

Table 3: Win/tie/loss counts of the pairwise t-test (at the 0.05 significance level) between PML3er and each baseline method.

| Baseline Method | SAccuracy | HLoss | OError | RLoss | AP | Macro-F1 | Micro-F1 | Total |
|---|---|---|---|---|---|---|---|---|
| Particle-Map | 20/0/0 | 16/4/0 | 20/0/0 | 12/0/8 | 20/0/0 | 20/0/0 | 20/0/0 | 128/4/8 |
| PML-LRS | 17/2/1 | 9/11/0 | 20/0/0 | 17/3/0 | 20/0/0 | 18/2/0 | 20/0/0 | 121/18/1 |
| PML-fp | 20/0/0 | 18/2/0 | 19/1/0 | 11/1/8 | 20/0/0 | 20/0/0 | 20/0/0 | 128/4/8 |
| ML-kNN | 20/0/0 | 20/0/0 | 20/0/0 | 14/0/6 | 20/0/0 | 20/0/0 | 20/0/0 | 134/0/6 |
| Lift | 19/0/1 | 17/3/0 | 17/2/1 | 12/0/8 | 18/1/1 | 17/0/3 | 19/1/0 | 119/7/14 |

4.2 Experimental Results

For each PML dataset, we randomly generate five 50%/50% training/test splits, and report the average scores (± standard deviation) of the compared algorithms. Due to the space limitation, we only present detailed results of RLoss, AP and Macro-F1 in Table 2, while the observations on the other metrics tend to be similar. First, we can observe that PML3er outperforms the other three PML methods in most cases, and that it dominates on AP and Macro-F1 across the different noise levels. In particular, the performance gain over Particle-Map indicates that using the information from non-candidate labels is beneficial for PML. Besides, we can see that PML3er performs significantly better than the two traditional ML methods in most cases, since they directly use noisy candidate labels for training.

Additionally, for each PML dataset and evaluation metric, we conduct a pairwise t-test (at the 0.05 significance level) to examine whether PML3er is statistically different from the baselines. The win/tie/loss counts over 20 PML datasets and 7 evaluation metrics are presented in Table 3. We can observe that PML3er significantly outperforms the PML baseline methods Particle-Map, PML-LRS and PML-fp in 91.4%, 86.4% and 91.4% of cases (128, 121 and 128 wins out of 140), and also outperforms the two traditional ML methods ML-kNN and Lift in 95.7% and 85.0% of cases (134 and 119 wins out of 140). Besides, PML3er achieves significantly better scores on most evaluation metrics. For example, the SAccuracy, OError, AP, Macro-F1 and Micro-F1 of PML3er are better than those of all comparing algorithms in 96%, 96%, 98%, 95% and 99% of cases, respectively.
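A win/tie/loss decision of this kind can be sketched as below. The sketch assumes that the test is a paired two-sided t-test over the scores of the five training/test splits, so it hard-codes the critical value for 4 degrees of freedom; how the runs are paired in the actual experiments is not stated in the text, and the function name is hypothetical.

```python
import math

T_CRIT_DF4 = 2.776  # two-sided 5% critical value of Student's t with 4 degrees of freedom

def compare_runs(ours, baseline, higher_is_better=True):
    """Return 'win', 'tie' or 'loss' for our method against a baseline over five paired runs."""
    diffs = [a - b for a, b in zip(ours, baseline)]
    n = len(diffs)
    assert n == 5, "the hard-coded critical value assumes exactly five paired runs"
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if var == 0.0:
        if mean == 0.0:
            return "tie"
        significant = True                     # identical non-zero difference in every run
    else:
        t_stat = mean / math.sqrt(var / n)
        significant = abs(t_stat) > T_CRIT_DF4
    if not significant:
        return "tie"
    better = mean > 0 if higher_is_better else mean < 0
    return "win" if better else "loss"
```

For loss-type metrics such as HLoss, OError and RLoss, `higher_is_better` should be set to `False`.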

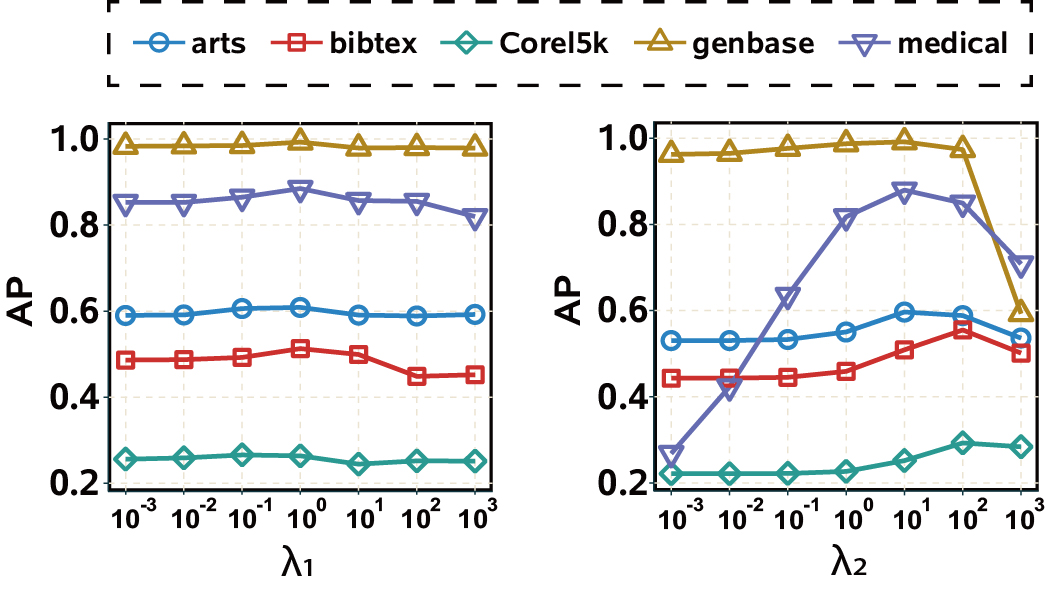

4.3 Parameter Sensitivity

We empirically analyze the sensitivities of the regularization parameters of PML3er. For each one, we examine the AP scores by varying its value from across PML datasets with by holding the other two fixed. The experimental results are presented in Fig.2. First, we can observe that the AP scores of seem quite stable over different types of PML datasets. That is, we empirically conclude that PML3er is insensitive to , making PML3er more practical. Second, for , it performs better with the values of across all PML datasets, which are the settings used in our experiment.

5 Conclusion

We concentrate on the task of PML, and propose a novel two-stage PML3er algorithm. In the first stage, PML3er performs an unconstrained label propagation procedure to estimate the label enrichment, which simultaneously involves the relevance degrees of candidate labels and irrelevance degrees of non-candidate labels. In the second stage, PML3er jointly learns the ground-truth confidence and multi-label predictor given the label enrichment. Extensive experiments on PML datasets indicate the superior performance of PML3er.

Acknowledgments

We would like to acknowledge support for this project from the National Natural Science Foundation of China (NSFC) (No.61602204, No.61876071, No.61806035, No.U1936217).

References

- Boyd et al. [2011] Stephen Boyd, Neal Parikh, Eric Chu, Borja Peleato, and Jonathan Eckstein. Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends in Machine Learning, 3(1):1–122, 2011.

- Cai et al. [2010] Jian-Feng Cai, Emmanuel J. Candès, and Zuowei Shen. A singular value thresholding algorithm for matrix completion. SIAM Journal on Optimization, 20(4):1956–1982, 2010.

- Chen et al. [2014] Yi-Chen Chen, Vishal M. Patel, Rama Chellappa, and P. Jonathon Phillips. Ambiguously labeled learning using dictionaries. IEEE Transactions on Information Forensics and Security, 9(12):2076–2088, 2014.

- Chen et al. [2018] Ching-Hui Chen, Vishal M. Patel, and Rama Chellappa. Learning from ambiguously labeled face images. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(7):1653–1667, 2018.

- Cour et al. [2011] Timothee Cour, Benjamin Sapp, and Ben Taskar. Learning from partial labels. Journal of Machine Learning Research, 12:1501–1536, 2011.

- Fang and Zhang [2019] Jun-Peng Fang and Min-Ling Zhang. Partial multi-label learning via credible label elicitation. In AAAI Conference on Artificial Intelligence, pages 3518–3525, 2019.

- Feng and An [2018] Lei Feng and Bo An. Leveraging latent label distributions for partial label learning. In International Joint Conference on Artificial Intelligence, pages 2107–2113, 2018.

- Feng and An [2019a] Lei Feng and Bo An. Partial label learning by semantic difference maximization. In International Joint Conference on Artificial Intelligence, pages 2294–2300, 2019.

- Feng and An [2019b] Lei Feng and Bo An. Partial label learning with self-guided retraining. In AAAI Conference on Artificial Intelligence, pages 3542–3549, 2019.

- Gayar et al. [2006] Neamat El Gayar, Friedhelm Schwenker, and Günther Palm. A study of the robustness of kNN classifiers trained using soft labels. In International Conference on Artificial Neural Network in Pattern Recognition, pages 67–80, 2006.

- Gong et al. [2018] Chen Gong, Tongliang Liu, Yuanyan Tang, Jian Yang, Jie Yang, and Dacheng Tao. A regularization approach for instance-based superset label learning. IEEE Transactions on Cybernetics, 48(3):967–978, 2018.

- Hou et al. [2016] Peng Hou, Xin Geng, and Min-Ling Zhang. Multi-label manifold learning. In AAAI Conference on Artificial Intelligence, pages 1680–1686, 2016.

- Jiang et al. [2006] Xiufeng Jiang, Zhang Yi, and Jian Cheng Lv. Fuzzy SVM with a new fuzzy membership function. Neural Computing & Applications, 15(3-4):268–276, 2006.

- Li et al. [2015] Yu-Kun Li, Min-Ling Zhang, and Xin Geng. Leveraging implicit relative labeling-importance information for effective multi-label learning. In IEEE International Conference on Data Mining, pages 251–260, 2015.

- Liu and Dietterich [2012] Li-Ping Liu and Thomas G. Dietterich. A conditional multinomial mixture model for superset label learning. In Neural Information Processing Systems, pages 548–556, 2012.

- Sun et al. [2019] Lijuan Sun, Songhe Feng, Tao Wang, Congyan Lang, and Yi Jin. Partial multi-label learning with low-rank and sparse decomposition. In AAAI Conference on Artificial Intelligence, pages 5016–5023, 2019.

- Wang et al. [2019] Deng-Bao Wang, Li Li, and Min-Ling Zhang. Adaptive graph guided disambiguation for partial label learning. In ACM SIGKDD Conference on Knowledge Discovery and Data Mining, pages 83–91, 2019.

- Wu and Zhang [2018] Xuan Wu and Min-Ling Zhang. Towards enabling binary decomposition for partial label learning. In International Joint Conference on Artificial Intelligence, pages 2868–2874, 2018.

- Xie and Huang [2018] Ming-Kun Xie and Sheng-Jun Huang. Partial multi-label learning. In AAAI Conference on Artificial Intelligence, pages 4302–4309, 2018.

- Xu et al. [2018] Ning Xu, An Tao, and Xin Geng. Label enhancement for label distribution learning. In International Joint Conference on Artificial Intelligence, pages 2926–2932, 2018.

- Yu and Zhang [2017] Fei Yu and Min-Ling Zhang. Maximum margin partial label learning. Machine Learning, 106:573–593, 2017.

- Yu et al. [2018] Guoxian Yu, Xia Chen, Carlotta Domeniconi, Jun Wang, Zhao Li, Zili Zhang, and Xindong Wu. Feature-induced partial multi-label learning. In IEEE International Conference on Data Mining, pages 1398–1403, 2018.

- Zhang and Wu [2015] Min-Ling Zhang and Lei Wu. Lift: Multi-label learning with label-specific features. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37(1):107–120, 2015.

- Zhang and Zhou [2007] Min-Ling Zhang and Zhi-Hua Zhou. ML-kNN: A lazy learning approach to multi-label learning. Pattern Recognition, 40(7):2038–2048, 2007.

- Zhang and Zhou [2014] Min-Ling Zhang and Zhi-Hua Zhou. A review on multi-label learning algorithms. IEEE Transactions on Knowledge and Data Engineering, 26(8):1819–1837, 2014.

- Zhang et al. [2017] Min-Ling Zhang, Fei Yu, and Cai-Zhi Tang. Disambiguation-free partial label learning. IEEE Transactions on Knowledge and Data Engineering, 29(10):2155–2167, 2017.