Paper type: algorithm/technique. J. Y. Hwang, Y. H. Cho, S. U. Kwon, and S. B. Jeon are with the Department of Computer Science, Yonsei University, Seoul, Republic of Korea. E-mail: {tlavotl1,maxburst88,kwonars,ludens0508}@yonsei.ac.kr. Y. H. Cho is with the Department of Computer Science, Yonsei University, Seoul, Republic of Korea. E-mail: maxburst88@gmail.com. I. K. Lee is with the Department of Computer Science, Yonsei University, Seoul, Republic of Korea. E-mail: iklee@yonsei.ac.kr.

Redirected Walking in Infinite Virtual Indoor Environment Using Change-blindness

Abstract

We present a change blindness-based redirected walking algorithm that allows a user to explore, on foot, a virtual indoor environment consisting of an infinite number of rooms while ensuring collision-free walking in real space. The method exploits change blindness to scale and translate a room without the user’s awareness by moving walls while the user is not looking at them. Consequently, the virtual room that currently contains the user always lies within the valid real space. We measured the detection threshold at which users notice the movement of a wall outside their field of view, and used this threshold to determine how much the dimensions of a room can be changed by moving that wall. We then conducted a live-user experiment in which participants navigated the same virtual environment using the proposed method and existing methods. Users reported higher usability, presence, and immersion with the proposed method, along with reduced motion sickness, compared to the other methods. Hence, our approach can be used to build applications in which users explore an infinitely large virtual indoor environment, such as a virtual museum or a virtual model house, while walking in a small real space, giving them a more realistic experience.

keywords:

Virtual reality, redirected walking, space manipulation, change blindness

Teaser figure: Moving the walls of a room using change blindness: (a) before the wall moves, and (b) after the wall moves.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7198e3df-f9c6-479b-8ca5-d83b10f28731/01.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7198e3df-f9c6-479b-8ca5-d83b10f28731/03.png)

Introduction

Because locomotion in virtual reality affects the user’s experience, many studies have examined locomotion techniques that provide a realistic experience [2, 32, 11]. Among these techniques, actual walking induces higher immersion and more natural and effective exploration [26, 21, 38]. Redirected Walking (RDW) has been studied as a technique that overcomes the size difference between virtual and real space so that a virtual environment can be explored through real walking. In particular, much research has focused on subtly manipulating the user’s scene within a range that the user does not notice [6, 15, 39, 31]. Other RDW studies [28, 29, 36] have instead exploited the change blindness phenomenon, in which the user does not perceive manipulation of the virtual space outside the field of view.

However, the virtual environments to which previous change blindness-based spatial manipulation has been applied in RDW are limited. In addition, it is necessary to measure the detection threshold, that is, the extent to which humans remain unaware of environmental changes outside their field of view, in a general indoor environment. Previous studies [29, 36] proposed spatial manipulation algorithms using change blindness for a limited situation in which the virtual space is divided into a manipulation area and a transition area, and measured the extent to which the user was unaware of the spatial manipulation in that structure. These methods cannot be applied to some indoor environments, such as consecutively connected rooms without any corridors. Also, since the previous work generated the virtual layout procedurally, the layout cannot be preserved. Therefore, users are more likely to be confused when trying to recall the structure of the virtual space they have experienced. Moreover, it is difficult to apply the measured detection threshold to other indoor environments because it was measured in an impossible virtual environment consisting of two adjacent rooms that spatially overlap.

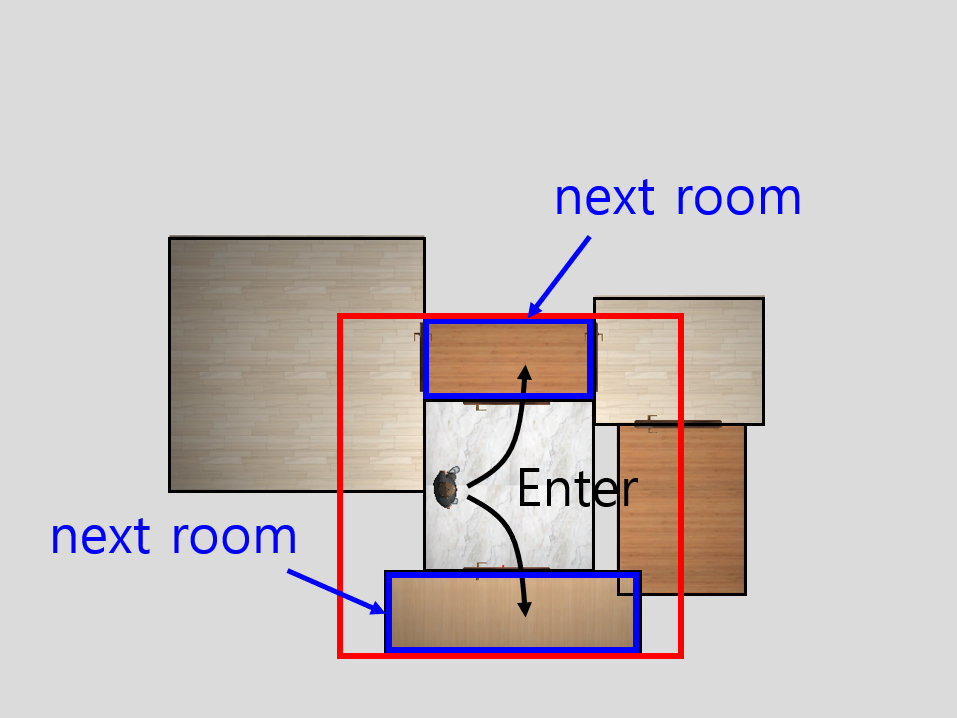

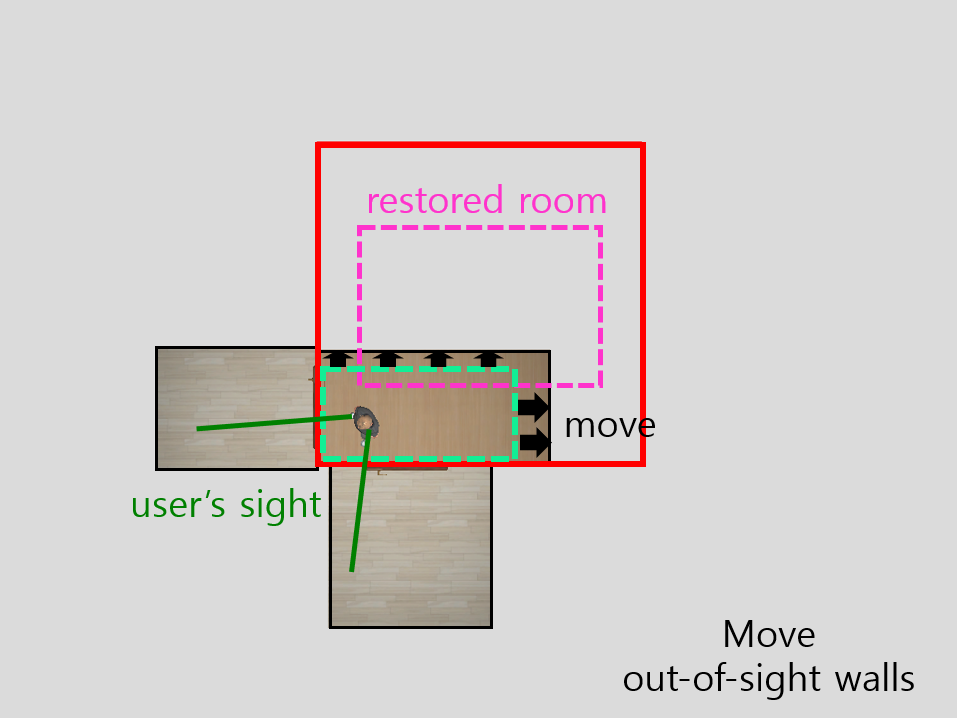

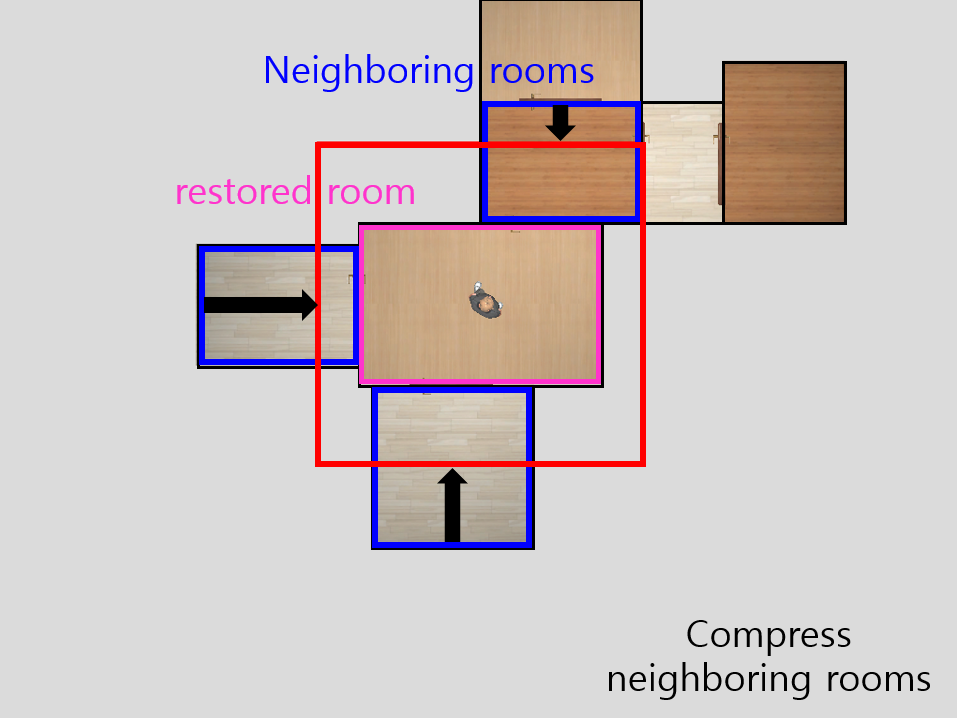

In this paper, we propose a novel RDW technique combined with change blindness-based spatial manipulation for indoor environments that previous methods cannot handle. With it, change blindness-based redirection can be applied to a wider variety of indoor scenarios, and by combining the proposed method with the existing ones, it can be extended to general virtual indoor environments. We model the virtual indoor environment as a set of rectangular rooms (each of which may be a room or a corridor) and assume that doors can connect adjacent rooms. In this environment, our method moves walls that are outside the user’s field of view, as shown in the teaser figure, and allows the user to walk through the entire virtual space without colliding with the boundaries of the real space. Specifically, the technique applies ‘restore’ and ‘compression’ phases whenever the user enters a new room. The restore phase gradually moves the walls of the user’s room until the room regains its original dimensions and is centered in the user’s physical space. The compression phase moves the walls of adjacent rooms into the real space so that all adjacent rooms the user is likely to enter next are completely contained within the real space.

We defined an out-of-view wall movement that can be applied in various virtual indoor environments and measured the detection threshold for this movement. Furthermore, we compared questionnaire results from users who performed the same virtual task with our method and with other locomotion methods. The proposed method showed higher immersion and usability and lower motion sickness than Steer-To-Center (S2C), and higher immersion and presence than Teleport, another conventional locomotion technique.

Based on our results, we claim the following contributions. First, by combining RDW with spatial transformation using change blindness, we propose a new redirected walking method applicable to diverse virtual indoor environments that contain multiple interconnected rooms and corridors. Second, we define a wall movement gain for walls outside the user’s field of view that is suitable for various indoor environments, and measure the detection threshold for this gain in an empty room. Our method can therefore provide a better VR experience in an infinite virtual indoor environment, such as a virtual model house or museum consisting of multiple rooms and corridors, or in games such as room escape where the user stays in one room for a long time.

1 Related work

1.1 Redirected Walking (RDW)

RDW has been proposed to allow users to walk in an infinitely wide and complex virtual space while remaining inside a small, limited real (physical) space. The method subtly manipulates the user’s view of the virtual environment to an extent that the user does not notice. As a result, the user’s real movement and virtual movement differ from each other. For example, when the user moves forward in virtual space, the technique rotates the user’s view so that the user walks in a circle in real space. General overviews of RDW are provided by Suma et al. [27] and Nilsson et al. [17]. Hodgson et al. [6] implemented the RDW concept, presented four steering algorithms, and compared their performance. Azmandian et al. [1] compared the performance of these steering algorithms across real spaces of various sizes and aspect ratios. They also confirmed that performance improves if translation gain is added to the existing steering algorithms.

Recently, several studies have shown better performance than the existing ones by combining a new concept with RDW. Sun et al. [30] and Langbehn et al. [13] have proposed an RDW that manipulates the user’s scene between blinking and saccades. Thomas et al. [31] proposed an RDW technique that guides users to a place far away from obstacles in real space by applying the concept of Artificial Potential Field (APF). Williams et al. [37] proposed an Alignment-based Redirection Controller (ARC) that introduces alignment, representing the difference between the user’s state in real space and virtual space, and steers the user toward decreasing alignment. Moreover, some studies [14, 25] have proposed techniques that combine RDW and reinforcement learning to apply optimal gains according to the states of user and environment.

1.2 Manipulating Virtual Space

Meanwhile, some studies have shown that RDW can be combined with the change blindness [22] phenomenon, in which the user does not perceive manipulation of the virtual space. Steinicke et al. [23] confirmed that change blindness occurs in a stereoscopic environment and showed that it can be applied in VR. Suma et al. [28] were the first to combine change blindness with RDW. In their study, the locations of doors and corridors were changed while the user was working on a computer in a virtual room, so that the user would not collide with the boundaries of the real space. They reported that most users left the room without noticing these changes and continued through the virtual environment. A follow-up study [29] proposed a method that overlaps a room with an adjacent room to extend its size while the user moves through the corridor. However, this technique can be applied only when the virtual environment is composed of a manipulation area and a transition area, such as two adjacent rooms and a corridor. For example, it cannot be used to explore a virtual environment of consecutively connected rooms, one of the common indoor structures, without colliding with the real space.

Vasylevska et al. [36] were the first to develop an algorithm that procedurally generates a layout by computing room positions and the shapes of connecting corridors. However, it is still applicable only to environments in which rooms and corridors are separate, and the spatial layout, such as the locations of rooms and doors and the shape of the corridors, cannot be fixed in advance. In addition, they did not measure the detection threshold for whether a user notices the space manipulation when the layout is procedurally generated, and they did not quantitatively evaluate the method with user questionnaires.

1.3 Detection Thresholds

A user’s cognitive performance is affected when the user perceives that virtual and real movement differ [4, 20]. Hence, to keep the user unaware of distortion in the virtual environment, Steinicke et al. [24] defined the way the virtual space is distorted as a gain, and presented a method for measuring the detection threshold for that gain together with its results. Based on this work, many studies have measured how the detection threshold of a gain changes under various conditions. For example, Neth et al. [16] examined the curvature gain as a function of walking speed. Lucie et al. [10] measured the change in translation gain with and without a visible representation of the user’s feet, and Paludan et al. [18] examined how visual density affects the rotation gain. Recently, some studies [12, 13, 9, 5] have defined new kinds of gains and measured their detection thresholds.

In the context of space manipulation, Suma et al. [29] were the first to measure the detection threshold for overlapping spaces. Specifically, they measured how much overlap between two attached rooms users can detect. They also had users estimate the distance between the two rooms for different degrees of overlap, and found that users tended to overestimate the distance between rooms even when they recognized the overlap. However, they measured the detection threshold only for two adjacent, expanded rooms, so the result is not easy to apply to structures that differ from the one they proposed. Vasylevska et al. [34, 35] also conducted an experiment in which users estimated the distance between two rooms depending on the complexity of the corridor connecting them. Unlike Suma et al., however, they did not measure the detection threshold for overlapping virtual rooms and corridors, and their approach likewise cannot be applied if the environment does not follow the proposed structure.

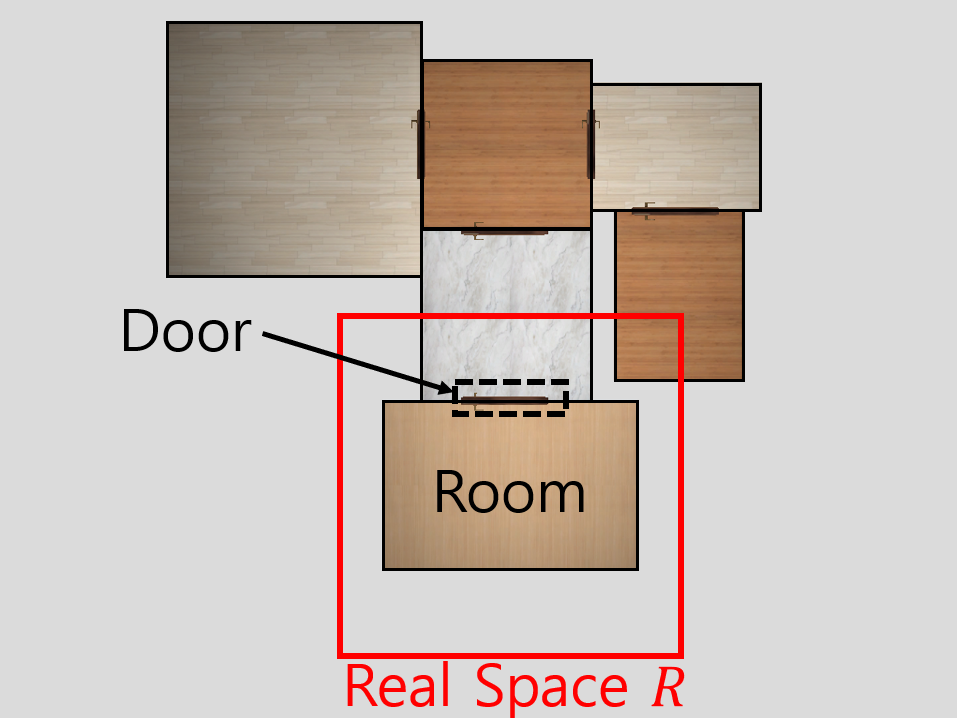

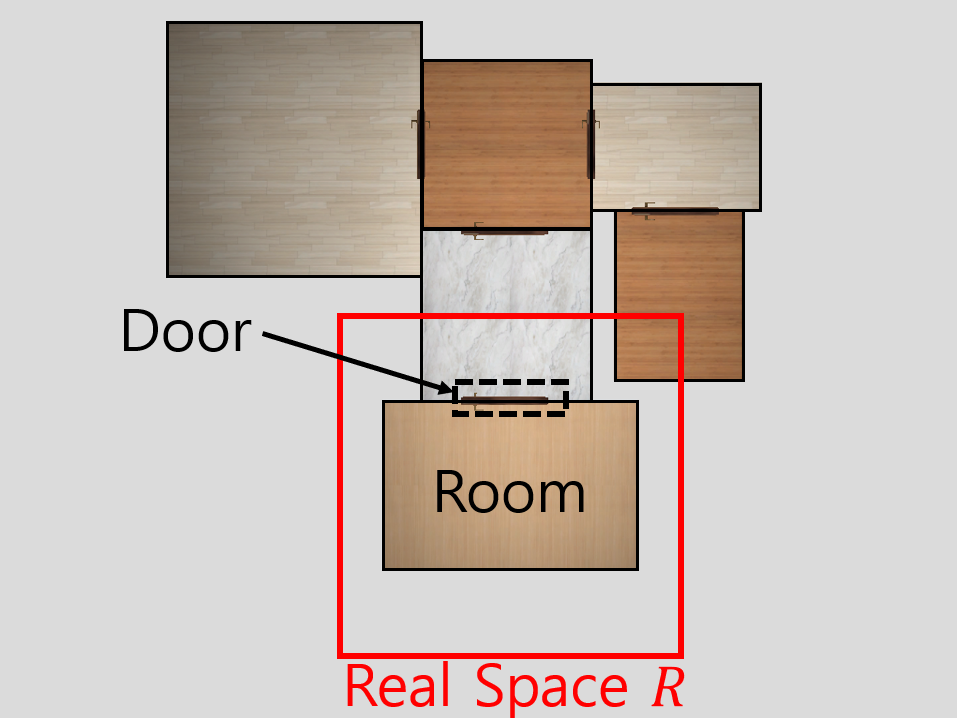

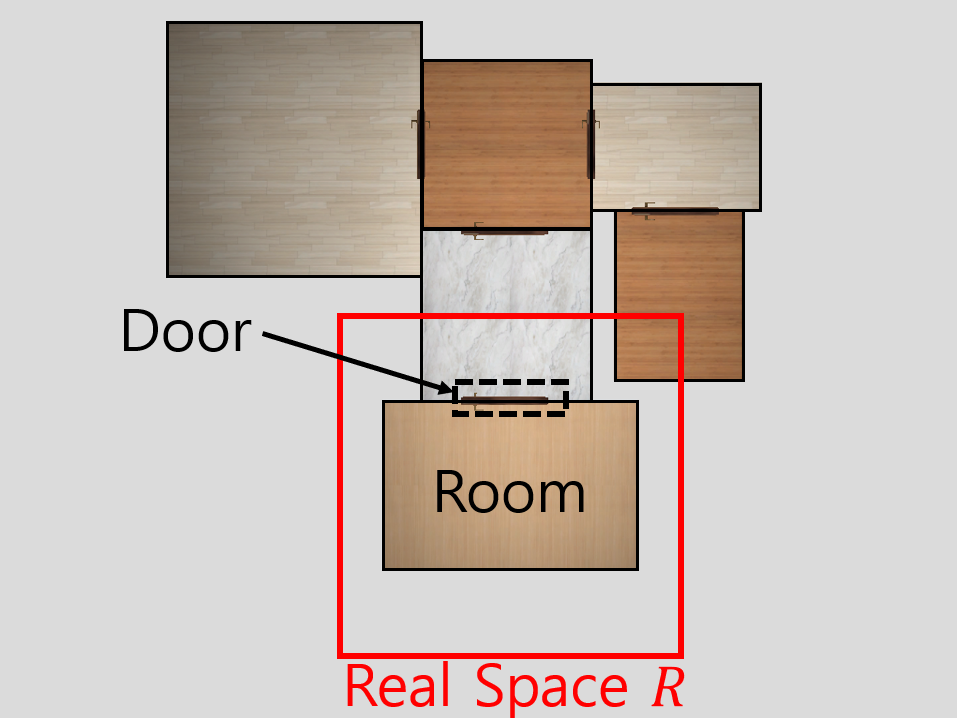

2 Method

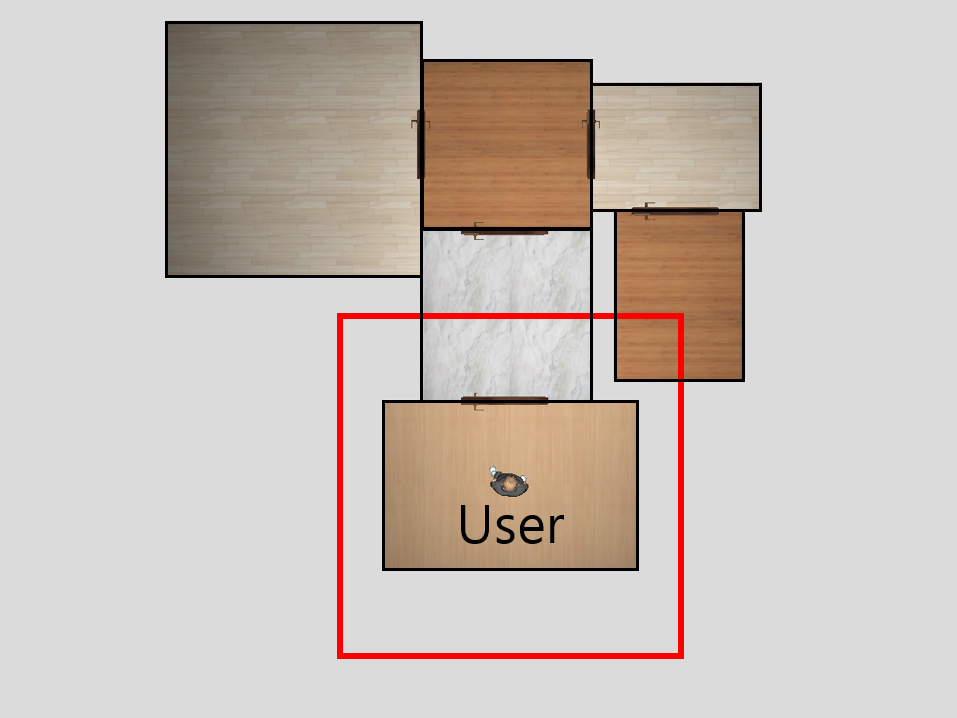

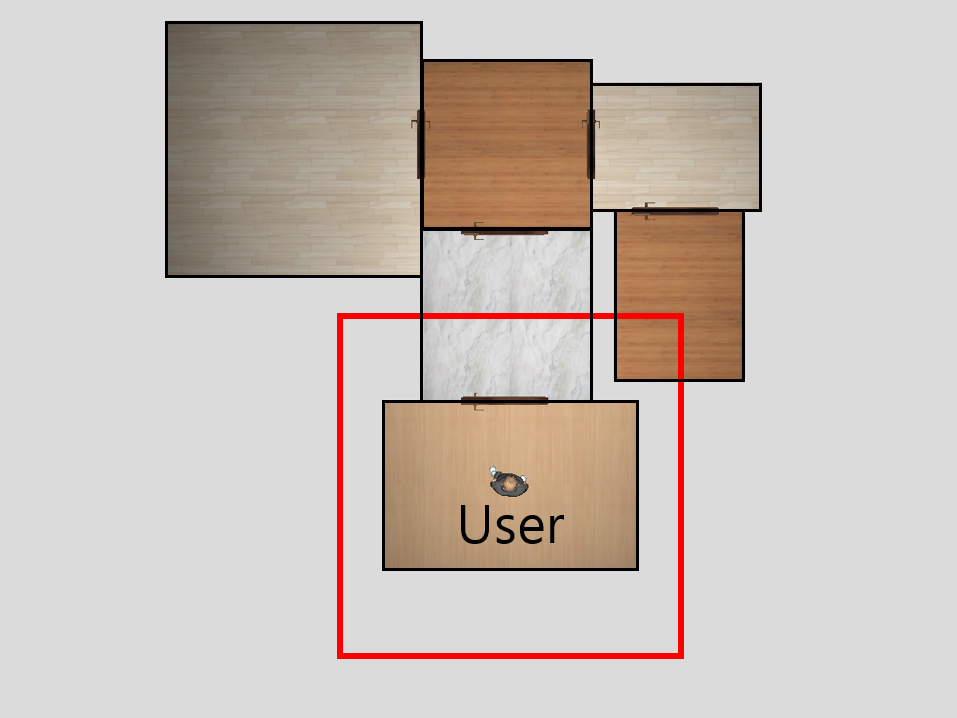

In this study, we propose a novel approach to space manipulation in RDW that moves walls outside the user’s field of view, wherever the user is located in an arbitrary room of a virtual indoor environment. Typically, an indoor environment is composed of rooms and the corridors connecting them. If we treat a corridor as just another type of room, a general indoor environment can be represented as a set of an infinite number of adjacent rooms. Hence, we model the virtual indoor environment and the real space as shown in Figure 1. We assume that the real space is a rectangle and that the virtual space consists of rectangular rooms, each of which is small enough to be contained entirely within the real space. Lastly, each room can be connected to an adjacent room by a door.
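The environment model described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the class names `Rect` and `Room`, the helper functions, and the 4 m x 4 m tracking area are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Rect:
    """Axis-aligned rectangle: centre (cx, cy) and full side lengths in metres."""
    cx: float
    cy: float
    width: float
    height: float


@dataclass
class Room:
    """A rectangular room (or corridor) with doors to adjacent rooms."""
    name: str
    original: Rect                                  # dimensions before any manipulation
    doors: Set[str] = field(default_factory=set)    # names of rooms reachable by a door


def fits_in_real_space(room: Room, real: Rect) -> bool:
    """True if the room at its original size can lie entirely inside the real space."""
    return room.original.width <= real.width and room.original.height <= real.height


def connect(a: Room, b: Room) -> None:
    """Add a door between two adjacent rooms (stored in both directions)."""
    a.doors.add(b.name)
    b.doors.add(a.name)


# Hypothetical 4 m x 4 m tracking area (assumption, not a value from the paper).
real_space = Rect(0.0, 0.0, 4.0, 4.0)
hall = Room("hall", Rect(0.0, 0.0, 3.0, 3.5))
corridor = Room("corridor", Rect(3.0, 0.0, 3.0, 1.5))  # a corridor is just another room
connect(hall, corridor)
print(all(fits_in_real_space(r, real_space) for r in (hall, corridor)))  # True
```

Storing doors as a set of neighbor names keeps the room graph unbounded in principle, since new rooms can be created and connected lazily as the user explores.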

Under this assumption, we propose a general space manipulation algorithm for RDW as follows. As shown in Figure 2(a), 2(b), and lines 7–8 in Algorithm 1, the method first places the room in which the user starts at the center of the real space and then initializes the environment by compressing all rooms neighboring the initial room so that they lie inside the real space.

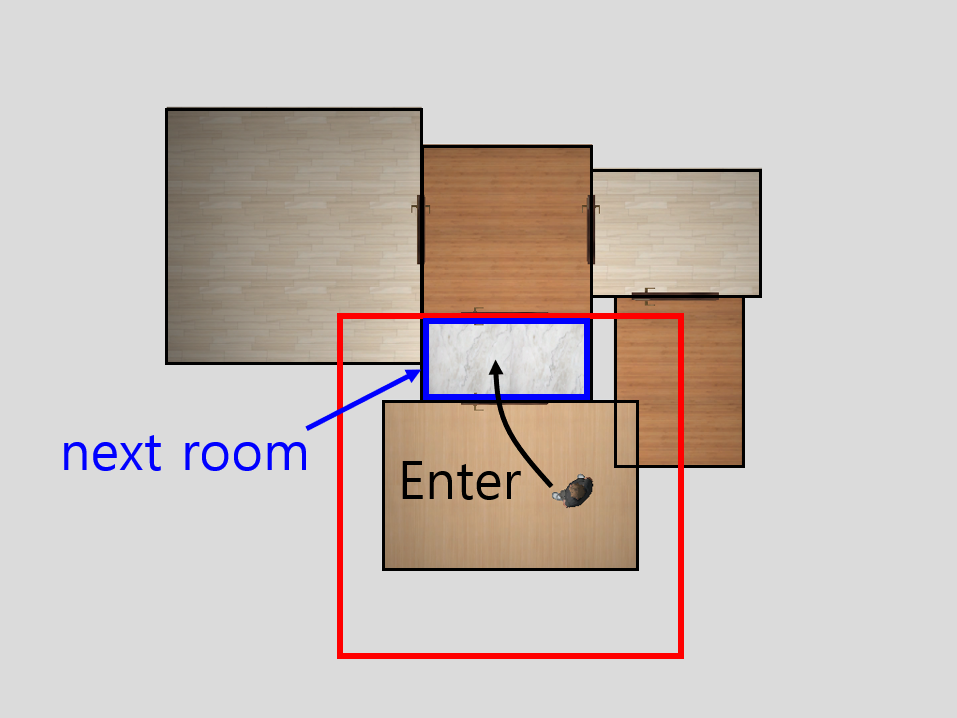

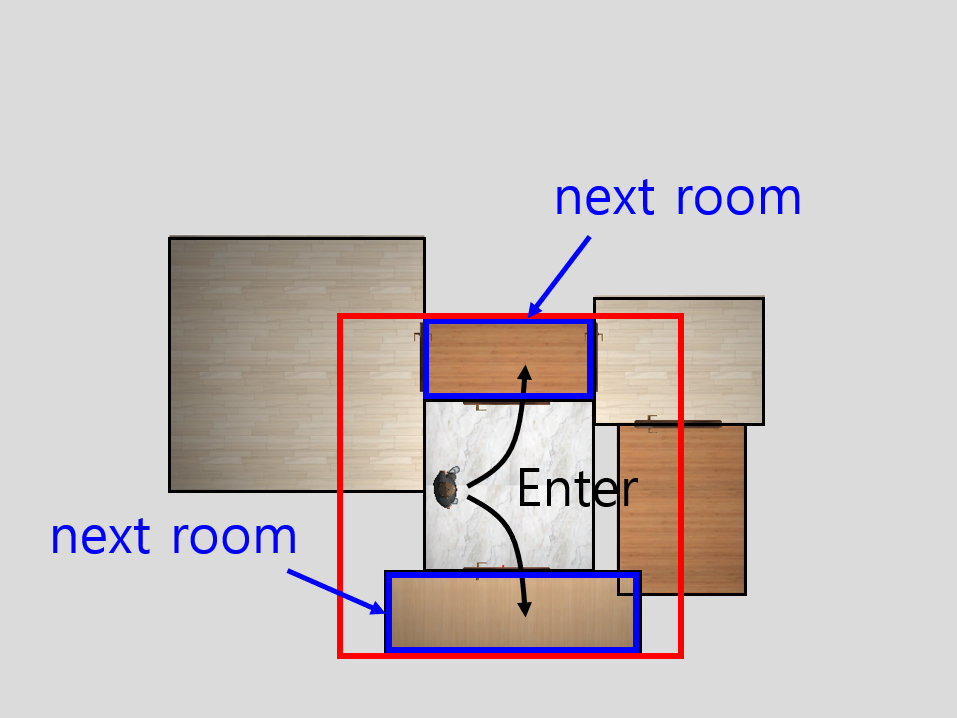

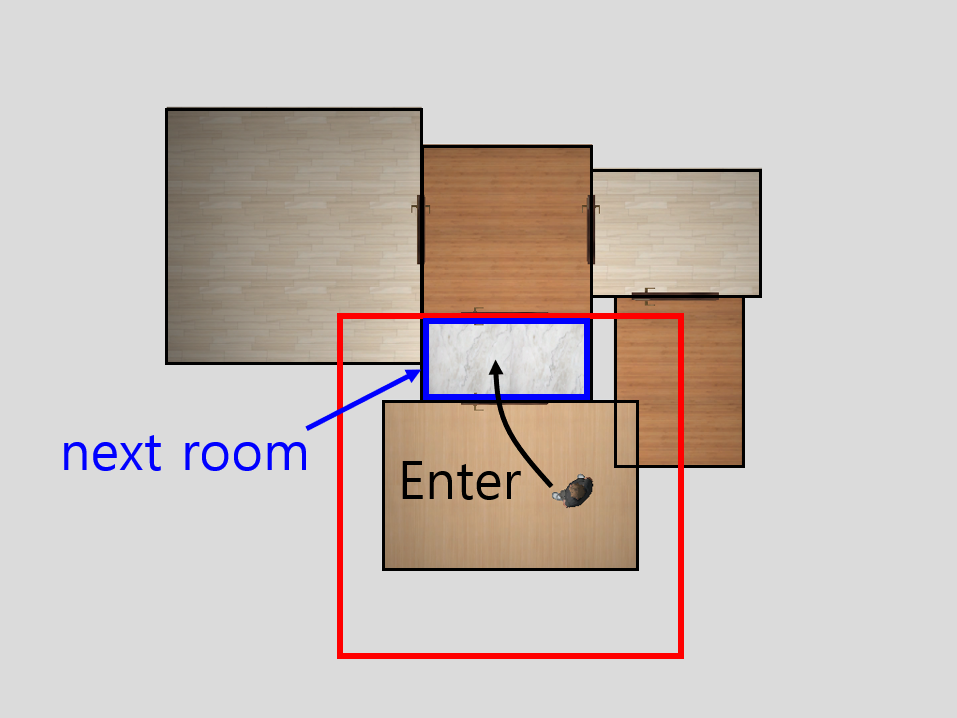

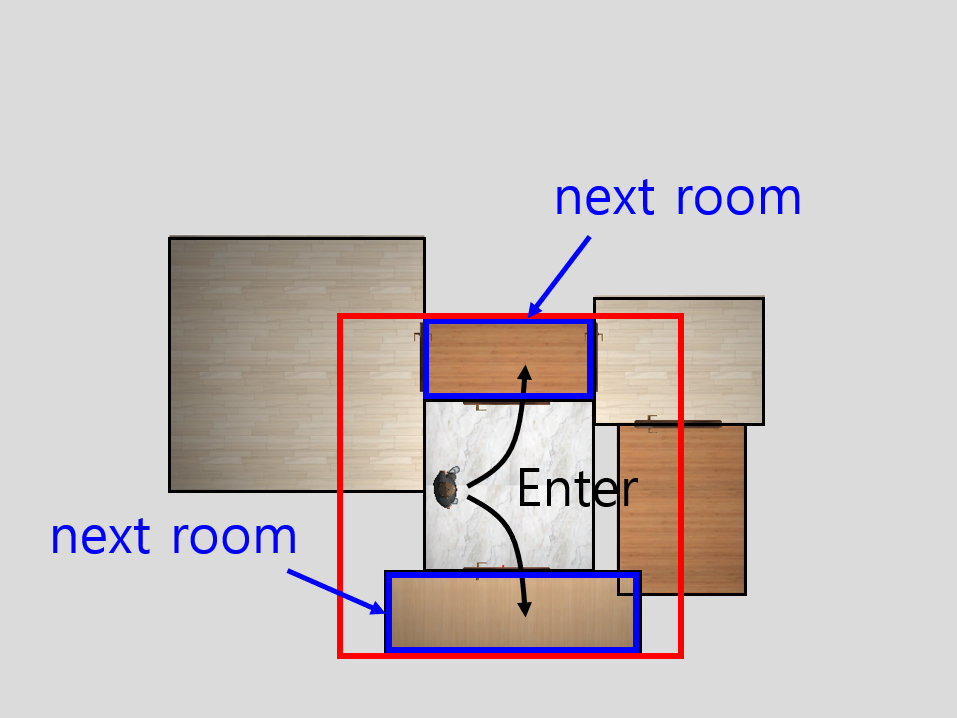

Afterward, the method runs the restore and compression phases whenever the user visits a new room (Figure 2(c) and lines 10–11 in Algorithm 1). As shown in Figure 2(d) and lines 21–22 in Algorithm 1, it calculates the scale from the room’s current dimensions to its original dimensions and the translation from the room’s current center to the center of the real space. The restore phase is performed if the calculated scale is not 1 or the translation is not 0. In the restore phase, the method first calculates the position to which each wall should be moved by combining the scale and translation obtained above (line 4 in Algorithm 2). Next, it repeatedly moves the walls that are outside the user’s field of view until each wall reaches its target position, keeping each movement within the detection threshold of the wall movement gain, which we define as a new type of gain for moving a wall (Figure 2(e) and line 9 in Algorithm 2). After the restore phase is completed, the compression phase compresses all rooms neighboring the user’s current room into the real space. For each wall of a neighboring room, the algorithm first finds the side of the real space that is parallel and nearest to the wall. Then, it moves the wall towards that side of the real space (Figure 2(f) and lines 7–9 in Algorithm 3). By repeating these restore and compression phases, the user can walk through a virtual space in which an infinite number of rooms are interconnected without colliding with the boundaries of the real space.
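To make the two phases concrete, the following is a minimal one-axis sketch, not the paper's Algorithms 1 to 3. It assumes axis-aligned rooms stored as wall coordinates, a gain defined as the distance after the move divided by the distance before it, and that the caller only invokes `step_wall` for walls that are out of view. The names `Box`, `restore_target`, `step_wall`, and `compress_neighbor` are hypothetical, and clamping is used as a simplification of "move the wall to the nearest parallel side of the real space".

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned room or real space, stored as wall coordinates in metres."""
    left: float
    right: float
    bottom: float
    top: float


def restore_target(width: float, height: float, real: Box) -> Box:
    """Wall positions of a room at its original size, centred in the real space."""
    cx = (real.left + real.right) / 2.0
    cy = (real.bottom + real.top) / 2.0
    return Box(cx - width / 2.0, cx + width / 2.0,
               cy - height / 2.0, cy + height / 2.0)


def step_wall(wall: float, target: float, user: float,
              gain_low: float, gain_high: float) -> float:
    """Move one out-of-view wall toward its target along one axis.

    The applied gain (d_after / d_before) is clamped to [gain_low, gain_high],
    so a single step stays within the detection threshold. Assumes the target
    lies on the same side of the user as the wall.
    """
    d_before = abs(wall - user)
    if d_before == 0.0:
        return wall
    gain = abs(target - user) / d_before
    gain = min(max(gain, gain_low), gain_high)
    side = 1.0 if wall >= user else -1.0
    return user + side * gain * d_before


def compress_neighbor(room: Box, real: Box) -> Box:
    """Pull every wall lying outside the real space onto the nearest parallel side."""
    def clamp(value: float, low: float, high: float) -> float:
        return min(max(value, low), high)

    return Box(clamp(room.left, real.left, real.right),
               clamp(room.right, real.left, real.right),
               clamp(room.bottom, real.bottom, real.top),
               clamp(room.top, real.bottom, real.top))


# Hypothetical 4 m x 4 m real space; thresholds are the Short (1 m) values of Table 1.
real = Box(-2.0, 2.0, -2.0, 2.0)
target = restore_target(3.0, 3.0, real)
wall, steps = 1.0, 0                      # compressed right wall, user at x = 0
while abs(wall - target.right) > 1e-9:
    wall = step_wall(wall, target.right, 0.0, 0.899, 1.145)
    steps += 1
print(steps, round(wall, 3))
print(compress_neighbor(Box(1.5, 5.0, -1.5, 1.5), real))
```

In this sketch a wall that needs a larger displacement than one undetectable step simply takes several steps, each applied while the wall is out of view, which mirrors the repeated wall movement of the restore phase.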

This method always compresses the neighboring rooms abruptly. Also, since only the room in which the user is located is restored to its original size while the user moves and looks around, most rooms are not at their original size but are compressed. Hence, the user may notice this extreme distortion every time they enter a room. However, in applications where the user stays in one room for a long time, such as a model house, a museum, or a room escape game, the user rarely perceives the compression over the course of the experience because the user does not have to move between rooms often. In such applications, the proposed method can therefore be expected to let the user explore the virtual environment through actual walking while offsetting the above disadvantages to some extent.

3 Experimental Result

3.1 Experiment 1: Wall Movement Gain

Our method moves out-of-view walls to an extent that the user does not perceive. Therefore, it is necessary to measure the detection threshold for the movement of a wall behind the user. Change blindness is a phenomenon in which humans do not notice changes in visual stimuli even though they see them. To the best of our knowledge, no study has analyzed the detection threshold of change blindness under general conditions. Moreover, only one change blindness-based RDW study measured a detection threshold, and it did so only for the specific case in which two adjacent rooms overlap. Consequently, we measure the detection threshold of wall movement in a room in order to apply it to our proposed algorithm. To estimate the detection threshold for wall movement, we made two assumptions. First, the larger the change in the user’s scene, the less likely change blindness is to occur, and hence the more likely users are to notice the change. Second, many factors affect the user’s scene, such as the user’s viewing angle, the user’s current state, the texture of the wall, the size of the room, and the relative position of the furniture in the room; among them, we suppose that the distance between the user and the moving wall has a significant impact on the visual change in our environment. Hence, we evaluated the detection threshold of wall movement at three distances: Short (1m), Middle (2m), and Long (3m).

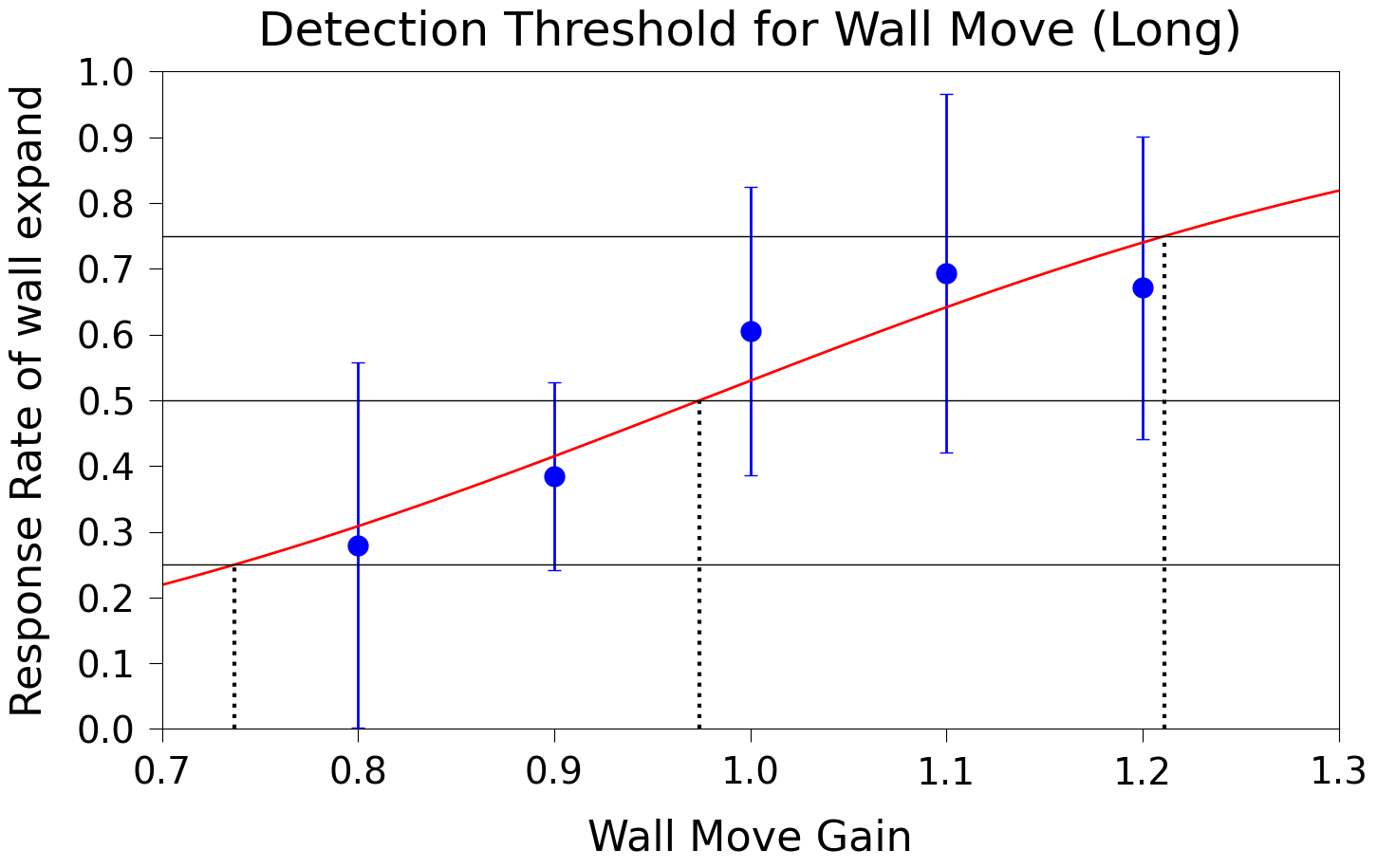

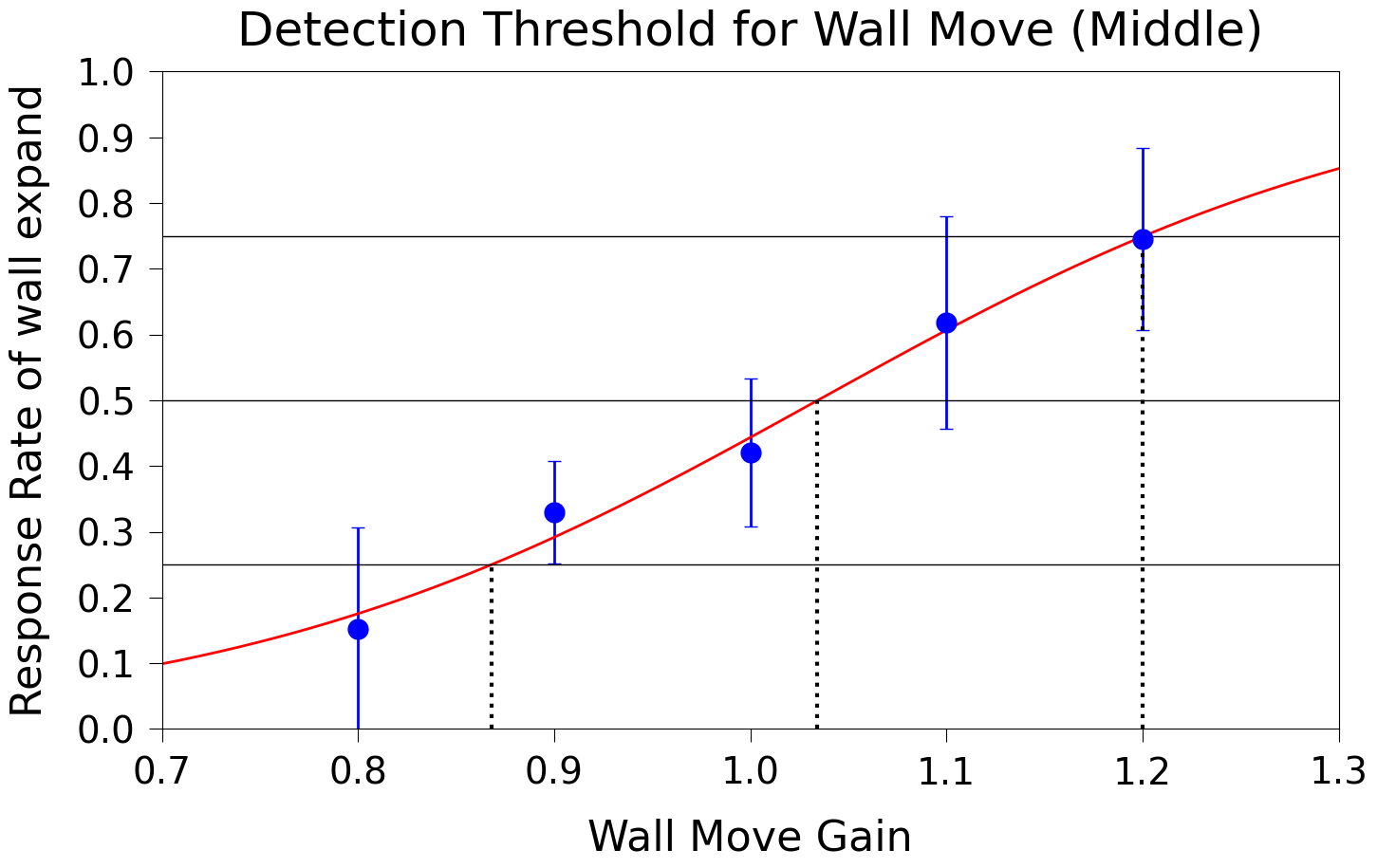

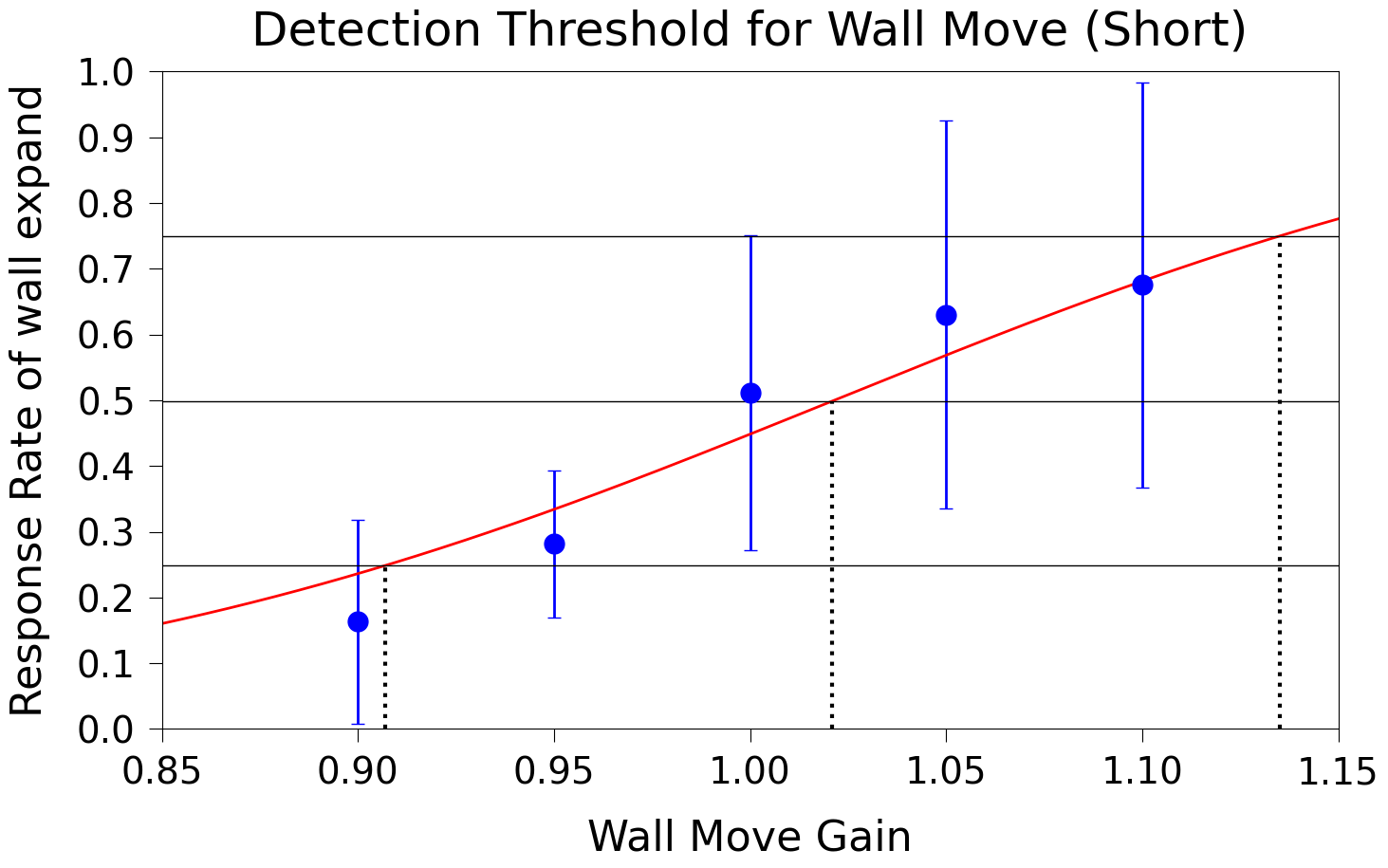

We defined a new type of gain for wall movement applied in a typical virtual room. Let $d_{\text{before}}$ be the shortest distance between the user and the wall before the wall moves, and $d_{\text{after}}$ the shortest distance between the user and the wall after the wall moves. We define the wall movement gain as $g_w = d_{\text{after}} / d_{\text{before}}$. That is, if $g_w < 1$, the wall moves closer to the user; $g_w = 1$ means the wall does not move; and if $g_w > 1$, the wall moves away from the user. To estimate the detection threshold for the wall movement gain, we conducted a preliminary experiment and adjusted the gains to appropriate values. As a result, we varied the wall movement gain from 0.8 to 1.2 in steps of 0.1 for Long and Middle, and from 0.9 to 1.1 in steps of 0.05 for Short. We applied each wall movement gain three times, collected the user’s responses, and used the average of the three responses as the result. In total, we performed 45 measurements per user (3 distances × 5 gains × 3 repetitions) in random order.
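The gain and the trial schedule can be illustrated with a short sketch. It assumes that the gain is the ratio of the user-to-wall distance after the move to the distance before it, which agrees with the statement that a gain of 1 leaves the wall in place; the names `wall_movement_gain`, `CONDITIONS`, and `build_trials` are hypothetical.

```python
import random


def wall_movement_gain(d_before: float, d_after: float) -> float:
    """Ratio of the user-to-wall distance after the move to the distance before it.

    Values below 1 mean the wall moved closer, 1 means no movement,
    and values above 1 mean the wall moved away.
    """
    if d_before <= 0.0:
        raise ValueError("d_before must be positive")
    return d_after / d_before


# Distance (metres) and tested gains per condition, as described in the text.
CONDITIONS = {
    "Long": (3.0, [0.8, 0.9, 1.0, 1.1, 1.2]),
    "Middle": (2.0, [0.8, 0.9, 1.0, 1.1, 1.2]),
    "Short": (1.0, [0.9, 0.95, 1.0, 1.05, 1.1]),
}
REPETITIONS = 3


def build_trials(seed=None):
    """Return the (condition, distance, gain) trials of one participant, shuffled."""
    trials = [(name, distance, gain)
              for name, (distance, gains) in CONDITIONS.items()
              for gain in gains
              for _ in range(REPETITIONS)]
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trials(seed=0)
print(len(trials))  # 45
```

For example, a wall that starts 3 m behind the user and ends 2.4 m away corresponds to a gain of 0.8, the smallest value tested in the Long condition.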

Setup

We created a virtual space using Unity 3D as shown in Figure 3(a), (b), and (c). The experimental space consists of a single rectangular room that fits exactly within the room-scale real space. The virtual room has a light on the ceiling and a door in the center of each wall. Each wall was given a monotone color texture. We used an HTC Vive Pro HMD as the VR equipment for the experiment. The simulation program was created and run on a PC with an i7-8700 CPU, 16 GB of RAM, and an NVIDIA GeForce GTX 1070 GPU.

Participants

Twenty people (12 men and 8 women) participated in our experiment; they were between 20 and 30 years old (mean = 23.4, SD = 3.1) and were students or office workers. Six participants had no VR experience, 9 had experienced VR once or twice, and 5 reported having experienced VR more than three times. Fourteen participants wore glasses or contact lenses, and 15 were right-handed. After the experiment, each participant received an incentive of $10.

Procedure

We informed all participants that they could take a break or stop if they felt motion sickness during the experiment. We then conducted a simple test session so that users could adapt to VR and to the tasks, in order to prevent learning effects during the experiment. After the test session, we moved participants to the center of the real space and began the experiment. The user started at the center of the virtual room and looked around the room for 10 seconds (Figure 4(a)). Then, as shown in Figure 4(b), the user was guided to a specific position determined by the distance at which the wall movement gain was to be applied. After the user reached that position and was looking at the indicated door, we moved the wall behind the user by applying a wall movement gain (Figure 4(c)). The user then turned around and observed the wall, as shown in Figure 4(d). Finally, the user was asked, “Did the space get larger or smaller?” and chose one of two answers: “It got larger” or “It got smaller”. After answering, the user returned to the center while the screen blinked and then proceeded to the next trial with another gain.

Detection Threshold

We used the two-alternative forced-choice (2AFC) method during the experiment, with the question “Did the space get larger or smaller?”. In 2AFC, the participant is given two opposing answers and must select one of them; a neutral answer is not allowed. We estimated the detection threshold by fitting the users’ responses obtained through this process to a psychometric function. The point at which the psychometric function equals 0.5 is called the Point of Subjective Equality (PSE), where the subject cannot tell whether the wall has moved at all. As the response rate approaches 0 or 1, on the other hand, the user accurately recognizes whether the space has become smaller or larger, because the applied gain deviates substantially from the PSE. Steinicke et al. [24] took the detection threshold range from the lower point corresponding to 0.25, the midpoint between the PSE and 0, to the upper point corresponding to 0.75, the midpoint between the PSE and 1. Following them, we fitted the rate at which users answered that the space got larger (the response rate of expansion) for each wall movement gain to a psychometric function and took the wall movement gains corresponding to 0.25 and 0.75 on the fitted function as the detection threshold range.
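The threshold extraction can be sketched as follows. The exact psychometric function used in the paper is not reproduced here, so the two-parameter logistic below is an assumption, and the coarse grid search stands in for whatever fitting routine was actually used; `psychometric`, `fit_psychometric`, and `gain_at` are hypothetical names.

```python
import math


def psychometric(gain: float, pse: float, slope: float) -> float:
    """Assumed logistic curve: probability of answering 'larger' at a given gain."""
    return 1.0 / (1.0 + math.exp(-(gain - pse) / slope))


def fit_psychometric(gains, rates):
    """Coarse least-squares grid search over (pse, slope).

    The grid is only meant for gains roughly in [0.8, 1.2].
    """
    best = None
    for i in range(401):                 # pse from 0.800 to 1.200
        pse = 0.80 + i * 0.001
        for j in range(1, 301):          # slope from 0.001 to 0.300
            slope = j * 0.001
            err = sum((psychometric(g, pse, slope) - r) ** 2
                      for g, r in zip(gains, rates))
            if best is None or err < best[0]:
                best = (err, pse, slope)
    return best[1], best[2]


def gain_at(p: float, pse: float, slope: float) -> float:
    """Gain at which the fitted curve reaches probability p (inverse logistic)."""
    return pse + slope * math.log(p / (1.0 - p))


# Synthetic response rates generated from a known curve, only to exercise the code.
gains = [0.8, 0.9, 1.0, 1.1, 1.2]
rates = [psychometric(g, 1.0, 0.1) for g in gains]
pse, slope = fit_psychometric(gains, rates)
lower, upper = gain_at(0.25, pse, slope), gain_at(0.75, pse, slope)
print(round(lower, 3), round(pse, 3), round(upper, 3))
```

With a logistic curve the 25% and 75% points are symmetric around the PSE, which is close to the pattern in the reported thresholds; a least-squares optimizer from a scientific library would be the usual replacement for the grid search.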

Result

Table 1 and Figure 5 summarize the detection thresholds for each distance between the user and the moving wall. In Figure 5, the $x$-axis represents the wall movement gain, and the $y$-axis represents the response rate of expansion. The black dashed lines perpendicular to the $x$-axis mark, from left to right, the wall movement gain at the lower threshold (25%), the PSE (50%), and the upper threshold (75%). In the Long condition, the detection threshold ranged from 0.737 to 1.211, and the PSE was 0.974 (Figure 5(a)). Expressed in meters, this means that in the Long condition the user does not notice the change until the wall moves toward the user by approximately 0.8m or away from the user by approximately 0.6m. In the Middle condition, the detection threshold ranged from 0.868 to 1.2, and the PSE was 1.03. Lastly, in the Short condition, the detection threshold ranged from 0.899 to 1.145, and the PSE was 1.02.

| Distance from wall | Lower | PSE | Upper |
|---|---|---|---|
| Long (3m) | 0.737 | 0.974 | 1.211 |
| Middle (2m) | 0.868 | 1.030 | 1.200 |
| Short (1m) | 0.899 | 1.020 | 1.145 |
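The conversion from gain thresholds to wall displacement in meters can be checked with a few lines. The sketch assumes that a gain scales the user-to-wall distance, so the undetected displacement is the distance multiplied by the deviation of the threshold from 1; `undetected_range_m` is a hypothetical helper name.

```python
# (distance in metres, lower threshold, upper threshold) from Table 1
THRESHOLDS = {
    "Long": (3.0, 0.737, 1.211),
    "Middle": (2.0, 0.868, 1.200),
    "Short": (1.0, 0.899, 1.145),
}


def undetected_range_m(distance: float, lower: float, upper: float):
    """Largest undetected wall displacement toward and away from the user, in metres."""
    toward = distance * (1.0 - lower)
    away = distance * (upper - 1.0)
    return toward, away


for name, (distance, lower, upper) in THRESHOLDS.items():
    toward, away = undetected_range_m(distance, lower, upper)
    print(f"{name}: {toward:.3f} m toward, {away:.3f} m away")
```

For the Long condition this gives about 0.79 m toward the user and 0.63 m away, matching the approximate 0.8m and 0.6m quoted in the text.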

3.2 Experiment 2: Navigating Indoor Environment

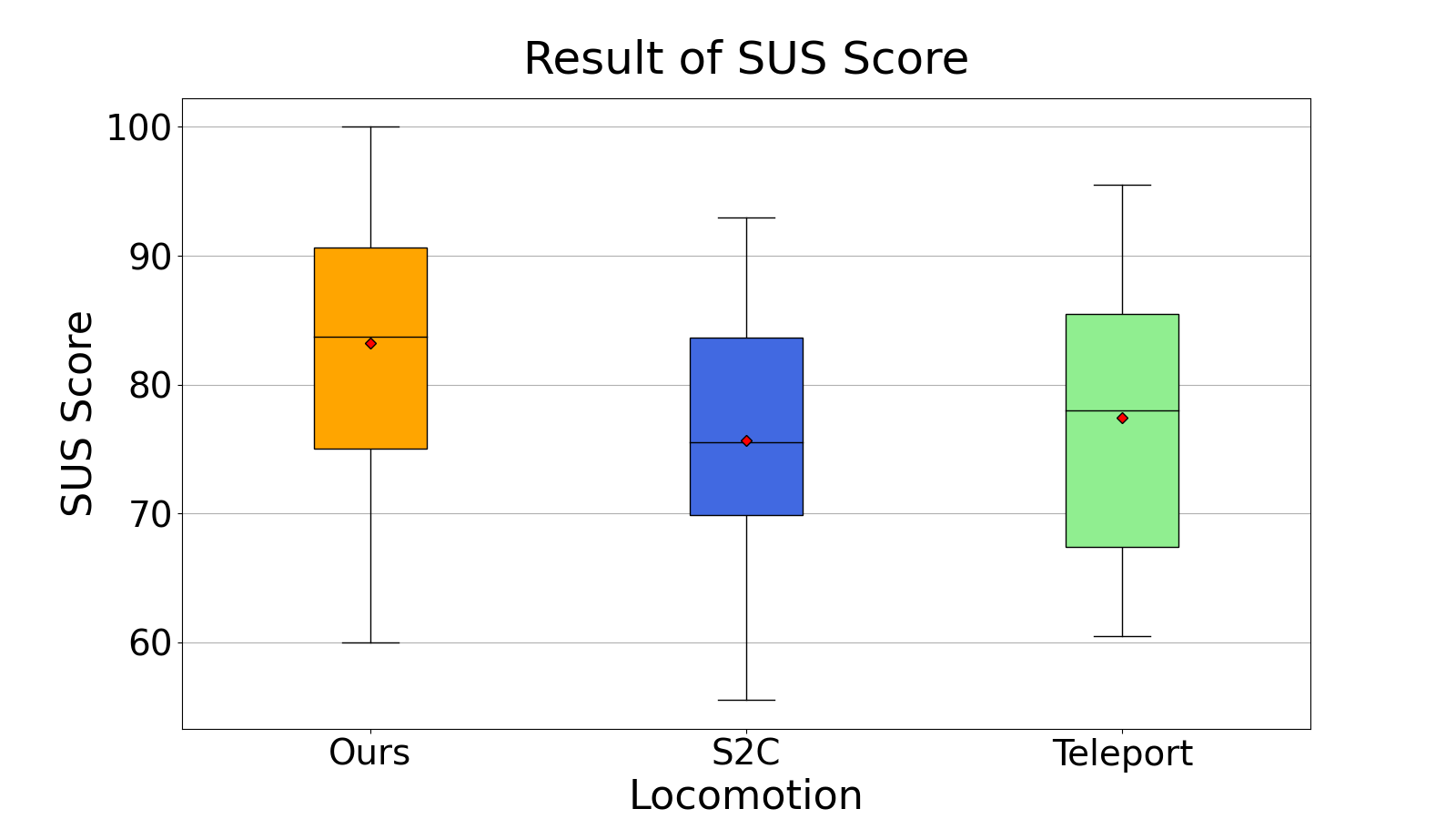

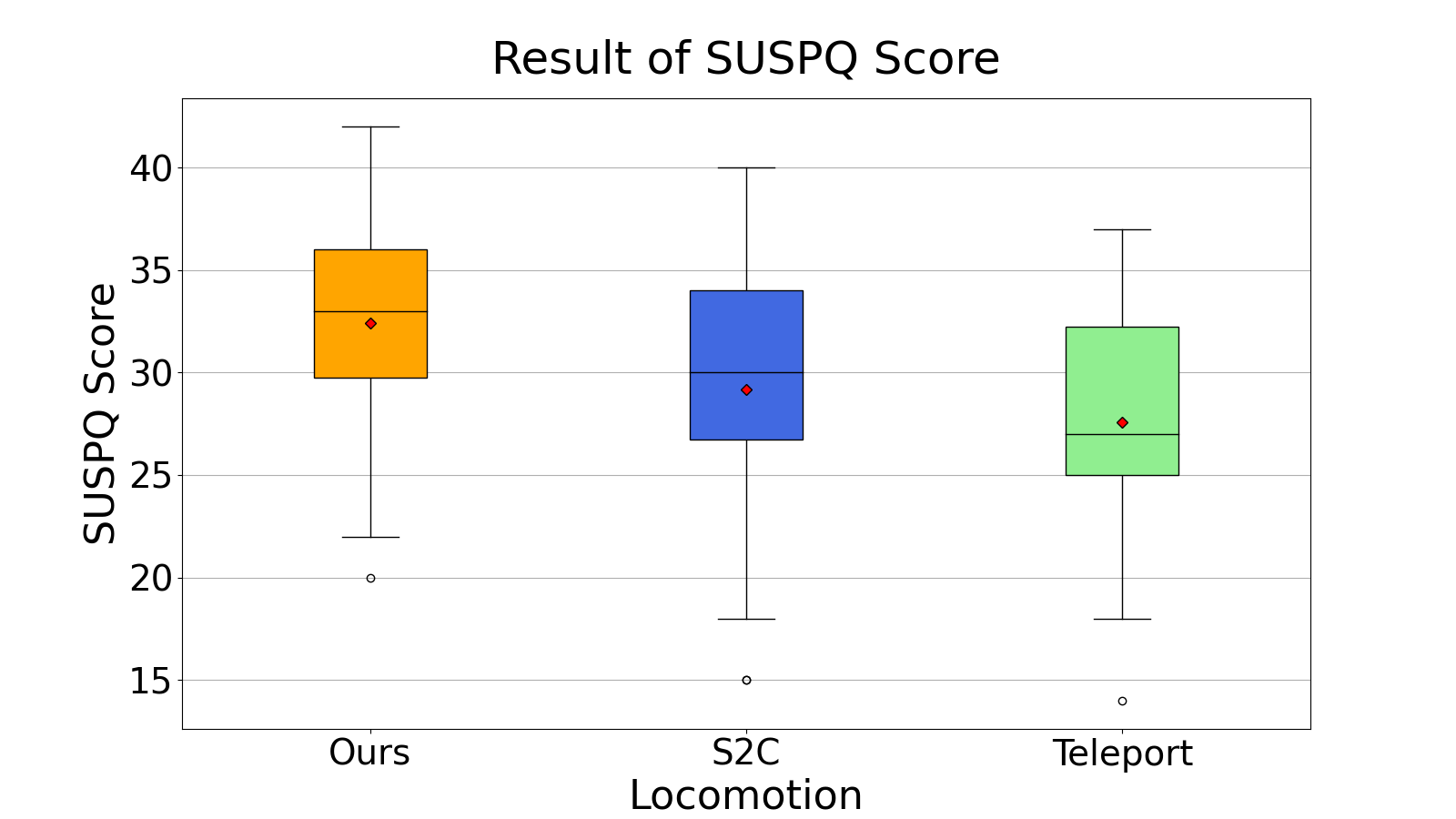

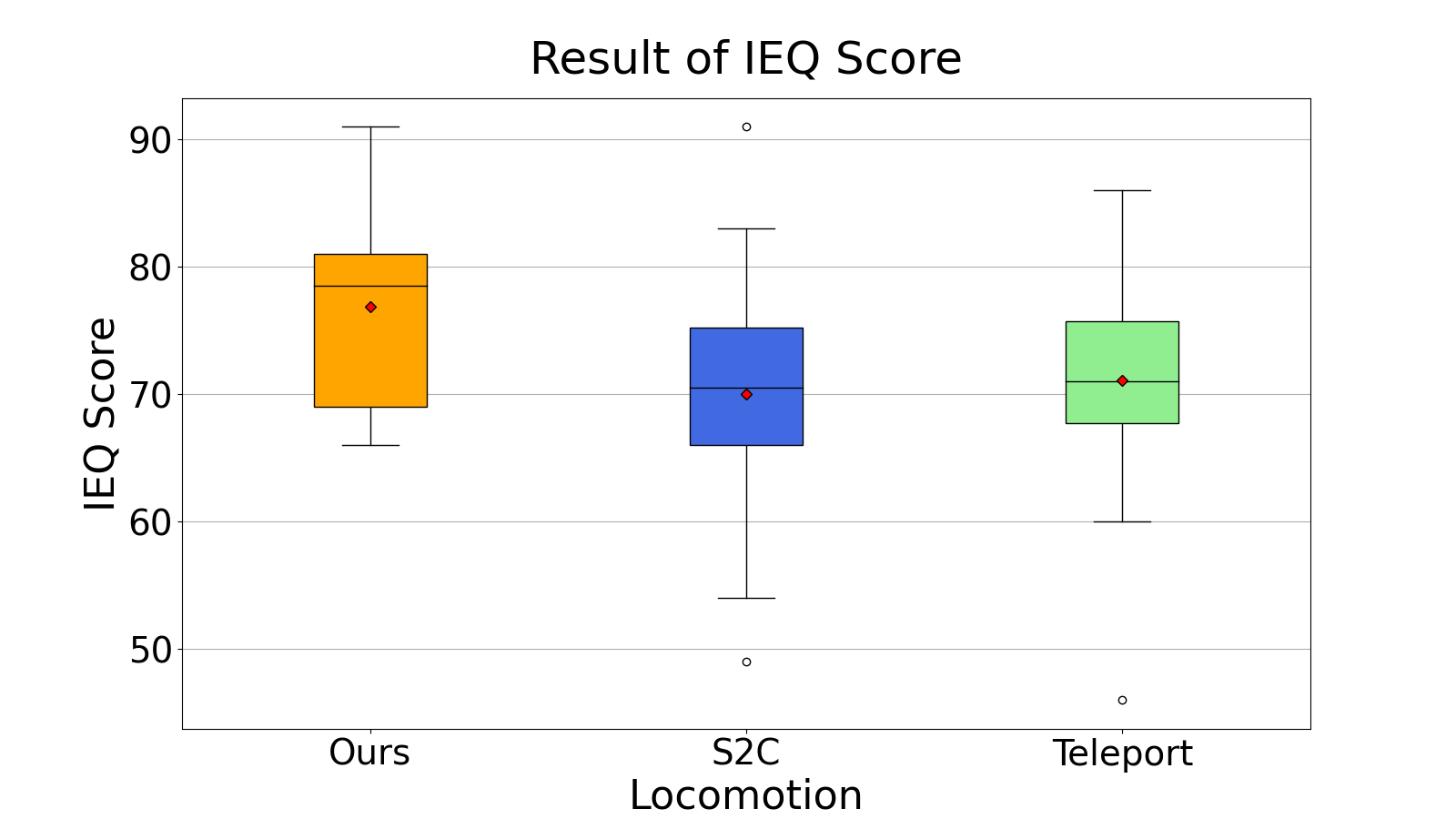

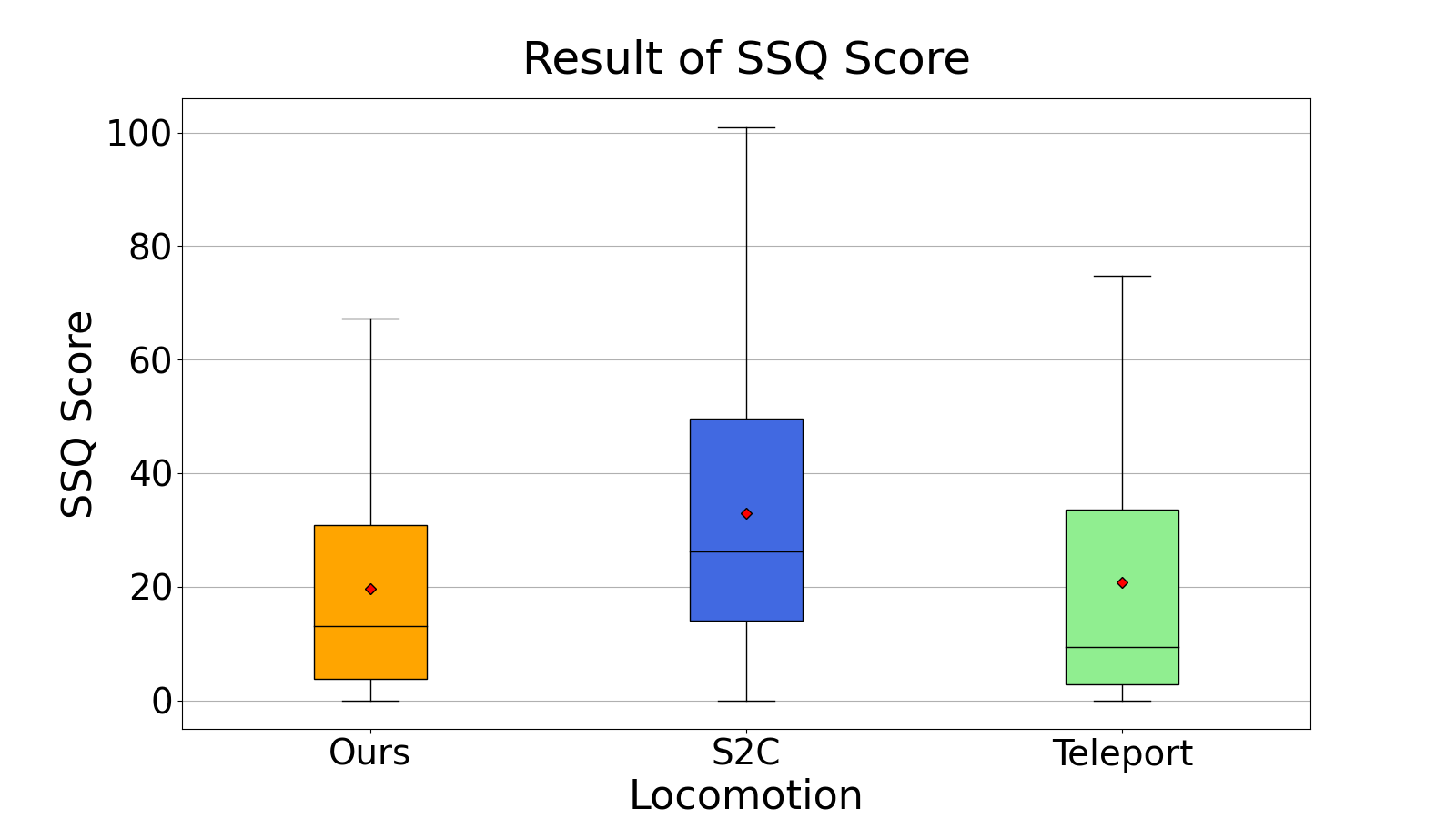

To examine the effect of our method on user experience compared to other locomotion techniques, we conducted a live-user experiment and collected questionnaire responses for our technique (Ours), S2C [19], and Teleport [2]. We did not compare against previous spatial manipulation studies [28, 29] because these studies only presented the technique and did not provide an algorithm for applying it. In addition, since our study presents an algorithm for indoor environments that differ from those of the earlier study [36], it is impossible to compare performance with previous change blindness-based studies in the same environment. We formulated the hypotheses H1-1, H1-2, H1-3, H1-4, H2-1, H2-2, H2-3, and H2-4 listed below and tested them through statistical analysis. For each locomotion technique, we used SUS [3] to measure usability, SUSPQ [33] to measure presence, IEQ [7] to measure immersion, and SSQ [8] to measure the degree of motion sickness. We also collected qualitative feedback by asking users some additional questions after the experiment. We excluded IEQ questions that were not suitable for our experiment, such as “How much did you want to win the game?” and “To what extent did you enjoy the graphics and imagery?”. When applying the proposed method, we used the detection threshold for the wall movement gain obtained in Experiment 1. We used a within-subject design to offset baseline differences between users, and the order of all conditions was counterbalanced.

- H1-1, H1-2, H1-3, H1-4: the SUS, SUSPQ, IEQ, and SSQ scores, respectively, will be better with Ours than with S2C.

- H2-1, H2-2, H2-3, H2-4: the SUS, SUSPQ, IEQ, and SSQ scores, respectively, will be better with Ours than with Teleport.

Setup

We created a virtual space in Unity 3D as shown in Figure 6(a), (b), and (c). Following the assumption stated in Section 2, the virtual space consists of several rectangular rooms, each of which can fit entirely into the real space. Some rooms are connected by a door, and the user can move to a connected room by pulling the door handle to open the door. Each room has a light on the ceiling and monotone wall textures, with a different color applied to each room to distinguish them. The VR equipment and computer used for the experiment are the same as in Experiment 1.

Participants

Twenty-nine people (18 men and 11 women) participated in our experiment; they were between 20 and 30 years old (mean = 25.1, SD = 3.4) and were students or office workers. Nine participants had no VR experience, 13 had experienced VR once or twice, and 7 reported having experienced VR more than three times. Twenty participants wore glasses or contact lenses, and 27 were right-handed. After the experiment, each participant received an incentive of $10. To comply with the government’s quarantine rules during the COVID-19 outbreak, we obtained each user’s consent to transmit experiment-related information to the quarantine authorities if necessary.

Procedure

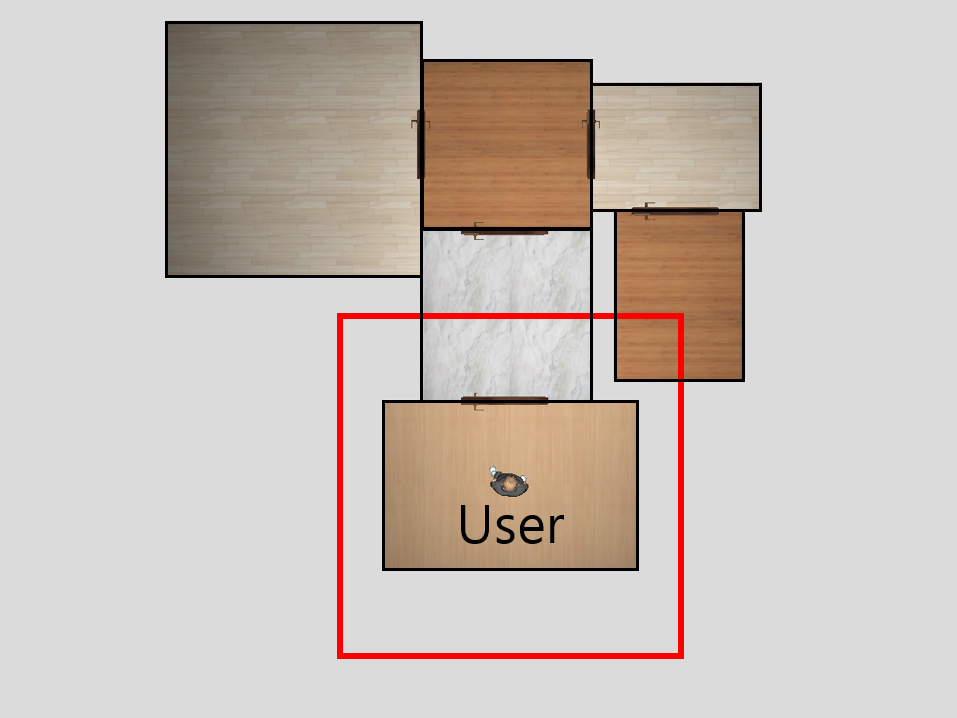

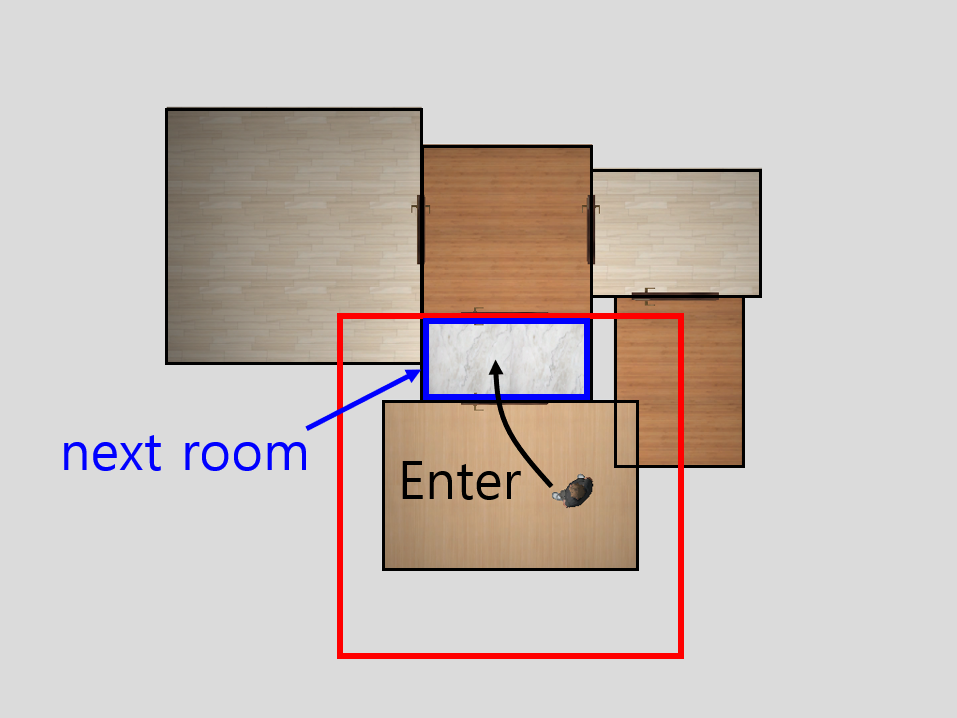

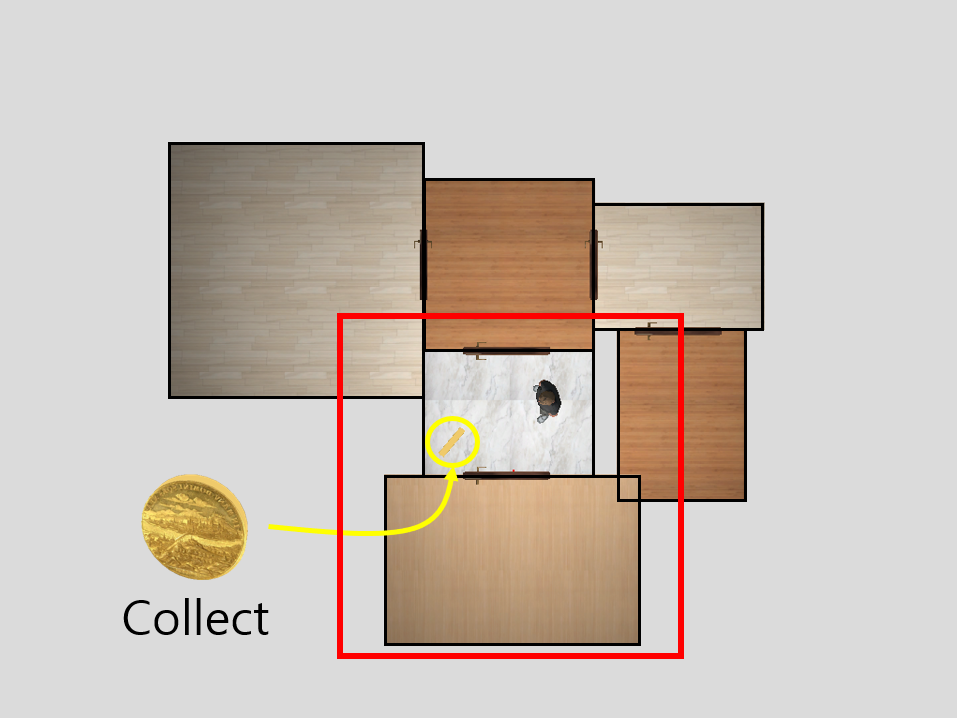

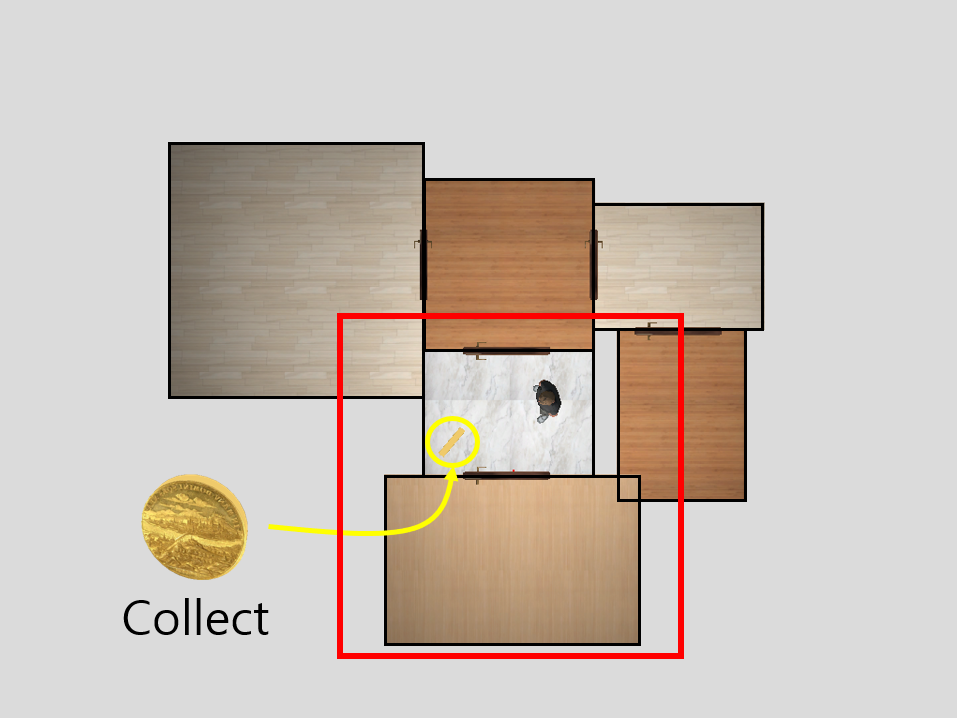

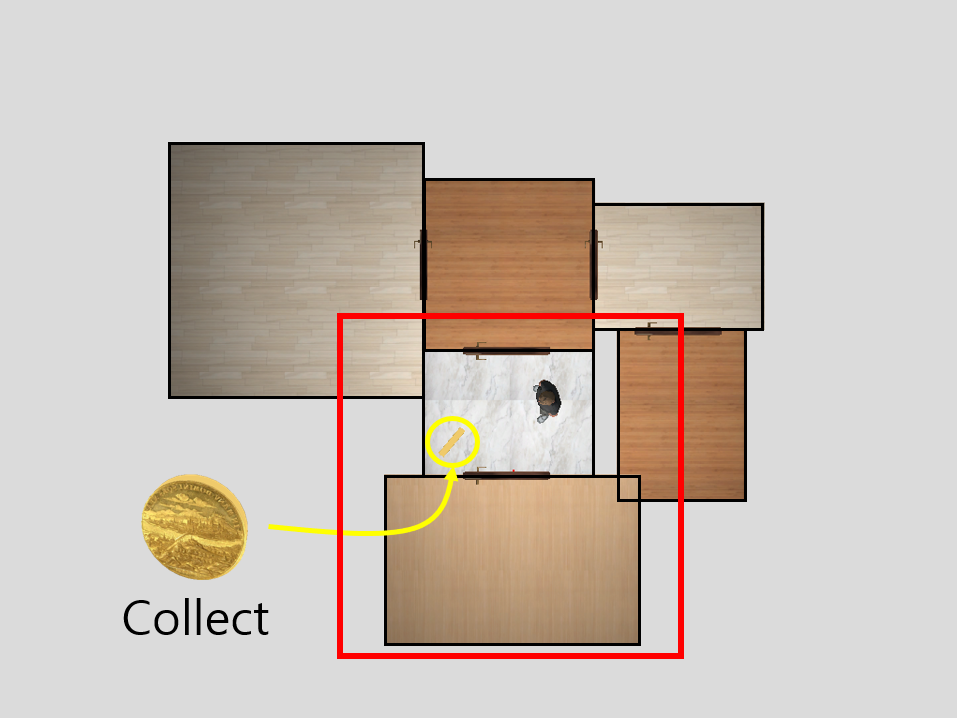

We collected a preliminary questionnaire from users, including information such as age and gender, for the analysis of the participant group. We conducted a simple test session so that users could adapt to locomotion and interaction in VR, in order to prevent learning effects. After the test session, we explained the tasks to be performed during the experiment. As shown in Figure 7(a), the user started at the center of a virtual room. The user first opened the door and moved to the connected room (Figure 7(b)). Then, as shown in Figure 7(c), the user repeatedly collected coins in the room. Once the user had collected the given number of coins in that room, the user chose one of the rooms connected to the current room and repeated the process. After the given time had elapsed and the trial was over, the user filled out the SUS, SUSPQ, IEQ, and SSQ questionnaires. We then changed the experimental condition, and the user repeated the experiment. Most subjects took about 40 minutes to complete all trials and questionnaires.

Result

Table 2 shows the average SUS, SUSPQ, IEQ, and SSQ scores for each locomotion condition, and Figure 8 shows each score as a box-and-whisker plot. We used a single significance level to test all hypotheses. For statistical analysis, we performed a Shapiro-Wilk test [23] for normality and Levene’s test for equality of variance on each questionnaire result. The SUS, SUSPQ, and IEQ results passed both the normality and equality-of-variance tests, whereas the SSQ result did not pass the normality test. Hence, we performed a one-way analysis of variance (ANOVA) on the SUS, SUSPQ, and IEQ results to check whether each score differed significantly across locomotion techniques. There were statistically significant differences in SUS, SUSPQ, and IEQ. Pairwise Tukey’s honestly significant difference tests were performed as post-hoc tests. They showed significant differences between Ours and S2C in SUS, between Ours and Teleport in SUSPQ, and between Ours and S2C as well as between Ours and Teleport in IEQ. Because SSQ did not pass the normality test, we conducted the Kruskal–Wallis H test to check for a significant difference in SSQ score among the three methods, and found one. As a post-hoc test, we conducted Mann-Whitney U tests with the significance level adjusted by Bonferroni correction. There were significant differences in SSQ scores between Ours and S2C and between S2C and Teleport.
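The analysis pipeline described above follows a simple decision rule, sketched here without any statistics library. The significance level of 0.05 is an assumption made only for illustration (the value is not restated here), the mapping of every non-parametric case to the Kruskal–Wallis branch is a simplification, and `choose_tests` and `bonferroni_alpha` are hypothetical helper names.

```python
from itertools import combinations

METHODS = ("Ours", "S2C", "Teleport")
ASSUMED_ALPHA = 0.05  # assumption for illustration, not a value taken from the paper


def choose_tests(is_normal: bool, equal_variance: bool):
    """Pick the omnibus and post-hoc tests from the two assumption checks."""
    if is_normal and equal_variance:
        return ("one-way ANOVA", "Tukey HSD")
    return ("Kruskal-Wallis H", "Mann-Whitney U with Bonferroni correction")


def bonferroni_alpha(alpha: float, n_comparisons: int) -> float:
    """Per-comparison significance level after Bonferroni correction."""
    return alpha / n_comparisons


pairs = list(combinations(METHODS, 2))   # all pairwise post-hoc comparisons
print(choose_tests(True, True))          # branch used for SUS, SUSPQ, and IEQ
print(choose_tests(False, True))         # branch used for SSQ
print(len(pairs), bonferroni_alpha(ASSUMED_ALPHA, len(pairs)))
```

With three locomotion methods there are three pairwise comparisons, so under the assumed level each Mann-Whitney U test would be evaluated against one third of the original threshold.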

| Method | SUS | SUSPQ | IEQ | SSQ |
|---|---|---|---|---|
| Ours | 83.621 | 32.690 | 77.000 | 19.474 |
| S2C | 75.759 | 29.586 | 70.310 | 36.983 |
| Teleport | 77.828 | 28.000 | 71.345 | 19.990 |

4 Discussion

4.1 Result of Experiment 1

As shown in Table 1 and Figure 5, the detection thresholds for wall movement in the Long, Middle, and Short conditions are [0.737, 1.211], [0.868, 1.200], and [0.899, 1.145], respectively. This confirms that the closer the wall is, the narrower the overall detection threshold range. As assumed in Experiment 1, the change in the user’s view increases as the user approaches the wall, and we conclude that the amount of change in the user’s scene determines whether the user recognizes the movement of a wall outside the field of view. Hence, the user is more likely to perceive the movement of a nearer wall than that of a farther wall. Moreover, as the user gets closer to the wall, the lower threshold narrows considerably more than the upper threshold. We believe this is because the user views the scene in perspective: the closer the wall is, the greater the change in the user’s view when the wall moves toward the user than when it moves away.
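The perspective argument can be illustrated with a simple geometric sketch. It assumes a wall of hypothetical height 2.5 m viewed head-on with the eye at mid-height, and uses the visual angle subtended by the wall as a rough proxy for the change in the user's view; none of these modeling choices come from the paper.

```python
import math

WALL_HEIGHT_M = 2.5  # hypothetical wall height, not a value from the paper


def visual_angle_deg(wall_height: float, distance: float) -> float:
    """Vertical visual angle of a wall seen head-on from the given distance."""
    return math.degrees(2.0 * math.atan(wall_height / (2.0 * distance)))


def angle_change_deg(wall_height: float, distance: float, gain: float) -> float:
    """Change in visual angle when the distance is scaled by a wall movement gain."""
    return (visual_angle_deg(wall_height, gain * distance)
            - visual_angle_deg(wall_height, distance))


for distance in (1.0, 2.0, 3.0):
    closer = angle_change_deg(WALL_HEIGHT_M, distance, 0.9)   # wall moves toward user
    away = angle_change_deg(WALL_HEIGHT_M, distance, 1.1)     # wall moves away
    print(f"{distance:.0f} m: {closer:+.2f} deg closer, {away:+.2f} deg away")
```

Because the visual angle is a convex, decreasing function of distance, moving the wall toward the user by a given amount always changes the angle more than moving it away by the same amount, which is in line with the lower threshold tightening faster than the upper one.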

On the other hand, the standard deviation of the response rate of expansion for all pairs of gain and distance was larger than expected, as shown in Figure 5(b)(c)(d). This means that although our experimental results show that the user’s view is affected by the distance between the user and the moving wall, it can also be affected by many other variables, such as the user’s viewing angle, the user’s current state, the texture of the wall, and the relative location of the surrounding furniture. Therefore, as a next step, we plan to express the complexity of the user’s view as a set of feature points extracted from the user’s scene, rather than simply as the distance from the wall, and then examine how the detection threshold for wall movement changes as these feature points change.

4.2 Result of Experiment 2

In Experiment 2, Ours performed statistically significantly better than S2C on all scores except SUSPQ. This can be confirmed in Figure 5(b)(c)(d). Therefore, we support H1-1, H1-3, and H1-4 and reject H1-2. We attribute this result to the visual-vestibular conflict that the user feels due to the continuous application of gains in S2C. The resets that occur during the experiment also seem to greatly influence the users’ questionnaire responses. In the feedback on S2C, many users reported that they felt the view rotating in VR and therefore felt dizzy. However, we believe that there is no significant difference between Ours and S2C in terms of presence because, in both methods, the user actually explores the room by walking.

We also confirmed that Ours performed statistically significantly better than Teleport in SUSPQ and IEQ. Hence, we support H2-2 and H2-3 and reject H2-1 and H2-4. In the case of usability, users could not adapt to the Teleport method at first, but they got used to it during the test session and could then move conveniently while staying in place. Hence, Teleport did not show a big difference in usability compared to Ours. In the user feedback about Teleport, some users reported that it was a little uncomfortable because it took time to adapt to it. Meanwhile, other users reported that the feeling of immersion actually increased because teleporting is an experience that is impossible in reality. In the case of motion sickness, it seems that the user unconsciously feels motion sickness with our method because the room’s structure keeps changing during exploration due to the constant room manipulation.

4.3 Limitation

Our proposed method has some limitations. First, the user has to stay in the room and look around continuously until the algorithm completes the restore and compression phases. In other words, the user cannot move across multiple rooms in a row because he/she must perform a certain task in each room visited. Second, since the method assumes that the virtual room and the real space are rectangular, the algorithm is currently not suitable for virtual indoor environments with general polygonal or circular rooms. Similarly, our method does not allow the environment to contain a rectangular room that does not fit entirely inside the real space. Third, if a compressed room is too small, the user cannot practically explore it on foot, which compromises the sense of immersion. As a result, the extent to which a room can be compressed is limited. In other words, each room is bound to be much smaller than the given tracking space so that a minimum size is ensured when neighboring rooms are compressed. Finally, to exploit change blindness, we currently move only walls that are wholly outside the user’s field of view. For this reason, only specific walls can move at any given time. Therefore, this restriction on freedom needs to be alleviated by finding a way to move walls inside the user’s field of view as well as walls outside it.
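To make the last restriction concrete, the following is a minimal sketch of a test for whether a wall lies wholly outside the user’s field of view. It is a top-down 2D simplification written for illustration only, not the implementation used in this work: the function name `wall_outside_fov`, the 110-degree default horizontal field of view, and the representation of a wall as a line segment are all assumptions. The sketch further assumes a field of view below 180 degrees and a user who does not stand on the wall line.

```python
import math


def wall_outside_fov(user, gaze, wall_a, wall_b, fov_deg=110.0):
    """Return True if the wall segment (wall_a, wall_b) is wholly outside
    the horizontal view cone of a user at `user` looking along `gaze`.

    All points are (x, y) tuples in a top-down floor plan.
    fov_deg is an assumed headset field of view, not a measured value.
    """
    half = math.radians(fov_deg) / 2.0
    gaze_angle = math.atan2(gaze[1], gaze[0])

    def signed_angle(p):
        # Angle of point p relative to the gaze direction, wrapped to [-pi, pi].
        a = math.atan2(p[1] - user[1], p[0] - user[0]) - gaze_angle
        return math.atan2(math.sin(a), math.cos(a))

    lo, hi = sorted((signed_angle(wall_a), signed_angle(wall_b)))
    if hi - lo <= math.pi:
        # The wall sweeps the arc [lo, hi], which does not pass behind the user.
        visible = hi >= -half and lo <= half
    else:
        # The wall sweeps the arc that passes through +/-pi (behind the user).
        visible = hi <= half or lo >= -half
    return not visible


if __name__ == "__main__":
    # User at the origin looking along +x.
    print(wall_outside_fov((0, 0), (1, 0), (-2, -1), (-2, 1)))  # wall behind
    print(wall_outside_fov((0, 0), (1, 0), (1, -5), (1, 5)))    # wide wall ahead
```

Testing only the two endpoints would not be sufficient: a wide wall directly ahead can have both endpoints outside the view cone while its middle is clearly visible, which is why the sketch compares the whole angular interval swept by the wall against the view cone.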

We newly defined the wall movement gain, which can be applied in a general virtual room surrounded by walls, and measured its detection threshold with respect to the distance from the wall. However, the detection threshold for the out-of-sight wall movement gain needs to be measured more accurately by considering not only the distance from the wall but also other variables. In particular, since change blindness is directly related to the change in the user’s view, we need to quantitatively analyze the user’s view and estimate the detection threshold for wall movement according to this analysis.
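The role of the detection threshold in limiting each wall move can be sketched as follows. This is a hedged illustration only: it assumes that the gain is the ratio of the user-to-wall distance after a move to the distance before it, and it uses the Short-case interval [0.899, 1.145] from Section 4.1 as default bounds. The function names `step_wall_distance` and `moves_to_reach` are hypothetical.

```python
def step_wall_distance(current, target, lower=0.899, upper=1.145):
    """Apply one out-of-sight wall move.

    The desired gain (target / current) is clamped to the detection
    threshold interval [lower, upper]. The gain definition used here
    is an assumption made for this sketch.
    """
    if current <= 0 or target <= 0:
        raise ValueError("distances must be positive")
    desired_gain = target / current
    gain = min(max(desired_gain, lower), upper)
    return current * gain


def moves_to_reach(current, target, lower=0.899, upper=1.145,
                   tol=1e-6, max_steps=1000):
    """Count how many clamped moves are needed to reach the target distance."""
    steps = 0
    while abs(current - target) > tol and steps < max_steps:
        current = step_wall_distance(current, target, lower, upper)
        steps += 1
    return steps, current


if __name__ == "__main__":
    # Doubling a wall distance from 2 m to 4 m under the assumed bounds.
    print(moves_to_reach(2.0, 4.0))
```

Under these assumptions, doubling a wall distance from 2 m to 4 m takes six separate unseen moves, which illustrates why the user has to keep looking around until a phase completes.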

5 Conclusion

In this study, we proposed a new spatial manipulation technique for RDW that moves walls outside the field of view using change blindness and is applicable to various virtual indoor environments that can be represented as an infinite number of interconnected rooms. The method consists of restore and compression phases. The restore phase continuously moves the walls outside the user’s field of view using change blindness. After the restoration, the room in which the user is currently located has its original dimensions and is located in the center of the real space. The compression phase pushes all rooms adjacent to the restored room into the real space. In addition, we defined the out-of-sight wall movement that utilizes change blindness as a new type of gain and measured its detection threshold. We can manipulate the space by applying this gain to a typical virtual room surrounded by walls. Finally, we conducted a live-user experiment comparing the proposed method, S2C, and Teleport and surveyed the users after the experiment.
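The end state of the restore phase described above can be sketched as a small computation. This is an illustrative sketch under stated assumptions, not the algorithm itself: both the real space and the room are axis-aligned rectangles, the real space has one corner at the origin, and the function name `restored_room_rect` is hypothetical.

```python
def restored_room_rect(real_w, real_h, room_w, room_h):
    """Return (x_min, y_min, x_max, y_max) of a room at its original size,
    centered in a real space of size real_w x real_h.

    The real space is assumed to span [0, real_w] x [0, real_h].
    """
    if room_w > real_w or room_h > real_h:
        # The method requires every room to fit entirely inside the real space.
        raise ValueError("room does not fit inside the real space")
    x_min = (real_w - room_w) / 2.0
    y_min = (real_h - room_h) / 2.0
    return (x_min, y_min, x_min + room_w, y_min + room_h)


if __name__ == "__main__":
    # A 4 m x 2 m room restored inside a 6 m x 4 m tracking space.
    print(restored_room_rect(6.0, 4.0, 4.0, 2.0))
```

The margin left around the centered room is the area into which the compression phase can push the adjacent rooms.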

Several future studies can follow from this work. First, we plan to extend this technique to more general indoor environments in which a virtual unit is not rectangular but has various shapes and may be larger than the real space. Second, our current method only uses out-of-sight wall movement. To increase the degree of freedom in spatial manipulation, we can consider extending the algorithm to move some objects and walls inside the user’s view. Third, by applying reinforcement learning, it will be possible to determine the room’s optimal restored size and location in the restoration phase according to the state of the user and the environment. Finally, we can consider combining our method with previously studied techniques such as flexible spaces [36] and impossible spaces [29]. For example, a virtual museum can have several themes such as “Stone Age” and “Bronze Age”. We can use our method to navigate the rooms within the area of a single theme. Then, using the flexible spaces technique [36], we can connect one theme area to another by procedurally creating corridors and rooms. In this way, users do not have to perform unnecessary tasks when simply moving between areas of different themes.

References

- [1] M. Azmandian, T. Grechkin, M. T. Bolas, and E. A. Suma. Physical space requirements for redirected walking: How size and shape affect performance. In ICAT-EGVE, pp. 93–100, 2015.

- [2] E. Bozgeyikli, A. Raij, S. Katkoori, and R. Dubey. Point & teleport locomotion technique for virtual reality. In Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play, pp. 205–216, 2016.

- [3] J. Brooke et al. SUS: A quick and dirty usability scale. Usability Evaluation in Industry, 189(194):4–7, 1996.

- [4] G. Bruder, P. Lubos, and F. Steinicke. Cognitive resource demands of redirected walking. IEEE Transactions on Visualization and Computer Graphics, 21(4):539–544, 2015.

- [5] Y.-H. Cho, D.-H. Min, J.-S. Huh, S.-H. Lee, J.-S. Yoon, and I.-K. Lee. Walking outside the box: Estimation of detection thresholds for non-forward steps. In 2021 IEEE Virtual Reality and 3D User Interfaces (VR), pp. 448–454. IEEE, 2021.

- [6] E. Hodgson and E. Bachmann. Comparing four approaches to generalized redirected walking: Simulation and live user data. IEEE Transactions on Visualization and Computer Graphics, 19(4):634–643, 2013.

- [7] C. Jennett, A. L. Cox, P. Cairns, S. Dhoparee, A. Epps, T. Tijs, and A. Walton. Measuring and defining the experience of immersion in games. International Journal of Human-Computer Studies, 66(9):641–661, 2008.

- [8] R. S. Kennedy, N. E. Lane, K. S. Berbaum, and M. G. Lilienthal. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. The International Journal of Aviation Psychology, 3(3):203–220, 1993.

- [9] D. Kim, J.-e. Shin, J. Lee, and W. Woo. Adjusting relative translation gains according to space size in redirected walking for mixed reality mutual space generation. In 2021 IEEE Virtual Reality and 3D User Interfaces (VR), pp. 653–660. IEEE, 2021.

- [10] L. Kruse, E. Langbehn, and F. Steinicke. I can see on my feet while walking: Sensitivity to translation gains with visible feet. In 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 305–312. IEEE, 2018.

- [11] E. Langbehn, T. Eichler, S. Ghose, K. von Luck, G. Bruder, and F. Steinicke. Evaluation of an omnidirectional walking-in-place user interface with virtual locomotion speed scaled by forward leaning angle. In Proceedings of the GI Workshop on Virtual and Augmented Reality (GI VR/AR), pp. 149–160, 2015.

- [12] E. Langbehn, P. Lubos, G. Bruder, and F. Steinicke. Bending the curve: Sensitivity to bending of curved paths and application in room-scale VR. IEEE Transactions on Visualization and Computer Graphics, 23(4):1389–1398, 2017.

- [13] E. Langbehn, F. Steinicke, M. Lappe, G. F. Welch, and G. Bruder. In the blink of an eye: leveraging blink-induced suppression for imperceptible position and orientation redirection in virtual reality. ACM Transactions on Graphics (TOG), 37(4):1–11, 2018.

- [14] D.-Y. Lee, Y.-H. Cho, and I.-K. Lee. Real-time optimal planning for redirected walking using deep Q-learning. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 63–71. IEEE, 2019.

- [15] T. Nescher, Y.-Y. Huang, and A. Kunz. Planning redirection techniques for optimal free walking experience using model predictive control. In 2014 IEEE Symposium on 3D User Interfaces (3DUI), pp. 111–118. IEEE, 2014.

- [16] C. T. Neth, J. L. Souman, D. Engel, U. Kloos, H. H. Bülthoff, and B. J. Mohler. Velocity-dependent dynamic curvature gain for redirected walking. IEEE Transactions on Visualization and Computer Graphics, 18(7):1041–1052, 2012.

- [17] N. C. Nilsson, T. Peck, G. Bruder, E. Hodgson, S. Serafin, M. Whitton, F. Steinicke, and E. S. Rosenberg. 15 years of research on redirected walking in immersive virtual environments. IEEE Computer Graphics and Applications, 38(2):44–56, 2018.

- [18] A. Paludan, J. Elbaek, M. Mortensen, M. Zobbe, N. C. Nilsson, R. Nordahl, L. Reng, and S. Serafin. Disguising rotational gain for redirected walking in virtual reality: Effect of visual density. In 2016 IEEE Virtual Reality (VR), pp. 259–260. IEEE, 2016.

- [19] S. Razzaque. Redirected walking. The University of North Carolina at Chapel Hill, 2005.

- [20] M. Rietzler, J. Gugenheimer, T. Hirzle, M. Deubzer, E. Langbehn, and E. Rukzio. Rethinking redirected walking: On the use of curvature gains beyond perceptual limitations and revisiting bending gains. In 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 115–122. IEEE, 2018.

- [21] R. A. Ruddle and S. Lessels. The benefits of using a walking interface to navigate virtual environments. ACM Transactions on Computer-Human Interaction (TOCHI), 16(1):1–18, 2009.

- [22] D. J. Simons and D. T. Levin. Change blindness. Trends in Cognitive Sciences, 1(7):261–267, 1997.

- [23] F. Steinicke, G. Bruder, K. Hinrichs, and P. Willemsen. Change blindness phenomena for stereoscopic projection systems. In 2010 IEEE Virtual Reality Conference (VR), pp. 187–194. IEEE, 2010.

- [24] F. Steinicke, G. Bruder, J. Jerald, H. Frenz, and M. Lappe. Estimation of detection thresholds for redirected walking techniques. IEEE Transactions on Visualization and Computer Graphics, 16(1):17–27, 2009.

- [25] R. R. Strauss, R. Ramanujan, A. Becker, and T. C. Peck. A steering algorithm for redirected walking using reinforcement learning. IEEE Transactions on Visualization and Computer Graphics, 26(5):1955–1963, 2020.

- [26] E. Suma, S. Finkelstein, M. Reid, S. Babu, A. Ulinski, and L. F. Hodges. Evaluation of the cognitive effects of travel technique in complex real and virtual environments. IEEE Transactions on Visualization and Computer Graphics, 16(4):690–702, 2009.

- [27] E. A. Suma, G. Bruder, F. Steinicke, D. M. Krum, and M. Bolas. A taxonomy for deploying redirection techniques in immersive virtual environments. In 2012 IEEE Virtual Reality Workshops (VRW), pp. 43–46. IEEE, 2012.

- [28] E. A. Suma, S. Clark, D. Krum, S. Finkelstein, M. Bolas, and Z. Warte. Leveraging change blindness for redirection in virtual environments. In 2011 IEEE Virtual Reality Conference, pp. 159–166. IEEE, 2011.

- [29] E. A. Suma, Z. Lipps, S. Finkelstein, D. M. Krum, and M. Bolas. Impossible spaces: Maximizing natural walking in virtual environments with self-overlapping architecture. IEEE Transactions on Visualization and Computer Graphics, 18(4):555–564, 2012.

- [30] Q. Sun, A. Patney, L.-Y. Wei, O. Shapira, J. Lu, P. Asente, S. Zhu, M. McGuire, D. Luebke, and A. Kaufman. Towards virtual reality infinite walking: dynamic saccadic redirection. ACM Transactions on Graphics (TOG), 37(4):1–13, 2018.

- [31] J. Thomas and E. S. Rosenberg. A general reactive algorithm for redirected walking using artificial potential functions. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 56–62. IEEE, 2019.

- [32] M. Usoh, K. Arthur, M. C. Whitton, R. Bastos, A. Steed, M. Slater, and F. P. Brooks Jr. Walking > walking-in-place > flying, in virtual environments. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, pp. 359–364, 1999.

- [33] M. Usoh, E. Catena, S. Arman, and M. Slater. Using presence questionnaires in reality. Presence, 9(5):497–503, 2000.

- [34] K. Vasylevska and H. Kaufmann. Influence of path complexity on spatial overlap perception in virtual environments. In ICAT-EGVE, pp. 159–166, 2015.

- [35] K. Vasylevska and H. Kaufmann. Towards efficient spatial compression in self-overlapping virtual environments. In 2017 IEEE Symposium on 3D User Interfaces (3DUI), pp. 12–21. IEEE, 2017.

- [36] K. Vasylevska, H. Kaufmann, M. Bolas, and E. A. Suma. Flexible spaces: Dynamic layout generation for infinite walking in virtual environments. In 2013 IEEE Symposium on 3D User Interfaces (3DUI), pp. 39–42. IEEE, 2013.

- [37] N. L. Williams, A. Bera, and D. Manocha. ARC: Alignment-based redirection controller for redirected walking in complex environments. IEEE Transactions on Visualization and Computer Graphics, 27(5):2535–2544, 2021.

- [38] C. A. Zanbaka, B. C. Lok, S. V. Babu, A. C. Ulinski, and L. F. Hodges. Comparison of path visualizations and cognitive measures relative to travel technique in a virtual environment. IEEE Transactions on Visualization and Computer Graphics, 11(6):694–705, 2005.

- [39] M. A. Zmuda, J. L. Wonser, E. R. Bachmann, and E. Hodgson. Optimizing constrained-environment redirected walking instructions using search techniques. IEEE Transactions on Visualization and Computer Graphics, 19(11):1872–1884, 2013.