Reducing Network Load via Message Utility Estimation for Decentralized Multirobot Teams

Abstract

We are motivated by quantile estimation of algae concentration in lakes and how decentralized multirobot teams can effectively tackle this problem. We find that multirobot teams improve performance in this task over single robots, and communication-enabled teams further over communication-deprived teams; however, real robots are resource-constrained, and communication networks cannot support arbitrary message loads, making naïve, constant information-sharing but also complex modeling and decision-making infeasible. With this in mind, we propose online, locally computable metrics for determining the utility of transmitting a given message to the other team members and a decision-theoretic approach that chooses to transmit only the most useful messages, using a decentralized and independent framework for maintaining beliefs of other teammates. We validate our approach in simulation on a real-world aquatic dataset, and we show that restricting communication via a utility estimation method based on the expected impact of a message on future teammate behavior results in a 42% decrease in network load while simultaneously decreasing quantile estimation error by 1.84%.

I Introduction

Multirobot teams have shown promise for effectively exploring and accurately estimating quantile values to aid understanding of natural environments [1]. When studying spatially varying fields, quantile estimation is useful for describing varied spreads of interest, and entails using measurements from the environment to estimate the values of desired quantiles (e.g., the 25th percentile is equivalent to the 0.25 quantile) [2]. We are interested in using a team of communication-enabled mobile robots to collaboratively explore a natural environment such that we achieve an accurate estimate of quantile values in that environment. However, realistic multirobot deployments must consider limitations in communication capabilities; here, we focus on reducing the network load as measured by the number of attempted transmitted messages with the goal of sacrificing minimal quantile estimation accuracy. We address the question of how to decide if a message is worth sending. Specifically, we:

-

•

Propose methods to reduce network load for bandwidth- and resource-constrained decentralized multirobot teams that leverage utility-based message evaluation, with no guarantees on information from teammates;

-

•

Describe a decision-theoretic approach to choosing whether to transmit a message to the network rooted in the utility-based evaluation and a cost of transmission;

-

•

Present results showing significant reductions in network load are achievable with minor corresponding drops in performance on the problem task of estimating quantile values, even with probabilistic transmission failure and a distance-based transmission probability, on a dataset from a real aquatic environment;

-

•

Discuss results in the context of real-world applications.

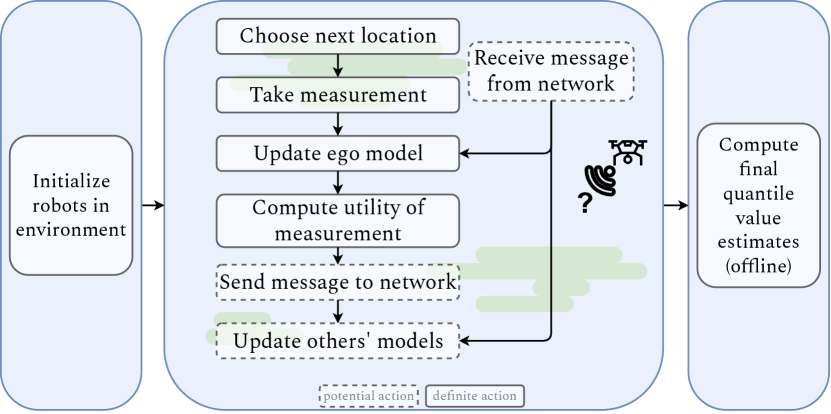

We assume a decentralized team where each robot plans independently and is not guaranteed to receive information from others. However, we assume a communication protocol such that a robot can, at each step, decide to broadcast its newly acquired measurements to the network. The network has limited bandwidth, so robots are incentivized to only send those that will be most beneficial to the others. We encode the cost of loading the network with a message through a threshold value, where utilities higher than the threshold have a higher benefit than cost. With these assumptions in place, the question of how to evaluate a potential message for its utility emerges as a key consideration to this problem; in this work, we propose several approaches to solve it. A general overview can be seen in Figure 1.

II Related Work

There is an extensive literature in utility-based communication, and our methods share similarities with previous work. Work that has considered the problem of deciding what or when to communicate is largely motivated by the same desire to respect bandwidth limitations that real networks must face and to reduce communication costs. However, particular methods differ in how they approach solutions and in what context the problem is situated. Some of the varied contexts in which utility-based decision-making for inter-robot communication has been investigated include months-long data collection to be routed to a base station [3], disaster response [4], non-cooperative multi-agent path finding [5], and those with request-reply protocols [5, 6].

One common approach has been to use message category, type, or intrinsic data features to determine which messages are most valuable to send [3, 4, 7]. These approaches typically leverage domain knowledge for their particular application. Previous work aimed at the problem of multirobot localization has applied directed acyclic graphs built by forward-simulating the robot team under both transmission and non-transmission of each message [8]. The goal then is to reach the best group localization accuracy while minimizing the cost (number of communications required in the DAG).

The expected effect of the message on robot actions has also been used to determine utility [5, 6]. One approach for restricting the number of communications when robots are tasked with selecting goal waypoints to visit is to use a heuristic based on limiting the new path’s length, and only request information from others if the path would be within a certain factor length of the current path [9]. Monte Carlo Tree Search (MCTS) has been used within a general middleware in order to make decisions on whether a message should be sent to the network [7]. Their approach takes into account the temporal aspect of message utility potentially changing over time, and the tree built at each decision point includes all previous and future messages. However, it does not explicitly encode network constraints and assumes that robots are always within communication range of each other.

Another class of solutions propose metrics to determine utility based on the difference in expected reward after incorporating a message [10, 11, 12]. In [13], the authors proposed a utility function computed as the sum of two competing factors: maximizing utility gained by the entire team and minimizing the number of times a message is sent. There, the network is point-to-point, and thus at each step, a decision is made whether to forward the received message to a random recipient or to cease forwarding. [7] and [8] also include a measure of utility based on expected reward, and the teams are decentralized in terms of planning and reward calculation. A major difference between these works and ours is that our end goal is to explore the environment in whatever way results in the best quantile estimates, while those define a static goal location for the robots to reach. We also exclusively choose to plan using a greedy algorithm for computational efficiency and tractability, motivated by resource-constrained systems, and do not forward-simulate the models of other robots.

Significantly, we make the (more realistic) assumption of stochastic communication on top of the communication decision; just because a robot decides to send a message does not guarantee reception by all or any of the recipients. We do not assume perfect measurement sensing. Despite our relatively simple planning and modeling methods and more failure-prone communication assumptions, we are able to achieve a significant decrease in network loading while maintaining good task performance.

III Methods

Our formulation extends the setup in [1] to which we refer the reader for details; we briefly mention relevant definitions here. The team consists of aerial robots, each operating with a fixed planning budget at a fixed height in a discretized environment . Robots can take one of four possible actions at each step: or . Since our focus is on inter-robot communications, we assume a low-level controller, a collision avoidance routine, and perfect localization. The human operator supplies a set of arbitrary quantiles of interest , and the end goal is to produce accurate estimates of where the true values are defined as , the set of all possible locations a robot can measure with its sensor are , and the corresponding true values at those locations are . Each robot has a camera with which it can take noisy measurements representing the concentration of algae at those pixel locations in the environment . Throughout the deployment, each robot collects measurements both firsthand via its camera as well as via any messages received from others through the network. The robots use all their collected measurements to construct and update their internal Gaussian process (GP) model of the environment. Robot ’s estimate of the quantile values is given by

| (1) |

where is the estimate of all possible locations using the GP conditioned on the measurements and that has collected thus far.

In what follows, when viewing the problem from the perspective of a given robot, we will refer to it as or the ego robot and to any of the other robots as . Variables superscripted with such as indicate the GP for from the perspective of .

Motivated by the computational constraints of resource-constrained systems, we opt to use a locally computable, online, greedy planning policy. At each planning step , maximizes an objective function over feasible next measurement locations. We use a modified version of the quantile standard error objective function to evaluate a proposed location for time [2], which measures the difference in the standard error of the estimated quantile values using two versions of the robot model: the current model , and the model updated with the hypothetical new values at the proposed locations . We modify such that it is conditioned not only on the proposed measurement locations , but also the values associated with the locations (denoted by generally) and the GP to be used. The reasoning is that is also used later in the process for computing message utilities, and this allows it to generalize to these cases when and may take on different values. When is used in the planning step by to select ’s next location, are the expected values at the proposed location : (just like in [2]). However, when is used by to determine the utility of receiving a real measurement that has already acquired, are those acquired , and the GP may be either or (see Section III-C). The final term encourages exploration of high-variance areas.

| (2) |

| (3) |

III-A Summary of Process

The approach consists of 3 phases: Initialization, Exploration, and Aggregation. These are described at a high level here; further details are in the sections following.

III-A1 Initialization

Robots begin distributed in the environment according to the initial location spread ([1] provides details). We assume each robot is given the number of other robots and their corresponding starting locations.

III-A2 Exploration

The bulk of the work happens during the decentralized exploration phase, where robots repeatedly choose the next location to visit, measure the environment, optionally broadcast the measurement in a message, and update their models until their budget is exhausted. The next measurement locations at are selected according to

| (4) |

where contains the sets of locations that could be sensed one step from the current location, . On top of this planning cycle, may receive a message from another at any point, leading to further model updates (Section III-C).

III-A3 Aggregation

Once the robots have reached the allotted budgets, their data is collected. The operator can produce an aggregate GP model of the environment offline using it and, with that, obtain final estimates of quantile values:

| (5) |

III-B Communication Framework

The communication network consists of robots, each with the ability to broadcast a message at each timestep. A message sent by at time is composed of a header containing the sending robot’s ID number and current location, and a body containing the current set of measurements. A sent message is (independently) transmitted successfully to every other robot in the network subject to the probability depending on distance and parameterized by the dropoff rate and communication radius [1]:

| (6) |

A successfully transmitted message will be received whole, uncorrupted, and without delay. If a message is unsuccessfully transmitted to , it is considered lost for that robot. In general, we assume does not receive a handshake or confirmation of whether its message was received successfully or not by . We assume that robots can self-localize and that, within the radius , they can accurately sense , which they do for each at each step.

III-C Modeling Others and Updating Models

A central consideration is how to model other robots using incomplete information since such a model is necessary to adequately assess the utility that any given message will have for others. The ego robot maintains a separate model for each . is the most up-to-date location has, and is updated in two cases. First, by ’s sensing capabilities at each step if is within radius , and second, anytime receives a message from , via the header. is a GP based on the measurements believes has. We denote this as . , and , which is defined by it, can also be updated in two scenarios. The first is when receives a message from , since it is clear that must possess the data contained. The second is more uncertain. If broadcasts to the network, it does not know whether it was successfully transmitted to any ; however, recall that can sense the location of within . We use this to determine a proxy for reasonable confidence of transmission success: If is within , believes was received and thus updates .

III-D Computing Message Utility

We introduce several methods for computing the utility of (dropping the super/subscripts for readability). The first, Reward, defines as the reward believes would receive, using the objective function :

| (7) |

We call the second method Action. Intuitively, it considers a message utile if believes that receiving those measurements would result in taking a different action than it would without them. Then is defined as if the actions and differ as computed using Equation 4, and if they do not, takes the value of :

| (8) |

Both and include the caveat that, if believes has collected no measurements yet, the message should be sent.

The last three methods presented are simpler in that they do not make use of the model of . In the third method, Ego-reward, uses the reward that it itself actually received from its measurement, and directly sets that as the utility for all other :

| (9) |

Finally, Always and Never are constant baselines, which always assign either or :

| (10) |

| (11) |

III-E Deciding to Communicate

With the utilities of a message computed for each , now faces the problem of deciding whether the utility overcomes the cost of loading the network with a message. Since the communication is broadcast-based rather than point-to-point, must first compute a single utility value for the team and then determine if it crosses a utility threshold representing the cost. For this, we describe a method for aggregating a set of utilities which computes expected utility, weighting utilities by the estimated transmission success probability:

| (12) |

| (13) |

where and is as defined in Section III-B. The estimated success probability, computed in Equation 13, directly uses the probability based on the distance to if it is known (i.e., if is within the sensing radius ), but defaults to an optimistic probability estimate for any that is beyond the sensing radius by using as the assumed distance. This optimistic heuristic prevents overly restrictive utility values in the face of unknown teammate positions. Note that if any is , then is considered past the threshold . If , decides to transmit .

| Method | Attempted | Median | Successful | Median | Median |

|---|---|---|---|---|---|

| () | Decrease | () | Decrease | RMSE Increase | |

| Action | (66.6, 27.95) | 42.5% | (38.2, 23.85) | 24.32% | -1.84% |

| Always | (120.0, 0.0) | – | (44.93, 16.78) | – | – |

| Ego-reward | (76.2, 28.33) | 27.5% | (34.4, 20.27) | 32.2% | 5.96% |

| Never | (0.0, 0.0) | 100.0% | (0.0, 0.0) | 100.0 | 73.98% |

| Reward | (68.2, 32.1) | 52.5% | (36.0, 22.77) | 35.96% | 16.03% |

IV Experiments and Discussion

We now present experimental results for the methods described in Section III. We test performance on 3 sets of quantiles : Quartiles (0.25, 0.5, 0.75), Median-Extrema (0.5, 0.9, 0.99), and Extrema (0.9, 0.95, 0.99). For each combination of parameters, we run 5 seeds, resulting in 60 total instances. We fix the following parameters: ; budget ( steps total); initial spread ; ; communication radius ; thresholds and . is used for Reward and Action, while is used for Ego-reward. We selected these experimentally by observing the received values during testing and setting to the approximate 25th percentile of observed rewards. This can be interpreted as only messages that are in the top 75% of utility being worth the cost of sending to the network, while those in the lower quarter are not.

The robots operate in simulation using a real-world dataset collected with a hyperspectral camera mounted on a drone from a lake in California. The environment is roughly meters, discretized to locations. Each set of measurements is an image of pixel intensities for the 400nm channel of the hyperspectral image, normalized to . To each measurement, we add zero-mean Gaussian noise with a standard deviation .

IV-A Utility Methods Comparison

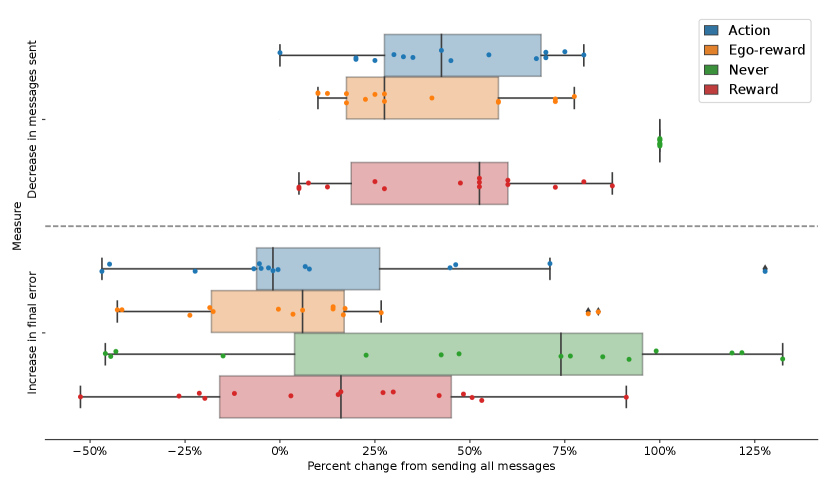

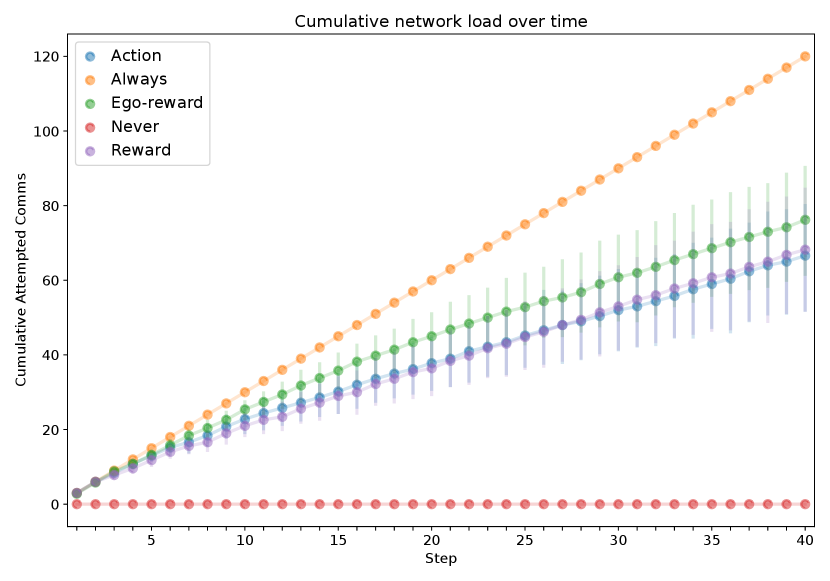

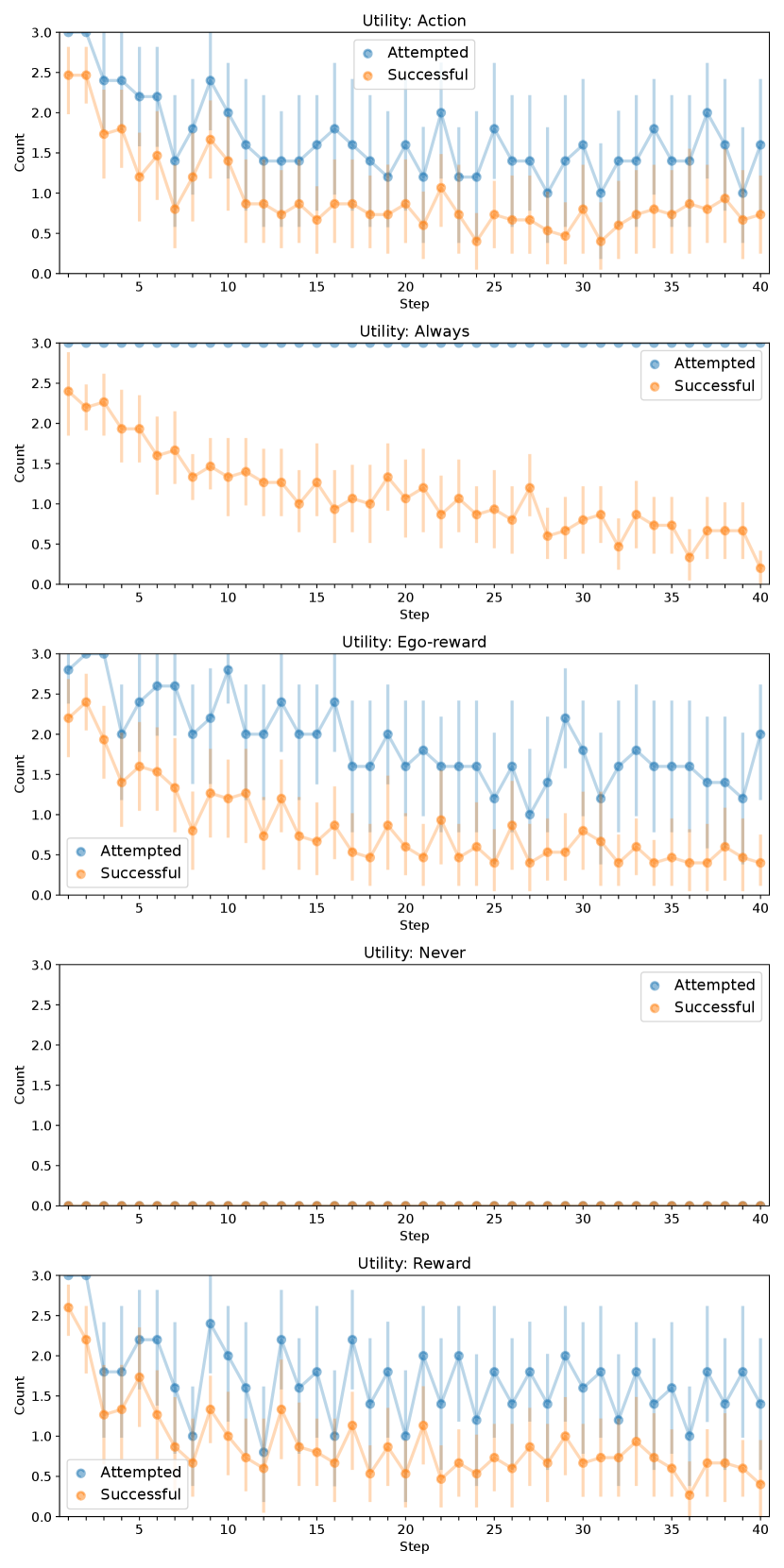

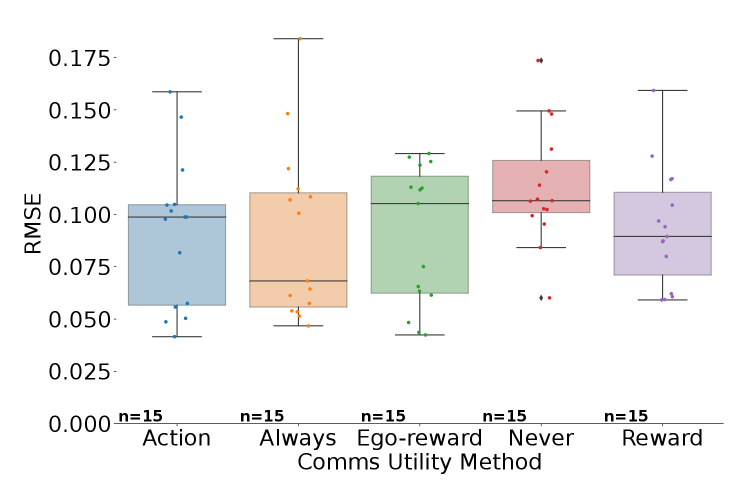

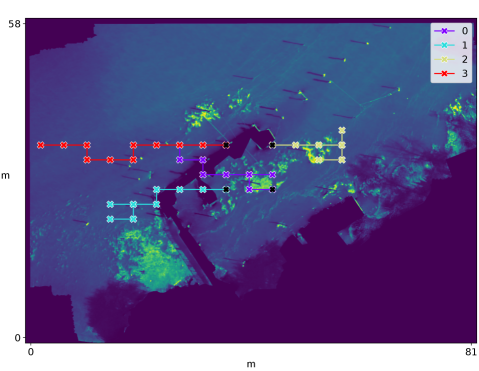

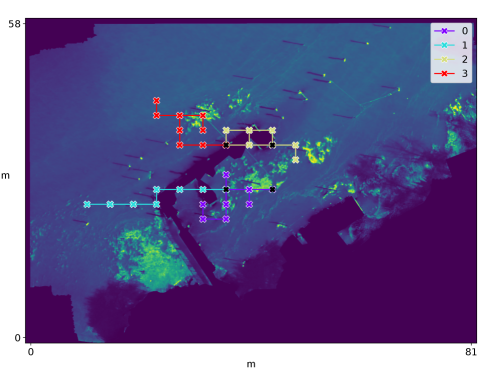

We investigate the effectiveness of the different methods on performance in terms of both the network load as well as the final error (RMSE) between the estimated quantile values and . Units are pixel intensity normalized to the range . Table I displays numerical results, including a comparison of each method to Always in terms of the median percent change of each reported metric. These results are shown graphically in Figure 2, illustrating the tradeoff between network load and task performance. Figure 3 shows the cumulative network load as measured by the attempted transmissions over time, while Figure 4 shows the number of attempted (blue) and successful (orange) message transmissions plotted over time. Figure 5 shows boxplots of the final RMSE for different utility methods. Figure 6 illustrates example paths taken superimposed on the hyperspectral image of the lake.

In Figure 4, we observe that across all methods, successful transmissions generally decrease over time due to robots spreading out. Comparing Never to the other methods in Figure 5, we can confirm our previous findings that inter-robot communication improves performance. However, we are mainly interested in the effect of decreasing network load.

When we analyze results in Table I and Figure 2, we see that severe reductions in communication do not necessarily result in severe decreases in performance. We see that Action results in the best performance: Compared to Always, there is more than decrease in network load as measured by attempted message transmissions, but this translates to less than decrease in actual (successful) message transmissions. Most notably, in terms of final error, there is a decrease in mean RMSE, indicating that performance, on average, improves slightly even with restricted communication.

Other reward-based methods, Reward and Ego-reward, also perform favorably. Figures 4 and 3 suggest that all three proposed methods have message transmission rates that generally decrease over time. We speculate that this may be due to two reasons. First, as robots explore more of the environment and receive more information from the others, their beliefs of their teammates will grow such that increases, leading to more accurate environment models and thus less value in receiving an additional set of measurements. Second, with more exploration time, robots are more likely to be situated at further distances from each other. With distance, the expected utility will decrease according to the transmission probability, and even messages believed to be useful may not be deemed worthy because of their unlikelihood of successful delivery. We note, however, that the optimistic assumption in Equation 13 will prevent a highly useful message from not being sent due to distance.

As shown qualitatively in Figures 4 and 3 and quantitatively in Table I, Action, Reward, and Ego-reward all have lower network load than Always, but their relative loads vary as do their final estimation error. In particular, Reward results in the highest decrease in network load (excluding Never) at , but also the largest increase in final error of more than . Thus, such a metric may be valuable when network bandwidth is severely limited. Ego-reward, on the other hand, has the smallest decrease in network load at , but a relatively small increase in error at less than . Ego-reward has the benefit of not requiring any onboard modeling of teammate beliefs; thus, this method could be powerful when onboard compute is limited. Action is the overall best performer, with a decrease in network load of and a decrease in final error of . We suspect Action performs better because it directly considers the impact of a message on future behavior, leading to more informed and targeted measurement acquisition at the next step. We note that the comparison of relative network loads is more valuable here than the absolute value, as the threshold values used in Equation 12 are tunable parameters that should reflect actual network constraints in a real deployment.

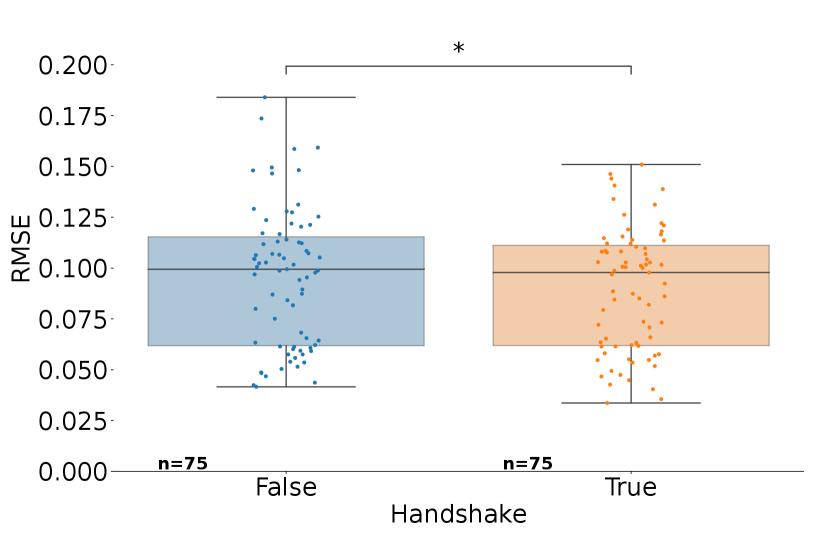

IV-B Oracle Handshaking

We implement a modification to the communication protocol where we assume an oracle handshaking routine, which gives an accurate confirmation handshake from each indicating whether the message was successfully received; then only updates when successful. Figure 7 shows the final RMSE comparing the two; we see that there is a significant improvement with oracle handshaking (Wilcoxon signed-rank test ). Though practically impossible, this suggests that implementing such a (probabilistic) routine in the network could improve performance further; however, the resulting burden of these messages must also be taken into account.

V Conclusion

We presented the first study on restricted communication in the context of quantile estimation for environmental analysis. We show that restricting message transmission based on whether the actions that the sender believes other robots will take at the next state would change with the message results in a decrease in network load while also decreasing quantile estimation error by , when compared to transmitting every message possible. This indicates that targeted communication during quantile estimation can have real benefits to real-world multirobot deployments when robots and their networks are resource-constrained.

In addition to receipt handshaking, there are several interesting directions of future work. We believe allowing the sender to choose the most valuable measurements from its collected data rather than restricting it to the most recent measurements may improve performance. However, this would lead to challenges in scalability as the environment is explored, and could be addressed by applying a rolling window over the previous measurements to mitigate this. Further, we are interested in methods for better approximating the models that a robot maintains for each of its teammates, which could be achieved by augmenting message headers with metadata such as that robot’s current dataset size or current quantile estimates.

References

- [1] I. M. Rayas Fernández, C. E. Denniston, and G. S. Sukhatme, “A study on multirobot quantile estimation in natural environments,” arXiv e-prints, pp. arXiv–2303, 2023.

- [2] I. M. Rayas Fernández, C. E. Denniston, D. A. Caron, and G. S. Sukhatme, “Informative path planning to estimate quantiles for environmental analysis,” IEEE Robotics and Automation Letters, vol. 7, no. 4, pp. 10 280–10 287, 2022.

- [3] P. Padhy, R. K. Dash, K. Martinez, and N. R. Jennings, “A utility-based adaptive sensing and multihop communication protocol for wireless sensor networks,” ACM Transactions on Sensor Networks (TOSN), vol. 6, no. 3, pp. 1–39, 2010.

- [4] P. Lieser, N. Richerzhagen, S. Luser, B. Richerzhagen, and R. Steinmetz, “Understanding the impact of message prioritization in post-disaster ad hoc networks,” in 2019 International Conference on Networked Systems (NetSys). IEEE, 2019, pp. 1–8.

- [5] Z. Ma, Y. Luo, and J. Pan, “Learning selective communication for multi-agent path finding,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 1455–1462, 2021.

- [6] S. Q. Zhang, Q. Zhang, and J. Lin, “Efficient communication in multi-agent reinforcement learning via variance based control,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- [7] M. Barciś, A. Barciś, N. Tsiogkas, and H. Hellwagner, “Information distribution in multi-robot systems: Generic, utility-aware optimization middleware,” Frontiers in Robotics and AI, vol. 8, p. 685105, 2021.

- [8] J. Anderson and G. A. Hollinger, “Communication planning for cooperative terrain-based underwater localization,” Sensors, vol. 21, no. 5, p. 1675, 2021.

- [9] G. Best and G. A. Hollinger, “Decentralised self-organising maps for multi-robot information gathering,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 4790–4797.

- [10] A. Simonetto, L. Kester, and G. Leus, “Distributed time-varying stochastic optimization and utility-based communication,” arXiv preprint arXiv:1408.5294, 2014.

- [11] R. J. Marcotte, X. Wang, D. Mehta, and E. Olson, “Optimizing multi-robot communication under bandwidth constraints,” Autonomous Robots, vol. 44, no. 1, pp. 43–55, 2020.

- [12] V. Unhelkar and J. Shah, “Contact: Deciding to communicate during time-critical collaborative tasks in unknown, deterministic domains,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 30, no. 1, 2016.

- [13] P. Velagapudi, O. Prokopyev, K. Sycara, and P. Scerri, “Maintaining shared belief in a large multiagent team,” in 2007 10th International Conference on Information Fusion. IEEE, 2007, pp. 1–8.