Reference-Invariant Inverse Covariance Estimation with Application to Microbial Network Recovery

Abstract

The interactions between microbial taxa in microbiome data has been under great research interest in the science community. In particular, several methods such as SPIEC-EASI, gCoda, and CD-trace have been proposed to model the conditional dependency between microbial taxa, in order to eliminate the detection of spurious correlations. However, all those methods are built upon the central log-ratio (clr) transformation, which results in a degenerate covariance matrix and thus an undefined inverse covariance matrix as the estimation of the underlying network. Jiang et al. (2021) and Tian et al. (2022) proposed bias-corrected graphical lasso and compositional graphical lasso based on the additive log-ratio (alr) transformation, which first selects a reference taxon and then computes the log ratios of the abundances of all the other taxa with respect to that of the reference. One concern of the alr transformation would be the invariance of the estimated network with respect to the choice of reference. In this paper, we first establish the reference-invariance property of a subnetwork of interest based on the alr transformed data. Then, we propose a reference-invariant version of the compositional graphical lasso by modifying the penalty in its objective function, penalizing only the invariant subnetwork. We validate the reference-invariance property of the proposed method under a variety of simulation scenarios as well as through the application to an oceanic microbiome data set.

1 Introduction

Microbiome, which is the collection of micro-organisms in an ecological system, is of great research interest in the science community and has been shown to play an important role in influencing its host or living environment. For instance, it is found that intervening the gut microbiota of African turquoise killifish could result in delay of their aging process (Smith et al., 2017). The advancement of the high-throughput sequencing technologies such as 16S rRNA profiling that replicates a specific sequence of marker genes which is counted and serves as the proxy of the abundance of the Operational Taxonomic Units (OTU’s, the surrogate of bacteria species) in a sample, has enabled researchers to analyze the microbial compositions in uncultivated samples.

One common goal in microbiome data analysis is to understand how microbes interact with each other. However, the nature of microbiome data determined by the technicalities of the sequencing procedures has imposed various challenges in recovering the microbial interactions. Firstly, the data is composed of discrete counts. Secondly, microbiome data entangles with the “compositionality”, which is a technicality imposed by the sequencing procedures. For instance, in 16S rRNA profiling, the “sequencing depth”, i.e. the total count of sequences in a sample, is pre-determined on the sequencing instrument, and is usually not on the same scale from sample to sample. This implies that the counts for each OTU in a sample carry only the information about their relative abundances instead of their absolute abundances. Thirdly, the microbiome data possesses “high-dimensionality” in nature. With the resolution at the OTU level, it is likely that the number of OTU’s is far more than the number of samples in a biological experiment.

After one obtains the abundance data for the microbial species, marginal correlation analysis could be used to infer the interactions among microbes (Faust and Raes, 2012). Over the years, several methods have been developed to address the compositionality issue in the construction of correlation networks for microbiome data, such as SparCC (Friedman and Alm, 2012) , CCLasso (Fang et al., 2015), and REBECCA (Ban et al., 2015). All of those methods aimed to construct a covariance (correlation) matrix or network of the unknown absolute abundances (the positive, unconstrained true abundance of taxa). The high-dimensionality in microbiome data is tackled by imposing a sparsity constraint in the above three methods, with a correlation threshold for SparCC and an -norm penalty for CCLasso and REBECCA.

All the above methods are built upon the marginal correlations between two microbial taxa, and they could lead to spurious correlations that are caused by confounding factors such as other taxa in the same community. Alternatively, interactions among taxa can be modeled through their conditional dependencies given the other taxa, which can eliminate the detection of spurious correlations. SPIEC-EASI was probably the first method that was proposed to estimate sparse microbial network based on conditional dependency (Kurtz et al., 2015). It first performs a central log-ratio (clr) transformation on the observed counts (Aitchison, 1986), and then apply graphical lasso (Yuan and Lin, 2007; Banerjee et al., 2008; Friedman et al., 2008) to find the inverse covariance matrix of the transformed data. More recently, gCoda and CD-trace were developed to improve SPIEC-EASI by accounting for the compositionality property of microbiome data (Fang et al., 2017; Yuan et al., 2019), both of which have been shown to possess better performance in terms of recovering the sparse microbial network than SPIEC-EASI.

It is worth noting that SPIEC-EASI, gCoda, and CD-trace are all built upon the clr transformation of the observed counts. Meanwhile, Jiang et al. (2021) and Tian et al. (2022) proposed bias-corrected graphical lasso and compositional graphical lasso based on the additive log-ratio (alr) transformed data. In alr transformation, one needs to select a reference taxon and compute the log relative abundance of all other taxa with respect to the reference. One of the major concerns for the alr transformation is the robustness or invariance of the proposed method with respect to the choice of the reference taxon, which is not well studied in the literature.

In this paper, we first establish the reference-invariance property of estimating the sparse microbial network based on the alr transformed data. It shows that a submatrix of the inverse covariance matrix that correspond to the non-candidate-of-reference taxa is invariant with respect to the choice of the reference. Then, we propose a reference-invariant version of the compositional graphical lasso by modifying the penalty in its objective function, which only penalizes the invariant submatrix mentioned above. Additionally, we illustrate the reference-invariance property of the proposed method under a variety of simulation scenarios and also demonstrate its applicability and advantages by applying it to an oceanic microbiome data set.

2 Methodology

2.1 Reference-Invariance Property

Let denote a vector of compositional probabilities satisfying that . The additive log-ratio (alr) transformation picks an entry of this vector as the reference and transforms the compositional vector using log ratios of each entry to the reference. Without loss of generality, suppose we pick the last entry as the reference, then the alr transformed vector becomes

The transformed vector is often assumed to follow a multivariate continuous distribution with a mean vector and a covariance matrix . For example, . Denote further the inverse covariance matrix .

Similarly, if we pick another entry as the reference, we can define another alr-transformed vector. For simplicity of illustration, suppose we choose the second last entry to be the reference and consider the following alr transformation

where the subscript denotes the “permuted” version of . Similarly, define the mean vector of by , the covariance matrix by , and the inverse covariance matrix by .

A simple derivation implies that is a linear transformation of as , where

with denoting the identity matrix, and and denoting the column vectors with all ’s and all ’s, respectively. It follows that , , and . It is also worth noting that is an involutory matrix, i.e., .

The following theorem states the reference-invariance property of the inverse covariance matrix under the alr transformation.

Theorem 1.

, i.e. the upper-left sub-matrix of the inverse covariance matrix of the alr transformed vector is invariant with respect to the choices of the -th entry or the -th entry as the reference.

Theorem 1 regards the reference-invariance property of the true value of the inverse covariance matrix . It can also be extended to a class of estimators of . Suppose we have i.i.d. observations of the compositional vectors , and consequently, their alr transformed counterparts . Then, we can construct an estimator of , denoted by , based on the i.i.d. observations . Furthermore, we can construct an estimator of , denoted by , by taking its inverse or generalized inverse. The following corollary presents the reference-invariance property for a class of such estimators.

Corollary 1.

Suppose and both and are invertible. Let and be their inverse matrices. Then, , i.e. the upper-left sub-matrix of the estimated inverse covariance matrix of the alr transformed vector is invariant with respect to the choices of the -entry or the -th entry as the reference.

The above results imply an important property for the additive log-ratio transformation in the compositional data analysis. It can be extended to a more general situation as follows. In general, suppose we have selected a set of entries as “candidate references” in a compositional vector and write . Then, for any alr transformed vector based on a reference in the set of candidate references , the upper-left sub-matrix of the (estimated) inverse covariance matrix of is invariant with respect to the choice of the reference.

In the following subsections, we will incorporate the reference-invariance property into the estimation of a sparse inverse covariance matrix for compositional count data, such as the OTU abundance data in microbiome research.

2.2 Logistic Normal Multinomial Model

Consider an OTU abundance data set with independent samples, each of which composes observed counts of taxa, denoted by for the -th sample, . Due to the compositional property of the data, the sum of all counts for each sample is a fixed number, denoted by . Naturally, a multinomial distribution is imposed on the observed counts as

| (1) |

where are the multinomial probabilities with .

In addition, we choose one taxon, without loss of generality, the -th taxon as the reference and then apply the alr transformation (Aitchison, 1986) on the multinomial probabilities as follows

| (2) |

Further assume that ’s follow an i.i.d. multivariate normal distribution

| (3) |

where is the mean, is the covariance matrix, and is the inverse covariance matrix. The above model in (1)–(3) is called a logistic normal multinomial model and has been applied to analyze the microbiome abundance data (Xia et al., 2013).

Tian et al. (2022) proposed a method called compositional graphical lasso that aims to find a sparse estimator of the inverse covariance matrix , in which the following objective function is minimized

| (4) |

where and . The above objective function has two parts: The first term in (4) is the negative log-likelihood of the multinomial distribution in (1) and the remaining terms are the regular objective function of graphical lasso for the multivariate normal distribution in (3) regarding as known quantities.

2.3 Reference-Invariant Objective Function

Similar to 2.1, if we choose another taxon, for simplicity of illustration, the -th taxon as the reference, then the alr transformation in (2) becomes

As in Sections 2.1, . Therefore, , , where , , and . The reference-invariance property in 2.1 implies that .

The different choice of the reference also leads to a different objective function for the compositional graphical lasso method (Comp-gLASSO) as follows

| (5) |

where and . Comparing (4) and (5), their first terms are the same as they are both equal to the negative log-likelihood of the multinomial distribution: . In addition, from Aitchison (1986), and as known properties of the alr transformation. However, the penalties in (4) and (5) are different because is not necessarily equal to . The reference-invariance property only implies that .

Motivated by the reference-invariance property, we can impose the penalties only on the invariant entries of instead of all entries of as in (4), which leads to

| (6) |

With the previous arguments, we showed that is reference-invariant; in other words, the objective function stays the same regardless of whether the -th or the -th taxa is selected as the reference. This is summarized in the following theorem.

Theorem 2.

If for , , and , then .

We call in (6) the reference-invariant compositional graphical lasso objective function. To obtain a sparse estimator of , we minimize the objective function with respect to , , and . We call this estimation approach the reference-invariant compositional graphical lasso (Inv-Comp-gLASSO) method.

In general, suppose we have selected a set of taxa as “candidate references” and write . Then, the reference-invariant objective function becomes

| (7) |

In other words, (7) is invariant regardless of which reference is selected in the set of candidate references, and so is the invariant part of its minimizer.

It is noteworthy that the trick we played in defining the reference-invariant version of Comp-gLASSO is to revise the penalty term from the regular lasso penalty on the whole inverse covariance matrix to that only on the invariant part of the inverse covariance matrix. Using the same trick, we can define the reference-invariant version of other methods such as reference-invariant graphical lasso (Inv-gLASSO). The objective function of Inv-gLASSO is defined as follows when are observed instead of :

| (8) |

The objective function (7) includes naturally three sets of parameters , , and , which motivates us to apply a block coordinate descent algorithm. A block coordinate descent algorithm minimizes the objective function iteratively for each set of parameters given the other sets. Given the initial values , , and , a block coordinate algorithm repeats the following steps cyclically for iteration until the algorithm converges.

Except for the mild modification on the objective function, the details of the algorithm is essentially the same as the one described in Tian et al. (2022).

3 Simulation Study

3.1 Settings

To illustrate the reference-invariance property under the aforementioned framework, we conduct a simulation study and evaluated the performance of Inv-Comp-gLASSO as well as Inv-gLASSO.

Following Tian et al. (2022), we generate three types of inverse covariance matrices as follows:

-

1.

Chain: , if , and if . Every node is connected to the adjacent node(s), and therefore the degree is for all but two nodes.

-

2.

Random: with probability for . Every two nodes are connected with a fixed probability, and the expected degree is the same for all nodes.

-

3.

Hub: Nodes are randomly partitioned into groups, and there’s one “hub node” in each group. For the other nodes in the group, they are only connected to the hub node but not each other. There’s no connection among groups. The degree of connectedness is much higher for the hub nodes , and is for the rest of the nodes.

In the simulations, we also vary two other factors that play a crucial role in the performances of the methods:

-

1.

Sequencing depth. ’s are simulated from or , denoted by “low” and “high” sequencing depth.

-

2.

Compositional variation. For each aforementioned inverse covariance matrix (“low” compositional variation), we also divide each of them by a factor of to obtain another set of inverse covariance matrices, i.e., /5 (“high” compositional variation).

The data are simulated from the logistic normal multinomial distribution in (1)–(3). In detail, are first generated independently for ; then, the softmax transformation (the inverse of the alr transformation) was applied to get the multinomial probabilities with the -th entry serving as the true reference; last, the multinomial random variables were simulated from , for . We set and throughout the simulations.

The simulation results are based on 100 replicates of the simulated data. Both Inv-Comp-gLASSO and Inv-gLASSO are applied with two choices of reference, the -th entry serving as the false reference and the -th entry serving as the true reference. and only the reference-invariant sub-network is used in the evaluations. For Inv-gLASSO, we estimate with , and performe the alr transformation to get the estimates of , which are denoted by . We then apply Inv-gLASSO to directly to find the inverse covariance matrix , which also serves as the starting value for Inv-Comp-gLASSO. For both methods, we implement them with a common sequence of tuning parameters of .

We empirically validate the invariance property of Inv-Comp-gLASSO and Inv-gLASSO by comparing the estimators of the two sub-networks resulted from choosing the true and false reference separately in each method, which have been shown to be theoretically invariant in Section 2. The comparison is assessed under four criteria as follows.

-

1.

Normalized Manhattan Similarity

For two matrices and , we define the normalized Manhattan similarity (NMS) as

where represents the entrywise norm of a matrix. Note that due to the non-negativity of norms and the triangle inequality.

-

2.

Jaccard Index

For two networks with the same nodes, denote their set of edges by and . Then the Jaccard Index (Jaccard, 1901) is defined as follows:Obviously, it also holds that .

-

3.

Normalized Hamming Similarity

In the context of network comparison, the normalized Hamming similarity for two adjacency matrices and with the same nodes are defined as follows (Hamming, 1950)where denotes the entrywise norm of a matrix. Since there are at most ’s in an adjacency matrix with nodes, this metric is also between and .

-

4.

ROC Curve

The ROC curves on which true positive rate (TPR) and false positive rate (FPR) are plotted and also compared between the choices of true and false reference.

3.2 Results

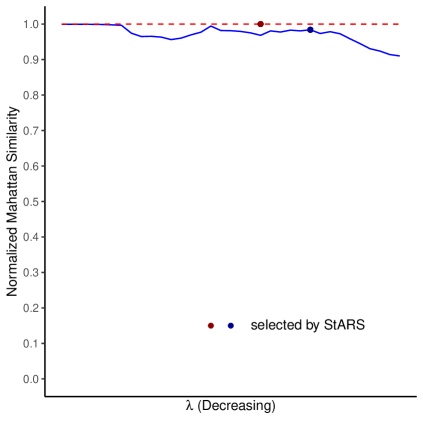

3.2.1 Normalized Manhattan Similarity

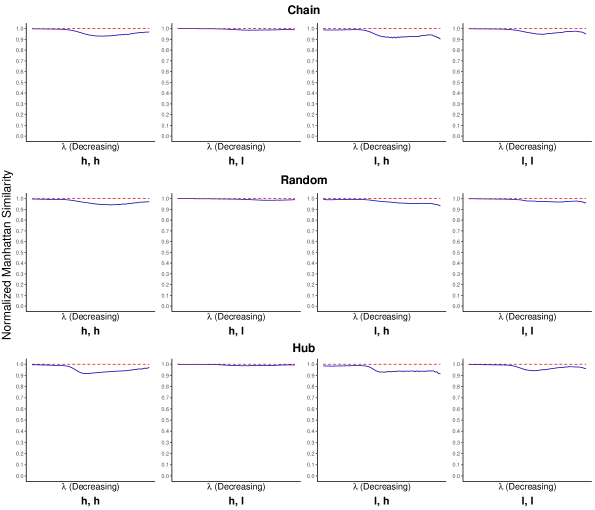

The normalized Manhattan similarity serves as a direct measure of the similarity between the two estimated inverse covariance matrices with different choices of the reference. On a sequence of tuning parameter ’s, we calculated the normalized Manhattan similarity between the two estimated inverse covariance matrices with the true and false references separately. Figure 1 shows the average normalized Manhattan similarity over 100 replicates along with standard error bars.

We can see from Figure 1 that, the normalized Manhattan similarity for Inv-gLASSO stays close to 1 in all settings, throughout the whole sequence of tuning parameters. On the other hand, there are some fluctuations in the same metric from Inv-Comp-gLASSO, although most values stay higher than 0.9. Empirically, the two estimated matrices are numerically identical for Inv-gLASSO and close for Inv-Comp-gLASSO. A potential reason why the invariance of Inv-gLASSO is numerically more evident than that of Inv-Comp-gLASSO is that Inv-gLASSO is a convex optimization while Inv-Comp-gLASSO is not necessarily convex (Tian et al., 2022). With different starting points (as we choose different references), Inv-Comp-gLASSO might result in different solutions as the algorithm is only guaranteed to converge to a stationary point. We refer to Tian et al. (2022) for more detailed discussion about the convexity and the convergence of the algorithm.

In addition, it is consistently observed that the normalized Manhattan similarity for Inv-Comp-gLASSO starts close to 1 when is very large and gradually decreases with some fluctuations as decreases. This is because the Inv-Comp-gLASSO objective function in (6) is solved by an iterative algorithm between graphical lasso to estimate and Newton-Raphson to estimate , which can lead to more numerical errors depending on the number of iterations. Furthermore, the algorithm is implemented with warm start for a sequence of decreasing ’s, i.e., the solution for the previous value is used as the starting point for the current value. With the accumulation of numerical errors, the numerical difference between the two estimated matrices becomes larger.

Among the simulation settings, we find the invariance property for Inv-Comp-gLASSO is most evidently supported by the numerical results in the “high sequencing depth, low compositional variation” setting, regardless of the network types. The normalized Manhattan similarity is very close to 1 for Inv-Comp-gLASSO throughout the sequence of tuning parameters. This is because the compositional probabilities ’s and thus the ’s are estimated accurately with high sequencing depth and low compositional variation in the first iteration of the Inv-Comp-gLASSO algorithm, which implies fewer iterations for the algorithm to converge and less numeric error accumulated during this process. On the other hand, the normalized Manhattan similarity is the lowest in the “low sequencing depth, high compositional variation” setting. It is due to a similar reason that it takes more iterations for the Inv-Comp-gLASSO algorithm to converge, accumulating more numerical errors. However, it is noteworthy that this is exactly the setting in which Inv-Comp-gLASSO has the most advantage over Inv-gLASSO in recovering the true network (see Section 3.2.3 for their ROC curves).

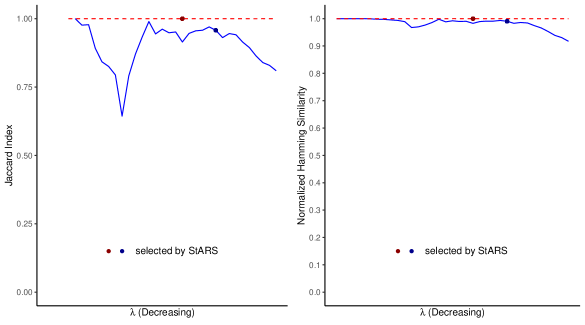

3.2.2 Jaccard Index and Normalized Hamming Similarity

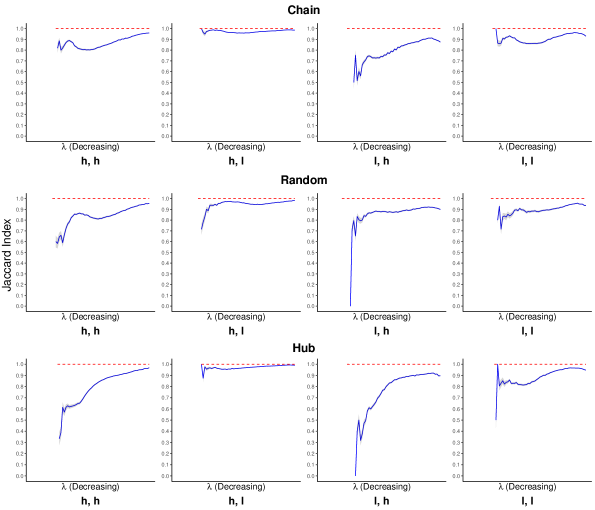

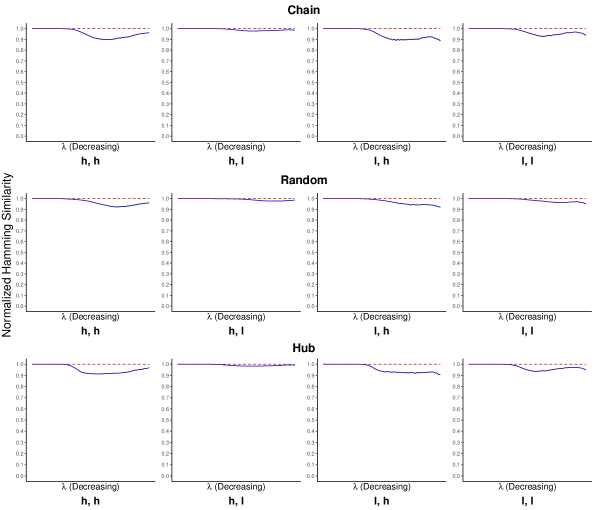

Compared to normalized Manhattan similarity that measures directly the similarity between two inverse covariane matrices, both Jaccard index and normalized Hamming similarity measure the similarity between two networks represented by the matrices because they only compared the adjacency matrices or the edges of the two networks. Again, on a sequence of tuning parameter ’s, we computed the Jaccard index and the normalized Hamming similarity between the two networks with true and false references separately. Figures 2 and 3 plot the average Jaccard index and the normalized Hamming similarity over 100 replicates along with standard error bars.

The results of normalized Hamming similarity in Figure 3 have a fairly similar pattern to those of normalized Manhattan similarity in Figure 1 and thus can be similarly interpreted. We only focus on the results of Jaccard index in Figure 2 here. The Jaccard index stays close to 1 for Inv-gLASSO, implying the identity between the two networks. Although the results of the Jaccard index for Inv-Comp-gLASSO look quite different from the other measures at the first glance, it actually implies a similar conclusion. First, we notice that there is no Jaccard index for the first few tuning parameters that are large enough. This is because the resultant network is empty with either true or false reference. Although two empty networks agree with each other perfectly, the Jaccard index is not well defined. Then, as Inv-Comp-gLASSO starts to pick up edges when decreases, the Jaccard index is quite low in some settings, suggesting that the two networks are dissimilar. However, this is due to the fact the Jaccard index is a much more “strict” similarity measure than the Hamming similarity. For example, for two networks with 100 possible total edges, if both networks only have one but a different edge, then the Jaccard index is while the normalized Hamming similarity is . Finally, as the networks become denser, the Jaccard index increases quickly and stabilizes at a quite high value in most settings.

It is also notable that both the Jaccard index and the normalized Hamming similarity are relatively high in the “high sequencing depth, low compositional variation” setting and relatively low in the “low sequencing depth, high compositional variation” setting, which is consistent with the finding for the normalized Manhattan similarity.

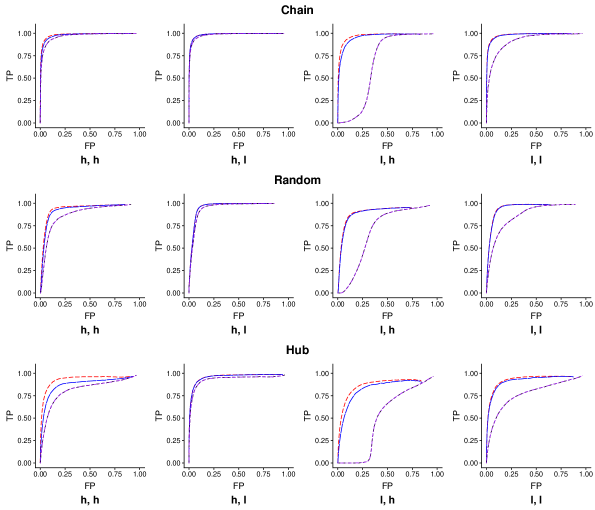

3.2.3 ROC Curves

An ROC curve is plotted from the average of true positive rates and the average of false positive rates over 100 replicates. An ROC curve can be regarded as an indirect measure of the invariance (two networks possessing similar ROC curves is a necessary but not sufficient condition for the two networks to be similar). However, it is crucial to evaluate the algorithms with this criterion, since it answers the question: “Does the performance of the algorithm depends on the choice of reference?”

We could see that the ROC curves from Inv-Comp-gLASSO, regardless of the choice of the reference, dominate the ones from Inv-gLASSO in all settings. The two ROC curves from Inv-gLASSO lay perfectly on top of each other, while the curves from Inv-Comp-gLASSO are also fairly close to each other. These empirical results validate the theoretical reference-invariance property for both methods. In addition, Inv-Comp-gLASSO has the most obvious advantage over Inv-gLASSO in the “low sequencing depth, high compositional variation” setting and they perform almost identically in the “low sequencing depth, high compositional variation” setting. Although the similarity measures are lower in the “most favorable” setting for Inv-Comp-gLASSO (see Sections 3.2.1 and 3.2.2), the ROC curves of the two networks from the method do not deviate too much from each other in this setting.

4 Real Data

To further validate the theoretical reference-invariance properties of Inv-Comp-gLASSO and Inv-gLASSO, we applied them to a dataset from the TARA Ocean project, in which the Tara Oceans consortium sampled both plankton and environmental data in 210 sites from the world oceans. The data collected was later analyzed using sequencing and imaging techniques. We downloaded the taxonomic data and the literature interactions from the TARA Ocean Project data repository (https://doi.pangaea.de/10.1594/PANGAEA.843018). As part of the TARA Oceans project, Lima-Mendez et al. (2015) investigated the impact of both biotic and abiotic interactions in oceanic ecosystem. In this article, a literature-curated list of genus-level marine eukaryotic plankton interactions was generated by a panel of experts.

Similar to Tian et al. (2022), we focused the analysis on genus level and only kept the 81 genus involved in the literature-reported interactions. For computational simplicity, we removed the samples with too small reads (). As a result, it leaves with 324 samples in the final preprocessed data. From the genera that were not reported in the literature, we chose two of them, Acrosphaera and Collosphaera, with the largest average relative abundances as the references. We then applied both Inv-Comp-gLASSO and Inv-gLASSO to the alr-transformed data with those two references, with a common sequence of tuning parameters. For each combination of a method and a reference, we also selected a tuning parameter that corresponds to the “asymptotically sparsistent” (the sparsest estimated network in the path that contains the true network asymptotically) network by StARS (Liu et al., 2010).

Figures 5 presents the normalized Manhattan similarity between two estimated inverse covariance matrices with the two choices of reference. Figures 6 plotted the Jaccard index and the normalized Hamming similarity between the two networks represented by the two estimated inverse covariance matrices. We can see from Figure 5 and Figure 6 that, all three similarity metrics stay steadily around for Inv-gLASSO throughout the sequence of ’s. This agrees with our observation in simulations where Inv-gLASSO produced numerically identical inverse covariance matrices.

For Inv-Comp-gLASSO, the similarity scores start around (for normalized Manhattan similarity and normalized Hamming similarity) or non-existent (for Jaccard index) when the estimated networks are empty. Then, as decreases, the estimated networks become denser, and the measures start to fluctuate and decline slightly at the end. In spite of the fluctuations, both normalized Manhattan similarity and normalized Hamming similarity stay above 0.9, while the lowest Jaccard index is about 0.64. As discussed earlier, Jaccard index is a stricter measure than normalized Hamming similarity.

Within each method, StARS picked the same tuning parameter regardless of the choice of the reference, as denoted by the red dot for Inv-gLASSO and the blue dot for Inv-Comp-gLASSO in Figures 5 and 6. In other words, the red and blue dots represent the tuning parameters corresponding to the final estimated networks selected by StARS. Again, the three similarity measures for the two final inverse covariance matrices or networks from Inv-gLASSO is almost 1, while the normalized Manhattan similarity, Jaccard index, and normalized Hamming similarity are , and for Inv-Comp-gLASSO. All these high similarity scores imply the empirical invariance for Inv-gLASSO and Inv-Comp-gLASSO. Both methods result in invariant inverse covariance matrices and thus the corresponding networks with respect to the choices of the reference genus (Acrosphaera or Collosphaera) when applied to the TARA Ocean eukaryotic dataset.

5 Discussion

In this work, we established the reference-invariance property in sparse inverse covariance matrix estimation and network construction based on the alr transformed data. Then, we proposed the reference-invariant versions of the compositional graphical lasso and graphical lasso by modifying the penalty in their respective objective functions. In addition, we validated the reference-invariance property of the proposed methods empirically by applying them to various scenarios of simulations and a real TARA Ocean eukaryotic dataset.

It is noteworthy that the reference-invariance property is a general property for estimating the inverse covariance matrix based on the alr transformed data. We proposed reference-invariant versions of compositional graphical lasso and graphical lasso based on this property, however, one may revise other existing methods for inverse covariance matrix estimation based on the alr transformed data. The trick is to revise the objective function so that it becomes invariant with respect to the choice of the reference. Subsequently, the resultant inverse covariance matrix and network are expected to be reference-invariant both theoretically and empirically, the latter of which may depend on the algorithm that is used to optimize the reference-invariant objective function.

References

- Aitchison (1986) Aitchison, J. (1986), The statistical analysis of compositional data, Monographs on statistics and applied probability, Chapman and Hall.

- Ban et al. (2015) Ban, Y., An, L., and Jiang, H. (2015), “Investigating microbial co-occurrence patterns based on metagenomic compositional data,” Bioinformatics, 31, 3322–3329.

- Banerjee et al. (2008) Banerjee, O., El Ghaoui, L., and d’Aspremont, A. (2008), “Model selection through sparse maximum likelihood estimation for multivariate Gaussian or binary data,” The Journal of Machine Learning Research, 9, 485–516.

- Fang et al. (2015) Fang, H., Huang, C., Zhao, H., and Deng, M. (2015), “CCLasso: correlation inference for compositional data through Lasso,” Bioinformatics, 31, 3172–3180.

- Fang et al. (2017) — (2017), “gCoda: conditional dependence network inference for compositional data,” Journal of Computational Biology, 24, 699–708.

- Faust and Raes (2012) Faust, K. and Raes, J. (2012), “Microbial interactions: from networks to models,” Nature Reviews Microbiology, 10, 538.

- Friedman and Alm (2012) Friedman, J. and Alm, E. J. (2012), “Inferring correlation networks from genomic survey data,” PLoS computational biology, 8, e1002687.

- Friedman et al. (2008) Friedman, J., Hastie, T., and Tibshirani, R. (2008), “Sparse inverse covariance estimation with the graphical lasso,” Biostatistics, 9, 432–441.

- Hamming (1950) Hamming, R. W. (1950), “Error detecting and error correcting codes,” The Bell system technical journal, 29, 147–160.

- Jaccard (1901) Jaccard, P. (1901), “Distribution de la flore alpine dans le bassin des Dranses et dans quelques régions voisines,” Bull Soc Vaudoise Sci Nat, 37, 241–272.

- Jiang et al. (2021) Jiang, D., Sharpton, T., and Jiang, Y. (2021), “Microbial interaction network estimation via bias-corrected graphical lasso,” Statistics in Biosciences, 13, 329–350.

- Kurtz et al. (2015) Kurtz, Z. D., Müller, C. L., Miraldi, E. R., Littman, D. R., Blaser, M. J., and Bonneau, R. A. (2015), “Sparse and compositionally robust inference of microbial ecological networks,” PLoS computational biology, 11, e1004226.

- Lima-Mendez et al. (2015) Lima-Mendez, G., Faust, K., Henry, N., Decelle, J., Colin, S., Carcillo, F., Chaffron, S., Ignacio-Espinosa, J. C., Roux, S., Vincent, F., et al. (2015), “Determinants of community structure in the global plankton interactome,” Science, 348, 1262073.

- Liu et al. (2010) Liu, H., Roeder, K., and Wasserman, L. (2010), “Stability approach to regularization selection (stars) for high dimensional graphical models,” in Advances in neural information processing systems, pp. 1432–1440.

- Smith et al. (2017) Smith, P., Willemsen, D., Popkes, M., Metge, F., Gandiwa, E., Reichard, M., and Valenzano, D. R. (2017), “Regulation of life span by the gut microbiota in the short-lived African turquoise killifish,” elife, 6, e27014.

- Tian et al. (2022) Tian, C., Jiang, D., Hammer, A., Sharpton, T., and Jiang, Y. (2022), “Compositional Graphical Lasso Resolves the Impact of Parasitic Infection on Gut Microbial Interaction Networks in a Zebrafish Model,” Under Review.

- Xia et al. (2013) Xia, F., Chen, J., Fung, W. K., and Li, H. (2013), “A logistic normal multinomial regression model for microbiome compositional data analysis,” Biometrics, 69, 1053–1063.

- Yuan et al. (2019) Yuan, H., He, S., and Deng, M. (2019), “Compositional data network analysis via lasso penalized D-trace loss,” Bioinformatics, 35, 3404–3411.

- Yuan and Lin (2007) Yuan, M. and Lin, Y. (2007), “Model selection and estimation in the Gaussian graphical model,” Biometrika, 94, 19–35.