Regularized Weighted Discrete Least Squares Approximation Using Gauss Quadrature Points

Abstract

We consider polynomial approximation over the interval by regularized weighted discrete least squares methods with or regularization, respectively. As the set of nodes we use Gauss quadrature points (which are zeros of orthogonal polynomials). The number of Gauss quadrature points is . For , with the aid of Gauss quadrature, we obtain approximation polynomials of degree in closed form without solving linear algebra or optimization problems. In fact, these approximation polynomials can be expressed in the form of the barycentric interpolation formula (Berrut & Trefethen, 2004) when an interpolation condition is satisfied. We then study the approximation quality of the regularized approximation polynomial in terms of Lebesgue constants, and the sparsity of the regularized approximation polynomial. Finally, we give numerical examples to illustrate these theoretical results and show that a well-chosen regularization parameter can lead to good performance, with or without contaminated data.

Keywords. regularized least squares approximation, Gauss quadrature, Lebesgue constants, sparsity, barycentric interpolation.

AMS subject classifications. 41A05, 65D99, 65D32, 94A99

1 Introduction

In this paper, we are interested in approximating or recovering a function (possibly noisy) by a polynomial

| (1.1) |

where is a linear space of polynomials of degree at most , and , , are normalized orthogonal polynomials (Szegö, 1939; Gautschi, 2004). As long as the basis for is given, the next step is to determine coefficients . We will consider the regularized approximation problem

| (1.2) |

and the regularized approximation problem

| (1.3) |

where is a given continuous function with values (possibly noisy) taken at distinct points over the interval ; ; is a vector of positive Gauss quadrature weights (Gautschi, 2004); is a nonnegative nondecreasing sequence, which penalizes coefficients ; and is the regularization parameter.

It is known that approximation schemes (1.2) and (1.3) are special cases of classical penalized least squares methods, see Powell (1967), Golitschek & Schumaker (1990), Gautschi (2004), Lazarov et al. (2007), Cai et al. (2009), Kim et al. (2009), An et al. (2012), Xiang & Zou (2013) and Zhou & Chen (2018). Some optimization methods or iterative algorithms are presented to find minimizers. However, we will concentrate on the aspect of constructing minimizers to problems (1.2) and (1.3) by means of orthogonal polynomials and Gauss quadrature (Kress, 1998; Gautschi, 2004, 2012; Trefethen, 2013). In this paper, Gauss quadrature will play an important role. We assume that the weight function is positive, such that

Definition 1.1

A quadrature formula

with distinct quadrature points is called a Gauss quadrature formula if it integrates all polynomials exactly, i.e., if

| (1.4) |

are called Gauss quadrature points.

Without causing confusion, we call Gauss quadrature points. Throughout this paper, we always assume that are Gauss quadrature points. It is well known (see, for example, Powell (1981), Kress (1998) and Gautschi (2012)) that Gauss quadrature points are the zeros of the orthogonal polynomial of degree . The orthogonality is with respect to the inner product

This inner product induces the standard norm

Given a continuous function defined on , sampling on generates

Let be a matrix of orthogonal polynomials evaluated at :

By subtracting the structure (1.1) of the approximation polynomial into the regularized approximation problem (1.2), the problem (1.2) transforms into the following problem

| (1.5) |

where

and the diagonal matrix is a semi-definite positive matrix.

With the same basis and weight vector as the regularized approximation problem (1.2) above, the regularized approximation problem (1.3) transforms into

| (1.6) |

Now the next step is to fix .

The goal of this paper is to construct approximation polynomials in the form of (1.1). We specify coefficients by solving problems (1.5) and (1.6) directly. This immediately yields the regularized approximation polynomial (2.3) and the regularized approximation polynomial (2.7).

As is known to all, Gauss quadrature goes hand in hand with the theory and computation of orthogonal polynomials, see Gautschi (2004) and Trefethen (2013) and references therein. Orthogonal polynomials occur in a wide range of applications and act as a remarkable role in pure and applied mathematics. Chebyshev polynomials and Legendre polynomials are two excellent factors in the family of orthogonal polynomials. Many polynomial approximation textbooks introduce fruitful results of Chebyshev and Legendre polynomials (Szegö, 1939; Powell, 1981; Gautschi, 2012; Trefethen, 2013). In particular, we take these two orthogonal polynomials (Chebyshev and Legendre) as representative examples in the choice of basis and Gauss quadrature points.

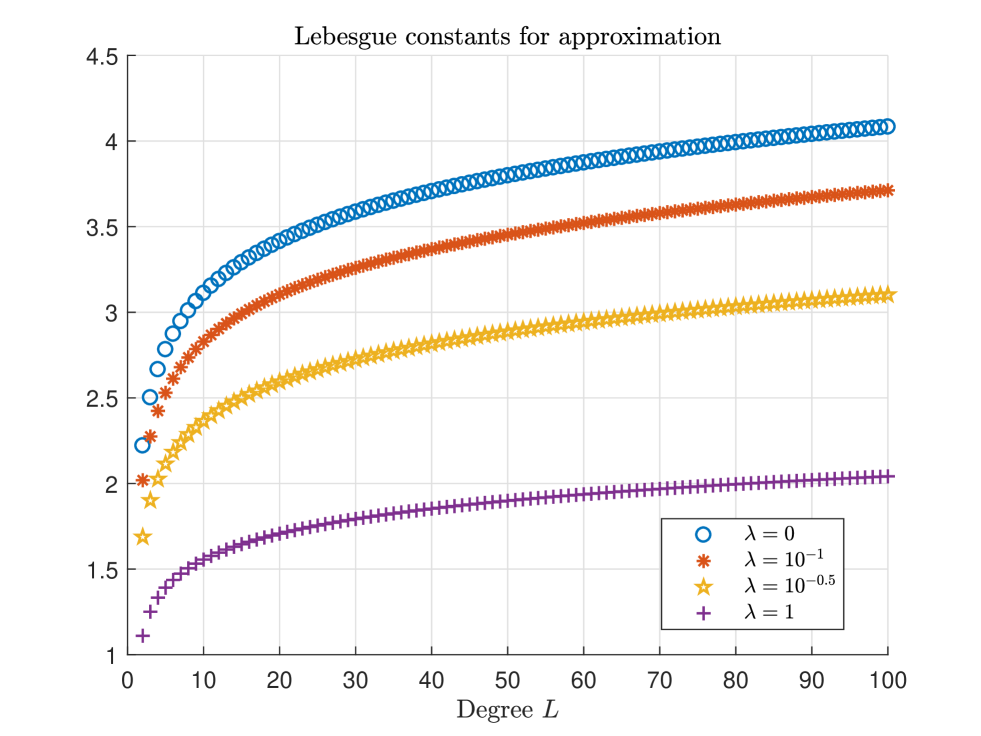

In the next section, we introduce some necessary notations and terminologies. The construction of the and regularized minimizers to problems (1.2) and (1.3) are presented, respectively. The crucial fact is that both and could be presented in the barycentric form under an interpolation condition, see the regularized barycentric interpolation formula (2.13) and the regularized barycentric interpolation formula (2.17). It is worth noting that the Wang-Xiang formula (Wang & Xiang, 2012) is a special case of when we set Legendre polynomials as the basis, see Section 2.3. In Section 3, we study the quality of the approximation polynomial in terms of Lebesgue constants. We illustrate that Lebesgue constants decay when the regularization parameter increases. Section 4 analyses the regularized approximation problem (1.3) in the view of sparsity. In particular, we derive a sharp upper bound of nonzero entries in the solution to the regularized approximation problem (1.6). We consider, in Section 5, numerical experiments containing approximation with exact and contaminated data.

All numerical results***All codes are available at

https://github.com/HaoNingWu/Regularized-Least-Squares-Approximation-using-Orthogonal-Polynomials. in this paper are carried out by using MATLAB R2017A on a desktop (8.00 GB RAM, Intel(R) Processor 5Y70 at 1.10 GHz and 1.30 GHz) with the Windows 10 operating system.

2 Regularized weighted least squares approximation

2.1 regularized approximation problem

First, we consider solving the -regularized weighted discrete least squares approximation problem (the -regularized approximation problem) (1.2). The problem can be transformed into a convex and differential optimization problem (1.5).

Taking the first derivative of the objective function in the regularized approximation problem (1.5) in the matrix form with respect to leads to the first order condition

| (2.1) |

One may solve the first order condition (2.1) using methods of numerical linear algebra; however, in this paper we concentrate on how to obtain the solution to the first order condition (2.1) in a closed form.

Lemma 2.1

Let be a class of normalized orthogonal polynomials with the weight function over , and be the set of zeros of . Assume and is a vector of weights satisfying the Gauss quadrature formula (1.4). Then

where is the identity matrix.

Proof. By the structure of the matrix and the definition of Gauss quadrature formula (see (1.4)), we obtain

where is the Kronecker delta. The middle equality holds from , and the last equality holds because of the orthonormality of .

Theorem 2.1

Proof.

This is immediately obtained from the first order condition (2.1) of the problem (1.5) and Lemma 2.1.

In the limiting case , we obtain the following simple but significant result.

Theorem 2.2

Proof. Since the interval is a compact set, and since the sums over in (2.2) and (2.4) are finite, to prove the theorem, it is sufficient to prove that

| (2.5) |

Since , the result follows from that, the sequence of Gauss quadrature formulae is convergent (Kress, 1998, Chapter 9). Hence (2.5) holds, proving the whole theorem.

2.2 regularized approximation problem

Now we discuss the regularized approximation problem (1.3), but we still convert it into solving the problem (1.6) in a matrix form. To solve this problem, we first define the soft threshold operator .

Definition 2.1 (Donoho & Johnstone, 1994)

The soft threshold operator, denoted as , is defined as

Theorem 2.3

The method of the proof is similar to the proof of Zhou & Chen (2018, Theorem 5.1). But we explain that our regularized least squares approximation problem (1.3) is over the interval rather than over the unit sphere.

Proof. Since is non-singular, for the regularized approximation problem (1.6) in the matrix form, its first order condition is

| (2.8) |

where denotes the subgradient (Clarke, 1990). Since is an identity matrix and is a diagonal matrix, is the solution to the regularized approximation problem (1.6) in the matrix form if and only if

| (2.9) |

where . Let be the optimal solution to the problem (2.9), then

Next there exist three cases to be considered:

-

1.

If , then , thus , yielding , then

-

2.

If , then , thus , giving , then

-

3.

If , then on the one hand, leads to , and thus , on the other hand, produces , and hence . Thus

As we hoped, with the aid of soft threshold operator, we obtain

2.3 Regularized barycentric interpolation formulae

In this subsection, we focus on the condition of and the required interpolation conditions

where is the interpolant of . As pointed out by Wang, Huybrechs, & Vandewalle (2014), “barycentric interpolation is arguably the method of choice for numerical polynomial interpolation”. It is significant to express the regularized approximation polynomial (2.3) and the regularized approximation polynomial (2.7) in a barycentric form (Berrut & Trefethen, 2004; Higham, 2004)

respectively. are called barycentric weights. The study of the for roots and extrema of classical orthogonal polynomials is well developed, see Salzer (1972), Schwarz & Waldvogel (1989), Berrut & Trefethen (2004), Wang & Xiang (2012) and Wang et al. (2014).

We first derive the regularized barycentric interpolation formula. Recall (2.3), we have

| (2.10) |

From the orthonormality of , , by letting we have

Note that

due to the existence of regularization. Then the regularized approximation polynomial (2.3) can be expressed as

| (2.11) |

Without loss of generality, assume for . Note that under this assumption, is still a sequence of orthogonal polynomials. By the Christoffel-Darboux formula (Gautschi, 2004, Section 1.3.3), we rewrite in the form

| (2.12) |

where and denote the leading coefficient and the norm of , respectively. Let us combine (2.12) with the other expression (2.11) of the regularized approximation polynomial and cancel the factor from both the numerator and the denominator. Together with (2.11) and (2.12) we obtain the solution to the regularized approximation problem in a barycentric form, and we name it the regularized barycentric interpolation formula:

| (2.13) |

where is the corresponding barycentric weight at . This relation between barycentric weights and Gauss quadrature weights is revealed by Wang, Huybrechs, & Vandewalle (2014); however, this relation does not lead to the fast computation since it still requires evaluating orthogonal polynomials on . From the relation they also find the explicit barycentric weights for all classical orthogonal polynomials.

Secondly, we induce the regularized barycentric interpolation formula. The regularized approximation polynomial (2.7) can be expressed as the sum of two terms:

| (2.14) |

where

The first term in (2.3) can be directly written in the barycentric form by letting using the regularized barycentric formula. Then let the basis transform into Lagrange polynomials . By the basis-transformation relation between orthogonal polynomials and Lagrange polynomials (Gander, 2005), the second term in (2.3) can be represented by Lagrange polynomials in the form

| (2.15) |

With the same procedure of obtaining the barycentric formula from the classical Lagrange interpolation formula in Berrut & Trefethen (2004), the second term (2.15) in (2.3) can be rewritten as

| (2.16) |

Together with (2.3) and (2.16), we obtain the regularized barycentric interpolation formula:

| (2.17) |

If , the basis is normalized Legendre polynomials (i.e., interpolation nodes are Legendre points) and where is the Gauss quadrature weight at (Wang & Xiang, 2012), then both the regularized barycentric interpolation formula (2.13) and the regularized barycentric interpolation formula (2.17) reduce to the Wang-Xiang formula (Wang & Xiang, 2012; Trefethen, 2013). Inspired by the work of Higham (2004), we will conduct numerical studies on both regularized barycentric interpolation formulae (2.13) and (2.17), such as numerical stability, see the next paper (An & Wu, 2019).

3 Quality of regularized weighted least squares approximation

In this section, we study the quality of the regularized weighted least squares approximation in terms of Lebesgue constants. As is known to all, the Lebesgue constant is a tool for quantifying the divergence or convergence of polynomial approximation. From 1910, a lot of works have been done on Lebesgue constants (Fejér, 1910; Szegö, 1939; Rivlin, 2003; Powell, 1981; Wang & Xiang, 2012; Trefethen, 2013). This paper considers Lebesgue constants in the case of regularization. The Lebesgue constant is the norm of the linear mapping from data to approximation polynomial:

In particular, we have the following estimation on the as a consequence of (2.3).

Proposition 3.1

Adopt the notation and assumptions of Theorem 2.1. We have

| (3.1) |

3.1 Lebesgue constants with the basis of Chebyshev polynomials of the first kind

We mimic the discussion of the least squares approximation without regularization in Rivlin (2003, Section 2.4). We shall treat the case of normalized Chebyshev polynomials of the first kind as the basis for . Primary results are also available in Powell (1967). Consider a weighted Fourier series of a given continuous function over :

where are Fourier coefficients defined as

and

and weights , .

Lemma 3.1 (Rivlin, 2003)

If is continuous on with period , then

where

Definition 3.1

Lebesgue constants for regularized least squares approximation using Chebyshev polynomials of the first kind are defined as

The case of leads to Lebesgue constants for Fourier series (without regularization) (Rivlin, 2003, Section 2.4) in the form of

where the last integrand is the famous Dirichlet kernel. For estimation of , we have the following lemma.

Lemma 3.2

(3.2) is a known result given by Fejér (1910), one might find in Lorentz (1966, p.5) and Stein & Shakarchi (2011, Section 2.7.2). (3.3) is sharper than the known result (Rivlin, 2003, Lemma 2.2). For completeness, the proof of Lemma 3.2 is given in Appendix: Proof of Lemma 3.2.

Theorem 3.1

Suppose is continuous on , and normalized Chebyshev polynomials constitute a basis for . Then Lebesgue constants for the regularized least squares approximation of degree () on satisfy

| (3.4) |

and

| (3.5) |

where , which satisfies and .

Proof. Since is continuous on , then is continuous on . If , then is continuous on . The even function gives for all , and then

| (3.6) | ||||

which reveals that this is the regularized least squares approximation of degree with the basis for being normalized Chebyshev polynomials of the first kind. Since is continuous on with period , there must exist such that

By Lemma 3.1,

When , one may easily verify that

then by Lemma 3.2,

and

Let and . And let be a real number satisfying . If , then

and

which gives the asymptotic result (3.4) and the inequality (3.5).

Take the family of normalized Chebyshev polynomials of the first kind as the basis for and the set of zeros of as the set of nodes. Setting and . Fig. 1 illustrates Lebesgue constants with respect to different choices of regularization parameter .

3.2 Lebesgue constants with the basis of Legendre polynomials

In this subsection, we will derive asymptotic bounds of Lebesgue constants of the regularized approximation by using Legendre polynomials. Consider the kernel

where denotes the Legendre polynomial of degree . The case of gives a simple kernel

where the last equality is due to Gronwall (1913, (4)). Thus,

and

where the rightmost equality is due to Gronwall (1913, (5)). The reader may note that

| (3.7) |

where .

Definition 3.2

Lebesgue constants for the regularized approximation using Legendre polynomials are defined as

The case of leads to

| (3.8) |

which is the definition of Lebesgue constant of Legendre truncation of degree (Gronwall, 1913).

Combining Lemma (3.3) with the inequality (3.7), we obtain the estimation of in the case of Legendre polynomials.

Theorem 3.2

Suppose is continuous on , and Legendre polynomials constitute the basis for . Then Lebesgue constants for the regularized least squares approximation of degree () on satisfy

where .

The proof for Theorem 3.2 is based on the above discussion.

4 Sparsity of solution to the regularized approximation problem

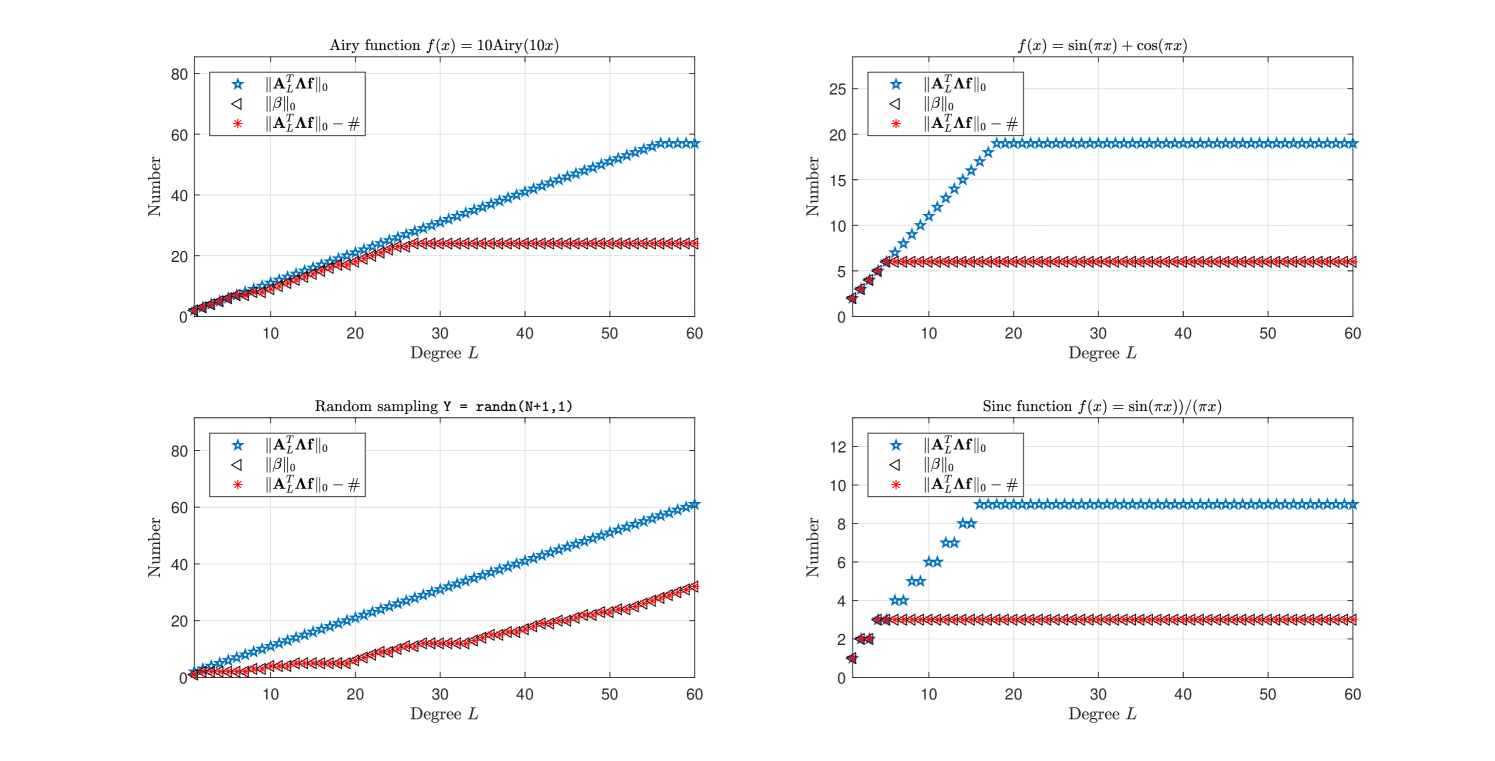

Some real-world problems, such as signal processing, often have sparse solutions. One may seek the sparsest solution to a problem, that is, the solution containing zero elements at most. However, a vector of real data would rarely contains many strict zeros. One may introduce other methods to seek sparsity, such as , where , . Nevertheless, these optimization problems mentioned above are nonconvex and nondifferentiable (Clarke, 1990; Bruckstein et al., 2009). Regularized methods, especially regularizaed methods, also produce sparse solutions, according to our examples. One may find a relatively sparse solution by minimizing norm, because such an optimization problem is a convex optimization problem, and its solution is the closest one to the sparsest solution for all in . For topics on sparsity, we refer to Bruckstein et al. (2009). We consider the sparsity of the solution to the regularized approximation problem (1.6). The sparsity is measured by the number of nonzero elements of , denoted as , also known as the zero “norm” (it is not a norm actually) of .

Before discussing the upper bound of , we offer a quick glimpse into the zero elements distribution of the regularized approximation solution. From Definition 2.1 of the soft threshold operator, we have the following result.

Proposition 4.1 (Zero elements distribution of the regularized approximation solution)

Adopt the notation and assumptions of Lemma 2.1. If satisfies

| (4.1) |

then its corresponding is zero, .

If , then becomes an upper bound of the number of nonzero elements of . Furthermore, we obtain the exact number of nonzero elements of with the help of the information of .

Theorem 4.1

Let be the solution to the regularized problem (1.6). If , then the number of nonzero elements of satisfies

| (4.2) |

and

| (4.3) |

where denotes the number of occurrences of but for .

Proof. The first order condition (2.8) of the regularized approximation problem (1.6) in the matrix form can be rewritten as

To obtain a solution, there must exist an vector such that

| (4.4) |

Since for all , elements of satisfy

yielding . Expression (4.4) gives

If , then . If , whereas may not be zero. Thus,

Hence,

Let denote the optimal solution to the problem (2.9) . With the aid of the closed-form solution to the regularized approximation problem (see (2.6)), expression (4.4) gives birth to

Due to , where denotes the pointwise division between and , the difference between and is expressed as

| (4.5) |

Together with and (4.5), we obtain the exact number (4.3) of nonzero elements of .

Corollary 4.1

If , then the number of nonzero elements of satisfies

| (4.6) |

Remark. Together, Theorem 4.1 and Corollary 4.1 state that the regularized minimization is better than minimization without regularization in terms of sparsity.

Let the basis for be the family of normalized Chebyshev polynomials of the first kind and the set of nodes be the set of zeros of . With degree of approximation polynomial ranging from to , evaluated and evaluated for all , Fig. 2 gives four examples on the bounds and estimations given above.

5 Numerical experiments

In this section, we report numerical results to illustrate the theoretical results derived above and test the efficiency of the regularized approximation polynomial (2.3) and the regularized approximation polynomial (2.7). The choices of the basis for and the set of nodes are primary when using both models. We choose Chebyshev polynomials of the first kind and the corresponding Chebyshev points. Certainly, choosing other orthogonal polynomials such as Legendre polynomials is also possible. All computations are performed in MATLAB in double precision arithmetic. Some related commands, for instance, obtaining quadrature points and weights, are included in Chebfun 5.7.0 (Trefethen et al., 2017).

To test the efficiency of approximation, we define the uniform error and the error to measure the approximation error:

-

•

The uniform error of the approximation is estimated by

where is a large but finite set of well distributed points (for example, clustered grids, see Trefethen (2000, Chapter 5)) over the interval .

-

•

The error of the approximation is estimated by a proper Gauss quadrature rule:

5.1 Regularized approximation models for exact data

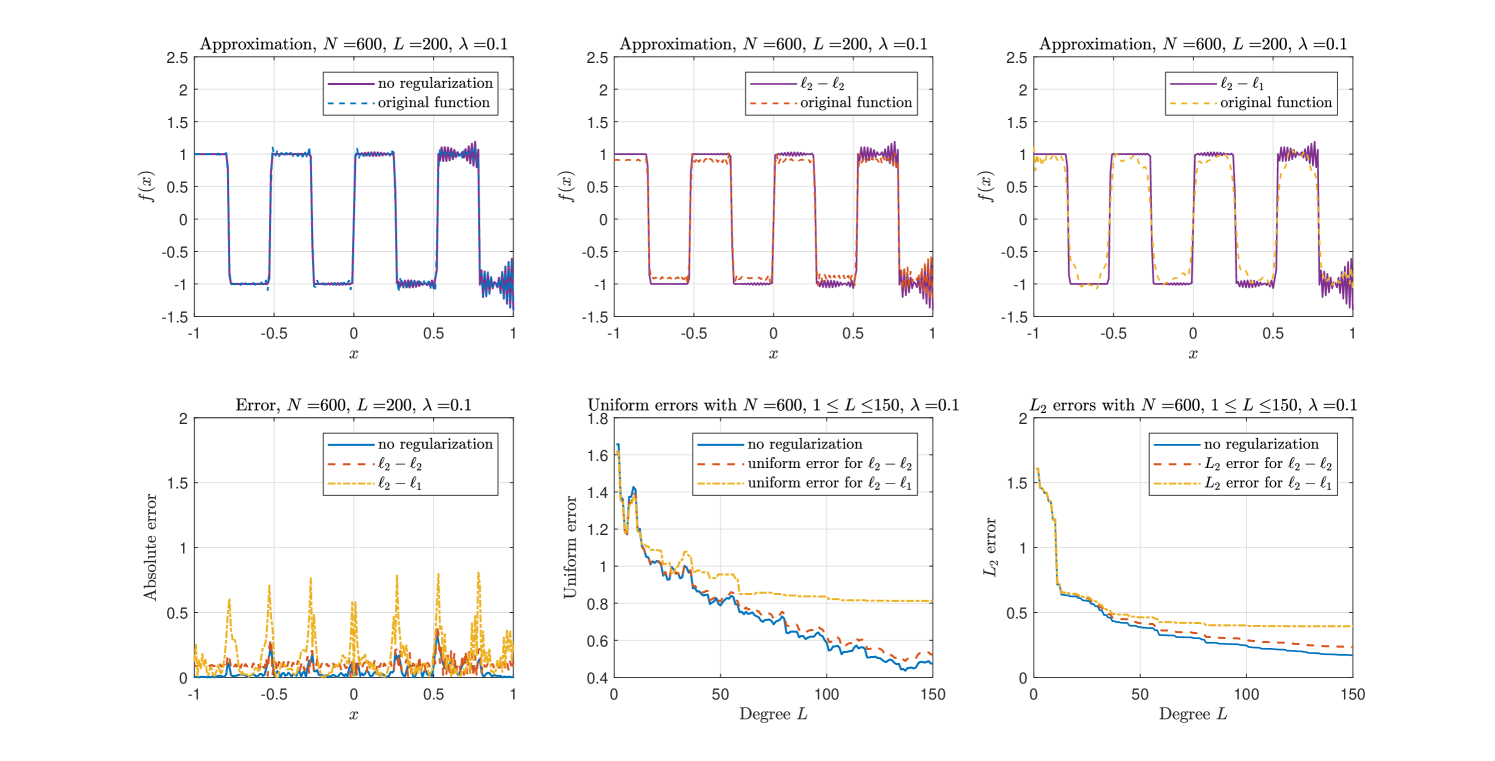

The fact should always stick in readers’ mind that regularization is introduced to solve ill-posed problems or to prevent overfitting. When approximation applies to functions without noise, regularization parameter (no regularization) contributes to the best choice of approximating. Fig. 3 reports the efficiency and errors for approximating function

with or without regularization over . The test function is given in Trefethen (2013). Let , , and for all . Fig. 3 illustrates that regularization is beyond use in this well-posed approximation problem, and regularization is better than regularization in approximating smooth functions.

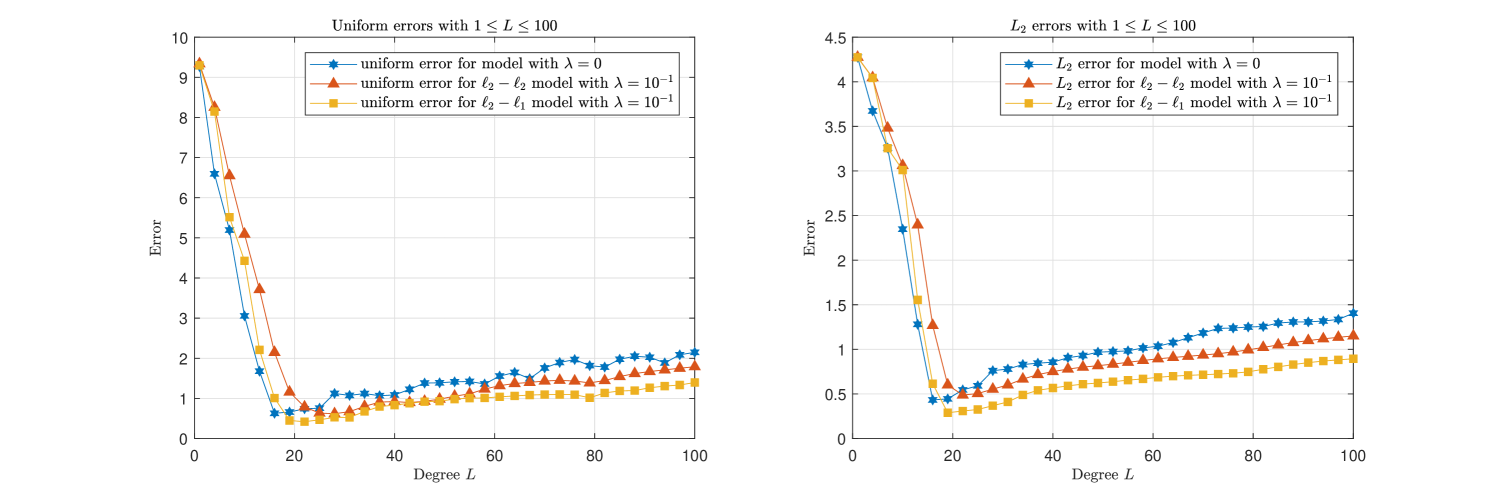

5.2 Regularized approximation models for contaminated data

We consider

| (5.1) |

which is the Fourier transform of the gate signal

see Bracewell (1965). We use regularized least squares models to reduce Gaussian white noise added to the function (5.1) with the signal-noise ratio (SNR) 10 dB. The choice of is critical in these models, so we first consider the relation between and approximation errors to choose the optimal . Let and . We take to choose the best regularization parameter. Here we choose . For more advanced methods to choose the parameter , we refer to Lazarov et al. (2007) and Pereverzyev et al. (2015) for a further discussion.

Fig. 4 shows that the and regularized approximation models with are effective in recovering the noisy function. In the case we let

where the filter function is defined as (An et al., 2012)

In this case, is a sequence of nonnegative nondecreasing parameters.

Results in Fig. 5 illustrate that the regularized approximation model is the best choice when recovering a contaminated function, which accords with the known facts (Lu & Pereverzev, 2009). Both Fig. 4 and Fig. 5 show that regularized models are better than those without regularization ().

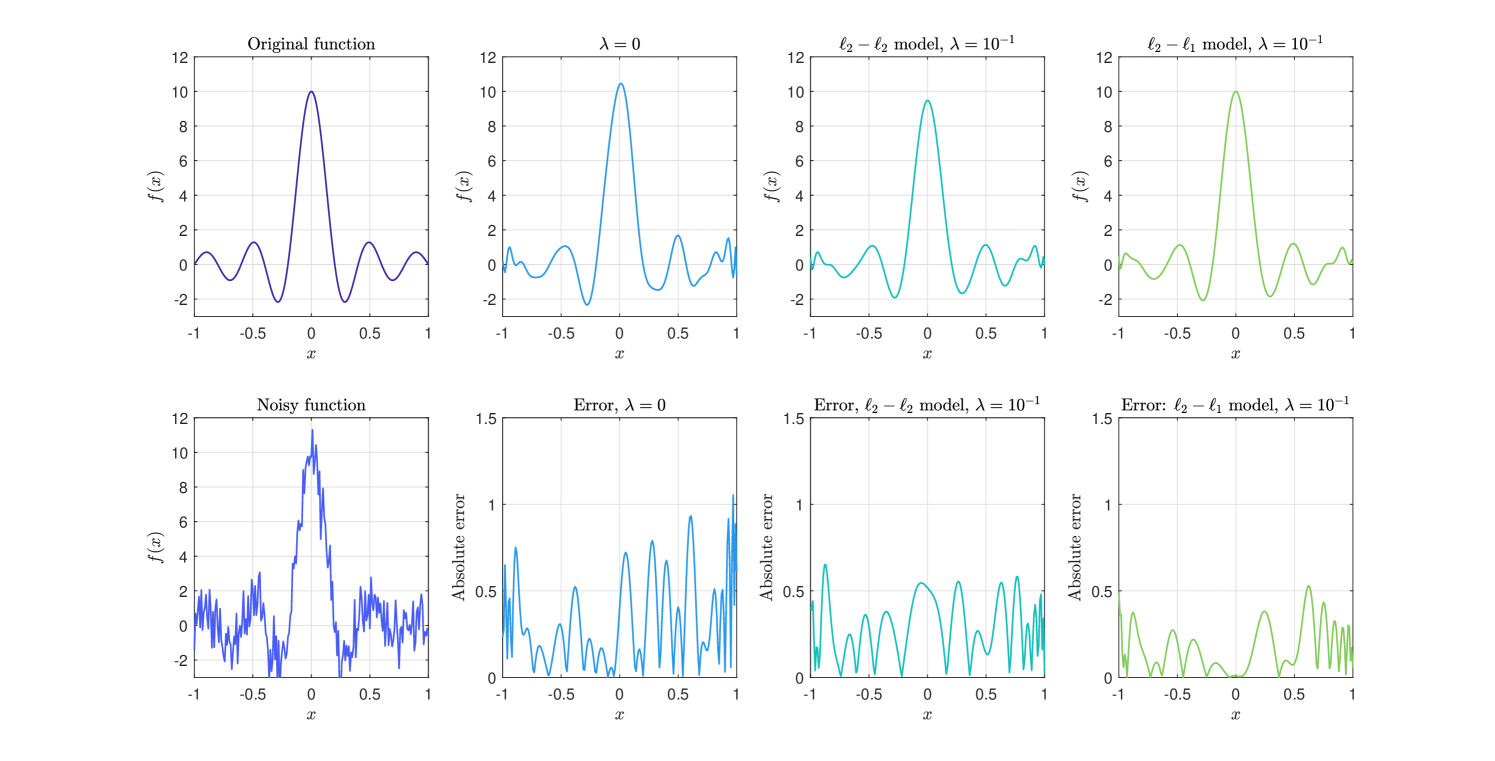

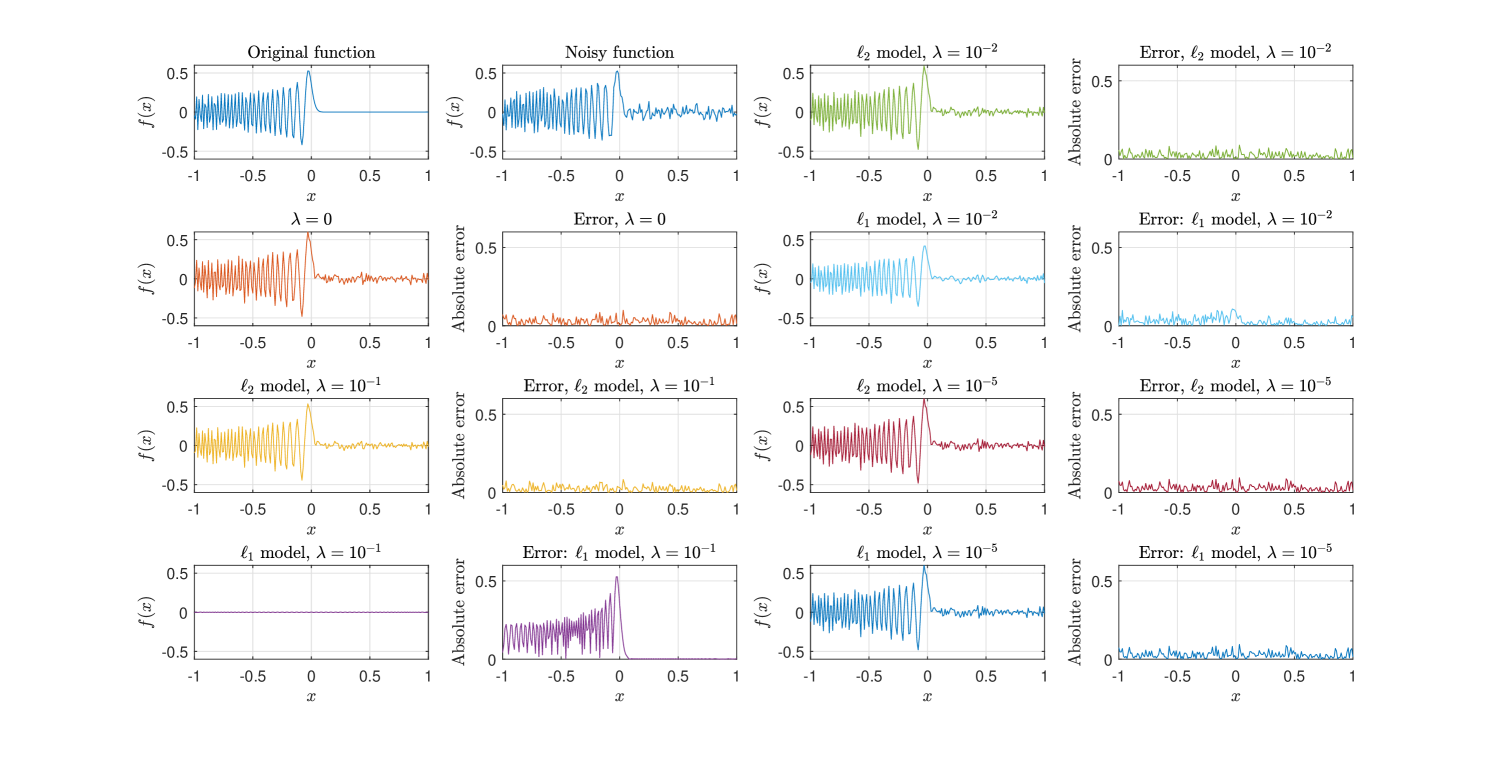

Besides this, consider the highly oscillatory function

with 12dB Gauss white noise added (noisy function is shown in Fig. 6). We use regularized barycentric formulae (2.13) and (2.17) to conduct this experiment. Let and be the same as above. Different values of , say , , , lead to different results, see Fig. 6. This experiment indicates that one could apply a simple formula to reduce noise, rather than employ an iterative scheme.

These numerical examples illustrate that for some problems, regularization also can be better than regularization. For example, suits regularization, but almost straightens the function with regularization. Besides this, we can see that regularization contains lower sensitivity than regularization.

6 Concluding remarks

In this paper, we have investigated minimizers to the and regularized least squares approximation problems with the aid of Gauss quadrature points and orthogonal polynomials on the interval . Based on those explicit constructed approximation polynomials (2.3) and (2.7), the -regulariarized barycentric interpolation formula (2.13) and the regulariarized barycentric interpolation formula (2.17) have been derived. In addition, Lebesgue constants are studied in the case of using Legendre polynomials and normalized Chebyshev polynomials of the first kind as the basis for the polynomial space . A bound for sparsity of the solution to regularized approximation is obtained by the refinement of the subgradient. Numerical results indicate that both the regularized approximation polynomial and the regularized approximation polynomial are practicable and efficient. Regularization parameter choice strategies and error bounds of approximation should be studied in future work. These results provide new insights into the and regularized approximation, and can be adapted to many practical applications such as noise reduction by using the barycentric interpolation scheme on Gauss quadrature points.

References

- An et al. (2012) An, C., Chen, X., Sloan, I. H. & Womersley, R. S. (2012) Regularized least squares approximations on the sphere using spherical designs. SIAM Journal on Numerical Analysis, 50, 1513–1534.

- An & Wu (2019) An, C. & Wu, H.-N. (2019) The numerical stability of regularized barycentric interpolation formulae for interpolation and extrapolation. arXiv preprint arXiv:1904.07187.

- Berrut & Trefethen (2004) Berrut, J.-P. & Trefethen, L. N. (2004) Barycentric Lagrange interpolation. SIAM Review, 46, 501–517.

- Bracewell (1965) Bracewell, R. (1965) The Fourier Transform and Its Applications. New York: McGraw-Hill.

- Bruckstein et al. (2009) Bruckstein, A. M., Donoho, D. L. & Elad, M. (2009) From sparse solutions of systems of equations to sparse modeling of signals and images. SIAM Review, 51, 34–81.

- Cai et al. (2009) Cai, J.-F., Osher, S. & Shen, Z. (2009) Split Bregman methods and frame based image restoration. Multiscale Modeling & Simulation, 8, 337–369.

- Clarke (1990) Clarke, F. H. (1990) Optimization and nonsmooth analysis, vol. 5. Philadelphia: SIAM.

- Donoho & Johnstone (1994) Donoho, D. L. & Johnstone, J. M. (1994) Ideal spatial adaptation by wavelet shrinkage. Biometrika, 81, 425–455.

- Fejér (1910) Fejér, L. (1910) Lebesguessche konstanten und divergente fourierreihen. Journal für die Reine und Angewandte Mathematik, 138, 22–53.

- Gander (2005) Gander, W. (2005) Change of basis in polynomial interpolation. Numerical Linear Algebra with Applications, 12, 769–778.

- Gautschi (2004) Gautschi, W. (2004) Orthogonal Polynomials: Computation and Approximation. Oxford: Oxford University Press on Demand.

- Gautschi (2012) Gautschi, W. (2012) Numerical analysis 2nd edition. Basel: Birkhäuser Basel.

- Golitschek & Schumaker (1990) Golitschek, M. & Schumaker, L. (1990) Data fitting by penalized least squares. Algorithms for Approximation II. New York: Springer-Verlag, pp. 210–227.

- Gronwall (1913) Gronwall, T. H. (1913) Über die Laplacesche reihe. Mathematische Annalen, 74, 213–270.

- Higham (2004) Higham, N. J. (2004) The numerical stability of barycentric Lagrange interpolation. IMA Journal of Numerical Analysis, 24, 547–556.

- Kim et al. (2009) Kim, S.-J., Koh, K., Boyd, S. & Gorinevsky, D. (2009) trend filtering. SIAM Review, 51, 339–360.

- Kress (1998) Kress, R. (1998) Numerical analysis. Graduate Texts in Mathematics, vol. 181. New York: Springer-Verlag.

- Lazarov et al. (2007) Lazarov, R. D., Lu, S. & Pereverzev, S. V. (2007) On the balancing principle for some problems of numerical analysis. Numerische Mathematik, 106, 659–689.

- Lorentz (1966) Lorentz, G. G. (1966) Approximation of functions. Athena Series: Selected Topics in Mathematics. New York Chicago San Francisco Toronto London: Holt, Rinehart and Winston.

- Lu & Pereverzev (2009) Lu, S. & Pereverzev, S. V. (2009) Sparse recovery by the standard Tikhonov method. Numerische Mathematik, 112, 403–424.

- Pereverzyev et al. (2015) Pereverzyev, S. V., Sloan, I. H. & Tkachenko, P. (2015) Parameter choice strategies for least-squares approximation of noisy smooth functions on the sphere. SIAM Journal on Numerical Analysis, 53, 820–835.

- Powell (1967) Powell, M. J. D. (1967) On the maximum errors of polynomial approximations defined by interpolation and by least squares criteria. The Computer Journal, 9, 404–407.

- Powell (1981) Powell, M. J. D. (1981) Approximation theory and methods. Cambridge: Cambridge University Press.

- Rivlin (2003) Rivlin, T. J. (2003) An introduction to the approximation of functions. North Chelmsford: Courier Corporation.

- Salzer (1972) Salzer, H. E. (1972) Lagrangian interpolation at the Chebyshev points xn, cos (/n), = 0 (1) n; some unnoted advantages. The Computer Journal, 15, 156–159.

- Schwarz & Waldvogel (1989) Schwarz, H. R. & Waldvogel, J. (1989) Numerical analysis: a comprehensive introduction. New York: Wiley.

- Stein & Shakarchi (2011) Stein, E. M. & Shakarchi, R. (2011) Fourier analysis: an introduction, vol. 1. Princeton: Princeton University Press.

- Szegö (1934) Szegö, G. (1934) Über einige asymptotische entwicklungen der Legendreschen funktionen. Proceedings of the London Mathematical Society, 2, 427–450.

- Szegö (1939) Szegö, G. (1939) Orthogonal polynomials. Colloquium Publications Volume XXIII, vol. 23. Providence, Rhode Island: American Mathematical Society.

- Trefethen (2000) Trefethen, L. N. (2000) Spectral methods in MATLAB, vol. 10. Philadelphia: SIAM.

- Trefethen (2013) Trefethen, L. N. (2013) Approximation theory and approximation practice, vol. 128. Philadelphia: SIAM.

-

Trefethen et al. (2017)

Trefethen, L. N. et al. (2017)

Chebfun Version 5.7.0.

http://www/maths.ox.ac.uk/chebfun/: Chebfun Development Team, Chebfun Development Team. - Wang et al. (2014) Wang, H., Huybrechs, D. & Vandewalle, S. (2014) Explicit barycentric weights for polynomial interpolation in the roots or extrema of classical orthogonal polynomials. Mathematics of Computation, 83, 2893–2914.

- Wang & Xiang (2012) Wang, H. & Xiang, S. (2012) On the convergence rates of Legendre approximation. Mathematics of Computation, 81, 861–877.

- Xiang & Zou (2013) Xiang, H. & Zou, J. (2013) Regularization with randomized SVD for large-scale discrete inverse problems. Inverse Problems, 29, 085008.

- Zhou & Chen (2018) Zhou, Y. & Chen, X. (2018) Spherical -designs for approximations on the sphere. Mathematics of Computation, 87, 2831–2855.

Appendix A. Proof of Lemma 3.2

Proof. We adopt the proof of Rivlin (2003, Lemma 2.2), but bring up a sharper bound of this inequality:

| (.1) |

When , . Suppose , then

| (.2) |

We have

| (.3) |

and

| (.4) |

Together with (.2), (.3) and (.4), we obtain

Thus,

| (.5) |

where

The value of can be calculated by MATLAB with the function quadgk as

It is not difficult to see inequality (.5) is sharper than inequality (.1) for .