Técnico Lisboa, email: bernardo.g.f.cortez@tecnico.ulisboa.pt, ORCID: 0000-0001-5553-1106

MBDA Deutschland GmbH, email: florian.peter@mbda-systems.de

Technical University of Munich, email: thomas.lausenhammer@tum.de

Técnico Lisboa, email: paulo.j.oliveira@tecnico.ulisboa.pt

Reinforcement Learning for Robust Missile Autopilot Design

Abstract

Designing missile autopilot controllers is a complex task, given the extensive flight envelope and the nonlinear flight dynamics. A solution that excels both in nominal performance and in robustness to uncertainties is still to be found. While Control Theory often resorts to parameter-scheduling procedures, Reinforcement Learning has produced interesting results in ever more complex tasks, from videogames to robotic tasks with continuous action domains. However, it still lacks clear insights on how to find adequate reward functions and exploration strategies. To the best of our knowledge, this work is a pioneer in proposing Reinforcement Learning as a framework for flight control. It aims at training a model-free agent that can control the longitudinal nonlinear flight dynamics of a missile, achieving the target performance and robustness to uncertainties. To that end, under TRPO's methodology, the collected experience is augmented according to HER, stored in a replay buffer and sampled according to its significance. Not only does this work extend the concept of prioritized experience replay into BPER, but it also reformulates HER, activating both only when the training progress converges to suboptimal policies, in what is proposed as the SER methodology. The results show that it is possible both to achieve the target performance and to improve the agent's robustness to uncertainties (with little damage to nominal performance) by further training it in non-nominal environments, therefore validating the proposed approach and encouraging future research in this field.

Keywords: Reinforcement Learning, TRPO, HER, flight control, missile autopilot

1 Introduction

Over the last decades, designing the autopilot flight controller for a system such as a missile has been a complex task, given (i) the non-linear dynamics and (ii) the demanding performance and robustness requirements. This process is classically solved by tedious scheduling procedures, which often lack the ability to generalize across the whole flight envelope and different missile configurations.

Reinforcement Learning (RL) constitutes a promising approach to address the latter issues, given its ability to control systems about which it has no prior information. This motivation grows with the complexity of the system to be controlled, under the plausible hypothesis that the RL agent could benefit from a considerably wider range of possible (combinations of) actions. By learning the cross-coupling effects, the agent is expected to converge to the optimal policy faster.

The longitudinal nonlinear dynamics (cf. section 2), however, constitute a mere first step, necessary for a possible later expansion of the approach to the whole flight dynamics. This work is, hence, motivated by the goal of finding an RL algorithm that can control the longitudinal nonlinear flight dynamics of a Generic Surface-to-Air Missile (GSAM) with no prior information about it, thus being model-free.

2 Model

2.1 GSAM Dynamics

The GSAM's flight dynamics to be controlled are modelled by a nonlinear system, in which the total sum of the forces and of the moments depends on the flight dynamic states (cf. equations (1) and (2)), such as the Mach number, the height or the angle of attack.

| (1) | |||

| (2) |

This system is decomposed into Translation (cf. equation (3)), Rotation (cf. equation (4)), Position (cf. equation (5)) and Attitude (cf. equation (6)) terms, as described by Peter (2018) [15]. Equations (3) to (6) follow the notation of Peter (2018) [15].

| (3) | |||

| (4) | |||

| (5) | |||

| (6) |

To investigate whether an RL agent is able, in principle, to serve as a missile autopilot, only the nonlinear longitudinal motion of the missile dynamics is considered. Therefore, the issue of cross-coupling effects within the autopilot design is not addressed in this paper.

2.2 Actuator Dynamics

The GSAM is actuated by four fin deflections, which are mapped to three aerodynamic equivalent controls (cf. equation (7)). The latter provide the advantage of directly matching the GSAM's roll, pitch and yaw axes, respectively.

| (7) | |||

| (8) |

In the control of the longitudinal flight dynamics, the roll and yaw equivalent controls are set to 0 and, hence, equation (8) becomes equation (9).

| (9) |

The actuator system is, thus, the system that receives the desired (commanded) pitch control and outputs the achieved one, modelling the dynamic response of the physical fins with their deflection limit of 30 degrees. It is assumed to be a second-order system with the following closed-loop characteristics:

1. Natural frequency of 150 rad·s⁻¹

2. Damping factor of 0.7
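
The second-order behaviour listed above can be illustrated with a minimal sketch. It assumes a 1 ms sampling time, a semi-implicit Euler discretization and a hard clamp at the deflection limit; the function `simulate_actuator` and all of its parameter names are hypothetical and are not taken from the GSAM model.

```python
import math

def simulate_actuator(commands, dt=1e-3, wn=150.0, zeta=0.7, limit_deg=30.0):
    """Return the fin response [rad] to a sequence of commanded deflections [rad]."""
    limit = math.radians(limit_deg)
    pos, vel = 0.0, 0.0
    response = []
    for cmd in commands:
        cmd = max(-limit, min(limit, cmd))    # the command cannot exceed the limit
        acc = wn * wn * (cmd - pos) - 2.0 * zeta * wn * vel
        vel += acc * dt                       # semi-implicit Euler: velocity first,
        pos += vel * dt                       # then position with the new velocity
        pos = max(-limit, min(limit, pos))    # mechanical stop of the fin
        response.append(pos)
    return response

small = simulate_actuator([0.1] * 200)   # 0.1 rad command held for 0.2 s
large = simulate_actuator([1.0] * 500)   # command beyond the 30 degree limit
```

With these values the small command is tracked within a few hundredths of a second, whereas the large one is held at the deflection limit.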

2.3 Performance Requirements

The algorithm must achieve the target performance established in terms of the following requirements:

1. Static error margin = 0.5%

2. Overshoot < 20%

3. Rise time < 0.6s

4. Settling time (5%) < 0.6s

5. Bounded actuation

6. Smooth actuation

Besides, the present work also aims at achieving and improving the robustness of the algorithm to conditions different from the training ones.
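
As an illustration of how the first four requirements could be evaluated on a recorded response, the sketch below computes them for a positive step. The 10%-90% definition of rise time and the helper name `step_metrics` are assumptions made here; the test signal is the analytic unit-step response of a generic second-order system, not data from the GSAM.

```python
import math

def step_metrics(t, y, target, band=0.05):
    """Overshoot [%], 10-90% rise time [s], settling time [s] and static error [%]
    of a response y(t) to a positive step of amplitude `target`."""
    overshoot = max(0.0, (max(y) - target) / target * 100.0)
    t10 = next(ti for ti, yi in zip(t, y) if yi >= 0.1 * target)
    t90 = next(ti for ti, yi in zip(t, y) if yi >= 0.9 * target)
    settling = 0.0
    for ti, yi in zip(t, y):
        if abs(yi - target) > band * target:
            settling = ti                 # last instant outside the band
    static_error = abs(y[-1] - target) / target * 100.0
    return overshoot, t90 - t10, settling, static_error

# Hypothetical test signal: second-order unit-step response sampled at 1 ms.
wn, zeta = 10.0, 0.7
wd = wn * math.sqrt(1.0 - zeta ** 2)
t = [k * 1e-3 for k in range(2001)]
y = [1.0 - math.exp(-zeta * wn * ti)
     * (math.cos(wd * ti) + zeta * wn / wd * math.sin(wd * ti)) for ti in t]
overshoot, rise, settling, static_error = step_metrics(t, y, target=1.0)
```

Bounded and smooth actuation are not covered by this sketch, since they are properties of the action signal rather than of the tracked output.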

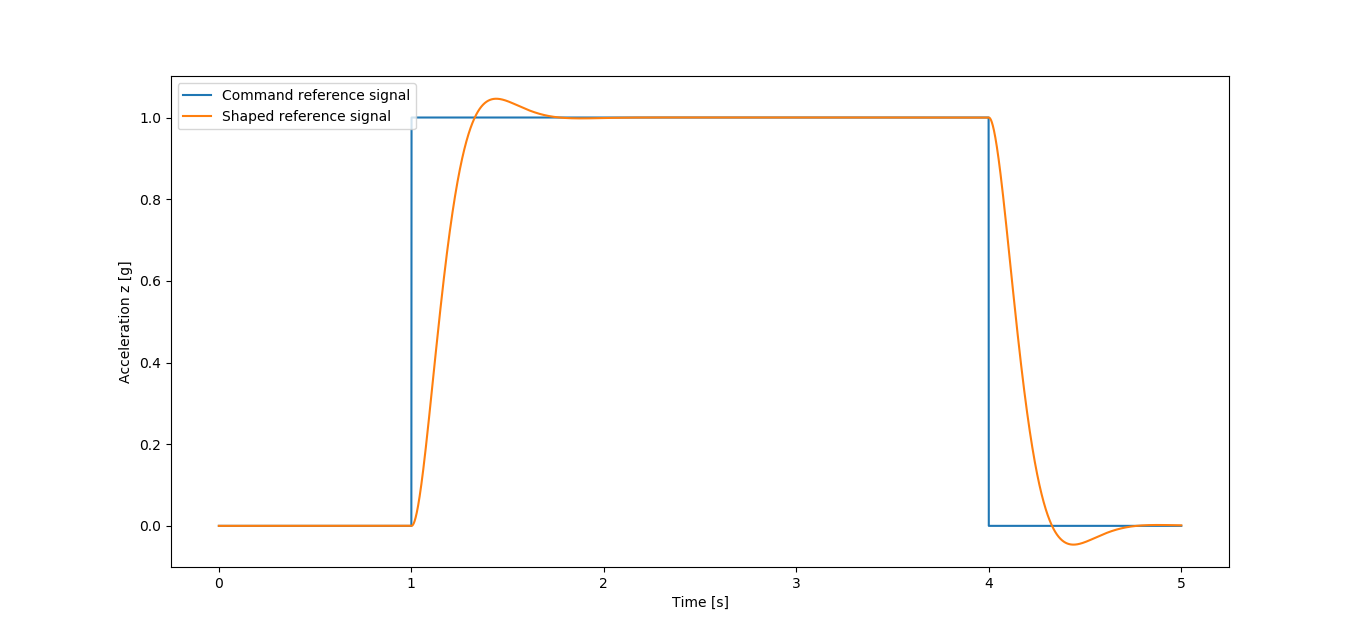

When trying to optimize the achieved performance, one must, however, be aware of the physical limitations imposed by the system being controlled. A commercial airplane, for example, will never have the agility of a fighter jet, regardless of how optimized its controller is. Therefore, it is unrealistic to expect the system to follow overly abrupt reference signals such as step functions. Instead, the system will be asked to follow shaped reference signals, i.e., the output of the Reference Model. The latter is hereby defined as the system whose closed-loop dynamics are designed to mimic the ones desired for the dynamic system being controlled. In this case, its natural frequency and damping factor are 10 rad·s⁻¹ and 0.7, respectively.

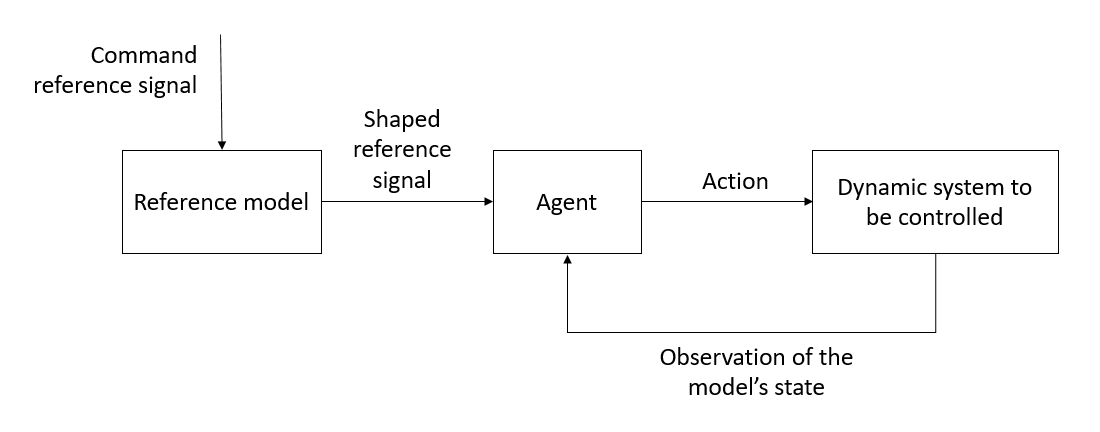

The workflow of the interaction between the agent and the dynamic system being controlled, including a Reference Model, is illustrated in figure 2. The command reference signal is given as an input to the Reference Model, whose output, the shaped reference signal, is the input of the agent.
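
The shaping performed by the Reference Model can be sketched as a second-order filter applied to the raw command. The class below is a hypothetical illustration that assumes a 1 ms sampling time and a semi-implicit Euler discretization; only the natural frequency and the damping factor come from the text.

```python
class ReferenceModel:
    """Second-order shaping of the command signal (semi-implicit Euler)."""

    def __init__(self, wn=10.0, zeta=0.7, dt=1e-3):
        self.wn, self.zeta, self.dt = wn, zeta, dt
        self.ref, self.rate = 0.0, 0.0

    def step(self, command):
        acc = (self.wn ** 2 * (command - self.ref)
               - 2.0 * self.zeta * self.wn * self.rate)
        self.rate += acc * self.dt
        self.ref += self.rate * self.dt
        return self.ref

# Hypothetical double step: 10 g for 2.5 s, then -10 g for 2.5 s.
model = ReferenceModel()
command = [10.0] * 2500 + [-10.0] * 2500
shaped = [model.step(c) for c in command]
```

The abrupt jumps of the command are replaced by smooth transitions that settle on the commanded amplitude, which is the signal the agent is then asked to track.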

3 Background

3.1 Topic Overview

RL has been the object of research following the foundations laid out by Sutton and Barto (2018) [20], with applications ranging from the Atari 2600 games to the MuJoCo benchmarks, to robotic tasks (like grasping) and to other classic control problems (like the inverted pendulum).

TRPO (Schulman et al. 2015 [17]) has become one of the commonly accepted benchmarks thanks to its cautious trust region optimization and monotonic reward improvement. In parallel, Lillicrap et al. (2016) [9] proposed DDPG, a model-free off-policy algorithm, revolutionary not only for its ability to learn directly from pixels while keeping the network architecture simple, but mainly because it was designed to cope with continuous action domains. In contrast to TRPO, DDPG's off-policy nature implies a much higher sample efficiency, resulting in a faster training process. Both TRPO and DDPG have been the roots of much of the research work that followed.

On the one hand, some authors valued the benefits of an off-policy algorithm more and took inspiration from DDPG to develop TD3 (Fujimoto et al. 2018 [3]), addressing DDPG's problem of over-estimation of the states' value. On the other hand, others preferred the benefits of an on-policy algorithm and proposed interesting improvements to the original TRPO, either by reducing its implementation complexity (Wu et al. 2017 [21]), by trying to decrease the variance of its estimates (Schulman et al. 2016 [18]) or even by showing the benefits of combining it with replay buffers (Kangin et al. 2019 [8]).

Apart from these, a new issue began to arise: agents were ensuring stability at the expense of converging to suboptimal solutions. Once again, new algorithms were conceived in each family, on- and off-policy. Haarnoja et al. proposed to express the optimal policy via a Boltzmann distribution in order to learn stochastic behaviors and to improve the exploration phase within the scope of an off-policy actor-critic architecture: Soft Q-learning (Haarnoja et al. 2017 [6]). Almost simultaneously, Schulman et al. published PPO (Schulman et al. 2017 [19]), claiming to have simplified TRPO's implementation and increased its sample efficiency by inserting an entropy bonus to increase exploration performance and avoid premature suboptimal convergence. Furthermore, Haarnoja et al. developed SAC (Haarnoja et al. 2018 [7]), in an attempt to recover the training stability without losing the entropy-encouraged exploration, and Nachum et al. (2018) [11] proposed Smoothie, combining the trust region implementation of PPO with DDPG.

Finally, there has also been research on merging on-policy and off-policy algorithms, trying to profit from the upsides of both, like IPG (Gu et al. 2017 [5]), TPCL (Nachum et al. 2018 [12]), Q-Prop (Gu et al. 2019 [4]) and PGQL (O'Donoghue et al. 2019 [14]).

3.2 Trust Region Policy Optimization

TRPO is an on-policy model-free RL algorithm that aims at maximizing the discounted sum of future rewards (cf. equation (10)) following an actor-critic architecture and a trust region search.

| (10) |

Initially, Schulman et al. (2015) [17] proposed to update the policy estimator's parameters with the conjugate gradient algorithm followed by a line search. The trust region search would be ensured by a hard constraint on the Kullback-Leibler divergence.

Later, Kangin et al. (2019) [8] proposed an enhancement, augmenting the training data by using replay buffers and GAE (Schulman et al. 2016 [18]). Also in contrast to the original proposal, Kangin et al. trained the value estimator's parameters with the ADAM optimizer and the policy's with K-FAC (Martens et al. 2015 [10]). The former was implemented as a regression between the output of the Value NN and its target, whilst the latter used equation (11) as the loss function, which has a first term concerning the objective function being maximized (cf. equation (10)) and a second one penalizing differences between two consecutive policies outside the trust region, whose radius is a hyperparameter.

| (11) |

For simplicity of implementation, Schulman et al. (2015) [17] rewrote equation (11) in terms of collected experience (cf. equation (12)), as a function of two different stochastic policies and of the advantage estimates.

| (12) |

Both versions of TRPO use the same exploration strategy: the output of the Policy NN is used as the mean of a multivariate normal distribution whose covariance matrix is part of the policy trainable parameters.
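
To make the exploration strategy and the trust region measure more concrete, the sketch below samples an action from a Gaussian policy and evaluates the Kullback-Leibler divergence between two such policies. It assumes a diagonal covariance matrix, which is a simplification of the general multivariate case mentioned above, and the function names are hypothetical.

```python
import math
import random

def sample_action(mean, std, rng):
    """Draw one action from a Gaussian with diagonal covariance."""
    return [rng.gauss(m, s) for m, s in zip(mean, std)]

def gaussian_kl(mean_p, std_p, mean_q, std_q):
    """KL(p || q) between two Gaussians with diagonal covariance."""
    kl = 0.0
    for mp, sp, mq, sq in zip(mean_p, std_p, mean_q, std_q):
        kl += (math.log(sq / sp)
               + (sp ** 2 + (mp - mq) ** 2) / (2.0 * sq ** 2) - 0.5)
    return kl

rng = random.Random(0)
action = sample_action(mean=[0.05], std=[0.01], rng=rng)
kl_same = gaussian_kl([0.3], [0.2], [0.3], [0.2])   # identical policies
kl_step = gaussian_kl([0.0], [1.0], [1.0], [1.0])   # means one unit apart
```

A policy update would stay inside the trust region as long as this divergence, averaged over the visited states, remains below the chosen radius.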

3.3 Hindsight Experience Replay

The key idea of HER (Andrychowicz et al. 2018 [1]) is to store in the replay buffers not only the experience collected from interacting with the environment, but also the experience that would have been obtained had the agent pursued a different goal. HER was proposed as a complement to sparse rewards.

3.4 Prioritized Experience Replay

When working with replay buffers, randomly sampling experience can be outperformed by a more sophisticated heuristic. Schaul et al. (2016) [16] proposed Prioritized Experience Replay, sampling experience according to its significance, measured by the TD-error. Besides, the strict required performance (cf. table 1) causes the agent to seldom achieve success and the training dataset to be imbalanced. Thus, the agent simply overfits the "failure" class. Narasimhan et al. (2015) [13] addressed this problem by forcing 25% of the training dataset to be sampled from the less represented class.

4 Application of RL to the Missile’s Flight

One episode is defined as the attempt to follow a 5s-long reference signal consisting of two consecutive steps whose amplitudes and rise times are randomly generated, except when deploying the agent for testing purposes (Cortez 2020 [2]). With a sampling time of 1ms, an episode includes 5000 steps, of which 2400 are defined as transition periods (the 600 steps composing 0.6s after each of the four rise times), whilst the remaining 2600 are resting periods.
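
The step accounting above can be checked with a short sketch that marks the transition periods of an episode. The rise times used below are hypothetical placeholders and the windows are assumed not to overlap; only the episode length, the sampling time and the 0.6s window come from the text.

```python
def transition_mask(rise_times_s, episode_s=5.0, dt=1e-3, window_s=0.6):
    """Boolean mask that is True during the window after each rise time."""
    n_steps = round(episode_s / dt)
    window = round(window_s / dt)
    mask = [False] * n_steps
    for t0 in rise_times_s:
        start = round(t0 / dt)
        for k in range(start, min(start + window, n_steps)):
            mask[k] = True
    return mask

# Hypothetical rise times [s] of the four transitions of one episode.
mask = transition_mask([0.5, 1.5, 3.0, 4.0])
n_transition = sum(mask)                  # steps in transition periods
n_resting = len(mask) - n_transition      # steps in resting periods
```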

From the requirements defined in section 2.3, the RL problem was formulated as the training of an agent that succeeds when the performance of an episode meets the levels defined in table 1 in terms of the maximum stationary tracking error, the overshoot, the actuation magnitude and the actuation noise levels in both resting and transition periods.

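| Requirement | Target Value | Unit |
|---|---|---|
| Maximum stationary tracking error | 0.5 | [g] |
| Overshoot | 20 | [%] |
| Actuation magnitude | 15 | [º] |
| Actuation noise (resting periods) | 1 | [rad] |
| Actuation noise (transition periods) | 0.2 | [rad] |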

4.1 Algorithm

As explained in section 1, the current problem required an on-policy model-free RL algorithm. Among them, not only is TRPO a current state-of-the-art algorithm (cf. section 3.1), but its trust region search is also attractive, since it avoids sudden drops during the training progress, a feature of particular interest to industry, which often aims at robust results. TRPO was, therefore, considered the most suitable choice.

4.1.1 Modifications to original TRPO

The present implementation was inspired by the implementations proposed by Schulman et al. (2015) [17] and by Kangin et al. (2019) [8] (cf. section 3.2). There are several differences, though:

1. The reward function is given by equation (23), whose relative weights can be found in Cortez (2020) [2].

2. Both neural networks have three hidden layers whose sizes are related to the observations vector (their input size) and to the actions vector (output size of the Policy NN).

3. Observations are normalized (cf. equation (24)) so that the learning process can cope with the different domains of each feature. The normalization in equation (24) uses the running mean value and the running variance of the set of observations collected along the whole training process, which are updated with information about the newly collected observations after each training episode.

4. The proposed exploration strategy is deeply rooted in that of Kangin et al. (2019) [8], meaning that the new action is sampled from a normal distribution (cf. equation (25)) whose mean is the output of the policy neural network and whose variance is obtained according to equation (26). Although similar, this strategy differs from the original in the term defined in equation (27), which is directly influenced by the tracking error.

5. Equation (30) was used as the loss function of the policy parameters, modifying equation (12) in order to emphasize the need to reduce the Kullback-Leibler divergence, with a term that penalizes it linearly and a quadratic term that aims at fine-tuning it so that it is closer to the trust region radius, encouraging update steps as large as possible. Given the previously mentioned exploration strategy, the policy is a Gaussian distribution over the continuous action space (cf. equation (31), whose mean and variance are defined in equation (25)). Hence, the term defined in equation (28) is given by equation (32), which depends on the number of samples in the training batch, assuming that all samples in the training batch are independent and identically distributed (footnote 1: they can be assumed to be (i) independent because they are sampled from the replay buffer (stage 5 of algorithm 2, section 4.1.5), breaking the causality correlation that the temporal sequence could entail, and (ii) identically distributed because the exploration strategy is always the same and, therefore, the stochastic policy is always a Gaussian distribution over the action space (cf. equation (31))). The remaining term of the loss is given by equation (33).

6. ADAM was used as the optimizer of both NNs for its widespread success and acceptance as the default optimizer of most ML applications.
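
The running normalization of observations described in the third modification can be sketched as follows. The sketch handles a single scalar feature, uses Welford's incremental update and assumes the common form "subtract the running mean, divide by the running standard deviation"; the class name and the small constant `eps` are hypothetical additions for illustration.

```python
import math

class RunningNormalizer:
    """Running mean and variance of one observation feature (Welford update)."""

    def __init__(self, eps=1e-8):
        self.count, self.mean, self.m2, self.eps = 0, 0.0, 0.0, eps

    def update(self, observations):
        """Fold the observations of a newly collected episode into the statistics."""
        for x in observations:
            self.count += 1
            delta = x - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (x - self.mean)

    @property
    def variance(self):
        return self.m2 / self.count if self.count else 1.0

    def normalize(self, x):
        return (x - self.mean) / math.sqrt(self.variance + self.eps)

normalizer = RunningNormalizer()
normalizer.update([1.0, 2.0, 3.0])   # observations of a first episode
normalizer.update([4.0, 5.0])        # observations of a second episode
centered = normalizer.normalize(3.0)
```

In a multi-feature setting one such set of statistics would be kept per observation component, so that features with very different domains end up on comparable scales.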

4.1.2 Hindsight Experience Replay

The present goal is not defined by achieving a certain final state, as Andrychowicz et al. (2018) [1] proposed, but, instead, by a certain performance over the whole sequence of states that constitutes an episode. For this reason, choosing a different goal must mean, in this case, following a different reference signal. After collecting a full episode, its trajectories are replayed with new goals, which are sampled according to two different strategies. These strategies dictate the choice of the amplitudes of the two consecutive steps of each new reference signal.

The first strategy, the mean strategy, consists of choosing the amplitudes of the steps of the command signal as the mean values of the measured acceleration during the first and second resting periods, respectively. Similarly, the second strategy, the final strategy, consists of choosing them as the last values of the measured signal during each resting period. Apart from the step amplitudes, all the other original parameters (Cortez 2020 [2]) of the reference signal are kept.
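
The two strategies can be sketched as a small helper that derives the new step amplitudes from the measured acceleration of a collected episode. The function name, the index-based description of the resting periods and the toy data are all hypothetical.

```python
def hindsight_amplitudes(measured, resting_periods, strategy="mean"):
    """New step amplitudes for a replayed episode.

    measured: acceleration samples of the collected episode.
    resting_periods: (start, end) index pairs, end exclusive, one per step.
    """
    amplitudes = []
    for start, end in resting_periods:
        segment = measured[start:end]
        if strategy == "mean":
            amplitudes.append(sum(segment) / len(segment))
        elif strategy == "final":
            amplitudes.append(segment[-1])
        else:
            raise ValueError("strategy must be 'mean' or 'final'")
    return amplitudes

# Hypothetical toy episode with two short resting periods.
measured = [0.0, 2.0, 3.0, 4.0, 5.0, 1.0, -1.0, -2.0, -3.0]
resting = [(2, 5), (6, 9)]
mean_goal = hindsight_amplitudes(measured, resting, "mean")    # [4.0, -2.0]
final_goal = hindsight_amplitudes(measured, resting, "final")  # [5.0, -3.0]
```

The replayed episode would then be evaluated against a reference signal built with these amplitudes, keeping all the other parameters of the original signal.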

4.1.3 Balanced Prioritized Experience Replay

BPER was implemented according to algorithm 1, which relies on three quantities: the priority level of the experience collected in each step (cf. equation (34)), the number of steps with each priority level, and the proportion of steps with each priority level desired in the training datasets.

| (34) |

Notice that the sampling probability and the priority follow the notation of Schaul et al. (2016) [16], in which the priority matches the rank-based prioritization, with the rank of a step meaning its ordinal position when all steps in the replay buffers are ordered by the magnitude of their temporal differences.

As condensed in algorithm 1, when less than 25% of the steps in the replay buffers are successful, the successful and unsuccessful subsets of the replay buffers are sampled separately, with 25% coming from the successful subset. In a later phase of training, when more than 25% of the steps are already successful, both subsets are merged. In either case, sampling is always done according to the temporal differences, i.e., a step with a higher temporal difference has a higher chance of being sampled.
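
A minimal sketch of this sampling scheme is given below. It assumes rank-based priorities of the form 1/rank raised to an exponent `alpha` (here defaulting to 1), sampling with replacement, and a fallback to merged sampling when no successful step exists yet; the function names and these choices are illustrative assumptions rather than a transcription of algorithm 1.

```python
import random

def rank_weights(td_errors, alpha=1.0):
    """Rank-based priorities: the largest |TD error| gets rank 1."""
    order = sorted(range(len(td_errors)), key=lambda i: -abs(td_errors[i]))
    weights = [0.0] * len(td_errors)
    for rank, i in enumerate(order, start=1):
        weights[i] = (1.0 / rank) ** alpha
    return weights

def bper_sample(td_errors, success, batch_size, rng, min_success=0.25):
    """Return buffer indices, forcing a share of successful steps if they are rare."""
    def draw(indices, k):
        if not indices or k == 0:
            return []
        weights = rank_weights([td_errors[i] for i in indices])
        return rng.choices(indices, weights=weights, k=k)

    successful = [i for i, s in enumerate(success) if s]
    failed = [i for i, s in enumerate(success) if not s]
    if successful and len(successful) / len(success) < min_success:
        k_success = round(min_success * batch_size)
        return draw(successful, k_success) + draw(failed, batch_size - k_success)
    return draw(list(range(len(success))), batch_size)   # merged subsets

rng = random.Random(1)
td = [float(i % 7) for i in range(100)]      # hypothetical TD errors
rare = [i < 5 for i in range(100)]           # only 5% successful steps
batch = bper_sample(td, rare, 40, rng)
```

In the example, a quarter of the 40 sampled steps comes from the five successful ones, even though they represent only 5% of the buffer.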

4.1.4 Scheduled Experience Replay

Making HER (cf. section 4.1.2) and BPER (cf. section 4.1.3) dependent on a condition, the SER condition, is hereby defined as Scheduled Experience Replay (SER). The SER condition is exemplified in equation (35), which is based on the mean tracking error of the previously collected episode.

| (35) |

In contrast to HER's original context (cf. section 3.3), the reward function is not sparse here and the agent was already able to achieve near-target performance without HER. The hypothesis, in this case, is that HER can be a complementary feature, by activating it only when the agent converges to suboptimal policies.

Moreover, without HER, BPER adds less benefit, since there is no special reason for the agent to believe that some part of the collected experience is more significant than the rest.

4.1.5 Algorithm Description

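Algorithm 2:

1. Collect experience, sampling actions from the policy

2. Augment the collected experience with synthetic successful episodes (SER)

3. Compute the targets for the Value NN and the advantage estimates

4. Store the newly collected experience in the replay buffer

5. Sample the training sets from the replay buffer (SER)

6. Update all value and policy parameters

7. Update trust region

Each stage is detailed as follows:

1. One batch of trajectories is collected.

2. The collected experience is augmented with synthetic successful episodes when the SER condition holds (cf. sections 4.1.2 and 4.1.4).

3. The targets for the Value NN and the advantage estimates are computed and added to the trajectories.

4. The batch is stored in the replay buffer, discarding the oldest batch: the replay buffer contains data collected from the most recent policies and works as a FIFO queue.

5. The training sets are sampled from the replay buffer, according to BPER when the SER condition holds (cf. sections 4.1.3 and 4.1.4).

6. The value parameters and the policy parameters are updated.

7. The trust region parameters are updated.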
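
The FIFO behaviour of the replay buffer can be sketched with a bounded double-ended queue. The class name and the number of retained policies are hypothetical; the point is only that storing a new batch silently discards the oldest one.

```python
from collections import deque

class PolicyReplayBuffer:
    """Keeps the batches collected by the most recent policies (FIFO)."""

    def __init__(self, n_policies):
        self.batches = deque(maxlen=n_policies)

    def store(self, batch):
        self.batches.append(batch)   # the oldest batch is dropped when full

    def all_steps(self):
        return [step for batch in self.batches for step in batch]

buffer = PolicyReplayBuffer(n_policies=3)
for policy_id in range(4):           # four consecutive policies
    buffer.store([(policy_id, k) for k in range(2)])
kept = sorted({policy_id for policy_id, _ in buffer.all_steps()})
```

After the fourth batch is stored, only the experience of the last three policies remains available for sampling.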

4.2 Methodology

As further detailed in Cortez (2020) [2], the established methodology (i) progressively increases the amplitude of the randomly generated command signal and (ii) periodically tests the agent's performance against a -10g/10g double step without exploration, in order, respectively, (i) to avoid overfitting and (ii) to decide whether or not to finish the training process.

4.3 Robustness Assessments

A formal mathematical guarantee of robustness cannot be given for an RL agent composed of neural networks in the same terms as for linear controllers. Robustness was, hence, evaluated by deploying the nominal agent in non-nominal environments. Apart from testing its performance, the hypothesis is also that training this agent in the latter can improve its robustness. To do so, the training of the best found nominal agent was resumed in the presence of non-nominalities (cf. section 4.3.1). This training and its resulting best found agent are henceforward called robustifying training and robustified agent, respectively.

4.3.1 Robustifying Trainings

Three different modifications were separately made to the provided model (cf. section 2), in order to obtain three different non-nominal environments, modelling, respectively, latency, estimation uncertainty in the nominal estimated Mach number and height (cf. equations (36) and (37)), and parametric uncertainty in two nominal aerodynamic coefficients (cf. equations (38) and (39)).

| (36) | |||

| (37) | |||

| (38) | |||

| (39) |

In the case of non-nominal environments including latency, the possible values lie in a bounded range, whereas in the other cases the uncertainty is assumed to be normally distributed, meaning that, following the line of thought of Peter (2018) [15], its domain is taken as the interval within three standard deviations of the mean (footnote 2: to be accurate, this interval covers only 99.73% of the possible values, but it is assumed to be the whole spectrum of possible values).

Before each new episode of a robustifying training, the new value of the non-nominality (either latency or one of the uncertainties) was sampled from a uniform distribution over its domain and kept constant during the entire episode. In each case, four different values were tried for the bounds of the range of possibilities:

1. Latency bounds, in [ms]

2. Estimation uncertainty bounds, in [%]

3. Parametric uncertainty bounds, in [%]
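
The per-episode sampling of a non-nominality, and one way latency could be emulated, can be sketched as follows. The sketch assumes that latency is non-negative, that the uncertainties are symmetric around zero, and that latency acts as a pure delay of a signal sampled at 1 ms; the helper names and the bounds used in the example are hypothetical.

```python
import random
from collections import deque

def sample_episode_value(bound, rng, symmetric):
    """Uniform draw over [-bound, bound] (symmetric) or [0, bound]."""
    low = -bound if symmetric else 0.0
    return rng.uniform(low, bound)

class DelayLine:
    """Delays a signal by a fixed number of samples."""

    def __init__(self, delay_steps, initial=0.0):
        self.buffer = deque([initial] * delay_steps)

    def push(self, value):
        self.buffer.append(value)
        return self.buffer.popleft()

rng = random.Random(0)
latency_ms = sample_episode_value(5.0, rng, symmetric=False)   # held for one episode
uncertainty = sample_episode_value(3.0, rng, symmetric=True)   # held for one episode
line = DelayLine(delay_steps=2)
delayed = [line.push(a) for a in [1.0, 2.0, 3.0, 4.0]]         # [0.0, 0.0, 1.0, 2.0]
```

The sampled value would be drawn once before the episode starts and left unchanged until the episode ends.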

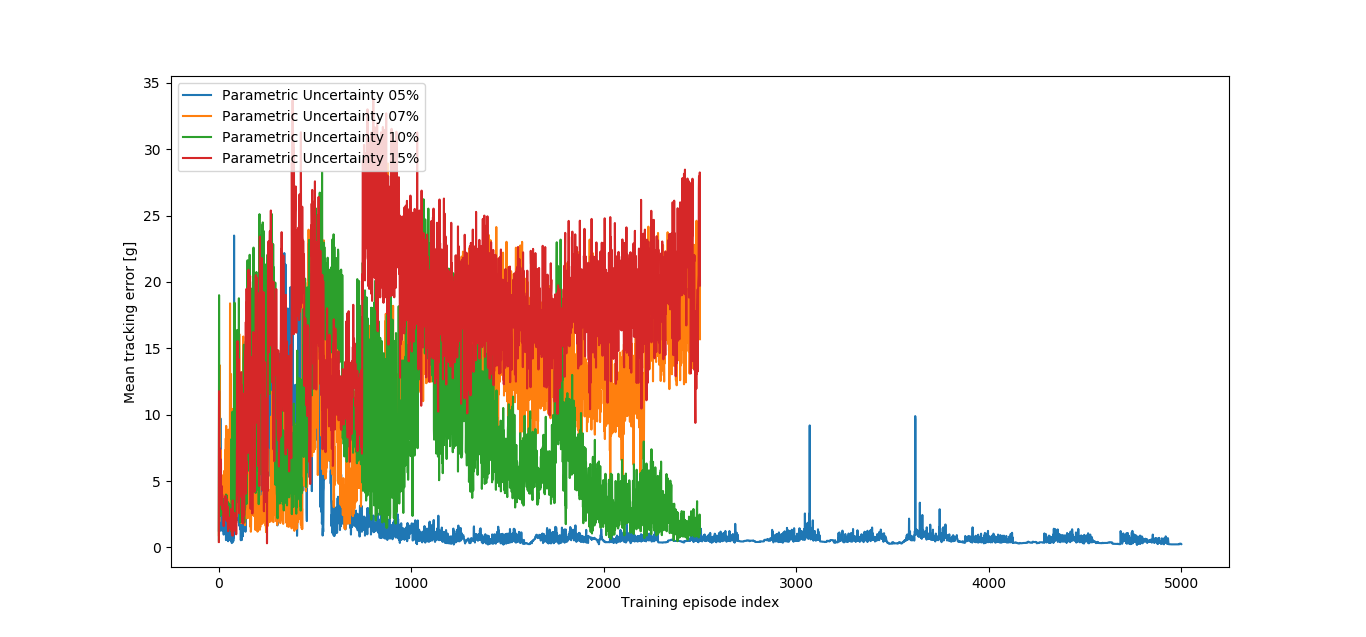

Values whose robustifying training had diverged after 2500 episodes were discarded. The remaining ones were run for a total of 5000 episodes (cf. section 5.4).

5 Results

5.1 Expected Results

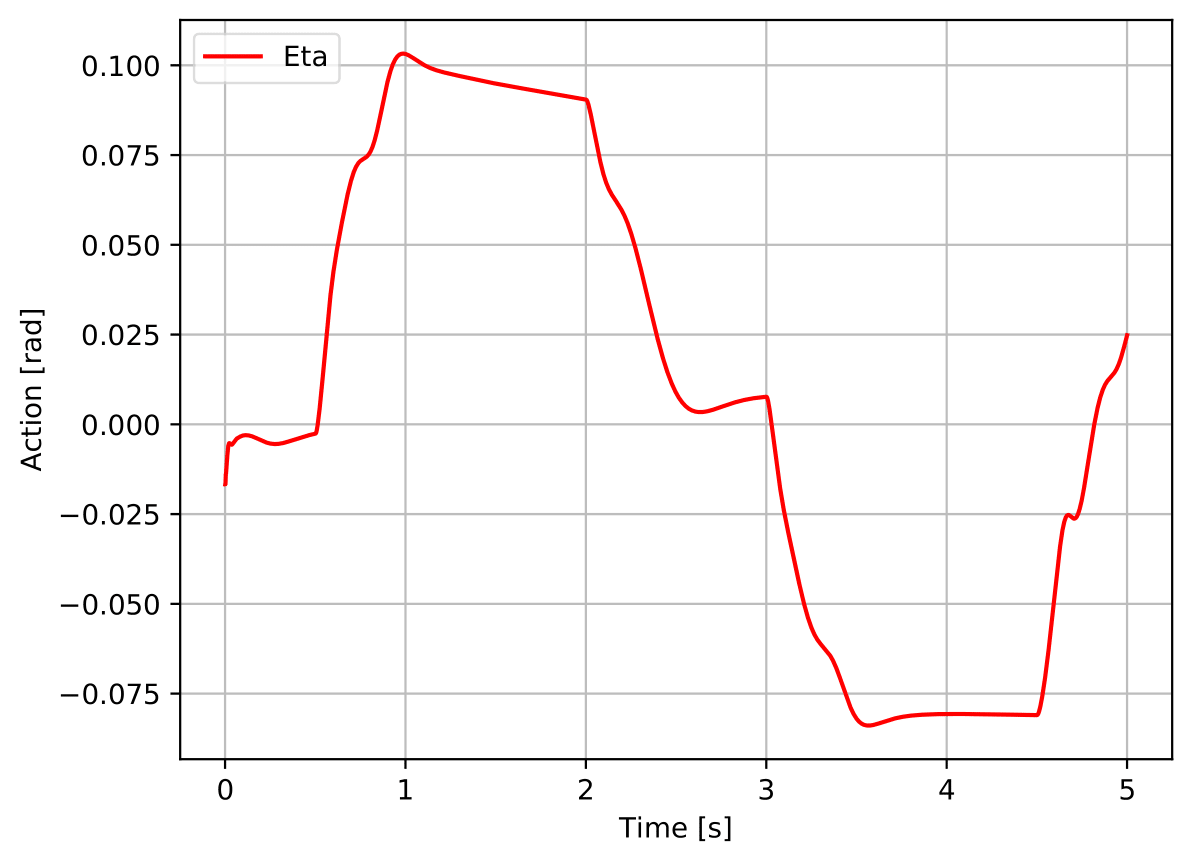

Empirically, it has been seen that agents that struggle to keep the actuation magnitude within its bounds (cf. table 1) are unable to achieve low error levels without increasing the level of noise, if they can do it at all. In other words, the only way of obtaining good tracking results with unbounded actions is to fall into a bang-bang control-like situation. Therefore, the best found agent will have to start by learning to use only bounded and smooth action values, which will then allow it to start decreasing the tracking error.

Notice that, while the tracking error and the noise measures are expected to tend to 0, the actuation magnitude is not, since that would mean that the agent had given up actuating in the environment. Hence, it is expected that, at some point in training, the latter stabilizes.

Moreover, the exploration strategy proposed in section 4.1.1 inserts some variance in the output of the policy neural network, meaning that the noise measures are also not expected to reach exactly 0.

5.2 Best Found Agent


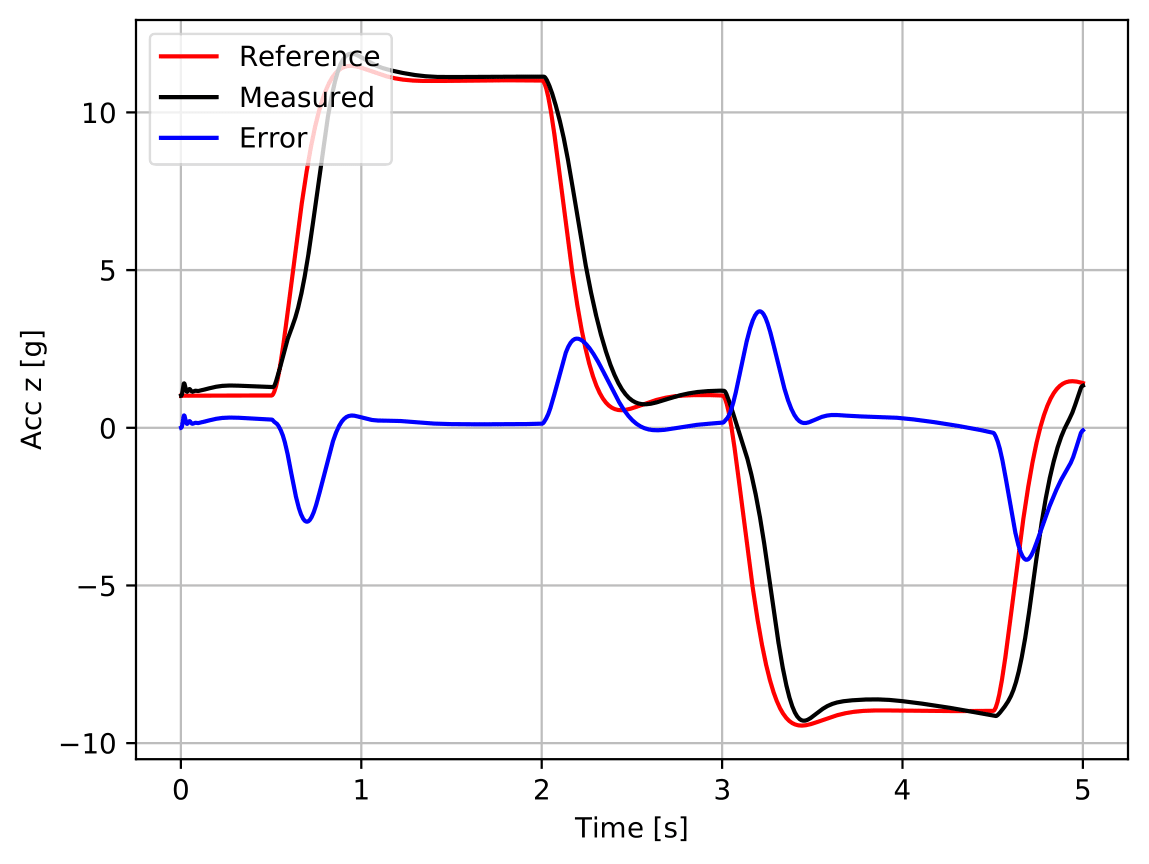

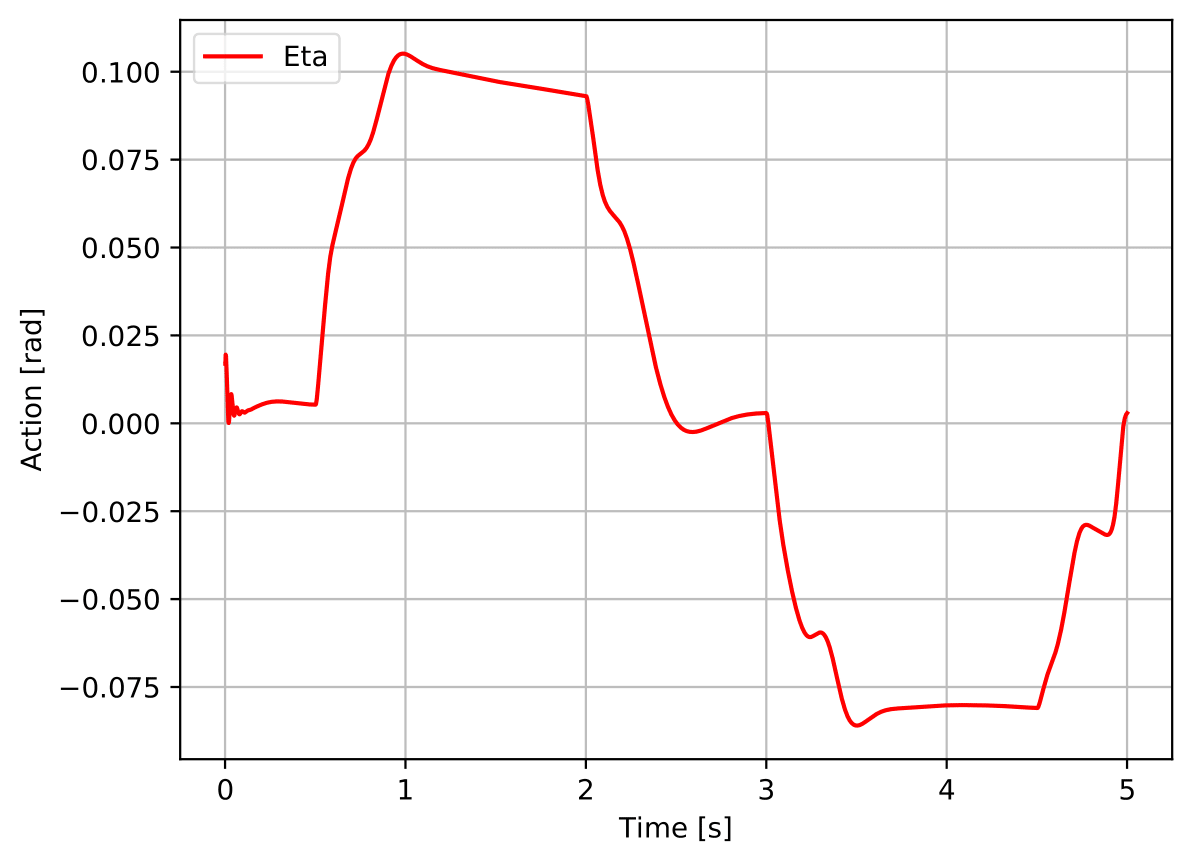

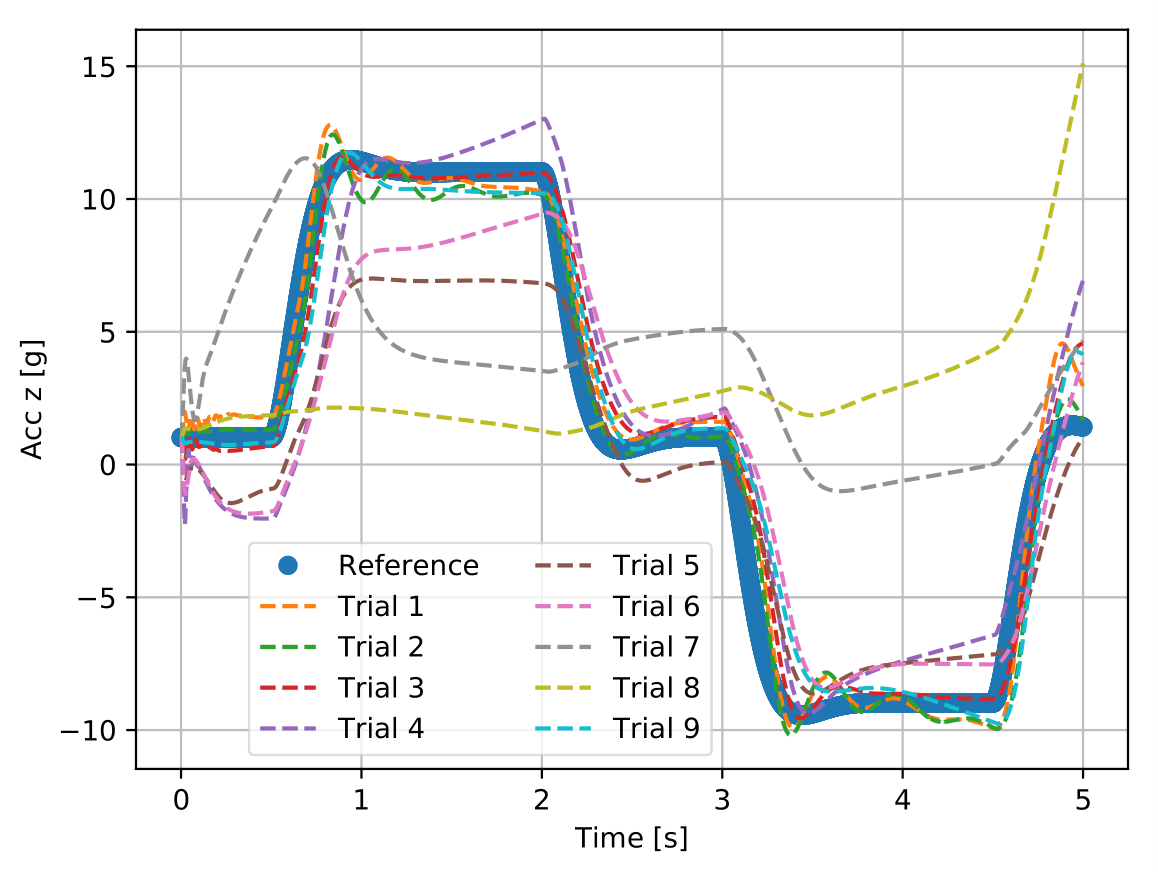

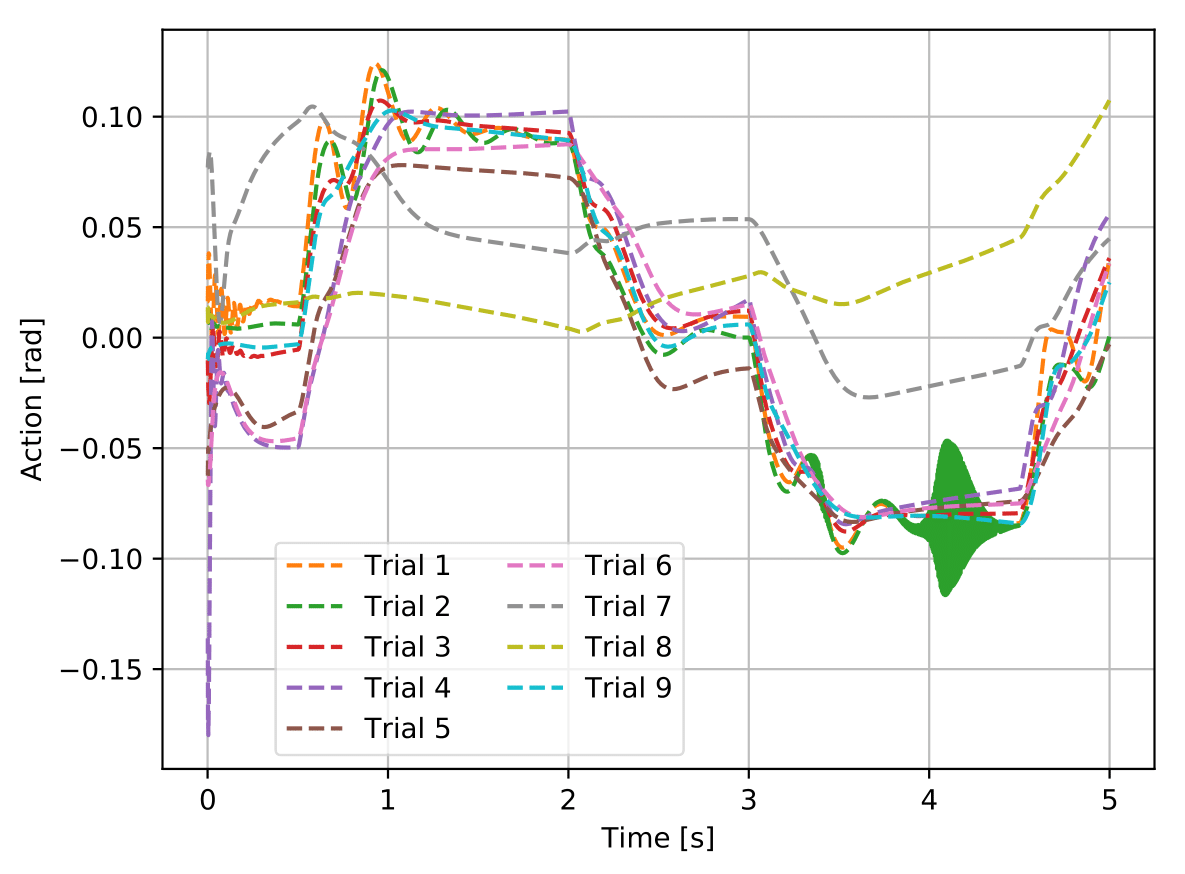

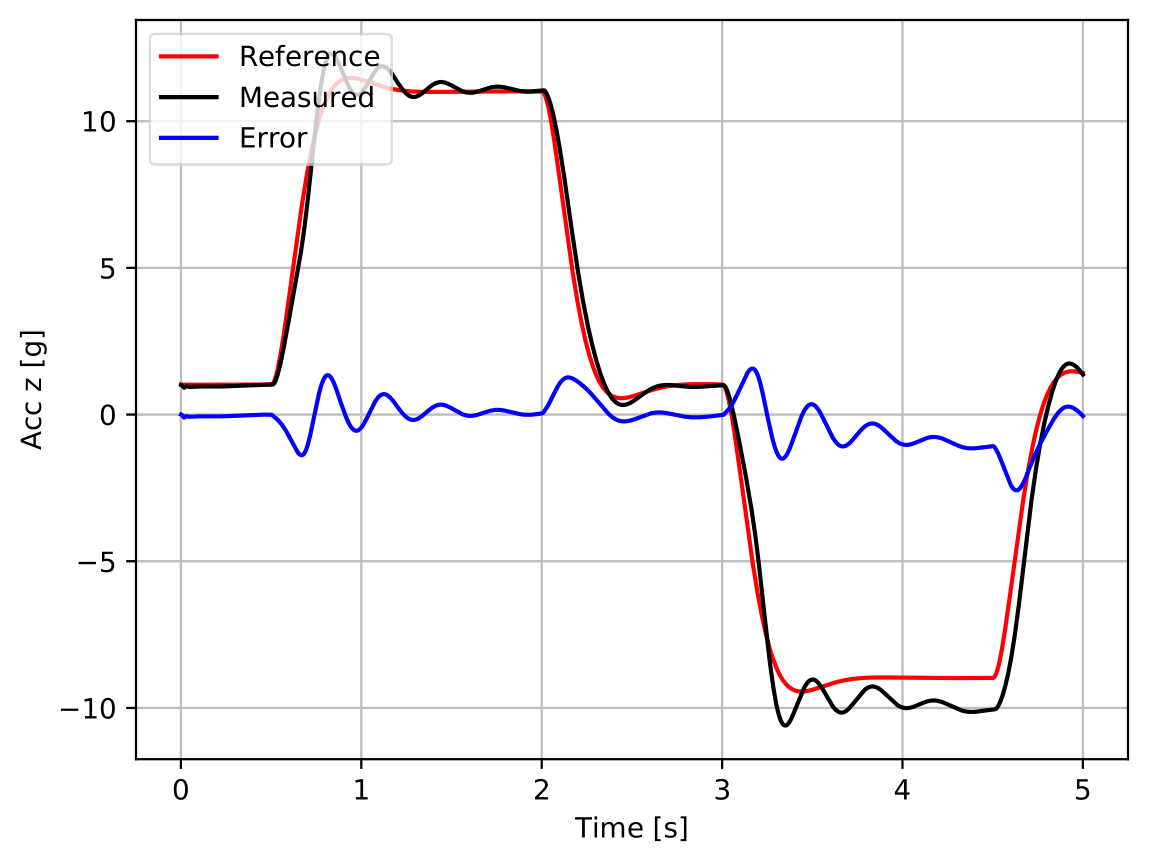

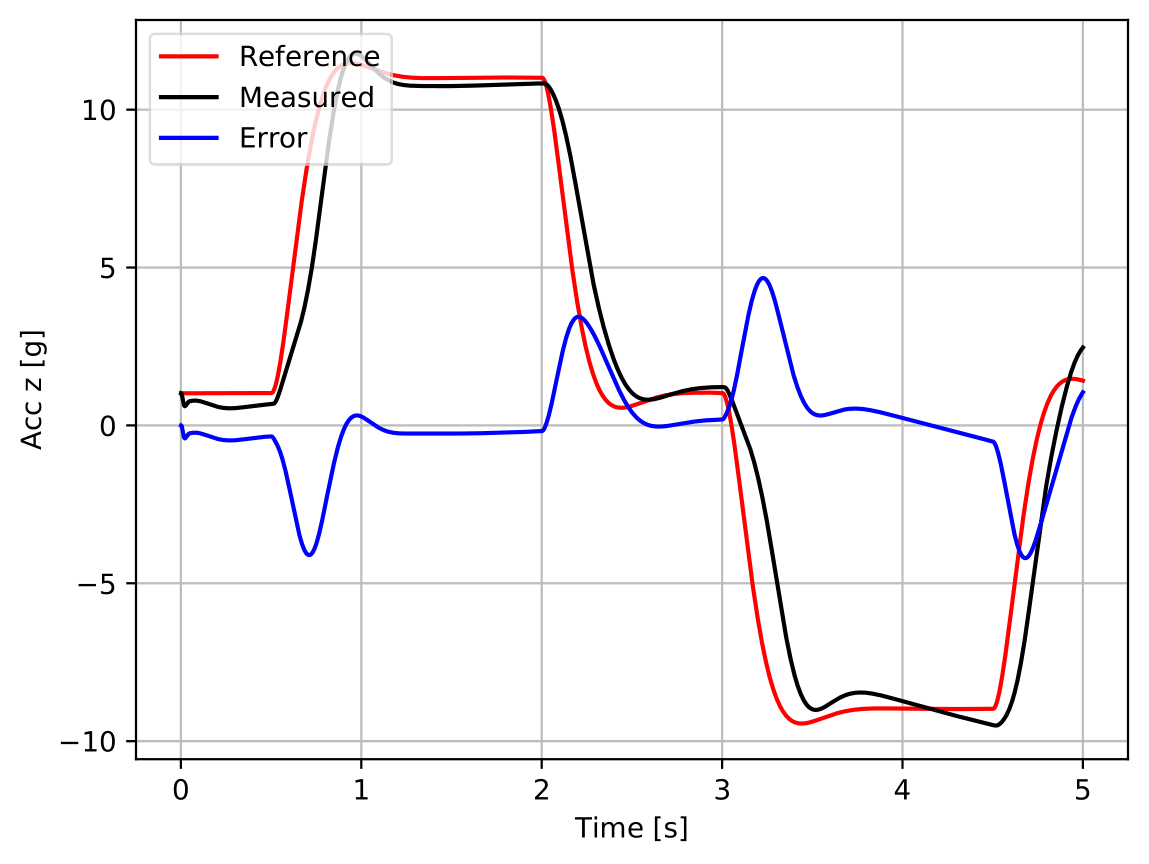

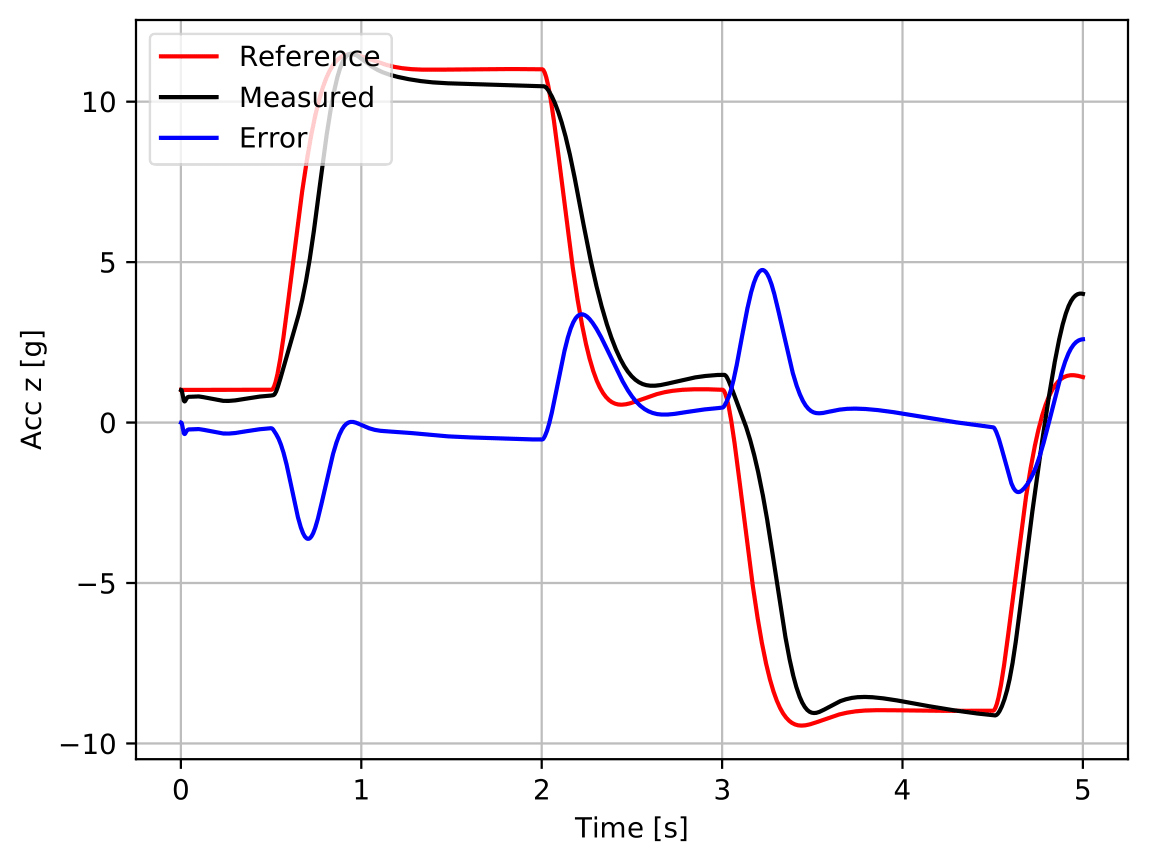

As figure 3 shows, the agent is clearly able to control the measured acceleration and to track the reference signal it is fed with, satisfying all the performance requirements defined in table 1 (cf. table 2).

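| Requirement | Achieved Value | Unit |
|---|---|---|
| Maximum stationary tracking error | 0.4214 | [g] |
| Overshoot | 8.480 | [%] |
| Actuation magnitude | 0.1052 | [rad] |
| Actuation noise (resting periods) | 0.04222 | [rad] |
| Actuation noise (transition periods) | 0.005513 | [rad] |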

5.3 Reproducibility Assessments


Figure 4 shows the tests of the best agents obtained during each of the nine trainings run to assess the reproducibility of the best found nominal agent (cf. section 5.2). None of them achieved the target performance, i.e., none meets all the performance criteria established in table 1. Although most of the trials ended far from the initial random policy, trials 7 and 8 present a very poor tracking performance and trial 2's action signal is not smooth.

5.4 Robustness Assessments

5.4.1 Latency

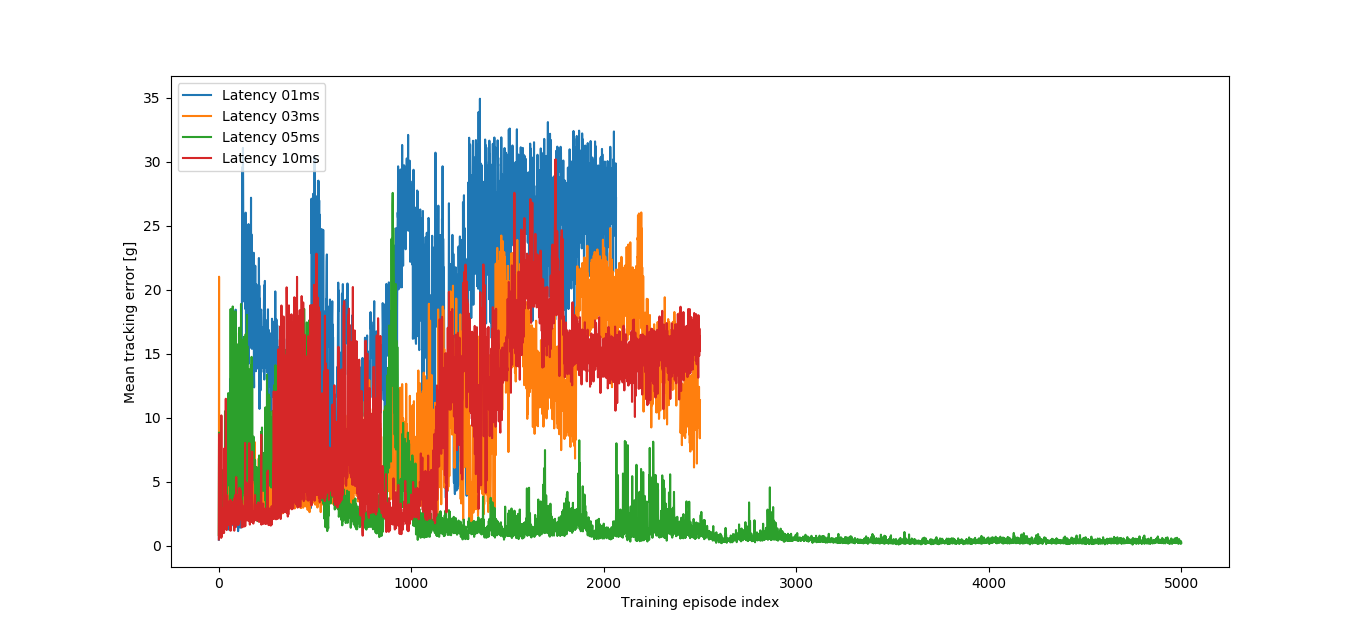

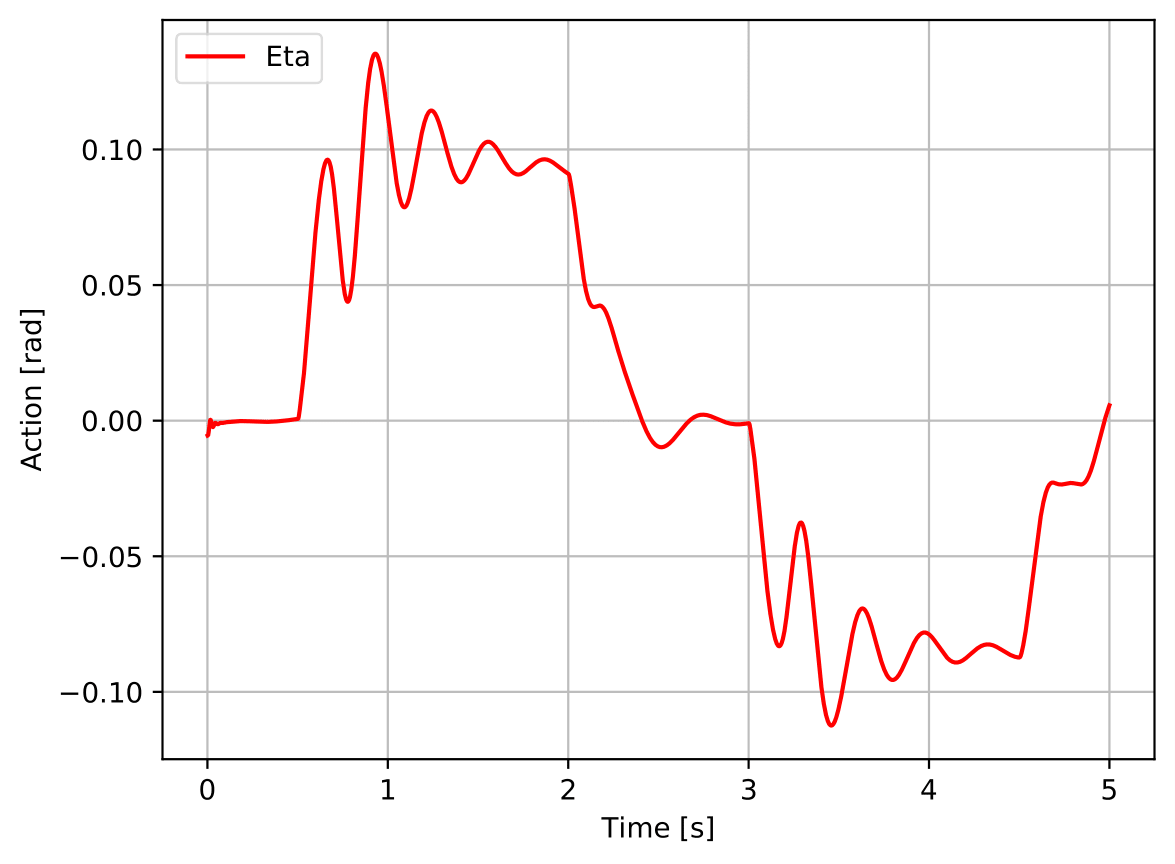

As figure 5 shows, of the four different latency bounds tried, only the 5ms one converged after 2500 episodes, meaning that it was the only robustifying training run for a total of 5000 episodes; the best agent found during it was defined as the Latency Robustified Agent. Its nominal performance (cf. figure 6) is damaged, with a less stable action signal and a poorer tracking performance.


Furthermore, its performance in environments with latency (0ms to 40ms) did not provide any enhancement, as its success rate is low in all quantities of interest (cf. table 3).

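| Requirement | Success % |
|---|---|
| Maximum stationary tracking error | 0.00 |
| Overshoot | 25.00 |
| Actuation magnitude | 0.00 |
| Actuation noise (resting periods) | 7.50 |
| Actuation noise (transition periods) | 5.00 |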

Since the Latency Robustified Agent was worse than the Nominal Agent in both nominal and non-nominal environments, it lost in both Performance and Robustness categories. Thus, it is possible to say, in general terms, that, concerning latency, the robustifying trainings failed.

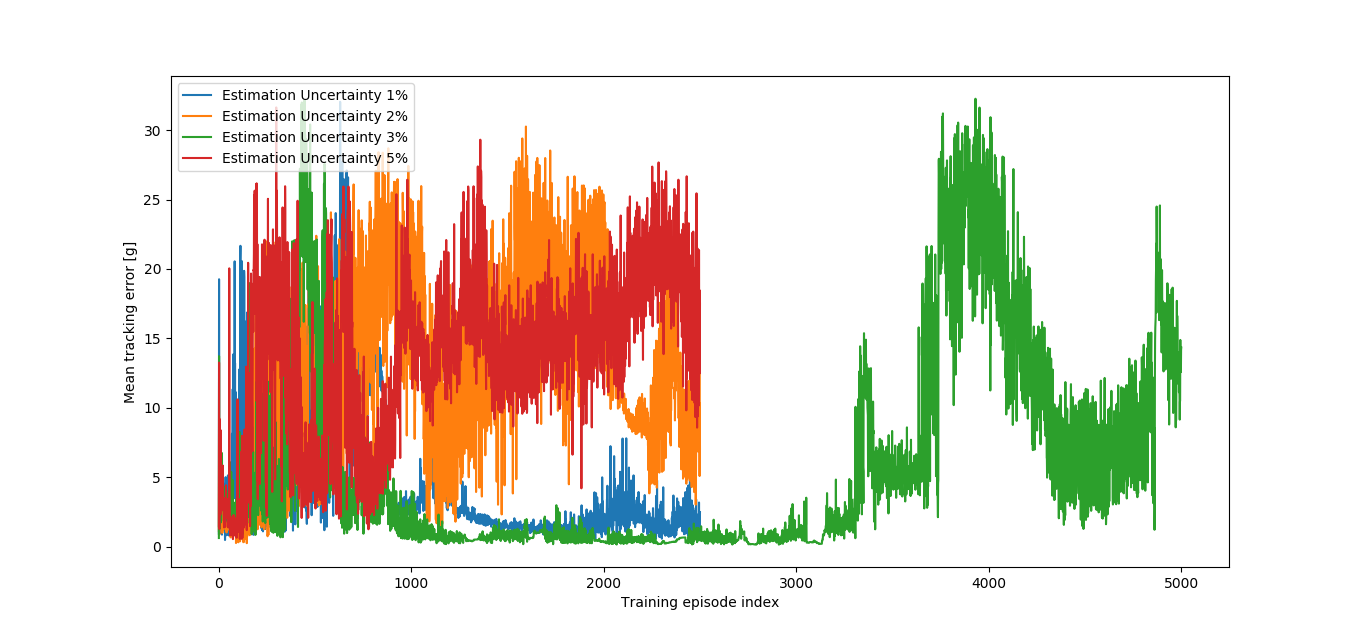

5.5 Estimation Uncertainty

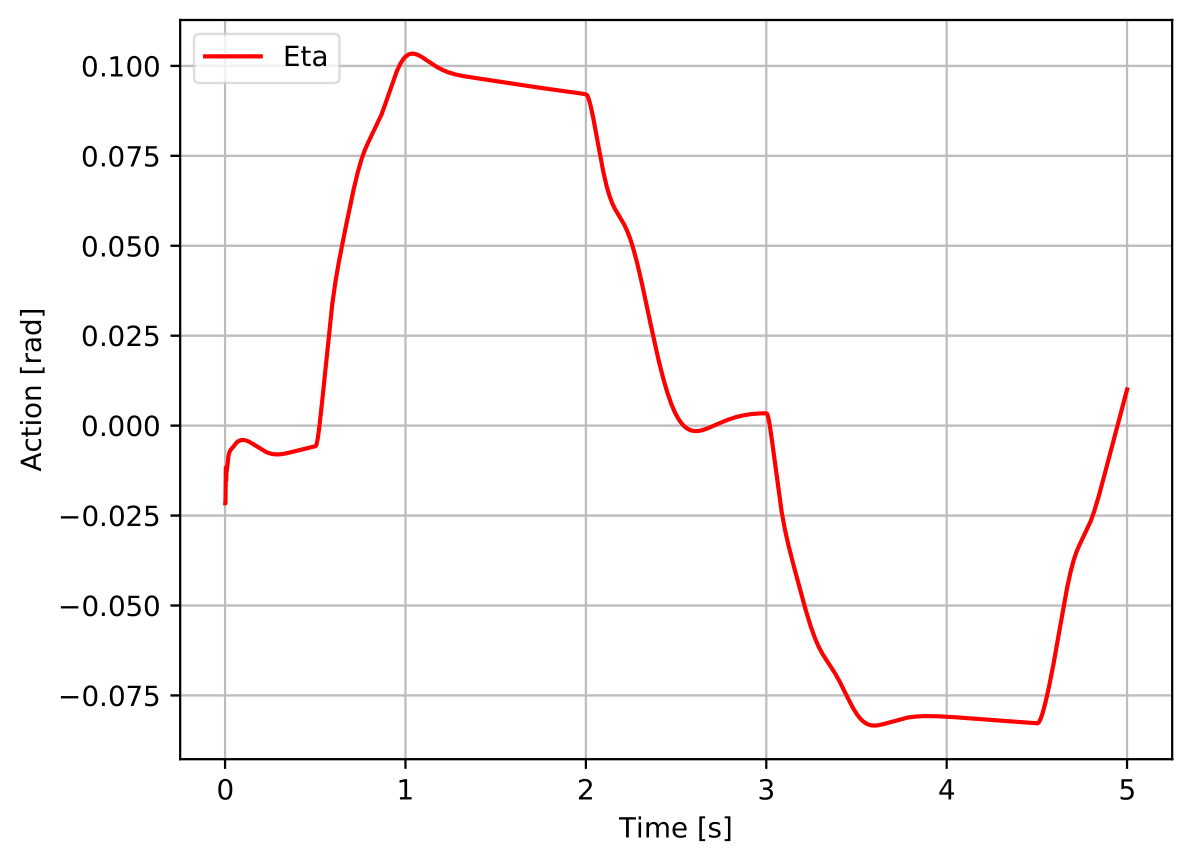

As figure 7 shows, of the four different uncertainty bounds tried, only the 3% one converged after 2500 episodes, meaning that it was the only robustifying training run for a total of 5000 episodes; the best agent found during it was defined as the Estimation Uncertainty Robustified Agent. Its nominal performance is improved, with a lower overshoot and a smoother action signal.


Furthermore, it enhanced the performance of the Nominal Agent in environments with estimation uncertainty (-10% to 10%), having achieved high success rates (cf. table 4).

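| Requirement | Success % |
|---|---|
| Maximum stationary tracking error | 60.38 |
| Overshoot | 66.39 |
| Actuation magnitude | 91.97 |
| Actuation noise (resting periods) | 77.51 |
| Actuation noise (transition periods) | 82.33 |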

Since the Estimation Uncertainty Robustified Agent was better than the Nominal Agent in both nominal and non-nominal environments, it won in both Performance and Robustness categories. Thus, it is possible to say, in general terms, that, concerning estimation uncertainty, the robustifying trainings succeeded.

5.6 Parametric Uncertainty

As figure 9 shows, of the four different uncertainty bounds tried, only the 5% one converged after 2500 episodes, meaning that it was the only robustifying training run for a total of 5000 episodes; the best agent found during it was defined as the Parametric Uncertainty Robustified Agent. Its nominal tracking performance is damaged, but the action signal is smoother (cf. figure 10).


Furthermore, it enhanced the performance of the Nominal Agent in environments with parametric uncertainty (-40% to 40%), having achieved high success rates (cf. table 5).

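| Requirement | Success % |
|---|---|
| Maximum stationary tracking error | 61.55 |
| Overshoot | 83.16 |
| Actuation magnitude | 98.28 |
| Actuation noise (resting periods) | 100.00 |
| Actuation noise (transition periods) | 99.31 |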

Since the Parametric Uncertainty Robustified Agent was better than the Nominal Agent in non-nominal environments, it won in the Robustness categories, remaining acceptably the same in terms of performance in nominal environments. Thus, it is possible to say, in general terms, that, concerning parametric uncertainty, the robustifying trainings succeeded.

6 Achievements

The proposed algorithm is considered successful, since all the objectives established in section 1 were accomplished, which confirms the motivations put forth there. Three main achievements must be highlighted:

1.

2. the ability of SER (cf. section 4.1.4) to boost a performance that had previously converged suboptimally;

3. the very sound rates of success in overtaking the performance achieved by the best found nominal agent (cf. sections 5.5 and 5.6).

RL has proven to be a promising learning framework for real-life applications, where the concept of Robustifying Trainings can bridge the gap between training the agent in the nominal environment and deploying it in reality.

7 Future Work

The first direction of future work is to expand the current task to the control of the whole nonlinear flight dynamics of the GSAM, instead of solely the longitudinal one. Such an expansion would require both (i) straightforward modifications to the code and to the training methodology and (ii) overcoming some conceptual challenges concerning the expansion of the reward function and of the exploration strategy.

Secondly, it would be interesting to investigate how to tackle the main challenges faced during the design of the proposed algorithm, namely (i) the time-consuming reward engineering process, (ii) the definition of the exploration strategy and (iii) the reproducibility issue.

References

- [1] Marcin Andrychowicz, Filip Wolski, Alex Ray, Jonas Schneider, Rachel Fong, Peter Welinder, Bob McGrew, Josh Tobin, Pieter Abbeel, and Wojciech Zaremba. Hindsight Experience Replay. In Advances in Neural Information Processing Systems, 2017.

- [2] Bernardo Cortez. Reinforcement Learning for Robust Missile Autopilot Design. Technical report, Instituto Superior Técnico, Lisboa, December 2020.

- [3] Scott Fujimoto, Herke Van Hoof, and David Meger. Addressing Function Approximation Error in Actor-Critic Methods. 35th International Conference on Machine Learning, ICML 2018, 4:2587–2601, 2018.

- [4] Shixiang Gu, Timothy Lillicrap, Zoubin Ghahramani, Richard E. Turner, and Sergey Levine. Q-Prop: Sample-efficient policy gradient with an off-policy critic. 5th International Conference on Learning Representations, ICLR 2017 - Conference Track Proceedings, pages 1–13, 2019.

- [5] Shixiang Gu, Timothy Lillicrap, Zoubin Ghahramani, Richard E. Turner, Bernhard Schölkopf, and Sergey Levine. Interpolated policy gradient: Merging on-policy and off-policy gradient estimation for deep reinforcement learning. Advances in Neural Information Processing Systems, pages 3847–3856, 2017.

- [6] Tuomas Haarnoja, Haoran Tang, Pieter Abbeel, and Sergey Levine. Reinforcement learning with deep energy-based policies. 34th International Conference on Machine Learning, ICML 2017, 3:2171–2186, 2017.

- [7] Tuomas Haarnoja, Aurick Zhou, Pieter Abbeel, and Sergey Levine. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. 35th International Conference on Machine Learning, ICML 2018, 5:2976–2989, 2018.

- [8] Dmitry Kangin and Nicolas Pugeault. On-Policy Trust Region Policy Optimisation with Replay Buffers. pages 1–13, 2019.

- [9] Timothy P. Lillicrap, Jonathan J. Hunt, Alexander Pritzel, Nicolas Heess, Tom Erez, Yuval Tassa, David Silver, and Daan Wierstra. Continuous control with deep reinforcement learning. 4th International Conference on Learning Representations, ICLR 2016 - Conference Track Proceedings, 2016.

- [10] James Martens and Roger Grosse. Optimizing neural networks with Kronecker-factored approximate curvature. 32nd International Conference on Machine Learning, ICML 2015, 3:2398–2407, 2015.

- [11] Ofir Nachum, Mohammad Norouzi, George Tucker, and Dale Schuurmans. Smoothed action value functions for learning Gaussian policies. 35th International Conference on Machine Learning, ICML 2018, 8:5941–5953, 2018.

- [12] Ofir Nachum, Mohammad Norouzi, Kelvin Xu, and Dale Schuurmans. Trust-PCL: An off-policy trust region method for continuous control. 6th International Conference on Learning Representations, ICLR 2018 - Conference Track Proceedings, pages 1–14, 2018.

- [13] Karthik Narasimhan, Tejas D Kulkarni, and Regina Barzilay. Language Understanding for Text-based Games using Deep Reinforcement Learning. Technical report, Massachusetts Institute of Technology, 2015.

- [14] Brendan O’Donoghue, Rémi Munos, Koray Kavukcuoglu, and Volodymyr Mnih. Combining policy gradient and q-learning. 5th International Conference on Learning Representations, ICLR 2017 - Conference Track Proceedings, pages 1–15, 2019.

- [15] Florian Peter. Nonlinear and Adaptive Missile Autopilot Design. PhD thesis, Technische Universität München, Munich, May 2018.

- [16] Tom Schaul, John Quan, Ioannis Antonoglou, and David Silver. Prioritized experience replay. 4th International Conference on Learning Representations, ICLR 2016 - Conference Track Proceedings, pages 1–21, 2016.

- [17] John Schulman, Sergey Levine, Philipp Moritz, Michael Jordan, and Pieter Abbeel. Trust region policy optimization. 32nd International Conference on Machine Learning, ICML 2015, 3:1889–1897, 2015.

- [18] John Schulman, Philipp Moritz, Sergey Levine, Michael I. Jordan, and Pieter Abbeel. High-dimensional continuous control using generalized advantage estimation. 4th International Conference on Learning Representations, ICLR 2016 - Conference Track Proceedings, pages 1–14, 2016.

- [19] John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal Policy Optimization Algorithms. pages 1–12, 2017.

- [20] Richard S. Sutton and Andrew G. Barto. Reinforcement Learning: An Introduction. The MIT Press, 2nd edition, 2018.

- [21] Yuhuai Wu, Elman Mansimov, Shun Liao, Roger Grosse, and Jimmy Ba. Scalable trust-region method for deep reinforcement learning using Kronecker-factored approximation. Advances in Neural Information Processing Systems, pages 5280–5289, 2017.