Reinforcement Learning for Selective Key Applications in Power Systems: Recent Advances and Future Challenges

Abstract

With large-scale integration of renewable generation and distributed energy resources, modern power systems are confronted with new operational challenges, such as growing complexity, increasing uncertainty, and aggravating volatility. Meanwhile, more and more data are becoming available owing to the widespread deployment of smart meters, smart sensors, and upgraded communication networks. As a result, data-driven control techniques, especially reinforcement learning (RL), have attracted surging attention in recent years. This paper provides a comprehensive review of various RL techniques and how they can be applied to decision-making and control in power systems. In particular, we select three key applications, i.e., frequency regulation, voltage control, and energy management, as examples to illustrate RL-based models and solutions. We then present the critical issues in the application of RL, i.e., safety, robustness, scalability, and data. Several potential future directions are discussed as well.

Index Terms:

Frequency regulation, voltage control, energy management, reinforcement learning, smart grid.Nomenclature

-A Notations

- ,

-

Action space, action.

-

, the set of lines connecting buses.

-

Expected total discounted reward.

-

, the set of buses in a power network or the set of agents.

-

Neural network with input and parameter .

-

Observation.

-

Transition probability.

-

-function (or -value) under policy .

-

Reward.

- ,

-

State space, state.

-

, the discrete time horizon.

-

Discounting factor.

-

The set of probability distributions over set .

-

The time interval in .

- ,

-

Policy, optimal policy.

-B Abbreviations

- A3C

-

Asynchronous Advantaged Actor Critic.

- ACE

-

Area Control Error.

- AMI

-

Advanced Metering Infrastructure.

- ANN

-

Artificial Neural Network.

- DDPG

-

Deep Deterministic Policy Gradient.

- DER

-

Distributed Energy Resource.

- DP

-

Dynamic Programming.

- (D)RL

-

(Deep) Reinforcement Learning.

- DQN

-

Deep Network.

- EMS

-

Energy Management System.

- EV

-

Electric Vehicle.

- FR

-

Frequency Regulation.

- HVAC

-

Heating, Ventilation, and Air Conditioning.

- IES

-

Integrated Energy System.

- LSPI

-

Least-Squares Policy Iteration.

- LSTM

-

Long-Short Term Memory.

- MDP

-

Markov Decision Process.

- OLTC

-

On-Load Tap Changing Transformer.

- OPF

-

Optimal Power Flow.

- PMU

-

Phasor Measurement Unit.

- PV

-

Photovoltaic.

- SAC

-

Soft Actor Critic.

- SCADA

-

Supervisory Control and Data Acquisition.

- SVC

-

Static Var (Reactive Power) Compensator.

- TD

-

Temporal Difference.

- UCRL

-

Upper Confidence Reinforcement Learning.

I Introduction

Electric power systems are undergoing an architectural transformation to become more sustainable, distributed, dynamic, intelligent, and open. On the one hand, the proliferation of renewable generation and distributed energy resources (DERs), including solar energy, wind power, energy storage, responsive demands, electric vehicles (EVs), etc., creates severe operational challenges. On the other hand, the deployment of information, communication, and computing technologies throughout the electric system, such as phasor measurement units (PMUs), advanced metering infrastructures (AMIs), and wide area monitoring systems (WAMS) [1], has been growing rapidly in recent decades. It evolves traditional power systems towards smart grids and offers an unprecedented opportunity to overcome these challenges through real-time data-driven monitoring and control at scale. This will require new advanced decision-making and control techniques to manage

-

1)

Growing complexity. The deployment of massive DERs and the interconnection of regional power grids dramatically increase system operation complexity and make it difficult to obtain accurate system (dynamical) models.

-

2)

Increasing uncertainty. The rapid growth of renewable generation and responsive loads significantly increases uncertainty, especially when human users are involved, jeopardizing predictions and system reliability.

-

3)

Aggravating volatility. The high penetration of power electronics converter-interfaced devices reduces system inertia, which leads to faster dynamics and necessitates advanced controllers with online adaptivity.

In particular, reinforcement learning (RL) [2], a prominent machine learning paradigm concerned with how agents take sequential actions in an uncertain interactive environment and learn from the feedback to optimize a specific performance, can play an important role in overcoming these challenges. Leveraging artificial neural networks (ANNs) for function approximation, deep RL (DRL) [3] is further developed to solve large-scale online decision problems. The most appealing virtue of (D)RL is its model-free nature, i.e., it makes decisions without explicitly estimating the underlying models. Hence, (D)RL has the potential to capture hard-to-model dynamics and could outperform model-based methods in highly complex tasks. Moreover, the data-driven nature of (D)RL allows it to adapt to real-time observations and perform well in uncertain dynamical environments. Over the past decade, (D)RL has achieved great success in a broad spectrum of applications, such as playing games [4], robotics [5], autonomous driving [6], clinical trials [7], etc.

Meanwhile, the application of RL in power system operation and control has attracted surging attention [8, 9, 10, 11]. RL-based decision-making mechanisms are envisioned to compensate for the limitations of existing model-based approaches and thus are promising to address the emerging challenges described above. This paper provides a review and survey on RL-based decision-making in smart grids. We will introduce various RL terminologies, exemplify how to apply RL to power systems, and discuss critical issues in their application. Compared with recent review articles [8, 9, 10, 11] on this subject, the main merits of this paper include

-

1)

We present a comprehensive and structural overview of the RL methodology, from basic concepts and theoretical fundamentals to state-of-the-art RL techniques.

-

2)

Three key applications are selected as examples to illustrate the overall procedure of applying RL to the control and decision-making in power systems, from modeling, solution, to numerical implementation.

-

3)

We discuss the critical challenges and future directions for applying RL to power system problems in depth.

In the rest of this paper, Section II presents a comprehensive overview of the RL fundamentals and the state-of-the-art RL techniques. Section III describes the application of RL to three critical power system problems, i.e., frequency regulation, voltage control, and energy management, where paradigmatic mathematical models are provided for illustration. Section IV summarizes the key issues of safety, robustness, scalability, and data, and then discusses several potential future directions. Lastly, we conclude in Section V.

II Preliminaries on Reinforcement Learning

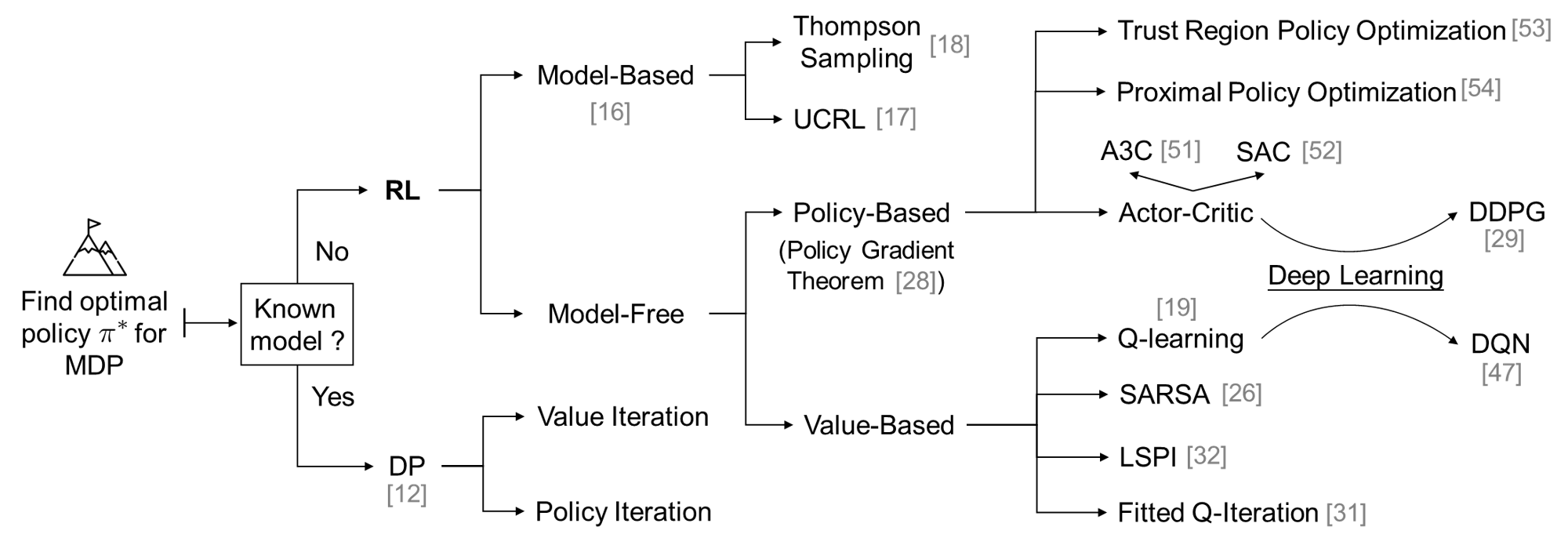

This section provides a comprehensive overview of the RL methodology. First, we set up the RL problem formulation and key concepts, such as -function and Bellman (Optimality) Equation. Then two categories of classical RL algorithms, i.e., value-based and policy-based, are introduced. With these fundamentals in place, we next present several state-of-the-art RL techniques, including DRL, deterministic policy gradient, modern actor-critic methods, multi-agent RL, etc. The overall structure of RL methodology with related literature is illustrated in Fig. 1.

II-A Fundamentals of Reinforcement Learning

RL is a branch of machine learning concerned with how an agent makes sequential decisions in an uncertain environment to maximize the cumulative reward. Mathematically, the decision-making problem is modeled as a Markov Decision Process (MDP), which is defined by state space , action space , the transition probability function that maps a state-action pair to a distribution on the state space, and lastly the reward function 111 A generic reward function is given by where the next state is also included as an argument, but there is no essential difference between the case with and the case with in algorithms and results. By marginalizing over next states according to the transition function , one can simply convert to [2].. The state space and action space can be either discrete or continuous. To simplify discussion, we focus on the discrete case below.

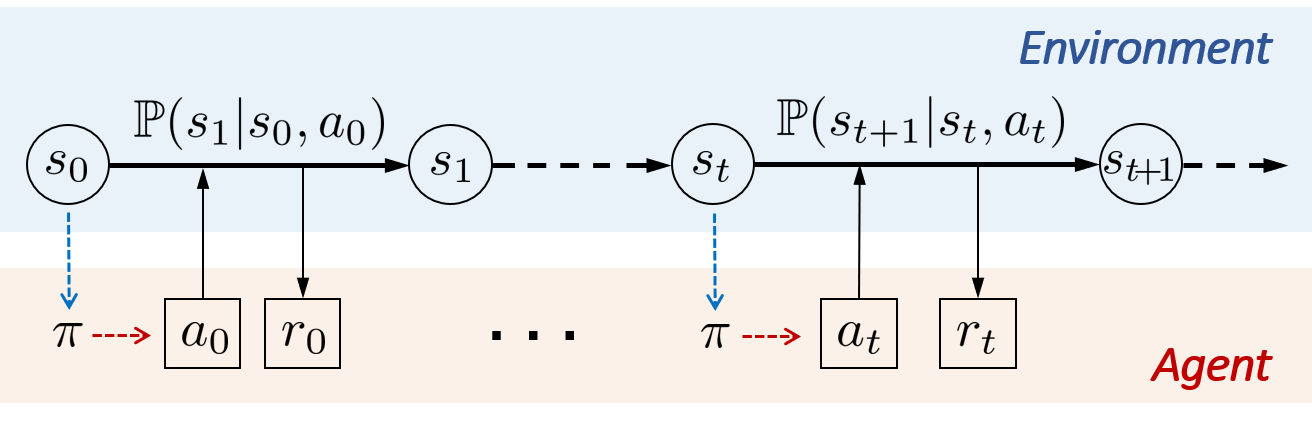

As illustrated in Fig. 2, in an MDP setting, the environment starts with an initial state . At each time , given current state , the agent chooses action and receives reward that depends on the current state-action pair , after which the next state is randomly generated from the transition probability . A policy for the agent is a map from the state to a distribution on the action space , which rules what action to take given a certain state .222 is a stochastic policy, and it becomes a deterministic policy when the probability distribution is a singleton for all . The agent aims to find an optimal policy (may not be unique) that maximizes the expected infinite horizon discounted reward :

| (1) |

where the first expectation means that is drawn from an initial state distribution , and the second expectation means that the action is taken according to the policy . Parameter is the discounting factor that penalizes the rewards in the future.

In the MDP framework, the so-called “model” specifically refers to the reward function and the transition probability . Accordingly, it leads to two different problem settings:

-

•

When the model is known, one can directly solve for an optimal policy by Dynamic Programming (DP) [12].

-

•

When the model is unknown, the agent learns an optimal policy based on the past observations from interacting with the environment, which is the problem of RL.

Since DP lays the foundation for RL algorithms, we first consider the case with a known model and introduce the basic ideas of finding an optimal policy with respect to (1). The crux is the concept of -function together with the Bellman Equation. The -function for a given policy is defined as

| (2) |

which is the expected cumulative reward when the initial state is , the initial action is , and all the subsequent actions are chosen according to policy . The -function satisfies the following Bellman Equation: ,

| (3) |

where the expectation denotes that the next state is drawn from , and the next action is drawn from . Here, it is helpful to think of the -function as a large table or vector filled with -values . The Bellman Equation (3) indicates a recursive relation that each -value equals the immediate reward plus the discounted future value. Computing the -function for a given policy is called policy evaluation, which can be done by simply solving a set of linear equations when the model, i.e., and , is known.

The -function associated with an optimal policy for (1) is called an optimal -function and denoted as . The key to find is that the optimal -function must be the unique solution to the Bellman Optimality Equation (4): ,

| (4) |

Interested readers are referred to textbook [12, Sec. 1.2] on why this is true. Based on the Bellman Optimality Equation (4), the optimal -function and an optimal policy can be solved using DP or linear programming [12, Sec. 2.4]. In particular, policy iteration and value iteration are two classic DP algorithms. See [2, Chapter 4] for details.

Remark 1.

(Modeling Issues with MDP). MDP is a generic framework to model sequential decision-making problems and is the basis for RL algorithms. However, several issues deserve attention when modeling power system control problems in the MDP framework.

-

1)

At the heart of MDP is the Markov property that the distribution of future states depends only on the present state and action, i.e., . In other words, given the present, the future does not depend on the past. Then for a specific control problem, one needs to check whether the choices of state and action satisfy the Markov property. A general guideline is to include all necessary known information in the enlarged state, known as state augmentation [12], to maintain the Markov property, however, at the cost of complexity.

-

2)

Most classical MDP theories and RL algorithms are based on discrete-time transitions, but many power system control problems follow continuous-time dynamics, such as frequency regulation. To fit the MDP framework, continuous-time dynamics are generally discretized with a proper temporal resolution, which is a common issue for digital control systems, and there are well-established frameworks to deal with it. Besides, there are RL variants that are built directly on continuous-time dynamics, such as integral RL [13].

-

3)

Many MDP/RL methods assume time-homogeneous state transitions and rewards. But in power systems, there are various time-varying exogenous inputs and disturbances, making the state transitions not time-homogeneous. This is an important problem that has not been adequately explored in the existing power literature and needs further study. Nonstationary MDP [14] and related RL algorithms [15] could be the potential directions.

In addition, the issues of continuous state/action spaces and partial observability for MDP modeling will be discussed later.

II-B Classical Reinforcement Learning Algorithms

This subsection considers the RL setting when the environment model is unknown, and presents classical RL algorithms for finding the optimal policy . As shown in Fig. 1, RL algorithms can be divided into two categories, i.e., model-based and model-free. “Model-based” refers to the RL algorithms that explicitly estimate and online update an environment model from past observations and make decisions based on this model [16], such as upper confidence RL (UCRL) [17] and Thompson sampling [18]. In contrast, “model-free” means that the RL algorithms directly search for optimal policies without estimating the environment model. Model-free RL algorithms are mainly categorized into two types, i.e., value-based and policy-based. Generally, value-based methods are preferred for modest-scale RL problems with finite state/action space as they do not assume a policy class and have a strong convergence guarantee. The convergence of value-based methods to an optimal -function in the tabular setting (without function approximation) was proven back in the 1990s [19]. In contrast, policy-based methods are more efficient for problems with high dimensions or continuous action/state space. But they are known to suffer from various convergence issues, e.g., local optimum, high variance, etc. The convergence of policy-based methods with restricted policy classes to the global optimum has been shown in a recent work [20] under the tabular policy parameterization.

Remark 2.

(Exploration vs. Exploitation). A fundamental problem faced by RL algorithms is the dilemma between exploration and exploitation. Good performance requires taking actions adaptively to strike an effective balance between 1) exploring poorly-understood actions to gather new information that may improve future reward, and 2) exploiting what is known for decision-making to maximize immediate reward. Generally, it is natural to achieve exploitation with the goal of reward maximization, while different RL algorithms encourage exploration in different ways. For value-based RL algorithms, -greedy is commonly used with a probability of to explore random actions. In policy-based methods, exploration is usually achieved by injecting random perturbation to the actions, adopting a stochastic policy, or adding an entropy term to the objective, etc.

Before presenting classical RL methods, we introduce a key algorithm, Temporal-Difference (TD) learning [21], for policy evaluation when the model is unknown. TD learning is central to both value-based and policy-based RL algorithms. It learns the -function for a given policy from episodes of experience. Here, an “episode” refers to a state-action-reward trajectory over time until terminated. Specifically, TD learning maintains a -function for all state-action pairs and updates it upon a new observation by

| (5) | ||||

where is the step size. Readers might observe that the second term in (5) is very similar to the Bellman Equation (3), which is exactly the rationale behind TD learning. Essentially, TD learning (5) is a stochastic approximation scheme for solving the Bellman Equation (3) [22], and can be shown to converge to the true under mild assumptions [21, 23].

1) Value-based RL algorithms directly learn the optimal -function , and the optimal (deterministic) policy is a byproduct that can be retrieved by acting greedily, i.e., . Among many, -learning [24, 19] is perhaps the most popular value-based RL algorithm. Similar to TD-learning, -learning maintains a -function and updates it towards the optimal -function based on episodes of experience. Specifically, at each time , given current state , the agent chooses action according to a certain behavior policy.333Such a behavior policy, also called exploratory policy, can be arbitrary as long as it visits all the states and actions sufficiently often. Upon observing the outcome , -learning updates the -function by

| (6) | ||||

The rationale behind (6) is that the -learning algorithm (6) is essentially a stochastic approximation scheme for solving the Bellman Optimality Equation (4), and one can show the convergence to under mild assumptions [19, 25].

SARSA444In some literature, SARSA is also called -learning. [26] is another classical value-based RL algorithm, whose name comes from the experience sequence . SARSA is actually an on-policy variant of -learning. The major difference is that SARSA takes actions according to the target policy (typically -greedy based on the current -function) rather than any arbitrary behavior policy in -learning. The following remark distinguishes and compares “on-policy” and “off-policy” RL algorithms.

Remark 3.

(On-Policy vs. Off-Policy). On-policy RL methods continuously improve a policy (called target policy) and implement this policy to generate episodes for algorithm training. In contrast, off-policy RL methods learn a target policy based on the episodes that are generated by following a different policy (called behavior policy) rather than the target policy itself. In short, “on” and “off” indicate whether the training samples are generated by following the target policy or not. For example, -learning is an off-policy RL method as the episodes used in training can be produced by any policies, and the actor-critic algorithm described below is on-policy.555There are also off-policy variants of the actor-critic algorithm, e.g., [27]. For power system applications, control policies that are not well-trained are generally not allowed to be implemented in real-world power grids for the sake of safety. Thus off-policy RL is preferred when high-fidelity simulators are unavailable since it can learn from the vast amount of operational data generated by incumbent controllers. Off-policy RL is also relatively easy to provide a safety guarantee due to the flexibility in choosing the behavior policies, but it is known to suffer from slower convergence and higher sample complexity.

2) Policy-based RL algorithms restrict the optimal policy search to a policy class that is parameterized as with the parameter . With this parameterization, the objective (1) can be rewritten as a function of the policy parameter, i.e., , and the RL problem is reformulated as an optimization problem (7) that aims to find the optimal :

| (7) |

To solve (7), a straightforward idea is to employ the gradient ascent method, i.e., , where is the step size. However, computing the gradient was supposed to be intrinsically hard as the environment model is unknown. Policy Gradient Theorem [28] is a big breakthrough in addressing the gradient computation issue. This theorem shows that the policy gradient can be simply expressed as

| (8) |

Here, is the on-policy state distribution [2, Chapter 9.2], which denotes the fraction of time steps spent in each state . Equation (8) is for a stochastic policy , while the version of policy gradient theorem for a deterministic policy [29] will be discussed later.

The policy gradient theorem provides a highway to estimate the gradient , which lays the foundation for policy-based RL algorithms. In particular, the actor-critic algorithm is a prominent and widely used architecture based on policy gradient. It comprises two eponymous components: 1) the “critic” is to estimate the -function , and 2) the “actor” conducts the gradient ascent based on (8). The following iterative scheme is an illustrative actor-critic example:

-

1)

Given state , take action , then observe the reward and next state ;

-

2)

(Critic) Update -function by TD learning;

-

3)

(Actor) Update policy parameter by

(9) -

4)

. Go to step 1) and repeat.

There are many actor-critic variants with different implementation manners, e.g., how is sampled from , how -function is updated, etc. See [2, Chapter 13] for more details.

We emphasize that the algorithms introduced above are far from complete. In the next subsections, we will introduce the state-of-the-art modern (D)RL techniques that are widely used in complex control tasks, especially for power system applications. At last, we close this subsection with the following two remarks on different RL settings.

Remark 4.

(Online RL vs. Batch RL). The algorithms introduced above are referred to as “online RL” that takes actions and updates the policies simultaneously. In contrast, there is another type of RL called “batch RL” [30], which decouples the sample data collection and policy training. Precisely, given a set of experience episodes generated by following any arbitrary behavior policies, batch RL fits the optimal -function or optimizes the target policy entirely based on this fixed sample dataset. Some classical batch RL algorithms include Fitted -Iteration [31], Least-Squares Policy Iteration (LSPI) [32], etc. For example, given a batch of transition experiences , Fitted -Iteration, which is seen as the batch version of -learning, aims to fit a parameterized -function by iterating the following two steps:

-

1)

Create the target -value for each sample in by

-

2)

Apply regression approaches to fit a new based on the training dataset .

The crucial advantages of batch RL lie in the stability and data-efficiency of the learning process by making the best use of the available sample datasets. However, because of relying entirely on a given dataset, the lack of exploration is one of the major problems of batch RL. To encourage exploration, batch RL typically iterates between exploratory sample collection and policy learning prior to application. Besides, pure batch RL, also referred to as “offline RL” [33], has attracted increasing recent attention, which completely ignores the exploration issue and aims to learn policies fully based on a static dataset with no online interaction. Offline RL assumes a sufficiently large and diverse dataset that adequately covers high-reward transitions for learning good policies and turns the RL problem into a supervised machine learning problem. See [34] for a tutorial of offline RL.

Remark 5.

(Passive RL, Active RL, and Inverse RL). The terminology “passive RL” typically refers to the RL setting where the agent acts by following a fixed policy and aims to learn how good this policy is from observations. It is analogous to the policy evaluation task, and TD learning is one of the representative algorithms of passive RL. In contrast, “active RL” allows the agent to update policies with the goal of finding an optimal policy, which is basically the standard RL setting that we described above. However, in some references, e.g., [35, 36], “active RL” has a completely different meaning and refers to the RL variant where the agent does not observe the reward unless it pays a query cost to account for the difficulty of collecting reward feedback. Thus, the agent chooses both an action and whether to observe the reward at each time. Another interesting RL variant is “inverse RL” [37, 38], in which the state-action sequence of an (expert) agent is given, and the task is to infer the reward function that this agent seeks to maximize. Inverse RL is motivated by various practical applications where the reward engineering is complex or expensive and one can observe an expert demonstrating the task to learn how to perform, e.g., autonomous driving.

II-C Fundamentals of Deep Learning

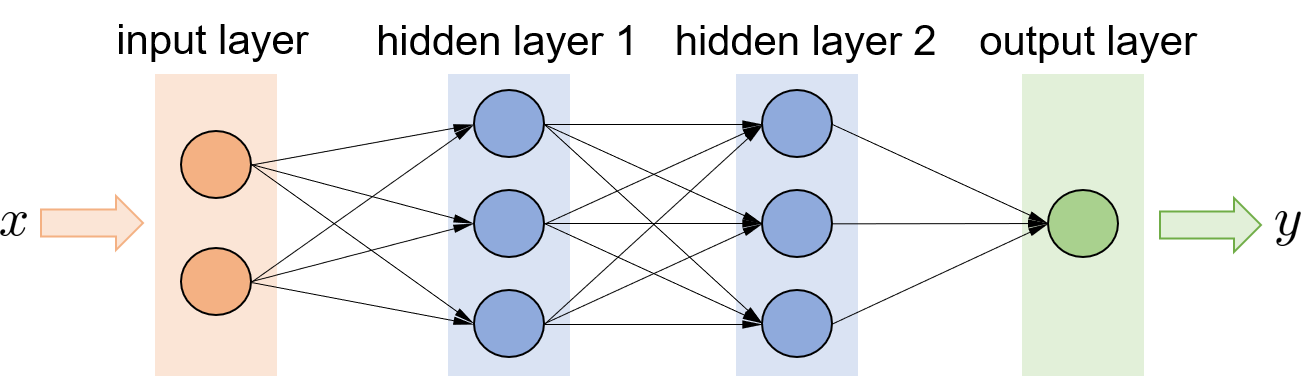

This subsection presents the fundamentals of deep learning to set the stage for the introduction of DRL. Deep learning refers to the machine learning technique that models with multi-layer ANNs. The history of ANNs dates back to 1940s [39], and it has received tremendous interests in the recent decade due to the booming of data technology and computing power, which allows efficient training of wider and deeper ANNs. Essentially, an ANN is an universal parameterized mapping from the input features to the outputs with the parameters . As illustrated in Fig. 3, an input feature vector is taken in by the input layer, then is processed through a series of hidden layers, and results in the output vector . Each hidden layer consists of a number of neurons that are the activation functions, e.g. linear, ReLU, or sigmoid [40]. Based on a sample dataset , the parameter can be optimized via regression. A landmark in training ANNs is the discovery of the back-propagation method, which offers an efficient way to compute the gradient of the loss function over [41]. Nevertheless, it is pretty tricky to train large-scale ANNs in practice, and article [41] provides an overview of the optimization algorithms and theory for training ANNs. Three typical classes of ANNs with different architectures are introduced below. See book [42] for details.

1) Convolutional Neural Networks (CNNs) are in the architecture of feed-forward neural networks (as shown in Fig. 3) and specialize in pattern detection, which are powerful for image analysis and computer vision tasks. The convolutional hidden layers are the basis at the heart of a CNN. Each neuron in a convolutional layer defines a small filter (or kernel) matrix of low dimension (e.g., ) and convolves with the input matrix of relatively high dimension, which leads to the output matrix . Here, denotes the convolution operator666Specifically, the convolution operation is performed by sliding the filter matrix across the input matrix and computing the corresponding element-wise multiplications, so that each element in matrix is the sum of the element-wise multiplications between and the associated sub-matrix of . See [42, Chapter 9] for a detailed definition of convolution., and the output is referred to as the feature map that is passed to the next layer. Besides, pooling layers are commonly used to reduce the dimension of the representation with the max or average pooling.

2) Recurrent Neural Networks (RNNs) specialize in processing long sequential inputs and tackling tasks with context spreading over time by leveraging a recurrent structure. Hence, RNNs achieve great success in the applications such as speech recognition and machine translation. RNNs process an input sequence one element at a time, and maintain in their hidden units a state vector that implicitly contains historical information about the past elements. Interestingly, the recurrence of RNNs is analogous to a dynamical system [42] and can be expressed as

| (10) |

where and are the input and output of the neural network at time step , and is the parameter for training. denotes the state stored in the hidden units at step and will be passed to the processing at step . In this way, implicitly covers all historical input information . Among many variants, long-short term memory (LSTM) network [44] is a special type of RNNs that excels at handling long-term dependencies and outperforms conventional RNNs by using special memory cells and gates.

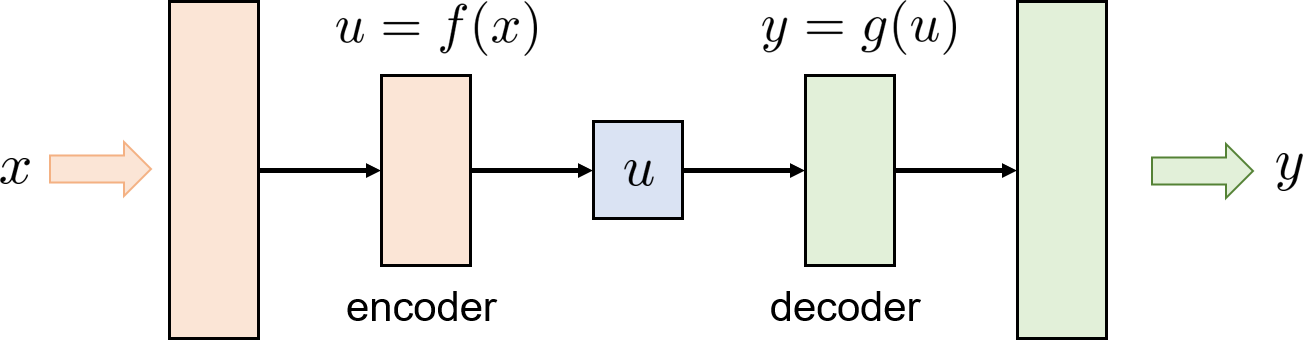

3) Autoencoders [45] are used to obtain a low-dimensional representation of high-dimensional inputs, which is similar to, but more general than, principal components analysis (PCA). As illustrated in Fig. 4, an autoencoder is in an hourglass-shape feed-forward network structure and consists of a encoder function and a decoder function . In particular, an autoencoder is trained to learn an approximation function with the loss function that penalizes the dissimilarity between the input and output . The bottleneck layer has a much smaller amount of neurons, and thus it is forced to form a compressed representation of the input .

II-D Deep Reinforcement Learning

For many practical problems, the state and action spaces are large or continuous, together with complex system dynamics. As a result, it is intractable for value-based RL to compute or store a gigantic -value table for all state-action pairs. To deal with this issue, function approximation methods are developed to approximate the -function with some parameterized function classes, such as linear function or polynomial function. As for policy-based RL, finding a capable policy class to achieve optimal control is also nontrivial in high-dimensional complex tasks. Driven by the advances of deep learning, DRL that leverages ANNs for function approximation or policy parameterization is becoming increasingly popular. Precisely, DRL can use ANNs to 1) approximate the -function with a -network , and 2) parameterize the policy with the policy network .

1) -Function Approximation. -network can be used to approximate the -function in TD learning (5) and -learning (6). For TD learning, the parameter is updated by

| (11) | ||||

where the gradient can be calculated efficiently using the back-propagation method. As for -learning, it is known that adopting a nonlinear function, such as an ANN, for approximation may cause instability and divergence issues in the training process. To this end, Deep -Network (DQN) [47] is developed and greatly improves the training stability of -learning with the following two tricks:

Experience Replay. Instead of performing on consecutive episodes, a widely used trick is to store all transition experiences in a database called “replay buffer”. At each step, a batch of transition experiences is randomly sampled from the replay buffer for -learning update. This can enhance the data efficiency by recycling previous experiences and reduce the variance of learning updates. More importantly, sampling uniformly from the replay buffer breaks the temporal correlations that jeopardize the training process, and thus improves the stability and convergence of -learning.

Target Network. The other trick is the introduction of the target network with parameter , which is a clone of the -network . Its parameter is kept frozen and is only updated periodically. Specifically, with a batch of transition experiences sampled from the replay buffer, the -network is updated by solving

| (12) |

The optimization (12) can be viewed as finding an optimal -network that approximately solves the Bellman Optimality Equation (4). The critical difference is that the target network with parameter instead of is used to compute the maximization over in (12). After a fixed number of updates above, the target network is renewed by replacing with the latest learned . This trick can mitigate the training instability as the short-term oscillations are circumvented. See [48] for more details.

In addition, there are several notable variants of DQN that further improve the performance, such as double DQN [49] and dueling DQN [50]. Particularly, double DQN is proposed to tackle the overestimation issue of the action values in DQN by learning two sets of -functions; one -function is used to select the action, and the other is used to determine its value. Dueling DQN proposes a dueling network architecture that separately estimates the state value function and the state-dependent action advantage function , which are then combined to determine the -value. The main benefit of this factoring is to generalize learning across actions without imposing any change to the underlying RL algorithm [50].

2) Policy Parameterization. Due to the powerful generalization capability, ANNs are widely used to parameterize control policies, especially when the state and action spaces are continuous. The resultant policy network takes states as the input and outputs the probability of action selection. In actor-critic methods, it is common to adopt both the -network and the policy network simultaneously, where the “actor” updates according to (9) and the “critic” updates according to (11). The back-propagation method [41] can be applied to efficiently compute the gradient of ANNs.

When function approximation is adopted, the theoretical analysis on both value-based and policy-based RL methods is little and generally limited to the linear function approximation. Besides, one problem that hinders the use of value-based methods for large or continuous action space is the difficulty of performing the maximization step. For example, when deep ANNs are used to approximate the -function, it is not easy to solve for the optimal action due to the nonlinear and complex formulation of .

II-E Other Modern Reinforcement Learning Techniques

This subsection summarizes several state-of-the-art modern RL techniques that are widely used in complex tasks.

II-E1 Deterministic Policy Gradient

The RL algorithms described above focus on stochastic policies , while deterministic policies are more desirable for many real-world control problems with continuous state and action spaces. On the one hand, since most incumbent controllers in physical systems, such as PID control and robust control, are all deterministic, deterministic policies are better matched to the practical control architectures, e.g., in power system applications. On the other hand, a deterministic policy is more sample-efficient as its policy gradient only integrates over the state space. In contrast, a stochastic policy gradient integrates over both state and action spaces [29]. Similar to the stochastic case, there is a Deterministic Policy Gradient Theorem [29] showing that the policy gradient with respect to a deterministic policy can be simply expressed as

| (13) |

Correspondingly, the “actor” in the actor-critic algorithm can update the parameter by

| (14) |

One major issue regarding a deterministic policy is the lack of exploration due to the determinacy of action selection. A common way to encourage exploration is to perturb a deterministic policy with exploratory noises, e.g., adding a Gaussian noise to the policy with .

II-E2 Modern Actor-Critic Methods

Although achieving great success in many complex tasks, the actor-critic methods are known to suffer from various problems, such as high variance, slow convergence, local optimum, etc. Therefore, many variants have been developed to improve the performance of actor-critic, and we list some of them below.

Advantaged Actor-Critic [2, Chapter 13.4]: The advantage function, , i.e., the -function subtracted by a baseline, is introduced to replace in the “actor” update, e.g., (9). One common choice for the baseline is an estimate of the state value function . This modification can significantly reduce the variance of the policy gradient estimate without changing the expectation.

Asynchronous Actor-Critic [51] presents an asynchronous variant with parallel training to enhance sample efficiency and training stability. In this method, multiple actors are trained in parallel with different exploration polices, then the global parameters get updated based on all the learning results and synchronized to each actor.

II-E3 Trust Region/Proximal Policy Optimization

To improve the training stability of policy-based RL algorithms, reference [53] proposes the Trust Region Policy Optimization (TRPO) algorithm, which enforces a trust region constraint (15b). It indicates that the KL-divergence between the old and new policies should not be larger than a given threshold . Denote as the probability ratio between the new policy and the old policy with . Then, TRPO aims to solve the constrained optimization (15):

| (15a) | ||||

| s.t. | (15b) | |||

where is an estimation of the advantage function. However, TRPO is hard to implement. To this end, work [54] proposes Proximal Policy Optimization (PPO) methods, which achieve the benefits of TRPO with simpler implementation and better empirical sample complexity. Specifically, PPO simplifies the policy optimization as (16):

| (16) |

where is a hyperparameter, e.g., , and the clip function enforces to stay within the interval . The “” of the clipped and unclipped objectives is taken to eliminate the incentive for moving outside of the interval to prevent big updates of .

II-E4 Multi-Agent RL

Many power system control tasks involve the coordination over multiple agents. For example, in frequency regulation, each generator can be treated as an individual agent that makes its own generation decisions, while the frequency dynamics are jointly determined by all power injections. It motivates the multi-agent RL framework, which considers a set of agents interacting with the same environment and sharing a common state . At each time , each agent takes its own action given the current state , and receives the reward , then the system state evolves to based on . Multi-agent RL is an active and challenging research area with many unsolved problems. An overview on related theories and algorithms is provided in [55]. In particular, the decentralized (distributed) multi-agent RL attracts a great deal of attention for power system applications. A popular variant is that each agent adopts a local policy with its parameter , which determines the action based on local observation (e.g., local voltage or frequency of bus ). This method allows for decentralized implementation as the policy for each agent only needs local observations, but it still requires centralized training since the system state transition relies on all agents’ actions. Multi-agent RL methods with distributed training are still under research and development.

Remark 6.

Although the RL techniques above are discussed separately, they can be integrated for a single problem to achieve all the benefits. For instance, one may apply the multi-agent actor-critic framework with deterministic policies, adopt ANNs to parameterize the -function and the policy, and use the advantage function for actor update. Accordingly, the resultant algorithm is usually named after the combination of key words, e.g., deep deterministic policy gradient (DDPG), asynchronous advantaged actor-critic (A3C), etc.

III Selective Applications of RL in Power Systems

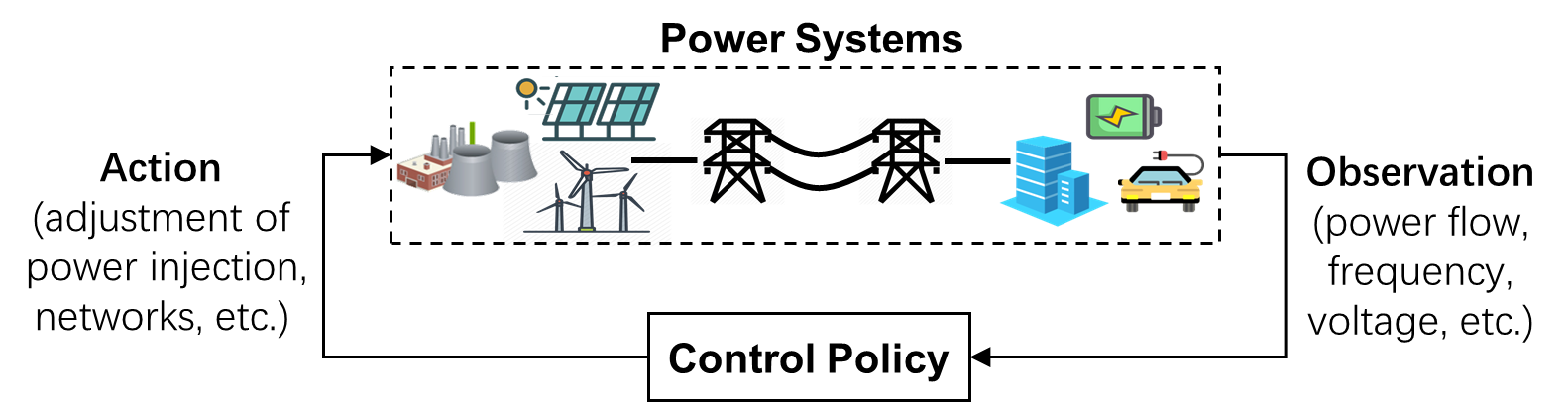

Throughout the past decades, tremendous efforts have been devoted to improving the modeling of power systems. Schemes based on (optimal) power flow techniques and precise modeling of various electric facilities are standard for the control and optimization of power systems. However, the large-scale integration of renewable generation and DERs significantly aggravates the complexity, uncertainty, and volatility. It becomes increasingly arduous to obtain accurate system models and power injection predictions, challenging the traditional model-based approaches. Hence, model-free RL-based methods become an appealing complement. As illustrated in Fig. 5, the RL-based schemes relieve the need for accurate system models and learn control policies based on data collected from actual system operation or high-fidelity simulators, when the underlying physical models and dynamics are regarded as the unknown environment.

For power systems, frequency level and voltage profile are two of the most critical indicators of system operating conditions, and reliable and efficient energy management is a core task. Accordingly, this section focuses on three key applications, i.e., frequency regulation, voltage control, and energy management. Frequency regulation is a fast-timescale control problem with system frequency dynamics, while energy management is usually a slow-timescale decision-making problem. Voltage control has both fast-timescale and slow-timescale controllable devices. RL is a general method that is applicable to both control problems (in fast timescale) and sequential decision-making problems (in slow timescale) under the MDP framework. In the following, we elaborate on the overall procedure of applying RL to these key applications from a tutorial perspective. We summarize the related literature with a table (see Tables I, II, III) and use existing works to exemplify how to model power system applications as RL problems. The critical issues, future directions, and numerical implementation of RL schemes are also discussed.

Before proceeding, a natural question is why it is necessary to develop new RL-based approaches since traditional tools and existing controllers mostly work “just fine” in real-world power systems. The answer varies from application to application, and we explain some of the main motivations below.

-

1)

Although traditional methods work well in the current grid, it is envisioned that these methods may not be sufficient for the future grid with high renewable penetration and human user participation. Most existing schemes rely heavily on sound knowledge of power system models, and have been challenged by various emerging issues, such as the lack of accurate distribution grid models, highly uncertain renewable generation and user behavior, coordination among massive distributed devices, the growing deployment of EVs coupled with transportation, etc.

-

2)

The research community has been studying various techniques to tackle these challenges, e.g., adaptive control, stochastic optimization, machine learning, zeroth-order methods, etc. Among them, RL is a promising direction to investigate and will play an important role in addressing these challenges because of its data-driven and model-free nature. RL is capable of dealing with highly complex and hard-to-model problems and can adapt to rapid power fluctuations and topology changes.

-

3)

This paper does not suggest a dichotomy between RL and conventional methods. Instead, RL can complement existing approaches and improve them in a data-driven way. For instance, policy-based RL algorithms can be integrated into existing controllers to online adjust key parameters for adaptivity and achieve hard-to-model objectives. It is necessary to identify the right application scenarios for RL and use RL schemes appropriately. This paper aims to throw light and stimulate such discussions and relevant research.

III-A Frequency Regulation

Frequency regulation (FR) is to maintain the power system frequency closely around its nominal value, e.g., 60 Hz in the U.S., through balancing power generation and load demand. Conventionally, three generation control mechanisms in a hierarchical structure are implemented at different timescales to achieve fast response and economic efficiency. In bulk power systems,777There are some other types of primary FR, e.g., using autonomous centralized control mechanisms, in small-scale power grids, such as microgrids. the primary FR generally operates locally to eliminate power imbalance at the timescale of a few seconds, e.g., using droop control, when the governor adjusts the mechanical power input to the generator around a setpoint and based on the local frequency deviation. The secondary FR, known as automatic generation control (AGC), adjusts the setpoints of governors to bring the frequency and tie-line power interchanges back to their nominal values, which is performed in a centralized manner within minutes. The tertiary FR, namely economic dispatch, reschedules the unit commitment and restores the secondary control reserves within tens of minutes to hours. See [56] for detailed explanations of the three-level FR architecture. In this subsection, we focus on the primary and secondary FR mechanisms, as the tertiary FR does not involve frequency dynamics and is corresponding to the power dispatch that will be discussed in Section III-C.

There are a number of recent works [57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71] leveraging model-free RL techniques for FR mechanism design, which are summarized in Table I. The main motivations for developing RL-based FR schemes are explained as follows.

-

1)

Although the bulk transmission systems have relatively good models of power grids, there may not be accurate models or predictions on the large-scale renewable generation due to the inherent uncertainty and intermittency. As the penetration of renewable generation keeps growing, new challenges are posed to traditional FR schemes for maintaining nominal frequency in real-time.

-

2)

FR in the distribution level, e.g., DER-based FR, load-side FR, etc., has attracted a great deal of recent studies. However, distribution grids may not have accurate system model information, and it is too complex to model vast heterogeneous DERs and load devices. In such situations, RL methods can be adopted to circumvent the requirement of system model information and directly learn control policies from available data.

-

3)

With less inertia and fast power fluctuations introduced by inverter-based renewable energy resources, power systems become more and more dynamical and volatile. Conventional frequency controllers may not adapt well to the time-varying operational environment [68]. In addition, existing methods have difficulty coordinating large-scale systems at a fast time scale due to the communication and computation burdens, limiting the overall frequency regulation performance [58]. Hence, (multi-agent) DRL methods can be used to develop FR schemes to improve the adaptivity and optimality.

In the following, we take multi-area AGC as the paradigm to illustrate how to apply RL methods, as the mathematical models of AGC have been well established and widely used in the literature [56, 72, 73]. We will present the definitions of environment, state and action, the reward design, and the learning of control policies, and then discuss several key issues in RL-based FR. Note that the models presented below are examples for illustration, and there are other RL formulations and models for FR depending on the specific problem setting.

| Reference | Problem | State/Action Space | Algorithm | Policy Class | Key Features |

|---|---|---|---|---|---|

| Yan et al. 2020 [58] | Multi-area AGC | Continuous | Multi-agent DDPG | ANN | 1-Offline centralized learning and decentralized application; 2-Multiply an auto-correlated noise to the actor for exploration; 3-An initialization process is used for ANN training acceleration. |

| Li et al. 2020 [59] | Single-area AGC | Continuous | Twin delayed DDPG (actor-critic) | ANN | The twin delayed DDPG method is used to improve the exploration process with multiple explorers. |

| Khooban et al. 2020 [60] | Microgrid FR | Continuous | DDPG (actor-critic) | ANN | 1-DDPG method works as a supplementary controller for a PID-based main controller to improve the online adaptive performance; 2-Add Ornstein-Uhlenbeck process based noises to the actor for exploration. |

| Younesi et al. 2020 [61] | Microgrid FR | Discrete | -learning | -greedy | -learning works as a supervisory controller for PID controllers to improve the online dynamic response. |

| Chen et al. 2020 [62] | Emergency FR | Discrete | Single/multi-agent -learning/DDPG | -greedy/ greedy/ANN | 1-Offline learning and online application; 2-The -learning/DDPG-based controller is used for limited/multiple emergency scenarios. |

| Abouheaf et al. 2019 [63] | Multi-area AGC | Continuous | Integral RL (actor-critic) | Linear feedback controller | Continuous-time integral-Bellman optimality equation is considered. |

| Wang et al. 2019 [65] | Optimization of activation rules in AGC | Discrete | Multi-objective RL (-learning) | Greedy | A constrained optimization model is built to solve for optimal participation factors, where the objective is the combination of multiple -functions. |

| Singh et al. 2017 [69] | Multi-area AGC | Discrete | -learning | Stochastic policy | An estimator agent is defined to estimate the frequency bias factor and determine the ACE signal accordingly. |

III-A1 Environment, State and Action

The frequency dynamics in a power network can be expressed as (17):

| (17) |

where denotes the system state, including the frequency deviation at each bus and the power flow deviation over line from bus to bus (away from the nominal values). , capture the deviations of generator mechanical power and other power injections, respectively.

The governor-turbine control model [56] of a generator can be formulated as the time differential equation (18):

| (18) |

where is the generation control command. A widely used linearized version of (17) and (18) is provided in Appendix A. However, the real-world frequency dynamics (17) and generation control model (18) are highly nonlinear and complex. This motivates the use of model-free RL methods, since the underlying physical models (17) and (18), together with operational constraints, are simply treated as the environment in the RL setting.

When controlling generators for FR, the action is defined as the concatenation of the generation control commands with . The corresponding action space is continuous in nature but could get discretized in -learning-based FR schemes [69, 62]. Besides, the continuous-time system dynamics are generally discretized with the discrete-time horizon to fit the RL framework, and the time interval depends on the sampling or control period.

We denote in (17) as the deviations of other power injections, such as loads (negative power injection), the outputs of renewable energy resources, the charging/discharging power of energy storage systems, etc. Depending on the actual problem setting, can be treated as exogenous states with additional dynamics, or be included in the action if these power injections are also controlled for FR [61, 60].

III-A2 Reward Design

The design of the reward function plays a crucial role in successful RL applications. However, there is no general rule to follow, but one principle is to effectively reflect the control goal. For multi-area AGC888For multi-area AGC problem, each control area is generally aggregated and characterised by a single governor-turbine model (18). Then the control actions for an individual generator within this area are allocated based on its participation factor. Thus each bus represents an aggregate control area, and is the deviation of tie-line power interchange from area to area ., it aims to restore the frequency and tie-line power flow to the nominal values after disturbances. Accordingly, the reward at time can be defined as the minus of frequency deviation and tie-line flow deviation, e.g., in the square sum form (19) [58]:

| (19) |

where is the frequency bias factor. For single-area FR, the goal is to restore the system frequency, thus the term related to tie-line power flow can be removed from (19). Besides, the exponential function [71], absolute value function [59], and other sophisticated reward functions involving the cost of generation change and penalty for large frequency deviation [59], can also be used.

III-A3 Policy Learning

Since the system states may not be fully observable in practice, the RL control policy is generally defined as the map from the available measurement observations to the action . The following two steps are critical to learn a good control policy.

Select Effective Observations. The selection of observations typically faces a trade-off between informativeness and complexity. It is helpful to leverage domain knowledge to choose effective observations. For example, multi-area AGC conventionally operates based on the area control error (ACE) signal, given by . Accordingly, the proportional, integral, and derivative (PID) counterparts of the ACE signal, i.e., , are adopted as the observation in [58]. Other measurements, such as the power injection deviations , could also be included in the observation [69, 59]. Reference [64] applies the stacked denoising autoencoders to extract compact and useful features from the raw measurement data for FR.

Select RL Algorithm. Both valued-based and policy-based RL algorithms have been applied to FR in power systems. In -learning-based FR schemes, e.g., [69], the state and action spaces are discretized and the -greedy policy is used. Recent works [58, 60] employ the DDPG-based actor-critic framework to develop the FR schemes, considering continuous action and observation. In addition, multi-agent RL is applied to coordinate multiple control areas or multiple generators in [58, 67, 69], where each agent designs its own control policy with the local observation . In this way, the resultant algorithms can achieve centralized learning and decentralized implementation.

III-A4 Simulation Results

Reference [68] conducts simulations on an interconnected power system with four provincial control areas, and demonstrates that the proposed emotional RL algorithm outperforms SARSA, Q-learning and PI controllers, with much smaller frequency deviations and ACE values in all test cases. In [58], the numerical tests on the New England 39-bus system show that the multi-agent DDPG-based controller improves the mean absolute control error by 60.5% over the DQN-based controller and 50.5% over a fine-tuned PID controller. In [59], the simulations on a provincial power grid with ten generator units show that the proposed DCR-TD3 method achieves the frequency deviation of Hz and ACE of MW, which outperforms the DDPG-based controller (with frequency deviation of Hz and ACE of MW) and PI controller (with frequency deviation of Hz and ACE of MW).

III-A5 Discussion

Based on the existing works listed above, we discuss several key observations as follows.

Environment Model. Most of the references build environment models or simulators to simulate the dynamics and responses of power systems for training and testing their proposed algorithms. These simulators are typically high fidelity with realistic component models, which are too complex to be useful for the direct development and optimization of controllers. Moreover, it is laborious and costly to build and maintain such environment models in practice, and thus they may not be available for many power grids. When such simulators are unavailable, a potential solution is to train off-policy RL schemes using real system operation data.

Safety. Since FR is vital for power system operation, it necessitates safe control policies. Specifically, two requirements need to be met: 1) the closed-loop system dynamics are stable when applying the RL control policies; 2) the physical constraints, such as line thermal limits, are satisfied. However, few existing studies consider the safety issue of applying RL to FR. A recent work [57] proposes to explicitly engineer the ANN structure of DRL to guarantee the frequency stability.

Integration with Existing Controllers. References [61, 60] use the DRL-based controller as a supervisory or supplementary controller to existing PID-based FR controllers, to improve the dynamical adaptivity with baseline performance guarantee. More discussions are provided in Section IV-D.

Load-Side Frequency Regulation. The researches mentioned above focus on controlling generators for FR. Besides, various emerging power devices, e.g., inverter-based PV units, ubiquitous controllable loads with fast response, are promising complements to generation-frequency control [73, 72]. These are potential FR applications of RL in smart grids.

III-B Voltage Control

Voltage control aims to keep the voltage magnitudes across the power networks close to the nominal values or stay within an acceptable interval. Most recent works focus on voltage control in distribution systems and propose a variety of control mechanisms [74, 75, 76, 77, 78]. As the penetration of renewable generation, especially solar panels and wind turbines, deepens in distribution systems, the rapid fluctuations and significant uncertainties of renewable generation pose new challenges to the voltage control task. Meanwhile, unbalanced power flow, multi-phase device integration, and the lack of accurate network models further complicate the situation. To this end, a number of studies [79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96] propose using model-free RL for voltage control. We summarize the related work in Table II and present below how to solve the voltage control problem in the RL framework.

| Reference | Control Scheme | State/Action Space | Algorithm | Policy Class | Key Features |

|---|---|---|---|---|---|

| Shi et. al. 2021 [79] | Reactive power control | Continuous | DDPG | ANN | Through monotone policy network design, a Lyapunov function-based Stable-DDPG method is proposed for voltage control with stability guarantee. |

| Lee et. al. 2021 [80] | Voltage regulators, capacitors, batteries | Hybrid | Proximal policy optimization | Graph convolutional network | A graph neural networks (topology)-based RL method is proposed and extensive simulations are performed to study the properties of graph-based policies. |

| Liu et. al. 2021 [81] | SVCs and PV units | Continuous | Multi-agent constrained soft actor-critic | ANN | Multiple agents are trained in a centralized manner to learn the coordination control strategies and are executed in a decentralized manner based on local information. |

| Yin et. al. 2021 [82] | Automatic voltage control | Discrete | Q-learning | ANN | The emotional factors are added to the ANN structure and Q-learning to improve the accuracy and performance of the control algorithm. |

| Gao et. al. 2021 [83] | Voltage regulator, capacitor banks, OLTC | Hybrid/ discrete | Consensus multi-agent DRL | ANN | 1- The maximum entropy method is used to encourage exploration; 2- A consensus multi-agent RL algorithm is developed, which enables distributed control and efficient communication. |

| Sun et. al. 2021 [84] | PV reactive power control | Hybrid/ continuous | Multi-agent DDPG | ANN | A voltage sensitivity based DDPG method is proposed, which analytically computes the gradient of value function to action rather than using the critic ANN. |

| Zhang et. al. 2021 [85] | Smart inverters, voltage regulators, and capacitors | Continuous/ discrete | Multi-agent DQN | -greedy | 1- Both the network loss and voltage violation are considered in the reward definition; 2- Multi-agent DQN is used to enhance the scalability of the algorithm. |

| Mukherjee et. al. 2021 [86] | Load shedding | Continuous | Hierarchical DRL | LSTM | 1- A hierarchical multi-agent RL algorithm with two levels is developed to accelerate learning; 2- Augmented random search is used to solve for optimal policies. |

| Kou et. al. 2020 [87] | Reactive power control | Continuous | DDPG | ANN | A safety layer is formed on top of the actor network to ensure safe exploration, which predicts the state change and prevents the violation of constraints. |

| Al-Saffar et. al. 2020 [88] | Battery energy storage systems | Discrete | Monte-Carlo tree search | Greedy | The proposed approach divides a network into multiple smaller segments based on impacted regions; and it solves the over-voltage problem via Monte-Carlo tree search and model predictive control. |

| Xu et. al. 2020 [90] | Set OLTC tap positions | Hybrid/ discrete | LSPI (batch RL) | Greedy | 1- “Virtual” sample generation is used for better exploration; 2- Adopt a multi-agent trick to handle scalability and use Radial basis function as state features. |

| Yang et. al. 2020 [91] | On-off switch of capacitors | Hybrid/ discrete | DQN | Greedy | Power injections are determined via traditional OPF in fast timescale, and the switching of capacitors is determined via DQN in slow timescale. |

| Wang et. al. 2020 [92] | Generator voltage setpoints | Continuous | Multi-agent DDPG | ANN | Adopt a competitive (game) formulation with specially designed reward for each agent. |

| Wang et. al. 2020 [93] | Set tap/on-off positions | Hybrid/ Discrete | Constrained SAC | ANN | 1- Model the voltage violation as constraint using the constrained MDP framework; 2- Reward is defined as the negative of power loss and switching cost. |

| Duan et. al. 2020 [94] | Generator voltage setpoints | Hybrid | DQN/DDPG | Decaying -greedy/ANN | 1- DQN is used for discrete action and DDPG is used for continuous action; 2- Decaying -greedy policy is employed in DQN to encourage exploration. |

| Cao et. al. 2020 [95] | PV reactive power control | Continuous | Multi-agent DDPG | ANN | The attention neural network is used to develop the critic to enhance the algorithm scalability. |

| Liu et. al. 2020 [96] | Reactive power control | Continuous | Adversarial RL [97]/SAC | Stochastic policy | 1- A two-stage RL method is proposed to improve the online safety and efficiency via offline pre-training; 2- Adversarial SAC is used to make the online application robust to the transfer gap. |

III-B1 Environment, State and Action

In distribution systems, the controllable devices for voltage control can be classified into slow timescale and fast timescale. Slow timescale devices, such as on-load tap changing transformers (OLTCs), voltage regulators, and capacitor banks, are discretely controlled on an hourly or daily basis. The states and control actions for them can be defined as

| (20a) | ||||

| (20b) | ||||

where is the voltage magnitude of bus , and are the active and reactive power flows over line . , , denote the tap positions of the OLTCs, voltage regulators, and capacitor banks respectively, which are discrete values. denote the discrete changes of corresponding tap positions.

The fast timescale devices include inverter-based DERs and static Var compensators (SVCs), whose (active/reactive) power outputs999Due to the comparable magnitudes of line resistance and reactance in distribution networks, the conditions for active-reactive power decoupling are no longer met. Thus active power outputs also play a role in voltage control, and alternating current (AC) power flow models are generally needed. can be continuously controlled within seconds. Their states and control actions can be defined as

| (21a) | ||||

| (21b) | ||||

where collect the continuous active and reactive power outputs of DERs respectively, and denotes the reactive power outputs of SVCs.

Since RL methods handle continuous and discrete actions differently, most existing studies only consider either continuous control actions (e.g., ) [92, 95], or discrete control actions (e.g., and/or ) [89, 90]. Nevertheless, the recent works [91, 98] propose two-timescale or bi-level RL-based voltage control algorithms, taking into account both fast continuous devices and slow discrete devices. In the rest of this subsection, we uniformly use and to denote the state and action.

Given the definitions of state and action, the system dynamics that depict the environment can be formulated as

| (22) |

where denote the exogenous active power and reactive power injections to the grid, including load demands and other generations. The transition function captures the tap position evolution and the power flow equations, which could be very complex or even unknown in reality. The exogenous injections include the uncontrollable renewable generations that are difficult to predict well. These issues motivate the RL-based voltage control schemes as the dynamics model (22) is not required in the RL setting.

III-B2 Reward Design

The goal of voltage control is to maintain the voltage magnitudes close to the nominal value (denoted as per unit (p.u.)) or within an acceptable range. Accordingly, the reward function is typically in the form of penalization on the voltage deviation from p.u. For example, in [90, 91, 95], the reward is defined as (23),

| (23) |

An alternative is to set the reward to be negative (e.g., ) when the voltage is outside an acceptable range (e.g., of the nominal value), and positive (e.g., ) when inside the range [94]. Moreover, the reward can incorporate the operation cost of controllable devices (e.g., switching cost of discrete devices), power loss [93], and other sophisticated metrics [92].

III-B3 RL Algorithms

Both value-based and policy-based RL algorithms have been applied for voltage control:

Value-Based RL. Several works [89, 90, 91, 94] adopt value-based algorithms, such as DQN and LSPI, to learn the optimal -function with function approximation, typically using ANNs [91, 94] or radial basis functions [90]. Based on the learned -function, these works use the greedy policy as the control policy, i.e., . Two limitations of the greedy policy include 1) the action selection depends on the state of the entire system, which hinders distributed implementation; 2) it is usually not suitable for continuous action space since the maximization may be difficult to compute, especially when complex function approximation, e.g., with ANNs, is adopted.

Policy-Based RL. Compared with value-based RL methods, the voltage control schemes based on actor-critic algorithms, e.g., [92, 93, 94, 95], are more flexible, which can accommodate both continuous and discrete actions and enable distributed implementation. Typically, a parameterized deterministic policy class is employed for each DER device , which determines the reactive power output based on local observation with parameter . The policy class is often parameterized using ANNs. Then some actor-critic methods, e.g., multi-agent DDPG, are used to optimize parameter , where a centralized critic learns the -function with ANN approximation and each DER device performs the policy gradient update as the actor.

III-B4 Simulation Results

In [85], the simulations on the IEEE 123-bus system show that the proposed multi-agent DQN method converges to a stable reward level after about 4000 episodes during the training process, and it achieves an average power loss reduction of 75.23 kW compared with a baseline method. In [93], the tests on a 4-bus feeder system show that the constrained SAC and DQN methods take about training samples to achieve stable performance, while the constrained policy optimization (CPO) method requires up to training samples to converge. Besides, the constrained SAC achieves the highest return and almost zero voltage violations for the 34-bus and 123-bus test feeders. In [90], it takes about 30 seconds for the proposed LSPI-based algorithm to converge in the case of IEEE 13-bus test feeder, which is faster than the exhaustive search approach by several orders of magnitude but maintains a similar level of reward.

III-B5 Discussion

Some key issues of applying RL to voltage control are discussed below:

Scalability. As the network scale and the number of controllable devices increase, the size of the state/action space grows exponentially, which poses severe challenges in learning the -function. Reference [90] proposes a useful trick that defines different -functions for different actions, which leads to a scalable method under its special problem formulation.

Data Availability. To learn the -function for a given policy, on-policy RL methods, such as actor-critic, need to implement the policy and collect sample data. This could be problematic since the policy is not necessarily safe, and thus the implementation on real-world power systems may be catastrophic. One remedy is to train the policy on high-fidelity simulators. Reference [90] proposes a novel method to generate virtual sample data for a certain policy, based on the data collected from implementing another safe policy. More discussions on safety are provided in Section IV-A.

Topology Change. The network topology, primarily for distribution systems, is subject to changes from time to time due to network reconfiguration, line faults, and other operational factors. A voltage control policy trained for a specific topology may not work well under a different network topology. To cope with this issue, the network topology can be included as one of the states or a parameter of the policy. Reference [80] represents the power network topology with graph neural networks and studies the properties of graph-based voltage control policies. Besides, if the set of network topologies is not large, one can train a separate control policy offline for each possible topology and apply the corresponding policy online. To avoid learning from scratch and enhance efficiency, one may use transfer RL [99] to transplant the well-trained policy for a given topology to another.

III-C Energy Management

Energy management is an advanced application that utilizes information flow to manage power flow and maintain power balance in a reliable and efficient manner. To this end, energy management systems (EMSs) are developed for electric power control centers to monitor, control, and optimize the system operation. With the assistance of the supervisory control and data acquisition (SCADA) system, the EMS for transmission systems is technically mature. However, for many sub-regional power systems, such as medium/low-voltage distribution grids and microgrids, EMS is still under development due to the integration of various DER facilities and the lack of metering units. Moreover, an EMS family [100] with a hierarchical structure is necessitated to facilitate different levels of energy management, including grid-level EMS, EMS for coordinating a cluster of DERs, home EMS (HEMS), etc.

In practice, there are significant uncertainties in energy management, which result from unknown models and parameters of power networks and DER facilities, uncertain user behaviors and weather conditions, etc. Hence, many recent studies adopt (D)RL techniques to develop data-driven EMS. A summary of the related literature is provided in Table III. In the rest of this subsection, we first introduce the RL models of DERs and adjustable loads, then review the RL-based schemes for different levels of energy management problems.

III-C1 State, Action, and Environment

We present the action, state and environment models for several typical DER facilities, buildings, and residential loads.

Distributed Energy Resources: For compact expression, we consider a bundle of several typical DERs, including a dispatchable PV unit, a battery, an EV, and a diesel generator (DG). The action at time is defined as

| (24) |

Here, are the power outputs of the PV unit, battery, EV, and DG respectively, which are continuous. can be either positive (discharging) or negative (charging). The DER state at time can be defined as

| (25) |

where is the maximal PV generation power determined by the solar irradiance. The PV output can be adjusted within the interval , and when the PV unit operates in the maximum power point tracking (MPPT) mode. denote the associated state of charge (SOC) levels. captures other related states of the EV, e.g. current location (at home or outside), travel plan, etc.

Building HVAC: Buildings account for a large share of the total energy usage, about half of which is consumed by the heating, ventilation, and air conditioning (HVAC) systems [101]. Smartly scheduling HVAC operation has huge potential to save energy cost, but the building climate dynamics are intrinsically hard to model and affected by various environmental factors. Generally, a building is divided into multiple thermal zones, and the action at time is defined as

| (26) |

where and are the conditioned air temperature and the supply air temperature, respectively. is the supply air flow rate at zone . The choice of states is subtle, since many exogenous factors may affect the indoor climate. A typical definition of the HVAC state is

| (27) |

where and are the outside temperature and indoor temperature of zone ; and are the humidity and occupancy rate of zone , respectively. Besides, the solar irradiance, the carbon dioxide concentration and other environmental factors may also be included in the state .

Residential Loads: Residential demand response (DR) [102] that motivates changes in electric consumption by end-users in response to time-varying electricity price or incentive payments attracts considerable recent attention. The domestic electric appliances are classified as 1) non-adjustable loads, e.g., computers and refrigerators, which are critical and must be satisfied; 2) adjustable loads, e.g., air conditioners and washing machines, whose operating power or usage time can be adjusted. The action for an adjustable load at time can be defined as

| (28) |

where binary denotes whether switching the on/off working mode (equal to ) or keeping unchanged (equal to ). is the power consumption of load , which can be adjusted either discretely or continuously depending on the load characteristics. The operational state of load can be defined as

| (29) |

where binary equals for the off status and for the on status. collects other related states of load . For example, the indoor and outdoor temperatures are contained in if load is an air conditioner [103]; and captures the task progress and the remaining time to the deadline for a washing machine load.

Other System States: In addition to the operational states above, there are some critical system states for EMS, e.g.,

| (30) |

including the current time , electricity price (from past time steps to future time predictions) [104], voltage profile , power flow , etc.

The state definitions in (25), (27), and (29) only contain the present status at time , which can also include the past values and future predictions to capture the temporal patterns. In addition, the previous actions may also be considered as one of the states, e.g., adding to the state . For different energy management problems, their state and action are determined accordingly by selecting and combining the definitions in (but not limited to) (24)-(30). And the environment model is given by

| (31) |

where captures other related exogenous factors. We note that the RL models presented above are examples for illustrative purposes, and one needs to build its own models to fit specific applications.

III-C2 Energy Management Applications

Energy management indeed covers a broad range of sub-topics, including integrated energy systems (IESs), grid-level power dispatch, management of DERs, building HVAC control, and HEMS, etc. We present these sub-topics in a hierarchical order below, and summarize the basic attributes and key features of representative references in Table III.

Integrated Energy Systems [105], also referred to as multi-energy systems, incorporate the power grids with heat networks and gas networks to accommodate renewable energy and enhance the overall energy efficiency and flexibility [106]. Reference [107] proposes a DDPG-based real-time control strategy to manage a residential multi-energy system, where DERs, heat pumps, gas boilers, and thermal energy storage are controlled to minimize the total operational cost. In [108], the management of IESs with integrated demand response is modeled as a Stackelberg game, and an actor-critic scheme is developed for the energy provider to adjust pricing and power dispatching strategies to cope with unknown private parameters of users. Extensive case studies are conducted in [109] to compare the performance of a twin delayed DDPG scheme against a benchmark linear model-predictive-control method, which empirically show that RL is a viable optimal control technique for IES management and can outperform conventional approaches.

Grid-Level Power Dispatch aims to schedule the power outputs of generators and DERs to optimize the operating cost of the entire grid while satisfying the operational constraints. Optimal power flow (OPF) is a fundamental tool of traditional power dispatch schemes. Several recent works [110, 111, 112, 113, 114] propose DRL-based methods to solve the OPF problem in order to achieve fast solution and cope with the absence of accurate grid models. Most existing references [115, 116, 117, 118, 119, 120] focus on the power dispatch in distribution grids or microgrids. In [118], a model-based DRL algorithm is developed to online schedule a residential microgrid, and Monte-Carlo tree search is adopted to find the optimal decisions. Reference [120] proposes a cooperative RL algorithm for distributed economic dispatch in microgrids, where a diffusion strategy is used to coordinate the actions of many DERs.