Reinforcement Learning with Intrinsic Affinity

for Personalized Prosperity Management

Abstract

The common purpose of applying reinforcement learning (RL) to asset management is the maximization of profit. The extrinsic reward function used to learn an optimal strategy typically does not take into account any other preferences or constraints. We have developed a regularization method that ensures that strategies have global intrinsic affinities, i.e., different personalities may have preferences for certain assets which may change over time. We capitalize on these intrinsic policy affinities to make our RL model inherently interpretable. We demonstrate how RL agents can be trained to orchestrate such individual policies for particular personality profiles and still achieve high returns.

Keywords AI in banking, personalized financial services, explainable AI, reinforcement learning, policy regularization, intrinsic affinity

1 Introduction

Effective customer engagement is a requisite for modern financial service providers that are adopting advanced methods to increase the level of personalization of their services [1]. Although artificial intelligence (AI) has become a ubiquitous tool in financial technology [2], research in the field has yet to significantly advance levels of personalization [3]. Asset management is an active research topic in AI for finance; however, the research opportunities presented by the need for personalized services are usually neglected [4]. Whereas personalized investment advice is typically based on questionnaires, we propose the use of micro-segmentation based on spending behavior. Traditionally, customer segmentation has been grounded in demographics which provide only a coarse segmentation [5]; it fails to capture nuanced differences between individuals with the potential for undesirable ramifications, e.g. discrimination in credit scoring based on postal code [6]. Micro-segmentation, however, provides a more sophisticated classification which can improve the quality of banking services [7, 8].

We develop a personal asset manager that invests in a portfolio of asset classes according to individual personality profiles as manifested by their spending behavior. The result is a hierarchical system of reinforcement learning (RL) agents in which a high-level agent orchestrates the actions of five low-level agents with global intrinsic affinities for certain asset classes. These affinities derive from prototypical personality traits. For instance, personality traits with a higher affinity for risk may, as a general rule, prefer high-volatility assets.

Explainability and interpretability are key in sensitive industries, such as finance [9]; they form the basis for understanding and trust and have not yet been adequately addressed [10, 11]. In short, our agents’ policies are regularized by predefined prior action distributions which imprint characteristic behaviors, making their policies inherently interpretable on three levels: (1) they use salient features extracted from customer spending behavior, (2) the affinity of the prototypical agents, and (3) their orchestration to achieve personal investment advice. Our contribution is therefore twofold: we demonstrate how RL agents can be made inherently interpretable through their intrinsic affinities, and their application and value to personalized asset management.

2 Background and Related Work

Spending patterns are a predictor of financial personality [12]. In their paper, the authors trained a random forest to predict customer personalities from their classified financial transactions, using a prevalent taxonomy of personality traits: openness, conscientiousness, extraversion, agreeableness, and neuroticism. Although they achieved only a modest predictive accuracy, a subsequent study found that spending patterns over time expose salient information that is obscured in non-temporal form [13]; the authors in this study used the same personality model, but added temporal patterns such as variability of the amount, persistence of the category in time, and burstiness—the intermittent changes in frequency of an event. Recurrent neural networks (RNNs) are able to extract this salient information when predicting personality traits from financial transactions [3]. In, [14], we gained an understanding of these extracted features by interpreting the dynamics of the RNN state space through a set of attractors. Understanding model behavior is crucial in industries such as personal finance [10]. In their study, [10] extracted rules from three classes of models—linear regression, logistic regression, and random forests—which not only exposed the spending patterns most indicative of personality traits, but also aided in model improvement.

In RL, agents learn to solve problems by tentation; they maximize the expected rewards resulting from their actions in an environment [15]. Environments can have complex dynamics that result in sophisticated policies that are opaque to their developers, who have no influence over what these agents learn [16, 17]. Intrinsic motivation enables agents to learn behaviors that are detached from the expected rewards of the environment [18]. It is a strategy that was developed to address the challenge of exploration in environments with sparse rewards [19]. One such approach is Kullback-Leibler (KL) policy regularization in which the objective function is regularized by the KL-divergence between the current policy and a predefined prior [20]. Policy regularization has been shown to be helpful and never detrimental to convergence [21]. Although most policy regularization methods aim to improve learning performance, they can also control the learning process and imbue the policy with an intrinsic behavior [22]. Here, the objective function is regularized with a predefined prior action distribution that defines a desirable characteristic:

| (1) | ||||

is the learning objective as a function of the model parameters , is the expected reward for state and action as sampled from a replay buffer , and is a scaling hyperparameter for the regularization term , which is the mean square difference between the observed action distribution and the action distribution given a regularization prior . The efficacy of this approach was demonstrated by instilling an inherent characteristic behavior in agents that navigate a grid. These agents learned to either prefer left turns, right turns, or to avoid going straight by taking a zig-zag approach to their destination. In contrast to constrained RL, which avoids certain states, the policy regularization in [22] encourages certain actions irrespective of the state and is a new direction for RL.

Hierarchical reinforcement learning (HRL) decomposes problems into low-level subtasks that are learned by relatively simple agents for the purpose of either improved performance or explainability [23, 24]. Larger problems are solved by choreographing these subtasks through an orchestration agent that learns the high-level dynamics of its environment [25]. HRL has, for instance, been used to control a robotic arm: while low-level agents learned simple tasks such as moving forward / backward or picking up / placing down, an orchestration agent learned to retrieve objects on a surface by choreographing these tasks [26, 27]. The agents were not only efficient at learning, but their policies were more easily interpreted by human experts. In [28], the authors used HRL to train a hierarchical set of agents to play a game. Their low-level agents learned to solve simple tasks such as “pick up a key” or “open a door” while receiving extrinsic rewards from the environment. A high-level agent then orchestrated these sub-tasks and received intrinsic rewards generated by a critic based on whether or not larger objectives were met.

3 Methodology

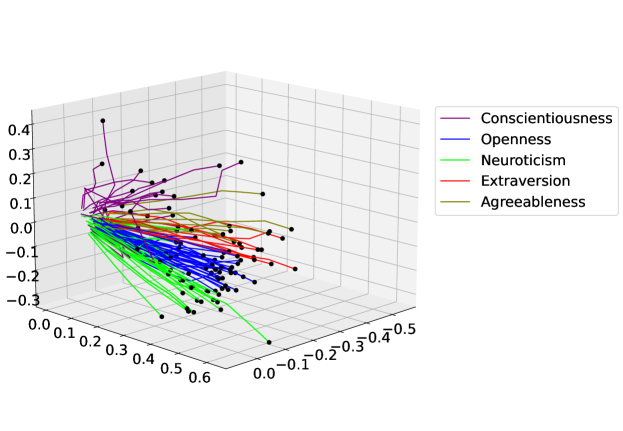

We have previously developed a three-node RNN that predicts customer personalities from an input vector of their classified financial transactions [3]. This input vector consists of six annual time steps, each consisting of 97 transaction classes; the values in each time step add up to one and are the fraction of a customer’s annual spending per transaction category. The RNN output is a five-dimensional personality vector; its values are the degrees of membership in each of five personality traits: openness, conscientiousness, extraversion, agreeableness, and neuroticism. We use the feature trajectories from this model’s state space—shown in Figure 1—to represent a customer’s spending behavior over time.

Each behavioral trajectory represents an individual customer and is labeled according to their most dominant personality trait: the trait with the greatest value in the personality vector. These trajectories form clusters in the state space, which form hierarchical sub-clusters along the successive levels of dominance of the personality traits. This hierarchical clustering provides a means of micro-segmenting customers according to their spending behavior in time. We then explained these behavioral trajectories by reproducing them using a linear regression model, and we interpreted them through locating a number of attractors that govern the dynamics of the state space [14]. We located these attractors by mapping the RNN output space into the state space through inverse regression. Using this mapping, and the maximum reachable values in the output space, based on the known range of the dimensions in the state space (), we extrapolated the final locations (attractors) of the behavioral trajectories. Formally:

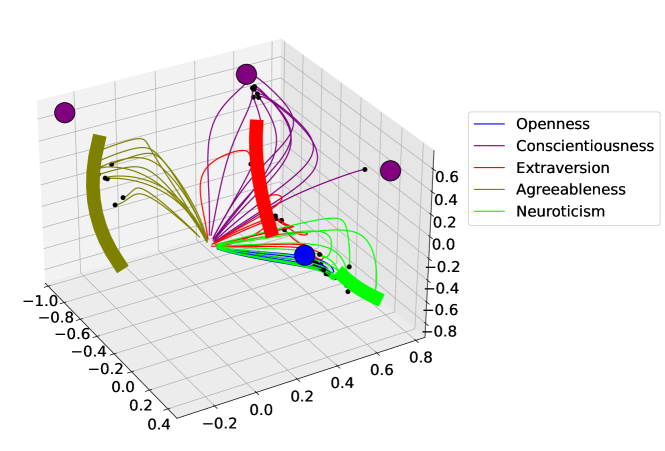

where is the projection of the output space into the state space, is the zero vector or origin of the output space, is the reachable output space, is the reachable state space, is the vector of weights of the RNN’s output layer, is the number of output dimensions, and is the number of points used to map the output hypercube. We corroborated these theoretical locations with the observed destinations of the trajectories; for each customer, we repeated the first time step 100 times, thereby creating trajectories that asymptotically converge to their attractors. We thus determined that trajectories converge towards the attractor associated with their most dominant personality trait. If a customer’s spending behavior changes such that a different personality trait becomes dominant, their trajectory changes direction accordingly towards the new appropriate attractor. Figure 2 shows these attractors in the RNN state space, with the extended spending trajectories, converging towards the appropriate attractors.

There are three point attractors for the personality trait conscientiousness, towards which trajectories converge depending on their initial conditions. Agreeableness, extraversion, and neuroticism each have a single line attractor, while trajectories that classified as openness converge towards a single point attractor. Each basin of attraction forms a cluster of trajectories, which each form a hierarchy of sub-clusters along successive levels of dominance of personality traits. This is the interpretation of the trajectory dynamics.

Interpretable RL can be used for investment that matches personality [29]. In this preliminary study, we had trained multiple RL agents to invest monthly contributions in different financial assets: stocks, property, savings accounts, mortgage payments, and luxury expenses. We obtained assets prices from the S&P 500 index [30], the Norwegian property index [31], and the Norwegian interest rate index [32]. With the help of a panel of experts from a major bank, we ranked these asset classes according to the following properties: expected returns, typical liquidity, capital prerequisite, typical risk, and novelty. We then associated the personality traits with these properties, as shown in Table 1.

| Asset class property | Open. | Cons. | Extra. | Agree. | Neur. |

|---|---|---|---|---|---|

| Expected returns | 1 | 1 | 2 | 1 | 1 |

| Liquidity | 2 | -1 | 2 | 1 | 2 |

| Low capital prerequisite | 0 | -1 | 1 | 1 | 1 |

| Low Risk | -1 | 2 | -1 | 1 | 2 |

| Novelty | 2 | 0 | 2 | 0 | -1 |

The result showed that, for instance, the openness trait might value asset liquidity and novelty; because of their openness to new experiences, they might prefer to have cash readily at hand when such an opportunity presents itself, or they might value assets that in themselves contain novelty. Another example is that the conscientiousness trait might prefer assets with low risk. Combining the coefficients in Table 1 with the asset property rankings, we mapped the association of each personality trait with the asset classes, as shown in Table 2.

| Asset type | Open. | Cons. | Extra. | Agree. | Neuro. |

|---|---|---|---|---|---|

| Savings account | -0.11 | 0.08 | -0.15 | 0.51 | 0.68 |

| Property funds | -0.15 | 0.32 | -0.22 | -0.36 | -0.24 |

| Stock portfolio | 0.82 | -0.61 | 0.95 | 0.42 | 0.12 |

| Luxury expenses | 0.16 | -0.51 | -0.07 | -0.80 | -0.81 |

| Mortgage repayments | -0.72 | 0.72 | -0.52 | 0.23 | 0.25 |

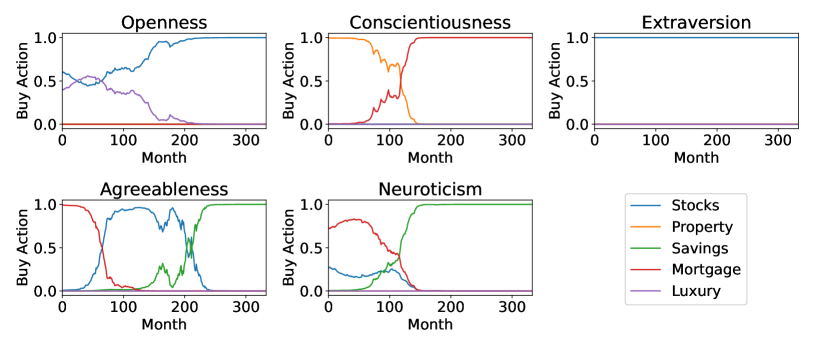

These coefficients reveal that, for example, the extraversion trait has a high preference for stocks, while the conscientiousness agent prefers a combination of mortgage repayments and property investment. When scaled so that they add up to one and their minimum values equal to zero, these coefficients were the regularization priors in Equation 1; we regularized the objective functions of five prototypical agents to instill intrinsic affinities for certain asset classes. Each agent learned an investment strategy associated with one of the five personality traits, which is the interpretation of their policies. Figure 3 shows these strategies, where each agent acted in an environment in which they invested a fixed monthly amount of 10 000 Norwegian Kroner (NOK) for 30 years. These prototypical agents clearly learned unique strategies for investing. The openness agent initially preferred luxury expenditures, in line with their openness to new experiences, and later purely invested in stocks, which had scored high in novelty. In contrast, the conscientiousness agent preferred to reduce risk through property investment, followed by resolute mortgage payments. These are the low-level policies which we intend to orchestrate into personalized asset investment strategies; customers have varying degrees of membership in each of the five personality traits, resulting in unique preferences for assets that may change over time.

Based on the premise that there is a causal relationship between personality-matched spending and happiness [33], we hypothesize that investment matched with personality can similarly increase happiness. Our goal is therefore to learn, through high-level RL orchestration, the optimum strategies that match customers’ unique financial personalities. Our RL agent orchestrates the actions of low-level prototypical agents according to customers’ extracted behavioral trajectories (Figure 1). With actions adding up to one, representing the fraction of the investment amount allocated to each low-level agent, it maximizes the following reward function: the inner product between the values of asset holdings and the customer’s preference for each asset type. This preference is calculated as the inner product of the customer’s personality vector—the set of five values representing their degrees of membership in each of the personality traits—and the set of coefficients relating each asset type with each personality trait (Table 2). This reward measures the correlation between spending behavior and investment strategy, which we call the satisfaction index; the higher the satisfaction index, the higher the correlation between spending behavior and investment strategy. We then instill an intrinsic RL affinity through a regularization prior—the customer’s personality vector scaled by its sum—in a deep deterministic policy gradient algorithm (DDPG - [34]). The actor consisted of three vanilla RNN nodes and an output layer of five actions with a softmax activation. The critic had a similar three-node RNN layer for the states which, concatenated with the actions, were succeeded by a 1000 node feed-forward layer and a single output node with no activation function. We found that three RNN nodes provided consistently high total rewards, which is consistent with findings that RNN architectures generally perform well in low-dimensional representations [35]. We tuned our hyperparameters using a one-at-a-time parameter sweep to reach the following optima: the actor and critic learning rates were 0.005 and 0.01, respectively, the target network update parameter was 0.05, the discount factor was 0.95, and the regularization scaling factor was 5.

In summary, we trained five low-level RL agents to invest in a set of assets according to prototypical personality traits [29]. We now combine their actions using a high-level RL agent that uses a customer’s spending behavior as manifested in their transaction history. This spending behavior is evinced in behavioral trajectories that we extracted from the state space of a RNN that predicts personality from financial transactions [3]. We illustrate this in a flow diagram in Figure 4.

4 Results

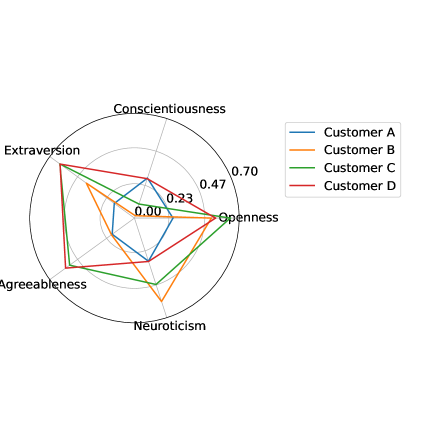

We selected four customers for whom we trained personal orchestration agents; their personality vectors are visualized in Figure 5.

Customer A has a relatively balanced profile, with low variation in the values of their personality vector, which also has relatively small values. This contrasts with Customer B who scores high in neuroticism and openness, Customer C who scores high in openness and extraversion, and Customer D who scores high in extraversion, agreeableness, and openness. Their respective regularization priors are shown in Table 3.

| Prior | Open. | Cons. | Extra. | Agree. | Neur. |

|---|---|---|---|---|---|

| 0.22 | 0.24 | 0.14 | 0.15 | 0.25 | |

| 0.30 | 0.01 | 0.23 | 0.11 | 0.35 | |

| 0.27 | 0.04 | 0.26 | 0.23 | 0.20 | |

| 0.23 | 0.12 | 0.27 | 0.25 | 0.13 |

The regularization prior for Agent A (the agent for Customer A) consequently has a low variation in its values while assigned the highest weight to neuroticism and openness, assigned the highest weight to openness and extraversion, and assigned the highest weight to extraversion, agreeableness, and openness.

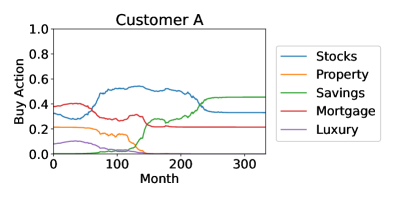

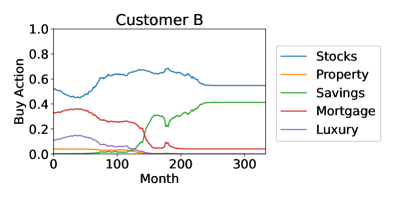

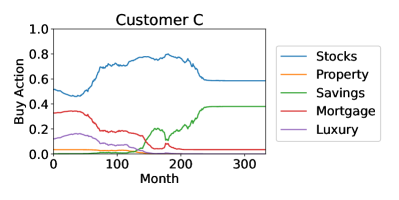

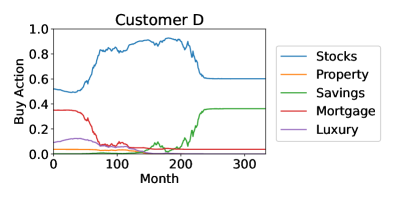

These four customers’ personality profiles, and consequently the orchestration agents’ actions, were constant in time. Customers’ personality profiles may naturally vary in time, causing directional changes in their behavioral trajectories, which alter the orchestration agent’s action distribution. This affects investment strategies in real time which, for the sake of simplicity, we do not illustrate here. The investment strategies for the four customers are shown in Figure 6.

Although these strategies might appear similar, there are significant differences: Customer A never invested more than 60% of their monthly allocation in stocks, while Customer D invested up to 90% in stocks, and Customer A was the only one to invest significantly in property. This is due to Customer A having the highest relative degree of conscientiousness, i.e., they preferred a reduced risk. In contrast, Customer D had the highest risk in their portfolio by investing the least in property and mortgage repayments and the most in stocks, due to their low score in neuroticism which increases their appetite for risk. When comparing Customers B and C, Customer B invested more in savings accounts and less in stocks in the period between 150 and 250 months. This is due to their differences in agreeableness and neuroticism, where customer B scored higher in neuroticism and lower in agreeableness. In Figure 3, the prototypical agents associated with neuroticism and agreeableness are the only two to invest in savings, and the neuroticism agent started investing in savings much earlier and with higher percentages. Despite the nuanced differences in investment approaches, the general advice for all customers was similar: first pay down mortgages to reduce debt repayments, then accept higher risk with higher returns from stocks and benefit from compound growth, and finally toward retirement age reduce risk through savings accounts. This is consistent with conventional financial advice: younger people with more disposable income may accept more risk for higher returns. Very interestingly, this was not explicit in the objective function which had no elements of risk, while the effect of compound growth was evident only in increased final returns.

The final financial returns for these four customers were very similar: after 30 years of investing 10 000 NOK per month, they all had portfolio values ranging between 21 and 24 million NOK. The total amount invested was 3.6 million NOK and the theoretical maximum return was 27.7 million NOK, achieved when investing purely in stocks. Our aim was rather to optimize customer satisfaction in their portfolio while still achieving high returns. We found that satisfaction indices between customers had greater variation than their financial returns, which we attribute to differences in the absolute values of their personality vectors. Therefore, satisfaction indices cannot be directly compared between customers, but they can be compared between different advisors for the same customer. We make such a comparison in Table 4 between the results from the orchestration agents and those of a linear combination of the prototypical agents. This linear combination is the inner product of the personality vector and the action vectors of the prototypical agents, scaled such that the resulting actions add up to one; the actions of the prototypical agents were weighted according to customers’ personality vectors. The orchestration agent never performs worse than a linear combination of the prototypical agents; although it typically achieves only slightly better financial returns, it can significantly improve the satisfaction index. This was not the case when using feed-forward networks to process the customer spending input, which returned inconsistent results across multiple training runs and frequently performed worse than the simple linear combination. This is consistent with findings from [13] that spending patterns in time hold salient information not evident in non-temporal data.

| Orchestration Agent | Linear Combination | |||

| Customer | Portfolio Value Mill. NOK | Satisfaction Index | Portfolio Value Mill. NOK | Satisfaction Index |

| A | 20.9 | 3.1 | 20.9 | 3.0 |

| B | 22.0 | 12.9 | 21.7 | 12.7 |

| C | 22.7 | 19.0 | 22.4 | 17.8 |

| D | 23.8 | 19.5 | 22.6 | 14.5 |

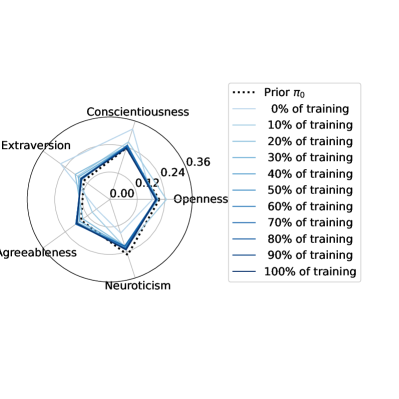

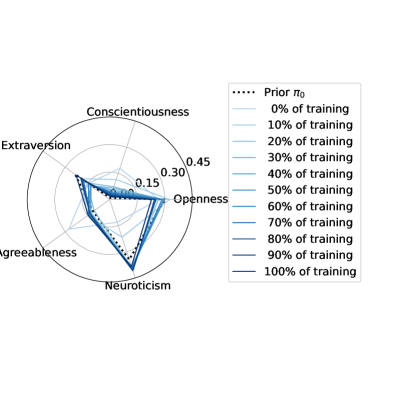

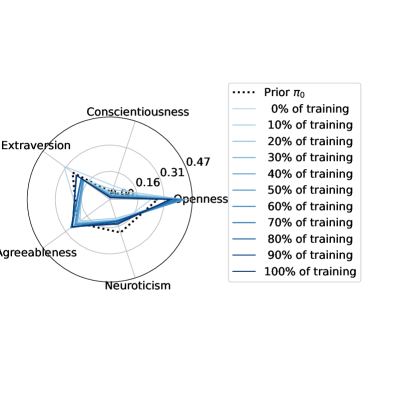

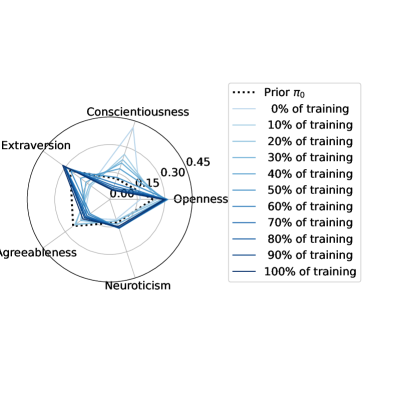

We regularized the orchestration agents to act according to a specified prior with the same action distribution as the linear combination scenario. However, through stochastic gradient descent, they optimized the satisfaction index in that region of the action space. In Figure 7 we illustrate the policy convergence towards local optima of each of the four orchestration agents.

The policies were randomly initialized, but they quickly converged to local optima in close proximity of the regularization priors in the action space. The learned strategies are thus interpretable.

5 Conclusions

Machine learning is essential for personalizing financial services. Its acceptance is contingent on understanding the underlying models, which makes model explainability and interpretability imperative. Our reinforcement learning model blends investment advice that is aligned with different personality traits. Its interpretation follows from the global intrinsic affinities of the learned policies, i.e., affinities that are independent of the current state. These policies not only result in a good profit, but similar profits are achieved across different personality profiles despite their distinct strategies. For instance, they avoid risk for highly conscientious individuals, while pursuing novelty for individuals that are more open to new experiences. Interestingly, they have learned the concept of risk without this being explicit in the objective function. Across all portfolios, the advice is consistent with conventional wisdom: younger investors may accept higher risk, which typically reduces with age. It remains to be seen whether this is simply a consequence of optimizing profit while balancing the intrinsic action distribution, or whether our agents have learned deeper strategies of asset management. In future work, we intend to investigate this phenomenon by extracting an explanation for our agents’ decisions. It will also be interesting to extend our method to local intrinsic affinity, where the preferred policy also depends on the current state. The potential applications for our method go beyond investment advice and includes, e.g., preferred evasive actions for self driving cars, navigation systems that favor either freeways or scenic roads, or medication for the treatment of chronic diseases based on individual medical history in healthcare.

Declarations

-

•

Funding: This study was partially funded by a grant from The Norwegian Research Council, project number 311465.

-

•

Competing Interests: The authors declare no competing interests.

-

•

Ethics approval: Not applicable.

-

•

Consent to participate: Personal data were anonymized and processing was done on the basis of consent in compliance with the European General Data Protection Regulation (GDPR).

References

- [1] Matteo Stefanel and Udayan Goyal. Artificial intelligence & financial services: Cutting through the noise. Technical report, APIS partners, London, England, 2019.

- [2] Ana Fernández. Artificial intelligence in financial services. Technical report, The Bank of Spain, Madrid, Spain, 2019.

- [3] Charl Maree and Christian W. Omlin. Clustering in recurrent neural networks for micro-segmentation using spending personality. In 2021 IEEE Symposium Series on Computational Intelligence (SSCI), pages 1–5, 2021.

- [4] Adrian Millea. Deep reinforcement learning for trading—a critical survey. Data, 6(11):1–25, 2021.

- [5] Wendell R. Smith. Product differentiation and market segmentation as alternative marketing strategies. Journal of Marketing, 21(1):3–8, 1956.

- [6] Solon Barocas and Andrew D. Selbst. Big data’s disparate impact. California Law Review, 104(3):671–732, 2016.

- [7] Salman Mousaeirad. Intelligent vector-based customer segmentation in the banking industry. ArXiv, abs/2012.11876, 2020.

- [8] Edward T. Apeh, Bogdan Gabrys, and Amanda Schierz. Customer profile classification using transactional data. In 2011 Third World Congress on Nature and Biologically Inspired Computing, volume 3, pages 37–43, Salamanca, Spain, 2011.

- [9] Alejandro Barredo Arrieta, Natalia Díaz-Rodríguez, Javier Del Ser, Adrien Bennetot, Siham Tabik, Alberto Barbado, Salvador Garcia, Sergio Gil-Lopez, Daniel Molina, Richard Benjamins, Raja Chatila, and Francisco Herrera. Explainable artificial intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 58:82–115, 2020.

- [10] Yanou Ramon, R.A. Farrokhnia, Sandra C. Matz, and David Martens. Explainable AI for psychological profiling from behavioral data: An application to big five personality predictions from financial transaction records. Information, 12(12):1–28, 2021.

- [11] Longbing Cao. Ai in finance: Challenges, techniques and opportunities. Banking & Insurance eJournal, 2021.

- [12] Joe J. Gladstone, Sandra C. Matz, and Alain Lemaire. Can psychological traits be inferred from spending? Evidence from transaction data. Psychological Science, 30(7):1087–1096, 2019.

- [13] Natkamon Tovanich, Simone Centellegher, Nacéra Bennacer Seghouani, Joe Gladstone, Sandra Matz, and Bruno Lepri. Inferring psychological traits from spending categories and dynamic consumption patterns. EPJ Data Science, 10(24):1–23, 2021.

- [14] Charl Maree and Christian W. Omlin. Understanding spending behavior: Recurrent neural network explanation and interpretation (in print). In IEEE Computational Intelligence for Financial Engineering and Economics, pages 1–7, 2022.

- [15] Richard S. Sutton and Andrew G. Barto. Reinforcement Learning: An Introduction. The MIT Press, second edition, 2018.

- [16] A. Heuillet, F. Couthouis, and N. Díaz-Rodríguez. Explainability in deep reinforcement learning. Knowledge-Based Systems, 214(106685):1–24, 2021.

- [17] Javier García, Fern, and o Fernández. A comprehensive survey on safe reinforcement learning. Journal of Machine Learning Research, 16(42):1437–1480, 2015.

- [18] Arthur Aubret, Laetitia Matignon, and Salima Hassas. A survey on intrinsic motivation in reinforcement learning. arXiv, 1908.06976, 2019.

- [19] Alain Andres, Esther Villar-Rodriguez, and Javier Del Ser. Collaborative training of heterogeneous reinforcement learning agents in environments with sparse rewards: What and when to share? arXiv, 2202.12174, 2022.

- [20] Alexandre Galashov, Siddhant Jayakumar, Leonard Hasenclever, Dhruva Tirumala, Jonathan Schwarz, Guillaume Desjardins, Wojtek M. Czarnecki, Yee Whye Teh, Razvan Pascanu, and Nicolas Heess. Information asymmetry in KL-regularized RL. In International Conference on Learning Representations (ICLR), pages 1–25, New Orleans, Louisiana, United States, 2019.

- [21] Nino Vieillard, Tadashi Kozuno, Bruno Scherrer, Olivier Pietquin, Remi Munos, and Matthieu Geist. Leverage the average: An analysis of KL regularization in reinforcement learning. In Advances in Neural Information Processing Systems (NIPS), volume 33, pages 12163–12174. Curran Associates, 2020.

- [22] Charl Maree and Christian Omlin. Reinforcement learning your way: Agent characterization through policy regularization. AI, 3(2):250–259, 2022.

- [23] Shubham Pateria, Budhitama Subagdja, Ah-hwee Tan, and Chai Quek. Hierarchical reinforcement learning: A comprehensive survey. Association for Computing Machinery, 54(5):1–35, 2021.

- [24] Andrew Levy, Robert Platt, and Kate Saenko. Hierarchical reinforcement learning with hindsight. In International Conference on Learning Representations, pages 1–16, 2019.

- [25] Bernhard Hengst. Hierarchical Reinforcement Learning, pages 495–502. Springer US, Boston, MA, 2010.

- [26] Luca Marzari, Ameya Pore, Diego Dall’Alba, Gerardo Aragon-Camarasa, Alessandro Farinelli, and Paolo Fiorini. Towards hierarchical task decomposition using deep reinforcement learning for pick and place subtasks. In 20th International Conference on Advanced Robotics (ICAR), pages 640–645, Ljubljana, Slovenia, 2021.

- [27] B. Beyret, A. Shafti, and A.A. Faisal. Dot-to-dot: Explainable hierarchical reinforcement learning for robotic manipulation. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), page 5014–5019, Macau, China, 2019.

- [28] Tejas D Kulkarni, Karthik Narasimhan, Ardavan Saeedi, and Josh Tenenbaum. Hierarchical deep reinforcement learning: Integrating temporal abstraction and intrinsic motivation. In Advances in Neural Information Processing Systems, volume 29, pages 1–9. Curran Associates, Inc., 2016.

- [29] Charl Maree and Christian Omlin. Can interpretable reinforcement learning manage assets your way? arXiv, 2202.09064, 2022.

- [30] Yahoo Finance. Historical data for S&P500 stock index, 2022. https://finance.yahoo.com/quote/%5EGSPC/history?p=%5EGSPC, Accessed on 30/01/2022.

- [31] Statistics Norway. Table 07221 - Price index for existing dwellings, 2022. https://www.ssb.no/en/statbank/table/07221/, Accessed on 30/01/2022.

- [32] Norges Bank. Interest rates, 2022. https://app.norges-bank.no/query/#/en/interest, Accessed on 30/01/2022.

- [33] Sandra C. Matz, Joe J. Gladstone, and David Stillwell. Money buys happiness when spending fits our personality. Psychological Science, 27(5):715–725, 2016.

- [34] Timothy P. Lillicrap, Jonathan J. Hunt, Alexander Pritzel, Nicolas Heess, Tom Erez, Yuval Tassa, David Silver, and Daan Wierstra. Continuous control with deep reinforcement learning. arXiv, 1509.02971, 2019.

- [35] Niru Maheswaranathan, Alex H. Williams, Matthew D. Golub, S. Ganguli, and David Sussillo. Reverse engineering recurrent networks for sentiment classification reveals line attractor dynamics. Advances in neural information processing systems (NIPS), 32:15696–15705, 2019.