Reservoir computing with simple oscillators: Virtual and real networks

Abstract

The reservoir computing scheme is a machine learning mechanism which utilizes the naturally occuring computational capabilities of dynamical systems. One important subset of systems that has proven powerful both in experiments and theory are delay-systems. In this work, we investigate the reservoir computing performance of hybrid network-delay systems systematically by evaluating the NARMA10 and the Sante Fe task.. We construct ’multiplexed networks’ that can be seen as intermediate steps on the scale from classical networks to the ’virtual networks’ of delay systems. We find that the delay approach can be extended to the network case without loss of computational power, enabling the construction of faster reservoir computing substrates.

I INTRODUCTION

Reservoir computing is a supervised machine-learning scheme for recurrent networks that utilizes the naturally occuring computational power of large dynamical systems. Where more general machine learning schemes aim to train a recurrent neural network in its entirety, reservoir computing differs in its approach by training only a few select links. This divides the system into an input layer, a dynamical reservoir and an output layer. Originally, reservoir computing was inspired both by systematic machine-learning considerations Jaeger (2001) as well as the human brainMaass et al. (2002). It was later found that under certain conditions even a general training scheme for recurrent networks can produce structures that mimic the tripartite division of reservoir computingSchiller and Steil (2004).

Proposed applications include channel equalizations for satellite communications Bauduin et al. (2015), real-time audio processing Keuninckx et al. (2017) and unscrambling of bits after long-haul optical data transmission Argyris et al. (2017).

Reservoir computing works with many different types of dynamical reservoirs. It has also been experimentally demonstrated in a wide variety of systems, benefiting from the fact that the dynamical system need not be trained. Successful demonstrations include systems of dissociated neural cell culturesDockendorf et al. (2009), a bucket of water Fernando and Sojakka (2003) and field programmable gate arrays (FPGAs)Antonik et al. (2016).

Understanding the deeper mechanisms behind the performance of different dynamical reservoirs is still an open problem. Previous works have focused on the link between performance and the Lyapunov Spectrum Rodan and Tiňo (2011) and comparison of different node types Verstraeten et al. (2007). Extensions of the reservoir computing have also been proposed: Both the use of plasticity Steil (2007) of links in the artificial neural network, as well as deterministicly constructing networks Rodan and Tiňo (2012) try to boost the performance. However, most of these theoretical investigations have focused on the more machine-learning inspired time-discrete artificial neural networks, as opposed to photonic and time-continuous systems.

Interest in reservoir computing was renewed especially in the photonics and semiconductor community, after Appeltant et al. presented a novel schemeAppeltant et al. (2011). Instead of an extended physical system or large network of single units, this virtual network approach uses a long delay-line to produce a high dimensional phase-space in time. Several groups have successfully implented such a delay-line based reservoir computer using both optic Brunner et al. (2013); Vinckier et al. (2015); Nguimdo et al. (2017) and opto-electronic Larger et al. (2012); Paquot et al. (2012) experiments. Possible extensions to the virtual network scheme have also been considered, among others are hierarchical time-multiplexing Zhang et al. (2014) or the use of counter-propagating fields in a ring laser for simultaneous tasks Nguimdo et al. (2015). Additionally, Ref. Schumacher et al. (2013) proposes to analytically calculate the response and time series of such a virtual network and then use the analytic formula to speed up computation.

Simultaneously the traditional network implementation has seen additional improvements by the use of fully passive dynamical reservoirsVandoorne et al. (2008, 2011, 2014), which greatly reduces the noise and improves performance. However, for every real node a feed-in mechanism for data, as well as a read-out mechanism for the dynamical response is needed. So even a network of passive elements will still require a significant amount of complexity. In this paper we aim to show a systematic comparison between the ’delay-line’ approach of virtual networks and the original ’real’ networks consisting of multiple oscillators. Additionally, we propose a mixed scheme containing both multiple real nodes connected in a network, as well as long delay lines extending the system dimension in time.

II RESERVOIR COMPUTING

Reservoir computing is a machine learning scheme aiming to utilize the intrinsic computational power of complex dynamical systems. The typical problem is to transform or extract data from a given time-dependent data stream. Usually, the target transformation is not explicitly known or computationally very costly and therefore a direct solution of the problem on a computer seems undesirable. Hence a supervised machine learning approach is used. The learning takes place in the typical two step process: First, a training phase fixes the malleable parameters of the reservoir computer at an optimal value, and then a testing phase evaluates the quality of the learned behaviour.

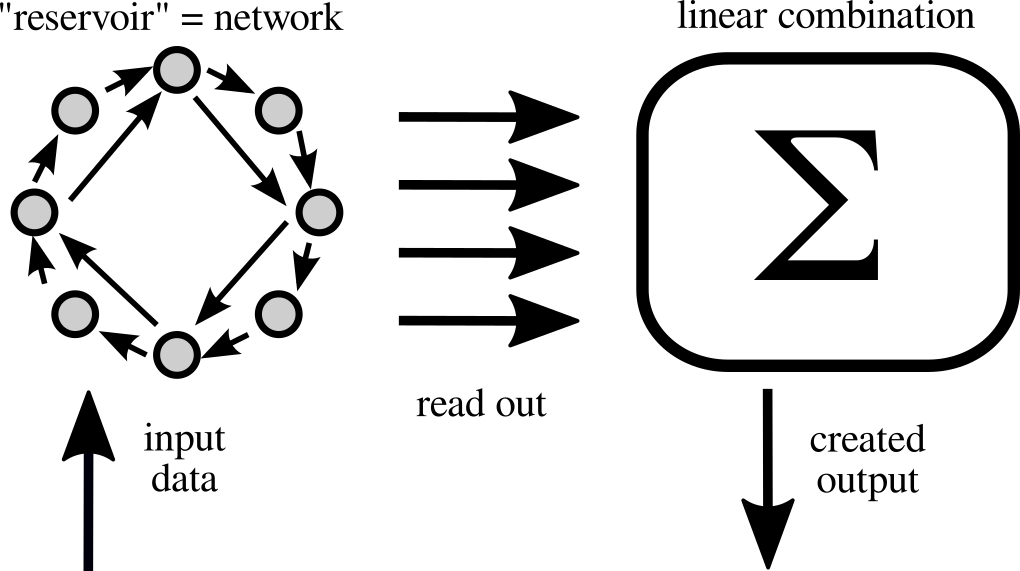

Figure 1 depicts a sketch of the reservoir computing paradigm. At the core of the reservoir computer lies a dynamical system with a high phase-space, also called ’reservoir’. Historically, these systems were first envisioned to be networks of discrete maps Jaeger (2001) or neural models Maass et al. (2002). The data is fed into the system via some number of parameters, e.g. the driving current of a laser or the voltage applied to neurons, or injected with a driving signal, e.g. input light pulses into an optical system. The dynamical system will then be driven by input data, resulting in some trajectory in its phase space. This process is often called ’expansion in feature space’, as the resulting trajectory can be of a much higher dimension than the original data series. The high dimensional response of the dynamical reservoir is then read out and used as the basis for reconstructing the desired output. While conventional deep convolutional neural network learning schemes heavily focus on the training of the internal degrees of the network, the ’reservoir’ is assumed to be fixed for reservoir computing. In fact, training is only applied to the linear output weighting, for which a simple linear regression is enough to find the optimal values Jaeger (2001).

However, for this simplification in the training procedure a price must be paid in system size: We require the desired transformation to be constructable by a mere linear combination of the degrees of freedom of the reservoir. Hence, to be sufficiently computationally powerful, the system needs to be large enough to contain many degrees of freedom. While conventionally trained artificial neural networks are ’condensed’ to contain only useful elements, reservoir computing, even in its fully trained state, can carry a lot of overhead, i.e. elements that are not useful for the computation. Therefore reservoir computers can be expected to be always larger than their fully trained counterparts. A simple example of a time-discrete reservoir computer, often called ’echo state network’, is shown in the methods section.

However, the fixed nature of the reservoir also allows experimentalists to utilize naturally occuring complex dynamical systems as reservoirs. This is the great advantage of the reservoir computing paradigm. The intrinsic computational powers of physical processes can be used Crutchfield et al. (2010).

III RESERVOIR COMPUTING with delay

A class of systems that is naturally suited for reservoir computing are delay systems Appeltant et al. (2011); Crutchfield et al. (2010), which are described by delay-differential equations (DDEs). These DDEs contain terms that are not only dependent on the instantaneous variables , but also on their delayed states before a certain time . Mathematically the phase space dimension of a DDE system is infinite. Many systems in nature can be described by systems of DDEs, where the delay-term usually hides a compressed spatial variable. The most common example are laser systems, where a laser with delayed self-feedback via a mirror has been studied extensively in the literature Lang and Kobayashi (1980); Alsing et al. (1996). Here, the emitted electromagnetic waves can be described with the help of a delay term. Similarly, lasers can also be delay-coupled Soriano et al. (2013). Optical and electro-optical systems consisting of only a single node with delay have been successfully used for reservoir computing, and are especially suited due to their high speeds.

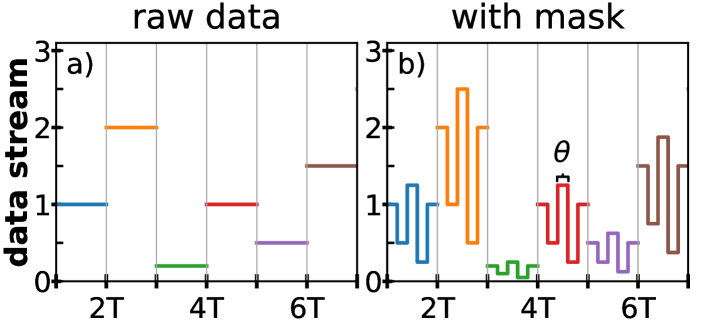

In reality, measurement resolution and noise limit the amount of information that can be stored within a delay-system. Hence, a single delay-system is not infinitely computationally powerful. (If these limitations apply to the pure mathematical construct of a DDE does not seem obvious). Additionally, real-world systems operate in continuous time - not the discrete time of most of the simulated artifical neural networks. This neccessitates the use of an external clock time and a refined data injection and extraction protocol. Input data typically consists of a multi-dimensional vector representing time-discretized measurements. This vector is converted into a piece-wise constant function , with constant step length (a sketch of this is shown in Fig. 2 (a)). While in principle, the reservoir can be directly driven with the piece-wise constant input data , this usually leads to a comparably low-dimensional trajectory for delay-systems. Efficient reservoir computing with delay-systems relies on the so called ’masking’ or ’time-multiplexing’ procedure Appeltant et al. (2011). A -periodic function, called the mask, is multiplied on top of the piece-wise constant resulting in a rich input , shown in Fig. 2 (b). This more complex input data stream induces a dynamically richer response of the reservoir that is still strongly dependent on the input data.

Using a single-node with delay as a reservoir therefore has a few distinct advantages: The setup is easily scaled up or down, depending on the required phase space dimension if the delay line is simply modified. Furthermore, these systems have been successfully used in experimental setups owing to the comparably simple implementation when only a single ’active node’ is needed Appeltant et al. (2011); Brunner et al. (2013); Larger et al. (2012). However, the sequential nature of the data input and readout also slows the system down. In fact, doubling the number of virtual nodes would lead to a halfing of the clock cycle.

IV Virtual and Mixed Networks

Often the mask is chosen to be a piece-wise constant function with lengths of , where is the time-multiplexing or virtualization factor. However, this is not the only choice Nakayama et al. (2016). As with the input, the readout also needs a reference clock when used in a continuous time system. This neccessarily needs to align with the input periods , as otherwise input and output would start to drift with respect to each other. The output timings could now be done once per input-timing window , but this would lead to a very poorly resolved and low-dimensional readout of the complex phase space trajectory. With a piece-wise constant mask it is much more natural to read out with the same frequency as the characteristic mask time scale, i.e. once per . Reading out even faster is possible, however in real experiments this readout process is the actual bottle neck and therefore increasing it is not trivial. With a piece-wise constant mask and synchronized read-out the system can essentially be thought of as a ’Virtual Network’. Each time interval represents a ’Virtual Node’ of which there are in total Appeltant et al. (2011). This analogy helps link the original network-based concepts of reservoir computing with the delay-based examples. For some cases, an explicit or approximate transformation to a network picture can be derived and used Grigoryeva et al. (2015); Schumacher et al. (2013).

In this work we systematically compare network and delay-based approaches. For this, we construct what we call ’multiplexed networks’ that include both delay lines and real nodes within a networks. We refer to physical nodes as ’real nodes’, as opposed to the ’virtual nodes’ created through time-multiplexing. With some small adjustments the masking procedure described in Sec. III can be generalized to coupled network motifs. In principle one could take any small network of instantaneously coupled oscillators, give each individual node or a subset of nodes its own delayed self-feedback with identical delay time and then apply the masking procedure to the network as a whole. This way, the network could be seen as a single multidimensional node that is used in the same way as described in Sec. III. However, this approach has two drawbacks: First, the network motif needs additional external feedback connection, which would neccessitate additional physical components for an experimental implementation. Additionally, most sufficiently fast real-world implementations of coupled systems will not be able to be instantaneously coupled inside the network motif. We propose a different masking process for network motifs profits that profits from the fact that small network motifs of real nodes would usually already contain time-delay connections. Followingly these already present delay-connections can be utilized for the time-multiplexing procedure. We therefore generate a mask for each node independently and simply drive and read-out the network as is. While in the traditional delay-line approach the ’virtual nodes’ are often portrayed as lieing on the single long delay-loop, this representation is no longer possible in a complex network motif. Nevertheless, the same principles of time-multiplexing apply.

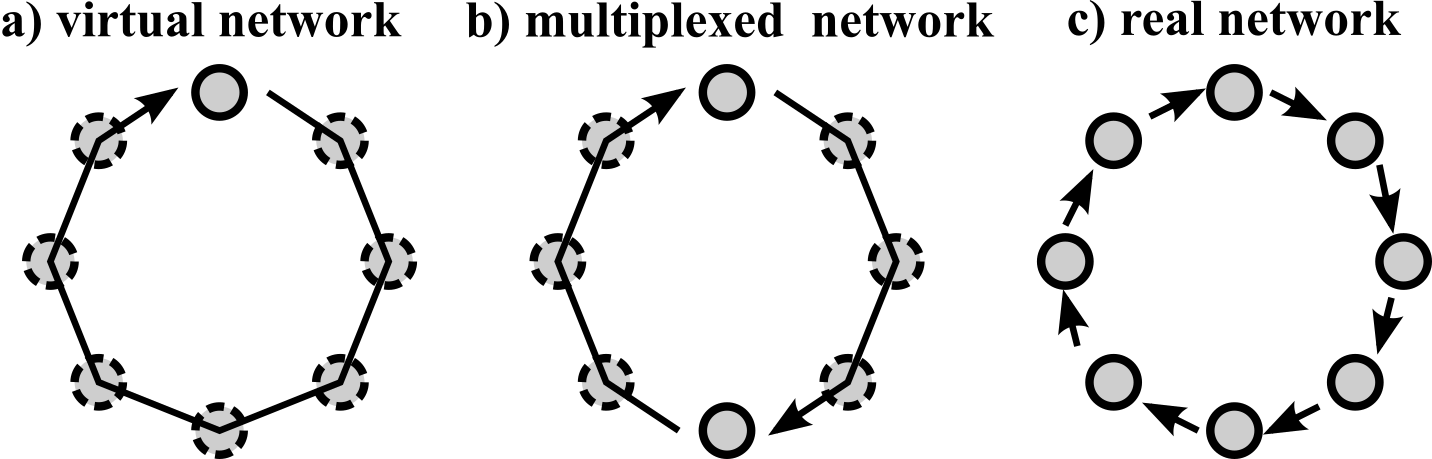

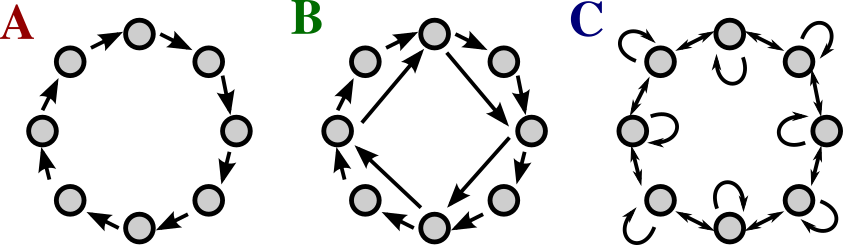

Figure 3 shows a sketch of the different network types that we will compare: Virtual networks consist of a single node with feedback, in which a rich phase space response is created with the masking procedure as described in Sec. III, cf. Fig. 3 a). We also look at delay-coupled network motifs using the already present delay of the connections, shown in Fig. 3 b). A mask of length is generated for each node individually, and the state of every real node is recorded simultaneously. When we increase the number of real nodes and reduce the virtualization factor to 1 the system becomes a network of purely real oscillators, Fig. 3 c).

Many systems in nature are coupled oscillatory systems. These not only include electromagnetic waves, but also nanomechanical oscillators and chemical oscillators among others. As we are interested in fundamental properties of reservoir computing systems, we will not focus on a specific experimental application in depth. Instead, we employ the fundamental case of delay-coupled Stuart-Landau oscillators, described by the complex variables in the following system of DDEs:

| (1) |

Here is the bifurcation parameter with an Andronov-Hopf-bifurcation occurring at in a solitary oscillator, is the frequency of the free-running oscillator. The sign of the real part of the nonlinearity defines whether the Andronov-Hopf-bifurcation is sub- or supercritical, while the imaginary part defines the hardness of the spring and induces an amplitude-phase coupling. Hence, is linked to the amplitude-phase or linewidth-enhancement factor of semiconductor lasers Böhm et al. (2015). The network-coupling between the oscillators is defined by the coupling strength and coupling phase . The topology of the network is given by the adjacency matrix . The coupling and feedback terms are delayed with the delay time . For our numerical simulations we set (supercritical case), , and , unless noted otherwise. We assume all delay-lengths to be identical. This model can approximate a wide range of different oscillatory systems that are coupled instantaneously, i.e., with negligible transmission and coupling delay. The Stuart-Landau system is the normal form of an Andronov-Hopf-bifurcation and therefore any system close to such a bifurcation can be approximated with the nonlinearity of Eq. (1). This model can therefore also describe lasers if they are operated close to an instability threshold.

For the systematic study of reservoir computing performance, we create networks of different sizes. As the reservoir computing performances generally increases with the dimension of the read-out, we keep the output-degrees constant. For this, we create networks for which the product of real nodes and degree of virtualization is constant. However, as the degree of virtualization is linked with the time-per-virtual-node , this means we also change the delay time when changing . We have chosen to use a system with base to create the different networks. We keep the product constant and increase by factors of 2 from to . The time per virtual node is and the delay time set to . Our mask length and delay-length are therefore non-identical, which has been shown to increase performance Paquot et al. (2012). We inject by varying in Eq. (1), corresponding to a driving current in a laser. The maximum injection strength is and the mask values are binary, i.e. either or .

We test the system using the Nonlinear-Autoregressive Moving Average Task (NARMA) Atiya and Parlos (2000) of length 10. This simulates a complex nonlinear transform of an input array, where both memory and nonlinear transformation capabilities are needed. From a given series series drawn from a uniform distribution , the trained system has to calculate the corresponding NARMA10 series. The NARMA10() is defined by an iterative formula as given by:

| (2) |

Furthermore, we also test the performance for the Santa-Fe laser chaotic time series prediction task. This dataset contains roughly 9000 data points for a chaotic laser. Given the timeseries up to a point , the system is trained to predict the future step(s) of this chaotic series. We restrict our-selves to the 1-step prediction in this report. We have always used a training and testing length of 2900 datapoints with a buffer length of 100 for the Santa Fe task.

We evaluate the performance for both tasks by calculating the normalized-root-mean-squared error (NRMSE), where the normalization is done with the variance of the target series. Given a target series and the output of the trained system , we calculate the NRMSE as:

| (3) |

The NRMSE is for a perfect agreement between target and output, while is the highest reasonable error, representing a static prediction of the average of the target.

V Two delay-coupled Oscillator

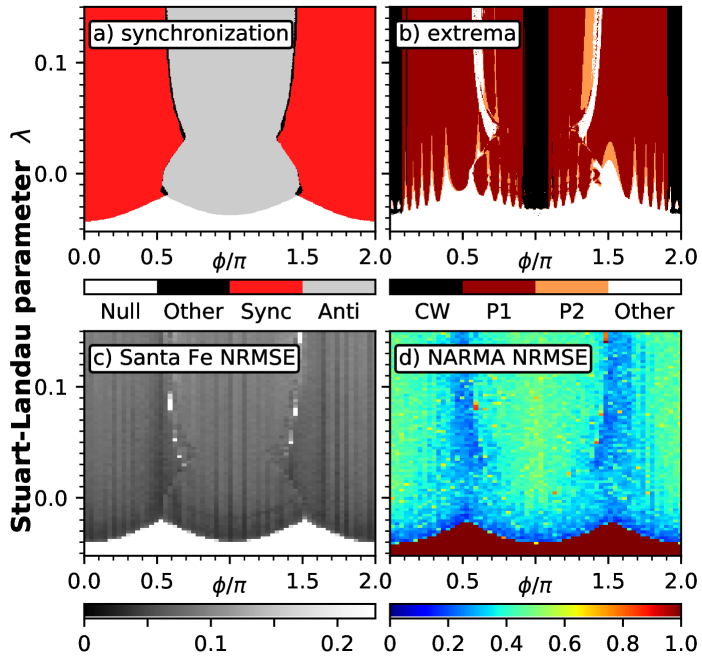

The simplest case of a multiplexed network is the case of two real delay-coupled nodes. The system of coupled Stuart-Landau oscillators is a well-studied example in nonlinear dynamics. Networks of such oscillators can exhibit a wide range of different dynamics Hakim and Rappel (1992); Nakagawa and Kuramoto (1993); Ku et al. (2015); Koseska et al. (2013); Ushakov et al. (2005); Geffert et al. (2014); Zakharova et al. (2014, 2016). Here, we study the connection between reservoir computing capabilities and the dynamics of the underlying network Röhm et al. (2017). In Fig. 4 we have numerically integrated the system given by Eq. (1) with , , , , , for different values of the coupling phase and base input parameter . Note, that is additionally modified by the input procedure by up to in panels c) and d).

Panel a) of Fig. 4 shows the synchronization type of the network without input. White regions correspond to the off-state , synchronization occurs in the red regions centered around and anti-synchronization for the grey regions. Fig. 4 b) shows the number of different maxima of of the network without input, highlighting the regions of dynamic complexity. The black regions exhibit constant amplitudes , while the colored regions contain higher-order dynamics, i.e. amplitude oscillations, period doubling cascades and quasiperiodic behaviour. The white regions contain other dynamics, mostly the off-solution and complex behaviour. Finally, Fig. 4 c) shows the error landscape as measured by the NRMSE for the Santa Fe 1-step prediction task for this network, with the results for the NARMA10 task in panel d). We have used a training length of 1500 data points for training and 500 data points for testing, with an additional 150 data points as a buffer for the NARMA task. The darker/blue colors correspond to a low error, i.e. high performance, while brighter/yellow regions exhibit poor reservoir computing capabilities.

Analyzing the relationship between the different characteristics shown in Fig. 4 we can find a few general trends: First, we find that the regions of the off-solution (low , compare Fig. 4 a) cannot be used for reservoir computing. This is not surprising, as the system does not react at all to input, if the parameter never exceeds the onset of oscillations. A system that does not react to the driving signal will not be able to output a transformation of that signal and hence not have any computational power. Next, within the regions of synchronization and desynchronization there is considerable variability of the error in Fig. 4 c) and d). The regions of lowest error generally lie within the area of synchronization, while the anti-synchronization never reaches NRMSE values that low. Moreover, there exist many regions that exhibit time-dependent amplitude-modulations even without input (cf. colored regions in Fig. 4 b), i.e. the system is on a limit cycle. These amplitude oscillations will, in general, not have a period that is identical to our input timing window . Hence, the network will react differently to the same input, depending on its position in phase space when the input is applied. This violates one of the core requirements for reservoir computing, namely the ’reproducibility’ of phase-space trajectoriesOliver et al. (2016). Nevertheless, we find the regions of best performance to lie in those areas. This demonstrates that looking at the network without input is not sufficient to predict the reservoir computing behaviour. Furthermore, it is likely that the amplitudes of the oscillations can influence, how much the performance is degraded. Fig. 4 c) and d) furthermore reveal, that the regions of best performance can be found close to the bifurcation lines separating the regions of different behaviour. The ’edge of chaos’ has been mentioned as the optimal driving point for reservoir computers in previous works Berg et al. (2004); Verstraeten et al. (2007). A similar effect is occuring here, where the regions closer to dynamic complexity exhibit a better performance.

VI Networks

When going beyond the simple case of , the possibility of how to couple the networks increases dramatically. We cannot test and investigate all topologies, so in this report we use three different topologies for the underlying network of real oscillators. As we are using the already present links inside the network, we can in principle use any network topology in conjunction with the time-multiplexing procedure. However, as we are most interested in general trends we will abstain from using random or complex topologies and focus on three very simple topologies as sketched in Fig. 5. First, we test a unidirectional ring. This is not only a very simple network topology, but also in some sense represents the ’virtual network’ created for a single node with delay Schumacher et al. (2013). An example is shown in Fig. 5 A for . As a second example, we use the same network but add crosslinks for every fourth node, jumping forward 4 nodes. Fig. 5 B shows an example for (the sketch contains jumps with only length 2). Note, that this network will be identical by construction to the unidirectional ring for . For both types of unidirectional ring we take the links to be all identical in strength. Lastly, we test a bidirectional ring with self-feedback, as shown in Fig. 5 C. Here we are inspired by the often used difference coupling and hence the self-feedback is assumed to have double the strength and a differing sign than the bidirectional links.

For each parameter combination we randomly generate the mask sequence, i.e. the two-dimensional scans shown have a different mask for every parameter combination. The NARMA sequence is fixed. In the following we have used a training length of 5000, with an additonal buffer of 1000 at the start to let the system settle into the correct trajectory. The evaluation was done with identical lengths. The simulations were programmed with custom code written in C++ and run on the CPUs of a network of approximately 30 conventional workstation computers.

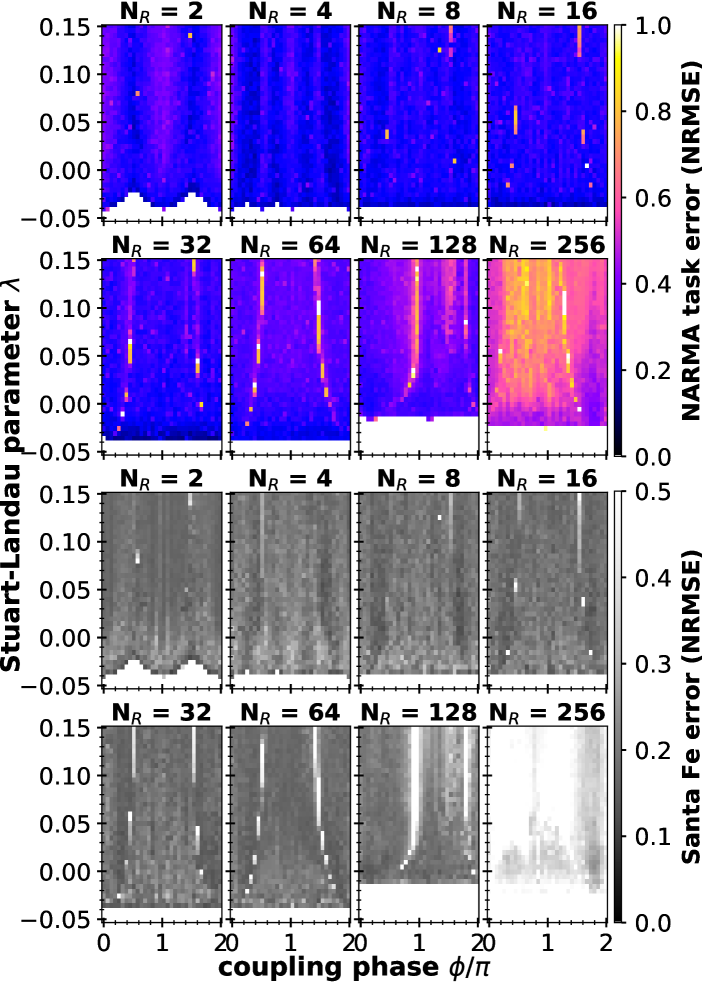

Figure 6 shows the results of our numerical simulation of networks with different number of real nodes for the unidirectional ring as shown in Fig. 5 A, while adjusting the number of virtual nodes to keep the overall reservoir readout dimension constant. The top 8 panels show the result for the NARMA10 task, while the bottom panels show the results for the Santa Fe laser 1-step prediction task. High errors (yellow/white) designate undesirable regions for computing, while low errors (blue/dark grey) show regions of effective computing.

The structure of the colored regions in Fig. 6 is dominated by a sudden cutoff for low . This corresponds to the threshold of oscillations for the individual oscillator, and for values lower than a critical no stable oscillation exists. This is the same mechanism as already discussed for the case of in Sec. V. Note, that the maximum input strength of the pump term is . The comparison of the different number of real nodes in Figure 6 shows several trends: While at first a clear global structure is visible for with two ’valleys’ of good performance, this splits into four valleys for . For larger networks this structure is washed out, and instead two lines of high NRMSE become visible (the red and yellow lines in Fig. 6 for ). These likely indicate bifurcations in the underlying state diagram. For the networks with the highest number of real nodes ( and in Fig. 6) the performance is greatly degraded. Additionally, the cut-off threshold is strongly modified in these last panels, as the delay-time is significantly shorter, as we scale the delay inversely with the number of real nodes.

Overall, the performance of the network reaches values favorable for reservoir computing in all the low- cases. Furthermore, from in Fig. 6 it is qualitatively apparent, that the dependence of the performance on the parameters is reduced for these intermediate networks.

The lower 8 panels of Fig. 6 show the results for numerical simulations of the network of Stuart-Landau oscillators as given by Eq. (1) for the Santa Fe laser chaotic time-series prediction task for different in the plane of and coupling-phase . The grey color code shows the test run NRMSE. Note the different scaling compared to the NARMA10 color scale, due to the overall lower NRMSE for the Santa Fe task. The Santa Fe laser task NRMSE shows a qualitatively similar behaviour as the NARMA10 NRMSE shown in the top-panels of Fig. 6. The same lines of high error can be found for . Additionally, the region of good performance is limited by the same cut-off for low . We find a high degree of similarity between the performance in the Santa Fe and NARMA10 task, i.e. regions that are suitable for one are also suitable for the other. For some values of this is more apparent than others in Fig. 6. The generally accepted mechanism behind most of the transformations is the ability of the reservoir to store memory and its ability of nonlinear transformation. Ref. Dambre et al. (2012) introduced the notion of dividing the stored information inside the reservoir into the linear memory capacity, representing a mere recording of past inputs, and nonlinear memory capacity, representing storage of transformed information. Together these memory capacities form a complete basis in the space of transformations. The fact that both Santa Fe and NARMA10 exhibit similar performance profile as shown in Fig. 6 indicates that both tasks need a similar set of linearly and nonlinearly stored information.

We have used the data sets generated for Fig. 6 to calculate the covariance of NRMSE for the Santa Fe and NARMA10 task. Using the raw data, we find very high covariances of over for all values of . However, this behaviour is mostly dominated by the regions of NRMSE close or equal to for low . When we exclude all points with NRMSE greater than we get drastically lower values, ranging from for to for . For we find a small negative covariance of . These much lower values have two reasons: First, even a visual inspection does reveal some deviation of details for the NRMSE landscape in Fig. 6. For example, the error seems to generally increase with for for the NARMA10 task, while it decreases for the same with in the Santa Fe task. Second, and more importantly, the covariances we have calcuated only represented a lower bound. We have two sources of uncertainty for our simulations that we cannot control for in Fig. 6, namely that we are independently creating random binary masks and drawing the source sequence for the NARMA10 task for every parameter combination and . We are therefore comparing the Santa Fe and Narma task results for different masks, while the parameter scan of the NARMA10 task shown in Fig. 6 uses a new independet but identically distributed for every parameter combination. These limitations have only been considered after the extensive numerical simulations required for the two-dimensional parameter scans and therefore a detailed analysis will have to be left for future investigations.

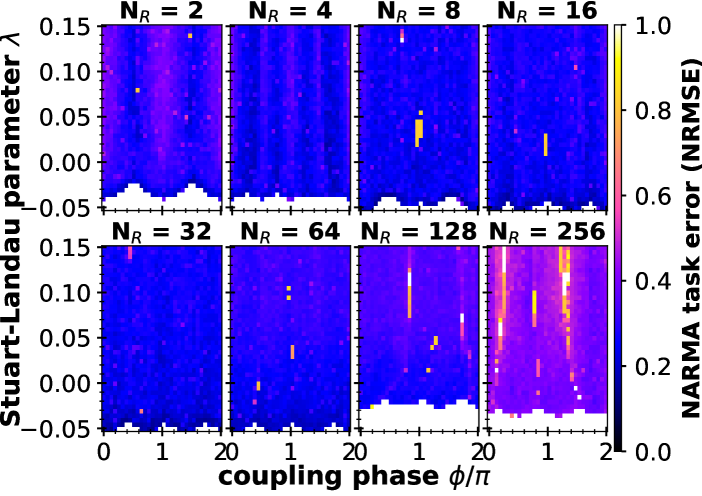

Figure 7 shows the result of the NARMA testing error for the unidirectional ring with jumps (network type in Fig. 5 B). Due to construction, this system is identical for and to the pure unidirectional ring as shown in Fig. 6. We do however randomly generate a new mask and NARMA target series for every parameter combination. Therefore a comparison between Fig. 7 and Fig. 6 for and allows us to see the influence of differing masks. The global structure of the NRMSE is not changed. This indicates that the performance is reproducable across different masks and NARMA10 series. For Fig. 7 and Fig. 6 differ due to the extra links added in Fig. 7. From a mere visual inspection no drastic difference of the quality of perfomance can be seen. Nevertheless, the global structure differs as the lines of bad performance and border regions with the off-state have shifted. This is to be expected, as additional links will change the bifurcations occuring in the network and bifurcations are usually associated with extrema in the performance. As was found for the pure unidrectional ring, we also see a dramatic breakdown in performance in Fig.7 for . Additionally, the same ’washing out’ of structure can be observed for the intermediate values of , indicating a reduced parameter dependence of multiplexed networks with multiple real and virtual nodes.

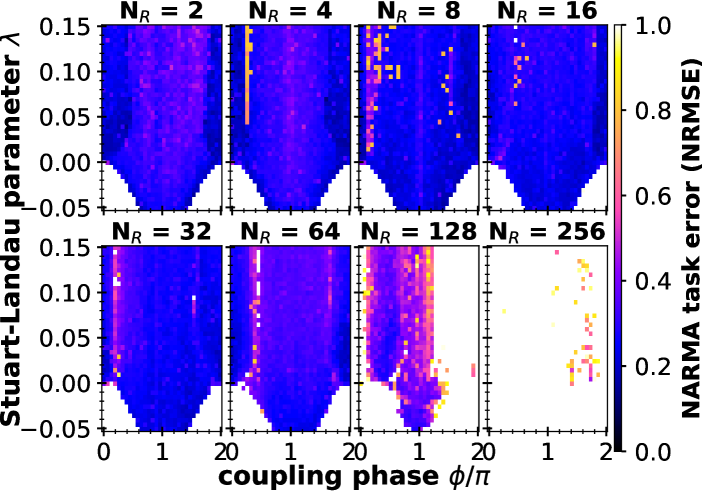

Lastly, Fig. 8 shows the performance of a bidirectionally coupled ring of oscillators with self-feedback as described by Eq. (1) (topology as sketched in Fig. 5 C). Due to the fundamentally different topology, the global structure of the NRMSE shown in Fig. 8 differs from Fig. 6 and Fig. 7. Both the boundary towards the ’off-solution’ (white areas of poor performance at the bottom of Fig. 8) as well as regions of optimal performance are at different locations. The dropout of performance for low degrees of virtualization, i.e. high number of real nodes is the most extreme in this topology (compare across Fig. 6, 7 and 8). This is possibly due to higher multistability of the system due to the more complex but regular topology.

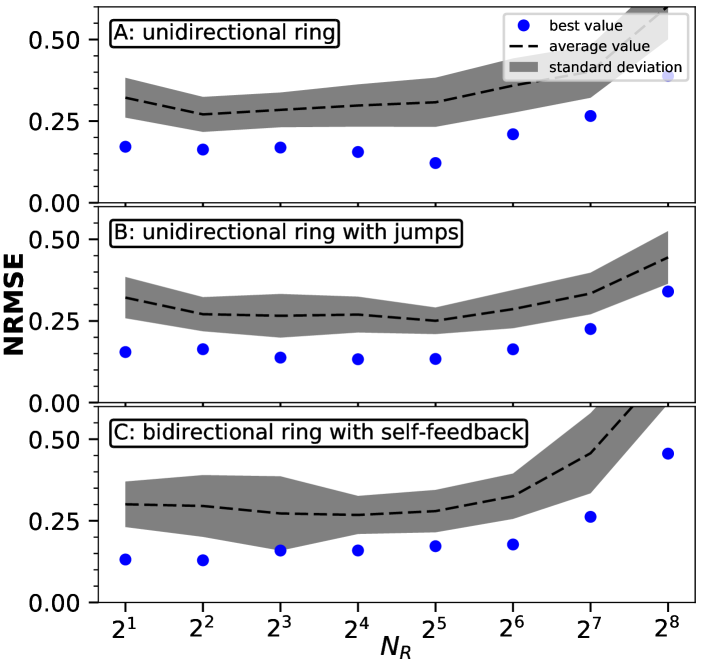

For a qualitative comparison of the general trends we have generated Fig. 9, where the number of real nodes and NRMSE for the NARMA10 task is shown. We have used data simulated for the 2-parameter scans for the unidirectional ring shown in Fig. 6, 7 and 8. The blue dots correspond to the optimal or lowest NRMSE found within the 2d-scan for a given network size . For the simulations as obtained Fig. 6 we have found that the network with no virtualization never showed a NRMSE smaller than . The black line in Fig. 9 shows the average NRMSE for the NARMA task (excluding the white regions of no performance in Fig 6). The grey band shows one standard deviation of the NRMSE data. The qualitative impression of the color plots in Fig. 6 are largely validated by the quantitative evaluation: Both the average NRMSE as well as the optimal NRMSE are mostly flat for multiplexed networks created for low number of real nodes until the performance breaks down for the large networks with low virtualization. All network topologies show qualitatively similar results in Fig. 9.

There are still more effects that warrant some attention. We run a few simulations for a network of slightly non-identical units for the bidirectional ring with self-feedback (Fig. 5 C). This more closely resembles an actual experimental implementation of a network, as in real setups no two nodes would be absolutely identical. We have used the same parameter locations as for the bidirectional ring with self-feedback. However, the difference we found was small.

For all the networks used here, we have employed the same delay length for every network link. Considering that making the mask and delay term non-identical improves performance, it seems likely that non-identical delay-lines could have a similar effect. However, simulating a network of non-identical delay-links is time-consuming and left for future investigations here.

VII Conclusions

We have investigated the reservoir computing performance of a time-continuous system with delay. While many studies have been published concerning a single dynamical system with a long delay loop, we have numerically simulated network motifs consisting of several nodes that are delay-coupled. We have used the time-multiplexing/masking procedure to generate additional high-dimensional trajectories. We have constructued the ’multiplexed networks’ in such a way, that the over all dimension of the read-out stays constant. This enables us to not only qualitatively, but also quantitatively compare the reservoir computing performance.

Reservoir computers consisting exclusively of large real, regular networks have exhibited poor performance in both the NARMA and Santa Fe task, independent of local topology. We attribute this both to the higher multistability of such systems, as well as the relative lack of complex phase space trajectories due to the absence of time-multiplexing. In contrast, networks of only small and intermediate size have performed consistently on a state-of-the-art level. We found a lowered parameter sensitivity and an enhanced computation speed for such systems. This is encouraging for experimental realizations, as our results indicate that the design of a reservoir computer can be chosen with some degree of freedom. As long as a sufficient time-multiplexing is used, the number of real nodes can be adjusted to fit experimental limitations and desired output speed.

VIII Acknowledgement

The authors thank B. Lingnau and L. Jaurigue for fruitful discussions. This work was supported by the DFG in the framework of the SFB910.

References

References

- Jaeger (2001) H. Jaeger, The ’echo state’ approach to analysing and training recurrent neural networks, GMD Report 148 (GMD - German National Research Institute for Computer Science, 2001).

- Maass et al. (2002) W. Maass, T. Natschläger, and H. Markram, Neural Comp. 14, 2531 (2002).

- Schiller and Steil (2004) U. Schiller and J. Steil, Neurocomputing 63, 5 (2004).

- Bauduin et al. (2015) M. Bauduin, A. Smerieri, S. Massar, and F. Horlin, in 2015 IEEE 81st Vehicular Technology Conference (VTC Spring) (2015).

- Keuninckx et al. (2017) L. Keuninckx, J. Danckaert, and G. Van der Sande, Cogn. Comput. (2017), 10.1007/s12559-017-9457-5.

- Argyris et al. (2017) A. Argyris, J. Bueno, M. C. Soriano, and I. Fischer, “Improving detection in optical communications using all-optical reservoir computing,” Abstract (2017).

- Dockendorf et al. (2009) K. Dockendorf, I. Park, P. He, J. C. Principe, and T. B. DeMarse, Biosystems 95 (2009).

- Fernando and Sojakka (2003) C. Fernando and S. Sojakka, in Advances in Artificial Life (2003) pp. 588–597.

- Antonik et al. (2016) P. Antonik, F. Duport, M. Hermans, A. Smerieri, M. Haelterman, and S. Massar, IEEE Trans. Neural Netw. Learn. Syst. (2016), 10.1109/tnnls.2016.2598655.

- Rodan and Tiňo (2011) A. Rodan and P. Tiňo, IEEE Trans. Neural Netw. 22 (2011), 10.1109/tnn.2010.2089641.

- Verstraeten et al. (2007) D. Verstraeten, B. Schrauwen, M. D’Haene, and D. Stroobandt, Neural Networks 20, 391 (2007).

- Steil (2007) J. Steil, Neural Networks 20 (2007), 10.1016/j.neunet.2007.04.011.

- Rodan and Tiňo (2012) A. Rodan and P. Tiňo, Neural Computation 24, 1822 (2012).

- Appeltant et al. (2011) L. Appeltant, M. C. Soriano, G. Van der Sande, J. Danckaert, S. Massar, J. Dambre, B. Schrauwen, C. R. Mirasso, and I. Fischer, Nature Commun. 2, 468 (2011).

- Brunner et al. (2013) D. Brunner, M. C. Soriano, C. R. Mirasso, and I. Fischer, Nature Commun. 4 (2013), 10.1038/ncomms2368.

- Vinckier et al. (2015) Q. Vinckier, F. Duport, A. Smerieri, K. Vandoorne, P. Bienstman, M. Haelterman, and S. Massar, Optica 2 (2015), 10.1364/optica.2.000438.

- Nguimdo et al. (2017) R. M. Nguimdo, E. Lacot, O. Jacquin, O. Hugon, G. Van der Sande, and H. G. de Chatellus, Opt. Lett. 42 (2017), 10.1364/ol.42.000375.

- Larger et al. (2012) L. Larger, M. C. Soriano, D. Brunner, L. Appeltant, J. M. Gutierrez, L. Pesquera, C. R. Mirasso, and I. Fischer, Opt. Express 20, 3241 (2012).

- Paquot et al. (2012) Y. Paquot, F. Duport, A. Smerieri, J. Dambre, B. Schrauwen, M. Haelterman, and S. Massar, Sci. Rep. 2 (2012).

- Zhang et al. (2014) H. Zhang, X. Feng, B. Li, Y. Wang, K. Cui, F. Liu, W. Dou, and Y. Huang, Opt. Express 22, 31356 (2014).

- Nguimdo et al. (2015) R. M. Nguimdo, G. Verschaffelt, J. Danckaert, and G. V. d. Sande, IEEE Trans. Neural Netw. Learn. Syst. 26 (2015).

- Schumacher et al. (2013) J. Schumacher, H. Toutounji, and G. Pipa, “An analytical approach to single node delay-coupled reservoir computing,” Conference: 23rd International Conference on Artificial Neural Networks (2013).

- Vandoorne et al. (2008) K. Vandoorne, W. Dierckx, B. Schrauwen, D. Verstraeten, R. Baets, P. Bienstman, and J. V. Campenhout, Opt. Express 16, 11182 (2008).

- Vandoorne et al. (2011) K. Vandoorne, J. Dambre, D. Verstraeten, B. Schrauwen, and P. Bienstman, IEEE T. Neural. Networ. 22 (2011).

- Vandoorne et al. (2014) K. Vandoorne, P. Mechet, T. Van Vaerenbergh, M. Fiers, G. Morthier, D. Verstraeten, B. Schrauwen, J. Dambre, and P. Bienstman, Nature Photon. 5 (2014).

- Crutchfield et al. (2010) J. P. Crutchfield, W. Ditto, and S. Sinha, Chaos 20 (2010), 10.1063/1.3492712.

- Lang and Kobayashi (1980) R. Lang and K. Kobayashi, IEEE J. Quantum Electron. 16, 347 (1980).

- Alsing et al. (1996) P. M. Alsing, V. Kovanis, A. Gavrielides, and T. Erneux, Phys. Rev. A 53, 4429 (1996).

- Soriano et al. (2013) M. C. Soriano, J. García-Ojalvo, C. R. Mirasso, and I. Fischer, Rev. Mod. Phys. 85, 421 (2013).

- Nakayama et al. (2016) J. Nakayama, K. Kanno, and A. Uchida, Opt. Express 24, 8679 (2016).

- Grigoryeva et al. (2015) L. Grigoryeva, J. Henriques, L. Larger, and J. P. Ortega, Sci. Rep. 5, 12858 (2015).

- Böhm et al. (2015) F. Böhm, A. Zakharova, E. Schöll, and K. Lüdge, Phys. Rev. E 91, 040901 (R) (2015).

- Atiya and Parlos (2000) A. F. Atiya and A. G. Parlos, IEEE Trans. Neural Netw. 11 (2000).

- Hakim and Rappel (1992) V. Hakim and W. J. Rappel, Phys. Rev. A 46, R7347 (1992).

- Nakagawa and Kuramoto (1993) K. Nakagawa and Y. Kuramoto, Prog. Theor. Phys. 89, 313 (1993).

- Ku et al. (2015) W. L. Ku, M. Girvan, and E. Ott, Chaos 25 (2015).

- Koseska et al. (2013) A. Koseska, E. Volkov, and J. Kurths, Phys. Rep. 531, 173 (2013).

- Ushakov et al. (2005) O. V. Ushakov, H. J. Wünsche, F. Henneberger, I. A. Khovanov, L. Schimansky-Geier, and M. A. Zaks, Phys. Rev. Lett. 95, 123903 (2005).

- Geffert et al. (2014) P. M. Geffert, A. Zakharova, A. Vüllings, W. Just, and E. Schöll, Eur. Phys. J. B 87, 291 (2014).

- Zakharova et al. (2014) A. Zakharova, M. Kapeller, and E. Schöll, Phys. Rev. Lett. 112, 154101 (2014).

- Zakharova et al. (2016) A. Zakharova, M. Kapeller, and E. Schöll, J. Phys. Conf. Series 727, 012018 (2016).

- Röhm et al. (2017) A. Röhm, K. Lüdge, and I. Schneider, arXiv (2017), 1712:01610.

- Oliver et al. (2016) N. Oliver, L. Larger, and I. Fischer, CHAOS 26, 103115 (2016).

- Berg et al. (2004) T. W. Berg, J. Mørk, and J. M. Hvam, New J. Phys. 6, 178 (2004).

- Dambre et al. (2012) J. Dambre, D. Verstraeten, B. Schrauwen, and S. Massar, Sci. Rep. 2 (2012), 10.1038/srep00514.

IX Methods

A minimal example of an ’echo state network’, i.e. a time-discrete reservoir computer, as described from Ref. Jaeger (2001) is:

| (4) | ||||

| (5) |

Where is the state-vector of the network of maps, is the adjacency matrix of the network, is the matrix of input-coupling for the data stream . The time is taken to be discrete and the evolution of the network is given by Eq. 4. represents a local sigmoidal function that acts element-wise on . The output is calculated from with the outcoupling weight matrix . The matrices , ande now have to be chosen in such a way that corresponds to the desired transformation. The distinguishing feature now lies in the optimization process. While conventional deep convolutional neural network learning schmes heavily focus on the training of the , this matrix is assumed to be fixed for reservoir computing. In fact, training is only applied to the output weight matrix , for which a simple linear regression is enough to find the optimal values Jaeger (2001).