Resilient Distributed Parameter Estimation in Sensor Networks

Abstract

In this paper, we study the problem of parameter estimation in a sensor network, where the measurements and updates of some sensors might be arbitrarily manipulated by adversaries. Despite the presence of such misbehaviors, normally behaving sensors make successive observations of an unknown vector parameter and aim to infer its true value by cooperating with their neighbors over a directed communication graph. To this end, by leveraging the so-called dynamic regressor extension and mixing procedure, we transform the problem of estimating the vector parameter into that of estimating a set of scalar ones, one for each entry. For each of the scalar problems, we propose a resilient combine-then-adapt diffusion algorithm, where each normal sensor performs a resilient combination to discard the suspicious estimates in its neighborhood and to fuse the remaining values, alongside an adaptation step to process its streaming observations. With a low computational cost, this estimator guarantees that each normal sensor infers the true parameter exponentially fast even if some of them are not sufficiently excited.

I Introduction

As a fundamental problem in various applications such as system identification and adaptive control, distributed parameter estimation has been studied in the literature over decades (see, e.g., [1, 2, 3, 4, 5]). In this problem, each sensor observes (partial) information of a system with an unknown (vector) parameter, and attempts to consistently estimate the true parameter by cooperating with others.

As for a single sensor, it is well known that consistent estimation is possible only if its regressor meets certain excitation conditions. Moreover, exponential convergence can be further achieved if a persistent excitation (PE) condition is verified, which guarantees that the input signals of a plant are sufficiently rich that all modes of the plant can be excited [1]. However, in a distributed framework, the PE condition may not necessarily hold at every sensor side. Therefore, by properly introducing consensus algorithms, weaker excitation conditions have been proposed, with which sensors collectively satisfy the PE condition and cooperatively fulfill the estimation task (see, for example, [4, 6, 7]).

However, distributed estimation algorithms, despite relaxing the condition on PE and providing better flexibility as compared to their centralized counterparts, are vulnerable to adversarial behaviors in the network [8]. As the scale of the network increases, it becomes especially difficult to secure every sensor and communication channel. Particularly, adversaries could manipulate measurements or transmissions of sensors and disrupt the operation of conventional algorithms. In fact, as reported in [9], even a single adversary is able to drive normal sensors falsely to its desired estimates.

Inspired by these issues, recent research efforts have been devoted to the design of secure estimation protocols. A large class of methods focuses on developing resilient algorithms which ensure that all the normally behaving sensors resiliently recover the unknown parameter even in the presence of attacks. To raise the resiliency against malicious behaviors, many approaches adopt the idea to simply ignore the most extreme values in the neighborhood. Stemming from this idea, a family of strategies termed as mean-subsequence reduced (MSR) algorithms has been proposed and widely applied to solve the problem of resilient consensus (see, for example, [10, 11, 12, 13]). However, different from the consensus problem, which does not incorporate sensors’ measurements, in the problem of resilient estimation, each sensor must generate a secure estimate by processing its streaming data. To cope with this issue, the works [14, 9] have developed resilient estimators by extending the MSR algorithms. These estimators are shown to be resilient to Byzantine sensors if the network is sufficiently connected and the collective measurements from normal sensors are observable for the system state. Other resilient approaches include [8, 15], where a pre-defined threshold is necessary at each sensor side to check the distance between the local estimates of its neighbors and its own, and thus limits effects from the misbehaving ones.

In this paper, we also investigate the problem of distributed parameter estimation in an adversarial environment. However, different from most of the aforementioned works, where the regressor is assumed to be a constant matrix, we consider a more general model. Specifically, the observations of each sensor are generated via a linear regression equation (LRE), which is able to describe the input-output relationship of a large class of linear and nonlinear dynamical systems [1]. Moreover, notice that in the literature [8, 15, 16], it is assumed that only the measurements of sensors can be manipulated. In contrast, this paper considers the case where not only the measurements, but also the updating rules of a sensor can be faulty and arbitrarily manipulated. Notice that the latter situation could happen if some “non-participating” sensors exist in the network, which weigh their private interests more than the public ones and are not willing to follow the given protocols.

Our estimator is inspired by the so-called dynamic regressor extension and mixing (DREM) algorithm. DREM was first introduced in the recent work [17], where, in a fault-free environment, it performs well in relaxing the excitation condition and guaranteeing asymptotic convergence at a fusion center. This paper, with subtle modifications to DREM, proposes a resilient combine-then-adapt (RCTA) diffusion algorithm to accommodate the difficulties brought by the distributed framework and malicious behaviors. To be specific, by leveraging DREM, we transform the problem of estimating a vector parameter into that of estimating scalar ones: one for each of the unknown parameters. For each of the scalar problems, an estimation strategy is given, where each normal sensor runs a resilient algorithm to discard the suspicious estimates in its in-neighborhood and fuse the remaining values, alongside an adaptation process to incorporate its own measurements by using least-mean squares. We provide sufficient conditions under which each normal sensor exponentially infers the true parameter over a directed graph, even if some of them are not sufficiently excited through their inputs. Notice that by decoupling the vector estimation problem into scalar ones, the algorithm proposed here admits a lightweight implementation, which yields lower cost in solving the more complicated distributed estimation problems.

The rest of this paper is organized as follows. We introduce some preliminaries and the problem formulation in Sections II and III, respectively. The main algorithm and its convergence analysis are presented in Section IV. We test the main results through some numerical examples in Section V. Finally, Section VI concludes the paper.

II Preliminaries

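Consider a digraph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$. Let $\mathcal{V}$ be the set of sensors, and $\mathcal{E} \subseteq \mathcal{V} \times \mathcal{V}$ be the set of edges. An edge from sensor $j$ to sensor $i$ is denoted by $(j, i) \in \mathcal{E}$, indicating that sensor $i$ can receive information directly from sensor $j$. Accordingly, the sets of in-neighbors and out-neighbors of sensor $i$ are defined, respectively, as

$$ \mathcal{N}_i^{\mathrm{in}} = \{ j \in \mathcal{V} : (j, i) \in \mathcal{E} \}, \qquad \mathcal{N}_i^{\mathrm{out}} = \{ j \in \mathcal{V} : (i, j) \in \mathcal{E} \}. \tag{1} $$

For the algorithms employed in this paper, we shall characterize their resilience in terms of the definitions below [9]:

Definition 1 ($r$-reachable)

Consider the digraph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$. A set $\mathcal{C} \subseteq \mathcal{V}$ is said to be $r$-reachable if it contains at least one sensor that has at least $r$ in-neighbors from outside $\mathcal{C}$. That is, there exists $i \in \mathcal{C}$ such that $|\mathcal{N}_i^{\mathrm{in}} \setminus \mathcal{C}| \geq r$.

Definition 2 (Strongly $r$-robust w.r.t. $\mathcal{S}$)

Consider the digraph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ with $\mathcal{S}$ being a nonempty subset of $\mathcal{V}$. The graph is said to be strongly $r$-robust w.r.t. $\mathcal{S}$, if for any nonempty subset $\mathcal{C} \subseteq \mathcal{V} \setminus \mathcal{S}$, $\mathcal{C}$ is $r$-reachable.

Intuitively, Definition 2 requires that any nonempty subset of $\mathcal{V} \setminus \mathcal{S}$ has at least one sensor with a sufficient number of in-neighbors from outside the subset.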
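To make Definitions 1 and 2 concrete, the following is a minimal brute-force sketch of how the two properties could be checked on a small digraph. The function names, the edge convention (a pair `(j, i)` means that `j` sends to `i`), and the 5-node example graph are assumptions made for illustration; they are not taken from the paper. The subset enumeration is exponential in the number of nodes, so this is only suitable for small networks.

```python
from itertools import combinations

def in_neighbors(edges, i):
    """Return the in-neighbor set of node i; an edge (j, i) means j sends to i."""
    return {j for (j, t) in edges if t == i}

def is_r_reachable(edges, subset, r):
    """True if some node in `subset` has at least r in-neighbors outside `subset`."""
    subset = set(subset)
    return any(len(in_neighbors(edges, i) - subset) >= r for i in subset)

def is_strongly_r_robust(nodes, edges, r, s):
    """Brute-force check: every nonempty subset of nodes outside `s` is r-reachable."""
    outside = sorted(set(nodes) - set(s))
    for size in range(1, len(outside) + 1):
        for subset in combinations(outside, size):
            if not is_r_reachable(edges, subset, r):
                return False
    return True

# Hypothetical 5-node example: nodes 0, 1, 2 form the reference set and each of
# them sends to nodes 3 and 4; nodes 3 and 4 also exchange estimates.
nodes = [0, 1, 2, 3, 4]
edges = {(j, i) for j in (0, 1, 2) for i in (3, 4)} | {(3, 4), (4, 3)}
robust_3 = is_strongly_r_robust(nodes, edges, r=3, s={0, 1, 2})
robust_4 = is_strongly_r_robust(nodes, edges, r=4, s={0, 1, 2})
```

In this example the pair of nodes 3 and 4, taken together, only sees the three reference nodes from outside, so the graph passes the check for a robustness level of 3 but fails it for 4.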

III Problem Formulation

Let us consider the problem of distributed parameter estimation, where multiple sensors cooperatively infer an unknown parameter $\theta$ through local measurements. To be specific, for each sensor $i \in \mathcal{V}$, the measurable signals $y_i(k) \in \mathbb{R}$ and $\phi_i(k) \in \mathbb{R}^m$ at time $k$ are related via the following linear regression equation (LRE) (see Footnote 1):

$$ y_i(k) = \phi_i^\top(k)\,\theta, \tag{2} $$

where $\theta \in \mathbb{R}^m$ is the parameter to be estimated and $\phi_i^\top(k)$ denotes the transpose of $\phi_i(k)$.

Footnote 1: The results in this paper can be readily generalized to cases where the sensor outputs a vector measurement, by treating each of its entries independently as a scalar measurement.

The sensors aim to estimate from a stream of measurable signals. However, in a practical network, a single sensor may not be sufficiently excited through its inputs. Therefore, the signals available at its local side are not enough to consistently estimate the parameter [1]. In this respect, each sensor intends to obtain an exact estimate on through the information exchange with others. We use the digraph to model the interaction among them.
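A small worked example may help to see why a single sensor can fail to identify the parameter on its own. The notation below (a two-dimensional parameter $\theta$, a regressor $\phi_i(k)$, a scalar output $y_i(k)$, and a window length $T$) is assumed for illustration only.

```latex
% Assumed setting: \theta = [\theta_1, \theta_2]^\top and a regressor that never changes direction.
\phi_i(k) = \begin{bmatrix} 1 \\ 0 \end{bmatrix} \ \text{for all } k
\quad\Longrightarrow\quad
y_i(k) = \phi_i^\top(k)\,\theta = \theta_1 ,
\qquad
\sum_{k=1}^{T} \phi_i(k)\,\phi_i^\top(k) = \begin{bmatrix} T & 0 \\ 0 & 0 \end{bmatrix}.
```

The measurements never depend on $\theta_2$ and the information matrix is singular for every $T$, so this sensor can recover $\theta_1$ but must rely on its neighbors for $\theta_2$.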

III-A Attack model

This paper is concerned with parameter estimation in an adversarial environment, where some of the sensors might be faulty or misbehaving. Let us denote the set of indices of these sensors as $\mathcal{F}$. Any sensor $i \in \mathcal{F}$ could be one that fails to follow the pre-defined estimation protocol or whose transmitted data is manipulated by an adversary. On the other hand, the normal or benign sensors will always adopt the prescribed estimation algorithm; the set of such nodes is denoted by $\mathcal{R} = \mathcal{V} \setminus \mathcal{F}$. Given the limited energy of adversaries, it is reasonable to assume an upper bound on the number of faulty sensors. In this paper, we shall consider an $F$-local attack model as defined below (see Footnote 2):

Footnote 2: Note that the $F$-local attack model assumed here is more general than the $F$-total attack model, where the total number of faulty sensors is assumed to be upper bounded by $F$.

Assumption 1 (Attack model)

The network is under an $F$-local attack model. That is, any normal sensor $i \in \mathcal{R}$ has no more than $F$ in-neighbors that are misbehaving, i.e., $|\mathcal{N}_i^{\mathrm{in}} \cap \mathcal{F}| \leq F$, where $\mathcal{N}_i^{\mathrm{in}}$ is the in-neighbor set of sensor $i$.
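The local attack model of Assumption 1 can be checked mechanically once the graph and the faulty set are known. The sketch below is illustrative only: the function name, the edge convention (a pair `(j, i)` means that `j` sends to `i`), and the 6-node ring are assumptions, not part of the paper.

```python
def satisfies_f_local(edges, faulty, f_bound):
    """True if every normal node has at most f_bound faulty in-neighbors."""
    nodes = {n for edge in edges for n in edge}
    faulty = set(faulty)
    for i in nodes - faulty:
        faulty_in = {j for (j, t) in edges if t == i and j in faulty}
        if len(faulty_in) > f_bound:
            return False
    return True

# Hypothetical ring of 6 nodes where each node listens to its two predecessors.
ring = {((i - d) % 6, i) for i in range(6) for d in (1, 2)}
spread_out = satisfies_f_local(ring, faulty={0, 3}, f_bound=1)
adjacent = satisfies_f_local(ring, faulty={0, 1}, f_bound=1)
```

With faulty nodes 0 and 3, every normal node sees at most one of them, so the bound of one holds locally although two nodes are faulty in total. With faulty nodes 0 and 1, node 2 listens to both and the bound is violated.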

III-B Resilient parameter estimation

Our goal is to ensure that all of the normal sensors consistently estimate the parameter $\theta$. Specifically, let $\hat{\theta}_i(k)$ be the local estimate produced by sensor $i$ at time $k$. This paper aims to develop a distributed estimation algorithm which works resiliently against the set of misbehaving sensors by solving the following problem:

Definition 3 (Resilient parameter estimation)

Resilient parameter estimation is said to be achieved if the local estimate of each normal sensor $i$ converges to the true parameter $\theta$, regardless of the initial states and network misbehaviors, namely,

$$ \lim_{k \to \infty} \| \hat{\theta}_i(k) - \theta \| = 0 \quad \text{for every normal sensor } i. $$

IV Main Results

This section will provide a resilient algorithm to solve the problem of distributed parameter estimation. Specifically, by decoupling the -dimensional estimation problem into scalar ones, every normal sensor performs independent MSR-based estimation algorithms in a parallel manner, each of which infers an entry of the unknown vector parameter. We shall show that this protocol is lightweight and efficient even in the presence of faulty sensors.

IV-A Dynamic regressor extension and mixing (DREM)

Our estimator is developed based on the dynamic regressor extension and mixing (DREM) algorithm, which was first introduced in [17]. The DREM algorithm is expressed by the three new variables for each sensor , defined as follows:

| (3) |

where we respectively denote by and the adjugate matrix and determinant of matrix . Notice that is a -dimensional column vector. For simplicity, we denote by the -th entry of it. By using DREM, the following lemma is obtained:

Lemma 1 ([17])

Therefore, leveraging DREM, we generate scalar LREs as presented in (4): one for each of the unknown parameters.
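The mixing idea behind DREM can be illustrated numerically. The sketch below is a minimal example under stated assumptions: the regressor is extended by stacking the current regression with one delayed copy (one common choice of extension, not necessarily the one used in (3)), and the names `phi_ext`, `y_ext`, `mixed_y`, and `delta` are hypothetical. It relies only on the identity that the adjugate of a square matrix times the matrix equals the determinant times the identity.

```python
import numpy as np

def adjugate(mat):
    """Adjugate (transpose of the cofactor matrix), valid even if mat is singular."""
    n = mat.shape[0]
    if n == 1:
        return np.ones((1, 1))
    cof = np.empty((n, n))
    for r in range(n):
        for c in range(n):
            minor = np.delete(np.delete(mat, r, axis=0), c, axis=1)
            cof[r, c] = (-1) ** (r + c) * np.linalg.det(minor)
    return cof.T

def drem_step(y_ext, phi_ext):
    """Mix the extended regression y_ext = phi_ext @ theta into scalar regressions.

    Returns (mixed_y, delta) with mixed_y[j] = delta * theta[j] for every entry j.
    """
    delta = np.linalg.det(phi_ext)
    mixed_y = adjugate(phi_ext) @ y_ext
    return mixed_y, delta

# Hypothetical data: a 2-dimensional parameter and a regressor extended by one delay.
theta = np.array([2.0, -1.0])
phi_now = np.array([1.0, 0.5])             # regressor at the current step
phi_prev = np.array([0.3, 1.0])            # regressor one step earlier
phi_ext = np.vstack([phi_now, phi_prev])   # extended regressor, one row per regression
y_ext = phi_ext @ theta                    # stacked noise-free measurements
mixed_y, delta = drem_step(y_ext, phi_ext)
```

Each entry of `mixed_y` equals `delta` times the matching entry of `theta`, so every entry of the parameter can be estimated from its own scalar regression with the shared scalar regressor `delta`.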

IV-B Description of the resilient algorithm

This subsection is devoted to developing the resilient estimator based on DREM. In order to reject the possible attacks, we propose a resilient combine-then-adapt (RCTA) diffusion algorithm, which overrules the malicious effects from faulty sensors by performing a resilient convex combination and then an adaptation. Specifically, each normal sensor starts with any initial estimate . At any time , it makes an estimation as in Algorithm 1.

Algorithm 1: Resilient combine-then-adapt (RCTA) diffusion algorithm

1: Collect the local estimates from all in-neighboring agents , and place these values in the multiset .

2: for do

   a) Set . Then sort the points in according to their -th entries in an ascending order.

   b) Based on the sorted set, remove the first points from which have the smallest -th entries. If there are fewer than points in , then remove all of them.

   c) Similarly, remove the last points from which have the largest -th entries. If there are fewer than points in , then remove all of them.

   d) Sensor resiliently combines the neighboring estimates as

      (Resilient combination) (5)

      where each weight is lower bounded by and .

   e) Sensor updates the -th entry of local estimate as

      (Adaptation) (6)

      where .

   end for

3: Transmit to out-neighbors.

In the proposed algorithm, for inferring each entry of the unknown parameter, i.e., , each normal sensor sorts the received estimates based on their -th entries, where it discards up to smallest ones and up to largest ones. As discussed previously, for normal sensor , it has at least in-neighbors. Since it removes at most values at each , must be non-empty at any time . On the other hand, if , it is possible that . In either case, sensor calculates a resilient combination (5) via linearly combining its own estimate and the ones in . After that, an adaptation (6) is performed using a least-mean square scheme to update sensor ’s local estimate on . Finally, sensor aggregates its estimates on all the entries and sends them to its out-neighbors.
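The two steps described above can be sketched as follows. This is an illustrative reading rather than the exact update rules: the combination uses equal weights over the sensor's own value and the retained values, the trimming simply drops up to `f_bound` values from each end of the sorted list, and the adaptation is a normalized least-mean-squares step on scalar regressions of the form `mixed_y[j] = delta * theta[j]`. All function and variable names, the step size, and the sample data are assumptions.

```python
import numpy as np

def resilient_combine(own, received, f_bound):
    """Coordinate-wise trimmed combination of own estimate and neighbors' estimates.

    own: shape (m,); received: shape (n_neighbors, m); f_bound: trimming level.
    Uses equal weights over the retained values and the sensor's own value.
    """
    m = own.shape[0]
    combined = np.empty(m)
    for j in range(m):
        column = np.sort(received[:, j])          # ascending order of j-th entries
        low = min(f_bound, column.size)           # drop up to f_bound smallest
        high = min(f_bound, column.size - low)    # drop up to f_bound largest of the rest
        retained = column[low:column.size - high]
        pool = np.concatenate(([own[j]], retained))
        combined[j] = pool.mean()
    return combined

def adapt(combined, mixed_y, delta, step=0.5):
    """Normalized LMS step on the scalar regressions mixed_y[j] = delta * theta[j]."""
    gain = step * delta / (1.0 + step * delta ** 2)
    return combined + gain * (mixed_y - delta * combined)

# Hypothetical data: four in-neighbors, one of which sends extreme values.
own = np.array([1.0, 1.0])
received = np.array([[0.9, 1.2], [1.1, 0.8], [50.0, -50.0], [1.0, 1.0]])
combined = resilient_combine(own, received, f_bound=1)

theta = np.array([1.0, 1.0])   # true parameter, used only to generate mixed_y
delta = 2.0                    # scalar regressor shared by all entries
updated = adapt(combined, mixed_y=delta * theta, delta=delta, step=0.5)
```

In this example the extreme values 50 and -50 are discarded in their respective coordinates, the combined estimate stays inside the range of the remaining values, and the adaptation step shrinks the remaining error by the factor `1 / (1 + step * delta ** 2)`.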

A main feature of Algorithm 1 is its simplicity in implementation and computation. Particularly, by leveraging DREM, we decouple the -dimensional parameter estimation problem into scalar ones. Then the resilient combination (5) is performed within each scalar system, where only coordinate-wise message sorting and trimming are needed. The computational complexity of each normal sensor can be shown to be , where . As compared with the existing solutions in multi-dimensional spaces, such as [19, 22, 18, 20], Algorithm 1 yields lower computational cost.

IV-C Assumptions

Before proceeding, we first introduce the assumptions adopted in this paper.

It is well known that consistent estimation is possible only if the input signal satisfies certain excitation conditions. In particular, a persistent excitation (PE) condition, which guarantees the signals to be sufficiently rich, is usually required to achieve exponential convergence [1]. On the other hand, in order to counteract the faulty behaviors in an adversarial environment, the network should contain a certain degree of redundancy in its communication structure. In this respect, let us introduce the following assumptions:

Assumption 2

There exists a subset of sensors such that the following statements hold:

1. [Persistent excitation] There exist and a finite time such that the following PE condition is satisfied by each :

   (7)

2. [Network topology] The network is strongly -robust w.r.t. .

According to Assumption 2, the set consists of the sensors that are persistently excited. Moreover, there could exist two cases: all sensors in the network are persistently excited and thus , or only a subset of the sensors is persistently excited, but they are “sufficiently connected” to all the others. Particularly, as for the second case, since the network is strongly -robust w.r.t. , one can verify from Definition 2 that . Moreover, each sensor has at least in-neighbors (see Footnote 3). As will be proved later, in either case, our algorithm guarantees that each normal sensor, whether it satisfies the PE condition or not, consistently estimates the true parameter.

Footnote 3: To see this, suppose that sensor outside the set has at most in-neighbors. Let us choose . Then based on Definition 2, is at most -reachable. Therefore, the network cannot be strongly -robust w.r.t. , which contradicts the assumption.
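A persistent excitation condition of the windowed-energy type can be checked on recorded data. The sketch below assumes that the condition is stated for a scalar signal and requires the sum of squares over every window of a fixed length to stay above a positive level; the exact form of (7) is not reproduced here, and the function name, the parameters `window` and `alpha`, and the two test signals are hypothetical. A finite record can only confirm the condition on the windows that were observed.

```python
def is_persistently_exciting(signal, window, alpha):
    """True if every length-`window` block of `signal` has energy at least `alpha`."""
    if len(signal) < window:
        return False
    return all(
        sum(v * v for v in signal[start:start + window]) >= alpha
        for start in range(len(signal) - window + 1)
    )

# A pulse every third step keeps exciting the estimator; a decaying signal does not.
pulses = [1.0 if k % 3 == 0 else 0.0 for k in range(30)]
decaying = [0.5 ** k for k in range(30)]
pulses_ok = is_persistently_exciting(pulses, window=3, alpha=1.0)
decaying_ok = is_persistently_exciting(decaying, window=3, alpha=1e-6)
```

The pulse train passes because every window of three samples contains exactly one unit pulse, while the decaying signal fails because its late windows carry almost no energy.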

Notice that sensors in may also be faulty or misbehaving. For simplicity, we denote by the subset of which contains only the normal sensors, that is, . The following assumption is finally made:

Assumption 3

The set is nonempty.

Assumption 3 implies that at least one normal sensor exists, which can find the true parameter. Notice that this is necessary for to be consistently estimated by all normal sensors.

IV-D Convergence analysis

To theoretically analyze the performance of Algorithm 1, let us define the following set for each normal sensor :

| (8) |

which includes all the normal in-neighbors of sensor and itself. This set is used in our analysis only and need not be known by sensor . In the next lemma, we shall prove that obtained by performing the resilient combination (5) is indeed a convex combination of the local estimates from the normal sensors in :

Lemma 2

Suppose that under Assumptions 1–3, each normal sensor performs parameter estimation by following Algorithm 1. Then, for any normal sensor and , there exists a set of weights such that can be represented as

| (9) |

where is calculated by (5). Moreover, the following statements hold:

1. is a convex combination of its own estimate and the ones received from normal in-neighbors. That is, each weight in (9) is non-negative and ;

2. ;

3. For any normal sensor whose estimate at -th entry is retained by sensor , i.e., , it follows that and .

As implied by Lemma 2, is updated in a safe manner as it always remains in a region that is determined by the local estimates of only normal sensors. Hence, by resiliently combining in-neighbors’ estimates, (5) prevents the faulty sensors from taking arbitrary control over the dynamics of any normal one.
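The intuition behind this safety property can be written as a short sandwich argument. The sketch below is not the proof of Lemma 2; it assumes that trimming removes the same number $F$ of values from each end of the sorted list whenever any value is retained, that at most $F$ received values come from faulty sensors, and it uses the hypothetical symbols $v$, $\underline{v}$, $\bar{v}$, and $\lambda$.

```latex
% Suppose a value v sent by a faulty sensor survives the trimming of one entry.
% The F removed largest values are all at least v, and at most F - 1 of them can
% come from faulty sensors, so one of them, \bar{v}, was sent by a normal sensor.
% The same reasoning on the F removed smallest values yields a normal value \underline{v}.
\underline{v} \;\le\; v \;\le\; \bar{v}
\quad\Longrightarrow\quad
v = \lambda\,\underline{v} + (1-\lambda)\,\bar{v}
\quad\text{for some } \lambda \in [0, 1].
```

Every retained faulty value can therefore be replaced by a convex combination of two normal values, which is why the combined estimate can be expressed through normal sensors only.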

Therefore, one concludes that the resilient combination step ensures that each normal sensor is updated in a safe manner. We shall next study the performance of Algorithm 1 on achieving the parameter estimation. This is particularly guaranteed by the adaptation step in (6).

To see this, for each sensor , let us define the estimation error of it at -th entry as

| (10) |

Combining (4) and (6) with Lemmas 1 and 2, the dynamics of is obtained as

| (11) |

where

Then let us introduce the following results, the proofs of which are omitted due to the space limitation:

Lemma 3

Therefore, the maximum estimation error would never increase throughout the execution. Further, we show that this error will definitely decrease after a finite time:

Lemma 4

With the above preparations, we are now ready to state the main result of the paper on the convergence of the estimation error as follows:

Theorem 1

In view of Theorem 1, the convergence of Algorithm 1 holds regardless of the presence and behaviors of faulty sensors. Therefore, the algorithm can be applied to withstand even the worst-case adversaries.

Remark 1

We further point out that the topology condition that the network is strongly -robust w.r.t. a set is similar to those in the works [23, 9, 24]. In these works, agents in are assumed to have direct knowledge of the state to be estimated. Thus, these agents act as leaders while the others are followers that resiliently track the states of the leaders. Note that in the framework of leader-following consensus, the leaders only broadcast their estimates to the followers and do not incorporate others’ states into their own updates. However, different from such a framework, in the parameter estimation here, the sensors in are the ones that satisfy the PE condition. Moreover, according to (5), their dynamics are inevitably affected by other sensors, including the ones outside . Therefore, the existing methods for convergence analysis cannot be directly applied. In order to solve this problem, the adaptation step (6) is necessary to guarantee the convergence of normal sensors.

V Numerical Example

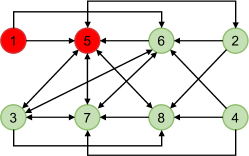

This example considers the network of sensors, which aim to cooperatively estimate a -dimensional parameter over the digraph given by Fig. 1. It can be checked that the graph is strongly -robust w.r.t. the set . Therefore, according to Theorem 1, it is able to tolerate the -local attack.

To verify this, let sensors and be misbehaving. They intend to prevent the estimation task from being fulfilled by maliciously broadcasting their estimates as

| (15) |

On the other hand, the regressor for each normal sensor is given by

| (16) |

where with and . Notice that the PE condition is satisfied by . We set the initial estimates of all sensors as and other parameters as and

The performance of Algorithm 1 is demonstrated in Fig. 2, which shows the Euclidean norm of each sensor’s estimation error. From the figure, we can see that, despite the presence of misbehaving nodes, the normal sensors can consistently estimate the true parameter, as expected from Theorem 1.
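For readers who want to experiment, a toy scalar simulation in the same spirit is sketched below. It does not reproduce the network of Fig. 1, the attack signals in (15), or the regressors in (16). It assumes a complete graph with four normal sensors and one faulty sensor that always broadcasts the value 100, a trimming level of one, equal combination weights, a normalized least-mean-squares adaptation, and a scalar regressor that is constant at one for sensors 0 to 2 and zero for sensor 3. All names and numbers are hypothetical.

```python
def trimmed_average(own, received, f_bound):
    """Average own value with received values after dropping f_bound from each end."""
    ordered = sorted(received)
    low = min(f_bound, len(ordered))
    high = min(f_bound, len(ordered) - low)
    retained = ordered[low:len(ordered) - high]
    pool = [own] + retained
    return sum(pool) / len(pool)

def simulate(theta=3.0, steps=200, step_size=1.0):
    """Run the toy network and return the final estimates of the normal sensors."""
    normal = [0, 1, 2, 3]
    delta = {0: 1.0, 1: 1.0, 2: 1.0, 3: 0.0}   # sensor 3 is never excited
    est = {0: -5.0, 1: 8.0, 2: 0.0, 3: 10.0}   # arbitrary initial estimates
    for _ in range(steps):
        new_est = {}
        for i in normal:
            # Estimates of the other normal sensors plus the faulty broadcast.
            received = [est[j] for j in normal if j != i] + [100.0]
            combined = trimmed_average(est[i], received, f_bound=1)
            y = delta[i] * theta                 # noise-free scalar regression
            gain = step_size * delta[i] / (1.0 + step_size * delta[i] ** 2)
            new_est[i] = combined + gain * (y - delta[i] * combined)
        est = new_est
    return est

final = simulate()
worst_error = max(abs(v - 3.0) for v in final.values())
```

In this toy setting the faulty value is always the largest one received and is trimmed, the excited sensors halve their error at every step, and the unexcited sensor is pulled toward the true value through the estimates it retains from its excited neighbors.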

VI Conclusion

This paper has considered the problem of distributed estimation in an adversarial environment, where some of the sensors might be Byzantine. To mitigate their misbehaviors, a resilient estimation algorithm has been proposed, which, with low computational complexity, guarantees that the normal sensors exponentially estimate the true parameter when certain requirements on the regressor vector and the network topology are met. As future work, we plan to extend the results in this paper to more challenging scenarios where the parameter to be estimated might be changing dynamically and the sensor measurements might be subject to stochastic noise.

References

- [1] G. C. Goodwin and K. S. Sin, Adaptive Filtering Prediction and Control. Courier, 2014.

- [2] S. Xie, Y. Zhang, and L. Guo, “Convergence of a distributed least squares,” IEEE Transactions on Automatic Control, vol. 66, no. 10, pp. 4952–4959, 2020.

- [3] I. D. Schizas, G. Mateos, and G. B. Giannakis, “Distributed LMS for consensus-based in-network adaptive processing,” IEEE Transactions on Signal Processing, vol. 57, no. 6, pp. 2365–2382, 2009.

- [4] W. Chen, C. Wen, S. Hua, and C. Sun, “Distributed cooperative adaptive identification and control for a group of continuous-time systems with a cooperative PE condition via consensus,” IEEE Transactions on Automatic Control, vol. 59, no. 1, pp. 91–106, 2013.

- [5] J. Yan, X. Yang, Y. Mo, and K. You, “A distributed implementation of steady-state Kalman filter,” IEEE Transactions on Automatic Control, 2022, DOI: 10.1109/TAC.2022.3175925.

- [6] S. Xie and L. Guo, “Analysis of distributed adaptive filters based on diffusion strategies over sensor networks,” IEEE Transactions on Automatic Control, vol. 63, no. 11, pp. 3643–3658, 2018.

- [7] A. S. Matveev, M. Almodarresi, R. Ortega, A. Pyrkin, and S. Xie, “Diffusion-based distributed parameter estimation through directed graphs with switching topology: Application of dynamic regressor extension and mixing,” IEEE Transactions on Automatic Control, vol. 67, no. 8, pp. 4256–4263, 2022.

- [8] Y. Chen, S. Kar, and J. M. Moura, “Resilient distributed parameter estimation with heterogeneous data,” IEEE Transactions on Signal Processing, vol. 67, no. 19, pp. 4918–4933, 2019.

- [9] A. Mitra and S. Sundaram, “Byzantine-resilient distributed observers for LTI systems,” Automatica, vol. 108, p. 108487, 2019.

- [10] D. Dolev, N. A. Lynch, S. S. Pinter, E. W. Stark, and W. E. Weihl, “Reaching approximate agreement in the presence of faults,” Journal of the ACM (JACM), vol. 33, no. 3, pp. 499–516, 1986.

- [11] H. J. LeBlanc, H. Zhang, X. Koutsoukos, and S. Sundaram, “Resilient asymptotic consensus in robust networks,” IEEE Journal on Selected Areas in Communications, vol. 31, no. 4, pp. 766–781, 2013.

- [12] S. M. Dibaji, H. Ishii, and R. Tempo, “Resilient randomized quantized consensus,” IEEE Transactions on Automatic Control, vol. 63, no. 8, pp. 2508–2522, 2017.

- [13] H. Ishii, Y. Wang, and S. Feng, “An overview on multi-agent consensus under adversarial attacks,” Annual Reviews in Control, 2022.

- [14] H. J. LeBlanc and F. Hassan, “Resilient distributed parameter estimation in heterogeneous time-varying networks,” in Proceedings of the 3rd International Conference on High Confidence Networked Systems, 2014, pp. 19–28.

- [15] M. Meng, X. Li, and G. Xiao, “Distributed estimation under sensor attacks: Linear and nonlinear measurement models,” IEEE Transactions on Signal and Information Processing over Networks, vol. 7, pp. 156–165, 2021.

- [16] Y. Chen, S. Kar, and J. M. Moura, “Resilient distributed estimation through adversary detection,” IEEE Transactions on Signal Processing, vol. 66, no. 9, pp. 2455–2469, 2018.

- [17] S. Aranovskiy, A. Bobtsov, R. Ortega, and A. Pyrkin, “Performance enhancement of parameter estimators via dynamic regressor extension and mixing,” IEEE Transactions on Automatic Control, vol. 62, no. 7, pp. 3546–3550, 2017.

- [18] H. Mendes and M. Herlihy, “Multidimensional approximate agreement in Byzantine asynchronous systems,” in Proceedings of the 45th Annual ACM Symposium on Theory of Computing, 2013, pp. 391–400.

- [19] X. Wang, S. Mou, and S. Sundaram, “A resilient convex combination for consensus-based distributed algorithms,” arXiv preprint arXiv:1806.10271, 2018.

- [20] J. Yan, X. Li, Y. Mo, and C. Wen, “Resilient multi-dimensional consensus in adversarial environment,” Automatica, vol. 145, p. 110530, 2022.

- [21] B. Yi and R. Ortega, “Conditions for convergence of dynamic regressor extension and mixing parameter estimators using LTI filters,” IEEE Transactions on Automatic Control, 2022, DOI: 10.1109/TAC.2022.3149964.

- [22] N. H. Vaidya, L. Tseng, and G. Liang, “Iterative approximate Byzantine consensus in arbitrary directed graphs,” in Proceedings of the ACM Symposium on Principles of Distributed Computing, 2012, pp. 365–374.

- [23] J. Usevitch and D. Panagou, “Resilient leader-follower consensus to arbitrary reference values in time-varying graphs,” IEEE Transactions on Automatic Control, vol. 65, no. 4, pp. 1755–1762, 2019.

- [24] J. Yan, C. Deng, and C. Wen, “Resilient output regulation in heterogeneous networked systems under Byzantine agents,” Automatica, vol. 133, p. 109872, 2021.