ResLearn: Transformer-based Residual Learning for Metaverse Network Traffic Prediction

Abstract

Our work proposes a comprehensive solution for predicting Metaverse network traffic, addressing the growing demand for intelligent resource management in eXtended Reality (XR) services. We first introduce a state-of-the-art testbed capturing a real-world dataset of virtual reality (VR), augmented reality (AR), and mixed reality (MR) traffic, made openly available for further research. To enhance prediction accuracy, we then propose a novel view-frame (VF) algorithm that accurately identifies video frames from traffic while ensuring privacy compliance, and we develop a Transformer-based progressive error-learning algorithm, referred to as ResLearn for Metaverse traffic prediction. ResLearn significantly improves time-series predictions by using fully connected neural networks to reduce errors, particularly during peak traffic, outperforming prior work by 99%. Our contributions offer Internet service providers (ISPs) robust tools for real-time network management to satisfy Quality of Service (QoS) and enhance user experience in the Metaverse.

Index Terms:

Metaverse Network Traffic Prediction, Residual Learning, Extended Reality (XR), virtual reality (VR), augmented reality (AR), and mixed reality (MR).I Introduction

The Metaverse is a comprehensive ecosystem of interconnected virtual worlds that provide immersive experiences to users. The ecosystem enhances existing and generates new value from economic, environmental, social, and cultural perspectives [1]. Services in the Metaverse ecosystem are designed to be accessed using immersive extended reality (XR) environments. XR is an umbrella term that describes the technologies affecting the user’s immersive experience, such as virtual reality (VR), augmented reality (AR), and mixed reality (MR) [2]. VR allows users to interact with virtually generated environments designed to simulate real-world experiences. AR overlays interactive, virtually generated information onto real-world objects or within real-world spaces. XR technologies lie on a spectrum between AR and VR. In cases where the distinction between the realities is ambiguous, the experience is considered MR. As the Metaverse’s growth continues and XR evolves, the popularity of its services increases. Driven by the rapid growth of the Metaverse, Internet traffic is expected to surpass current forecasts significantly [3]. The entertainment and social media industries have seen the most substantial growth of Metaverse services, as evidenced by popular virtual performance events, one of which attracted an audience of 36 million users [4, 5]. Healthcare, training, and marketing for Metaverse services have also grown recently [6, 7]. Cloud rendering for Metaverse is crucial to offloading computing resources to make the services affordable, a popular technique for VR games [8]. Consequently, the Ericsson 2022 report emphasizes the growing need for more intelligent interactions between XR services and the network to maintain high Quality of Service (QoS) [3]. Therefore, network management is crucial for Internet service providers (ISPs) to accommodate adequate resources and avoid cybersickness among users [9, 10, 11].

Metaverse traffic consists of video, audio, and control flows [8], among all downlink video frames are resource-demanding in the case of VR, and both uplink/downlink video frames for AR and MR traffic. Therefore, predicting the frame size is vital, and for latency-related issues, it is essential to predict frame inter-arrival time and frame duration [12]. Predicting frame size, inter-arrival time, and frame duration will help ISPs prepare and manage the Metaverse network for holistic and intelligent traffic management. However, there needs to be more real-world Metaverse data and research in prediction to make progress in the field. Essentially, frame-related information is time series data. The recent advent of different state-of-the-art artificial intelligence (AI) based time series models has shown tremendous progress in time series predictions [13]. The only VR frame size prediction work is available at [14]; therefore, we consider it state-of-the-art (SoA) work for benchmark comparison. The work studies different AI models to predict VR frame size. It establishes stacked LSTM to produce better results based on transfer learning methodologies. Therefore, the solution aims for online prediction, imperative for real-time network management. However, the work is evaluated on a small dataset captured in a controlled environment. Also, the performance can be improved with further reduction in the error. The frame identification methodology used in the work might need to be revised because the frame loss for 120 Mbps is more than 54 Mbps since the dataset is captured in a controlled environment. Our literature review identifies the following gaps: i) the need for holistic, comprehensive, and real-world Metaverse datasets and ii) accurate frame-related data predicting algorithms.

We chart out a state-of-the-art Metaverse testbed to capture a holistic, comprehensive, and real-world Metaverse dataset comprising VR games, VR videos, VR chat/VoIP, AR, and MR traffic. We also make it open for the research fraternity available at [15]. Our work treats the Metaverse traffic in segments to enhance the predictability of the data at higher speed. We propose an accurate view-frame (VF) algorithm that helps to identify the types of video frames using application-level information to comply with privacy-related policies. Our proposed VF algorithm can predict the number of frames in a segment, total frame size, and frame inter-arrival time. Finally, we propose a state-of-the-art residual learning (ResLearn) algorithm that uses transformer [16] to predict time-series data and fully connected neural networks (FCNN) to learn errors with a bias to identify the peaks of the frame-related data for accurate network management. Our solution outperforms the SoA work [14] by 99% in reducing the prediction errors. The implementation of the solution is made open for the research community at [17].

II System Model

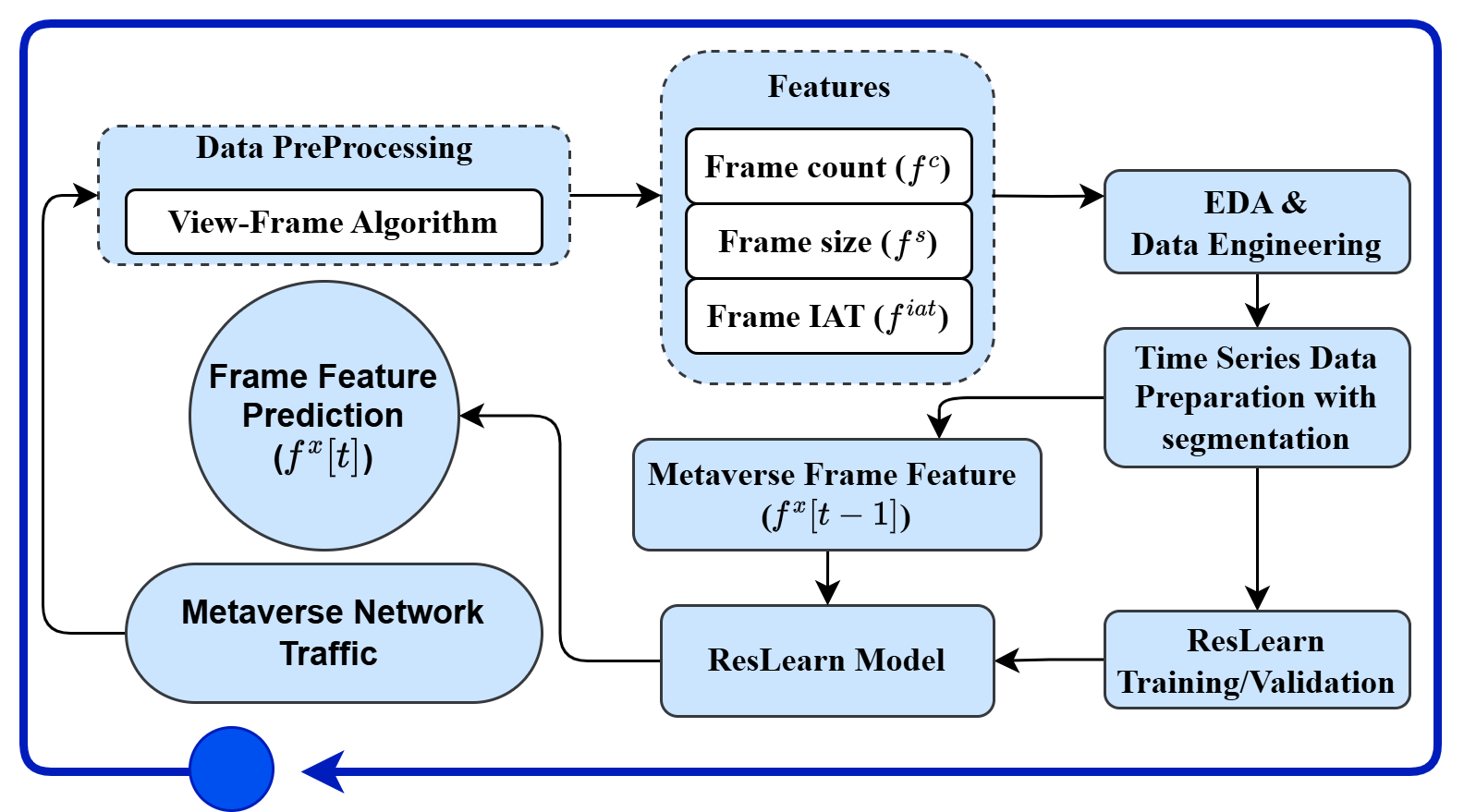

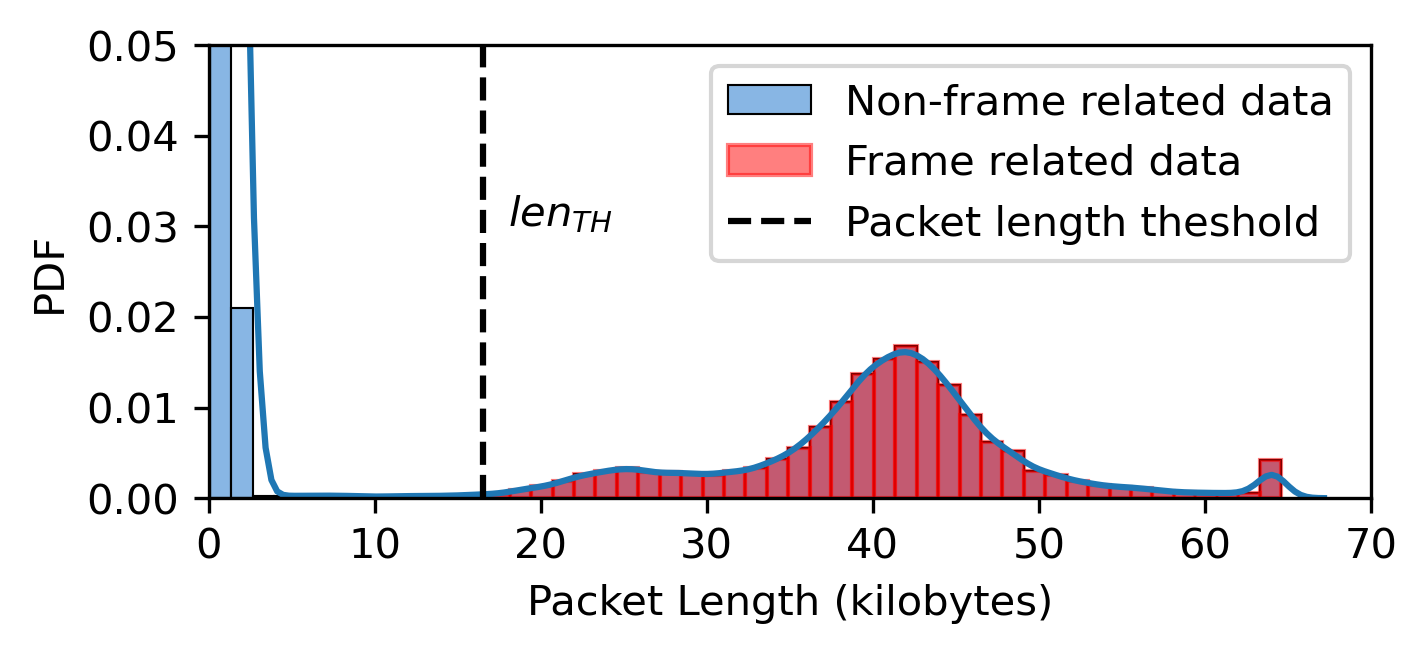

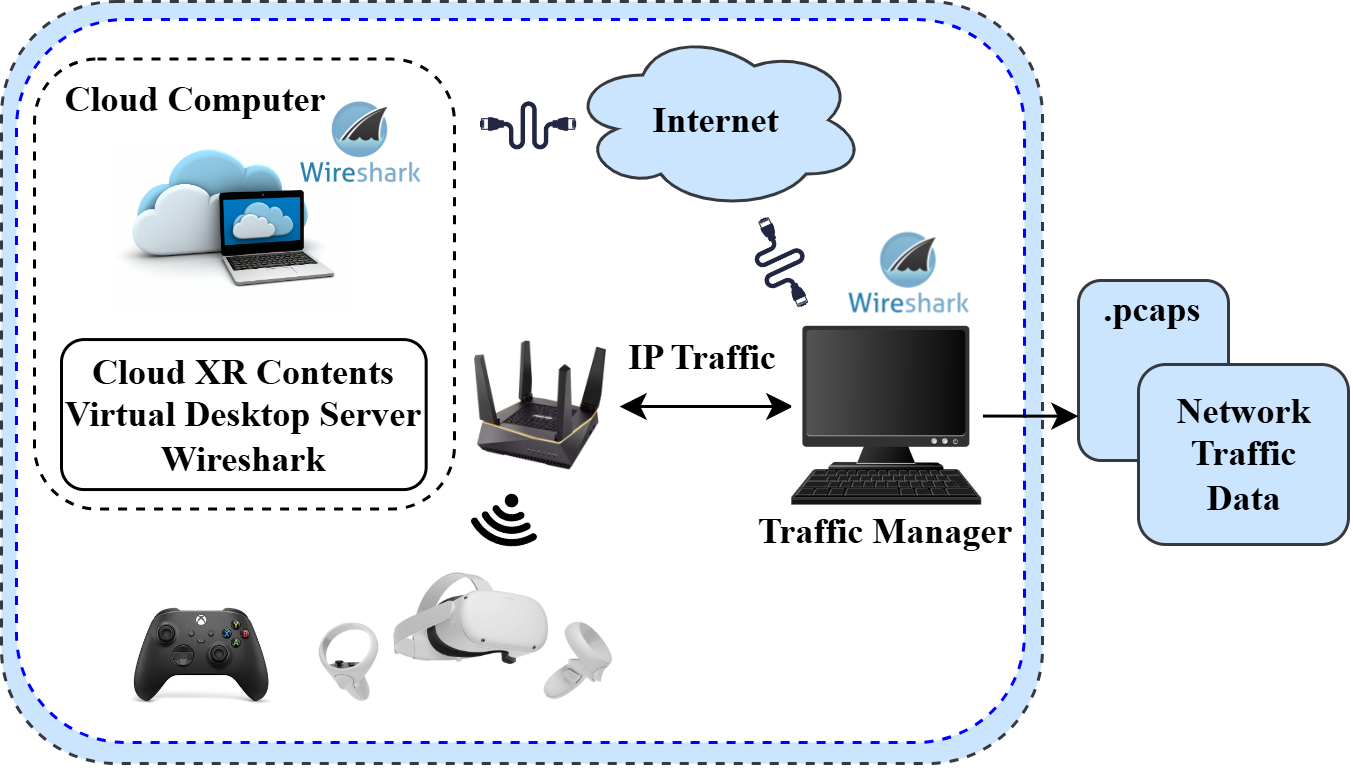

The system model, in Figure 1 illustrates the proposed framework for predicting Metaverse network traffic, focusing on frame-level features. The process begins with data preprocessing, where the VF algorithm is applied to extract relevant frame-related data. The key features extracted from the incoming traffic include frame count (), frame size (), and frame inter-arrival time (IAT) (). Our VF algorithm works on application-level features: time, packet length, packet direction, and packet inter-arrival time. Frame-related packets have a more considerable length with relatively more minor inter-arrival time. We use this property to determine the thresholds for packet length and inter-arrival time to identify frame-related packets as shown in Figure 2. In Figure 2, is determined as 25% of the maximum length of the observed packet length. is the frame duration threshold determined between the first two peaks. The first peak represents the start of the video frame packet with less inter-arrival time, and the second peak represents the end of the video frame when audio and control-related flows are transmitted with slightly higher inter-arrival time. The and are calculated from the first segment at a session’s start for the VF algorithm. All packets within these thresholds are collected to identify the frames. The method is verified on different Metaverse rendering platforms: Meta air link [18], and Virtual Desktop Streamer (VDS) [19].

Based on the requirement, one of the features is selected for prediction. These features undergo Exploratory Data Analysis (EDA) and Data Engineering to ensure data quality and integrity, followed by Time Series Data Preparation with segmentation for model training. The core of the system is frame feature prediction (), where the previous frame feature values () are used as inputs to predict the current network traffic behaviour. With this information, we provide the mathematical problem statement to predict key features of the Metaverse network traffic features. Let be a frame vector, identified by VF algorithm for a given segment, having frame-related information given as where indicates the time index of the given segment. Let represents the Metaverse frame-related network traffic at the previous time step, where indicates one of the three frame-related features in . Therefore, frame count, size, and inter-arrival time are individually predicted using historical data. The prediction function for the current network traffic, , is

| (1) |

where is the prediction model.

III ResLearn: Transformer-based Residual Learning for Metaverse Network Traffic Prediction

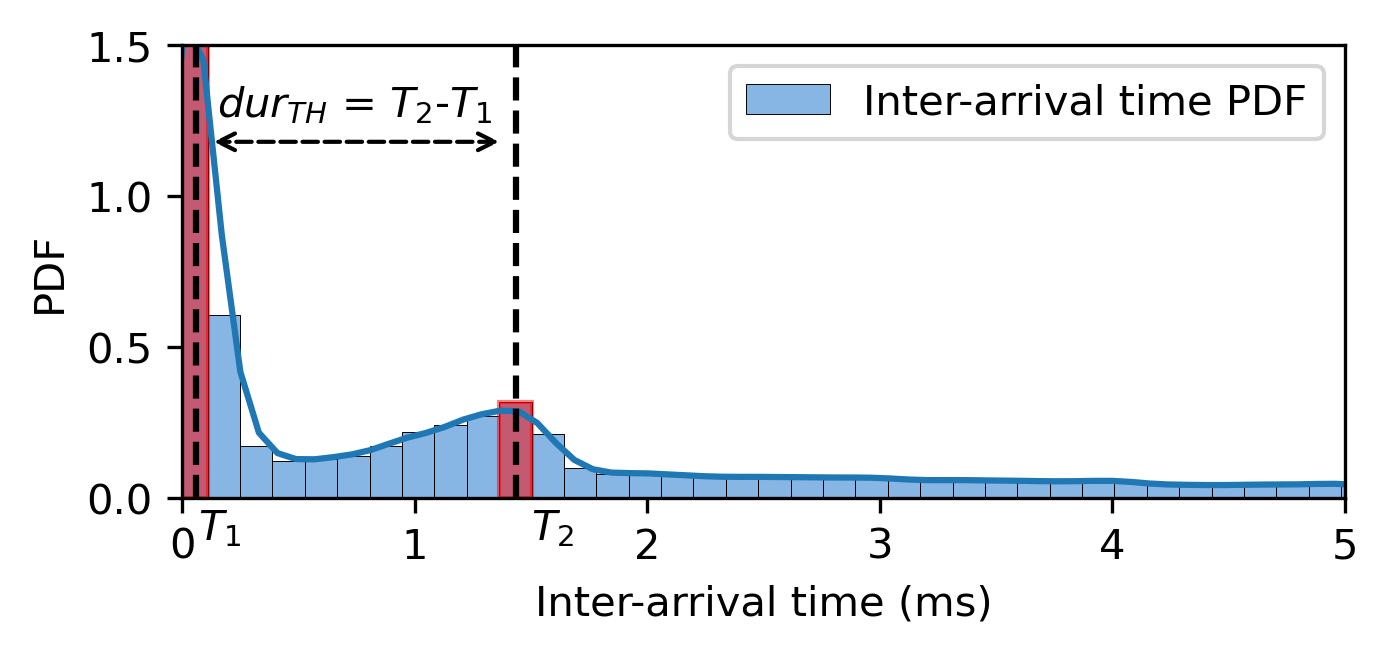

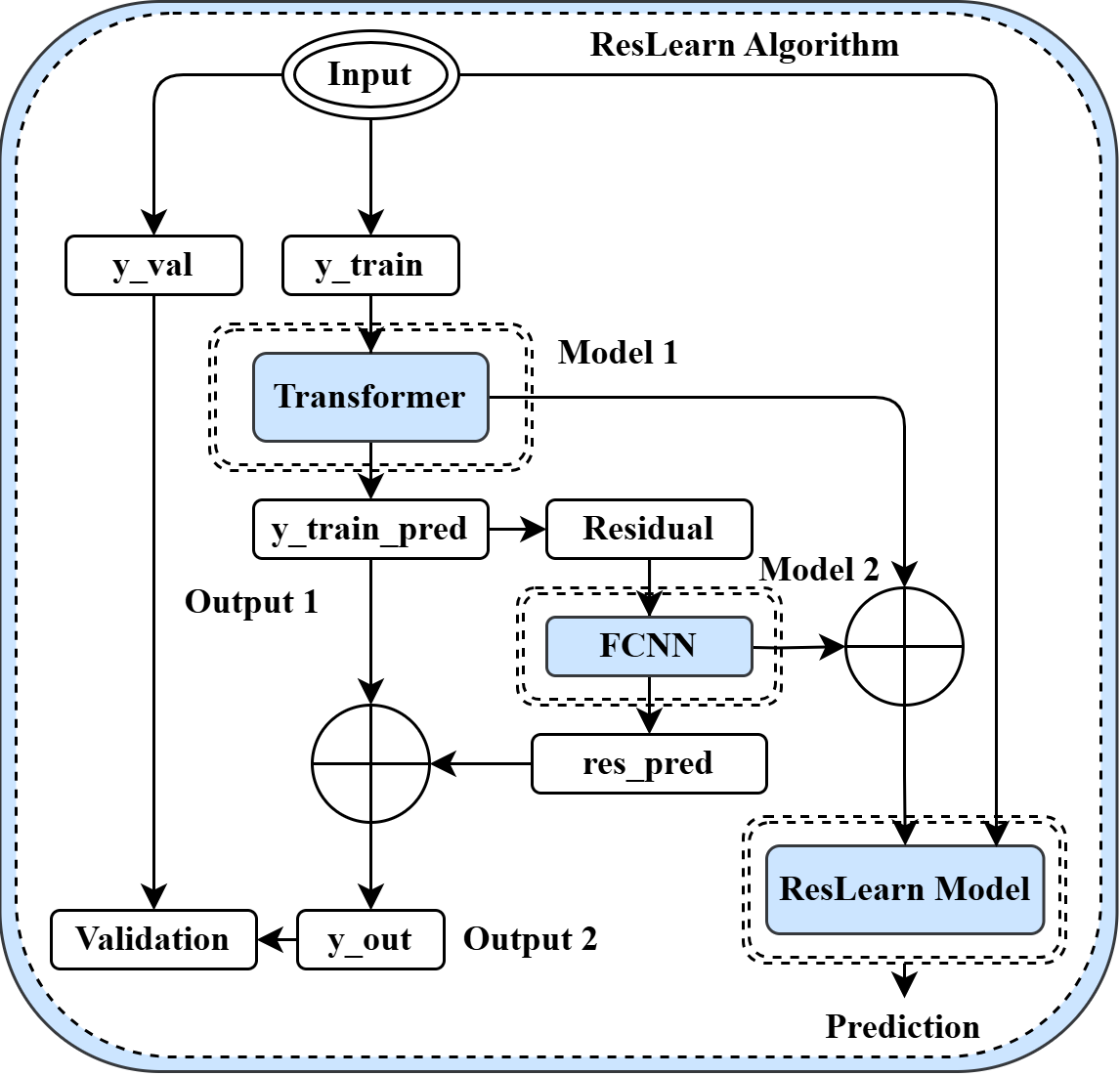

The residual Learning (ResLearn) algorithm is a two-step prediction approach involving a transformer deep neural network model [16] designed to enhance Metaverse network traffic forecasting by leveraging residual learning inspired by ResNet [20]. Transformer is known for learning short and long-term dependencies from time series data. However, the prediction error is inevitable due to the randomness introduced by network health and users in Metaverse infrastructure. However, we can learn the nature of error using a neural network. ResLearn is a novel approach that uses a Transformer in the first step, given as , the predictive Transformer model. The residual from the is fed to a fully connected neural network (FCNN) to learn the nature of the error, given as . The final prediction model from Eq. (1) is given as .

Figure 3 illustrates the workflow of the ResLearn algorithm. The input data is split into training () and validation () sets. The first model, including a transformer network, processes the training data and generates an initial prediction result (). This output, called Output 1, is then compared to the actual training data to calculate the residuals, which capture the difference between the predicted and true values. These residuals are passed to the second model, an FCNN designed to learn and predict the patterns in the residuals, producing a corrected prediction (). Both predictions from the transformer and FCNN are combined to form the final output () of the ResLearn model. This combined output is then validated with the unseen validation data, improving the final prediction’s overall accuracy. The ResLearn training algorithm is shown in Algorithm 1. is a time-series data segment, where is the segment size and is the number of segments. For each segment, the data is split into training and validation sets. The transformer model is trained on the training set, and its predictions, , are computed. The residuals, representing the error between the actual and predicted values, are calculated and adjusted by adding a bias, , to highlight essential peaks. The dense model is then trained on these residuals, and the final ResLearn model, , is created by combining the outputs of both models. The combined model is then evaluated on the validation set using error metrics. The process is repeated for each segment, refining the prediction accuracy iteratively until the validation error is stabilized for . The algorithm’s output is the final ResLearn model , which integrates the strengths of both models to deliver improved predictive performance.

The time complexity of the ResLearn algorithm, which involves training a transformer model and a FCNN, can be approximated as follows: the training complexity of the transformer model is typically , where is the number of training epochs and is the sequence length. The training complexity of the FCNN can be approximated as , where is the number of epochs, is the number of neurons in the hidden layer, and represents the number of training samples [21]. Considering segments of data, the overall time complexity of the algorithm is given by

IV Experimentation Setup and Results

IV-A Datasets and predictability

The experiments are designed to evaluate the performance of various VR, AR, and MR services across three datasets, as shown in Table I. The Dataset I [15] (in-house dataset) focuses on diverse services, including gaming, video streaming, and communication (chat/VoIP), and is tested with applications such as Dirt Rally 2.0, Bigscreen, VR Chat, Solar System, and Reality Mixer. Figure 4 shows the testbed used in the data capture. In the testbed, a virtual desktop streamer (VDS) rendering platform is used for the setup. A cloud computer with a VDS server is a rendering device to which the VDS client on the Oculus Quest 2 is connected. Traffic manager is used to simulate low latency networks to replicate real-world scenarios. Traffic is captured on the cloud computer using Wireshark. More details and packet captures (pcap) are available at [15]. Dataset II [8] examines slow and fast VR traffic using Steam VR Home and Beat Saber to study the impact of different traffic patterns. Dataset III [22] involves two experiments: the first explores fast and slow VR traffic across Beat Saber, Medal of Honor, Forklift Simulator, and Cooking Simulator, while the second focuses on a subset of applications (Forklift Simulator, Cooking Simulator, Beat Saber, and Medal of Honor) to assess network performance under varying traffic conditions further. We use 50% of data for training, in which 20% of the data is used for validation. Another 50% of the data is used for testing. The solution is developed in Python using data science libraries such as Scikit-learn (Sklearn), NumPy, and Pandas. The implementation is available at [17]. The experiments are conducted on a Windows system with an Nvidia RTX2800S GPU. The Windows environment is set up with Anaconda to support machine learning libraries, including TensorFlow.

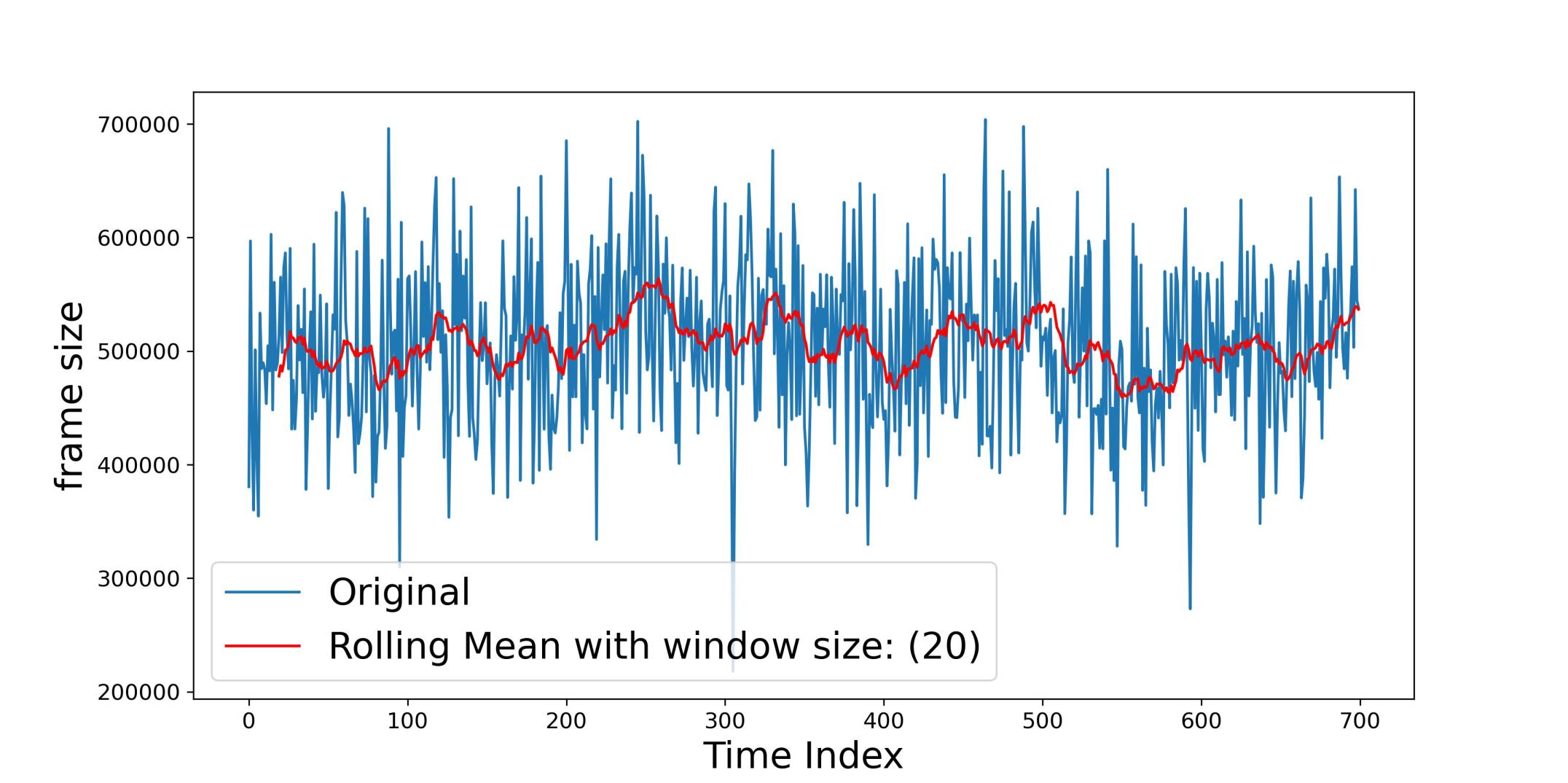

The analysis of data predictability begins with an exploratory data analysis (EDA) that suggests randomness, shown in Figure 5 depicted by blue line, in the network traffic data. Runs test is a statistical procedure which determines whether a sequence of data within a given distribution have been derived with a random process or not [23]. Runs test of the raw data provides a p-value of 0.15, which indicates no significant evidence against the null hypothesis of randomness. However, a deeper examination using decomposition techniques reveals underlying patterns in the time series, breaking it down into trend, seasonal, and residual components. This is further reinforced by applying a rolling window average (with a window size of 20), where the rolling mean (depicted by the red line in Figure 5) closely follows the shape of the data, revealing a clear trend. The corresponding Runs test, now with a p-value close to zero, confirms that the data is predictable and not random. Rolling statistics effectively uncovers this structure, making the data suitable for forecasting models.

p

| Exp. | services | Applications | |

|

Dataset I |

exp. 1 | VR Game, VR Video, VR chat/VoIP, AR, MR | Dirt Rally 2.0, Bigscreen, VR Chat, Solar System, Reality Mixer |

|

Dataset II |

exp. 1 | Slow VR Traffic, Fast VR Traffic | Steam VR Home, Beat Saber |

| Dataset III | exp. 1 | Fast VR Traffic Game 1, Fast VR Traffic Game 2, Slow VR Traffic Game 1, Slow VR Traffic Game 2 | BeatSaber, Medal of Honor, Forklift Sim, Cooking Sim. |

| exp. 2 | Slow VR Traffic, Fast VR Traffic | SForklift Sim, Cooking Sim, BeatSaber, Medal of Honor |

IV-B Performancce Metrics

The performance of Metaverse network traffic prediction models can be evaluated using the following metrics: RMSE (Root Mean Squared Error), MAPE (Mean Absolute Percentage Error), and SMAPE (Symmetric Mean Absolute Percentage Error). These metrics quantify the differences between predicted and actual traffic values, aiding in assessing the model’s accuracy. The RMSE measures the square root of the average squared differences between predicted and actual values, emphasizing more significant errors, and is given by:

where represents the predicted value, is the actual value, and is the number of predictions. The MAPE is used to compute the average percentage error, offering an intuitive interpretation of prediction errors, and is formulated as:

Lastly, SMAPE provides a symmetric approach to percentage error by accounting for both over- and under-predictions. It is computed as:

SMAPE balances the errors across different magnitudes of actual and predicted values, making it particularly suitable for dynamic environments like Metaverse traffic prediction. These metrics offer a comprehensive view of model performance, helping improve prediction accuracy for fluctuating network demands.

IV-C Performance Evaluation

The Tables II III, IV, & V present the performance evaluation of various models (Transformer, LSTM, GRU, and Stacked LSTM) across three datasets, with metrics such as RMSE, MAPE, and SMAPE. Table II shows the performance for frame size (Dataset I), Table III for frame count (Dataset II), Table IV for frame IAT (Dataset III, exp 1), and Table V for frame size (Dataset III, exp2). Each table compares the models’ non-residual version (where residuals are not learned) with the residual learning approach, the ResLearn algorithm. In each case, SMAPE improvement is calculated, highlighting the percentage improvement in predictive accuracy when residual learning is applied.

The results indicate that the ResLearn algorithm significantly improves performance across all models and datasets, especially regarding SMAPE. For example, the transformer model in Table II achieves a SMAPE reduction from 0.78 to 0.24 (68.87% improvement), and a similar trend is observed in Tables III and IV, where the transformer and Stacked LSTM models show substantial SMAPE improvements of over 70%. The observation is similar to Dataset III exp 2; however, GRU is better than the transformer. This demonstrates that residual learning can enhance the accuracy of time series models, particularly for the transformer architecture, making it the most effective among the evaluated models across all datasets.

Model Metrics RMSE MAPE SMAPE Non-Residual Algorithm Transformer 4872.49 0.0078 0.78 % SMAPE Improvement LSTM 4904.88 0.0079 0.79 GRU 4915.61 0.0079 0.79 Stacked LSTM 4929.88 0.0079 0.79 ResLearn Solution Transformer 2164.95 0.0024 0.24 68.87% LSTM 2725.03 0.0034 0.34 56.78% GRU 2786.97 0.0031 0.31 61.04% Stacked LSTM 3666.30 0.0046 0.46 41.78%

-

•

Note: The top table provides results for the Non-residual version of the model in which residuals are not learned. The bottom table provides the result of the proposed solution. The % SMAPE improvement is given in the fifth column.

Model Metrics RMSE MAPE SMAPE Non-Residual Algorithm Transformer 0.042 0.0072 0.72 % SMAPE Improvement LSTM 0.042 0.0073 0.73 GRU 0.043 0.0076 0.76 Stacked LSTM 0.043 0.0076 0.77 ResLearn Solution Transformer 0.018 0.0020 0.20 71.85% LSTM 0.017 0.0023 0.23 68.67% GRU 0.018 0.0025 0.25 67.09% Stacked LSTM 0.019 0.0022 0.22 71.72%

Model Metrics RMSE MAPE SMAPE Non-Residual Algorithm Transformer 0.037 0.0032 0.32 % SMAPE Improvement LSTM 0.034 0.0029 0.29 GRU 0.034 0.0030 0.30 Stacked LSTM 0.033 0.0027 0.29 ResLearn Solution Transformer 0.029 0.0020 0.20 38.01% LSTM 0.030 0.0021 0.21 25.11% GRU 0.035 0.0025 0.25 13.74% Stacked LSTM 0.028 0.0019 0.19 36.04%

Model Metrics RMSE MAPE SMAPE Non-Residual Algorithm Transformer 78.409 0.0103 1.04 % SMAPE Improvement LSTM 74.140 0.009 0.98 GRU 78.014 0.0103 1.03 Stacked LSTM 78.677 0.0104 1.04 ResLearn Solution Transformer 42.950 0.0041 0.41 60.69% LSTM 42.860 0.0041 0.41 58.08% GRU 40.724 0.004 0.40 61.39% Stacked LSTM 48.184 0.0042 0.42 60.1%

IV-D Performance Comparision and Discussion

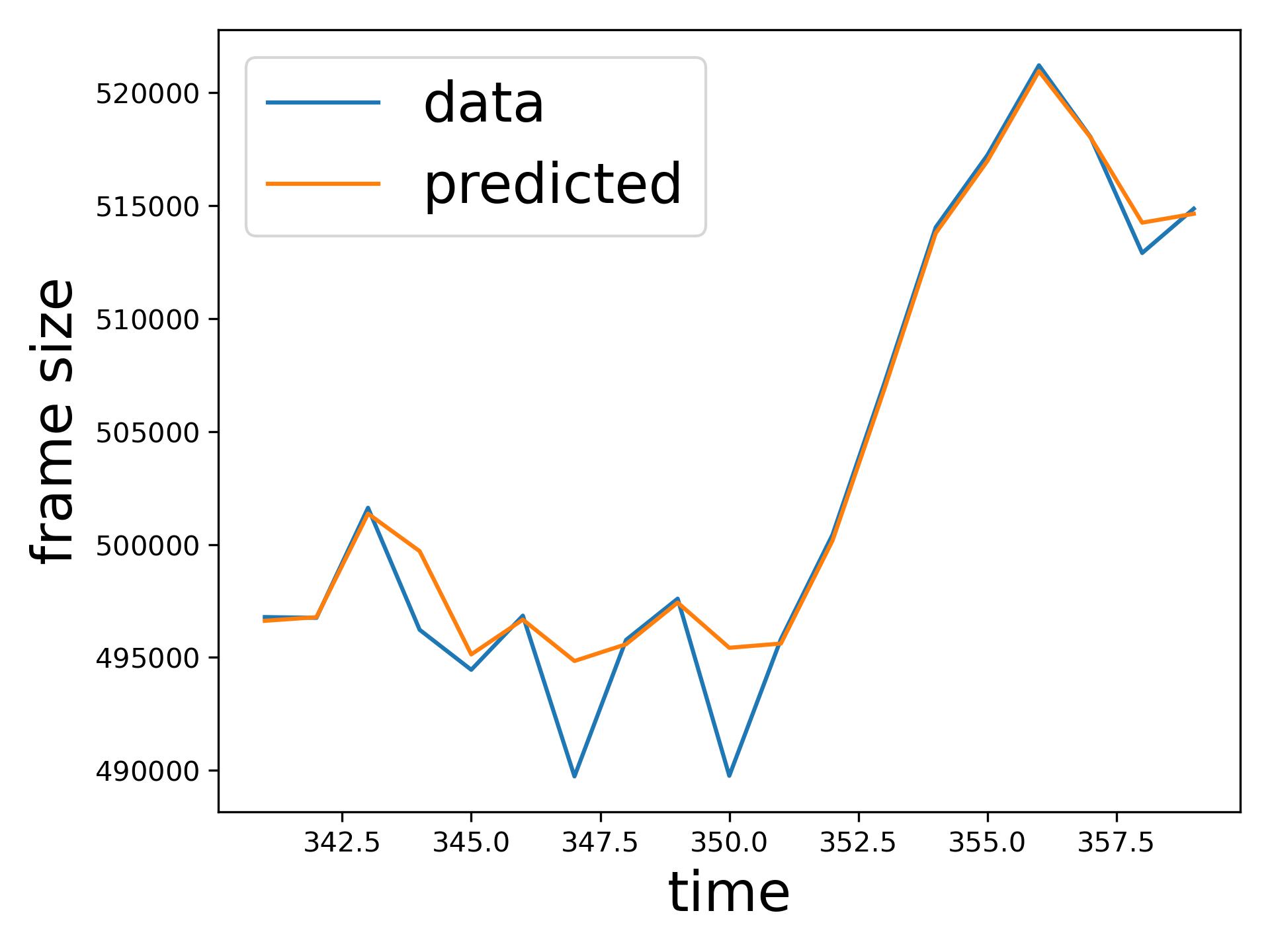

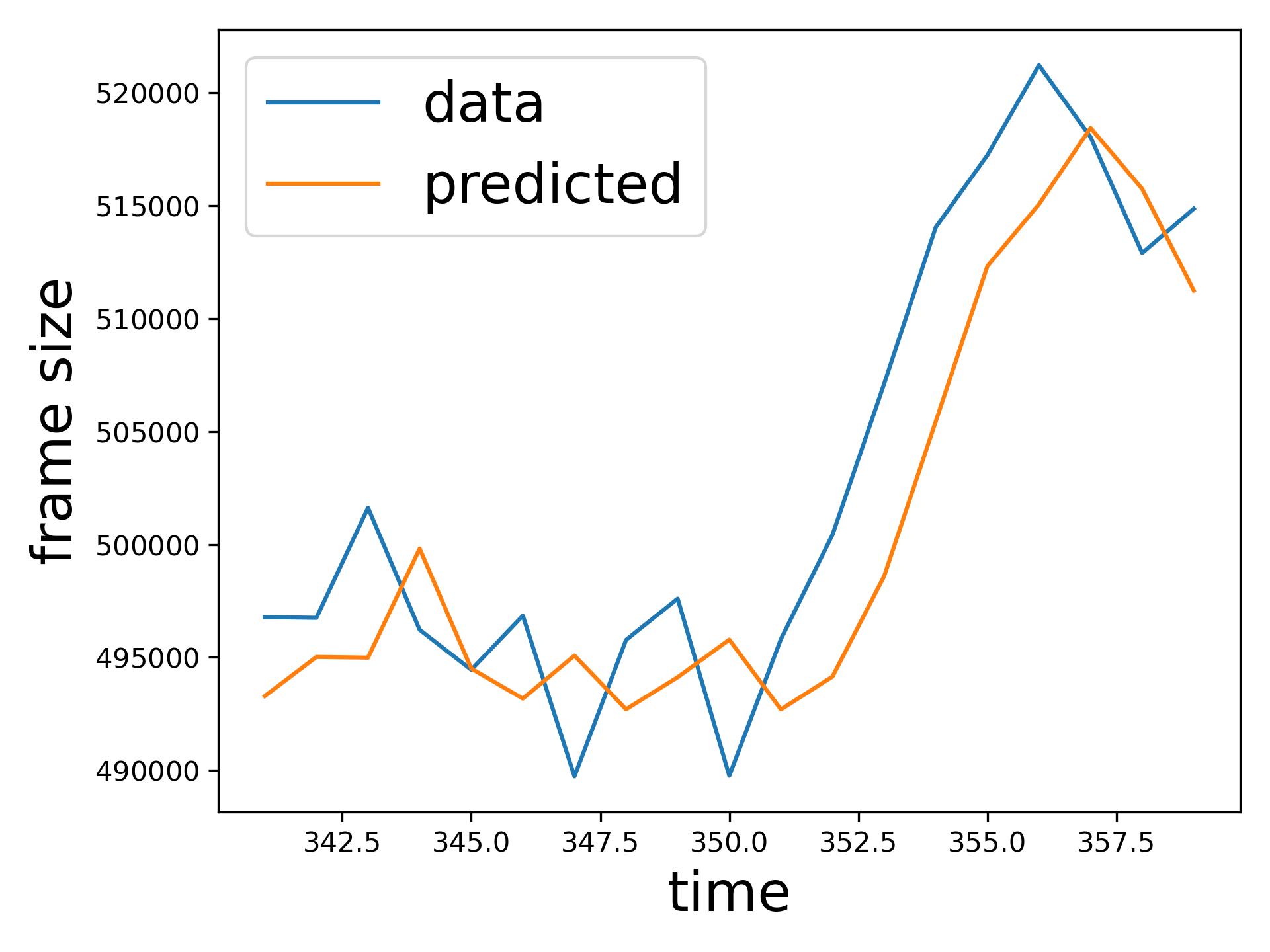

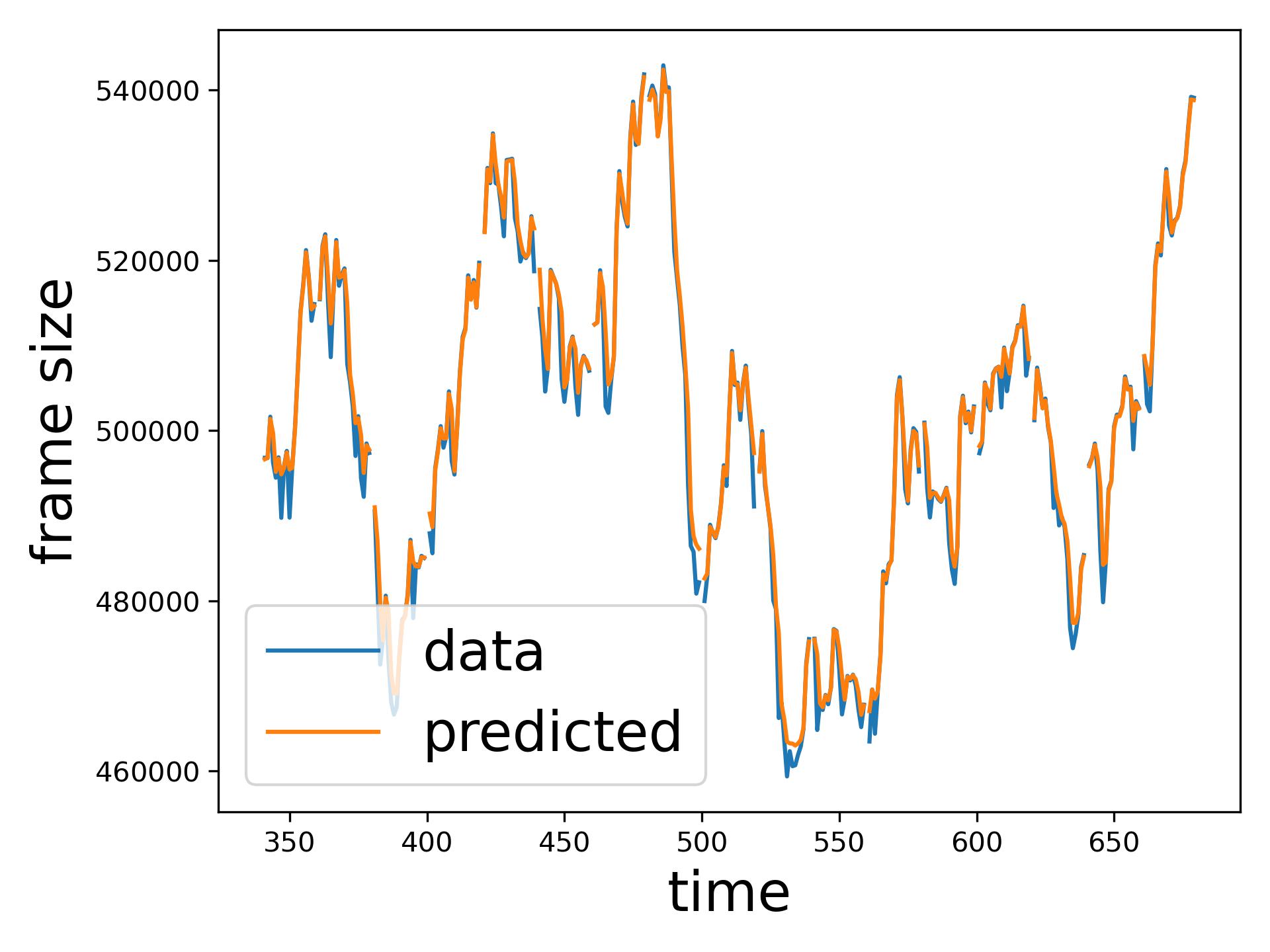

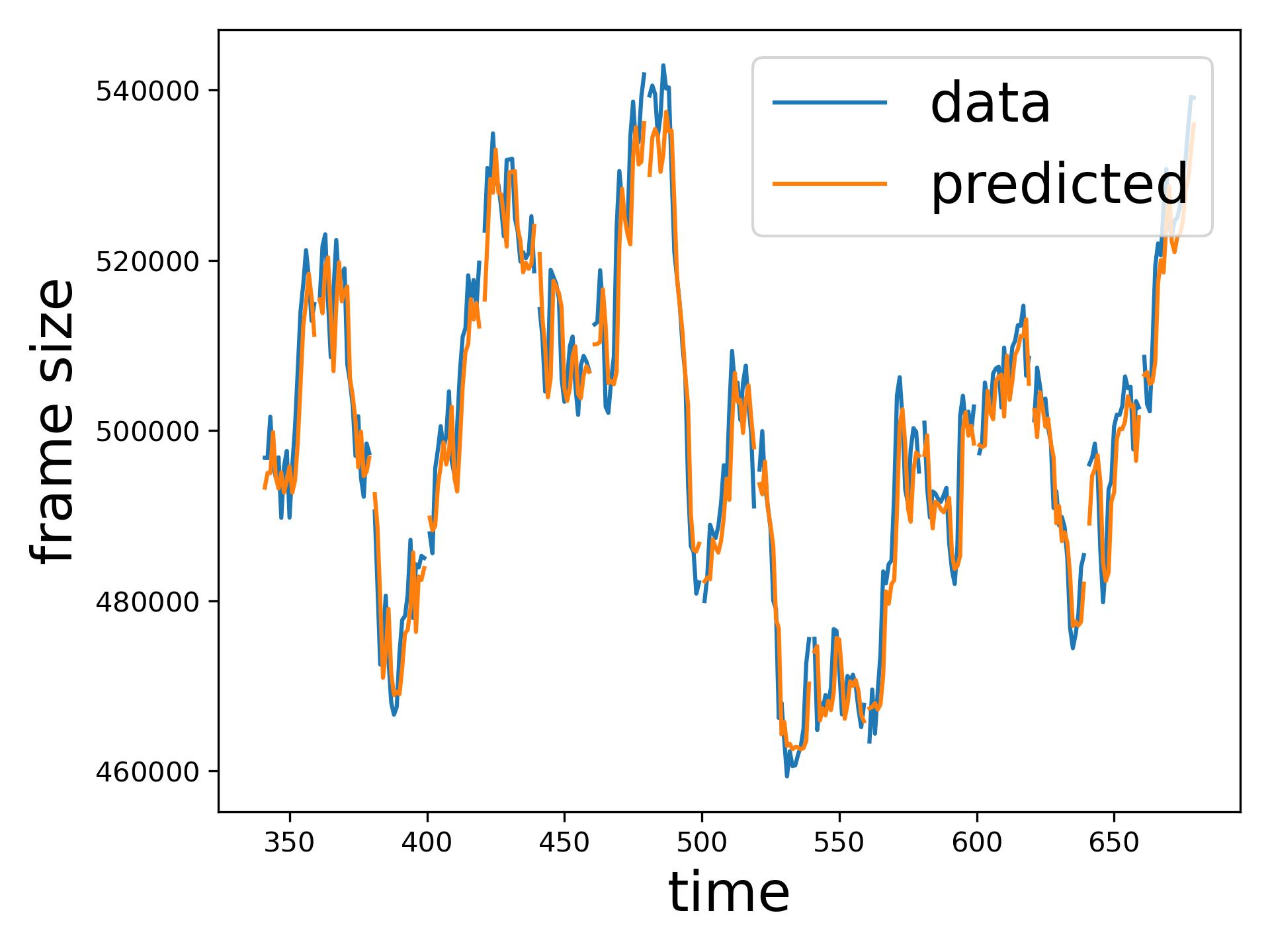

The comparison of SMAPE between the SoA transfer learning model [14] and the proposed ResLearn solution (Table VI) demonstrates a significant performance improvement in favour of ResLearn. In various traffic conditions, as considered in [14], such as BeatSaber and Steam VR house at different Mbps rates, ResLearn consistently achieves a near-perfect reduction in SMAPE, reaching over 99% improvement across all scenarios. Similar reductions are observed in other settings, like the Steam VR house 40 Mbps, where SMAPE decreased from 562.87 to 0.45, showcasing ResLearn’s superior accuracy. Figure 6 compares time series prediction of ResLearn and non-ResLearn solutions. Overall, the ResLearn solution is superior for network management because it accurately predicts the peaks at which maximum resource is required.

| Traffic | Transfer Learning SoA [14] | ResLearn | % SMAPE Improvement |

|---|---|---|---|

| BeatSaber 40 Mbps | 404.05 | 0.36 | 99.91% |

| BeatSaber 54 Mbps | 285.29 | 1.01 | 99.64% |

| BeatSaber 120 Mbps | 371.82 | 0.15 | 99.95% |

| Steam VR house 40 Mbps | 562.87 | 0.45 | 99.92% |

| Steam VR house 54 Mbps | 404.41 | 0.69 | 99.82% |

V Conclusion and Future Work

Our work significantly advances Metaverse network traffic prediction by introducing a comprehensive, real-world dataset and developing novel algorithms including the view-frame and ResLearn algorithms. These algorithms enable ISPs to manage network resources in an effective manner, satisfying the QoS and enhancing the user experience for Metaverse applications. Given that our solution substantially reduces prediction errors about 99% than the SoA [14], future work can focus on expanding the dataset to cover a broader range of Metaverse applications and environments, integrating advanced AI techniques to improve prediction accuracy further, and exploring real-time deployment in diverse network architectures. Additionally, adaptive algorithms for dynamic resource allocation in response to traffic fluctuations will be investigated for enhancing the robustness in provisioning Metaverse ecosystem.

References

- [1] E. Kontogianni and L. Anthopoulos, “Towards a standardized metaverse definition: Empirical evidence from the itu metaverse focus group,” IEEE Engineering Management Review, 2024.

- [2] A. Suh and J. Prophet, “The state of immersive technology research: A literature analysis,” Computers in Human behavior, vol. 86, pp. 77–90, 2018.

- [3] E. Ekudden, “Future network trends driving universal metaverse mobility,” https://www.ericsson.com/4a7138/assets/local/reports-papers/ericsson-technology-review/docs/2022/technology-trends-2022.pdf, 2022, (Accessed on 08/14/2024).

- [4] Qualcomm, “The mobile future of extended reality (XR),” https://www.qualcomm.com/content/dam/qcomm-martech/dm-assets/documents/awe_2017_-_the_mobile_future_of_extended_reality_-for_pdf_1.pdf, 11 2020, (Accessed on 08/14/2024).

- [5] Roblox, “Roblox partners with sony music entertainment to bring their artists into the metaverse,” https://corp.roblox.com/newsroom/2021/07/roblox-partners-sony-music-entertainment-bring-artists-metaverse, 06 2021, (Accessed on 08/14/2024).

- [6] A. Musamih, I. Yaqoob, K. Salah, R. Jayaraman, Y. Al-Hammadi, M. Omar, and S. Ellahham, “Metaverse in healthcare: Applications, challenges, and future directions,” IEEE Consumer Electronics Magazine, vol. 12, no. 4, pp. 33–46, 2022.

- [7] H. Wang, H. Ning, Y. Lin, W. Wang, S. Dhelim, F. Farha, J. Ding, and M. Daneshmand, “A survey on the metaverse: The state-of-the-art, technologies, applications, and challenges,” IEEE Internet of Things Journal, vol. 10, no. 16, pp. 14 671–14 688, 2023.

- [8] S. Zhao, H. Abou-zeid, R. Atawia, Y. S. K. Manjunath, A. B. Sediq, and X.-P. Zhang, “Virtual reality gaming on the cloud: A reality check,” in 2021 IEEE Global Communications Conference (GLOBECOM). IEEE, 2021, pp. 1–6.

- [9] I. T. Feldstein and S. R. Ellis, “A simple video-based technique for measuring latency in virtual reality or teleoperation,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 9, pp. 3611–3625, 2020.

- [10] M. Li, J. Gao, C. Zhou, X. Shen, and W. Zhuang, “User dynamics-aware edge caching and computing for mobile virtual reality,” IEEE Journal of Selected Topics in Signal Processing, vol. 17, no. 5, pp. 1131–1146, 2023.

- [11] Y. S. K. Manjunath, S. Zhao, X.-P. Zhang, and L. Zhao, “Time-distributed feature learning for internet of things network traffic classification,” IEEE Transactions on Network and Service Management, p. ”early access”, 2024.

- [12] W. Luo, W. Liu, D. Lian, and S. Gao, “Future frame prediction network for video anomaly detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 11, pp. 7505–7520, 2022.

- [13] M. Jin, H. Y. Koh, Q. Wen, D. Zambon, C. Alippi, G. I. Webb, I. King, and S. Pan, “A survey on graph neural networks for time series: Forecasting, classification, imputation, and anomaly detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024.

- [14] S. Vaidya, H. Abou-Zeid, and D. Krishnamurthy, “Transfer learning for online prediction of virtual reality cloud gaming traffic,” in GLOBECOM 2023 - 2023 IEEE Global Communications Conference, 2023, pp. 4668–4673.

- [15] Y. S. Kuruba Manjunath, L. Zhao, and X.-P. Zhang, “Metaverse network traffic for classification and prediction,” 2024. [Online]. Available: https://dx.doi.org/10.21227/0qs9-f852

- [16] A. Vaswani, “Attention is all you need,” Advances in Neural Information Processing Systems, 2017.

- [17] Y. S. K. Manjunath, M. Szymanowski, and A. Wissborn, “yoga-suhas-km/reslearn: Reslearn: Transformer-based residual learning for metaverse network traffic prediction,” https://github.com/yoga-suhas-km/ResLearn, (Accessed on 10/11/2024).

- [18] Meta, “Set up and connect meta quest link and air link — meta store,” https://www.meta.com/help/quest/articles/headsets-and-accessories/oculus-link/connect-with-air-link/, (Accessed on 10/08/2024).

- [19] V. Desktop, “Virtual desktop streamer,” https://www.vrdesktop.net/, (Accessed on 09/29/2024).

- [20] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778.

- [21] B. Shah and H. Bhavsar, “Time complexity in deep learning models,” Procedia Computer Science, vol. 215, pp. 202–210, 2022.

- [22] S. Baldoni, F. Battisti, F. Chiariotti, F. Mistrorigo, A. B. Shofi, P. Testolina, A. Traspadini, A. Zanella, and M. Zorzi, “Questset: A VR dataset for network and quality of experience studies,” in Proceedings of the 15th ACM Multimedia Systems Conference, ser. MMSys ’24, 2024, p. 408–414. [Online]. Available: https://doi.org/10.1145/3625468.3652187

- [23] C. Asano, “Runs test for a circular distribution and a table of probabilities,” Annals of the Institute of Statistical Mathematics, vol. 17, no. 1, pp. 331–346, 1965.