Rethinking Bjøntegaard Delta for Compression Efficiency Evaluation: Are We Calculating It Precisely and Reliably?

Abstract

For decades, the Bjøntegaard Delta (BD) has been the metric for evaluating codec Rate-Distortion (R-D) performance. Yet, in most studies, BD is determined using just 4-5 R-D data points, could this be sufficient? As codecs and quality metrics advance, does the conventional BD estimation still hold up? Crucially, are the performance improvements of new codecs and tools genuine, or merely artifacts of estimation flaws? This paper addresses these concerns by reevaluating BD estimation. We present a novel approach employing a parameterized deep neural network to model R-D curves with high precision across various metrics, accompanied by a comprehensive R-D dataset. This approach both assesses the reliability of BD calculations and serves as a precise BD estimator. Our findings advocate for the adoption of rigorous R-D sampling and reliability metrics in future compression research to ensure the validity and reliability of results.

1 Introduction

Over the past three decades, significant advancements have been made in multimedia coding, cementing its role as a cornerstone of modern technology. To quantitatively measure coding performance in a scalar metric, the BD metric [1], introduced in 2001, has since become the gold standard for comparing the relative rate-distortion (R-D) performance of different codecs.

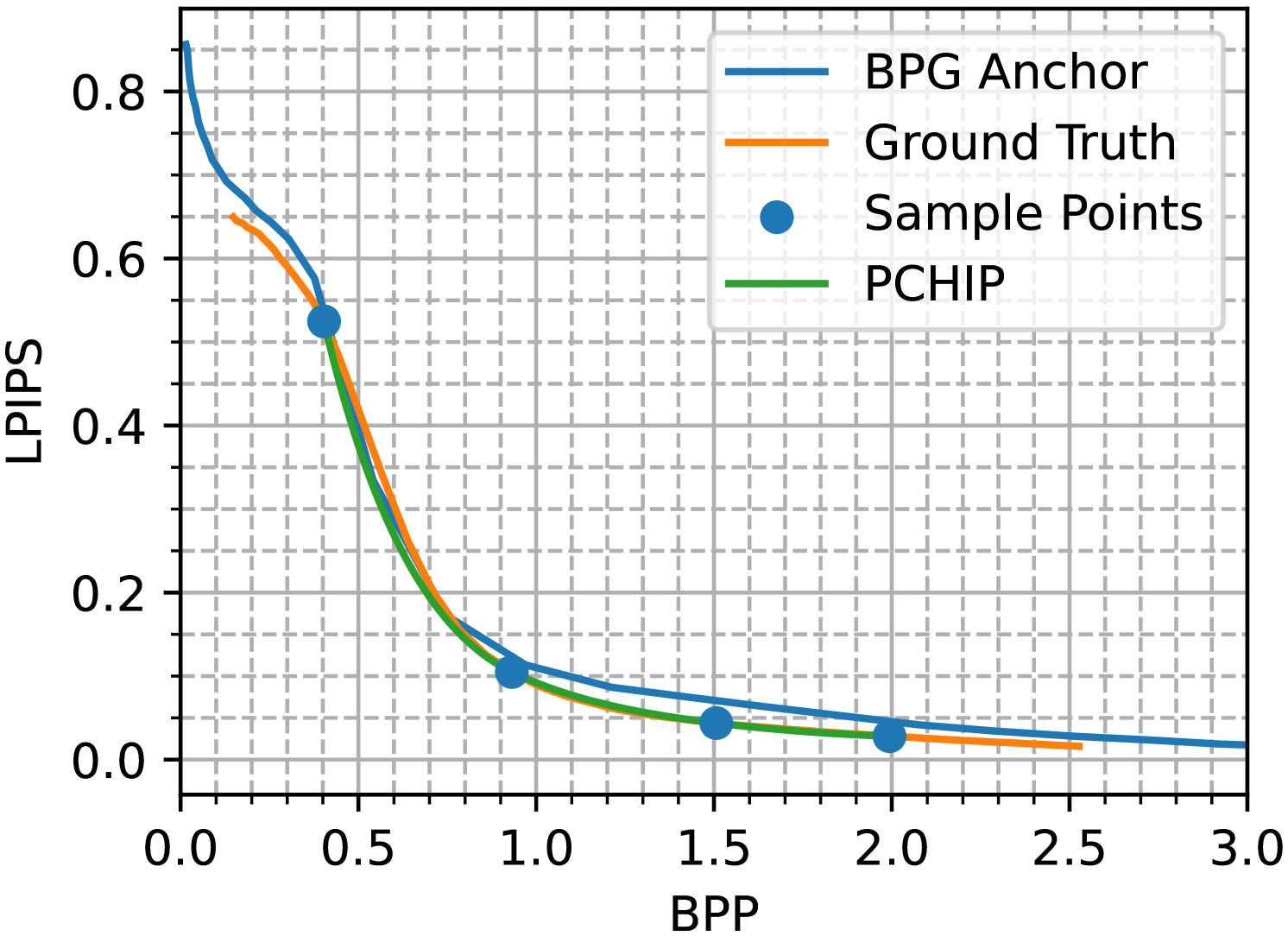

BD is derived from approximating R-D curves using sample points, as acquiring accurate R-D curves is typically expensive. Initially, BD estimation was designed for traditional codecs using Peak Signal-to-Noise Ratio (PSNR) for distortion measurement, where a bilinear or cubic function provided a reasonable fit to the R-D curve, requiring only 4-5 sample points. Later, more sophisticated interpolation methods such as Cubic Spline Interpolation (CSI), Piecewise Cubic Hermite Interpolating Polynomial (PCHIP), and Akima spline have been utilized to achieve high-fidelity R-D curve fitting [2]. However, we find that the evolution of codec technology, especially the rise of learning-based codecs and complex quality metrics, has made BD estimation complicated, making existing methods increasingly inadequate. An illustrative example is shown in Fig. 1(a). In this instance, the curve derived from the PCHIP interpolation starkly deviates from the actual R-D curve, which is determined through dense sampling across the R-D Laplacian parameter . This significant discrepancy has led to a BD-bitrate (BD-BR) estimation bias exceeding 7%.

The implications of such phenomena are indeed serious. Typically, enhancements in codec performance result from incremental advancements introduced by various coding tools. However, potential bias in BD estimation may obscure the actual performance gains achieved by these tools. In such cases, it becomes difficult to determine whether the coding technology has genuinely led to substantial improvements.

In light of these challenges and limitations, we introduce a robust method for high-precision BD estimation across diverse compression scenarios, enhanced by a reliability assessment to determine the probability distribution of BD values from R-D sample points. Our method’s validity is confirmed through extensive testing on a dataset we constructed. The key contributions can be summarized as follows:

-

•

We have established a large-scale, high-precision R-D dataset to verify the accuracy of existing BD estimation algorithms.

-

•

We introduce a novel BD computation technique that not only offers a confidence measure for the predicted BD value but also functions as a high-accuracy BD predictor itself.

-

•

We demonstrate the superiority of the framework in our evaluation dataset, showing reasonable confidence predictions as well as BD estimates with higher accuracy.

2 Physical and Mathematical Concept of BD

The calculation of BD is based on multiple sample points selected from the rate-distortion (R-D) curves of two codecs: the anchor codec, represented by its rate and distortion , and the target codec, represented by its rate and distortion . For a reliable estimation of BD, at least four sample points are required on the R-D curves. The calculation proceeds as follows:

Step 1: Convert the bitrates to a logarithmic scale to equally weigh different rate intervals, preventing bias toward higher bitrates:

| (1) |

Step 2: Apply interpolation to fit the R-D curves of both codecs using the sample points . This results in as a function of and as a function of , denoted as , , , and .

Step 3: Define the measurement intervals for BD-BR and BD-quality. Since the R-D curve interpolations often do not identically overlap, the valid intervals are given by the intersection of the two R-D curves’ axes projections. For BD-BR, the interval is:

| (2) |

For BD-quality, the interval is:

| (3) |

Step 4: Calculate BD-BR (also called BD-rate), , as:

| (4) |

which measures the average bitrate reduction of codec B compared to codec A for the same distortion. The BD-quality, , is similarly calculated as:

| (5) |

describing the average change in distortion between codecs for a given bitrate, also on a logarithmic scale. Typically, the term “BD-quality” is replaced with the corresponding quality assessment indicator, such as “BD-PSNR”, to denote the calculation of the change in the respective quality metric under the same conditions.

A recent work [3] provides a comprehensive comparison of existing tools for calculating BD, with the primary distinction being the R-D interpolation method used in the second step. The most commonly used algorithms include cubic function fitting, CSI, PCHIP, and Akima spline. However, these methods cannot completely describe the difference between the difference between the fitted curve and the actual one, particularly evident when the number of sample points is insufficient. Moreover, the lack of confidence prevents a quantitative assessment of the estimation bias in BD. To address these issues, we pioneered the Bjøntegaard Delta Confidence Interval (BDCI) for quantitatively assessing BD measurement errors.

3 Our Approach: New Insights Named Bjøntegaard Delta Confidence Interval

A key insight is that calculating BD does not necessitate explicitly fitting an R-D curve. The sole purpose of the curve is to facilitate the computation of a definite integral over a specified interval along the relevant axis. Therefore, our method eliminates the need for curve fitting and directly estimates the integral using the sample points.

Based on this insight, we can generalize the integration problem as follows: Given the coordinates of points, for , and the interval of integration , where is assumed to be ordered, we aim to solve the definite integral over the intervals of a curve formed by this set of points, without explicitly constructing a curve:

For BD-BR, corresponds to and to ; for BD-quality, the reverse holds.

We assume that obeys a normal distribution under the given conditions, . We employ the Maximum Likelihood Estimation (MLE) method to model the conditional probability distribution of relative to the sample points. The likelihood function , is defined as the joint probability of observing the integral given the distribution parameters and , where and are functions of and . After obtaining , we can determine a confidence interval for , such that has a sufficiently high probability of falling within this interval as a quantitative measure of the confidence in the estimate. Based on the properties of the normal distribution, we choose the interval as . The corresponding BD values of the interval are named as Bjøntegaard Delta Confidence Interval (BDCI), which represents the high probability that the BD falls within the interval. The variants of this indicator on and are named “BDCI-BR” and “BDCI-quality” correspondingly. Also, the term “quality” is replaced with the name of the indicator when using a specific indicator, e.g. BDCI-PSNR.

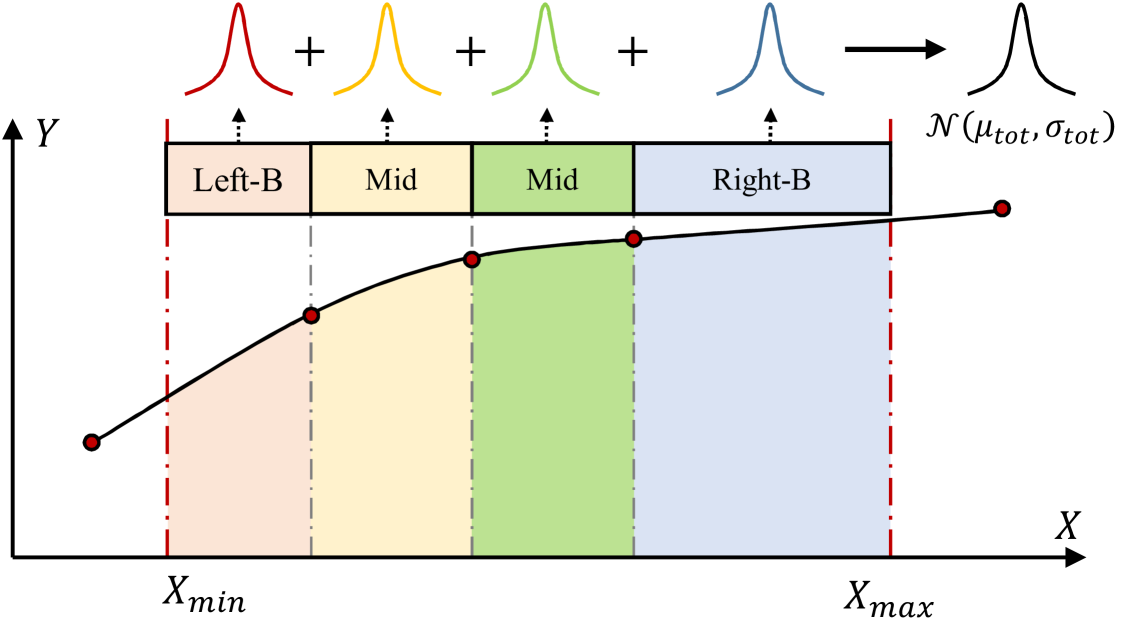

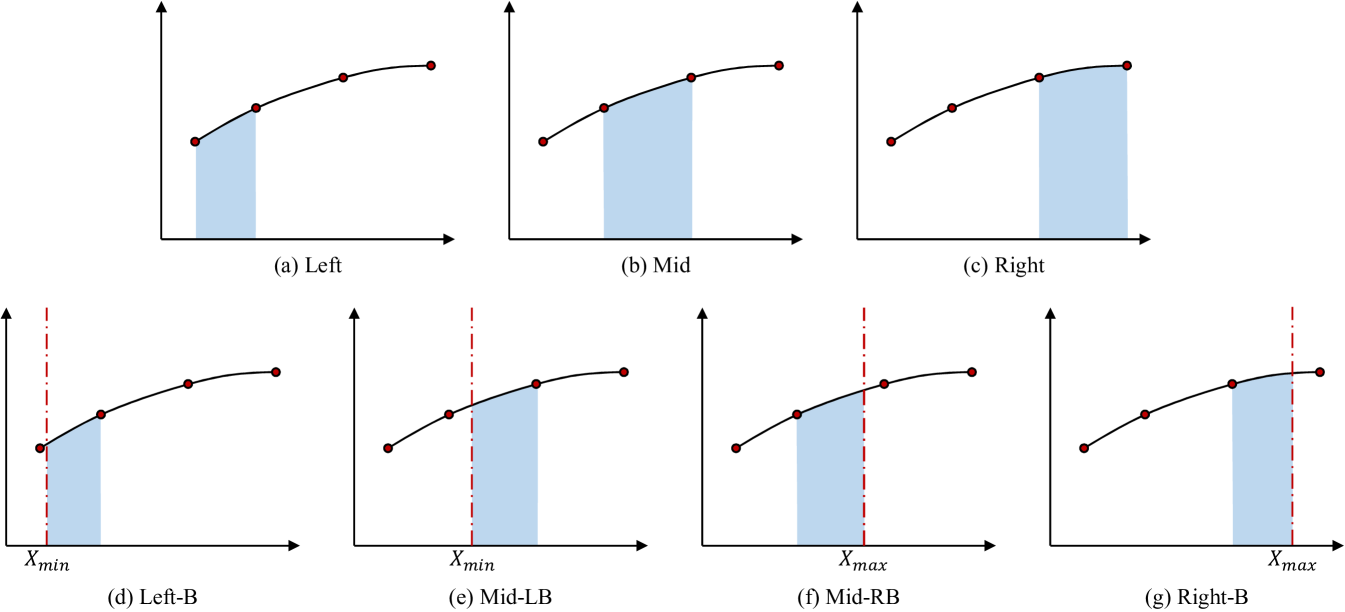

We note that the number of input sample points is variable. Therefore, we segment the curve along the values of the sample points and predict each segment separately. Specifically,

| (6) |

where the prediction for the integral on segment follows a normal distribution .

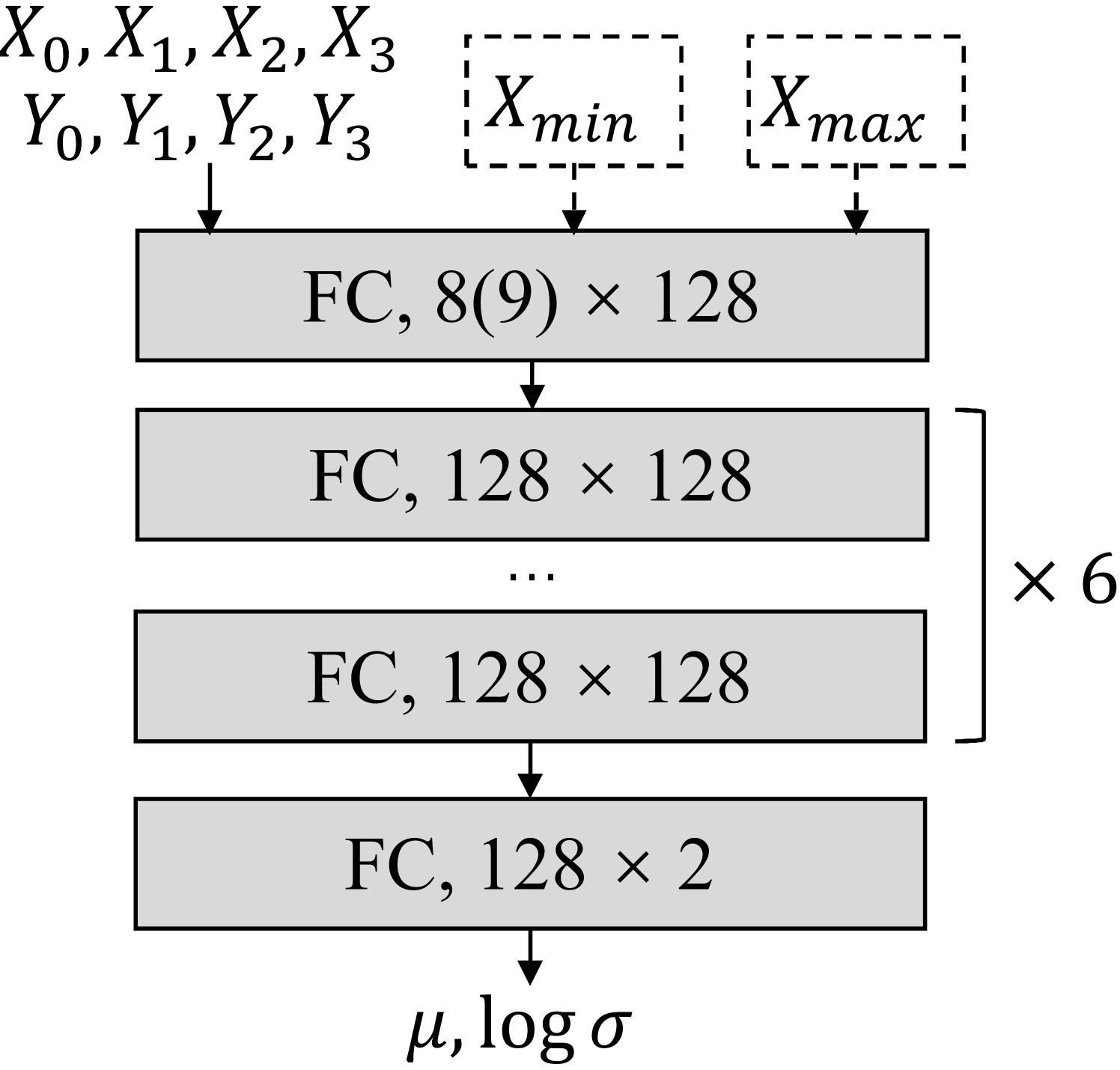

We assume the integral over each segment obeys an independent distribution to each other and modeled using its four nearest sample points. This categorizes the segments into 7 distinct types, all of which are detailed in Figure 3. For segments that include the boundaries of the integral, an additional parameter, either or , is required, resulting in an input size of 9. Conversely, for segments that do not include these boundaries, the input size is reduced to 8. Each type is modeled using a simple multilayer perceptron (MLP), as illustrated in Figure 1(b). The network’s output consists of two components, representing the predictions for and , with indicating the index of the segment. The MLP is optimized by the log-likelihood loss:

| (7) |

where are the parameters of the MLP and are the inputs to the MLP, which include the coordinates of the 4 nearest points and the or boundary. is the Probability Density Function (PDF) of the normal distribution .

The MLP incorporates six hidden layers, each consisting of 128 neurons, which results in an approximate total of 80,000 parameters. This network does not require significant resources from a standard CPU. It is capable of performing its calculations at a remarkably swift pace.

To improve the model’s generalization, the sample point coordinates are normalized before being fed into the MLP. Specifically, the minimum and maximum values of and are identified and scaled isometrically to the 0-1 range:

| (8) | ||||

| (9) |

where and are the normalized values for and , respectively. This ensures that our model captures the shape information of the curve, independent of the actual range of the values.

4 R-D Dataset

We constructed an extensive R-D dataset to assess the reliability of our proposed scheme. This dataset encompasses precise R-D curves for six diverse image codecs across 315 widely utilized test images. The codecs include both conventional and Variable-Bitrate (VBR) Lossy Image Codecs (LICs), as detailed in Table 1. The images were sourced from the Kodak [4], CLIC2020 [5], and TECNICK [6] datasets.

In addition, we examined seven prevalent quality assessment metrics: Root Mean Square Error (RMSE) and PSNR for signal fidelity, Structural Similarity Index (SSIM) [7] and Multi-Scale Structural Similarity Index (MS-SSIM) [8] for structural similarity, PSNR-HVS [9] and PSNR-HVS-M [10] for subjective human visual system metrics, and Learned Perceptual Image Patch Similarity (LPIPS) [11] for feature similarity.

Each image was encoded 50 times using each codec with consistent quality parameters to generate the most precise R-D curve data. This rigorous process resulted in 94,500 decoded images and 13,230 R-D curves.

Utilizing the R-D curve data from our sampling, we developed an R-D benchmark test set. Each test set sample comprises the exact anchor R-D curve paired with the target codec R-D curve. Concurrently, we conducted uniform QP-based sampling on the target codec to establish fixed sample points, with each point labeled accordingly. We sampled each benchmark R-D curve once with 4, 5, 6, 7, and 8 sample points, yielding a total of 47,688 valid sample sets. BPG served as the anchor for all samples. Our model’s training process ensures that samples derived from the test set are excluded from training.

| Name | Type | # of Params |

|---|---|---|

| JPEG [12] | Traditional | - |

| BPG [13] | Traditional | - |

| EVC [14] | LIC (VBR) | 17.4M |

| QARV [15] | LIC (VBR) | 93.4M |

| LIC-TCM [16] | LIC (retrained VBR) | 160.5M |

| MLIC++ [17] | LIC (retrained VBR) | 116.7M |

5 Experimental Results

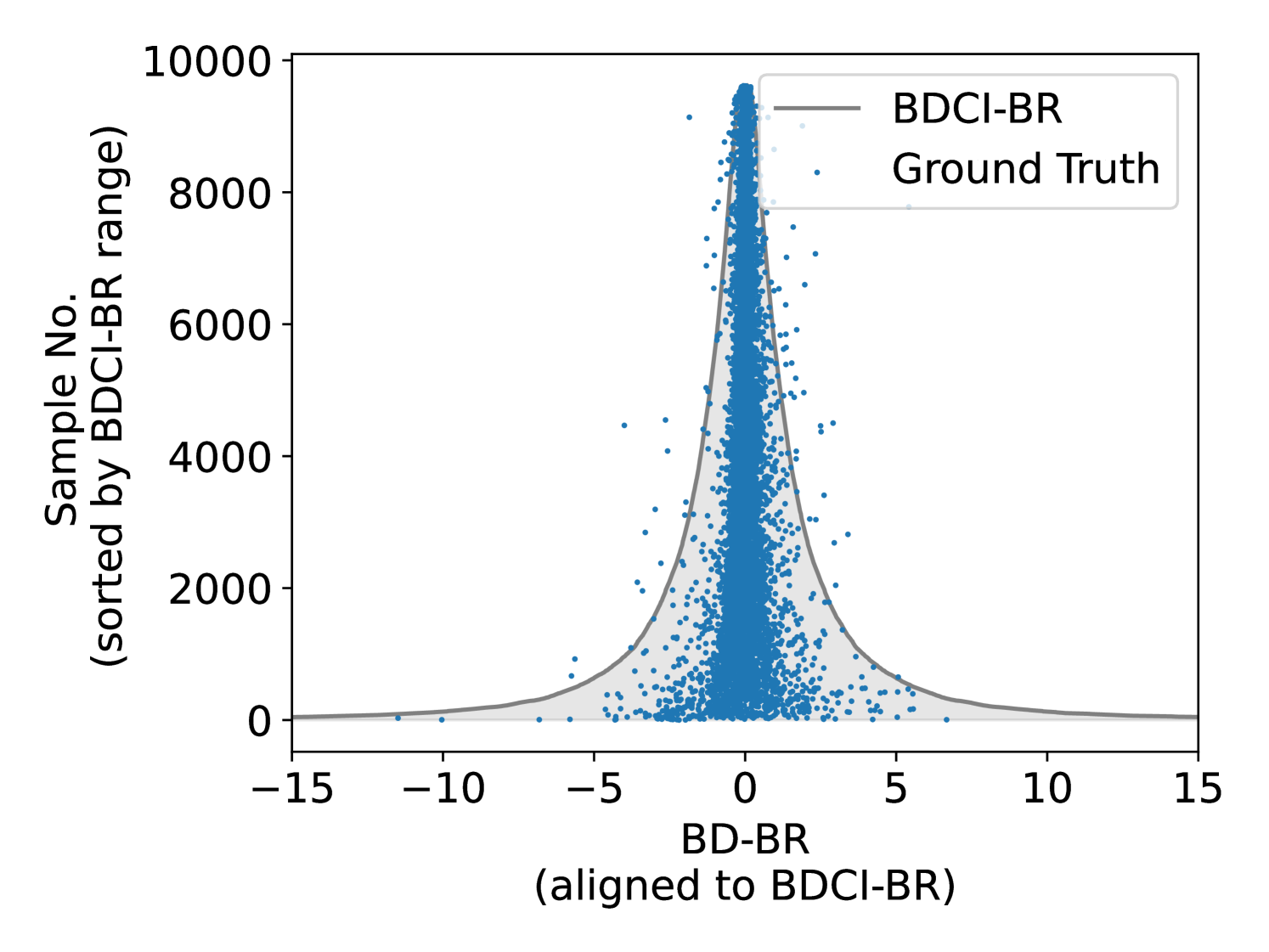

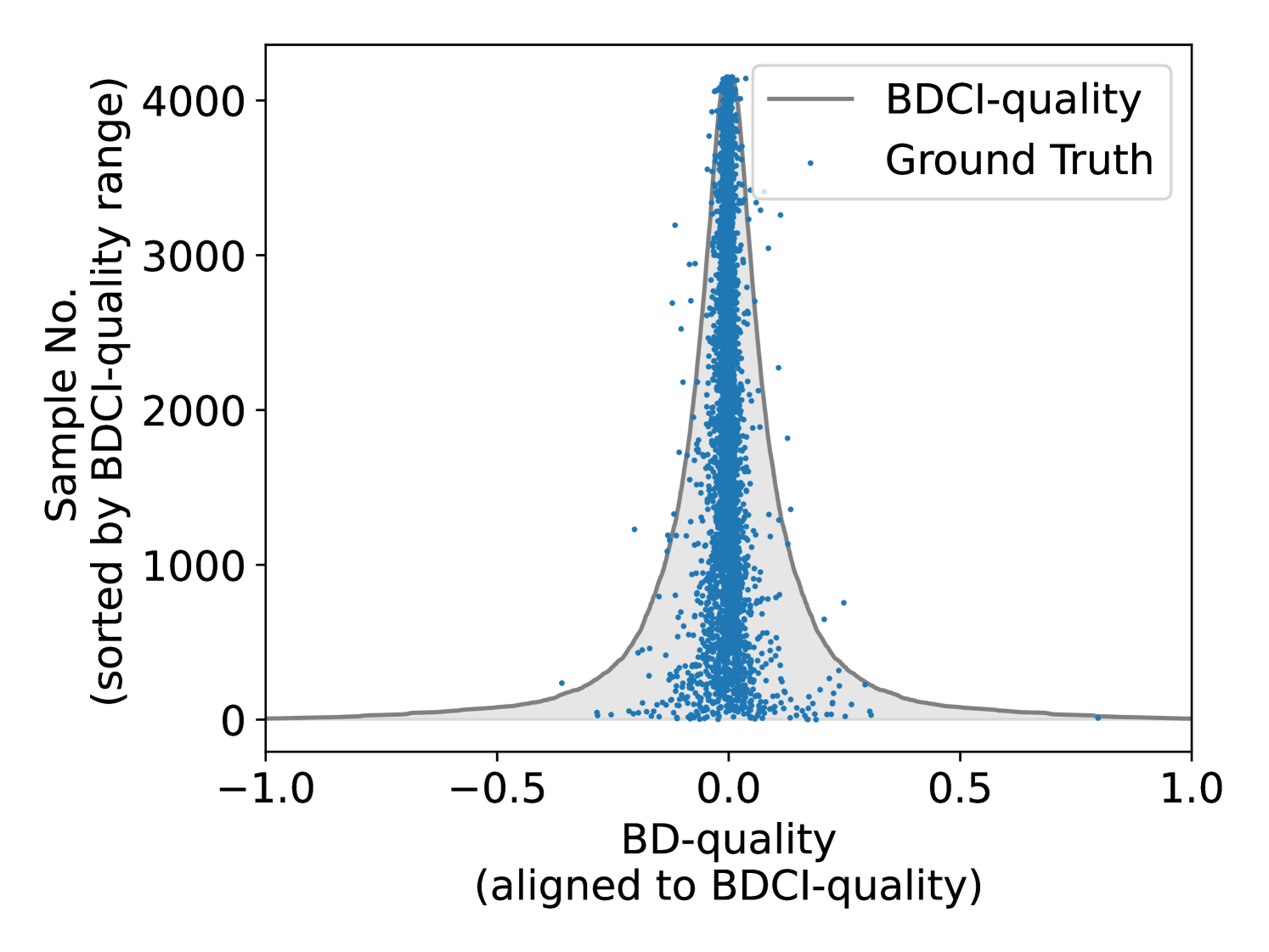

We conducted an evaluation to ascertain the efficacy of the BDCI metric within our dataset. Figure 4 illustrates the correlation between BDCI and the actual BD values when utilizing four sample points for the test set, with the data normalized for clarity. The results indicate that BDCI accurately predicts the BD confidence intervals in the majority of instances, with less than 1% of ground truth BD values lying outside the BDCI.

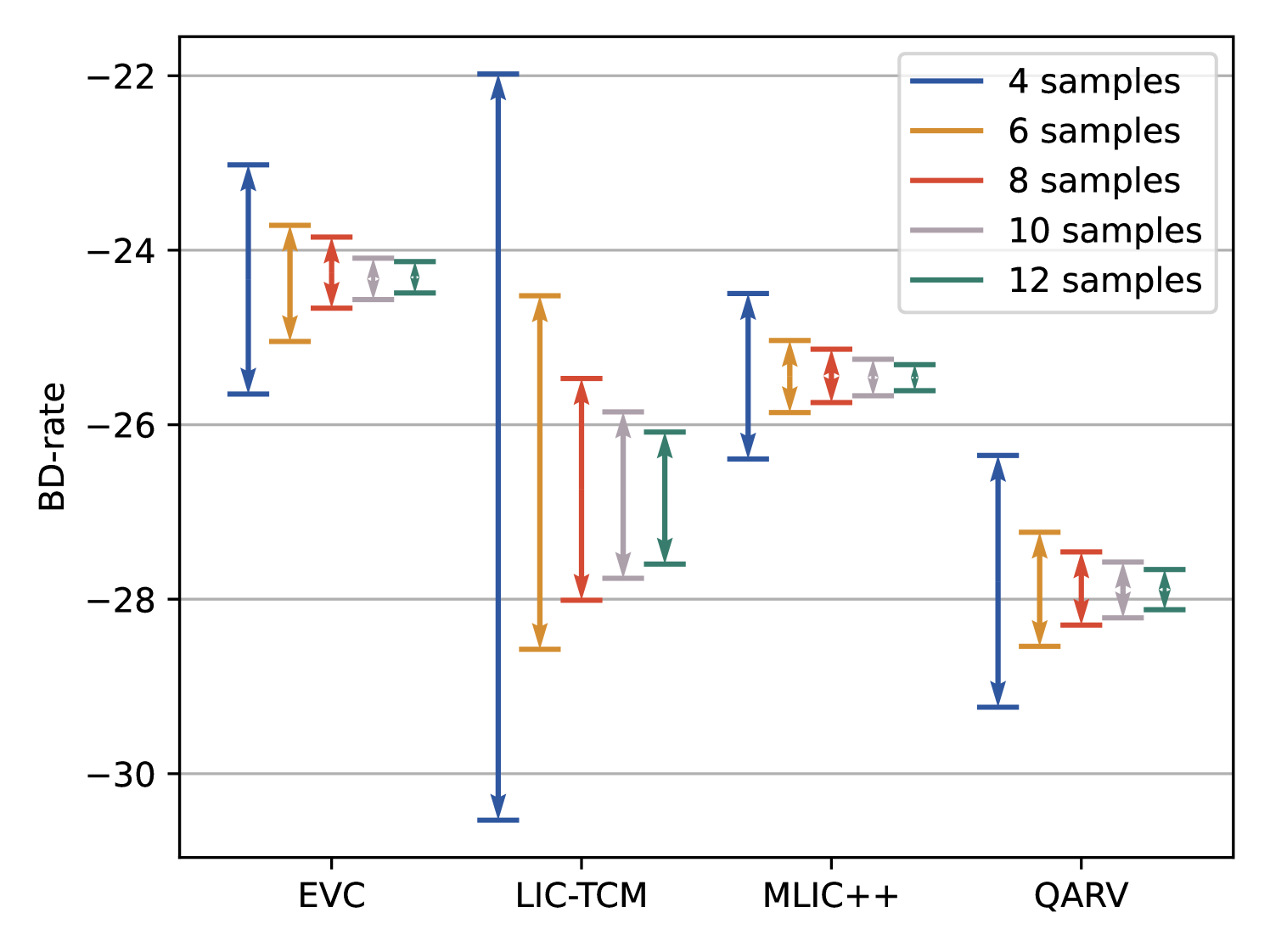

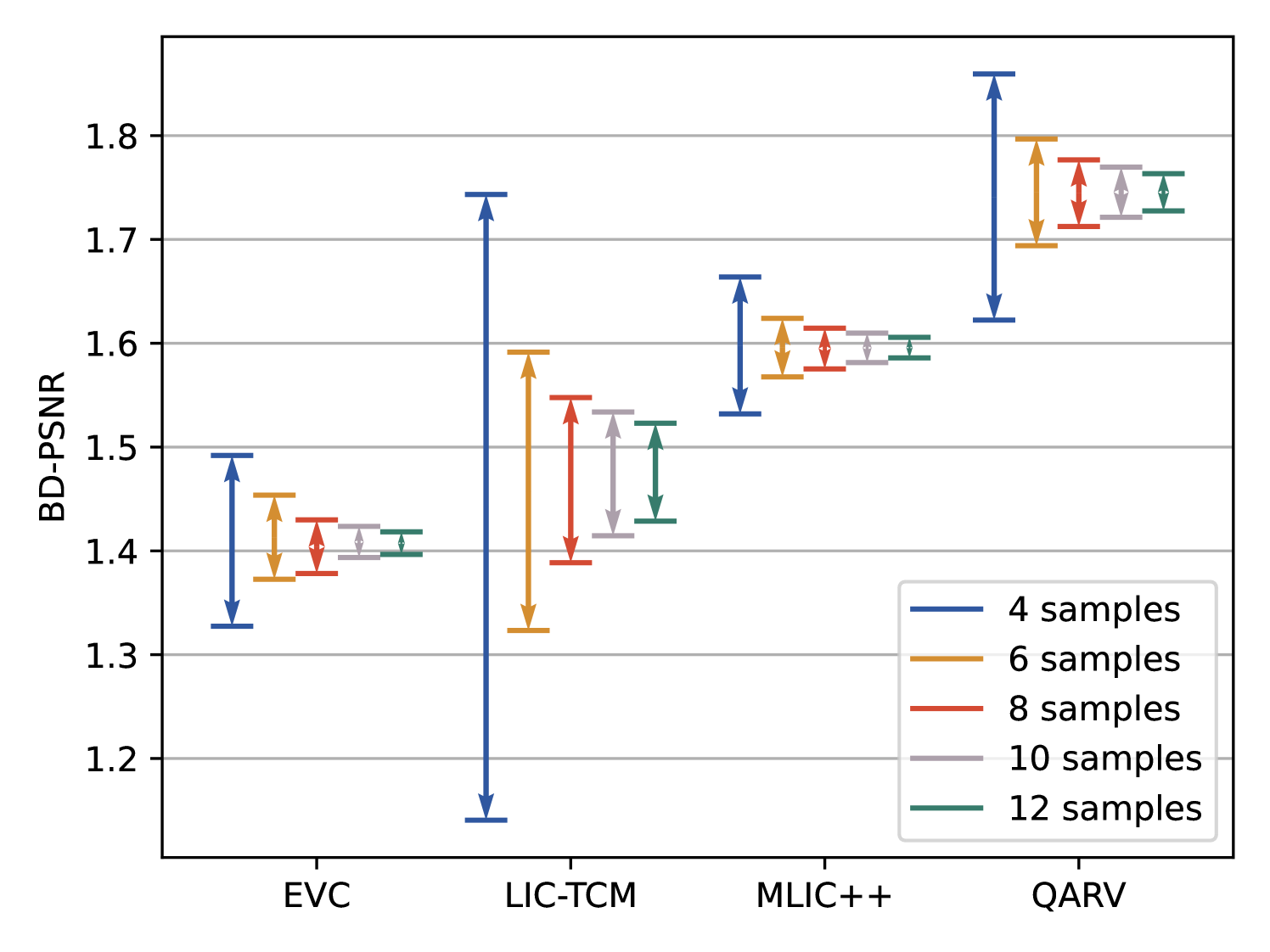

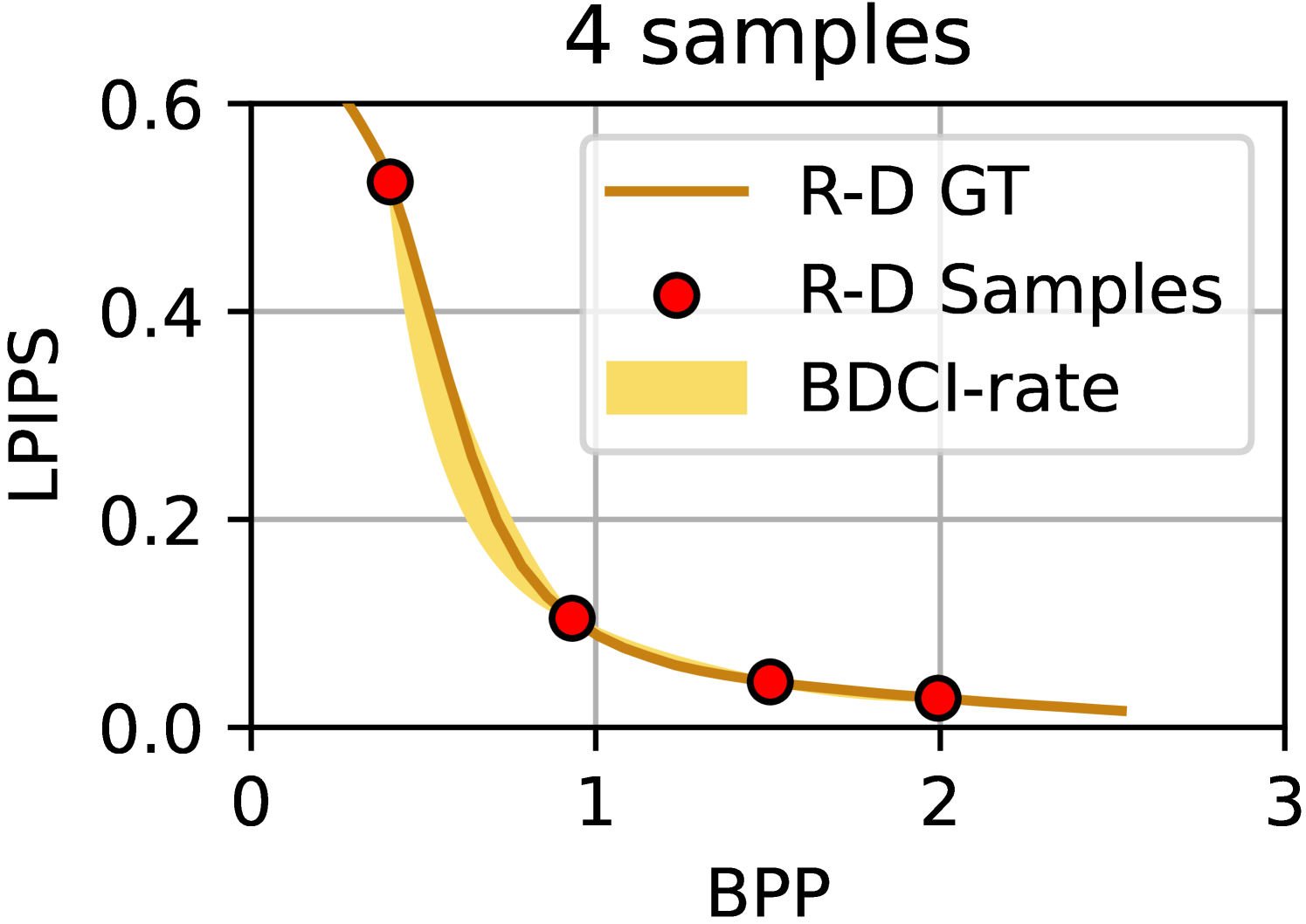

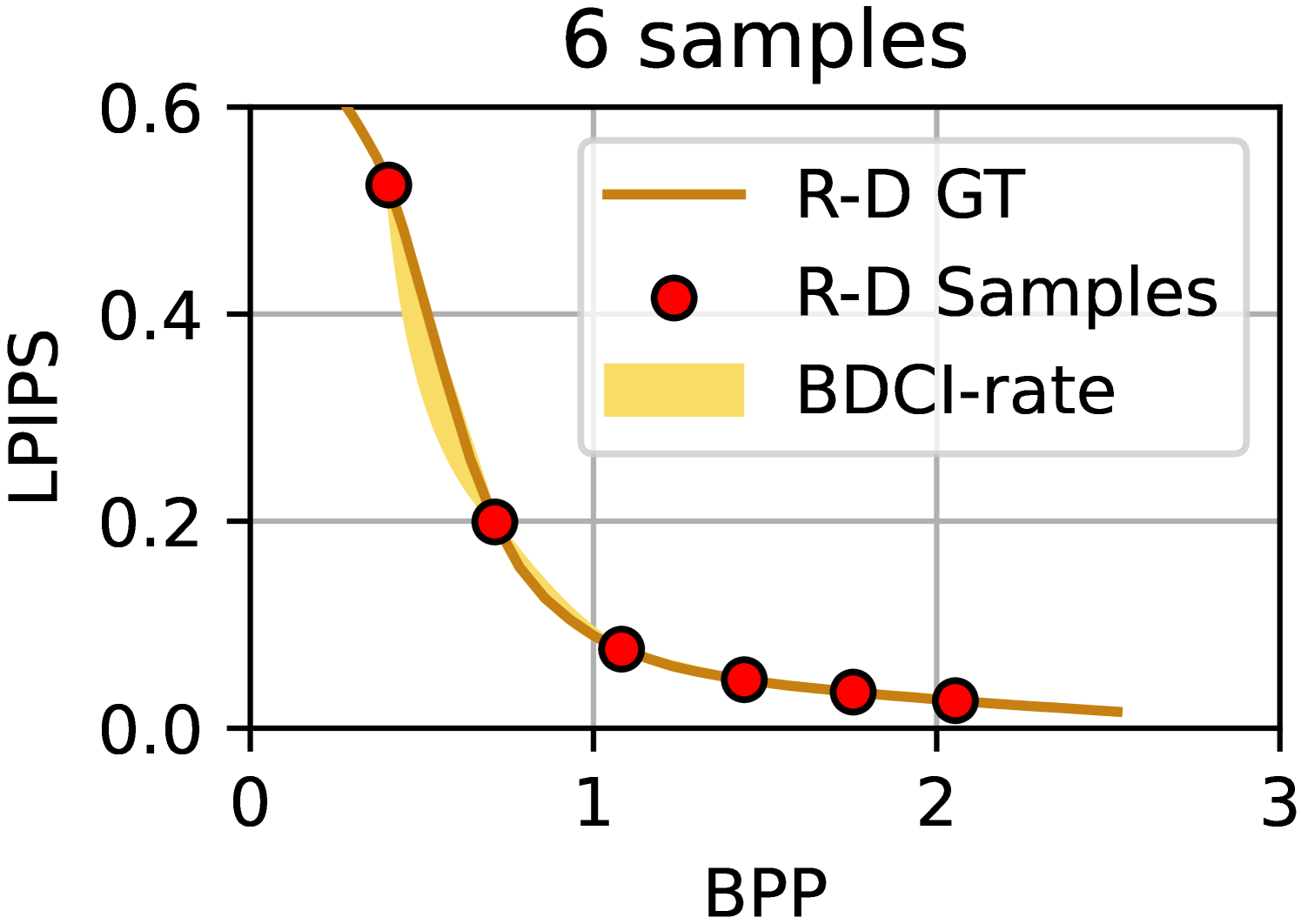

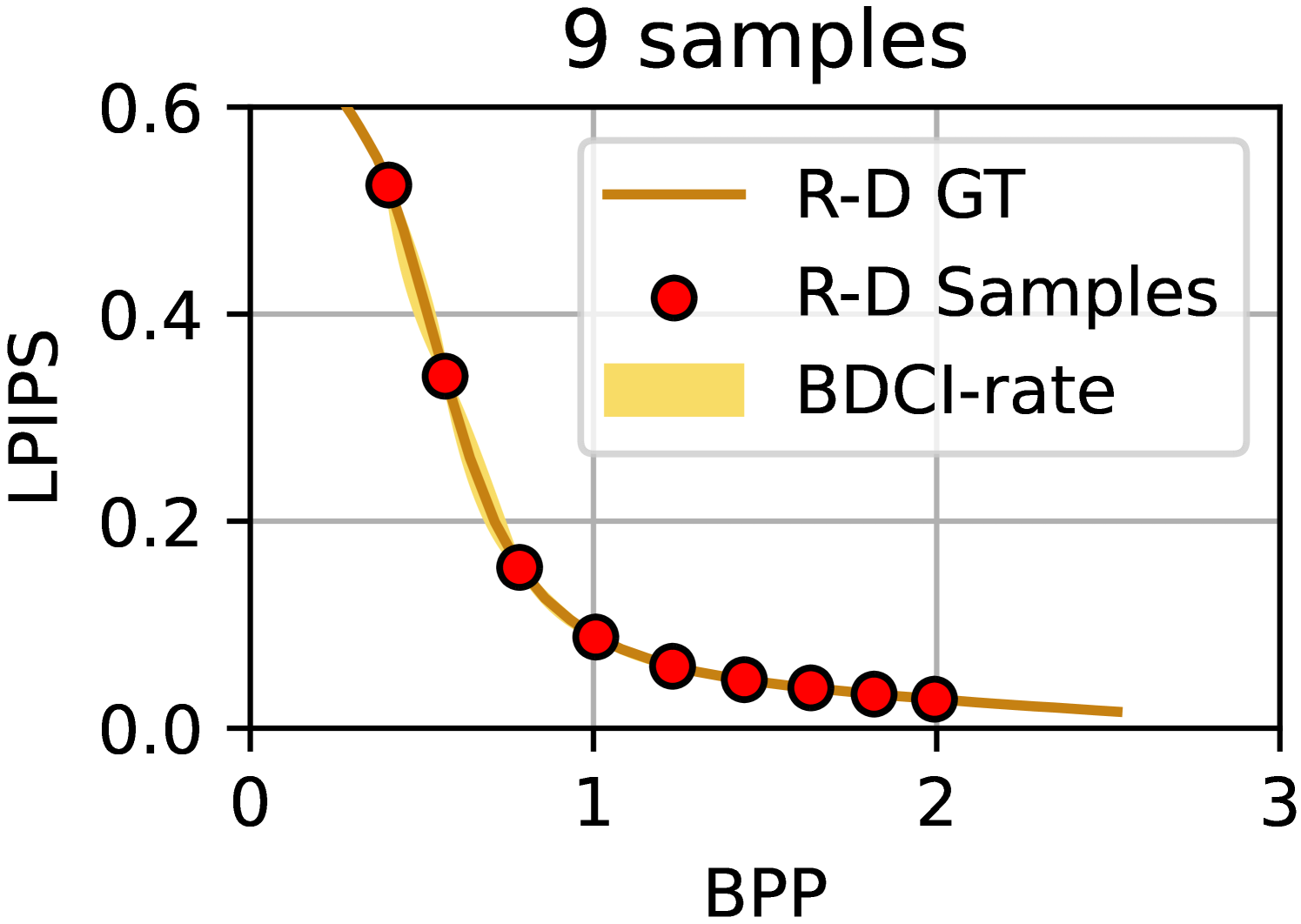

To verify that the BDCI decreases as the number of sample points increases, we tested the results using the BDCI metric on a variety of encoders. Figure 5 depicts the effect of varying the number of sample points on BDCI accuracy. In the default case of 4 sample points, the width of the BDCI is relatively large, indicating that the BD measurement is less reliable at this point. As the number of sample points increases, the width of the BDCI gradually narrows and eventually converges. This is a good indication that the BDCI provides a reasonable response as the R-D samples tend to become more accurate.

We visualize the results of the BDCI calculations in Figure 6, using the same R-D samples as in Figure 1(a). The BD-BR of the upper and lower boundaries of the yellow region in the figure is equal to the upper and lower boundaries of the BDCI. Please note that this region does not actually exist during the computation and is drawn manually for the purpose of visualizing the BDCI. The results clearly demonstrate that BDCI’s calculations are reasonable and consistent with human intuition.

Furthermore, we employed the output from our model as a direct BD estimator and compared its performance against prevalent BD estimation methods using R-D sample points. The experimental outcomes are presented in Table 2, where the estimation bias is quantified using the Mean Square Error (MSE), representing the discrepancy between the actual BD values and the BD predictions based on the sample points. The experimental findings conclusively show that our proposed approach achieves superior prediction accuracy in most of the conditions, especially when calculating BD-BR.

| PSNR | MS-SSIM | LPIPS | RMSE | SSIM |

|

|

||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

|

|

|

|

|

|||||||||||||||||

| CSI | 0.560 | 1.027 | 199.884 | 0.772 | 73.641 | 0.627 | 0.647 | |||||||||||||||||

| 0.500 | 0.060 | 10.451 | 1.052 | 0.322 | 0.956 | 2.326 | ||||||||||||||||||

| PCHIP | 0.106 | 0.129 | 0.686 | 0.101 | 0.155 | 0.120 | 0.191 | |||||||||||||||||

| 0.245 | 0.032 | 6.671 | 0.365 | 0.284 | 0.372 | 0.902 | ||||||||||||||||||

| Akima | - | 0.160 | 3.870 | 0.167 | 0.496 | 0.169 | 0.229 | |||||||||||||||||

| 0.266 | 0.040 | 6.626 | 0.379 | 0.290 | 0.401 | 0.982 | ||||||||||||||||||

| Ours | 0.104 | 0.115 | 0.566 | 0.083 | 0.154 | 0.120 | 0.191 | |||||||||||||||||

| 0.224 | 0.054 | 6.772 | 0.413 | 0.273 | 0.353 | 1.022 | ||||||||||||||||||

We measured the running time of the algorithm, as presented in Table 3. The findings from experiments conducted with varying numbers of R-D sample points reveal that, despite integrating neural networks into our approach, the runtime for a single execution remains minimal on a single-core CPU.

| Number of R-D Samples | 4 | 6 | 8 |

|---|---|---|---|

| Runtime [ms] | 6.1 | 11.6 | 14.3 |

6 Suggestions & Conclusion

In this study, we identified a critical flaw in the existing BD estimation methodology and offer a novel deep learning-based solution. Our method not only achieves superior accuracy in estimating the posterior probability distribution of the BD integral but also provides reliable confidence interval estimates. The experimental findings suggest avenues for future research aimed at further minimizing estimation errors and enhancing the robustness of BD estimation outcomes.

Our study suggests that the BD comparison method may be flawed when the R-D sample size is limited, which could significantly affect the reliability of the results. To enhance the accuracy and reliability of BD comparisons, we recommend the following:

-

1.

Implement BDCI Metric. Use the methodology from this paper for codec comparisons, including BD estimates and BDCI intervals.

-

2.

Increase Sample Points. Use more sample points for BD metrics to enhance accuracy and reduce BDCI intervals.

-

3.

Use Dense Sample Points with Anchor Method. When using fast codecs as anchor, obtain a dense R-D anchor curve for better measurement accuracy.

7 References

References

- [1] G Bjøntegaard, “Calculation of average psnr differences between rd-curves,” ITU-T SG16 Q, vol. 6, 2001.

- [2] Christian Herglotz, Matthias Kränzler, Ruben Mons, and André Kaup, “Beyond bjøntegaard: Limits of video compression performance comparisons,” in 2022 IEEE International Conference on Image Processing (ICIP). IEEE, 2022, pp. 46–50.

- [3] Christian Herglotz, Hannah Och, Anna Meyer, Geetha Ramasubbu, Lena Eichermüller, Matthias Kränzler, Fabian Brand, Kristian Fischer, Dat Thanh Nguyen, Andy Regensky, and André Kaup, “The bjøntegaard bible why your way of comparing video codecs may be wrong,” IEEE Transactions on Image Processing, vol. 33, pp. 987–1001, 2024.

- [4] Eastman Kodak Company, “Kodak lossless true color image suite,” http://r0k.us/graphics/kodak, 1999.

- [5] George Toderici, Wenzhe Shi, et. al, “Workshop and challenge on learned image compression,” http://www.compression.cc, 2020.

- [6] Nicola Asuni and Andrea Giachetti, “Testimages: A large data archive for display and algorithm testing,” Journal of Graphics Tools, vol. 17, no. 4, pp. 113–125, 2013.

- [7] Zhou Wang, A.C. Bovik, H.R. Sheikh, and E.P. Simoncelli, “Image quality assessment: from error visibility to structural similarity,” IEEE Transactions on Image Processing, vol. 13, no. 4, pp. 600–612, 2004.

- [8] Z. Wang, E.P. Simoncelli, and A.C. Bovik, “Multiscale structural similarity for image quality assessment,” in The Thrity-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, vol. 2, pp. 1398–1402 Vol.2.

- [9] Karen Egiazarian, J. Astola, Vladimir Lukin, Federica Battisti, and Marco Carli, “A new full-reference quality metrics based on hvs,” 01 2006.

- [10] Nikolay Ponomarenko, Flavia Silvestri, Karen Egiazarian, Marco Carli, J. Astola, and Vladimir Lukin, “On between-coefficient contrast masking of DCT basis functions,” Proc of the 3rd Int Workshop on Video Processing and Quality Metrics for Consumer Electronics, 01 2007.

- [11] Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang, “The unreasonable effectiveness of deep features as a perceptual metric,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 586–595.

- [12] G.K. Wallace, “The JPEG still picture compression standard,” IEEE Transactions on Consumer Electronics, vol. 38, no. 1, pp. xviii–xxxiv, Feb. 1992.

- [13] Fabrice Bellard, “BPG image format,” https://bellard.org/bpg, 2015.

- [14] Guo-Hua Wang, Jiahao Li, Bin Li, and Yan Lu, “EVC: Towards real-time neural image compression with mask decay,” in International Conference on Learning Representations, 2023.

- [15] Z. Duan, M. Lu, J. Ma, Y. Huang, Z. Ma, and F. Zhu, “QARV: Quantization-aware resnet vae for lossy image compression,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 46, no. 01, pp. 436–450, jan 2024.

- [16] Jinming Liu, Heming Sun, and Jiro Katto, “Learned image compression with mixed transformer-cnn architectures,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 14388–14397.

- [17] Wei Jiang and Ronggang Wang, “MLIC++: linear complexity multi-reference entropy modeling for learned image compression,” arXiv preprint arXiv:2307.15421, 2023.