Rethinking the Capacity of Graph Neural Networks for Branching Strategy

Abstract.

Graph neural networks (GNNs) have been widely used to predict properties and heuristics of mixed-integer linear programs (MILPs) and hence accelerate MILP solvers. This paper investigates the capacity of GNNs to represent strong branching (SB), the most effective yet computationally expensive heuristic employed in the branch-and-bound algorithm. In the literature, the message-passing GNN (MP-GNN), as the simplest GNN structure, is frequently used as a fast approximation of SB, and we find that the SB scores of some MILPs cannot be represented by any MP-GNN. We precisely define a class of “MP-tractable” MILPs for which MP-GNNs can accurately approximate SB scores. In particular, we establish a universal approximation theorem: for any data distribution over the MP-tractable class, there always exists an MP-GNN that can approximate the SB score with arbitrarily high accuracy and arbitrarily high probability, which lays a theoretical foundation for the existing works on imitating SB with MP-GNNs. For MILPs without MP-tractability, unfortunately, a similar result is impossible, as illustrated by two MILP instances with different SB scores that cannot be distinguished by any MP-GNN, regardless of the number of parameters. Recognizing this, we explore another GNN structure called the second-order folklore GNN (2-FGNN) that overcomes this limitation, and the aforementioned universal approximation theorem can be extended to the entire MILP space using 2-FGNNs, regardless of MP-tractability. A small-scale numerical experiment is conducted to directly validate our theoretical findings.

1. Introduction

Mixed-integer linear programming (MILP) involves optimization problems with linear objectives and constraints, where some variables must be integers. These problems appear in various fields, from logistics and supply chain management to planning and scheduling, and are in general NP-hard. The branch-and-bound (BnB) algorithm [land1960automatic] is the core of a MILP solver. It works by repeatedly solving relaxed versions of the problem, called linear relaxations, which allow the integer variables to take on fractional values. If a relaxation’s solution satisfies the integer requirements, it is a valid solution to the original problem. Otherwise, the algorithm divides the problem into two subproblems and solves their relaxations. This process continues until it finds the best solution that meets all the constraints.

Branching is the process of dividing a linear relaxation into two subproblems. When branching, the solver selects a variable with a fractional value in the relaxation’s solution and creates two new subproblems. In one subproblem, the variable is forced to be less than or equal to the nearest integer below the fractional value. In the other, it is forced to be greater than or equal to the nearest integer above the fractional value. The branching variable choice is critical because it can impact the solver’s efficiency by orders of magnitude.
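The branching step described above can be sketched in a few lines. This is only an illustration: the function name `branch` and the representation of bounds as a list of `(lower, upper)` pairs are assumptions made for the example, not part of any solver's API.

```python
import math


def branch(bounds, j, x_j):
    """Split on variable j, whose LP-relaxation value x_j is fractional.

    bounds is a hypothetical layout: one (lower, upper) pair per variable.
    Returns the bound lists of the "down" and "up" subproblems.
    """
    lower, upper = bounds[j]
    down, up = list(bounds), list(bounds)
    down[j] = (lower, math.floor(x_j))  # down branch: x_j <= floor(x_j)
    up[j] = (math.ceil(x_j), upper)     # up branch:   x_j >= ceil(x_j)
    return down, up


down, up = branch([(0, 5), (0, 1)], j=0, x_j=2.6)
print(down)  # [(0, 2), (0, 1)]
print(up)    # [(3, 5), (0, 1)]
```

Only the bounds of the selected variable change; every other bound is inherited by both subproblems.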

A well-chosen branching variable can lead to a significant improvement in the lower bound, which is a quantity that can quickly prove that a subproblem and its further subdivisions are infeasible or not promising, thus reducing the total number of subproblems to explore. This means fewer linear relaxations to solve and faster convergence to the optimal solution. On the contrary, a poor choice may result in branches that do little to improve the bounds or reduce the solution space, thus leading to a large number of subproblems to be solved, significantly increasing the total solution time. The choice of which variable to branch on is a pivotal decision. This is where branching strategies, such as strong branching and learning to branch, come into play, evaluating the impact of different branching choices before making a decision.

Strong branching (SB) [applegate1995finding] is a sophisticated strategy to select the most promising branches to explore. In SB, before actually performing a branch, the solver tentatively branches on several variables and calculates the potential impact of each branch on the objective function. This “look-ahead” strategy evaluates the quality of branching choices by solving linear relaxations of the subproblems created by the branching. The variable that leads to the most significant improvement in the objective function is selected for the actual branching. Usually recognized as the most effective branching strategy, SB often results in a significantly lower number of subproblems to resolve during the branch-and-bound (BnB) process compared to other methods [gamrath2018measuring]. As such, SB is frequently utilized directly or as a fundamental component in cutting-edge solvers.

While SB can significantly reduce the size of the BnB search space, it comes with high computational cost: evaluating multiple potential branches at each decision point requires solving many LPs. This leads to a trade-off between the time spent on SB and the overall time saved due to a smaller search space. In practice, MILP solvers use heuristics to limit the use of SB to where it is most beneficial.

Learning to branch (L2B) introduces a new approach by incorporating machine learning (ML) to develop branching strategies, offering new solutions to address this trade-off. This line of research begins with imitation learning [khalil2016learning, alvarez2017machine, balcan2018learning, gasse2019exact, gupta2020hybrid, zarpellon2021parameterizing, gupta2022lookback, lin2022learning, yang2022learning], where models, including SVMs, decision trees, and neural networks, are trained to mimic SB outcomes based on the features of the underlying MILP. They aim to create a computationally efficient strategy that achieves the effectiveness of SB on specific datasets. Furthermore, in recent reinforcement learning approaches, mimicking SB continues to play a crucial role in initialization or regularization [qu2022improved, zhang2022deep].

While using a heuristic (an ML model) to approximate another heuristic (the SB procedure) may seem counterintuitive, it is important to recognize the potential benefits. The former can make branching decisions in significantly less time than the latter while aiming for comparable effectiveness. As MILPs become larger and more complex, the computational cost of SB grows at least cubically, whereas the cost of some ML models grows only quadratically, or even linearly, once they have been trained on a set of similar MILPs. Although SB can theoretically solve LP relaxations in parallel, the time required for different LPs may vary greatly, and there is a lack of GPU-friendly methods that can effectively utilize starting bases for warm starts. In contrast, ML models, particularly GNNs, are more amenable to efficient implementation on GPUs, making them a more practical choice for accelerating the branching variable selection process. Furthermore, additional problem-specific characteristics can be incorporated into the ML model, allowing it to make more informed branching decisions tailored to each problem instance.

Graph neural networks (GNNs) stand out as an effective class of ML models for L2B, surpassing other models like SVMs and MLPs, due to their excellent scalability and their permutation-invariant/equivariant property. To utilize a GNN on a MILP, one first conceptualizes the MILP as a graph; the GNN is then applied to that graph and returns a branching decision. This approach [gasse2019exact, ding2020accelerating] has gained prominence not only in L2B but also in various other MILP-related learning tasks [nair2020solving, wu2021learning, scavuzzo2022learning, paulus2022learning, chi2022deep, liu2022learning, khalil2022mip, labassi2022learning, falkner2022learning, song2022learning, hosny2023automatic, wang2023learning, turner2023adaptive, ye2023gnn, marty2023learning]. More details are provided in Section 2.

Despite the widespread use of GNNs on MILPs, a theoretical understanding remains largely elusive. A vital concept for any ML model, including GNNs, is its capacity or expressive power [sato2020survey, Li2022, jegelka2022theory], which in our context is its ability to accurately approximate the mapping from MILPs to their SB results. Specifically, this paper aims to answer the following question:

(1.1) Given a distribution of MILPs, is there a GNN model capable of mapping each MILP problem to its strong branching result with a specified level of precision?

Related works and our contributions

While the capacity of GNNs for general graph tasks, such as node and link prediction or function approximation on graphs, has been extensively studied [xu2019powerful, maron2019universality, chen2019equivalence, keriven2019universal, sato2019approximation, Loukas2020What, azizian2020expressive, geerts2022expressiveness, zhang2023rethinking], their capacity to approximate SB remains largely unexplored. The closest studies [chen2022representing-lp, chen2022representing-milp] have explored GNNs’ ability to represent properties of linear programs (LPs) and MILPs, such as feasibility, boundedness, or optimal solutions, but have not specifically focused on branching strategies. Recognizing this gap, our paper makes the following contributions:

• In the context of L2B using GNNs, we first focus on the most widely used type: message-passing GNNs (MP-GNNs). Our study reveals that MP-GNNs can reliably predict SB results, but only for a specific class of MILPs that we introduce as message-passing-tractable (MP-tractable). We prove that for any distribution of MP-tractable MILPs, there exists an MP-GNN capable of accurately predicting their SB results. This finding establishes a theoretical basis for the widespread use of MP-GNNs to approximate SB results in current research.

• Through a counter-example, we demonstrate that MP-GNNs are incapable of predicting SB results beyond the class of MP-tractable MILPs. The counter-example consists of two MILPs with distinct SB results to which all MP-GNNs, however, yield identical branching predictions.

• For general MILPs, we explore the capabilities of second-order folklore GNNs (2-FGNNs), a type of higher-order GNN with enhanced expressive power. Our results show that 2-FGNNs can reliably answer question (1.1) positively, effectively replicating SB results across any distribution of MILP problems, surpassing the capabilities of standard MP-GNNs.

Overall, as a series of works have empirically shown that learning an MP-GNN as a fast approximation of SB significantly benefits the performance of an MILP solver on specific data sets [khalil2016learning, alvarez2017machine, balcan2018learning, gasse2019exact, gupta2020hybrid, zarpellon2021parameterizing, gupta2022lookback, lin2022learning, yang2022learning], our goal is to determine whether there is room, in theory, to further understand and improve the GNNs’ performance on this task.

2. Preliminaries and problem setup

We consider the MILP defined in its general form as follows:

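$$\min_{x \in \mathbb{R}^n} \; c^\top x, \quad \text{s.t.} \;\; Ax \circ b, \;\; l \le x \le u, \;\; x_j \in \mathbb{Z} \;\; \forall\, j \in I, \tag{2.1}$$

where $A \in \mathbb{R}^{m \times n}$, $b \in \mathbb{R}^m$, $c \in \mathbb{R}^n$, $\circ \in \{\le, =, \ge\}^m$ is the type of constraints, $l \in (\mathbb{R} \cup \{-\infty\})^n$ and $u \in (\mathbb{R} \cup \{+\infty\})^n$ are the lower bounds and upper bounds of the variable $x$, and $I \subset \{1, 2, \dots, n\}$ identifies which variables are constrained to be integers.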

Graph Representation of MILP

Here we present an approach to represent a MILP as a bipartite graph, termed the MILP-graph. This conceptualization was initially proposed by [gasse2019exact] and has quickly become a prevalent model in ML for MILP-related tasks. The MILP-graph is defined as a tuple $G = (V, W, A, F_V, F_W)$, where the components are specified as follows: $V = \{1, \dots, m\}$ and $W = \{1, \dots, n\}$ are sets of nodes representing the constraints and variables, respectively. An edge connects node $i \in V$ to node $j \in W$ if the corresponding entry $A_{ij}$ in the coefficient matrix of (2.1) is non-zero, with $A_{ij}$ serving as the edge weight. $F_V$ are features/attributes of constraints, with features $v_i = (b_i, \circ_i)$ (the right-hand side and the constraint type) attached to node $i \in V$. $F_W$ are features/attributes of variables, with features $w_j = (c_j, l_j, u_j, \delta_I(j))$ (the objective coefficient, the bounds, and an integrality flag) attached to node $j \in W$, where $\delta_I(j) \in \{0, 1\}$ indicates whether the variable $x_j$ is integer-constrained.

We define $\mathcal{N}_W(i) = \{j \in W : A_{ij} \neq 0\}$ as the neighbors of $i \in V$ and similarly define $\mathcal{N}_V(j) = \{i \in V : A_{ij} \neq 0\}$. This graphical representation completely describes a MILP’s information, allowing us to interchangeably refer to a MILP and its graph throughout this paper. An illustrative example is presented in Figure 1. We also introduce a space of MILP-graphs:

Definition 2.1 (Space of MILP-graphs).

We use to denote the collection of all MILP-graphs induced from MILPs of the form (2.1) with variables and constraints.[1]

[1] Rigorously, the space is equipped with product topology, where all Euclidean spaces have standard Euclidean topologies, discrete spaces , , , and have the discrete topologies, and all unions are disjoint unions.
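As a rough illustration of the graph representation, the sketch below assembles a MILP-graph from the data of a small MILP using plain Python containers. The function name `milp_to_graph`, the dictionary keys, and the exact feature layout (right-hand side and type per constraint; objective coefficient, bounds, and an integrality flag per variable) are assumptions chosen for the example.

```python
def milp_to_graph(A, b, types, c, lower, upper, integer_vars):
    """Build a bipartite MILP-graph (hypothetical layout) from MILP data.

    A: m x n coefficient matrix (list of rows); types: one of "<=", "=", ">="
    per constraint; integer_vars: set of indices of integer variables.
    """
    m, n = len(A), len(c)
    # One weighted edge per non-zero coefficient A[i][j].
    edges = {(i, j): A[i][j] for i in range(m) for j in range(n) if A[i][j] != 0}
    constraint_features = [(b[i], types[i]) for i in range(m)]
    variable_features = [
        (c[j], lower[j], upper[j], int(j in integer_vars)) for j in range(n)
    ]
    # Neighbor lists in both directions of the bipartite graph.
    nbrs_of_constraint = [[j for j in range(n) if A[i][j] != 0] for i in range(m)]
    nbrs_of_variable = [[i for i in range(m) if A[i][j] != 0] for j in range(n)]
    return {
        "edges": edges,
        "constraint_features": constraint_features,
        "variable_features": variable_features,
        "nbrs_of_constraint": nbrs_of_constraint,
        "nbrs_of_variable": nbrs_of_variable,
    }


# min x0 + x1 + x2  s.t.  x0 + x1 >= 1,  x1 + 2*x2 <= 3,  0 <= x <= 1,  x0 integer
graph = milp_to_graph(
    A=[[1, 1, 0], [0, 1, 2]],
    b=[1, 3],
    types=[">=", "<="],
    c=[1, 1, 1],
    lower=[0, 0, 0],
    upper=[1, 1, 1],
    integer_vars={0},
)
print(graph["edges"])  # {(0, 0): 1, (0, 1): 1, (1, 1): 1, (1, 2): 2}
```

Because every coefficient, bound, and type appears either as an edge weight or as a node feature, the original MILP can be read back from this structure.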

Message-passing graph neural networks (MP-GNNs) are a class of GNNs that operate on graph-structured data, by passing messages between nodes in a graph to aggregate information from their local neighborhoods. In our context, the input is an aforementioned MILP-graph , and each node in is associated with a real-number output. We use the standard MP-GNNs for MILPs in the literature [gasse2019exact, chen2022representing-milp].

Specifically, the initial layer assigns features for each node as

• for each constraint , and for each variable .

Then message-passing layers update the features via

• for each constraint , and

• for each variable .

Finally, the output layer produces a real-number output for each node :

-

•

.
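The layer structure above (an initial embedding, several rounds of message passing, then a per-variable readout) can be sketched as follows. This is a minimal sketch under stated assumptions, not the exact parameterization used in the paper: features are scalars, neighbor messages are aggregated by summation, each message is the edge weight times a function of the neighbor's feature (so it vanishes when the weight is zero), both node types are updated from the previous layer's features, and the readout is applied to each variable's final feature. The names `mp_gnn_forward`, `p0`, `q0`, `p`, `q`, `f`, `g`, and `r` are placeholders for the learnable maps.

```python
import math


def mp_gnn_forward(A, v_feats, w_feats, layers, p0, q0, r):
    """Toy message-passing GNN on the bipartite graph of matrix A.

    layers is a list of (p, q, f, g) tuples of plain Python callables that
    stand in for learnable functions (an assumption of this sketch).
    """
    m, n = len(A), len(A[0])
    s = [p0(v) for v in v_feats]  # initial constraint features
    t = [q0(w) for w in w_feats]  # initial variable features
    for p, q, f, g in layers:
        # Sum aggregation over neighbors; messages are weighted by A[i][j].
        s_new = [
            p(s[i], sum(A[i][j] * f(t[j]) for j in range(n) if A[i][j] != 0))
            for i in range(m)
        ]
        t_new = [
            q(t[j], sum(A[i][j] * g(s[i]) for i in range(m) if A[i][j] != 0))
            for j in range(n)
        ]
        s, t = s_new, t_new
    return [r(t_j) for t_j in t]  # one real number per variable


layer = (
    lambda s, agg: math.tanh(s + agg),  # p
    lambda t, agg: math.tanh(t + agg),  # q
    lambda t: 0.5 * t,                  # f
    lambda s: 0.5 * s,                  # g
)
y = mp_gnn_forward(
    A=[[1, 1, 0], [0, 1, 1]],
    v_feats=[(1.0,), (1.0,)],
    w_feats=[(1.0,), (1.0,), (1.0,)],
    layers=[layer, layer],
    p0=lambda v: v[0],
    q0=lambda w: w[0],
    r=lambda t: t * t,
)
print(y)
```

In this toy instance the first and third variables play symmetric roles, so they receive the same output whatever functions are plugged in, while the middle variable generally does not.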

In practice, functions are learnable and usually parameterized with multilayer perceptrons (MLPs). In our theoretical analysis, we assume for simplicity that those functions are continuous on given domains. The space of MP-GNNs is introduced as follows.

Definition 2.2 (Space of MP-GNNs).

We use to denote the collection of all MP-GNNs constructed as above with being continuous with and .[2]

[2] We require yield when the edge weight is to avoid the discontinuity of functions in .

Overall, any MP-GNN maps a MILP-graph to a -dim vector: .

Second-order folklore graph neural networks (2-FGNNs) are an extension of MP-GNNs designed to overcome some of the capacity limitations. It is proved in [xu2019powerful] that the expressive power of MP-GNNs can be measured by the Weisfeiler-Lehman test (WL test [weisfeiler1968reduction]). To enhance the ability to identify more complex graph patterns, [morris2019weisfeiler] developed high-order GNNs, inspired by high-order WL tests [cai1992optimal]. Since then, there has been a growing literature on high-order GNNs and other variants, including high-order folklore GNNs [maron2019provably, geerts2020expressive, geerts2020walk, azizian2020expressive, zhao2022practical, geerts2022expressiveness]. Instead of operating on individual nodes of the given graph, 2-FGNNs operate on pairs of nodes (regardless of whether two nodes in the pair are neighbors or not) and the neighbors of those pairs. We say two node pairs are neighbors if they share a common node. Let be the input graph. The initial layer performs:

• for each constraint and each variable , and

• for variables ,

where if and otherwise. For internal layers , compute

• for all , and

• for all .

The final layer produces the output for each node :

-

•

.

Similar to MP-GNNs, the functions within 2-FGNNs, including , are also learnable and typically parameterized with MLPs. The space of 2-FGNNs is defined with:

Definition 2.3.

We use to denote the set of all 2-FGNNs with continuous .

Any 2-FGNN, , maps a MILP-graph to a -dim vector: . While MP-GNNs and 2-FGNNs share the same input-output structure, their internal structures differ, leading to distinct expressive powers.

3. Imitating strong branching by GNNs

In this section, we present some observations and mathematical concepts underlying the imitation of strong branching by GNNs. This line of research, which aims to replicate SB strategies through GNNs, has shown promising empirical results across a spectrum of studies [gasse2019exact, gupta2020hybrid, zarpellon2021parameterizing, gupta2022lookback, lin2022learning, yang2022learning, scavuzzo2022learning], yet it still lacks theoretical foundations. Its motivation stems from two key observations introduced earlier in Section 1, which we elaborate on here in detail.

Observation I

SB is notably effective in reducing the size of the BnB search space. This size is measured by the size of the BnB tree. Here, a “tree” refers to a hierarchical structure of “nodes”, each representing a decision point or a subdivision of the problem. The tree’s size corresponds to the number of these nodes. For instance, consider the instance “neos-3761878-oglio” from MIPLIB [gleixner2021miplib]. When solved using SCIP [BolusaniEtal2024OO, BolusaniEtal2024ZR] under standard configurations, the BnB tree size is , and it takes seconds to attain optimality. However, disabling SB, along with all branching rules dependent on SB, results in an increased BnB tree size to and an increased runtime to seconds.

Observation II

SB itself is computationally expensive. In the above experiment under standard settings, SB consumes an average of of the total runtime, out of seconds in total.

Therefore, there is a clear need to approximate SB with efficient ML models. Ideally, if we can substantially reduce the SB calculation time from seconds to a negligible duration while maintaining its effectiveness, the remaining runtime of seconds would become significantly more efficient.

To move forward, we introduce some basic concepts related to SB.

Concepts for SB

SB begins by identifying candidate variables for branching, typically those with non-integer values in the solution to the linear relaxation but which are required to be integers. Each candidate is then assigned a SB score, a non-negative real number determined by creating two linear relaxations and calculating the objective improvement. A higher SB score indicates the variable has a higher priority to be chosen for branching. Variables that do not qualify as branching candidates are assigned a zero score. Compiling these scores for each variable results in an -dimensional SB score vector, denoted as .

Consequently, the task of approximating SB with GNNs can be stated mathematically: finding an or such that . Formally, it is:

Formal statement of Problem (1.1): Given a distribution of , is there or such that is smaller than some error tolerance with high probability?

To provide clarity, we present a formal definition of SB scores:

Definition 3.1 (LP relaxation with a single bound change).

Pick a . For any , , and , we denote by the following LP problem obtained by changing the lower/upper bound of in the LP relaxation of (2.1):

Definition 3.2 (Strong branching scores).

Let be a MILP-graph associated with the problem (2.1) whose LP relaxation is feasible and bounded. Denote as the optimal objective value of the LP relaxation of and denote as the optimal solution with the smallest $\ell_2$-norm. The SB score for variable is defined via

where and are the optimal objective values of and respectively, with being the largest integer no greater than and being the smallest integer no less than , for .
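To make the definition concrete, the sketch below computes product SB scores from objective values that are assumed to be already available: the optimal value of the LP relaxation and, for each candidate, the optimal values of the two LPs obtained by branching down and up. Solving the LPs is outside the scope of the sketch, all values are assumed finite, and the names `is_branching_candidate`, `product_sb_scores`, and `child_objectives` are hypothetical.

```python
def is_branching_candidate(x_j, is_integer, tol=1e-9):
    """A variable qualifies if it must be integral but its LP value is fractional."""
    return is_integer and abs(x_j - round(x_j)) > tol


def product_sb_scores(x_lp, integer_mask, f_lp, child_objectives):
    """Product SB score per variable; non-candidates get a zero score.

    child_objectives maps a candidate index j to (f_down, f_up), the optimal
    values of the LPs with x_j <= floor(x_j) and x_j >= ceil(x_j) respectively.
    """
    scores = []
    for j, x_j in enumerate(x_lp):
        if not is_branching_candidate(x_j, integer_mask[j]):
            scores.append(0.0)
            continue
        f_down, f_up = child_objectives[j]
        # Product of the objective changes of the down and up branches.
        scores.append((f_down - f_lp) * (f_up - f_lp))
    return scores


scores = product_sb_scores(
    x_lp=[0.5, 1.0, 2.25],
    integer_mask=[True, True, False],
    f_lp=10.0,
    child_objectives={0: (11.0, 12.5)},
)
print(scores)  # [2.5, 0.0, 0.0]
```

Only the first variable is a candidate here: the second already takes an integer value and the third is continuous, so both receive a zero score.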

Remark: LP solution with the smallest $\ell_2$-norm

We only define the SB score for MILP problems with feasible and bounded LP relaxations; otherwise the optimal solution does not exist. If the LP relaxation of admits multiple optimal solutions, then the strong branching score depends on the choice of the particular optimal solution. To guarantee that the SB score is uniquely defined, in Definition 3.2, we use the optimal solution with the smallest $\ell_2$-norm, which is unique.

Remark: SB at leaf nodes

While the strong branching score discussed here primarily pertains to root SB, it is equally relevant to SB at leaf nodes within the BnB framework. By interpreting the MILP-graph in Definition 3.2 as representing the subproblems encountered during the BnB process, we can extend our findings to strong branching decisions at any point in the BnB tree. Here, root SB refers to the initial branching decisions made at the root of the BnB tree, while leaf nodes represent subsequent branching points deeper in the tree, where similar SB strategies can be applied.

Remark: Other types of SB scores

Although this paper primarily focuses on the product SB scores (where the SB score is defined as the product of objective value changes when branching up and down), our analysis can extend to other forms of SB scores in [dey2024theoretical]. (Refer to Appendix D.1)

4. Main results

4.1. MP-GNNs can represent SB for MP-tractable MILPs

In this subsection, we define a class of MILPs, named message-passing-tractable (MP-tractable) MILPs, and then show that MP-GNNs can represent SB within this class.

To define MP-tractability, we first present the Weisfeiler-Lehman (WL) test [weisfeiler1968reduction], a well-known criterion for assessing the expressive power of MP-GNNs [xu2019powerful]. The WL test in the context of MILP-graphs is stated in Algorithm 1. It follows exactly the same updating rule as the MP-GNN, differing only in the local updates performed via hash functions.

The WL test can be understood as a color refinement algorithm. In particular, each vertex in is initially assigned a color or according to its initial feature or . Then the vertex colors and are iteratively refined via aggregation of neighbors’ information and corresponding edge weights. If there is no collision of hash functions[3], then two vertices are of the same color at some iteration if and only if at the previous iteration, they have the same color and the same multiset of neighbors’ information and corresponding edge weights. Such a color refinement process is illustrated by an example shown in Figure 2.

[3] Here, “no collision of a hash function” indicates that the hash function doesn’t map two distinct inputs to the same output during the WL test on a specific instance. Another stronger assumption, commonly used in WL test analysis [jegelka2022theory], assumes that all hash functions are injective.
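The refinement rule just described can be sketched without hash functions by relabeling each distinct (old color, multiset of neighbor colors and edge weights) signature with a fresh integer, which rules out collisions by construction. This is an illustrative variant rather than a transcription of Algorithm 1; the names `relabel` and `wl_refine` and the stopping rule (stop once the number of colors no longer grows) are choices made for the sketch.

```python
def relabel(keys):
    """Map each distinct key to a small integer in order of first appearance."""
    table = {}
    return [table.setdefault(k, len(table)) for k in keys]


def wl_refine(A, v_feats, w_feats):
    """Color refinement on the bipartite graph of A until the partition is stable.

    v_feats / w_feats are hashable initial features of constraints / variables.
    Returns (constraint_colors, variable_colors).
    """
    m, n = len(A), len(A[0])
    s, t = relabel(v_feats), relabel(w_feats)
    while True:
        # Signature: own color plus the sorted multiset of (weight, neighbor color).
        s_keys = [
            (s[i], tuple(sorted((A[i][j], t[j]) for j in range(n) if A[i][j] != 0)))
            for i in range(m)
        ]
        t_keys = [
            (t[j], tuple(sorted((A[i][j], s[i]) for i in range(m) if A[i][j] != 0)))
            for j in range(n)
        ]
        s_new, t_new = relabel(s_keys), relabel(t_keys)
        # Each round refines the previous partition, so an unchanged number of
        # colors means the partition itself is unchanged.
        if len(set(s_new)) == len(set(s)) and len(set(t_new)) == len(set(t)):
            return s_new, t_new
        s, t = s_new, t_new


# Hypothetical instance: two constraints x0 + x1 >= 1 and x1 + x2 >= 1 with
# identical features on every constraint and on every variable.
colors = wl_refine(
    A=[[1, 1, 0], [0, 1, 1]],
    v_feats=[(1, ">="), (1, ">=")],
    w_feats=[(1, 0, 1, 1)] * 3,
)
print(colors)  # ([0, 0], [0, 1, 0])
```

The two outer variables end up in one class and the middle variable in another, while both constraints share a color.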

One can also view a vertex coloring as a partition, i.e., all vertices are partitioned into several classes such that two vertices are in the same class if and only if they are of the same color. After each round of Algorithm 1, the partition always becomes finer if no collision happens, though it may not be strictly finer. The following theorem suggests that this partition eventually stabilizes or converges, with the final limit uniquely determined by the graph , independent of the hash functions selected.

Theorem 4.1 ([chen2022representing-lp]*Theorem A.2).

For any , the vertex partition induced by Algorithm 1 (if no collision) will converge within iterations to a partition , where is a partition of and is a partition of , and that partition is uniquely determined by the input graph .

With the concepts of color refinement and partition, we can introduce the core concept of this paper:

Definition 4.2 (Message-passing-tractability).

For , let be the partition as in Theorem 4.1. We say that is message-passing-tractable (MP-tractable) if for any and , all entries of the submatrix are the same. We use to denote the subset of all MILP-graphs in that are MP-tractable.
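Given a stable coloring, the condition in Definition 4.2 amounts to checking that every submatrix indexed by one constraint class and one variable class is constant. The sketch below assumes the stable colors have already been computed by a WL run and are passed in as integer lists; the function name `is_mp_tractable` and the two small instances are hypothetical.

```python
def is_mp_tractable(A, constraint_colors, variable_colors):
    """Check that each (constraint class, variable class) block of A is constant."""
    block_value = {}
    for i, row in enumerate(A):
        for j, a_ij in enumerate(row):
            key = (constraint_colors[i], variable_colors[j])
            # Remember the first entry seen in this block and compare the rest.
            if block_value.setdefault(key, a_ij) != a_ij:
                return False
    return True


# Stable WL colors for each matrix, assuming identical initial features on all
# constraints and on all variables (worked out by hand for this sketch).
tractable = is_mp_tractable(
    A=[[1, 1, 0], [0, 0, 1]],
    constraint_colors=[0, 1],
    variable_colors=[0, 0, 1],
)
intractable = is_mp_tractable(
    A=[[1, 1, 0], [0, 1, 1]],
    constraint_colors=[0, 0],
    variable_colors=[0, 1, 0],
)
print(tractable, intractable)  # True False
```

In the second instance both constraints share a color and the two outer variables share a color, yet the corresponding block contains both 1 and 0, so the check fails.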

To illustrate MP-tractability, consider the MILP instance shown in Figure 2. After enough rounds of the WL test, the partition stabilizes to and . According to Definition 4.2, one must examine the following submatrices to determine whether the MILP is MP-tractable:

All elements within each submatrix are identical. Hence, this MILP is indeed MP-tractable. To rigorously state our result, we require the following assumption of the MILP data distribution.

Assumption 4.3.

is a Borel regular probability measure on and .

Borel regularity is a “minimal” assumption that is actually satisfied by almost all practically used data distributions such as normal distributions, discrete distributions, etc. Let us also comment on the other assumption . In Definition 3.2, the linear relaxation of is feasible and bounded, which implies . However, it is possible for a linear program that is initially bounded and feasible to become infeasible upon adjusting a single variable’s bounds, potentially resulting in or and leading to an infinite SB score: . Although we ignore such a case by assuming , it is straightforward to extend all our results by simply representing as considering as a non-negative real number, thus avoiding any collisions in definitions.

Based on the above assumptions, as well as an extra assumption: is message-passing tractable with probability one, we can show the existence of an MP-GNN capable of accurately mapping a MILP-graph to its corresponding SB score, with an arbitrarily high degree of precision and probability. The formal theorem is stated as follows.

Theorem 4.4.

Let be any probability distribution over that satisfies Assumption 4.3 and . Then for any , there exists a GNN such that

The proof of Theorem 4.4 is deferred to Appendix A, with key ideas outlined here. First, we show that if Algorithm 1 produces identical results for two MP-tractable MILPs, they must share the same SB score. That is, if two MP-tractable MILPs have different SB scores, the WL test (or equivalently MP-GNNs) can capture this distinction. Building on this result, along with a generalized version of the Stone-Weierstrass theorem and Luzin’s theorem, we reach the final conclusion.

Let us compare our findings with [chen2022representing-milp] that establishes the existence of an MP-GNN capable of directly mapping to one of its optimal solutions, under the assumption that must be unfoldable. Unfoldability means that, after enough rounds of the WL test, each node receives a distinct color assignment. Essentially, it assumes that the WL test can differentiate between all nodes in , and the elements within the corresponding partition have cardinality one: and for all and . Consequently, any unfoldable MILP must be MP-tractable because the submatrices under the partition of an unfoldable MILP must be and obviously satisfy the condition in Definition 4.2. However, the reverse assertion is not true: The example in Figure 2 serves as a case in point—it is MP-tractable but not unfoldable. Therefore, unfoldability is a stronger assumption than MP-tractability. Our Theorem 4.4 demonstrates that, to illustrate the expressive power of MP-GNNs in approximating SB, MP-tractability suffices; we do not need to make assumptions as strong as those required when considering MP-GNN for approximating the optimal solution.

4.2. MP-GNNs cannot universally represent SB beyond MP-tractability

Our next main result is that MP-GNNs do not have sufficient capacity to represent SB scores on the entire MILP space without the assumption of MP-tractability, stated as follows.

Theorem 4.5.

There exist two MILP problems with different SB scores, such that any MP-GNN has the same output on them, regardless of the number of parameters.

There are infinitely many pairs of examples proving Theorem 4.5, and we show two simple examples:

| (4.1) |

| (4.2) |

We will prove in Appendix B that these two MILP instances have different SB scores, but they cannot be distinguished by any MP-GNN in the sense that for any , inputs (4.1) and (4.2) lead to the same output. Therefore, it is impossible to train an MP-GNN to approximate the SB score meeting a required level of accuracy with high probability, independent of the complexity of the MP-GNN. Any MP-GNN that accurately predicts one MILP’s SB score will necessarily fail on the other. We also remark that our analysis for (4.1) and (4.2) can be generalized easily to any aggregation mechanism of neighbors’ information when constructing the MP-GNNs, not limited to the sum aggregation as in Section 2.

The MILP instances on which MP-GNNs fail to approximate SB scores, (4.1) and (4.2), are not MP-tractable. It can be verified that for both (4.1) and (4.2), the partition as in Theorem 4.1 is given by with and with , i.e., all vertices in form a class and all vertices in form the other class. Then the matrices and are just and , the coefficient matrices in (4.1) and (4.2), and have both and as entries, which does not satisfy Definition 4.2.
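The kind of indistinguishability behind Theorem 4.5 can be reproduced on a small hypothetical pair of constraint structures, which are not claimed to be the instances (4.1) and (4.2): six variables covered by pairwise constraints arranged either as one 6-cycle or as two triangles, with identical features on all constraints and on all variables. The sketch runs collision-free color refinement on the disjoint union of the two graphs and compares the color histograms of the two halves. It only checks WL indistinguishability and says nothing about SB scores; `relabel`, `refine`, `pair_constraints`, and `block_diag` are names invented here.

```python
def relabel(keys):
    """Map each distinct key to a small integer in order of first appearance."""
    table = {}
    return [table.setdefault(k, len(table)) for k in keys]


def refine(A):
    """Color refinement on the bipartite graph of A with uniform initial colors."""
    m, n = len(A), len(A[0])
    s, t = [0] * m, [0] * n
    while True:
        s_keys = [
            (s[i], tuple(sorted((A[i][j], t[j]) for j in range(n) if A[i][j] != 0)))
            for i in range(m)
        ]
        t_keys = [
            (t[j], tuple(sorted((A[i][j], s[i]) for i in range(m) if A[i][j] != 0)))
            for j in range(n)
        ]
        s_new, t_new = relabel(s_keys), relabel(t_keys)
        if len(set(s_new)) == len(set(s)) and len(set(t_new)) == len(set(t)):
            return s_new, t_new
        s, t = s_new, t_new


def pair_constraints(n_vars, pairs):
    """One row per pair (a, b), with coefficient 1 on x_a and x_b."""
    rows = []
    for a, b in pairs:
        row = [0] * n_vars
        row[a] = row[b] = 1
        rows.append(row)
    return rows


def block_diag(A, B):
    """Coefficient matrix of the disjoint union of two MILP-graphs."""
    return [row + [0] * len(B[0]) for row in A] + [[0] * len(A[0]) + row for row in B]


six_cycle = pair_constraints(6, [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0)])
two_triangles = pair_constraints(6, [(0, 1), (1, 2), (2, 0), (3, 4), (4, 5), (5, 3)])

s, t = refine(block_diag(six_cycle, two_triangles))
same_histograms = sorted(s[:6]) == sorted(s[6:]) and sorted(t[:6]) == sorted(t[6:])
print(same_histograms, len(set(t)))  # True 1
```

Every variable in either graph ends in the same color class, so the stable partition has a single variable class and a single constraint class, and the corresponding block of each coefficient matrix contains both 0 and 1. Neither hypothetical instance is MP-tractable, which mirrors the situation described above.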

Based on Theorem 4.5, we can directly derive the following corollary by considering a simple discrete uniform distribution on only two instances (4.1) and (4.2).

Corollary 4.6.

There exists a probability distribution over satisfying Assumption 4.3 and constants , such that for any MP-GNN , it holds that

This corollary indicates that the assumption of MP-tractability in Theorem 4.4 is not removable.

4.3. 2-FGNNs are capable of universally representing SB

Although the universal approximation of MP-GNNs for SB scores is conditioned on the MP-tractability, we find an unconditional positive result stating that when we increase the order of GNNs a bit, it is possible to represent SB scores of MILPs, regardless of the MP-tractability.

Theorem 4.7.

Let be any probability distribution over that satisfies Assumption 4.3. Then for any , there exists a GNN such that

The proof of Theorem 4.7 leverages the second-order folklore Weisfeiler-Lehman (2-FWL) test. We show that for any two MILPs, whether MP-tractable or not, identical 2-FWL results imply they share the same SB score, thus removing the need for MP-tractability. Details are provided in Appendix C.

Theorem 4.7 establishes the existence of a 2-FGNN that can approximate the SB scores of MILPs well with high probability. This is a fundamental result illustrating the possibility of training a GNN to predict branching strategies for MILPs that are not MP-tractable. In particular, for any probability distribution as in Corollary 4.6 on which MP-GNNs fail to predict the SB scores well, Theorem 4.7 confirms the capability of 2-FGNNs to work on it.

However, it’s worth noting that 2-FGNNs typically have higher computational costs, both during training and inference stages, compared to MP-GNNs. This computational burden comes from the fact that calculations of 2-FGNNs rely on pairs of nodes instead of nodes, as we discussed in Section 2. To mitigate such computational challenges, one could explore the use of sparse or local variants of high-order GNNs that enjoy cheaper information aggregation with strictly stronger separation power than GNNs associated with the original high-order WL test [morris2020weisfeiler].

4.4. Practical insights of our theoretical results

Theorem 4.4 and Corollary 4.6 indicate the significance of MP-tractability in practice. Before attempting to train an MP-GNN to imitate SB, practitioners can first verify whether the MILPs in their dataset satisfy MP-tractability. If the dataset contains a substantial number of MP-intractable instances, careful consideration of this approach is necessary, and 2-FGNNs may be more suitable according to Theorem 4.7. Notably, assessing MP-tractability relies solely on conducting the WL test (Algorithm 1). This algorithm is well-established in graph theory and benefits from abundant resources and repositories for implementation. Moreover, it operates with polynomial complexity (detailed below), which is reasonable compared to solving MILPs.

Complexity of verifying MP-tractability

To verify MP-tractability of a MILP, one requires at most color refinement iterations according to Theorem 4.1. The complexity of each iteration is bounded by the number of edges in the graph [shervashidze2011weisfeiler]. In our context, it is bounded by the number of nonzeros in matrix : . Therefore, the overall complexity is , which is linear in terms of and . In contrast, solving a MILP, or even calculating all of its SB scores, requires significantly higher complexity. To calculate the SB scores of each MILP, one needs to solve at most LPs. We denote the complexity of solving each LP as . Therefore, the overall complexity of calculating SB scores is . Note that, currently, there is still no strongly polynomial-time algorithm for LP; thus this complexity is significantly higher than that of verifying MP-tractability.

While verifying MP-tractability is polynomial in complexity, the complexity of GNNs is still not guaranteed. Theorems 4.4 and 4.7 address existence, not complexity. In other words, this paper answers the question of whether GNNs can represent the SB score. To explore how well GNNs can represent SB, further investigation is needed.

Frequency of MP-tractability

In practice, the occurrence of MP-tractable instances is highly dependent on the dataset. In both Examples 4.1 and 4.2 (both MP-intractable), all variables exhibit symmetry, as they are assigned the same color by the WL test, which fails to distinguish them. Conversely, in the 3-variable example in Figure 2 (MP-tractable), only two of the three variables, and , are symmetric. Generally, the frequency of MP-tractability depends on the level of symmetry in the data — higher levels of symmetry increase the risk of MP-intractability. This phenomenon is commonly seen in practical MILP datasets, such as MIPLIB 2017 [gleixner2021miplib]. According to [chen2022representing-milp], approximately one-quarter of examples show significant symmetry in over half of the variables.

5. Numerical results

We implement numerical experiments to validate our theoretical findings in Section 4.

Experimental settings

We train an MP-GNN and a 2-FGNN with , where we replace the functions and in the MP-GNN by and to guarantee that they take the value whenever . For both GNNs, are parameterized as linear transformations followed by a non-linear activation function; are parameterized as 3-layer multi-layer perceptrons (MLPs) with respective learnable parameters; and the output mapping is parameterized as a 2-layer MLP. All layers map their input to a 1024-dimensional vector and use the ReLU activation function. With denoting the set of all learnable parameters of a network, we train both MP-GNN and 2-FGNN to fit the SB scores of the MILP dataset , by minimizing with respect to , using Adam [kingma2014adam]. The networks and training scheme are implemented with Python and TensorFlow [abadi2016tensorflow]. The numerical experiments are conducted on a single NVIDIA Tesla V100 GPU for two datasets:

- We randomly generate MILP instances, with 6 constraints and 20 variables, that are MP-tractable with probability . SB scores are collected using SCIP [scip]. More details about instance generation are provided in Appendix E.

- •

Experimental results

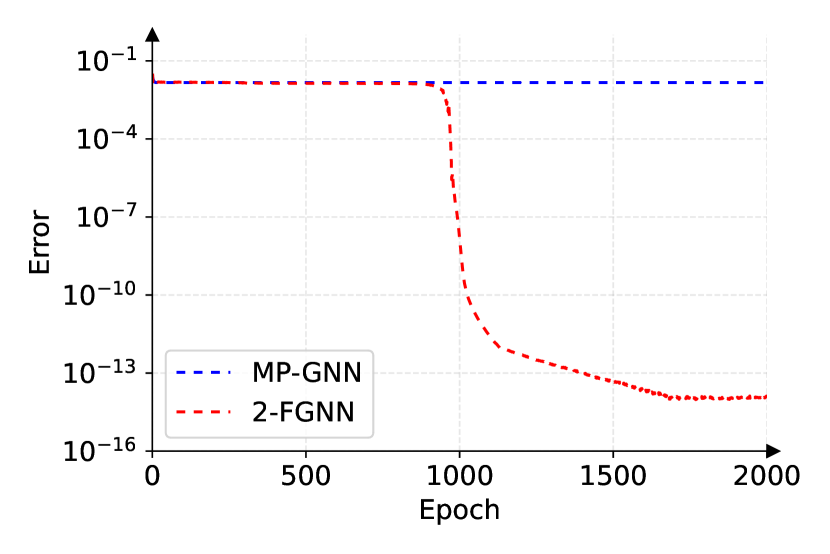

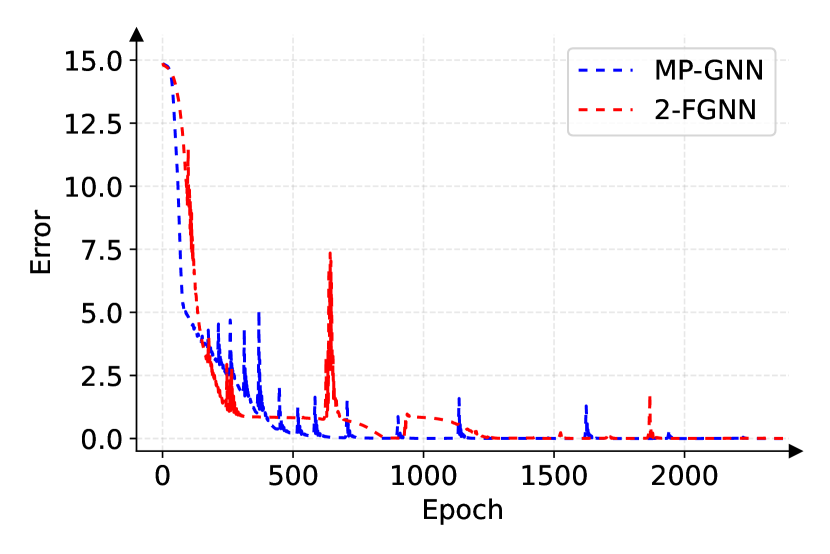

The numerical results are displayed in Figure 3. Figure 3(a) shows that both MP-GNN and 2-FGNN approximate the SB scores over the dataset of random MILP instances very well, which validates Theorem 4.4 and Theorem 4.7. As illustrated in Figure 3(b), 2-FGNN can perfectly fit the SB scores of (4.1) and (4.2) simultaneously while MP-GNN cannot, which is consistent with Theorem 4.5 and Theorem 4.7 and serves as a numerical verification of the capacity differences between MP-GNN and 2-FGNN for SB prediction. A detailed exploration of training and performance evaluation of GNNs is deferred to future work, so that this paper remains focused on the theoretical capabilities of GNNs.

Number of parameters

In Figure 3(b), the behavior of MP-GNN remains unchanged regardless of the number of parameters used, as guaranteed by Theorem 4.5. This error is intrinsically due to the structure of MP-intractable MILPs and cannot be reduced by adding parameters. Conversely, 2-FGNN can achieve near-zero loss with sufficient parameters, as guaranteed by Theorem 4.7 and confirmed by our numerical experiments. To further verify this, we tested 2-FGNN with embedding sizes from 64 to 2,048. All models reached near-zero errors, though epoch counts varied, as shown in Table 1. The results suggest that larger embeddings improve model capacity to fit counterexamples. The gains level off beyond an embedding size of 1,024 due to increased training complexity.

| Embedding size | 64 | 128 | 256 | 512 | 1,024 | 2,048 |
|---|---|---|---|---|---|---|
| Epochs to reach error | 16,570 | 5,414 | 2,736 | 1,442 | 980 | 1,126 |
| Epochs to reach error | 18,762 | 7,474 | 4,412 | 2,484 | 1,128 | 1,174 |

Larger instances

While our study primarily focuses on theory and numerous empirical studies have shown the effectiveness of GNNs in branching strategies (as noted in Section 1), we conducted experiments on larger instances to further assess the scalability of this approach. We trained an MP-GNN on 100 large-scale set covering problems, each with 1,000 variables and 2,000 constraints, generated following the methodology in [gasse2019exact]. The MP-GNN achieved a training loss of , calculated as the average norm of errors across all training instances.

6. Conclusion

In this work, we study the expressive power of two types of GNNs for representing SB scores. We find that MP-GNNs can accurately predict SB results for MILPs within a specific class termed “message-passing-tractable” (MP-tractable). However, their performance is limited outside this class. In contrast, 2-FGNNs, which update node-pair features instead of node features as in MP-GNNs, can universally approximate the SB scores on every MILP dataset or for every MILP distribution. These findings offer insights into the suitability of different GNN architectures for varying MILP datasets, particularly considering the ease of assessing MP-tractability. We also comment on limitations and future research topics. Although the universal approximation result is established for MP-GNNs and 2-FGNNs to represent SB scores, it remains unclear what complexity or number of parameters is required to achieve a given precision. It would thus be interesting and practically useful to derive quantitative results. In addition, exploring efficient training strategies for, or alternatives to, higher-order GNNs for MILP tasks is an interesting and significant future direction.

Acknowledgements

We would like to express our deepest gratitude to Prof. Pan Li from the School of Electrical and Computer Engineering at Georgia Institute of Technology (GaTech ECE), for insightful discussions on second-order folklore GNNs and their capacities for general graph tasks. We would also like to thank Haoyu Wang from GaTech ECE for helpful discussions during his internship at Alibaba US DAMO Academy.

References

Appendix A Proof of Theorem 4.4

This section presents the proof of Theorem 4.4. We define the separation power of WL test in Definition A.1 and prove that two MP-tractable MILP-graphs, or two vertices in a single MP-tractable graph, indistinguishable by WL test must share the same SB score in Theorem A.3. In other words, WL test has sufficient separation power to distinguish MP-tractable MILP graphs, or vertices in a single MP-tractable graph, with different SB scores.

Before stating the major result, we first introduce some definitions and useful theorems.

Definition A.1.

Let and let and be the colors generated by the WL test (Algorithm 1) for and . We say if and holds for any and any hash functions.

Theorem A.2 ([chen2022representing-lp, Theorem A.2]).

The partition defined in Theorem 4.1 satisfies:

-

(a)

, ,

-

(b)

, ,

-

(c)

, ,

-

(d)

, , ,

where denotes the multiset considering both the elements and the multiplicities.

In Theorem A.2, conditions (a) and (b) mean vertices in the same class share the same features, while conditions (c) and (d) state that vertices in the same class interact with another class with the same multiset of weights. In other words, for any and , different rows/columns of the submatrix provide the same multiset of entries.
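The multiset reading of conditions (c) and (d) can be made concrete with a short sketch. It checks, for a given coefficient matrix and candidate row/column classes, that any two rows in the same class see the same multiset of entries within every column class, and symmetrically for columns. The function name and the list-of-class-labels encoding are hypothetical choices for illustration; conditions (a) and (b) on features are not checked here.

```python
from collections import Counter


def same_multisets_within_classes(A, row_class, col_class):
    """Check the multiset conditions for a candidate partition of rows and columns."""
    m, n = len(A), len(A[0])

    def signature(entries):
        # A multiset, stored in a form that supports equality comparison.
        return frozenset(Counter(entries).items())

    # For each row: multiset of (column class, entry) pairs, and vice versa.
    row_sig = [signature((col_class[j], A[i][j]) for j in range(n)) for i in range(m)]
    col_sig = [signature((row_class[i], A[i][j]) for i in range(m)) for j in range(n)]

    rows_ok = all(row_sig[i] == row_sig[k]
                  for i in range(m) for k in range(m)
                  if row_class[i] == row_class[k])
    cols_ok = all(col_sig[j] == col_sig[k]
                  for j in range(n) for k in range(n)
                  if col_class[j] == col_class[k])
    return rows_ok and cols_ok


# Every row and column of this matrix contains the entries {1, 1, 0}.
symmetric = [[1, 1, 0], [0, 1, 1], [1, 0, 1]]
print(same_multisets_within_classes(symmetric, [0, 0, 0], [0, 0, 0]))
```

Note that a partition can satisfy these conditions while a block still contains different entries, as in the matrix above; this is exactly the situation that MP-tractability rules out.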

With the above preparations, we can state and prove the main result now.

Theorem A.3.

For any with and , the following are true:

- (a) If , then .

- (b) If holds for any and any hash functions, then .

Proof.

(a) Since , after applying some permutation on (relabelling vertices in ) in the graph , the two and share the same partition and as in Theorem A.2 and we have

- For any , is constant over all ,

- For any , is constant over all ,

- For any and , is constant over all ,

- For any and , is constant over all .

Here, we slightly abuse notation by not distinguishing and the MILP-graph obtained from by relabelling vertices in ; these two graphs have exactly the same SB scores since the vertices in are not relabelled.

Note that both and are MP-tractable, i.e., for any and , and are both matrices with identical entries, which combined with the third and the fourth conditions above implies that for all and . Therefore, we have and hence .

(b) The result is a direct corollary of (a) by considering and the MILP-graph obtained from by relabeling as and relabeling as . ∎

Theorem A.4 (Lusin’s theorem [evans2018measure, Theorem 1.14]).

Suppose that is a Borel regular measure on and that is -measurable, i.e., for any open subset , is -measurable. Then for any -measurable with and any , there exists a compact set with , such that is continuous.

Theorem A.5 ([chen2022representing-lp, Theorem E.1]).

Let be a compact subset that is closed under the action of . Suppose that satisfies the following:

- (a) For any , and , it holds that , where represents the MILP-graph obtained from by reordering vertices with permutations and .

- (b) holds for all with .

- (c) Given any and any , if holds for any and any hash functions, then .

Then for any , there exists such that

Now we can present the proof of Theorem 4.4.

Proof of Theorem 4.4.

Lemma F.2 and Lemma F.3 in [chen2022representing-lp] prove that the function that maps LP instances to its optimal objective value/optimal solution with the smallest -norm is Borel measurable. Thus, is also Borel measurable, and is hence -measurable due to Assumption 4.3. In addition, is a Borel subset of since the MP-tractability is defined by finitely many operations of comparison and aggregations. By Theorem A.4 and the assumption , there exists a compact subset such that and is continuous. For any and , is also compact and is also continuous by the permutation-equivariance of SB. Set

Then is permutation-invariant and compact with

In addition, is continuous by pasting lemma.

The rest of the proof is to apply Theorem A.5 for and , for which we need to verify the three conditions in Theorem A.5. Condition (a) is true since SB is permutation-equivariant by its definition. Conditions (b) and (c) follow directly from Theorem A.3. According to Theorem A.5, there exists some such that

Therefore, one has

which completes the proof. ∎

Appendix B Proof of Theorem 4.5

In this section, we verify that the MILP instances (4.1) and (4.2) prove Theorem 4.5. We first show that they have different SB scores, and then that they cannot be distinguished by any MP-GNN.

Different SB scores

Denote the graph representation of (4.1) and (4.2) as and , respectively. For both (4.1) and (4.2), the optimal objective value is and the optimal solution with the smallest -norm is . To calculate or , it is necessary to create two LPs for each variable . In one LP, the upper bound of is set to , effectively fixing at its lower bound . Similarly, the other LP sets to .

For the problem (4.1), even if we fix , the objective value of the LP relaxation can still achieve by . A similar observation also holds for fixing . Therefore, the SB score for (also for any in (4.1)) is . In other words,

However, for the problem (4.2), if we fix , then the optimal objective value of the LP relaxation is since

and the above inequality is tight as . If we fix , then and the optimal objective value of the LP relaxation is also since

and the equality holds when . Therefore, the SB score for (also for any in (4.2)) is . If we fix , the optimal objective value of the LP relaxation is still since is an optimal solution. A similar observation still holds if is fixed to . Thus the SB scores for and are both . Combining these calculations, we obtain that

MP-GNNs’ output

Although and are non-isomorphic with different SB scores, they still have the same output for every MP-GNN. We prove this by induction. Referencing the graph representations in Section 2, we explicitly write down the features:

Considering the MP-GNN’s initial step where and , we can conclude that is a constant for all and is a constant for all , regardless of the choice of functions and . Thus, the initial layer generates uniform outcomes for nodes in and across both graphs, which is the induction base. Suppose that the principle of uniformity applies to for some . Since are constant across all , we can denote their common value as and hence for all . Similarly, we can define with for all . Then it holds that

where we used for all and . This proves the uniformity for . Therefore, we obtain the existence of such that and for all . Finally, the output layer yields:

which finishes the proof.
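The induction above can be illustrated numerically. The sketch below runs a toy sum-aggregation message-passing network with scalar embeddings on two hypothetical instances: a 6-cycle of pairwise constraints and two disjoint triangles. These are stand-ins chosen for illustration, not the instances (4.1) and (4.2); they share the relevant structure, namely identical features, unit weights, two variables per constraint and two constraints per variable. The update functions and coefficients are arbitrary assumptions; any other choice would lead to the same conclusion.

```python
import math


def incidence(supports, n):
    """Constraint-variable matrix with A[i][j] = 1 if variable j appears in constraint i."""
    return [[1.0 if j in s else 0.0 for j in range(n)] for s in supports]


def toy_mp_gnn(A, layers=3):
    """Toy MP-GNN with scalar embeddings and arbitrary fixed update functions."""
    m, n = len(A), len(A[0])
    s = [1.0] * m  # constraint embeddings (identical initial features)
    t = [1.0] * n  # variable embeddings (identical initial features)
    for l in range(1, layers + 1):
        new_s = [math.tanh(0.5 * s[i] + 0.3 * sum(A[i][j] * math.sin(t[j] + l)
                                                  for j in range(n)))
                 for i in range(m)]
        new_t = [math.tanh(0.7 * t[j] - 0.2 * sum(A[i][j] * math.cos(s[i] + l)
                                                  for i in range(m)))
                 for j in range(n)]
        s, t = new_s, new_t
    pooled = 0.1 * sum(s) + 0.4 * sum(t)  # permutation-invariant readout term
    return [math.tanh(0.9 * t_j + pooled) for t_j in t]


n_vars = 6
cycle = incidence([{k, (k + 1) % 6} for k in range(6)], n_vars)
triangles = incidence([{0, 1}, {1, 2}, {0, 2}, {3, 4}, {4, 5}, {3, 5}], n_vars)

out_cycle = toy_mp_gnn(cycle)
out_triangles = toy_mp_gnn(triangles)
print(max(abs(a - b) for a, b in zip(out_cycle, out_triangles)))
```

Because every node of the same type receives the same aggregated message at every layer, the per-variable outputs coincide across the two graphs even though the graphs are not isomorphic.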

Appendix C Proof of Theorem 4.7

This section presents the proof of Theorem 4.7. The central idea is to establish a separation result in the sense that two MILPs with distinct SB scores must be distinguished by at least one , and then apply a generalized Stone-Weierstrass theorem in [azizian2020expressive].

C.1. 2-FWL test and its separation power

The 2-FWL test [cai1992optimal], as an extension to the classic WL test [weisfeiler1968reduction], is a more powerful algorithm for the graph isomorphism problem. By applying the 2-FWL test algorithm (formally stated in Algorithm 2) to two graphs and comparing the outcomes, one can determine the non-isomorphism of the two graphs if the results vary. However, identical 2-FWL outcomes do not confirm isomorphism. Although this test does not solve the graph isomorphism problem entirely, it can serve as a measure of 2-FGNN’s separation power, analogous to how the WL test applies to MP-GNN [xu2019powerful].

In particular, given the input graph , the 2-FWL test assigns a color for every pair of nodes in the form of with or with . The initial colors are assigned based on the input features and the colors are refined to subcolors at each iteration in the way that two node pairs are of the same subcolor if and only if they have the same color and the same neighbors’ color information. Here, the neighborhood of involves and the neighborhood of involves . After sufficient iterations, the final colors are determined. If the final color multisets of two graphs and are identical, they are deemed indistinguishable by the 2-FWL test, denoted by . One can formally define the separation power of 2-FWL test via two equivalence relations on as follows.
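The refinement just described can be sketched in a few lines. The code below is an assumption-laden illustration, not Algorithm 2 itself: it takes the neighborhood of a constraint-variable pair (i, j) to be the pairs (i, k) and (k, j) over all variables k, and the neighborhood of a variable-variable pair (a, b) to be the pairs (i, a) and (i, b) over all constraints i. Hash functions are replaced by a shared collision-free lookup table, and a fixed number of rounds stands in for "sufficient iterations". The two toy instances (a 6-cycle and two triangles of pairwise constraints) are hypothetical.

```python
from collections import Counter


def two_fwl_histograms(graphs, rounds=3):
    """Run a 2-FWL-style refinement jointly on several MILP graphs.

    graphs: list of (A, cons_feats, var_feats). Returns one Counter of
    final pair colors per graph; different Counters mean 'distinguished'.
    """
    table = {}

    def h(key):  # shared collision-free stand-in for the hash functions
        return table.setdefault(key, len(table))

    states = []
    for A, cf, vf in graphs:
        m, n = len(A), len(A[0])
        vw = [[h(("vw", cf[i], vf[j], A[i][j])) for j in range(n)] for i in range(m)]
        ww = [[h(("ww", vf[a], vf[b], a == b)) for b in range(n)] for a in range(n)]
        states.append((vw, ww))

    for _ in range(rounds):
        new_states = []
        for vw, ww in states:
            m, n = len(vw), len(ww)
            new_vw = [[h(("vw", vw[i][j],
                          tuple(sorted((ww[k][j], vw[i][k]) for k in range(n)))))
                       for j in range(n)] for i in range(m)]
            new_ww = [[h(("ww", ww[a][b],
                          tuple(sorted((vw[i][b], vw[i][a]) for i in range(m)))))
                       for b in range(n)] for a in range(n)]
            new_states.append((new_vw, new_ww))
        states = new_states

    return [Counter(c for row in vw for c in row) + Counter(c for row in ww for c in row)
            for vw, ww in states]


def incidence(supports, n):
    return [[1 if j in s else 0 for j in range(n)] for s in supports]


feats_c, feats_v = [0] * 6, [0] * 6  # identical features everywhere
cycle = incidence([{k, (k + 1) % 6} for k in range(6)], 6)
triangles = incidence([{0, 1}, {1, 2}, {0, 2}, {3, 4}, {4, 5}, {3, 5}], 6)
perm = [2, 0, 4, 1, 5, 3]
relabeled_cycle = incidence([{perm[k], perm[(k + 1) % 6]} for k in range(6)], 6)

hist = two_fwl_histograms([(cycle, feats_c, feats_v),
                           (triangles, feats_c, feats_v),
                           (relabeled_cycle, feats_c, feats_v)])
print(hist[0] == hist[1], hist[0] == hist[2])
```

Under these assumptions the pair colors separate the cycle from the two triangles, since only the latter contains a constraint whose two variables both share a constraint with a third variable, while a relabeled copy of the cycle receives the same color multiset as the original.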

Definition C.1.

Let and let and be the colors generated by 2-FWL test for and .

- (a) We define if the following hold for any and any hash functions:

(C.1) (C.2)

- (b) We define if the following hold for any and any hash functions:

(C.3) (C.4)

It can be seen that (C.3) and (C.4) are stronger than (C.1) and (C.2), since the latter requires that the entire color multiset is the same while the former requires that the color multiset associated with every is the same. However, we can show that they are equivalent up to a permutation.

Theorem C.2.

For any , if and only if there exists a permutation such that , where is the graph obtained by relabeling vertices in using .

One can understand that both and mean that and cannot be distinguished by 2-FWL test, with the difference that allows a permutation on .

Proof of Theorem C.2.

It is clear that implies that . We then prove the reverse direction, i.e., implies for some . It suffices to consider and hash functions such that there are no collisions in Algorithm 2 and no strict color refinement in the -th iteration when and are the input, which means that two edges are assigned with the same color in the -th iteration if and only if their colors are the same in the -th iteration. For any , it holds that

Similarly, one has that

and that

Therefore, for any

it follows from (C.2) that

| (C.5) |

Particularly, the number of elements in the two multisets in (C.5) should be the same, which implies that

which then leads to

One can hence apply some permutation on to obtain (C.3). Next we prove (C.4). For any , we have

which completes the proof. ∎

C.2. SB scores of MILPs distinguishable by 2-FWL test

The following theorem establishes that the separation power of 2-FWL test is stronger than or equal to that of SB, in the sense that two MILP-graphs, or two vertices in a single graph, that cannot be distinguished by the 2-FWL test must share the same SB score.

Theorem C.3.

For any , the following are true:

- (a) If , then .

- (b) If , then there exists some permutation such that .

- (c) If holds for any and any hash functions, then .

We briefly describe the intuition behind the proof here. The color updating rule of the 2-FWL test is based on monitoring triangles, while that of the classic WL test is based on tracking edges. More specifically, in the 2-FWL test colors are defined on node pairs and neighbors share the same triangle, while in the WL test colors are assigned to nodes, with neighbors being connected by edges. When computing the -th entry of , we change the upper/lower bound of and solve two LP problems. We can regard as a special node: if we fix it in the 2-FWL test, a triangle containing is determined by the other two nodes, one in and one in , and their edge. This “reduces” to the setting of the WL test. It is proved in [chen2022representing-lp] that the separation power of the WL test is stronger than or equal to that of the properties of LPs. That is, even when fixing a special node, the 2-FWL test still has enough separation power to distinguish different LP properties, and hence the 2-FWL test can separate different SB scores. We present the detailed proof of Theorem C.3 in the rest of this subsection.

Theorem C.4.

For any , if , then for any , , and , the two LP problems and have the same optimal objective value.

Theorem C.5 ([chen2022representing-lp]).

Consider two LP problems with variables and constraints

| (C.6) |

and

| (C.7) |

Suppose that there exist and that are partitions of and respectively, such that the following hold:

- (a) For any , is constant over all ;

- (b) For any , is constant over all ;

- (c) For any and , is constant over all ;

- (d) For any and , is constant over all .

Then the two problems (C.6) and (C.7) have the same feasibility, the same optimal objective value, and the same optimal solution with the smallest -norm (if feasible and bounded).

Proof of Theorem C.4.

Choose and hash functions such that there are no collisions in Algorithm 2 and no strict color refinement in the -th iteration when and are the input. Fix any and construct the partitions and as follows:

- for some if and only if .

- for some if and only if .

Without loss of generality, we can assume that . One observation is that . This is because implies that , which then leads to and since there are no collisions. We thus have .

Note that we have (C.3) and (C.4) from the assumption . So after permuting on and , one can obtain for all and for all . Another observation is that such permutation does not change the optimal objective value of as is fixed.

Next, we verify the four conditions in Theorem C.5 for two LP problems and with respect to the partitions and .

Verification of Condition (a) in Theorem C.5

Since there is no collision in the 2-FWL test Algorithm 2, implies that and hence that , which is also constant over all since is constant over all by definition.

Verification of Condition (b) in Theorem C.5

It follows from that and hence that , which is also constant over all since is constant over all by definition.

Verification of Condition (c) in Theorem C.5

Consider any and any . It follows from that

and hence that

where we used the fact that there is no strict color refinement in the -th iteration and there is no collision in Algorithm 2. We can thus conclude for any that

which implies that is constant over since is constant over .

Verification of Condition (d) in Theorem C.5

Consider any and any . It follows from that

and hence that

where we used the fact that there is no strict color refinement at the -th iteration and there is no collision in Algorithm 2. We can thus conclude for any that

which implies that is constant over since is constant over .

Combining all discussion above and noticing that , one can apply Theorem C.5 and conclude that the two LP problems and have the same optimal objective value, which completes the proof. ∎

Corollary C.6.

For any , if , then the LP relaxations of and have the same optimal objective value and the same optimal solution with the smallest -norm (if feasible and bounded).

Proof.

If there are no collisions, it follows from (C.4) that which implies and for any . Then we can apply Theorem C.4 to conclude that the two LP problems and that are LP relaxations of and have the same optimal objective value.

In the case that the LP relaxations of and are both feasible and bounded, we use and to denote their optimal solutions with the smallest -norm. For any , and are also the optimal solutions with the smallest -norm for and respectively. By Theorem C.5 and the same arguments as in the proof of Theorem C.4, we have the . Note that we cannot infer by considering a single because we apply permutation on and in the proof of Theorem C.4. But we have for any which leads to . ∎

C.3. Equivalence between the separation powers of the 2-FWL test and 2-FGNNs

This section establishes the equivalence between the separation powers of the 2-FWL test and 2-FGNNs.

Theorem C.7.

For any , the following are true:

- (a) if and only if for all .

- (b) holds for any and any hash functions if and only if .

- (c) if and only if for all scalar function with .

The intuition behind Theorem C.7 is that the color updating rule in the 2-FWL test has the same format as the feature updating rule in 2-FGNN, and that the local update mappings can be chosen to be injective on the current features. Results in a similar spirit also exist in previous literature; see e.g., [xu2019powerful, azizian2020expressive, geerts2022expressiveness, chen2022representing-lp]. We present the detailed proof of Theorem C.7 in the rest of this subsection.

Lemma C.8.

For any , if , then for all .

Proof.

Consider any with layers and let and be the features in the -th layer of . Choose and hash functions such that there are no collisions in Algorithm 2 when and are the input. We will prove the following by induction for :

-

(a)

implies .

-

(b)

implies .

-

(c)

implies .

-

(d)

implies .

-

(e)

implies .

-

(f)

implies .

As the induction base, the claims (a)-(f) are true for since and do not have collisions. Now we assume that the claims (a)-(f) are all true for where and prove them for . In fact, one can prove the claim (a) for as follows:

The proof of claims (b)-(f) for is very similar and hence omitted.

Using the claims (a)-(f) for , we can conclude that

which completes the proof. ∎

Lemma C.9.

For any , if for all , then .

Proof.

We claim that for any there exist -FGNN layers for , such that the following hold for any and any hash functions:

-

(a)

implies .

-

(b)

implies .

-

(c)

implies .

-

(d)

implies .

-

(e)

implies .

-

(f)

implies .

Such layers can be constructed inductively. First, for , we can simply choose that is injective on and that is injective on .

Assume that the conditions (a)-(f) are true for where ; we aim to construct the -th layer such that (a)-(f) are also true for . Let collect all different elements in and let collect all different elements in . Choose some continuous such that , where is a vector in with the -th entry being and other entries being , and is a vector in with the -th entry being and other entries being . Choose some continuous that is injective on the set . By the injectivity of and the linear independence of , we have that

which is to say that the condition (a) is satisfied. One can also verify the conditions (b) and (c) using the same argument. Similarly, we can also construct and such that the conditions (d)-(f) are satisfied.

Suppose that is not true. Then there exists and hash functions such that

or

holds for some . We have shown above that the conditions (a)-(f) are true for and some carefully constructed -FGNN layers. Then it holds for some that

| (C.8) |

or

| (C.9) |

In the rest of the proof we work with (C.8), and the argument can be easily modified in the case that (C.9) is true. It follows from (C.8) that there exists some continuous function such that

Then let us construct the -th layer yielding

and the output layer with

This is to say for some , which contradicts the assumption that has the same output on and . Thus we can conclude that . ∎

Proof of Theorem C.7 (c).

Suppose that . By Theorem C.2, there exists some permutation such that . For any scalar function with , by Theorem C.7, it holds that , where we used the fact that is permutation-equivariant. We can thus conclude that .

Now suppose that is not true. Then there exist some and hash functions such that

or

By the proof of Lemma C.9, one can construct the -th -FGNN layers inductively for , such that the conditions (a)-(f) in the proof of Lemma C.9 are true. Then we have

| (C.10) |

or

| (C.11) |

We first assume that (C.10) is true. Then there exists a continuous function with

Let us construct the -th layer such that

and the output layer with

which is independent of . This constructs of the form with .

C.4. Proof of Theorem 4.7

We finalize the proof of Theorem 4.7 in this subsection. Combining Theorem C.3 and Theorem C.7, one can conclude that the separation power of is stronger than or equal to that of SB scores. Hence, we can apply the Stone-Weierstrass-type theorem to prove Theorem 4.7.

Theorem C.10 (Generalized Stone-Weierstrass theorem [azizian2020expressive]).

Let be a compact topological space and let be a finite group that acts continuously on and . Define the collection of all equivariant continuous functions from to as follows:

Consider any and any . Suppose the following conditions hold:

- (a) is a subalgebra of and , where is the constant function whose output is always .

- (b) For any , if holds for any with , then for any , there exists such that .

- (c) For any , if holds for any , then .

- (d) For any , it holds that , where

Then for any , there exists such that

Proof of Theorem 4.7.

Lemma F.2 and Lemma F.3 in [chen2022representing-lp] prove that the function that maps LP instances to its optimal objective value/optimal solution with the smallest -norm is Borel measurable. Thus, is also Borel measurable, and is hence -measurable due to Assumption 4.3. By Theorem A.4 and Assumption 4.3, there exists a compact subset such that and is continuous. For any and , is also compact and is also continuous by the permutation-equivariance of SB. Set

Then is permutation-invariant and compact with

In addition, is continuous by pasting lemma.

The rest of the proof is to apply Theorem C.10 for , , , and . We need to verify the four conditions in Theorem C.10. Condition (a) can be proved by similar arguments as in the proof of Lemma D.2 in [chen2022representing-lp]. Condition (b) follows directly from Theorem C.7 (a) and (c) and Theorem C.2. Condition (c) follows directly from Theorem C.7 (a) and Theorem C.3 (a). Condition (d) follows directly from Theorem C.7 (b) and Theorem C.3 (c). According to Theorem C.10, there exists some such that

Therefore, one has

which completes the proof. ∎

Appendix D Extensions of the theoretical results

This section will explore some extensions of our theoretical results.

D.1. Extension to other types of SB scores

D.2. Extension to varying MILP sizes

While Theorems 4.4 and 4.7 assume MILP sizes and are fixed, we now discuss extending these results to data distributions with variable and .

First, our theoretical results can be directly extended to MILP datasets or distributions where and vary but remain bounded. Following Lemma 36 in [azizian2020expressive], if a universal-approximation theorem applies to for any fixed and (as shown in our work) and at least one GNN can distinguish graphs of different sizes, then the result holds across a disjoint union of finitely many .

If the distribution has unbounded or , for any , one can always remove a portion of the tail to ensure boundedness in and . In particular, there always exist large enough and such that and . The key point is that for any , such and can always be found. Although these values may be large and dependent on , they are still finite. This allows us to apply the results for the bounded-support case.

Note that the “tail removal” technique mentioned above comes from the fact that a probability distribution has a total mass of 1:

By the definition of a limit, this clearly implies that for any , there exists a sufficiently large such that . A similar argument applies to .
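The tail-removal argument can be made concrete with a small numerical sketch. It assumes a hypothetical probability mass function over a single size parameter (a geometric distribution is used purely as an example) and returns the smallest cutoff whose tail mass falls below the tolerance; the function name and the search cap are illustrative choices.

```python
def tail_cutoff(pmf, eps, max_size=10**6):
    """Smallest M such that the mass of sizes larger than M is below eps."""
    cumulative = 0.0
    for size in range(max_size + 1):
        cumulative += pmf(size)
        if 1.0 - cumulative < eps:
            return size
    raise ValueError("no cutoff found below max_size")


def geometric_pmf(k):
    """Hypothetical size distribution: P(size = k) = 0.5 ** (k + 1) for k >= 0."""
    return 0.5 ** (k + 1)


print(tail_cutoff(geometric_pmf, eps=0.01))
```

A smaller tolerance yields a larger but still finite cutoff, which is all the argument requires.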

Appendix E Details about numerical experiments

Random MILP instances generation

We generate 100 random MILP instances for the experiments in Section 5. We set and , which means each MILP instance contains 6 constraints and 20 variables. The sampling schemes of problem parameters are described below.

- The bounds of linear constraints: .

- The coefficients of the objective function: .

- The non-zero elements in the coefficient matrix: . The coefficient matrix contains 60 non-zero elements. The positions are sampled randomly.

- The lower and upper bounds of variables: . We swap their values if after sampling.

- The constraint types are randomly sampled. Each type (, or ) occurs with equal probability.

- The variable types are randomly sampled. Each type (continuous or integer) occurs with equal probability.
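The sampling scheme above can be sketched as follows. The structural choices (6 constraints, 20 variables, 60 non-zeros at random positions, swapping bounds when needed, equiprobable constraint and variable types) follow the description; the sampling distributions themselves are not recoverable from the text, so the standard normal used here is a placeholder assumption, as are the function name, the dictionary layout, and the three constraint-type symbols.

```python
import random


def generate_random_milp(m=6, n=20, nnz=60, seed=0):
    """Sample one random MILP instance (placeholder distributions, see lead-in)."""
    rng = random.Random(seed)

    def draw():
        # ASSUMPTION: placeholder for the distributions used in the experiments.
        return rng.gauss(0.0, 1.0)

    # Coefficient matrix with exactly nnz non-zeros at random positions.
    A = [[0.0] * n for _ in range(m)]
    for position in rng.sample(range(m * n), nnz):
        value = draw()
        while value == 0.0:  # guard so the entry is truly non-zero
            value = draw()
        A[position // n][position % n] = value

    b = [draw() for _ in range(m)]   # bounds of linear constraints
    c = [draw() for _ in range(n)]   # objective coefficients

    lower, upper = [], []
    for _ in range(n):
        lo, hi = draw(), draw()
        if lo > hi:                  # swap so that lower <= upper
            lo, hi = hi, lo
        lower.append(lo)
        upper.append(hi)

    # ASSUMPTION: three constraint types, each with equal probability.
    senses = [rng.choice(["<=", "=", ">="]) for _ in range(m)]
    is_integer = [rng.random() < 0.5 for _ in range(n)]

    return {"A": A, "b": b, "c": c, "lower": lower, "upper": upper,
            "senses": senses, "is_integer": is_integer}


instance = generate_random_milp()
print(sum(1 for row in instance["A"] for a in row if a != 0.0))
```

Calling the function with different seeds yields a dataset of independent instances; the SB scores would then be collected separately with a solver.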

Implementation and training details

We implement MP-GNN and 2-FGNN with Python 3.6 and TensorFlow 1.15.0 [abadi2016tensorflow]. Our implementation is built by extending the MP-GNN implementation of [gasse2019exact] in https://github.com/ds4dm/learn2branch. The SB scores of randomly generated MILP instances are collected using SCIP [scip].

For both GNNs, are parameterized as linear transformations followed by a non-linear activation function; are parameterized as 3-layer multi-layer perceptrons (MLPs) with respective learnable parameters; and the output mapping is parameterized as a 2-layer MLP. All layers map their input to a 1024-dimensional vector and use the ReLU activation function. Under these settings, MP-GNN contains 43.0 million learnable parameters and 2-FGNN contains 35.7 million learnable parameters.

We adopt Adam [kingma2014adam] to optimize the learnable parameters during training with a learning rate of for all networks. We decay the learning rate to and when the training error reaches and respectively to help with stabilizing the training process.
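An error-triggered learning-rate schedule of this kind can be sketched as below. The class name is hypothetical, and the base rate, error thresholds, and decayed rates are placeholder values chosen only for illustration, not the values used in the experiments. The sketch also assumes that a decay, once triggered, is never undone even if the training error later rises again.

```python
class ErrorTriggeredSchedule:
    """Lower the learning rate once the training error reaches given thresholds."""

    def __init__(self, base_lr, stages):
        # stages: list of (error_threshold, decayed_lr), in order of decreasing threshold
        self.lr = base_lr
        self.stages = list(stages)
        self.next_stage = 0

    def update(self, train_error):
        """Advance through every stage whose threshold has been reached."""
        while (self.next_stage < len(self.stages)
               and train_error <= self.stages[self.next_stage][0]):
            self.lr = self.stages[self.next_stage][1]
            self.next_stage += 1
        return self.lr


# Placeholder values for illustration only.
schedule = ErrorTriggeredSchedule(base_lr=1e-4, stages=[(1e-3, 1e-5), (1e-4, 1e-6)])
history = [schedule.update(err) for err in (1.0, 5e-4, 2e-3, 5e-5)]
print(history)
```

The returned rate would be passed to the optimizer at the start of each epoch.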