Reverse Maximum Inner Product Search: How to efficiently find users who would like to buy my item?

Abstract.

The MIPS (maximum inner product search), which finds the item with the highest inner product with a given query user, is an essential problem in the recommendation field. It is usual that e-commerce companies face situations where they want to promote and sell new or discounted items. In these situations, we have to consider a question: who are interested in the items and how to find them? This paper answers this question by addressing a new problem called reverse maximum inner product search (reverse MIPS). Given a query vector and two sets of vectors (user vectors and item vectors), the problem of reverse MIPS finds a set of user vectors whose inner product with the query vector is the maximum among the query and item vectors. Although the importance of this problem is clear, its straightforward implementation incurs a computationally expensive cost.

We therefore propose Simpfer, a simple, fast, and exact algorithm for reverse MIPS. In an offline phase, Simpfer builds a simple index that maintains a lower-bound of the maximum inner product. By exploiting this index, Simpfer judges whether the query vector can have the maximum inner product or not, for a given user vector, in a constant time. Besides, our index enables filtering user vectors, which cannot have the maximum inner product with the query vector, in a batch. We theoretically demonstrate that Simpfer outperforms baselines employing state-of-the-art MIPS techniques. Furthermore, our extensive experiments on real datasets show that Simpfer is at least two orders magnitude faster than the baselines.

1. Introduction

The MIPS (maximum inner product search) problem, or -MIPS problem, is an essential tool in the recommendation field. Given a query (user) vector, this problem finds the item vectors with the highest inner product with the query vector among a set of item vectors. The search result, i.e., item vectors, can be used as recommendation for the user, and the user and item vectors are obtained via Matrix Factorization, which is well employed in recommender systems (Cremonesi et al., 2010; Van den Oord et al., 2013; Chen et al., 2020; Fraccaro et al., 2016). Although some learned similarities via MLP (i.e., neural networks) have also been devised, e.g., in (Zamani and Croft, 2020; Zhao et al., 2020), (Rendle et al., 2020) has actually demonstrated that inner product-based (i.e., Matrix Factorization-based) recommendations show better performances than learned similarities. We hence focus on inner product between -dimensional vectors that are obtained via Matrix Factorization.

1.1. Motivation

The -MIPS problem is effective for the case where a user wants to know items that s/he prefers (i.e., user-driven cases), but e-commerce companies usually face situations where they want to advertise an item, which may be new or discounted one, to users, which corresponds to item-driven cases. Trivially, an effective advertisement is to recommend such an item to users who would be interested in this item.

In the context of the -MIPS problem, if this item is included in the top-k item set for a user, we should make an advertisement of the item to this user. That is, we should find a set of such users. This paper addresses this new problem, called reverse -MIPS problem. To ease of presentation, this section assumes that (the general case is defined in Section 2). Given a query vector (the vector of a target item) and two sets of -dimensional vectors (set of user vectors) and (set of item vectors), the reverse MIPS problem finds all user vectors such that arg max.

Example 1.

Table 1 illustrates , , and the MIPS result, i.e., arg max, of each vector in . Let , and the result of reverse MIPS is because is the top-1 item for and . When , we have no result, because is not the top-1 item . Similarly, when , the result is .

From this example, we see that, if an e-commerce service wants to promote the item corresponding to , this service can obtain the users who would prefer this item through the reverse MIPS, and sends them a notification about this item.

The reverse -MIPS problem is an effective tool not only for item-driven recommendations but also market analysis. Assume that we are given a vector of a new item, . It is necessary to design an effective sales strategy to gain a profit. Understanding the features of users that may prefer the item is important for the strategy. Solving the reverse -MIPS of the query vector supports this understanding.

| (MIPS result) | |||||

|---|---|---|---|---|---|

1.2. Challenge

The above practical situations clarify the importance of reverse MIPS. Because e-commerce services have large number of users and items, and are large. In addition, a query vector is not pre-known and is specified on-demand fashion. The reverse -MIPS is therefore conducted online and is computationally-intensive task. Now the question is how to efficiently obtain the reverse MIPS result for a given query.

A straightforward approach is to run a state-of-the-art exact MIPS algorithm for every vector in and check whether or not arg max. This approach obtains the exact result, but it incurs unnecessary computation. The poor performance of this approach is derived from the following observations. First, we do not need the MIPS result of when does not have the maximum inner product with . Second, this approach certainly accesses all user vectors in , although many of them do not contribute to the reverse MIPS result. However, it is not trivial to skip evaluations of some user vectors without losing correctness. Last, its theoretical cost is the same as the brute-force case, i.e., time, where and , which is not appropriate for online computations. These concerns pose challenges for solving the reverse MIPS problem efficiently.

1.3. Contribution

To address the above issues, we propose Simpfer, a simple, fast, and exact algorithm for reverse MIPS. The general idea of Simpfer is to efficiently solve the decision version of the MIPS problem. Because the reverse MIPS of a query requires a yes/no decision for each vector , it is sufficient to know whether or not can have the maximum inner product for . Simpfer achieves this in time in many cases by exploiting its index built in an offline phase. This index furthermore supports a constant time filtering that prunes vectors in a batch if their answers are no. We theoretically demonstrate that the time complexity of Simpfer is lower than .

The summary of our contributions is as follows:

-

•

We address the problem of reverse -MIPS. To our knowledge, this is the first work to study this problem.

-

•

We propose Simpfer as an exact solution to the reverse MIPS problem. Simpfer solves the decision version of the MIPS problem at both the group-level and the vector-level efficiently. Simpfer is surprisingly simple, but our analysis demonstrates that Simpfer theoretically outperforms a solution that employs a state-of-the-art exact MIPS algorithm.

-

•

We conduct extensive experiments on four real datasets, MovieLens, Netflix, Amazon, and Yahoo!. The results show that Simpfer is at least two orders magnitude faster than baselines.

-

•

Simpfer is easy to deploy: if recommender systems have user and item vector sets that are designed in the inner product space, they are ready to use Simpfer via our open source implementation111https://github.com/amgt-d1/Simpfer. This is because Simpfer is unsupervised and has only a single parameter (the maximum value of ) that is easy to tune and has no effect on the running time of online processing.

This paper is an error-corrected version of (Amagata and Hara, 2021). We fixed some writing errors and minor bugs in our implementation, but our result is consistent with (Amagata and Hara, 2021).

2. Problem Definition

Let be a set of -dimensional real-valued item vectors, and we assume that is high (Liu et al., 2019; Shrivastava and Li, 2014). Given a query vector, the maximum inner product search (MIPS) problem finds

The general version of the MIPS problem, i.e., the -MIPS problem, is defined as follows:

Definition 1 (-MIPS problem).

Given a set of vectors , a query vector , and , the -MIPS problem finds vectors in that have the highest inner products with .

For a user (i.e., query), the -MIPS problem can retrieve items (e.g., vectors in ) that the user would prefer. Different from this, the reverse -MIPS problem can retrieve a set of users who would prefer a given item. That is, in the reverse -MIPS problem, a query can be an item, and this problem finds users attracted by the query item. Therefore, the reverse -MIPS is effective for advertisement and market analysis, as described in Section 1. We formally define this problem222Actually, the reverse top-k query (and its variant), a similar concept to the reverse -MIPS problem, has been proposed in (Vlachou et al., 2010, 2011; Zhang et al., 2014). It is important to note that these works do not suit recent recommender systems. First, they assume that is low ( is around 5), which is not probable in Matrix Factorization. Second, they consider the Euclidean space, whereas inner product is a non-metric space. Because the reverse top-k query processing algorithms are optimized for these assumptions, they cannot be employed in Matrix Factorization-based recommender systems and cannot solve (or be extended for) the reverse -MIPS problem..

Definition 2 (Reverse -MIPS problem).

Given a query (item) vector , , and two sets of vectors (set of user vectors) and (set of item vectors), the reverse -MIPS problem finds all vectors such that is included in the -MIPS result of among .

Note that can be , as described in Example 1. We use and to denote and , respectively.

Our only assumption is that there is a maximum that can be specified, denoted by . This is practical, because should be small, e.g., (Jiang et al., 2020) or (Bachrach et al., 2014), to make applications effective. (We explain how to deal with the case of in Section 4.1.) This paper develops an exact solution to the new problem in Definition 2.

3. Related Work

Exact -MIPS Algorithm. The reverse -MIPS problem can be solved exactly by conducting an exact -MIPS algorithm for each user vector in . The first line of solution to the -MIPS problem is a tree-index approach (Koenigstein et al., 2012; Ram and Gray, 2012; Curtin et al., 2013). For example, (Ram and Gray, 2012) proposed a tree-based algorithm that processes -MIPS not only for a single user vector but also for some user vectors in a batch. Unfortunately, the performances of the tree-index algorithms degrade for large because of the curse of dimensionality.

LEMP (Teflioudi et al., 2015; Teflioudi and Gemulla, 2016) avoids this issue and significantly outperforms the tree-based algorithms. LEMP uses several search algorithms according to the norm of each vector. In addition, LEMP devises an early stop scheme of inner product computation. During the computation of , LEMP computes an upper-bound of . If this bound is lower than an intermediate -th maximum inner product, cannot be in the final result, thus the inner product computation can be stopped. LEMP is actually designed for the top-k inner product join problem: for each , it finds the -MIPS result of . Therefore, LEMP can solve the reverse -MIPS problem, but it is not efficient as demonstrated in Section 5.

FEXIPRO (Li et al., 2017) further improves the early stop of inner product computation of LEMP. Specifically, FEXIPRO exploits singular value decomposition, integer approximation, and a transformation to positive values. These techniques aim at obtaining a tighter upper-bound of as early as possible. (Li et al., 2017) reports that state-of-the-art tree-index algorithm (Ram and Gray, 2012) is completely outperformed by FEXIPRO. Maximus (Abuzaid et al., 2019) takes hardware optimization into account. It is, however, limited to specific CPUs, so we do not consider Maximus. Note that LEMP and FEXIPRO are heuristic algorithms, and time is required for the reverse -MIPS problem.

Approximation -MIPS Algorithm. To solve the -MIPS problem in sub-linear time by sacrificing correctness, many works proposed approximation -MIPS algorithms. There are several approaches to the approximation -MIPS problem: sampling-based (Cohen and Lewis, 1999; Liu et al., 2019; Yu et al., 2017), LSH-based (Huang et al., 2018; Neyshabur and Srebro, 2015; Shrivastava and Li, 2014; Yan et al., 2018), graph-based (Liu et al., 2020; Morozov and Babenko, 2018; Zhou et al., 2019), and quantization approaches (Dai et al., 2020; Guo et al., 2020). They have both strong and weak points. For example, LSH-based algorithms enjoy a theoretical accuracy guarantee. However, they are empirically slower than graph-based algorithms that have no theoretical performance guarantee. Literature (Bachrach et al., 2014) shows that the MIPS problem can be transformed into the Euclidean nearest neighbor search problem, but it still cannot provide the correct answer. Besides, existing works that address the (reverse) nearest neighbor search problem assume low-dimensional data (Yang et al., 2015) or consider approximation algorithms (Li et al., 2020).

Since this paper focuses on the exact result, these approximation -MIPS algorithms cannot be utilized. In addition, approximate answers may lose effectiveness of the reverse -MIPS problem. If applications cannot contain users, who are the answer of the -MIPS problem, these users may lose chances of knowing the target item, which would reduce profits. On the other hand, if applications contain users, who are not the answer of the -MIPS problem, as an approximate answer, they advertise the target item to users who are not interested in the item. This also may lose future profits, because such users may stop receiving advertisements if they get those of non-interesting items.

4. Proposed Algorithm

To efficiently solve the reverse MIPS problem, we propose Simpfer. Its general idea is to efficiently solve the decision version of the -MIPS problem.

Definition 3 (-MIPS decision problem).

Given a query , , a user vector , and , this problem returns yes (no) if is (not) included in the -MIPS result of .

Notice that this problem does not require the complete -MIPS result. We can terminate the -MIPS of whenever it is guaranteed that is (not) included in the -MIPS result.

To achieve this early termination efficiently, it is necessary to obtain a lower-bound and an upper-bound of the -th highest inner product of . Let and respectively be a lower-bound and an upper-bound of the -th highest inner product of on . If , it is guaranteed that does not have the highest inner product with . Similarly, if , it is guaranteed that has the highest inner product with . This observation implies that we need to efficiently obtain and . Simpfer does pre-processing to enable it in an offline phase. Besides, since is often large, accessing all user vectors is also time-consuming. This requires a filtering technique that enables the pruning of user vectors that are not included in the reverse -MIPS result in a batch. During the pre-processing, Simpfer arranges so that batch filtering is enabled. Simpfer exploits the data structures built in the pre-processing phase to quickly solve the -MIPS decision problem.

4.1. Pre-processing

The objective of this pre-processing phase is to build data structures that support efficient computation of a lower-bound and an upper-bound of the -th highest inner product for each , for arbitrary queries. We utilize Cauchy–Schwarz inequality for upper-bounding. Hence we need the Euclidean norm for each . To obtain a lower-bound of the -th highest inner product, we need to access at least item vectors in . The norm computation and lower-bound computation are independent of queries (as long as ), so they can be pre-computed. In this phase, Simpfer builds the following array for each .

Definition 4 (Lower-bound array).

The lower-bound array of a user vector is an array whose -th element, , maintains a lower-bound of the -th inner product of on , and .

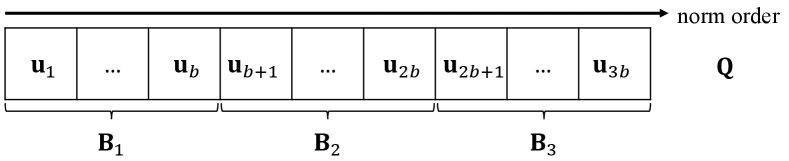

Furthermore, to enable batch filtering, Simpfer builds a block, which is defined below.

Definition 5 (Block).

A block is a subset of . The set of vectors belonging to is represented by . Besides, we use to represent the lower-bound array of this block, and

| (1) |

The block size can be arbitrarily determined, and we set to avoid system parameter setting.

Pre-processing algorithm. Algorithm 1 describes the pre-processing algorithm of Simpfer.

(1) Norm computation: First, for each and , its norm is computed. Then, and are sorted in descending order of norm.

(2) Lower-bound array building: Let be the set of the first vectors in . For each , is built by using . That is, , where yields the -th highest inner product for . The behind idea of using the first item vectors in is that vectors with large norms tend to provide large inner products (Liu et al., 2020). This means that we can obtain a tight lower-bound at a lightweight cost.

(3) Block building: After that, blocks are built, so that user vectors in a block keep the order and each block is disjoint. Given a new block , we insert user vectors into in sequence while updating , until we have . When , we insert into a set of blocks , and make a new block.

Example 2.

Figure 1 illustrates an example of block building. For ease of presentation, we use as a block size and . For example, , and .

[Block structure]Our block structure for a set of user vectors, where they are sorted in descending order of norm. Every block is disjoint, and each user vector is assigned to a unique block.

Generally, this pre-processing is done only once. An exception is the case where a query with is specified. In this case, Simpfer re-builds the data structures then processes the query. This is actually much faster than the baselines, as shown in Section 5.7.

Analysis. We here prove that the time complexity of this pre-processing is reasonable. Without loss of generality, we assume , because this is a usual case for many real datasets, as the ones we use in Section 5.

Theorem 1.

Algorithm 1 requires time.

Proof. The norm computation requires time, and sorting requires time. The building of lower-bound arrays needs time, since . Because , . The block building also requires time. In total, this pre-processing requires time.

The space complexity of Simpfer is also reasonable.

Theorem 2.

The space complexity of the index is .

Proof. The space of the lower-bound arrays of user vectors is , since . Blocks are disjoint, and the space of the lower-bound array of a block is also . We hence have lower-bound arrays of blocks. Now this theorem is clear.

4.2. Upper- and Lower-bounding for the -MIPS Decision Problem

Before we present the details of Simpfer, we introduce our techniques that can quickly answer the -MIPS decision problem for a given query . Recall that and are sorted in descending order of norm. Without loss of generality, we assume that for each and for each , for ease of presentation.

Given a query and a user vector , we have . Although our data structures are simple, they provide effective and “light-weight” filters. Specifically, we can quickly answer the -MIPS decision problem on through the following observations333Existing algorithms for top-k retrieval, e.g., (Ding and Suel, 2011; Fontoura et al., 2011), use similar (but different) bounding techniques. They use a bound (e.g., obtained by a block) to early stop linear scans. On the other hand, our bounding is designed to avoid linear scans and to filer multiple user vectors in a batch..

Lemma 1.

If , it is guaranteed that is not included in the -MIPS result of .

Proof. Let be the vector in such that is the -th highest inner product in . The fact that immediately derives this lemma.

It is important to see that the above lemma provides “no” as the answer to the -MIPS decision problem on in time (after computing ). The next lemma deals with the “yes” case in time.

Lemma 2.

If , it is guaranteed that is included in the -MIPS result of .

Proof. From Cauchy–Schwarz inequality, we have . Since is the -th highest norm in , , where is defined in the proof of Lemma 1. That is, is an upper-bound of . Now it is clear that has if .

We next introduce a technique that yields “no” as the answer for all user vectors in a block in time.

Lemma 3.

Given a block , let be the first vector in . If , for all , it is guaranteed that is not included in the -MIPS result of .

Proof. From Cauchy–Schwarz inequality, is an upper-bound of for all , since . We have for all , from Equation (1). Therefore, if , cannot be the highest inner product.

If a user vector cannot obtain a yes/no answer from Lemmas 1–3, Simpfer uses a linear scan of to obtain the answer. Let be a threshold, i.e., an intermediate -th highest inner product for during the linear scan. By using the following corollaries, Simpfer can obtain the correct answer and early terminate the linear scan.

Corollary 1.

Assume that is included in an intermediate result of the -MIPS of and we now evaluate . If , it is guaranteed that is included in the final result of the -MIPS of .

Proof. Trivially, we have . Besides, for all , because is sorted. This corollary is hence true.

From this corollary, we also have:

Corollary 2.

When we have , it is guaranteed that is not included in the final result of the -MIPS of .

4.3. The Algorithm

Now we are ready to present Simpfer. Algorithm 3 details it. To start with, Simpfer computes . Given a block , Simpfer tests Lemma 3 (line 3). If the user vectors in may have yes as an answer, for each , Simpfer does the following. (Otherwise, all user vectors in are ignored.) First, it computes , then tests Lemma 1 (line 3). If cannot have the answer from this lemma, Simpfer tests Lemma 2. Simpfer inserts into the result set if . Otherwise, Simpfer conducts Linear-scan (Algorithm 2). If Linear-scan returns 1 (yes), is inserted into . The above operations are repeated for each . Finally, Simpfer returns the result set .

The correctness of Simpfer is obvious, because it conducts Linear-scan for all vectors that cannot have yes/no answers from Lemmas 1–3. Besides, Simpfer accesses blocks sequentially, so it is easy to parallelize by using multicore. Simpfer hence further accelerates the processing of reverse -MIPS, see Section 5.6.

4.4. Complexity Analysis

We theoretically demonstrate the efficiency of Simpfer. Specifically, we have:

Theorem 3.

Let be the pruning ratio () of blocks in . Furthermore, let be the average number of item vectors accessed in Linear-scan. The time complexity of Simpfer is .

Proof. Simpfer accesses all blocks in , and . Assume that a block is not pruned by Lemma 3. Simpfer accesses all user vectors in , so the total number of such user vectors is . For these vectors, Simpfer computes inner products with . The evaluation cost of Lemmas 1 and 2 for these user vectors is thus . The worst cost of Linear-scan for vectors that cannot obtain the answer from these lemmas is . Now the time complexity of Simpfer is

| (2) | ||||

Consequently, this theorem holds.

Remark. There are two main observations in Theorem 3. First, because we practically have and , Simpfer outperforms a -MIPS-based solution that incurs time. (Our experimental results show that in practice.) The second observation is obtained from Equation (2), which implies the effectiveness of blocks. If Simpfer does not build blocks, we have to evaluate Lemma 1 for all . Equation (2) suggests that the blocks theoretically avoids this.

5. Experiment

This section reports our experimental results. All experiments were conducted on a Ubuntu 18.04 LTS machine with a 12-core 3.0GHz Intel Xeon E5-2687w v4 processor and 512GB RAM.

5.1. Setting

Datasets. We used four popular real datasets: MovieLens444https://grouplens.org/datasets/movielens/, Netflix, Amazon555https://jmcauley.ucsd.edu/data/amazon/, and Yahoo!666https://webscope.sandbox.yahoo.com/. The user and item vectors of these datasets were obtained by the Matrix Factorization in (Chin et al., 2016). These are 50-dimensional vectors (the dimensionality setting is the same as (Li et al., 2017; Teflioudi et al., 2015)777As our theoretical analysis shows, the time of Simpfer is trivially proportional to , thus its empirical impact is omitted.). The other statistics is shown in Table 2. We randomly chose 1,000 vectors as query vectors from .

[Cardinality of user and item vector sets]Cardinality of user and item vector sets for MovieLens, Netflix, Amazon, and Yahoo!. MovieLens Netflix Amazon Yahoo! 138,493 480,189 1,948,882 2,088,620 26,744 17,770 98,211 200,941

Evaluated algorithms. We evaluated the following three algorithms.

-

•

LEMP (Teflioudi et al., 2015): the state-of-the-art all--MIPS algorithm. LEMP originally does -MIPS for all user vectors in .

-

•

FEXIPRO (Li et al., 2017): the state-of-the-art -MIPS algorithm. We simply ran FEXIPRO for each .

-

•

Simpfer: the algorithm proposed in this paper. We set .

These algorithms were implemented in C++ and compiled by g++ 7.5.0 with -O3 flag. We used OpenMP for multicore processing. These algorithms return the exact result, so we measured their running time.

Note that (Li et al., 2017; Teflioudi et al., 2015) have demonstrated that the other exact MIPS algorithms are outperformed by LEMP and FEXIPRO, so we did not use them as competitors. (Recall that this paper focuses on the exact answer, thus approximation algorithms are not appropriate for competitors, see Section 3.) In addition, LEMP and FEXIPRO also have a pre-processing (offline) phase. We did not include the offline time as the running time.

5.2. Result 1: Effectiveness of blocks

We first clarify the effectiveness of blocks employed in Simpfer. To show this, we compare Simpfer with Simpfer without blocks (which does not evaluate line 3 of Algorithm 3). We set .

On MovieLens, Netflix, Amazon, and Yahoo!, Simpfer (Simpfer without blocks) takes 10.3 (22.0), 58.6 (100.8), 117.6 (446.2), and 1481.2 (1586.2) [msec], respectively. This result demonstrates that, although the speed-up ratio is affected by data distributions, blocks surely yield speed-up.

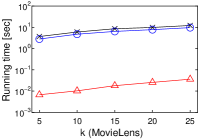

5.3. Result 2: Impact of

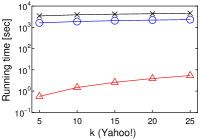

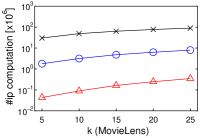

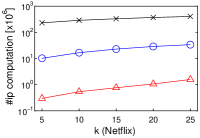

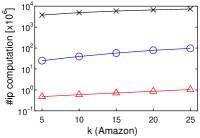

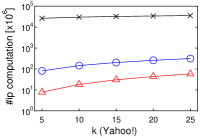

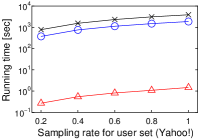

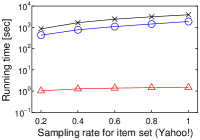

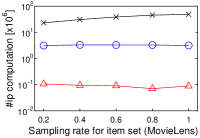

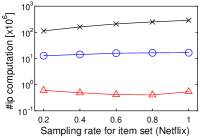

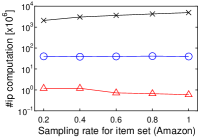

We investigate how affects the computational performance of each algorithm by using a single core. Figure 2 depicts the experimental results.

We first observe that, as increases, the running time of each algorithm increases, as shown in Figures 2(a)–2(d). This is reasonable, because the cost of (decision version of) -MIPS increases. As a proof, Figures 2(e)–2(h) show that the number of inner product (ip) computations increases as increases. The running time of Simpfer is (sub-)linear to (the plots are log-scale). This suggests that .

Second, Simpfer significantly outperforms LEMP and FEXIPRO. This result is derived from our idea of quickly solving the -MIPS decision problem. The techniques introduced in Section 4.2 can deal with both yes and no answer cases efficiently. Therefore, our approach functions quite well in practice.

Last, an interesting observation is the performance differences between FEXIPRO and Simpfer. Let us compare them with regard to running time. Simpfer is at least two orders of magnitude faster than FEXIPRO. On the other hand, with regard to the number of inner product computations, that of Simpfer is one order of magnitude lower than that of FEXIPRO. This result suggests that the filtering cost of Simpfer is light, whereas that of FEXIPRO is heavy. Recall that Lemmas 1–3 need only time, and Corollaries 1–2 need time in practice. On the other hand, for each user vector in , FEXIPRO incurs time, and its filtering cost is , where . For high-dimensional vectors, the difference between and is large. From this point of view, we can see the efficiency of Simpfer.

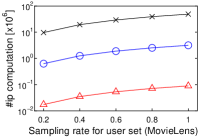

[Running time of each algorithm increases as increases]Running time of each algorithm increases as increases on MovieLens, Netflix, Amazon, and Yahoo!.

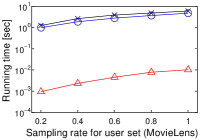

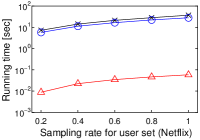

[Running time of each algorithm increases as the cardinality of user vector set increases]Running time of each algorithm increases as the cardinality of user vector set increases on MovieLens, Netflix, Amazon, and Yahoo!.

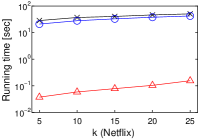

5.4. Result 3: Impact of Cardinality of

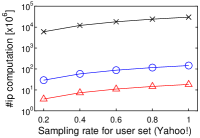

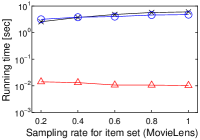

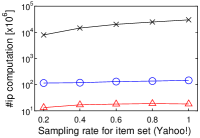

We next study the scalability to by using a single core. To this end, we randomly sampled user vectors in , and this sampling rate has . We set . Figure 3(h) shows the experimental result.

In a nutshell, we have a similar result to that in Figure 2. As increases, the running time of Simpfer linearly increases. This result is consistent with Theorem 3. Notice that the tendency of the running time of Simpfer follows that of the number of inner product computations. This phenomenon is also supported by Theorem 3, because the main bottleneck of Simpfer is Linear-scan.

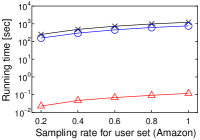

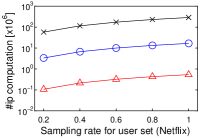

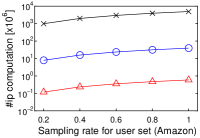

[Running time of Simpfer does not increases as the cardinality of item vector set increases]Running time of Simpfer does not increases as the cardinality of item vector set increases on MovieLens, Netflix, Amazon, and Yahoo!.

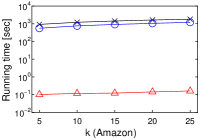

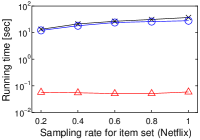

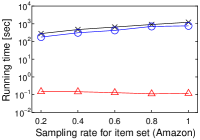

5.5. Result 4: Impact of Cardinality of

The scalability to by using a single core is also investigated. We randomly sampled user vectors in , as with the previous section. Figure 4 shows the experimental result where . Interestingly, we see that the result is different from that in Figure 3(h). The running time of Simpfer is almost stable for different . In this experiment, and were fixed, and recall that . From this observation, the stable performance is theoretically obtained. This scalability of Simpfer is an advantage over the other algorithms, since their running time increases as increases.

5.6. Result 5: Impact of Number of CPU Cores

We study the gain of multicore processing of Simpfer by setting . We depict the speedup ratios compared with the single-core case in Table 3.

[Multi-threading improves the efficiency of Simpfer]Multi-threading improves the efficiency of Simpfer on MovieLens, Netflix, Amazon, and Yahoo!. #cores MovieLens Netflix Amazon Yahoo! 4 3.23 2.99 3.41 2.22 8 4.80 4.27 6.61 2.83 12 5.84 5.40 7.76 2.88

We see that Simpfer receives the benefit of multicore processing, and its running time decreases as the number of available cores increases. We here explain why Simpfer cannot obtain speedup ratio , where is the number of available cores. Each core deals with different blocks, and the processing cost of a given block is different from those of the others. This is because it is unknown whether can be pruned by Lemma 3. Even if we magically know the cost, it is NP-hard to assign blocks so that each core has the same processing cost (Amagata and Hara, 2019; Korf, 2009). Therefore, perfect load-balancing is impossible in practice. The Yahoo! case in particular represents this phenomenon. Because many user vectors in Yahoo! have large norms, blocks often cannot be filtered by Lemma 3. This can be seen from the observation in Figure 3(h): the number of inner product computations on Yahoo! is larger than those on the other datasets. The costs of Corollaries 1–2 are data-dependent (i.e., they are not pre-known), rendering a fact that Yahoo! is a hard case for obtaining a high speedup ratio.

[Pre-processing time of Simpfer is reasonable]Pre-processing time of Simpfer is reasonable on MovieLens, Netflix, Amazon, and Yahoo!. MovieLens Netflix Amazon Yahoo! 1.02 4.08 15.10 15.55

5.7. Result 6: Pre-processing Time

Last, we report the pre-processing time of Simpfer. Table 4 shows the results. As Theorem 1 demonstrates, the pre-processing time increases as increases. We see that the pre-processing time is reasonable and much faster than the online (running) time of the baselines. For example, the running time of FEXIPRO on Amazon with is 1206 [sec]. When (i.e., ), the total time of pre-processing and online processing of Simpfer is [sec]. Therefore, even if is specified, re-building blocks then processing the query by Simpfer is much faster.

6. Conclusion

This paper introduced a new problem, reverse maximum inner product search (reverse MIPS). The reverse MIPS problem supports many applications, such as recommendation, advertisement, and market analysis. Because even state-of-the-art algorithms for MIPS cannot solve the reverse MIPS problem efficiently, we proposed Simpfer as an exact and efficient solution. Simpfer exploits several techniques to efficiently answer the decision version of the MIPS problem. Our theoretical analysis has demonstrated that Simpfer is always better than a solution that employs a state-of-the-art algorithm of MIPS. Besides, our experimental results on four real datasets show that Simpfer is at least two orders of magnitude faster than the MIPS-based solutions.

Acknowledgements.

This research is partially supported by JST PRESTO Grant Number JPMJPR1931, JSPS Grant-in-Aid for Scientific Research (A) Grant Number 18H04095, and JST CREST Grant Number JPMJCR21F2.References

- (1)

- Abuzaid et al. (2019) Firas Abuzaid, Geet Sethi, Peter Bailis, and Matei Zaharia. 2019. To Index or Not to Index: Optimizing Exact Maximum Inner Product Search. In ICDE. 1250–1261.

- Amagata and Hara (2019) Daichi Amagata and Takahiro Hara. 2019. Identifying the Most Interactive Object in Spatial Databases. In ICDE. 1286–1297.

- Amagata and Hara (2021) Daichi Amagata and Takahiro Hara. 2021. Reverse Maximum Inner Product Search: How to efficiently find users who would like to buy my item?. In RecSys. 273–281.

- Bachrach et al. (2014) Yoram Bachrach, Yehuda Finkelstein, Ran Gilad-Bachrach, Liran Katzir, Noam Koenigstein, Nir Nice, and Ulrich Paquet. 2014. Speeding up the xbox recommender system using a euclidean transformation for inner-product spaces. In RecSys. 257–264.

- Chen et al. (2020) Chong Chen, Min Zhang, Yongfeng Zhang, Weizhi Ma, Yiqun Liu, and Shaoping Ma. 2020. Efficient Heterogeneous Collaborative Filtering without Negative Sampling for Recommendation. In AAAI. 19–26.

- Chin et al. (2016) Wei-Sheng Chin, Bo-Wen Yuan, Meng-Yuan Yang, Yong Zhuang, Yu-Chin Juan, and Chih-Jen Lin. 2016. LIBMF: a library for parallel matrix factorization in shared-memory systems. The Journal of Machine Learning Research 17, 1 (2016), 2971–2975.

- Cohen and Lewis (1999) Edith Cohen and David D Lewis. 1999. Approximating matrix multiplication for pattern recognition tasks. Journal of Algorithms 30, 2 (1999), 211–252.

- Cremonesi et al. (2010) Paolo Cremonesi, Yehuda Koren, and Roberto Turrin. 2010. Performance of recommender algorithms on top-n recommendation tasks. In RecSys. 39–46.

- Curtin et al. (2013) Ryan R Curtin, Parikshit Ram, and Alexander G Gray. 2013. Fast exact max-kernel search. In SDM. 1–9.

- Dai et al. (2020) Xinyan Dai, Xiao Yan, Kelvin KW Ng, Jiu Liu, and James Cheng. 2020. Norm-Explicit Quantization: Improving Vector Quantization for Maximum Inner Product Search. In AAAI. 51–58.

- Ding and Suel (2011) Shuai Ding and Torsten Suel. 2011. Faster top-k document retrieval using block-max indexes. In SIGIR. 993–1002.

- Fontoura et al. (2011) Marcus Fontoura, Vanja Josifovski, Jinhui Liu, Srihari Venkatesan, Xiangfei Zhu, and Jason Zien. 2011. Evaluation strategies for top-k queries over memory-resident inverted indexes. PVLDB 4, 12 (2011), 1213–1224.

- Fraccaro et al. (2016) Marco Fraccaro, Ulrich Paquet, and Ole Winther. 2016. Indexable probabilistic matrix factorization for maximum inner product search. In AAAI. 1554–1560.

- Guo et al. (2020) Ruiqi Guo, Philip Sun, Erik Lindgren, Quan Geng, David Simcha, Felix Chern, and Sanjiv Kumar. 2020. Accelerating large-scale inference with anisotropic vector quantization. In ICML. 3887–3896.

- Huang et al. (2018) Qiang Huang, Guihong Ma, Jianlin Feng, Qiong Fang, and Anthony KH Tung. 2018. Accurate and fast asymmetric locality-sensitive hashing scheme for maximum inner product search. In KDD. 1561–1570.

- Jiang et al. (2020) Jyun-Yu Jiang, Patrick H Chen, Cho-Jui Hsieh, and Wei Wang. 2020. Clustering and Constructing User Coresets to Accelerate Large-scale Top-K Recommender Systems. In The Web Conference. 2177–2187.

- Koenigstein et al. (2012) Noam Koenigstein, Parikshit Ram, and Yuval Shavitt. 2012. Efficient retrieval of recommendations in a matrix factorization framework. In CIKM. 535–544.

- Korf (2009) Richard E Korf. 2009. Multi-Way Number Partitioning.. In IJCAI. 538–543.

- Li et al. (2017) Hui Li, Tsz Nam Chan, Man Lung Yiu, and Nikos Mamoulis. 2017. FEXIPRO: fast and exact inner product retrieval in recommender systems. In SIGMOD. 835–850.

- Li et al. (2020) Wen Li, Ying Zhang, Yifang Sun, Wei Wang, Mingjie Li, Wenjie Zhang, and Xuemin Lin. 2020. Approximate nearest neighbor search on high dimensional data—experiments, analyses, and improvement. IEEE Transactions on Knowledge and Data Engineering 32, 8 (2020), 1475–1488.

- Liu et al. (2020) Jie Liu, Xiao Yan, Xinyan Dai, Zhirong Li, James Cheng, and Ming-Chang Yang. 2020. Understanding and Improving Proximity Graph Based Maximum Inner Product Search. In AAAI. 139–146.

- Liu et al. (2019) Rui Liu, Tianyi Wu, and Barzan Mozafari. 2019. A Bandit Approach to Maximum Inner Product Search. In AAAI. 4376–4383.

- Morozov and Babenko (2018) Stanislav Morozov and Artem Babenko. 2018. Non-metric similarity graphs for maximum inner product search. In NeurIPS. 4721–4730.

- Neyshabur and Srebro (2015) Behnam Neyshabur and Nathan Srebro. 2015. On Symmetric and Asymmetric LSHs for Inner Product Search. In ICML. 1926–1934.

- Ram and Gray (2012) Parikshit Ram and Alexander G Gray. 2012. Maximum inner-product search using cone trees. In KDD. 931–939.

- Rendle et al. (2020) Steffen Rendle, Walid Krichene, Li Zhang, and John Anderson. 2020. Neural Collaborative Filtering vs. Matrix Factorization Revisited. In RecSys. 240–248.

- Shrivastava and Li (2014) Anshumali Shrivastava and Ping Li. 2014. Asymmetric LSH (ALSH) for sublinear time maximum inner product search (MIPS). In NIPS. 2321–2329.

- Teflioudi and Gemulla (2016) Christina Teflioudi and Rainer Gemulla. 2016. Exact and approximate maximum inner product search with lemp. ACM Transactions on Database Systems 42, 1 (2016), 1–49.

- Teflioudi et al. (2015) Christina Teflioudi, Rainer Gemulla, and Olga Mykytiuk. 2015. Lemp: Fast retrieval of large entries in a matrix product. In SIGMOD. 107–122.

- Van den Oord et al. (2013) Aaron Van den Oord, Sander Dieleman, and Benjamin Schrauwen. 2013. Deep content-based music recommendation. In NIPS. 2643–2651.

- Vlachou et al. (2010) Akrivi Vlachou, Christos Doulkeridis, Yannis Kotidis, and Kjetil Nørvåg. 2010. Reverse top-k queries. In ICDE. 365–376.

- Vlachou et al. (2011) Akrivi Vlachou, Christos Doulkeridis, Yannis Kotidis, and Kjetil Norvag. 2011. Monochromatic and bichromatic reverse top-k queries. IEEE Transactions on Knowledge and Data Engineering 23, 8 (2011), 1215–1229.

- Yan et al. (2018) Xiao Yan, Jinfeng Li, Xinyan Dai, Hongzhi Chen, and James Cheng. 2018. Norm-ranging lsh for maximum inner product search. In NeurIPS. 2952–2961.

- Yang et al. (2015) Shiyu Yang, Muhammad Aamir Cheema, Xuemin Lin, and Wei Wang. 2015. Reverse k nearest neighbors query processing: experiments and analysis. PVLDB 8, 5 (2015), 605–616.

- Yu et al. (2017) Hsiang-Fu Yu, Cho-Jui Hsieh, Qi Lei, and Inderjit S Dhillon. 2017. A greedy approach for budgeted maximum inner product search. In NIPS. 5453–5462.

- Zamani and Croft (2020) Hamed Zamani and W Bruce Croft. 2020. Learning a Joint Search and Recommendation Model from User-Item Interactions. In WSDM. 717–725.

- Zhang et al. (2014) Zhao Zhang, Cheqing Jin, and Qiangqiang Kang. 2014. Reverse k-ranks query. PVLDB 7, 10 (2014), 785–796.

- Zhao et al. (2020) Xing Zhao, Ziwei Zhu, Yin Zhang, and James Caverlee. 2020. Improving the Estimation of Tail Ratings in Recommender System with Multi-Latent Representations. In WSDM. 762–770.

- Zhou et al. (2019) Zhixin Zhou, Shulong Tan, Zhaozhuo Xu, and Ping Li. 2019. Möbius Transformation for Fast Inner Product Search on Graph. In NeurIPS. 8216–8227.