Revisiting Kinetic Monte Carlo Algorithms for Time-dependent Processes: from open-loop control to feedback control

Abstract

Simulating stochastic systems with feedback control is challenging due to the complex interplay between the system’s dynamics and the feedback-dependent control protocols. We present a single-step-trajectory probability analysis to time-dependent stochastic systems. Based on this analysis, we revisit several time-dependent kinetic Monte Carlo (KMC) algorithms designed for systems under open-loop-control protocols. Our analysis provides an unified alternative proof to these algorithms, summarized into a pedagogical tutorial. Moreover, with the trajectory probability analysis, we present a novel feedback-controlled KMC algorithm that accurately captures the dynamics systems controlled by external signal based on measurements of the system’s state. Our method correctly captures the system dynamics and avoids the artificial Zeno effect that arises from incorrectly applying the direct Gillespie algorithm to feedback-controlled systems. This work provides a unified perspective on existing open-loop-control KMC algorithms and also offers a powerful and accurate tool for simulating stochastic systems with feedback control.

I Introduction

At the molecular scale, biological processes are complex and stochastic.Shahrezaei and Swain (2008); Levine and Hwa (2007) Currently, numerical simulations contribute critical insights into the stochastic dynamics of the systems.Székely Jr and Burrage (2014) One example approach involves solving for the master equation,Earnshaw and Keener (2010); Lee and Othmer (2010); Meister et al. (2014) constructed either through molecular dynamics simulations (e.g., via Markov state model approaches Anderson and Kurtz (2011); Bowman, Pande, and Noé (2013); Husic and Pande (2018)) or experimental results (e.g., kinetic proofreadingNelson and Nelson (2004); Alon (2019)). In this case, if the system is reduced to a few state Markov network, one can numerically solve for the master equation, given the initial probability distribution and the control protocol. Another example, when the system’s state space is too large, is to utilize the Gillespie AlgorithmGillespie (2001) or kinetic Monte Carlo (KMC) simulation to obtain an ensemble of the stochastic trajectories of the process.

Numerous biological processes also respond either to external control or other coupled processes.Iglesias and Ingalls (2010) These stochastic systems are more complex than stationary systems, as their kinetic rates changes over time via open-loop (non-feedback) or closed-loop (feedback) control. Examples of feedback controlled systems span multiple length- and time-scales, from metabolic regulationLorendeau et al. (2015); Atkinson (1965); Goyal et al. (2010) to bacterial chemotaxisYi et al. (2000a); Hamadeh et al. (2011); Yi et al. (2000b) to cell-cycle regulation.Murray (1992); Domian, Reisenauer, and Shapiro (1999); Cross and Tinkelenberg (1991); Li and Murray (1991) Understanding feedback controlled systems is essential in unraveling their dynamics and optimizing their performance. However, it remains challenging to simulate feedback controlled stochastic systems due to the presence of in-time feedback.

Gillespie first proposed a direct KMC method that allows one to simulate the stochastic dynamics for a system that evolves under a constant set of rates.Gillespie (1977) Unfortunately, this method cannot be directly extended to simulate time-inhomogeneous systems, where the system’s dynamical rates changes over time. Naively implementing the direct KMC simulation by discretizing the simulation into a sequence of constant rate windows may lead to an incorrect underestimation of the transition frequency of the systems, which resembles the Zeno effect. In quantum mechanics, the Zeno effect describes the system dynamics being trapped into a single state due to frequent repetitive projective measurements.Möbus and Wolf (2019); Itano et al. (1990); Streed et al. (2006)

In the past few decades, open-loop-control KMC algorithm has been proposed to simulate various time-inhomogeneous biological systems whose kinetic rates changes over time due to an open-loop (non-feedback) control protocol. These works were presented for various biological processes including ion channels in neurons,Anderson, Ermentrout, and Thomas (2015); Ding et al. (2016); Chow and White (1996) RNA folding,Li et al. (2009) and chemical reactions.Anderson (2007); Anderson and Kurtz (2011) In 2007, Anderson proposed a modified next-reaction simulation method, where the transition rate of one possible reaction could change over time due to the occurrence of another possible reaction that takes place in a shorter time.Anderson (2007) In this case, the simulation addresses the changes of the reaction rates by providing a modification to the generation of the waiting time of reactions. Later, a general class of methodsAnderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009) were formulated for simulating systems with time-dependent rates. These works address systems that fall into the category of open-loop controlled stochastic systems,Bechhoefer (2021) where the system’s transition rates are controlled by external signal as a function of time according to a given protocol. However, still missing is a general theory and algorithm for simulating feedback controlled stochastic systems, where the protocol is updated at each scheduled measurement time based on the instantaneous measurement outcome.Franklin et al. (2002) The main difficulty in developing a KMC algorithm for feedback controlled systems is that the control protocol at future times is unknown, as it depends on the results of the future measurements of the system.

In this work, we first provide an alternative derivation for the open-loop-controlled KMC, which recapitulates the methods developed in a number of existing references.Anderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009) This alternative derivation, based on single-trajectory probability analysis, lays the foundation to the development of a novel algorithm able to simulate systems under in-time feedback control. The validity and the implementation of the algorithm is demonstrated by a Maxwell’s feedback control refrigerator.

II Theory and Algorithms

II.1 Theory: Single-step trajectory probability

This article begins by revisiting the theoretical foundation of the open-loop-control KMC method by providing a pedagogical proof that recapitulates various versions of these algorithms.Anderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009) The theory is constructed by comparing the true probability of single-step trajectories and the simulated trajectory probability generated within one step of a modified KMC algorithm. This trajectory analysis not only provides an alternative proof for open-loop-control KMC methods described previouslyAnderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009) but also provides the foundation from which a closed-loop-control KMC method can be derived.

Consider a continuous-time discrete-state Markov process that is subject to an external control protocol as an arbitrary function of time . The system contains a discrete number of states and is initialized with probability distribution . The dynamics of is characterized by the master equation

| (1) |

where matrix element is the transient transition probability rates from to given the control parameter at time . The diagonal element satisfying is the negative transient escape rate from state at time . The master equation evolution of can also be expressed in terms of the dynamics of -th state’s probability:

| (2) |

For an open-loop controlled system, the control protocol is externally determined. In contrast, for a closed-loop controlled system (e.g., one with a series of feedback measurements), the specific form of may depend on the system’s state.

Each individual step of a KMC simulation is concerned with only generating the next stochastic event (i.e., evolving to state state at time from the previous state and time ). This event can be expressed as a single-step trajectory given the previous step. The conditional probability for this single-step trajectory can be written as

| (3) |

which is also a joint probability distribution of the next event’s time and state . This formula is true for any Markov dynamics regardless of whether the dynamical rates are constants or the rates change over time. We show below that, in the case of time-independent or time-dependent rates, the direct Gillespie Algorithm or an open-loop-control KMC algorithm, respectively, can correctly capture the dynamics.

Time-independent rates: In the case where the dynamics of the system are constant over time 111Here constant rate over time is used to describe the time period between two consecutive stochastic events. Naturally, when the system state changes after the next event, the possible transitions and their rates are naturally updated., the direct Gillespie AlgorithmGillespie (1977) is sufficient. From a probability theory perspective, the single-step transition probability of the next state and next time can be factored into the product of two statistically independent distributions and can be generated separately from their own probability distributions and .

| (4) | ||||

In this case, the direct KMC Gillespie (1977) is carried out by generating the next transition time according to and generating the next state according to the state probability .

Time-dependent rates: If the rates change over time, the direct KMC approachGillespie (1977) fails to generate the correct dynamics since the next event’s time and state are statistically correlated. In this case, we can still factorize the joint probability of and , as the product of two probability distributions:

| (5) |

where the marginal distribution of the transition time (given the previous and ) is denoted by

| (6) | ||||

and the conditional probability distribution of next state conditioned on the transition time being is

| (7) |

In this case, a modified KMC algorithm should be capable of faithfully generating the stochastic trajectory. In one simulation step of the modified KMC algorithm, one first generates the next transition’s time according to Eq. 6 and then generates the next state according to Eq. 7 given the proposed time . One can verify that the generated pair of and follows the true single-step trajectory probability distribution (Eq. 3). This analysis is applicable to arbitrary time-dependent processes, where the system’s rates change over time either due to (1) open-loop control according to a scheduled protocolAnderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009) or (2) closed-loop control with a series of feedback measurements.

II.2 Review of open-loop-control KMC: General algorithm

In this section, we review the open-loop-control KMC methods previously described in Anderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009). This review also serves as an alternative derivation and justification of these algorithms in the language of single-step trajectory generation introduced in Section II.1. The representation of the single-step trajectory probability as the product of a marginal probability and a conditional probability, Eq. 5, allows one to separately generate the next transition’s time and state , even when the time-dependence of the system’s rate creates statistical correlation between and .

Generating transition time : The random generation of transition time according to the marginal probability density in Eq. 6 can be realized by first obtaining the cumulative density function

| (8) |

and then generating a random number following a uniform distribution between and , and finally solving for according to the following equation:

| (9) |

Or equivalently, for a random number also following a uniform distribution between and , one can solve as the root of the following equation

| (10) |

where

| (11) |

and is the negative escape rate from state at arbitrary time .

Generating the next state : Once the transition time is generated, the selection of the next state is straightforward – the new state’s probability is proportional to their transient transition rates evaluated at the chosen transition time , as defined in Eq. 7. This resembles the state generation in the direct KMC method, with the only difference being the probability to jump to state here is proportional to the transient rate evaluated at the chosen time .

To summarize, one simulation step of the open-loop-control KMC algorithm can be enumerated in Section II.2. Furthermore, by including appropriate approximations (see Section II.3), one can reproduce various open-loop-control KMC algorithms previously described in Anderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009).

Open-loop-control KMC

II.3 Piece-wise approximations to open-loop control

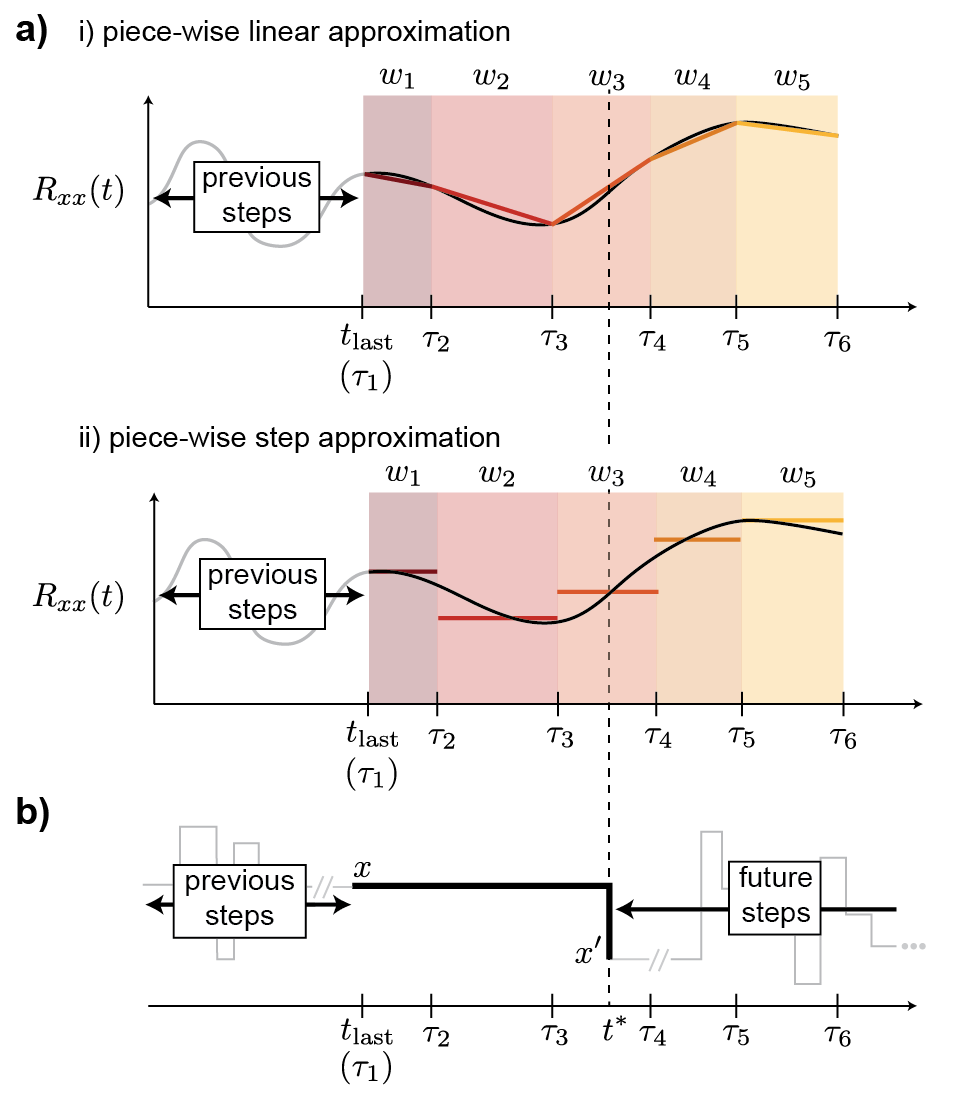

In practice, solving for the next event’s time by a given random number and the equation Eq. 10 for arbitrarily complex time-dependent rates may be challenging. We now briefly review a few ways to approximately solve for this integral equation. Various methods have been proposed in simulating specific biological processes.Anderson, Ermentrout, and Thomas (2015); Chow and White (1996); Ding et al. (2016); Li et al. (2009) For illustrative purposes, we demonstrate two approximations and sketch the corresponding algorithms for: (i) piece-wise linear function approximation and (ii) piece-wise step function approximation for the escape rate .

Here we illustrate the algorithms by focusing on one step of the simulation. Let us denote the previous state as and the previous time ; then the algorithm’s task is to generate the next event’s time and the next state . As illustrated in Fig. 1a, the negative escape rate from the state , (shown as black curve) can be approximated by (i) a consecutive set of straight line segments (top panel), or (ii) approximated by a set of step-wise step functions (bottom panel).

(i) Piece-wise linear escape rates. Let us denote each window by a time interval and also set the beginning of the first window at . The negative escape rate from state at each window’s left edge is denoted by . Under this assumption, the numerical integration involved in Eqs. 10 and 11 can be considered as:

| (12) |

for . Here the integral for each window starting from is defined as

| (13) |

Here, is the integrated negative escape rate within the window defined from to . Successive windows starting from will be evaluated until the -th window where the transition time is chosen, which can be identified by . Given Eqs. 12 and 13, we can solve for the according to Eq. 10 in sequence, starting from and continuing until the appropriate can be drawn from the th window according to:222In practical implementation of the numerical code, when , the quadratic equation reduces to the linear, which can be solved from Eq. 15.

| (14) |

(ii) Piece-wise step-function escape rates. When the time dependent escape rate from state is considered as a piece-wise step function, where for each window , we can determine the next event’s time by solving for a linear equation

| (15) |

where is a random number uniformly distributed between and and the integrated negative escape rate for each window is denoted by

| (16) |

By solving for the above equations starting from the first window, one can find the next event’s time as occurring at -th window. We demonstrate this method in a 1D lattice diffusion problem as shown in the Appendix.

II.4 Closed-loop feedback controlled systems: General algorithm

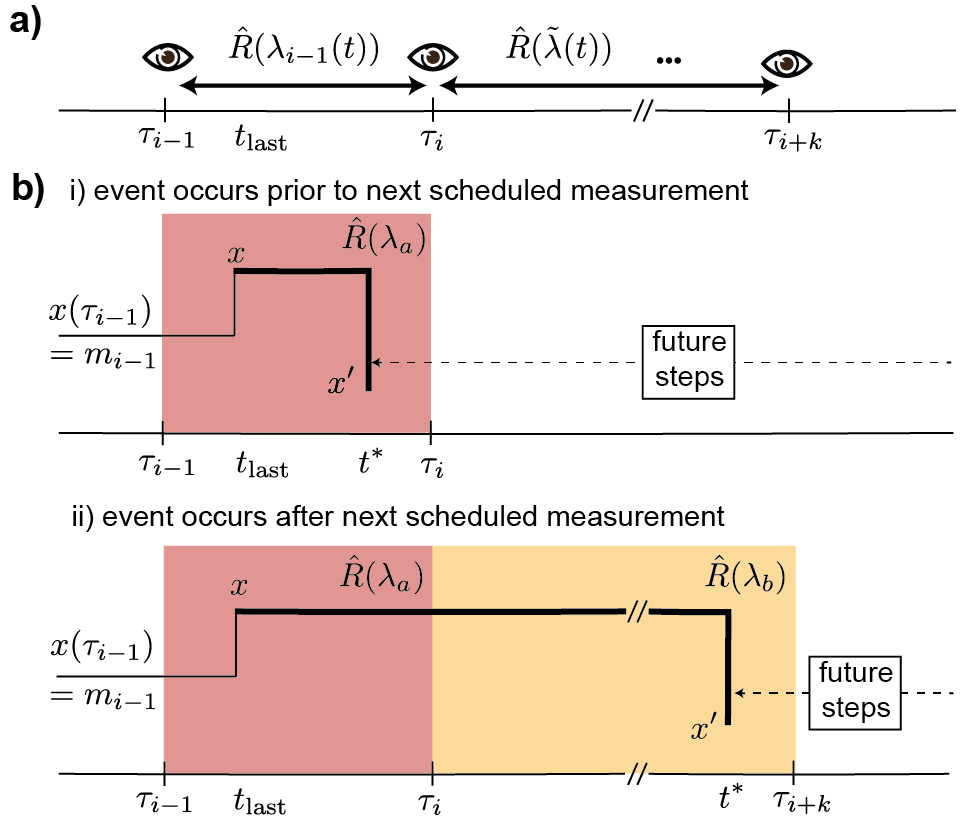

Consider a feedback control scheme where a scheduled series of measurements will be taken at a set of observation time points (see Fig. 2a). At each observation time , the measurement outcome is determined by the transient state of the stochastic system: .333Here the measurement of the system may not be as detailed as the specific state that the system is in. It is possible that a subset of states will give a same measurement outcome (i.e., coarse-grained measurement). The method can be applied to both cases. Immediately, the measurement outcome updates the system’s kinetic rates via a rapid feedback control mechanism. Let us denote the control signal as an explicit function of time , which can be represented by a piece-wise function where each piece is defined for the time between two consecutive measurements and is determined by all previous measurement outcomes. The actual protocol can be piece-wise constant (see illustrative example in Sections II.5 and III.1), or in more general cases, could be a solution of an ODE parameterized by the measurement outcomes at each feedback measurement. The general algorithm proposed here is applicable to arbitrary functional forms of the control protocol.

The key to correctly simulate the feedback controlled system is to recognize that, when carrying out each step of a KMC simulation, one only needs to predict the next transition event given the present state and the transition rates set by the control protocol. Now consider a system placed at state and time set by the previous step of simulation. Without losing generality, let us consider the current time as , so that the current system has gone through the th measurement and the very next feedback measurement (the th measurement) will occur at a future time .

To carry out the present simulation step and predict the next transition time and next state , there are only two possible scenarios to consider, which are separated by a milestone time – the immediate next measurement time, . The two scenarios are the following: (i) the next event takes place at a time before the milestone time (, Fig. 2b[i]) or (ii) the next event takes place at any time after the milestone time (, Fig. 2b[ii]). The milestone time is highlighted by the color scheme transition from red to yellow in Fig. 2b[ii]. This color scheme is also used in Eq. 17 and Eq. 18.

In the first case, one does not need to consider the control protocol after the next measurement and only needs to consider the protocol before . For this case, the control protocol for any is fully determined without any ambiguity, given the historical trajectory of the simulation. This pre-milestone part of control protocol before can be generally represented by the following function and is fully determined:

| (17) |

where denotes the control protocol for time window , and its functional form can be impacted by the all previous measurement results through any prescribed feedback mechanisms. Here, must be smaller than .

In the second case, we need to treat the system with a step-wise protocol where the system still evolves according to the pre-milestone protocol (Eq. 17) up to the milestone time , and then the protocol is updated to a future post-milestone protocol. It may appear that the post-milestone protocols (for ) cannot be fully determined due to the ambiguity of the future system states. However, as we have argued, each step of the KMC simulation involves the generation of a single step trajectory that starts at , remains at state and only jumps to an unknown state at a future unknown transition time . Thus, for any unknown transition time larger than the milestone time, each futuristic feedback measurement according to the trajectory under consideration will return a measurement outcome based on the system state . In other words, the post-milestone protocol for any future is denoted by:

| (18) |

which is determined without ambiguity because all future measurements must detect the system at the current state (i.e., ). In this argument, all futuristic protocols are then determined by the combination of the historical measurement outcomes and the asserted futuristic measurement outcomes, .

Closed-loop-control KMC

In summary, through our single-step trajectory probability argument introduced in Section II.1, we are able to assert a fully determined protocol for a feedback control system. This determined protocol combines Eq. 17, the pre-milestone protocol for , which is fully determined by historical feedback measurements, and Eq. 18, the futuristic post-milestone protocol for , which appears to be unknown due to the future feedback measurement outcomes. As a result, even for closed-loop controlled systems, one can utilize an open-loop-control KMC algorithmAnderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009) reviewed in Section II.2 to generate the next transition event according to the correct probability distribution Eqs. 5, 6 and 7. This general algorithm is shown in Section II.4. For illustrative purpose, the following demonstrates a simple application of the proposed feedback-control KMC algorithm.

II.5 Application: Piece-wise-constant feedback control

Here, we demonstrate the proposed new algorithm with a general yet simple scenario. In this example, the rates within each measurement window remain constant and are instantaneously updated based on each measurement. For more complicated feedback control regimes (e.g., the control protocol evolves deterministically in time according to differential equations parameterized by historical measurement outcomes), one can simply extend this algorithm in accordance with general feedback controlled KMC algorithm described in Section II.4.

We here describe a model algorithm where the control parameter between two consecutive measurement events are always maintained at a constant value. In this case, both the determined protocol and the projected protocols are both piece-wise constants. Therefore, the escape rate from state is a piece-wise constant function with only two pieces (see Fig. 2b):

| (19) |

In this case, the simulation chooses the next event’s occurrence time by solving for the solution of the piece-wise function:

| (20) |

where is a uniformly distributed random number between and , and the of the equation is defined by Eq. 11. There are two types of possible simulation outcomes of the single-step trajectories. At possibility (top panel of Fig. 2b), when the next event occurs before the next measurement, , the dynamical rates remains unchanged throughout the time, and the rates was determined by the last measurement outcome (see red shaded regions in Fig. 2b). At possibility (bottom panel of Fig. 2b), when the next event occurs after more future measurements , for , the dynamics are initially governed by the old rates before the -th measurement, and then by a new rate after (see yellow shaded regions in Fig. 2b). We demonstrate this method in a two-state refrigerator model in Section III.1.

III Results and Discussion

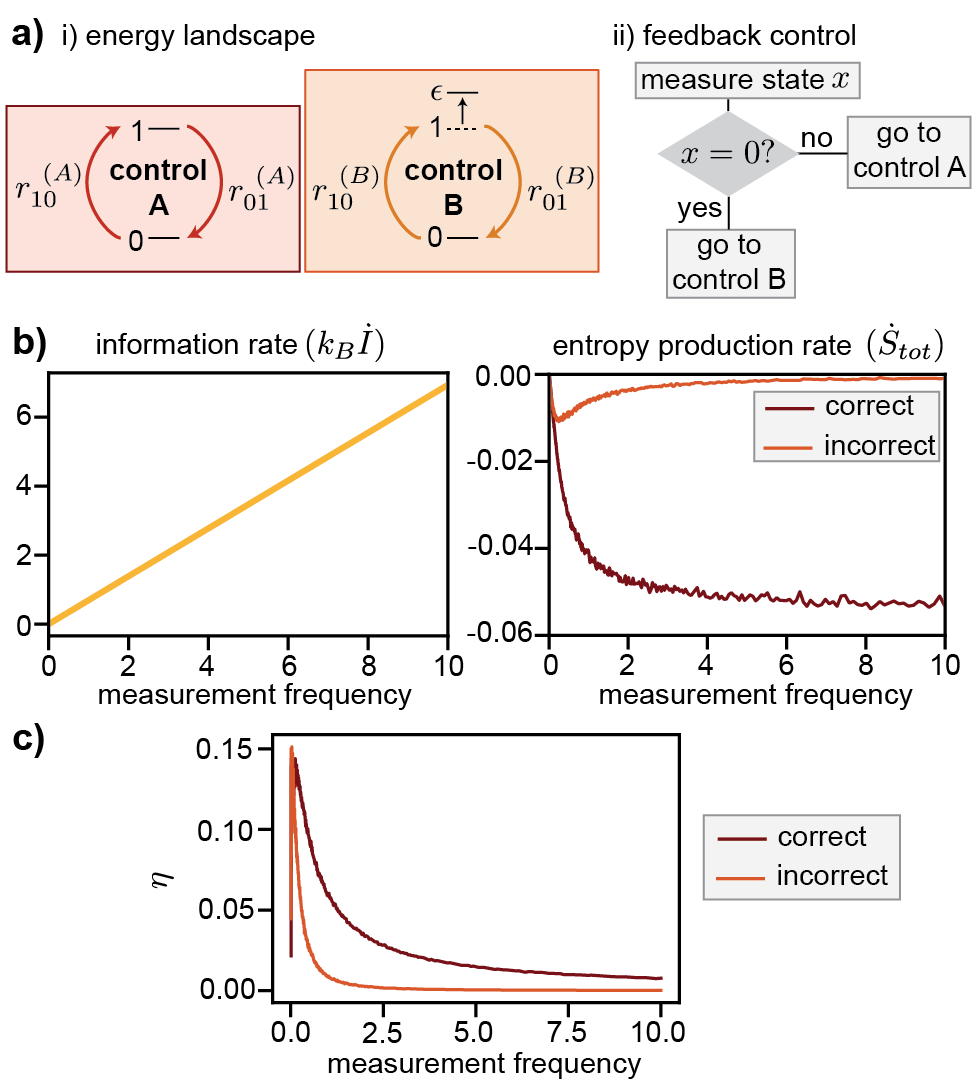

III.1 Demonstration: 2-state feedback control Maxwell’s Refrigerator

To demonstrate the new feedback controlled KMC algorithm proposed in Section II.4, we utilize an example feedback control system whose behavior can be solved analytically. Feedback control systems have been extensively studies in various stochastic systemsBechhoefer (2021); Annby-Andersson et al. (2022); Horowitz and Jacobs (2014); Horowitz and Parrondo (2011a, b); Horowitz and Vaikuntanathan (2010); Bhattacharyya and Jarzynski (2022); Sagawa and Ueda (2012a, 2010, b); Parrondo, Horowitz, and Sagawa (2015). Here we choose to simulate the stochastic trajectories of a 2-state refrigerator inspired by referenceMandal, Quan, and Jarzynski (2013).

Consider a 2-level system coupled to a heat bath. The ground state level’s energy is and the excited level’s energy is controlled externally by a binary toggle switch. At control the excited state energy is ; at control condition , . The feedback control is facilitated by a periodic sequence of instantaneous measurements to the system’s state at times , where is the measurement frequency. If the measurement detects the system at the ground state “0”, the control is switched to , and if excited state “1”, control is (see Fig. 3a).

This system falls into the category of the step-wise constant feedback control scenario (discussed in II.5). In this case, the system evolves either according to the transition rates set by control condition or by condition . At each instantaneous time, the transition rates takes the Arrhenius form , where we have taken the unit such that the inverse temperature . In our demonstration, we set the barrier for the transition to , and set the energies according to , and . Under this feedback control, it was shown that the system behaves as a refrigerator, constantly drawing energy from the heat bath and performs work against the external control. This refrigerator does not require energy expenditure but rather operates at the cost of information obtained via the measurements. For a comprehensive overview of the definition of heat and work in the stochastic systems, refer to the book Sekimoto . For the connection between information and thermodynamic entropy, a few representative works can be found in Penrose (2005); Bennett (1973); Landauer (1961); Mandal and Jarzynski (2012); Zurek (1989); Deffner (2013); Strasberg et al. (2013); Sagawa and Ueda (2012a, 2010, b); Parrondo, Horowitz, and Sagawa (2015); Lu, Mandal, and Jarzynski (2014).

At each stochastic transition from the ground state to the excited state, the system draws from the heat bath energy (for control ) or (for control ). At the transition from excited state to the ground state, the system’s heat gain is (for control ) and (for control ). At any schedule measurement causing a control switch from to (the system is at the ground state), there is no work or heat exchange (, ). At the scheduled measurement causing a control switch from to , the system performs positive work to the controller , and there is no heat exchange within the instantaneous measurement time. By counting the frequencies of the stochastic transitions of system ( to or to ) under different control conditions and respectively, one can calculate the average heat rate (the average heat drawn by the system per unit time).

By implementing the feedback controlled KMC algorithm, we are able to obtain an ensemble of 100 statistically independent stochastic trajectories from such a feedback controlled refrigerator. Each trajectory is of time length 500 and we exclude the initial relaxation period of length 100 from statistics. From the trajectory, one can compute the bath’s entropy change rate, which is equal to the total entropy change rate of the system and the bath:

| (21) |

where the net transition current is defined by the difference between the detailed currents:

| (22) | ||||

| (23) |

Furthermore, since each measurement is binary, one can calculate the information rate simply by multiplying the information per measurement with the measurement frequency: . Finally, we also computed the efficiency of the refrigerator as the entropy decrease rate over the information gain rate:

| (24) |

which characterizes the system’s ability to decrease entropy at the expenditure of information change. Notice that according to the generalized second law of thermodynamics ,Sagawa and Ueda (2012a, b, 2010); Parrondo, Horowitz, and Sagawa (2015), the efficiency must be lower than or equal to 1. The simulation results for a range of measurement frequencies are shown in Fig. 3b-c.

One can verify the proposed feedback controlled KMC by comparing its result to the analytical solution of the system. In the limit of the infinitely high frequency measurements, , one can obtain an effective constant rate matrix of the system and analytically obtain its average heat rate and entropy change rate. Due to infinitely frequent measurements, the system’s transition rate from state to is always equal to and the system’s transition rate from state to is always equal to . As a result, the effective rate matrix under infinite frequency feedback control is

| (25) |

The steady state probability for this system can be written as . This allows us to compute the detailed transition current from 0 to 1 as , and current from 1 to 0 as . Due to the frequent measurement, each transition from 0 to 1 must occur under control , which takes energy from the bath, and each transition from 1 to 0 must occurs under control , which deposits energy into the bath. As a result, for a system at the steady state (no system entropy change), the total entropy change of the system and the bath is purely the bath entropy change with the rate

| (26) |

where the detailed steady-state currents are

| (27) | ||||

| (28) |

From Fig. 3 one can verify that the entropy change rate at high frequency limit obtained from our proposed feedback controlled KMC algorithm matches well with the analytical result in Eq. 26

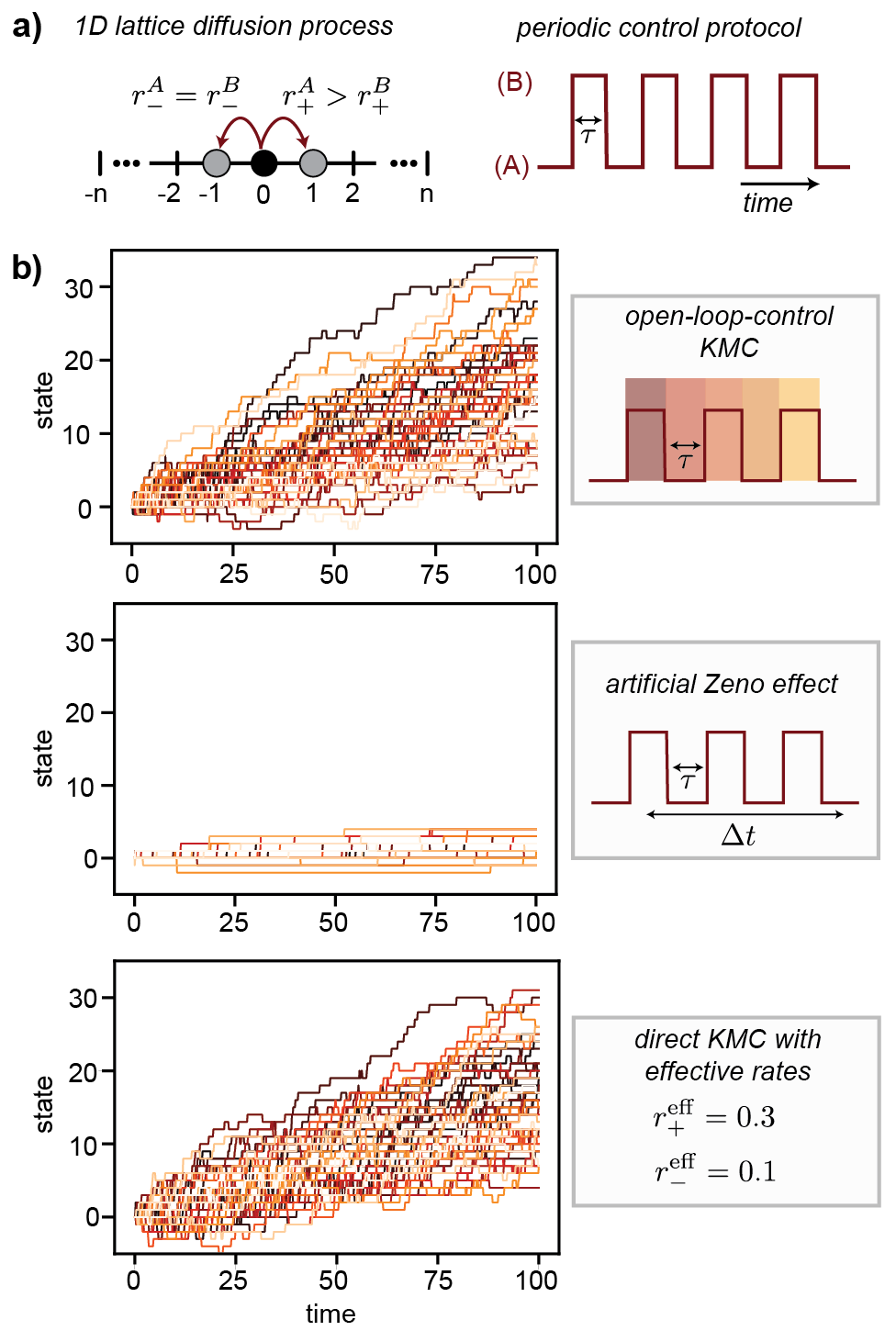

III.2 Discussion: Avoiding artificial Zeno effect

This work proposed a new algorithm to simulate systems under feedback control. Here we illustrate that for systems with time-dependent control (both open-loop and closed-loop), the direct implementation of the original Gillespie algorithmGillespie (2001) can lead to erroneous results, where the system’s dynamics appears to be immobilized due to frequent changes of the external control parameter or frequent feedback controls. We name this erroneous results the artificial Zeno effect. This effect appears to resemble the quantum Zeno effectFischer, Gutiérrez-Medina, and Raizen (2001) but is fundamentally different – the former is an artificial numerical error, and the latter is a true physical phenomenon.

In the quantum Zeno effectFischer, Gutiérrez-Medina, and Raizen (2001) frequent projective measurements may reset the system’s wave function into an eigenstate and, in the limit of infinitely high measurement frequency, the system’s wave function is frozen at the eigenstate and the system appears to be non-evolving. In contrast, classical stochastic systems, where the measurement does not directly impact the system’s state444Under feedback control measurements, the system’s kinetic rates can be updated after the measurement, but the system’s state is not directly altered by the action of measurement., should not have any Zeno effect. The artificial Zeno effect is a numerical error only when one naively implements the direct KMC algorithm in a piece-wise manner for time-dependent systems.

For illustrative purpose we first demonstrate the erroneous artificial Zeno effect in the feedback control system discussed in Fig. 3. In the Appendix, this discussion has been extended to an open-loop controlled time-dependent systems (Fig. 4).

For the feedback controlled system, one naive way to implement the original Gillespie algorithmGillespie (2001) is to reset the KMC simulation at each measurement time point. Specifically, one may perform a constant-rate KMC simulation for each measurement time window. Consider a system at state at time . If the direct KMC approach proposes the next transition’s time to be , one simply evolves the system time to while maintaining the system state to be and then starts another step of the direct KMC simulation, with the initial state and initial time . This approach appears to be valid, since the predicted time is indeed longer than the time of the next measurement, and the system should not change until after the next measurement. However, this direct implementation of the KMC method leads to wrong results that can become obvious by considering the infinitely frequent measurement limit, where the time till the next measurement approaches and the chance that the direct KMC proposes a before the next measurement becomes zero. Thus the simulation is frozen to state . This artificial Zeno effect becomes more prominent for higher frequency measurements.

To illustrate this mistake, we implement the above direct KMC simulation for the Maxwell’s refrigerator discussed in Section III.1 and show that the artificial Zeno effect significantly underestimates the transition events of the systems dynamics, resulting in an underestimation of the refrigerator’s entropy decreasing rate and thermodynamic efficiency (see Fig. 3bc).

For a feedback controlled system where one measures and acquires system’s states at a scheduled sequence of times, plainly restarting the clock of the KMC simulation from the measured state to simulate the dynamics of the system will lead to an artificial Zeno effect. The closed-loop-control algorithm demonstrated here avoids this pitfall and preserves the correct dynamics. In the Appendix, we show that similar mistakes may occur in open-loop-control systems only if one overlooks previously introduced algorithmsAnderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009)

IV Conclusion

In conclusion, we here propose a new method to simulate stochastic trajectories of systems experiencing feedback control. This new method is derived based on a general single-step trajectory probability analysis. This analysis can be used to derive and recapitulate several previously proposed open-loop-control KMC algorithms for non-feedback control systems whose rates change in time under a given protocol. Then, we demonstrate that feedback controlled systems can be simulated by combining pre-milestone control protocol and a post-milestone control protocol. This allows us to perform a time-dependent KMC simulation for feedback controlled systems with a modified open-loop-control KMC algorithm. We validate our claims using simulation of a two-state feedback control Maxwell’s refrigerator. Our correct algorithm provides the true dynamics of the system. The simulation results are compared with the analytical results obtained in the infinitely frequent controlled scenarios, and the numerical results are in perfect agreement with the analytical result. Furthermore, the new modified KMC algorithms avoid the artificial Zeno effect, where a wrongly implementation of the direct KMC may lead to an erroneous underestimation of the frequency of transitions.

The algorithms described herein can find broad applicability in a number of fields, particularly in biological systems which experience rapid fluctuations in local environments and frequent feedback control via interaction with surroundings. Multiple processes – such as neurophysiology,Seidler, Noll, and Thiers (2004) transcriptional regulation,Henninger et al. (2021) circadian clocks,Brown and Doyle III (2020) and metabolic regulationChaves and Oyarzún (2019) – are examples of such systems beyond the scope of analytical solutions. A KMC algorithm to treat these feedback controlled systems would allow the relevant dynamics to be sampled and captured. Even though the example provided in Fig. 3 is a binary state model which is analytically solvable, the algorithm can be directly applied to complex systems that are not analytically solvable (see Section II.5). If the system’s control protocol follows complex rules, one can still apply the general algorithm by making necessary approximations on the control protocol similar to those sketched in Section II.3. While rapid feedback control or complex dynamics may pose challenges by increasing the computational cost of these simulations, our derivation provides a flexible path to tackling these problems. We believe that the relevant non-equilibrium behavior and thermodynamic quantities in a wide variety of feedback controlled chemical and biological system, such as reaction turnover rates, binding affinity, or assembly properties, can be accurately captured by using the new algorithm.

V Appendix

If one overlooks the existing open-loop-control KMC methodsAnderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009) and approximates the control protocol by piecewise functions, it is possible that an artificial Zeno effect similar to Fig. 3 appears. Below we demonstrate a comparison between the correct methodAnderson (2007); Anderson, Ermentrout, and Thomas (2015); Anderson and Kurtz (2011); Chow and White (1996); Li et al. (2009) and the erroneous result.

Let us consider a diffusion process on a 1-dimension lattice, where random walkers experience biased transitions. The initial state of all random walkers are placed at position 0. Under a periodic switch between controls A and B, the system evolves under two sets of rates with control period set to (see Fig. 4a). In condition A, the transition rate for moving right is and for moving left is (Fig. 4a, left panel). Conversely, in condition B, these rates are and . As the environment oscillates rapidly between conditions A and B, the system experiences alternating transition rates (Fig. 4a, right panel). To accurately simulate this scenario, we employ the correct open-loop-control KMC method to generate a set of trajectories representative of the random walker’s behavior (see Fig. 4b, top panel). To illustrate the erroneous artificial Zeno effect, we perform a piece-wise direct KMC simulation and find that the artificial Zeno effects significantly slowed down the system’s diffusion, and the random walkers are mostly frozen without many transitions (see Fig. 4b, middle panel). In the end, to verify the validity of the open-loop-control KMC, we include an alternative way to obtain the true dynamics of random workers: by acknowledging that the control switch frequency is much greater than the diffusion rates, , one can show that this system evolves under effective dynamics with constant rates Tagliazucchi, Weiss, and Szleifer (2014); Zhang, Du, and Lu (2023). In this case, the system’s dynamics can be generated by a direct KMC simulation under the effective rates, shown in Fig. 4b bottom panel. We verify that under the rapid oscillation limit, the effective dynamics and the dynamics generated by the open-loop-feedback KMC agrees with each other. However, the naive implementation of direct KMC to time-dependent dynamics results in a significant slow down of the dynamics (i.e., the artificial Zeno effect).

Supplementary Information

Numerical simulation codes can be found in the Supplementary Materials.

Data Availability Statement

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

Acknowledgements.

This work was in part financially supported by the National Science Foundation under Award Number DMR-2145256. S.S.C acknowledges the National Science Foundation Graduate Research Fellowship (DGE-2040435). We also thank the UNC Research Computing group for providing the computational resources and support towards these results. The authors are also grateful for valuable feedback from Prof. Chris Jarzynski, Dr. Hong Qian, Dr. Aaron Dinner, Dr. Zhongmin Zhang, and Mr. Jiming Zheng.References

References

- Shahrezaei and Swain (2008) V. Shahrezaei and P. S. Swain, “The stochastic nature of biochemical networks,” Current opinion in biotechnology 19, 369–374 (2008).

- Levine and Hwa (2007) E. Levine and T. Hwa, “Stochastic fluctuations in metabolic pathways,” Proceedings of the National Academy of Sciences 104, 9224–9229 (2007).

- Székely Jr and Burrage (2014) T. Székely Jr and K. Burrage, “Stochastic simulation in systems biology,” Computational and structural biotechnology journal 12, 14–25 (2014).

- Earnshaw and Keener (2010) B. A. Earnshaw and J. P. Keener, “Invariant manifolds of binomial-like nonautonomous master equations,” SIAM Journal on Applied Dynamical Systems 9, 568–588 (2010).

- Lee and Othmer (2010) C. H. Lee and H. G. Othmer, “A multi-time-scale analysis of chemical reaction networks: I. deterministic systems,” Journal of mathematical biology 60, 387–450 (2010).

- Meister et al. (2014) A. Meister, C. Du, Y. H. Li, and W. H. Wong, “Modeling stochastic noise in gene regulatory systems,” Quantitative biology 2, 1–29 (2014).

- Anderson and Kurtz (2011) D. F. Anderson and T. G. Kurtz, “Continuous time markov chain models for chemical reaction networks,” in Design and Analysis of Biomolecular Circuits: Engineering Approaches to Systems and Synthetic Biology, edited by H. Koeppl, G. Setti, M. di Bernardo, and D. Densmore (Springer New York, New York, NY, 2011) pp. 3–42.

- Bowman, Pande, and Noé (2013) G. R. Bowman, V. S. Pande, and F. Noé, An introduction to Markov state models and their application to long timescale molecular simulation, Vol. 797 (Springer Science & Business Media, 2013).

- Husic and Pande (2018) B. E. Husic and V. S. Pande, “Markov state models: From an art to a science,” Journal of the American Chemical Society 140, 2386–2396 (2018).

- Nelson and Nelson (2004) P. C. Nelson and P. Nelson, Biological physics (WH Freeman New York, 2004).

- Alon (2019) U. Alon, An introduction to systems biology: design principles of biological circuits (Chapman and Hall/CRC, 2019).

- Gillespie (2001) D. T. Gillespie, “Approximate accelerated stochastic simulation of chemically reacting systems,” The Journal of chemical physics 115, 1716–1733 (2001).

- Iglesias and Ingalls (2010) P. A. Iglesias and B. P. Ingalls, Control theory and systems biology (MIT press, 2010).

- Lorendeau et al. (2015) D. Lorendeau, S. Christen, G. Rinaldi, and S.-M. Fendt, “Metabolic control of signalling pathways and metabolic auto-regulation,” Biology of the Cell 107, 251–272 (2015).

- Atkinson (1965) D. E. Atkinson, “Biological feedback control at the molecular level: Interaction between metabolite-modulated enzymes seems to be a major factor in metabolic regulation.” Science 150, 851–857 (1965).

- Goyal et al. (2010) S. Goyal, J. Yuan, T. Chen, J. D. Rabinowitz, and N. S. Wingreen, “Achieving optimal growth through product feedback inhibition in metabolism,” PLoS Comput. Biol. 6, e1000802 (2010).

- Yi et al. (2000a) T.-M. Yi, Y. Huang, M. I. Simon, and J. Doyle, “Robust perfect adaptation in bacterial chemotaxis through integral feedback control,” Proceedings of the National Academy of Sciences 97, 4649–4653 (2000a).

- Hamadeh et al. (2011) A. Hamadeh, M. A. J. Roberts, E. August, P. E. McSharry, P. K. Maini, J. P. Armitage, and A. Papachristodoulou, “Feedback control architecture and the bacterial chemotaxis network,” PLoS Comput. Biol. 7, e1001130 (2011).

- Yi et al. (2000b) T. M. Yi, Y. Huang, M. I. Simon, and J. Doyle, “Robust perfect adaptation in bacterial chemotaxis through integral feedback control,” Proc. Natl. Acad. Sci. U. S. A. 97, 4649–4653 (2000b).

- Murray (1992) A. W. Murray, “Creative blocks: cell-cycle checkpoints and feedback controls,” Nature 359, 599–601 (1992).

- Domian, Reisenauer, and Shapiro (1999) I. J. Domian, A. Reisenauer, and L. Shapiro, “Feedback control of a master bacterial cell-cycle regulator,” Proceedings of the National Academy of Sciences 96, 6648–6653 (1999).

- Cross and Tinkelenberg (1991) F. R. Cross and A. H. Tinkelenberg, “A potential positive feedback loop controlling cln1 and cln2 gene expression at the start of the yeast cell cycle,” Cell 65, 875–883 (1991).

- Li and Murray (1991) R. Li and A. W. Murray, “Feedback control of mitosis in budding yeast,” Cell 66, 519–531 (1991).

- Gillespie (1977) D. T. Gillespie, “Exact stochastic simulation of coupled chemical reactions,” The journal of physical chemistry 81, 2340–2361 (1977).

- Möbus and Wolf (2019) T. Möbus and M. M. Wolf, “Quantum zeno effect generalized,” J. Math. Phys. 60, 052201 (2019).

- Itano et al. (1990) W. M. Itano, D. J. Heinzen, J. J. Bollinger, and D. J. Wineland, “Quantum zeno effect,” Physical Review A 41, 2295 (1990).

- Streed et al. (2006) E. W. Streed, J. Mun, M. Boyd, G. K. Campbell, P. Medley, W. Ketterle, and D. E. Pritchard, “Continuous and pulsed quantum zeno effect,” Physical review letters 97, 260402 (2006).

- Anderson, Ermentrout, and Thomas (2015) D. F. Anderson, B. Ermentrout, and P. J. Thomas, “Stochastic representations of ion channel kinetics and exact stochastic simulation of neuronal dynamics,” Journal of computational neuroscience 38, 67–82 (2015).

- Ding et al. (2016) S. Ding, M. Qian, H. Qian, and X. Zhang, “Numerical simulations of piecewise deterministic markov processes with an application to the stochastic Hodgkin-Huxley model,” J. Chem. Phys. 145, 244107 (2016).

- Chow and White (1996) C. C. Chow and J. A. White, “Spontaneous action potentials due to channel fluctuations,” Biophys. J. 71, 3013–3021 (1996).

- Li et al. (2009) Y. Li, X. Qu, A. Ma, G. J. Smith, N. F. Scherer, and A. R. Dinner, “Models of single-molecule experiments with periodic perturbations reveal hidden dynamics in rna folding,” The Journal of Physical Chemistry B 113, 7579–7590 (2009).

- Anderson (2007) D. F. Anderson, “A modified next reaction method for simulating chemical systems with time dependent propensities and delays,” J. Chem. Phys. 127, 214107 (2007).

- Bechhoefer (2021) J. Bechhoefer, Control theory for physicists (Cambridge University Press, 2021).

- Franklin et al. (2002) G. F. Franklin, J. D. Powell, A. Emami-Naeini, and J. D. Powell, Feedback control of dynamic systems, Vol. 4 (Prentice hall Upper Saddle River, 2002).

- Note (1) Here constant rate over time is used to describe the time period between two consecutive stochastic events. Naturally, when the system state changes after the next event, the possible transitions and their rates are naturally updated.

- Note (2) In practical implementation of the numerical code, when , the quadratic equation reduces to the linear, which can be solved from Eq. 15.

- Note (3) Here the measurement of the system may not be as detailed as the specific state that the system is in. It is possible that a subset of states will give a same measurement outcome (i.e., coarse-grained measurement). The method can be applied to both cases.

- Annby-Andersson et al. (2022) B. Annby-Andersson, F. Bakhshinezhad, D. Bhattacharyya, G. De Sousa, C. Jarzynski, P. Samuelsson, and P. P. Potts, “Quantum fokker-planck master equation for continuous feedback control,” Physical Review Letters 129, 050401 (2022).

- Horowitz and Jacobs (2014) J. M. Horowitz and K. Jacobs, “Quantum effects improve the energy efficiency of feedback control,” Physical Review E 89, 042134 (2014).

- Horowitz and Parrondo (2011a) J. M. Horowitz and J. M. Parrondo, “Thermodynamic reversibility in feedback processes,” Europhysics Letters 95, 10005 (2011a).

- Horowitz and Parrondo (2011b) J. M. Horowitz and J. M. Parrondo, “Designing optimal discrete-feedback thermodynamic engines,” New Journal of Physics 13, 123019 (2011b).

- Horowitz and Vaikuntanathan (2010) J. M. Horowitz and S. Vaikuntanathan, “Nonequilibrium detailed fluctuation theorem for repeated discrete feedback,” Physical Review E 82, 061120 (2010).

- Bhattacharyya and Jarzynski (2022) D. Bhattacharyya and C. Jarzynski, “From a feedback-controlled demon to an information ratchet in a double quantum dot,” Physical Review E 106, 064101 (2022).

- Sagawa and Ueda (2012a) T. Sagawa and M. Ueda, “Nonequilibrium thermodynamics of feedback control,” Physical Review E 85, 021104 (2012a).

- Sagawa and Ueda (2010) T. Sagawa and M. Ueda, “Generalized jarzynski equality under nonequilibrium feedback control,” Physical review letters 104, 090602 (2010).

- Sagawa and Ueda (2012b) T. Sagawa and M. Ueda, “Fluctuation theorem with information exchange: Role of correlations in stochastic thermodynamics,” Physical review letters 109, 180602 (2012b).

- Parrondo, Horowitz, and Sagawa (2015) J. M. Parrondo, J. M. Horowitz, and T. Sagawa, “Thermodynamics of information,” Nature physics 11, 131–139 (2015).

- Mandal, Quan, and Jarzynski (2013) D. Mandal, H. Quan, and C. Jarzynski, “Maxwell’s refrigerator: an exactly solvable model,” Physical review letters 111, 030602 (2013).

- (49) K. Sekimoto, Stochastic Energetics (Springer Berlin Heidelberg).

- Penrose (2005) O. Penrose, Foundations of statistical mechanics: a deductive treatment (Courier Corporation, 2005).

- Bennett (1973) C. H. Bennett, “Logical reversibility of computation,” IBM journal of Research and Development 17, 525–532 (1973).

- Landauer (1961) R. Landauer, “Irreversibility and heat generation in the computing process,” IBM journal of research and development 5, 183–191 (1961).

- Mandal and Jarzynski (2012) D. Mandal and C. Jarzynski, “Work and information processing in a solvable model of maxwell’s demon,” Proceedings of the National Academy of Sciences 109, 11641–11645 (2012).

- Zurek (1989) W. H. Zurek, “Thermodynamic cost of computation, algorithmic complexity and the information metric,” Nature 341, 119–124 (1989).

- Deffner (2013) S. Deffner, “Information-driven current in a quantum maxwell demon,” Physical Review E 88, 062128 (2013).

- Strasberg et al. (2013) P. Strasberg, G. Schaller, T. Brandes, and M. Esposito, “Thermodynamics of a physical model implementing a maxwell demon,” Physical review letters 110, 040601 (2013).

- Lu, Mandal, and Jarzynski (2014) Z. Lu, D. Mandal, and C. Jarzynski, “Engineering maxwell’s demon,” Physics Today 67, 60–61 (2014).

- Fischer, Gutiérrez-Medina, and Raizen (2001) M. C. Fischer, B. Gutiérrez-Medina, and M. G. Raizen, “Observation of the quantum zeno and anti-zeno effects in an unstable system,” Phys. Rev. Lett. 87, 040402 (2001).

- Note (4) Under feedback control measurements, the system’s kinetic rates can be updated after the measurement, but the system’s state is not directly altered by the action of measurement.

- Seidler, Noll, and Thiers (2004) R. D. Seidler, D. C. Noll, and G. Thiers, “Feedforward and feedback processes in motor control,” Neuroimage 22, 1775–1783 (2004).

- Henninger et al. (2021) J. E. Henninger, O. Oksuz, K. Shrinivas, I. Sagi, G. LeRoy, M. M. Zheng, J. O. Andrews, A. V. Zamudio, C. Lazaris, N. M. Hannett, et al., “Rna-mediated feedback control of transcriptional condensates,” Cell 184, 207–225 (2021).

- Brown and Doyle III (2020) L. S. Brown and F. J. Doyle III, “A dual-feedback loop model of the mammalian circadian clock for multi-input control of circadian phase,” PLoS Computational Biology 16, e1008459 (2020).

- Chaves and Oyarzún (2019) M. Chaves and D. A. Oyarzún, “Dynamics of complex feedback architectures in metabolic pathways,” Automatica 99, 323–332 (2019).

- Tagliazucchi, Weiss, and Szleifer (2014) M. Tagliazucchi, E. A. Weiss, and I. Szleifer, “Dissipative self-assembly of particles interacting through time-oscillatory potentials,” Proceedings of the National Academy of Sciences 111, 9751–9756 (2014).

- Zhang, Du, and Lu (2023) Z. Zhang, V. Du, and Z. Lu, “Energy landscape design principle for optimal energy harnessing by catalytic molecular machines,” Physical Review E 107, L012102 (2023).