RGB-D SLAM in Indoor Planar Environments with Multiple Large Dynamic Objects

Abstract

This work presents a novel dense RGB-D SLAM approach for dynamic planar environments that enables simultaneous multi-object tracking, camera localisation and background reconstruction. Previous dynamic SLAM methods either rely on semantic segmentation to directly detect dynamic objects, or assume that dynamic objects occupy a smaller proportion of the camera view than the static background and can therefore be removed as outliers. With the aid of a camera motion prior, our approach enables dense SLAM when the camera view is largely occluded by multiple dynamic objects. The dynamic planar objects are separated by their different rigid motions and tracked independently. The remaining dynamic non-planar areas are removed as outliers and are not mapped into the background. The evaluation demonstrates that our approach outperforms state-of-the-art methods in terms of localisation, mapping, dynamic segmentation and object tracking. We also demonstrate its robustness to large drift in the camera motion prior.

Index Terms:

SLAM, visual tracking, sensor fusion.

I Introduction

Simultaneous localisation and mapping (SLAM) is one of the core components in autonomous robots and virtual reality applications. In indoor environments, planes are common man-made features. Planar SLAM methods have used the characteristics of planes to reduce long-term drift and improve the accuracy of localisation [1, 2]. However, these methods assume that the environment is static – an assumption that is violated when the robot works in conjunction with other humans or robots, or manipulates objects in semi-automated warehouses.

The core problem of enabling SLAM in dynamic environments while differentiating multiple dynamic objects involves several challenges:

1. There is usually an unknown number of third-party motions in addition to the camera motion in dynamic environments, and the number of motions or dynamic objects can change over time.

2. The static background is often assumed to account for the major proportion of the camera view. However, without semantic segmentation, dynamic objects that occupy a large proportion of the camera view can end up being classified as the static background.

3. The majority of the colour and depth information can be occluded by dynamic objects, and the remaining static parts of the visual input may not be enough to support accurate camera ego-motion estimation.

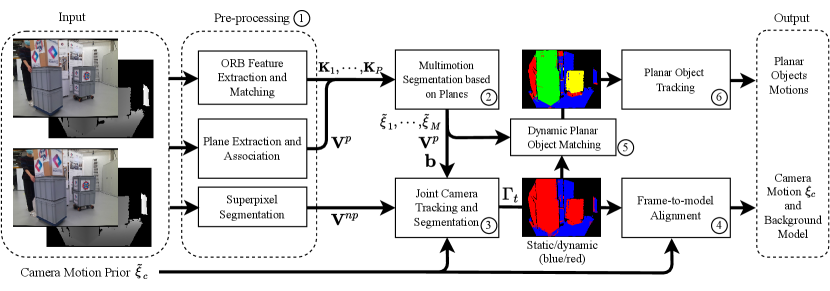

Many dynamic SLAM methods have considered multiple dynamic objects [3, 4, 5], but they either rely on semantic segmentation or assume that the static background is the largest rigid body in the camera view. To address these problems concurrently, we propose a hierarchical representation of images that extracts planes from planar areas and over-segments non-planar areas into super-pixels (Figure 1). We then segment and track multiple dynamic planar rigid objects, and remove dynamic non-planar objects to enable camera localisation and mapping. For this, we assume that planes make up a major fraction of the environment, including both the static background and the rigid dynamic objects. In addition, the camera motion is distinguished from other third-party motions by a tightly-coupled camera motion prior from robot odometry.

In summary, this work contributes:

1. a new methodology for online multimotion segmentation based on planes in indoor dynamic environments,

2. a novel pipeline that simultaneously tracks multiple planar rigid objects, estimates camera ego-motion and reconstructs the static background,

3. an RGB-D SLAM method that is robust to largely occluded camera views caused by multiple large dynamic objects.

II Related Work

II-A Dynamic SLAM

Dynamic SLAM methods can be categorised into outlier-based, semantic-based, multimotion and proprioception-aided methods.

Outlier-based methods assume that the static background occupies the majority of the camera view, so that dynamic objects can be robustly removed during camera tracking. Joint-VO-SF (JF) [6] over-segments images into clusters and classifies each cluster as either static or dynamic by comparing the depth and intensity residuals. StaticFusion (SF) [7] introduces a continuous score to represent the probability that a cluster is static. The scores are used to segment the static background for camera localisation and mapping, while the remaining dynamic parts are discarded as outliers. Co-Fusion (CF) [5] and MultiMotionFusion [8] can further model the outliers as new objects and track them independently.

Semantic-based methods directly detect dynamic objects from the semantic segmentation. Based on Mask R-CNN [9], which provides pixel-wise object segmentation, EM-Fusion [10] integrates object tracking and SLAM into a single expectation maximisation (EM) framework. ClusterVO [3] can track camera ego-motion and multiple rigidly moving clusters simultaneously by combining semantic bounding boxes and ORB features [11]. DynaSLAM II [12] further integrates the multi-object tracking (MOT) and SLAM into a tightly-coupled formulation to improve its performance on both problems. However, these methods require that the object detector is pre-trained on a dataset which includes these objects or that the object model is provided in advance.

Multimotion methods explicitly model the dynamic component as third-party rigid motions and segment dynamic objects by how their motions differ from the camera motion. Multimotion visual odometry (MVO) [4] is an online multimotion segmentation and tracking method based on sparse keypoints. It iteratively samples the keypoints using RANSAC to generate motion hypotheses and automatically merges hypotheses when merging decreases the total energy. In addition to RANSAC, motion hypotheses can be generated from the increased N-points using a grid-based scene flow [13]. Instead of merging motion hypotheses, DymSLAM [14] computes a residual matrix from hypothetical motion models and directly estimates the number of dynamic objects from this matrix. A dense segmentation of multiple dynamic objects based on super-pixels [15] is then obtained once the number of objects is specified. However, all these methods assume that the largest rigid body in the camera view is the static background, and they have not been shown to work when the major proportion of the camera view is occluded by dynamic objects.

Robot proprioception, such as IMU and wheel odometry, can be fused with visual sensors to improve the accuracy and robustness of localisation in dynamic environments [16, 17]. Kim et al. [16] use the camera motion prior from an IMU to compensate for the camera motion and select static keypoints based on motion vectors. RigidFusion (RF) [17] uses the camera motion prior from wheel odometry and additional object motion priors to enable SLAM with single dynamic object reconstruction when the major part of the camera view is occluded. However, these methods are unable to track multiple dynamic objects independently.

II-B Planar SLAM

Planar features have been widely used in indoor SLAM methods. Infinite planes can be used as landmarks in the pose graph SLAM problem [18]. Based on keyframe management, a global dense planar map can be reconstructed using only a single CPU [19]. Planes can also be combined with keypoints and lines [2, 1] for more robust camera tracking. In structured environments, planes have been shown to significantly reduce accumulated rotational drift under the Manhattan world (MW) assumption [1]. All these methods assume static environments, because planes in indoor environments, such as walls, are often static. However, this assumption is violated when planar objects, such as boxes, are transported or manipulated by humans or robots.

III Methodology

III-A Overview and notation

The overview of our pipeline is illustrated in Figure 2. Our method takes RGB-D image pairs from two consecutive frames. At the -th frame, we have a depth image and an intensity image computed from the colour image.

A plane is represented in the Hessian form , where is the normal of the plane and is the perpendicular distance between the plane and camera origin. For each image frame, we extract planes directly from the depth map using PEAC [20], which can provide the number of planes , the pixel-wise segmentation of planes and their corresponding plane parameters. After plane extraction, the remaining non-planar areas are over-segmented into super-pixels: .

For the -th plane, we extract a set of keypoints using ORB features [21]. We then perform multimotion segmentation on planar areas and cluster the planes into planar rigid bodies with different motions. For simplicity, we refer to the camera motion relative to an object’s egocentric frame as the object egocentric motion [4] and denote it as . Since the static background may not be the largest rigid body in the camera view, we use the camera motion prior, which may contain drift, to simultaneously classify all planes and super-pixels as either static or dynamic and to estimate the camera ego-motion.

We use the score to represent the probability that a plane or super-pixel is static. The scores are assigned to each plane and super-pixel, where and represent the scores of planes and super-pixels, respectively. At time , the pixel-wise static/dynamic segmentation can be estimated from . The static parts of intensity and depth images are used to estimate the camera motion . The dynamic planar rigid bodies are used to track dynamic objects. The non-planar dynamic super-pixels, such as humans, are removed as outliers.

The world-, camera-, and the -th object-frames are , and respectively. The camera motion is , which transforms homogeneous coordinates of a point in the current camera frame to the previous frame . The function is the matrix exponential map for Lie group . The -th object egocentric transformation is the camera motion relative to this object [4]:

| (1) |

III-B Multimotion segmentation based on planes

To extract planes, we transform the depth map to a point cloud and cluster connected groups of points with similar normal directions using the method from [20]. To match plane in the current frame with one in the previous frame, we first estimate the angle and point-to-plane distance between plane and all planes in the previous frame. A plane is chosen as a candidate if the angle and distance are below 10 degrees and 0.1 m, respectively, following [2, 1]. However, rather than choosing the plane with the minimal distance [2, 1], we further consider the overlap between the two planes using the Jaccard index, , where is the number of pixels in planar segment . We choose the candidate with the maximal Jaccard index as the match for plane and denote it as plane .
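The association rule above can be sketched in Python as follows. This is an illustrative sketch, not the authors' implementation. It assumes that each plane is stored with a unit normal `normal` and offset `d` in the Hessian convention n·x + d = 0, that normals are consistently oriented, that the point-to-plane distance is measured from the centroid of the current plane's points, and that planar segments are available as sets of pixel coordinates. The dictionary keys and function names are hypothetical.

```python
import math


def angle_deg(n1, n2):
    """Angle in degrees between two unit normals."""
    dot = sum(a * b for a, b in zip(n1, n2))
    dot = max(-1.0, min(1.0, dot))  # clamp against rounding before acos
    return math.degrees(math.acos(dot))


def point_to_plane(point, normal, d):
    """Distance from a 3D point to the plane n.x + d = 0 (Hessian form, unit normal)."""
    return abs(sum(n * p for n, p in zip(normal, point)) + d)


def jaccard(pixels_a, pixels_b):
    """Jaccard index of two planar segments given as sets of (row, col) pixels."""
    union = pixels_a | pixels_b
    return len(pixels_a & pixels_b) / len(union) if union else 0.0


def match_plane(cur, prev_planes, max_angle=10.0, max_dist=0.1):
    """Index of the previous plane matched to `cur`, or None if no candidate overlaps.

    Candidates must lie below `max_angle` degrees and `max_dist` metres;
    among them, the one with the largest Jaccard index is chosen.
    """
    best_idx, best_j = None, 0.0
    for idx, prev in enumerate(prev_planes):
        if angle_deg(cur["normal"], prev["normal"]) >= max_angle:
            continue  # normals too different
        if point_to_plane(cur["centroid"], prev["normal"], prev["d"]) >= max_dist:
            continue  # planes too far apart
        j = jaccard(cur["pixels"], prev["pixels"])
        if j > best_j:
            best_idx, best_j = idx, j
    return best_idx
```

Selecting by overlap rather than by minimal distance means that two nearby parallel planes, such as the fronts of two adjacent boxes, can still be told apart by where they appear in the image.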

To estimate object egocentric motion of plane , we extract and match ORB keypoints from plane and . The error function is defined as:

| (2) |

where is the set of keypoint matches between planes and . and are homogeneous coordinates of the two matched keypoints. is the robust Huber error function [21]. is the parameter to weight the error between the Hessian form of planes. avoids over-parametrisation of the Hessian form [1].
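As a rough illustration of the keypoint part of Equation 2, the sketch below evaluates a Huber-robustified alignment error for a candidate egocentric motion. It assumes the matched keypoints have already been back-projected to 3D and uses their Euclidean distance as the residual; the plane term weighted by the parameter in Equation 2 is omitted, and the Huber threshold `delta` is an arbitrary placeholder. Function names are hypothetical.

```python
import math


def huber(r, delta):
    """Huber cost: quadratic for |r| <= delta, linear beyond."""
    a = abs(r)
    return 0.5 * a * a if a <= delta else delta * (a - 0.5 * delta)


def transform(T, p):
    """Apply a 4x4 homogeneous transform (nested lists) to a 3D point."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


def keypoint_error(T, matches, delta=0.05):
    """Sum of Huber costs over matched keypoints.

    matches: list of (p_prev, p_cur) 3D points; T maps current-frame points
    into the previous frame, following the convention of Section III-A.
    """
    total = 0.0
    for p_prev, p_cur in matches:
        r = math.dist(p_prev, transform(T, p_cur))  # Euclidean residual
        total += huber(r, delta)
    return total
```

Minimising this error over T for the keypoints of one plane would give that plane's motion hypothesis; a gross mismatch contributes only linearly, so a few wrong ORB matches do not dominate the estimate.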

To cluster planes with similar motions, we introduce a score for each pair of neighbouring planes and in the current frame. represents the probability that the motion of planes and can be modelled by the same rigid transformation. We further introduce a new formulation based on planes to jointly estimate motion of planes and merge planes into rigid bodies:

| (3) | ||||

is the set of egocentric transformations for all planes in the current frame. . is the connectivity graph of planes in the current frame and means that planes and are connected in space. The first term is introduced in Equation 2. In the second term, we propose to quantify the error between two planes with egocentric motion and respectively. The last term penalises the model complexity by maximising the sum of probabilities that neighbouring planes have similar motions.

The novelty of the formulation is that we treat each individual plane as a motion hypothesis and estimate the likelihood of any two neighbouring hypotheses having the same motion. This is in contrast to MVO [4], which discretely decides whether two motion hypotheses are merged or not.

To minimise Equation 3, and are decoupled. We first initialise all egocentric motions to identity and all scores to 0. Then, at each iteration, we fix and find the optimal by optimising each transformation independently. is then solved analytically with the optimised transformations fixed. After minimisation, we set a threshold and merge planes and if . We thereby obtain planar rigid bodies and use RANSAC to estimate their prior egocentric motions. However, since dynamic objects can occupy the major part of the image, we still need to decide which planar rigid body belongs to the static background.
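The final merging step can be sketched with a union-find structure. This is one possible realisation under stated assumptions: the pairwise scores are given as a dictionary over neighbouring plane pairs, and the default threshold of 0.5 is hypothetical since its value is not stated in the text.

```python
def merge_planes(num_planes, pair_scores, threshold=0.5):
    """Group planes into rigid bodies by merging neighbouring pairs whose score exceeds a threshold.

    pair_scores: dict mapping neighbour pairs (i, j) to the probability that
    planes i and j share one rigid motion.
    Returns a sorted list of groups, each a sorted list of plane indices.
    """
    parent = list(range(num_planes))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for (i, j), score in pair_scores.items():
        if score > threshold:
            ri, rj = find(i), find(j)
            if ri != rj:
                parent[rj] = ri

    groups = {}
    for i in range(num_planes):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())
```

Union-find merges transitively, so a chain of confident neighbouring pairs ends up in a single rigid body even if its end planes are not direct neighbours, which matches the idea of growing rigid bodies over the plane connectivity graph.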

III-C Joint camera tracking and background segmentation

We jointly track the camera motion and segment the static background based on a hierarchical representation of planes and non-planar super-pixels. This representation is more efficient in planar environments than the uniformly sampled clusters used in previous work [7, 17]. In addition, compared to RigidFusion [17], our method only requires the camera motion prior. The dynamic planar objects are detected by their different rigid motions compared to the camera motion, while dynamic non-planar areas are removed by their high residuals. To achieve this, we propose to minimise a combined formulation that consists of three energy terms:

| (4) |

where is the full set of probabilities that each plane or super-pixel is static. is the camera ego-motion in the world frame. Importantly, planes that belong to the same planar rigid body are assigned independent scores . The first term aligns the static rigid body using weighted intensity and depth residuals. The second term segments dynamic objects by either different motions or high residuals and maintains segmentation smoothness. The last regularisation term adds a soft constraint on the camera motion.

III-C1 Residual term

Following the previous work [7, 17], we consider image pairs () and () from two consecutive frames. For a pixel with coordinate in the current frame , the intensity residual and depth residual against the previous frame under motion are given by:

| (5) | ||||

| (6) |

where is the camera projection function and returns the -coordinate of a 3D point. The image warping function is:

| (7) |

which provides the corresponding coordinate in the previous frame. Similar to SF, the weighted residual term is:

| (8) |

where is the number of pixels with a valid depth value and indicates the index of the segment that contains the pixel . is used to weight the intensity residuals. The Cauchy robust penalty:

| (9) |

is used to control the robustness of the minimisation, and is the inflection point of . Whereas SF assigns a score to each cluster, we represent the image as a combination of planes and super-pixels, each with its own score.
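The warping of Equation 7 and a Cauchy penalty can be sketched for a single pixel as below. The pinhole model with intrinsics (fx, fy, cx, cy) is an assumption, and because Equation 9 did not survive extraction, the sketch uses the standard Cauchy form F(r) = c²/2 · log(1 + (r/c)²), whose inflection points lie at r = ±c; treat it as a plausible reading rather than the exact expression. Following Section III-A, `T` maps points from the current camera frame into the previous one. All function names are hypothetical.

```python
import math


def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with depth into a 3D point."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def project(p, fx, fy, cx, cy):
    """Pinhole projection of a 3D point with positive depth to pixel coordinates."""
    x, y, z = p
    return (fx * x / z + cx, fy * y / z + cy)


def warp(u, v, depth, T, intrinsics):
    """Warp a current-frame pixel into the previous frame via pi(T * pi^-1(x, depth)).

    Returns the warped (sub-pixel) coordinate and the transformed point's z value,
    which a depth residual would compare against the previous depth image.
    """
    x, y, z = back_project(u, v, depth, *intrinsics)
    q = tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))
    return project(q, *intrinsics), q[2]


def cauchy(r, c):
    """Cauchy penalty c^2/2 * log(1 + (r/c)^2); its inflection points are at r = +/-c."""
    return 0.5 * c * c * math.log1p((r / c) ** 2)
```

In this reading, the intensity residual compares the previous intensity image sampled at the warped coordinate with the current intensity, and the depth residual compares the previous depth at that coordinate with the returned z value. The Cauchy penalty grows only logarithmically for large residuals, so pixels on unmodelled moving parts have limited influence on the camera estimate.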

III-C2 Segmentation term

The objective of is to detect dynamic planar rigid bodies by their motions and dynamic non-planar super-pixels by their high residuals. is the sum of three terms:

| (10) |

where , and are parameters to weight different items. The first term classifies planes as dynamic when their egocentric motions are different from the camera motion :

| (11) |

where is the planar rigid body that contains the plane . is the egocentric motion prior of the -th planar rigid body, and the Huber cost function is used to make the error robust to outliers.

The second term handles non-planar dynamic areas. We follow StaticFusion and assume they have a significantly higher residual under the camera motion:

| (12) |

where we only consider super-pixels in non-planar area and is the number of pixels with valid depth in the -th super-pixel. The threshold is chosen as the average residual over all super-pixels.

The last term maintains the spatial smoothness of segmentation for both planar and non-planar areas by encouraging neighbouring areas to have similar scores:

| (13) |

where and are the connectivity graphs for planes and non-planar super-pixels, respectively. is obtained directly from the minimisation of Equation 3. This means that, rather than assigning the same score to all planes that belong to the same rigid body, we encourage them to have similar scores .
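A simplified, hard-decision version of the second segmentation term (Equation 12) can be sketched as follows: residuals are averaged per non-planar super-pixel over pixels with valid depth, and super-pixels above the average are flagged. In the method itself this enters as a soft energy term on the scores rather than a binary label, and taking the threshold as the mean of the per-super-pixel averages (rather than over all pixels) is an assumption. Input layout and names are hypothetical.

```python
def dynamic_superpixels(residuals, labels):
    """Flag super-pixels whose mean residual exceeds the average over all super-pixels.

    residuals: per-pixel residual magnitudes under the current camera motion
               estimate (None where depth is invalid).
    labels:    per-pixel super-pixel index, same length as residuals.
    Returns (set of flagged super-pixel ids, threshold used).
    """
    sums, counts = {}, {}
    for r, s in zip(residuals, labels):
        if r is None:  # skip pixels without a valid depth reading
            continue
        sums[s] = sums.get(s, 0.0) + r
        counts[s] = counts.get(s, 0) + 1
    means = {s: sums[s] / counts[s] for s in sums}
    if not means:
        return set(), 0.0
    threshold = sum(means.values()) / len(means)
    return {s for s, m in means.items() if m > threshold}, threshold
```

Because the threshold adapts to the current frame, no fixed residual level has to be tuned; the trade-off is that some super-pixel is always above average, which is why a soft score combined with the smoothness term is preferable to this hard rule.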

III-C3 Motion regularisation term

We add a soft constraint on the camera motion based on the motion prior :

| (14) |

where is the ratio of the number of pixels associated with the static background to the total number of pixels with a valid depth reading. This means that we rely more on the camera motion prior when dynamic objects occupy a higher proportion of the image. The robust Huber cost function is used to handle potentially large drift in the camera motion prior.
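The static-pixel ratio used in Equation 14, and one way it could modulate the soft constraint, are sketched below. The ratio itself follows the text; the weight `1 - ratio`, the representation of the camera motion as a 6-vector of twist coordinates, and the Euclidean comparison of twists are hypothetical choices for illustration only.

```python
import math


def static_ratio(static_mask, valid_depth):
    """Fraction of pixels with valid depth that are labelled as static background."""
    valid = [s for s, v in zip(static_mask, valid_depth) if v]
    return sum(1 for s in valid if s) / len(valid) if valid else 0.0


def huber(r, delta):
    """Huber cost: quadratic for |r| <= delta, linear beyond."""
    a = abs(r)
    return 0.5 * a * a if a <= delta else delta * (a - 0.5 * delta)


def motion_regulariser(xi, xi_prior, ratio, delta=0.05):
    """Hypothetical soft constraint: Huber cost on the twist difference, weighted by
    (1 - ratio) so that the prior matters more when less of the image is static."""
    r = math.sqrt(sum((a - b) ** 2 for a, b in zip(xi, xi_prior)))
    return (1.0 - ratio) * huber(r, delta)
```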

The solver of Equation 4 is based on StaticFusion, and a similar coarse-to-fine scheme is applied to align dense images directly. Specifically, we create an image pyramid for each incoming RGB-D image and start the optimisation from the coarsest level. The result obtained at each intermediate level is used to initialise the next, finer level, which helps the solver converge correctly. We also decouple the camera motion and for more efficient computation. Concretely, we initialise the camera motion as identity and all to 1. For each iteration, we first fix and find the optimal . The closed-form solution for is then obtained by fixing . The solution from the previous iteration is used to initialise the current iteration.
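The coarse-to-fine scheme can be outlined as below. The 2x2 box-filter down-sampling and the `solve_level` callback are assumptions standing in for the actual per-level solver; for depth images, invalid (zero) readings would need to be excluded from the averaging, which this intensity-only sketch does not do.

```python
def downsample(img):
    """Halve the resolution of a 2D list by averaging non-overlapping 2x2 blocks."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(w)] for y in range(h)]


def build_pyramid(img, levels):
    """Return the pyramid ordered from finest (index 0) to coarsest."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


def coarse_to_fine(pyramid, solve_level, init):
    """Run `solve_level` from the coarsest to the finest level, warm-starting each
    level with the estimate returned by the previous (coarser) level."""
    estimate = init
    for level in reversed(range(len(pyramid))):
        estimate = solve_level(pyramid[level], estimate)
    return estimate
```

Because the camera motion is expressed in metric units, it can be passed between levels unchanged; only the pixel intrinsics scale with the image resolution.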

III-D Background reconstruction and camera pose refinement

In the current frame , after the minimisation of Equation 4, we acquire the optimised camera motion and the static parts of intensity and depth images . These images are used to reconstruct the static background and refine the camera pose using frame-to-model alignment. Concretely, we render an image pair from the current static background model at the previous camera pose. The rendered image pair is directly aligned with by minimising

| (15) |

The first term is the same as Equation 8, but the is fixed to because the input should only contain the static background. We append in Equation 14 as a soft constraint for the frame-to-model alignment, and is estimated from the pixel-wise dynamic segmentation . Since Equation 4 has already been solved, we start directly from the finest level of the image pyramid and initialise the solver of Equation 15 with the camera pose . The refined camera pose is used to fuse the static images into the surfel-based 3D model, as described in SF [7].

III-E Planar objects tracking

After removing the static planes, we track the dynamic planar rigid bodies independently. This differs from our previous work RigidFusion [17], which models the whole dynamic component with a single rigid transformation. For each dynamic planar rigid body , we match it to the dynamic rigid bodies of the previous frame using the plane association and estimate its egocentric motion. If all of its associated planes were static in the previous frame, we detect the dynamic planar rigid body as a new object and denote its initial pose relative to the camera frame as . If an object first appears at frame , its pose in the object’s initial frame can be acquired by [14]:

| (16) |
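Accumulating per-frame motions, as needed for object trajectories, reduces to chaining 4x4 homogeneous transforms. Since Equation 16 did not survive extraction, the sketch below only shows the generic composition under the convention of Section III-A, where each per-frame transform maps current-frame coordinates into the previous frame, so new transforms are multiplied on the right. The function names are hypothetical.

```python
IDENTITY = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def matmul4(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def accumulate(relative_motions, initial=None):
    """Chain per-frame transforms onto an initial pose.

    Each transform maps frame k coordinates into frame k-1, so the pose of
    frame k in the reference frame is pose_{k-1} @ T_k (right multiplication).
    """
    pose = initial if initial is not None else IDENTITY
    for T in relative_motions:
        pose = matmul4(pose, T)
    return pose
```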

IV Evaluation

IV-A Setup

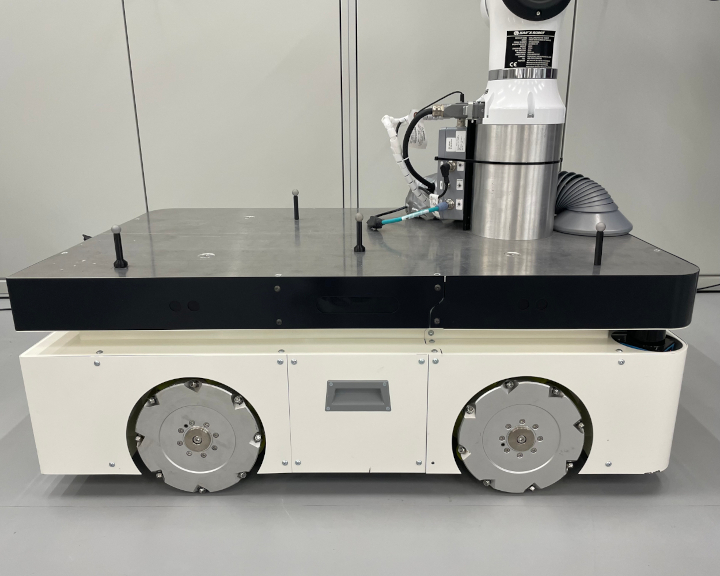

The evaluation sequences are collected with an Azure Kinect DK RGB-D camera mounted on an omnidirectional robot (Figure 3(a)). The camera produces RGB-D image pairs with a resolution of 1280 x 720 at 30 Hz. The images are down-scaled and cropped to 640 x 480 (VGA) to accelerate pre-processing steps (Figure 2) such as super-pixel and plane extraction. In the solvers of Equations 4 and 15, we further down-scale the images to 320 x 240 (QVGA).

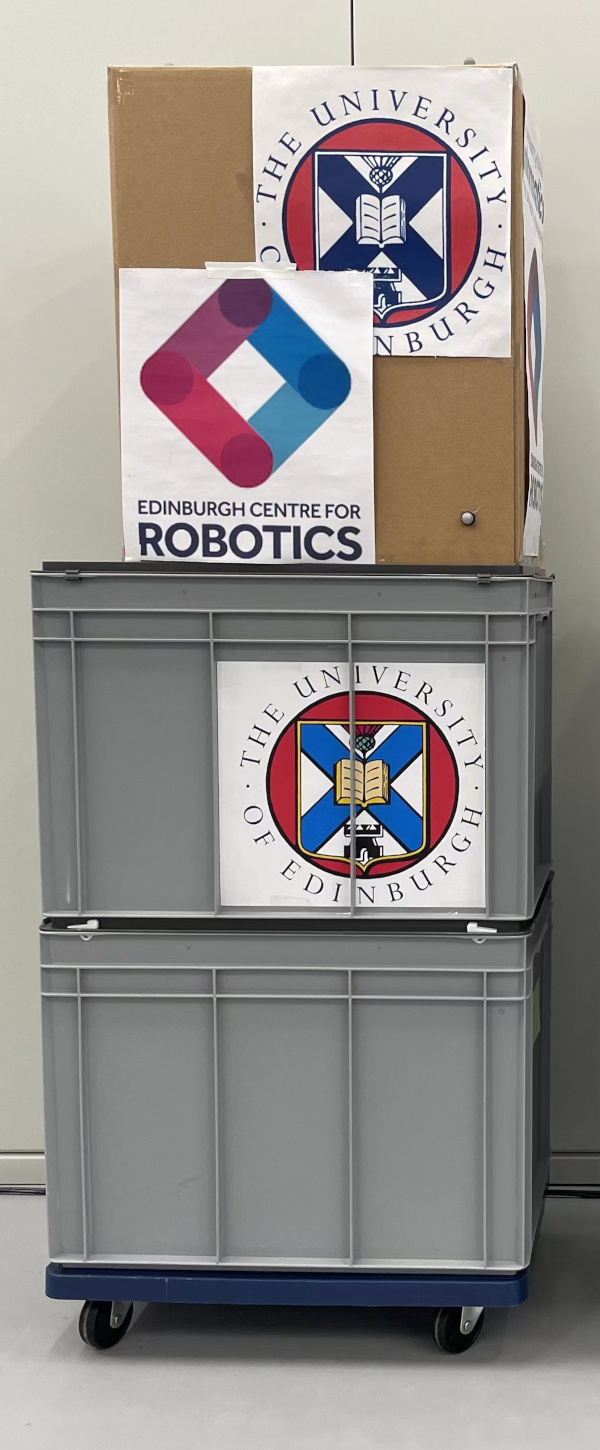

The dynamic objects are created from stacked boxes and are either moved by humans or via a remotely controlled KUKA youBot (Figure 3). The ground truth trajectories of the camera and objects are collected using a Vicon system by attaching Vicon markers on the camera and dynamic objects. The camera motion prior is acquired by adding synthetic drift on camera ground truth trajectories with a magnitude of around 7 cm/s (trans.) and 0.4 rad/s (rot.).
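A drifting motion prior of this kind can be simulated, for instance, by adding a bias that grows linearly with time to the ground-truth positions. The exact drift model used for the experiments is not specified here, so the constant-rate model below (translation only, no rotational drift) is a hypothetical illustration; a rate of 0.07 m/s corresponds to the stated translational magnitude.

```python
def add_translational_drift(timestamps, positions, drift_rate):
    """Add a position bias that grows linearly with time (hypothetical drift model).

    timestamps: seconds; positions: (x, y, z) in metres; drift_rate: (vx, vy, vz) in m/s.
    Rotational drift is not modelled here.
    """
    t0 = timestamps[0]
    return [tuple(p_i + v_i * (t - t0) for p_i, v_i in zip(p, drift_rate))
            for t, p in zip(timestamps, positions)]
```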

For quantitative evaluation, we estimate the absolute trajectory error (ATE) and the relative pose error (RPE) [22] against the ground truth. The proposed method is compared with PlanarSLAM (PS) [1], EM-Fusion (EMF) [10], Joint-VO-SF (JF) [6], StaticFusion (SF) [7], Co-Fusion (CF) [5] and RigidFusion (RF) [17]. We additionally provide the camera motion prior with drift to CF as the variant CF∗. The original RF uses motion priors for both camera and object. Here we only provide RF with the camera motion prior and denote it as RF∗, while our method with the camera motion prior is denoted as ours∗.
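For reference, a simplified ATE RMSE can be computed as below. The TUM benchmark tools [22] first align the two trajectories with a rigid transformation (Horn's method); this sketch only removes the mean offset between associated positions, so it is an approximation that ignores rotational misalignment, and it assumes timestamps are already associated.

```python
import math


def ate_rmse(estimated, ground_truth):
    """ATE RMSE after removing the mean offset between two associated position lists."""
    n = len(estimated)
    mean_e = [sum(p[i] for p in estimated) / n for i in range(3)]
    mean_g = [sum(p[i] for p in ground_truth) / n for i in range(3)]
    sq = 0.0
    for e, g in zip(estimated, ground_truth):
        # squared distance between the centred estimate and centred ground truth
        sq += sum((e[i] - mean_e[i] - (g[i] - mean_g[i])) ** 2 for i in range(3))
    return math.sqrt(sq / n)
```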

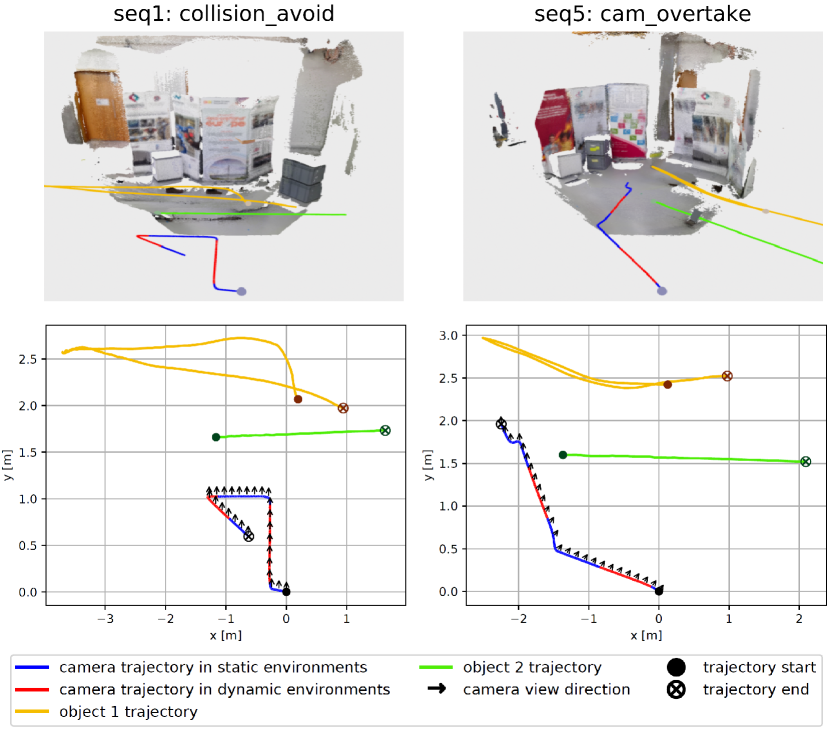

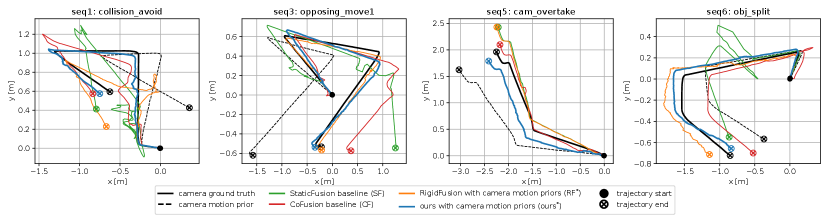

We collect eight sequences with various camera and object movements in different planar environments. For example, in seq1, a human moves the taller box to clear the way for both the robot and the other object so that a potential collision is avoided, while in seq5, the robot tries to overtake two dynamic objects ahead (Figure 4). All trajectories are designed such that the two dynamic objects and a human are visible in the image at the same time and frequently occupy the major proportion of the camera view. We also run experiments on the sequences sitting_xyz and walking_xyz from the TUM RGB-D dataset [22], which include a large proportion of non-planar areas, and denote them as seq9 and seq10, respectively.

IV-B Camera localisation

We estimate the ATE root-mean-square error (RMSE) and RPE RMSE between the estimated camera trajectories and the ground truth (Table I). In planar dynamic environments (seq. 1-8), the evaluation demonstrates that our method outperforms the other state-of-the-art methods on average, although some baselines achieve a lower error on individual sequences (Figure 5). With the help of the camera motion prior, our method achieves the best average performance and corrects the large drift of the camera motion prior. Even without the camera motion prior, we still achieve a lower average error than the JF, SF and CF baselines. PS is unable to estimate the correct camera pose because there are dynamic planes in the environment, while PS assumes all planes are static. Our method also outperforms EMF because the semantic segmentation method [9] can only detect and segment certain categories of dynamic objects, such as humans.

In non-planar dynamic environments (seq. 9-10), EMF outperforms all other methods because the dynamic humans can be directly segmented by Mask R-CNN [9]. However, even without relying on semantic segmentation, our method performs comparably to StaticFusion, because it can still detect dynamic super-pixels by their high residuals under the camera motion.

Table I: ATE RMSE (cm) of the estimated camera trajectories (MP: camera motion prior)

| Seq. | MP | PS | EMF | JF | SF | CF | CF∗ | RF∗ | ours | ours∗ |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 26.7 | 38.5 | 50.6 | 30.5 | 22.9 | 10.4 | 10.2 | 16.5 | 20.1 | 4.23 |
| 2 | 49.5 | 88.7 | 63.6 | 28.2 | 27.4 | 26.0 | 7.30 | 14.3 | 6.81 | 6.32 |
| 3 | 41.7 | 53.1 | 37.0 | 24.3 | 74.0 | 21.6 | 10.6 | 4.38 | 4.01 | 3.42 |
| 4 | 36.0 | 36.8 | 34.0 | 28.9 | 87.2 | 18.9 | 20.3 | 8.39 | 22.6 | 8.37 |
| 5 | 16.2 | 31.4 | 14.7 | 10.3 | 13.6 | 4.73 | 8.35 | 14.1 | 25.2 | 6.74 |
| 6 | 11.7 | 39.6 | 35.5 | 52.8 | 23.5 | 10.1 | 3.67 | 7.57 | 8.37 | 4.67 |
| 7 | 25.5 | 19.1 | 25.5 | 34.7 | 57.6 | 14.7 | 8.71 | 41.3 | 6.43 | 7.60 |
| 8 | 28.4 | 46.8 | 25.6 | 26.5 | 62.1 | 69.8 | 18.9 | 14.2 | 8.33 | 10.3 |
| 9 | 273 |  |  | 11.1† | 4.0† | 2.7† | 5.63 | 9.73 | 3.81 | 5.54 |
| 10 | 197 | 29.8 |  | 87.4† | 12.7† | 69.6† | 48.7 | 19.5 | 14.9 | 11.6 |

Table I (continued): RPE RMSE (cm/s) of the estimated camera trajectories (MP: camera motion prior)

| Seq. | MP | PS | EMF | JF | SF | CF | CF∗ | RF∗ | ours | ours∗ |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 7.64 | 23.8 | 43.6 | 28.4 | 17.2 | 7.58 | 9.07 | 9.41 | 10.9 | 4.50 |
| 2 | 7.31 | 51.6 | 22.8 | 26.9 | 11.2 | 12.6 | 5.61 | 3.99 | 3.58 | 3.06 |
| 3 | 7.87 | 25.1 | 14.8 | 23.5 | 26.1 | 6.8 | 4.22 | 7.13 | 3.11 | 2.78 |
| 4 | 7.38 | 29.9 | 26.5 | 28.2 | 64.3 | 15.9 | 15.7 | 6.13 | 14.2 | 6.52 |
| 5 | 7.61 | 25.8 | 6.31 | 31.4 | 3.31 | 3.62 | 6.34 | 3.90 | 13.5 | 4.77 |
| 6 | 7.51 | 17.1 | 30.6 | 25.4 | 18.1 | 7.02 | 4.67 | 4.26 | 4.38 | 3.18 |
| 7 | 7.52 | 12.8 | 15.4 | 31.3 | 62.4 | 7.54 | 6.43 | 25.1 | 4.73 | 4.09 |
| 8 | 7.29 | 20.0 | 15.6 | 24.1 | 36.4 | 28.3 | 11.2 | 7.54 | 5.91 | 4.41 |
| 9 | 7.36 | 3.12 |  | 5.7† | 2.8† | 2.7† | 3.01 | 3.48 | 2.95 | 2.98 |
| 10 | 7.34 | 49.0 |  | 27.7† | 12.1† | 32.9† | 41.9 | 13.4 | 9.59 | 8.67 |

IV-C Multimotion segmentation

[Figure 6: segmentation results on seq4 (opposing_move2) and seq8 (obj_transfer), including rows for CF and ours∗]

For planar environments, we visualise the segmentation results of our method and compare them with SF, RF∗ and CF (Figure 6). SF is unable to detect all dynamic objects because, taken together, they occlude a large proportion of the camera view, while RF∗ tends to classify parts of the static background as dynamic. Both CF and our method can further distinguish between different dynamic objects. However, the segmentation of CF is incomplete, and CF tends to detect new objects with a delay. The two colours (green and purple) indicate that our method treats the taller object as a new one after it passes behind the front object. In non-planar environments, our method still provides a correct binary segmentation of the static and dynamic parts (Figure 7). However, it cannot segment and track different non-planar dynamic objects independently.

[Figure 7: segmentation in non-planar environments; rows: RGB, SF and ours]

IV-D Background reconstruction

We qualitatively evaluate the reconstruction result of seq3 (Figure 8). Since we have no ground-truth segmentation, we record an additional sequence with the same camera trajectory but without dynamic objects to recover the true background. As shown in the results, RF∗ maps the dynamic objects into the static background model, and CF maps the same static object twice because of incorrect camera pose estimation. Only our proposed method removes all dynamic objects and correctly reconstructs the background.

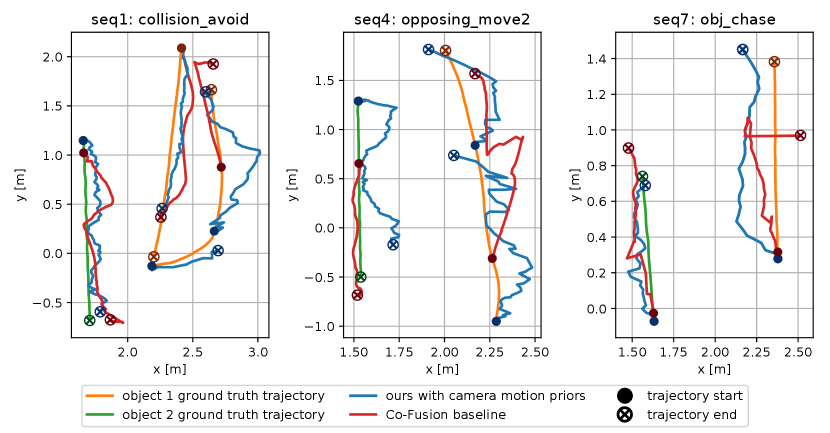

IV-E Planar rigid objects trajectory

For both objects, we compute the ATE RMSE between the estimated and ground-truth trajectories when they are in the camera view (Table II). Since the object can move out of or move into the camera view several times, one object trajectory can be divided into multiple parts. For each object, we, therefore, use the maximal ATE RMSE among the estimated trajectories of different parts for the final result. Our method can provide more accurate and complete object trajectories than CF, but loses track of a dynamic object when the object stops moving or is occluded by other objects (Figure 9).
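The per-object protocol above can be sketched as: split the trajectory wherever the object is not tracked, compute an RMSE for each part, and report the maximum. The sketch assumes each part is already expressed in the same frame as the ground truth (any per-part alignment is omitted) and marks untracked frames with `None`; the names are hypothetical.

```python
import math


def rmse(est, gt):
    """Root-mean-square Euclidean error between two associated position lists."""
    return math.sqrt(sum(math.dist(e, g) ** 2 for e, g in zip(est, gt)) / len(est))


def split_visible(est, gt):
    """Split associated trajectories into contiguous parts where the estimate exists."""
    parts, cur = [], []
    for e, g in zip(est, gt):
        if e is None:  # object not tracked in this frame: close the current part
            if cur:
                parts.append(cur)
                cur = []
        else:
            cur.append((e, g))
    if cur:
        parts.append(cur)
    return parts


def object_ate(est, gt):
    """Worst (maximal) per-part RMSE, or None if the object was never tracked."""
    errors = [rmse([e for e, _ in p], [g for _, g in p]) for p in split_visible(est, gt)]
    return max(errors) if errors else None
```

Reporting the maximum rather than the mean makes the metric conservative: a single badly tracked part determines the reported error for that object.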

Table II: ATE RMSE of the estimated object trajectories

| Method | seq1 object1 | seq1 object2 | seq4 object1 | seq4 object2 | seq7 object1 | seq7 object2 |
|---|---|---|---|---|---|---|
| CF | 21.5 | 10.6 | 24.2 | 5.36 | 33.8 | 6.57 |
| CF∗ | 20.9 | 16.3 | 20.5 | 6.21 | 17.1 | 12.9 |
| ours∗ | 13.1 | 4.95 | 4.95 | 8.84 | 7.27 | 3.93 |

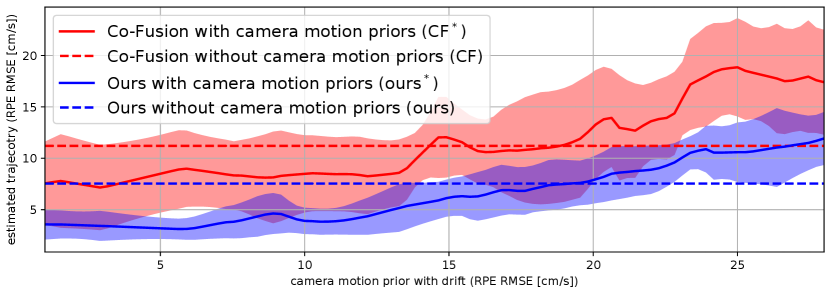

IV-F Impact of drift in motion prior

We increase the drift magnitude of the camera motion prior to test our method’s robustness to different levels of drift. By comparing the RPE RMSE of the camera motion prior with that of the estimated trajectories, we find that our method outperforms the Co-Fusion baseline with drift of up to 24 cm/s (Figure 10). Even when the motion prior has a drift of nearly 30 cm/s, we can still reduce the drift to around 12 cm/s. Compared to Co-Fusion with the camera motion prior, our method is always better when given a motion prior with the same magnitude of drift.

V Conclusion

This work presented a dense RGB-D SLAM method that tracks multiple planar rigid objects without relying on semantic segmentation. We also proposed a novel online multimotion segmentation method and a dynamic segmentation pipeline based on a hierarchical representation of planes and super-pixels. The detailed evaluation demonstrates that our method achieves better localisation and mapping results than state-of-the-art approaches when multiple dynamic objects occupy the major proportion of the camera view. If one dynamic object is occluded by another, our method loses track of it and detects it as a new object after it reappears in the camera view. Future work will focus on re-detecting dynamic objects based on their models to support long-term object tracking. We also plan to extend our method to non-planar environments and enable independent tracking of multiple large non-planar rigid objects.

References

- [1] Y. Li, R. Yunus, N. Brasch, N. Navab, and F. Tombari, “RGB-D SLAM with structural regularities,” in IEEE International Conference on Robotics and Automation, 2021.

- [2] X. Zhang, W. Wang, X. Qi, Z. Liao, and R. Wei, “Point-plane SLAM using supposed planes for indoor environments,” Sensors, 2019.

- [3] J. Huang, S. Yang, T.-J. Mu, and S.-M. Hu, “ClusterVO: Clustering moving instances and estimating visual odometry for self and surroundings,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [4] K. M. Judd, J. D. Gammell, and P. Newman, “Multimotion visual odometry (MVO): Simultaneous estimation of camera and third-party motions,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2018.

- [5] M. Rünz and L. Agapito, “Co-Fusion: Real-time segmentation, tracking and fusion of multiple objects,” in IEEE International Conference on Robotics and Automation, 2017.

- [6] M. Jaimez, C. Kerl, J. Gonzalez-Jimenez, and D. Cremers, “Fast odometry and scene flow from RGB-D cameras based on geometric clustering,” in IEEE International Conference on Robotics and Automation, 2017.

- [7] R. Scona, M. Jaimez, Y. R. Petillot, M. Fallon, and D. Cremers, “StaticFusion: Background reconstruction for dense RGB-D SLAM in dynamic environments,” in IEEE International Conference on Robotics and Automation, 2018.

- [8] C. Rauch, R. Long, V. Ivan, and S. Vijayakumar, “Sparse-dense motion modelling and tracking for manipulation without prior object models,” arXiv preprint arXiv:2204.11923, 2022.

- [9] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask R-CNN,” in IEEE International Conference on Computer Vision, 2017.

- [10] M. Strecke and J. Stueckler, “EM-Fusion: Dynamic object-level SLAM with probabilistic data association,” in IEEE/CVF International Conference on Computer Vision, 2019.

- [11] E. Rublee, V. Rabaud, K. Konolige, and G. Bradski, “ORB: An efficient alternative to SIFT or SURF,” in IEEE International Conference on Computer Vision, 2011.

- [12] B. Bescos, C. Campos, J. D. Tardós, and J. Neira, “DynaSLAM II: Tightly-coupled multi-object tracking and SLAM,” IEEE Robotics and Automation Letters, 2021.

- [13] S. Lee, C. Y. Son, and H. J. Kim, “Robust real-time RGB-D visual odometry in dynamic environments via rigid motion model,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2019.

- [14] C. Wang, B. Luo, Y. Zhang, Q. Zhao, L. Yin, W. Wang, X. Su, Y. Wang, and C. Li, “DymSLAM: 4D dynamic scene reconstruction based on geometrical motion segmentation,” IEEE Robotics and Automation Letters, 2020.

- [15] R. Achanta, A. Shaji, K. Smith, A. Lucchi, P. Fua, and S. Süsstrunk, “SLIC superpixels compared to state-of-the-art superpixel methods,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2012.

- [16] D.-H. Kim, S.-B. Han, and J.-H. Kim, “Visual odometry algorithm using an RGB-D sensor and IMU in a highly dynamic environment,” in Robot Intelligence Technology and Applications 3. Springer, 2015.

- [17] R. Long, C. Rauch, T. Zhang, V. Ivan, and S. Vijayakumar, “RigidFusion: Robot localisation and mapping in environments with large dynamic rigid objects,” IEEE Robotics and Automation Letters, 2021.

- [18] M. Kaess, “Simultaneous localization and mapping with infinite planes,” in IEEE International Conference on Robotics and Automation, 2015.

- [19] M. Hsiao, E. Westman, G. Zhang, and M. Kaess, “Keyframe-based dense planar SLAM,” in IEEE International Conference on Robotics and Automation, 2017.

- [20] C. Feng, Y. Taguchi, and V. R. Kamat, “Fast plane extraction in organized point clouds using agglomerative hierarchical clustering,” in IEEE International Conference on Robotics and Automation, 2014.

- [21] R. Mur-Artal and J. D. Tardós, “ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras,” IEEE Transactions on Robotics, 2017.

- [22] J. Sturm, N. Engelhard, F. Endres, W. Burgard, and D. Cremers, “A benchmark for the evaluation of RGB-D SLAM systems,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2012.